Abstract

Humans devote much time to the exchange of memories within the context of shared general and personal semantic knowledge. Our hypothesis was that functional imaging in normal subjects would demonstrate the convergence of speech comprehension and production on high-order heteromodal and amodal cortical areas implicated in declarative memory functions. Activity independent of speech phase (that is, common to comprehension and production) was most evident in the left and right lateral anterior temporal cortex. Significant activity was also observed in the posterior cortex, ventral to the angular gyri. The left and right hippocampus and adjacent inferior temporal cortex were active during speech comprehension, compatible with mnemonic encoding of narrative information, but activity was significantly less during the overt memory retrieval associated with speech production. Therefore, although clinical studies suggest that hippocampal function is necessary for the retrieval as well as the encoding of memories, retrieval appears to depend on much less net synaptic activity than encoding. In contrast, the retrosplenial/posterior cingulate cortex and the parahippocampal area, which are closely associated anatomically with the hippocampus, were equally active during both speech comprehension and production. The results help to explain why a severe and persistent inability both to understand and to produce meaningful speech, in the absence of an impairment in processing linguistic forms, is usually observed only after bilateral, and particularly anterior, destruction of the temporal lobes. They also emphasize the importance of the retrosplenial/posterior cingulate cortex, an area known to be affected early in the course of Alzheimer's disease, in the processing of memories during communication.

Keywords: auditory, motor, cortex, language, memory, speech

Introduction

It has been proposed that the processing of a stimulus is elaborated through a synaptic hierarchy, progressing from unimodal sensory association cortex to neocortical and paralimbic heteromodal cortices and, thence, to limbic regions, and that this architecture underlies the perception and comprehension of language (Mesulam, 1998). Actions under conscious control are the product of another hierarchy in frontal cortex, between prefrontal, premotor, and motor cortices (Fuster, 1997). Parallel reciprocal connections between the posterior and anterior systems allow associations to be made between a stimulus and remembered knowledge and experience, conclusions to be reached and inferences drawn, and an appropriate response to follow, which, in essence, is what happens during conversational turn-taking.

A previous study investigated areas common to the comprehension of language independent of the auditory or visual modality (Spitsyna et al., 2006). These areas are widely distributed in heteromodal cortex at discrete locations within the posterior, anterior, and inferior temporal lobe, with additional posterior and anterior midline amodal regions. Based on the general proposal that the synaptic hierarchies for attentive perception and willed action interact through the same mnemonic systems, one hypothesis is that the high-order areas observed by Spitsyna et al. (2006) are also involved in speech production. A further expectation about where activity associated with speech comprehension and production might converge derives from the demonstration that posterior frontal mirror neurons of the nonhuman primate respond both to the perception of the sight or sound of an action and to the execution of that action (Gallese et al., 1996; Kohler et al., 2002). These observations supported the proposal that, in the human, there is direct mapping between the perception and articulation of speech (Rizzolatti and Arbib, 1998; Liberman and Whalen, 2000; Wilson et al., 2004; Pulvermüller et al., 2006).

Notwithstanding the proposed contribution of the mirror neuron system to speech perception as well as production, our hypothesis was that common activity during an exchange of narrative information would principally reflect access to declarative memory systems: mental repositories both of general knowledge (semantic memories) and of personal experiences (episodic memories). We predicted that comprehension depends principally on those polysynaptic pathways through heteromodal and amodal cortical areas described by Mesulam (1998), and that activity generated by spontaneous speech, which draws on the same internal representations of knowledge and experiences used to understand speech, converges on the same areas. To this end, we designed a study in normal subjects that, in effect, created a dialogue in the scanning environment while relative regional changes in blood flow were measured as an index of underlying net regional synaptic activity. The subjects were required both to listen to recordings of people talking about personal events and to respond to prompts to talk about episodes in their lives. Scans were also obtained during a range of baseline conditions, and these were used to generate contrasts with the scans obtained during speech comprehension and production.

Materials and Methods

We used positron emission tomography (PET) as the imaging modality to avoid the problems of articulation-related movement artifact associated with functional magnetic resonance imaging (fMRI). Separate scans were acquired while the subject heard someone talk about himself or herself and while the subject spoke in response to a conversational prompt. We included a range of baseline conditions to optimize interpretation of the functional imaging data (Friston et al., 1996).

Participants.

Thirteen healthy right-handed unpaid volunteers (11 males) with normal hearing for their age participated in the study. The age range was 38–53 years, except for one male subject aged 70 years. The studies were approved both by the Administration of Radioactive Substances Advisory Committee (Department of Health, United Kingdom) and by the local research ethics committee of the Hammersmith Hospitals NHS Trust.

Behavioral tasks.

Intelligible speech was contrasted with its “matched” baseline condition of an unintelligible auditory stimulus (spectrally rotated speech), matched in that it closely resembled speech in terms of auditory complexity. The speech passages were individual recordings of responses to enquiries that evoked self-referential conversation (e.g., “tell me about one of the last Christmas presents you received”). Digital recordings, 1 min long, were made in an anechoic chamber, using a male and a female speaker. The modality-specific unintelligible auditory baseline stimuli were created by spectral rotation of the digitized spoken narratives (Scott et al., 2000), using a digital version of the simple modulation technique described by Blesser (1972). Spectrally rotated speech preserves the acoustic complexity, the intonation patterns, and some of the phonetic cues and features of normal speech, but it is unintelligible. The stimuli were presented via insert earphones from an Apple (Cupertino, CA) Macintosh laptop computer running Psyscope software. The subjects were instructed to listen attentively, but there was no explicit task demand. Each passage of intelligible and rotated speech was presented only once, and the recordings from the male and female speakers were randomized within and across subjects.
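The principle of spectral rotation by modulation (Blesser, 1972) can be illustrated with a short sketch. It assumes a 4 kHz rotation band, brick-wall FFT filters, and a synthetic test tone; none of these parameters is taken from the study, and the code is not the processing chain used by Scott et al. (2000). It shows only the core idea: after band-limiting, multiplying by a carrier at the band edge maps each frequency f to (band edge - f).

```python
import numpy as np


def lowpass_fft(x: np.ndarray, fs: float, cutoff_hz: float) -> np.ndarray:
    """Brick-wall low-pass filter: zero every FFT bin above cutoff_hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def spectrally_rotate(x: np.ndarray, fs: float, band_hz: float = 4000.0) -> np.ndarray:
    """Invert the spectrum of x within [0, band_hz], so that f maps to band_hz - f.

    band_hz = 4 kHz is an illustrative assumption, not the study's parameter.
    """
    t = np.arange(len(x)) / fs
    band_limited = lowpass_fft(x, fs, band_hz)
    # Multiplying by a carrier at band_hz creates images at band_hz - f and band_hz + f.
    modulated = band_limited * np.cos(2 * np.pi * band_hz * t)
    # Keeping only the band below band_hz retains the rotated (band_hz - f) image.
    return lowpass_fft(modulated, fs, band_hz)


if __name__ == "__main__":
    fs = 16000
    t = np.arange(fs) / fs  # 1 s of signal, so FFT bins are 1 Hz apart
    tone = np.sin(2 * np.pi * 1000 * t)
    rotated = spectrally_rotate(tone, fs)
    peak_hz = np.argmax(np.abs(np.fft.rfft(rotated))) * fs / len(rotated)
    print(peak_hz)  # a 1 kHz input tone should come out near 3 kHz
```

Because the carrier sits at the top of the retained band, low input frequencies become high output frequencies and vice versa, so overall spectral complexity is preserved while intelligibility is lost.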

During separate scans, the subjects generated self-referential propositional speech in response to cues (e.g., “tell me what you did last weekend”). The prompts were different from those that had been used to elicit the speech that had been recorded for the speech comprehension scans. The subjects were encouraged to talk for at least 1 min, and a brief prompt (“tell me more”) was used when necessary to encourage continued speech production. The “matched” baseline condition for propositional speech production was counting (nonpropositional speech). Subjects counted upward from one at a rate of approximately one number per second. This has been shown in a previous study to approximate propositional speech in terms of rate of syllable production (Blank et al., 2002).

Although the use of conditions that create two sides of a dialogue in the scanning environment has the advantage of “ecological validity,” a potential confound is that personal narratives vary in the richness of episodic detail they contain, which can affect the observed activity (Svoboda et al., 2006). Thus, a description of a personally unique and perhaps emotionally charged event (e.g., “we went on a cruise to Antarctica and saw a blue whale come up alongside the ship”) is qualitatively different from a description of a frequently repeated event (e.g., “we always go to Brighton for our holidays in August, and visit the pier each day”). We had no control over this aspect of the study, because the subjects were naive to the prompts they would receive during the speech production scans, to prevent prior rehearsal of stories and a consequent loss of spontaneity. To investigate possible differences in episodic content between speech comprehension and production, we took two narratives from each of the two speakers who provided the passages the subjects heard in the scanner, and two recordings from each of six randomly selected subjects as they spoke in the scanner. Six volunteers not involved in the scanning study scored each transcription for “the apparent richness of detail for a specific event.” The scorers were blind to whether passages had been spoken or heard.

An additional confound relates to the observation that subjects whose attention is not held by perception of meaningful stimuli or by performance of an explicit task exhibit a reproducible pattern of activity in midline anterior (prefrontal) and posterior (retrosplenial and posterior cingulate) cortex and in bilateral temporoparietal cortex. This distributed activity may be the consequence of stimulus-independent thoughts and self-referential recollections (Binder et al., 1999; Gusnard and Raichle, 2001; Gusnard et al., 2001; Mazoyer et al., 2001). These are the same regions that are most frequently observed in studies of autobiographical memory recall (Svoboda et al., 2006), which is typically prompted by verbal cues. Our hypothesis was that self-generated memories might contribute to brain activity measured during passive perception of the unintelligible rotated speech and during the monotonous, automatic task of counting. Contrasting the language and baseline conditions would, therefore, result in at least partial masking of activity associated with the comprehension and production of speech. To optimize detection of this activity, we used an attention-demanding numerical task, developed by Stark and Squire (2001), as an additional “unmatched” baseline condition, a strategy that proved effective in a previous study (Spitsyna et al., 2006). During the numerical task, the subjects heard a random series of numbers from 1 to 10 and were instructed to press one of two buttons with the right hand after each number to indicate whether the number was odd or even. Each button press resulted in the immediate presentation of the next number. Equal emphasis was placed on response speed and accuracy. Contrasts of the speech comprehension and production conditions with the numerical task were used to reveal common activity that might otherwise have been masked by intrusions of stimulus-independent thoughts and self-referential reflections during the matched baseline conditions.
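The self-paced structure of the odd/even task can be sketched as a simple trial loop. This is a hypothetical simulation, not the presentation software used in the study: audio playback, timing, and response hardware are omitted, and the `respond` callback is an assumed stand-in for the button press. Returning from the callback immediately advances to the next number, mirroring the design described above.

```python
import random
from typing import Callable, List, Tuple


def run_parity_task(
    n_trials: int, respond: Callable[[int], str], seed: int = 0
) -> Tuple[List[int], float]:
    """Simulate a self-paced odd/even judgment task.

    Numbers are drawn at random from 1 to 10 (inclusive); respond(n) is a
    hypothetical stand-in for the button press and must return "odd" or "even".
    """
    rng = random.Random(seed)
    numbers: List[int] = []
    n_correct = 0
    for _ in range(n_trials):
        n = rng.randint(1, 10)  # inclusive range 1..10
        numbers.append(n)
        truth = "even" if n % 2 == 0 else "odd"
        if respond(n) == truth:  # the response triggers the next trial at once
            n_correct += 1
    return numbers, n_correct / n_trials


if __name__ == "__main__":
    perfect = lambda n: "even" if n % 2 == 0 else "odd"
    numbers, accuracy = run_parity_task(20, perfect)
    print(numbers, accuracy)  # accuracy is 1.0 for a perfect responder
```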

Functional neuroimaging.

The subjects were scanned on a Siemens (Knoxville, TN) HR++ (966) PET camera operated in high-sensitivity three-dimensional mode; scanning was performed in conjunction with Hammersmith Imanet. The field of view (20 cm) covers the whole brain with a resolution of 5.1 mm full-width at half-maximum in the x-, y-, and z-axes. A transmission scan was performed for attenuation correction. Fifteen scans were performed on each subject, three for each of the five conditions, with the subjects' eyes closed. Condition order was randomized within and between subjects.

The dependent variable in functional imaging studies is the hemodynamic response: a local increase in synaptic activity is associated with increased local metabolism, coupled to an increase in regional cerebral blood flow (rCBF). Water labeled with a positron-emitting isotope of oxygen (H₂¹⁵O) was supplied by Hammersmith Imanet. This tracer was used to demonstrate changes in rCBF, equivalent to changes in tissue concentration of H₂¹⁵O. During each scan, approximately 5 mCi of H₂¹⁵O was infused as a slow bolus over 40 s, resulting in a rapid rise in measurable emitted radioactivity (head counts) that peaked after 30–40 s. Stimulus presentation and speech production encompassed the incremental phase by commencing 10 s before the rise in head counts and continuing for 10–15 s after the counts began to decline because of wash-out and radioactive decay. Individual scans were separated by intervals of 6 min.

Whole-brain subtractive analyses.

Standard image preprocessing (image realignment, anatomical normalization, and smoothing with a 12 mm Gaussian filter) and whole-brain statistical analyses were performed using SPM2 software (Wellcome Department of Cognitive Neurology, Queen Square, London, www.fil.ion.ucl.ac.uk/spm). We used a fixed-effects model to generate statistical parametric maps (SPMs) representing the results of voxel-wise t test comparisons for the contrasts between language and baseline conditions. The voxel-level statistical threshold was set at p < 0.05, with family-wise error (FWE) correction for multiple comparisons, and a cluster extent threshold of 10 voxels. Inclusive masking was used to identify activation regions common to more than one contrast, with a statistical threshold equivalent to p < 0.05 (z-score, >4.8), FWE corrected, for each contrast (Nichols et al., 2005). Use of a fixed-effects model allowed relative activation for all conditions to be directly visualized and evaluated, using effect size histograms, in all contrasts of interest. Describing regional activity during one condition relative to activity across all conditions avoids some of the potential errors of interpretation inherent in cognitive subtractions (Friston et al., 1996).
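The identification of voxels common to two contrasts by inclusive masking can be sketched on z maps as a voxel-wise logical AND of the thresholded contrasts. This sketch assumes the z maps have already been computed, uses the z > 4.8 cut-off quoted above for both contrasts, and applies the 10-voxel extent threshold to the conjunction; the order of these steps, the 6-connectivity rule, and the function names are illustrative assumptions rather than a description of SPM2's internals.

```python
import numpy as np
from scipy import ndimage


def suprathreshold(z: np.ndarray, z_thresh: float) -> np.ndarray:
    """Voxels exceeding the voxel-level z threshold."""
    return z > z_thresh


def apply_extent(mask: np.ndarray, min_voxels: int) -> np.ndarray:
    """Drop connected clusters smaller than min_voxels (6-connectivity in 3-D)."""
    labels, _ = ndimage.label(mask)  # default structure: face-connected neighbors
    sizes = np.bincount(labels.ravel())
    keep = sizes >= min_voxels
    keep[0] = False  # label 0 is the background
    return keep[labels]


def common_activation(z_a: np.ndarray, z_b: np.ndarray,
                      z_thresh: float = 4.8, min_voxels: int = 10) -> np.ndarray:
    """Inclusive masking: voxels significant in contrast A AND in contrast B."""
    both = suprathreshold(z_a, z_thresh) & suprathreshold(z_b, z_thresh)
    return apply_extent(both, min_voxels)
```

For example, a 27-voxel block that exceeds threshold in both maps survives, whereas a 2-voxel common cluster, or a voxel active in only one map, does not.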

Region-of-interest analyses.

For the region-of-interest (ROI) analyses, we defined three regions in the left temporal lobe and their homotopic regions on the right from a probabilistic electronic brain atlas (Hammers et al., 2003). Informed by our previous studies of the automatic comprehension of spoken language (Scott et al., 2000; Crinion et al., 2003; Spitsyna et al., 2006) and propositional speech production (Blank et al., 2002), we selected anatomical ROIs for the ventrolateral temporal pole and anterior fusiform gyrus. We also included the hippocampus, defined from the same electronic brain atlas, to investigate medial temporal lobe activity associated with the implicit mnemonic encoding of what had been heard, and with memory retrieval during speech production.

We defined one more region in each hemisphere, which we termed the temporal-occipital-parietal (TOP) junction in a previous study (Spitsyna et al., 2006). There is no probabilistic atlas for this region, which lies just ventral to the angular gyrus. As in the previous study (Spitsyna et al., 2006), we defined the ROI on functional grounds as a sphere with a radius of 6 mm, centered on the peak activity during speech comprehension (ListSp) and speech production (PropSp), each condition contrasted separately with the numerical task (NumTsk). In the left hemisphere, the Montreal Neurological Institute (MNI) stereotactic coordinates for the peak voxels in the two separate contrasts were almost identical (within 0–4 mm along the x-, y-, and z-axes), and the sphere was centered on the mean of each coordinate, at x = −48, y = −74, z = 28. The coordinates for the right hemisphere were also very similar in the two separate contrasts (within 0–2 mm along the three axes), and the sphere was centered at x = 54, y = −72, z = 26. The locations of these ROIs are closely consistent with two previous studies, one contrasting ListSp with NumTsk (Spitsyna et al., 2006) and the other contrasting PropSp with counting (Count) (Blank et al., 2002). The coordinates for the peak voxels at the left and right TOP junctions in these two studies were within 0–6 mm along the three axes of the centers of the spheres used in this study. As further validation of the choice of the TOP regions in the present study in relation to those reported by Blank et al. (2002), which did not include NumTsk as a condition, contrasting PropSp with Count instead of with NumTsk changed the coordinates of the peak voxels at the left and right TOP junctions by no more than 4 mm.
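A 6 mm spherical ROI of this kind can be sketched as a boolean mask over voxel centers. The sphere was centered on the mean of the two contrasts' peak coordinates; because those individual peaks are not listed, the sketch uses the reported centers directly. The 2 mm MNI grid and its affine are assumptions for illustration, not the study's image geometry.

```python
import numpy as np

# Hypothetical 2 mm MNI-space grid (91 x 109 x 91 voxels); this affine is an
# assumption for illustration, not taken from the study.
AFFINE_2MM = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])
SHAPE_2MM = (91, 109, 91)


def sphere_roi(shape, affine, center_mm, radius_mm=6.0):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1)  # voxel indices, 3 x N
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])
    xyz = (affine @ homogeneous)[:3]  # voxel centers in mm
    offset = xyz - np.asarray(center_mm, dtype=float)[:, None]
    return ((offset ** 2).sum(axis=0) <= radius_mm ** 2).reshape(shape)


# Centers reported for the left and right TOP junction spheres
left_top = sphere_roi(SHAPE_2MM, AFFINE_2MM, (-48, -74, 28))
right_top = sphere_roi(SHAPE_2MM, AFFINE_2MM, (54, -72, 26))
print(left_top.sum(), right_top.sum())
```

On a 2 mm grid with the center on a voxel, a 6 mm radius captures 123 voxels; on other grids or with off-grid centers the count will differ.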

All ROI analyses were performed using the MarsBaR software toolbox (Brett et al., 2002). For each ROI in each subject, activity was measured as the radioactive counts across all voxels within the ROI, normalized to an arbitrary whole-brain activity of 100, and each language condition and each matched baseline condition was expressed as its difference from the common baseline of NumTsk. Because there was more than one scan per condition, the mean for each condition in each individual was used for a random-effects statistical analysis. For illustrations, these measures, equivalent to the percentage difference in activity between the contrasted conditions, were displayed as columns of group means, with 95% confidence intervals for the between-subject variance. Repeated-measures ANOVAs investigated temporal lobe functional dissociations, using the factors condition [ListSp, PropSp, rotated speech (RotSp), and Count] and either hemisphere (left and right) or region (ventrolateral temporal pole, TOP junction, anterior fusiform gyrus, and hippocampus). Because five planned ANOVAs were performed, significance was set at p < 0.01. When informative, significant effects or interactions were further examined using post hoc paired t test comparisons, with significance set at p < 0.05.
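The ROI measure and the post hoc comparisons described above can be sketched as follows, assuming scans are NumPy arrays already in a common space with boolean masks for brain and ROI. Proportional scaling to a whole-brain mean of 100 makes differences read as percentages of global activity. MarsBaR's actual implementation is not reproduced here; the function names, the use of condition labels as dictionary keys, and the reading of the p < 0.01 threshold as a Bonferroni-style 0.05 divided by five ANOVAs are assumptions of this sketch.

```python
from collections import defaultdict

import numpy as np
from scipy import stats


def global_scale(scan, brain_mask):
    """Proportional scaling so that mean whole-brain counts equal 100."""
    return scan * (100.0 / scan[brain_mask].mean())


def roi_condition_means(scans, conditions, roi_mask, brain_mask):
    """One subject: mean scaled ROI value per condition, averaging repeat scans."""
    per_condition = defaultdict(list)
    for scan, condition in zip(scans, conditions):
        per_condition[condition].append(global_scale(scan, brain_mask)[roi_mask].mean())
    return {c: float(np.mean(v)) for c, v in per_condition.items()}


def relative_to_baseline(means, baseline="NumTsk"):
    """Express every other condition as a difference from the common baseline."""
    return {c: v - means[baseline] for c, v in means.items() if c != baseline}


def paired_test(a, b, alpha):
    """Paired t test across subjects, with a 95% CI for the mean difference."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    diff = a - b
    t_stat, p_value = stats.ttest_rel(a, b)
    half_width = stats.t.ppf(0.975, diff.size - 1) * stats.sem(diff)
    ci = (diff.mean() - half_width, diff.mean() + half_width)
    return float(t_stat), float(p_value), ci, p_value < alpha


ANOVA_ALPHA = 0.05 / 5  # five planned ANOVAs, Bonferroni-style: 0.01
POSTHOC_ALPHA = 0.05
```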

Results

Behavioral data

During the ListSp, the subjects heard the male speaking at a mean rate of 172 words per minute (range 149–191) and the female speaking at a mean rate of 215 words per minute (range 166–238). The “rate” of hearing during RotSp was equivalent, because the spectrally rotated versions of speech were prepared from the same recordings. The rate of speech production by the subjects and, hence, the rate of hearing their own utterances were somewhat slower, partly because of the novel experience of speaking while lying supine in a scanner. Across the subjects, the mean rate was 151 words per minute (range 112–189). Speech production during counting was not recorded, but subjects had counted up to 55–80 after 1 min, equating to a word production rate of 90–135 words per minute. The rate of hearing spoken numbers during the NumTsk was <50 words per minute.
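The conversion from the highest number reached to a word rate can be checked with a short calculation. Assuming English number names, and counting a compound such as “fifty-five” as two words, counting from 1 to 55 produces 87 words and counting from 1 to 80 produces 134 words, consistent with the 90–135 words per minute quoted above for a 1 min period. The helper names below are hypothetical.

```python
from typing import List

ONES = ("one two three four five six seven eight nine ten eleven twelve thirteen "
        "fourteen fifteen sixteen seventeen eighteen nineteen").split()
TENS = "twenty thirty forty fifty sixty seventy eighty ninety".split()


def number_words(n: int) -> List[str]:
    """English words spoken for n (1-99), counting 'fifty-five' as two words."""
    if not 1 <= n <= 99:
        raise ValueError("this sketch only covers 1-99")
    if n < 20:
        return [ONES[n - 1]]
    tens, unit = divmod(n, 10)
    return [TENS[tens - 2]] + ([ONES[unit - 1]] if unit else [])


def words_when_counting_to(n: int) -> int:
    """Total words spoken when counting aloud from 1 up to n."""
    return sum(len(number_words(k)) for k in range(1, n + 1))


if __name__ == "__main__":
    for last in (55, 80):
        print(last, words_when_counting_to(last))  # words spoken in the 1 min scan
```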

The scores that the six raters gave for the detail apparent in the random samples of heard and spoken narratives (see Materials and Methods) demonstrated a higher mean score for the heard narratives (7.5, range 6–8) than for the spoken narratives (6.8, range 3–9). The wider range of scores for the spoken narratives reflects the variable success of the subjects in remembering and describing events in detail when in the scanner.

PET imaging data

The SPM of the contrast of ListSp with RotSp demonstrated activity in the left and right anterior superior temporal sulcus, spreading into anterolateral temporal cortex at the poles (Fig. 1). There was an additional posterior area, located at the left TOP junction. An analysis at a lower threshold (p < 0.0001, uncorrected) demonstrated activity at the right TOP junction. There was medial prefrontal activity within both superior frontal gyri. ListSp also activated cortex behind the splenium of the corpus callosum (where retrosplenial and posterior cingulate cortex are in close proximity), with symmetrical distribution of activity between the hemispheres, although this activity was only above threshold in a few voxels.

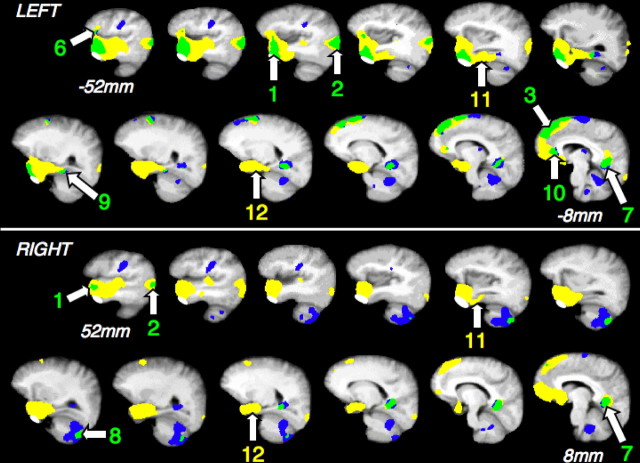

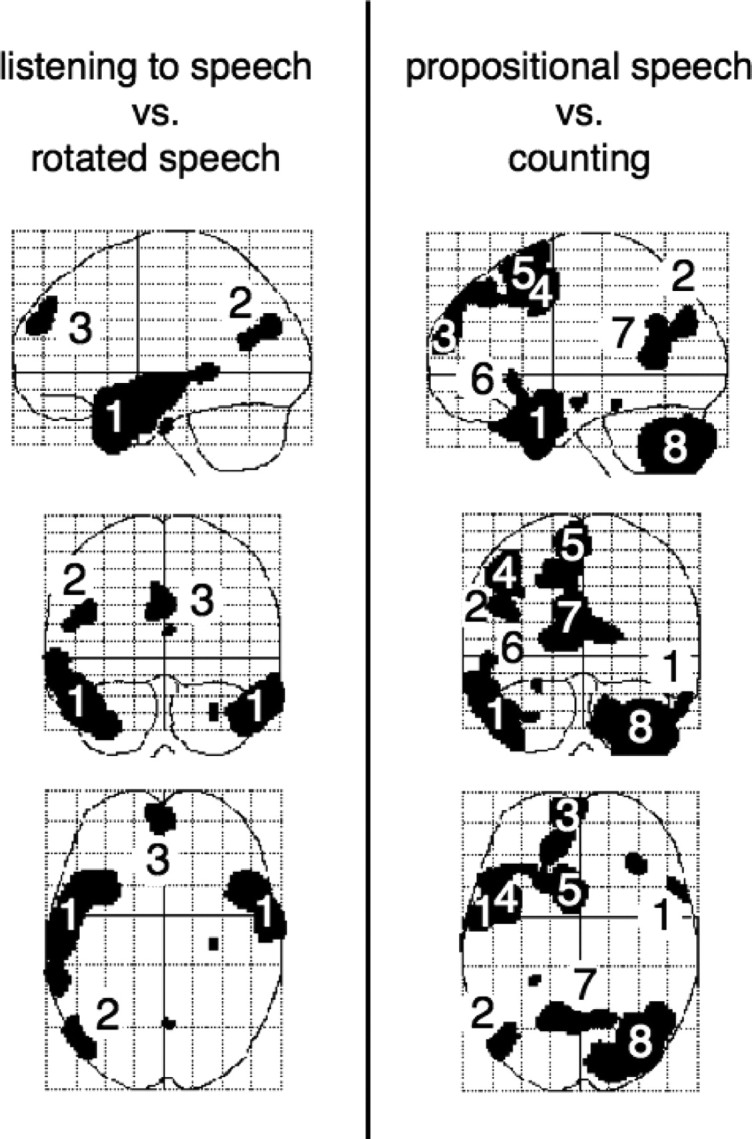

Figure 1.

Left, Listening to speech contrasted with listening to rotated speech, performed within SPM2 and displayed as statistical parametric maps, with sagittal (upper), coronal (middle), and axial (lower) views. On the sagittal view, anterior is to the left, and on the coronal and axial views the left side of the brain is on the left. Right, The equivalent display of the contrast of propositional speech with counting. 1, Lateral anterior temporal cortex; 2, temporo-occipito-parietal junction; 3, anterior prefrontal activity; 4, 5, left premotor/posterior prefrontal cortex (lateral area and presupplementary motor area, respectively); 6, lateral orbitofrontal cortex, inferior to Broca's area; 7, retrosplenial/posterior cingulate cortex; 8, posterior and lateral surface of the right cerebellar hemisphere.

The SPM of the contrast of PropSp with Count also demonstrated activity in both anterolateral temporal lobes, more extensive on the left, and at the left TOP junction (Fig. 1). Analysis at a lower threshold (p < 0.0001, uncorrected) demonstrated activity at the right TOP junction. Medial prefrontal activity was confined to the left superior frontal gyrus, extending back into the presupplementary motor area. Lateral frontal activity was present at the posterior extent of the left middle frontal gyrus, at the junction of dorsolateral prefrontal and anterior premotor cortex. The left cerebral hemisphere activations were associated with activity in the posterior and lateral right cerebellum. Activity in retrosplenial/posterior cingulate cortex was very similar to that observed in the contrast of ListSp with RotSp.

Voxels that were common to ListSp and PropSp in the contrasts with their matched baseline conditions were confined to both anterolateral temporal lobes, with greater spatial extent on the left at the statistical threshold used, the left TOP junction, and two medial regions of small spatial extent: within the left superior frontal gyrus and left and right retrosplenial/posterior cingulate cortex. Using the NumTsk as the baseline condition extended the observed activations common to ListSp and PropSp (Fig. 2). This was mainly because of an extension of the activated regions observed in the contrast of ListSp with RotSp; for the most part, NumTsk and Count were equivalent when investigating higher-order cortices, so contrasting PropSp with NumTsk produced a similar result to the contrast of PropSp with Count shown in Figure 1.

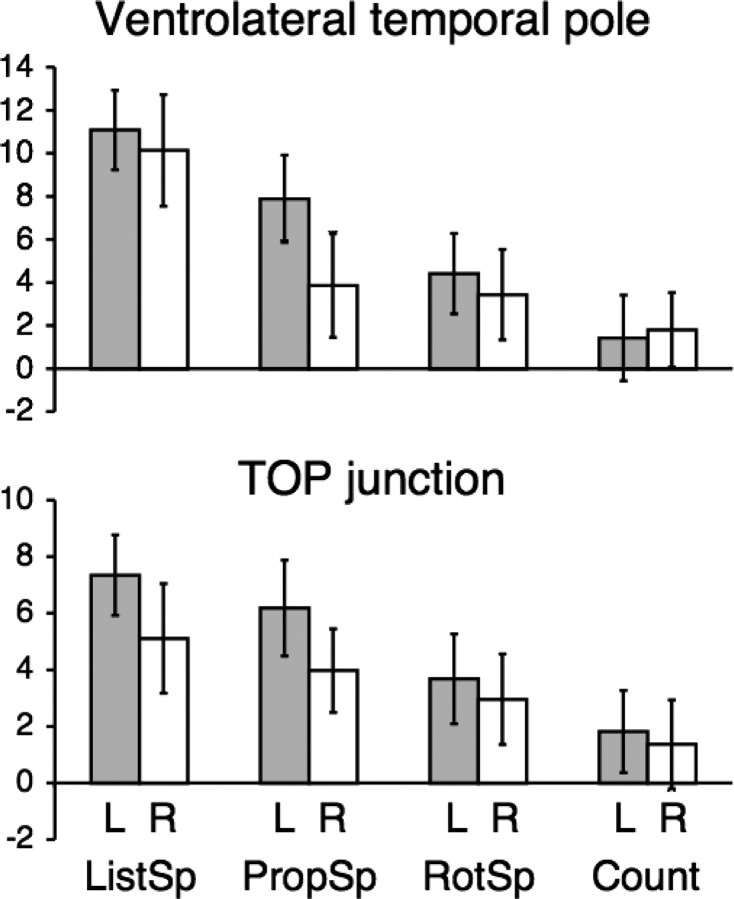

Figure 2.

Activity for speech comprehension and production, each contrasted with the number task, overlaid on a mean T1-weighted MRI template. The left (top) and right (bottom) cerebral and cerebellar hemispheres are displayed as serial sagittal slices, oriented along the horizontal plane of the temporal lobes and separated by 4 mm, extending from 8 to 52 mm lateral to the midline. Significant activity for speech comprehension alone is in yellow and for speech production alone in blue. Convergence of activity is in green. The white masks over the inferior aspect of the anterior temporal lobes exclude the area where activity related to the pterygoid muscles was observed during articulation. Activity in the superior temporal gyrus for speech comprehension alone reflects the greater rate of hearing words, and motor cortex and areas in both cerebellar hemispheres were activated exclusively by speech production. Convergence was observed in lateral anterior temporal cortex, of greater extent on the left (1), temporo-occipito-parietal junction, of greater extent on the left (2), left medial superior frontal gyrus (3), inferior to Broca's area (6), retrosplenial/posterior cingulate cortex (7), posterior right cerebellar hemisphere (8), and left medial orbital prefrontal cortex (10). Activity in the left and right anterior fusiform gyrus (11) and left and right hippocampus (12) was only observed for speech comprehension.

Within anterolateral temporal cortex there was bilateral activity, as observed in the contrasts of the language conditions with their matched baseline conditions. Activity in the most inferior part of the anterior temporal lobes had to be excluded, because smoothing of the grouped imaging data allowed some pterygoid muscle-related activity during articulation to extend up into this part of anterior temporal cortex. Excluding this region resulted in peak activity being centered on the lateral aspect of the region. For the contrast of ListSp with NumTsk, the MNI coordinates that identified the location of peak activity were as follows: left, x = −50, y = 12, z = −28; right, x = 56, y = 14, z = −26. For the contrast of PropSp with NumTsk, the MNI coordinates were very similar: left, x = −48, y = 12, z = −28; right, x = 48, y = 14, z = −32.

The ROI data from left and right ventrolateral temporal pole are shown in Figure 3. Each subject's mean activity for each condition was entered into a four (condition) by two (hemisphere) ANOVA. There was a significant main effect of condition (F(3,10) = 56.61; p < 0.0001), but not hemisphere (F(1,12) = 1.47; p = 0.25). There was a significant condition by hemisphere interaction (F(3,10) = 8.85; p < 0.005), the result of asymmetrical activity during PropSp (left > right, t(12) = 2.69; p < 0.05). The responses to the other conditions were symmetrical (p > 0.4 for the three left–right comparisons). As is evident from Figure 3, activity in the left ventrolateral temporal pole was not only significantly greater during ListSp and PropSp relative to NumTsk, but also significantly greater relative to their matched baseline conditions (t(12) = 8.07, p < 0.0001 and t(12) = 7.01, p < 0.0001, respectively). On the right, ListSp resulted in significantly greater activity than RotSp (t(12) = 9.03; p < 0.0001), but there was only a borderline difference between PropSp and Count (t(12) = 2.06; p = 0.06). In summary, the ventrolateral temporal poles responded symmetrically to both auditory conditions, but the responses were significantly greater when the subjects heard intelligible speech. In contrast, counting elicited little activity, whereas propositional speech resulted in bilateral activity, but with evidence of asymmetry, left greater than right.

Figure 3.

Columns of group mean activity (relative to the common baseline NumTsk condition, in arbitrary units after normalizing to remove differences in whole brain activity), with 95% confidence intervals for between-subjects variance, in the left and right ventrolateral temporal poles and TOP junctions. The columns for speech comprehension (ListSp), speech production (PropSp), listening to rotated speech (RotSp), and counting (Count) are relative to the common baseline condition of the number task.

The other lateral neocortical region that was activated by both ListSp and PropSp was the TOP junction (Fig. 3). As in the anterior temporal region, a four (condition) by two (hemisphere) ANOVA demonstrated a significant main effect of condition (F(3,10) = 30.65; p < 0.0001), but not hemisphere (F(1,12) = 2.02; p > 0.1). The significance of the condition by hemisphere interaction was marginal (F(3,10) = 3.48; p = 0.06), the result of both ListSp and PropSp eliciting marginally greater activity on the left (t(12) = 1.89, p = 0.08 and t(12) = 2.25, p = 0.04, respectively), with no difference between the hemispheres for RotSp and Count (p > 0.5). Again, as in the ventrolateral temporal pole, activity at the TOP junctions was not only significantly greater during ListSp and PropSp compared with NumTsk, but also significantly greater than during their matched baseline conditions: on the left, t(12) = 9.47, p < 0.0001 and t(12) = 7.87, p < 0.0001, respectively; on the right, t(12) = 3.94, p < 0.01 and t(12) = 4.06, p < 0.01, respectively. In summary, the responses at both TOP junctions demonstrated greater activity during speech comprehension and production relative to both the matched and unmatched baseline conditions, with evidence of some asymmetry, left greater than right.

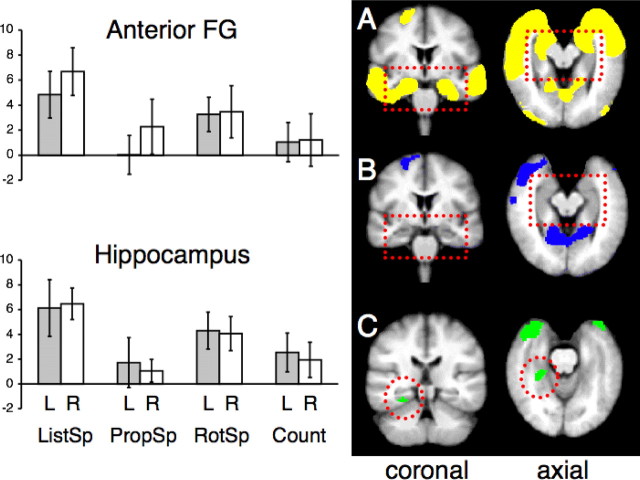

The response profiles across conditions were different in the anterior fusiform gyri and hippocampi. Most apparent was the small effect size associated with PropSp (Fig. 4). For the anterior fusiform gyri, a four (condition) by two (hemisphere) ANOVA demonstrated a significant main effect of condition (F(3,10) = 28.89; p < 0.0001) but not hemisphere (F(1,12) = 1.26; p > 0.25), and there was no condition by hemisphere interaction (F(3,10) = 2.40; p > 0.1). In contrast to the lateral neocortical areas, activity associated with the language conditions in the fusiform gyri was no greater than with their matched baseline conditions (p > 0.1), except for ListSp on the right (t(12) = 5.44; p = 0.0001). The analyses of the hippocampal ROIs produced similar results. Thus, the four (condition) by two (hemisphere) ANOVA demonstrated a significant main effect of condition (F(3,10) = 28.89; p < 0.0001) but not hemisphere (F(1,12) = 1.26; p > 0.25), and there was no condition by hemisphere interaction (F(3,10) = 2.40; p > 0.1). Again, activity associated with the language conditions was no greater than with their matched baseline conditions (p > 0.1), except for ListSp on the right (t(12) = 3.99; p < 0.01). In summary, activity in the left and right inferior and medial temporal lobes demonstrated strong responses to both auditory conditions, but little or no response during speech production. The one region in inferomedial temporal cortex where there was activity common to ListSp and PropSp was close to or within the left collateral sulcus, which separates the parahippocampal and fusiform gyri (Fig. 4).

Figure 4.

Right, Activity during speech comprehension (A; yellow) and speech production (B; blue), contrasted with the number task, overlaid onto coronal and axial slices from a mean T1-weighted MRI template; the discontinuous red rectangle highlights the inferomedial temporal lobes. Row C (green) shows activity common to the two language conditions within the inferomedial temporal lobes, confined to the left parahippocampal area (discontinuous red circle). Note that the slices in row C are not from the same planes as in rows A and B. Left, ROI data from the left and right anterior fusiform gyrus (FG) and hippocampus, in the same manner as used in Figure 3. The columns for speech comprehension (ListSp), speech production (PropSp), listening to rotated speech (RotSp), and counting (Count) are all relative to the common baseline condition of the number task.

The scores for richness of detail about the remembered events in the random samples of heard and spoken narratives differed (mean scores of 7.5 and 6.8, respectively), but this difference cannot explain the functional dissociation in the fusiform gyrus and hippocampus between ListSp and PropSp. Activity during PropSp was no greater than during Count and only marginally greater than during the NumTsk, conditions during which spontaneous episodic and semantic memory retrieval would have been minimal compared with that during spontaneous self-referential speech production. In addition, analysis of the individual values of activity in the fusiform gyrus and hippocampus during PropSp demonstrated no correlation with either the rate of speech production or, in the samples of speech analyzed, the scores for detail about the remembered events.

The profiles of responses in the medial and posterolateral temporal lobes were compared by performing a four (condition) by two (region) ANOVA on the ROI data from the left hippocampus and left TOP junction. There was a significant main effect of condition (F(3,10) = 21.08; p < 0.0001) but not region (F(1,12) = 1.28; p > 0.25), and there was a significant condition by region interaction (F(3,10) = 12.2; p ≈ 0.001). The interaction was the result of greater activity during PropSp at the TOP junction (t(12) = 4.04; p < 0.01), with no difference in activity between the two regions during the other three conditions (p > 0.4). In summary, there was a dissociation between the responses within medial and posterolateral temporal lobe areas, but only during speech production.
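Because the results mix exact p values with inequalities, it can be useful to recompute p from a reported F statistic and its degrees of freedom. The sketch below uses SciPy's F distribution survival function on three of the F statistics reported in this section; it assumes the reported degrees of freedom are those of the test actually used, and it is intended only as a consistency check, not a re-analysis.

```python
from scipy import stats


def p_from_f(f_value: float, df_num: int, df_den: int) -> float:
    """Upper-tail p value of an F statistic with (df_num, df_den) degrees of freedom."""
    return float(stats.f.sf(f_value, df_num, df_den))


# F statistics as reported in the text (label: (F, df1, df2))
REPORTED = {
    "left hippocampus vs left TOP, condition x region": (12.2, 3, 10),
    "ventrolateral temporal pole, condition x hemisphere": (8.85, 3, 10),
    "ventrolateral temporal pole, hemisphere": (1.47, 1, 12),
}

if __name__ == "__main__":
    for label, (f_value, d1, d2) in REPORTED.items():
        print(f"{label}: F({d1},{d2}) = {f_value} -> p = {p_from_f(f_value, d1, d2):.4f}")
```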

Although speech comprehension activated both the left and right superior frontal gyri, activity in response to propositional speech production was lateralized to the left, with inferior extension into medial orbitofrontal cortex (Fig. 2). The common activity in the posterior medial region had a midline peak that was located close to the junction of the parieto-occipital and calcarine sulci; bilateral and more inferior peaks, 10 mm either side of the midline, were located in close proximity to the anterior part of the calcarine sulcus (Fig. 2). Within the limits of spatial resolution in a group PET study, it seems most probable that the activity was generated from cortex that lay anterior and dorsal to the depths of these sulci; that is, within posterior cingulate cortex (Brodmann's area 23) and retrosplenial cortex (Brodmann's areas 29 and 30).

There was activation in response to both speech comprehension and production in the left lateral orbitofrontal cortex, inferior to Broca's area (www.bic.mni.mcgill.ca/cytoarchitectonics/), within Brodmann's areas 12/47 (Fig. 2). Activity in response to the language conditions was increased relative to both Count and NumTsk. It was not possible to define the precise homotopic region on the right objectively, but the mirror voxel showed a very different pattern, with similar activity across all conditions except PropSp, during which activity was lowest.

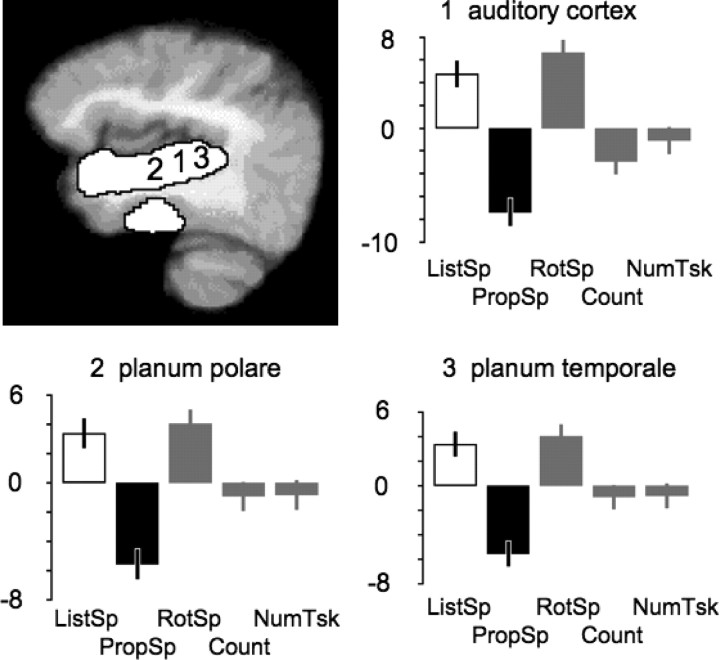

Finally, we investigated the possibility that the responses of the ventrolateral temporal pole and the TOP junction during PropSp were attributable not to prearticulatory processes but to postarticulatory processing of the subjects' own speech; in other words, that the conjunction of activity for ListSp and PropSp might reflect hearing speech in both conditions: another's voice during ListSp and one's own voice during PropSp. We directly contrasted ListSp with PropSp within SPM2 and found significantly greater activity during ListSp along the length of the superior temporal gyrus. This might be partly attributable to differences in the rates of hearing another's voice during ListSp and one's own voice during PropSp, as the rates were not matched (see above, Behavioral data). However, Figure 5 shows the profile of responses across conditions within the left primary auditory cortex (located according to the probabilistic atlas of Penhune et al., 1996), the planum temporale (located according to the probabilistic atlas of Westbury et al., 1999), and the supratemporal plane anterior to primary auditory cortex (the planum polare). In all three regions, and at the equivalent peak voxels in the right superior temporal gyrus (data not shown in Fig. 5), activity across the five conditions was lowest during PropSp. This evidence suggests that activity during PropSp in “upstream” ventrolateral temporal cortex and the TOP junction was caused by prearticulatory retrieval processes.
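The conjunction of activity for ListSp and PropSp identifies voxels active in both language contrasts. Assuming the minimum-statistic approach of Nichols et al. (2005), which appears in the reference list, a voxel counts as common to both conditions only if the smaller of its two t-values exceeds the threshold. A minimal sketch, with hypothetical t-values and a hypothetical threshold:

```python
import numpy as np

def conjunction_min_t(t_maps, threshold):
    """Minimum-statistic conjunction: a voxel survives only if every
    contrast exceeds `threshold` there (conjunction null hypothesis)."""
    t_stack = np.stack(t_maps)        # shape: (n_contrasts, *voxel_shape)
    min_t = t_stack.min(axis=0)       # weakest contrast at each voxel
    return min_t, min_t > threshold   # statistic map and boolean mask

# Hypothetical t-values at four voxels for the two language contrasts
t_listsp = np.array([5.1, 4.2, 1.0, 3.6])   # ListSp versus NumTsk
t_propsp = np.array([4.8, 0.9, 4.5, 3.9])   # PropSp versus NumTsk
min_t, common = conjunction_min_t([t_listsp, t_propsp], threshold=3.1)
print(common)  # [ True False False  True]
```

The second and third voxels respond strongly to only one condition, so they fail the conjunction; this is what distinguishes "common" activity from activity in either contrast alone.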

Figure 5.

Activity in the contrast of ListSp with PropSp, overlaid in white on a paramedian sagittal slice through the left superior temporal gyrus of a mean T1-weighted MRI template (top left). Profiles of activity at the peak voxel within the left primary auditory cortex (1), planum polare (2), and planum temporale (3) are shown in the other three panels. The mean activity across all five conditions is normalized to zero within SPM2, and activity is displayed as differences between conditions, in arbitrary units. ListSp is shown as a white column, PropSp as a black column, and the three conditions not involved in generating the contrast (RotSp, Count, and NumTsk) as gray columns. The error bars depict 90% confidence intervals. In these three primary or association auditory regions, activity was lowest during PropSp, despite the subjects hearing their own voice.
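The display convention in the caption, condition means expressed relative to their grand mean with 90% confidence intervals, can be sketched as follows. This is not SPM2's internal computation, which derives intervals from the fitted model's residual error; the sketch assumes a simple per-condition standard error across subjects, and the function name and simulated inputs are hypothetical. For 13 subjects (12 df), the two-sided 90% critical t-value is about 1.782.

```python
import numpy as np

CONDITIONS = ("ListSp", "PropSp", "RotSp", "Count", "NumTsk")

def centred_profile(values, t_crit):
    """Condition means relative to the grand mean, plus CI half-widths.

    values: array of shape (n_subjects, n_conditions) at one voxel.
    t_crit: critical t for the desired interval (about 1.782 for a
            two-sided 90% interval with 12 df).
    """
    values = np.asarray(values, float)
    n = values.shape[0]
    means = values.mean(axis=0)
    centred = means - means.mean()          # profile now sums to zero
    sem = values.std(axis=0, ddof=1) / np.sqrt(n)
    return centred, t_crit * sem

rng = np.random.default_rng(2)
voxel = rng.normal(loc=[1.0, -1.0, 0.5, 0.0, 0.2], size=(13, 5))  # simulated
centred, half_width = centred_profile(voxel, t_crit=1.782)
for name, m, h in zip(CONDITIONS, centred, half_width):
    print(f"{name:7s} {m:+.2f} +/- {h:.2f}")
```

Subtracting the grand mean shifts every column equally, so it changes where the bars sit but not the widths of the intervals.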

Discussion

This study demonstrated a system for language processing within which activity was independent of the speech phase, comprehension or production. This effect was most apparent in left anterior temporal neocortex, consistent with our previous studies that separately investigated language comprehension (Spitsyna et al., 2006) and speech production (Blank et al., 2002). This consistency across studies also extended to the left TOP junction.

A potential confound was that speech production is associated with the perception of one's own voice. However, consistent with previous studies that investigated activity within the superior temporal gyri in response to one's own utterances (Hirano et al., 1996; Paus et al., 1996; Wise et al., 1999), activity within auditory cortex was low during speech production. Therefore, the response of the anterior temporal cortex and TOP junction during propositional speech can be attributed to processes other than auditory sensory processing.

Patients with semantic dementia demonstrate a progressive amodal impairment of semantic knowledge (Hodges et al., 1992; Bozeat et al., 2000), and this is associated with progressive atrophy of the anterior, inferior and medial temporal lobes (Chan et al., 2001; Davies et al., 2005). Based on this evidence, a computational model of semantic cognition locates the neural instantiation of semantic “hidden units” within anterior temporal cortex. These units represent neurons that map external stimuli, including words, on to distributed representations of semantic knowledge (McClelland and Rogers, 2003; Rogers et al., 2004). The results presented here indicate that the function of anterior temporal neurons extends to retrieval of knowledge during speech production. This inference accords with the semantically “empty” spontaneous speech of semantic dementia patients (Bird et al., 2000), and with anterior temporal activity observed during task-dependent retrieval of the meaning of single nouns (Marinkovic et al., 2003; Scott et al., 2003; Sharp et al., 2004).
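The notion of amodal "hidden units" can be illustrated with a toy network in which two modality-specific input pathways converge on one shared hidden layer that maps onto a distributed semantic vector. This is a hypothetical, untrained sketch with random weights, not the McClelland and Rogers model itself; it shows only that silencing shared hidden units perturbs the semantic output for both input modalities, the pattern expected of an amodal deficit.

```python
import numpy as np

rng = np.random.default_rng(1)
N_WORD, N_PICTURE, N_HIDDEN, N_SEMANTIC = 6, 10, 8, 12

# Two modality-specific pathways converge on ONE shared hidden layer,
# which projects onto a distributed semantic representation.
W_word = rng.normal(size=(N_HIDDEN, N_WORD))
W_picture = rng.normal(size=(N_HIDDEN, N_PICTURE))
W_semantic = rng.normal(size=(N_SEMANTIC, N_HIDDEN))

def semantic_output(x, w_in, hidden_mask):
    """Forward pass; hidden units where hidden_mask == 0 are 'lesioned'."""
    hidden = np.tanh(w_in @ x) * hidden_mask
    return np.tanh(W_semantic @ hidden)

word = rng.normal(size=N_WORD)
picture = rng.normal(size=N_PICTURE)
intact = np.ones(N_HIDDEN)
lesioned = intact.copy()
lesioned[: N_HIDDEN // 2] = 0.0  # silence half of the shared hidden units

errors = {}
for name, x, w_in in (("word", word, W_word), ("picture", picture, W_picture)):
    change = semantic_output(x, w_in, intact) - semantic_output(x, w_in, lesioned)
    errors[name] = float(np.mean(np.abs(change)))
print(errors)  # nonzero for both input modalities
```

A lesion confined to one input pathway (for example, zeroing `W_word`) would instead leave picture-driven output untouched, which is the contrast between a modality-specific and an amodal impairment.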

There is an important caveat. A number of imaging studies have attributed to anterior temporal cortex a role in the processing of sentences and narrative speech (Mazoyer et al., 1993; Vandenberghe et al., 2002; Humphries et al., 2005, 2006). Because patients with semantic dementia retain the ability to detect grammatical violations in the speech of others and to avoid them in their own utterances (Hodges et al., 1992; Gorno-Tempini et al., 2004), it is improbable that representations of syntactic rules are located in anterior temporal cortex. Nevertheless, this region responds less to words presented randomly than to coherent sentences and narratives (Xu et al., 2005). During sentence processing, access to lexical meaning and parsing are not independent processes (MacDonald et al., 1994; Traxler and Tooley, 2007), and the integration of the contributions to meaning at the lexical, sentential, and narrative levels modulates brain activity (Xu et al., 2005). Therefore, the activity observed within anterior temporal cortex during speech comprehension and production most probably reflected the net sum of an interaction between lexical and syntactic processes, rather than access to lexical semantics alone.

The syndrome of transcortical sensory aphasia after stroke is also associated with poor language comprehension and “semantically empty” speech production. In contrast to semantic dementia, this syndrome has been attributed to lesions of inferior parietal and posterior temporal cortex (Alexander et al., 1989; Berthier, 1999). Based on our data, a more precise location may be the left TOP junction. The functions of anterior temporal cortex and the TOP junction are unlikely to be identical: poor comprehension could result from damage to amodal semantic representations, or from impaired task-dependent access to the semantic system (Jefferies and Lambon-Ralph, 2006). Nevertheless, a functional link between the TOP junction and anterior temporal cortex can be inferred from their connection via the middle longitudinal fasciculus (Schmahmann and Pandya, 2006). As a consequence, a temporoparietal lesion may affect integrated function along the posterior-anterior axis of the temporal lobe (Crinion et al., 2005). In terms of recovery from brain injury, activity within anterior and posterior, and left and right, temporal cortical areas may be compensatory. Thus, transcortical sensory aphasia after a left temporoparietal stroke is usually transient (Berthier, 1999), and a left anterior temporal lobectomy performed to alleviate intractable temporal lobe seizures has a relatively minor impact on language (Hermann and Wyler, 1988; Davies et al., 1995). In contrast, in semantic dementia, the loss of semantic knowledge becomes most evident when both left and right anterior temporal cortex are atrophic (Lambon-Ralph et al., 2001; Nestor et al., 2006).

Within the anterior fusiform gyrus, there was a strong response to hearing speech. The medial border of the anterior fusiform gyrus is the site of transition from unimodal visual association cortex to heteromodal perirhinal cortex (Insausti et al., 1998). Because the stimulus was auditory, the response to heard speech suggests that the signal from the anterior fusiform gyrus originated in heteromodal perirhinal cortex rather than in unimodal visual association cortex; perirhinal cortex forms part of a synaptic hierarchy between sensory cortex and the hippocampus that links perception to memory (Squire et al., 2004).

The response of the hippocampi during listening to speech can be attributed to encoding of the novel narrative information, with incidental associative memory retrieval; for example, hearing about someone's holiday may trigger recollections of visiting the same destination. The response to rotated speech is compatible with the establishment of auditory recognition memory in response to a novel auditory stimulus (Squire et al., 2001). An additional explanation is activity associated with intrusive stimulus-independent thoughts. The lack of response to propositional speech was unexpected. Factors that were not controlled in this study, such as the intensity of “reliving” an experience during recall, may affect hippocampal activity (Svoboda et al., 2006). Nevertheless, analysis of samples of the subjects' speech demonstrated that there was no lack of self-referential detail. Patients with isolated hippocampal pathology have impaired retrieval of memories encoded before the injury occurred (Spiers et al., 2001; Chan et al., 2007), and so the null result in the left and right hippocampus cannot be taken to mean that the hippocampi are not involved in narrative production. Furthermore, other imaging studies specifically investigating autobiographical memory retrieval have demonstrated a hippocampal response, although some studies may have confounded retrieval with incidental encoding of the stimuli used to elicit retrieval (Stark and Okado, 2003). Speech production is also accompanied by encoding; we remember what we have said. Nevertheless, whatever the balance between mnemonic encoding and retrieval during the two halves of discourse, this study has demonstrated that net synaptic activity within the hippocampi is low during propositional speech.

The functional dissociation within the hippocampi was not present elsewhere in the limbic system: activity within the left parahippocampal area and retrosplenial/posterior cingulate cortex was independent of speech phase. The latter region is affected early in the course of Alzheimer's disease (Mosconi et al., 2004; Godbolt et al., 2006), a condition characterized by impaired memory encoding and retrieval, and the degree of impairment correlates with local metabolism (Nestor et al., 2006). Additional evidence for the mnemonic role of this region comes from the effects of focal lesions [for a discussion of “retrosplenial amnesia” and its relation to the anatomical connections of this area, see Kobayashi and Amaral (2000, 2003)].

Communication depends on a coherent and logical relationship among elements of information that unfold over time, and on knowing how this construct relates to the way the world works, both in general and in relation to oneself and one's interactions with others. Such diverse functions have been related to medial prefrontal activity (Frith and Frith, 1999; Ferstl and von Cramon, 2001, 2002; Gusnard et al., 2001; Scott et al., 2003; Sharp et al., 2004; Xu et al., 2005; Svoboda et al., 2006). Although medial prefrontal function cannot be defined in terms of a single process, the frequency with which activity is observed in this region attests to its importance in the executive control of language and memory. The extensive medial prefrontal activity contrasted with the limited activity observed in the left inferior frontal gyrus. Within the latter region there are discrete neuronal populations that respond to sensory (auditory and visual) stimuli (Sugihara et al., 2006), articulatory movements (Blank et al., 2002), hand movements (Fadiga and Craighero, 2006) (the subjects made button presses during the numerical task), and language-related cognitive processes (Dapretto and Bookheimer, 1999). Thus, anatomical variability of the inferior frontal gyrus (Amunts et al., 1999), the spatial resolution of PET, and the contrasts used in this study may have masked the responses of closely adjacent but functionally independent populations of inferior frontal neurons.

In summary, we have demonstrated convergence of speech comprehension and production within heteromodal and amodal neocortical and limbic systems supporting declarative memory. This result corresponds closely with our previous study, which demonstrated that the anterolateral temporal cortex and the TOP junction respond to the perception and automatic comprehension of both spoken and written narratives (Spitsyna et al., 2006). Separation of communication-related semantic and episodic memory processes can be inferred from converging clinical evidence, with semantic functions attributable to both anterior and posterior temporal cortex and episodic memory functions to the medial temporal lobes and retrosplenial/posterior cingulate cortex.

Footnotes

This work was supported by the Wellcome Trust, United Kingdom (J.E.W.), and S.K.S. is a Wellcome Trust Senior Fellow. This work was conducted in collaboration with Imanet, GE Healthcare (Little Chalfont, UK).

References

- Alexander MP, Hiltbrunner B, Fischer RS. Distributed anatomy of transcortical sensory aphasia. Arch Neurol. 1989;46:885–892. doi: 10.1001/archneur.1989.00520440075023.

- Amunts K, Schleicher A, Burgel U, Mohlberg H, Uylings HB, Zilles K. Broca's region revisited: cytoarchitecture and intersubject variability. J Comp Neurol. 1999;412:319–341. doi: 10.1002/(sici)1096-9861(19990920)412:2<319::aid-cne10>3.0.co;2-7.

- Berthier ML. Transcortical aphasias. Hove, UK: Psychology; 1999.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PSF, Rao SM, Cox RW. Conceptual processing during the conscious resting state: a functional MRI study. J Cogn Neurosci. 1999;11:80–93. doi: 10.1162/089892999563265.

- Bird H, Lambon Ralph MA, Patterson MA, Patterson K, Hodges JR. The rise and fall of frequency and imageability: noun and verb production in semantic dementia. Brain Lang. 2000;73:17–49. doi: 10.1006/brln.2000.2293.

- Blank SC, Scott SK, Murphy K, Warburton E, Wise RJ. Speech production: Wernicke, Broca and beyond. Brain. 2002;125:1829–1838. doi: 10.1093/brain/awf191.

- Blesser B. Speech perception under conditions of spectral transformation. I. Phonetic characteristics. J Speech Hear Res. 1972;15:5–41. doi: 10.1044/jshr.1501.05.

- Bozeat S, Lambon Ralph MA, Patterson K, Garrard P, Hodges JR. Non-verbal semantic impairment in semantic dementia. Neuropsychologia. 2000;38:1207–1215. doi: 10.1016/s0028-3932(00)00034-8.

- Brett M, Anton JL, Valabregue R, Poline JB. Region of interest analysis using an SPM toolbox. NeuroImage. 2002;16:497.

- Chan D, Fox NC, Scahill RI, Crum WR, Whitwell JL, Leschziner G, Rossor AM, Stevens JM, Cipolotti L, Rossor MN. Patterns of temporal lobe atrophy in semantic dementia and Alzheimer's disease. Ann Neurol. 2001;49:433–442.

- Chan D, Henley SM, Rossor MN, Warrington EK. Extensive and temporally ungraded retrograde amnesia in encephalitis associated with antibodies to voltage-gated potassium channels. Arch Neurol. 2007;64:404–410. doi: 10.1001/archneur.64.3.404.

- Crinion JT, Lambon Ralph MA, Warburton EA, Howard D, Wise RJ. Temporal lobe regions engaged during normal speech comprehension. Brain. 2003;126:1193–1201. doi: 10.1093/brain/awg104.

- Crinion JT, Warburton EA, Lambon Ralph MA, Howard D, Wise RJ. Listening to narrative speech after aphasic stroke: the role of the left anterior temporal lobe. Cereb Cortex. 2005;16:1116–1125. doi: 10.1093/cercor/bhj053.

- Dapretto M, Bookheimer SY. Form and content: dissociating syntax and semantics in sentence comprehension. Neuron. 1999;24:427–432. doi: 10.1016/s0896-6273(00)80855-7.

- Davies KG, Maxwell RE, Beniak TE, Destafney E, Fiol ME. Language function after temporal lobectomy without stimulation mapping of cortical function. Epilepsia. 1995;36:130–136. doi: 10.1111/j.1528-1157.1995.tb00971.x.

- Davies RR, Hodges JR, Kril JJ, Patterson K, Halliday GM, Xuereb JH. The pathological basis of semantic dementia. Brain. 2005;128:1984–1995. doi: 10.1093/brain/awh582.

- Fadiga L, Craighero L. Hand actions and speech representation in Broca's area. Cortex. 2006;42:486–490. doi: 10.1016/s0010-9452(08)70383-6.

- Ferstl EC, von Cramon DY. The role of coherence and cohesion in text comprehension: an event-related fMRI study. Cogn Brain Res. 2001;11:325–340. doi: 10.1016/s0926-6410(01)00007-6.

- Ferstl EC, von Cramon DY. What does the frontomedian cortex contribute to language processing: coherence or theory of mind? NeuroImage. 2002;17:1599–1612. doi: 10.1006/nimg.2002.1247.

- Friston KJ, Price CJ, Fletcher P, Moore C, Frackowiak RS, Dolan RJ. The trouble with cognitive subtraction. NeuroImage. 1996;4:97–104. doi: 10.1006/nimg.1996.0033.

- Frith C, Frith U. Interacting minds—a biological basis. Science. 1999;286:1692–1695. doi: 10.1126/science.286.5445.1692.

- Fuster JM. The prefrontal cortex: anatomy, physiology, and neurophysiology of the frontal lobe. Ed 3. Philadelphia: Lippincott-Raven; 1997.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.

- Godbolt AK, Waldman AD, MacManus DG, Schott JM, Frost C, Cipolotti L, Fox NC, Rossor MN. MRS shows abnormalities before symptoms in familial Alzheimer disease. Neurology. 2006;66:718–722. doi: 10.1212/01.wnl.0000201237.05869.df.

- Gorno-Tempini ML, Dronkers NF, Rankin KP, Ogar JM, Phengrasamy L, Rosen HJ, Johnson JK, Weiner MW, Miller BL. Cognition and anatomy in three variants of primary progressive aphasia. Ann Neurol. 2004;55:335–346. doi: 10.1002/ana.10825.

- Gusnard D, Raichle ME. Searching for a baseline: functional imaging and the resting human brain. Nat Rev Neurosci. 2001;2:685–694. doi: 10.1038/35094500.

- Gusnard D, Akbudak E, Shulman GL, Raichle ME. Medial prefrontal cortex and self-referential mental activity: relation to a default mode of brain function. Proc Natl Acad Sci USA. 2001;98:4259–4264. doi: 10.1073/pnas.071043098.

- Hammers A, Allom R, Koepp MJ, Free SL, Myers R, Lemieux L, Mitchell TN, Brooks DJ, Duncan JS. Three-dimensional maximum probability atlas of the human brain, with particular reference to the temporal lobe. Hum Brain Mapp. 2003;19:224–247. doi: 10.1002/hbm.10123.

- Hermann BP, Wyler AR. Effects of anterior temporal lobectomy on language function: a controlled study. Ann Neurol. 1988;23:585–588. doi: 10.1002/ana.410230610.

- Hirano S, Kojima H, Naito Y, Honjo I, Kamoto Y, Okazawa H, Ishizu K, Yonekura Y, Nagahama Y, Fukuyama H, Konishi J. Cortical speech processing mechanisms while vocalizing visually presented languages. NeuroReport. 1996;8:363–367. doi: 10.1097/00001756-199612200-00071.

- Hodges JR, Patterson K, Oxbury S, Funnell E. Semantic dementia: progressive fluent aphasia with temporal lobe atrophy. Brain. 1992;115:1783–1806. doi: 10.1093/brain/115.6.1783.

- Humphries C, Love T, Swinney D, Hickok G. Response of anterior temporal cortex to syntactic and prosodic manipulations during sentence processing. Hum Brain Mapp. 2005;26:128–138. doi: 10.1002/hbm.20148.

- Humphries C, Binder JR, Medler DA, Liebenthal E. Syntactic and semantic modulation of neural activity during auditory sentence comprehension. J Cogn Neurosci. 2006;18:665–679. doi: 10.1162/jocn.2006.18.4.665.

- Insausti R, Juottonen K, Soininen H, Insausti AM, Partanen K, Vainio P, Laakso MP, Pitkanen A. MR volumetric analysis of the human entorhinal, perirhinal, and temporopolar cortices. Am J Neuroradiol. 1998;19:659–671.

- Jefferies E, Lambon Ralph MA. Semantic impairment in stroke aphasia versus semantic dementia: a case-series comparison. Brain. 2006;129:2132–2147. doi: 10.1093/brain/awl153.

- Kobayashi Y, Amaral DG. Macaque monkey retrosplenial cortex: I. Three-dimensional and cytoarchitectonic organization. J Comp Neurol. 2000;426:339–365. doi: 10.1002/1096-9861(20001023)426:3<339::aid-cne1>3.0.co;2-8.

- Kobayashi Y, Amaral DG. Macaque monkey retrosplenial cortex: II. Cortical afferents. J Comp Neurol. 2003;466:48–79. doi: 10.1002/cne.10883.

- Kohler E, Keysers C, Umiltà MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297:846–848. doi: 10.1126/science.1070311.

- Lambon-Ralph MA, McClelland JL, Patterson K, Galton CJ, Hodges JR. No right to speak? The relationship between object naming and semantic impairment: neuropsychological evidence and a computational model. J Cogn Neurosci. 2001;13:341–356. doi: 10.1162/08989290151137395.

- Liberman A, Whalen D. On the relation of speech to language. Trends Cogn Sci. 2000;4:187–196. doi: 10.1016/s1364-6613(00)01471-6.

- MacDonald MC, Pearlmutter NJ, Seidenberg MS. Lexical nature of syntactic ambiguity resolution. Psychol Rev. 1994;101:676–703. doi: 10.1037/0033-295x.101.4.676.

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E. Spatiotemporal dynamics of modality-specific and supramodal word processing. Neuron. 2003;38:487–497. doi: 10.1016/s0896-6273(03)00197-1.

- Mazoyer B, Dehaene S, Tzourio N, Frak V, Murayama N, Cohen L, Levrier O, Salamon G, Syrota A, Mehier J. The cortical representation of speech. J Cogn Neurosci. 1993;5:467–479. doi: 10.1162/jocn.1993.5.4.467.

- Mazoyer B, Zago L, Mellet E, Bricogne S, Etard O, Houde O, Crivello F, Joliot M, Petit L, Tzourio-Mazoyer N. Cortical networks for working memory and executive functions sustain the conscious resting state in man. Brain Res Bull. 2001;54:287–298. doi: 10.1016/s0361-9230(00)00437-8.

- McClelland JL, Rogers TT. The parallel distributed processing approach to semantic cognition. Nat Rev Neurosci. 2003;4:310–322. doi: 10.1038/nrn1076.

- Mesulam MM. From sensation to cognition. Brain. 1998;121:1013–1052. doi: 10.1093/brain/121.6.1013.

- Mosconi L, Perani D, Sorbi S, Herholz K, Nacmias B, Holthoff V, Salmon E, Baron JC, De Cristofaro MT, Padovani A, Borroni B, Franceschi M, Bracco L, Pupi A. MCI conversion to dementia and the APOE genotype: a prediction study with FDG-PET. Neurology. 2004;63:2332–2340. doi: 10.1212/01.wnl.0000147469.18313.3b.

- Nestor PJ, Fryer TD, Hodges JR. Declarative memory impairments in Alzheimer's disease and semantic dementia. NeuroImage. 2006;30:1010–1020. doi: 10.1016/j.neuroimage.2005.10.008.

- Nichols T, Brett M, Andersson J, Wagner T, Poline JB. Valid conjunction inference with the minimum statistic. NeuroImage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Paus T, Perry DW, Zatorre RJ, Worsley KJ, Evans AC. Modulation of cerebral blood flow in the human auditory cortex during speech: role of motor-to-sensory discharges. Eur J Neurosci. 1996;8:2236–2246. doi: 10.1111/j.1460-9568.1996.tb01187.x.

- Penhune VB, Zatorre RJ, MacDonald JD, Evans AC. Interhemispheric anatomical differences in human primary auditory cortex: probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- Pulvermüller F, Huss F, Kherif F, Moscoso del Prado Martin F, Hauk O, Shtyrov Y. Motor cortex maps articulatory features of speech sounds. Proc Natl Acad Sci USA. 2006;103:7865–7870. doi: 10.1073/pnas.0509989103.

- Rizzolatti G, Arbib MA. Language within our grasp. Trends Neurosci. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.

- Rogers TT, Lambon-Ralph MA, Garrard P, Bozeat S, McClelland JL, Hodges JR, Patterson K. Structure and deterioration of semantic memory: a neuropsychological and computational investigation. Psychol Rev. 2004;111:205–235. doi: 10.1037/0033-295X.111.1.205.

- Schmahmann JD, Pandya DN. Fiber pathways of the brain. Oxford: Oxford UP; 2006.

- Scott SK, Blank SC, Rosen S, Wise RJ. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- Scott SK, Leff AP, Wise RJ. Going beyond the information given: a neural system supporting semantic interpretation. NeuroImage. 2003;19:870–876. doi: 10.1016/s1053-8119(03)00083-1.

- Sharp DJ, Scott SK, Wise RJ. Monitoring and the controlled processing of meaning: distinct prefrontal systems. Cereb Cortex. 2004;14:1–10. doi: 10.1093/cercor/bhg086.

- Spiers HJ, Maguire EA, Burgess N. Hippocampal amnesia. Neurocase. 2001;7:357–382. doi: 10.1076/neur.7.5.357.16245.

- Spitsyna G, Warren JE, Scott SK, Turkheimer FE, Wise RJS. Converging language streams in the human temporal lobe. J Neurosci. 2006;26:7328–7336. doi: 10.1523/JNEUROSCI.0559-06.2006.

- Squire LR, Schmolck H, Stark SM. Impaired auditory recognition memory in amnesic patients with medial temporal lobe lesions. Learn Mem. 2001;8:252–256. doi: 10.1101/lm.42001.

- Squire LR, Stark CE, Clark RE. The medial temporal lobe. Annu Rev Neurosci. 2004;27:279–306. doi: 10.1146/annurev.neuro.27.070203.144130.

- Stark CE, Squire LR. When zero is not zero: the problem of ambiguous baseline conditions in fMRI. Proc Natl Acad Sci USA. 2001;98:12760–12766. doi: 10.1073/pnas.221462998.

- Stark CE, Okado Y. Making memories without trying: medial temporal lobe activity associated with incidental memory formation during recognition. J Neurosci. 2003;23:6748–6753. doi: 10.1523/JNEUROSCI.23-17-06748.2003.

- Sugihara T, Diltz MD, Averbeck BB, Romanski LM. Integration of auditory and visual communication in the primate ventrolateral prefrontal cortex. J Neurosci. 2006;26:11138–11147. doi: 10.1523/JNEUROSCI.3550-06.2006.

- Svoboda E, McKinnon MC, Levine B. The functional neuroanatomy of autobiographical memory: a meta-analysis. Neuropsychologia. 2006;44:2189–2208. doi: 10.1016/j.neuropsychologia.2006.05.023.

- Traxler MJ, Tooley KM. Lexical mediation and context effects in sentence processing. Brain Res. 2007;1146:59–74. doi: 10.1016/j.brainres.2006.10.010.

- Vandenberghe R, Nobre AC, Price CJ. The response of left temporal cortex to sentences. J Cogn Neurosci. 2002;14:550–560. doi: 10.1162/08989290260045800.

- Westbury CF, Zatorre RJ, Evans AC. Quantifying variability in the planum temporale: a probability map. Cereb Cortex. 1999;9:392–405. doi: 10.1093/cercor/9.4.392.

- Wilson SM, Saygin AP, Sereno MI, Iacoboni M. Listening to speech activates motor areas involved in speech production. Nat Neurosci. 2004;7:701–702. doi: 10.1038/nn1263.

- Wise RJ, Greene J, Büchel C, Scott SK. Brain regions involved in articulation. Lancet. 1999;353:1057–1061. doi: 10.1016/s0140-6736(98)07491-1.

- Xu J, Kemeny S, Park G, Frattali C, Braun A. Language in context: emergent features of word, sentence and narrative comprehension. NeuroImage. 2005;25:1002–1015. doi: 10.1016/j.neuroimage.2004.12.013.