Abstract

The goal of this study was to characterize multisensory interaction patterns in the cortical ventral intraparietal area (VIP). We recorded single-unit activity in two alert monkeys during the presentation of visual (drifting gratings) and tactile (low-pressure air puffs) stimuli. One stimulus was always positioned inside the receptive field of the neuron; the other was positioned so as to manipulate the spatial and temporal disparity between the two stimuli. More than 70% of VIP cells showed a significant modulation of their response by bimodal stimulation. These cells included both bimodal cells, i.e., cells responsive to both tested modalities, and seemingly unimodal cells, i.e., cells responding to only one of the two tested modalities. This latter observation suggests that latent postsynaptic mechanisms are involved in multisensory integration. In both cell categories, neuronal responses are either enhanced or depressed and reflect sub-additive, additive, or super-additive mechanisms. These effects occur most often when stimuli are in temporal synchrony and spatially congruent. Interestingly, introducing spatial or temporal disparities between stimuli does not affect the sign or the magnitude of interactions but rather their occurrence. Multisensory stimulation also affects neuronal response latencies: for a given neuron, the latency to bimodal stimuli is on average intermediate between the two unimodal response latencies, again suggesting latent postsynaptic mechanisms. In summary, we show that the majority of VIP neurons perform multisensory integration, following general rules (e.g., spatial congruency and temporal synchrony) that closely resemble those described in other cortical and subcortical regions.

Keywords: multisensory integration, posterior parietal cortex, neurophysiology, visual, tactile, macaque monkey

Introduction

Multisensory integration is the process by which sensory information coming from different sensory channels is combined at the level of the single neuron. It is thought to improve the detection, localization, and discrimination of external stimuli and to produce faster reaction times (Welch and Warren, 1986; Giard and Peronnet, 1999).

This process requires the convergence of inputs from different sensory modalities on the same neurons. Such a convergence has been described in parietal area 7b (Hyvärinen, 1981; Graziano and Gross, 1995), in the ventral intraparietal area (VIP) (Colby et al., 1993; Duhamel et al., 1998; Bremmer et al., 2002; Schlack et al., 2002, 2005), in the ventral premotor area (Rizzolatti et al., 1981; Graziano and Gross, 1995; Graziano et al., 1999; Graziano and Gandhi, 2000), in the superior temporal sulcus (Benevento et al., 1977; Leinonen et al., 1980; Bruce et al., 1981; Hikosaka et al., 1988), in the putamen (Graziano and Gross, 1995), and in the superior colliculus (SC) (Meredith and Stein, 1986a; Stein and Meredith, 1993; Wallace et al., 1996; Bell et al., 2001, 2003).

The neural bases of multisensory integration have been studied in great detail in the superior colliculus (for review, see Stein and Meredith, 1993). The responses of its neurons to bimodal stimuli are either enhanced or depressed with respect to their unimodal responses (Meredith and Stein, 1983; King and Palmer, 1985; Bell et al., 2001), reflecting nonlinear effects (Meredith and Stein, 1983) that depend on stimulus properties such as temporal synchrony, spatial congruence (Meredith and Stein, 1986b, 1996; Meredith et al., 1987; Kadunce et al., 1997), and stimulus efficacy (Meredith and Stein, 1986a; Perrault et al., 2003, 2005), but also on the state of alertness and behavioral engagement of the animal (Populin and Yin, 2002; Bell et al., 2003).

In the present study, we report the correlates of multisensory integration in the spiking activity of single VIP neurons in awake behaving monkeys engaged in a central fixation task while visual and/or tactile stimuli are presented in the near space around the animal's face. VIP has been shown to be a site of multisensory convergence (Colby et al., 1993; Duhamel et al., 1998; Bremmer et al., 2002; Schlack et al., 2002, 2005; Schroeder and Foxe, 2002). We show that the majority of VIP neurons integrate multisensory stimuli. This process can take two major forms. (1) The response of a neuron to bimodal stimuli differs from its responses to the unimodal stimuli and from the sum of these unimodal responses, reflecting a nonlinear process. Interestingly, this process is seen in both bimodal and seemingly unimodal neurons, suggesting that latent postsynaptic mechanisms are at play. (2) The response latency of a neuron to bimodal stimuli is intermediate between its unimodal response latencies. Consistent with the behavioral effects of bimodality (Welch and Warren, 1986; Giard and Peronnet, 1999), multisensory integration is maximized by synchronous and spatially congruent bimodal stimulation.

Parts of this work have been published previously in abstract form (Avillac et al., 2003).

Materials and Methods

Surgical preparation.

Two adult rhesus monkeys (Macaca mulatta, monkeys N and M), weighing 4.5 and 6.5 kg, were used in this study. Procedures were approved by the local Animal Care Committee in compliance with the guidelines of the European Community on Animal Care. Animals were prepared for chronic recording of eye position and single-neuron activity in VIP during a single surgery, in which anesthesia was induced with Zoletil 20 (6 mg/kg) and atropine (0.25 mg) and maintained with isoflurane (2.5%). A search coil was implanted subconjunctivally to measure eye position (Judge et al., 1980), and both a head-restraint post and a stainless steel recording chamber were embedded into an acrylic skull implant. The recording chamber was positioned over a craniotomy centered on the intraparietal sulcus (IPS) at P5 L12 (left and right hemispheres in monkey N, left hemisphere in monkey M); the chamber was mounted flat on the skull so that electrode penetrations were approximately parallel to the orientation of the IPS surface.

General experimental procedures.

Throughout the experiments, the monkeys were seated in a primate chair with the head restrained. The experiments were conducted in a dark room. The monkeys faced a tangent liquid crystal display screen (Iiyama, Oude Meer, The Netherlands; resolution, 1024 × 768 pixels) placed 25 cm away, which spanned a 72 × 30° area of the central visual field in which visual stimuli were delivered. Eye movements were recorded with the magnetic search coil technique (Primelec, Regensdorf, Switzerland). Tactile stimuli consisted of brief air puffs delivered through needles oriented toward the animal's face. Behavioral paradigms, visual displays, and storage of both neuronal discharge and eye movements were under the control of a computer running a real-time data acquisition system (REX) (Hays et al., 1982). Single-neuron activity was recorded extracellularly with tungsten microelectrodes (1–2 MΩ at 1 kHz; Frederick Haer Company, Bowdoinham, ME), which were lowered into VIP through 23-gauge stainless steel guide tubes set inside a delrin grid (Crist Instruments, Hagerstown, MD) adapted to the recording chamber. The electrode signal was amplified using a Neurolog system (Digitimer, Hertfordshire, UK) and digitized for on-line spike discrimination using the MSD software (Alpha Omega, Nazareth, Israel). Discriminated spike pulses were sampled at 1 kHz and stored on the REX personal computer along with behavioral and stimulation data for off-line analysis.

VIP is located in the fundus of the IPS, ∼7 mm from the cortical surface along electrode penetrations made parallel to the sulcus. VIP was identified on the basis of a set of physiological criteria described previously (Colby et al., 1993; Duhamel et al., 1998). Penetrations reaching VIP from the lateral bank of the IPS show a transition from simple visual and saccade-related activity, specific to the lateral intraparietal area, to direction-selective visual responses, often accompanied by direction-selective somatosensory responses on the face and head. Penetrations reaching VIP from the medial bank of the IPS show a transition from purely hand or arm somatosensory activity to direction-selective visual and tactile responses. Visual receptive fields are generally large (10–30°), central or peripheral, and often bilateral, whereas tactile receptive fields are mostly located on the head and face. Vestibular (Bremmer et al., 2002; Schlack et al., 2002) and auditory (Schlack et al., 2005) responses have also been described in VIP.

Sensory stimulation procedures.

Quantitative data on visual, tactile, and bimodal responses were obtained while the monkeys were engaged in a visual fixation task. The monkeys fixated a 0.1 × 0.1° spot of light for a randomly varying duration (2000–3000 ms) while different configurations of visual and/or tactile stimuli were presented. These stimuli were task irrelevant, and the monkeys were rewarded with a drop of liquid for maintaining the eyes within a 2.5 × 2.5° window around the fixation spot. Sensory stimuli were presented 500–900 ms after the onset of fixation and lasted either 200 or 500 ms.

Multisensory interactions were investigated using a standard set of visual and tactile stimuli. Visual stimuli consisted of oriented gratings of 9 × 9°. Orientation (0, 45, 90, 135, 180, or 270°), motion direction, and velocity (2.15, 4.3, or 8.6°/s, based on a frame rate of 60 Hz) were adjusted to be optimal for each recorded neuron. The grating was presented at the center of the visual receptive field of the neuron, whose location and size had first been determined manually with an ophthalmoscope.

Tactile stimuli consisted of brief, light air puffs applied to specific locations of the monkey's face through a system of compressed air, computer-controlled solenoids, Teflon tubing, and stainless steel needles (diameter of 0.8 mm). Two needles were mounted inside two articulated arms (diameter of 0.7–1.5 cm) on either side of the monkey's face, 0.5 cm away from it. This apparatus made it possible to stimulate any region of the face. As for the visual receptive field, the tactile receptive field of the neuron was hand mapped on the monkey's face using the tip of a cotton applicator, to define appropriate locations for positioning the tactile stimulation device. Air exiting the needles, 0.5 cm from the skin surface, was set at a pressure of 0.7 bar. This was sufficient to elicit reliable neuronal responses (Avillac et al., 2005) without provoking saccades, eye blinks, or other observable aversive reactions that may occur with air puffs applied at higher pressure (1.0 bar) and from a greater distance from the face (5 cm) (Cooke and Graziano, 2003). Because auditory responses have been reported in VIP (Schlack et al., 2005), white noise was continuously delivered through headphones to mask any air-puff noise.

Multisensory stimulus configurations.

Once a neuron was isolated, its visual and tactile receptive fields were mapped, and two different multisensory stimulation procedures were successively conducted to measure the effects of (1) temporal and (2) spatial relationships between the visual and tactile stimuli. In the “temporal asynchrony” paradigm, the stimuli were applied at spatially congruent locations either simultaneously or with an onset time difference of 50, 100, or 200 ms. The visual stimulus could lead the tactile stimulus or vice versa, thus defining seven asynchrony conditions (synchrony plus three delays in each order). In the “spatial congruence” paradigm, visual and tactile stimuli were presented simultaneously but at different locations relative to one another: one of the two stimuli was inside the receptive field of the neuron (generally contralateral to the recorded hemisphere), and the other was positioned either at the congruent location (same side of space) or at the incongruent location (mirror location on the opposite side of space).
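The seven asynchrony conditions are simply synchrony plus each of the three onset delays in both orders. The Python sketch below enumerates them; the sign convention (a positive stimulus onset asynchrony meaning that the visual stimulus leads) is an assumption made for illustration, not one stated in the text.

```python
# Onset delays (ms) used in the "temporal asynchrony" paradigm.
DELAYS_MS = (50, 100, 200)


def soa_conditions(delays=DELAYS_MS):
    """Return the signed stimulus onset asynchronies (ms), sorted.

    Assumed sign convention (illustrative only): positive = visual leads,
    negative = tactile leads, 0 = physical synchrony.
    """
    soas = {0}
    for d in delays:
        soas.add(d)    # visual stimulus leads the tactile one by d ms
        soas.add(-d)   # tactile stimulus leads the visual one by d ms
    return sorted(soas)


print(soa_conditions())       # [-200, -100, -50, 0, 50, 100, 200]
print(len(soa_conditions()))  # 7
```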

Temporal and spatial variations of bimodal stimulation were presented in distinct blocks of trials; each trial block also included unimodal stimulus presentations.

Data processing and statistical analyses.

Neuronal responses to stimuli were assessed by counting the number of spikes evoked in a poststimulus time window, time locked to the evoked response. This window was adapted to the temporal response profile of the neuron, i.e., tonic or phasic, on, off, or on/off.

Poststimulus discharge activity was compared with prestimulus activity by means of t tests (p ≤ 0.05 adjusted). Visual and tactile neuronal response latencies were estimated using a sliding-window ANOVA procedure (Ben Hamed et al., 2001) designed to detect a significant difference in the firing rate of a neuron between a period preceding the stimulus and a period following it. The firing rate measured in a 100-ms-wide fixed window preceding the stimulation was compared with the firing rate measured in a 20-ms-wide sliding poststimulus window. The latency of the response was defined as the sliding-window shift for which the probability level associated with the computed F ratio was ≤0.05.
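The latency procedure can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation of Ben Hamed et al. (2001): the 1-ms step, the 300-ms search range, and returning the first significant shift are assumptions, and all function names are hypothetical. Firing rates are computed per trial, and a two-group one-way ANOVA compares the 100-ms prestimulus rates with the rates in each 20-ms sliding window. To stay dependency-free, the F-test p value is obtained from the regularized incomplete beta function (continued-fraction evaluation); for non-degenerate data, `scipy.stats.f_oneway` would return the same F and p for two groups.

```python
import math


def _betacf(a, b, x, max_iter=200, eps=3e-14, tiny=1e-300):
    """Continued fraction for I_x(a, b), evaluated with the modified Lentz method."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:  # converged on the odd step
            break
    return h


def betainc_reg(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_sf(f, d1, d2):
    """Upper-tail probability P(F > f) for an F(d1, d2) distribution."""
    return betainc_reg(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def anova_two_groups(g1, g2):
    """One-way ANOVA on two groups of per-trial rates; returns (F, p)."""
    n1, n2 = len(g1), len(g2)
    m1, m2 = sum(g1) / n1, sum(g2) / n2
    grand = (sum(g1) + sum(g2)) / (n1 + n2)
    ss_between = n1 * (m1 - grand) ** 2 + n2 * (m2 - grand) ** 2
    ss_within = sum((v - m1) ** 2 for v in g1) + sum((v - m2) ** 2 for v in g2)
    df_within = n1 + n2 - 2
    if ss_within == 0.0:  # no trial-to-trial variability at all
        return (math.inf, 0.0) if ss_between > 0.0 else (0.0, 1.0)
    f = ss_between / (ss_within / df_within)  # between-groups df = 1
    return f, f_sf(f, 1, df_within)


def sliding_window_latency(trials, pre=(-100, 0), width=20, step=1,
                           max_shift=300, alpha=0.05):
    """First shift (ms) of a sliding window whose rate differs from baseline."""
    def rate(spikes, start, stop):
        count = sum(1 for s in spikes if start <= s < stop)
        return count * 1000.0 / (stop - start)  # spike times in ms -> spikes/s

    baseline = [rate(tr, *pre) for tr in trials]
    for shift in range(0, max_shift + 1, step):
        window = [rate(tr, shift, shift + width) for tr in trials]
        if anova_two_groups(baseline, window)[1] <= alpha:
            return shift
    return None  # no significant change within the search range


# Synthetic trials (hypothetical): irregular prestimulus firing, one spike per
# 20 ms after onset, plus a burst starting at 60 ms.
trials = []
for i in range(10):
    pre_spikes = [-90, -70, -50, -30] if i % 2 == 0 else [-95, -80, -65, -50, -35, -20]
    trials.append(pre_spikes + list(range(5, 300, 20)) + list(range(60, 100, 2)))
print(sliding_window_latency(trials))  # 41
```

With these synthetic trials, the first significant window starts at 41 ms although the burst begins at 60 ms, because the sketch reports the window start: the first 20-ms window that contains a burst spike already differs from baseline. The text does not state whether the window start, center, or end was reported, so this offset is a property of the sketch rather than of the original procedure.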

Multisensory integration was defined following the criteria commonly used in the literature (Meredith and Stein, 1986a; Wallace et al., 1996; Bell et al., 2001, 2003; Ghazanfar et al., 2005), as a significant difference between the bimodal response and the response evoked by the most effective unimodal stimulus (t test, p ≤ 0.05 adjusted). To further describe multisensory effects in VIP and compare these effects with observations made in other brain regions, we adapted two multisensory indices used in previous work. The first one (Meredith and Stein, 1983) reflects the enhancing or suppressive effects of adding a second stimulus modality and compares the bimodal response of the neuron ($\mathrm{Bi}$) with the maximal unimodal response ($\mathrm{Uni}_{\max}$):

$$\mathrm{Index}_1 = \frac{\mathrm{Bi} - \mathrm{Uni}_{\max}}{\mathrm{Uni}_{\max}} \times 100$$
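As a worked illustration of index 1, the Python sketch below uses made-up firing rates (not data from this study); the function name is hypothetical. It also makes the asymmetry of the classical index visible: doubling the best unimodal response yields +100%, whereas halving it yields only −50%.

```python
def index1(bi, uni_max):
    """Classical multisensory index (Meredith and Stein, 1983), in percent.

    bi: mean response to the bimodal stimulus (spikes/s).
    uni_max: mean response to the most effective unimodal stimulus (spikes/s).
    """
    return (bi - uni_max) / uni_max * 100.0


# Hypothetical rates: doubling vs. halving a 10 spikes/s unimodal response.
print(index1(20.0, 10.0))  # 100.0
print(index1(5.0, 10.0))   # -50.0
```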

The second index directly evaluates the super-additivity hypothesis by comparing the bimodal response with the sum of the two unimodal responses (V for visual, T for tactile) (King and Palmer, 1985; Populin and Yin, 2002; Perrault et al., 2005, their “multisensory contrast index”):

$$\mathrm{Index}_2 = \frac{\mathrm{Bi} - (V + T)}{V + T} \times 100$$

In this study, we chose to use both indices because they provide complementary information. However, we introduced two modifications to their calculation. The first modification was made to take into account the baseline activity of the neurons. Indeed, compared with neurons of the superior colliculus, cortical neurons exhibit substantial spontaneous activity. We reasoned that the measure of interest in assessing multisensory interaction effects is the comparison between the activity induced by a bimodal as opposed to a unimodal stimulus, not the absolute spike discharge. This is best captured by subtracting spontaneous from stimulus-evoked activity. Not doing so when the spontaneous activity of a neuron is high can bias indices toward weaker values and mask genuine enhancement or suppression effects (Stanford et al., 2005). We thus normalized each sensory response to bimodal, visual, and tactile stimuli with respect to baseline activity. Second, both indices have the disadvantage of being asymmetric: for example, an index of +50% and an index of −50% do not represent equivalent degrees of enhancement and suppression. To render the indices symmetric, we included the same factors in the numerator and the denominator. We redefined index 1 as follows:

$$\mathrm{Index}_{\mathrm{amplification}} = \frac{|\mathrm{Bi}| - |\mathrm{Uni}_{\max}|}{|\mathrm{Bi}| + |\mathrm{Uni}_{\max}|} \times 100$$

The sign of this amplification index describes two kinds of integrative responses: an enhancement of the neuronal bimodal response compared with the maximal unimodal response when the index is positive, and a depression when it is negative. Note that the index we used is slightly different from the one introduced by Meredith and Stein (1986a).
A value of 60% with this newly defined index corresponds to an amplification factor of 4, which would give a value of 300% with index 1 and would correspond to the high end of the effects reported in the studies cited above. We similarly redefined index 2 as follows:

$$\mathrm{Index}_{\mathrm{additive}} = \frac{|\mathrm{Bi}| - (|V| + |T|)}{|\mathrm{Bi}| + (|V| + |T|)} \times 100$$

Here a positive index corresponds to a super-additive process (i.e., the bimodal response is greater than the sum of the unimodal responses), and a negative one reflects sub-additive mechanisms (i.e., the bimodal response is less than the sum of the unimodal responses). The statistical significance of additivity was evaluated by computing a t test of the difference between the bimodal response and the arithmetical sum of unimodal responses, after subtraction of the spontaneous firing rate (Stanford et al., 2005). For each recorded neuron, the two indices were calculated for all stimulus pairs used in the study. The stimulus pair selected to represent effects at the population level and to classify the neurons was the one giving rise to the largest absolute index value. This corresponded to the “standard” stimulus arrangement (i.e., spatially congruent, synchronous visual and tactile stimulation) for 83% of cells, the asynchronous arrangement for 11% of cells, and the spatially noncongruent arrangement for 6% of cells.
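The two symmetric, baseline-corrected indices, and the correspondence between an index value and an amplification factor, can be sketched in Python as follows. The firing rates are made up for illustration, the function names are hypothetical, and taking $\mathrm{Uni}_{\max}$ as the unimodal response with the larger absolute baseline-corrected value is an assumption. For a ratio $r$ between the bimodal and reference responses, the symmetric index is $(r - 1)/(r + 1) \times 100$, so $r = 4$ gives 60%, whereas index 1 gives $(r - 1) \times 100 = 300\%$.

```python
def amplification_index(bi, v, t, spont):
    """Symmetric amplification index (%) on baseline-subtracted responses.

    Assumption: Uni_max is the unimodal response with the larger absolute
    deviation from spontaneous activity.
    """
    bi_, v_, t_ = abs(bi - spont), abs(v - spont), abs(t - spont)
    uni_max = max(v_, t_)
    return (bi_ - uni_max) / (bi_ + uni_max) * 100.0


def additive_index(bi, v, t, spont):
    """Symmetric additivity index (%) on baseline-subtracted responses."""
    bi_, v_, t_ = abs(bi - spont), abs(v - spont), abs(t - spont)
    return (bi_ - (v_ + t_)) / (bi_ + (v_ + t_)) * 100.0


def symmetric_from_ratio(r):
    """Symmetric index (%) for a bimodal/reference response ratio r."""
    return (r - 1.0) / (r + 1.0) * 100.0


# Hypothetical mean rates (spikes/s): bimodal 50, visual 30, tactile 20,
# spontaneous 10.
print(f"{amplification_index(50, 30, 20, 10):.1f}")  # 33.3
print(f"{amplification_index(50, 30, 20, 0):.1f}")   # 25.0 (baseline ignored)
print(f"{additive_index(50, 30, 20, 10):.1f}")       # 14.3
print(f"{symmetric_from_ratio(4):.1f}")              # 60.0 (index 1: 300%)
```

Ignoring the 10 spikes/s baseline lowers the amplification index from 33.3% to 25.0% in this example, which illustrates the bias toward weaker values that motivates the baseline subtraction. The same `symmetric_from_ratio` function reproduces the correspondences listed in the Figure 3 legend (ratios of 2, 3, and 5).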

Results

We recorded 150 cells in VIP in two behaving monkeys (96 in monkey N and 54 in monkey M). Most of the recorded neurons were bimodal visual–tactile (87 of 150, 58%), 35% were exclusively visual (53 of 150), and a minority was exclusively tactile (10 of 150, 7%) (t test, p ≤ 0.05 corrected).

Multisensory integration in response level of VIP cells

The majority of VIP cells (106 of 150, 71%) showed a response to bimodal stimuli significantly different from their response to the most effective unimodal stimulus (t test, p ≤ 0.05 corrected) and were thus defined as integrative. Multisensory integration took the form of either an enhancement (43 of 106, 41%) or a depression (63 of 106, 59%) of the response level (Table 1).

Table 1.

Multisensory integration effects on spike discharge rate

| | Unimodal cells | Bimodal cells | Total |
|---|---|---|---|
| Integrative cells | 42 | 64 | 106 |
| Depression | 28 | 35 | 63 |
| Depression, sub-additive | 26 | 31 | 57 |
| Depression, additive | 2 | 4 | 6 |
| Depression, super-additive | 0 | 0 | 0 |
| Enhancement | 14 | 29 | 43 |
| Enhancement, sub-additive | 2 | 3 | 5 |
| Enhancement, additive | 2 | 13 | 15 |
| Enhancement, super-additive | 10 | 13 | 23 |
| Non-integrative cells | 21 | 23 | 44 |
| Total | 63 | 87 | 150 |

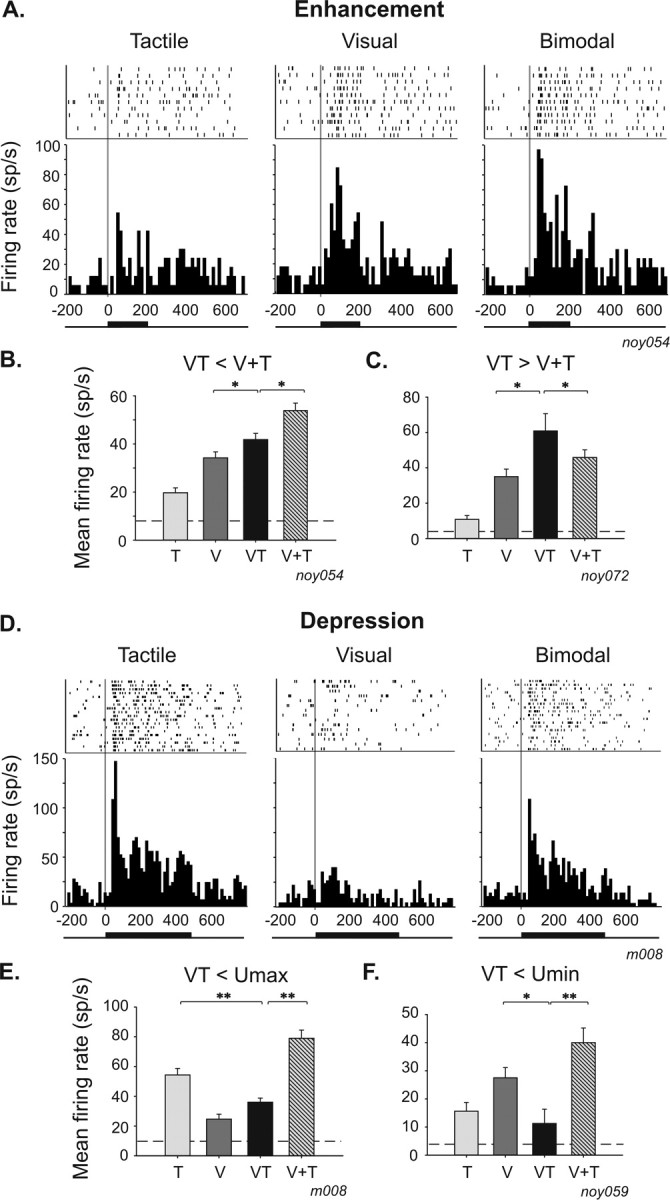

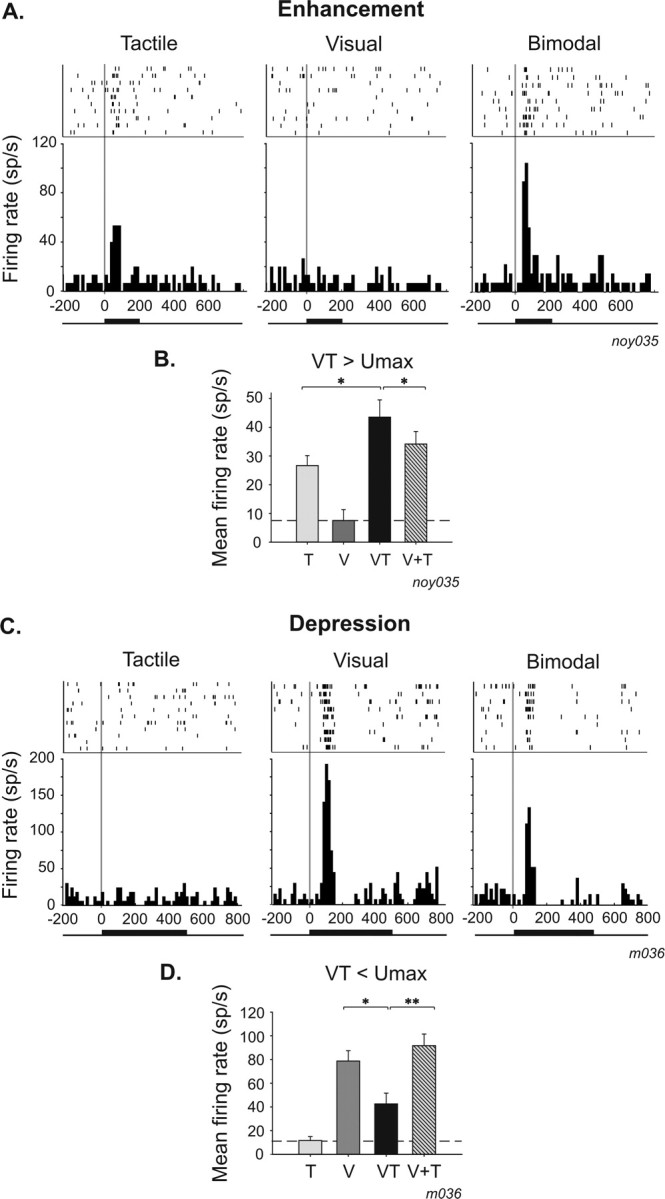

Single-cell examples of multisensory integration effects are depicted in Figure 1. The neuron shown in Figure 1A exhibits significant responses to tactile (t test, p = 0.01) and visual (t test, p = 0.001) stimulation; it is thus a bimodal neuron. The synchronous presentation of tactile and visual stimuli, in a spatially congruent configuration, produces a significant increase in the neuronal firing rate (t test, p = 0.05). In this case, the bimodal response is greater than both the tactile- and visual-evoked responses and thus corresponds to an enhancement response. Forty-five percent (29 of 64) of all bimodal cells exhibit this pattern (Table 1). However, this bimodal response is smaller than the arithmetical sum of the responses evoked by each sensory modality taken independently and thus reflects a sub-additive interaction mechanism (Fig. 1B) [3 of the 29 enhanced bimodal cells, 10% (Table 1)]. Additive [13 of 29, 45% (Table 1)] and super-additive enhancement responses are observed as well. In the latter case, the bimodal response is greater than the arithmetical sum of the responses evoked by each sensory modality (Fig. 1C) [13 of the 29 enhanced bimodal cells, i.e., 45% (Table 1)]. The bimodal response can also be decreased, which is labeled as depression (Fig. 1D–F). Fifty-five percent (35 of 64) of all bimodal cells exhibit this pattern (Table 1). Most frequently, the bimodal firing rate is decreased compared with the maximal unimodal response: for example, the bimodal response of the neuron shown in Figure 1D falls between its maximal (tactile) and minimal (visual) unisensory responses (Fig. 1E) (27 of the 35 depressed cells, i.e., 77%). However, the bimodal response can also be depressed to a level below the minimal unimodal response (Fig. 1F) (8 of 35, 23%).

Figure 1.

Single-cell examples of multisensory integration in bimodal neurons. Rasters and response peristimulus time histograms to tactile (left), visual (middle), and bimodal (right) stimulation. Bars on rasters represent spikes, and rows indicate trials. Neuronal responses are aligned to stimulus onset (gray line). Peristimulus time histograms show the summed activity across all trials in a given stimulus condition (bin width of 15 ms). Thick lines at the bottom of the peristimulus time histograms represent stimulation duration. A–C, Single-cell examples of enhancement responses. A, The neuronal activity is significantly higher in the bimodal condition than in the visual condition (t test, p = 0.05), and multisensory integration takes the form of a sub-additive enhancement (amplification index, +17%; additive index, −5%). B, Mean firing rate histogram of the same cell as in A, showing the visual (V), tactile (T), bimodal (VT), and arithmetical sum of visual and tactile (V+T) responses (t test, *p ≤ 0.05; **p ≤ 0.01). The dashed line represents spontaneous firing rate. C, Example of a super-additive enhancement effect in a bimodal neuron (t test, p = 0.05; amplification index, +30%; additive index, +21%). D–F, Single-cell examples of depression responses. D, Visual–tactile neuron whose tactile response is significantly depressed (t test, p = 0.01) by the concurrent presentation of a visual stimulus (amplification index, −20%; additive index, −33%). E, This integrative response falls between the tactile and the visual response. F, Example of a VIP neuron for which the bimodal response is lower than the minimal unimodal response (amplification index, −60%; additive index, −70%).

A neuron is classically defined as unimodal when it responds to one sensory modality and is totally insensitive to the presentation of stimuli from other sensory modalities. To study multisensory interactions in VIP as a whole, we did not reject such unimodal cells a priori. One prediction in this regard is that combining an effective stimulus in one sensory modality with an ineffective stimulus in the other modality would not change the response of the neuron relative to its response to the effective stimulus alone. An alternative prediction is that stimulation of a sensory modality, despite being unable to drive the neuron on its own, modulates the response to the other, effective modality. Strikingly, we found that this second possibility was the rule rather than the exception. An ineffective stimulus could modify the neuronal response when it was combined with a stimulus from a different sensory modality, thus revealing the multisensory nature of these seemingly unimodal neurons. Sixty-seven percent (42 of 63) of unimodal neurons were found to integrate multisensory events, a proportion very similar to that observed in bimodal neurons (64 of 87, 74%) (Table 1). Single-neuron examples are shown in Figure 2. The unimodal tactile neuron (t test, p = 0.001) shown in Figure 2, A and B, is unresponsive to the presentation of a visual stimulus (t test, p = 0.41). However, the bimodal presentation of these two stimuli significantly enhanced the response of the neuron (t test, p = 0.05), a pattern observed in 14 of the 42 integrative unimodal neurons (33%) (Table 1). As was the case for bimodal neurons, multisensory integration effects in unimodal neurons mainly took the form of a depression (28 of 42, 67%) (Table 1), as illustrated by the unimodal visual neuron in Figure 2, C and D (t test, p = 0.05).

Figure 2.

Single-cell examples of multisensory integration in unimodal neurons. A, B, Single-cell examples of enhancement responses. A, Unimodal tactile neuron (t test; tactile response, p = 0.001; visual response, p = 0.41) whose tactile response is significantly enhanced when combined with an ineffective visual stimulus (t test, p = 0.05; amplification index, +36%; additive index, +36%). The two indices are identical because this is a unimodal cell: V+T is then equal to $\mathrm{Uni}_{\max}$, and both indices are equivalent. B, Mean firing rate histogram of the same neuron. C, D, Single-cell examples of depression responses. C, Unimodal visual neuron (t test; visual, p = 0.001; tactile, p = 0.47) whose visual response is significantly depressed when combined with an ineffective tactile stimulus (t test, p = 0.05; amplification index, −45%; additive index, −45%; the two indices are again equal, for the same reason). D, Mean firing rate histogram of the same neuron. Same conventions as in Figure 1. V, Visual; T, tactile; VT, bimodal; V+T, arithmetical sum of visual and tactile.

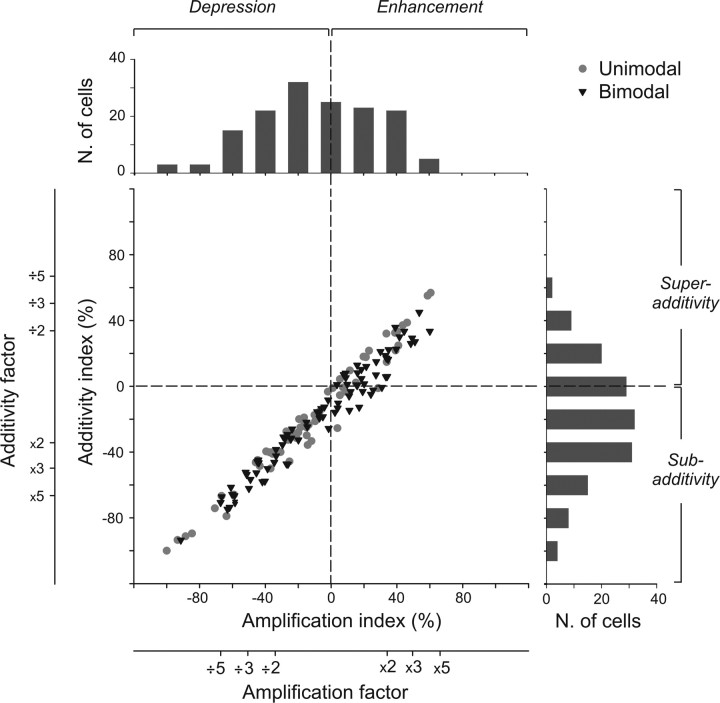

To quantify these integrative effects, we calculated for each recorded neuron two indices derived from previous work on multisensory integration (see Materials and Methods). These metrics describe different characteristics of the multisensory integrative response (Perrault et al., 2005) and allow us to test two hypotheses about the nature of multisensory effects at the neuronal level: the amplification hypothesis and the additive hypothesis. The amplification hypothesis is tested with an “amplification index,” which compares the multisensory response with the dominant unimodal response (Meredith and Stein, 1986a) and describes multisensory integration as enhancing (positive index) or depressive (negative index). The additive hypothesis is tested with an “additivity index,” which compares the multisensory response with the arithmetical sum of the sensory responses to each modality (Populin and Yin, 2002) and differentiates between sub-additive (negative index), additive (null index), and super-additive (positive index) mechanisms. We plotted the additivity index as a function of the amplification index for each recorded neuron (Fig. 3). Neurons integrate multisensory events through either enhancement (43 of 106, 41% of neurons) or depression (63 of 106, 59% of neurons) of their bimodal response (Table 1). This modulation reflects sub-additive (62 of 106, 58%), additive (21 of 106, 20%), or super-additive (23 of 106, 22%) mechanisms. The amplification index ranged from −100 to 61%, with a mean ± SE of −9 ± 3%. The additive index ranged from −100 to 57%, with a mean ± SE of −19 ± 3%. The multisensory integrative responses of the majority of VIP neurons thus reflect both depression and sub-additive interactions. Integrative characteristics in unimodal neurons are closely similar to those revealed in bimodal neurons.
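The classification summarized in Table 1 can be expressed as a small decision procedure. The Python sketch below assumes that the two t tests described in Materials and Methods (bimodal response versus the maximal unimodal response, and versus the sum of the unimodal responses) have already been computed and are passed in as p values; the function name, its inputs, and the example values are hypothetical.

```python
def classify(p_vs_unimax, p_vs_sum, amp_index, add_index, alpha=0.05):
    """Hypothetical decision rule mirroring the categories of Table 1.

    p_vs_unimax: p value of the bimodal vs. maximal unimodal t test.
    p_vs_sum: p value of the bimodal vs. (V + T) t test.
    amp_index, add_index: amplification and additivity indices (%).
    """
    if p_vs_unimax > alpha:
        return "non-integrative"
    direction = "enhancement" if amp_index > 0 else "depression"
    if p_vs_sum > alpha:
        mechanism = "additive"        # bimodal response not different from V + T
    elif add_index > 0:
        mechanism = "super-additive"  # bimodal response > V + T
    else:
        mechanism = "sub-additive"    # bimodal response < V + T
    return f"{direction}, {mechanism}"


# Made-up p values and indices, for illustration only.
print(classify(0.20, 0.60, 4.0, -12.0))      # non-integrative
print(classify(0.01, 0.30, 15.0, -3.0))      # enhancement, additive
print(classify(0.01, 0.02, 28.0, 19.0))      # enhancement, super-additive
print(classify(0.01, 0.01, 17.0, -5.0))      # enhancement, sub-additive
print(classify(0.001, 0.001, -25.0, -40.0))  # depression, sub-additive
```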

Figure 3.

Distribution of the amplification and the additive indices in the entire population of recorded cells (n = 150). Gray circles illustrate unimodal neurons (n = 63), and black triangles indicate bimodal neurons (n = 87). The distribution of each index is shown above and to the right of the scatter plot. The amplification index is derived from the work of Meredith and Stein (1986a,b), whereas the additive index has been adapted from the work of Populin and Yin (2002). The amplification index reflects either an increase or a decrease of the discharge to a bimodal stimulation with respect to the discharge evoked by a visual or tactile stimulus alone (see Materials and Methods). The additive index compares the bimodal response with the predicted linear sum of the responses to each single sensory modality. A negative [respectively (resp.) positive] index characterizes a sub-additive (resp. super-additive) response. Moreover, an amplification (resp. additive) index of 33% corresponds to a bimodal response that is twice the maximal unimodal response (resp. the arithmetical sum), an index of 50% corresponds to a bimodal response that is three times the maximal unimodal response (resp. the arithmetical sum), and an index of 66% corresponds to a bimodal response that is five times the maximal unimodal response (resp. the arithmetical sum).

Multisensory integration in neuronal latency of VIP cells

In a previous study, we reported that response latencies to tactile stimulation were significantly shorter than visual latencies in VIP (Avillac et al., 2005). Here we compare the latency of the neuronal responses to unimodal stimuli and to bimodal stimuli.

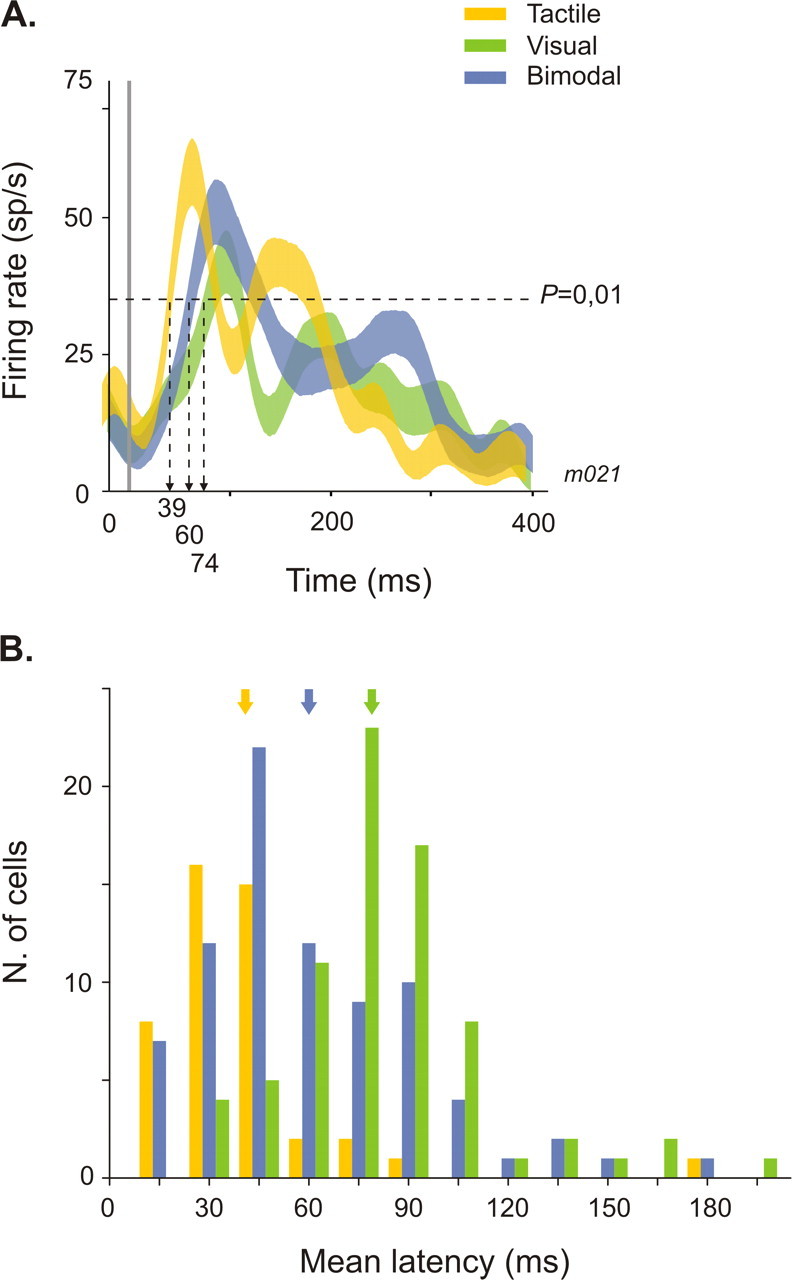

Tactile latencies (41.5 ± 3.5 ms) were significantly shorter than visual latencies (85 ± 3 ms) (one-way ANOVA, p ≤ 0.001) (Table 2). There were no significant differences in unimodal response latencies between integrative and non-integrative neurons (one-way ANOVA, p = 0.323 for visual, p = 0.388 for tactile). Interestingly, the response latency to bimodal stimulation was on average 68.8 ± 3.1 ms, significantly shorter than the visual latency but significantly longer than the tactile latency (one-way ANOVA, p ≤ 0.001). A representative neuron showing such multisensory integration effects on neuronal latency is plotted in Figure 4A. Its tactile latency is 39 ms, and its visual latency is 74 ms. Its response to the concurrent presentation of these two stimuli began at 60 ms, between the early tactile and the later visual latency. We found a marginally significant difference in bimodal response latency between integrative and non-integrative cells (one-way ANOVA, p = 0.062), suggesting that the multisensory effects observed on latencies could depend on discharge rate. These effects on bimodal latencies were found exclusively in bimodal neurons. In unimodal visual neurons, bimodal latencies were similar to the response latency of the neuron to the effective modality (one-way ANOVA, p = 0.107) (Table 2). The distribution of tactile, visual, and bimodal latencies across the entire population of bimodal neurons is shown in Figure 4B.
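One way to express where a bimodal latency falls between the two unimodal latencies is its relative position on the tactile-to-visual interval (0, tactile latency; 1, visual latency). This measure is not used in the study; the Python sketch below is a hypothetical illustration applied to the example neuron of Figure 4A and to the population means of Table 2.

```python
def relative_latency(bimodal, tactile, visual):
    """Position of the bimodal latency between the tactile (0) and visual (1) latencies."""
    return (bimodal - tactile) / (visual - tactile)


print(relative_latency(60, 39, 74))                # 0.6  (neuron of Fig. 4A)
print(round(relative_latency(68.8, 41.5, 85), 2))  # 0.63 (population means, Table 2)
```

Values between 0 and 1 correspond to the intermediate latencies described above; a value near 0 or 1 would instead indicate that the bimodal response follows one modality.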

Table 2.

Multisensory integration effects on neuronal latency

| | V | T | VT |
|---|---|---|---|
| Integrative cells (n = 97) | 81.5 ± 3.1 | 43.4 ± 4.3 | 65.3 ± 3.5 |
| Integrative, unimodal V (n = 29) | 86.9 ± 5.5 | | 81.1 ± 6.2 |
| Integrative, unimodal T (n = 8) | | 45.5 ± 9.3 | 57.9 ± 14.2 |
| Integrative, bimodal (n = 60) | 78.5 ± 3.8 | 43 ± 4.9 | 58.6 ± 4.2 |
| Non-integrative cells (n = 41) | 91.5 ± 6.7 | 35.9 ± 4.6 | 77.3 ± 6.1 |
| Non-integrative, unimodal V (n = 19) | 91.8 ± 9.8 | | 95.3 ± 8.8 |
| Non-integrative, unimodal T (n = 1) | | | |
| Non-integrative, bimodal (n = 21) | 91.2 ± 9.5 | 37.2 ± 4.8 | 63.9 ± 7 |
| All cells (n = 138) | 85 ± 3 | 41.5 ± 3.5 | 68.8 ± 3.1 |

Mean ± SE (in milliseconds). V, Visual; T, tactile; VT, bimodal.

Figure 4.

Multisensory integration effects on neuronal latency. A, Single-cell example. The bimodal response latency (blue spike density function) is significantly shorter than the visual response latency (green) (one-way ANOVA, p = 0.01) and significantly longer than the tactile response latency (yellow) (one-way ANOVA, p = 0.01). B, Distribution of tactile (yellow bars), visual (green), and visuo-tactile (blue) latencies in the population of bimodal integrative and non-integrative cells (n = 81). Arrows point to the mean tactile, visual, and bimodal latencies.

Effects of temporal asynchrony on multisensory integration

The dependency of multisensory integration on the timing of stimulus events was assessed by introducing a temporal disparity between the onsets of the visual and tactile stimuli while keeping a spatially congruent configuration. Visual and tactile stimuli were presented in exact physical synchrony (i.e., without any correction for the difference in response latency) or with the onset of the tactile stimulus leading or lagging that of the visual stimulus by 50, 100, or 200 ms.

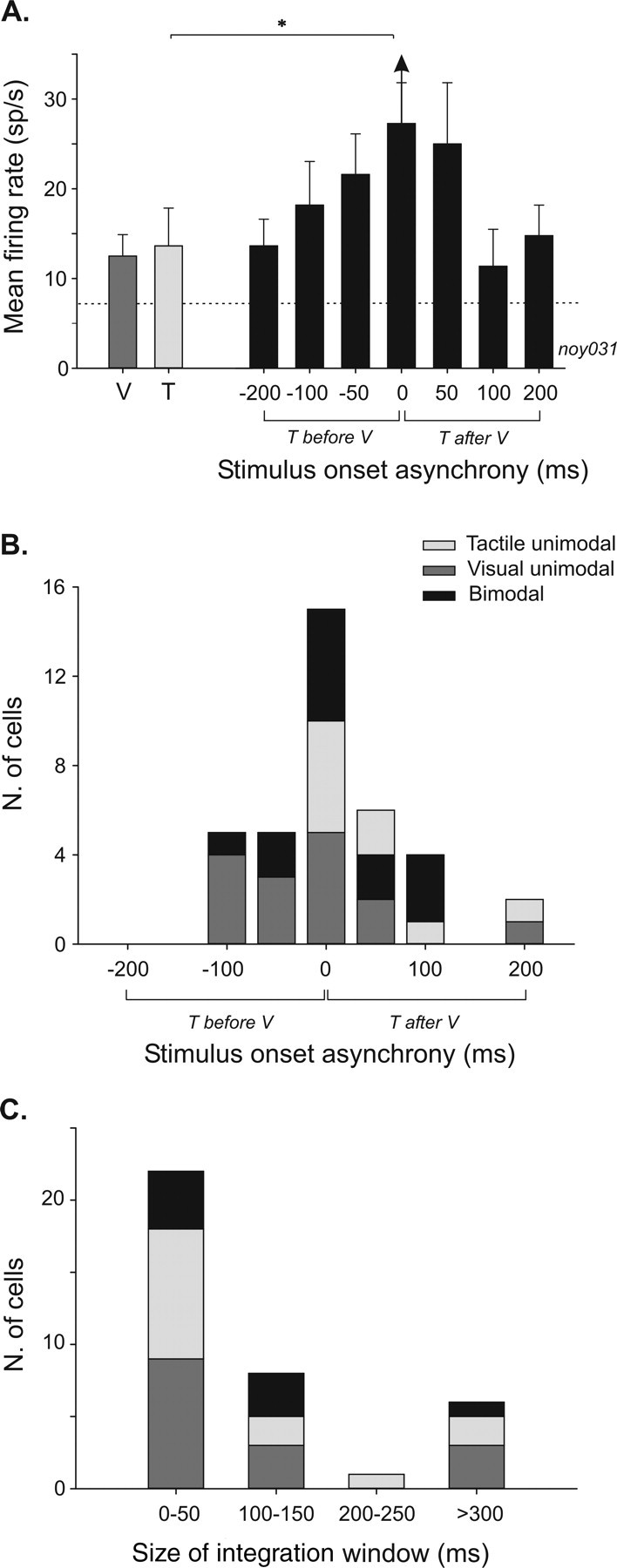

A typical neuron recorded in such conditions is shown in Figure 5A. This neuron presented a significantly enhanced response when visual and tactile stimuli were presented in synchrony (t test, p = 0.05). Introducing a delay between these two stimuli produced a progressive decrease in the bimodal firing rate, which reached the unimodal response level with increasing temporal asynchrony. None of the asynchrony conditions yielded a significant difference between the bimodal response and the maximal unimodal response. Thus, this neuron integrated multisensory events only when stimuli were presented in perfect synchrony. The prevalence of multisensory integration effects for synchronous stimuli was confirmed at the population level (Fig. 5B). Of 37 integrative cells tested for stimulus onset asynchrony (SOA) effects, 26 (70%) showed maximal integrative effects when visual and tactile stimuli were presented in perfect synchrony (n = 15) or within 50 ms of each other (n = 11). Maximal integrative effects at longer SOAs (100 ms or more) were less frequent (11 of 37, 30%) and could be related to the tonic characteristics of the sensory responses of the cells exhibiting this pattern (mean ± SE sensory response duration, 423 ± 24 ms). We did not find any trend relating enhancement and depression effects to synchronous versus asynchronous presentation: both effects were equally distributed along the SOA range.
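The population measure of Figure 5B amounts to finding, for each cell, the SOA with the largest integrative effect and counting the cells for which that SOA lies within 50 ms of synchrony. A Python sketch, assuming that the effect at each SOA is summarized by the absolute value of an integration index (in line with the largest-absolute-index selection described in Materials and Methods); the function names and the index values for three cells are hypothetical.

```python
def best_soa(index_by_soa):
    """SOA (ms) at which a cell's integration index is largest in magnitude."""
    return max(index_by_soa, key=lambda soa: abs(index_by_soa[soa]))


def fraction_within(cells, limit_ms=50):
    """Fraction of cells whose best SOA lies within +/- limit_ms of synchrony."""
    best = [best_soa(cell) for cell in cells]
    return sum(1 for soa in best if abs(soa) <= limit_ms) / len(best)


# Hypothetical amplification indices (%) per SOA (ms) for three cells.
cells = [
    {-50: 8.0, 0: 34.0, 50: 12.0, 100: 3.0},
    {0: -10.0, 50: -25.0, 100: -5.0},
    {0: 5.0, 100: 20.0, 200: 18.0},
]
print([best_soa(c) for c in cells])      # [0, 50, 100]
print(round(fraction_within(cells), 2))  # 0.67
```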

Figure 5.

Effects of stimulus onset asynchrony on multisensory integration in VIP. A, Single-cell example of the mean firing rate as a function of SOA (error bars indicate SE). Single-modality responses of the cell are shown on the left of the histogram (visual, dark gray bar; tactile, light gray bar). On the right, bimodal responses in the different SOA conditions are represented by black bars. The dashed line corresponds to spontaneous activity. Multisensory integration is significant only when bimodal stimulation is synchronous (t test, *p < 0.05; amplification index, +34%; additivity index, +6%). B, Population distribution of maximal integrative effects as a function of stimulus onset asynchrony (n = 37). Same color conventions as in A. C, Population distribution of the widths of the temporal windows within which multisensory integration is found (n = 37). Same color conventions as in A. T, Tactile; V, visual.
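The amplification and additivity indices quoted in the captions are not defined in this section. The sketch below assumes their conventional forms from the multisensory literature: the amplification index as the percent change of the bimodal response relative to the larger unimodal response, and the additivity index as the percent change relative to the sum of the two unimodal responses. Whether firing rates are baseline-subtracted is not stated here, and the function names are hypothetical.

```python
def amplification_index(bimodal, visual, tactile):
    """Percent change of the bimodal response relative to the larger unimodal response.

    Assumed conventional definition; requires max(visual, tactile) > 0.
    """
    best_unimodal = max(visual, tactile)
    return 100.0 * (bimodal - best_unimodal) / best_unimodal


def additivity_index(bimodal, visual, tactile):
    """Percent change of the bimodal response relative to the sum of unimodal responses.

    Positive values suggest super-additivity, negative values sub-additivity.
    """
    unimodal_sum = visual + tactile
    return 100.0 * (bimodal - unimodal_sum) / unimodal_sum


# Toy rates in spikes/s (illustrative, not recorded data).
print(amplification_index(30, 20, 10))  # 50.0: enhanced relative to the best unimodal response
print(additivity_index(30, 20, 10))     # 0.0: exactly additive
```

The two indices can disagree in sign: a response can be enhanced relative to the best unimodal response yet still fall short of the unimodal sum, which is why the captions report both.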

To test whether multisensory integration effects could occur at more than one visuo-tactile delay, we also measured the width of the integration window. In VIP, multisensory integration takes place within a narrow temporal window: for 22 of 37 cells (60%), this window was <50 ms wide (Fig. 5C), spanning at most two adjacent asynchrony conditions separated by no more than 50 ms.
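One way to make "window width" concrete is to take the longest run of adjacent tested SOAs at which integration is significant and report the span between its first and last SOA. The sketch below assumes exactly that definition, which this section does not spell out; the per-SOA significance flags and the function name are hypothetical.

```python
def integration_window_ms(soas, significant):
    """Width (ms) of the longest run of adjacent tested SOAs with significant integration.

    soas: tested SOAs in ms, sorted ascending.
    significant: parallel list of booleans (significant integration at that SOA).
    A single significant SOA gives a width of 0 ms; no significant SOA gives None.
    """
    best = None
    run_start = None
    for soa, sig in zip(soas, significant):
        if sig:
            if run_start is None:
                run_start = soa  # a new run of adjacent significant SOAs begins
            width = soa - run_start
            best = width if best is None else max(best, width)
        else:
            run_start = None  # the run is broken by a non-significant SOA
    return best


soas = [-200, -100, -50, 0, 50, 100, 200]
# Significant only at 0 and +50 ms: two adjacent conditions, 50 ms apart.
print(integration_window_ms(soas, [False, False, False, True, True, False, False]))
```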

Effects of spatial congruency on multisensory integration

Next, we analyzed how the relative location of the visual and tactile stimuli determines the multisensory response by comparing two spatial configurations: a congruent configuration, in which both stimuli were presented at the same position in space and within their respective receptive fields, and an incongruent configuration, in which one stimulus was presented inside its receptive field, contralateral to the recorded hemisphere, and the other stimulus outside its receptive field, in the ipsilateral hemifield.

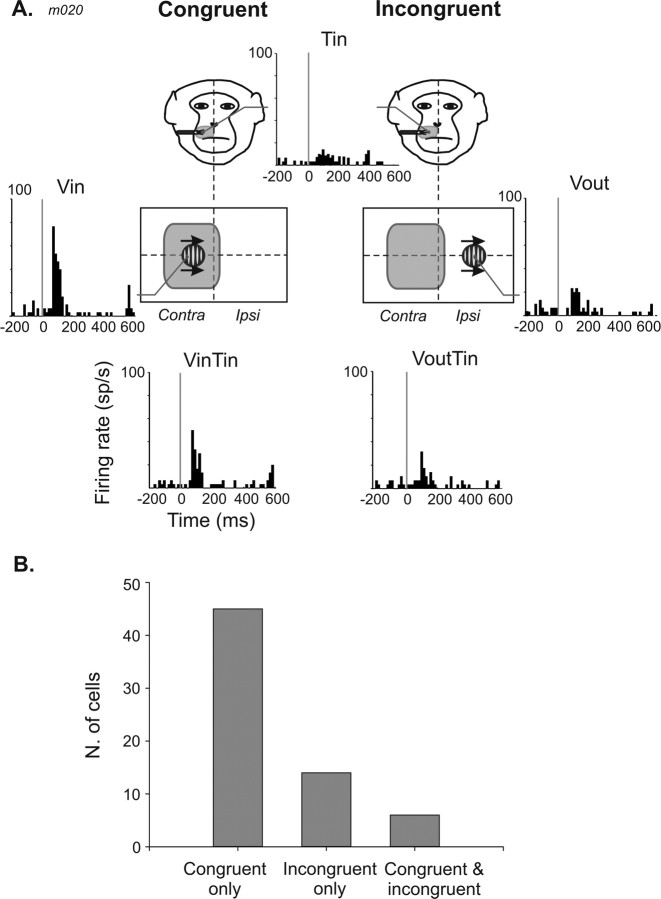

A typical example is shown in Figure 6A. A tactile stimulus presented on the contralateral side of the muzzle evoked a weak neuronal response (t test, p < 0.05). A visual stimulus presented on the contralateral portion of the screen produced a strong response (t test, p < 0.001). On the ipsilateral portion of the screen, the same stimulus drove the neuron weakly, although significantly (t test, p < 0.001). Presenting the tactile and visual stimuli together in the incongruent configuration (tactile inside and visual outside their respective receptive fields) did not change the firing rate compared with either the visual (t test, p = 0.48) or the tactile (t test, p = 0.13) response. In contrast, the congruent presentation of the visual and tactile stimuli in the contralateral region of space (both inside their respective receptive fields) markedly depressed the neuronal response (t test, p < 0.001; amplification index, −33%; additivity index, −39%).

Figure 6.

Effect of spatial congruence on multisensory integration in VIP. A, Single-cell example in the spatially congruent configuration (left) and the incongruent configuration (right). Top, tactile response of the neuron and drawing of the tactile receptive field location (shaded area) on a monkey face. The tactile stimulus is always presented inside the tactile receptive field (Tin) in both configurations. Middle, the visual stimulus is presented either inside (Vin, left) or outside (Vout, right) the visual receptive field; the location of the visual stimulation is shown schematically. Bottom, neuronal responses to congruent bimodal stimulation (Vin Tin, left) and incongruent bimodal stimulation (Vout Tin, right). B, Population distribution of multisensory integration effects as a function of spatial congruence/incongruence (n = 65).

At the population level, multisensory integration occurs most often when the stimuli in the two modalities are spatially aligned (45 of 65, 69%) (Fig. 6B). Some neurons integrate multisensory events only for incongruent stimuli (14 of 65, 22%), and a few show multisensory integration for both spatially congruent and incongruent stimuli (6 of 65, 9%). Visual and tactile stimuli presented at the same position in space mainly produced response depression (31 of 44, 75%), whereas integration produced by an incongruent configuration of stimuli took the form of either enhancement (7 of 14, 50%) or depression (7 of 14, 50%).

Effect of the strength of sensory responsiveness on multisensory integration

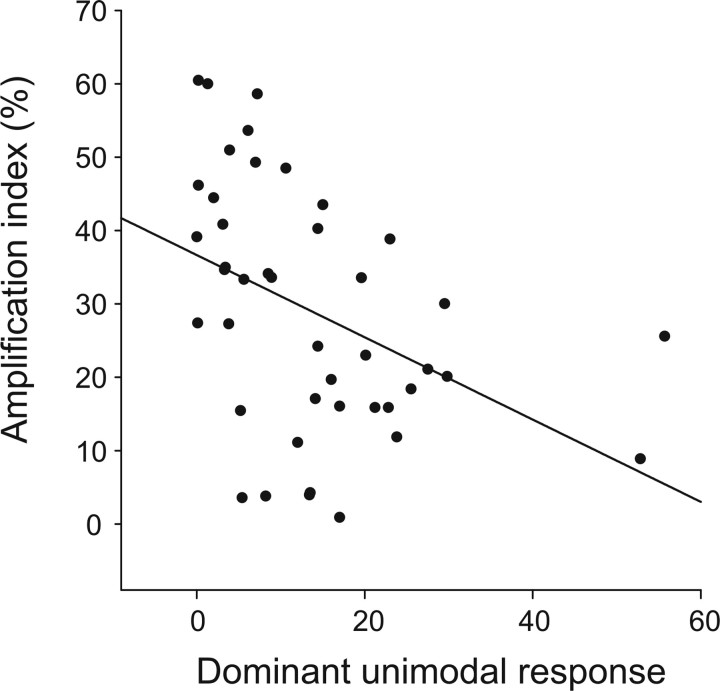

In single superior colliculus neurons, the magnitude of the multisensory response has been shown to be inversely related to the effectiveness of the unimodal stimuli (Meredith and Stein, 1986a; Wallace et al., 1996). That is, the strongest multisensory interactions have been reported for combinations of stimuli that individually evoke the weakest responses. To test this “inverse effectiveness” principle in VIP, we plotted the amplification index (taken as an indicator of multisensory gain) as a function of the dominant unimodal response for integrative neurons showing response enhancement to bimodal stimulation (n = 43) (Fig. 7). Multisensory enhancement clearly decreased as the dominant unimodal response increased (linear regression, p = 0.005). Enhancement responses in VIP thus also follow the inverse effectiveness principle. However, we found no evidence for such a relationship for multisensory depression responses (linear regression, p = 0.765).
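The inverse-effectiveness test amounts to a least-squares regression of the amplification index on the dominant unimodal response, where a negative slope indicates that gain shrinks as responses grow. The sketch below is a plain ordinary-least-squares fit returning the slope and its t statistic; it assumes a simple linear model as the text implies, and the function name and toy values are hypothetical. A library routine such as `scipy.stats.linregress` would also report the p value.

```python
import math


def inverse_effectiveness_fit(dominant_unimodal, amplification):
    """Ordinary least-squares fit: amplification = intercept + slope * dominant_unimodal.

    Returns (slope, intercept, t statistic of the slope). A negative slope is the
    signature of inverse effectiveness. The two-sided p value follows from a
    t distribution with n - 2 degrees of freedom (not computed here).
    """
    n = len(dominant_unimodal)
    if n < 3:
        raise ValueError("need at least three cells")
    mx = sum(dominant_unimodal) / n
    my = sum(amplification) / n
    sxx = sum((x - mx) ** 2 for x in dominant_unimodal)
    sxy = sum((x - mx) * (y - my) for x, y in zip(dominant_unimodal, amplification))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((y - (intercept + slope * x)) ** 2
              for x, y in zip(dominant_unimodal, amplification))
    se_slope = math.sqrt(sse / (n - 2) / sxx)  # zero (division error) only for a perfect fit
    return slope, intercept, slope / se_slope


# Toy values (spikes/s vs. % amplification), for illustration only.
slope, intercept, t = inverse_effectiveness_fit([1, 2, 3, 4, 5], [50, 41, 29, 22, 9])
print(slope, t)
```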

Figure 7.

Inverse effectiveness principle in VIP. The amplification index is plotted as a function of the dominant unimodal response for the subset of integrative neurons with enhanced multisensory responses (n = 43). Multisensory enhancement increases as the dominant unimodal response decreases.

Discussion

Several cortical and subcortical areas have been identified as multisensory. However, these areas may contribute in different ways to the analysis of the multisensory environment, because multisensory integration can take different forms. For example, only a small proportion of the visually responsive neurons of the superior temporal sulcus show discharge activity modulated by sound (Barraclough et al., 2005), whereas up to 60% of SC neurons integrate these two modalities (Meredith and Stein, 1986a).

We show that >70% of VIP neurons integrate visual and tactile events. This multisensory integration affects both the discharge rate and the response latency of the neurons, and modulations are observed in both bimodal and unimodal neurons.

Discharge rate

Depression and enhancement

Response enhancement and depression are the prevalent signatures of multisensory integration in VIP cells, in agreement with many other reports: in the SC of anesthetized (Meredith and Stein, 1983) and awake (Wallace et al., 1998; Populin and Yin, 2002) cats, in cat (Wallace et al., 1992) and monkey (Barraclough et al., 2005) cortex, and in local field potential signals (Ghazanfar et al., 2005). Enhancement is usually described as more common than depression (Meredith and Stein, 1983; Perrault et al., 2003; Ghazanfar et al., 2005). In VIP, we found the opposite trend (60% of the cells showed depression). This is unlikely to be attributable (1) to a specificity of visuo-somatosensory integration, because the sign of integration is independent of the particular modalities involved (Meredith and Stein, 1986a; Meredith et al., 1987); (2) to spatial alignment factors, because the spatial congruence of bimodal stimuli controls the presence or absence of multisensory integration but not its sign; or (3) to the use of ineffective stimuli (Meredith and Stein, 1986a), because the relative proportions of enhancement and depression when both visual and tactile stimuli are effective are very similar to those observed when only one modality activates the neurons. Some studies have reported higher rates of depression in awake than in anesthetized animals (Populin and Yin, 2002; Bell et al., 2003), suggesting either that anesthesia depresses the activity of inhibitory interneurons (Populin, 2005) or that active fixation, arousal, and selective attention alter the balance of cortical and subcortical inhibition and excitation (Bell et al., 2003). Because the parietal cortex is known to play an important role in attentional selection and perceptual decision, such factors may contribute to our results; their exact contribution is not known and would need to be tested explicitly in an appropriate experimental design.

Sub-, super-, and additivity

Consistent with the predominance of depression, multisensory integration in VIP is highly nonlinear: in >60% of the cells, the bimodal response is smaller than the sum of the responses evoked by each sensory modality. Sub-additivity is thus more common in VIP than super-additivity or additivity. However, among enhanced cells, super-additivity is found in the same proportions as in the SC (Meredith and Stein, 1983, 1986a; King and Palmer, 1985; Wallace et al., 1996).
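Labeling a cell as sub-, super-, or additive requires comparing its bimodal response with a prediction built from the two unimodal responses. One common approach, sketched below, is a bootstrap: randomly pair visual and tactile trials, sum them to form a distribution of additive predictions, and check where the observed bimodal mean falls. This section does not state which test the study used, so the procedure, the 95% interval, and the function name are all assumptions.

```python
import random


def classify_additivity(bimodal, visual, tactile, n_boot=2000, alpha=0.05, seed=0):
    """Label a cell 'sub-additive', 'additive', or 'super-additive' (hypothetical test).

    bimodal, visual, tactile: per-trial firing rates (spikes/s).
    Builds a bootstrap distribution of mean summed responses by pairing randomly
    drawn visual and tactile trials (with replacement), then compares the observed
    bimodal mean with the central (1 - alpha) range of that distribution.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    n = len(bimodal)
    boot_means = []
    for _ in range(n_boot):
        total = sum(rng.choice(visual) + rng.choice(tactile) for _ in range(n))
        boot_means.append(total / n)
    boot_means.sort()
    k = int(n_boot * alpha / 2)
    lower, upper = boot_means[k], boot_means[n_boot - 1 - k]
    observed = sum(bimodal) / n
    if observed < lower:
        return "sub-additive"
    if observed > upper:
        return "super-additive"
    return "additive"


# Toy trials for illustration only.
visual = [10, 12, 11, 9, 10]
tactile = [2, 3, 2, 1, 2]
print(classify_additivity([5, 6, 4, 5, 6], visual, tactile))
```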

Interestingly, a recent study by Stanford et al. (2005) provided the first quantification of “enhancement” effects in multisensory SC neurons. Contrary to previous observations, the majority of bimodal combinations yielded responses consistent with simple summation of modality-specific influences (69% of sampled neurons); super-additivity was observed in only 24% of neurons and sub-additivity in 7%. These proportions differ from our observations in VIP, and again the difference may be related to anesthesia (Populin and Yin, 2002). Indeed, in anesthetized animals, super-additivity is common within a narrow range of stimulus effectiveness, whereas more effective stimuli produce either additive (Stanford et al., 2005) or sub-additive (Perrault et al., 2005) responses. A similar relationship appears to hold in VIP (Fig. 7) (see below, Inverse effectiveness).

The case of unimodal neurons

A neuron was classified as unimodal when it responded to only one of the two tested modalities. One might therefore expect no multisensory integration in this population. Surprisingly, 67% (42 of 63) of these neurons showed a modulation of their response to bimodal stimulation relative to their unimodal response. Enhancement and depression occurred in the same proportions as in bimodal neurons, and sub-, super-, and additive effects were also similarly distributed across the two populations.

Overall, 71% (106 of 150) of all VIP neurons perform visuo-tactile integration. This percentage is striking given that other sensory modalities are also represented in VIP and that other kinds of multisensory interactions are likely to occur (audio-visual, audio-tactile, visuo-vestibular, etc.). Moreover, the modulation of unimodal neurons suggests that latent, subthreshold synaptic mechanisms are at play during multisensory integration. The fact that the visual response of a unimodal visual cell is modulated by tactile stimulation suggests that the cell receives tactile synaptic inputs that do not make it fire during unimodal tactile stimulation, but whose EPSPs or IPSPs influence its response to an effective visual stimulus. Thus, the same mechanisms seem to underlie multisensory integration in both unimodal and bimodal neurons, differing only in degree and not in nature.
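The subthreshold argument can be illustrated with a toy threshold-linear neuron: a tactile input too weak to make the cell fire on its own still shifts the response to an effective visual stimulus, up if it is excitatory and down if it is inhibitory. This is a hypothetical caricature for intuition only; the threshold, gain, and drive values are arbitrary assumptions, not fitted to VIP data.

```python
def firing_rate(drive, threshold=1.0, gain=10.0):
    """Threshold-linear rate (arbitrary units): silent until summed drive exceeds threshold."""
    return gain * max(0.0, drive - threshold)


VISUAL_DRIVE = 2.0         # suprathreshold on its own
TACTILE_EXCITATION = 0.5   # EPSP-like tactile input, subthreshold on its own
TACTILE_INHIBITION = -0.5  # IPSP-like tactile input

print(firing_rate(VISUAL_DRIVE))                       # 10.0 (visual alone)
print(firing_rate(TACTILE_EXCITATION))                 # 0.0  (tactile alone: cell looks unimodal)
print(firing_rate(VISUAL_DRIVE + TACTILE_EXCITATION))  # 15.0 (enhancement)
print(firing_rate(VISUAL_DRIVE + TACTILE_INHIBITION))  # 5.0  (depression)
```

In this caricature the same summation rule produces both "bimodal" and "unimodal" cells; only the strength of the tactile drive relative to threshold differs, in line with the difference in degree rather than nature described above.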

Response latencies

Multisensory integration confers behavioral advantages (for review, see Stein and Meredith, 1993), such as shorter orienting reaction times (Frens et al., 1995; Giard and Peronnet, 1999; Colonius and Arndt, 2001; Corneil et al., 2002; Bell et al., 2005). These advantages could be attributable to shorter neuronal response latencies for bimodal than for unimodal stimuli, or to bimodal latencies equal to the shorter of the two unimodal latencies (Wallace et al., 1996; Brett-Green et al., 2003).

In VIP, tactile latencies are on average 40 ms shorter than visual latencies. Surprisingly, bimodal response latencies are intermediate between the unimodal tactile and visual latencies. This paradoxical observation, in which a fast neuronal response to one sensory modality is slowed down by the addition of a second modality, could be explained by complex interactions between postsynaptic excitation and inhibition. However, why is this effect specific to bimodal cells, and how does it relate to behavioral observations? Additional studies are needed to address these questions.
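"Intermediate" can be expressed as a single normalized number per cell: 0 when the bimodal latency equals the faster tactile latency, 1 when it equals the visual latency, and values in between otherwise. The index and the example latencies below are hypothetical illustrations; only the 40 ms average tactile advantage comes from the text.

```python
def latency_position(bimodal_ms, tactile_ms, visual_ms):
    """Normalized position of the bimodal latency between the two unimodal latencies.

    0 = same as the tactile latency, 1 = same as the visual latency,
    0 < value < 1 = intermediate. Requires visual_ms != tactile_ms.
    """
    return (bimodal_ms - tactile_ms) / (visual_ms - tactile_ms)


# Hypothetical cell: tactile 60 ms, visual 100 ms (a 40 ms gap), bimodal 80 ms.
print(latency_position(80, 60, 100))  # 0.5: halfway between the unimodal latencies
```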

Temporal and spatial rules

Temporal congruence

As in the SC (Meredith et al., 1987; Wallace et al., 1996), most integration effects are seen when the two stimuli are presented simultaneously, even though visual and tactile inputs do not reach their primary cortical projection areas, or multisensory convergence areas such as VIP, at the same time. Compensating for this difference in transmission delay does not increase multisensory integration effects (Wallace et al., 1996; Bell et al., 2001; present study) (Fig. 5, +50 ms delay condition). Note that the temporal window allowing multisensory integration is much narrower in VIP (<150 ms) than in the SC (600 ms).
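The compensation logic is simple arithmetic: if tactile responses arrive about 40 ms before visual ones, delaying the tactile stimulus by that amount should make both responses coincide, and the closest tested condition is the +50 ms SOA. The sketch below assumes that positive SOAs mean the tactile stimulus follows the visual one; the latency values are hypothetical, and only their 40 ms difference and the tested SOAs come from the text.

```python
TESTED_SOAS_MS = (-200, -100, -50, 0, 50, 100, 200)


def compensating_soa(visual_latency_ms, tactile_latency_ms, tested=TESTED_SOAS_MS):
    """Return (ideal tactile delay, closest tested SOA), both in ms.

    Assumed sign convention: positive = tactile stimulus presented after the visual one.
    """
    ideal = visual_latency_ms - tactile_latency_ms
    nearest = min(tested, key=lambda soa: abs(soa - ideal))
    return ideal, nearest


# Hypothetical latencies with the 40 ms tactile advantage reported in the text.
print(compensating_soa(100, 60))  # (40, 50)
```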

Spatial congruence

Previous work shows that spatial register between receptive fields is another key condition for two sensory cues to be integrated (Meredith and Stein, 1986b). Visual and tactile receptive fields in VIP are spatially aligned (Duhamel et al., 1998); in centrally fixating monkeys, they overlap by 80% (Avillac et al., 2005). This spatial register determines whether multisensory integration occurs. Moreover, spatial congruence mostly leads to response depression, in contrast to what is seen in the SC (Meredith and Stein, 1986b; Meredith et al., 1987; Wallace et al., 1996). Interestingly, multisensory interactions are also observed for spatially incongruent stimuli in a small subset of VIP neurons (20%). This may be attributable to interactions between portions of the nonclassical receptive fields, or to the fact that a subset of VIP neurons encodes visual space in terms of the projected impact point of movement trajectories in depth rather than the absolute location or origin of the stimulus (Colby et al., 1993; Duhamel et al., 1998), so that the receptive fields are aligned within a more complex, dynamic three-dimensional volume.
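A receptive field overlap percentage depends on how overlap is normalized. The sketch below assumes both receptive fields have already been mapped into a common 2D coordinate frame (for tactile fields this requires projecting the body-surface field into visual space) and approximates each as an axis-aligned rectangle, reporting the intersection as a fraction of the smaller field. The rectangle approximation and this normalization are hypothetical choices, not the method of Avillac et al. (2005); intersection over union would be an equally plausible alternative.

```python
def overlap_fraction(rf_a, rf_b):
    """Fraction of the smaller receptive field covered by the other one.

    Each RF is an axis-aligned rectangle (x_min, y_min, x_max, y_max) in a shared
    coordinate frame (e.g., degrees of visual angle); a hypothetical simplification.
    """
    ax0, ay0, ax1, ay1 = rf_a
    bx0, by0, bx1, by1 = rf_b
    width = max(0.0, min(ax1, bx1) - max(ax0, bx0))   # overlap along x (0 if disjoint)
    height = max(0.0, min(ay1, by1) - max(ay0, by0))  # overlap along y (0 if disjoint)
    area_a = (ax1 - ax0) * (ay1 - ay0)
    area_b = (bx1 - bx0) * (by1 - by0)
    return (width * height) / min(area_a, area_b)


visual_rf = (0, 0, 10, 10)    # hypothetical coordinates
tactile_rf = (5, 0, 15, 10)   # hypothetical projection into visual space
print(overlap_fraction(visual_rf, tactile_rf))  # 0.5
```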

Inverse effectiveness

We find that, in VIP, strong unimodal responses are associated with small enhancement effects and weak unimodal responses with large ones. This result is consistent with the principle of inverse effectiveness described in multisensory SC neurons (Meredith and Stein, 1986a; Wallace et al., 1996; Perrault et al., 2005; Stanford et al., 2005) and in other brain regions (Ghazanfar et al., 2005), and it makes ecological sense: multisensory gain is greatest when each cue alone is weak and thus least likely to be detected on its own.

Conclusions

Multisensory integration is a ubiquitous process found in midbrain structures and in the cortex. It is governed by a common set of rules: spatial congruence, temporal alignment, and the effectiveness of the sensory stimuli. The resulting neuronal signal is well suited to contribute to many functions, such as spatial orientation, attention, or goal-directed movement (Driver and Spence, 1998; Frens and Van Opstal, 1998).

We show that the majority of VIP cells perform multisensory integration, that this integration more often takes the form of depression than of enhancement, and, correspondingly, that it is more often sub-additive than super-additive or purely additive. We also show that multisensory integration affects neuronal response latencies in bimodal cells, with bimodal latencies intermediate between the two unimodal latencies. The functional significance of multisensory processing in VIP is still unclear, although several roles have been proposed: peripersonal space representation (Graziano and Gross, 1995; Ladavas and Farne, 2004), the guidance of head movements (Duhamel et al., 1998), and spatial navigation (Bremmer, 2005). Future experiments should address this issue by directly correlating multisensory interactions in single neurons with specific perceptual or behavioral functions.

Footnotes

We thank Belkacem Messaoudi and Anne Cheylus for technical support, and Etienne Olivier and Sophie Denève for enriching discussions on data analysis. J.-R.D. and S.B. were supported by National Science Foundation Grant 0346785 and Agence Nationale de la Recherche Grant ANR-05-NEUR-024-01. M.A. was supported by a French Ministry of Research fellowship.

References

- Avillac M, Ben Hamed S, Olivier E, Duhamel J-R. Multisensory integration in the macaque ventral intraparietal area (VIP). Soc Neurosci Abstr. 2003;29:912–4.

- Avillac M, Deneve S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Barraclough NE, Xiao D, Baker CI, Oram MW, Perrett DI. Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. J Cogn Neurosci. 2005;17:377–391. doi: 10.1162/0898929053279586.

- Bell AH, Corneil BD, Meredith MA, Munoz DP. The influence of stimulus properties on multisensory processing in the awake primate superior colliculus. Can J Exp Psychol. 2001;55:123–132. doi: 10.1037/h0087359.

- Bell AH, Corneil BD, Munoz DP, Meredith MA. Engagement of visual fixation suppresses sensory responsiveness and multisensory integration in the primate superior colliculus. Eur J Neurosci. 2003;18:2867–2873. doi: 10.1111/j.1460-9568.2003.02976.x.

- Bell AH, Meredith MA, Van Opstal AJ, Munoz DP. Crossmodal integration in the primate superior colliculus underlying the preparation and initiation of saccadic eye movements. J Neurophysiol. 2005;93:3659–3673. doi: 10.1152/jn.01214.2004.

- Benevento LA, Fallon J, Davis BJ, Rezak M. Auditory-visual interaction in single cells in the cortex of the superior temporal sulcus and the orbital frontal cortex of the macaque monkey. Exp Neurol. 1977;57:849–872. doi: 10.1016/0014-4886(77)90112-1.

- Ben Hamed S, Duhamel JR, Bremmer F, Graf W. Representation of the visual field in the lateral intraparietal area of macaque monkeys: a quantitative receptive field analysis. Exp Brain Res. 2001;140:127–144. doi: 10.1007/s002210100785.

- Bremmer F. Navigation in space. The role of the macaque ventral intraparietal area. J Physiol (Lond) 2005;566:29–35. doi: 10.1113/jphysiol.2005.082552.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Brett-Green B, Fifkova E, Larue DT, Winer JA, Barth DS. A multisensory zone in rat parietotemporal cortex: intra- and extracellular physiology and thalamocortical connections. J Comp Neurol. 2003;460:223–237. doi: 10.1002/cne.10637.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol. 1981;46:369–384. doi: 10.1152/jn.1981.46.2.369.

- Colby CL, Duhamel JR, Goldberg ME. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol. 1993;69:902–914. doi: 10.1152/jn.1993.69.3.902.

- Colonius H, Arndt P. A two-stage model for visual-auditory interaction in saccadic latencies. Percept Psychophys. 2001;63:126–147. doi: 10.3758/bf03200508.

- Cooke DF, Graziano MS. Defensive movements evoked by air puff in monkeys. J Neurophysiol. 2003;90:3317–3329. doi: 10.1152/jn.00513.2003.

- Corneil BD, Van Wanrooij M, Munoz DP, Van Opstal AJ. Auditory-visual interactions subserving goal-directed saccades in a complex scene. J Neurophysiol. 2002;88:438–454. doi: 10.1152/jn.2002.88.1.438.

- Driver J, Spence C. Attention and the crossmodal construction of space. Trends Cogn Sci. 1998;2:254–262. doi: 10.1016/S1364-6613(98)01188-7.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- Frens MA, Van Opstal AJ. Visual-auditory interactions modulate saccade-related activity in monkey superior colliculus. Brain Res Bull. 1998;46:211–224. doi: 10.1016/s0361-9230(98)00007-0.

- Frens MA, Van Opstal AJ, Van der Willigen RF. Spatial and temporal factors determine auditory-visual interactions in human saccadic eye movements. Percept Psychophys. 1995;57:802–816. doi: 10.3758/bf03206796.

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci. 2005;25:5004–5012. doi: 10.1523/JNEUROSCI.0799-05.2005.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Graziano MS, Gandhi S. Location of the polysensory zone in the precentral gyrus of anesthetized monkeys. Exp Brain Res. 2000;135:259–266. doi: 10.1007/s002210000518.

- Graziano MS, Gross CG. The representation of extrapersonal space: a possible role for bimodal, visual-tactile neurons. In: Gazzaniga MS, editor. The cognitive neurosciences. Cambridge, MA: MIT; 1995. pp. 1021–1034.

- Graziano MS, Reiss LA, Gross CG. A neuronal representation of the location of nearby sounds. Nature. 1999;397:428–430. doi: 10.1038/17115.

- Hays AV, Richmond BJ, Optican LM. A UNIX-based multiple-process system for real-time acquisition and control. WESCON Conference Proceedings. 1982:1–10.

- Hikosaka K, Iwai E, Saito H, Tanaka K. Polysensory properties of neurons in the anterior bank of the caudal superior temporal sulcus of the macaque monkey. J Neurophysiol. 1988;60:1615–1637. doi: 10.1152/jn.1988.60.5.1615.

- Hyvärinen J. Regional distribution of functions in parietal association area 7 of the monkey. Brain Res. 1981;206:287–303. doi: 10.1016/0006-8993(81)90533-3.

- Judge SJ, Richmond BJ, Chu FC. Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res. 1980;20:535–538. doi: 10.1016/0042-6989(80)90128-5.

- Kadunce DC, Vaughan JW, Wallace MT, Benedek G, Stein BE. Mechanisms of within- and cross-modality suppression in the superior colliculus. J Neurophysiol. 1997;78:2834–2847. doi: 10.1152/jn.1997.78.6.2834.

- King AJ, Palmer AR. Integration of visual and auditory information in bimodal neurones in the guinea-pig superior colliculus. Exp Brain Res. 1985;60:492–500. doi: 10.1007/BF00236934.

- Ladavas E, Farne A. Visuo-tactile representation of near-the-body space. J Physiol (Paris) 2004;98:161–170. doi: 10.1016/j.jphysparis.2004.03.007.

- Leinonen L, Hyvärinen J, Sovijarvi AR. Functional properties of neurons in the temporo-parietal association cortex of awake monkey. Exp Brain Res. 1980;39:203–215. doi: 10.1007/BF00237551.

- Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718.

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol. 1986a;56:640–662. doi: 10.1152/jn.1986.56.3.640.

- Meredith MA, Stein BE. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 1986b;365:350–354. doi: 10.1016/0006-8993(86)91648-3.

- Meredith MA, Stein BE. Spatial determinants of multisensory integration in cat superior colliculus neurons. J Neurophysiol. 1996;75:1843–1857. doi: 10.1152/jn.1996.75.5.1843.

- Meredith MA, Nemitz JW, Stein BE. Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J Neurosci. 1987;7:3215–3229. doi: 10.1523/JNEUROSCI.07-10-03215.1987.

- Perrault TJ, Jr, Vaughan JW, Stein BE, Wallace MT. Neuron-specific response characteristics predict the magnitude of multisensory integration. J Neurophysiol. 2003;90:4022–4026. doi: 10.1152/jn.00494.2003.

- Perrault TJ, Jr, Vaughan JW, Stein BE, Wallace MT. Superior colliculus neurons use distinct operational modes in the integration of multisensory stimuli. J Neurophysiol. 2005;93:2575–2586. doi: 10.1152/jn.00926.2004.

- Populin LC. Anesthetics change the excitation/inhibition balance that governs sensory processing in the cat superior colliculus. J Neurosci. 2005;25:5903–5914. doi: 10.1523/JNEUROSCI.1147-05.2005.

- Populin LC, Yin TC. Bimodal interactions in the superior colliculus of the behaving cat. J Neurosci. 2002;22:2826–2834. doi: 10.1523/JNEUROSCI.22-07-02826.2002.

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M. Afferent properties of periarcuate neurons in macaque monkeys. I. Somatosensory responses. Behav Brain Res. 1981;2:125–146. doi: 10.1016/0166-4328(81)90052-8.

- Schlack A, Hoffmann KP, Bremmer F. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1877–1886. doi: 10.1046/j.1460-9568.2002.02251.x.

- Schlack A, Sterbing-D'Angelo SJ, Hartung K, Hoffmann KP, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 2005;25:4616–4625. doi: 10.1523/JNEUROSCI.0455-05.2005.

- Schroeder CE, Foxe JJ. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res Cogn Brain Res. 2002;14:187–198. doi: 10.1016/s0926-6410(02)00073-3.

- Stanford TR, Quessy S, Stein BE. Evaluating the operations underlying multisensory integration in the cat superior colliculus. J Neurosci. 2005;25:6499–6508. doi: 10.1523/JNEUROSCI.5095-04.2005.

- Stein BE, Meredith MA. The merging of the senses. Cambridge, MA: MIT; 1993.

- Wallace MT, Meredith MA, Stein BE. Integration of multiple sensory modalities in cat cortex. Exp Brain Res. 1992;91:484–488. doi: 10.1007/BF00227844.

- Wallace MT, Wilkinson LK, Stein BE. Representation and integration of multiple sensory inputs in primate superior colliculus. J Neurophysiol. 1996;76:1246–1266. doi: 10.1152/jn.1996.76.2.1246.

- Wallace MT, Meredith MA, Stein BE. Multisensory integration in the superior colliculus of the alert cat. J Neurophysiol. 1998;80:1006–1010. doi: 10.1152/jn.1998.80.2.1006.

- Welch RB, Warren DH. Intersensory interactions. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of perception and human performance. New York: Wiley Interscience; 1986. pp. 25-1–25-36.