Abstract

Deficits in parsing concurrent auditory events are believed to contribute to older adults' difficulties in understanding speech in adverse listening conditions (e.g., cocktail party). To explore the level at which aging impairs sound segregation, we measured auditory evoked fields (AEFs) using magnetoencephalography while young, middle-aged, and older adults were presented with complex sounds that either had all of their harmonics in tune or had the third harmonic mistuned by 4 or 16% of its original value. During the recording, participants were asked to ignore the stimuli and watch a muted subtitled movie of their choice. For each participant, the AEFs were modeled with a pair of dipoles in the superior temporal plane, and the effects of age and mistuning were examined on the amplitude and latency of the resulting source waveforms. Mistuned stimuli generated an early positivity (60–100 ms), an object-related negativity (ORN) (140–180 ms) that overlapped the N1 and P2 waves, and a positive displacement that peaked at ∼230 ms (P230) after sound onset. The early mistuning-related enhancement was similar in all three age groups, whereas the subsequent modulations (ORN and P230) were reduced in older adults. These age differences in auditory cortical activity were associated with a reduced likelihood of hearing two sounds as a function of mistuning. The results reveal that inharmonicity is rapidly and automatically registered in all three age groups but that the perception of concurrent sounds declines with age.

Keywords: aging, auditory cortex, evoked potential, attention, speech, streaming, MEG

Introduction

Everyday social interactions often occur in noisy environments in which the voices of others as well as the sounds generated by surrounding objects (e.g., computer, radio, television) can degrade the quality of auditory information. This ambient noise presents a serious obstacle to communication because it can mask the voice of interest, making it difficult for subsequent perceptual and cognitive processes to extract speech signals and derive meaning from them. The impact of background noise on speech perception is greater in older than in young adults (Prosser et al., 1990; Tun and Wingfield, 1999; Schneider et al., 2000) and may be related to older adults' difficulties in partitioning the incoming acoustic wave into distinct representations (i.e., auditory scene analysis). This proposal is supported by studies showing higher thresholds for detecting a mistuned harmonic in older than in young adults (Alain et al., 2001a; Grube et al., 2003). This age difference remains even after controlling for age differences in hearing sensitivity (Alain et al., 2001a), suggesting an impairment in central auditory processing.

Nonetheless, the neural mechanisms underlying concurrent sound segregation and how they are affected by age are not fully understood. Event-related brain potential (ERP) studies have revealed an attention-independent component that peaks at ∼150 ms after sound onset, referred to as the object-related negativity (ORN) (Alain et al., 2001b, 2002; Alain and Izenberg, 2003; Dyson et al., 2005). The ORN overlaps in time with the N1 and P2 deflections elicited by sound onset and is best illustrated by the difference wave between ERPs elicited by complex sounds that are grouped into one auditory object and those elicited by complex sounds that are segregated into two distinct auditory objects based on frequency periodicity (Alain et al., 2001b, 2002; Alain and Izenberg, 2003), interaural time difference (Johnson et al., 2003; Hautus and Johnson, 2005; McDonald and Alain, 2005), or both (McDonald and Alain, 2005).

Recently, Snyder and Alain (2005) found an age-related decline in concurrent vowel identification, which was paralleled by a decrease in neural activity associated with this task. This age difference in concurrent sound segregation may reflect deficits in periodicity coding in the ascending auditory pathways (Palmer, 1990; Cariani and Delgutte, 1996) and/or primary auditory cortex (Dyson and Alain, 2004) in the left, right, or both hemispheres. Using magnetoencephalography (MEG), Hiraumi et al. (2005) found a right-hemisphere dominance in processing mistuned harmonics. The aim of the present study was therefore to use MEG to examine the level and locus at which aging affects concurrent sound segregation and perception. First, we measured middle- and long-latency auditory evoked fields to complex sounds that either had all of their harmonics in tune or included a mistuned partial. Then, in a subsequent experiment with the same participants, we assessed the effect of age on concurrent sound perception behaviorally. We hypothesized that the likelihood of reporting hearing two concurrent sounds as a function of mistuning would decline with age and would be associated with reduced ORN amplitude.

Materials and Methods

Participants.

Twelve young (mean age, 26.4 years; SD, 2.6; range, 22–31; 6 female; 1 left handed), 12 middle-aged (mean age, 45; SD, 4.2; range, 40–50; 7 female; 2 left handed), and 14 older (mean age, 66; SD, 5.2; range, 61–78; 7 female; 1 left handed) adults participated in the study. All participants had pure-tone thresholds ≤30 dB hearing level (HL) from 250 to 2000 Hz in both ears (Table 1), within the clinically normal range for these frequencies. Nevertheless, audiometric thresholds differed with age (F(2,34) = 10.15; p < 0.001): young adults had the lowest mean threshold (M = 2.55 dB HL), followed by middle-aged adults (M = 4.95 dB HL), and older adults had the highest (M = 12.93 dB HL). Planned comparisons revealed higher audiometric thresholds in older adults than in young or middle-aged adults (p < 0.005 in both cases). The difference in audiometric thresholds between young and middle-aged adults was not significant (p = 0.34). Participants were recruited from the local community and laboratory personnel. All participants provided informed consent in accordance with the guidelines established by the University of Toronto and Baycrest Centre for Geriatric Care. Data from one older adult were excluded because of excessive ocular artifacts.

Table 1.

Group mean audiometric thresholds (dB HL) by test frequency in young, middle-aged, and older adults

| Group | 250 Hz | 500 Hz | 1000 Hz | 2000 Hz |
|---|---|---|---|---|
| Young | −0.8 (5.5) | 6.0 (4.9) | 3.1 (6.0) | 1.9 (7.0) |
| Middle-aged | 0.6 (7.3) | 5.8 (8.2) | 7.1 (8.2) | 6.3 (9.4) |
| Older | 9.6 (10.0) | 14.6 (8.1) | 12.9 (7.4) | 14.6 (10.2) |

Values in parentheses are SD.
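The group means reported under Participants (2.55, 4.95, and 12.93 dB HL) can be reproduced by averaging each row of Table 1 across the four test frequencies. The short Python check below uses only the table values. `TABLE_1` and `pure_tone_average` are illustrative names and were not part of the original analysis.

```python
# Group mean thresholds (dB HL) from Table 1, ordered 250, 500, 1000, 2000 Hz.
TABLE_1 = {
    "Young": [-0.8, 6.0, 3.1, 1.9],
    "Middle-aged": [0.6, 5.8, 7.1, 6.3],
    "Older": [9.6, 14.6, 12.9, 14.6],
}


def pure_tone_average(thresholds):
    """Mean threshold across the tested frequencies (dB HL)."""
    return sum(thresholds) / len(thresholds)


for group, thresholds in TABLE_1.items():
    print(f"{group}: {pure_tone_average(thresholds):.2f} dB HL")
```

If every participant contributed a threshold at each frequency, averaging the frequency means gives the same value as averaging per-participant pure-tone averages.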

Stimuli and task.

The stimuli were complex sounds composed of 10 tonal elements of equal intensity. Stimuli were digitally generated using a System 3 Real-Time Processor from Tucker-Davis Technologies (Alachua, FL) and presented binaurally via an OB 822 Clinical Audiometer using ER30 transducers (Etymotic Research, Elk Grove Village, IL) and 2.5-m-long reflectionless plastic tubes. The stimuli were 40 ms in duration, including 2 ms rise/fall times, and were presented at 80 dB sound pressure level (SPL). Stimuli were either “harmonic,” in that all of the partials were integer multiples of a fundamental frequency (ƒ0) of 200 Hz, or “mistuned,” in that the third harmonic (600 Hz) was shifted upward by 4 or 16% of its original value (i.e., to 624 or 696 Hz).
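The sketch below synthesizes the three stimulus types in Python with NumPy to make the construction concrete. The 200 Hz fundamental, the 10 equal-amplitude partials, the 40 ms duration, the 2 ms ramps, and the upward shift of the third harmonic all come from the text. Several details are assumptions:

* the 48 kHz sampling rate
* the sine starting phase
* the raised-cosine ramp shape
* peak normalization

Calibration to 80 dB SPL happens in the playback hardware and is not modeled. `complex_tone` is a hypothetical helper.

```python
import numpy as np

F0 = 200.0         # fundamental frequency (Hz), from the text
N_PARTIALS = 10    # equal-amplitude tonal elements, from the text
DURATION = 0.040   # 40 ms total duration, from the text
RAMP = 0.002       # 2 ms rise/fall time, from the text
FS = 48000         # sampling rate (Hz): an assumption, not given in the text


def complex_tone(mistuning=0.0, fs=FS):
    """Return a 10-partial harmonic complex whose third harmonic is shifted
    upward by `mistuning` (a proportion: 0.0, 0.04, or 0.16)."""
    t = np.arange(int(round(DURATION * fs))) / fs
    freqs = F0 * np.arange(1, N_PARTIALS + 1)   # 200, 400, ..., 2000 Hz
    freqs[2] *= 1.0 + mistuning                 # index 2 = third harmonic (600 Hz)
    # Sum of equal-amplitude sinusoids; sine starting phase is an assumption.
    signal = np.sin(2 * np.pi * freqs[:, None] * t).sum(axis=0)

    # Raised-cosine onset and offset ramps (ramp shape is an assumption).
    n_ramp = int(round(RAMP * fs))
    ramp = 0.5 * (1 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    envelope = np.ones_like(t)
    envelope[:n_ramp] = ramp
    envelope[-n_ramp:] = ramp[::-1]

    signal = signal * envelope
    # Peak-normalize; the 80 dB SPL level would be set by the playback chain.
    return signal / np.max(np.abs(signal))


stimuli = {m: complex_tone(m) for m in (0.0, 0.04, 0.16)}
```

For example, `complex_tone(0.16)` moves the third partial from 600 Hz to 696 Hz. The other nine partials stay at integer multiples of 200 Hz.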

Auditory evoked fields (AEFs) in response to tuned and mistuned stimuli were recorded in a passive listening session while participants watched a muted subtitled movie of their choice. Three blocks of trials were presented. In one block, the interstimulus interval (ISI) varied randomly between 800 and 1200 ms in 100 ms steps to enable examination of the N1 and ORN components. Approximately 850 trials were recorded in this block. The remaining two blocks used an ISI of 160–260 ms in 10 ms steps to facilitate investigation of the middle-latency components, particularly the Na and Pa. Each of these blocks contained ∼3800 trials.
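The two ISI schedules can be expressed as draws from a discrete set of values. The text gives only the ranges and step sizes. The uniform sampling, the `isi_schedule` helper, and the random seeds below are therefore assumptions for illustration.

```python
import random


def isi_schedule(n_trials, lo_ms, hi_ms, step_ms, rng=None):
    """Draw one ISI per trial uniformly from lo_ms..hi_ms (inclusive) in step_ms steps."""
    rng = rng or random.Random()
    values = list(range(lo_ms, hi_ms + 1, step_ms))
    return [rng.choice(values) for _ in range(n_trials)]


# Long-latency block: 800-1200 ms in 100 ms steps, ~850 trials.
long_block = isi_schedule(850, 800, 1200, 100, random.Random(1))
# Each middle-latency block: 160-260 ms in 10 ms steps, ~3800 trials.
middle_block = isi_schedule(3800, 160, 260, 10, random.Random(2))
```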

Data acquisition and analysis.

The MEG recording took place in a magnetically shielded room using a helmet-shaped 151-channel whole-cortex neuromagnetometer (OMEGA; CTF Systems, VSM Medtech, Vancouver, British Columbia, Canada). To minimize movement, participants were lying down throughout the recording. The neuromagnetic activity was recorded with a sampling rate of 2500 Hz and low-pass filtered at 200 Hz. The analysis epoch included 200 ms of prestimulus activity and 200 or 600 ms of poststimulus activity, for middle- and long-latency evoked responses, respectively. We used BESA (Brain Electrical Source Analysis) software (version 5.1.6) for averaging and dipole source modeling. The artifact rejection threshold was adjusted for each participant such that a minimum of 90% of trials was included in the average. Before averaging the middle- and long-latency responses, the data were high-pass filtered at 16 and 1 Hz, respectively. AEFs were then averaged separately for each site and stimulus type. Middle- and long-latency AEFs were digitally filtered to attenuate frequencies >100 and 20 Hz, respectively.
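The averaging steps described above can be sketched with NumPy and SciPy:

1. high-pass filter the recording,
2. cut epochs,
3. apply a participant-specific rejection threshold that retains at least 90% of trials,
4. average, and
5. low-pass filter the average.

This is not BESA's implementation. The zero-phase fourth-order Butterworth filters and the peak-absolute-amplitude rejection criterion are assumptions, and `average_evoked` is a hypothetical helper. Baseline correction is omitted because the text does not describe it.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 2500  # MEG sampling rate in Hz, as stated in the Methods


def zero_phase(data, cutoff_hz, btype, fs=FS, order=4):
    """Zero-phase Butterworth filter along the last axis (filter design is an assumption)."""
    sos = butter(order, cutoff_hz, btype=btype, fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)


def average_evoked(continuous, onsets, hp_hz, lp_hz, pre_s=0.2, post_s=0.6,
                   keep_fraction=0.9, fs=FS):
    """Average epochs around `onsets` (sample indices) from `continuous`
    (n_channels x n_samples). Returns (evoked, n_trials_kept)."""
    # 1. High-pass filter the continuous recording (16 Hz or 1 Hz in the text).
    filtered = zero_phase(continuous, hp_hz, "highpass", fs)

    # 2. Cut epochs: 200 ms before onset, 200 or 600 ms after.
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    epochs = np.stack([filtered[:, s - pre:s + post] for s in onsets])

    # 3. Participant-specific threshold: the lowest per-trial peak amplitude
    #    that still retains at least `keep_fraction` of trials
    #    (a hypothetical stand-in for BESA's amplitude criterion).
    peaks = np.abs(epochs).max(axis=(1, 2))
    n_keep = int(np.ceil(keep_fraction * len(peaks)))
    threshold = np.sort(peaks)[n_keep - 1]
    kept = epochs[peaks <= threshold]

    # 4. Average, then low-pass the average (100 Hz or 20 Hz in the text).
    evoked = kept.mean(axis=0)
    return zero_phase(evoked, lp_hz, "lowpass", fs), len(kept)
```

For the long-latency responses, the call would be `average_evoked(data, onsets, hp_hz=1, lp_hz=20)`. For the middle-latency responses, it would be `average_evoked(data, onsets, hp_hz=16, lp_hz=100, post_s=0.2)`.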

A dipole source model consisting of one dipole in each temporal lobe (left and right) was used as a data reduction method. The model was fit to the grand-averaged data and then held constant to extract source waveforms for each stimulus condition in each participant. For the middle-latency responses, we fit a pair of dipoles over a short window that encompassed the Na and Pa waves. For the long-latency responses, we fit a pair of dipoles over a 40 ms interval centered on the peak of the N1 wave. Peak amplitude and latency were defined as the largest positivity or negativity in the individual source waveforms within a specific interval. The measurement intervals were 15–35 (Na), 25–45 (Pa), 30–70 (P1), 70–150 (N1), and 140–240 (P2) ms. Latencies were measured relative to sound onset. AEF amplitudes and latencies were analyzed using a mixed-model repeated-measures ANOVA with age as the between-subjects factor and mistuning and hemisphere as the within-subject factors. The effects of mistuning on AEF amplitude and latency were also analyzed by orthogonal polynomial decomposition (with a focus on the linear and quadratic trends). This analysis assessed whether the slope of changes in AEFs as a function of inharmonicity differed among young, middle-aged, and older adults. When appropriate, the degrees of freedom were adjusted with the Greenhouse–Geisser ε. All reported probability estimates are based on the reduced degrees of freedom, although the original degrees of freedom are reported.
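Peak measurement within fixed latency windows can be sketched as follows. The windows are those listed above. The expected polarity of each wave in the source waveforms (negative for Na and N1, positive for Pa, P1, and P2) is an assumption based on the component names. `pick_peak` is a hypothetical helper that operates on a single source waveform sampled at the times in `times_ms`.

```python
import numpy as np

# Measurement windows (ms after onset) from the Methods; the polarity
# assigned to each wave is an assumption based on the component names.
WINDOWS = {
    "Na": (15, 35, -1),
    "Pa": (25, 45, +1),
    "P1": (30, 70, +1),
    "N1": (70, 150, -1),
    "P2": (140, 240, +1),
}


def pick_peak(waveform, times_ms, component):
    """Return (amplitude, latency_ms) of the largest deflection of the
    expected polarity within the component's measurement window."""
    start, stop, polarity = WINDOWS[component]
    idx = np.flatnonzero((times_ms >= start) & (times_ms <= stop))
    best = idx[np.argmax(polarity * waveform[idx])]
    return waveform[best], times_ms[best]
```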

Behavioral task.

After the MEG recordings, participants completed a behavioral task in a double-walled sound-attenuated chamber (model 1204A; Industrial Acoustics, Bronx, NY). Before data collection, participants heard sample trials with 500 ms versions of the stimuli to familiarize them with the task and response box. These longer sample stimuli were intended to make the different stimulus types easier to distinguish. The experimental task itself used the same 40 ms stimuli as the MEG recording. After each stimulus, participants indicated whether they heard one sound (i.e., a buzz) or two sounds (i.e., a buzz plus another sound with a pure-tone quality). Responses were registered using a multibutton response box, and the next stimulus was presented 1500 ms after the previous response. Participants did not receive any feedback on their performance. A total of 300 trials were presented over two blocks (100 of each stimulus type: 0, 4, and 16% mistuning). The stimuli were presented at 80 dB SPL over Eartone 3A insert earphones using a System 3 Real-Time Processor and a GSI 61 Clinical Audiometer.

Results

Dipole source location

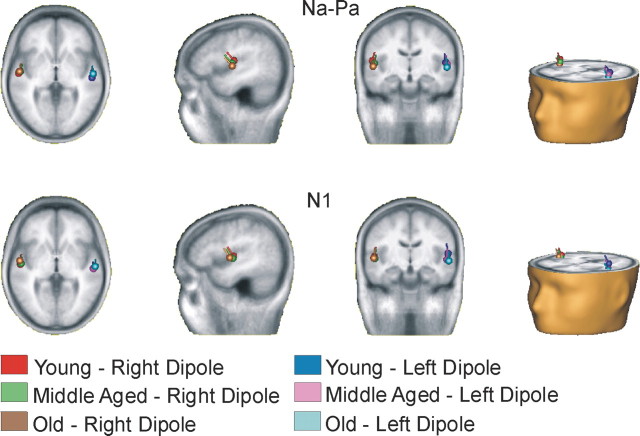

Figure 1 shows the group mean source locations for the Na–Pa and N1 waves. In both cases, the sources were located along the superior temporal plane near Heschl's gyrus. The effects of age on Na–Pa and N1 source locations were examined with a separate ANOVA on each source coordinate (x, y, and z). Each ANOVA had component (Na–Pa and N1), hemisphere (left and right), and group as factors. Overall, the Na–Pa and N1 source locations in the right hemisphere were anterior to those in the left hemisphere (F(1,34) = 57.23; p < 0.001). Na–Pa and N1 locations did not differ along the anterior–posterior axis, nor was the effect of age significant. However, the N1 source locations were inferior to those obtained for the Na–Pa responses (F(1,34) = 9.51; p < 0.001). Overall, the older adults' source locations were inferior to those of young adults (main effect of age, F(2,34) = 3.68; p < 0.05; young vs older adults, p < 0.02). Last, there was a significant group × component × hemisphere interaction (F(2,34) = 5.79; p < 0.01). To clarify this interaction, the effects of age were examined separately for the Na–Pa and N1 source locations. For the Na–Pa, there was a main effect of age (F(2,34) = 3.50; p < 0.05), with Na–Pa sources being more inferior in older than in young adults (p < 0.02). For the N1, there was a significant group × hemisphere interaction (F(2,34) = 4.08; p < 0.05), attributable to an age effect on the source location in the left hemisphere only. Left N1 sources were more inferior in middle-aged and older adults than in young adults (p = 0.051 and 0.02, respectively).

Figure 1.

Group mean locations of the left and right dipoles overlaid on the magnetic resonance imaging template from BESA (version 5.1.6).

Auditory evoked fields

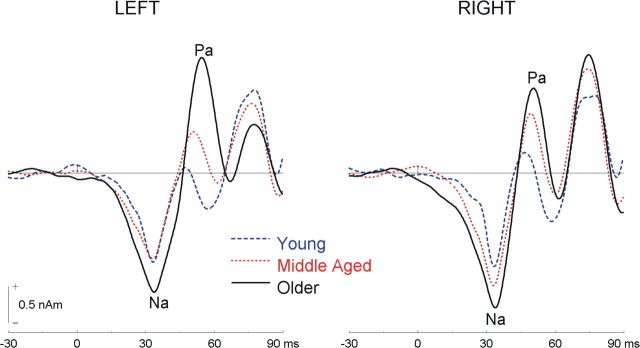

Middle-latency AEF source waveforms from auditory cortex are shown in Figure 2. The source waveforms comprise several transient responses, including the Na and Pa. These peak at ∼24 and 38 ms, respectively, after subtracting the 10 ms delay of the tube system. The effect of mistuning on Na and Pa peak amplitude and latency was not significant, and neither was the group × mistuning interaction. The main effect of age on the Na peak amplitude or latency was not significant (F(2,33) = 1.99 and 1.54, respectively). However, there was a main effect of age on the Pa peak amplitude and peak latency (F(2,34) = 3.78 and 6.55, respectively; p < 0.05 in both cases). Pairwise comparisons revealed larger Pa amplitude in older than in young adults (p < 0.02). Older adults also tended to generate larger Pa amplitudes than middle-aged adults, but this difference did not reach significance (p = 0.063). Pa amplitude did not differ significantly between young and middle-aged adults. For the Pa latency, pairwise comparisons revealed longer Pa latency for middle-aged (M = 39 ms) and older (M = 40 ms) adults than for young adults (M = 35 ms; p < 0.01 in both cases). There was no difference in Pa latency between middle-aged and older adults.

Figure 2.

Group mean source waveforms for middle-latency evoked fields averaged over stimulus type in young, middle-aged, and older adults.

Figure 3 shows long-latency AEF source waveforms. In all three age groups, complex sounds generated P1, N1, and P2 waves, peaking at ∼53, 109, and 197 ms, respectively, after sound onset. Although the P1 and N1 appear larger in older adults, these differences were not statistically reliable (F(2,34) < 1 in both cases), nor was the difference in P1 latency among the three age groups (F(2,34) < 1). However, the effect of age on N1 latency was significant (F(2,34) = 4.27; p < 0.05), with older adults showing a delayed N1 relative to middle-aged adults (p < 0.01). No other pairwise comparisons reached significance.

Figure 3.

Group mean source waveforms for long-latency evoked fields in young, middle-aged, and older adults. The ORN is best illustrated in the difference in source waveforms between the tuned and the 16% mistuned stimuli.

In a recent MEG study, Hiraumi et al. (2005) reported a larger and later N1 for mistuned than for tuned stimuli in the right hemisphere, suggesting a right-hemisphere dominance in segregating a mistuned partial. In the present study, an ANOVA on N1 peak amplitude and latency yielded a main effect of mistuning for latency only (F(2,68) = 16.07; linear trend, F(1,34) = 28.79; p < 0.001 in both cases). This effect may be accounted for by the ORN being superimposed on the N1 wave (see below). The main effect of mistuning on N1 amplitude was not significant, and neither was the hemisphere × mistuning interaction. However, the N1 was larger in the right than in the left hemisphere (F(1,34) = 11.55; p < 0.005).

Last, the P2 wave showed similar amplitude in young, middle-aged, and older adults (F(2,34) < 1), although there was a main effect of age on the P2 latency (F(2,30) = 4.94; p < 0.05). Pairwise comparisons revealed a delayed P2 in older adults relative to middle-aged adults (p < 0.005). The difference in P2 latency between young and older adults failed to reach significance (p = 0.08). The ANOVA on P2 latency also revealed a main effect of mistuning (F(2,68) = 10.03; linear trend, F(1,34) = 17.03; p < 0.001 in both cases). The group × mistuning interaction was not significant.

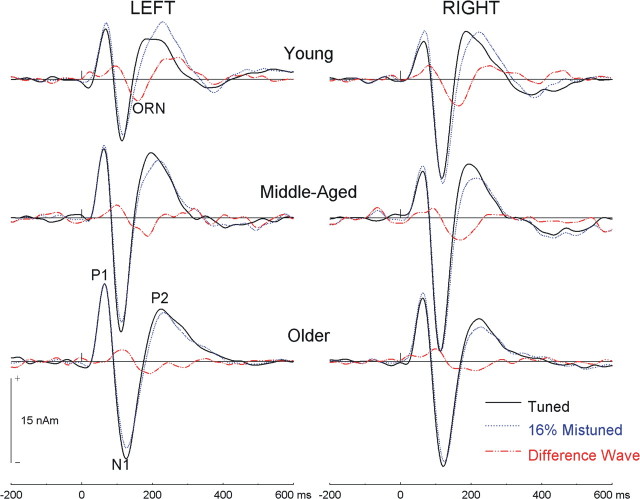

Object-related negativity

The neural activity associated with processing a mistuned harmonic is best illustrated by subtracting the source waveform obtained for the tuned stimulus from those obtained for the 4 and 16% mistuned stimuli (cf. Alain et al., 2001b). In young adults, this subtraction yielded a triphasic response composed of an early positive peak (∼80 ms), followed by the ORN (∼160 ms) and a second positive peak (∼250 ms) (Fig. 3). The modulations before and after the ORN were caused by shifts in N1 and P2 latency, respectively, with increased mistuning. Difference source waveforms for young, middle-aged, and older adults are shown in Figure 4. The effects of age on neural activity associated with processing a mistuned harmonic were quantified by comparing mean amplitude over three intervals: 60–100, 140–180, and 230–270 ms.
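The subtraction and window averaging described above amount to two array operations. The sketch uses hypothetical helpers `difference_wave` and `window_means` and treats windows as inclusive. The same `window_means` function can also be applied to each stimulus's own source waveform, which is what a three-level mistuning factor in the ANOVAs requires.

```python
import numpy as np

# Mean-amplitude windows (ms after onset), from the Results.
DIFF_WINDOWS = {"early positivity": (60, 100), "ORN": (140, 180), "P230": (230, 270)}


def difference_wave(mistuned, tuned):
    """Mistuned-minus-tuned source waveform (same shape, same time base)."""
    return np.asarray(mistuned) - np.asarray(tuned)


def window_means(waveform, times_ms, windows=DIFF_WINDOWS):
    """Mean amplitude of `waveform` within each inclusive (start, stop) window."""
    return {
        name: float(waveform[(times_ms >= start) & (times_ms <= stop)].mean())
        for name, (start, stop) in windows.items()
    }
```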

Figure 4.

Object-related negativity: age effects. The difference in source waveforms between the tuned and the 16% mistuned stimuli in young, middle-aged, and older adults is shown.

For the 60–100 ms interval, there was a main effect of mistuning (F(2,68) = 12.45; p < 0.001; linear trend, F(1,34) = 19.45; p < 0.001). Pairwise comparisons revealed greater positivity for complex sounds with a 16% mistuned harmonic than for the tuned and the 4% mistuned stimuli (p < 0.001 in both cases). Source waveform amplitude did not differ between the tuned and the 4% mistuned stimuli. The main effect of age was not significant, and neither was the group × mistuning interaction. The mistuning × hemisphere interaction was also not significant.

For the 140–180 ms interval, the effect of mistuning was significant (F(2,68) = 22.51; p < 0.001; linear and quadratic trends, F(1,34) = 31.80 and 7.05, respectively; p < 0.02 in both cases). The source waveforms were more negative when the complex sound contained a 16% mistuned harmonic than when it was tuned or contained a 4% mistuned harmonic (p < 0.001 in both cases). There was no difference in source waveforms between the tuned and 4% mistuned stimuli. The main effect of age was significant (F(2,34) = 4.74; p < 0.05). More importantly, the group × mistuning interaction was significant (F(4,68) = 3.73; p < 0.05; linear and quadratic trends, F(2,34) = 3.37 and 4.35, respectively; p < 0.05 in both cases). Planned comparisons revealed smaller ORN amplitude in older than in young adults (p < 0.05). ORN amplitude did not differ significantly between young and middle-aged adults or between middle-aged and older adults. Last, the mistuning × hemisphere interaction was significant (linear trend, F(1,34) = 8.01; p < 0.01), reflecting greater ORN amplitude in the right than in the left hemisphere. The group × mistuning × hemisphere interaction was not significant, indicating that the age-related decrease in ORN amplitude was similar in both hemispheres.

The ANOVA on the mean amplitude between 230 and 270 ms yielded a main effect of mistuning (F(2,68) = 3.92; p < 0.05; linear trend, F(1,34) = 5.08; p < 0.05). The source waveforms were more positive when the harmonic was mistuned by 16% than for the tuned (p < 0.05) or the 4% mistuned (p = 0.053) stimuli. There was no difference in source waveform amplitude between the tuned and the 4% mistuned stimuli. In addition, there was a significant group × mistuning interaction (F(4,68) = 3.92; p < 0.02; linear trend, F(2,34) = 5.30; p = 0.01). Separate ANOVAs for each group revealed mistuning-related changes in source waveform amplitude only in young adults (F(2,22) = 4.94; p < 0.05; linear trend, F(1,11) = 6.24; p < 0.05). The group × mistuning × hemisphere interaction was not significant.

Behavioral results

In the behavioral task, which used the same stimuli as the MEG measurements, listeners indicated whether they heard one sound (i.e., a buzz) or two sounds (i.e., a buzz plus another sound with a pure-tone quality). Figure 5 shows the effects of age on listeners' likelihood of reporting hearing two sounds as a function of mistuning. The effects of age on concurrent sound perception as a function of mistuning were analyzed by orthogonal polynomial decomposition (with a focus on the linear and quadratic trends). Overall, listeners' likelihood of reporting hearing two concurrent sounds increased with mistuning in all three age groups (main effect of mistuning, F(2,68) = 113.67; p < 0.001; linear and quadratic trends, F(1,34) = 145.03 and 51.93, respectively; p < 0.001 in both cases). More importantly, the group × mistuning interaction was significant (F(4,68) = 9.03; p < 0.001; linear trend, F(2,34) = 11.98; p < 0.001; quadratic trend, F(2,34) = 3.21; p = 0.053), indicating that concurrent sound perception was affected by age. Young and middle-aged adults showed similar increases in reporting hearing two concurrent auditory objects with increasing mistuning. However, when the harmonics were in tune, older adults were more likely than young adults to report hearing two concurrent sounds (t(23) = 2.22; p < 0.05). Conversely, when the partial was mistuned by 16%, older adults were less likely than young adults to report hearing two concurrent sounds (t(23) = 3.62; p = 0.001).
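The linear and quadratic trends reported here are single-degree-of-freedom contrasts across the three mistuning levels. The sketch below assumes the levels are coded as equally spaced; the text does not say whether equal or metric spacing of 0, 4, and 16% was used. Each participant's three condition means are reduced to one contrast score. The group × trend interaction is then equivalent to a one-way ANOVA on those scores across age groups, which yields the F(2,34) degrees of freedom reported for the linear trend interaction with 37 participants. `trend_scores` and `group_by_trend_F` are hypothetical helpers; `scipy.stats.f_oneway` is SciPy's one-way ANOVA.

```python
import numpy as np
from scipy.stats import f_oneway

# Orthogonal polynomial coefficients for three equally spaced levels
# (0, 4, 16% treated as ordinal steps; metric spacing would change them).
LINEAR = np.array([-1.0, 0.0, 1.0])
QUADRATIC = np.array([1.0, -2.0, 1.0])


def trend_scores(condition_means, contrast):
    """condition_means: (n_participants, 3) array, columns ordered 0, 4, 16%.
    Returns one contrast score per participant."""
    return np.asarray(condition_means, dtype=float) @ contrast


def group_by_trend_F(groups, contrast):
    """Group x trend interaction as a one-way ANOVA on contrast scores.
    `groups` is a list of (n_i, 3) arrays, one per age group."""
    return f_oneway(*(trend_scores(g, contrast) for g in groups))
```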

Figure 5.

Perceptual judgment. The likelihood of reporting hearing two concurrent sounds as a function of mistuning in young, middle-aged, and older adults is shown. The error bars indicate SEM.

This age difference in concurrent sound perception could partly reflect the elevated audiometric thresholds of older adults. To assess the impact of this factor on behavioral judgments, we conducted an analysis of covariance with two covariates: the mean audiometric threshold across 250, 500, 1000, and 2000 Hz and the slope of the audiogram over these frequencies. The analysis of covariance yielded a significant group × mistuning interaction (linear trend, F(2,32) = 7.23; p < 0.005). This result indicates that the age effect on concurrent sound perception remains even after controlling for age differences in hearing sensitivity.
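The two covariates can be computed from each participant's audiogram. The text does not specify how the audiogram slope was defined. The sketch below assumes a least-squares slope in dB per octave, obtained by regressing threshold on log2 frequency. `audiogram_covariates` is a hypothetical helper, and the ANCOVA itself is not shown.

```python
import numpy as np

FREQS_HZ = np.array([250, 500, 1000, 2000])


def audiogram_covariates(thresholds_db):
    """Return (mean threshold in dB HL, slope in dB per octave) over 250-2000 Hz.
    The per-octave least-squares slope is an assumed definition."""
    thresholds_db = np.asarray(thresholds_db, dtype=float)
    octaves = np.log2(FREQS_HZ / FREQS_HZ[0])        # 0, 1, 2, 3
    slope, _intercept = np.polyfit(octaves, thresholds_db, deg=1)
    return thresholds_db.mean(), slope
```

Applied to the young-adult means in Table 1, it returns a mean of about 2.55 dB HL and a slope of about 0.52 dB per octave.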

Brain behavior correlations

Figure 6 illustrates the changes in neuromagnetic responses during the ORN interval as a function of listeners' subjective reports of hearing two concurrent sound objects. As mistuning increases, the source waveforms become more negative and listeners become more likely to report hearing two concurrent sounds. To quantify this relationship, Spearman rank-order correlations were calculated for each group with participants entered as a blocking variable (Taylor, 1987). For the 140–180 ms interval measured from the right-hemisphere source waveforms, the Spearman correlation coefficient across subjects was −0.70 (p < 0.001) for young adults, −0.76 (p < 0.005) for middle-aged adults, and −0.34 (p = 0.08) for older adults. In the older adult group, three individuals showed a pattern opposite to that of the remaining participants. When these individuals were excluded, the group average correlation was −0.64 (p < 0.02). In contrast, left-hemisphere source waveform amplitude did not correlate significantly with participants' phenomenological experience. The group mean Spearman correlation coefficients for the left hemisphere were −0.24, −0.35, and −0.16 for young, middle-aged, and older adults, respectively. We also examined the relationship between perception and the neuromagnetic responses before and after the ORN. For the 60–100 ms interval, the Spearman correlation between right-hemisphere source waveform amplitude and listeners' perception was significant in young (r = 0.70; p < 0.01), middle-aged (r = 0.45; p < 0.05), and older (r = 0.49; p < 0.05) adults. There was no significant correlation between left-hemisphere source waveform amplitude and perception. For the 230–270 ms interval, only the left-hemisphere source waveforms correlated with perception in young adults (r = 0.70; p < 0.001), although there was a borderline effect in the right hemisphere (r = 0.36; p = 0.07). There was no significant correlation between source waveform amplitude and perception in middle-aged or older adults.
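The text does not detail how participants were entered as a blocking variable. One plausible reading, consistent with the reported group mean coefficients, is to:

1. compute a Spearman correlation within each participant across the three mistuning levels, and
2. average the coefficients within a group.

The sketch below implements that reading only, and `mean_within_subject_spearman` is a hypothetical helper. With three conditions per participant, each individual coefficient can take only a few values. A participant whose responses do not vary yields an undefined coefficient, which is skipped in the average.

```python
import numpy as np
from scipy.stats import spearmanr


def mean_within_subject_spearman(amplitudes, p_two):
    """amplitudes, p_two: (n_participants, n_conditions) arrays, columns ordered
    by mistuning (0, 4, 16%). Returns (mean rho, per-participant rhos)."""
    rhos = []
    for amp_row, resp_row in zip(np.asarray(amplitudes, float), np.asarray(p_two, float)):
        rho, _p = spearmanr(amp_row, resp_row)   # p-value unused with 3 points
        rhos.append(rho)                         # nan if a row is constant
    rhos = np.array(rhos)
    return float(np.nanmean(rhos)), rhos
```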

Figure 6.

Brain/behavior relationship. Group mean changes in source waveform amplitude during the ORN interval (i.e., 140–180 ms) are plotted against listeners' likelihood of reporting hearing two concurrent sounds as a function of mistuning for young, middle-aged, and older adults. RH, Right hemisphere; LH, left hemisphere; ERF, event-related field.

In a second analysis, we examined the relationship between two measures, with each participant contributing one observation. The first was the mistuning-related change in source waveform amplitude (obtained from the difference between the tuned and the 16% mistuned stimuli). The second was a composite index of perception that takes response bias into account (i.e., the proportion of “two sounds” reports for the 16% mistuned stimuli minus that for the tuned stimuli). For the 60–100 ms interval, there was a significant Pearson correlation between amplitude and perception in both the right and left hemispheres (r = 0.39 and 0.38, respectively; p < 0.05 in both cases). For the 140–180 ms interval, ORN amplitude correlated significantly with perception in both the right (r = −0.53; p < 0.001) and left (r = −0.38; p < 0.05) hemispheres, with ORN amplitude increasing with subjective reports of hearing two concurrent sound objects. Last, for the 230–270 ms interval, the difference source waveform amplitude did not correlate significantly with perception in either the right (r = 0.21) or the left (r = 0.28) hemisphere.
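The second analysis uses one observation per participant: a bias-corrected perceptual index and the 16%-minus-tuned amplitude in a given window. The sketch below computes the correlation with SciPy's `pearsonr`. `composite_index` and `brain_behavior_r` are hypothetical helper names.

```python
import numpy as np
from scipy.stats import pearsonr


def composite_index(p_two_tuned, p_two_16):
    """Bias-corrected index per participant: P("two" | 16%) - P("two" | tuned)."""
    return np.asarray(p_two_16, dtype=float) - np.asarray(p_two_tuned, dtype=float)


def brain_behavior_r(diff_amplitudes, p_two_tuned, p_two_16):
    """Pearson r across participants between the 16%-minus-tuned source
    amplitude in one window and the composite perceptual index."""
    r, p = pearsonr(diff_amplitudes, composite_index(p_two_tuned, p_two_16))
    return r, p
```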

Discussion

This study demonstrated an age-related decline in concurrent sound perception that was paralleled by neuromagnetic changes in auditory cortex. In all three age groups, the likelihood of reporting hearing two concurrent auditory objects increased with mistuning. However, older adults were less likely to report perceiving two concurrent sounds, even when the partial was mistuned by 16% of its original value. This is a large amount of mistuning. It was chosen because it usually leads to a “pop-out” effect, much as a visual target defined by a unique color pops out of a display filled with homogeneous distractors of a different color (Treisman and Gelade, 1980). It is also well above older adults' thresholds for detecting mistuning, which averaged ∼5–6% for 100 ms sounds (Alain et al., 2001a). More importantly, the effect of age on concurrent sound perception remained even after controlling for differences in hearing sensitivity among age groups. Hence, the age difference in concurrent sound perception cannot easily be accounted for by problems in the peripheral auditory system (e.g., broadening of auditory filters). It is more likely to involve changes in central auditory processes supporting concurrent sound segregation and perception.

In all three age groups, processing of complex sounds composed of several tonal elements was associated with activity in or near primary auditory cortex. In addition to the P1, N1, and P2 waves, the 16% mistuned stimuli generated an early mistuning-related enhancement in positivity. This enhancement was similar in all three age groups and may reflect an early registration of inharmonicity in primary auditory cortex. Given the 200 Hz fundamental frequency of the complex sound, the latency of this effect (∼80 ms) implies that frequency periodicity, on which concurrent sound segregation depends, is extracted quickly (i.e., within a span corresponding to 16 periods of the fundamental). This rapid registration of inharmonicity is consistent with findings from a recent study by Dyson and Alain (2004), who found an effect of mistuning on auditory evoked potentials as early as 30 ms after sound onset. Evidence from animal studies also suggests that frequency periodicity is already coded in the auditory nerve (Palmer, 1990; Cariani and Delgutte, 1996) and inferior colliculus (Sinex et al., 2002, 2005). Together, these findings suggest that segregation based on periodicity coding may take place in auditory pathways before the primary and/or associative auditory cortices. More importantly, this early and automatic registration of a suprathreshold mistuned partial is little affected by aging. Instead, age effects on concurrent sound perception were associated with changes occurring at a subsequent stage of processing (i.e., after inharmonicity has been extracted).
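The cycle count is the effect latency multiplied by the fundamental frequency. Applying the same arithmetic to the stimulus duration shows that the 40 ms sound itself contains only 8 periods. The 16-period figure therefore describes the time elapsed since onset rather than the number of stimulus cycles heard:

$$
n = t\,f_0, \qquad 0.080\ \text{s} \times 200\ \text{Hz} = 16, \qquad 0.040\ \text{s} \times 200\ \text{Hz} = 8
$$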

In the present study, older adults were less likely to report hearing the 16% mistuned harmonic as a separate object, and this change in subjective report was paralleled by a decrease in ORN amplitude. The ORN is a relatively new ERP component that correlates with listeners' likelihood of reporting hearing two concurrent sound objects (Alain et al., 2001b). Its generation is minimally affected by manipulations of attentional load (Alain and Izenberg, 2003; Dyson et al., 2005). Sounds segregated on the basis of harmonicity, interaural time difference, or both generate an ORN, indicating that this component indexes a relatively general process that groups sounds according to common features. In agreement with previous research, ORN amplitude correlated with perceptual judgment in young and middle-aged adults, with a similar trend in older adults. The ORN was also obtained even when participants were not attending to the stimuli, consistent with the proposal that concurrent sound segregation may occur independently of listeners' attention. The age-related decrease in ORN amplitude provides additional evidence that older adults experience difficulties in parsing concurrent sounds. Older adults did show elevated thresholds relative to young adults. However, this small difference in hearing sensitivity is unlikely to account for the reduced ORN amplitude observed in the present study. N1 amplitude, which is thought to index sound audibility (Martin et al., 1997, 1999; Oates et al., 2002; Martin and Stapells, 2005), was similar in all three age groups. This suggests that older adults could adequately hear the complex sounds and that age differences emerged only with respect to concurrent sound segregation and perception.

The age-related decrease in ORN amplitude may reflect a failure to automatically group tonal elements into concurrent, yet separate, sound objects. Alternatively, the grouping process itself may be little affected by age, whereas aging impairs listeners' ability to “access” information in sensory memory. Evidence from magnetic resonance imaging suggests that aging is associated with damage to white matter tracts caused by axonal loss (O'Sullivan et al., 2001; Charlton et al., 2006). This damage is thought to disrupt the flow of information among nodes of the neural networks supporting working memory (Charlton et al., 2006) and could similarly disrupt networks supporting concurrent sound perception.

In the present study, the effect of mistuning on source waveforms was greater in the right than in the left hemisphere. Moreover, the amplitude of the source waveforms from the right hemisphere correlated significantly with perception, whereas source activity from the left auditory cortex did not. These findings are consistent with the proposal of a right-hemisphere dominance in processing mistuned harmonics (Hiraumi et al., 2005). The right hemisphere is thought to play an important role in processing spectral complexity and may be particularly involved in encoding signal periodicity (Hertrich et al., 2004). However, the laterality difference in the present study occurred later than the one observed by Hiraumi et al. (2005). This small discrepancy may be related to several factors, including differences in sound duration, the number of trials presented to participants, and the use of fixed versus random sound presentation. For example, previous behavioral research has shown that thresholds for detecting inharmonicity decrease with increasing sound duration (Moore et al., 1985; Alain et al., 2001a). Hence, the longer stimulus durations used by Hiraumi et al. (2005) may have produced earlier ORN latencies, so that the ORN would have overlapped the N1 wave and caused an enhanced N1 amplitude and a shift in N1 latency.
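One common way to summarize a hemispheric asymmetry like the one described above is a laterality index. The sketch below assumes the index LI = (|R| − |L|) / (|R| + |L|), computed from the magnitude of the mistuning effect in each hemisphere, so that positive values indicate right-hemisphere dominance. This index is an illustrative choice rather than the statistic reported in the present study, and the function name and input values are hypothetical.

```python
def laterality_index(right, left):
    """Hypothetical laterality index (|R| - |L|) / (|R| + |L|).

    `right` and `left` are mistuning-effect sizes (e.g., mistuned-minus-tuned
    source amplitudes) for each hemisphere. Only magnitudes are compared, so
    the sign convention of the source waveforms does not matter.
    Returns a value in [-1, 1]; > 0 means a larger right-hemisphere effect.
    """
    r, l = abs(right), abs(left)
    if r + l == 0:
        raise ValueError("both effects are zero; index is undefined")
    return (r - l) / (r + l)


# A right-hemisphere effect three times larger than the left one.
print(laterality_index(right=-3.0, left=-1.0))  # 0.5
```

Taking absolute values keeps the index interpretable when both effects are negative-going deflections, as with the ORN; comparing signed amplitudes would make the result depend on dipole orientation.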

The perception of concurrent sounds was also associated with an enhanced positivity peaking at ∼230 ms after sound onset (P230). This modulation likely reflects an automatic process, because participants were not required to attend or respond to the stimuli during the recording of neuromagnetic activity. The enhancement may also index involuntary attention capture when the mistuned harmonic popped out of the harmonic series.

Middle-latency evoked responses

In the present study, the sources of the Na–Pa responses were located in the planum temporale along the Sylvian fissure and could be separated from the N1 sources. The time course of the source waveforms revealed an age-related increase in Pa amplitude, which is consistent with previous EEG studies (Chambers and Griffiths, 1991; Amenedo and Diaz, 1998). This age-related increase in amplitude may reflect a failure to inhibit responses to repetitive auditory stimuli, a deficit that has been associated with age-related changes in prefrontal cortex (Chao and Knight, 1997). However, the enhanced amplitude could also be partly related to inhibitory deficits in the cochlear nucleus (Caspary et al., 2005) and/or at other relays along the ascending auditory pathway (e.g., the inferior colliculus).
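Middle-latency peaks such as Pa are commonly measured as the extreme value of the source waveform within a latency window. A minimal sketch, assuming a positive-going Pa and a 20–40 ms search window; both the window and the function name are illustrative assumptions rather than the parameters used in the present study.

```python
def peak_in_window(wave, srate_hz, t0_ms, start_ms, end_ms):
    """Return (latency_ms, amplitude) of the most positive sample in a window.

    Sample i of `wave` is taken to occur at t0_ms + i * 1000 / srate_hz.
    If several samples share the maximum, the earliest one is returned.
    """
    step_ms = 1000.0 / srate_hz
    best = None
    for i, value in enumerate(wave):
        t_ms = t0_ms + i * step_ms
        if start_ms <= t_ms <= end_ms and (best is None or value > best[1]):
            best = (t_ms, value)
    if best is None:
        raise ValueError("no samples fall inside the window")
    return best


# Toy source waveform: 1000 Hz sampling, first sample at -50 ms.
wave = [0.0] * 200
wave[80] = 1.2   # 30 ms: inside the assumed 20-40 ms Pa window
wave[150] = 5.0  # 100 ms: larger, but outside the window, so ignored
print(peak_in_window(wave, srate_hz=1000.0, t0_ms=-50.0, start_ms=20.0, end_ms=40.0))
# (30.0, 1.2)
```

Restricting the search to a window keeps later, larger deflections such as N1 from being mistaken for Pa; an age comparison would then contrast the returned amplitudes across groups.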

Concluding remarks

The auditory environment is complex and often involves many sound sources that are simultaneously active. Therefore, listeners' ability to partition the incoming acoustic wave into its constituents is paramount to solving the cocktail party problem. Here, we show that normal aging impairs auditory processing at various levels of the auditory system. We found an early age-related change in sensory registration, which could be partly related to changes in the inhibitory properties of auditory neurons. In addition, we found age-related differences in auditory cortical activity that paralleled older adults' difficulties in parsing concurrent auditory events. Hence, the results show that concurrent auditory objects are represented in auditory cortices and that normal aging impedes listeners from forming accurate representations of concurrent sound sources and/or prevents them from accessing those representations. Middle-aged adults showed brain responses and performance similar to those of young adults, indicating that age-related problems in concurrent sound segregation and perception emerge mostly after the fifth decade of life.

The effects of age on concurrent sound perception contrast with the lack of age-related changes in auditory stream segregation (Alain et al., 1996; Snyder and Alain, 2007), which requires listeners to process successive tones sequentially over several seconds. This apparent dissociation between the effects of age on concurrent and sequential sound segregation might be attributable to distinct neural mechanisms underlying these two types of sound segregation. Whereas concurrent sound perception depends on a fine-grained analysis of incoming acoustic data, the segregation of sequentially occurring stimuli may rely on a coarser representation of acoustic information, which may remain relatively spared by normal aging.

Footnotes

This work was supported by grants from the Canadian Institutes of Health Research, the Natural Sciences and Engineering Research Council of Canada, and the Hearing Research Foundation of Canada. We thank Ben Dyson and Malcolm Binns for valuable discussion and for their suggestions on a previous version of this manuscript.

References

- Alain C, Izenberg A. Effects of attentional load on auditory scene analysis. J Cogn Neurosci. 2003;15:1063–1073. doi: 10.1162/089892903770007443.

- Alain C, Ogawa KH, Woods DL. Aging and the segregation of auditory stimulus sequences. J Gerontol B Psychol Sci Soc Sci. 1996;51:91–93. doi: 10.1093/geronb/51b.2.p91.

- Alain C, McDonald KL, Ostroff JM, Schneider B. Age-related changes in detecting a mistuned harmonic. J Acoust Soc Am. 2001a;109:2211–2216. doi: 10.1121/1.1367243.

- Alain C, Arnott SR, Picton TW. Bottom-up and top-down influences on auditory scene analysis: evidence from event-related brain potentials. J Exp Psychol Hum Percept Perform. 2001b;27:1072–1089. doi: 10.1037//0096-1523.27.5.1072.

- Alain C, Schuler BM, McDonald KL. Neural activity associated with distinguishing concurrent auditory objects. J Acoust Soc Am. 2002;111:990–995. doi: 10.1121/1.1434942.

- Amenedo E, Diaz F. Effects of aging on middle-latency auditory evoked potentials: a cross-sectional study. Biol Psychiatry. 1998;43:210–219. doi: 10.1016/S0006-3223(97)00255-2.

- Cariani PA, Delgutte B. Neural correlates of the pitch of complex tones. II. Pitch shift, pitch ambiguity, phase invariance, pitch circularity, rate pitch, and the dominance region for pitch. J Neurophysiol. 1996;76:1717–1734. doi: 10.1152/jn.1996.76.3.1717.

- Caspary DM, Schatteman TA, Hughes LF. Age-related changes in the inhibitory response properties of dorsal cochlear nucleus output neurons: role of inhibitory inputs. J Neurosci. 2005;25:10952–10959. doi: 10.1523/JNEUROSCI.2451-05.2005.

- Chambers RD, Griffiths SK. Effects of age on the adult auditory middle latency response. Hear Res. 1991;51:1–10. doi: 10.1016/0378-5955(91)90002-q.

- Chao LL, Knight RT. Prefrontal deficits in attention and inhibitory control with aging. Cereb Cortex. 1997;7:63–69. doi: 10.1093/cercor/7.1.63.

- Charlton RA, Barrick TR, McIntyre DJ, Shen Y, O'Sullivan M, Howe FA, Clark CA, Morris RG, Markus HS. White matter damage on diffusion tensor imaging correlates with age-related cognitive decline. Neurology. 2006;66:217–222. doi: 10.1212/01.wnl.0000194256.15247.83.

- Dyson BJ, Alain C. Representation of concurrent acoustic objects in primary auditory cortex. J Acoust Soc Am. 2004;115:280–288. doi: 10.1121/1.1631945.

- Dyson BJ, Alain C, He Y. Effects of visual attentional load on low-level auditory scene analysis. Cogn Affect Behav Neurosci. 2005;5:319–338. doi: 10.3758/cabn.5.3.319.

- Grube M, von Cramon DY, Rubsamen R. Inharmonicity detection. Effects of age and contralateral distractor sounds. Exp Brain Res. 2003;153:637–642. doi: 10.1007/s00221-003-1640-0.

- Hautus MJ, Johnson BW. Object-related brain potentials associated with the perceptual segregation of a dichotically embedded pitch. J Acoust Soc Am. 2005;117:275–280. doi: 10.1121/1.1828499.

- Hertrich I, Mathiak K, Lutzenberger W, Ackermann H. Time course and hemispheric lateralization effects of complex pitch processing: evoked magnetic fields in response to rippled noise stimuli. Neuropsychologia. 2004;42:1814–1826. doi: 10.1016/j.neuropsychologia.2004.04.022.

- Hiraumi H, Nagamine T, Morita T, Naito Y, Fukuyama H, Ito J. Right hemispheric predominance in the segregation of mistuned partials. Eur J Neurosci. 2005;22:1821–1824. doi: 10.1111/j.1460-9568.2005.04350.x.

- Johnson BW, Hautus M, Clapp WC. Neural activity associated with binaural processes for the perceptual segregation of pitch. Clin Neurophysiol. 2003;114:2245–2250. doi: 10.1016/s1388-2457(03)00247-5.

- Martin BA, Stapells DR. Effects of low-pass noise masking on auditory event-related potentials to speech. Ear Hear. 2005;26:195–213. doi: 10.1097/00003446-200504000-00007.

- Martin BA, Sigal A, Kurtzberg D, Stapells DR. The effects of decreased audibility produced by high-pass noise masking on cortical event-related potentials to speech sounds /ba/ and /da/. J Acoust Soc Am. 1997;101:1585–1599. doi: 10.1121/1.418146.

- Martin BA, Kurtzberg D, Stapells DR. The effects of decreased audibility produced by high-pass noise masking on N1 and the mismatch negativity to speech sounds /ba/ and /da/. J Speech Lang Hear Res. 1999;42:271–286. doi: 10.1044/jslhr.4202.271.

- McDonald KL, Alain C. Contribution of harmonicity and location to auditory object formation in free field: evidence from event-related brain potentials. J Acoust Soc Am. 2005;118:1593–1604. doi: 10.1121/1.2000747.

- Moore BC, Peters RW, Glasberg BR. Thresholds for the detection of inharmonicity in complex tones. J Acoust Soc Am. 1985;77:1861–1867. doi: 10.1121/1.391937.

- Oates PA, Kurtzberg D, Stapells DR. Effects of sensorineural hearing loss on cortical event-related potential and behavioral measures of speech-sound processing. Ear Hear. 2002;23:399–415. doi: 10.1097/00003446-200210000-00002.

- O'Sullivan M, Jones DK, Summers PE, Morris RG, Williams SC, Markus HS. Evidence for cortical “disconnection” as a mechanism of age-related cognitive decline. Neurology. 2001;57:632–638. doi: 10.1212/wnl.57.4.632.

- Palmer AR. The representation of the spectra and fundamental frequencies of steady-state single- and double-vowel sounds in the temporal discharge patterns of guinea pig cochlear-nerve fibers. J Acoust Soc Am. 1990;88:1412–1426. doi: 10.1121/1.400329.

- Prosser S, Turrini M, Arslan E. Effects of different noises on speech discrimination by the elderly. Acta Otolaryngol Suppl. 1990;476:136–142. doi: 10.3109/00016489109127268.

- Schneider BA, Daneman M, Murphy DR, See SK. Listening to discourse in distracting settings: the effects of aging. Psychol Aging. 2000;15:110–125. doi: 10.1037//0882-7974.15.1.110.

- Sinex DG, Sabes JH, Li H. Responses of inferior colliculus neurons to harmonic and mistuned complex tones. Hear Res. 2002;168:150–162. doi: 10.1016/s0378-5955(02)00366-0.

- Sinex DG, Li H, Velenovsky DS. Prevalence of stereotypical responses to mistuned complex tones in the inferior colliculus. J Neurophysiol. 2005;94:3523–3537. doi: 10.1152/jn.01194.2004.

- Snyder JS, Alain C. Age-related changes in neural activity associated with concurrent vowel segregation. Brain Res Cogn Brain Res. 2005;24:492–499. doi: 10.1016/j.cogbrainres.2005.03.002.

- Snyder JS, Alain C. Sequential auditory scene analysis is preserved in normal aging adults. Cereb Cortex. 2007. In press. doi: 10.1093/cercor/bhj175.

- Taylor JM. Kendall's and Spearman's correlation coefficients in the presence of a blocking variable. Biometrics. 1987;43:409–416.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cognit Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Tun PA, Wingfield A. One voice too many: adult age differences in language processing with different types of distracting sounds. J Gerontol B Psychol Sci Soc Sci. 1999;54:317–327. doi: 10.1093/geronb/54b.5.p317.