Abstract

Although knowledge indexes our experiences of the world, the neural basis of this relationship remains to be determined. Previous neuroimaging research, especially involving knowledge biased toward visual and functional information, suggests that semantic representations depend on modality-specific brain mechanisms. However, it is unclear whether sensory cortical regions, in general, support retrieval of perceptual knowledge. Using functional magnetic resonance imaging, we show that semantic decisions that index tactile, gustatory, auditory, and visual knowledge specifically activate brain regions associated with encoding these sensory experiences. Retrieval of tactile knowledge was specifically associated with increased activation in somatosensory, motor, and premotor cortical regions. In contrast, decisions involving flavor knowledge increased activation in an orbitofrontal region previously implicated in processing semantic comparisons among edible items. Retrieval of perceptual knowledge that references visual and auditory experiences was associated with increased activity in distinct temporal-lobe regions involved in visual and auditory processing, respectively. These results indicate that retrieval of perceptual knowledge relies on brain regions used to mediate sensory experiences with the referenced objects.

Keywords: memory, language, imaging, fMRI, sensorimotor, decision, cerebral cortex

Introduction

Although some sensory brain regions appear to instantiate knowledge of objects, it is unclear whether this relationship holds across the sensory modalities and can therefore explain how perceptual knowledge is retrieved. The mental representation of meaning has traditionally been understood as some type of symbolic abstraction, such as sets of propositions (Anderson, 1993), lists of features (Katz and Fodor, 1963), or networks of associated lexical items (Landauer and Dumais, 1997). These amodal theories offer the parsimony of a unitary system that specifies semantic knowledge, for example, as a list of features [e.g., sweet (taste, sugar or saccharine; pleasing)] or as co-occurrences in context. An alternative view is that, for concrete objects, sensory input is critical to knowledge retrieval. The brain's sensory mechanisms might support not only the perceptual encoding of visual, auditory, tactile, and gustatory experiences but also semantic decisions that reference that knowledge.

Although neuroimaging evidence has accumulated in support of such modality-specific accounts of semantic memory (for in-depth review, see Martin and Chao, 2001; Thompson-Schill, 2003), this evidence has typically been based on visual and functional differences, and it is unclear whether sensory brain regions, in general, support retrieval of perceptual knowledge. Regions in the ventral temporal cortex close to color perception areas are activated by the generation of color words (Martin et al., 1995). Furthermore, Mummery and colleagues (Mummery et al., 1998) found that dorsal and ventral cortical regions support location and color judgments, respectively, likely reflecting the division of extrastriate visual processing into dorsal and ventral streams (Mishkin and Ungerleider, 1982). Semantic decisions involving other modalities suggest a broad relationship between perceptual knowledge retrieval and sensory brain mechanisms. An area in the posterior superior temporal cortex, adjacent to the auditory association cortex, is activated when participants are asked to judge the sound that an object makes (Kellenbach et al., 2001). In addition, comparisons between fruit names specifically recruit medial orbitofrontal regions (Goldberg et al., 2006) implicated in processing olfactory and gustatory information (Rolls, 2004). Yet although these disparate results are suggestive, no single study has used common task demands to examine whether retrieval of perceptual knowledge, in general, relies on sensory brain mechanisms.

To investigate the neuroanatomical basis of perceptual knowledge retrieval, we scanned participants using functional magnetic resonance imaging (fMRI) while they made property verification decisions across the sensory modalities. We hypothesized that brain regions involved in encoding sensory information for each modality would specifically support perceptual decisions that reference those object experiences. To our knowledge, no previous study has shown somatosensory cortex activation during a tactile semantic judgment; nonetheless, we expected such activation when participants verified tactile knowledge. To test whether comparisons among fruit names activate the medial orbitofrontal cortex because of the flavor properties involved (Goldberg et al., 2006), we examined activity in this region when participants verified taste knowledge. Finally, auditory mechanisms in the superior temporal cortex were expected to support decisions based on sound knowledge, whereas verification of color knowledge was expected to activate the ventral temporal extrastriate cortex.

Materials and Methods

Participants.

We scanned 15 right-handed, native American-English speakers enrolled as students at the University of Pittsburgh while they verified perceptual properties of word items. One participant was removed from the sample because of excessive head motion (> 3 mm) during the scanning session. Therefore, a total of 14 participants (six female, eight male; mean age, 21.86 years; range, 18–27 years) were included in data analyses.

Procedures.

During the experiment, participants determined whether a concrete word item possessed a given property from one of four sensory modalities: color (green), sound (loud), touch (soft), or taste (sweet). Property verification decisions were blocked by modality, with six consecutive trials of the same question. On each trial, only the stimulus word appeared on the screen; participants had up to 1500 ms to verify the item–property relationship, and the stimulus was followed by a fixation cross for 1500 ms. The verification question for each modality was presented on an instruction screen for 8 s immediately before each block of six trials. As an additional test of the semantic role of the predicted sensory regions, participants in control blocks indicated whether a given letter was present in pronounceable pseudoword stimuli. Equal numbers of affirmative and negative items were presented in random order for each verification question and within each block of six trials. Participants were trained to respond as quickly as possible using a practice version of the paradigm with properties and stimuli not presented during the experiment.
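The block structure above determines the predicted response that enters the general linear model described under Data analysis. The sketch below is illustrative only: it builds block onsets for a hypothetical run order and convolves a boxcar with a gamma-function hemodynamic response of the form used by Boynton et al. (1996). It assumes that each trial lasts 3 s (the 1500 ms response window plus the 1500 ms fixation), that blocks are separated only by the 8 s instruction screen, and that the response parameters are n = 3, τ = 1.25 s, and δ = 2.5 s; the run order and these parameter values are assumptions, not values reported here. The function names are hypothetical.

```python
import math

TR_S = 3.0              # repetition time (3000 ms, Data acquisition)
INSTRUCTION_S = 8.0     # instruction screen shown before every block
TRIAL_S = 3.0           # assumed: 1500 ms response window + 1500 ms fixation
TRIALS_PER_BLOCK = 6


def block_onsets(order):
    """Map each condition label to its list of (onset_s, duration_s) blocks.

    `order` is a hypothetical sequence of block labels; the actual run
    order is not reported. Returns (events, run_length_s).
    """
    t = 0.0
    events = {}
    for label in order:
        t += INSTRUCTION_S                        # instruction precedes the block
        duration = TRIALS_PER_BLOCK * TRIAL_S     # 6 trials x 3 s = 18 s
        events.setdefault(label, []).append((t, duration))
        t += duration
    return events, t


def gamma_hrf(t, n=3, tau=1.25, delta=2.5):
    """Gamma-function HRF in the Boynton et al. (1996) form; parameters assumed."""
    s = t - delta
    if s <= 0:
        return 0.0
    return (s / tau) ** (n - 1) * math.exp(-s / tau) / (tau * math.factorial(n - 1))


def block_regressor(blocks, n_vols, dt=0.1, kernel_s=30.0):
    """Convolve a boxcar for `blocks` with the HRF and sample it once per TR."""
    n_fine = int(round(n_vols * TR_S / dt))
    box = [0.0] * n_fine
    for onset, dur in blocks:
        start, stop = int(round(onset / dt)), int(round((onset + dur) / dt))
        for i in range(start, min(stop, n_fine)):
            box[i] = 1.0
    # Discretized kernel; its sum approximates the unit area of the gamma HRF.
    kernel = [gamma_hrf(k * dt) * dt for k in range(int(round(kernel_s / dt)))]
    conv = [
        sum(box[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
        for i in range(n_fine)
    ]
    step = int(round(TR_S / dt))
    return [conv[v * step] for v in range(n_vols)]
```

For example, `block_onsets(["touch", "color", "sound", "taste", "control"])` yields one 18 s block per condition in a 130 s run, and `block_regressor(events["touch"], 43)` gives the predicted touch regressor sampled at each 3 s volume. Because the kernel has unit area, a sustained 18 s block drives the regressor close to 1.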

Materials.

For each sensory modality, norming experiments with behavioral-only participants were used (1) to ensure that item–property relations within each question relied on perceptual knowledge to the same degree, (2) to identify clearly affirmative and negative items and to match the modalities for average behavioral response time, and (3) to match the set of items within each verification block on potentially confounding lexical factors, including familiarity, letter length, and number of syllables. Affirmative and negative items within each block were drawn from the same modalities and superordinate categories. Lexical familiarity was estimated from the response times and accuracy of behavioral-only participants, drawn from the same population, who performed a lexical decision task on the items. Stimuli in the letter detection blocks were pronounceable pseudowords constructed by rearranging the letters of words used in the property verifications.
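The statistical test used to confirm that item sets were matched on lexical factors is not stated. One common choice, assumed here purely for illustration, is a one-way analysis of variance across sets for each lexical variable (for example, letter length per verification block). The hypothetical function below computes the F statistic with the Python standard library; a small F, and hence a large p value, is consistent with the sets being matched on that variable.

```python
from statistics import mean


def one_way_f(groups):
    """One-way ANOVA F statistic for one lexical variable across item sets.

    groups: list of lists, one list of values (e.g., letter lengths) per set.
    Returns (F, df_between, df_within).
    """
    all_values = [v for g in groups for v in g]
    grand = mean(all_values)
    k, n = len(groups), len(all_values)
    # Between-set variability: how far each set mean lies from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Within-set variability: spread of items around their own set mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

Calling `one_way_f` with one list of values per block returns F and its degrees of freedom; the corresponding p value could then be obtained from the F distribution, for instance with `scipy.stats.f.sf(F, df_between, df_within)`.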

Data acquisition.

The protocol for this study was approved by the Institutional Review Board at the University of Pittsburgh. All MRI scanning was conducted at the Brain Imaging Research Center of the University of Pittsburgh and Carnegie Mellon University on a 3-Tesla Siemens (Munich, Germany) Allegra magnet. The scanning session began with the collection of scout, in-plane, and volume anatomical series, followed by functional scans, each corresponding to one run of the experiment. The in-plane structural scan served as the anatomical reference for all functional series, which were collected in the same axial slices using a T2*-weighted echo-planar imaging pulse sequence (echo time = 30 ms; repetition time = 3000 ms; field of view = 210 mm; slice thickness = 3.0 mm, with no gap between slices; flip angle = 90°; in-plane resolution = 3.125 × 3.125 mm). The volume anatomical scan was acquired using the Siemens MPRAGE sequence, and the functional data were coregistered to these images for analyses. Stimuli were presented and responses were collected using E-Prime software (Psychology Software Tools, Pittsburgh, PA).
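The acquisition parameters fix the volume of a functional voxel, which determines how many voxels the 135 mm³ cluster threshold described under Data analysis corresponds to. The arithmetic below is a minimal sketch with hypothetical function names. The native voxel follows from the stated in-plane resolution and slice thickness; the 3 mm isotropic voxel after Talairach normalization is an assumption (the resampled voxel size is not reported), although it is consistent with the smallest cluster in Table 1 (5 voxels × 27 mm³ = 135 mm³).

```python
import math


def voxel_volume_mm3(dx_mm, dy_mm, dz_mm):
    """Volume of one voxel in cubic millimetres."""
    return dx_mm * dy_mm * dz_mm


def min_cluster_voxels(threshold_mm3, voxel_mm3):
    """Smallest whole number of voxels whose combined volume reaches the threshold.

    A tiny tolerance guards against floating-point noise when the threshold
    is an exact multiple of the voxel volume.
    """
    return math.ceil(threshold_mm3 / voxel_mm3 - 1e-9)


# Native functional voxel: 3.125 x 3.125 mm in plane, 3.0 mm slices.
native = voxel_volume_mm3(3.125, 3.125, 3.0)       # 29.296875 mm^3
# Assumed (hypothetical) 3 mm isotropic voxel after normalization.
resampled = voxel_volume_mm3(3.0, 3.0, 3.0)        # 27.0 mm^3

print(min_cluster_voxels(135, native))     # 5
print(min_cluster_voxels(135, resampled))  # 5
```

Under either voxel size, the 135 mm³ threshold amounts to a minimum of five contiguous voxels.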

Data analysis.

The neuroimaging data were preprocessed and analyzed using BrainVoyager 2000 software (Brain Innovation, Maastricht, The Netherlands). Preprocessing included six-parameter three-dimensional motion correction, slice-scan time correction using linear interpolation, voxel-wise linear detrending, and spatial smoothing with an 8 mm full-width at half-maximum Gaussian kernel. Spatial normalization was performed using the standard nine-parameter landmark method of Talairach and Tournoux (1988). For each participant, a general linear model was defined with regressors modeling the expected blood oxygen level-dependent (BOLD) response (Boynton et al., 1996) to each type of verification block. Because we wanted to examine activations specific to each modality, a conjunction analysis (Friston et al., 1999) was conducted on the pairwise contrasts between the perceptual decision of interest and each of the other verifications, as well as the pseudoword control (e.g., for the tactile verification: touch–color, touch–sound, touch–taste, and touch–control). The resulting map is the intersection of these paired contrasts, each thresholded at p < 0.01, so that every contrast must show an effect at threshold (Nichols et al., 2005). Under the null hypothesis of this test, one or more of the paired contrasts shows no effect; accordingly, any voxel that did not reach significance (p < 0.01) in all four paired contrasts was excluded from further analysis. Because we were interested only in regions specifically active for each perceptual retrieval decision (i.e., modality-specific semantic regions) and in minimizing false positives, we preferred this approach even though it may be overly conservative, rejecting voxels in which any of the paired contrasts is not significant (Friston et al., 2005). A cluster-size threshold (≥ 135 mm³) was applied to the resulting conjunction maps to further reduce the probability of type I error (Forman et al., 1995).
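The conjunction and cluster-size steps can be illustrated with a minimal standard-library sketch. It assumes one-sided p values for each paired contrast (e.g., touch > color) stored as hypothetical dictionaries that map voxel coordinates to p. A voxel survives only if every contrast has p < 0.01, which is the minimum-statistic rule of Nichols et al. (2005); surviving voxels are then grouped into clusters, and clusters below a voxel-count threshold are discarded. Face (6-)connectivity and the voxel-count threshold are assumptions, and BrainVoyager's own implementation may differ in both respects.

```python
from collections import deque

# Face-sharing neighbors (6-connectivity, an assumption).
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def conjunction_mask(p_maps, alpha=0.01):
    """Minimum-statistic conjunction: keep voxels where every contrast has p < alpha.

    p_maps: list of dicts mapping (x, y, z) -> one-sided p for one paired
    contrast. A voxel missing from any map is dropped.
    """
    common = set(p_maps[0])
    for pm in p_maps[1:]:
        common &= set(pm)
    # Requiring the largest p to pass is equivalent to requiring all to pass.
    return {v for v in common if max(pm[v] for pm in p_maps) < alpha}


def clusters(mask):
    """Split a set of voxel coordinates into 6-connected clusters."""
    remaining = set(mask)
    found = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = {seed}, deque([seed])
        while queue:                      # breadth-first flood fill
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    cluster.add(nb)
                    queue.append(nb)
        found.append(cluster)
    return found


def threshold_clusters(mask, min_voxels):
    """Discard clusters with fewer than min_voxels voxels."""
    return [c for c in clusters(mask) if len(c) >= min_voxels]
```

For the tactile decision, the four maps would hold the touch–color, touch–sound, touch–taste, and touch–control p values; with 3 mm isotropic voxels (again an assumption), the 135 mm³ threshold corresponds to `min_voxels=5`.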

Results

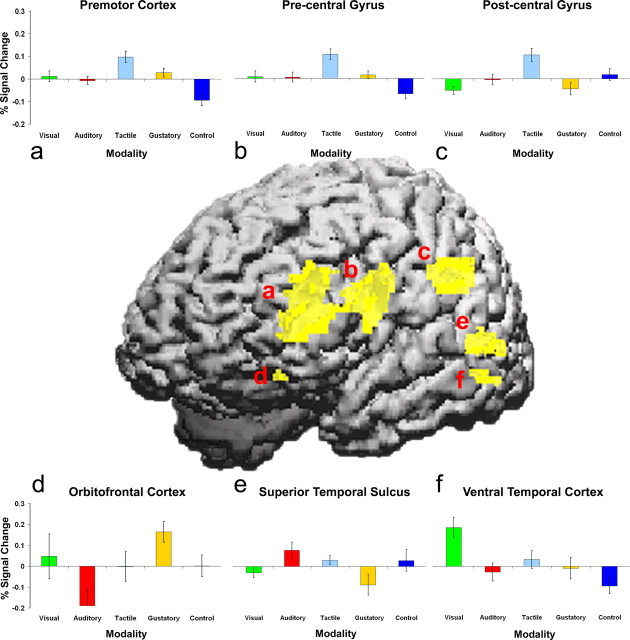

The fMRI results show that the predicted sensory brain regions were activated by perceptual semantic retrieval across the four sensory modalities. The modality examined in each decision was specifically associated with increased activation of the respective sensory brain regions (Fig. 1, Table 1).

Figure 1.

Cortical surface projection of the t statistic (p < 0.01) for modality-specific regions involved in perceptual knowledge retrieval. Shown are regions for tactile knowledge in the premotor (a), motor (b), and somatosensory (c) cortex; for taste knowledge in the orbitofrontal cortex (d); for auditory knowledge in the superior temporal sulcus (e); and for visual knowledge in the ventral temporal cortex (f). During control blocks, participants detected the presence or absence of a given letter in pseudoword stimuli. Error bars represent SEM.

Table 1.

Modality-specific perceptual regions in the left hemisphere (p < 0.01), with anatomical region, Brodmann area (BA), Talairach coordinates, cluster size (voxel count), and the average statistical value (p value)

| Modality | Region | BA | Talairach x | Talairach y | Talairach z | Voxel count | p value |
|---|---|---|---|---|---|---|---|
| Visual | L middle temporal | 37 | −59 | −46 | −6 | 10 | 0.0030 |
| | L middle temporal | 37 | −52 | −58 | 0 | 10 | 0.0017 |
| | L superior parietal | 7 | −35 | −71 | 45 | 125 | 0.0017 |
| Auditory | L superior temporal sulcus | 22/42 | −56 | −48 | 7 | 39 | 0.0027 |
| Tactile | L postcentral gyrus | 2/40 | −54 | −33 | 34 | 83 | 0.0023 |
| | L precentral gyrus | 4/6 | −47 | 5 | 25 | 142 | 0.0024 |
| | L premotor | 6/9 | −34 | 29 | 20 | 165 | 0.0025 |
| Gustatory | L orbitofrontal | 11/ 2 | −19 | 29 | −7 | 5 | 0.0030 |

L, Left.

We expected that increased activity in the somatosensory cortex would be specifically associated with verification of tactile knowledge. In addition to the somatosensory cortex, tactile knowledge retrieval was associated with increased activation in motor and premotor regions. A ventral premotor region in the left prefrontal cortex (Fig. 1a) and a region in the left motor cortex overlapping with the precentral gyrus (Fig. 1b) showed increased activation relative to the other stimulus modalities and the pseudoword control. As expected, retrieval of tactile knowledge, relative to the other sensory modalities and the pseudoword control, also increased activation in the left somatosensory cortex, in an area overlapping with the postcentral gyrus (Fig. 1c). This network of cortical regions more generally supports tactile object recognition (Rizzolatti and Luppino, 2001; Reed et al., 2004) and the formation of internal representations for actions (Tanji, 2001), and passive reading of action words elicits somatotopically organized activity in motor and premotor cortex (Hauk et al., 2004).

The verification of taste knowledge was associated with specific increased activity in the left orbitofrontal cortex (Fig. 1d), in contrast to the other sensory modalities and the pseudoword control. This result provides direct support for our previous interpretation (Goldberg et al., 2006) that this region supports knowledge of semantic categories (e.g., fruit) in which flavor properties are necessary and relevant. The involvement of this region during the retrieval of gustatory knowledge is consistent with the role of the orbitofrontal cortex in representing specific aspects of taste and smell, including flavor identity (Small et al., 2004) and reward contingencies (Rolls, 2004), even when photographs of foods are presented (Simmons et al., 2005).

Property decisions involving sound knowledge were specifically associated with increased activity centered on the left superior temporal sulcus (STS) just inferior and posterior to the primary auditory cortex (Fig. 1e). The STS has been implicated in the integration of visual and auditory knowledge (Beauchamp et al., 2004) and in retrieving sounds associated with pictures (Wheeler et al., 2000). By comparison, the visual property verification was specifically associated with increased activity in a more ventral aspect of the left temporal cortex (Fig. 1f), an area shown previously to be involved in the generation of object color knowledge (Martin et al., 1995) and in visual object recognition (Haxby et al., 2001). The visual decision was also associated with specific increased activation in a superior parietal region widely implicated in mental imagery and visual attention (Posner and Petersen, 1990).

Discussion

Sensory experiences of objects and perceptual decisions that reference them appear to rely on a common neural substrate. These results support and extend modality-specific accounts (Warrington and McCarthy, 1983; Martin and Chao, 2001) of the cortical organization and processing of semantic knowledge. The theory of perceptual symbol systems (Barsalou, 1999) predicts this relationship: selective attention mechanisms focus on, and elaborate, specific attributes of object experiences, and simulations in the associated sensory mechanisms enable retrieval of perceptual information. Because perceptual decisions activated brain regions associated with touch, flavor, audition, and vision, these findings indicate a direct relationship between perceptual knowledge and sensory brain mechanisms. The retrieval of perceptual knowledge appears to rely on a widely distributed network of regions involved in encoding specific sensory experiences of objects. A central open question, however, is how these regions are linked together to create the intuition of a unitary representation in memory. Theoretical arguments against such semantic decomposition have typically pointed out that the distinguishing properties of an object can be changed (e.g., an albino tiger) even as the object's identity appears to remain constant (Putnam, 1970). Although our results suggest that Humpty Dumpty's “egg” has fallen off the proverbial wall, a solution to this linguistic variant of the binding problem (Treisman, 1996) will need to explain how the meaning of a given object and its diverse array of associated experiences [e.g., egg (color: white, brown; shape: oval; sound: crack; flavor: rich, salty; motor: beat, fry, poach); birds lay] are linked together in the brain and yet flexibly retrieved as necessary.

One possible basis for such a conceptual cortical network is the relationship between primary sensory regions and multimodal convergence areas (Damasio et al., 2004). Alternatively, the interaction of sensation and knowledge may depend on an “anterior shift,” in which primary regions support perceptual encoding and information is passed forward to secondary areas involved in knowledge representation (Martin and Chao, 2001). Although the visual system shows such increasing abstraction from the retinal representation in progressively more anterior regions (Tanaka, 1996), and the current and previous reports of color semantic retrieval support this relationship, the other tested modalities suggest that secondary areas involved in perceptual knowledge retrieval are not necessarily anterior to primary sensory regions. The auditory region in the superior temporal sulcus lies just inferior and posterior to the primary auditory cortex (Seifritz et al., 2002), consistent with previous reports of auditory semantic retrieval (Kellenbach et al., 2001; James and Gauthier, 2003). By comparison, the tactile region in the area of the postcentral gyrus appears to overlap substantially with the secondary somatosensory cortex in the inferior parietal cortex, just anterior to the angular gyrus (Ruben et al., 2001). In addition, although food intake is one of the least studied areas of human sensation, evidence is emerging that the orbitofrontal cortex contains a secondary gustatory region (Rolls, 2005), perhaps downstream of primary processing in the anterior insula, which may be related to the identity and pleasantness of particular flavors (de Araujo et al., 2003) and to integration with olfactory information (Small et al., 2004). In general, the current results suggest that retrieval of perceptual knowledge specifically involves secondary sensory brain regions.
Future studies will need to examine this relationship between sensory systems and knowledge retrieval, how activation varies with multimodal task demands and object experiences, and when such associations may be insufficient for understanding, as in the case of abstract concepts.

Although the current results indicate a broad modality-specific relationship between sensory brain regions and perceptual knowledge retrieval, this relationship has been hypothesized in previous theoretical accounts (Allport, 1985; Barsalou, 1999) and supported by more restricted findings (Martin and Chao, 2001). In line with this account, patients with localized brain damage provided the first direct evidence that object knowledge depends on specific, and separable, sensory brain regions. In a series of case studies, Warrington and colleagues (Warrington and McCarthy, 1983, 1987; Warrington and Shallice, 1984) found that visual and functional knowledge of object categories could be selectively impaired by focal brain damage. Commonly termed the sensory–functional theory, this account explains the selective sparing and impairment of semantic categories as arising from a modality-based system in which object classes are biased to rely on different kinds of sensory experience. On this account, knowledge of categories with a visual bias, such as animals and fruits, is more likely to be impaired by damage to visual brain regions while functional knowledge is spared, whereas deficits for functional categories, including tools and household objects, arise from damage to motor brain regions and relatively spare visual object classes. Yet even as the sensory–functional account has been challenged on the basis of sparser and more restrictive patterns of selective impairment and sparing (Caramazza and Mahon, 2003), few studies have reported effects for categories in which knowledge from modalities other than vision and function is specifically affected (Martin and Caramazza, 2003).
The current results suggest that more sensitive neuropsychological testing, likely with more focused categories or properties than are typically examined, could indicate whether the additional sensory regions implicated in the current findings are necessary or sufficient for perceptual knowledge retrieval. For instance, damage to the medial orbitofrontal cortex may specifically affect flavor knowledge, even as taste recognition is impaired by focal damage to neighboring regions (Small et al., 2005). Likewise, because lesions of the somatosensory cortex specifically degrade tactile object recognition (Platz, 1996) and transcranial magnetic stimulation of this region impairs tactile working memory performance (Harris et al., 2002), tactile semantic knowledge may be similarly disrupted. Such effects are likely to arise from a cortical semantic network with multiple processing pathways that depend on task and stimulus demands (Crutch and Warrington, 2003).

In conclusion, the present results reveal a specific reliance on sensory brain regions when participants resolve perceptual semantic decisions. These findings broadly support and extend modality-based views (Warrington and McCarthy, 1983; Martin and Chao, 2001) of the cortical organization of semantic knowledge. Because perceptual decisions activated brain regions associated with touch, flavor, audition, and vision, these results indicate that the cortical instantiation of semantic knowledge involves sensory brain regions rather than strictly amodal mechanisms. Perceptual knowledge retrieval appears to rely on a widely distributed set of brain regions, with the regions recruited depending on the role of sensory mechanisms in encoding the specific aspects of world experience to which the decision refers.

Footnotes

This work was supported by National Science Foundation Grants BCS-0121943 and PGE-9987588. We thank M. McHugo, I. Suzuki, and B. Smith for their invaluable assistance and L. Barsalou, J. Fiez, J. McClelland, and S. Thompson-Schill for their helpful advice and suggestions.

References

- Allport DA (1985). Distributed memory, modular subsystems and dysphasia. In: Current perspectives in dysphasia (Newman SK, Epstein R, eds) pp. 32–60. Edinburgh: Churchill Livingstone.

- Anderson JR (1993). Rules of the mind. Hillsdale, NJ: Erlbaum.

- Barsalou LW (1999). Perceptual symbol systems. Behav Brain Sci 22:577–660.

- Beauchamp MS, Lee KE, Argall BD, Martin A (2004). Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41:809–823.

- Boynton GM, Engel SA, Glover GH, Heeger DJ (1996). Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci 16:4207–4221.

- Caramazza A, Mahon BZ (2003). The organization of conceptual knowledge: the evidence from category-specific semantic deficits. Trends Cogn Sci 7:354–361.

- Crutch SJ, Warrington EK (2003). The selective impairment of fruit and vegetable knowledge: a multiple processing channels account of fine-grain category specificity. Cogn Neuropsychol 20:355–372.

- Damasio H, Tranel D, Grabowski TJ, Adolphs R, Damasio AR (2004). Neural systems behind word and concept retrieval. Cognition 92:179–229.

- de Araujo IET, Rolls ET, Kringelbach ML, McGlone F, Phillips N (2003). Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur J Neurosci 18:2059–2068.

- Forman S, Cohen J, Fitzgerald M, Eddy W, Mintun M, Noll D (1995). Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): Use of a cluster-size threshold. Magn Reson Med 33:636–647.

- Friston KJ, Holmes AP, Price CJ, Buchel C, Worsley KJ (1999). Multisubject fMRI studies and conjunction analyses. NeuroImage 10:385–396.

- Friston KJ, Penny WD, Glaser DE (2005). Conjunction revisited. NeuroImage 25:661–667.

- Goldberg RF, Perfetti CA, Schneider W (2006). Distinct and common cortical activations for multimodal semantic categories. Cogn Affect Behav Neurosci, in press.

- Harris JA, Miniussi C, Harris IM, Diamond ME (2002). Transient storage of a tactile memory trace in primary somatosensory cortex. J Neurosci 22:8720–8725.

- Hauk O, Johnsrude I, Pulvermueller F (2004). Somatotopic representation of action words in human motor and premotor cortex. Neuron 41:301–307.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293:2425–2430.

- James TW, Gauthier I (2003). Auditory and action semantic features activate sensory-specific perceptual brain regions. Curr Biol 13:1792–1796.

- Katz JJ, Fodor JA (1963). The structure of a semantic theory. Language 39:170–210.

- Kellenbach ML, Brett M, Patterson K (2001). Large, colorful, or noisy? Attribute- and modality-specific activations during retrieval of perceptual attribute knowledge. Cogn Affect Behav Neurosci 1:207–221.

- Landauer TK, Dumais ST (1997). A solution to Plato's problem: The latent semantic analysis theory of acquisition, induction, and representation of knowledge. Psychol Rev 104:211–240.

- Martin A, Caramazza A (2003). Neuropsychological and neuroimaging perspectives on conceptual knowledge: an introduction. Cogn Neuropsychol 20:195–212.

- Martin A, Chao LL (2001). Semantic memory and the brain: structure and processes. Curr Opin Neurobiol 11:194–201.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG (1995). Discrete cortical regions associated with knowledge of color and knowledge of action. Science 270:102–105.

- Mishkin M, Ungerleider LG (1982). Two cortical visual systems. In: Analysis of visual behavior (Ingle DJ, Goodale MA, Mansfield RJW, eds) pp. 549–586. Cambridge, MA: MIT.

- Mummery CJ, Patterson K, Hodges JR, Price CJ (1998). Functional neuroanatomy of the semantic system: Divisible by what? J Cogn Neurosci 10:766–777.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB (2005). Valid conjunction inference with the minimum statistic. NeuroImage 25:653–660.

- Platz T (1996). Tactile agnosia. Casuistic evidence and theoretical remarks on modality-specific meaning representations and sensorimotor integration. Brain 119:1565–1574.

- Posner MI, Petersen SE (1990). The attention system of the human brain. Annu Rev Neurosci 13:25–42.

- Putnam H (1970). Is semantics possible? Metaphilosophy 1:187–201.

- Reed CL, Shoham S, Halgren E (2004). Neural substrates of tactile object recognition: an fMRI study. Hum Brain Mapp 21:236–246.

- Rizzolatti G, Luppino G (2001). The cortical motor system. Neuron 31:889–901.

- Rolls ET (2004). The functions of the orbitofrontal cortex. Brain Cogn 55:11–29.

- Rolls ET (2005). Taste, olfactory, and food texture processing in the brain, and the control of food intake. Physiol Behav 85:45–56.

- Ruben J, Schwiemann J, Deuchert M, Meyer R, Krause T, Curio G, Villringer K, Kurth R, Villringer A (2001). Somatotopic organization of human secondary somatosensory cortex. Cereb Cortex 11:463–473.

- Seifritz E, Esposito F, Hennel F, Mustovic H, Neuhoff JG, Bilecen D, Tedeschi G, Scheffler K, Di Salle F (2002). Spatiotemporal pattern of neural processing in the human auditory cortex. Science 297:1706–1708.

- Simmons WK, Martin A, Barsalou LW (2005). Pictures of appetizing foods activate gustatory cortices for taste and reward. Cereb Cortex 15:1602–1608.

- Small DM, Voss J, Mak YE, Simmons KB, Parrish T, Gitelman D (2004). Experience-dependent neural integration of taste and smell in the human brain. J Neurophysiol 92:1892–1903.

- Small DM, Bernasconi N, Bernasconi A, Sziklas V, Jones-Gotman M (2005). Gustatory agnosia. Neurology 64:311–317.

- Talairach J, Tournoux P (1988). A co-planar stereotaxic atlas of the human brain. New York: Thieme Medical.

- Tanaka K (1996). Inferotemporal cortex and object vision. Annu Rev Neurosci 19:109–139.

- Tanji J (2001). Sequential organization of multiple movements: involvement of cortical motor areas. Annu Rev Neurosci 24:631–651.

- Thompson-Schill SL (2003). Neuroimaging studies of semantic memory: inferring “how” from “where”. Neuropsychologia 41:280–292.

- Treisman A (1996). The binding problem. Curr Opin Neurobiol 6:171–178.

- Warrington EK, McCarthy R (1983). Category specific access dysphasia. Brain 106:859–878.

- Warrington EK, McCarthy RA (1987). Categories of knowledge. Further fractionations and an attempted integration. Brain 110:1273–1296.

- Warrington EK, Shallice T (1984). Category specific semantic impairments. Brain 107:829–854.

- Wheeler ME, Petersen SE, Buckner RL (2000). Memory's echo: vivid remembering activates sensory-specific cortex. Proc Natl Acad Sci USA 97:11125–11129.