Abstract

The sound localization abilities of three rhesus monkeys were tested under head-restrained and head-unrestrained conditions. Operant conditioning and the magnetic search coil technique were used to measure eye and head movements to sound sources. Whereas the results support previous findings that monkeys localize sounds very poorly with their heads restrained, the data also reveal for the first time that monkeys localize sounds much more accurately and with less variability when their heads are allowed to move. Control experiments using acoustic stimuli known to produce spatial auditory illusions such as summing localization confirmed that the monkeys based their orienting on localizing the sound sources and not on remembering spatial locations that resulted in rewards. Overall, the importance of using ecologically valid behaviors for studies of sensory processes is confirmed, and the potential of the rhesus monkey, the model closest to human, for studies of spatial auditory function, is established.

Keywords: rhesus monkey, head-restrained orienting, head-unrestrained orienting, sound localization, summing localization, Franssen effect

Introduction

Given its position on the evolutionary tree, the rhesus monkey (Macaca mulatta) should be an ideal animal model for the study of the neural mechanisms underlying spatial auditory function. However, it is presently unclear whether the species can actually localize sound sources. For instance, Grunewald et al. (1999) reported that monkeys could not make saccadic eye movements to the sources of sounds in the context of a simple saccade task. In a companion study performed in the same monkeys used by Grunewald et al. (1999), Linden et al. (1999) only presented acoustic targets from two locations, one on each side of the midline, an experimental task that hardly required sound localization. The view that the ability of nonhuman primates to orient to sound sources might be poor is reinforced by the paradigms used in various studies of audiovisual integration in which acoustic stimuli were used as distractors (or attractors), but not as targets for saccadic eye movements (Frens and Van Opstal, 1998). Jay and Sparks (1990) and Metzger et al. (2004), in contrast, found that monkeys oriented to sound sources but with large undershooting errors after training that included visual feedback about localization errors.

A common factor of the studies cited above was that the heads of the subjects were restrained, which may have prevented proper execution of orienting to acoustic targets. Whittington et al. (1981), using a partially head-unrestrained preparation, reported that rhesus monkeys oriented to sound sources with relatively small errors (∼5°) independently of initial eye position. However, the heads of Whittington et al.'s (1981) monkeys were attached to an apparatus that allowed only horizontal movements, which complicates the evaluation of the results. Tollin et al. (2005) demonstrated significant improvements in sound localization accuracy when cats were allowed to orient to acoustic targets with the head unrestrained.

Lastly, Waser (1977) reported that the free-ranging Gray-cheeked Mangabey (Lophocebus albigena), an Old World monkey, can approach the sources of species-specific vocalizations presented from hundreds of meters away with an accuracy of 6°. Thus, allowing unrestricted movements, in particular, allowing the head to move freely, could be important for accurate orienting to acoustic targets.

In summary, it is unclear whether rhesus monkeys can localize sound sources in the surrounding space and, if so, how accurately and precisely, and under what conditions. The answers to these questions are essential to determine whether this species can be used in subsequent studies of higher-order auditory function such as spatial attention in which gaze shifts could be used by the monkey to communicate its perceptions.

The data show that rhesus monkeys localized sounds very poorly with their heads restrained, but did so accurately and with much less variability when their heads were free to contribute to the orienting behavior. The value of the species as a model for studies of various aspects of spatial auditory function is, therefore, established. Preliminary accounts of these findings have been presented previously (Populin, 2003; Dent et al., 2005).

Materials and Methods

Subjects and surgery

Three young adult, male rhesus monkeys (Macaca mulatta) 5–8 kg in weight served as subjects. The animals were purchased from the Wisconsin Regional Primate Center (Madison, WI). All surgical and experimental procedures were approved by the University of Wisconsin-Madison Animal Care Committee and were in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals.

Under aseptic conditions, eye coils and a head post were implanted in each monkey. The eye coils were constructed with stainless steel wire (SA632; Conner Wire, Chatsworth, CA), and were implanted according to the method developed by Judge et al. (1980). An additional coil of similar construction was embedded in the acrylic of the head cap, in the frontal aspect, to measure head movements. The head posts and the surgical screws used to attach the acrylic holding the implants to the skull were made of titanium. Because changes in the position of the external ears are known to affect the input to the eardrums (Young et al., 1996), much effort was invested in restoring the pinnae to their preimplant position.

Experimental setup, eye and head movement recording

The experiments were done in a 3 × 2.8 × 2 m double-walled acoustic chamber (Acoustic Systems, Austin, TX). The interior of the chamber and the major pieces of equipment in it were covered with reticulated foam (Ilbruck, Minneapolis, MN) to attenuate acoustic reflections. Gaze and head movements were recorded with the scleral search coil technique (Robinson, 1963) using a phase angle system (CNC Engineering, Seattle, WA). Horizontal and vertical gaze and head position signals were low-pass filtered at 250 Hz (Krohn-Hite, Brockton, MA), sampled at 500 Hz with an analog-to-digital converter (System 2; Tucker Davis Technologies, Alachua, FL), and stored on a computer disk for off-line analysis.

Gaze position signals were calibrated with a behavioral procedure that relied on the animals' tendency to look at a spot of light in a dark environment (Populin and Yin, 1998). Linear functions were fit separately to the horizontal and vertical data and the coefficients used to convert the voltage output of the coil system into degrees of visual angle. To calibrate the head position signals, a laser pointer was attached to the head post and the head of the monkey was manually moved by the investigator to align the light of the laser pointer with light-emitting diodes (LEDs) at known positions. Linear functions were also fit to the head calibration data.
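The linear calibration described above can be illustrated with a minimal sketch. It assumes an ordinary least-squares fit of degrees against coil voltage for one axis; the function names and the calibration values are hypothetical and are not taken from the study.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = gain * x + offset."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def volts_to_degrees(volts, gain, offset):
    """Convert coil voltages to degrees with the fitted coefficients."""
    return [gain * v + offset for v in volts]


# Hypothetical calibration data: horizontal coil voltage recorded while the
# subject fixated LEDs at known azimuths (values invented for illustration).
led_azimuth_deg = [-31.0, -18.0, -9.0, 0.0, 9.0, 18.0, 31.0]
coil_volts = [-1.55, -0.9, -0.45, 0.0, 0.45, 0.9, 1.55]

gain, offset = fit_line(coil_volts, led_azimuth_deg)
calibrated = volts_to_degrees(coil_volts, gain, offset)
```

The same fit would be repeated for the vertical channel and for the head coil, since the text states that the horizontal and vertical data were fit separately.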

Stimulus presentation

Acoustic signals were generated with Tucker Davis Technologies System 3, and played with either Radio Shack (Fort Worth, TX) super tweeters (modified to transduce low frequencies) or Morel MDT-20 28 mm soft dome tweeters. Acoustic stimuli were presented from up to 24 speakers located in the frontal hemifield, 84 cm from the center of the subject's head. To minimize the likelihood of presenting unwanted cues arising from switching artifacts during the process of selecting a speaker, all speakers were selected and de-selected in random order at the start of every auditory and visual trial, leaving selected only the speaker from which sound was to be presented in that trial.

Broadband (0.1–20 kHz) noise bursts of various durations, with 10 ms rise/fall linear windows, were used as standard acoustic stimuli for localization. The stimuli used to test the monkeys' ability to orient to remembered acoustic targets consisted of 50 ms broadband noise bursts with 10 ms rise/fall linear windows. Summing localization stimuli, used in control experiments, consisted of pairs of 25 ms broadband noise presented from speakers at (±60°, 0°) or (±31°, 0°) with interstimulus times ranging from 0 to 1000 μs; by convention, we designated positive interstimulus delays as those in which the leading stimulus of the pair was presented from the speaker to the right of the subject and negative interstimulus delays as those in which the leading stimulus was presented from the speaker to the left of the subject. Stimuli of this type evoke the perception that sounds originate from phantom sources in humans (Blauert, 1983) and cats (Populin and Yin, 1998).
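The sign convention for the interstimulus delay can be made concrete with a short sketch. It assumes a hypothetical 100 kHz output rate, uniform white noise without the 0.1–20 kHz band limiting, and identical noise tokens at both speakers; none of these details are specified in the text, and the function name is illustrative.

```python
import random


def summing_pair(duration_ms, delay_us, fs_hz, seed=0):
    """Return (left, right) sample lists for one summing localization pair.

    A positive delay_us means the right speaker leads; a negative delay_us
    means the left speaker leads (the convention stated in the text).
    """
    rng = random.Random(seed)
    n = round(duration_ms * 1e-3 * fs_hz)
    burst = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # assumed white noise
    lag = round(abs(delay_us) * 1e-6 * fs_hz)           # delay in samples
    leading = burst + [0.0] * lag
    lagging = [0.0] * lag + burst
    if delay_us >= 0:
        return lagging, leading  # right speaker leads
    return leading, lagging      # left speaker leads


# 25 ms pair with a +500 microsecond delay at the assumed 100 kHz rate.
left, right = summing_pair(25, 500, 100_000)
```

At the assumed rate, each sample spans 10 μs, so the 0–1000 μs range of delays maps onto 0–100 samples.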

Acoustic stimuli known to induce the perception of the Franssen effect (FE) were presented to further test the monkeys' spatial auditory perceptual abilities. The FE is an auditory illusion in which a long-duration, slowly rising single-tone stimulus presented from one side of the subject is perceived on the opposite side, at the location of the presentation of an abruptly rising, short-duration tone (Franssen, 1962; Hartmann and Rakerd, 1989; Yost et al., 1997). The stimuli were constructed as in Dent et al. (2004), who showed that cats perceive this auditory illusion. They consisted of pairs of signals presented from symmetrically positioned transducers (e.g., ±30° on the horizontal plane) at eye level. One of the signals, defined as the transient, consisted of an abruptly rising 50 ms single tone that ended with a 50 ms linear fall. The other signal, defined as the sustained, consisted of a single 500 ms tone starting with a 50 ms linear rise, and ending with a 100 ms linear fall. Responses to the FE stimuli were compared with responses to single source (SS) stimuli that consisted of the sum of the transient and sustained components of the FE stimuli. Stimuli with those characteristics were constructed using single tones of 1000, 2000, 4000, and 8000 Hz, and broadband (0.1–20 kHz) noise. A schematic representation of an FE pair of stimuli is shown in Figure 1D. Visual stimuli were presented with red LEDs positioned in front of some of the speakers. The LEDs subtended visual angles of 0.2°.
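The envelopes of the FE pair described above can be sketched as follows. This is an illustration under two assumptions that the text does not state explicitly: the 50 ms linear fall spans the entire transient, and the transient and sustained signals start at the same time. Under those assumptions the summed single-source (SS) envelope is flat from onset until the final 100 ms fall.

```python
def transient_envelope(t_ms):
    """Abrupt onset at t = 0, then a 50 ms linear fall (assumed to span the whole transient)."""
    if 0.0 <= t_ms < 50.0:
        return 1.0 - t_ms / 50.0
    return 0.0


def sustained_envelope(t_ms):
    """500 ms tone envelope: 50 ms linear rise, plateau, 100 ms linear fall."""
    if t_ms < 0.0 or t_ms >= 500.0:
        return 0.0
    if t_ms < 50.0:
        return t_ms / 50.0
    if t_ms < 400.0:
        return 1.0
    return (500.0 - t_ms) / 100.0


def single_source_envelope(t_ms):
    """Envelope of the SS control stimulus: sum of transient and sustained."""
    return transient_envelope(t_ms) + sustained_envelope(t_ms)


# Sample the SS envelope every millisecond over the plateau region.
plateau = [single_source_envelope(float(t)) for t in range(0, 400)]
```

Multiplying these envelopes by a tone of 1000, 2000, 4000, or 8000 Hz, or by broadband noise, would give the carriers listed in the text.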

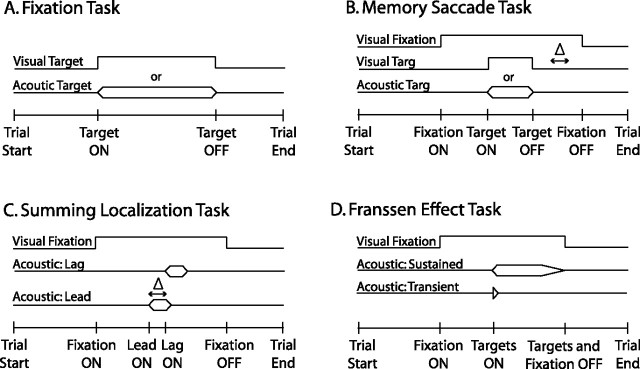

Figure 1.

Schematic diagrams of the experimental tasks. A, Fixation task. Either visual or acoustic stimuli were presented in the frontal hemifield without behavioral constraints. To receive a reward, the subject was expected to make a saccadic eye movement to the location of a stimulus and to maintain fixation on the source until it was turned off. B, Memory-saccade task. This task began with a visual fixation event at the straight-ahead position. While the subject maintained fixation, a visual or an acoustic target was presented elsewhere. The time elapsed between the offset of the target and the offset of the visual fixation event, illustrated with Δ, is the delay period. The subject was required to maintain fixation on the LED straight ahead for the entire duration of the delay period and not to respond until it was turned off. The delay period was varied randomly (200–1400 ms) from trial to trial. C, Summing localization task. Pairs of noise bursts were presented from speakers located at either (±31°, 0°) or (±60°, 0°) with interstimulus delays, illustrated with Δ, ranging from 0 to 1000 μs. These stimuli were presented in the context of the memory-saccade task. Lead ON, First stimulus of the pair to be turned on; Led OFF, second stimulus of the pair to be turned on. D, Franssen effect task. Pairs of single-tone stimuli were presented from speakers located at (±31°, 0°) while the subject maintained fixation on an LED located straight ahead.

Behavioral training and experimental tasks

Each monkey wore a collar with a ring for handling with a pole. The first stage of training consisted of teaching the monkey to enter a primate chair (Crist Instrument, Hagerstown, MD) for a fruit or nut reward. Subsequently, using operant conditioning, monkeys were rewarded with a small amount of water for orienting to the sources of acoustic, visual, or bimodal (visual plus acoustic) stimuli according to temporal and spatial criteria set by the investigator. Temporal criteria included maintaining fixation on the source of a stimulus for its entire duration, or delaying a response until instructed to respond. Spatial criteria involved the application of an electronic acceptance window around each target. The size of the acceptance window defined the margin of error allowed for each target. A discussion of the criteria used to determine the size of the electronic window for determining success for acoustic targets is found in Populin and Yin (1998). Briefly, the major concern was to balance the need to provide windows large enough for the subject to respond without spatial constraints with the need to provide windows small enough to encourage the subject to try diligently to localize the acoustic targets accurately. The size of the acceptance windows was ∼2° for visual targets and ∼8° for acoustic targets. No efforts were made to improve the accuracy or precision with which subjects oriented to visual targets.

Fixation task.

This task entailed the presentation of acoustic, visual, or bimodal (acoustic plus visual) stimuli without behavioral requirements (see Fig. 1A). The subject was required to direct its eyes to the location of the source of the stimulus, and to maintain fixation until the stimulus was turned off. If both temporal and spatial criteria were met, a liquid reward (H2O) was delivered.

Memory saccade task.

The memory-saccade task (see Fig. 1B,C,D) required subjects to withhold an overt response to the source of a stimulus until instructed to orient.

The subject was first required to fixate an LED at the straight-ahead position for a randomly varying period of time (500–1500 ms). During fixation, a 50 ms (10 ms rise/fall) broadband noise target was presented elsewhere in the frontal hemifield. After a delay period, which varied randomly between 200 and 1400 ms, the fixation LED was turned off, signaling the subject to orient to the location of the remembered target. This task was first used with broadband single stimuli to study whether monkeys could orient to the sources of acoustic stimuli stored in working memory. Subsequently, the task was also used to study the responses of monkeys to summing localization stimuli (see Fig. 1C). Summing localization trials were presented with low probability of occurrence, amounting to <1% of the total number of trials in one session, and without reward provided because the subjective nature of the perceived location of the phantom sources did not allow a definition of what constituted correct and incorrect responses.
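The timing of a single memory-saccade trial can be sketched as below. The sketch assumes that both random intervals are drawn from uniform distributions and that the target is presented at the end of the initial fixation period; the text gives only the ranges, so both choices, and the function name, are assumptions.

```python
import random


def memory_saccade_trial(rng):
    """Draw event times (ms, relative to fixation LED onset) for one trial."""
    fixation_ms = rng.uniform(500.0, 1500.0)  # initial fixation period
    target_ms = 50.0                          # duration of the noise target
    delay_ms = rng.uniform(200.0, 1400.0)     # target offset to LED offset
    target_on = fixation_ms
    target_off = target_on + target_ms
    go_signal = target_off + delay_ms         # fixation LED turned off
    return {"target_on": target_on, "target_off": target_off, "go_signal": go_signal}


rng = random.Random(1)
trials = [memory_saccade_trial(rng) for _ in range(1000)]
```

In this sketch the delay is measured from target offset to fixation LED offset, matching the definition given in the caption of Figure 1B.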

A variant of this task without a delay period was used to test the subjects' perceptions of FE stimuli (see Fig. 1D). During the fixation period, the pair of signals that made up the FE stimuli was presented. The fixation LED at the straight-ahead position was turned off at the end of the stimuli. The subject was required to maintain fixation until the LED was turned off. As in the summing localization trials, no criteria for success could be defined; thus, no reward was delivered. Trials of this type constituted <1% of the total number of trials in a session. The advantage of this approach is that the subject can be tested without having to provide a reward for performance that cannot be evaluated objectively. The disadvantage is that subjects must be tested in several experimental sessions to obtain enough trials, which is likely to result in increased variability.

Procedures with head restrained

The initial stages of training were done with the heads of the subjects restrained and aligned with the straight-ahead position. The goals of the first experimental sessions were to obtain accurate calibration coefficients, and to convey to the monkeys that orienting to the source of a stimulus resulted in reward. Acoustic, visual, and bimodal (acoustic plus visual) targets were presented within ±40–45° on the horizontal axis, and within ±25° on the vertical axis.

Procedures with head unrestrained

With the exception of a neck piece that confined the monkeys to the primate chair while allowing free head and body movements, the monkeys were completely unrestrained during these experiments. We found that while thirsty, they sat quietly facing the array of speakers and LEDs in the frontal hemifield without turning. Data acquisition was stopped if they turned. A custom-made acrylic piece attached to the head post held a spout in front of the monkey's mouth to deliver liquid rewards regardless of head position. The heads of the monkeys were released at the start of the experimental sessions, and restrained again at the end.

Experimental sessions and rewards

A typical experimental session consisted of a mixture of auditory, bimodal, and visual trials presented in random order. The proportion of trials corresponding to each condition could be adjusted during the experimental session without interruptions. The amount of water consumed by each monkey every day varied considerably across subjects. With some exceptions, monkeys drank enough water during the experimental sessions and did not require supplements. On days on which no experiments were done, monkeys were provided with larger amounts of water than consumed in an average experimental session. Typically, monkeys remained on task for 2–4 h, which allowed them to perform 2500–3500 trials, with occasional experimental sessions of up to 5 h, producing >5000 trials. The end of an experimental session was routinely marked by the monkey turning around and looking away from the array of speakers and LEDs.

Dependent variables, data analysis, and presentation

Gaze position at the end of a trial was used as the measure of sound localization. We will refer to this measure as final eye position for the head-restrained experiments, and as final gaze position for the head-unrestrained experiments. Both measures were determined with a velocity criterion described in detail in Populin and Yin (1998). Briefly, a mean velocity baseline was computed for each trial from an epoch starting 100 ms before to 10 ms after the presentation of the target, a period during which the eyes were expected to be stationary. Saccade onset was defined by the end of fixation, the time at which the velocity exceeded 2 SDs of the mean baseline. Similarly, saccade offset was defined by the return to fixation, the time at which the velocity of the eye/gaze returned to within 2 SDs of the mean baseline. Custom graphics software written in Matlab (The MathWorks, Natick, MA) was used for the analysis. All gaze shifts within each trial were analyzed in this manner. In some trials subjects made a single saccade whereas in others the initial saccade was followed by one or more corrective saccades. Gaze position at the end of the last saccade was considered the final gaze position for the trial.
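The velocity criterion summarized above can be sketched for a single one-dimensional position trace. This is not the authors' Matlab software: it assumes that "2 SDs of the mean baseline" means the baseline mean plus two standard deviations, it uses a simple sample-to-sample difference as the velocity estimate, and it finds only the first saccade in the trial. The synthetic trace is invented for illustration.

```python
from statistics import mean, stdev

FS_HZ = 500  # sampling rate stated in the text


def speed(position_deg, fs_hz=FS_HZ):
    """Unsigned sample-to-sample velocity (deg/s) of a one-dimensional trace."""
    return [abs(b - a) * fs_hz for a, b in zip(position_deg, position_deg[1:])]


def detect_saccade(position_deg, target_index, fs_hz=FS_HZ, n_sd=2.0):
    """Return (onset, offset) sample indices of the first saccade, or None."""
    vel = speed(position_deg, fs_hz)
    start = target_index - round(0.100 * fs_hz)  # 100 ms before the target
    stop = target_index + round(0.010 * fs_hz)   # 10 ms after the target
    baseline = vel[start:stop]
    threshold = mean(baseline) + n_sd * stdev(baseline)  # assumed criterion
    onset = next((i for i in range(stop, len(vel)) if vel[i] > threshold), None)
    if onset is None:
        return None
    # Offset: first sample after onset at which velocity is back within threshold.
    offset = next((i for i in range(onset + 1, len(vel)) if vel[i] <= threshold), len(vel))
    return onset, offset


# Synthetic trace: small fixation jitter, a 20 degree movement lasting 50 ms,
# then jitter around the new position. Target onset is at sample 100.
JITTER = [0.0, 0.01, 0.0, 0.02]
trace = []
for i in range(300):
    if i < 150:
        trace.append(JITTER[i % 4])
    elif i < 175:
        trace.append(20.0 * (i - 149) / 25)
    else:
        trace.append(20.0 + JITTER[i % 4])

onset, offset = detect_saccade(trace, target_index=100)
final_position = trace[offset]
```

To follow the text, the search would be repeated after each offset so that corrective saccades are also detected, and the position at the end of the last one would be taken as the final gaze position.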

The following spherical statistics were used to describe the final eye/gaze position data to measure sound localization: (1) the spherical correlation coefficient (SCC) (Fisher et al., 1987), computed for targets and individual final eye positions, provides an overall measure of localization performance, (2) angular error, the mean of the unsigned angles between each final eye position and the corresponding target, provides a measure of accuracy, and (3) κ−1, a measure of dispersion of spherical data given by the length of the vector resulting from adding the vectors representing individual observations, provides a measure of precision. A modified version of the SPAK software package developed by Carlile et al. (1997) was used to carry out the data analyses. An excellent discussion of the meaning of these descriptive spherical statistics is found in Wightman and Kistler (1989).
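Two of the statistics listed above, the angular error and κ−1, can be sketched as follows. The sketch is not the SPAK implementation. It assumes that positions are given as (azimuth, elevation) pairs in degrees and that κ−1 is estimated as (N − R)/(N − 1), where R is the length of the resultant of the N unit vectors, a common estimator for Fisher-distributed data. The SCC is omitted.

```python
import math


def to_unit_vector(azimuth_deg, elevation_deg):
    """Convert (azimuth, elevation) in degrees to a 3D unit vector (assumed convention)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.sin(el), math.cos(el) * math.cos(az))


def angle_between_deg(u, v):
    """Unsigned angle in degrees between two unit vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def angular_error_deg(target, responses):
    """Mean unsigned angle between each response and its target."""
    t = to_unit_vector(*target)
    return sum(angle_between_deg(t, to_unit_vector(*r)) for r in responses) / len(responses)


def kappa_inverse(responses):
    """Dispersion estimate (N - R) / (N - 1), with R the resultant vector length."""
    vectors = [to_unit_vector(*r) for r in responses]
    n = len(vectors)
    resultant = math.sqrt(sum(sum(v[k] for v in vectors) ** 2 for k in range(3)))
    return (n - resultant) / (n - 1)


# Hypothetical final eye positions for a target at (22, 0).
target = (22.0, 0.0)
responses = [(20.0, 0.0), (24.0, 0.0)]
error = angular_error_deg(target, responses)
dispersion = kappa_inverse(responses)
```

Tightly clustered responses give a resultant length close to N and therefore a κ−1 close to zero, whereas scattered responses give larger values, which is why the statistic serves as a measure of precision.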

Summaries of final eye position are presented in spherical plots from the perspective of the observer. That is, targets to the left of the subject are shown on the right of the plots. In all spherical plots, the meridians are plotted every 20° and the parallels every 10°. The spheres are plotted 10° tilted forward to facilitate visual inspection of the data. The centroids shown in all spherical plots represent the mean final eye/gaze position computed for individual targets using 20–50 trials, as illustrated in the inset of Figure 3B. A thin line connects each centroid, a filled round dot, to its corresponding target, a rhomboid. The circular/elliptical functions surrounding each centroid represent 1 SD.

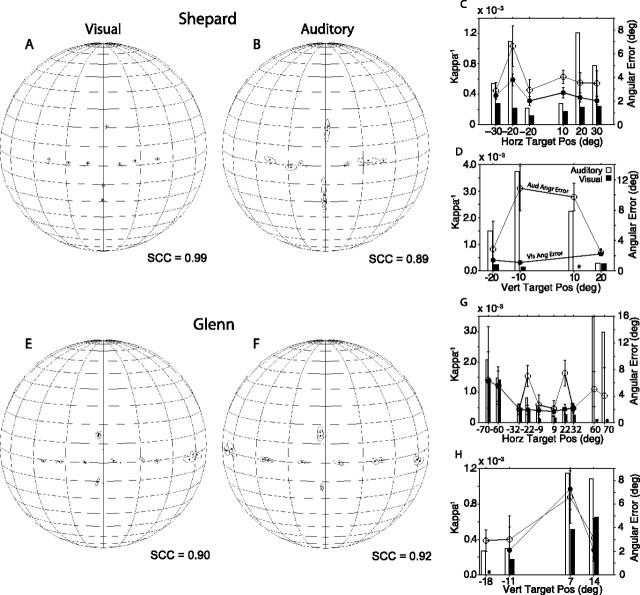

Figure 3.

Summaries of final eye position, κ−1, and angle errors recorded with the head restrained using the fixation task. The final eye position data (A, B, E, F, I, J) are plotted in spherical coordinates from the perspective of an external observer. The stimuli were 500–1000 ms broadband noise bursts. The straight-ahead position is at the center of each spherical plot. The meridians are drawn every 20°, and the parallels are drawn every 10°. The inset in B shows the final eye position data from the acoustic target at (22°, 0°) that were used to compute the centroid and the ellipse representing 1 SD. The angle errors and the corresponding values of κ−1 (C, D, G, H, K, L) are plotted separately for targets located on the main horizontal and vertical axes. Aud/Vis Ang Error, Auditory or visual angle error. Error bars indicate SE.

Front-back confusions, responses directed to the incorrect (front or back) hemifield, are removed in some studies (Carlile et al., 1997) or corrected in others (Wightman and Kistler, 1989) because they are thought to arise from ambiguity in the interaural cues used for localization in front of each ear. We have chosen not to alter the data because (1) only two to four of the targets used in this study could be affected by this problem, and (2) despite the physical explanation for the confusion, we deemed it appropriate to present the data as they were collected.

Results

Sound localization with restrained head

The data will be presented separately for each of the three subjects to preserve their characteristics. The initial sound localization experiments were performed with the head of the subjects restrained. The stimuli and the experimental task were selected to facilitate the task of localizing sound sources. Broadband, long duration (500–1000 ms) noise burst targets were presented well within the monkey's oculomotor range (±35°, 0°), in the context of the fixation task (Fig. 1A).

Figure 2 illustrates the main components of horizontal and vertical eye movements to visual and acoustic targets located along the main axes. The vertical components of eye movements to horizontal targets and horizontal components of eye movements to vertical targets were not plotted for clarity, but were taken into account for the analysis of final eye position. The dots that make up each trace represent digital samples of the voltage output of the coil system. All data are plotted synchronized to the onset of the stimuli, which took place at the 0 ms mark. The position of the targets is illustrated by small arrows. Most of these data were collected in the seventh experimental session of subject Shepard, in which trials using the auditory, visual, and bimodal fixation task (Fig. 1A) were presented. The exceptions were the eye movements directed at the visual target located at (22°, 0°), which were recorded in the 19th and 20th sessions because Shepard refused to make saccadic eye movements to this target in the initial experimental sessions; he started to orient to this target in the 19th session without any change in the procedures or experimental setup. Biases against orienting to certain targets are commonly observed in animals participating in experiments of this type (Populin and Yin, 1998). Interestingly, Shepard showed no difficulty in orienting to a more eccentric target at (31°, 0°).

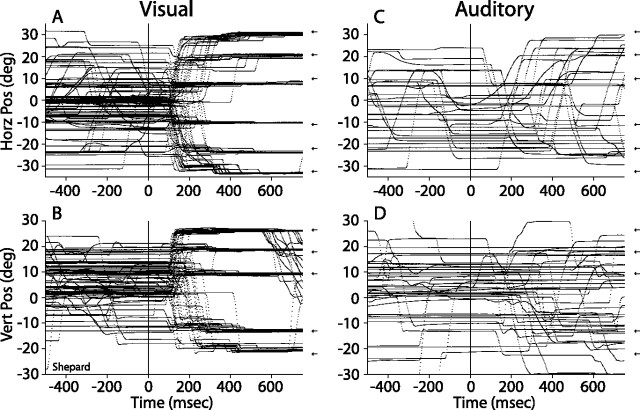

Figure 2.

Horizontal and vertical components of eye movements to visual (A, B) and acoustic (C, D) targets recorded with the fixation task under head-restrained conditions. The position of the targets on the main axes is illustrated by small arrows plotted on the right of each graph. All data are plotted synchronized to the onset of the stimuli, at time 0 ms. The secondary components of the eye movements were omitted for simplicity.

Subject Shepard made numerous eye movements between trials, and like subjects Glenn and Conrad, he exhibited no difficulty in making accurate saccadic eye movements to most visual targets located on the horizontal and vertical axes from the start of the behavioral training (Fig. 2A,B). No emphasis was placed on requiring a high degree of accuracy. Importantly, the subjects were able to orient accurately to the most eccentric targets, demonstrating that no damage was done to the extraocular muscles and associated structures during the surgical procedures in which scleral search coils were implanted to measure eye movements.

Saccadic eye movements to acoustic targets, however, were inaccurate and variable (Fig. 2C,D). Final eye position at the end of most trials bore little relation to the location of the acoustic targets. Subject Shepard tried diligently to localize the acoustic targets throughout the experimental sessions despite not obtaining rewards in most trials of this type. In contrast, subjects Glenn and Conrad did not orient in most acoustic trials and shook the primate chair when several acoustic trials were presented in succession.

Summaries of Shepard's final eye position from the visual and auditory conditions recorded with the fixation task are plotted in spherical coordinates in Figure 3A,B. The conventions for this type of plot are explained above. Briefly, the view corresponds to that of an observer outside the sphere. Thus, targets to the left of the subject are shown on the right side of the plots. Angular errors and κ−1 are plotted separately for horizontal (Fig. 3C) and vertical (D) targets.

Shepard oriented to all visual targets accurately (Fig. 3A). The angular errors for horizontal targets were ∼2° for all eccentricities tested (Fig. 3C). The angular errors for the visual targets on the vertical plane were slightly larger, particularly for those targets below the horizontal plane (Fig. 3D). Overall, the variability of the responses was small, as illustrated by the filled bars in Figure 3C,D. The SCC for the visual condition was 0.99, indicating a high degree of correspondence between the final eye positions and the targets.

The accuracy and precision of eye movements to broadband, long-duration (500–1000 ms) acoustic targets performed by Shepard in the same experimental sessions stand in stark contrast to the eye movements directed to visual targets presented from the same spatial locations (Fig. 3B). Shepard was able to distinguish between targets located to the left and to the right of the midline, but was unable to distinguish targets located in the same hemifield. Localization of targets on the vertical plane was also poor. Interestingly, Shepard made down-up and up-down confusions (i.e., he made downward saccadic eye movements to acoustic targets presented above the horizontal plane and upward saccadic eye movements to acoustic targets presented below the horizontal plane). The angular errors ranged from 7 to 15° for horizontal targets and from 11 to 18° for vertical targets (Fig. 3C,D). Most notable is the large variability of the auditory responses, illustrated by the large values of κ−1 (Fig. 3C,D, open bars), compared with those of the visual condition (Fig. 3C,D, filled bars). The SCC from the auditory condition reached 0.31, demonstrating the poor degree of correspondence between final eye position and the acoustic targets.

Subject Glenn was also very accurate in the visual condition (Fig. 3E). The angular errors for both horizontal and vertical targets were <2° with the exception of the large undershooting error made in orienting to the target located at (−22°, 0°), which exceeded 5° (Fig. 3G). The persistence of this error and the small variability of the responses prompted us to examine the operation of the LED and its placement. Subject Shepard, who was tested in the same setup, did not make such an error. We cannot offer a logical explanation for this behavior because proper function and placement of the LED were confirmed. Most interestingly, Glenn oriented much more accurately to a more eccentric target located at (−31°, 0°). The SCC from the visual condition was 0.99.

Like Shepard's, Glenn's sound localization was very poor (Fig. 3F). Data from the 10th, 25th, and 26th experimental sessions are included in this analysis. Glenn did not attempt to orient to acoustic stimuli presented from a speaker located at (−31°, 0°) in the selected experimental sessions. Eye movements to the sources of acoustic stimuli presented from the other speakers grossly undershot their targets and tended to cluster at two locations: below the horizontal plane near the midline, and above the horizontal plane to the right (Fig. 3F). The angular errors were large, particularly for targets on the vertical plane, with numerous up–down confusions (Fig. 3G,H). As with subject Shepard, Glenn's responses in the auditory condition were much more variable than those from the visual condition (Fig. 3G,H), and yielded an SCC of 0.25.

Subject Conrad performed the poorest of the three subjects tested (Fig. 3I–L). In the visual condition, Conrad's final eye position was slightly more variable than those of the other two subjects, and he did not orient to the target located at (−31°, 0°). We do not think that Conrad's lack of orienting to this target was attributable to damage to his eyes because he made spontaneous saccadic eye movements to the left that exceeded the eccentricity of the target (data not shown). Note also that Conrad overshot all targets to his right, the most eccentric by ∼6° (Fig. 3I). The SCC from the visual condition was 0.98.

In the auditory condition, Conrad responded with eye movements to only four acoustic targets (Fig. 3J). He did not respond to acoustic stimuli presented from speakers at (±31°, 0°), (±9°, 0°), (−18°, 0°), and (0°, 7°), which were not included in the plot. The responses to stimuli presented from the speaker located at (22°, 0°) were relatively accurate (Fig. 3J,K), but those to the stimuli presented from the other three speakers grossly undershot the targets. As with the data from the other two subjects, Conrad's responses to acoustic stimuli were much more variable than those directed to visual targets along both the horizontal and vertical axes (Fig. 3K,L). The SCC from the auditory condition was 0.21.

Sound localization with unrestrained head

The poor sound localization performance observed under head-restrained conditions (Figs. 2, 3) prompted us to re-examine the experimental approach, particularly in light of our findings in the cat that show much improved sound localization under head-unrestrained conditions (Tollin et al., 2005). Accordingly, we released the head of our subjects to allow them to orient to visual and acoustic targets with movements of both the eyes and the head.

Subject Shepard was first tested with the head unrestrained in the 45th experimental session, subject Glenn in the 29th, and subject Conrad in the 22nd session. At the time of switching to the head-unrestrained condition, all subjects had demonstrated proficiency in the mechanics of our experimental paradigm. As in the head-restrained condition, the fixation task was used for the first tests.

Releasing the head of the subjects resulted in marked improvement in the overall behavior of all three animals. The number of instances in which the monkeys shook the primate chair decreased considerably, the duration of the experimental sessions increased, and as shown below, overall sound localization accuracy and precision improved.

Figure 4 shows the main components of gaze shifts to acoustic targets located on the horizontal and vertical axes and their associated head movements recorded in the context of the auditory fixation task (Fig. 1A); the vertical components of gaze shifts to horizontal targets and horizontal components of gaze shifts to vertical targets were not plotted for clarity. As in Figure 2, all data are plotted as a function of time and synchronized to the onset of the acoustic targets, illustrated with thin vertical lines at 0 ms. The acoustic stimuli were the same broadband noise bursts, 500–1000 ms in duration, used in the head-restrained experiments. The mechanics of the experiment were identical to those with the head restrained in terms of the frequency with which stimuli were presented and rewards delivered.

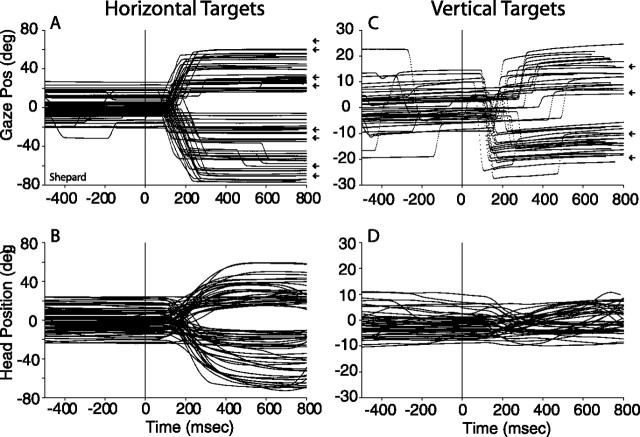

Figure 4.

Gaze and head movements to acoustic targets on the main horizontal (A, B) and vertical (C, D) axes recorded with the fixation task from subject Shepard. All data are plotted synchronized to the onset of the acoustic target at time 0 ms. A, C, The location of the targets is illustrated by small arrows plotted to the right of the gaze plots. The stimuli were 500–1000 ms broadband noise bursts. The secondary components of the gaze shifts and head movements were omitted for simplicity.

Inspection of the data shown in Figure 4 reveals that releasing the head of the subject resulted in much improved performance compared with the head-restrained condition (Fig. 2C,D). Both gaze and head position were mostly stationary before the presentation of the stimuli and within ±20° of the midline on the horizontal plane (Fig. 4A) and ±10° on the vertical plane (Fig. 4C). As with cat (Populin and Yin, 1998) and human (Populin et al., 2001) subjects, monkeys quickly adopted the strategy of waiting for the initiation of the next trial by holding gaze near the straight-ahead position. Gaze shifts to acoustic targets on the horizontal plane (Fig. 4A) consisted of movements of the eyes and head (Fig. 4B), whereas gaze shifts to similar acoustic targets on the vertical plane (Fig. 4C) were accomplished primarily with movements of the eyes, as indicated by the small changes in the position of the head (Fig. 4D).

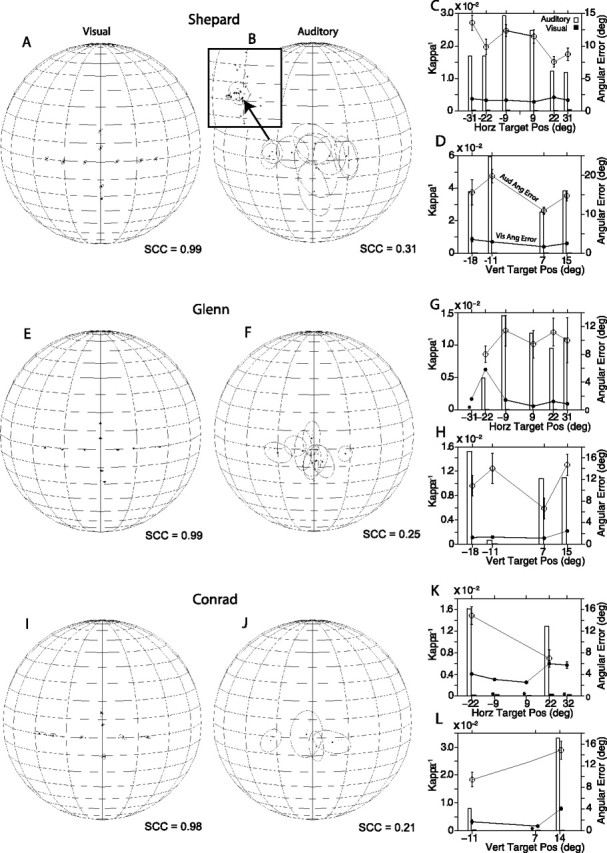

The summary of Shepard's localization behavior from the sixth through the 12th experimental sessions with the head unrestrained is shown in Figure 5. Shepard oriented to the visual targets accurately (Fig. 5A), as indicated by the SCC of 0.98 computed for this condition, but with greater variability than in the head-restrained condition. The final positions of gaze shifts directed at acoustic targets, however, stand in stark contrast to those collected under head-restrained conditions. Although the acoustic stimuli and the experimental task were the same, Shepard's overall localization improved considerably, with the SCC improving from 0.31 for the head-restrained data to 0.71 when the head was free to move. It is also worth noting that the improvement did not result from the inclusion of a larger number of targets because the SCC computed for the same targets used in the head-restrained condition reached 0.76. The angular errors of sound localization, 4–15° for targets on the horizontal plane and 5–10° for targets on the vertical plane, were comparable in magnitude with those documented in the head-restrained condition, albeit of a very different nature. Under head-unrestrained conditions, sound localization errors were mostly caused by a consistent upward bias for all targets, including those located on the vertical plane (Fig. 5B). On the horizontal plane, the only two targets that were clearly undershot were those located at (70°, 0°) and (60°, 0°), plotted on the left side of Figure 5B; Shepard did not undershoot those located on the opposite side at (−70°, 0°) and (−60°, 0°). Most interesting is the reduction in the variability of the responses, illustrated by the smaller areas occupied by the ellipses and documented by the magnitude of κ−1 plotted in Figure 5C, demonstrating an improvement in precision. Overall, Shepard was able to point with his gaze to specific locations in space with little overlap among the various targets.

Figure 5.

Summary of final eye position recorded with the head unrestrained using the fixation task. The acoustic stimuli were 500–1000 ms broadband noise bursts. The asterisks represent target locations from which data are not available. All other details are as in Figure 3.

Subject Glenn's localization behavior also changed when he was allowed to orient with the head unrestrained. The summary of final gaze position from his first through his 18th experimental sessions with the head unrestrained is shown in Figure 5, E and F. Glenn's orienting to visual targets remained essentially unchanged, as revealed by an SCC of 0.97, but the variability of his responses increased (Fig. 5G,H). Glenn was not tested with the visual target located at (−18°, 0°). In contrast, the accuracy with which Glenn oriented to acoustic targets showed a marked increase under head-unrestrained conditions, as documented by an SCC of 0.75. The improvement in sound localization under head-unrestrained conditions was actually greater (SCC, 0.82) if only the targets used under head-restrained conditions are included in the analysis. The four more peripheral targets located at (±60°, 0°) and (±70°, 0°) evoked more variable responses (Fig. 5F,G), which could be attributed to the proximity of the targets to the cone of confusion. Note that the 1 SD functions extend beyond the position of the targets on the horizontal plane, indicating that some responses actually overshot the targets, and that unlike subject Shepard, Glenn did not exhibit an upward bias in the localization of acoustic targets.

Glenn's localization of acoustic targets on the vertical plane improved in comparison to the head-restrained condition, but it remained inconsistent and variable, judging by the magnitude of the angular errors and κ−1 (Fig. 5F,H). That is, Glenn was able to distinguish between targets presented above and below the horizontal plane, but not among them.

Figure 5I illustrates final gaze position summaries from subject Conrad obtained in the first through the third head-unrestrained experimental sessions. Conrad did not experience difficulties in orienting to the visual targets, as indicated by the SCC of 0.96 computed from those data. The magnitude of the errors was comparable with those measured under head-restrained conditions, but the variability was greater (Fig. 5K,L).

Releasing the head also produced large changes in Conrad's orienting behavior in the auditory condition (Fig. 5J). First, unlike in the head-restrained condition, in which Conrad oriented reliably to only four targets, left, right, up and down (Fig. 3J), under head-unrestrained conditions he readily oriented to more targets. The major improvement was in orienting to targets on the horizontal plane, which, like subject Shepard, he mislocalized with a consistent upward bias. For targets on the vertical plane, Conrad's behavior was erratic and inconsistent. The responses to targets presented above the horizontal plane were grouped on the same spatial location, whereas responses to targets below the horizontal plane had numerous down–up confusions, as illustrated by the elongated ellipsoidal shapes of the 1 SD functions corresponding to those targets. Overall, Conrad's orienting behavior to acoustic targets improved from an SCC of 0.21 in the head-restrained condition to an SCC of 0.57 in the head-unrestrained condition; the SCC actually reached 0.68 if only the four acoustic targets Conrad oriented to in the head-restrained condition were included in the analysis.
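
The SCC values compared throughout this section summarize how well final gaze positions track target positions on the sphere. As a rough illustration only, the sketch below computes a sample spherical correlation coefficient in the determinant form attributed to Fisher and Lee; whether this matches the exact computation used for the SCCs reported here is an assumption, as are the (azimuth, elevation) coordinate convention and the function names, which are hypothetical.

```python
import math


def to_unit_vector(azimuth_deg, elevation_deg):
    """Convert (azimuth, elevation) in degrees to a 3-D unit vector.

    Assumed convention (not taken from the paper): x points right,
    y points up, z points straight ahead.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.sin(el), math.cos(el) * math.cos(az))


def outer_sum(a_vecs, b_vecs):
    """Sum of outer products a_i * b_i^T over paired vectors (3x3 matrix)."""
    m = [[0.0] * 3 for _ in range(3)]
    for a, b in zip(a_vecs, b_vecs):
        for i in range(3):
            for j in range(3):
                m[i][j] += a[i] * b[j]
    return m


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def spherical_correlation(targets, responses):
    """Sample spherical correlation between paired directions.

    targets, responses: equal-length lists of (azimuth_deg, elevation_deg).
    The targets (and responses) must not all lie in one plane through the
    origin, otherwise the denominator is zero.
    """
    x = [to_unit_vector(*t) for t in targets]
    y = [to_unit_vector(*r) for r in responses]
    numerator = det3(outer_sum(x, y))
    denominator = math.sqrt(det3(outer_sum(x, x)) * det3(outer_sum(y, y)))
    return numerator / denominator
```

Under this formulation, responses that coincide with the targets yield a coefficient of 1 and left–right mirrored responses yield −1, up to floating-point rounding. Intermediate values such as those reported above reflect scatter and systematic distortions in the responses.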

Localization of remembered acoustic targets (memory saccade task)

Both social and nonsocial situations require primates to withhold immediate overt responses to stimuli until the conditions are appropriate. The execution of behavioral responses after the stimuli have disappeared is based on information stored in working memory and therefore involves different mechanisms than those underlying sensory-guided responses (Goldman-Rakic, 1987).

The auditory memory-saccade task (Fig. 1B), an oculomotor variant of the type of experimental tasks first introduced by Hunter (1913), was used to test the ability of two of our subjects to orient to the sources of brief sounds after a mandatory, randomly varying (200–1400 ms) delay period. In addition to testing the subjects' ability to orient to remembered sound locations, the mechanics of the memory-saccade task standardized gaze and head position at the straight-ahead position at the time of stimulus presentation, and thus provided an important control not included in the preceding experiments because of the nature of the fixation task.

Figure 6 shows the horizontal components of gaze shifts and their corresponding head movements to acoustic targets located at (±31°, 0°) from subjects Shepard and Glenn. All data are plotted synchronized to the onset of the stimuli, a 50 ms broadband noise burst, at time 0 ms. The delay period was defined as the time elapsed between the offset of the target stimuli and the offset of the fixation LED, the event that signaled the subject to orient. Various delay periods were used in every experimental session to prevent subjects from anticipating the time at which they could respond. Several delay periods can be distinguished by the time of initiation of the gaze shifts shown in Figure 6. Note that the two subjects were able to withhold their gaze shifts while maintaining fixation on the LED at the straight-ahead position. Head position was maintained in rough alignment with the straight-ahead position, although small, very slow movements were observed after the presentation of the target (Fig. 6B,D).

Figure 6.

Gaze (A, C) and head (B, D) movements to acoustic targets at (±31°, 0°) illustrating subjects Shepard's and Glenn's orienting behavior in the context of the auditory memory-saccade task. All data are plotted synchronized to the onset of the acoustic stimuli, which were 50 ms broadband noise bursts. No information is provided on the onset and offset of the fixation LED, although the occurrence of the latter can be inferred from the onset of the gaze shifts.

The summaries of final gaze positions recorded with the memory saccade task in the visual and auditory conditions from subjects Shepard and Glenn are shown in Figure 7; data from subject Conrad, our poorest performer, were omitted because his behavior in this task was erratic and inconsistent. No differences were observed among the various periods of delay used. Thus, the data were collapsed for presentation and analysis.

Figure 7.

Summary of final gaze positions recorded in the context of the memory saccade task with head unrestrained. The stimuli were 50 ms broadband noise bursts. Delay periods ranged from 200 to 1400 ms. Other details are as in Figures 3 and 5.

In the remembered visual target condition, Shepard was very accurate and precise (Fig. 7A,C,D). The magnitude of the angular errors was ∼2° for targets along both the horizontal and vertical axes. Note that Shepard's final gaze position for the horizontal targets was shifted 1–2° upward, which is consistent with previous reports (Barton and Sparks, 2001). The variability of the final gaze position was also small, as illustrated by the small area of the 1 SD functions shown in Figure 7A, and the small values of κ−1 shown in Figure 7C. The SCC computed for the responses in the visual condition was 0.99. Glenn also made angular errors of ∼2° for targets on the horizontal plane (Fig. 7E), but he made much larger errors for targets on the vertical plane. Interestingly, Glenn oriented to targets presented from the most eccentric positions to his left (−70°, 0°) and (−60°, 0°). Glenn's SCC for the visual condition was 0.9.

In the auditory memory-saccade condition, both Shepard and Glenn exhibited a higher degree of accuracy and consistency than expected based on their performance in the fixation task. Shepard's angular errors for targets on the horizontal plane were larger than those made in the visual condition (Fig. 7C). He was the least accurate in orienting to the target at (−20°, 0°). In terms of variability, the targets at (±20°, 0°) exhibited the largest scatter (Fig. 7C). For targets on the vertical plane, Shepard did not distinguish between the two targets located above the horizontal plane and between the two targets located below the horizontal plane, as demonstrated by the large degree of overlap between the one SD functions from the responses to these targets (Fig. 7B). Importantly, Shepard's SCC for auditory targets under memory-saccade conditions reached 0.89.

Glenn also exhibited a higher degree of accuracy than expected in orienting to acoustic targets in the context of the memory-saccade task (Fig. 7F). The SCC computed for this data set was 0.92. Similar to Shepard, Glenn was not able to distinguish between the two targets presented above the horizontal plane and between the two targets presented below the horizontal plane. The magnitude of Glenn's angular errors on the horizontal plane was, with the exception of the targets at (±22°, 0°), indistinguishable from that of the angular errors made in the visual condition. Note that Glenn oriented to the remembered locations of stimuli presented from the most eccentric targets, (±60°, 0°) and (±70°, 0°). Worthy of note is the fact that the magnitude of the κ−1 values was smaller in the memory-saccade task (Fig. 7G) than in the fixation task (Fig. 5G), indicating higher precision.

Summing localization: did monkeys localize the sources of sounds?

Many behavioral experiments in animals rely on some form of conditioning to compel the subjects to execute a motor action in response to the presentation of a stimulus. The paradigm selected for the present experiments relied on a form of positive reinforcement called operant conditioning, in which subjects were rewarded with a small drop of fluid after the completion of every successful trial. Although convenient for preparing animals to generate consistent behavior suitable for electrophysiological studies, this approach relies on extensive practice, which may lead the animals to remember the locations of the acoustic targets because of their overlap with visual targets, or to memorize the spatial locations that resulted in rewards in the auditory modality.

To control for the effects of these potentially confounding variables, we tested our subjects with acoustic stimuli known to elicit the perception of summing localization in humans (Blauert, 1983) and cats (Populin and Yin, 1998). Pairs of broadband noise stimuli (25 ms; ±10 ms linear rise/fall) were presented from speakers located symmetrically at either (±60°, 0°) or at (±31°, 0°), with interstimulus delays ranging between 0 and 1000 μs. Acoustic stimuli presented in this manner create the illusion of a single sound originating from a phantom source located between the two transducers, the laterality and eccentricity of which are a function of the location of the leading speaker and the interstimulus delay, respectively. That is, the longer the interstimulus delay, the closer the phantom source is perceived to be to the leading speaker.
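
For readers who wish to reproduce stimuli of this kind, the following minimal sketch generates a lead–lag pair of noise bursts with the parameters given above (25 ms duration, 10 ms linear rise/fall, delays of up to 1000 μs). Several details are assumptions not stated in the text: the 100 kHz sampling rate, the use of Gaussian noise, the use of the same noise token in both channels, and the function names, which are hypothetical. Following the convention used for Figure 8A, a negative delay means that the left speaker leads.

```python
import random


def linear_ramp_envelope(n_samples, n_ramp):
    """Amplitude envelope with linear onset and offset ramps of n_ramp samples."""
    env = [1.0] * n_samples
    for i in range(n_ramp):
        gain = i / n_ramp
        env[i] = gain                  # rising ramp at the start
        env[n_samples - 1 - i] = gain  # mirrored falling ramp at the end
    return env


def summing_localization_pair(delay_us, fs=100_000, burst_ms=25.0,
                              ramp_ms=10.0, seed=0):
    """Return (left, right) sample lists for one lead-lag noise pair.

    delay_us < 0: left channel leads; delay_us > 0: right channel leads.
    fs, the Gaussian noise, and the shared noise token are assumptions.
    """
    rng = random.Random(seed)
    n_burst = round(fs * burst_ms / 1000)
    n_ramp = round(fs * ramp_ms / 1000)
    env = linear_ramp_envelope(n_burst, n_ramp)
    burst = [rng.gauss(0.0, 1.0) * gain for gain in env]

    # Convert the interstimulus delay from microseconds to whole samples.
    lag = round(abs(delay_us) * fs / 1_000_000)
    leading = burst + [0.0] * lag   # padded at the end to equalize lengths
    lagging = [0.0] * lag + burst   # delayed copy of the same burst

    if delay_us < 0:
        return leading, lagging
    return lagging, leading
```

For example, `summing_localization_pair(-300)` returns a pair in which the right channel is a copy of the left channel delayed by 30 samples at the assumed sampling rate.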

We hypothesized that if the monkeys performed their gaze shifts by localizing the sound sources, they should orient to the location of phantom sources close to the leading speaker. Furthermore, we hypothesized that final gaze position should be a function of interstimulus time. The memory-saccade task was used in these experiments. Trials of this type were presented in random order with trials of other types and with low probability, <1% of the total number of trials in one session. Rewards were not given in trials of this type because objective criteria for success could not be unequivocally defined.

Figure 8A illustrates the presentation of the summing localization stimuli, the resulting phantom source, and the monkey's expected behavior. First, the monkey was expected to fixate an LED at the straight-ahead position. During the fixation period, a pair of noise bursts was presented from the speakers located to the left and right of the subject. In this example, the stimulus presented from the speaker to the left of the subject preceded the stimulus presented from the right of the subject by a hypothetical −300 μs interstimulus delay. Stimuli of this type lead humans to perceive a single phantom source located to the left of the midline; thus, the monkey was expected to shift his gaze to a location to the left of the midline.

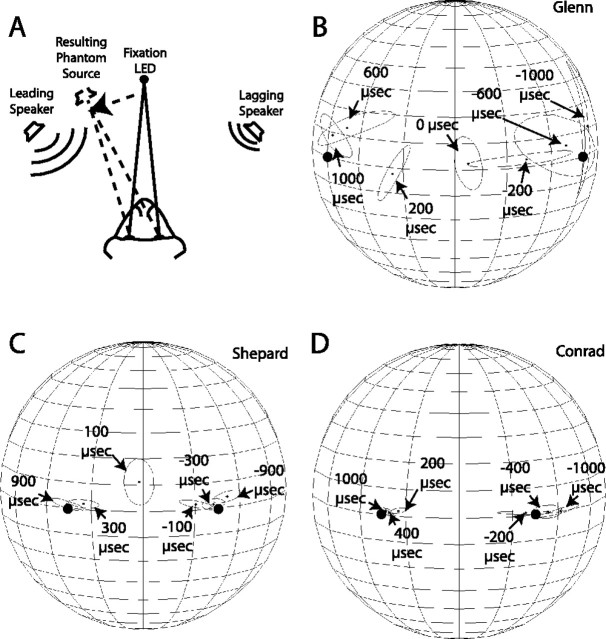

Figure 8.

Control experiments. A, Schematic representation of the summing localization task. The subject was first presented with a fixation LED at the straight-ahead position. While fixating, two sounds were presented from speakers located to each side, with interstimulus times ranging from 0 to 1000 μs. In this example, the stimulus presented from the speaker to the left of the subject preceded the stimulus presented from the right of the subject. This configuration of stimuli was expected to create the perception that a single sound was presented from a phantom source to the left of the fixation LED. B–D, Summaries of final gaze positions from subjects Glenn, Shepard, and Conrad. The location of the speakers used to present the stimuli is illustrated by large, filled round dots.

Summaries of final gaze position from summing localization trials are presented in Figure 8B–D. All three subjects directed their gaze shifts in the direction of the leading speaker, indicating that they oriented to phantom sound sources. The extent to which final gaze position changed as a function of the interstimulus delay, however, varied across subjects.

Subject Glenn's responses to summing localization stimuli presented from speakers located at (±60°, 0°) followed an orderly pattern. Pairs of stimuli presented simultaneously, without an interstimulus delay (0 μs), evoked gaze shifts close to the midline. Stimuli with an interstimulus delay of ±200 μs were localized more laterally, as were the stimuli with delays of ±600 and ±1000 μs.

Subjects Shepard and Conrad were tested with summing localization stimuli presented from speakers located at (±31°, 0°). The final gaze position of both subjects also followed an orderly representation of the interstimulus delay, albeit more compressed in space, presumably because of the proximity of the speakers. Interestingly, both subjects localized the stimuli with the largest negative interstimulus delay to a more eccentric position than the physical location of the speaker (Fig. 8C,D).

Additional tests of spatial auditory function: the Franssen effect

To extend our understanding of the spatial auditory abilities of the rhesus monkey, two of the subjects in this study, Glenn and Shepard, were tested with acoustic stimuli of the type known to evoke the perception of the FE in humans (Hartmann and Rakerd, 1989; Yost et al., 1997) and cats (Dent et al., 2004). The FE (Franssen, 1962) is an auditory illusion created by presenting single-tone stimuli from speakers located symmetrically about the midline, on the horizontal plane; in the experiments reported here, the sources were located at (±31°, 0°). One stimulus of the pair, defined as the sustained, started with a long, slowly rising ramp (50 ms) and ended with a long decaying ramp (100 ms). The other stimulus of the pair, defined as the transient, started abruptly and ended with a slowly decaying ramp (50 ms).

Humans exposed to FE stimuli, particularly in a reverberant environment, report hearing the sustained component at the location of the source of the transient component, in the opposite hemifield. The FE is strongest for tonal stimuli of ∼1–1.5 kHz, which are difficult for humans to localize because of ambiguities in the physical cues used for localization (Hartmann and Rakerd, 1989; Yost et al., 1997).
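
The envelope relationship between the two components can be made concrete with a short sketch. It uses the ramp durations given above (sustained: 50 ms rise and 100 ms decay; transient: abrupt onset and 50 ms decay). The linear ramp shape, the 500 ms total duration of the sustained component, the 48 kHz sampling rate, and the function names are illustrative assumptions rather than details taken from the experiments.

```python
import math


def franssen_envelopes(fs=48_000, sustained_ms=500.0, rise_ms=50.0,
                       sustained_fall_ms=100.0, transient_fall_ms=50.0):
    """Return (sustained_env, transient_env) amplitude envelopes.

    Linear ramps, fs, and the total duration are assumptions.
    """
    n_total = round(fs * sustained_ms / 1000)
    n_rise = round(fs * rise_ms / 1000)
    n_sfall = round(fs * sustained_fall_ms / 1000)
    n_tfall = round(fs * transient_fall_ms / 1000)

    sustained_env = []
    transient_env = []
    for i in range(n_total):
        if i < n_rise:
            s_gain = i / n_rise                # slow onset ramp
        elif i >= n_total - n_sfall:
            s_gain = (n_total - i) / n_sfall   # slow offset ramp
        else:
            s_gain = 1.0
        sustained_env.append(s_gain)
        # Abrupt onset at full amplitude, then a linear decay to silence.
        transient_env.append(max(0.0, 1.0 - i / n_tfall))
    return sustained_env, transient_env


def franssen_pair(freq_hz, fs=48_000, **envelope_kwargs):
    """Return (sustained, transient) tone waveforms, one per speaker."""
    s_env, t_env = franssen_envelopes(fs=fs, **envelope_kwargs)
    tone = [math.sin(2.0 * math.pi * freq_hz * i / fs) for i in range(len(s_env))]
    sustained = [gain * sample for gain, sample in zip(s_env, tone)]
    transient = [gain * sample for gain, sample in zip(t_env, tone)]
    return sustained, transient
```

With equal 50 ms ramps, the two envelopes sum to a constant during the onset, so the summed amplitude of the pair matches that of a tone switched on abruptly, whereas after the first 50 ms only the sustained speaker emits sound.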

The data reported here were obtained in the context of the memory saccade task (Fig. 1D), which compelled the monkeys to listen to the stimuli for their entire duration before permitting a response. Broadband stimuli as well as 1, 2, 4, 6, and 8 kHz stimuli were used in both the SS and FE configurations, which were presented in the same sessions.

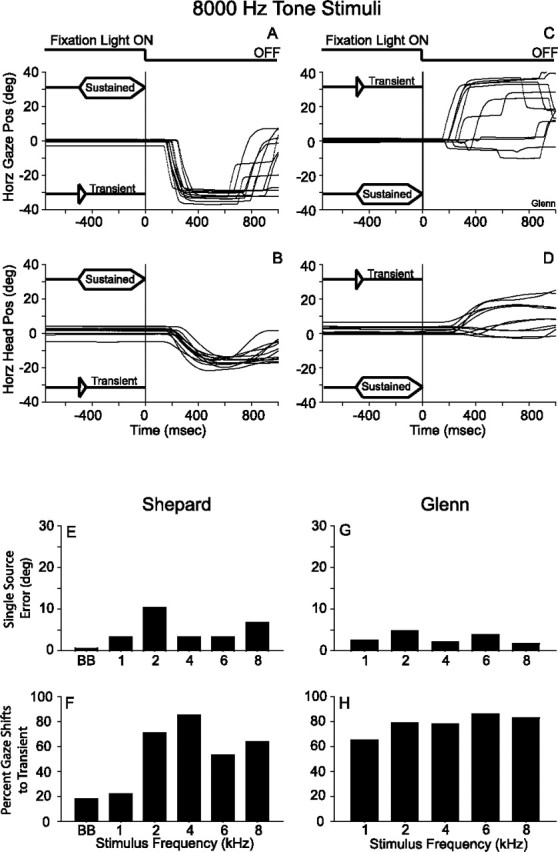

The oculomotor behavior of one of the subjects in response to FE stimuli is illustrated in Figure 9 with data from the 8 kHz condition. Gaze and head position are plotted as a function of time and synchronized to the offset of the fixation LED, which is illustrated at the top of Figure 9, A and C. The subjects were required to fixate on an LED at the straight-ahead position (0°, 0°) until it was turned off. The offset of the fixation LED, which coincided with the offset of the sustained component of the stimuli, was the signal for the subject to respond. Premature responses (i.e., gaze shifts executed before the offset of the fixation LED) resulted in an immediate termination of the trial. Figure 9, A and B, illustrate the condition in which the sustained component of the stimuli was presented from a speaker located at (31°, 0°), and the transient from a speaker at (−31°, 0°). The opposite configuration of the stimuli is illustrated in Figure 9, C and D.

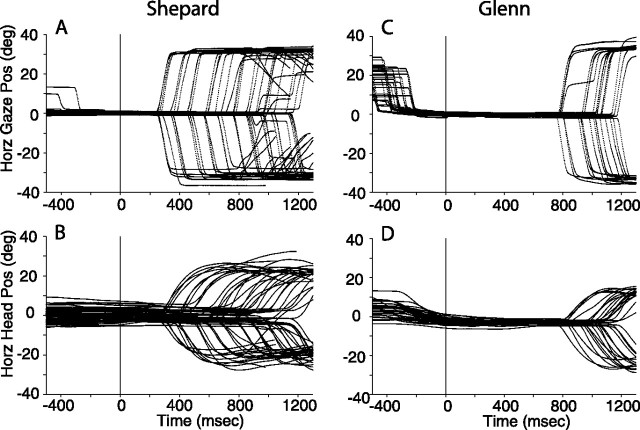

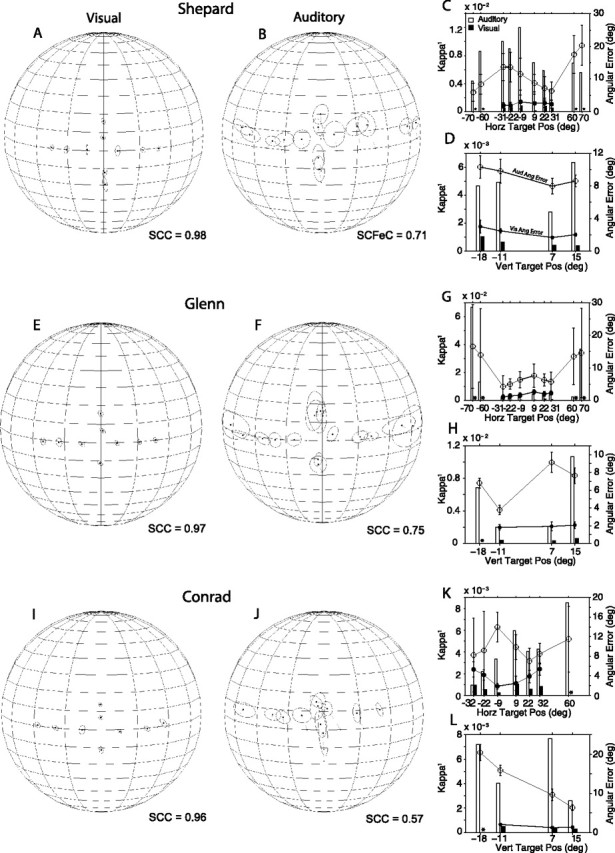

Figure 9.

Demonstration of the Franssen effect. The configuration of the stimuli is illustrated in each of the panels. The visual fixation stimuli are illustrated at the top of A and C. All data are plotted synchronized to the offset of the fixation LED, which constituted the signal for the subject to respond. A, B, Gaze and head position plotted as a function of time with the transient component of the FE stimuli presented to the left of the subject and the sustained component presented to the right. The subject oriented in the direction of the transient indicating that the FE was perceived. The reversed configuration of the FE stimuli and the associated gaze and head movement data are presented in C and D. Note that the head of the subject remained stationary during the presentation of the stimuli, demonstrating that no dynamic cues were available to resolve this task. E, G, Angular localization errors of SS stimuli for each of the two subjects as a function of the frequency of the stimuli. F, H, Percent of trials in which the subject made a gaze shift in the direction of the transient component of the FE stimuli. BB, Broadband noise.

It was hypothesized that if the monkeys experienced the FE, they should orient to the source of the transient component of the stimuli, the location to which cats orient when presented with similar stimuli (Dent et al., 2004) and at which humans report hearing the sustained component of the stimuli in the context of a discrimination task (Yost et al., 1997).

Consistent with our expectations, the monkeys oriented to the source of the transient stimuli in most trials, even though the transient stimuli expired after the first 50 ms and the sustained component continued for 500 ms in the opposite hemifield until the fixation LED was turned off, signaling the animal to respond. The results were more consistent when the transient component of the stimuli was presented from the source located to the left of the subject (Fig. 9A,B). Note that the subject kept his head stationary, aligned with the straight-ahead position during the fixation period, demonstrating that he was exposed to the entire stimulus without the introduction of dynamic cues caused by head movements.

The summary of the results comparing the errors in the localization of SS stimuli and the rate of occurrence of the FE is shown in Figure 9E–H; subject Glenn was not tested with broadband stimuli. Subject Shepard made the smallest errors in localizing SS stimuli in the broadband condition, as expected. For single-tone stimuli, he made larger errors, reaching nearly 10° in the 2 kHz condition (Fig. 9E). Subject Glenn's localization of SS tonal stimuli was consistent across all frequencies tested (Fig. 9G). It must be noted that the SS tonal stimuli were presented with an abrupt onset, which must have resulted in spectral splatter. Thus, in reality, the subjects were not required to localize true single-frequency stimuli.

Judging by the percent of trials in which subject Shepard oriented to the transient component of the stimuli in the broadband and 1 kHz single-tone conditions, he did not perceive the FE in most of those trials (i.e., in 80% of the trials he oriented to the sustained component of the stimuli). In contrast, in the 2, 4, 6, and 8 kHz conditions, he oriented to the transient component of the stimuli in 55–80% of the trials, indicating that he perceived the FE. As indicated above, subject Glenn was not tested with broadband stimuli, but his responses to FE stimuli were indicative of a strong perception of the FE. Unlike subject Shepard, who in the 1 kHz condition oriented to the transient component of the stimuli only 20% of the time, subject Glenn oriented to the source of the transient component of the stimuli >60% of the time. In the 2, 4, 6, and 8 kHz conditions, Glenn oriented to the source of the transient component in ∼80% of the trials, suggesting a strong perception of the FE in those conditions (Fig. 9H). These data constitute clear evidence that the two monkeys perceived the FE.

Discussion

The results show that rhesus monkeys oriented to the sources of broadband sounds very poorly with their heads restrained, but did so accurately and with much less variability when their heads moved freely. Control experiments using precedence effect stimuli in the range of summing localization showed that the monkeys oriented to phantom sources. This confirmed that they experience summing localization like humans (Blauert, 1983) and cats (Populin and Yin, 1998), and most importantly that they based their responses on localizing the sound sources, not on remembering spatial locations that resulted in rewards. Moreover, tests with FE stimuli show that monkeys perceived this auditory illusion. The importance of using ecologically valid behaviors for studies of sensory processes is demonstrated, and the potential of the rhesus monkey as a model for studies of spatial auditory function is established.

Orienting with the head restrained

Although no emphasis was placed on requiring accurate responses, all three subjects oriented accurately to visual targets in the first experimental session. To the contrary, the auditory condition presented insurmountable problems to our head-restrained subjects. The magnitude of the errors and the large variability of the responses show that all three monkeys were inaccurate and imprecise.

Comparison with previous studies

Only a few studies have used eye position as a measure of sound localization in the head-restrained monkey. It is impossible to compare our results with those of Grunewald et al. (1999) because they showed little behavioral data. Nevertheless, they reported that after extensive training, which included specific visual feedback, their monkeys could perform a localization task that included only two targets located at (±16°, 8°) with acceptance windows 32° in diameter. The sound localization behavior of our three monkeys was much superior, including that of our worst subject (Conrad), who was able to orient to four acoustic targets after twenty practice sessions. A comparison with Metzger et al.'s (2004) data is inappropriate because they did not take into account corrective saccades.

Jay and Sparks (1990), in contrast, provided a detailed account of their monkeys' sound localization accuracy. Thus, a comparison is possible. Unfortunately, they did not provide information about the variability of their data. The magnitude of the total errors made by Jay and Sparks' (1990) monkeys increased systematically with target eccentricity and at a similar rate in both acoustic and visual conditions. The angular errors in the present study were also larger in the acoustic condition, but there was no systematic change in magnitude as a function of eccentricity, with the exception of the targets near the cone of confusion.

Although several factors may have contributed to the differences in sound localization between Jay and Sparks (1990) and the present study, the most important was probably the training. First, it is not clear how much practice Jay and Sparks' (1990) subjects had at the time the data presented were acquired. Second, Jay and Sparks (1990) presented visual feedback at the location of acoustic targets if the subject failed to acquire them within 700 ms. Visual feedback specifically instructed monkeys on the magnitude and direction of the errors, or showed the location of the acoustic targets when the subjects did not respond. Feedback of that type and a potentially longer period of training could be responsible for the differences in sound localization accuracy between the two studies.

Orienting with the head unrestrained

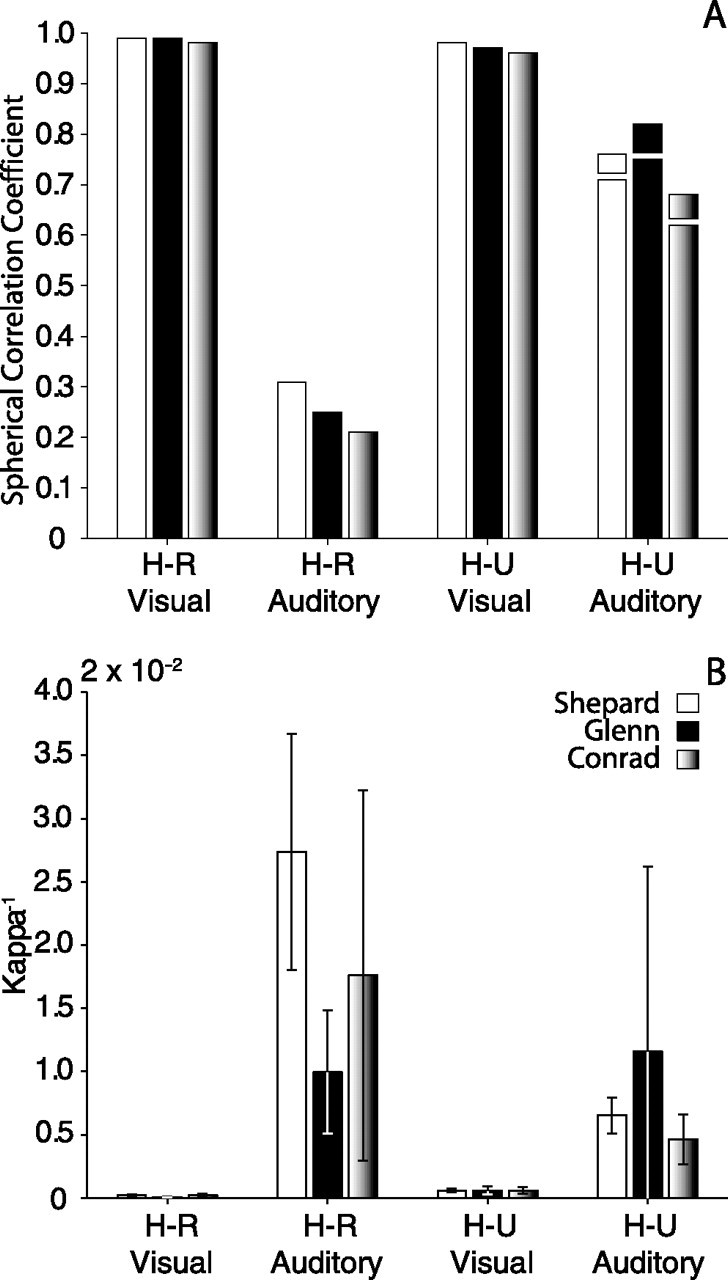

There was practically no change in orienting performance to visual targets as a result of releasing the subjects' heads (Fig. 10). The variability in final gaze position was slightly larger than in the head-restrained condition for Shepard and Glenn and comparable for Conrad. This decrease in precision cannot be attributed to lack of practice because the subjects arrived at the laboratory with a lifetime of experience in orienting to visual targets with the head unrestrained. Nor can it be attributed to changes in the characteristics of the targets because the experiments were done with the same parameters used in the head-restrained experiments. The most likely explanation for the decrease in precision was the movement of the head.

Figure 10.

A, Spherical correlation coefficients illustrating sound localization performance under head-restrained and head-unrestrained conditions in the context of the fixation task for each of the three subjects. The small blocks stacked on the data corresponding to the head-unrestrained condition (H-U Auditory) represent the SCC measured on the same targets used in the head-restrained condition (more targets were used in the head-unrestrained condition). B, Summary of κ−1, a measure of dispersion of spherical data. Error bars indicate SE.

In contrast to the visual condition, releasing the head affected orienting to acoustic targets. The changes documented in the first experimental sessions were the most salient, particularly from Conrad, who immediately started to orient to all ten acoustic targets used in the head-restrained condition. Overall sound localization improved in all three subjects (Fig. 10). Spherical correlation coefficients more than doubled in size and variability was reduced. Inspection of Shepard and Conrad's data in Figure 5 reveals that the horizontal component of the responses was consistent with the position of the targets, with errors arising primarily from upward misjudgments. Glenn's final gaze positions, however, did not show the distinct upward shift and exhibited a greater degree of variability near the cone of confusion. Although the monkeys were unrestrained in the primate chair, the marked improvement in sound localization documented after allowing the head to move raises the question of whether removing other restrictions, such as those imposed by the chair, would result in further improvements.

Comparison with previous studies

There are no studies, to our knowledge, in which monkeys have been allowed to orient to acoustic targets without restrictions on head movements. The most similar (Whittington et al., 1981) focused on eye-head coordination of gaze shifts to acoustic and visual targets on the horizontal plane. The head of the monkeys was attached to an apparatus that allowed horizontal movements only. The errors, measured as a function of initial eye position, were ∼5°, and relatively constant for a wide range of initial eye positions. However, it is not clear if the measurement represented the total error or the horizontal component. Thus, little data were presented on the sound localization behavior of those monkeys.

The only study in which gaze was used as a measure of sound localization was done in cats (Tollin et al., 2005). Their results were generally consistent with the present results, showing that sound localization accuracy improved significantly when the subjects were allowed to orient with their heads unrestrained. However, the variability of the cats' responses remained essentially unchanged.

Did the monkeys base their responses on localizing the sound sources or on remembered locations that led to rewards?

Learning in studies that rely on operant conditioning is always of concern because it could lead to erroneous interpretation of the data. Therefore, did the monkeys orient based on localizing the sound sources, or based on remembering locations that resulted in rewards?

We addressed this question by presenting acoustic stimuli known to evoke the illusion that sounds originate from phantom sources in humans (Blauert, 1983) and cats (Populin and Yin, 1998). A negative result would have been problematic because it was possible, although unlikely, that monkeys did not perceive summing localization.

Because all three monkeys oriented to locations where no acoustic stimuli were presented, and no double gaze shifts (to the left and right targets) were observed in summing localization trials, we conclude that the monkeys localized sound sources and did not orient to memorized locations. Additional evidence in support of this conclusion was found in the results of the FE experiments, which showed that monkeys also perceived this spatial auditory illusion.

Why do monkeys exhibit such differences in orienting behavior to acoustic targets between the head-restrained and head-unrestrained conditions?

Two alternative explanations are possible. The first concerns the incorrect execution of orienting movements to acoustic targets under head-restrained conditions. According to this explanation, the magnitude of any undershoot in final eye position would be equivalent to the contribution the head should have made to the gaze shift if it had been allowed to move. This explanation, suggested by Sparks (2005) and Tollin et al. (2005), is compelling but problematic for several reasons. (1) It implies that cats and monkeys would be able to take into account the restrained condition of the head when planning and executing gaze shifts to visual but not acoustic targets. This would be improbable if the position of acoustic targets is encoded in a spatial or body-centered frame of reference (Goossens and Van Opstal, 1999) because final eye position would not match the perceived location of the target. (2) Animals conditioned to orient to the sources of acoustic stimuli for a reward are unlikely to orient to different points in space if they perceive the location of the targets to be the same regardless of the restrained or unrestrained condition of the head. However, it has been shown that the neck muscles of animals required to orient with the head restrained continue to be activated (Guitton et al., 1980; Vidal et al., 1982), suggesting that head movements are planned regardless of the restraint.

The second alternative concerns the potential effects that restraining the head could have on sensorimotor integration. Specifically, if information about the position of the head (and the eyes) is taken into account for computing the location of sound sources (Goossens and Van Opstal, 1999), and such information is distorted because of the prolonged restraint of the head, the location of a target could be computed incorrectly. If this were the case, the animals in our study might have executed the correct responses to erroneously computed targets.
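The two explanations above can be contrasted in a toy one-dimensional calculation. This is only an illustrative sketch: the function names and all numerical values are hypothetical, and the arithmetic is a deliberate simplification of each account, not a model fitted to the data.

```python
def final_gaze_motor_account(target_deg, planned_head_share_deg):
    # Explanation 1: the target is perceived correctly, but with the head fixed
    # only the eye's share of the planned gaze shift is carried out, so the
    # undershoot equals the contribution the head would have made.
    return target_deg - planned_head_share_deg


def final_gaze_sensory_account(target_deg, head_signal_error_deg):
    # Explanation 2: the movement is executed correctly, but toward a target
    # whose location was computed with a distorted head-position signal.
    computed_target_deg = target_deg + head_signal_error_deg
    return computed_target_deg


target = 40.0  # hypothetical horizontal target position, in degrees
print(final_gaze_motor_account(target, planned_head_share_deg=15.0))    # 25.0
print(final_gaze_sensory_account(target, head_signal_error_deg=-15.0))  # 25.0
```

With these made-up values the error arises at the execution stage in the first account and at the target-computation stage in the second.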

Footnotes

This work was supported by National Science Foundation Grant IOB-0517458, National Institutes of Health Grant DC03693, and the Deafness Research Foundation. The contributions of J. Sekulski, E. Neuman, and S. Sholl for computer programming and M. Biesiadecki for technical help are acknowledged. I also thank N. Cobb and J. Hudson for insightful discussions on primate behavior; S. Carlile for the software package SPAK used for data analysis; E. Langendijk for an update for some of the SPAK functions provided by D. Kistler; M. Biesiadecki, J. Harting, and P. Smith for comments on a previous version of this manuscript; and Micheal Dent and Elizabeth McClaine for their contribution to the analysis of the Franssen effect data.

References

- Barton EJ, Sparks DL. Saccades to remembered targets exhibit enhanced orbital position effects in monkeys. Vision Res. 2001;41:2393–2406. doi: 10.1016/s0042-6989(01)00130-4.

- Blauert J. Cambridge, MA: MIT; 1983. Spatial hearing: the psychophysics of human sound localization.

- Carlile S, Leong P, Hyams S. The nature and distribution of errors in sound localization by human listeners. Hear Res. 1997;114:179–196. doi: 10.1016/s0378-5955(97)00161-5.

- Dent ML, Tollin DJ, Yin TC. Cats exhibit the Franssen effect illusion. J Acoust Soc Am. 2004;116:3070–3074. doi: 10.1121/1.1810136.

- Dent ML, Weinstein JM, Populin LC. New Orleans: Association for Research in Otolaryngology; 2005. Localization of stimuli that produce the Franssen Effect in mammals.

- Fisher NI, Lewis T, Embleton BJJ. Cambridge, UK: Cambridge UP; 1987. Statistical analysis of spherical data.

- Franssen NV. Stereophony. Philips technical lecture, Eindhoven, The Netherlands. 1962. English translation, 1964.

- Frens MA, Van Opstal AJ. Visual-auditory interactions modulate saccade-related activity in monkey superior colliculus. Brain Res Bull. 1998;46:211–224. doi: 10.1016/s0361-9230(98)00007-0.

- Goldman-Rakic PS. Circuitry of primate prefrontal cortex and regulation of behavior by representational memory. In: Plum F, editor. Handbook of physiology. The nervous system, Vol V, Higher functions of the brain. Bethesda, MD: The American Physiological Society; 1987. pp. 373–417.

- Goossens HH, Van Opstal AJ. Influence of head position on the spatial representation of acoustic targets. J Neurophysiol. 1999;81:2720–2736. doi: 10.1152/jn.1999.81.6.2720.

- Guitton D, Crommelinck M, Roucoux A. Stimulation of the superior colliculus of the alert cat. I. Eye movements and neck EMG activity evoked when the head is restrained. Exp Brain Res. 1980;39:63–73. doi: 10.1007/BF00237070.

- Grunewald A, Linden JF, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area. I. Effects of training. J Neurophysiol. 1999;82:330–342. doi: 10.1152/jn.1999.82.1.330.

- Hartmann WM, Rakerd B. Localization of sound in rooms IV: the Franssen effect. J Acoust Soc Am. 1989;86:1366–1373. doi: 10.1121/1.398696.

- Hunter WS. The delayed reaction in animals and children. Behav Monogr. 1913;2:1–86.

- Jay MF, Sparks DL. Localization of auditory and visual targets for the initiation of saccadic eye movements. In: Berkley MA, Stebbins WC, editors. Comparative perception. Basic mechanisms. New York: Wiley; 1990. pp. 351–374.

- Judge SJ, Richmond BJ, Shu FC. Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res. 1980;20:535–537. doi: 10.1016/0042-6989(80)90128-5.

- Linden JF, Grunewald A, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area. II. Behavioral modulation. J Neurophysiol. 1999;82:343–358. doi: 10.1152/jn.1999.82.1.343.

- Metzger RR, Mullette-Gillman OA, Underhill AM, Cohen YE, Groh JM. Auditory saccades from different eye positions in the monkey: implications for coordinate transformations. J Neurophysiol. 2004;92:2622–2627. doi: 10.1152/jn.00326.2004.

- Populin LC. Sound localization in monkey with head unrestrained. Soc Neurosci Abstr. 2003;29:488–7.

- Populin LC, Yin TC. Behavioral studies of sound localization in the cat. J Neurosci. 1998;18:2147–2160. doi: 10.1523/JNEUROSCI.18-06-02147.1998.

- Populin LC, Tollin DJ, Weinstein JM. Human orienting responses: head and eye movements to acoustic and visual targets. Soc Neurosci Abstr. 2001;27:60–15.

- Robinson DA. A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans Biomed Eng. 1963;10:137–145. doi: 10.1109/tbmel.1963.4322822.

- Sparks DL. An argument for using ethologically “natural” behaviors as estimates of unobservable sensory processes. Focus on “sound localization performance in the cat: the effect of restraining the head”. J Neurophysiol. 2005;93:1136–1137. doi: 10.1152/jn.01109.2004.

- Tollin DJ, Populin LC, Moore JM, Ruhland JL, Yin TC. Sound-localization performance in the cat: the effect of restraining the head. J Neurophysiol. 2005;93:1223–1234. doi: 10.1152/jn.00747.2004.

- Vidal PP, Roucoux A, Berthoz A. Horizontal eye position-related activity in neck muscles of the alert cat. Exp Brain Res. 1982;46:448–453. doi: 10.1007/BF00238639.

- Waser PM. Sound localization by monkeys: a field experiment. Behav Ecol Sociobiol. 1977;2:427–431.

- Whittington DA, Hepp-Reymond MC, Flood W. Eye and head movements to auditory targets. Exp Brain Res. 1981;41:358–363. doi: 10.1007/BF00238893.

- Wightman FL, Kistler DJ. Headphone simulation of free-field listening. II. Psychophysical validation. J Acoust Soc Am. 1989;85:868–878. doi: 10.1121/1.397558.

- Yost WA, Mapes-Riordan D, Guzman SJ. The relationship between localization and the Franssen Effect. J Acoust Soc Am. 1997;101:2994–2997. doi: 10.1121/1.418528.

- Young ED, Rice JJ, Tong SC. Effects of the pinna position on head-related transfer functions in the cat. J Acoust Soc Am. 1996;99:3064–3076. doi: 10.1121/1.414883.