Abstract

In addition to the goal-directed preplanned control, which strongly governs reaching movements, another type of control mechanism is suggested by recent findings that arm movements are rapidly entrained by surrounding visual motion. It remains, however, controversial whether this rapid manual response is generated in a goal-oriented manner similarly to preplanned control or is reflexively and directly induced by visual motion. To investigate the sensorimotor process underlying rapid manual responses induced by large-field visual motion, we examined the effects of contrast and spatiotemporal frequency of the visual-motion stimulus. The manual response amplitude increased steeply with image contrast up to 10% and leveled off thereafter. Regardless of the spatial frequency, the response amplitude increased almost proportionally to the logarithm of stimulus speed until the temporal frequency reached 15–20 Hz and then fell off. The maximum response was obtained at the lowest spatial frequency we examined (0.05 cycles/°). These stimulus specificities are surprisingly similar to those of the reflexive ocular-following response induced by visual motion, although there is no direct motor entrainment from the ocular to manual responses. In addition, the spatiotemporal tuning is clearly different from that of perceptual effects caused by visual motion. These comparisons suggest that the rapid manual response is generated by a reflexive sensorimotor mechanism. This mechanism shares a distinctive visual-motion processing stage with the reflexive control for other motor systems yet is distinct from visual-motion perception.

Keywords: involuntary visuomotor response, reflexive manual response, large-field visual motion, reaching arm movement, contrast tuning, ocular-following response

Introduction

Visual information plays a significant role in generating goal-oriented responses during motor planning (Wolpert and Ghahramani, 2000) and also during ongoing arm movements. Rapid arm adjustment is observed when the physical or apparent position of the reaching target is suddenly shifted (Georgopoulos et al., 1981; Goodale et al., 1986; Prablanc and Martin, 1992; Brenner and Smeets, 1997; Desmurget et al., 1999; Day and Lyon, 2000; Rossetti et al., 2003; Whitney et al., 2003). In addition to such goal-oriented responses, a recent study (Saijo et al., 2005) reported a quick manual response that follows a sudden background visual motion. This occurred without explicit presentation of a reaching target. This manual-following response (MFR) would aid in producing quick arm adjustment when the body is unexpectedly moved in relation to the reaching environment. In this respect, the MFR is apparently similar to the ocular-following response (OFR), which is also rapidly and reflexively induced by the background motion (Kawano and Miles, 1986; Miles et al., 1986; Shidara and Kawano, 1993; Kawano et al., 1994). Based on this behavioral similarity between the MFR and OFR, we propose a hypothesis that these different visuomotor responses may share some common neural process through which visual-motion signals directly drive motor responses. This hypothesis can be contrasted with an alternative hypothesis that the MFR is generated in a goal-oriented manner similarly to planned voluntary manual responses. According to the alternative hypothesis, the background motion causes a perceptual mislocalization of the reaching target, which in turn produces an arm adjustment.

One of the key issues in comparing these hypotheses is to reveal the sensorimotor processing of the quick manual response induced by a surrounding visual motion. The present study focuses on a novel aspect of the on-line manual control: the effects of image contrast and spatiotemporal frequency. These tuning specificities would be influenced by the characteristics of visual information processing, whose tuning functions have been extensively investigated in many visual areas (Derrington and Lennie, 1984; Foster et al., 1985; Sclar et al., 1990; Merigan and Maunsell, 1993; Perrone and Thiele, 2001; Priebe et al., 2003). Additionally, it is well known how changes in image contrast and spatiotemporal frequency affect voluntary and involuntary eye movements (Miles et al., 1986; Gellman et al., 1990; Kawano et al., 1994; Priebe and Lisberger, 2004), as well as various sorts of perceptual responses (Kelly, 1979; Burr and Ross, 1982; Levi and Schor, 1984; De Valois and De Valois, 1991).

Therefore, to investigate the sensorimotor mechanisms underlying the quick manual response induced by a large-field visual motion, we measured how this response is affected by stimulus contrast and spatiotemporal frequency. We compared the tunings with those of other visual-motion-related phenomena. Our results show that the stimulus tunings of the MFR are surprisingly similar to those of the reflexive OFR. This finding not only indicates that the MFR is reflexively induced by a large-field visual motion, as is the OFR, but also suggests that it shares a distinctive visual-motion processing stage with the reflexive control for other motor systems.

Materials and Methods

Experimental setup.

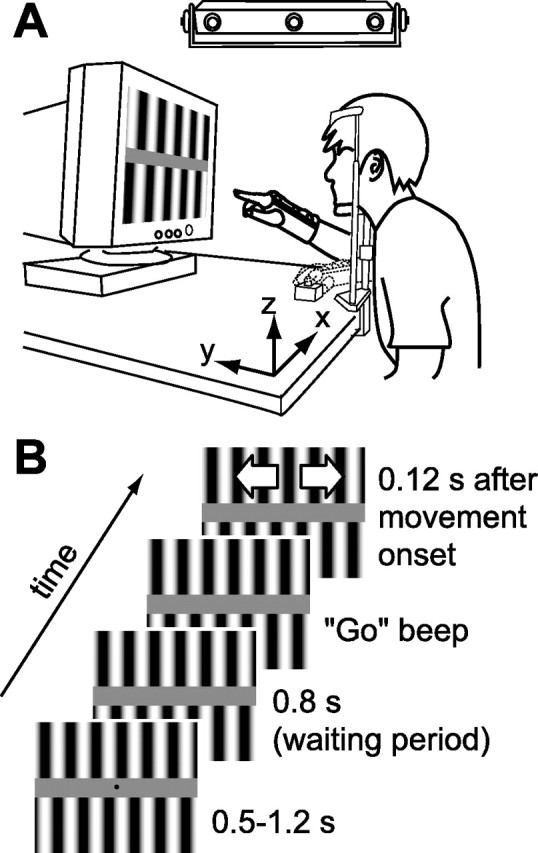

Each subject sat in front of a desk (height of 78–81 cm, fitted comfortably for each subject) in a darkened room with the head stabilized on a chin support as shown in Figure 1A. A computer monitor (22 inch, CV921PJ; Totoku Electric, Tokyo, Japan) was placed in front of the subject’s face (45 cm from the center of the eyes) as shown in Figure 1. To detect the actual time of visual stimulus onset, a photodiode (S1223-1; Hamamatsu Photonics, Shizuoka, Japan) was attached on the top left corner of the monitor, which is not drawn in this figure. The axes of coordinates were taken as shown in Figure 1 in which x is the horizontal direction parallel to the display, y is the direction perpendicular to the display, and z is the vertical direction. A button switch connected to the printer port of the computer was placed on the table [(x, y, z) of (0, 7, −30) cm from the eye position] and was used to detect the arm movement onset for driving the visual stimulus. The right-hand position (monitored by a marker placed on the back of the hand around the bottom of the ring finger) was obtained with an optical position sensor (OPTOTRAK3020; Northern Digital, Waterloo, Ontario, Canada) at 250 Hz, and his/her wrist motion was prevented by a simple cast.

Figure 1.

Experimental setup. A, Configuration of the experimental apparatus. The subject was instructed to move the hand from the button switch to the center of the display in response to a series of auditory signals. Experiments were performed in a darkened room. B, Time course of the visual stimulus. For details, see Materials and Methods.

In two arm-reaching experiments (using “contrast” and “spatiotemporal frequency” stimuli; see below, Visual stimuli), at the beginning of each trial, the subject’s hand was placed at the start position, pressing against a button with the index finger. After a random interval (0.5–1.2 s), four beeps were presented at intervals of 0.4 s. After these instructional beeps, the subject had to move the hand to the target position on the screen. He/she was requested to release the button to initiate the hand movement at the third beep and reach the target position in 0.4 s (i.e., at the fourth beep). The target was placed at the center of the screen, which was fixed throughout the experiment to compare the arm movements toward the same target position obtained under different visual stimulus conditions. The target position was indicated by a small red marker (0.38°). To avoid direct influence of the background image motion on the perceived target position (Brenner and Smeets, 1997; Whitney and Cavanagh, 2000; Whitney et al., 2003), the position marker was eliminated 0.8 s before the third “go” beep.

In the “motion perception” experiment, each subject sat in front of the computer monitor with the head stabilized on a chin support as in the above experiment and judged the visual-motion direction of each visual stimulus (see below, Visual stimuli) by pressing one of two keys.

Six subjects (five naive, of whom four male, and one author, all right handed, all aged 23–30 years) participated in the contrast experiment, and five of them participated in the spatiotemporal frequency experiment. Five subjects (two from the contrast experiment, and three new subjects whose age and sex were matched with those of the subjects in the contrast experiment) participated in the motion perception experiment. None of the subjects had ever experienced any visual or motor deficits, and all gave informed consent to participate in the study, which was approved by the NTT Communication Science Laboratories Research Ethics Committee.

Visual stimuli.

All visual stimuli were presented on the cathode ray tube monitor with a vertical refresh rate of 100 Hz. For controlling the stimuli in the arm-reaching experiments, we used Matlab (MathWorks, Natick, MA) and Cogent Graphics (University College London, London, UK) software on the Microsoft (Seattle, WA) Windows Operating System. The visual angle of the monitor display was 46.5° × 36.1°. A pair of vertical sinusoidal grating patterns (antiphase) of a given contrast was shown on the top and bottom sides of the monitor, which were separated by a constant gray zone (vertical width, 5.2°; 15.9 cd/m²) as shown in Figure 1. Note that the small region of the top left corner of the monitor was occluded by a black cover of the photodiode. Both of these grating patterns started to move either rightward or leftward (randomized) 120 ms after arm movement began. To reduce the total number of trials, we did not introduce any trials without visual motion in this experimental set. We confirmed in a pilot experiment that manual responses were similarly produced whether or not no-visual-motion trials were randomly introduced.

In the contrast experiment, the grating contrast was fixed in a particular set consisting of 90 trials (45 trials for each side motion). In the series of experimental sets, the contrast was increased from 2, 4, 7, 10, 40, to 70% for three subjects and was decreased in the reverse order for the other three subjects. Here, contrast is defined as (Lmax − Lmin)/(Lmax + Lmin), where Lmax and Lmin are maximum and minimum luminance values in the grating pattern, respectively. The spatiotemporal frequency for different contrast stimuli was fixed at 8.37 Hz, 0.087 cycles per degree (c/°). An intermission of 2–15 min was taken between the successive experimental sets.
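The contrast definition above can be written out as a small helper. This is only an illustrative sketch: the function names are hypothetical, and the mean luminance passed in the usage example (15.9 cd/m², the value reported for the gray zone) is an assumption, since the text does not state the mean luminance of the gratings themselves.

```python
def michelson_contrast(l_max, l_min):
    """Contrast = (Lmax - Lmin) / (Lmax + Lmin), as defined in the text."""
    if l_max < l_min:
        raise ValueError("l_max must not be smaller than l_min")
    if l_max + l_min <= 0:
        raise ValueError("luminance values must sum to a positive number")
    return (l_max - l_min) / (l_max + l_min)


def grating_extremes(mean_luminance, contrast):
    """Return (Lmax, Lmin) of a sinusoidal grating with the given mean and contrast.

    Hypothetical helper: assumes the grating is symmetric about its mean luminance.
    """
    return mean_luminance * (1.0 + contrast), mean_luminance * (1.0 - contrast)


if __name__ == "__main__":
    # Contrast levels of the contrast experiment (2, 4, 7, 10, 40, 70%).
    for percent in (2, 4, 7, 10, 40, 70):
        l_max, l_min = grating_extremes(15.9, percent / 100.0)  # assumed mean luminance
        print(percent, round(l_max, 2), round(l_min, 2),
              round(michelson_contrast(l_max, l_min), 4))
```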

In the second, spatiotemporal frequency experiment, we changed the spatiotemporal frequency of the grating stimulus in the range of 0.4–25 Hz and 0.05–1.26 c/° with the contrast fixed at 50% (32 conditions, mainly along the several constant temporal or spatial frequency axes; for data points, see Fig. 6B). The relationship between temporal frequency, Ft, the spatial frequency of the grating pattern, Fs, and the velocity of image motion, V, is defined as Ft = Fs × V. The spatial and temporal frequencies were fixed in each experimental set (90 trials per set, 45 trials for the motion of each side in random order). This block design for stimuli is for avoiding small changes in movement trajectories across the multiple sets, which could introduce biases in extracting the MFR components. The order of the change in temporal frequency for a particular spatial frequency was counterbalanced between subjects. There was again an intermission between successive sets.
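The relationship Ft = Fs × V can be checked numerically. The sketch below (function names are hypothetical) converts between temporal frequency, spatial frequency, and image velocity; for the contrast-experiment stimulus (8.37 Hz, 0.087 c/°) it gives approximately 96.2 °/s, the velocity quoted for that stimulus in Results.

```python
def stimulus_velocity(temporal_freq_hz, spatial_freq_cpd):
    """Image velocity V (deg/s) from Ft (Hz) and Fs (cycles/deg), using Ft = Fs * V."""
    if spatial_freq_cpd <= 0:
        raise ValueError("spatial frequency must be positive")
    return temporal_freq_hz / spatial_freq_cpd


def temporal_frequency(spatial_freq_cpd, velocity_deg_s):
    """Temporal frequency Ft (Hz) from Fs (cycles/deg) and V (deg/s)."""
    return spatial_freq_cpd * velocity_deg_s


if __name__ == "__main__":
    # Contrast-experiment stimulus: 8.37 Hz at 0.087 c/deg.
    print(round(stimulus_velocity(8.37, 0.087), 1))
```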

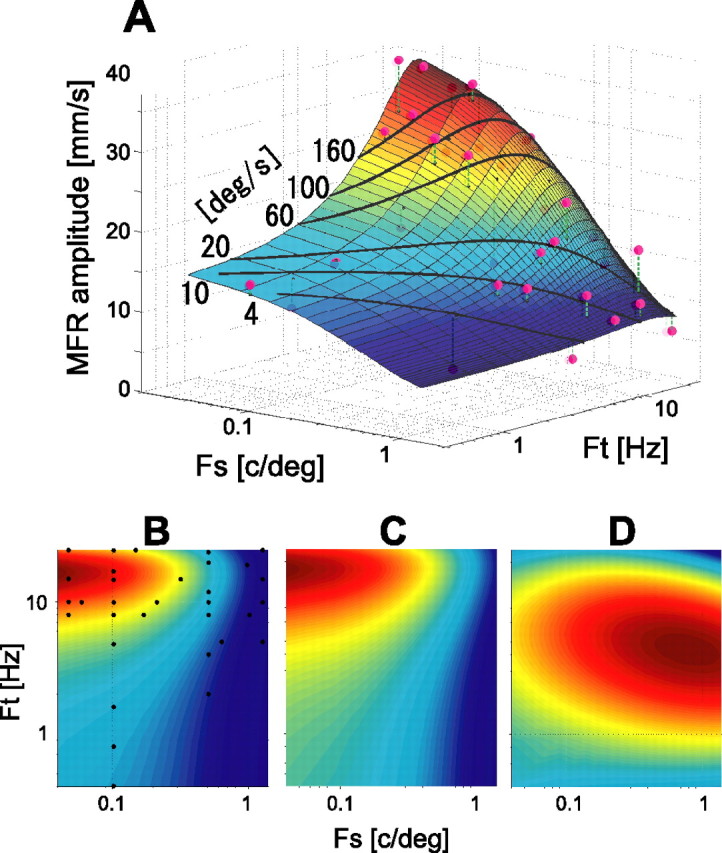

Figure 6.

Spatiotemporal tuning surfaces of MFR, OFR, and motion perception sensitivity. A, A two-dimensional Gaussian surface fitted to the MFR amplitudes for different spatiotemporal frequencies. Fs, Spatial frequency; Ft, temporal frequency. The color indicates the height (MFR amplitude) of the surface normalized by maximum and minimum values in the overall data: dark red, highest; dark blue, lowest. Magenta circles are the data points obtained in the experiment. The VAF was 0.84. The black curves superimposed on the fitted surface represent the data points for the constant stimulus velocities of 4, 10, 20, 60, 100, and 160 °/s. B, Top view of the fitted surface (color contour plot). MFR amplitude along any constant spatial frequency (ordinate) increases as spatial frequency decreases. MFR amplitude increases with temporal frequency until ∼15–20 Hz but decreases thereafter. This trend was also observed in the experimental data in Figure 4C. Small black dots denote the data point on the surface corresponding to the experimental data. C, Color contour plot of the tuning surface of the OFR amplitudes, fitted to the data of Miles et al. (1986). The VAF value was 0.91. D, Color contour plot of the tuning surface of the perceptual contrast sensitivity of visual motion. The VAF value was 0.99. For description of the basis functions fitted to the data, see Materials and Methods. The peak of the sensitivity-tuning surface is clearly different from those of the MFR (B) and OFR (C). In B–D, color indicates the height of the fitted surface normalized by maximum and minimum values in each region: dark red, highest; dark blue, lowest.

In the motion perception experiment, contrast sensitivity of motion direction discrimination was measured at the same spatial and temporal frequencies as those used in the above second experiment. The order of measurement for each spatiotemporal frequency was randomly determined for each subject. In each block, the image contrast of a given spatiotemporal frequency was changed from trial to trial according to a Bayesian adaptive psychometric procedure, QUEST (Watson and Pelli, 1983), implemented in Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). In each trial, a grating motion linearly ramped on for 250 ms, held the specified contrast for 500 ms, and then linearly ramped off for 250 ms. The subject fixated on a marker continuously presented at the center of the display and made a forced-choice judgment about whether the perceived motion direction was leftward or rightward. The temporal profile was designed to avoid presenting a broad range of temporal frequency at stimulus onset/offset. In a supplementary experiment (three subjects), we also tested the temporal profile similar to that used for the arm-reaching experiments (sudden-motion onset) and found no significant change of the spatiotemporal perceptual sensitivity function. The visual stimulus was presented through a 14-bit luminance-resolution graphics system (VSG2/5; Cambridge Research Systems, Rochester, UK).
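The temporal contrast profile described above (250 ms linear ramp on, 500 ms hold, 250 ms linear ramp off) can be sketched as a piecewise-linear envelope. The function name and its parameters are hypothetical, and the sketch says nothing about how the stimulus software actually generated the ramp.

```python
def contrast_envelope(t_ms, peak_contrast, ramp_ms=250.0, hold_ms=500.0):
    """Contrast at time t_ms (ms after stimulus start) for a ramp-hold-ramp profile."""
    total_ms = 2.0 * ramp_ms + hold_ms
    if t_ms < 0.0 or t_ms > total_ms:
        return 0.0
    if t_ms < ramp_ms:                      # linear ramp on
        return peak_contrast * t_ms / ramp_ms
    if t_ms <= ramp_ms + hold_ms:           # hold at the specified contrast
        return peak_contrast
    return peak_contrast * (total_ms - t_ms) / ramp_ms  # linear ramp off


if __name__ == "__main__":
    # Sample the envelope once per frame at the 100 Hz refresh rate (10 ms steps).
    frames = [contrast_envelope(10.0 * i, 0.5) for i in range(101)]
    print(frames[0], frames[25], frames[50], frames[100])
```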

Data analysis.

Hand-position data were filtered (fourth-order Butterworth low-pass filter with the cutoff 30 Hz), and the velocity and acceleration patterns were obtained by three- and five-point numerical time differentiations (without delay) of the filtered position data, respectively. To exclude the outliers, we chose 90% (36–40) of successful trials in each stimulus condition (leftward or rightward grating motion) by means of the root mean square of the trajectory difference from the median trajectory. A few reaching trials in several experimental sets (one trial in 7 of 36 sets for the contrast experiment; one to five trials in 17 of 160 sets for the spatiotemporal experiment) failed because of occlusion of the hand marker. The ensemble mean of the selected trajectories in each stimulus condition was calculated. The amplitude of arm response caused by the grating motion was quantified by the difference between the rightward and leftward moving conditions in the mean hand velocity averaged over a period of 150–230 ms after the visual-motion stimulus onset.
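The trial selection and amplitude measure described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the analysis code used in the study: all function names are hypothetical, the Butterworth filtering step and the five-point differentiation for acceleration are omitted, the kept fraction is rounded down, and the amplitude is taken as rightward minus leftward.

```python
from statistics import median


def central_difference(values, dt):
    """Three-point central difference; one-sided differences at the two ends."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    out = [0.0] * n
    out[0] = (values[1] - values[0]) / dt
    out[-1] = (values[-1] - values[-2]) / dt
    for i in range(1, n - 1):
        out[i] = (values[i + 1] - values[i - 1]) / (2.0 * dt)
    return out


def select_trials(trials, keep_fraction=0.9):
    """Keep the trials whose RMS distance from the pointwise median is smallest."""
    median_traj = [median(samples) for samples in zip(*trials)]

    def rms(trial):
        squared = sum((x - m) ** 2 for x, m in zip(trial, median_traj))
        return (squared / len(trial)) ** 0.5

    n_keep = int(keep_fraction * len(trials) + 1e-9)  # assumption: round down
    return sorted(trials, key=rms)[:n_keep]


def ensemble_mean(trials):
    """Sample-by-sample mean across trials of equal length."""
    return [sum(samples) / len(samples) for samples in zip(*trials)]


def mfr_amplitude(times_ms, vel_right, vel_left, window_ms=(150.0, 230.0)):
    """Mean rightward-minus-leftward velocity over the window after stimulus onset."""
    diffs = [r - l for t, r, l in zip(times_ms, vel_right, vel_left)
             if window_ms[0] <= t <= window_ms[1]]
    if not diffs:
        raise ValueError("no samples fall inside the analysis window")
    return sum(diffs) / len(diffs)
```

With 45 recorded trials per direction, `select_trials` keeps 40 trials, and with 40 successful trials it keeps 36, which matches the 36–40 range stated above.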

The response onset was defined by the time from which the acceleration difference continuously exceeded a threshold value of 100 mm/s² for at least 40 ms in a window between 80 and 200 ms after the stimulus onset. The threshold value approximately corresponded to 2 SDs of the acceleration during a period of 0–50 ms after the stimulus onset, during which the muscle response to the visual stimulus was not observed (Saijo et al., 2005). In a few cases, a reliable latency could not be estimated by this method (2 of 36 datasets, six conditions for six subjects) because of intertrial fluctuation and the low signal-to-noise ratio. The data for these exceptional cases were excluded from the analysis.
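The onset criterion can be illustrated with a short sketch. Several details are assumptions rather than statements from the text: the function name is hypothetical, the signed (rightward minus leftward) acceleration difference is compared with the threshold, the run of supra-threshold samples must begin inside the 80–200 ms window, and a run that begins before the window is ignored.

```python
def detect_onset_ms(times_ms, acc_diff, threshold=100.0,
                    min_duration_ms=40.0, window_ms=(80.0, 200.0)):
    """Return the start time of the first supra-threshold run lasting at least
    min_duration_ms and beginning inside window_ms, or None if there is none."""
    run_start = None
    for t, a in zip(times_ms, acc_diff):
        if a > threshold:
            if run_start is None:
                run_start = t  # first sample of a new supra-threshold run
            long_enough = t - run_start >= min_duration_ms
            in_window = window_ms[0] <= run_start <= window_ms[1]
            if long_enough and in_window:
                return run_start
        else:
            run_start = None  # run broken; start over
    return None


if __name__ == "__main__":
    # Synthetic example sampled at 250 Hz (4 ms steps); values are made up.
    times = [4.0 * i for i in range(100)]
    acc = [150.0 if t >= 124.0 else 0.0 for t in times]
    print(detect_onset_ms(times, acc))
```

Returning `None` corresponds to the cases in which no reliable latency could be estimated.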

To capture the global spatiotemporal tuning of the MFR amplitude, we fitted the data by the following Gaussian function:

$$A_{MFR} = a_0 + a_1 \exp\left(-\left(a_2^2 (F_s - a_5)^2 + 2 a_2 a_3 a_4 (F_s - a_5)(F_t - a_6) + a_3^2 (F_t - a_6)^2\right)\right),$$

where $A_{MFR}$ denotes MFR amplitude, and $F_s$ and $F_t$ denote the spatial and temporal frequencies, respectively. The $a_i$ (i = 0–6) denote fitting coefficients, which were estimated by minimizing the prediction error using the nonlinear fitting function of Matlab. Note that initial values of $a_5$ and $a_6$ in the nonlinear estimation were set to the spatial and temporal frequencies at which maximum MFR amplitude was observed. The initial values of the other parameters were set to 0. We also used this fitting function to describe the variation of OFR amplitude with spatiotemporal frequency. We also tested another fitting function, a second-order polynomial (n = 6 model parameters), to describe the response variation. Although the fitting performance with this function [variance accounted for (VAF) value of 0.85] was close to that obtained with the Gaussian function (VAF value of 0.84), we used the latter to avoid overfitting caused by the sparse sampling of the experimental data and to keep the fitting method consistent for the MFR and OFR.
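For readers who want to evaluate the Gaussian surface, the sketch below implements the function with the seven coefficients a0–a6 and a goodness-of-fit measure. The VAF formula used here (1 minus residual sum of squares over total sum of squares) is an assumption, because the text does not define VAF explicitly, and the coefficient values in the usage example are invented for illustration only (apart from placing the peak at 0.04 c/° and 16.9 Hz, the values reported in Results). The nonlinear estimation step itself is not reproduced.

```python
import math


def gaussian_surface(fs, ft, a):
    """A_MFR = a0 + a1 * exp(-(a2^2 ds^2 + 2 a2 a3 a4 ds dt + a3^2 dt^2)),
    with ds = Fs - a5 and dt = Ft - a6."""
    a0, a1, a2, a3, a4, a5, a6 = a
    ds = fs - a5
    dt = ft - a6
    q = (a2 * ds) ** 2 + 2.0 * a2 * a3 * a4 * ds * dt + (a3 * dt) ** 2
    return a0 + a1 * math.exp(-q)


def variance_accounted_for(observed, predicted):
    """Assumed definition: VAF = 1 - SS_residual / SS_total."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Illustrative coefficients only; not the fitted values from the study.
    coeffs = (1.0, 30.0, 2.0, 0.1, 0.0, 0.04, 16.9)
    for fs, ft in ((0.04, 16.9), (0.5, 16.9), (0.04, 4.0)):
        print(fs, ft, round(gaussian_surface(fs, ft, coeffs), 2))
```

With a4 = 0 the cross term vanishes, so the surface is the product of a function of Fs and a function of Ft, which is the space–time separable case.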

The above Gaussian function did not fit the perceptual contrast sensitivity data obtained in the motion perception experiment sufficiently well. For these data, we therefore used the following Gaussian plus first-order polynomial function in the log-spatiotemporal frequency domain:

$$A_{CS} = b_0 + b_1 \log F_s + b_2 \log F_t + b_3 \exp\left(-\left(b_4^2 (\log F_s - b_7)^2 + 2 b_4 b_5 b_6 (\log F_s - b_7)(\log F_t - b_8) + b_5^2 (\log F_t - b_8)^2\right)\right),$$

where $A_{CS}$ denotes log contrast sensitivity, and $F_s$ and $F_t$ denote the spatial and temporal frequencies, respectively. The $b_i$ (i = 0–8) are fitting parameters, which were estimated as in the above fitting. Note that initial values of $b_7$ and $b_8$ in the nonlinear estimation were set to the spatial and temporal frequencies at which maximum motion contrast sensitivity was obtained. The initial values of the other parameters were set to 0.

Results

MFRs elicited by grating motion

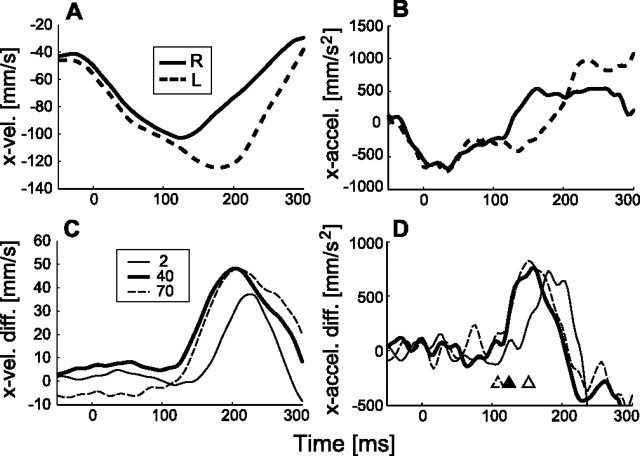

When the visual image on a computer display is suddenly moved during arm movement without a target marker being simultaneously shown, the arm accelerates in the direction of the visual motion with a short latency. To investigate the mechanism producing this MFR, we here focus on how the response characteristics change when the contrast and spatiotemporal frequency of a sinusoidal grating image (see Materials and Methods) are changed. Figure 2, A and B, show examples of the mean velocity and acceleration patterns of hand movements in the x-direction for the rightward (solid curve) and leftward (dashed curve) visual stimuli (40% contrast, 96.2 °/s, 0.087 c/°, 8.37 Hz). These temporal patterns started to deviate in the corresponding visual stimulus directions ∼120 ms after the onset of visual motion.

Figure 2.

Temporal patterns of manual response induced by visual motion. A, Mean hand velocity patterns (n = 40) in x-direction in the rightward (R; solid curve) and leftward (L; dashed curve) visual motion (40% contrast, 0.087 c/°, 8.37 Hz). B, Corresponding acceleration patterns. C, Differences between x-directional hand velocity patterns for the rightward and leftward stimuli with image contrast of 2% (thin solid curve), 40% (thick solid curve), and 70% (dashed curve) (0.087 c/°, 8.37 Hz). D, These acceleration patterns. The open, filled, and open-dashed triangles indicate the latencies (152, 124, and 108 ms) detected from MFR acceleration responses in 2, 40, and 70% contrast conditions.

To compare the temporal profiles of manual responses to visual-motion stimuli with different contrasts, we took the differences between the leftward and rightward visual-motion conditions in x-velocity and x-acceleration patterns, respectively. Figure 2, C and D, show the temporal patterns for three contrast levels: 2, 40, and 70%. As shown in C, the peak of the response pattern in the x-velocity difference for 2% contrast was smaller and slower than those at 40 and 70%, whereas those at 40 and 70% were not much different. The response latency detected by the threshold of the acceleration difference (Fig. 2D, triangles) was shorter for the higher-contrast conditions (40 and 70%, indicated by the filled and open-dashed triangles, respectively) than for the low-contrast condition (2%, indicated by the open triangle).

Contrast modulation of the MFR

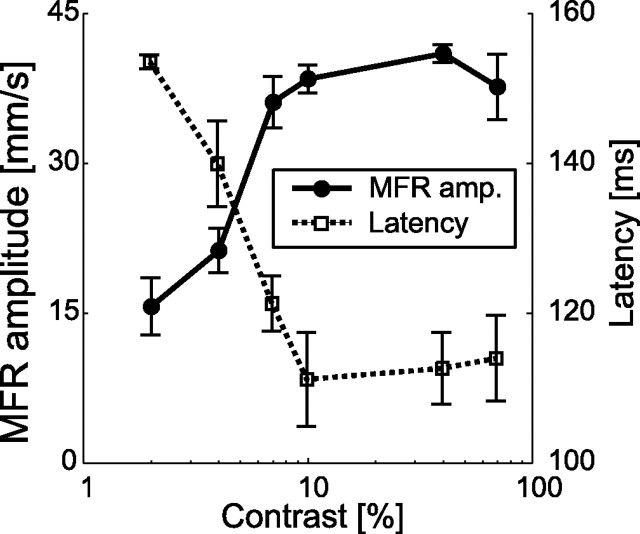

To obtain the contrast tuning of the MFR, we took the temporal mean of the x-velocity difference in each stimulus condition (see Materials and Methods). Figure 3 shows the variation in response amplitude and latency with changes in image contrast. The mean response amplitude increased steeply as the image contrast increased from 2 to 10% and then saturated in the range of 10–70%. In addition, the latency decreased as the image contrast increased in the range of 2–10% and then leveled off when the contrast increased beyond 10%. The response amplitude for 2% contrast was significantly different (p < 0.05, paired t test) from those for 4–70% contrasts, and the latency for 2% contrast was significantly different (p < 0.05, paired t test) from those for 7–70% contrasts.

Figure 3.

MFR amplitude and latency modulation by changing image contrast. Solid and dotted lines show the mean amplitudes and latencies of the MFR, respectively. Amplitude error bars denote the SE for all subjects (n = 6). Each error bar for the mean latency denotes the SE for subjects whose MFR latency was reliably detected (n = 5 for 2 and 10% contrast conditions; n = 6 for other contrast conditions). Spatial and temporal frequencies of the stimulus were 0.087 c/° and 8.37 Hz.

Velocity-dependent modulation of the MFR

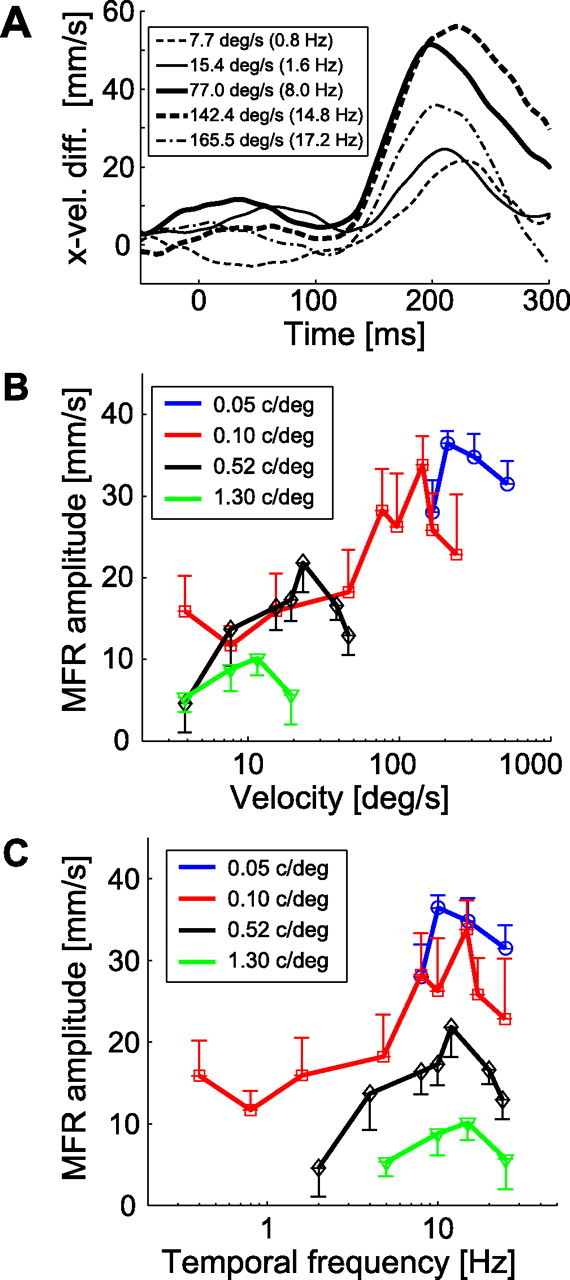

The stimulus velocity also affected the response amplitude. Figure 4A depicts the temporal patterns of x-velocity difference for a grating image with a given spatial frequency (0.10 c/°) drifting at various velocities. The response amplitude increased as the velocity increased up to 142.4 °/s but then decreased at the highest stimulus velocity (165.5 °/s). When the image spatial frequency was changed, the stimulus velocity at which the MFR amplitude became the maximum varied, being low for higher spatial frequency stimuli and high for lower spatial frequency stimuli (Fig. 4B).

Figure 4.

Variations of MFR response with changing stimulus image spatiotemporal frequencies. A, Temporal patterns of hand x-velocity of a typical subject for several stimulus velocities. The stimulus velocity and corresponding temporal frequency for each curve are shown in the legend box. Image spatial frequency was 0.10 c/° for all conditions. B, Velocity tuning functions of MFR for four constant spatial frequencies, 0.05, 0.10, 0.52, and 1.30 c/°, averaged over subjects (n = 5). C, Temporal frequency tuning functions of MFR for those stimuli. In B and C, each colored line represents a single spatial frequency condition. Error bars show the SE across subjects (n = 5).

However, when these tuning functions are replotted as a function of temporal frequency, these maxima are almost aligned (Fig. 4C). The MFR amplitude increased markedly with temporal frequency below 10 Hz independent of the image spatial frequency and then decreased in the high temporal frequency range beyond 20 Hz. This indicates that the stimulus temporal frequency is a key factor defining the limit of MFR amplitude for any image spatial frequency.

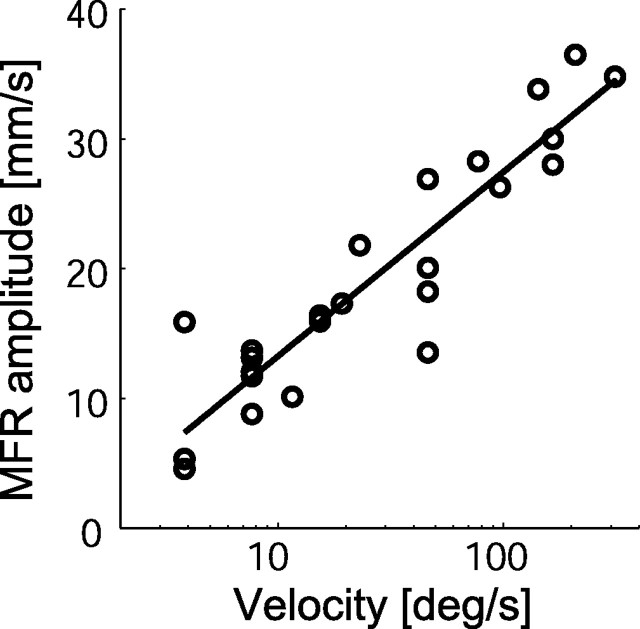

Because the MFR amplitude increases with stimulus velocity under the temporal frequency limit, we applied a linear regression to describe the relationship between the logarithm of stimulus velocity and MFR amplitude. This analysis was restricted to the data obtained for the stimulus temporal frequencies below 15.1 Hz (Fig. 5). The good fit obtained (R² = 0.85) suggests that the MFR is nearly proportional to the logarithm of stimulus velocity in this range. The fitting performance for the log-spatial and for the log-temporal frequencies (R² = 0.60 and 0.18, respectively) was lower than that for the log velocity. In contrast, the early stages of visual processing do not represent visual motion as “velocity” per se but encode it within local ranges of spatiotemporal frequency (Tolhurst and Movshon, 1975; Holub and Morton-Gibson, 1981; Foster et al., 1985). These facts suggest that the MFR generation process includes a rapid transformation from the local spatiotemporal representation of visual motion to global velocity-dependent rate coding as discussed later.

Figure 5.

Relationship between stimulus velocity and MFR amplitude. Open circles denote the experimental data points for which temporal frequency was lower than 15.1 Hz. The solid line shows a linear regression line of these data, AMFR = 6.17 × log(V) + 0.96, where AMFR and V denote MFR amplitude and stimulus velocity, respectively. The coefficient of determination by the regression was 0.85.
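The regression of MFR amplitude on the logarithm of stimulus velocity can be reproduced in outline with an ordinary least-squares fit. In this sketch the function names are hypothetical, the data in the usage example are synthetic (generated from the reported regression line rather than taken from the experiment), and the natural logarithm is an assumption, because the base of the logarithm is not stated.

```python
import math


def predict_amplitude(velocity, slope, intercept):
    """Amplitude predicted by A = slope * log(V) + intercept (natural log assumed)."""
    return slope * math.log(velocity) + intercept


def fit_log_velocity(velocities, amplitudes):
    """Least-squares fit of amplitude against log velocity.

    Returns (slope, intercept, r_squared).
    """
    xs = [math.log(v) for v in velocities]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(amplitudes) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, amplitudes))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, amplitudes))
    ss_tot = sum((y - mean_y) ** 2 for y in amplitudes)
    return slope, intercept, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Synthetic, noise-free data lying exactly on the reported line.
    velocities = [4.0, 10.0, 20.0, 60.0, 100.0]
    amplitudes = [predict_amplitude(v, 6.17, 0.96) for v in velocities]
    print(fit_log_velocity(velocities, amplitudes))
```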

Spatiotemporal tuning surface of MFR

To capture the global variation of MFR amplitude with stimulus spatiotemporal frequency, we fitted the total variation of the MFR amplitudes using a two-dimensional Gaussian function (see Materials and Methods). Figure 6A shows the observed MFR amplitudes at the sampling points (magenta circles) and the surface fitted by the Gaussian function. The fitting performance, VAF, was 0.84, indicating that MFR amplitudes were well fitted by this surface. The fitted surface height decreases as spatial frequency increases, and it increases with temporal frequency until 16.9 Hz (the temporal frequency at the peak point of fitted surface in the spatiotemporal frequency range shown) and quickly decreases thereafter.

The black curves superimposed on the fitted surface represent the lines of constant stimulus velocity. The MFR amplitude (i.e., height of the surface in the graph) does not vary much along the black curve for the constant stimulus velocity of ∼20 °/s within the low temporal frequency range (less than ∼15 Hz). This supports a spatiotemporal-independent representation of the MFR amplitude in this velocity range (Fig. 5). Interestingly, the contour plot of the fitted surface (Fig. 6B) indicates that the whole spatiotemporal response function is approximately space–time separable (i.e., axes of the Gaussian function are almost parallel to the spatial and temporal frequency axes, respectively). This feature is frequently discussed when examining the neural representation of visual motion (Perrone and Thiele, 2001; Priebe et al., 2003). In the MFR tuning surface, the peak of the function is located at a very low spatial frequency (<0.1 c/°) and a high temporal frequency (15–20 Hz), for which image velocity is very fast. Therefore, despite the space–time separable feature, this high-speed tuning gives a speed-dependent MFR for the stimuli with higher spatial and lower temporal frequencies.

Our modeling also revealed additional local effects of spatiotemporal frequency on the MFR amplitude. The MFR amplitude slightly decreases as spatial frequency increases (or temporal frequency decreases) along the low stimulus-velocity curve (see the color change along the black curve of 4 °/s) but reverses along the high stimulus-velocity curves (see the surface color change along the black curve of 60, 100, and 160 °/s) in the range below the temporal frequency limit. In fact, in the observed data, mean MFR amplitude for the 3.8 °/s stimulus was significantly smaller (p < 0.02 by paired t test; n = 5) at 0.5 c/° (mean MFR of 4.6 mm/s) than at 0.1 c/° (mean MFR of 15.9 mm/s). The mean MFR amplitude for 46.2 °/s stimulus was greater (although not significantly so, p = 0.10 by paired t test; n = 5) at 0.17 c/° (mean MFR of 26.9 mm/s) than at 0.1 c/° (mean MFR of 18.2 mm/s). From these results, we conclude that the MFR amplitude is weakly affected by the spatiotemporal frequency, but it is approximately represented by the stimulus velocity in the global range below the temporal frequency limit.

To examine the stimulus specificity of the MFR, we compare the spatiotemporal tunings between the MFR and OFR, both of which are induced by a large-field visual motion. Figure 6C shows the tuning function (contour plot) of the final-velocity amplitude modulation of the OFR. The data were resampled from Miles et al. (1986), their Figure 16, and then fitted by the Gaussian function. The index of fitting performance, the VAF value, was 0.91, indicating that modulation of OFR by spatial and temporal frequencies can be captured by the same basis function as for the MFR. Interestingly, comparison of Figure 6, C with B, shows that the fitted surface of the OFR quite closely resembles that of the MFR in shape (Gaussian axis orientation) and in spatiotemporal frequency at the maximum (colored dark red) in the sampled spatiotemporal region (MFR, 16.9 Hz, 0.04 c/°; OFR, 17.4 Hz, 0.04 c/°). These results suggest a common computation of visual motion underlying the MFR and OFR.

Figure 6D shows the contour plot of the surface fitted to the spatiotemporal tuning of the perceptual contrast sensitivity of visual motion obtained from the motion perception experiment. We used a Gaussian plus a first-order polynomial function in log scale (see Materials and Methods), instead of a simple Gaussian function in linear scale, to obtain a good fit for the data of perceptual sensitivity. The VAF value was 0.99, suggesting that this surface well represents the global characteristics of the tuning of perceptual sensitivity in the measured range. The temporal and spatial frequencies at the maximum point were 4.4 Hz and 0.79 c/°, and the motion perception sensitivity decreased when spatial frequency decreased and temporal frequency increased. This specificity is clearly different from those of the MFR and OFR tuning functions shown above. This suggests that the spatiotemporal frequency tuning of the MFR does not parallel the contrast sensitivity function of visual motion.

Discussion

Many studies of visually guided arm control have extensively examined computational problems of motor coordination (Kalaska et al., 1992; Georgopoulos, 1995; Andersen et al., 1997; Henriques et al., 1998; Buneo et al., 2002). However, the dependency on purely visual stimulus properties, such as contrast and spatiotemporal frequency tunings, has been essentially ignored. We therefore investigated the effects of image contrast and spatiotemporal frequency on the short-latency manual response elicited by a sudden visual motion. We found that the response rapidly increases with stimulus contrast and is almost proportional to the logarithm of stimulus speed. Additionally, the tuning function has a peak at low spatial and high temporal frequencies. This stimulus specificity is quite similar to that of the reflexive OFR induced by a large-field visual motion but is different from that of motion perception sensitivity.

On-line manual and ocular motor controls share visual-motion processing

Because a large-field visual motion elicits a short-latency OFR, one might consider the possibility that the MFR is induced not by visual motion but by some form of motor entrainment with the ocular movement (Henriques et al., 1998; Soechting et al., 2001; Ariff et al., 2002; Henriques et al., 2003). The EMG latency of the arm muscles producing MFR, however, is almost comparable with that for the OFR (Saijo et al., 2005). Moreover, the gain modulations of MFR and OFR with fixation and change in visual stimulus size are not compatible with each other (Saijo et al., 2005). Thus, the MFR is unlikely to be a direct consequence of OFR occurrence.

Instead, we suggest common sensory processing of the inputs to both of these motor responses. To examine this possibility, we here compare in detail the contrast and spatiotemporal frequency tunings of the MFR shown in this study and of the OFR reported previously (Miles et al., 1986; Gellman et al., 1990). The amplitude increase and latency decrease in the low-contrast range (<10%) for the MFR (Fig. 3) are also found for the OFR (Miles et al., 1986). As for the temporal frequency characteristics, the MFR (Fig. 4C) has a peak at ∼15–20 Hz, weakening above 20 Hz (i.e., bandpass characteristics), which is independent of both spatial frequency and stimulus velocity. This is in good agreement with the temporal frequency tunings of the OFR of monkeys [10–20 Hz (Miles et al., 1986)] and humans [16 Hz (Gellman et al., 1990)]. Finally, when focusing on the spatial frequency tuning of the MFR amplitude (Fig. 6B), we found that the MFR was maximum in the low spatial frequency range (<0.1 c/°) at any temporal frequency. This tendency was also found in the OFR amplitude (Miles et al., 1986), as shown in Figure 6C. These close similarities of the tuning specificities are quite surprising because the kinematics, dynamics, and functions are quite different in the motor systems of the arm and eye. This suggests that the similarities do not arise in the motor generation process, such as the neural coding of motor commands and peripheral motor dynamics. Instead, we suggest that the similar specificities of the OFR and MFR reflect a common sensory pathway forming the input to both motor control circuits. This view is supported by the similar short latencies of the arm muscle activity and ocular response for the large-field visual motion (Saijo et al., 2005). Additional specific features of the OFR have been demonstrated recently (Sheliga et al., 2005, 2006). Investigating whether the MFR shares these features would allow the computational mechanisms of quick sensorimotor control to be discussed in more detail.
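The relationship between the temporal frequency limit and stimulus speed can be made concrete with a short worked example. For a drifting grating, temporal frequency (Hz) equals speed (°/s) multiplied by spatial frequency (c/°), so a fixed temporal frequency limit corresponds to a speed limit that scales inversely with spatial frequency. The Python sketch below is illustrative only: the function names are hypothetical, and of the spatial frequencies listed only 0.05 c/° is taken from the text.

```python
def temporal_frequency(speed_deg_s, sf_cyc_deg):
    """Temporal frequency (Hz) of a drifting grating:
    speed (deg/s) times spatial frequency (cycles/deg)."""
    return speed_deg_s * sf_cyc_deg

def speed_at_tf(tf_hz, sf_cyc_deg):
    """Speed (deg/s) at which a grating of the given spatial
    frequency reaches the given temporal frequency."""
    return tf_hz / sf_cyc_deg

# Speed range over which the 15-20 Hz peak would be reached.
# Only 0.05 c/deg comes from the text; 0.1 and 0.5 are illustrative.
for sf in (0.05, 0.1, 0.5):
    low = speed_at_tf(15.0, sf)
    high = speed_at_tf(20.0, sf)
    print(f"sf = {sf} c/deg: 15-20 Hz reached at about {low:.0f}-{high:.0f} deg/s")
```

Under these assumptions, the lowest spatial frequency leaves the widest speed range below the temporal frequency limit, so a response that grows with log speed up to that limit has the most room to grow at low spatial frequencies.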

Tuning discrepancy between the MFR and perceptual effects

We found that spatiotemporal tuning of the MFR is completely different from those of the perceptual effects caused by visual motion (Burr and Ross, 1982; Levi and Schor, 1984; De Valois and De Valois, 1991). As shown in Figure 6, the spatiotemporal tuning of the MFR has a peak at a lower spatial and higher temporal frequency than motion perception sensitivity measured with the same stimulus size and location. In addition, as for the perceptual position shift induced by visual motion (Ramachandran, 1987; Murakami and Shimojo, 1993; Nishida and Johnston, 1999; Whitney and Cavanagh, 2000), the temporal frequency of the largest positional bias (De Valois and De Valois, 1991) is also clearly lower than that of the MFR and is rather close to the peak of the motion perception sensitivity (Burr and Ross, 1982) (Fig. 6D). Thus, the induced position-shift effect on the target representation would not account for the MFR in terms of the spatiotemporal frequency tuning.

Furthermore, the induced motion effect (Soechting et al., 2001) is also unlikely to explain the MFR because the direction of induced motion of the “target representation” is opposite to the MFR direction. Instead, one could assume that the MFR is driven by the induced motion of the “hand.” However, because the spatiotemporal frequency tuning of the induced motion (Levi and Schor, 1984) is quite different from that of the MFR, this possibility can be rejected. These tuning dissimilarities indicate that the MFR is quite independent of the perceptual effects caused by visual motion. The dissociation of the MFR and perception is analogous to a recent finding that reflexive saccades are less influenced by perceptual illusions than voluntary saccades and perceptual judgments (McCarley et al., 2003). Similarly, our result is also consistent with the more general dissociation between perception and action in neural processing (Bridgeman et al., 1981; Goodale et al., 1986).

Representation of the MFR

The high correlation between MFR amplitude and the stimulus log velocity under the temporal frequency limit (Fig. 5) indicates that the MFR is approximately represented by stimulus velocity in the “global” range and not by the spatial or temporal frequency of the visual stimulus. How does the brain represent this velocity-dependent coding for the MFR? We examine this question based on many previous physiological studies.
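A relationship of this kind is typically quantified by regressing response amplitude on the logarithm of stimulus speed. The Python sketch below is a minimal illustration under stated assumptions, not the analysis used in this study: the function name `fit_log_speed` is hypothetical, and the speeds and amplitudes are synthetic values generated from an exact log-linear rule (slope 2.0, intercept 0.5), not measured data.

```python
import math

def fit_log_speed(speeds, amplitudes):
    """Ordinary least-squares fit of amplitude on log10(speed).
    Returns (slope, intercept, Pearson correlation coefficient)."""
    x = [math.log10(v) for v in speeds]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(amplitudes) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in amplitudes)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, amplitudes))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r

# Synthetic example: amplitudes follow an exact log-linear rule, so the
# fit recovers the generating slope and intercept and r is 1 up to rounding.
speeds = [10.0, 20.0, 40.0, 80.0, 160.0]             # deg/s, illustrative
amps = [2.0 * math.log10(v) + 0.5 for v in speeds]   # arbitrary units
slope, intercept, r = fit_log_speed(speeds, amps)
```

If amplitude depended on spatial or temporal frequency rather than on speed, points from different spatial frequencies would fall on separate lines in this plot instead of collapsing onto a single one.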

The high sensitivity of the MFR in the low-contrast range (Fig. 3) is consistent with the tuning properties of motion-sensitive neurons in the early stages of visual-motion processing (Sclar et al., 1990). These neurons are not tuned to particular velocities but to particular ranges of spatiotemporal frequency (Tolhurst and Movshon, 1975; Holub and Morton-Gibson, 1981; Foster et al., 1985). In a higher stage of visual-motion processing, area MT (middle temporal), some neurons appear to show velocity tuning (Maunsell and van Essen, 1983; Allman et al., 1985; Lagae et al., 1993; Treue and Andersen, 1996; Perrone and Thiele, 2001; Priebe et al., 2003), but the ranges of stimulus velocity they encode are not very wide. Additionally, area MT might encode the stimulus speed not by the intensity of neural firing but by “population coding” (Perrone and Thiele, 2001; Priebe et al., 2003). These observations suggest that the neural representations of visual motion in the early stages of visual-motion processing and in the area MT might be insufficient to account for the global velocity tuning of MFR amplitude.

In contrast, in the next visual-motion processing stage, area MST (medial superior temporal) (Duffy and Wurtz, 1991, 1997; Orban et al., 1995), many neurons responding to a wide field of visual motion are most active at high stimulus speed (160 °/s), and the averaged activity is proportional to the log velocity of visual motion (Kawano et al., 1994). Furthermore, its spatiotemporal tuning (Kawano et al., 1994) is similar to that of the MFR shown in this study. These correspondences suggest that area MST is responsible for characterizing the tuning specificity of the MFR. It should also be noted that MST is a visual area suggested to play a critical role in generating the OFR (Kawano et al., 1994). Because area MST has connections with several parietal and temporal regions (Boussaoud et al., 1990; Morel and Bullier, 1990; Andersen et al., 1997) that are sensitive to visual motion (Bruce et al., 1981; Phinney and Siegel, 2000; Merchant et al., 2004) as well as with subcortical areas (Boussaoud et al., 1992; Kawano et al., 1994; Distler et al., 2002), additional neurophysiological studies would be required to identify the entire pathway for generating the MFR.

The stimulus-velocity-dependent coding mentioned above is functionally important in guiding behavior for interaction with environments: faster visual motion may require a quicker manual response, independent of the environmental spatial structure. Although the gains of the MFR calculated using the stimulus-input and motor-output velocities were very small (<0.26), the MFR is useful in guiding the very earliest phase of reaction to environmental changes. Voluntary reactions require 150–200 ms for decision making and command generation before response initiation (Saijo et al., 2005). Thus, the late phase of movement adjustment (more than ∼200 ms) can be regulated by the voluntary motor command. In contrast, automatic reactions such as the MFR may be important only in the early phases of the adjustments.

We investigated contrast and spatiotemporal tunings of the MFR and compared these specificities with those of the neural activities, the short-latency OFR, and perceptual effects caused by visual motion. Dissimilarities between the stimulus tuning functions of the MFR and perceptual effects argue against the idea that the manual response is induced by a mechanism similar to perception-based motor control. In contrast, the many similarities between the tuning functions of the MFR and OFR suggest a common visual processing pathway providing input to both ocular and manual motor systems. Both the MFR and OFR involve reflexive visuomotor control designed for rapid reactions to surrounding visual motion. Both responses would also be functional for motor adjustments when the body is unsteady. Integration of several distinct on-line control mechanisms, such as target-based, surrounding visual-motion-based as in the MFR, and vestibular-based controls, allows humans to achieve fine manual movements in the external world. It is important to elucidate the mechanisms of rapid, implicit sensorimotor processes underlying fundamental human motor control through additional physiological and psychophysical investigations.

We thank P. Haggard, I. Murakami, and S. Shimojo for constructive discussion and manuscript improvement, N. Saijo for technical support, and S. Katagiri, T. Moriya, and M. Kashino for support and encouragement. Some of the experiments used Cogent Graphics developed by John Romaya at the Laboratory of Neurobiology at the Wellcome Department of Imaging Neuroscience (University College London, London, UK).

References

- Allman J, Miezin F, McGuinness E (1985). Stimulus specific responses from beyond the classical receptive field: neurophysiological mechanisms for local-global comparisons in visual neurons. Annu Rev Neurosci 8:407–430.

- Andersen RA, Snyder LH, Bradley DC, Xing J (1997). Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci 20:303–330.

- Ariff G, Donchin O, Nanayakkara T, Shadmehr R (2002). A real-time state predictor in motor control: study of saccadic eye movements during unseen reaching movements. J Neurosci 22:7721–7729.

- Boussaoud D, Ungerleider LG, Desimone R (1990). Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol 296:462–495.

- Boussaoud D, Desimone R, Ungerleider LG (1992). Subcortical connections of visual areas MST and FST in macaques. Vis Neurosci 9:291–302.

- Brainard DH (1997). The psychophysics toolbox. Spat Vis 10:433–436.

- Brenner E, Smeets JB (1997). Fast responses of the human hand to changes in target position. J Mot Behav 29:297–310.

- Bridgeman B, Kirch M, Sperling A (1981). Segregation of cognitive and motor aspects of visual function using induced motion. Percept Psychophys 29:336–342.

- Bruce C, Desimone R, Gross CG (1981). Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol 46:369–384.

- Buneo CA, Jarvis MR, Batista AP, Andersen RA (2002). Direct visuomotor transformations for reaching. Nature 416:632–636.

- Burr DC, Ross J (1982). Contrast sensitivity at high velocities. Vision Res 22:479–484.

- Day BL, Lyon IN (2000). Voluntary modification of automatic arm movements evoked by motion of a visual target. Exp Brain Res 130:159–168.

- De Valois RL, De Valois KK (1991). Vernier acuity with stationary moving Gabors. Vision Res 31:1619–1626.

- Derrington AM, Lennie P (1984). Spatial and temporal contrast sensitivities of neurones in lateral geniculate nucleus of macaque. J Physiol (Lond) 357:219–240.

- Desmurget M, Epstein CM, Turner RS, Prablanc C, Alexander GE, Grafton ST (1999). Role of the posterior parietal cortex in updating reaching movements to a visual target. Nat Neurosci 2:563–567.

- Distler C, Mustari MJ, Hoffmann KP (2002). Cortical projections to the nucleus of the optic tract and dorsal terminal nucleus and to the dorsolateral pontine nucleus in macaques: a dual retrograde tracing study. J Comp Neurol 444:144–158.

- Duffy CJ, Wurtz RH (1991). Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J Neurophysiol 65:1329–1345.

- Duffy CJ, Wurtz RH (1997). Medial superior temporal area neurons respond to speed patterns in optic flow. J Neurosci 17:2839–2851.

- Foster KH, Gaska JP, Nagler M, Pollen DA (1985). Spatial and temporal frequency selectivity of neurones in visual cortical areas V1 and V2 of the macaque monkey. J Physiol (Lond) 365:331–363.

- Gellman RS, Carl JR, Miles FA (1990). Short latency ocular-following responses in man. Vis Neurosci 5:107–122.

- Georgopoulos AP (1995). Current issues in directional motor control. Trends Neurosci 18:506–510.

- Georgopoulos AP, Kalaska JF, Massey JT (1981). Spatial trajectories and reaction times of aimed movements: effects of practice, uncertainty, and change in target location. J Neurophysiol 46:725–743.

- Goodale MA, Pelisson D, Prablanc C (1986). Large adjustments in visually guided reaching do not depend on vision of the hand or perception of target displacement. Nature 320:748–750.

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD (1998). Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci 18:1583–1594.

- Henriques DY, Medendorp WP, Gielen CC, Crawford JD (2003). Geometric computations underlying eye-hand coordination: orientations of the two eyes and the head. Exp Brain Res 152:70–78.

- Holub R, Morton-Gibson M (1981). Response of visual cortical neurons of the cat to moving sinusoidal gratings: response-contrast functions and spatiotemporal interactions. J Neurophysiol 46:1244–1259.

- Kalaska JF, Crammond DJ, Cohen DAD, Prud’homme M, Hyde ML (1992). Comparison of cell discharge in motor, premotor, and parietal cortex during reaching. In: Control of arm movement in space (Caminiti R, Johnson PB, Burnod Y, eds) pp. 129–146. Berlin: Springer.

- Kawano K, Miles FA (1986). Short-latency ocular following responses of monkey. II. Dependence on a prior saccadic eye movement. J Neurophysiol 56:1355–1380.

- Kawano K, Shidara M, Watanabe Y, Yamane S (1994). Neural activity in cortical area MST of alert monkey during ocular following responses. J Neurophysiol 71:2305–2324.

- Kelly DH (1979). Motion and vision. II. Stabilized spatio-temporal threshold surface. J Opt Soc Am 69:1340–1349.

- Lagae L, Raiguel S, Orban GA (1993). Speed and direction selectivity of macaque middle temporal neurons. J Neurophysiol 69:19–39.

- Levi DM, Schor CM (1984). Spatial and velocity tuning of processes underlying induced motion. Vision Res 24:1189–1195.

- Maunsell JH, van Essen DC (1983). The connections of the middle temporal visual area (MT) and their relationship to a cortical hierarchy in the macaque monkey. J Neurosci 3:2563–2586.

- McCarley JS, Kramer AF, DiGirolamo GJ (2003). Differential effects of the Muller-Lyer illusion on reflexive and voluntary saccades. J Vis 3:751–760.

- Merchant H, Battaglia-Mayer A, Georgopoulos AP (2004). Neural responses in motor cortex and area 7a to real and apparent motion. Exp Brain Res 154:291–307.

- Merigan WH, Maunsell JH (1993). How parallel are the primate visual pathways? Annu Rev Neurosci 16:369–402.

- Miles FA, Kawano K, Optican LM (1986). Short-latency ocular following responses of monkey. I. Dependence on temporospatial properties of visual input. J Neurophysiol 56:1321–1354.

- Morel A, Bullier J (1990). Anatomical segregation of two cortical visual pathways in the macaque monkey. Vis Neurosci 4:555–578.

- Murakami I, Shimojo S (1993). Motion capture changes to induced motion at higher luminance contrasts, smaller eccentricities, and larger inducer sizes. Vision Res 33:2091–2107.

- Nishida S, Johnston A (1999). Influence of motion signals on the perceived position of spatial pattern. Nature 397:610–612.

- Orban GA, Lagae L, Raiguel S, Xiao D, Maes H (1995). The speed tuning of medial superior temporal (MST) cell responses to optic-flow components. Perception 24:269–285.

- Pelli DG (1997). The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10:437–442.

- Perrone JA, Thiele A (2001). Speed skills: measuring the visual speed analyzing properties of primate MT neurons. Nat Neurosci 4:526–532.

- Phinney RE, Siegel RM (2000). Speed selectivity for optic flow in area 7a of the behaving macaque. Cereb Cortex 10:413–421.

- Prablanc C, Martin O (1992). Automatic control during hand reaching at undetected two-dimensional target displacements. J Neurophysiol 67:455–469.

- Priebe NJ, Lisberger SG (2004). Estimating target speed from the population response in visual area MT. J Neurosci 24:1907–1916.

- Priebe NJ, Cassanello CR, Lisberger SG (2003). The neural representation of speed in macaque area MT/V5. J Neurosci 23:5650–5661.

- Ramachandran VS (1987). Interaction between colour and motion in human vision. Nature 328:645–647.

- Rossetti Y, Pisella L, Vighetto A (2003). Optic ataxia revisited: visually guided action versus immediate visuomotor control. Exp Brain Res 153:171–179.

- Saijo N, Murakami I, Nishida S, Gomi H (2005). Large-field visual motion directly induces an involuntary rapid manual following response. J Neurosci 25:4941–4951.

- Sclar G, Maunsell JH, Lennie P (1990). Coding of image contrast in central visual pathways of the macaque monkey. Vision Res 30:1–10.

- Sheliga BM, Chen KJ, Fitzgibbon EJ, Miles FA (2005). Initial ocular following in humans: a response to first-order motion energy. Vision Res 45:3307–3321.

- Sheliga BM, Kodaka Y, Fitzgibbon EJ, Miles FA (2006). Human ocular following initiated by competing image motions: evidence for a winner-take-all mechanism. Vision Res 46:2041–2060.

- Shidara M, Kawano K (1993). Role of Purkinje cells in the ventral paraflocculus in short-latency ocular following responses. Exp Brain Res 93:185–195.

- Soechting JF, Engel KC, Flanders M (2001). The Duncker illusion and eye-hand coordination. J Neurophysiol 85:843–854.

- Tolhurst D, Movshon J (1975). Spatial and temporal contrast sensitivity of striate cortical neurones. Nature 257:674–675.

- Treue S, Andersen RA (1996). Neural responses to velocity gradients in macaque cortical area MT. Vis Neurosci 13:797–804.

- Watson AB, Pelli DG (1983). QUEST: a Bayesian adaptive psychometric method. Percept Psychophys 33:113–120.

- Whitney D, Cavanagh P (2000). Motion distorts visual space: shifting the perceived position of remote stationary objects. Nat Neurosci 3:954–959.

- Whitney D, Westwood DA, Goodale MA (2003). The influence of visual motion on fast reaching movements to a stationary object. Nature 423:869–873.

- Wolpert DM, Ghahramani Z (2000). Computational principles of movement neuroscience. Nat Neurosci [Suppl] 3:1212–1217.