Abstract

What would a comprehensive atlas of human emotions include? For 50 years, scientists have sought to map emotion-related experience, expression, physiology, and recognition in terms of the “basic six”: anger, disgust, fear, happiness, sadness, and surprise. Claims about the relationships between these six emotions and prototypical facial configurations have provided the basis for a long-standing debate over the diagnostic value of expression (for a review and the latest installment in this debate, see Barrett et al., this issue, p. 1). Building on recent empirical findings and methodologies, we offer an alternative conceptual and methodological approach that reveals a richer taxonomy of emotion. Dozens of distinct varieties of emotion are reliably distinguished by language, evoked in distinct circumstances, and perceived in distinct expressions of the face, body, and voice. Traditional models, both the basic six and the affective-circumplex model (valence and arousal), capture only a fraction of the systematic variability in emotional response. In contrast, emotion-related responses (e.g., the smile of embarrassment, triumphant postures, sympathetic vocalizations, blends of distinct expressions) can be explained by richer models of emotion. Given these developments, we discuss why tests of a basic-six model of emotion are not tests of the diagnostic value of facial expression more generally. Determining the full extent of what facial expressions can tell us, marginally and in conjunction with other behavioral and contextual cues, will require mapping the high-dimensional, continuous space of facial, bodily, and vocal signals onto richly multifaceted experiences using large-scale statistical modeling and machine-learning methods.

Keywords: emotion, affect, expression, face, voice, signal, semantic space

The strange thing about life is that though the nature of it must have been apparent to everyone for hundreds of years, no one has left any adequate account of it. The streets of London have their map; but our passions are uncharted.

—Virginia Woolf (1922; Chapter 8, para. 18)

What would be included in an atlas of human emotions? In other words, what are the emotions? This question has captivated great thinkers, from Aristotle to the Buddha to Virginia Woolf, each in a different form of inquiry seeking to understand the contents of conscious life. The question of what the emotions are was first brought into modern scientific focus in the writings of Charles Darwin and William James. Over the next century, methodological discoveries gradually anchored the science of emotion to a predominant focus on prototypical facial expressions of the “basic six”: anger, disgust, fear, sadness, surprise, and happiness. By the late 20th century, many scientists had come to treat these six prototypical facial expressions as if they were exhaustive of human emotional expression; methodological convenience had evolved into scientific dogma.

Clearly, even explorers of the human psyche less astute than Virginia Woolf are likely to question this approach. Is there no more complexity to human emotional life than six coarse, mutually exclusive emotional states? What about the wider range and complexity of emotions people feel at graduations, weddings, funerals, and births; on falling in and out of love; in playing with children; when transported by music; and during the first days of school or on the job? Surely humans express emotions with a broader array of behaviors than only movements of facial muscles—by shifting their bodies and gaze, for instance, and by making sounds that actors, novelists, painters, sculptors, singers, and poets have long portrayed. Our answer to these questions today echoes Virginia Woolf’s sentiment from nearly 100 years ago: The focus on six mutually exclusive emotion categories leaves much, even most, of human emotion uncharted.

In this essay, we provide a map of the passions, one that, while still in the making, moves beyond models of emotion that have focused on six discrete categories or on two core dimensions (valence and arousal), and beyond an exclusive focus on prototypical facial expressions. To appreciate where our review will go, consider emotional vocalizations such as laughs and cries, in all their variety: exultant shouts; sighs and coos; shrieks; growls and groans; “oohs” and “ahhs” and “mmms.” The human voice conveys upward of two dozen emotions (Anikin & Persson, 2017; Laukka et al., 2013; Sauter, Eisner, Ekman, & Scott, 2010) that can be blended together in myriad ways (Cowen, Elfenbein, Laukka, & Keltner, 2018; Cowen, Laukka, Elfenbein, Liu, & Keltner, 2019). To begin to explore this high-dimensional space of emotional expression, one can examine the interactive map of human vocal expression found at https://s3-us-west-1.amazonaws.com/vocs/map.html (Cowen, Elfenbein, Laukka, & Keltner, 2018). New studies such as these are revealing that the realm of emotional expression includes more than six mutually exclusive categories registered in a set of prototypical facial-muscle movements.

In fact, these new discoveries reveal that the two most commonly studied models of emotion, the basic six and the affective circumplex (comprising valence and arousal), provide an incomplete representation of emotional experience and expression. As we shall see, each of those models captures at most 30% of the variance in the emotional experiences people reliably report and in the distinct expressions people reliably recognize. That leaves 70% or more of the variability in our emotional experience and expression uncharted. The new empirical and theoretical work we summarize in this review points to robust progress toward a richer characterization of emotional experience and expression: a high-dimensional taxonomy. And these findings echo suggestions long made by numerous emotion researchers: that a full understanding of emotional expression and experience requires an appreciation of a wide degree of variability in display behavior, subjective experience, patterns of appraisal, and physiological response, both within and across emotion categories (Banse & Scherer, 1996; Roseman, 2011; Russell, 1991).

The Focus on Six Mutually Exclusive Emotion Categories: A Brief History

In 1964, Paul Ekman traveled to New Guinea with photographs of prototypical facial expressions of six emotions: anger, disgust, fear, sadness, surprise, and happiness. He sought to investigate whether those photos capture human universals in the emotional expressions people recognize. Having settled into a village in the highlands of New Guinea, Ekman presented local villagers with brief, culturally appropriate stories tailored to these six emotions. For each story, participants chose, from among three photos, the facial expression that best matched it. Accuracy rates for children and adults hovered between 80% and 90% for all six expressions (chance guessing would be 33%; Ekman & Friesen, 1971).

It is not an exaggeration to say that this research would launch the modern scientific study of emotion. Studies replicated Ekman and Friesen’s basic result 140 times (see Elfenbein & Ambady, 2002; by now, the number of replications is likely much higher). The photos themselves are among the most widely used methodological tools in the science of emotion and a centerpiece of studies of emotion recognition in the brain, in children, in special groups such as individuals with autism, and in other species (Parr, Waller, & Vick, 2007; Sauter, 2017; Schirmer & Adolphs, 2017; Shariff & Tracy, 2011; Walle, Reschke, Camras, & Campos, 2017; Whalen et al., 2013). Ekman and colleagues’ research would inspire psychological science to consider the evolutionary origins of many other aspects of human behavior (Pinker, 2002).

As the science of emotion has matured, one line of scholarship has converged on the thesis that the Ekman and Friesen findings overstate the case for universality of the recognition of emotion from facial expression. Those critiques have centered, often reasonably, on the ecological validity of the photos, the forced-choice paradigm Ekman and Friesen used (Nelson & Russell, 2013; Russell, 1994), the strength of the cross-cultural evidence for universality (Crivelli, Russell, Jarillo, & Fernández-Dols, 2017), the fact that labeling of prototypical facial expressions can shift depending on context (Aviezer et al., 2008; Carroll & Russell, 1996), and questions about whether emotional expressions signal interior feelings, social intentions, or appraisals (Crivelli & Fridlund, 2018; Frijda & Tcherkassof, 1997; Scherer & Grandjean, 2008).

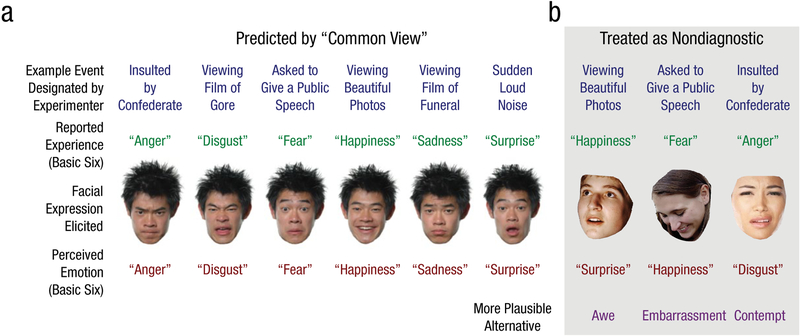

For example, Barrett and colleagues’ review in this issue of Psychological Science in the Public Interest (p. 1) fits squarely within this tradition. They ask whether the findings that have emerged from empirical studies on facial expression are in keeping with “common beliefs” about emotion. What are those common beliefs? We represent them in Figure 1a. A first is that facial expressions can be sorted into six discrete categories. In this commitment, Barrett and colleagues continue the scientific tradition of focusing on the basic six.

Fig. 1.

Barrett and colleagues’ portrayal of the “common view” of emotion and example violations of a “common view” model of emotion. In Barrett and colleagues’ portrayal of lay and scientific views on emotion, particular emotion antecedents consistently elicit experiences that are captured by six coarse, mutually exclusive categories—“anger,” “disgust,” “fear,” “happiness,” “sadness,” and “surprise” (a). These experiences in turn give rise to prototypical facial configurations. Example antecedents that have been used experimentally to elicit each of the basic six emotion categories are shown to the left, along with the prototypical facial configurations that they are expected to evoke. In their review, Barrett and colleagues assume that any violations of this model can serve as evidence against the diagnostic value of facial expression more generally. We illustrate some counterexamples to this tenet in (b). Plausible responses to some of the antecedents that have been used to elicit the basic six also include the three expressions presented here, which are reliably recognized as signals of “awe,” “embarrassment,” and “contempt” (Cordaro et al., in press; Cowen & Keltner, in press; Keltner, 1995; Shiota, Campos, & Keltner, 2003). Compared with the basic six prototypes in terms of facial muscle activation, they most closely resemble “surprise,” “happiness,” and “disgust,” respectively, categories that notably contrast with the emotions people readily perceive in these expressions. In fact, emotional expressions convey a wide variety of states, including blends of emotion, that cannot be accounted for by the basic six.

A second stipulated common belief is that there is a one-to-one mapping between experiences and expressions of the basic six and specific contexts in which they consistently occur. For example, as illustrated in Figure 1, being insulted should necessarily lead a person to express anger, and giving a public speech should lead to facial expressions of fear. We note, however, that most emotion researchers assume that cognitive appraisals mediate relations between events and emotion-related responses (Moors, Ellsworth, Scherer, & Frijda, 2013; Roseman, 2011; Scherer, 2009; Smith & Ellsworth, 1985; Tracy & Randles, 2011). More specifically, individuals vary in basic appraisal tendencies toward perceiving threat, rewards, novelty, attachment security, coping potential, and other core themes as a function of their particular life histories, genetics, class, and culture of origin (e.g., Buss & Plomin, 1984; Kraus, Piff, Mendoza-Denton, Rheinschmidt, & Keltner, 2012; Mikulincer & Shaver, 2005; Tsai, 2007). These individual differences produce different emotional responses to the same event. Individual variation in emotional expression in response to the same stimulus—a stranger approaching with a mask on, winning a competition, or arm restraint—therefore does not serve as evidence against coherence between experience and expression. Instead, this variation in expression may follow from differences in individuals’ evaluations of those stimuli. These differences have been worked out to a significant degree by appraisal theorists (Lazarus, 1991; Roseman, 1991, 2013; Scherer, Schorr, & Johnstone, 2001) but are compatible with multiple theoretical perspectives (Scarantino, 2015).

Third, the “common view” holds that each of the six emotions is expressed in a prototypical pattern of facial-muscle movements. Hundreds of empirical studies, though, have documented that (a) people express more than 20 states with multimodal expressions that include movements of the face, movements of the body, and postural shifts, as well as vocal bursts, gasps, sighs, and cries (for review, see Keltner, Sauter, Tracy, & Cowen, 2019; Keltner, Tracy, Sauter, Cordaro, & McNeil, 2016) and that (b) each emotion is associated with a number of different expressions, as Ekman (1993) observed. Thus, we agree with Barrett et al. that emotions are not expressed in six prototypical patterns of facial-muscle movement. However, this is not because facial-muscle movements do not convey emotion but because the mapping between emotion and expression is more complex. Based on the empirical and theoretical developments we have outlined so far, studies that do not observe a predicted prototypical facial expression in response to an emotion induction are open to many interpretations: Perhaps the emotion was expressed in one of many ways other than the prototypical facial expression; perhaps the stimulus elicited an emotion not included among the basic six or a complex blend of emotions (see Roseman, 2011).

Finally, the “common view” holds that people around the world should label the six prototypical facial expressions of emotion with discrete emotion words. This assumption receives only modest support in Barrett and colleagues’ review and is disconfirmed when participants from different cultures use different words to label the same facial expression (e.g., Crivelli, Russell, Jarillo, & Fernández-Dols, 2016). Single emotion words vary in their meaning across cultures, calling into question whether cross-cultural comparisons using this approach are sound (Boster, 2005; Cordaro et al., in press; Russell, 1991).

Indeed, the methodological reliance on single-word labeling paradigms introduces other problems related to more complex interpretations of emotional expression. Imagine that a person from one culture perceives an anger expression to be communicating 55% anger and 45% sadness, and a person from a second culture perceives the same expression to be communicating 45% anger and 55% sadness (e.g., Cowen et al., 2019). Despite the considerable overlap in their interpretations, single-word labeling paradigms would classify the two individuals as offering different responses. As a result of these and other ambiguities in single-word paradigms, the field has moved toward other methods: matching expressions to situations, appraisals, and intentions; nonverbal tasks; and free-response data (e.g., Haidt & Keltner, 1999; Sauter, LeGuen, & Haun, 2011). Of course, such methods are limited in other important ways. For example, when matching expressions to situations, people across cultures may appraise the same situations in different ways, and free response does not measure recognition per se (i.e., it should never be assumed that a subject who calls a green apple “a fruit” is unable to recognize that it is green or is an apple). Nevertheless, efforts to move beyond single emotion words have led to important advances in understanding how people conceptualize and categorize emotional expressions, a theme we develop later in this essay.
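To make the labeling problem concrete, the sketch below encodes the hypothetical 55%/45% profiles from the example above as Python dictionaries. The representation and the two similarity measures (histogram intersection and cosine similarity) are illustrative choices, not the method of any study cited here. A forced single-word choice keeps only each profile's top label and therefore registers disagreement, whereas comparing the full profiles shows that they share most of their meaning.

```python
import math

# Hypothetical perceived-emotion profiles for one expression, following the
# 55%/45% example in the text (shares of the expression's perceived meaning).
culture_a = {"anger": 0.55, "sadness": 0.45}
culture_b = {"anger": 0.45, "sadness": 0.55}


def single_word_label(profile):
    """Mimic a forced single-word choice: keep only the top-rated label."""
    return max(profile, key=profile.get)


def shared_mass(p, q):
    """Histogram intersection: proportion of meaning the two profiles share (0 to 1)."""
    labels = set(p) | set(q)
    return sum(min(p.get(k, 0.0), q.get(k, 0.0)) for k in labels)


def cosine_similarity(p, q):
    """Cosine of the angle between the two profiles treated as vectors."""
    labels = sorted(set(p) | set(q))
    a = [p.get(k, 0.0) for k in labels]
    b = [q.get(k, 0.0) for k in labels]
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


labels_agree = single_word_label(culture_a) == single_word_label(culture_b)
print("single-word labels agree:", labels_agree)                    # False
print("shared mass:", round(shared_mass(culture_a, culture_b), 2))  # 0.9
print("cosine:", round(cosine_similarity(culture_a, culture_b), 2)) # 0.98
```

The single-word comparison is all-or-nothing, while either profile-level measure treats the two respondents as largely in agreement.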

Notwithstanding these concerns about the portrayal of the “common view” of emotions, we agree with several conclusions arrived at by Barrett and colleagues. A pressing need in the study of emotional expression is indeed to move beyond the narrow focus on prototypical facial-muscle movements. New studies are turning to the voice, the body, gaze, and the question of how these modalities combine to express emotion (Baird et al., 2018). Little is known about expressions of complex blends of emotion (Cowen et al., 2018; Du, Tao, & Martinez, 2014; Parr, Cohen, & De Waal, 2005). The context in which emotional expressions occur (the social setting, for example, or relational dynamics between individuals) most certainly contributes to the production and interpretation of emotional expression, and empirical work is needed to probe these complexities (Aviezer et al., 2008; Chen & Whitney, 2019; Hess, Banse, & Kappas, 1995; Jakobs, Manstead, & Fischer, 2001). It will be critical for the study of expression, as Barrett and colleagues note, to move out of the lab and its many constraints to more naturalistic contexts in which emotion arises. In fact, work guided by this approach is already yielding impressive results regarding the universality and coherence of emotional expression (Anderson, Monroy, & Keltner, 2018; Cowen et al., 2018; Matsumoto, Olide, Schug, Willingham, & Callan, 2009; Matsumoto & Willingham, 2009; Sauter & Fischer, 2018; Tracy & Matsumoto, 2008; Wörmann, Holodynski, Kärtner, & Keller, 2012). (This approach is, of course, complementary to controlled experiments that probe the mechanisms underlying specific expressive signals.)

Ultimately, the common view of emotion represented in Figure 1 offers one way to answer the question “What are the emotions?” As our review will make clear, empirical data now point to a different answer. This emergent view, synthesized here, reveals that with careful attention to additional emotions beyond the basic six, additional modalities of expressive behavior, and the use of large-scale statistical modeling, we are arriving at a picture of a rich, high-dimensional taxonomy of emotional experience and expression.

Deriving a High-Dimensional Taxonomy of Emotions: Conceptual Foundations and Methodological Approaches

Emotions are internal states that arise following appraisals (evaluations) of interpersonal or intrapersonal events that are relevant to an individual’s concerns, such as threat, fairness, attachment security, the promise of sexual opportunity, violations of norms and morals, or the likelihood of enjoying rewards (Keltner, Oatley, & Jenkins, 2018; Lazarus, 1991; Roseman, Spindel, & Jose, 1990), and that promote certain patterns of response. As emotions unfold, people draw on the language of emotion (hundreds and even thousands of words, concepts, metaphors, phrases, and sayings; Majid, 2012; Russell, 1991; Wierzbicka, 1999) to describe emotion-related responses, be they subjective experiences, physical sensations, or, as is the focus here, expressive behaviors.

Of the many ways people describe their emotions, how many correspond to distinct experiences and expressions? What are these experiences and expressions? How are they structured? To answer these questions empirically, we must first map the meanings people ascribe to emotion-related responses onto what we have called a semantic space (Cowen et al., 2018, 2019; Cowen & Keltner, 2017, 2018). We have already presented one such space, that of vocal bursts, the study of which illustrates that a semantic space is defined by three properties. The first is its dimensionality, or the number of distinct varieties of emotion that people represent within a response modality. To what extent are emotional experience and expression captured by six categories? As we will show, this realm is in fact much richer than six coarse categories (see also Keltner et al., 2019; Sauter, 2017; Shiota et al., 2017).

Second, semantic spaces are defined by the distribution of expressions within the space. Are there discrete boundaries between emotion categories, or is there overlap (Barrett, 2006a; Cowen & Keltner, 2017)? Within a category of emotion, are there numerous varieties of expressions, as Ekman long ago observed (he claimed, for example, that there were 60 kinds of anger expressions; see Ekman, 1993)? Or do people recognize only a single maximally prototypical facial configuration?

Third, semantic spaces are defined by the conceptualization of emotion: What concepts most precisely capture the emotions people express, report experiencing, or recognize in others’ expressive behavior (Scherer & Wallbott, 1994a, 1994b; Shaver, Schwartz, Kirson, & O’Connor, 1987)? Of critical theoretical relevance is the extent to which emotion categories (e.g., “sympathy,” “love,” “anger”) or domain-general affective appraisals such as valence and arousal provide the foundation for judgments of emotional experience and expression (Barrett, 2006a, 2006b; Russell, 2003). It has been suggested that the emotions people reliably recognize in expressions may be accounted for by appraisals of valence and arousal (a possibility alluded to by Barrett et al. in this issue). Recently, rigorous statistical methods have been brought to bear on this question, and, as we will see, valence and arousal capture only a fraction of the information reliably conveyed by expressions. Moreover, these features seem to be inferred in a culture-specific manner from representations of states that are more universally conceptualized in terms of emotion categories.
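One way to frame the valence-and-arousal question statistically is to ask how much of the variance in category judgments a linear model of valence and arousal can reproduce, and how much of the variance in valence and arousal the categories can reproduce. The sketch below does this with ordinary least squares on synthetic data. The data generator, the pooled R² summary, and the hypothetical function `variance_explained` are assumptions for illustration, not the models used in the studies cited. Because the toy ratings are built from 12 independent category dimensions and valence and arousal are defined as noisy mixtures of them, the printed values reflect that construction rather than any empirical result.

```python
import numpy as np


def variance_explained(predictors, targets):
    """Pooled R^2: share of the total variance in `targets` reproduced by an
    ordinary least-squares fit on `predictors` plus an intercept."""
    X = np.column_stack([np.ones(len(predictors)), predictors])
    coef, *_ = np.linalg.lstsq(X, targets, rcond=None)
    residuals = targets - X @ coef
    centered = targets - targets.mean(axis=0)
    return 1.0 - (residuals ** 2).sum() / (centered ** 2).sum()


rng = np.random.default_rng(0)
n_stimuli, n_categories = 500, 12

# Synthetic stand-in: independent ratings on 12 hypothetical emotion categories.
categories = rng.normal(size=(n_stimuli, n_categories))

# Toy assumption: valence and arousal ratings are noisy weighted mixtures of the
# category ratings (columns: valence, arousal).
mixing = rng.normal(size=(n_categories, 2))
affect = categories @ mixing + 0.5 * rng.normal(size=(n_stimuli, 2))

r2_categories_from_affect = variance_explained(affect, categories)
r2_affect_from_categories = variance_explained(categories, affect)
print(f"categories explained by valence + arousal: {r2_categories_from_affect:.2f}")
print(f"valence + arousal explained by categories: {r2_affect_from_categories:.2f}")
```

In real data the same two fits would be run on reliable judgment profiles, and the question is empirical: whether the two-dimensional summary leaves most category variance unexplained.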

Overall, the framework of semantic spaces highlights new methods of answering old questions (see, e.g., Roseman, Wiest, & Swartz, 1994; Scherer & Wallbott, 1994a, 1994b; Shaver et al., 1987): What are the emotions? Within our framework, this question becomes the following: In a particular modality—the production or recognition of expressive behavior in the face or voice, peripheral physiological response, central nervous system patterning—how many dimensions are needed to explain the systematic variance in emotion-related response? Are emotions discrete or continuous—that is, how are emotional experiences, expressions, or physiological responses distributed in a multidimensional space? And what concepts best capture emotion—do we need categories, or can the variance they capture be explained in simpler, more general terms (e.g., with an affective circumplex model comprising valence and arousal; see also Hamann, 2012; Kragel & LaBar, 2016; Lench, Flores, & Bench, 2011; Vuoskoski & Eerola, 2011)?

To arrive at rigorous empirical answers to these questions, researchers need to be guided by certain methodological design features departing significantly from the methods of studies guided by the model portrayed in Figure 1a (the reliance on prototypical facial expressions of a very narrow range of emotions—the basic six—and discrete emotion terms or situations assumed to map to those expressions). In Table 1, we highlight these features of empirical inquiry, and we then turn to illustrative studies and a summary of the empirical progress made thus far in capturing the semantic space of emotional experience and the recognition of emotional expression.

Table 1.

Separate Consideration of the Dimensionality, Distribution, and Conceptualization of Emotion Clarifies Past Methodological Limitations

| Focus | Methodological feature of study | Approach of most studies reviewed by Barrett et al. | Approach necessary to derive semantic space of emotion |
|---|---|---|---|
| Studying the dimensionality, or number of varieties, of emotion | Range of emotions studied | Focus on basic six | Open-ended exploration of a rich variety of states and emotional blends |
| | Source of emotional states to study | Scientists’ assumptions | Empirical evidence, including ethnological and free-response data |
| | Measurement of expressive behavior | Facial-muscle movements sorted into basic six | Multimodal expressions involving the face, body, gaze, voice, hands, and visible autonomic response measured |
| | Statistical methods | Recognition accuracy | Multidimensional reliability analysis |
| Studying the distribution of emotion, or how emotions are structured along dimensions (e.g., whether emotion-related responses fall into discrete categories or form continuous gradients) | Stimuli used in experiments | Small set of prototypical elicitors and expressions | Numerous naturalistic variations in elicitors and behavior |
| | Statistical methods | Recognition accuracy; confusion patterns | Large-scale data-visualization tools and closer study of variations at the boundaries between emotion categories |
| Studying the conceptualization of emotion, including whether emotions are more accurately conceptualized in terms of emotion concepts or more general features | Labeling of expression | Choice of discrete emotion in matching paradigms | Wide range of emotion categories, affective features from appraisal theories, and free-response data |
| | Statistical methods | Confirmatory analysis of assumed one-to-one mapping of stimuli to discrete emotion concepts | Inductive derivation of mapping from stimuli to emotion concepts using statistical modeling |
| | | Qualitative examination of whether emotion-related responses seem like they could be accounted for by valence and/or arousal; sorting paradigms, factor analysis, and other heuristic-based approaches | Statistical modeling of the extent to which the reliable recognition of expression and elicitation of emotional experience can be accounted for by valence, arousal, and other broad concepts |
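Table 1 contrasts recognition accuracy with multidimensional reliability analysis. As a minimal sketch of the reliability side, assuming a design in which a panel of raters judges the same stimuli on each scale, one generic per-dimension check is split-half reliability: correlate the mean ratings of two random halves of the panel across stimuli, then step the result up to full-panel reliability with the Spearman-Brown formula. This textbook procedure, the hypothetical function `split_half_reliability`, and the synthetic ratings are stand-ins for illustration; they are not the specific analyses used in the studies reviewed here.

```python
import numpy as np


def split_half_reliability(ratings, n_splits=50, seed=0):
    """Split-half reliability of one judgment dimension.

    ratings: array of shape (n_raters, n_stimuli) for one rating scale
    (e.g., one emotion category). For each random split of the raters into
    two halves, the half-mean profiles across stimuli are correlated; the
    average r is then stepped up with the Spearman-Brown formula.
    """
    rng = np.random.default_rng(seed)
    n_raters = ratings.shape[0]
    rs = []
    for _ in range(n_splits):
        order = rng.permutation(n_raters)
        half_a = ratings[order[: n_raters // 2]].mean(axis=0)
        half_b = ratings[order[n_raters // 2:]].mean(axis=0)
        rs.append(np.corrcoef(half_a, half_b)[0, 1])
    r = float(np.mean(rs))
    return 2 * r / (1 + r)  # Spearman-Brown correction for doubling panel size


rng = np.random.default_rng(2)
# Synthetic scale with a real stimulus-driven signal plus heavy rater noise.
true_signal = rng.normal(size=300)
reliable = true_signal + rng.normal(scale=2.0, size=(20, 300))
# Synthetic scale that is pure rater noise.
unreliable = rng.normal(size=(20, 300))

print("reliable scale:", round(split_half_reliability(reliable), 2))
print("noise-only scale:", round(split_half_reliability(unreliable), 2))
```

A dimension that carries a consistent stimulus-driven signal across raters scores well above zero, whereas a scale that raters use idiosyncratically hovers near zero even though each rater's individual responses look equally "accurate."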

The Nature of Emotion Categories: 25 States and Complex Blends

What are the emotions? The focus on the basic six in the “common view” portrayed in Figure 1 traces back to Ekman and Friesen’s study but has no rigorous empirical or theoretical rationale. Darwin, an inspiration to Ekman, described the expressive behavior of over 40 psychological states (see Keltner, 2009). More recently, social functionalist approaches highlight how emotions are vital to human attachment, social hierarchies, and group belongingness. This theorizing makes a case for the distinctiveness of emotions such as love, desire, gratitude, pride, sympathy, shame, awe, and interest (e.g., Keltner & Haidt, 1999). What do emotion researchers believe? One recent survey (Ekman, 2016), cited by Barrett and colleagues, found that 80% of emotion scientists believe that five of the basic six are associated with universal nonverbal expressions. This statistic is unsurprising given that these expressions were the predominant focus of the first 50 years of emotion science. Note that a significant proportion of the scientists surveyed indicated that additional emotions, such as embarrassment and shame, have recognizable nonverbal expressions. Likewise, taxonomies proposed by emotion scientists most typically include more states than the basic six (Keltner & Lerner, 2010; Panksepp, 1998; Roseman et al., 1994; Shaver et al., 1987).

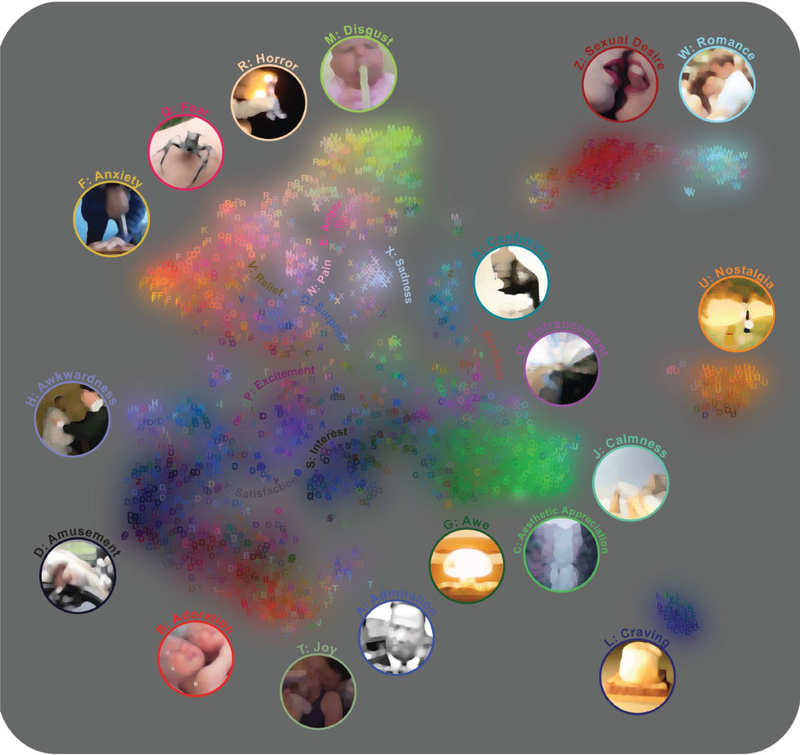

Figures 2 and 3 present two recent studies that capture empirically, in different ways, people’s common beliefs about emotion. Figure 2 presents a map derived from 757 participants’ judgments of the similarity of 600 different English emotion words. These judgments yielded 49 dimensions of meaning, and concepts that lie near one another on the map have similar loadings on those dimensions. As one can see, English speakers distinguish dozens of states (for examples of earlier studies that make a similar point, see Roseman, 1984; Scherer & Wallbott, 1994a, 1994b; Shaver, Murdaya, & Fraley, 2001). Moving clockwise from the top, one finds many varieties of emotions beyond the basic six that have drawn the attention of recent scholars: contempt, shame, pain, sympathy, love, lust, gratitude, relief, triumph, awe, and amusement, among others. Ignoring these states limits the inferences to be drawn from studies of expression.

Fig. 2.

Map of 600 emotion concepts derived from similarity judgments (Cowen & Keltner, 2019). Participants (N = 757; 348 female, mean age = 34.2 years) were presented with one “target” emotion concept and a list of 25 other pseudorandomly assigned concepts and were asked to choose, from the 25 options, the concept most similar to the target concept. We collected a total of 43,756 such judgments. Using these judgments, we constructed a pairwise similarity matrix and analyzed the dimensionality of the emotion concepts by applying eigendecomposition and parallel analysis (Horn, 1965). These methods derived 49 candidate dimensions of emotion. Our results indicate that emotion concepts carry a much wider variety of meanings than the “basic” six. We mapped the distribution of individual concepts within this 49-dimensional space using a nonparametric visualization technique called t-distributed stochastic neighbor embedding (t-SNE), which extracts a two-dimensional space designed to preserve the local “neighborhood” of each concept. Adjacent concepts within the map had similar loadings on the 49 dimensions. Colors were randomly assigned to each dimension, and the color of each concept represents a weighted average of its two maximal dimension loadings. Labels are given to example concepts (larger dots). For an interactive version of this figure in which all terms can be explored, see https://s3-us-west-1.amazonaws.com/emotionwords/map49.html.
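The dimensionality step in this caption relies on Horn's (1965) parallel analysis. The sketch below shows the textbook version applied to a synthetic ratings matrix: eigenvalues of the observed correlation matrix are retained only while they exceed the 95th percentile of eigenvalues obtained from uncorrelated random data of the same shape. It assumes an observations-by-variables matrix rather than the pairwise similarity matrix built from choice judgments described above, so it illustrates the logic, not the exact pipeline behind Figure 2; the function name `parallel_analysis` is hypothetical.

```python
import numpy as np


def parallel_analysis(data, n_iter=100, percentile=95, seed=0):
    """Horn's (1965) parallel analysis.

    data: array of shape (n_observations, n_variables).
    Returns the number of leading eigenvalues of the observed correlation
    matrix that exceed the chosen percentile of eigenvalues from
    uncorrelated random data of the same shape.
    """
    rng = np.random.default_rng(seed)
    n_obs, n_vars = data.shape
    # eigvalsh returns ascending eigenvalues; reverse to descending order.
    observed = np.linalg.eigvalsh(np.corrcoef(data, rowvar=False))[::-1]
    random_eigs = np.empty((n_iter, n_vars))
    for i in range(n_iter):
        noise = rng.normal(size=(n_obs, n_vars))
        random_eigs[i] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    threshold = np.percentile(random_eigs, percentile, axis=0)
    above = observed > threshold
    # Count only the leading run of eigenvalues that beat the null distribution.
    return n_vars if above.all() else int(np.argmin(above))


rng = np.random.default_rng(1)
# Synthetic ratings: 1,000 observations of 40 variables driven by 5 planted factors.
latent = rng.normal(size=(1000, 5))
loadings = rng.normal(size=(5, 40))
ratings = latent @ loadings + rng.normal(size=(1000, 40))
print("dimensions retained:", parallel_analysis(ratings))
```

Comparing each eigenvalue with its rank-matched null, rather than with a fixed cutoff such as 1, keeps sampling noise from being counted as additional dimensions.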

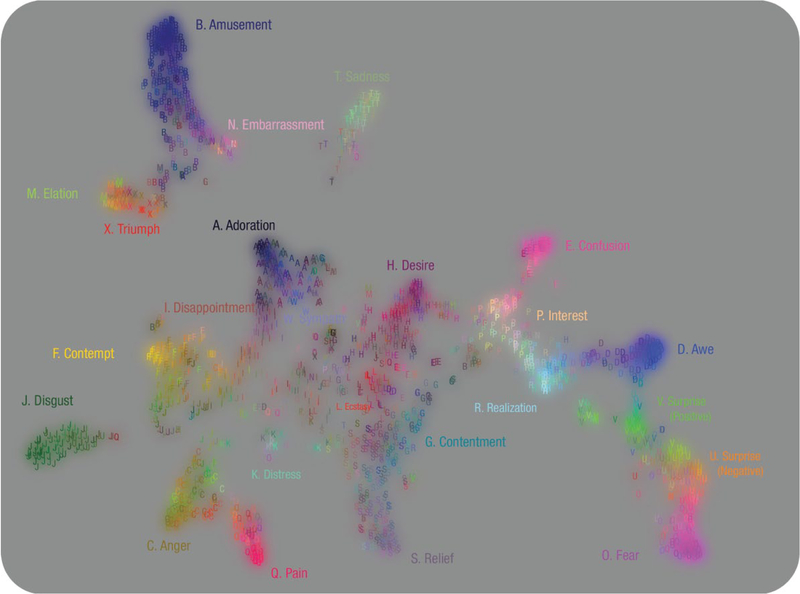

Fig. 3.

Map of 27 varieties of emotional experience evoked by 2,185 videos. Participants judged each video in terms of 34 emotion categories (free response) and 14 scales of affective appraisal, including valence, arousal, dominance, certainty, and more. At least 27 dimensions, each associated with a different emotion category, were required to capture the systematic variation in participants’ emotional experiences. We mapped the approximate distribution of videos along these 27 dimensions using a technique called t-distributed stochastic neighbor embedding (t-SNE). Emotion categories often treated as discrete are in fact bridged by continuous gradients, found to correspond to smooth transitions in meaning (Cowen & Keltner, 2017). For an interactive version of this figure, see https://s3-us-west-1.amazonaws.com/emogifs/map.html.

Perhaps, though, when people label their own spontaneous emotional experiences, or recognize emotions in the expressive behaviors of others, the more complex space of emotion knowledge portrayed in Figure 2 reduces to the basic six, as portrayed in the “common view” of emotion. Perhaps our feelings of sympathy or shame, for example, are in fact simply variants of sadness. Perhaps our experiences of love, amusement, interest, or awe are, at the core, simply shades of happiness. Empirical data suggest otherwise. In a study on this point, people reported on their emotional reactions to more than 2,100 short film clips (Cowen & Keltner, 2017). Figure 3 presents the resultant semantic space of emotional experience. In mapping the dimensionality of emotion that emerged with this class of stimuli, we found that reported emotional experiences cannot be reduced to six; rather, they require at least 27 varieties of emotion to be explained (a lower bound, given that different classes of stimuli can elicit different emotions). Note that these findings converge with a robust empirical literature documenting distinctions in the experiences of 7 to 13 positive emotions (Kreibig, 2010; Shiota et al., 2017; Tong, 2015), including several self-conscious emotions (Scherer & Wallbott, 1994a, 1994b; Tangney & Tracy, 2012; Tracy & Robins, 2007b), attachment-related emotions (Diamond, 2003; Goetz, Keltner, & Simon-Thomas, 2010), and self-transcendent emotions such as gratitude, contentment, awe, and ecstasy (Cordaro, Brackett, Glass, & Anderson, 2016; Stellar et al., 2017).

Figure 3 also maps the regularity with which people experience complex emotional blends (Du et al., 2014; Watson & Stanton, 2017). Yes, there are prototypical experiences of amusement, for example, or of fear, love (adoration), sympathy, or disgust. At the same time, many—even most—experiences of emotion are complex, involving blends between disgust and horror, for example, or awe and feelings of aesthetic appreciation, or love and desire, or sympathy and empathic pain. The model portrayed in Figure 1a derives from the research conducted by Ekman and Friesen some 50 years ago. Emotion science, and Ekman himself (Ekman & Cordaro, 2011), have evolved considerably in the range of states considered emotions.

Hewing to this older model fails to capture the breadth and blending of emotional experience that contemporary emotion researchers study, and this omission has problematic consequences for research on emotional expression. For example, a recent meta-analysis cited by Barrett and colleagues found that when facial expressions and reported emotional experiences evoked by laboratory stimuli are sorted into six discrete categories, the raw correlation between them averages about .32 (Duran & Fernández-Dols, 2018). However, this may be close to the highest correlation that studies sorting emotional experiences and facial expressions into the basic six could possibly achieve. Across several empirical studies we review below, the basic six accounted for 30%, at best, of the explainable variance in experience and expression (Cowen et al., 2018; Cowen & Keltner, 2017, in press). Correlations between expression and antecedent elicitors, reported experience, or observer judgment sorted into the basic six thus relate measures that capture only 30% of the explainable variance. The remaining 70% of the variation in expression goes unaccounted for, yet it may still contribute to the total variance, which forms the denominator of the correlation between expression and other phenomena. Seen in this light, the meta-analytic results imply that methods capturing the much wider range of expressions people actually produce will likely have much greater diagnostic value in predicting an individual’s subjective affective state. To truly address the diagnostic value of expression, researchers will need to move beyond six discrete categories of facial expression and instead use inductive methods to predict internal states from the high-dimensional, continuous space of dynamic expressions of the face, voice, and body.

The narrow focus on the basic six also masks distinctions between emotions that have been established in the literature. For example, studies that seek to document associations between expressions of “happiness” and self-reported experience or physiological response ignore established distinctions among different positive emotions and their accompanying expressions. As is evident in Figures 2 and 3, and in dozens of empirical studies, the positive emotions are numerous—including love, desire, awe, amusement, pride, enthusiasm, and interest, for example (Campos, Shiota, Keltner, Gonzaga, & Goetz, 2013; Shiota et al., 2017)—and there are varieties of smiles and other facial expressions that covary with these distinct positive emotions (Cordaro et al., in press; Cowen & Keltner, in press; Keltner et al., 2016; Martin, Rychlowska, Wood, & Niedenthal, 2017; Oveis, Spectre, Smith, Liu, & Keltner, 2013; Sauter, 2017; Wood et al., 2016). To give another example, the focus on sadness to the exclusion of sympathy and distress fails to capture the various emotions and blends engaged in responding to the suffering of others (Eisenberg et al., 1988; Singer & Klimecki, 2014; Stellar, Cohen, Oveis, & Keltner, 2015).

On this point, one study reviewed by Barrett and colleagues assumes that if facial expressions had diagnostic value, winning a judo match would consistently elicit a smile (Crivelli, Carrera, & Fernández-Dols, 2015). Contrary to this assumption, inductive and ecological studies indicate that body gestures such as arm raises, fist clenches, and chest expansions are diagnostic of triumph (or pride), but that a smile is not necessary to signal this emotion (Cowen & Keltner, in press; Matsumoto & Hwang, 2012; Tracy & Matsumoto, 2008). This confusion reinforces the need to move beyond the study of the basic six emotions and facial-muscle movements and offers a broader lesson: Any study aiming to test the diagnostic value of an expression should empirically derive its mapping to experience, not assume it in advance.

Our capacity to understand and make predictions about the natural world relies critically on the precision of the concepts that form the basis of inference. If meteorologists started from the assumption that there were 3 kinds of clouds, the inferences they would draw about the processes that produce clouds (air temperature, air pressure, humidity, rainfall, wind, tides) would be simplistic and imprecise. Were they instead to build their science on a much more differentiated taxonomy of clouds (10, as is the case today), their understanding of the causes, dynamics, and consequences of clouds, and of the weather patterns of which clouds are the product, would necessarily become more exact. The same is true for the science of emotion: Reliance on six categories of emotion constrains attempts to understand how experience manifests in expressive behavior that is perceived and responded to by others. Such a narrow focus impedes progress in understanding the structure and dynamics of emotional response and in answering questions such as the following: How do emotions organize human attachments and navigate social hierarchies? How do emotional expression and recognition change with development? What neurophysiological processes underlie the experience, expression, and recognition of emotion?

More generally, tests of a basic-six model of emotion cannot be construed as tests of the broader diagnostic value of facial expression. It is problematic to assume that emotions can be treated as interchangeable data points in an experiment. Barrett and colleagues maintain that their review, “which is based on a sample of six emotion categories,” therefore “generalizes to the population of emotion categories that have been studied.” However, emotions such as “anger” serve as variables in a study, not as points that survey a population. Across trials, the emotions people report perceiving or experiencing are compared with other variables of interest—measurements of facial expression, for instance. Statistical theory does not justify generalizing to the “population” of variables—there is little reason to believe a model comprising 6 emotions will predict facial expression as well as a model comprising 25. On the contrary, a model comprising more features will often perform better. Indeed, moving toward a broader space of emotions, one encounters greater systematicity in the nature of emotional expression.

The Nature of Emotional Expression

Perhaps most striking about Figure 1a, and most at odds with everyday experience, are its static photos of prototypical facial-muscle configurations. Do people really express emotion in such caricature-like fashion, with unique configurations of facial-muscle movements (for a relevant methodological critique, see Russell, 1994)? Although the “common view” of emotional expression treats this question as interchangeable with the question of whether expressions have diagnostic value, many studies of emotional expression have moved well beyond prototypical facial-muscle configurations.

Since Ekman and Friesen’s findings of 50 years ago, considerable advances have been made in understanding how we express emotion in nuanced, multimodal patterns of behavior (Bänziger, Mortillaro, & Scherer, 2012; Cordaro et al., in press; Keltner & Cordaro, 2015; Paulmann & Pell, 2011; Scherer & Ellgring, 2007; Tracy & Matsumoto, 2008; Tracy & Robins, 2004). For example, shifts in gaze as well as movements of the face, head, body, and hands differentiate expressions of the self-conscious emotions of pride, shame, and embarrassment (Keltner, 1995; Tracy & Robins, 2007a). The same is true of positive emotions such as amusement, awe, contentment, desire, love, and sympathy, which are distinguished by subtle movements such as the backward head tilt and open mouth of amusement or the upward gaze and head orientation of awe (Cordaro et al., 2018, in press; Eisenberg et al., 1988; Gonzaga, Keltner, Londahl, & Smith, 2001; Keltner & Bonanno, 1997; Shiota, Campos, & Keltner, 2003). Recent empirical work shows that when these nuanced patterns of expressive behavior are captured in still photographs, 18 affective states are recognized across 9 different cultures, with accuracy rates often exceeding those observed in studies of the basic six (Cordaro et al., in press).

Consider the realm of touch, so important in parent-child relationships, friendships, intimate bonds, and the workplace. With brief, half-second touches to a stranger’s arm, people can communicate sympathy, gratitude, love, sadness, anger, disgust, and fear at recognition rates 6 to 8 times those expected by chance (Hertenstein, Holmes, McCullough, & Keltner, 2009). In a similar vein, people are adept at communicating a variety of emotions with postural movements (Dael, Mortillaro, & Scherer, 2012; Lopez, Reschke, Knothe, & Walle, 2017).
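Claims such as “6 to 8 times chance” rest on simple arithmetic: with k response options, guessing alone yields an expected accuracy of 1/k, and an exact binomial test asks how likely the observed number of correct judgments would be under guessing. The Python sketch below illustrates that calculation; the number of response options and the counts (12 options, 35 of 60 judgments correct) are hypothetical and are not taken from Hertenstein et al. (2009).

```python
from math import comb

def binomial_upper_tail(hits: int, trials: int, p_chance: float) -> float:
    """Exact one-sided p-value: P(X >= hits) when X ~ Binomial(trials, p_chance)."""
    return sum(
        comb(trials, k) * p_chance**k * (1 - p_chance) ** (trials - k)
        for k in range(hits, trials + 1)
    )

def recognition_vs_chance(hits: int, trials: int, n_options: int):
    """Return observed accuracy, its ratio to chance, and a one-sided p-value."""
    p_chance = 1.0 / n_options          # uniform guessing among the response options
    accuracy = hits / trials
    return accuracy, accuracy / p_chance, binomial_upper_tail(hits, trials, p_chance)

# Hypothetical counts: 12 response options, 35 of 60 judgments correct.
acc, ratio, p = recognition_vs_chance(hits=35, trials=60, n_options=12)
print(f"accuracy = {acc:.2f}, {ratio:.1f}x chance, one-sided p = {p:.1e}")
```

With these made-up counts, accuracy is about 58%, seven times the 1/12 chance rate, and the probability of doing that well by guessing is vanishingly small. Real studies may also need to account for response biases, which this sketch ignores.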

The voice may prove to be the richest modality of emotional communication (Kraus, 2017; Planalp, 1996). New empirical work, building on the seminal theorizing of Klaus Scherer (Scherer, 1984; Scherer, Johnstone, & Klasmeyer, 2003), has documented that when people vary their prosody while uttering sentences with neutral content, they can convey at least 12 different emotions, which are reliably identified in distinct cultures (Cowen et al., 2019; Laukka et al., 2016). People also communicate emotion with vocal bursts, which predate language in human evolution and have parallels in the vocalizations of other mammals (Scott, Sauter, & McGettigan, 2010; Snowdon, 2003). Related empirical work shows that people can communicate more than 13 emotions with brief sounds, a finding replicated across 14 cultures, including two remote, small-scale societies (Cordaro, Keltner, Tshering, Wangchuk, & Flynn, 2016; Cowen et al., 2018; Sauter et al., 2010; Simon-Thomas, Keltner, Sauter, Sinicropi-Yao, & Abramson, 2009).

Building on these advances, two new studies guided by more open-ended methodological features outlined in Table 1 have revealed how the face and voice communicate a rich array of emotions (the dimensionality of the semantic space), and variations within each category of emotion (the distribution of expressions). In one study, participants made categorical and dimensional judgments of 2,032 voluntarily produced and naturalistic vocal bursts (Cowen et al., 2018). In another, participants judged 1,500 facial expressions culled from naturalistic contexts (at funerals, sporting events, weddings, classrooms; Cowen & Keltner, in press). Figures 4 and 5 present taxonomies of vocal and facial expression derived from these judgments.

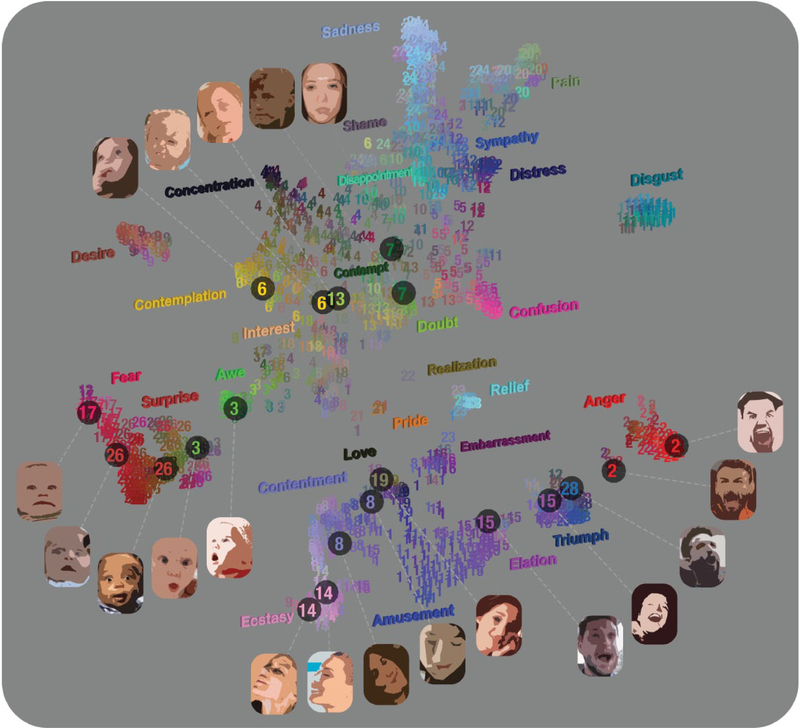

Fig. 4.

Map of 24 varieties of emotion recognized in 2,032 vocal bursts (Cowen et al., 2018). Participants judged each vocal burst in terms of 30 emotion categories (free response) and 13 scales of affective appraisal, including valence, arousal, dominance, certainty, and more. At least 24 dimensions were required to capture the systematic variation in participants’ judgments. As with the emotions evoked by video (Fig. 3), the emotions recognized in vocal expression were most accurately conceptualized in terms of the emotion categories. Visualizing the distribution of vocal bursts using t-distributed stochastic neighbor embedding (t-SNE), we can again see that categories often treated as discrete are bridged by continuous gradients, which we find correspond to smooth transitions in meaning. For an interactive version of this figure, see https://s3-us-west-1.amazonaws.com/vocs/map.html.

Fig. 5.

Map of 28 varieties of emotion recognized in 1,500 facial/bodily expressions (Cowen & Keltner, in press). Participants judged each expression in terms of 28 emotion categories (free response) and 13 scales of affective appraisal, including valence, arousal, dominance, certainty, and more. All 28 categories were required to capture the systematic variation in participants’ judgments. As with the emotions evoked by video and recognized in vocal expression (Figs. 3 and 4), the emotions recognized in facial/bodily expression were most accurately conceptualized in terms of the emotion categories, and we can see that emotion categories often treated as discrete are bridged by continuous gradients. For an interactive version of this figure, see https://s3-us-west-1.amazonaws.com/face28/map.html.

In terms of the dimensionality of emotional expression, at least 24 emotions can be reliably communicated with vocal bursts, and 28 can be reliably communicated through visual cues from the face and body. With respect to the distribution of emotional expression, each emotion category involves a rich variety of distinct expressions. There is no single expression of anger, for example, or embarrassment, but myriad variations. And at the boundaries between categories—say between awe and interest as expressed in the face, or amusement and love—lie expressions with blended meanings. For example, there are subtly varying ways in which people communicate sympathy with vocal bursts or love in facial and bodily movements. Studies of expressions of embarrassment, shame, pride, love, desire, mirth (laughter), and interest in different modalities all reveal systematic variants within a category of emotion that convey the target emotion to varying degrees (Bachorowski & Owren, 2001; Gonzaga et al., 2001; Keltner, 1995; Tracy & Robins, 2007a).
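Statements such as “at least 24 dimensions” concern how many distinct dimensions of judgment replicate across independent groups of raters. The Python sketch below shows one simplified way to ask that question: it extracts principal components from one group’s mean ratings, projects a second, independent group’s ratings onto the same components, and counts the components whose stimulus scores correlate across groups. It is not necessarily the procedure used in the cited studies, and the simulated data (500 stimuli, 30 rating scales, 8 true dimensions) and the 0.5 threshold are hypothetical choices for illustration.

```python
import numpy as np

def split_half_dimensionality(half_a, half_b, threshold=0.5):
    """Count principal components of half_a whose stimulus scores replicate in half_b.

    half_a, half_b: (n_stimuli, n_scales) arrays of mean ratings from two
    independent groups of raters. A component counts as replicable when the
    Pearson correlation between its scores in the two groups exceeds `threshold`.
    """
    a = half_a - half_a.mean(axis=0)
    b = half_b - half_b.mean(axis=0)
    # Principal axes of group A (rows of vt), ordered by variance explained.
    _, _, vt = np.linalg.svd(a, full_matrices=False)
    scores_a = a @ vt.T          # group A's stimulus scores on each component
    scores_b = b @ vt.T          # group B's ratings projected onto the same axes
    r = np.array([np.corrcoef(scores_a[:, j], scores_b[:, j])[0, 1]
                  for j in range(vt.shape[0])])
    return int(np.sum(r > threshold)), r

# Hypothetical simulation: 500 stimuli rated on 30 scales, driven by 8 latent dimensions.
rng = np.random.default_rng(0)
n_stim, n_scales, true_dim = 500, 30, 8
latent = rng.normal(size=(n_stim, true_dim))
loadings = rng.normal(size=(true_dim, n_scales))
signal = latent @ loadings
half_a = signal + rng.normal(size=signal.shape)   # independent rater noise, group A
half_b = signal + rng.normal(size=signal.shape)   # independent rater noise, group B

n_dims, r = split_half_dimensionality(half_a, half_b)
print("replicable dimensions:", n_dims)
```

In this simulation the count should recover the 8 dimensions built into the data; the remaining components reflect rater noise that does not replicate across groups. With real data, a permutation-based null distribution is a more principled criterion than a fixed threshold.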

The shift away from the face alone toward expressions in multiple modalities has yielded critical insights into emotional expression. Here is but a sampling of recent discoveries; as the field matures, we expect many new insights. By the age of 2, children can readily identify at least five positive emotions from brief emotion-related vocalizations (Hertenstein & Campos, 2004; Wu, Muentener, & Schulz, 2017). Emotions vary in the degree to which they are signaled in different modalities (App, McIntosh, Reed, & Hertenstein, 2011): Gratitude is hard to convey through the face and voice but readily detected in tactile contact (Hertenstein et al., 2009); awe may be more readily communicated in the voice than in the face (Cordaro et al., 2018); pride is best recognized from a combination of postural and facial behaviors (Tracy & Robins, 2004, 2007a). And critical progress is being made in understanding the sources of within-category variation in expression, particularly culture (Elfenbein, Beaupré, Lévesque, & Hess, 2007). Different populations develop culturally specific dialects in which they express emotion in ways that are partially distinctive yet largely consistent across cultural groups (Elfenbein, 2013). Occasionally, they produce expressions that are unique to their own cultures; for example, in India, embarrassment is expressed with an iconic tongue bite and shoulder shrug (Haidt & Keltner, 1999).

How culturally variable are expressions of emotion? In one study, participants belonging to five different cultures—China, India, Japan, Korea, and the United States—heard 22 emotion-specific situations described in their native languages and expressed the elicited emotion in whatever fashion they desired (Cordaro et al., 2018). Intensive coding of participants’ expressions of these 22 emotions revealed that 50% of an individual’s expressive behavior was shared across the five cultural groups and might be thought of as universal facial/bodily expressions of emotion. Fully 25% of the expressive behavior was culturally specific and in the form of a dialect shaped by the particular values and practices of that culture.

The Conceptualization of Emotion

Emotions involve the dynamic unfolding of appraisals of the environment, expressive tendencies, the representation of bodily sensations, intentions and action tendencies, perceptual tendencies (e.g., seeing the world as unfair or worthy of reverence), and subjective feeling states. Labeling one’s own experience or another person’s expression as one of “interest,” “love,” or “shame” can therefore refer to many different internal processes: representations of likely causes of the expression, inferred appraisals, sensations, feeling states, and intended courses of action plausible for the person expressing the emotion (Shaver et al., 1987; Shuman, Clark-Polner, Meuleman, Sander, & Scherer, 2017). As long noted (Ekman, 1997; Fehr & Russell, 1984), emotion words can refer to many different phenomena.

The “common view” approach to emotion, as shown in Figure 1, does not consider the multiple meanings inherent in labeling emotion-related responses with words, nor the evidence that language is unnecessary for emotion-related processes (see Sauter, 2018). Moving beyond emotion-to-face matching paradigms, now 50 years old, Fridlund’s (2017) behavioral ecology theory posits that what matters most for perceivers is discerning an individual’s intentions from his or her expressive behavior. This theorizing has led to a broader consideration of the kinds of social information, beyond experiences of distinct emotions, that people perceive in expressive behavior (Crivelli & Fridlund, 2018; Ekman, 1997; Keltner & Kring, 1998; Knutson, 1996; Scarantino, 2017). Important theoretical advances have illuminated how, in interpreting another person’s expressive behavior, observers might label that person’s state in terms of (a) a current feeling, (b) what is happening in the present context, (c) intentions or action tendencies, (d) desired reactions in others, and (e) characteristics of the social relationship. On witnessing another individual’s blush and awkward smile, for instance, an observer might label the behavior as expressing embarrassment, as a marker of the uncomfortable nature of the present interaction, as a signal of an intention to make amends, as a plea for forgiveness, or as a signal of submissiveness and lower rank (Roseman et al., 1994). Emotional expressions convey multiple meanings, and distinct feeling states are but one of them.

This move beyond word-to-face matching paradigms raises the question of what is given priority when people recognize emotion from others’ expressive behavior. In a relevant study illustrative of where the field is going, observers matched dynamic, videotaped portrayals of five emotions (happiness, sadness, fear, anger, and disgust) to one of the following: feelings (“fear”), appraisals (“that is dangerous”), social relational meanings (“you scare me”), or action tendencies (“I might run”). Consistent with other emotion-recognition work, participants labeled the dynamic expressions with the expected response 62% of the time; accuracy was greater for feeling-state labels and lower for action-tendency labels (Horstmann, 2003). By contrast, recent work in the Trobriand Islands found that action tendencies were more prominent than emotion words in the interpretation of facial expressions, pointing to cultural variation in the way emotional expressions are interpreted (Crivelli et al., 2016). One of the most intriguing questions facing the field is how the multiple kinds of meaning that people perceive in expressive behavior vary across cultures, with development, and in different contexts (Matsumoto & Yoo, 2007).

How, then, does emotion recognition from expressions work (Scherer & Grandjean, 2007)? Do observers recognize distinct emotion categories—disgust, awe, shame—and then make inferences about underlying appraisals, including valence, arousal, dominance, fairness, or norm appropriateness? Or is the process the reverse, so that people see an expression, automatically evaluate it in terms of basic affect dimensions—valence, arousal, and so on—and then arrive at a distinct emotion label for the expression?

One widespread approach to the conceptualization of emotion from expressions posits that people first appraise an expression in terms of valence and arousal and then infer categorical labels (e.g., anger, fear) from other sources of information, such as the present context (e.g., Barrett et al., this issue; Russell, 2003). However, we have subjected this hypothesis to empirical scrutiny in more than five studies of emotional experience and expression across multiple cultures, and our results support a notably different conclusion.

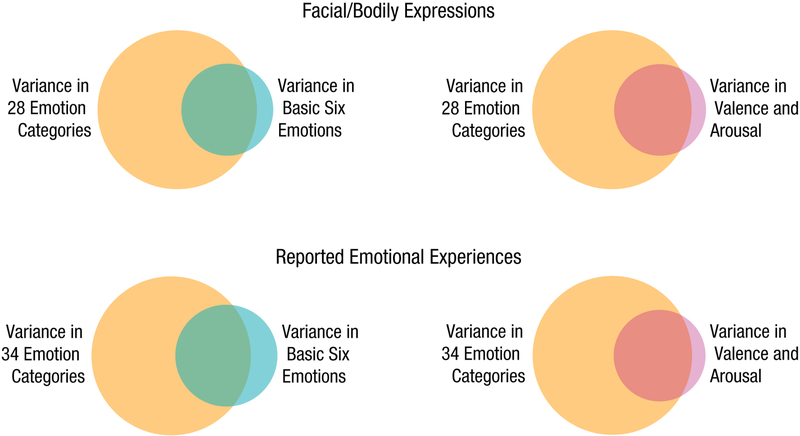

Figure 6 presents results from two of these studies. In the findings that are most relevant to the present review (Cowen & Keltner, in press), participants judged each of 1,500 expressions using emotion categories (including a free-response format) and valence and arousal (along with 11 other appraisal dimensions that have been proposed to underlie emotion recognition, including dominance, certainty, and fairness; see Roseman et al., 1990; Scherer et al., 2001; Smith & Ellsworth, 1985). These large-scale data allowed us to ascertain whether distinct emotion categories or appraisals of valence and arousal explain greater variance in emotion recognition.

Fig. 6.

Variance captured by high-dimensional models of emotion versus the basic six and valence plus arousal (Cowen & Keltner, 2017, in press). By mapping reported emotional experiences and facial expressions into a high-dimensional space (see Fig. 5), we can predict how they are recognized in terms of the basic six emotions and valence and arousal. However, we can also see that these traditional models are highly impoverished. For these analyses, we collected separate judgments of 1,500 faces and 2,185 videos in terms of just the basic six categories (anger, disgust, fear, happiness, sadness, and surprise). Each Venn diagram represents the proportion of the systematic variance in one set of judgments that can be explained by another set of judgments, using nonlinear regression methods (k-nearest neighbors). Although high-dimensional models largely capture the systematic variance in separate judgments of the basic six and of valence and arousal, both the basic six (left) and valence and arousal (right) capture around 30% or less of the systematic variance in the high-dimensional models (28.0% and 28.5%, respectively, for facial expressions; 30.2% and 29.1%, respectively, for emotional experiences). (Note that in predicting other judgments from the basic six, we used only the category chosen most often by raters, assigning equal weight when there were ties, in accordance with the assumption of discreteness inherent in the research that Barrett and colleagues cite in portraying common beliefs about emotion.)

As one can see in the top row of Figure 6, a comprehensive array of 28 emotion categories, such as “awe” and “love,” was found to capture a much broader and richer space of emotion recognized in facial expression than could be explained by valence and arousal alone (pink circles). These emotion categories also capture a substantially richer space than the six discrete emotion categories that constitute Barrett and colleagues’ portrayal of common beliefs about emotion (left Venn diagrams). Specifically, valence and arousal and the basic six each captured only about 30% of the systematic variance in the high-dimensional judgments. We replicated this pattern of results in a study of emotional experience in response to videos, as portrayed in the bottom row of Figure 6 (Cowen & Keltner, 2017). To capture the richness of emotional experience and recognition, then, we cannot rely on the basic six alone, nor can we reduce the rich set of emotion categories that people distinguish to the simpler dimensions of valence and arousal.
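The Venn-diagram comparison in Figure 6 asks how much of one set of judgments can be predicted from another using k-nearest-neighbor regression. The Python sketch below reproduces that logic on hypothetical simulated data: 24 “rich” categories with small rating noise, and a coarse six-label coding that lumps four rich categories under each label. It reports pooled variance explained under leave-one-out prediction. Unlike the analyses behind Figure 6, it does not correct for rater noise, so it measures total rather than systematic variance, and its numbers should not be compared with the published percentages.

```python
import numpy as np

def knn_loo_predict(x, y, k=10):
    """Leave-one-out k-nearest-neighbor regression: predict each row of y as the
    mean of y over the k rows whose x is closest (excluding the row itself)."""
    sq = (x ** 2).sum(axis=1)
    d = sq[:, None] + sq[None, :] - 2.0 * x @ x.T    # squared Euclidean distances
    np.fill_diagonal(d, np.inf)                      # never use a stimulus to predict itself
    nn = np.argsort(d, axis=1)[:, :k]                # indices of the k nearest neighbors
    return y[nn].mean(axis=1)

def variance_explained(y_true, y_pred):
    """Pooled proportion of the total variance in y_true captured by y_pred."""
    resid = ((y_true - y_pred) ** 2).sum()
    total = ((y_true - y_true.mean(axis=0)) ** 2).sum()
    return 1.0 - resid / total

# Hypothetical data: 480 stimuli, 24 rich categories (20 stimuli each) with rating noise.
rng = np.random.default_rng(1)
n_stim, n_rich = 480, 24
rich_cat = np.arange(n_stim) % n_rich
rich = np.eye(n_rich)[rich_cat] + rng.normal(scale=0.1, size=(n_stim, n_rich))
# Hypothetical coarse coding: each of six labels lumps together four rich categories.
six = np.eye(6)[rich_cat // 4]

r2_rich_from_six = variance_explained(rich, knn_loo_predict(six, rich))
r2_six_from_rich = variance_explained(six, knn_loo_predict(rich, six))
print(f"rich judgments explained by six labels: {r2_rich_from_six:.2f}")
print(f"six labels explained by rich judgments: {r2_six_from_rich:.2f}")
```

The asymmetry is the point: the rich judgments predict the six coarse labels almost perfectly, whereas the six labels recover only a small share of the rich judgments. This mirrors the pattern, though not the specific values, shown in Figure 6.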

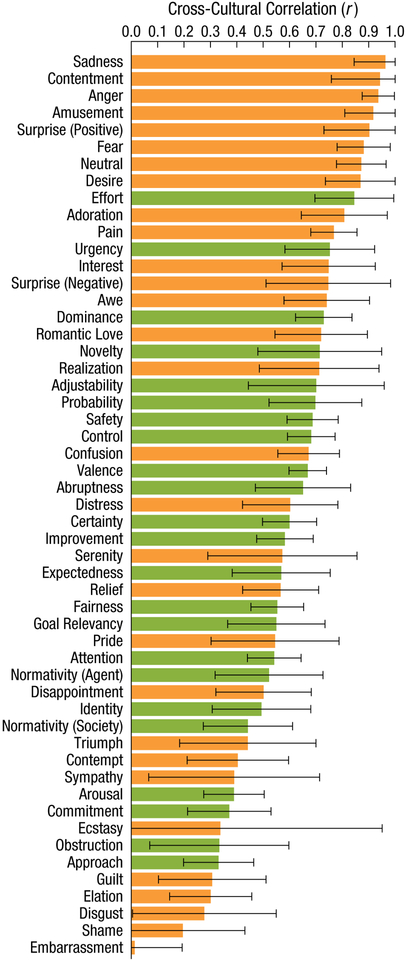

Although valence and arousal capture a small proportion (around 30%) of the variance in emotional experience and emotion recognition, it is worth asking whether this portion represents what is preserved across cultures. Another recent study (Cowen et al., 2019) offers an initial answer to this question by exploring how people across cultures conceptualize emotional expression in prosody (i.e., the non-lexical patterns of tune, rhythm, and timbre in speech). U.S. and Indian participants were presented with 2,519 speech samples of emotional prosody produced by 100 actors from five cultures and were asked, in separate response formats, to judge the samples in terms of 30 emotion categories and 23 more general appraisals (e.g., valence, arousal). Statistical analyses revealed that emotion categories (including many beyond the basic six, such as amusement, contentment, and desire) drove similarities in emotion recognition across cultures more than did many fundamental appraisals, even valence (pleasantness vs. unpleasantness), which Barrett and colleagues and others appear to consider the foundational building block of the conceptualization of emotion (Barrett, 2006b; Colibazzi, 2013; Russell, 2003). Figure 7 shows the degree to which emotion-category judgments and core-affect appraisals of emotional prosody are similar across the two cultures. These results cast doubt on the notion that cultural universals in the emotions people recognize in expression are constructed from the perception of valence, arousal, and other general appraisals.

Fig. 7.

Correlations in the meaning of emotional speech prosody across cultures (Cowen et al., 2019). The correlation (r) for each emotion category (orange bars) and scale of appraisal (green bars) captures the degree to which each judgment is preserved across participants from India and the United States across 2,519 vocalizations. Our methods control for within-culture variation in each judgment. Error bars represent standard error. For an interactive map of the varieties of emotion recognized cross-culturally in speech prosody, see https://s3-us-west-1.amazonaws.com/venec/map.html.
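The caption of Figure 7 notes that the cross-cultural correlations control for within-culture variation. One standard way to do this, sketched below in Python as an assumption rather than as the authors’ exact method, is to divide the raw correlation between the two cultures’ mean ratings by the geometric mean of each culture’s split-half reliability (Spearman-Brown corrected), so that disagreement among raters within a culture is not mistaken for disagreement between cultures. The data are simulated: 10 hypothetical raters per culture judge 400 clips whose underlying meaning is identical across cultures.

```python
import numpy as np

def spearman_brown(r_half):
    """Project a split-half correlation to the reliability of the full-group mean."""
    return 2 * r_half / (1 + r_half)

def split_half_reliability(ratings, rng):
    """ratings: (n_raters, n_stimuli) scores on one judgment within one culture."""
    order = rng.permutation(ratings.shape[0])        # random split of raters
    half1 = ratings[order[::2]].mean(axis=0)
    half2 = ratings[order[1::2]].mean(axis=0)
    return spearman_brown(np.corrcoef(half1, half2)[0, 1])

def cross_culture_r(ratings_a, ratings_b, rng):
    """Raw and reliability-corrected correlation between two cultures' mean ratings."""
    r_raw = np.corrcoef(ratings_a.mean(axis=0), ratings_b.mean(axis=0))[0, 1]
    ceiling = np.sqrt(split_half_reliability(ratings_a, rng) *
                      split_half_reliability(ratings_b, rng))
    return r_raw, r_raw / ceiling

# Hypothetical data: one judgment of 400 clips by 10 raters per culture,
# with the same underlying meaning of each clip in both cultures.
rng = np.random.default_rng(3)
meaning = rng.normal(size=400)
ratings_us = meaning + rng.normal(scale=2.0, size=(10, 400))
ratings_in = meaning + rng.normal(scale=2.0, size=(10, 400))
r_raw, r_corrected = cross_culture_r(ratings_us, ratings_in, rng)
print(f"raw r = {r_raw:.2f}, corrected r = {r_corrected:.2f}")
```

Because both simulated cultures share the same underlying meaning, the corrected value lands near 1 while the raw value is noticeably lower. With real data, sampling error can push a corrected value slightly above 1.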

Toward a Future Science of Emotion and Its Applications

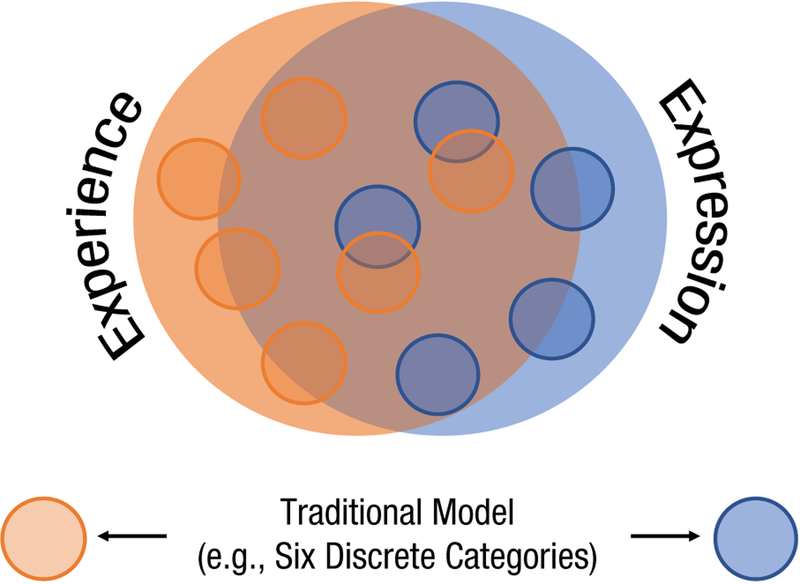

The “common view” model of emotion portrayed in Figure 1a is incomplete in essential ways. Events or stimuli do not elicit single emotions; instead, they elicit a wide array of emotions and emotional blends, mediated by appraisals. Emotional experience does not reduce to six emotions; it spans a complex space of 25 or so kinds of emotional experience and their blends (e.g., Fig. 3). Emotional experience manifests itself not in prototypical facial-muscle configurations alone but in multimodal expressions involving the voice, touch, posture, gaze, head movements, and the body, with many varieties of expression within each modality (e.g., Cordaro, Keltner, et al., 2016, in press; Cowen et al., 2018, 2019; Cowen & Keltner, in press; Figs. 4 and 5). Social observers do not necessarily label expressions with single emotion words but instead use a richer conceptual language of inferred causes and appraisals, ascribed intentions, and inferred relationships between the expresser and their environment, including the observer. The realm of emotion is a complex, high-dimensional space.

These empirical advances at the heart of our review bring into sharp focus the problems inherent in attempts to draw conclusions about the diagnostic value of facial expression (or, for that matter, of any other modality of emotional expression) from studies that sort expressions and reported emotional experiences into six discrete categories. In particular, such studies ignore the majority of explainable variance in any modality of emotional response and thus weaken the validity of conclusions about its diagnostic value. The basic six (anger, disgust, fear, happiness, sadness, and surprise), it is now clear, are a small subset of the emotions people might experience and express in any context. Moreover, facial-muscle movements are just a portion of expressive behavior, and single words representing just six emotions capture only a portion of what observers infer from that behavior. When studies seek to link elicitors to single experiences, experiences to prototypical facial expressions, or expressions to observer judgments, they leave potentially explainable variance unexamined, a problem amplified by the narrow focus on the basic six.

More specifically, recall that the basic six represent 30%, at best, of the explainable variance in experience and expression. Correlations between expression and antecedent elicitors, reported experience, or observer judgment sorted into the basic six therefore relate to one another measures that capture only 30% of the explainable variance. As depicted in Figure 8, the remaining 70% of the variation in expression is left unaccounted for but still adds to the total variance, which determines the denominator of the correlation between expression and other phenomena. As a result, the narrow focus on the basic six likely greatly underestimates the relations between events and expressive behavior, between experiences and expressive behavior, and between expressions and observer inference.
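The denominator argument can be made concrete with a deliberately simplified, hypothetical scalar model. Suppose expression is a single score E = B + R whose variance splits into a part B that a basic-six coding registers (30%) and a remainder R that it cannot register (70%). Even if a basic-six measure of experience tracked B perfectly, its correlation with expression would be Var(B)/√(Var(B)·Var(E)) = √0.30 ≈ .55, because R still inflates the variance in the denominator. The Python simulation below checks that ceiling and shows how ordinary measurement noise lowers the correlation further. It illustrates the logic only and is not a model fitted to the meta-analytic data.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 100_000
captured = 0.30   # hypothetical share of expression variance a basic-six coding registers

b = rng.normal(scale=np.sqrt(captured), size=n)         # component the coarse coding registers
rest = rng.normal(scale=np.sqrt(1 - captured), size=n)  # systematic variance it misses
expression = b + rest                                    # full expressive behavior (variance ~1)

# Best case: the basic-six experience measure tracks the registered component perfectly.
r_sim = np.corrcoef(b, expression)[0, 1]
print(f"simulated r = {r_sim:.3f}, analytic ceiling sqrt(0.30) = {np.sqrt(captured):.3f}")

# A noisy report of the same component (reliability 0.5) lowers r further.
noisy_report = b + rng.normal(scale=np.sqrt(captured), size=n)
r_noisy = np.corrcoef(noisy_report, expression)[0, 1]
print(f"with a noisy report: r = {r_noisy:.3f}")
```

With a report whose reliability is 0.5, the expected correlation drops to 0.30/√0.60 ≈ .39, even though, in this toy model, the registered components of experience and expression are identical.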

Fig. 8.

Illustration of why a basic-six model of emotion should be expected to generate low estimates of coherence between emotional experience and expression. Treating emotional experience and expression as six discrete categories captures about 30% of the systematic variance in each. As this diagram illustrates, measures that capture 30% of the variance in each of two phenomena may capture only a fraction of the shared variance between them. This is likely to be true when we measure emotional experience and expression in terms of the basic six. For example, a model in which happiness encompasses all positive emotion and maps one-to-one onto a smile cannot account for degrees of happiness, for positive emotions that do not necessarily involve smiles (e.g., awe, desire, triumph, ecstasy, pride), or for emotions and communicative displays that are not necessarily positive but also involve smiles (e.g., embarrassment, posed smiles). These are sources of systematic variance disregarded by the basic six (i.e., the area outside the small circles).

These concerns, and the high-dimensional taxonomy of emotion uncovered in the studies we have reviewed, point to an alternative approach to the future scientific study of emotional expression, and of emotion more generally (see Table 1):

To capture experience, measure appraisals (e.g., valence, arousal) and emotion categories.

Use methods that can account for numerous dimensions of emotion, including those we have brought into focus in our review, and that capture emotional blends, rather than focusing narrowly on the basic six.

Look beyond prototypical facial expressions to varying multimodal expressions.

Capture the more complex inferences observers make in ascribing meaning to expressive behavior.

From studies guided by these methods, answers to intriguing questions await. How do appraisals produce the dozens of distinct varieties of emotional response we observe and their fascinating blends? How do complex blends of emotional experience map onto the different modalities of expressive behavior? To what extent do the different modalities of expressive behavior—face, voice, body, gaze, and hands—signal the dozens of emotions that, as we have shown, people conceptualize and communicate? And building on findings reviewed here showing that perceivers conceptualize emotion at a basic level, from which they may infer broader appraisals (valence, arousal), and perhaps intentions and causes, what is the nature of that inferential process, and how might it vary with development, culture, and personality? What is the neurophysiological patterning that maps onto the 25 or so emotions considered in this article?

To achieve a full understanding of the diagnostic value of expression, researchers will need more advanced methods to account for the complex structures of emotional experience, expression, and real-world emotion attribution. Studies will need to accommodate the dozens of distinct dimensions of facial-muscle movement, vocal signaling, and bodily movement from which people reliably infer distinct emotions. They will need to capture the equally complex and high-dimensional space of emotional experiences that people reliably distinguish. Finally, they will need to account for social contingencies (including how expressive signals may reflect communicative goals when they diverge from emotional experience) and for how real-world emotion attribution incorporates information about a person’s circumstances, temperament, expressive tendencies, and cultural context. Accommodating all of these factors requires statistical models complex enough to call for large-scale data collection, statistical modeling, and machine-learning methods. (This approach to capturing the diagnostic value of expression is, of course, complementary to controlled experiments that probe the mechanisms underlying specific expressive signals.)

In many ways, this work is already well under way in neuroscience. For example, brain-imaging studies that have attempted to map the basic six emotions onto activity in coarse brain regions have yielded inconsistent results (Hamann, 2012; Lindquist, Wager, Kober, Bliss-Moreau, & Barrett, 2012; Pessoa, 2012; Scarantino, 2012), but more recent studies have found that multivariate patterns of brain activity can be reliably decoded into the basic six categories (Kragel & LaBar, 2015, 2016; Saarimäki et al., 2016). These seemingly discrepant findings can be explained in part by the limitations of a basic-six model of emotion. Studies designed to uncover neural representations of the basic six inevitably confound many distinct emotional responses, for example, by sorting adorable, beautiful, and erotic images into a single category of “happiness,” or empathically painful injuries and unappetizing food into a single category of “disgust.” Their results could thus vary depending on the profile of emotions actually evoked by the stimuli placed into each category. Multivariate predictive methods are more robust to these confounds because they can discriminate several brain-activity patterns from one another by taking into account the levels of activation or deactivation in many regions at once. However, an alternative approach, one more conducive to nuanced inferences about the brain mechanisms underlying emotion-related responses, is to incorporate a more precise taxonomy of emotion. Indeed, recent neuroscience investigations incorporating high-dimensional models of emotion, informed by the work we have reviewed, are beginning to uncover more specific neural representations of more than 15 distinct emotions (Koide-Majima, Nakai, & Nishimoto, 2018; Kragel, Reddan, LaBar, & Wager, 2018). This ongoing work promises to significantly advance our understanding of the neural mechanisms of emotion-related response.
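To make concrete why pooling across regions can hide what a multivariate pattern reveals, the sketch below uses a correlation-based nearest-centroid classifier. This is one simple form of multivariate pattern decoding, chosen for illustration; it is not the specific method used in the studies cited above. The four-region activation values and the two category labels are hypothetical toy numbers, not data. The two test trials have the same mean activation across regions, so a single pooled measure cannot tell them apart, yet each is assigned to the category whose cross-region pattern it resembles.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length activation patterns."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = (sum(a * a for a in dx) * sum(b * b for b in dy)) ** 0.5
    return num / den


def centroids(training):
    """Average the training patterns of each category, region by region."""
    return {
        label: [mean(values) for values in zip(*patterns)]
        for label, patterns in training.items()
    }


def decode(pattern, cents):
    """Assign the category whose centroid pattern correlates best with `pattern`."""
    return max(cents, key=lambda label: pearson(pattern, cents[label]))


# Hypothetical activation values for four brain regions (toy numbers, not data).
training = {
    "amusement": [[1.0, -0.8, 0.3, 0.1], [0.9, -1.0, 0.2, 0.3]],
    "awe": [[-0.9, 1.0, 0.1, 0.2], [-1.0, 0.8, 0.3, 0.3]],
}
test_trials = [[0.8, -0.7, 0.2, 0.3], [-0.7, 0.9, 0.2, 0.2]]

cents = centroids(training)
for trial in test_trials:
    # Both trials share the same pooled mean activation (about 0.15), so one
    # averaged measure cannot separate them; the cross-region pattern can.
    print(round(mean(trial), 2), decode(trial, cents))
```

Run as written, this prints `0.15 amusement` and then `0.15 awe`. Correlation, rather than Euclidean distance, is used here so that the decision rests on the relative shape of the pattern across regions rather than on overall activation level.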

Similar work is well under way in the study of the peripheral physiological correlates of emotion. One recent meta-analysis of peripheral physiological responses associated with a wide range of distinct emotions found that several positive emotions (e.g., amusement, awe, contentment, desire, enthusiasm), as well as self-conscious emotions, have subtly distinct patterns of peripheral physiological response (Kreibig, 2010). Other, more focused work has dissociated the physiological correlates of food-related disgust (decreases in gastric activity) from those of empathic pain (decelerated heart rate and increased heart rate variability), emotions that a basic-six approach would group under “disgust” but that a high-dimensional emotion taxonomy distinguishes. Likewise, recent work has uncovered distinct peripheral physiological correlates for five different positive emotions (enthusiasm, romantic love, nurturant love, amusement, and awe; Shiota, Neufeld, Yeung, Moser, & Perea, 2011), all of which a basic-six approach would group under “happiness.” As this growing body of work beyond the basic six indicates, fully capturing the diagnostic value of peripheral physiological response will require studies that incorporate a high-dimensional taxonomy of emotion and inductive modeling approaches.

Moving beyond the basic six to a high-dimensional taxonomy of emotion will, we believe, allow applications of this science to benefit our culture more generally. Richer approaches to empathy and emotional intelligence can teach people to perceive subtler expressions of emotions that are invaluable to relationships (compassion, desire, sympathy) and work (gratitude, awe, interest, triumph). Children might learn to hear how the human voice conveys emotion in ways that resemble the emotion they perceive in a cello or guitar solo (Juslin & Laukka, 2003). The high-dimensional taxonomy of emotion language (Fig. 2), experience (Fig. 3), and expression (Figs. 4 and 5) detailed here should provide invaluable information to programs that seek to train children who live with autism and other conditions defined by difficulties in representing and reading one’s own and others’ emotions. Technologies that automatically map emotional expressions into a rich multidimensional space may have life-altering clinical applications, such as pain detection in hospitals, applications that call for close collaboration between science and industry.

The narrow focus on the basic six, something of an accidental intellectual byproduct of the seminal Ekman and Friesen research 50 years ago, has inadvertently led to an entrenched state of affairs in the science of emotion that features diametrically opposed positions, derived from the same data, about the recognition of six emotions from six discrete configurations of facial-muscle movements. Barrett et al.’s review identifies some important shortcomings of that approach. However, emotional expression is far richer and more complex than six prototypical patterns of facial-muscle movement. As the field opens up to a high-dimensional taxonomy of emotion, more refined and nuanced answers to central questions are emerging, as are entire new fields of inquiry.

Funding

D. Sauter’s research is funded by the European Research Council (ERC) under the European Union’s Horizon 2020 Program for Research and Innovation Grant 714977.

Footnotes

Declaration of Conflicting Interests

The author(s) declared that there were no conflicts of interest with respect to the authorship or the publication of this article.

References

- Anderson CL, Monroy M, & Keltner D (2018). Emotion in the wilds of nature: The coherence and contagion of fear during threatening group-based outdoors experiences. Emotion, 18, 355–368. doi: 10.1037/emo0000378

- Anikin A, & Persson T (2017). Nonlinguistic vocalizations from online amateur videos for emotion research: A validated corpus. Behavior Research Methods, 49, 758–771. doi: 10.3758/s13428-016-0736-y

- App B, McIntosh DN, Reed CL, & Hertenstein MJ (2011). Nonverbal channel use in communication of emotion: How may depend on why. Emotion, 11, 603–617. doi: 10.1037/a0023164

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, … Bentin S (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science, 19, 724–732. doi: 10.1111/j.1467-9280.2008.02148.x

- Bachorowski JA, & Owren MJ (2001). Not all laughs are alike: Voiced but not unvoiced laughter readily elicits positive affect. Psychological Science, 12, 252–257. doi: 10.1111/1467-9280.00346

- Baird A, Rynkiewicz A, Podgórska-Bednarz J, Lassalle A, Baron-Cohen S, Grabowski K, … Łucka I (2018). Emotional expression in psychiatric conditions: New technology for clinicians. Psychiatry and Clinical Neurosciences, 73, 50–62. doi: 10.1111/pcn.12799

- Banse R, & Scherer KR (1996). Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology, 70, 614–636. doi: 10.1037/0022-3514.70.3.614

- Bänziger T, Mortillaro M, & Scherer KR (2012). Introducing the Geneva Multimodal expression corpus for experimental research on emotion perception. Emotion, 12, 1161–1179. doi: 10.1037/a0025827

- Barrett LF (2006a). Are emotions natural kinds? Perspectives on Psychological Science, 1, 28–58.

- Barrett LF (2006b). Valence is a basic building block of emotional life. Journal of Research in Personality, 40, 35–55. doi: 10.1016/j.jrp.2005.08.006

- Boster JS (2005). Emotion categories across languages. In Cohen H & Lefebvre C (Eds.), Handbook of categorization in cognitive science (pp. 187–222). Oxford, England: Elsevier. doi: 10.1016/B978-008044612-7/50063-9

- Buss A, & Plomin R (1984). Temperament: Early personality traits. Hillsdale, NJ: Erlbaum.

- Campos B, Shiota MN, Keltner D, Gonzaga GC, & Goetz JL (2013). What is shared, what is different? Core relational themes and expressive displays of eight positive emotions. Cognition & Emotion, 27, 37–52.

- Carroll JM, & Russell JA (1996). Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology, 70, 205–218. doi: 10.1037/0022-3514.70.2.205

- Chen Z, & Whitney D (2019). Tracking the affective state of unseen persons. Proceedings of the National Academy of Sciences, USA, 116, 7559–7564. doi: 10.1073/pnas.1812250116

- Colibazzi T (2013). Journal Watch of Neural systems subserving valence and arousal during the experience of induced emotions. Journal of the American Psychoanalytic Association, 61, 334–337. doi: 10.1177/0003065113484061

- Cordaro DT, Brackett M, Glass L, & Anderson CL (2016). Contentment: Perceived completeness across cultures and traditions. Review of General Psychology, 20, 221–235. doi: 10.1037/gpr0000082

- Cordaro DT, Keltner D, Tshering S, Wangchuk D, & Flynn LM (2016). The voice conveys emotion in ten globalized cultures and one remote village in Bhutan. Emotion, 16, 117–128. doi: 10.1037/emo0000100

- Cordaro DT, Sun R, Kamble S, Hodder N, Monroy M, Cowen AS, … Keltner D (in press). Beyond the “Basic 6”: The recognition of 18 facial bodily expressions across nine cultures. Emotion.

- Cordaro DT, Sun R, Keltner D, Kamble S, Huddar N, & McNeil G (2018). Universals and cultural variations in 22 emotional expressions across five cultures. Emotion, 18, 75–93. doi: 10.1037/emo0000302

- Cowen AS, Elfenbein HA, Laukka P, & Keltner D (2018). Mapping 24 emotions conveyed by brief human vocalization. American Psychologist.

- Cowen AS, & Keltner D (2017). Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proceedings of the National Academy of Sciences, USA, 114, E7900–E7909. doi: 10.1073/pnas.1702247114

- Cowen AS, & Keltner D (2018). Clarifying the conceptualization, dimensionality, and structure of emotion: Response to Barrett and Colleagues [Letter]. Trends in Cognitive Sciences, 22, 274–276. doi: 10.1016/j.tics.2018.02.003

- Cowen AS, & Keltner D (2019). The taxonomy of emotion concepts. Manuscript in preparation.

- Cowen AS, & Keltner D (in press). What the face displays: Mapping 28 emotions conveyed by naturalistic expression. American Psychologist.

- Cowen AS, Laukka P, Elfenbein HA, Liu R, & Keltner D (2019). The primacy of categories in the recognition of 12 emotions in speech prosody across two cultures. Nature Human Behaviour, 3, 369–382. doi: 10.1038/s41562-019-0533-6

- Crivelli C, Carrera P, & Fernández-Dols JM (2015). Are smiles a sign of happiness? Spontaneous expressions of judo winners. Evolution & Human Behavior, 36, 52–58. doi: 10.1016/j.evolhumbehav.2014.08.009

- Crivelli C, & Fridlund AJ (2018). Facial displays are tools for social influence. Trends in Cognitive Sciences, 22, 388–399. doi: 10.1016/j.tics.2018.02.006

- Crivelli C, Russell JA, Jarillo S, & Fernández-Dols J-M (2016). The fear gasping face as a threat display in a Melanesian society. Proceedings of the National Academy of Sciences, USA, 113, 12403–12407. doi: 10.1073/pnas.1611622113

- Crivelli C, Russell JA, Jarillo S, & Fernández-Dols JM (2017). Recognizing spontaneous facial expressions of emotion in a small-scale society of Papua New Guinea. Emotion, 17, 337–347. doi: 10.1037/emo0000236

- Dael N, Mortillaro M, & Scherer KR (2012). Emotion expression in body action and posture. Emotion, 12, 1085–1101. doi: 10.1037/a0025737

- Diamond LM (2003). What does sexual orientation orient? A biobehavioral model distinguishing romantic love and sexual desire. Psychological Review, 110, 173–192. doi: 10.1037/0033-295X.110.1.173

- Du S, Tao Y, & Martinez AM (2014). Compound facial expressions of emotion. Proceedings of the National Academy of Sciences, USA, 111, E1454–E1462. doi: 10.1073/pnas.1322355111

- Duran JI, & Fernández-Dols J-M (2018). Do emotions result in their predicted facial expressions? A meta-analysis of studies on the link between expression and emotion. PsyArXiv preprint. Retrieved from https://psyarxiv.com/65qp7

- Eisenberg N, Schaller M, Fabes RA, Bustamante D, Mathy RM, Shell R, & Rhodes K (1988). Differentiation of personal distress and sympathy in children and adults. Developmental Psychology, 24, 766–775. doi: 10.1037/0012-1649.24.6.766

- Ekman P (1993). Facial expression and emotion. American Psychologist, 48, 384–392. doi: 10.1037/0003-066X.48.4.384

- Ekman P (1997). Should we call it expression or communication? Innovation: The European Journal of Social Science Research, 10, 333–344. doi: 10.1080/13511610.1997.9968538

- Ekman P (2016). What scientists who study emotion agree about. Perspectives on Psychological Science, 11, 31–34.