Abstract

Focused Ion Beam Scanning Electron Microscopy (FIB-SEM) is an imaging approach that enables analysis of the 3D architecture of cells and tissues at resolutions that are 1–2 orders of magnitude higher than that possible with light microscopy. The slow speeds of data collection and manual segmentation are two critical problems that limit the more extensive use of FIB-SEM technology. Here, we present an easily accessible robust method that enables rapid, large-scale acquisition of data from tissue specimens, combined with an approach for semi-automated data segmentation using the open-source machine learning Weka segmentation software, which dramatically increases the speed of image analysis. We demonstrate the feasibility of these methods through the 3D analysis of human muscle tissue by showing that our process results in an improvement in speed of up to three orders of magnitude as compared to manual approaches for data segmentation. All programs and scripts we use are open source and are immediately available for use by others.

Keywords: FIB-SEM, 3D electron microscopy, Machine learning, Aging, Skeletal muscle, Mitochondrial structure, Semi-automated segmentation, Tissue imaging

1. Introduction

Focused Ion Beam Scanning Electron Microscopy (FIB-SEM) is an approach for 3D imaging of specimens with thicknesses greater than ~1 μm that cannot be imaged using transmission electron microscopy due to their thickness. In biological FIB-SEM imaging, a focused gallium ion beam is used to progressively remove material from the surface of a macroscopic specimen such as a cell pellet or tissue specimen, with the recording of a backscattered electron microscopic image using a scanning electron beam. The resulting volumes contain useful information on subcellular architecture at spatial resolutions as high as ~10 nm. FIB-SEM holds great promise for high resolution study and visualization of tissues through segmentation and can provide new and unexpected insights into the organization of organelles and membranes in the cell (Glancy et al., 2017; Glancy et al., 2015; Narayan and Subramaniam, 2015).

However, 3D structural analysis of sub-cellular assemblies requires accurate segmentation and delineation of organelle boundaries, as currently used, the speed of interpreting the image stack using manual segmentation approaches is the principal bottleneck in the application of FIB-SEM.

To realistically address biologically and medically interesting problems, increases in the speed of segmentation of at least two orders of magnitude are required. A recent estimate put the amount of time required, using present approaches for manual segmentation, to segment a 1x105 um3 volume to take between 2 × 104 and 1 × 105 work hours to complete (Berning et al., 2015), this is not including the time taken to acquire such large volumes at high resolutions in the first place.

We estimate that to obtain a statistically meaningful analysis of the effect of aging on human tissues we would require several volumes from an individual, each a minimum of 1 × 104 μm3 in size across a population dispersed over a wide age range. Using this approach, to discern potential age-related variations in mitochondrial architecture with manual segmentation could take years.

Machine learning, and other advanced computational techniques have begun to dramatically reduce the time taken to convert an imaged volume to discrete and quantifiable structures within the volume (Januszewski et al., 2018; Berning et al., 2015; Lucchi et al., 2010; Meijs et al., 2017; Camacho et al., 2018; Kasaragod et al., 2018).

The approach we present here, is a robust, easily accessible, step-by-step workflow from sample preparation to 3D visualization using the readily available Weka segmentation platform on ImageJ (Arganda-Carreras et al., 2017) combined with parallelization scripts for high-throughput segmentation of large volumes. This approach dramatically reduces the time required to describe such structural characteristics on a population wide scale and takes an integrative approach towards sample collection, segmentation and analysis with a view to creating a versatile but accurate methodology for tackling a multitude of biologically relevant problems.

Our methodology enabled the collection of 3.44 × 105 μm3 of human skeletal muscle samples from four healthy male individuals in ~12 instrument days. Segmentation of the data sets was achieved at the rate of ~2800 μm3 per hour based on actual time estimates of time taken from initial training to the generation of complete 3D segmentations. This corresponds to a ~500–3000-fold increase in speed relative to manual segmentation without loss of the detail required to interpret the image data. Our goal is to combine this high-resolution structural information with biochemical and proteomic analyses of the samples to define the biology of aging. Using our technique described herein, we have taken a major step towards this goal by quantifying multiple 3D characteristics of human skeletal muscle mitochondrial networks in a population of healthy individuals aged 65 and older.

2. Materials and methods

2.1. Candidate selection and muscle biopsy

This study was conducted in healthy men participating in the Baltimore Longitudinal Study and Aging (BLSA) and the Genetic and Epigenetic Signatures of Translational Aging Laboratory Testing (GESTALT) studies. The design and description of the BLSA and GESTALT studies have been previously reported (Tanaka et al., 2018; Shock et al., 1984; Stone and Norris, 1966). Skeletal muscle biopsies were performed in fasting conditions as described elsewhere (Gonzalez-Freire et al., 2018). Briefly, a ~250 mg muscle biopsy was obtained from the middle portion of the vastus lateralis muscle using a 6-mm Bergstrom biopsy needle inserted through the skin in the muscle. A small portion of muscle tissue (~5mg) was immediately placed in 2% Glutaraldehyde (GA) and 2% Paraformaldehyde (PFA) in 100 mM sodium cacodylate buffer, pH 7.3–7.4 at 4 °C until required for sample preparation. The rest of the biopsy specimen was snap frozen in liquid nitrogen and subsequently stored at −80 °C until used for further analyses.

2.2. Fixation, contrasting, and embedding

Muscle biopsy samples from human donors were fixed with 5% glutaraldehyde in 100 mM sodium cacodylate buffer at pH 7.4 as in a murine skeletal muscle study (Glancy et al., 2015). In order to achieve the contrast required to be able to consistently identify mitochondria with similar signal to noise ratio the standard post-fixation protocol used for the murine muscle skeletal muscle samples was changed. Here we post-fixed with 2% Osmium Tetroxide (OsO4) in sodium cacodylate buffer for 1hr at RT, washed with ddH2O and treated with 4% tannic acid in sodium cacodylate buffer. A second treatment of 2% OsO4 in cacodylate buffer either reduced or not reduced with 0.6% Potassium Ferrocyanide was performed for 1hr at RT. Samples were then washed in ddH2O and treated with 2% Uranyl Acetate (UA) in ddH2O at 4 °C overnight (Lewinson, 1989; Kobayashi et al., 1980). The samples were then washed in ddH2O, 5 × 10 min, and dehydrated using a graded ethanol series ending in 100% propylene oxide. Infiltration of embedding media was performed using a ratio of 2:1, 1:1, 1:2 propylene oxide to Eponate12 resin formula (EMS). Samples were embedded in resin molds and placed in an oven set at 60 °C overnight for polymerization.

2.3. Area selection for FIB-SEM analysis

Areas of muscle were chosen for FIB-SEM data collection following a survey of 0.5–1 μm thick sections of resin-embedded muscle tissue; sections were created using an Ultracut S microtome from Leica Microsystems. The sections were stained with Toluidine blue which stains nucleic acids blue and polysaccharides purple. Once stained, the orientation and morphology of the fiber was assessed using a light microscope. Suitable areas with intact muscle fibers were chosen for FIB-SEM data collection using the last section taken from the top of the block-face, and digital images were taken for reference. These images were used as maps to pinpoint the previously selected areas for data collection in the FIB-SEM (Glancy et al., 2015). The resin was cut to create a suitable sample for SEM and samples were sonicated in ethanol: water (70:30) for 15 min to remove dust and particulates which would hinder imaging. The sample was then mounted on an aluminum stub using a double-sided adhesive conductive carbon tab, and the sides painted with silver paint to prevent charge build-up. Once the sample dried, it was placed in a sputter coater (Cressington model 108), and coated with gold for 40 s at 30 mA. After gold coating, the sample was placed into the sample chamber of the FIB-SEM. FIB-SEM imaging was performed using a Zeiss NVision 40 microscope, with the SEM operated at 1.5 keV landing energy, a 60 μm aperture and backscattered electrons were recorded at an energy selective back-scattered electron (EsB) detector. The user interface employed ATLAS 3D from Carl Zeiss, consisting of a dual 16-bit scan generator assembly to simultaneously control both the FIB and SEM beams and dual signal acquisition inputs, as well as the necessary software and firmware to control the system.

The fiber of interest was located using the SEM, and the instrument was then brought to eucentric and coincidence point at a specimen tilt of 54°, i.e. the specimen height where the specimen does not move laterally with a change in tilt and where the focal point of both FIB and SEM coincide. Once the exact milling area was determined with reference to the microscope images, a protective platinum pad was laid down on top of the area using a Gas Injection System (GIS) of size 60 μm × 30 μm and 5 μm in thickness. Then alignment marks were etched into the platinum pad using an 80 pA FIB aperture to allow for automated tracking of milling progress, SEM focus and stigmation during acquisition. After alignment etching, the platinum pad was covered with a carbon pad using the GIS to protect the etched marks from the milling process. After deposition of the carbon pad, a trench was dug using a 27 nA FIB aperture to allow for line-of-sight for the SEM ESB detector. After the trench was dug, the imaging face was polished using a 13 nA FIB aperture. The FIB aperture was changed to 700 pA and SEM imaging area selected (Typical Image size: 4000 px × 2000 px/Pixel size: 15 × 15 × 15 nm [xyz]) the automated acquisition software was set up and run until all the sample area was acquired.

2.4. Image processing and segmentation

After SEM acquisition the individual image files (.tif) were aligned using a cross-correlation algorithm (Murphy et al., 2011). The images were then opened in ImageJ, and the volume was cropped to ensure a minimum distance of at least 1 μm away from the cell boundary in any direction, this was performed to reduce measurement variability of mitochondrial density due to the non-uniform distribution of mitochondria near capillaries and cell boundaries. (Sjöström et al., 1982).

To reduce noise volumes were median filtered by 1 pixel in the x, y and z directions and then binned by 2 in all three axes to produce a final voxel size of 30 × 30 × 30 nm. The volume’s contrast was normalized and equalized using ImageJ’s “Enhance Contrast” function.

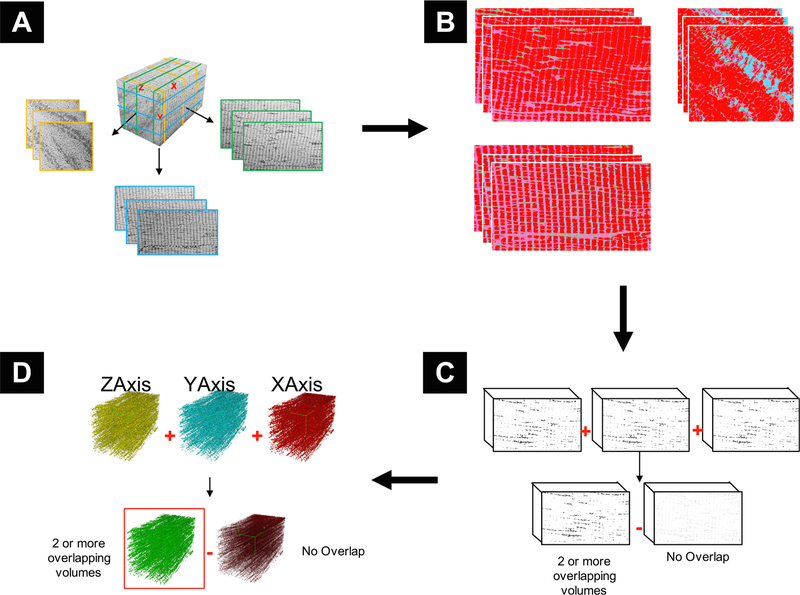

Sample images were required for preliminary training to construct the necessary machine learning classifiers for automatic segmentation. Referring to the schematic in Fig. 2, three representative slices (one from each 3rd of the volume), were taken at random from each of the principal axes: x, y and z (9 slices in total) (Fig. 2A). A classifier was trained for each slice by sampling several main structures found in each sample image (Fig. 2B). The primary structures, based on standard histological examples, were as follows: z-disk, mitochondria, A-band, I-band, sarcoplasmic reticulum and lipids. The classifier was trained using all training features available in the “Trainable Weka Segmentation” plugin for ImageJ Fiji, a robust machine learning plugin that is professionally maintained by The University of Waikato. The Weka algorithm, in brief, extracts image features using common filters that can be categorized as edge detectors (e.g. Laplacian and Sobel filters), texture filters, (such as minimum, maximum, and median filters), noise reduction filters (such as Gaussian blur and bilateral filter), and membrane detectors, which detect membrane-like structures of a specified thickness and size. Furthermore, the feature set also included additional features from the ImageScience suite (https://imagescience.org/meijering/software/imagescience).

Fig. 2.

Graphical representation of key steps in segmentation pipeline. A) Classifier Selection: Three representative slices are taken from each of the principal axes. B) Classifier Generation: Each slice is manually classified based on the organelles within the volume. C) Volume Classification and Refinement: The classifiers are applied to the entire volume and produce segmented volumes of each class. The mitochondrial class is isolated, and each of the 3 volumes from the same axes are combined and non-overlapping data removed to produce an axial volume. D) Axial Volume Combination: Each of the refined volumes from the principal axes are combined, and non-overlapping data is removed.

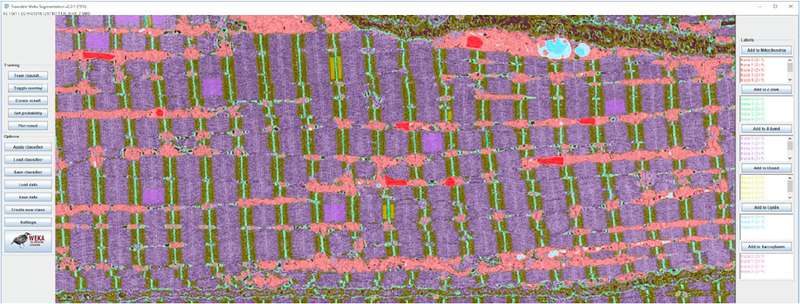

Since only 2D image features were calculated, classifiers were trained and applied on all three image axes to compensate for the loss of a third dimension. In our machine learning approach, we applied the multi-threaded version of the random forest classifier with 200 trees and 2 random features per node. Probability measurements of each class were generated, allowing for a class-by-class assessment of the performance of each classifier during training. Segmentation masks of the key skeletal structures were then outputted based on these probability measurements (Fig. 1).

Fig. 1.

Example of Weka software manual classification in ImageJ.

Once all 9 classifiers were trained (3 for each axis), they were exported as separate “.model” files and applied to each slice in the volume according to the respective axes which they were trained.

The segmentation of the full volume was performed on the Biowulf supercomputer cluster at NIH, and its implementation is as follows:

To simultaneously process many image slices at once, each slice was opened in a separate instance of ImageJ Fiji, and then Weka machine learning was executed in each instance. Since only 2D image features were calculated, each instance of ImageJ Fiji could effectively classify an image without needing access to any other image data.

Thus, images were simultaneously classified by parallel processors running multiple instances of ImageJ Fiji. Each instance executed a Beanshell script (source.bsh) that automatically performed Weka machine learning on a specified image using a specific classifier file. The process of opening instances of ImageJ Fiji was automated through the command line interface by using the existing “–headless” option that came with the ImageJ Fiji package. Biowulf effectively allocated and launched hundreds of processors at once with the use of the “swarm” command that already existed on the supercluster.

The command required a formatted file containing independent commands to distribute to each processor and to generate such a file quickly we wrote an automated Bash script “generate_swarm_script.sh”. If a different system other than Biowulf is being used, then it is advised to create a script that launches parallel instances of ImageJ Fiji that execute the “source.bsh” Beanshell script. It should be noted that Weka machine learning is optimized to run faster by utilizing a substantial amount of RAM. For the classification of our large FIB-SEM images, we allocated 25 GB RAM per processor per image and ran between 16 and 28 processors in parallel.

A total of 9 semi-automated segmentation volumes were created. The 3 volumes from each axis were first added together using ImageJ’s “Image calculator” function, and density which did not overlap with at least one of the other 2 volumes was removed through simple thresholding (Fig. 2C). Each axis volume was then added together using the previously mentioned function, and density which did not overlap with at least one of the other 2 axes was removed through simple thresholding (Fig. 2D). The combination of several segmented volumes reduced the overall amount of noise and mitigated the effects of any artefacts such as curtaining and contrast variation in the volume. The volume was then filtered by 2 pixels in the x, y and z directions using ImageJ’s “Median 3D Filter” function and was used for statistical analysis of mitochondrial densities.

2.5. Quantitative evaluation of segmentation pipeline against manual standards

Semi-automated segmentation methods were compared to manually segmented volumes using the following metrics:

Absolute Volume Difference (AVD) (%): Absolute volume difference measurements were performed to measure the total volume difference between manually and automatically segmented volumes, allowing for a global metric of volume-to-volume difference. For labels with identical volume, %Difference (A, M) = 0, with increasing values indicating a greater volume difference between the two labels.

3D Model-to-Model distance (Mean Surface Distance/MSD): Both the manual and semi-automated volumes were converted to ASCII mesh surfaces using ImageJ’s “3D viewer” (Chmid, 2010). These meshes were then transferred to the Cloud Compare platform (Cloud Compare, 2018), where the manually segmented (reference) volume was compared to the semi-automatically generated (comparison) volume, using the “Compute cloud/mesh distance” tool a map of the model-to-model distance was created. The max distance between the reference and semi-automated datasets was set to 0.3 μm (any greater distance was set to the maximum threshold), and the model-to-model distance distribution was fitted to a Gaussian distribution, and the mean ± standard deviation calculated. A tricolor histogram was applied to the map with red representing semi-automated density areas greater than manually segmented density, blue representing semi-automated density areas less than manually segmented density and white represents <~15 nm difference between structures.

Sensitivity, Specificity, Accuracy, Dice Similarity Coefficient (DSC) and Cohen’s Kappa (κ) calculations. Sensitivity, specificity and accuracy were calculated according to the conventional equations. The Dice similarity coefficient was calculated using a variation of the original formula (Dice, 1945):

Cohen’s Kappa was calculated according to the equation found in (McHugh, 2012):

where Pa = Actual Observed Agreement = Accuracy;

where n = total number of observations = TP + FP + FN + TN and TP = True positive (Automated [A] ∩ Manual [M]); FP = False Positive (A\M); FN = False Negative (M\A) and TN = True Negative (U\[A∪B]).

2.6. Statistical analysis of mitochondrial distribution in human skeletal muscle

Mitochondrial Density measurements were made by splitting each volume into 100 μm3 segments and the volume of white pixels in the binary segmented image were measured using the “3D Objects Counter” function in ImageJ. Mitochondrial Orientation, aspect ratio, sphericity and surface area to volume ratio were calculated according to the methods described in (Bleck et al., 2018).

3. Results and discussion

3.1. Qualitative analysis of mitochondrial distribution in human skeletal muscle

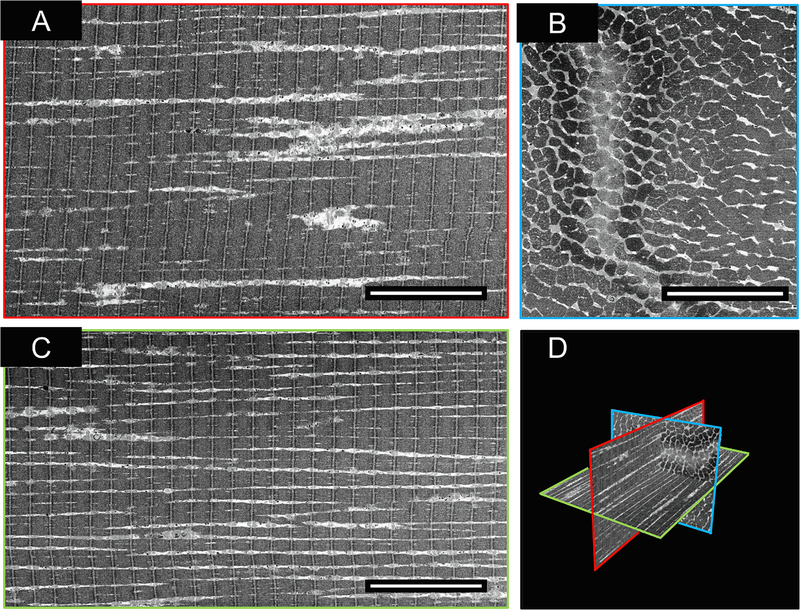

In Fig. 3, we present orthogonal views of the SEM images from a representative FIB-SEM data collection run with a muscle tissue specimen. Early in the design of our experiments, we found that increasing the voxel size from 5 × 5 × 15 nm3 to 15 × 15 × 15 nm3 increased the rate of data acquisition ~3-fold from 500 μm3 per hour to 1500 μm3 per hour. We established that the information required for segmentation was not compromised by the use of larger pixel sizes in the × and y dimensions (Fig. 3 – Supplemental Fig. 1) for the purpose of recognizing mitochondria.

Fig. 3.

A typical example of a muscle fiber acquired with FIB-SEM at a voxel size of 15 nm3. A) Z-Axis (Imaging) face of FIB-SEM volume. B) X-Axis face of FIB-SEM volume. C) Y-Axis face of FIB-SEM volume. D) 3D Orthoslice representation of slices A-C. Scale Bar = 10 μm. Manual analysis of the 3D image stack shows that the mitochondria display two distinct architectural arrangements, with one class displaying thick, densely packed networks (type A) and those with thin, sparse networks (type B).

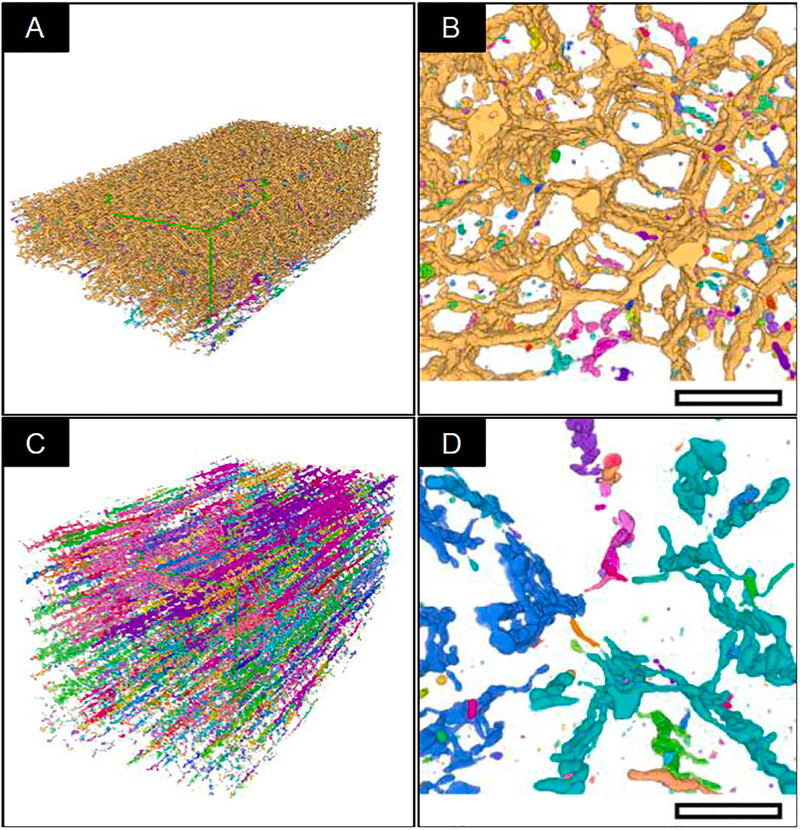

The overall spatial arrangements of these mitochondrial types (Fig. 4) are distinct, with the type A fibers (Fig. 4A, B) forming a highly connected assembly, while the type B fibers (Fig. 4C, 4D) are arranged in smaller clusters in addition to being loosely packed. See Fig. 4 – Supplemental Figs. 1, 2 for video of Type A and B 3D segmentation respectively.

Fig. 4.

Morphological Classification. Each continuous network of connected mitochondria, as determined by ImageJ’s “MorphoLibJ” plugin, in the above images were labelled a single color. A) Typical “Type A” fiber segmentation volume. B) Transverse (X-Axis) “Type-A” image of a mitochondrial sub-volume. The majority of mitochondria in this volume are from a single network, indicated by a uniform label across the whole volume. C) Typical “Type B” fiber segmentation volume D) Transverse (X-Axis) “Type B” image of mitochondrial sub-volume. The majority of mitochondria in this volume are from multiple discontinuous networks indicated by the multi-colored labelling evident in the volume. Scale bar: 2 μm.

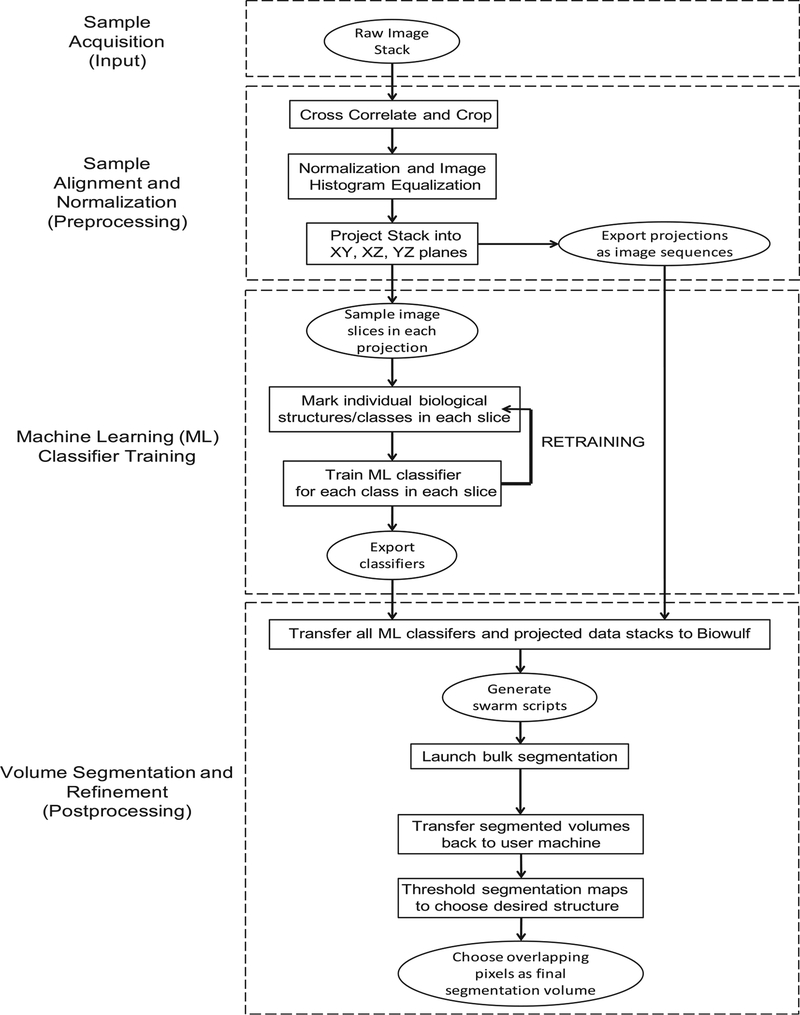

We combined volume acquisition, alignment and normalization, machine learning (ML) training, semi-automated segmentation and statistical analysis into a pipeline and used it to segment multiple tissue volumes (Fig. 6).

Fig. 6.

Segmentation Pipeline. Flowchart indicating the significant steps in the acquisition, segmentation and analysis of 3D volumes.

Below, we summarize the main steps of our approach:

Sample Acquisition (Input): Once a suitable area was found on a given tissue block, it was imaged with a 15 × 15 × 15 nm voxel size (xyz) resulting in final volume dimensions of 60 × 30 × 30 μm3 (54,000 μm3).

Sample Alignment and Normalization (Preprocessing): The individual images (in tiff format) were aligned to a complete 3D stack using a cross-correlation algorithm as described previously (Murphy et al., 2011). The resulting (.mrc) file was opened in ImageJ cropped, median filtered, binned to a voxel size of 30 nm3 and the stack histogram was normalized and equalized for reproducibility between volumes. 3 slices were selected from each of the principal axes, evenly spaced across the volume, resulting in 9 images for manual classification.

Machine Learning Training (Manual Classification): Each of the major biological structures in the 9 images were classified based on their standard histological features (z-disk, mitochondria, A-band, I-band, sarcoplasmic reticulum and lipids). After sufficient annotation, the Weka segmentation platform was used to train the machine learning software on the images. The output was inspected, and if the software failed to classify the image adequately, the above classification process was repeated iteratively until the software produced an accurate classification of the slice. A (.model) file was then exported to the biowulf computing resource at the NIH (details on how to port the Weka segmentation platform to a generic computing cluster are included in the Supplementary information).

Volume Segmentation and Refinement (Postprocessing): The volume prepared in step 2 was exported to biowulf as a series of individual image slices, and each of the 9 classifiers were applied to the image stack, producing 9 × 32-bit tiff format outputs of classified images, which were then imported from biowulf and processed on local computers using the ImageJ image processing package. Images classified as mitochondria were isolated as binary 8-bit tiff format files. The 3 volumes from each axis were first added together using ImageJ’s “Image calculator” function; densities that did not overlap with at least one of the other 2 volumes were removed through simple thresholding. Each axis volume was then added together using the previously mentioned function, and density which did not overlap with at least one of the other 2 axes was removed through simple thresholding. The resulting 3D volume, after low-pass filtering was used for statistical analysis of mitochondrial densities.

Statistical Analysis: Each volume was classified according to the mitochondrial density pattern into either a “type A” or “type B” fiber (as defined in Fig. 4). The resulting average densities were analyzed to quantitatively assess the reliability of the segmentation (Fig. 7). The segmented mitochondrial volume was measured and tabulated (Fig. 5).

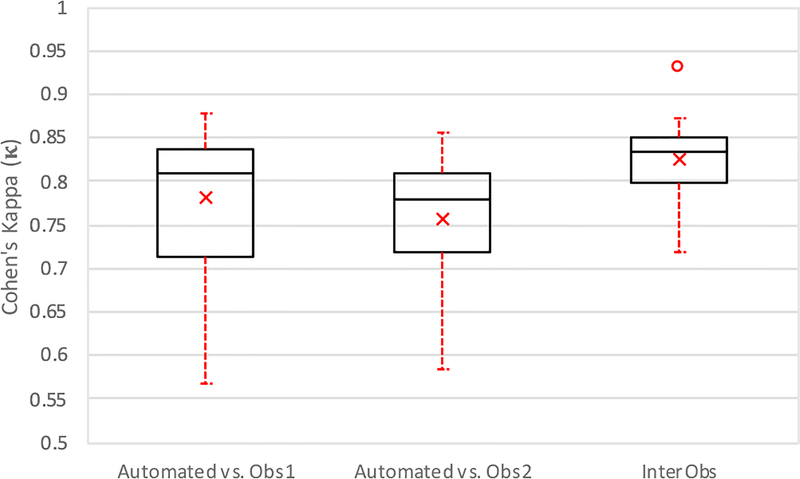

Fig. 7.

Boxplot of Quantitative Evaluation. Study of inter-observer variability and method versus each observer independently (n = 12), reported as a mean ± standard deviation. The center line indicates the median value; a × indicates the mean, the box edges depict the 25th and 75th percentiles. The error bars show the extremes at 1.5 inter-quartile range, calculated inclusive of the median, excluding outliers, indicated by °.

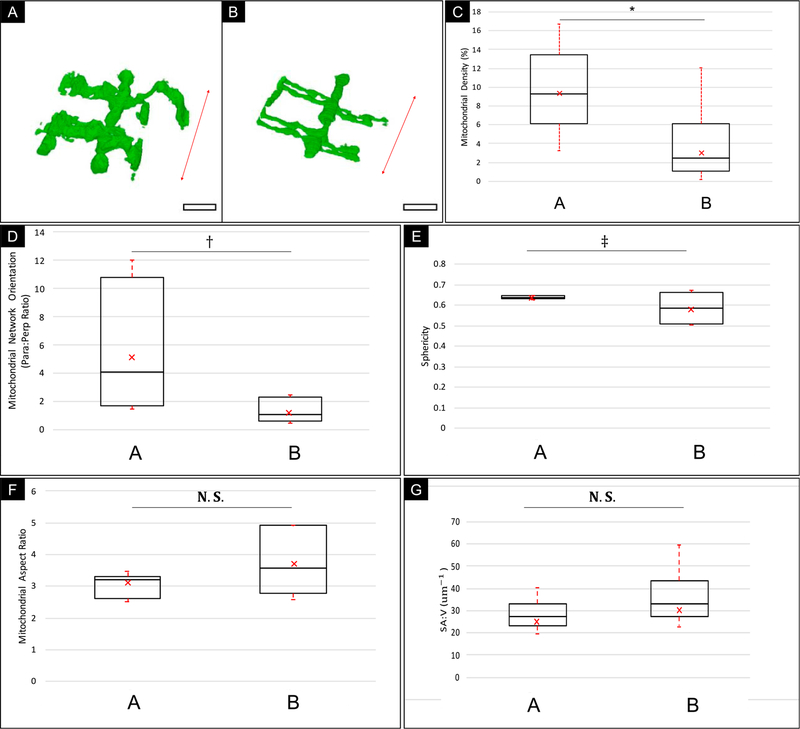

Fig. 5.

Boxplot graphical overview of Morphological Analysis of Human Skeletal Muscle Mitochondria Distribution from two sub-populations of data (Type A vs. Type B). A) Typical section of a Type A Mitochondrial Network. B) Typical Section of a Type B Mitochondrial Network; Red arrow = Orientation of fiber contraction; Scale bar: 1.5 μm. C) Mitochondrial Density Measurement. D) Mitochondrial Network Orientation. E) Mitochondrial Sphericity. F) Mitochondrial Aspect Ratio. G) Surface Area-to-Volume Ratio (SA:V). The center line indicates the median values; a × indicates the mean, the box edges depict the 5th and 95th percentiles. The error bars show the maxima and minima of each population. * indicates a statistically significant difference was found between the muscle types with high power (p-value < 0.01, α = 0.05; Power (1 – β) > 0.95). † Indicates that a statistically significant difference was found between the muscle types with moderate power (p-value < 0.01, α = 0.05; Power > 0.7). ‡ Indicates a marginal difference was found between the muscle types (p-value = 0.03, α = 0.05; Power < 0.7). N.S. indicates no statistically significant difference found between muscle types (p-value > 0.05, α = 0.05). Total Sampled volume = 343,600 μm3 across 4 healthy individuals.

3.2. Statistical analysis of mitochondrial distribution in human skeletal muscle

An essential step in the evaluation of this method was in determining its sensitivity to subtle differences in 3D volumes. Fig. 5 demonstrates this by differentiating between two muscle types across 4 healthy individuals. This type of analysis has the potential to generate statistically relevant data for the study of age and disease-related differences in sub-cellular architecture across a population of individuals, where detection of subtle differences between populations may provide a wealth of insight into the mechanism and progression of disease states.

In Fig. 5C, the mean density values measured for type A and B muscle are 8.99 ± 1.82% and 3.21 ± 1.52%, respectively. This mitochondrial density was found to be a defining characteristic of the skeletal muscle types, consistent with similar density values obtained in murine skeletal muscle measurements (Bleck et al., 2018). Analysis of mitochondrial network orientation in Fig. 5D, showed a higher degree of variation in the type A muscle relative to type B muscle indicating a higher heterogeneity in the structural composition of type A muscle with a trend towards high mitochondrial density parallel to the mitochondrial contraction axis, whereas type B muscle types had similar densities in both the parallel and perpendicular orientations. The mean orientation values were 5.23 ± 4.2 and 1.3 ± 0.63 for type A and type B muscle types respectively. Mitochondrial Sphericity (Fig. 5E) values for the two muscle types are similar but there is a higher variance among the type B muscle fibers. The mean sphericity values were 0.64 ± 0.01 and 0.58 ± 0.06 for type A and type B respectively. Both Mitochondrial Aspect Ratio (AR) and Surface area to Volume (SAV) ratio show no significant difference between muscle types in this study, with mean AR values of 3.7 ± 0.73 and 3.12 ± 0.37 and mean SAV values of 25.6 ± 3.3 μm−1 and 30.8 ± 5.0 μm−1 for type A and B muscle respectively.

Normalization of the average densities across different data sets minimized variability between data sets and allowed us to develop a generalized model of mitochondrial distribution across the muscle samples from different individuals. Combining multiple segmented volumes along each of the principal axes further increased the reproducibility of the results of semi-automated segmentation.

3.3. Quantitative evaluation of segmentation pipeline against manual standards

Table 1 shows the quantitative evaluation of the performance of the method vs two independently segmented versions of the same data set by two individuals along with inter-individual variability, calculated according to the equations above.

Table 1.

Quantitative evaluation. Study of inter-observer variability and method versus each observer independently (n = 12), reported as a mean ± standard deviation.

| Semi-Automated vs. Observer 1 | Semi-Automated vs. Observer 2 | Inter-Observer | |

|---|---|---|---|

| Sensitivity (SENS) | 0.9 ± 0.07 | 0.92 ± 0.06 | 0.83±0.01 |

| Specificity (SPEC) | 0.99±0.02 | 0.98±0.01 | 0.99±0.01 |

| Accuracy (ACC) | 0.98±0.02 | 0.98±0.02 | 0.99±0.01 |

| Dice Similarity Coefficient (DSC) | 0.79 ± 0.07 | 0.79 ± 0.08 | 0.83 ± 0.05 |

| Absolute Volume Difference (AVD) (%) | 26.7 ± 23.2 | 32.7 ± 27 | 21.1 ± 16 |

| Cohen’s Kappa (κ) | 0.78 ± 0.08 | 0.76 ± 0.1 | 0.83 ± 0.05 |

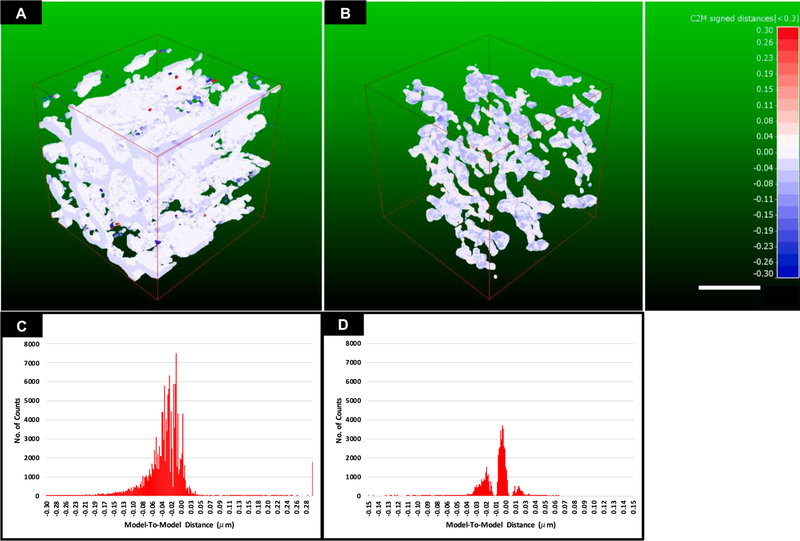

Sensitivity, specificity and accuracy are 0.83 ± 0.12, 0.99 ± 0.01 and 0.99 ± 0.01 respectively between the independent manually segmented data sets. A similar relative distribution of the mean sensitivity, specificity and accuracy were found between each manually segmented data set and the semi-automated segmentation with average values of 0.91 ± 0.07, 0.98 ± 0.01 and 0.98 ± ± 0.02 respectively. The relatively low sensitivity and large absolute volume difference (avg.26.8 ± 22.1%) in all comparisons is indicative of the difficulty in defining the mitochondrial boundary, even between manual observers, a 1-pixel difference in mitochondrial thickness across a volume can lead to dramatic decreases in sensitivity and an overall large difference between segmented volumes. To illustrate the effect of these deviations on the overall volume and measure the Mean Surface Difference (MSD) between segmented volumes, a 3D volume-to-volume comparison was performed on a typical “type A” and “type B” muscle volume in Fig. 8, which demonstrates that the majority of the difference is within 2–3 voxels across the whole volume.

Fig. 8.

Model-to-Model Distance measurement. A) Isometric projection of 100 μm3 3D model-to-model distance map for a typical “Type A” sub-volume. B) Isometric projection of 100 μm3 3D model-to-model distance map for a typical “Type B” sub-volume. C) A graphical representation of the distribution of the mean surface distances between manual (reference) and semi-automated (comparison) volumes across the 3D mesh map for a typical type A sub-volume. D) A graphical representation of the distribution of the mean surface distances between manual (reference) and automated (comparison) volumes across the 3D mesh map for a typical type B sub-volume. Red-White-Blue distance map represents distances in microns, Red: Manual model > Automated model; White: Manual ≈ Automated model (±15 nm); Blue: Manual < Automated model. Scale bar = 2 μm.

There was excellent agreement between the two manual datasets and between the manual and semi-automated datasets in the overall specificity and accuracy of identification of mitochondria. The high level of agreement between segmentations is illustrated using DSC and Cohen’s Kappa similarity coefficients, between the manually segmented data sets (0.83 ± 0.05 and 0.83 ± 0.05 respectively) and between each manual and automated segmented data set (with average values of 0.79 ± 0.08 and 0.77 ± 0.1 respectively). Analysis of the similarity coefficients across the sampled population indicated no significant difference in agreement between both the manual and automated segmentations, demonstrating the reliability of our methodology to reproduce structures which approach human level performance (p > 0.05, α= 0.05).

Fig. 7 provides a graphical representation of Cohen’s Kappa values showing how the majority of the manual segmentations (75%) are above the widely accepted threshold of 0.7 for semi-automated segmentations (McHugh, 2012). We anticipate that this could be further improved with refinement of the classifiers or increasing the number of classifiers per volume.

Fig. 8 provides a graphical representation of the model-to-model distance map between semi-automated and manual segmentations of two muscle types. The mean surface distance (MSD) was calculated by fitting the above distributions (Fig. 8C, D) to a Gaussian distribution, and the mean ± standard deviation was determined. The MSD showed the semi-automated segmentation was accurate to 0.03 ± 0.06 μm, indicating a segmentation accurate to 2 or 3 voxels, with a slight bias to overestimate the size of the mitochondria relative to the manual segmentation. Of note, differences of the same magnitude were detected between observers, as mentioned previously and is indicative of the difficulty in defining precisely mitochondrial boundaries.

4. Evaluation of semi-automated segmentation performance using CA1 hippocampal test dataset

Fig. 9 is a demonstration of the performance of the segmentation approach against a hippocampal dataset. The time for obtaining this segmentation of 400 μm3 volume took <24 h. We estimate that a 100-fold increase in the volume of the data to be segmented would not increase the segmentation time considerably, once the classes are produced they can be applied across an extremely large volume with little-added input.

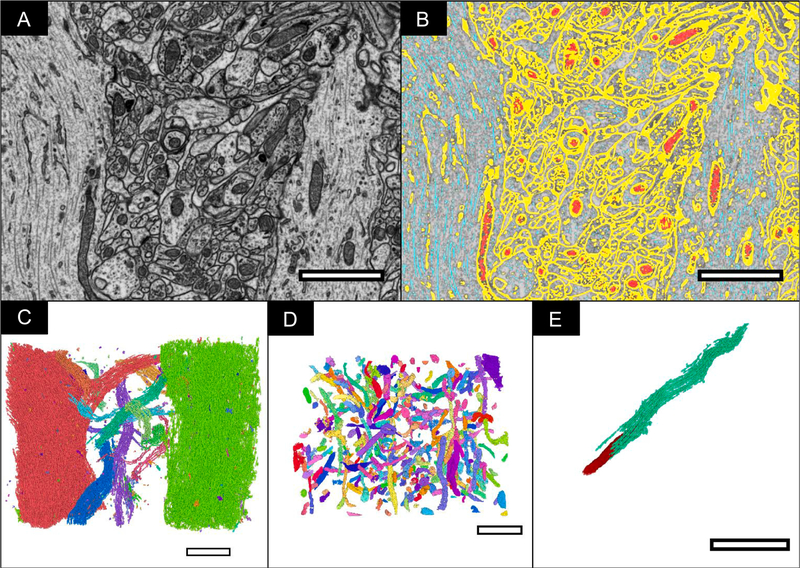

Fig. 9.

Other applications of this software. A) FIB-SEM slice from the CA1 hippocampal region of the brain with a voxel size of 5 × 5 × 5 nm3 B) FIB-SEM slice with automated segmentation overlaid (Yellow = Cell membrane; Red = Mitochondria; Cyan = Microtubules) C) 3D volume of segmented microtubules labelled separately, allowing for the straightforward isolation of individual cells for focused study. D) 3D volume of segmented mitochondria labelled separately. E) Individual microtubule (cyan) and mitochondria (red).

5. Conclusion

In this work, we have presented a method for 3D segmentation and statistical analysis of human skeletal muscle volumes using a semi-automated segmentation framework. The results demonstrate that rapid analysis of 3D muscular mitochondrial characteristics in relatively large volumes (>10,000 μm3) can be achieved consistently with high accuracy across multiple data sets.

Our data collection approach enables rapid acquisition of large volumes at a rate of >1500 μm3/h. The acquisition rate is dependent on, among other variables, the pixel size which in turn determines the scanning area and resolution of subsequent volumes. Therefore, there is an inherent trade-off between resolution and volume acquisition rate. In this study, we determined that a 15 nm2 pixel area returned sufficient resolution and volume acquisition rate for statistical analysis of the mitochondria in the 3D image data from muscle tissue.

The Weka machine learning (Arganda-Carreras et al., 2017) software was chosen specifically for its segmentation capabilities. The Weka software is a robust software that is professionally maintained by The University of Waikato in New Zealand. Weka’s software is powerful and versatile, allowing it to be ported to various operating systems and be used as a component of larger software. Our approach to full volume segmentation is to manually classify a small set of images and then export the manually trained classifier to use on the entire data set. These methods are generalizable to a variety of other data sets.

Large-scale, high-resolution volume segmentation and validation of multiple cellular components can be achieved by a single individual in an extremely brief timespan using our approach. We demonstrate this by generating multiple structural benchmark values for human skeletal muscle which will expand with time to cover all healthy age groups, opening the door for its use in the study of the structural implications of disease. We have also been able to delineate between two different morphological types found in muscular tissue demonstrating the sensitivity of this approach to detect and describe structural differences on an individual scale.

We illustrate the versatility of our methodology through a publicly available dataset of neural tissue, (Computer Vision Laboratory – Electron Microscopy Dataset, 2018) used as a standard to test automated segmentation approaches, to produce several 3D segmentations of major cellular organelles in less than 24 h. This dataset was acquired and segmented at a spatial resolution of 5 nm3. Currently, the majority of neuronal tissue segmentations (Zheng et al., 2018) are performed using manual tracing methods, however, due to its time-consuming nature, much of the intra-cellular detail is lost. Through the use of our approach, this information can be rescued and used in conjunction with manually traced data to build a complete picture of the sub-cellular environment in neuronal tissues. In conclusion, we note that our approach, which is available online to any interested user, can be readily applied to a wide variety of biological problems, with minimal human input, from tackling large-scale population-wide studies to the sensitive high-resolution analysis of cellular components.

Supplementary Material

Acknowledgments

We thank members of the Ferrucci and Subramaniam laboratories for helpful discussions. We thank Kunio Nagashima for resin infiltration and embedding of muscle samples. We thank Jessica De Andrade for help with validation of the semi-automated segmentation. This research was supported by the Intramural Research Programs of the National Institute on Aging, NIH, Baltimore, MD to LF, by funds from the Center for Cancer Research, National Cancer Institute, NIH, Bethesda, MD to SS. This work utilized the computational resources of the NIH HPC Biowulf cluster (http://hpc.nih.gov).

Abbreviations:

- FIB-SEM

Focused Ion Beam-Scanning Electron Microscopy

- ML

Machine Learning

- AVD

Absolute Volume Difference

- MSD

Mean Surface Distance

- DSC

Dice Similarity Coefficient

Footnotes

Appendix A. Supplementary data

Supplementary data to this article can be found online at https://doi.org/10.1016/j.jsb.2019.03.008.

References

- Arganda-Carreras I, Kaynig V, Rueden C, Eliceiri KW, Schindelin J, Cardona A, Sebastian Seung H, 2017. Trainable Weka Segmentation: a machine learning tool for microscopy pixel classification. Bioinformatics (Bioinformatics) 33 (15), 2424–2426. [DOI] [PubMed] [Google Scholar]

- Berning M, Boergens KM, Helmstaedter M, 2015. SegEM: efficient image analysis for high-resolution connectomics. Neuron 87 (6), 1193–1206. [DOI] [PubMed] [Google Scholar]

- Bleck CKE, Kim Y, Willingham TB, Glancy B, 2018. Subcellular connectomic analyses of energy networks in striated muscle. Nature Commun. 9, (5111). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Camacho DM, Collins KM, Powers RK, Costello JC, Collins JJ, 2018. Next-generation machine learning. Cell 173. [DOI] [PubMed] [Google Scholar]

- Chmid B, Schindelin J, Cardona A, Longair M, Heisenberg M, 2010. A high-level 3D visualization API for Java and ImageJ. BMC Bioinformatics 11, (274). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2018. Cloud Compare. Accessed 2018. www.cloudcompare.org.

- 2018. Computer Vision Laboratory – Electron Microscopy Dataset. http://cvlab.epfl.ch/data/em.

- Dice Lee R., 1945. Measures of the Amount of Ecologic Association between Species Vol. 26. [Google Scholar]

- Glancy B, Hartnell L, Combs CA, Femnou A, Sun J, Murphy E, Subramaniam S, Balaban RS, 2017. Power grid protection of the muscle mitochondrial reticulum. Cell Rep. 19 (3), 487–496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glancy B, Hartnell LM, Malide D, Yu ZX, Combs CA, Connelly PS,Subramaniam S, Balaban RS, 2015. Mitochondrial reticulum for cellular energy distribution in muscle. Nature 523 (7562), 617–620. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gonzalez-Freire M, Scalzo P, D’Agostino J, Moore ZA, Diaz-Ruiz A, Fabbri E, Zane A, et al. , 2018. Skeletal muscle ex vivo mitochondrial respiration parallels decline in vivo oxidative capacity, cardiorespiratory fitness, and muscle strength: The Baltimore Longitudinal Study of Aging. Aging Cell 17 (2). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Januszewski M, Kornfield J, Li PH, Pope A, Blakely T, Lindsey L, Maitin-Shepard J, Tyka M, Denk W, Jain V, 2018. High-precision automated reconstruction of neurons with flood-filling networks. Nature Methods 15 (8), 605–610. [DOI] [PubMed] [Google Scholar]

- Kasaragod D, Makita S, Hong YJ, Yasuno Y, 2018. Machine-learning based segmentation of the optic nerve head using multi-contrast Jones matrix optical coherence tomography with semi-automatic training dataset generation. Biomed. Opt. Express 9 (7), 3220–3243. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kobayashi T, Gunji M, Wakita S, 1980. Conductive staining in SEM with especial reference to tissue transparency. J. Scanning Microscopies 227–232. [Google Scholar]

- Lewinson D, 1989. Application of the ferrocyanide-reduced osmium method for mineralizing cartilage: further evidence for the enhancement of intracellular glycogen and visualization of matrix components. J. Histochem 259–270. [DOI] [PubMed] [Google Scholar]

- Lucchi A, Smith K, Achanta R, Knott G, Fua P, 2010. Supervoxel-based segmentation of EM image. Technical Report, Lausanne: Ecole Polytechnique Fédérale de Lausanne (EPFL). [Google Scholar]

- McHugh ML, 2012. Interrater reliability: the kappa statistic. Biochemia Medica 22 (3). [PMC free article] [PubMed] [Google Scholar]

- Meijs M, Patel A, van de Leemput SC, Prokop M, van Dijk EJ, de Leeuw F-E, Meijer FJA, van Ginneken B, Manniesing R, 2017. Robust segmentation of the full cerebral vasculature in 4D CT of suspected stroke patients. Scientific Reports (Scientific Reports) 7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murphy GE, Narayan K, Lowekamp BC, Hartnell LM, Heymann JA, Fu J,Subramaniam S, 2011. Correlative 3D imaging of whole mammalian cells with light and electron microscopy. J. Struct. Biol 176. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Narayan Kedar, Subramaniam Sriram, 2015. Focused ion beams in Biology. Nature Methods 1021–1031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shock NW, Greulich RC, Andres R, 1984. Normal human aging: The Baltimore longitudinal study of aging. US Government Printing Office, Washington DC. [Google Scholar]

- Sjöström M, Ängquist K-A, Bylund A-C, Fridén J, Gustavsson L, Scherstén T, 1982. Morphometric analyses of human muscle fiber types. Muscle Nerve 538–553. [DOI] [PubMed] [Google Scholar]

- Stone JL, Norris AH, 1966. Activities and attitudes of participants in the Baltimore longitudinal study. J. Gerontol 575–580. [DOI] [PubMed] [Google Scholar]

- Tanaka R, Takimoto H, Yamasaki T, Higashi A, 2018. Validity of time series kinematical data as measured by a markerless motion capture system on a flatland for gait assessment. J. Biomech 281–285. [DOI] [PubMed] [Google Scholar]

- Zheng Z, Lauritzen JS, Perlman E, Robinson CG, Nichols M, Milkie D, Torrens O, et al. , 2018. A complete electron microscopy volume of the brain of adult drosophila melanogaster. Cell 730–743. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.