Abstract

Background:

The ACGME case log is one of the primary metrics used to determine resident competency; it is unclear if this is an accurate reflection of the residents’ role and participation.

Methods:

Residents and faculty were independently administered 16-question surveys following each case over a three-week period. The main outcome was agreement between resident and faculty on resident role and percent of the case performed by the resident.

Results:

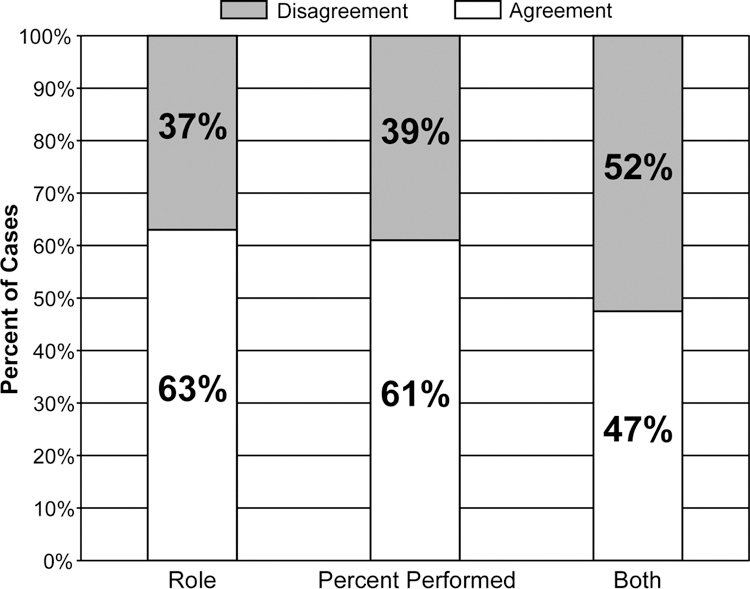

Matched responses were collected for 87 cases. Agreement on percent performed occurred in 61% of cases, on role in 63%, and on both in 47%. Disagreement was more often due to the resident's perception that they had performed more of the case than the faculty perceived. Faculty with <10 years of experience were more likely to disagree with residents than faculty with ≥10 years (p=0.009).

Conclusions:

There was a high degree of disagreement between faculty and residents regarding percent of the case performed and role. Accurate understanding of participation and competency is vital for accrediting institutions and for resident self-assessment, meriting further study of the causes of this disagreement to improve training and evaluation.

Keywords: surgical education, competency, operative skills, milestones, perceptions, residency training

Summary

The ACGME operative case log is one of the primary metrics used to determine resident competency; however, it is unclear if residents and faculty agree on the residents’ role and participation. In this study, residents and faculty participating in the same case had different perceptions of resident participation as defined by resident role and percent performed.

INTRODUCTION

Historically, operative volume has been a proxy for competence. However, factors such as resident work hour restrictions and increasing medico-legal and patient safety considerations have significantly changed the context of surgical training, including operative autonomy.1 These factors, along with a growing body of material to learn, contribute to the perception among graduating residents that they are inadequately prepared for practice.2 Despite this, organizations such as the American Board of Surgery (ABS) and the Accreditation Council for Graduate Medical Education (ACGME) still rely on case volumes as a metric for competence.

Currently, the ABS requires that residents perform 750 operations within discrete categories as “operating surgeon” in order to sit for the General Surgery Qualifying Examination, and the ACGME uses the surgical case log as one metric of a residency program’s operative training. Presently, the ACGME requires all residents to log operative cases on the ACGME case log website and to categorize their role as “first assistant”, “surgeon junior”, “teaching assistant”, or “surgeon chief”, without clear guidelines for each role. It is unclear, however, whether residents know when they act as “operating surgeon” in a given case.3 Tradition holds that if the residents perform >50% of the “most important part of the case”, then they have functioned as the “operating surgeon”. This is slightly different from the definition given by the ABS, which states that a resident must “have personally performed either the entire operative procedure or the critical parts thereof…”4 Based on these definitions, the accuracy of the resident case log relies not only on honest resident reporting, but also on agreement between the resident and faculty on what the “critical parts” are and whether the resident performed them.

Despite these limitations, there has been no evaluation of the degree to which faculty and residents agree on the resident’s role, which is critical to the current training paradigm and to future frameworks. The purpose of this study was to determine if a difference exists between faculty and resident perceptions of resident role within a given case and to test the hypothesis that there is poor agreement as to what percent of the operation the resident performed and what their role was.

METHODS

Following approval from the Institutional Review Board, faculty and resident participants were recruited from the Department of Surgery. Participants were asked to complete surveys following each operative case. Participation was voluntary and uncompensated. All faculty members within the Department of Surgery were eligible for participation in the project including subspecialties. All residents on the general surgery services were eligible.

Survey Design

The survey instrument was developed by a team of residents and faculty in conjunction with a PhD educator, and was based on literature reviews,2, 5–23 and informal focus groups. The survey was designed to evaluate resident and faculty perceptions of operative experience and involvement, as well as evaluate the potential relationship between resident participation and factors such as surgeon experience and resident skill level.17, 20, 22 An initial 18-question survey was piloted with a small group of residents and faculty. Subsequent revisions also underwent pilot testing. The final survey consisted of 15 questions for faculty and 16 questions for residents with a combination of “yes/no” questions, as well as rating scales and short answers (eFigure1 and eFigure2).

Covariates

Demographic data obtained from faculty and residents included years of experience or PGY level, the case performed, and the approximate number of times they had performed the case previously. A list of all cases was distributed to two senior faculty members in vascular and general surgery for categorization as basic versus complex, with 100% agreement between reviewers.

Outcomes

The main outcome studied was resident and faculty perception of resident involvement in the case, as evaluated by agreement on what percentage of the case was performed by the resident (<25%, 25–50%, 50–75%, and >75%) and the residents’ role in the case (first assistant, surgeon junior, surgeon chief, or teaching assistant).
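The outcome definition above can be made concrete with a minimal sketch of how paired responses might be scored. The `PairedResponse` class, the `agreement_rates` function, and the four example records are hypothetical names and illustrative data, not the study's actual data set or analysis code; only the answer categories come from the text.

```python
from dataclasses import dataclass

# Answer categories as listed in the Outcomes section.
PERCENT_BINS = ("<25%", "25-50%", "50-75%", ">75%")
ROLES = ("first assistant", "surgeon junior", "surgeon chief", "teaching assistant")


@dataclass(frozen=True)
class PairedResponse:
    """One operative case with the resident's and the faculty member's answers."""
    case_id: int
    resident_role: str
    faculty_role: str
    resident_percent: str
    faculty_percent: str

    def __post_init__(self):
        # Reject answers that are not one of the survey categories.
        if self.resident_role not in ROLES or self.faculty_role not in ROLES:
            raise ValueError("unknown role")
        if self.resident_percent not in PERCENT_BINS or self.faculty_percent not in PERCENT_BINS:
            raise ValueError("unknown percent category")


def agreement_rates(pairs):
    """Return the fraction of cases agreeing on role, on percent, and on both."""
    n = len(pairs)
    if n == 0:
        raise ValueError("no paired responses")
    role = sum(p.resident_role == p.faculty_role for p in pairs)
    percent = sum(p.resident_percent == p.faculty_percent for p in pairs)
    both = sum(
        p.resident_role == p.faculty_role and p.resident_percent == p.faculty_percent
        for p in pairs
    )
    return {"role": role / n, "percent": percent / n, "both": both / n}


# Hypothetical responses for illustration only; these are not study data.
example = [
    PairedResponse(1, "surgeon junior", "surgeon junior", ">75%", ">75%"),
    PairedResponse(2, "surgeon junior", "first assistant", "50-75%", "25-50%"),
    PairedResponse(3, "first assistant", "first assistant", "25-50%", "<25%"),
    PairedResponse(4, "surgeon chief", "surgeon chief", ">75%", ">75%"),
]
rates = agreement_rates(example)
print(rates)
```

Scoring agreement on both items separately from agreement on each item alone matters because a case can match on role while differing on percent performed, as in the third hypothetical record.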

Survey Administration

Study personnel administered parallel versions of the survey to residents and faculty following every eligible case during a three-week period from July to August 2015. Cases were considered eligible if the faculty performing the case had volunteered to participate in the study, and if there was at least one resident participating in the surgery. Surveys were de-identified, and coded with matching numeric identifiers to facilitate evaluation by operative case.

Analysis

Data were compiled and compared for agreement between residents and faculty within each case with regard to percent of the case performed by the resident and resident role. The agreement on both percent performed and resident role was determined using descriptive statistics. Cohen’s kappa was used to determine agreement between resident- and faculty-reported outcomes. Cohen’s kappa can be interpreted as follows: values less than 0.20 indicate poor agreement, 0.21–0.40 fair, 0.41–0.60 moderate, 0.61–0.80 substantial, and 0.81–1.00 almost perfect agreement. Bivariate analysis was used to evaluate agreement on percent of the case performed and resident role against the variables operative type, case complexity, PGY year, and faculty experience, as well as to determine the association of resident and faculty characteristics with agreement, faculty and resident perceptions of role, and faculty and resident perceptions of percent performed. Exact logistic regression analyses were used to analyze the variables associated with resident and faculty perceptions of role, as well as faculty and resident perceptions of percent performed. Significance was set at p ≤ 0.05. All statistical analysis was performed with SAS 9.4 (Cary, NC).
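As a rough illustration of the agreement statistic described above, the following sketch computes unweighted Cohen’s kappa from a square cross-tabulation and maps it to the descriptive bands quoted in this section. The function names and the 2×2 example table are hypothetical; the study itself used SAS 9.4, and this is not a reproduction of that analysis.

```python
def cohens_kappa(table):
    """Unweighted Cohen's kappa; rows = resident answer, columns = faculty answer."""
    k = len(table)
    if any(len(row) != k for row in table):
        raise ValueError("table must be square")
    n = sum(sum(row) for row in table)
    if n == 0:
        raise ValueError("table is empty")
    # Observed agreement: share of cases on the diagonal.
    observed = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: product of the marginal proportions, summed over categories.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def interpret_kappa(kappa):
    """Map kappa (rounded to two decimals) to the bands quoted in the text."""
    value = round(kappa, 2)
    if value <= 0.20:
        return "poor"
    if value <= 0.40:
        return "fair"
    if value <= 0.60:
        return "moderate"
    if value <= 0.80:
        return "substantial"
    return "almost perfect"


# Hypothetical 2x2 table for illustration only; these are not study data.
example_table = [[20, 5], [10, 15]]
kappa = cohens_kappa(example_table)
print(round(kappa, 2), interpret_kappa(kappa))
```

Because kappa subtracts the agreement expected by chance, two outcomes with similar raw percent agreement can yield different kappa values when their marginal distributions differ.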

RESULTS

One hundred thirteen of the 187 cases performed during the study period were eligible for inclusion. Of the 74 ineligible cases, 70 were excluded because faculty were not participating in the study and 4 did not involve resident participation. Among the eligible cases, 16 were not given surveys and 10 failed to have either faculty or resident surveys returned. Paired responses were collected for 87/113 cases (77% response rate). Thirteen of 22 faculty members participated in the study.
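The case counts in this paragraph can be checked with simple arithmetic. The short sketch below uses only the numbers reported above; the variable names are illustrative.

```python
# Counts as reported in the Results paragraph above.
performed = 187
faculty_not_participating = 70
no_resident_involved = 4
eligible = performed - faculty_not_participating - no_resident_involved

not_given_surveys = 16
surveys_not_returned = 10
paired = eligible - not_given_surveys - surveys_not_returned

response_rate = paired / eligible
print(eligible, paired, f"{response_rate:.0%}")
```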

Demographics

Residents in years 1–3 (PGY 1–3) composed 54% of responses, completing a total of 47 surveys, while senior-level residents (PGY 4–5) composed 46% of responses, completing 40/87 surveys (Table 1). Fifty-five faculty responses were from faculty members with less than 10 years post training (63%), and the remaining 32 responses were from faculty who had been in practice for 10 or more years (37%). Of the 87 cases, 65 cases were general surgery and 22 were endovascular cases (Table 2). The majority of cases were basic cases, such as inguinal hernia repairs. Of the 65 general surgery cases, 26% were laparoscopic (N=17; 8 basic and 9 complex) and 74% were open cases (N=48; 38 basic and 10 complex). Of the 22 endovascular cases, 9 were basic (e.g. angiograms and vein ablation) and 13 were complex (such as endovascular aneurysm repair).

Table 1:

Demographics of respondents.

| Characteristic | N (%) |
|---|---|
| PGY Year | |
| PGY 1–3 | 47 (54%) |
| PGY 4–5 | 40 (46%) |
| Faculty # Years Experience | |
| <10 Years | 55 (63%) |
| ≥ 10 Years | 32 (37%) |

Table 2:

Case characteristics.

| Type of Case | Total Cases N (%) | Basic Cases N (%) | Complex Cases N (%) |
|---|---|---|---|
| Open | 48 (55%) | 38 (44%) | 10 (11%) |
| Laparoscopic | 17 (20%) | 8 (9%) | 9 (10%) |
| Endovascular | 22 (25%) | 9 (10%) | 13 (15%) |

Percent of Case Performed by Resident

The results of bivariate analysis of both percent performed and role are shown in Table 3, while those of the multivariate analysis are shown in Table 4. Sixty-one percent of cases had agreement between residents and faculty on what percentage of the case the resident performed (Figure 1). Cohen’s kappa for agreement between faculty and residents on percent performed was 0.61, indicating substantial agreement. Agreement was highest for the resident performing >75% of the case. The number of years post-training for faculty was associated with disagreement on percent performed by the resident on bivariate analysis (p=0.0042) and on logistic regression (OR=6.001, CI=1.761–20.444).

Table 3:

Results of bivariate analysis.

| Characteristics | No. of cases, N (%) | Concordance on resident role, N (%) | p-value | Concordance on percentage performed, N (%) | p-value |
|---|---|---|---|---|---|
| Surgery type | | | 0.938 | | 0.923 |
| Lap | 18 (21.69) | 12 (66.67) | | 12 (66.67) | |
| Open | 44 (53.01) | 29 (65.91) | | 27 (61.36) | |
| Vascular | 21 (25.30) | 13 (61.90) | | 13 (61.90) | |
| Case type | | | 0.228 | | 0.707 |
| Basic | 53 (63.86) | 37 (69.81) | | 34 (64.15) | |
| Complex | 30 (36.14) | 17 (56.67) | | 18 (60.00) | |
| PGY Year | | | 0.844 | | 0.508 |
| PGY 1–3 | 47 (56.63) | 31 (65.96) | | 28 (59.57) | |
| PGY 4–5 | 36 (43.37) | 23 (63.89) | | 24 (66.67) | |
| Faculty Years Experience | | | 0.022 | | 0.009 |
| <10 years | 52 (62.65) | 29 (55.77) | | 27 (51.92) | |
| >=10 years | 31 (37.35) | 25 (80.65) | | 25 (80.65) | |
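To illustrate how a bivariate p-value of this kind can be obtained from the counts in Table 3, the sketch below applies a Pearson chi-square test without continuity correction to the faculty-experience rows. The choice of test is an assumption, since the Analysis section does not name the bivariate test; `chi_square_2x2` is a hypothetical helper, and the discordant counts are derived by subtracting concordant cases from the row totals. Under that assumption, the results come out close to the tabulated 0.009 and 0.022.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) and p-value for [[a, b], [c, d]]."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denom
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: faculty <10 years, faculty >=10 years; columns: concordant, discordant.
# Concordant counts are from Table 3; discordant = row total minus concordant.
chi2_percent, p_percent = chi_square_2x2(27, 52 - 27, 25, 31 - 25)
chi2_role, p_role = chi_square_2x2(29, 52 - 29, 25, 31 - 25)

print(f"percent performed: chi2={chi2_percent:.2f}, p={p_percent:.4f}")
print(f"resident role:     chi2={chi2_role:.2f}, p={p_role:.4f}")
```

These unadjusted figures are distinct from the adjusted odds ratios in Table 4, which come from the logistic regression models.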

Table 4:

Results of multivariate analysis.

| Characteristics | Concordance on role | Concordance on % performed | Resident perception role as a surgeon | Faculty perception role as a surgeon | Resident perception performed >=50% | Faculty perception performed >=50% |
|---|---|---|---|---|---|---|
| Surgery Type | | | | | | |
| Lap | Ref. | Ref. | Ref. | Ref. | Ref. | Ref. |
| Open | 0.55 (0.14, 2.16) | 0.58 (0.15, 2.31) | 1.64 (0.25, 10.63) | 4.25 (0.98, 18.32) | 1.49 (0.36, 6.12) | 0.68 (0.16, 2.84) |
| Vascular | 0.94 (0.16, 5.35) | 1.80 (0.32, 10.31) | 0.98 (0.11, 8.95) | 1.80 (0.28, 11.79) | 0.33 (0.05, 2.01) | 0.84 (0.14, 5.20) |
| Case Type | | | | | | |
| Basic | Ref. | Ref. | Ref. | Ref. | Ref. | Ref. |
| Complex | 0.69 (0.24, 7.99) | 1.01 (0.35, 2.98) | 0.25 (0.07, 0.98) | 0.44 (0.15, 1.32) | 0.30 (0.10, 0.93) | 0.21 (0.06, 0.65) |
| PGY Year | | | | | | |
| < 4 years | Ref. | Ref. | Ref. | Ref. | Ref. | Ref. |
| >=4 years | 0.75 (0.22, 2.59) | 1.48 (0.43, 5.13) | 5.99 (1.01, 35.91) | 1.66 (0.37, 7.49) | 1.64 (0.45, 6.01) | 2.65 (0.70, 9.69) |
| Faculty Years Experience | | | | | | |
| <10 years | Ref. | Ref. | Ref. | Ref. | Ref. | Ref. |
| >=10 years | 3.97 (1.20, 13.18) | 6.00 (1.76, 20.44) | 0.57 (0.12, 2.68) | 2.39 (0.60, 9.58) | 0.48 (0.14, 1.67) | 2.83 (0.84, 9.58) |

Figure 1.

Agreement between residents and faculty on resident role, percent of the case performed by the resident, and both role and percent performed.

Both the type and complexity of the case were significant factors for residents’ perception that they performed >50% of the case. Residents were more likely to rate themselves as having performed >50% of the case in general versus endovascular cases (p=0.008) and in basic versus complex cases (p=0.008). PGY level and faculty experience were not significant (p=0.097 and p=0.38, respectively). On logistic regression, both case type (endovascular versus general) and complexity of the case were significant (OR=4.57, CI=1.01–20.71 and OR=3.35, CI=1.07–10.44, respectively). In addition, faculty tended to perceive more often that the resident performed >50% of the case in general surgery versus endovascular cases, although this did not reach the prespecified significance threshold (p=0.0559). Similar to the residents, faculty were more likely to associate the residents with performing >50% of the case in basic cases (p=0.0010) and when faculty had more than 10 years of experience post training (p=0.0034). On logistic regression analysis, only case type (basic vs. complex) was significant for an association with performing >50% of the case (OR=4.87, CI=1.55–14.37).

In 65% of cases with disagreement on the percent of the case performed by the resident, the residents believed they performed more of the case than the faculty perceived. Most cases of disagreement (79%) involved faculty with less than 10 years of experience.

Resident Role

Residents and faculty agreed on the resident role in 55/87 (63%) of cases (Figure 1), with similar representation of lower-level and upper-level residents (PGY 1–3=31/55 and PGY 4–5=24/55). Cohen’s kappa for agreement between faculty and residents on resident role was 0.23, indicating fair agreement. Faculty with 10 or more years of experience accounted for 45% of the cases of agreement. However, in cases where there was no agreement on resident role, faculty with 10 or more years of experience represented only 22% (7/32) of respondents. In 63% of cases with disagreement on resident role, residents perceived a greater role than faculty did.

On bivariate analysis, agreement on resident role was associated only with faculty experience (p=0.0215). There was no significant association between agreement and the type of surgery, case complexity, or PGY level. On logistic regression analysis, faculty experience remained statistically significant (OR=3.97, CI=1.19–13.18). In evaluating resident role of surgeon as assessed by the resident, residents were more likely to consider themselves as “surgeon” in general surgery cases compared to endovascular cases (p=0.0058) and in basic cases compared to complex cases (p=0.0291). PGY 4–5 residents were more likely to consider themselves the “surgeon” on a case compared to PGY 1–3 residents (p=0.0164). On logistic regression analysis, both PGY level and case type remained significant for resident determination of role.

Faculty were more likely to identify the resident as operative surgeon on open cases compared to laparoscopic or endovascular cases (p=0.0048), as well as on basic cases compared to complex cases (p=0.0073). Resident year did not appear to affect faculty determination of resident role, but faculty experience was significantly associated with their determination of the resident as “surgeon” (p=0.0130). However, on logistic regression analysis, no variables were significantly associated with faculty perspectives of resident role.

Cases were evaluated for agreement on both percent performed and role and only 47% of cases had agreement between faculty and residents on both (Figure 1). Cases with agreement on resident role were evaluated for additional agreement on what percentage of the case was performed. In cases where residents and faculty agreed that the resident functioned as first assistant, 88% also agreed on what percentage of the case was performed by the resident (<25%). In cases where the resident functioned as surgeon, 65% also agreed on the percentage of the case performed by the resident, with 88% agreeing the resident performed >75% of the case.

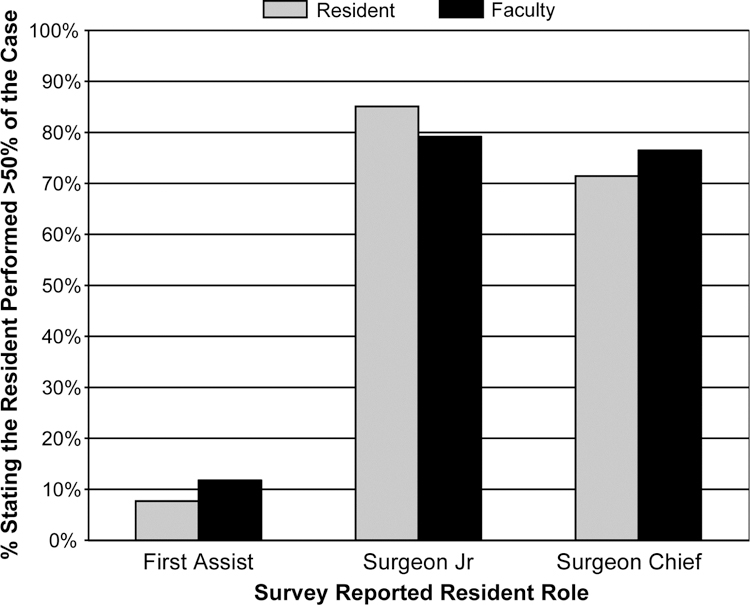

Aggregate Analysis

The data were evaluated irrespective of agreement between faculty physicians and residents on a given case. In the 26 cases where residents identified themselves as first assistant, 92% also appropriately identified themselves as having performed <50% of the case. In those cases where residents identified themselves as acting as surgeon junior, 85% also identified themselves as having performed >50% of the case; of those who identified themselves as surgeon chief, 71% identified themselves as having performed >50% of the case. A similar trend was found with faculty surveys. Across all cases, when the resident claimed the role of first assistant, 88.2% of residents and 92.3% of faculty indicated that the resident performed <50% of the procedure. In cases where the resident acted as surgeon junior, 79.2% of residents and 85.1% of faculty identified the resident as having performed >50% of the procedure. Similarly, in those cases where the resident was surgeon chief, 76.4% of residents and 71.4% of faculty indicated the resident performed >50% of the procedure (Figure 2).

Figure 2.

Correlation between performing >50% of the case and resident role.

DISCUSSION

The landscape of surgical education has changed significantly over the past 20 years, but the ultimate goal of surgical residency remains to produce competent surgeons. The previous metrics by which competency was judged (volume and time of exposure) are inadequate in modern surgical education. Our study contributes to the growing recognition that we lack both a shared understanding of what constitutes “competency” and an accurate way of measuring it. Although the ABS is moving to more concrete competency assessments, using tools like the Operative Performance Rating System (OPRS),24, 25 the two principal methods of assessing competency (resident case log and the milestones curriculum) still rely on the resident’s perception of participation in the operation. The flaw in relying on the resident’s perception of participation is highlighted by the high degree of disagreement on the resident’s role in the case and the percentage performed by the resident. This suggests that residents and faculty do not share a clear understanding of the residents’ role and participation.

One potential method for improving this discrepancy is to improve the communication between residents and faculty utilizing a model like the “Briefing, Intraoperative, Debriefing” (BID) model proposed by Roberts et al.19 In this model, faculty ask residents before cases what portions of the case they want to perform. This promotes discussion about the case. Intraoperatively, the faculty’s instruction focuses on areas identified by the resident. Postoperative discussion includes resident perspective on the case and feedback from faculty. This allows the faculty to understand the resident’s perspective and address any issues with performance. One advantage of this model is the resident’s ability to share their understanding of the case and their capabilities. Moreover, the delivery of formative feedback has been shown to lead to quicker development of competence.20

Formative feedback and guided intraoperative instruction may be reasons that the most consistent factor associated with agreement on resident role and percent performed was increased faculty experience. This is likely because these surgeons are more comfortable with leading residents through cases and allowing them to operate. In addition, residents and faculty alike were less likely to rate the resident as having a primary role in endovascular and complex cases. We believe the root cause of this is a lack of case exposure and familiarity. Due to the uniqueness of the skill set required for endovascular surgery, it is not unexpected that residents would not be prepared or permitted to take an active role. Similarly, residents typically have less exposure to and familiarity with more complex procedures, and therefore may be less likely to take an active role. However, if the ultimate goal is competency, we must work towards helping senior residents function not only safely but also autonomously in complex procedures. Moreover, if residents are not allowed to operate as the “surgeon” on a complex case, the question of true competency remains. For example, if residents perform 90% of a colon resection but do not perform the anastomosis, they may log the case as “surgeon”, but may not be able to perform the case independently in the future.

One manner to concretely assess operative competence is with the ABS Operative Performance Rating System (OPRS). Under this new initiative, all residents graduating in or after 2016 will be required to have at least six operative and six clinical performance assessments by a faculty member. Residents will be evaluated on instrument handling, case difficulty, and degree of prompting or direction.24, 25 The grading sheets are procedure-specific and provide explicit criteria for evaluating crucial components of the case (such as the anastomosis in a colon resection). However, the OPRS criteria exist for only certain procedures.

Operative surgical training requires multiple facets of education. We propose a model that integrates simulation with components of the OPRS tool and the BID model, utilizing a pre-operative faculty-resident discussion. We believe this model will provide an efficient and useful supplement to the current metrics. In this model, residents prepare for cases by developing a list of the key steps to discuss with the faculty. They also explain which components they feel capable of performing. This provides the faculty with an opportunity to evaluate the resident’s understanding of the case and insight into their capabilities. Ideally, at each PGY level there will be an understanding of what is appropriate for their training, and an opportunity to come to agreement on which portions of the procedure the resident is capable of (and should be capable of) performing. Faculty can then recommend specific steps for preparation, from use of an atlas to practice in the simulation lab. The use of simulation provides an opportunity for residents to develop familiarity with equipment as well as procedures, which should increase resident participation in technically complex procedures. As residents progress in training, the components of the case they perform will change. Systems such as the OPRS provide a framework for understanding the procedural steps and complexity for each level. Ideally, the OPRS provides the benchmarks for each level of training while the BID-like model provides integration between residents and faculty for the implementation. This integrates the milestones framework of competency-based assessment and allows for concrete assessment.

Our primary limitation in this study is the potential for variability within our survey. Although we piloted several versions, there was still the potential for misinterpretation, which could result in bias. Another limitation of this study was the sample size. These surveys were administered to only thirteen faculty members and a limited number of residents at a single institution. A small sample of participating residents may introduce sampling bias. Therefore, these responses may not be representative of surgery residents in general. In addition, because the study was voluntary, there is potential for selection bias, as previous research has shown that faculty members who volunteer for research evaluating teaching skills tend to be better educators.11 Finally, the possible variation in resident experience is a source of potential confounding. Although we used PGY level as a surrogate for experience, it does not account for residents who had previous training, and more importantly, does not account for varying skill levels and exposure. Notwithstanding these limitations, our study does suggest a need for more directed competency evaluations with less subjective evaluation of resident participation.

In summary, the results of our study suggest a significant gap between resident and faculty understanding of resident intraoperative participation and its corresponding competency. Further evaluation of this discrepancy is necessary to identify potential interventions to improve training and assessment tools. The use of a structured faculty-resident pre-case discussion could provide a format to integrate a more concrete metric of competency-based assessment.

Supplementary Material

ACKNOWLEDGEMENTS

Drs. Perone and Brown had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Footnotes

Authors have no conflicts of interest to disclose.

Portions of this study have been presented in abstract form at the Academic Surgical Congress, Jacksonville, FL on February 2, 2016.

REFERENCES

- 1. Ustinova EE, Shurin GV, Gutkin DW, Shurin MR. The role of TLR4 in the paclitaxel effects on neuronal growth in vitro. PLoS One 2013;8:e56886.

- 2. Yeo H, Viola K, Berg D, et al. Attitudes, training experiences, and professional expectations of US general surgery residents. JAMA 2009;301:8.

- 3. Cadish LA, Fung V, Lane FL, Campbell EG. Surgical case logging habits and attitudes: A multispecialty survey of residents. J Surg Educ 2016;73:474–81.

- 4. The American Board of Surgery. Philadelphia, PA: The American Board of Surgery. Available at: www.absurgery.org Accessed 2015.

- 5. Irby DM, Gillmore GM, Ramsey PG. Factors Affecting Ratings of Clinical Teachers By Medical Students and Residents. Journal of Medical Education 1987;62:1–7.

- 6. Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med 2004;79:870–81.

- 7. Fisher AT, Alder JG, Avasalu MW. Lecturing performance appraisal criteria: Staff and student differences. Australian Journal of Education 1998;42:153–68.

- 8. Reznick RK, MacRae H. Teaching surgical skills--changes in the wind. N Engl J Med 2006;355:2664–69.

- 9. Kraiger K. Perspectives on training and development. In: Borman WC, Ilgen DR, Klimoski RJ, eds. Handbook of Psychology: Volume 12, Industrial and Organizational Psychology. Hoboken, NJ: John Wiley & Sons, Inc.; 2003. p. 171–92.

- 10. Boor K, Teunissen PW, Scherpbier AJ, et al. Residents’ perceptions of the ideal clinical teacher--a qualitative study. Eur J Obstet Gynecol Reprod Biol 2008;140:152–7.

- 11. Claridge JA, Calland JF, Chandrasekhara V, et al. Comparing resident measurements to attending surgeon self-perceptions of surgical educators. Am J Surg 2003;185:323–7.

- 12. Dysvik A, Kuvaas B. The relationship between perceived training opportunities, work motivation and employee outcomes. International Journal of Training and Development 2008;12:138–57.

- 13. Huff NG, Roy B, Estrada CA, et al. Teaching behaviors that define highest rated attending physicians: a study of the resident perspective. Med Teach 2014;36:991–6.

- 14. Keskitalo T, Ruokamo H, Gaba D. Towards meaningful simulation-based learning with medical students and junior physicians. Med Teach 2014;36:230–9.

- 15. Ko CY, Escarce JJ, Baker L, et al. Predictors of surgery resident satisfaction with teaching by attendings. Ann Surg 2005;241:373–80.

- 16. Lim DH, Morris ML. Influence of trainee characteristics, instructional satisfaction, and organizational climate on perceived learning and training transfer. Human Resource Development Quarterly 2006;17:85–115.

- 17. Pugh CM, DaRosa DA, Glenn D, Bell RH Jr. A comparison of faculty and resident perception of resident learning needs in the operating room. J Surg Educ 2007;64:250–5.

- 18. Reznick RK. Teaching and testing technical skills. Am J Surg 1993;165:358–61.

- 19. Roberts NK, Williams RG, Kim MJ, Dunnington GL. The briefing, intraoperative teaching, debriefing model for teaching in the operating room. J Am Coll Surg 2009;208:299–303.

- 20. Rose JS, Waibel BH, Schenarts PJ. Disparity between resident and faculty surgeons’ perceptions of preoperative preparation, intraoperative teaching, and postoperative feedback. J Surg Educ 2011;68:459–64.

- 21. Schrag D, Panageas KS, Riedel E, et al. Hospital and surgeon procedure volume as predictors of outcome following rectal cancer resection. Ann Surg 2002;236:583–92.

- 22. Vollmer CM Jr., Newman LR, Huang G, et al. Perspectives on intraoperative teaching: divergence and convergence between learner and teacher. J Surg Educ 2011;68:485–94.

- 23. Larson JL, Williams RG, Ketchum J, et al. Feasibility, reliability and validity of an operative performance rating system for evaluating surgery residents. Surgery 2005;138:640–7; discussion 647–9.

- 24. A User’s Manual for the Operative Performance Rating System (OPRS) 2012; Available at: http://www.absurgery.org/xfer/assessment/oprs_user_manual.pdf Accessed 2016.

- 25. Forkink M, Willems PH, Koopman WJ, Grefte S. Live-cell assessment of mitochondrial reactive oxygen species using dihydroethidine. Methods Mol Biol 2015;1264:161–9.
