Abstract

Burns are a serious public health problem. Burn diagnosis usually relies on medical and clinical expertise, so an expert must examine the patient in restorative clinics or in hospital emergency rooms. However, a patient may suffer a burn where no specialized facility is available, and in such cases a computerized automatic burn assessment tool may aid diagnosis. Burn area, depth, and location are the critical factors in determining the severity of burns. In this paper, a classification model to diagnose burns is presented using automated machine learning. The objective of the research is to develop a feature extraction model to classify burns. The proposed method, based on a support vector machine (SVM), is evaluated on a standard burn data set, the BIP_US database. Training is performed by classifying images into two classes: those that need grafts and those that do not. The 74 images of the test data set were classified with the proposed SVM-based method and, according to the ground truth, an accuracy of 82.43% was achieved, higher than the 79.73% achieved in past work using the multidimensional scaling analysis (MDS) approach.

Keywords: Image preprocessing, burn, classification, graft, SVM

Feature Extraction for Human Burn Diagnosis from Burn Images using Machine Learning.

I. Introduction

The human body is covered and protected by skin, which accounts for about 15% of total body weight. The skin provides sensation, temperature regulation, and synthesis of vitamin D. Skin is damaged in burn accidents. Burns are a serious public health problem [1] and a severe injury for the individual. Burns are the fourth leading cause of death from unintentional injury [2].

In a burn injury, the skin and adjacent tissues of the burned part of the body are destroyed. First treatment is required as soon as possible after the injury and is chosen based on the intensity and severity of the burn. Burn area, depth, and location are the critical factors in determining the severity of burns, so it is essential to know the layers of the skin and their position in order to judge the severity of the injury. The epidermis and dermis are the essential parts of the skin. The thin outer layer of the skin is the epidermis, whereas the dermis is the thick inner layer, composed of elastic fibers of connective tissue.

Burns are generally divided into three types: superficial dermal, deep dermal, and full-thickness burns, and it is vital to distinguish between them [3], [4]. Superficial dermal burns affect the outer part of the skin, the epidermis. The affected part appears red, painful, and dry, and may also have blisters. Deep dermal burns affect the epidermis and part of the dermis. The burned part appears red, blistered, and painful. Full-thickness burns destroy both the epidermis and the dermis and may also extend into the subcutaneous tissue. The affected part appears white or charred.

The crucial part of this work is to label burn images so that the proper treatment can be given. Just as a specialist diagnoses a burn to select the proper treatment, the skin burn images are enhanced and then labeled [2]. The primary objective of this work is to develop a classification system for burn injury images using color characteristics. A skin burn can be classified into three categories (superficial dermal, deep dermal, and full-thickness) based on its color, hue, and intensity of shade, from the center to the periphery.

With proper treatment, superficial burns heal after 14–21 days and may leave scars, whereas deep dermal and full-thickness burns require early surgical excision and skin grafting; otherwise healing takes longer.

It is important to determine as early as possible whether a burn needs surgery. A delayed or inaccurate diagnosis increases the danger to the affected person [3], [4]. Moreover, multi-region burns are common and typically combine several burn depths [5]. Excision and grafting of complex burns require expert judgment. Careful diagnosis under different conditions ensures a good choice of therapy for the whole burn area.

The burn wounds described above need to be classified by an automated system. The grading can be done on the basis of grafting. This paper describes work on burn depth estimation, specifically the classification of burns into those that need a graft and those that do not. Usually, burn surgeons differentiate types of burn based on their clinical experience. Accuracy therefore varies from 64–76% for experienced burn surgeons to 50% for inexperienced ones [2].

Recent approaches use digital image processing and machine learning to classify different types of burn, so that the surgeon can perform grafting when it is needed.

II. Related Work

Badea et al. [2] proposed a method for burn image detection. They applied a pixel-wise method based on the properties of the entire patch surrounding each pixel. This method is computationally expensive and sensitive to noise, so their classifier does not provide optimized results. It also requires supplemental medical information to support classification.

For automatic burn classification, King et al. [5] incorporated the most useful wavelengths and tuned their device accordingly. They also anticipate further porcine burn experiments. Because the porcine burn knowledge base is strong, they plan eventually to test the first working prototype in a clinical setting and to perform Receiver Operating Characteristic (ROC) analyses to measure how much the device helps clinicians in medical decision making and diagnosis.

Multispectral imaging (MSI) was used by Li et al. [6] to diagnose burn injury. It assists burn surgeons in classifying burn tissue for burn debridement surgery. Their algorithm significantly increased burn model classification accuracy.

Rangaraju et al. [7] described a clinical method to determine the type of burn, the degree of burn, and the grafting technique. Heat changes the original structure of the skin, and a densely packed structure appears. Clotting (coagulation) is used to measure the degree of burn. A collagen ratio below 0.35 is considered a superficial burn, a ratio between 0.35 and 0.65 a deep dermal burn, and a ratio greater than 0.65 a full-thickness burn.
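The thresholds quoted from [7] can be written as a small lookup. The following is only an illustrative sketch: the function name is hypothetical, and treating the boundary values 0.35 and 0.65 as deep dermal is an assumption, since the text does not say which side the boundaries fall on.

```python
def burn_type_from_collagen_ratio(ratio: float) -> str:
    """Map a collagen ratio to a burn type using the thresholds quoted from [7].

    Assumption: the boundary values 0.35 and 0.65 are counted as deep dermal.
    """
    if ratio < 0.35:
        return "superficial"
    if ratio <= 0.65:
        return "deep dermal"
    return "full thickness"


if __name__ == "__main__":
    for value in (0.20, 0.50, 0.80):
        print(value, burn_type_from_collagen_ratio(value))
```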

Sabeena and Kumar proposed a careful SVM-based approach for burn identification and segmentation [8]. The main idea behind their work is to recover the burned (affected) region. Their system uses a range of methods for image acquisition and pre-processing. It clearly outlines and extracts the burn from the images, but the accuracy is not up to the mark.

Suvarna and Niranjan [9] carried out a comparative study of three classifiers: Template Matching (TM), k-Nearest Neighbours (k-NN), and SVM. They found that SVM provides better accuracy than TM and k-NN. However, this research did not take into account the real characteristics of burn images and their features, so further improvements are needed.

Acha et al. addressed the hard problem of estimating burn depth [10]. They labeled the burn image and measured the depth of the burn by selecting features from it. They used a Fuzzy-ARTMAP neural network classifier. ARTMAP refers to a family of neural network architectures based on Adaptive Resonance Theory (ART) that is capable of fast, stable, online, unsupervised or supervised, incremental learning, classification, and prediction. Their approach combines an ART unsupervised neural network with a map field and non-linear support vector machines to find the depth of the burn.

Tran et al. [11] extended a Convolutional Neural Network (CNN) model and used it to recognize burn images and identify the degree of burn. Their burn analysis helps to classify the degree of burn and to reach a suitable medical decision.

Li [12] quantified the degree of skin burns using the mean of the burn image histogram, the standard deviation of the color maps, and the percentage of burned area as indicators, and assigned weights to these indicators to construct an evaluation model of skin burns. The author also defined a threshold that can separate the different burn types.

Kuan et al. [13] proposed a Convolutional Neural Network (CNN) model for recognizing the degree of burn in skin burn images. Their model uses the probability distribution of the degree of skin burn.

Identifying burns involves two tasks. The first is to select features for deciding between healthy skin and burned skin. The second is to assess the quality of those features in order to build an accurate system. Color plays a major role in the physician's assessment of burn depth; consequently, an image classification system with as little error as possible must be developed.

In the related literature, several methods have been suggested to support the burn surgeon in making the best decision through an automated system. Color imaging helps to identify where the burn wound begins, which suggests that the burn must first be automatically separated from the healthy skin. To design an efficient classification system, the following assumptions are considered:

- (i) Usually, a physician categorizes the burn based on a pink-whitish tone, a beige-yellow color, or a dark brown color. Therefore, the proposed method considers color and texture to categorize the burn.

- (ii) It is assumed that color and texture uniquely identify the type of burn. Thus, the proposed method uses the $L^*a^*b^*$ color space, which accurately identifies uniqueness of color according to human perception [14].

Many classifiers are available, such as Bayesian classifiers, artificial neural networks, support vector machines, and distance-based classifiers. Given this range of classifiers, it seems reasonable to attempt to develop a more accurate automated system to help the physician diagnose burn depth.

The primary objective of the proposed method is to develop an automated classification system for burn identification as graft and non-graft.

III. Methods

The burn wound images in this work were obtained in accordance with all regulations of the university's local institutional review board ethics committee, with its approval, and the research was carried out according to the Declaration of Helsinki.

In the literature, methods have been proposed to classify burned skin as superficial dermal, deep dermal, and full-thickness wounds [3], [15]. However, these methods lack accuracy and are unable to classify burned skin as grafting or non-grafting. Therefore, a method is required to improve the accuracy of burn classification and to separate burned skin into grafting and non-grafting.

The classification of graft burns is much more complex and requires immediate attention because of their severity, whereas a non-graft burn is like a normal burn that can be cured easily. To achieve this objective, the proposed method has three parts:

- (i) Identification of physical characteristics to assess the burn.

- (ii) Mathematical modeling of physical characteristics.

- (iii) Classification of burn using mathematical model.

The proposed method is based on the CIELAB color space because it is perceptually uniform and its gamut exceeds that of RGB.

First, the image is resized and then converted to an $L^*a^*b^*$ color image, as this space is more perceptually uniform with respect to human color vision.

The human color spectrum is identified using $L^*a^*b^*$. To get the complete color information, channel extraction from the $L^*a^*b^*$ color space is required. $L^*$ indicates lightness, whereas $a^*$ indicates the red/green and $b^*$ the yellow/blue color.

After that, the channels are separated from the $L^*a^*b^*$ color image. From the separated channels, features are selected so that the method can differentiate the types of burn. The selected features are fed to the SVM for training, and after training, burns are classified using the SVM.

The proposed method extracts the $a^*$ and $b^*$ color channels as feature channels. Positive values of $a^*$ and $b^*$ represent the red region and the yellow region, respectively. The mean of the red and green channel is calculated to obtain a more accurate color in the image.

Further, the region of interest (ROI) is identified using the variance of channel $a^*$. The ROIs are classified using the intensity of the color, which is provided by the kurtosis and skewness of channel $a^*$. This intensity helps to identify the distribution of color over the burned part.
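The channel meanings described above (positive $a^*$ toward red, positive $b^*$ toward yellow) can be checked with a per-pixel conversion. The sketch below is not the authors' implementation; it assumes 8-bit sRGB input and the D65 reference white, and the function name `srgb_to_lab` is hypothetical.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIELAB (assumes the D65 white point)."""

    def to_linear(c):
        # Undo the sRGB gamma curve; input is 0..255.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # Piecewise cube-root function from the CIELAB definition.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)

    lightness = 116 * fy - 16
    a_star = 500 * (fx - fy)   # green (-) to red (+)
    b_star = 200 * (fy - fz)   # blue (-) to yellow (+)
    return lightness, a_star, b_star


if __name__ == "__main__":
    for name, rgb in [("red", (255, 0, 0)), ("yellow", (255, 255, 0))]:
        print(name, srgb_to_lab(*rgb))
```

A pure red pixel yields a positive $a^*$ and a pure yellow pixel a positive $b^*$, which matches the sign convention used for the feature channels.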

The proposed method is able to classify burn wounds as superficial dermal, deep dermal, and full-thickness wounds. It finely differentiates the real burn type by judging the colorfulness of the ROI using chroma. Color perception is calculated using hue to separate the different tones. Kurtosis is used as a measure of burn depth and hence of severity: high kurtosis tends to indicate greater depth, whereas low kurtosis tends to indicate lower depth.

Superficial dermal burns do not require grafting, whereas deep dermal and full-thickness burns do. Skin grafting transplants skin from one area of the body to another to treat burned skin.

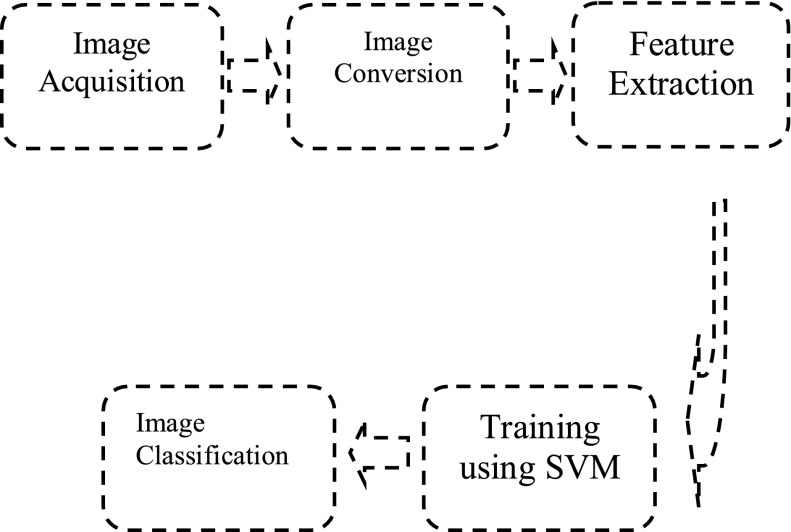

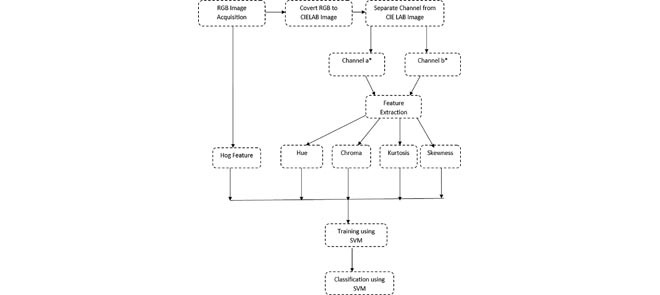

The flow chart of the proposed method is shown in figure 1. First, the input image is converted to an $L^*a^*b^*$ color image, and the channels are separated from it. Features are calculated from the $a^*$ and $b^*$ channels and are used to train the SVM and classify burns. The data for training is a set of points (vectors) $x_i$, along with their categories $y_i$. For some dimension $d$, $x_i \in \mathbb{R}^d$ and $y_i = \pm 1$. The equation of a hyperplane is

$$\langle w, x \rangle + b = 0$$

where $w \in \mathbb{R}^d$, $\langle w, x \rangle$ is the inner (dot) product of $w$ and $x$, and $b$ is real.

FIGURE 1.

SVM feature classifier.

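The hyperplanes $H$ are defined such that:

$$\langle w, x_i \rangle + b \geq +1 \quad \text{when } y_i = +1$$

$$\langle w, x_i \rangle + b \leq -1 \quad \text{when } y_i = -1$$

$H_1$ and $H_2$ are the planes that are used to separate the graft images from the non-graft images:

$$H_1: \langle w, x_i \rangle + b = +1, \qquad H_2: \langle w, x_i \rangle + b = -1$$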
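The margin conditions for a linear SVM can be illustrated with a few lines of code. This is a minimal sketch with made-up two-dimensional data, not the trained model from this work; the function names, the weight vector, and the choice of $+1$ for graft and $-1$ for non-graft are all assumptions for illustration.

```python
def decision_value(w, x, b):
    """Return <w, x> + b for one feature vector x."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, x, b):
    """Assign +1 (assumed here to mean graft) or -1 (non-graft) by the sign."""
    return 1 if decision_value(w, x, b) >= 0 else -1


def satisfies_margin(w, b, points, labels):
    """Check y_i * (<w, x_i> + b) >= 1 for every training point."""
    return all(y * decision_value(w, x, b) >= 1 for x, y in zip(points, labels))


if __name__ == "__main__":
    # Hypothetical hyperplane and toy training points.
    w, b = (1.0, 0.0), -2.0
    points = [(3.5, 1.0), (0.5, 2.0)]
    labels = [1, -1]
    print(satisfies_margin(w, b, points, labels))
    print(classify(w, (4.0, 0.0), b))
```

Points lying exactly on $H_1$ or $H_2$ give $y_i(\langle w, x_i \rangle + b) = 1$; these are the support vectors.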

Identifying the effect of a burn first requires identifying the local shape of the burned part, that is, how much of the area is burned. This can be determined using the HoG (Histogram of Oriented Gradients) feature, because HoG gives local shape information from regions within an image. After the local shape has been identified, color perception must be assessed.

Because human color perception indicates the burn type, an expert can easily identify a burn by its color: red skin, red-yellow skin, and white skin represent superficial dermal, deep dermal, and full-thickness burns, respectively. These different colors lead to different classifications of the burn image.

All of the colors in the spectrum, as well as colors outside human perception, are available in the $L^*a^*b^*$ color space. Color extraction is used to identify the colors; however, color intensity varies across the burn image, so it is necessary to calculate the mean of the channel. The mean values of the core fragments and the periphery fragments differ significantly, depending on the site location. A higher mean value indicates more coloration and corresponds to core fragments, whereas a low mean value indicates periphery fragments. Colors that appear more yellow have a higher mean. The colorfulness of an image is essential for discrimination when specifying skin colors.

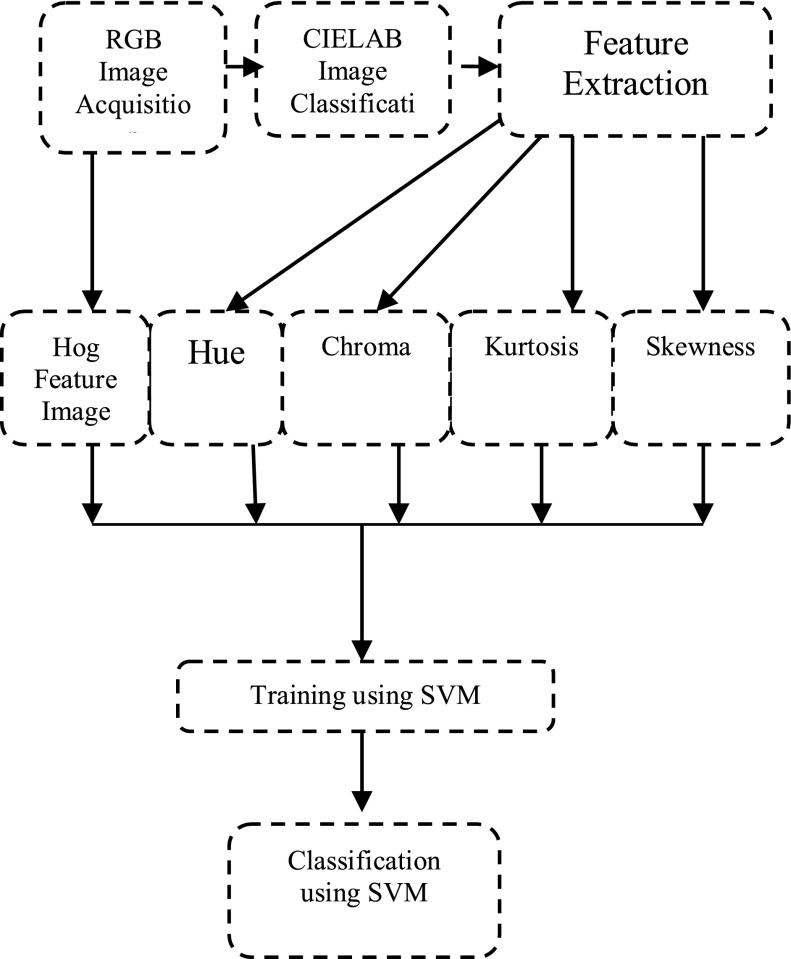

The accuracy of the system depends on selecting the right features for training and classification. The Feature Extraction Model (Figure 2) is trained with the features {HoG, Hue, Chroma, Kurtosis, Skewness}. The HoG texture feature is applied to the true color image because its features encode local shape information from regions within an image.

FIGURE 2.

Feature extraction model.

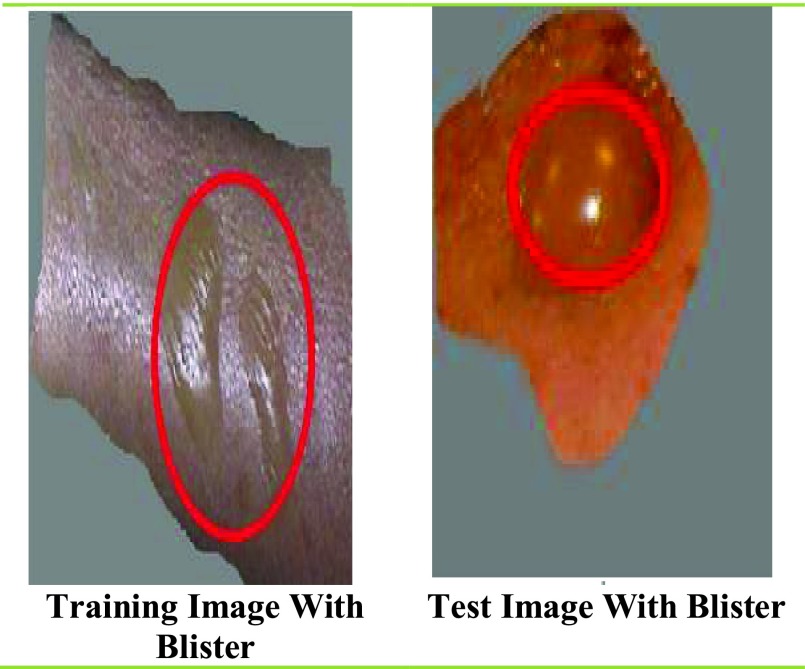

In the present study, the HoG feature is used to detect blisters in training and test images in order to classify the burn. HoG is a dense feature extraction method for images. It is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization for improved accuracy. It considers all locations in the image for feature extraction, whereas methods such as SIFT (Scale-Invariant Feature Transform) consider only the local neighbourhood of key points.
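To make the idea of a histogram of oriented gradients concrete, the sketch below computes the orientation histogram of a single grayscale cell. It is a deliberately simplified illustration and not the routine used in this work: it assumes central-difference gradients, nine unsigned orientation bins, no bin interpolation, and a plain L2 normalization of one cell instead of overlapping block normalization. The function name is hypothetical.

```python
import math


def hog_cell_histogram(cell, bins=9):
    """Histogram of unsigned gradient orientations for one grayscale cell.

    `cell` is a list of rows of intensities. Only interior pixels are used,
    so the cell must be at least 3x3.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    rows, cols = len(cell), len(cell[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]   # horizontal gradient
            gy = cell[r + 1][c] - cell[r - 1][c]   # vertical gradient
            magnitude = math.hypot(gx, gy)
            # Fold the angle into [0, 180) so opposite directions share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    # L2-normalize; fall back to 1.0 for a completely flat cell.
    norm = math.sqrt(sum(h * h for h in hist)) or 1.0
    return [h / norm for h in hist]


if __name__ == "__main__":
    vertical_edge = [[0, 0, 10, 10] for _ in range(4)]
    print(hog_cell_histogram(vertical_edge))
```

A vertical intensity edge puts all of its gradient energy into the first bin, while a flat region contributes nothing, which is why gradient histograms respond to shapes such as blister outlines.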

The $L^*a^*b^*$ colour space includes all colours in the spectrum, as well as colours outside human perception. It is also device independent and has an unlimited number of possible representations of colours. Therefore the images with Red, Green, Blue (RGB) channels are transformed into the $L^*a^*b^*$ colour space. The actual colour information is extracted from the $a^*$ channel and the $b^*$ channel of the $L^*a^*b^*$ colour space.

After that, the color intensity is smoothed using the mean values of channels $a^*$ and $b^*$. The colourfulness of an image is essential for specifying colours of skin. Chroma provides the colourfulness of an image, and hue gives the closest color match, that is, the color the region most closely resembles.

Hue is calculated as:

$$h = \tan^{-1}\left(\frac{b}{a}\right)$$

where $a$ and $b$ represent the $a^*$ channel and the $b^*$ channel of $L^*a^*b^*$.

The severity of a burn depends on the purity and saturation of the color in the image, which is identified by the chroma.

Chroma is calculated as:

$$C = \sqrt{a^2 + b^2}$$
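Hue and chroma follow directly from the $a^*$ and $b^*$ values. The sketch below uses the standard CIELAB definitions; the use of `atan2` (so that the hue angle lands in the correct quadrant) and the conversion to degrees in the range 0 to 360 are assumptions of this example, and the function name is hypothetical.

```python
import math


def hue_and_chroma(a_star, b_star):
    """Return (hue angle in degrees, chroma) for one pair of a*, b* values."""
    hue_deg = math.degrees(math.atan2(b_star, a_star)) % 360.0
    chroma = math.hypot(a_star, b_star)  # sqrt(a*^2 + b*^2)
    return hue_deg, chroma


if __name__ == "__main__":
    # Hypothetical mean a*, b* values of a reddish-yellow region.
    print(hue_and_chroma(3.0, 4.0))
```

In practice the function would be applied to the mean $a^*$ and $b^*$ values of a region, giving one hue and one chroma feature per image.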

Burn intensity must be identified in order to classify the burn. In this stage, the variance of the $a^*$ channel is calculated so that the intensity from green ($-a$) to red ($+a$) can be determined for distinguishing burn images.

Burn depth is one of the most important indicators of burn severity. The kurtosis and skewness of a channel are calculated to support this information. Skewness describes the shape of the data distribution and is used to estimate the size of the burn, while kurtosis gives the degree of peakedness, which helps to find the depth of the burn.

The kurtosis of a distribution is defined as:

$$k = \frac{E(x - \mu)^4}{\sigma^4}$$

where $\mu$ is the mean of $x$, $\sigma$ is the standard deviation of $x$, and $E(\cdot)$ denotes the expected value. For a channel $A$, $x$ ranges over the pixel values of $A$ and $\mu$ is the mean of $A$. Skewness is calculated as:

$$s = \frac{E(x - \mu)^3}{\sigma^3}$$
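The variance, skewness, and kurtosis features can all be written in terms of central moments of the channel values. The following is a minimal sketch, assuming population (divide-by-N) moments and a flat list of pixel values; the function names are hypothetical and the sample data is made up.

```python
def central_moment(values, order):
    """Population central moment of the given order."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** order for v in values) / n


def variance(values):
    return central_moment(values, 2)


def skewness(values):
    """E(x - mu)^3 / sigma^3; undefined for constant data."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def kurtosis(values):
    """E(x - mu)^4 / sigma^4; undefined for constant data."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2


if __name__ == "__main__":
    # Hypothetical flattened a* channel values.
    channel = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(variance(channel), skewness(channel), kurtosis(channel))
```

A symmetric set of values gives zero skewness, and a long tail toward high values gives positive skewness, so the sign of the feature indicates on which side of the mean the channel values are spread.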

IV. Training Using SVM

Features of burn images are calculated and used in SVM for training and classifications. The accuracy of classification is dependent on the best feature selected from the images.

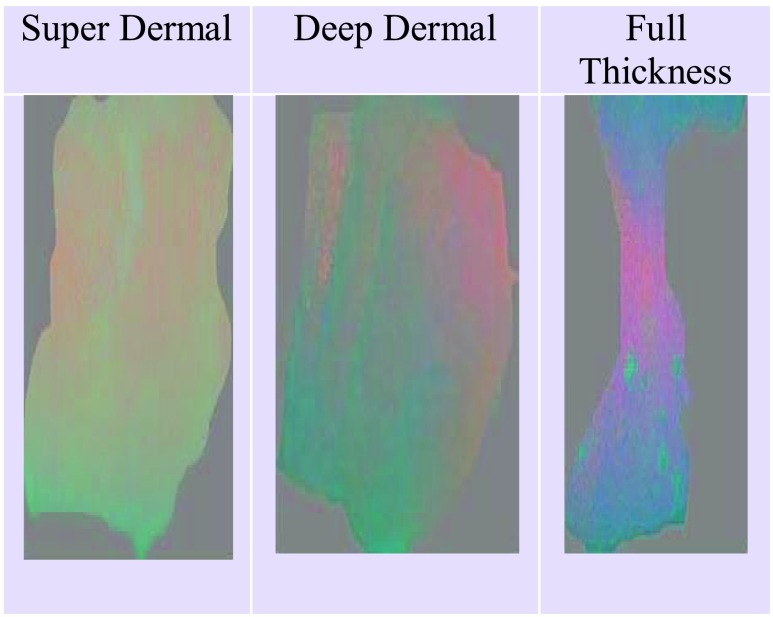

The $L^*a^*b^*$ color space of an input image is shown in figure 3. After the pre-processing from RGB space to $L^*a^*b^*$ space, a plane related to the chrominance of the $L^*a^*b^*$ space is selected for point extraction.

FIGURE 3.

Converted images from RGB to $L^*a^*b^*$ image.

Classification is based on training the system with grafting and non-grafting images. The model must differentiate between burns that require grafting and those that do not. As suggested by physicians, superficial dermal burns do not require grafting, but deep dermal and full-thickness burns do. The data set is therefore divided into two classes: class 1 contains superficial dermal images, and class 2 contains deep dermal and full-thickness images. The model is then trained with the features {HoG, Hue, Chroma, Kurtosis, Skewness}.

V. Results

Data Set: The model is tested on a standard dataset that provides 20 bitmap images, ranging in size from 64 kB to 1164 kB, for training and 74 JPEG images, ranging in size from 3 kB to 78 kB, for testing. This database belongs to the Biomedical Image Processing (BIP) Group from the Signal Theory and Communications Department (University of Seville, Spain) and Virgen del Rocío Hospital (Seville, Spain) [19].

The experiments were performed on an i7 processor with 8 GB RAM and implemented in MATLAB R2016a.

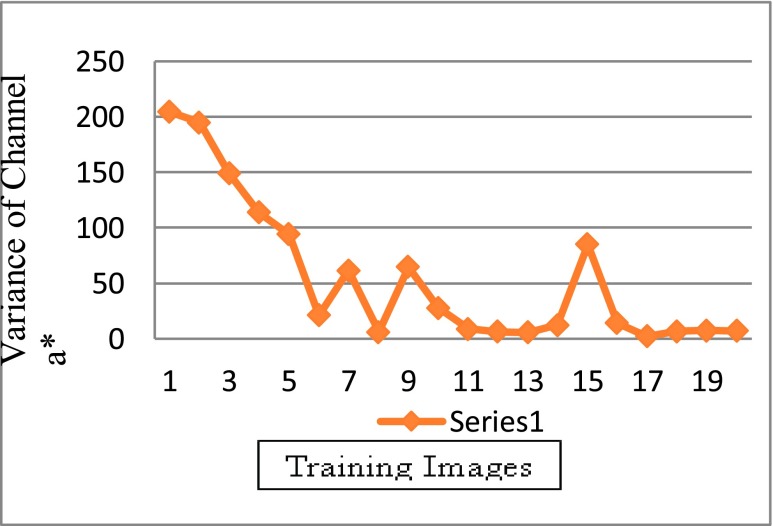

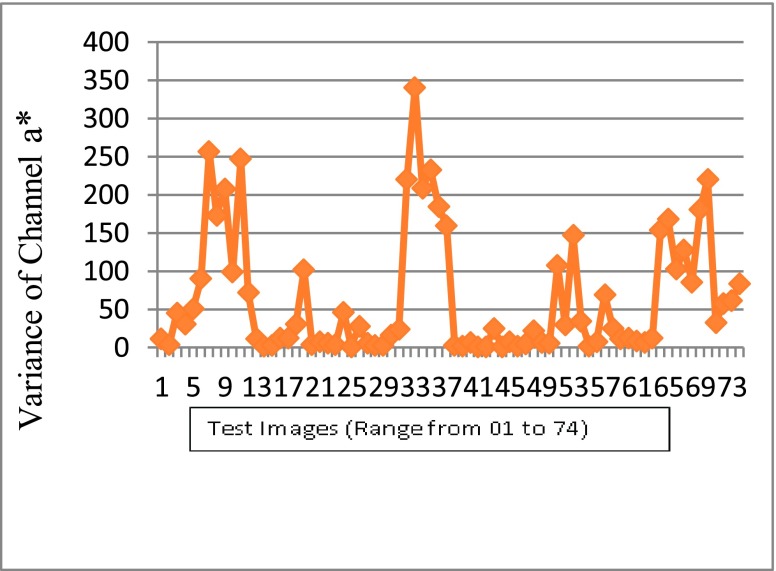

The results in the following graphs are based on the training and test data. According to the experimental analysis, the variance of the red channel is highest in superficial dermal burns and gradually decreases in deep dermal and full-thickness burns.

The variance of channel $a^*$ for the training images is plotted in figure 4. In the figure, the images are indexed by $i$, where $i = 1$ to $20$: $i = 1$ to $9$ are superficial dermal, $i = 10$ to $14$ are deep dermal, and $i = 15$ to $20$ are full-thickness. It can be observed from figure 4 that most of the highest peaks belong to superficial dermal burns, and the intensity decreases accordingly in deep dermal and full-thickness burns. The proposed method uses the variance of channel $a^*$ as a feature for training. This variance helps to decide whether grafting is required or not.

FIGURE 4.

Variation in intensity of red channel of SD, DD and FT.

The variance of the red channel for the test images is plotted in figure 5, which shows the same behavior in the test data set. This confirms that it is one of the best features for distinguishing different types of burn.

FIGURE 5.

Variation of red channel intensity in SD, DD, FT.

VI. Discussion

Based on clinical knowledge [1], types of burn are classified using the proposed method. The proposed method performs better than the pixel-wise method [6] by selecting image-wise texture and color features for training and classification.

Sabeena and Kumar [8] differentiated normal images and burn images using SVM. This work extends that approach by using SVM to differentiate burns that require grafting from those that do not. Suvarna and Niranjan [9] suggested that SVM provides better results than other techniques. With appropriate feature selection, this was also found to be the case in this work.

Another important aspect of a burn is its severity, which is determined by its depth. The proposed method uses kurtosis to measure the depth of the burn. Acha et al. [10] used a Fuzzy-ARTMAP neural network and SVM to find the depth of the burn, but kurtosis provides the depth more accurately than [10], because the proposed method can classify burns of different degrees.

The severity of a burn depends on its degree, which is used to calculate its depth. Recognizing burn images and identifying the degree of burn using a Convolutional Neural Network (CNN) helps to classify the degree of burn and to reach a suitable medical decision [11].

Table 1 illustrates the results of the proposed method. Compared with the other techniques discussed in the literature survey [17], [18], the results are better in accuracy, precision, F1 score, and specificity, although recall is lower (0.88 versus 0.97). The accuracy is 98% in the training phase and 82.43% in the testing phase. Previous methods achieved 79–80% accuracy in the testing phase.

TABLE 1. Comparison of Multidimensional Scaling Analysis or MDS [17] and Proposed SVM-Based Method.

| Metric | Proposed Method | MDS Approach |
|---|---|---|
| Accuracy (%) | 82.43 | 79.73 |
| Precision | 0.82 | 0.73 |
| F1 Score | 0.85 | 0.83 |
| Recall | 0.88 | 0.97 |
| True positives (TP) | 36 | 38 |
| False positives (FP) | 8 | 14 |
| False negatives (FN) | 5 | 1 |
| True negatives (TN) | 25 | 21 |
| Sensitivity (S, %) | 87.80 | 97.0 |
| Specificity (E, %) | 83.33 | 60.0 |
| True-Positive Rate (%) | 87.80 | - |
| False-Positive Rate (%) | 24.2 | - |
| False-Negative Rate | 0.07 | - |
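The summary metrics in Table 1 can be recomputed from the four confusion counts. The sketch below uses the standard textbook definitions, which are an assumption about how the table was produced; the function name is hypothetical. Under these definitions the accuracy, precision, recall, and F1 values match the table, while specificity evaluates to 25/33 (about 75.8%) and the false-negative rate to 5/41 (about 12.2%), so the corresponding table entries may follow a different convention.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)          # also called sensitivity or TPR
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "specificity": tn / (tn + fp),
        "false_positive_rate": fp / (fp + tn),
        "false_negative_rate": fn / (fn + tp),
    }


if __name__ == "__main__":
    # Counts taken from Table 1.
    proposed = confusion_metrics(tp=36, fp=8, fn=5, tn=25)
    mds = confusion_metrics(tp=38, fp=14, fn=1, tn=21)
    for name, metrics in (("proposed", proposed), ("MDS", mds)):
        print(name, {k: round(v, 4) for k, v in metrics.items()})
```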

In the training phase, the accuracy of the proposed method is much better because of the HoG feature, which accurately detects the blisters present in superficial dermal burns. Blisters are the initial symptom of a superficial dermal burn: most burns affect the superficial part of the skin, which leads to blisters. This basic and important feature of burns was not addressed by any of the other papers.

Separation of the burn image is also required to check the color intensity, as variation in color intensity provides a better separation; this is captured by the variance of $a^*$, i.e. the variance of the red channel. The variance of the red channel is highest in superficial dermal burns and gradually decreases in deep dermal and full-thickness burns. In Table 1, the proposed method yields 8 false positives (FP), considerably fewer than the 14 false positives reported in the previously published work of Serrano et al. [17].

The novelty of the paper is that automatic burn classification is performed on the basis of several different features. The system classifies burns with high accuracy because of the features selected for training and classification in the current approach. The analysis phase of the experiment showed that proper training gives the right output and improves accuracy.

Some previous works [17] did not use any method to identify blisters in the images. Here, a separate procedure is applied to identify blisters, which improves the training. Blisters require a method that can easily identify their shape and clarity in the images.

The HoG method is appropriate for distinguishing such features because HoG features are based on histograms of gradient directions. Image gradients are more useful for finding object shape than flat regions are. The training set contains burn images with blisters, and the HoG feature is able to classify these images correctly. The test data set contains 4 burn images with blisters, all of which are correctly classified by the automated system. Images with blisters from the training and test data sets are shown in figure 6. The variance of red channel intensity enhances performance, as shown in figure 4 for the training data set and figure 5 for the test data set.

FIGURE 6.

Training and test image with blister.

Experimental results for the test data set, which contains 74 JPEG images with sizes ranging from 3 kB to 78 kB, are shown in Table 2. Of the 74 images, 33 are superficial dermal, which did not require grafting, and the remaining 41 are deep dermal and full-thickness, which required grafting.

TABLE 2. Confusion Matrix of Test Data Set.

| Actual \ Predicted | Non-Graft (SD) | Graft (DD & FT) |
|---|---|---|
| Non-Graft (SD) | 25 | 8 |
| Graft (DD & FT) | 5 | 36 |

The main advantage of the proposed method is that the features selected for the SVM classifier are able to classify burn images into those that require a graft and those that do not. Since images are captured under different physical conditions with different devices, automatic analysis of the images is difficult. Nevertheless, the proposed method has shown higher accuracy than previous methods in detecting and classifying burn images.

VII. Conclusion

Machine learning methods can be employed to automate human burn image classification. The proposed method combines feature extraction with a classification model that exploits the discriminative power of numerical features to perceive the burn and its intensity. Selecting an appropriate subset of features further improves the discrimination.

Usually, only burn surgeons can accurately differentiate burns based on their clinical experience. The accuracy of detecting and classifying burns therefore varies from 64–76% for experienced burn surgeons to 50% for inexperienced ones.

This paper describes work on burn depth estimation, specifically the classification of burns into those that require a graft and those that do not. The proposed method improves detection and classification for both graft and non-graft burns. Measured against the ground truth, it achieved an accuracy of 82.43%, which is higher than the 79.73% achieved by the previous MDS method.

In the method, the physical features of the burn are converted into logical features. In the initial stage, color imaging is used to differentiate between healthy skin and the burn wound. The learning procedure for burn image classification is supervised.

The accuracy of the proposed method is higher than that reported in the available literature, but the method still needs to be tested on a larger data set. The accuracy could also be improved further by selecting other features, such as texture and color. Finally, future studies could test other approaches, such as data mining and artificial intelligence methods [20], to see whether they are effective in detecting and classifying burn wounds.

References

- [1] Haller H. L., Giretzlehner M., Dirnberger J., and Owen R., “Medical documentation of burn injuries,” in Handbook of Burns, Jeschke M. G., Kamolz L. P., Sjöberg F., and Wolf S. E., Eds. Vienna, Austria: Springer, 2012. [Online]. Available: https://link.springer.com/chapter/10.1007/978-3-7091-0348-7_8#citeas. doi: 10.1007/978-3-7091-0348-7_8.

- [2] Badea M.-S., Vertan C., Florea C., Florea L., and Bǎdoiu S., “Automatic burn area identification in color images,” in Proc. Int. Conf. Commun. (COMM), 2016, pp. 65–68.

- [3] Jaskille A. D., Shupp J. W., Jordan M. H., and Jeng J. C., “Critical review of burn depth assessment techniques: Part I. Historical review,” J. Burn Care Res., vol. 30, no. 6, pp. 937–947, Nov-Dec 2009.

- [4] Atiyeh B. S., Gunn S. W., and Hayek S. N., “State of the art in burn treatment,” World J. Surg., vol. 29, pp. 131–148, Feb. 2005.

- [5] King D. R. et al., “Surgical wound debridement sequentially characterized in a porcine burn model with multispectral imaging,” Burns, vol. 41, no. 7, pp. 1478–1487, 2015.

- [6] Li W. et al., “Burn injury diagnostic imaging device’s accuracy improved by outlier detection and removal,” Proc. SPIE, vol. 9472, May 2015, Art. no. 947206.

- [7] Rangaraju L. P., Kunapuli G., Every D., Ayala O. D., Ganapathy P., and Mahadevan-Jansen A., “Classification of burn injury using Raman spectroscopy and optical coherence tomography: An ex-vivo study on porcine skin,” Burns, vol. 45, no. 3, pp. 659–670, May 2019.

- [8] Sabeena B. and Kumar P. D. R., “Diagnosis and detection of skin burn analysis segmentation in colour skin images,” Int. J. Adv. Res. Comput. Commun. Eng., vol. 6, no. 2, pp. 369–374, 2017.

- [9] Suvarna M. and Niranjan U. C., “Classification methods of skin burn images,” Int. J. Comput. Sci. Inf. Technol., vol. 5, no. 1, p. 109, 2013.

- [10] Acha B., Serrano C., Palencia S., and Murillo J. J., “Classification of burn wounds using support vector machines,” Proc. SPIE, vol. 5370, pp. 1018–1026, May 2004.

- [11] Tran H. S., Le T. H., and Nguyen T. T., “The degree of skin burns images recognition using convolutional neural network,” Indian J. Sci. Technol., vol. 9, no. 45, pp. 1–6, 2016.

- [12] Li H. Y., “Skin burns degree determined by computer image processing method,” Phys. Procedia, vol. 33, pp. 758–764, Jan. 2012. doi: 10.1016/j.phpro.2012.05.132.

- [13] Kuan P. N., Chua S., Safawi E. B., Wang H. H., and Tiong W., “A comparative study of the classification of skin burn depth in human,” J. Telecommun., Electron. Comput. Eng., vol. 9, no. 2, pp. 15–23, 2010.

- [14] Rangayyan R. M., Acha B., and Serrano C., Color Image Processing With Biomedical Applications. Bellingham, WA, USA: SPIE Press, 2011.

- [15] Hardwicke J., Thomson R., Bamford A., and Moiemen N., “A pilot evaluation study of high resolution digital thermal imaging in the assessment of burn depth,” Burns, vol. 39, pp. 76–81, Feb. 2013.

- [16] Devlin J. B. and Herrmann N. P., “Bone colour,” in The Analysis of Burned Human Remains. Amsterdam, The Netherlands: Elsevier, 2015.

- [17] Serrano C., Boloix-Tortosa R., Gómez-Cía T., and Acha B., “Features identification for automatic burn classification,” Burns, vol. 41, no. 8, pp. 1883–1890, 2015.

- [18] Gonzalez R. C. and Woods R. E., Digital Image Processing, 3rd ed. Upper Saddle River, NJ, USA: Prentice-Hall, 2008.

- [19] Biomedical Image Processing (BIP) Group From the Signal Theory and Communications Department (University of Seville, Spain) and Virgen del Rocío Hospital (Seville, Spain). Burns BIP_US Database. Accessed: Nov. 1, 2018. [Online]. Available: http://personal.us.es/rboloix/Burns_BIP_US_database.zip

- [20] Mishra S., Sagban R., Yakoob A., and Gandhi N., “Swarm intelligence in anomaly detection systems: An overview,” Int. J. Comput. Appl., 2018. doi: 10.1080/1206212X.2018.1521895.