Abstract

Background

Implementation strategies are essential for promoting uptake of evidence-based practices and ensuring that patients receive optimal care. Yet strategies differ substantially in their intensity and feasibility. Lower-intensity strategies (e.g., training, technical support) are commonly used but may not be sufficient for all clinics. Limited research has examined the comparative effectiveness of augmentations to low-level implementation strategies for clinics that do not respond to them.

Objectives

To compare two augmentation strategies for improving uptake of an evidence-based collaborative care model (CCM), in terms of 18-month outcomes for patients with depression, at community-based clinics that were non-responsive to lower-level implementation support.

Research Design

Providers initially received support using a low-level implementation strategy, Replicating Effective Programs (REP). After 6 months, non-responsive clinics were randomized to add either External Facilitation (REP+EF) or External and Internal Facilitation (REP+EF/IF).

Measures

The primary outcome was patient SF-12 mental health score at month 18. Secondary outcomes were PHQ-9 depression score at month 18 and receipt of the CCM during months 6 through 18.

Results

Twenty-seven clinics were non-responsive after 6 months of REP. Thirteen clinics (N=77 patients) were randomized to REP+EF and 14 (N=92) to REP+EF/IF. At 18 months, patients in the REP+EF/IF arm had worse SF-12 (diff=8.38; 95% CI=3.59, 13.18) and PHQ-9 scores (diff=1.82; 95% CI=−0.14, 3.79), and lower odds of CCM receipt (OR=0.67; 95% CI=0.30, 1.49) than REP+EF patients.

Conclusions

Patients at sites receiving the more intensive REP+EF/IF saw less improvement in mood symptoms at 18 months than those receiving REP+EF, and were no more likely to receive the CCM. For community-based clinics, EF augmentation may be more feasible than EF/IF for implementing CCMs.

Trial Registration

Clinical Trials

Keywords: implementation, mood disorders, depression, bipolar disorder, health behavior change, collaborative care, care management

BACKGROUND

Effective implementation of evidence-based practices (EBPs) in community-based settings is slow,1 especially for mental disorders, resulting in poor outcomes and millions of research dollars wasted when EBPs fail to reach those most in need.2 As a prime example, the Collaborative Chronic Care Model (CCM) is an EBP shown to improve physical and mental health outcomes for persons with mood disorders,3 including depression and bipolar disorder,4 but it has not been widely implemented, as few strategies have proven effective for reducing provider and organizational barriers to CCM implementation, especially in lower-resourced community-based practices.5–14 As a consequence, mood disorders continue to result in significant functional impairment, high medical costs, and preventable mortality.15,16

Implementation strategies, which are theory-based tools designed to mitigate barriers to EBP adoption through provider behavior change and/or organizational capacity building, can potentially enhance adoption of EBPs and ultimately improve patient outcomes. While many implementation strategies have been identified,17,18 empirical testing of their comparative effectiveness in enhancing EBP uptake and improving patient outcomes is still needed. To date, most implementation strategy trials have been conducted in large health care systems such as the VA.19 However, individuals with mood disorders are primarily managed in solo or small group practices, where implementation strategies are both most needed and understudied.14

Two promising implementation strategies used previously to enhance uptake of mental health EBPs are Replicating Effective Programs (REP) and Facilitation. REP, based on the Centers for Disease Control and Prevention’s Research-to-Practice Framework20–22 and derived from Social Learning Theory23 and Rogers’ diffusion model,24 is a low-intensity, low-cost, and low-burden strategy that uses didactic training and brief technical support to customize EBPs to local needs and improve uptake of EBPs in community organizations.19,24–30 Facilitation, based on the Promoting Action on Research Implementation in Health Services (PARiHS) framework,31–35 is a higher-intensity strategy that mitigates organizational and leadership barriers not addressed by REP: it mentors providers in strategic thinking skills so that they can promote the EBP effectively in their organization, and it has been shown to enhance EBP uptake in large health care systems.30,36–39 We selected Facilitation as an augmentation to REP because we expected most barriers to CCM adoption to be organizational, related to workflow and stakeholder buy-in. Two Facilitation roles have recently been operationalized:40 an External Facilitator (EF) from the study team, and an Internal Facilitator (IF), who is a local employee of a site. EFs are EBP experts who provide mentoring in implementation across sites. In contrast, IFs are on-site clinical managers who support providers in implementing EBPs by helping them align EBP activities with provider and site leadership priorities.

As a low-cost and low-burden strategy, REP is easily scalable, even for a large number of community-based clinics. However, while REP alone may be enough for some clinics to adequately adopt a new EBP, evidence suggests that it is unlikely to be sufficient for EBP uptake and improved patient outcomes in many lower-resourced community-based clinics,37 and augmentations to REP may be necessary. For clinics where REP does not sufficiently improve EBP uptake, augmenting REP with EF may help to address organizational barriers; however, it may still be insufficient for penetrative and sustainable implementation.41 Augmenting REP with both EF and IF would be more intensive and more costly, but could further enhance EBP uptake by strengthening local EBP support more effectively than EF alone. However, EF/IF has not been compared with EF alone as an augmentation to improve EBP uptake and patient outcomes at sites non-responsive to REP.

This study reports 18-month outcomes from the Adaptive Implementation of Effective Programs Trial (ADEPT), a clustered, sequential multiple assignment randomized trial (SMART)42–44 designed to determine the comparative effectiveness of enhanced implementation strategies (EF, EF/IF) applied to community-based clinics not responding to initial low-level implementation support (REP) on outcomes among patients with depression. The CCM being implemented was Life Goals (LG). The primary aim of this study is to determine, among patients with depression at clinics that did not respond after 6 months of REP support for LG uptake, the effect of augmenting REP with EF/IF (REP+EF/IF) versus REP+EF on patient-level changes in mental health-related quality of life (SF-12 MH-QOL; primary outcome) and depression (PHQ-9) after 18 months, and on receipt of the CCM between months 6 and 18 (secondary outcomes). Clinical patient-level outcomes were selected based on prior studies that found significant improvement in quality of life and depression among patients who received LG.4,45,46

METHODS

Details regarding the ADEPT study design are available elsewhere.41 In brief, ADEPT included community-based mental health or primary care clinics in Michigan and Colorado that were non-responsive after six months of REP implementation support. Eligible sites had at least 100 unique patients diagnosed with or treated for unipolar or bipolar depression and could designate at least one provider with a mental health background to provide individual or group CCM sessions for adult patients. Life Goals, the EBP being implemented, is an evidence-based CCM that, in line with prior meta-analyses on the effectiveness of CCMs,3,47 focuses on three core CCM components: patient self-management, clinical information systems, and care management (Table 1). In previous trials,48,49 LG improved physical or mental health outcomes for bipolar as well as depressive disorders.4,37,48–51

Table 1:

Life Goals: Psychosocial Sessions and Care Management

| Component | Description |
|---|---|
| Life Goals Self-Management Program | Six individual or group psychosocial sessions (lasting ~50 or ~90 minutes each, respectively) focused on active discussions of personal goals, mental health symptoms, stigma, and health behaviors. The program uses a bookend approach in which participants complete the “Introduction” session first and the “Managing Your Care” session last. The four sessions in between are customizable to the individual or the group and include mental health topics (e.g., Depression, Anxiety, Mania, Psychosis) and wellness topics (e.g., physical activity, nutrition, sleep, substance use). A sample program with example customizable sessions is listed below. |
| Session 1: Introduction | Self-management; Collaborative care; Factors impacting mental health; Understanding stigma; Personal values; Goal setting |
| Session 2: Customizable (e.g., Depression: Identifying symptoms and triggers) | Identify personal symptoms and triggers of depression |
| Session 3: Customizable (e.g., Depression: Managing the symptoms) | Identify responses to symptoms of depression; Develop personal action plan for managing symptoms in the future |
| Session 4: Customizable (e.g., Foods and Moods) | Understanding effects of mental health on eating habits; Identify triggers of high-risk eating; Identify current eating habits and areas for improvement |
| Session 5: Customizable (e.g., Sleep and Mood) | Understanding effects of sleep on health; Identify current sleep habits; Relationship between daily routine and biological rhythms; Planning for improvements in sleep |
| Session 6: Managing Your Care | Building behavior change goals; Relapse prevention and monitoring |
| Care Management* | Monthly individualized follow-up contacts for 6 months to support Life Goals session goals, reinforce lessons learned in Life Goals sessions, and monitor whether further clinical intervention is needed |
| Provider Decision Support* | Dissemination of knowledge regarding best-practice treatments for mental and physical health conditions, either in-house or via consultation |

*Care Management and Decision Support are components of the Life Goals model but were not required components of this project.

The study was approved by local institutional review boards and registered at ClinicalTrials.gov.

Study Design and Implementation Strategies

Run-in phase with all sites

The full study design is provided in Appendix Figure 1. In the run-in phase, all sites received REP24–27 for six months.41 Through REP, providers received an LG manual, secure access to a website with LG materials, a one-day LG training that also covered how to tailor program delivery (e.g., individual vs. group sessions, module selection) and advertising to site needs,31,37,52,53 and ongoing assistance through monthly site reports, quarterly newsletters, and conference calls.

Trial phase with only non-responder sites

After six months, sites were evaluated for response to REP, and non-responsive sites entered the trial. Sites were considered non-responsive to REP if, after 6 months, either <10 patients were receiving LG or <50% of patients receiving LG had received ≥3 sessions, considered the minimum dose for clinically significant results.54–58 This definition of response was intended to identify sites as needing additional implementation support if they failed either to reach a breadth of patients or to deliver an adequate dose to treated patients, and was based on prior research showing that sites that failed to reach this level of implementation in the first six months were unlikely to achieve widespread adoption of LG without additional support.4,45,46
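Because this tailoring variable drives the second-stage decision, it can help to see it written as an explicit rule. The sketch below is illustrative only: the function name `is_non_responder` and its input format (a list of LG sessions delivered to each patient by month 6) are hypothetical, and it encodes the definition as stated here: fewer than 10 patients receiving LG, or fewer than 50% of treated patients receiving at least 3 sessions.

```python
from typing import Sequence


def is_non_responder(
    sessions_per_patient: Sequence[int],
    min_patients: int = 10,
    min_sessions: int = 3,
    min_share: float = 0.5,
) -> bool:
    """Return True if a site would be classified as non-responsive to REP at month 6.

    sessions_per_patient: hypothetical input giving the number of LG sessions each
    patient had received by month 6; zeros are treated as not receiving LG.
    """
    # Patients "receiving LG" are those with at least one session.
    treated = [s for s in sessions_per_patient if s > 0]
    # Criterion 1: LG reached fewer than `min_patients` patients.
    if len(treated) < min_patients:
        return True
    # Criterion 2: fewer than `min_share` of treated patients received an adequate dose.
    adequate = sum(1 for s in treated if s >= min_sessions)
    return adequate / len(treated) < min_share


# 12 treated patients, only 5 of whom had >= 3 sessions (5/12 < 50%).
print(is_non_responder([4, 3, 3, 5, 6, 1, 1, 2, 2, 1, 2, 1]))  # True
```

Treating the thresholds as parameters makes it easy to compare this rule with alternative cut-offs, for example the original protocol definition discussed under Limitations.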

At month 6, non-responding sites were randomized to receive either EF, in which a study-team-employed facilitator provided mentoring to providers on LG adoption, or EF/IF, in which a clinical manager was also identified at each site to assist with day-to-day implementation and align LG with site priorities.38 The EF was study-funded and trained in Facilitation through the Quality Enhancement Research Initiative (QUERI) for Team-based Behavioral Health. For EF/IF sites, IF identification began at training, with providers asked to identify individuals who can generally “get something done.” EF/IF sites were offered $5,500 for each six-month period to protect IF time. After IF selection, the EF and site IFs held biweekly calls for a minimum of 12 weeks, working with site providers to implement LG.

Randomizations were stratified by state, practice type (primary care or mental health),59,60 and site-averaged Month 0 MH-QOL (low (<40) vs. high (≥40)) using stratified permuted-block random allocation lists. The study analyst generated randomizations using SAS and intervention assignment was communicated by the study EF.
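The randomization scheme can be illustrated with a short sketch. The study used SAS; the Python below is only a hypothetical re-expression of stratified permuted-block allocation, in which the block size of 4, the seed, and the site field names (`state`, `practice_type`, `mean_mhqol`) are assumptions. Within each stratum (state × practice type × Month 0 MH-QOL stratum), sites are assigned in the order they become eligible, from a list built out of shuffled blocks that each contain equal numbers of both arms.

```python
import random
from collections import Counter, defaultdict

ARMS = ("REP+EF", "REP+EF/IF")


def mhqol_stratum(site_mean_mhqol: float) -> str:
    # Site-averaged Month 0 MH-QOL: low (<40) vs. high (>=40).
    return "low" if site_mean_mhqol < 40 else "high"


def permuted_block_list(n_needed: int, block_size: int, rng: random.Random) -> list[str]:
    """Concatenate shuffled blocks, each holding equal numbers of both arms."""
    if block_size % len(ARMS) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    allocations: list[str] = []
    while len(allocations) < n_needed:
        block = list(ARMS) * (block_size // len(ARMS))
        rng.shuffle(block)
        allocations.extend(block)
    return allocations


def randomize_sites(sites: list[dict], block_size: int = 4, seed: int = 12345) -> dict:
    """Assign each site to an arm within strata (hypothetical block size and seed)."""
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for site in sites:
        key = (site["state"], site["practice_type"], mhqol_stratum(site["mean_mhqol"]))
        by_stratum[key].append(site["id"])
    assignment = {}
    # Sort strata so the allocation lists are reproducible for a given seed.
    for key in sorted(by_stratum):
        site_ids = by_stratum[key]
        for site_id, arm in zip(site_ids, permuted_block_list(len(site_ids), block_size, rng)):
            assignment[site_id] = arm
    return assignment


# Usage: eight hypothetical Michigan mental health sites in the low MH-QOL stratum.
demo_sites = [{"id": f"site{i}", "state": "MI", "practice_type": "mental health",
               "mean_mhqol": 35.0} for i in range(8)]
print(Counter(randomize_sites(demo_sites).values()))
```

With only a handful of non-responding sites per stratum, the final block in a stratum is often incomplete, so balance within strata is approximate rather than exact.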

Patient Identification and Assessment

During the run-in phase, a key component of REP was to support provider identification of patients from current caseloads who met criteria for LG: (1) aged 21 and over; (2) primary diagnosis of either depression or bipolar disorder; (3) not currently enrolled in intensive mental health treatment; (4) no terminal illness/cognitive impairment that precludes participation in outpatient treatment; and (5) no guardian. For study purposes, they also had to be willing to complete brief surveys. Sites were asked to provide the names of at least 20 patients suitable for LG, whom the study survey coordinator then contacted to confirm eligibility and obtain consent. The survey coordinator, who was blinded to randomization, collected patient demographic and clinical assessments at Months 0, 6 (prior to identifying site response status and randomization), 12 and 18. Full assessment details can be found elsewhere,41 but all time points included clinical assessments for SF-12 MH-QOL,61 PHQ-9 for depression symptoms,62,63 and self-reported receipt of LG, as well as patient demographics.

Analyses

The intent-to-treat sample included all consented patients at sites that (1) identified ≥1 patient who consented to study participation, and (2) were non-responsive to REP after 6 months (i.e., failed to deliver LG to ≥10 patients or failed to deliver ≥3 LG sessions to 50% of treated patients). Descriptive statistics and bivariate analyses compared site and patient characteristics across the initial EF and EF/IF arms. The primary outcome analyses compared implementation strategies in non-responding sites initially augmenting REP with EF/IF versus REP+EF on longitudinal patient-level change in SF-12 MH-QOL and PHQ-9 scores and on self-reported LG receipt between months 6–18. For SF-12 and PHQ-9 analyses, outcomes were measured at months 0, 6 (pre-randomization), 12, and 18. The primary contrast is the between-groups difference in change from month 6 to month 18. For PHQ-9, we also examined remission (PHQ-9 < 5) and response (decrease of ≥50%). Analyses used piecewise linear mixed models, with the individual patient within a site as the unit of analysis. Fixed effects were included for the intercept, time during the first six months (not differentiated by treatment), time after randomization (months 6–18), and a group-by-time interaction for months 6–18, where group was an indicator of initial randomization to REP+EF/IF vs. REP+EF. Models included random effects for site and time and an unstructured within-person correlation structure for residual errors, and were adjusted for state, rural vs. urban location, and site-aggregated Month 0 SF-12 stratum. Practice type (primary care or mental health) was not adjusted for due to lack of variability. For receipt of LG between months 6–18, a binary logit model with a random intercept for site was estimated, including a fixed effect for treatment and adjusting for state, rural vs. urban location, and SF-12 stratum.
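For concreteness, one way to write the piecewise linear mixed model described above is shown below. This is our reading of the text rather than the authors' published specification: the symbols are introduced here, and representing the "random effects for site and time" as a site-specific intercept and time slope is an assumption.

$$
\begin{aligned}
Y_{ijt} ={}& \beta_0 + \beta_1 \min(t, 6) + \beta_2 \max(t - 6, 0) + \beta_3\, G_j \max(t - 6, 0) \\
&+ \boldsymbol{\gamma}^{\top} \mathbf{X}_j + u_{0j} + u_{1j}\, t + \varepsilon_{ijt}
\end{aligned}
$$

Here $Y_{ijt}$ is the SF-12 MH-QOL (or PHQ-9) score for patient $i$ at site $j$ in month $t \in \{0, 6, 12, 18\}$; $G_j = 1$ if site $j$ was randomized to REP+EF/IF and $0$ for REP+EF; $\mathbf{X}_j$ holds the adjustment covariates (state, rural vs. urban, Month 0 SF-12 stratum); $u_{0j}$ and $u_{1j}$ are site-level random effects; and each patient's residuals $(\varepsilon_{ij0}, \varepsilon_{ij6}, \varepsilon_{ij12}, \varepsilon_{ij18})$ have an unstructured covariance matrix. Because there is no group main effect, both arms share one trajectory through month 6, and under this parameterization the primary contrast (difference in change from month 6 to month 18) is

$$
\Delta = \beta_3 \,(18 - 6) = 12\,\beta_3 .
$$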

Sensitivity analyses examined separate time-by-treatment effects for the first six months and random slopes at the patient and site levels for the SF-12 and PHQ-9 models, a fixed effect for pre-randomization LG receipt in the LG receipt model, and the addition of controls for age and retirement status to address baseline imbalance.
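The PHQ-9 remission and response outcomes defined above reduce to two simple rules. In the sketch below the function names are hypothetical, and measuring the ≥50% decrease from the Month 0 score is an assumption, since the text does not name the reference time point. Integer arithmetic is used so that a decrease of exactly 50% counts as a response.

```python
def _check_phq9(score: int) -> None:
    # PHQ-9 totals range from 0 to 27 (nine items scored 0-3).
    if not 0 <= score <= 27:
        raise ValueError(f"PHQ-9 score out of range: {score}")


def phq9_remission(score: int) -> bool:
    """Remission: PHQ-9 total score below 5."""
    _check_phq9(score)
    return score < 5


def phq9_response(baseline: int, followup: int) -> bool:
    """Response: PHQ-9 decreased by at least 50% from the (assumed Month 0) baseline."""
    _check_phq9(baseline)
    _check_phq9(followup)
    if baseline == 0:
        return False  # no relative decrease is possible from a score of zero
    # (baseline - followup) / baseline >= 0.5, written without floating point.
    return 2 * (baseline - followup) >= baseline


print(phq9_remission(4), phq9_response(12, 6), phq9_response(12, 7))
```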

Power calculations have been described previously.41 The initial sample size was calculated for 1200 patients across 60 sites. Assuming a between-site correlation of 0.01 and a type I error rate of 5%, we had 94% power to detect an effect size of 0.23 in the difference in change in patient-level MH-QOL between months 6–18 for patients at sites initially randomized to REP+EF vs. REP+EF/IF. This estimate was conservative, as it did not account for an anticipated within-person correlation of 0.6 based on prior data.
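The role of the between-site correlation can be illustrated with the textbook design-effect approximation, in which clustering inflates variance by $1 + (m - 1)\rho$ for $m$ patients per site. This is not the authors' calculation: the sketch assumes 20 patients per site (1200/60), equal allocation, and a single two-sample comparison of a standardized difference, and it ignores repeated measures and the within-person correlation. Under these simplifications it gives roughly 95% power, in the same range as the reported 94%.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def approx_cluster_power(n_total: int, cluster_size: int, icc: float,
                         effect_size: float, z_alpha: float = 1.959964) -> float:
    """Approximate power for a two-arm comparison of a standardized mean difference.

    Simplified sketch: equal allocation, design effect 1 + (m - 1) * ICC, and a
    two-sided 5% test (the negligible opposite rejection tail is ignored).
    """
    design_effect = 1.0 + (cluster_size - 1) * icc
    n_eff_per_arm = n_total / design_effect / 2.0
    se = math.sqrt(2.0 / n_eff_per_arm)  # SE of a standardized difference in means
    return normal_cdf(effect_size / se - z_alpha)


# Assumed inputs: 1200 patients, 20 per site, ICC 0.01, effect size 0.23.
print(round(approx_cluster_power(1200, 20, 0.01, 0.23), 3))
```

Raising the assumed ICC shows how quickly clustering erodes power at a fixed number of patients.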

Missing Data

As planned,41 multiple imputation was used to address missing data including missingness due to treatment or study dropout; all analyses were performed with and without imputed data.
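The text does not state how estimates were combined across imputed datasets. Rubin's rules are the standard approach and are assumed in the sketch below (the function name `pool_rubin` is hypothetical): the pooled estimate is the mean across imputations, and the total variance adds the average within-imputation variance to the between-imputation variance inflated by $1 + 1/m$.

```python
import math
from statistics import mean, variance


def pool_rubin(estimates: list[float], variances: list[float]) -> tuple[float, float]:
    """Pool one parameter across m imputed datasets using Rubin's rules.

    estimates: point estimate from each imputed dataset.
    variances: squared standard error from each imputed dataset.
    Returns (pooled estimate, pooled standard error).
    """
    m = len(estimates)
    if m < 2 or len(variances) != m:
        raise ValueError("need at least two imputations with matching variances")
    q_bar = mean(estimates)          # pooled point estimate
    w = mean(variances)              # average within-imputation variance
    b = variance(estimates)          # between-imputation variance (n - 1 denominator)
    t = w + (1.0 + 1.0 / m) * b      # total variance
    return q_bar, math.sqrt(t)


print(pool_rubin([1.0, 2.0, 3.0], [0.5, 0.5, 0.5]))
```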

RESULTS

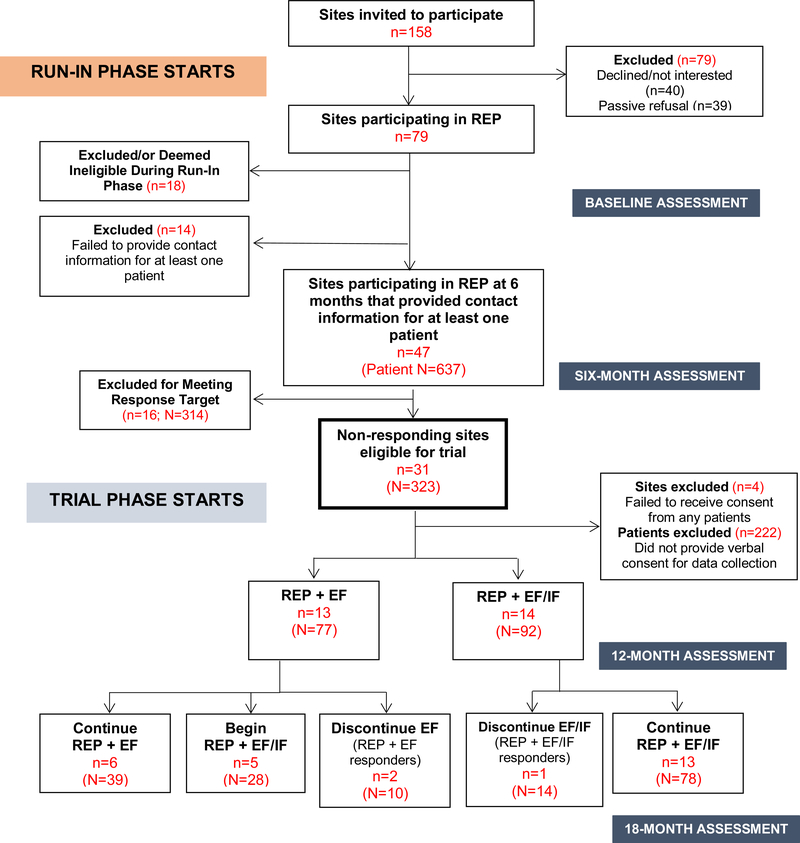

Of 158 sites approached, 79 opted to participate in the run-in phase. Forty-three (54%) had ≥1 patient identified and consented for study participation, making them eligible for inclusion in patient-level analyses. Twenty-seven of these 43 sites (63%) were deemed non-responsive to REP after six months and were included in the trial. Thirteen sites (patient N=77) were randomized to REP+EF and 14 to REP+EF/IF (patient N=92) (Figure 1). Post-randomization, 2 REP+EF sites and 5 REP+EF/IF sites dropped out of treatment, at which point EF and/or IF strategies were discontinued. EF logs showed that the EF followed the study protocol for all sites, spending a median of 41.6 and 26.8 minutes per month with EF/IF and EF sites, respectively.

Figure 1:

CONSORT diagram: patient outcomes for ADEPT sites

Non-responder clinic and patient characteristics

Fourteen sites (52%) were in Michigan, and the majority were rural (n=18; 67%). Twenty-six were community mental health clinics, and one was a primary care practice. Patients were predominantly female (74%) and white (79%). Few were employed (12%), and the majority reported having experienced homelessness at some point (59%) (Table 2). Across study arms, there were no significant differences in site characteristics, but REP+EF patients were significantly older (48.18 vs. 43.01 years) and more likely to be retired (18% vs. 4%) at Month 0.

Table 2:

Baseline Characteristics and Pre-Post Outcomes for Patient Participants Overall and By First Randomization

| Characteristic | Overall (N=169) | REP+EF (N=77) | REP+EF/IF (N=92) | p-value |
|---|---|---|---|---|
| BASELINE CHARACTERISTICS | | | | |
| Michigan, N (%) | 108 (64) | 52 (68) | 56 (61) | 0.369 |
| Rural site, N (%) | 129 (76) | 58 (75) | 71 (77) | 0.079 |
| Age in years, mean (SD) | 45.38 (11.43) | 48.2 (11.14) | 43.0 (11.19) | 0.003** |
| Physical health quality of life, mean (SD) | 37.60 (12.69) | 36.14 (11.83) | 38.82 (13.31) | 0.172 |
| Mental health quality of life, mean (SD) | 38.08 (13.07) | 39.75 (12.92) | 36.66 (13.10) | 0.135 |
| WHO-DAS, mean (SD) | 30.63 (10.67) | 30.93 (10.80) | 30.39 (10.61) | 0.753 |
| Female, N (%) | 124 (73) | 54 (70) | 71 (77) | 0.299 |
| White, N (%) | 134 (79) | 61 (79) | 73 (79) | 0.984 |
| College graduate, N (%) | 20 (12) | 10 (13) | 10 (11) | 0.671 |
| Employed, N (%) | 21 (12) | 10 (13) | 11 (12) | 0.840 |
| Retired, N (%) | 18 (11) | 14 (18) | 4 (4) | 0.004** |
| Lives alone, N (%) | 53 (31) | 25 (32) | 28 (30) | 0.777 |
| Ever homeless, N (%) | 101 (60) | 50 (65) | 51 (55) | 0.210 |
| OUTCOMES | | | | |
| Mental health quality of life, mean (SD), Baseline | 38.08 (13.07) | 39.75 (12.92) | 36.66 (13.10) | 0.135 |
| Mental health quality of life, mean (SD), Month 18 | 40.89 (12.40) | 45.57 (11.77) | 37.58 (11.85) | 0.001** |
| PHQ-9, mean (SD), Baseline | 12.08 (6.73) | 11.45 (6.46) | 12.61 (6.94) | 0.268 |
| PHQ-9, mean (SD), Month 18 | 9.11 (6.18) | 7.86 (5.93) | 10.05 (6.24) | 0.08 |
| PHQ-9 remission, N (%), Month 18 | 28 (17) | 15 (19) | 13 (14) | 0.352 |
| PHQ-9 response, N (%), Month 18 | 23 (14) | 16 (21) | 7 (8) | 0.013* |

Note: REP=Replicating Effective Programs; EF=External Facilitation; IF=Internal Facilitation. p-values are from t-tests (continuous variables) or chi-square tests (binary variables). Measures reflect raw data without imputation.

*p<0.05

**p<0.01
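The binary comparisons in Table 2 can be re-derived from the reported counts. The sketch below is an illustration, not the authors' code, and the function name is hypothetical. It applies an uncorrected Pearson chi-square test to the 2×2 table of arm by characteristic. For the Retired and PHQ-9 response rows it gives p ≈ 0.0037 and p ≈ 0.0129, which round to the reported 0.004 and 0.013.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Uncorrected Pearson chi-square test for the 2x2 table [[a, b], [c, d]].

    Rows are arms (REP+EF, REP+EF/IF); columns are (has characteristic, does not).
    Returns (chi-square statistic, two-sided p-value with 1 degree of freedom).
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p


# Retired: 14 of 77 (REP+EF) vs. 4 of 92 (REP+EF/IF).
print(pearson_chi2_2x2(14, 77 - 14, 4, 92 - 4))
# PHQ-9 response at Month 18: 16 of 77 vs. 7 of 92.
print(pearson_chi2_2x2(16, 77 - 16, 7, 92 - 7))
```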

Estimated Effects of EF vs. EF/IF

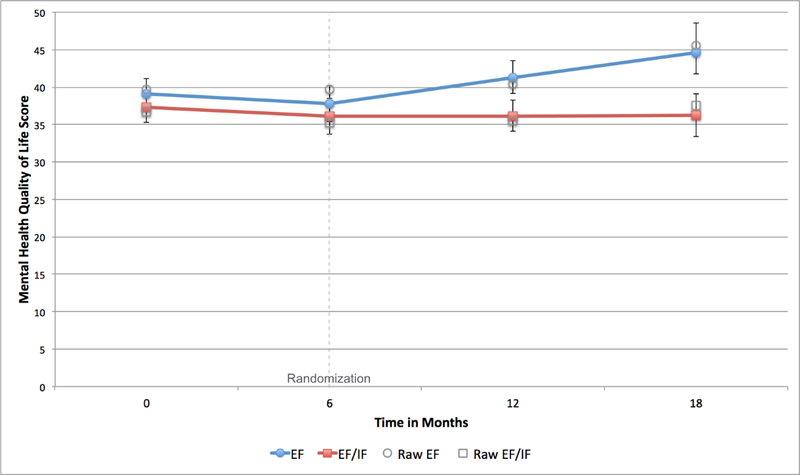

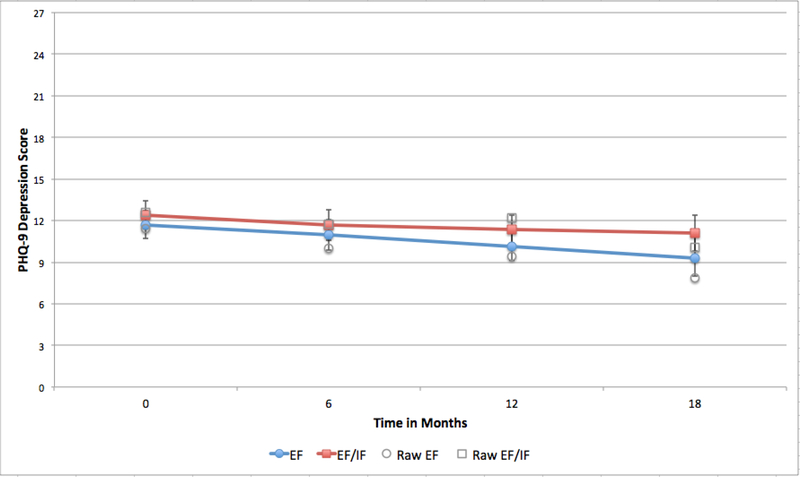

After adjusting for patient and site characteristics, analyses showed a significant difference in change in SF-12 MH-QOL between patients at sites initially randomized to REP+EF compared with REP+EF/IF (B=−0.55; 95% confidence interval (CI)=−0.97, −0.15) (Appendix Table 1), indicating that patients at REP+EF sites improved more than those at REP+EF/IF sites. Twelve months post-randomization, patients at REP+EF sites had average MH-QOL scores 8.38 points higher than those at REP+EF/IF sites (Figure 2). This difference was significant (95% CI=3.59, 13.18). Patients at REP+EF/IF clinics also had PHQ-9 scores that were 1.82 points higher than patients at REP+EF clinics, although this confidence interval included zero (95% CI=−0.14, 3.79) (Figure 3). A smaller proportion of patients in the REP+EF/IF arm also reported receiving LG between Months 6–18 (33% vs. 43% of REP+EF patients); however, in adjusted analyses this difference was not statistically significant (OR=0.67; 95% CI=0.30, 1.49).
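The reported intervals can be checked against one another with simple arithmetic. Assuming symmetric normal-approximation (Wald) intervals, which the mixed and logit models may not have used exactly (they could use t-based or profile intervals), the half-width of a 95% CI is about 1.96 standard errors, so an approximate two-sided p-value follows from the estimate and its interval. Odds ratios are handled on the log scale, where their intervals are symmetric. The helper name below is hypothetical.

```python
import math

Z975 = 1.959964  # 97.5th percentile of the standard normal distribution


def wald_p_from_ci(estimate: float, lower: float, upper: float,
                   log_scale: bool = False) -> float:
    """Approximate two-sided p-value (null = 0, or OR = 1) implied by a 95% Wald CI."""
    if log_scale:  # ratio measures are symmetric on the log scale
        estimate, lower, upper = math.log(estimate), math.log(lower), math.log(upper)
    se = (upper - lower) / (2.0 * Z975)
    z = estimate / se
    return math.erfc(abs(z) / math.sqrt(2.0))


print(wald_p_from_ci(8.38, 3.59, 13.18))                  # SF-12 MH-QOL difference
print(wald_p_from_ci(1.82, -0.14, 3.79))                  # PHQ-9 difference
print(wald_p_from_ci(0.67, 0.30, 1.49, log_scale=True))   # odds of LG receipt
```

Under this approximation, the SF-12 difference is well below the 0.05 threshold, while the PHQ-9 difference (p ≈ 0.07) and the odds ratio for LG receipt (p ≈ 0.33) are not.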

Figure 2:

Predicted and raw means for Mental Health Quality of Life over study period, by first randomization

Note: EF=External Facilitation; IF=Internal Facilitation. Raw means show values for non-imputed data with no adjustment. Error bars show 95% confidence interval for model predictions.

Figure 3:

Predicted and raw means for PHQ-9 over study period, by first randomization

Note: EF=External Facilitation; IF=Internal Facilitation. Raw means show values for non-imputed data with no adjustment. Error bars show 95% confidence interval for model predictions.

All results were unchanged by sensitivity analyses, including exclusion of imputed cases, allowing patient trajectories to differ during the first six months (pre-randomization), and adjusting for unbalanced patient characteristics (age, retired).

DISCUSSION

This paper describes primary analyses for the first clustered SMART to evaluate implementation of a CCM in community-based practices and inform understanding of the added value of different implementation strategy augmentations in real-world practices. Notably, there are few implementation strategy comparative effectiveness studies examining EBP uptake and patient outcomes in smaller, community-based practices, even though such practices serve a substantial proportion of patients with mood disorders.1,25 Previous implementation studies have focused on highly organized practices such as the VA or staff-model HMOs, and used more intensive implementation strategies such as systems redesign that are likely not feasible for smaller, lower-resourced practices that lack capacity to support large-scale quality improvement efforts.

Main Findings

The primary aim of this study was to evaluate whether augmenting REP with EF or EF/IF for sites non-responsive to REP alone would better improve patient mental health outcomes. As expected, the majority of sites participating in the study run-in phase failed to adequately implement LG under REP alone.64 Contrary to our hypotheses, though, as well as to other large-scale CCM implementation studies that have recommended intensive, local change agent-driven support,65 the simpler EF-only augmentation to REP consistently performed as well as, if not better than, the higher-intensity, greater-scope, and costlier EF/IF augmentation with respect to both clinic and downstream patient outcomes.

There are likely several reasons for these findings. First, the augmentations differed in their ease of scalability. EF proved relatively easy to scale up, with all 27 sites recording multiple interactions with the study EF. Despite prior effectiveness studies showing EF/IF to be an effective implementation strategy, in this community-based trial it proved difficult to scale up with fidelity. Several EF/IF sites had difficulty identifying someone to serve as an IF, and even after identification, not all IFs had the time or inclination to perform their duties. As a result, REP+EF/IF was implemented much more heterogeneously across sites than REP+EF. Unfortunately, because our data do not capture measures of IF quality, we cannot disentangle whether EF/IF is generally a less effective augmentation than EF or whether EF/IF is equally (or more) effective when high-quality, committed IFs are identified.

Second, while EF logs showed that the study EF spent more time with EF/IF than EF sites, the EF also performed multiple roles during EF/IF interactions. In addition to providing Facilitation and helping providers address implementation barriers, the EF used this interaction time to train the identified IF to take on IF duties. In effect, then, the EF/IF intervention delivered a reduced ‘dose’ of EF over the course of the intervention relative to EF alone. To the extent that EF was an active ingredient in improving outcomes, this lower dose may explain the lesser improvement for patients at EF/IF sites.

Third, recall that more EF/IF (n=5) than EF sites (n=2) dropped out of treatment post-randomization. This may indicate that augmenting REP with EF/IF was too burdensome for, or less well tolerated by, non-responding sites already struggling to establish a modicum of LG implementation. Further analyses will examine whether EF/IF was more effective when added as an augmentation to non-responsive sites initially randomized to EF that continued to be non-responsive after an additional six months.

Limitations and Lessons Learned

Our team learned a number of lessons in implementing this novel, large-scale, community-based implementation trial, some of which represent study limitations. Most notably, while we succeeded in recruiting our targeted number of sites for the run-in phase (N=79), we underestimated the number of sites that would drop out of the study (N=18) or would fail to identify patients suitable for LG (N=14) during this phase. Four additional sites had no patients consent to be part of the study (typically because they identified few patients).

Both of these issues relate to the importance of fostering early implementation buy-in from providers. With respect to site dropout, as with many implementation studies, most sites were recruited at the executive/administrative level, often with little input from frontline providers. For some sites, it was only after they began implementing LG through REP that key frontline staff realized that they did not have the capacity or capability to provide the intervention, leading to early treatment dropout or determinations of site ineligibility. Further, even among sites that remained in the study, low provider buy-in resulted in a failure even to identify patients who might benefit from LG during the run-in phase.

This shortcoming also limits the generalizability of our results, as identification and consent of at least one patient was a site-eligibility requirement for inclusion in patient-level analyses of non-responder sites. Thus, the results presented herein inform augmentations to REP (REP+EF or REP+EF/IF) only for non-responsive clinics that were able to identify at least one patient eligible for LG during the run-in phase using the criteria provided during REP. Further, the lack of common electronic medical records containing information on key mental health outcomes at many of our sites meant that we had to rely on providers to identify patients for outcomes tracking. Although we have no reason to believe that providers exposed to LG implementation under REP outside of this study would identify patients differently than the providers at the sites studied here, we acknowledge that this approach is less rigorous than sampling and following outcomes for all LG-eligible patients at non-responding sites. Future large-scale hybrid-type implementation trials could avoid these limitations by ensuring access to necessary patient outcomes from a common existing source (e.g., an electronic medical record).

Another lesson learned/limitation relates to our operationalization of a definition for non-response to REP after six months. The initial protocol41 defined non-response as <10 of a clinic’s identified patients receiving ≥3 LG sessions. As sites were expected to identify 20 patients, this definition was equivalent to <50% of identified patients receiving ≥3 LG sessions. However, executing this definition met with two major obstacles. First, as a number of sites failed to identify any patients, and more sites failed to identify 20 patients, there was no way to evaluate their response to REP under this definition. Second, while sites were able to keep track of how many patients were receiving LG and how many sessions, they were not able to confirm that these were the same patients that providers had identified as candidates for LG. To accommodate these obstacles but maintain the original spirit, the non-responder definition was changed to include sites that failed to deliver LG to ≥10 patients or failed to deliver ≥3 LG sessions to 50% of treated patients. In the future, it will be important for adaptive implementation interventions to employ tailoring variables (i.e., variables used to make changes in the provision of subsequent implementation strategies) that are easy to monitor and use.

Finally, relative to other CCM implementation initiatives, notably the COMPASS initiative, our sites saw less improvement in depression symptoms (e.g., 20% remission or response vs. 40%),66 and a number of sites remained non-adopters at study end. This suggests that while augmenting with EF/IF did not significantly improve patient outcomes relative to EF alone, community-based practices may require substantially more support to implement a complex CCM.

CONCLUSIONS

Large-scale efforts to implement EBPs like CCMs in community-based settings often face significant barriers and require additional implementation support. This study found that, for sites initially non-responsive to a low-level implementation strategy (REP), augmenting with EF resulted in as much or more improvement in mental health outcomes and patient-reported receipt of the CCM as the more costly augmentation with EF/IF. The results of this study inform efforts to implement EBPs in community-based practices, as well as efforts to further practical implementation in real-world healthcare settings.

Supplementary Material

Acknowledgements

This research was supported by the National Institute of Mental Health (R01 099898). The views expressed in this article are those of the authors and do not necessarily represent the views of the VA. The authors have no conflicts of interest, financial or non-financial, related to the methods described in this manuscript.

REFERENCES

- 1. Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009;36(1):24–34.

- 2. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(2):65–76.

- 3. Woltmann E, Grogan-Kaylor A, Perron B, Georges H, Kilbourne AM, Bauer MS. Comparative effectiveness of collaborative chronic care models for mental health conditions across primary, specialty, and behavioral health care settings: systematic review and meta-analysis. American Journal of Psychiatry. 2012;169(8):790–804.

- 4. Kilbourne AM, Li D, Lai Z, Waxmonsky J, Ketter T. Pilot randomized trial of a cross‐diagnosis collaborative care program for patients with mood disorders. Depression and Anxiety. 2013;30(2):116–122.

- 5. Roy-Byrne PP, Sherbourne CD, Craske MG, et al. Moving treatment research from clinical trials to the real world. Psychiatric Services. 2003;54(3):327–332.

- 6. Kilbourne AM, Schulberg HC, Post EP, Rollman BL, Belnap BH, Pincus HA. Translating evidence‐based depression management services to community‐based primary care practices. The Milbank Quarterly. 2004;82(4):631–659.

- 7. Wells K, Miranda J, Bruce ML, Alegria M, Wallerstein N. Bridging community intervention and mental health services research. American Journal of Psychiatry. 2004;161(6):955–963.

- 8. Hovmand PS, Gillespie DF. Implementation of evidence-based practice and organizational performance. The Journal of Behavioral Health Services & Research. 2010;37(1):79–94.

- 9. Goodman RM, Steckler A, Kegler M. Mobilizing organizations for health enhancement: theories of organizational change. Health Behavior and Health Education: Theory, Research, and Practice. 1997;2.

- 10. Kilbourne AM, Greenwald DE, Hermann RC, Charns MP, McCarthy JF, Yano EM. Financial incentives and accountability for integrated medical care in Department of Veterans Affairs mental health programs. Psychiatric Services. 2010;61(1):38–44.

- 11. Schutte K, Yano EM, Kilbourne AM, Wickrama B, Kirchner JE, Humphreys K. Organizational contexts of primary care approaches for managing problem drinking. Journal of Substance Abuse Treatment. 2009;36(4):435–445.

- 12. Kilbourne AM, Pincus HA, Schutte K, Kirchner JE, Haas GL, Yano EM. Management of mental disorders in VA primary care practices. Administration and Policy in Mental Health and Mental Health Services Research. 2006;33(2):208–214.

- 13. Insel TR. Translating scientific opportunity into public health impact: a strategic plan for research on mental illness. Archives of General Psychiatry. 2009;66(2):128–133.

- 14. Bauer MS, Deane Leader HU, Lai Z, Kilbourne AM. Primary care and behavioral health practice size: the challenge for healthcare reform. Medical Care. 2012;50(10):843.

- 15. Murray CJ, Lopez AD. Global mortality, disability, and the contribution of risk factors: Global Burden of Disease Study. The Lancet. 1997;349(9063):1436–1442.

- 16. The Impact of Mental Illness on Society. Bethesda: National Institutes of Health; 2001.

- 17. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review. 2012;69(2):123–157.

- 18. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10(1):21.

- 19. Kilbourne AM, Abraham KM, Goodrich DE, et al. Cluster randomized adaptive implementation trial comparing a standard versus enhanced implementation intervention to improve uptake of an effective re-engagement program for patients with serious mental illness. Implement Sci. 2013;8:136.

- 20. Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implementation Science. 2007;2(1):42.

- 21. Kilbourne AM, Neumann MS, Waxmonsky J, et al. Public-academic partnerships: evidence-based implementation: the role of sustained community-based practice and research partnerships. Psychiatric Services. 2012;63(3):205–207.

- 22. Neumann MS, Sogolow ED. Replicating effective programs: HIV/AIDS prevention technology transfer. AIDS Education and Prevention. 2000;12:35–48.

- 23. Bandura A, Walters RH. Social learning theory. Vol 1. Englewood Cliffs, NJ: Prentice-Hall; 1977.

- 24. Rogers EM. Diffusion of innovations. Simon and Schuster; 2010.

- 25. Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implement Sci. 2007;2:42.

- 26. Green LW, Kreuter MW. Health promotion planning: an educational and environmental approach. Mayfield; 1991.

- 27. Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychological Review. 1977;84(2):191.

- 28. Kirchner JE, Kearney LK, Ritchie MJ, Dollar KM, Swensen AB, Schohn M. Research & services partnerships: lessons learned through a national partnership between clinical leaders and researchers. Psychiatric Services. 2014;65(5):577–579.

- 29. Kilbourne AM, Goodrich DE, Lai Z, et al. Reengaging veterans with serious mental illness into care: preliminary results from a national randomized trial. Psychiatr Serv. 2015;66(1):90–93.

- 30. Kilbourne AM, Goodrich DE, Lai Z, et al. Reengaging veterans with serious mental illness into care: preliminary results from a national randomized trial. Psychiatric Services. 2014;66(1):90–93.

- 31. Stetler CB, Damschroder LJ, Helfrich CD, Hagedorn HJ. A guide for applying a revised version of the PARIHS framework for implementation. Implementation Science. 2011;6(1):99.

- 32. Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. BMJ Quality & Safety. 1998;7(3):149–158.

- 33. Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implementation Science. 2008;3(1):1.

- 34. Harvey G, Loftus‐Hills A, Rycroft‐Malone J, et al. Getting evidence into practice: the role and function of facilitation. Journal of Advanced Nursing. 2002;37(6):577–588.

- 35. Stetler CB, Legro MW, Rycroft-Malone J, et al. Role of "external facilitation" in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implementation Science. 2006;1(1):23.

- 36. Goodrich DE, Bowersox NW, Abraham KM, et al. Leading from the middle: replication of a re-engagement program for veterans with mental disorders lost to follow-up care. Depression Research and Treatment. 2012;2012.

- 37. Kilbourne AM, Goodrich DE, Nord KM, et al. Long-term clinical outcomes from a randomized controlled trial of two implementation strategies to promote collaborative care attendance in community practices. Administration and Policy in Mental Health and Mental Health Services Research. 2015;42(5):642–653.

- 38. Kirchner JE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. Outcomes of a partnered facilitation strategy to implement primary care–mental health. Journal of General Internal Medicine. 2014;29(4):904–912.

- 39. Kilbourne AM, Almirall D, Goodrich DE, et al. Enhancing outreach for persons with serious mental illness: 12-month results from a cluster randomized trial of an adaptive implementation strategy. Implementation Science. 2014;9(1):163.

- 40. Kirchner J, Edlund CN, Henderson K, Daily L, Parker LE, Fortney JC. Using a multi-level approach to implement a primary care mental health (PCMH) program. Families, Systems, & Health. 2010;28(2):161.

- 41. Kilbourne AM, Almirall D, Eisenberg D, et al. Protocol: Adaptive Implementation of Effective Programs Trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implement Sci. 2014;9:132.

- 42. Almirall D, Compton SN, Gunlicks‐Stoessel M, Duan N, Murphy SA. Designing a pilot sequential multiple assignment randomized trial for developing an adaptive treatment strategy. Statistics in Medicine. 2012;31(17):1887–1902.

- 43. Lei H, Nahum-Shani I, Lynch K, Oslin D, Murphy SA. A "SMART" design for building individualized treatment sequences. Annual Review of Clinical Psychology. 2012;8:21–48.

- 44. NeCamp T, Kilbourne A, Almirall D. Comparing cluster-level dynamic treatment regimens using sequential, multiple assignment, randomized trials: regression estimation and sample size considerations. Statistical Methods in Medical Research. 2017;26(4):1572–1589.

- 45. Kilbourne AM, Bramlet M, Barbaresso MM, et al. SMI Life Goals: description of a randomized trial of a collaborative care model to improve outcomes for persons with serious mental illness. Contemporary Clinical Trials. 2014;39(1):74–85.

- 46. Kilbourne AM, Prenovost KM, Liebrecht C, et al. Randomized controlled trial of a collaborative care intervention for mood disorders by a national commercial health plan. Psychiatric Services. 2019:appi.ps.201800336.

- 47. Miller CJ, Grogan-Kaylor A, Perron BE, Kilbourne AM, Woltmann E, Bauer MS. Collaborative chronic care models for mental health conditions: cumulative meta-analysis and meta-regression to guide future research and implementation. Medical Care. 2013;51(10):922.

- 48. Bauer MS, McBride L, Williford WO, et al. Collaborative care for bipolar disorder: Part II. Impact on clinical outcome, function, and costs. Psychiatric Services. 2006;57(7):937–945.

- 49. Kilbourne AM, Goodrich DE, Lai Z, Clogston J, Waxmonsky J, Bauer MS. Life Goals Collaborative Care for patients with bipolar disorder and cardiovascular disease risk. Psychiatric Services. 2012;63(12):1234–1238.

- 50. Kilbourne AM, Post EP, Nossek A, Drill L, Cooley S, Bauer MS. Improving medical and psychiatric outcomes among individuals with bipolar disorder: a randomized controlled trial. Psychiatric Services. 2008;59(7):760–768.

- 51. Simon GE, Ludman EJ, Bauer MS, Unützer J, Operskalski B. Long-term effectiveness and cost of a systematic care program for bipolar disorder. Archives of General Psychiatry. 2006;63(5):500–508.

- 52. Bauer MS, McBride L, Williford WO, et al. Collaborative care for bipolar disorder: Part I. Intervention and implementation in a randomized effectiveness trial. Psychiatric Services. 2006;57(7):927–936.

- 53. Simon GE, Ludman E, Unützer J, Bauer MS. Design and implementation of a randomized trial evaluating systematic care for bipolar disorder. Bipolar Disorders. 2002;4(4):226–236.

- 54. Spitzer RL, Kroenke K, Williams JB, Lowe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006;166(10):1092–1097.

- 55. Bauer MS, Vojta C, Kinosian B, Altshuler L, Glick H. The Internal State Scale: replication of its discriminating abilities in a multisite, public sector sample. Bipolar Disord. 2000;2(4):340–346.

- 56. Glick HA, McBride L, Bauer MS. A manic-depressive symptom self-report in optical scanable format. Bipolar Disord. 2003;5(5):366–369.

- 57. Gordon AJ, Maisto SA, McNeil M, et al. Three questions can detect hazardous drinkers. J Fam Pract. 2001;50(4):313–320.

- 58. Kessler RC, Andrews G, Mroczek D, Ustun B, Wittchen HU. The World Health Organization composite international diagnostic interview short‐form (CIDI‐SF). International Journal of Methods in Psychiatric Research. 1998;7(4):171–185.

- 59. Scott NW, McPherson GC, Ramsay CR, Campbell MK. The method of minimization for allocation to clinical trials: a review. Control Clin Trials. 2002;23(6):662–674.

- 60. Taves DR. Minimization: a new method of assigning patients to treatment and control groups. Clin Pharmacol Ther. 1974;15(5):443–453.

- 61. Ware J Jr, Kosinski M, Keller SD. A 12-Item Short-Form Health Survey: construction of scales and preliminary tests of reliability and validity. Med Care. 1996;34(3):220–233.

- 62. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001;16(9):606–613.

- 63. Spitzer RL, Kroenke K, Williams JB. Validation and utility of a self-report version of PRIME-MD: the PHQ primary care study. Primary Care Evaluation of Mental Disorders. Patient Health Questionnaire. JAMA. 1999;282(18):1737–1744.

- 64. Bauer AM, Azzone V, Goldman HH, et al. Implementation of collaborative depression management at community-based primary care clinics: an evaluation. Psychiatr Serv. 2011;62(9):1047–1053.

- 65. Coleman KJ, Hemmila T, Valenti MD, et al. Understanding the experience of care managers and relationship with patient outcomes: the COMPASS initiative. General Hospital Psychiatry. 2017;44:86–90.

- 66. Rossom RC, Solberg LI, Magnan S, et al. Impact of a national collaborative care initiative for patients with depression and diabetes or cardiovascular disease. General Hospital Psychiatry. 2017;44:77–85.
