Abstract

Computerized clinical decision support systems can help to provide objective, standardized, and timely dementia diagnosis. However, current computerized systems are mainly based on group analysis, discrete classification of disease stages, or expensive and not readily accessible biomarkers, while current clinical practice relies relatively heavily on cognitive and functional assessments (CFA). In this study, we developed a computational framework using a suite of machine learning tools for identifying key markers in predicting the severity of Alzheimer’s disease (AD) from a large set of biological and clinical measures. Six machine learning approaches, namely Kernel Ridge Regression (KRR), Support Vector Regression, and k-Nearest Neighbor for regression and Support Vector Machine (SVM), Random Forest, and k-Nearest Neighbor for classification, were used for the development of predictive models. We demonstrated high predictive power of CFA. Predictive performance of models incorporating CFA was shown to consistently have higher accuracy than those based solely on biomarker modalities. We found that KRR and SVM were the best performing regression and classification methods respectively. The optimal SVM performance was observed for a set of four CFA test scores (FAQ, ADAS13, MoCA, MMSE) with multi-class classification accuracy of 83.0%, 95%CI = (72.1%, 93.8%) while the best performance of the KRR model was reported with combined CFA and MRI neuroimaging data, i.e., R2 = 0.874, 95%CI = (0.827, 0.922). Given the high predictive power of CFA and their widespread use in clinical practice, we then designed a data-driven and self-adaptive computerized clinical decision support system (CDSS) prototype for evaluating the severity of AD of an individual on a continuous spectrum. The system implemented an automated computational approach for data pre-processing, modelling, and validation and used exclusively the scores of selected cognitive measures as data entries. 
Taken together, we have developed an objective and practical CDSS to aid AD diagnosis.

Keywords: dementia, Alzheimer’s disease, decision support system, machine learning, diagnosis support, cognitive impairment

1. Introduction

Recent advances in machine learning (ML) and big data analytics have led to the emergence of a new generation of clinical decision support systems (CDSSs) designed to exploit the potential of data-driven decision making in patient monitoring, particularly in the areas of internal medicine, general practice, and remote monitoring of vital signs (Gálvez et al., 2013, Helldén et al., 2015, Lisboa & Taktak, 2006, Skyttberg, Vicente, Chen, Blomqvist, & Koch, 2016). Improved access to large and heterogeneous healthcare data and the integration of advanced computational procedures into CDSSs have enabled the real-time discovery of similarity metrics for patient stratification, development of predictive analytics for risk assessment, and selection of patient-specific therapeutic interventions at the time of decision-making (Brown, 2016, Dagliati et al., 2018, Farran, Channanath, Behbehani, & Thanaraj, 2013). CDSSs provide clinical decision support at the time and location of care rather than prior to or after the patient encounter and therefore help streamline the workflow for clinicians and assist real-time decision-making (diagnosis, prognosis, treatment) (Castaneda et al., 2015, Wright et al., 2016). Numerous studies have demonstrated that CDSSs improve patient safety and care by decreasing the number of therapeutic and diagnostic errors that are unavoidable in human clinical practice (Lindquist, Johansson, Petersson, Saveman, & Nilsson, 2008) and reduce the workload of medical staff, especially in contexts that require frequent monitoring or complex decision-making, such as management of chronic diseases (Wright et al., 2016). Current research directions in dementia, with Alzheimer's disease (AD) being its most common form, focus on interventions and treatments that can modify progression of dementia symptoms or lead to an early identification of individuals at risk of developing dementia (Brodaty et al., 2016, Ritchie et al., 2017).
Increasing evidence suggests that early diagnosis of dementia can lead to significant clinical and economic benefits. However, the underdiagnosis of dementia is currently one of the key deficiencies in disease management in the primary care setting (Dodd, Cheston, & Ivanecka, 2015, Lang et al., 2017, Paterson & Pond, 2009). Research indicates that low dementia detection rates in primary care are mainly related to the absence of standardized and reliable screening tools, inadequate training on dementia of general practitioners (GPs), and the GPs' lack of confidence in providing a correct diagnosis (Koch, Iliffe, & EVIDEM-ED project, 2010).

Technology-based tools have considerable potential to transform the dementia care pathway. CDSS utilized in the early diagnosis of AD may allow for the selection of patients for clinical trials at the earliest possible stage of disease development and enable clinicians to initiate the treatment as early in the disease process as possible to more effectively arrest or slow disease progression. A number of applications have been developed to serve as enabling tools for dementia diagnostics (Mandala, Saharana, Khana & Jamesa, 2015). These include software applications that provide practical information for those caring for dementia patients (e.g., Dementia Support by Swedish Care International, Alzheimer's and Other Dementias Daily Companion, MindMate) as well as tools used for mobile cognitive screening (e.g., MOBI-COG, Mobile Cognitive Screening, Dementia Screener, Sea Hero Quest, CANTAB). In addition, CDSSs, designed to aid clinical decision making by adapting computerized clinical practice guidelines to individual patient characteristics or integrating machine learning methodologies for pattern recognition, have been recently gaining more interest in expediting dementia diagnosis and disease management (Antila et al., 2013, Frame, LaMantia, Bynagari, Dexter, & Boustani, 2013, Lindgren, 2011, Lindgren, Eklund, & Eriksson, 2002). It has been shown that such systems are more sensitive in detecting an early-stage disease and more objective than diagnostic decisions made by a single practitioner (Moja et al., 2015).

Although advanced computational approaches for AD classification and progression have been applied to large sets of patient data, including magnetic resonance imaging (MRI) (Karas et al., 2008, Lebedeva et al., 2017, Moradi et al., 2015), positron emission tomography (PET) (Higdon et al., 2004, Grimmer et al., 2016, Sanchez-Catasus et al., 2017), cerebrospinal fluid (CSF) biomarkers (Forlenza et al., 2015, Handels et al., 2017, Mattsson et al., 2009), combination of the neuroimaging modalities (Youssofzadeh, McGuinness, Maguire, & Wong-Lin, 2017), and cognitive and functional assessments (CFA) (Ding et al., 2018, Chapman et al., 2011, Korolev, Symonds, Bozoki & Alzheimer's Disease Neuroimaging Initiative, 2016, Maroco et al., 2011), there is a significant gap between research outputs and their actual utilization in daily clinical practice. In contrast to other disease areas, the integration of machine learning methodologies into CDSSs and their deployment for routine use in AD diagnostics is still very rare. The few systems that are used in dementia diagnostics require information from expensive and labour-intensive biomarkers (Antila et al., 2013, Soininen et al., 2012) or implement predictive methodologies based on discrete classes for the different stages of the disease even if the underlying neurobiology could possibly evolve in a continuous manner (Onoda & Yamaguchi, 2014). Furthermore, to the best of our knowledge, no CDSS for dementia detection or management has been developed so far for use in the primary care setting.

The aim of this study is two-fold: (1) to describe the developmental process of a computational framework for identifying key measures in predicting the severity of AD; and (2) to build upon this framework to develop a data-driven and self-adaptive prototype of a CDSS for evaluating the severity of AD of an individual on a continuous spectrum. In order to achieve this, we first utilize a suite of machine learning techniques to extract useful information from large volumes of patient data and provide a disease outcome prediction for different types and combinations of AD markers. We demonstrate that CFA can reliably and accurately provide prediction of AD severity. Next, we design a CDSS that incorporates an automated computational approach for data pre-processing, modelling, and validation and uses selected CFA scores as data input. Since our system was designed to utilize information from readily available and cost-effective CFA markers, it can be easily implemented in general clinical practice.

2. Material and methods

2.1. Development of a computational framework

2.1.1. Participants

Patient records from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu) were used to develop the computational approach for evaluating the cognitive decline of an individual associated with AD. The primary goal of ADNI has been to test whether MRI, PET, other biological markers, and clinical and neuropsychological assessments can be combined to measure the progression of mild cognitive impairment (MCI) and early AD.

The Clinical Dementia Rating Sum of Boxes (CDRSB) scores of 488 patients with a complete dataset of structural MRI and PET imaging, CSF biomarkers, CFA scores, sociodemographic features and medical history were used to describe AD staging and acted as an outcome (response) measure in prediction models. The CDRSB score is widely accepted in the clinical setting as a reliable and objective AD assessment tool (Cedarbaum et al., 2013). In total, we identified 178 cognitively healthy controls (CDRSB = 0), 263 subjects with questionable cognitive impairment (QCI) (0.5 ≤ CDRSB ≤ 4.0), 46 patients with mild AD (4.5 ≤ CDRSB ≤ 9.0), and 1 patient with moderate AD (9.5 ≤ CDRSB ≤ 15.5). Since only one patient with moderate AD was identified, the subjects from mild and moderate AD categories were combined into one mild/moderate AD category.
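The staging rule above can be written down compactly. The following is a minimal sketch, assuming the three merged categories and the CDRSB cut-offs stated in the text; the function name `cdrsb_to_class` is hypothetical and not part of the study's software.

```python
def cdrsb_to_class(score: float) -> str:
    """Map a CDRSB score to one of the three categories used in this study.

    Cut-offs are taken from the text; mild and moderate AD are merged
    because only one moderate case was available.
    """
    if score == 0:
        return "Normal"
    if 0.5 <= score <= 4.0:
        return "QCI"
    if 4.5 <= score <= 15.5:
        return "Mild/Moderate"
    # Scores outside the ranges described in the text are not handled here.
    raise ValueError(f"CDRSB score {score} is outside the ranges used in the study")
```

For example, `cdrsb_to_class(2.5)` falls in the questionable cognitive impairment range, while `cdrsb_to_class(9.5)` is assigned to the merged mild/moderate category.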

2.1.2. Data types

We considered 66 features as potential predictors of cognitive decline associated with AD including 38 assessments/biomarkers (10 clinical and 28 biological measures) and 28 risk factors (family history, medical history, and sociodemographic characteristics). The cognitive and functional assessments offered information on memory deficits and behavioural symptoms of AD, CSF measures corresponded to the pathological changes at the biological level, while neuroimaging features allowed us to evaluate the neural degeneration related to AD. Sociodemographic, family, and patient’s medical history data enabled the identification of risk factors associated with increased risk of developing AD.

Clinical measures included: Mini-Mental State Examination (MMSE) (Folstein, Robins & Helzer, 1983); Alzheimer’s Disease Assessment Scale 13 (ADAS13) (Mohs et al., 1997); Montreal Cognitive Assessment (MoCA) (Nasreddine et al., 2005); Logical Memory – Immediate Recall (LIMMTOTAL) (Abikoff et al., 1987); Logical Memory – Delayed Recall (LDELTOTAL) (Abikoff et al., 1987); Rey Auditory Verbal Learning Test (RAVLT): Immediate, Learning, Forgetting, and Perc Forgetting (Rey, 1964); and Functional Assessment Questionnaire (FAQ) (Pfeffer, Kurosaki, Harrah Jr, Chance & Filos, 1982).

Biological data consisted of neuroimaging measurements and CSF biomarkers. Neuroimaging measures utilized MRI and PET (FDG and 18F-AV-45) data. MRI measures included volumetric data of hippocampus, ventricles, entorhinal, fusiform gyrus, middle temporal gyrus (MidTemp), whole brain, and intracerebral volume (ICV). The regional brain volumes were normalized by ICV. We also considered the volumetric data of intracranial gray matter (GRAY), white matter (WHITE), cerebrospinal fluid (CSF_V), and white matter hyperintensities (WHITMATHYP). Furthermore, two Boundary Shift Integral (BSI) measures were evaluated: whole brain (BRAINVOL) and ventricle (VENTVOL). Finally, we analysed the Florbetapir summary data represented by the gray matter regions of interest (frontal, anterior/posterior cingulate, lateral parietal, lateral temporal) normalized by the reference region of whole cerebellum (WHOLECEREBNORM). FDG-PET (FDG) was determined as a sum of mean glucose metabolism averaged across 5 regions of interest, i.e., right and left angular gyri (Angular Right and Angular Left respectively), bilateral posterior cingulate (CingulumPost Bilateral), and right and left inferior temporal gyri (Temporal Right and Temporal Left respectively) (Landau et al., 2011). Besides the composite FDG-PET, we also considered measurements for separate FDG-ROIs (i.e., Angular Right and Left, Temporal Right and Left, CingulumPost Bilateral) (Jagust et al., 2010). 18F-AV-45 PET (AV45) was represented by the mean of Florbetapir (F-18) standardized uptake value ratios (SUVR) of frontal, anterior and posterior cingulate, lateral parietal, and lateral temporal cortex (Landau et al., 2012). Other PET measures included spatial extent of hypometabolism determined using 3-dimensional stereotactic surface projection analysis (SUMZ2, SUMZ3) (Chen et al., 2010).
In addition, CSF concentrations of total tau protein - t-tau (TAU), amyloid-β peptide of 42 amino acids - Aβ1–42 (ABETA), and phosphorylated tau - p-tau181p (PTAU) were studied, as were ratios of t-tau to Aβ1–42 (TAU_ABETA), and p-tau181p to Aβ1–42 (PTAU_ABETA). The complete overview of data types used in our study and their abbreviations are shown in Table A.1.

2.1.3. Feature selection and modelling approach

The development of the computational framework consisted of several steps. First, we conducted feature standardization to assimilate clinical measurements of diverse scales (Liu & Motoda, 2007). Accordingly, all features were rescaled so that they had a mean of 0 and a standard deviation of 1 (Liu & Motoda, 2007). The full dataset was then split into a model development set (90%) and a testing set (10%); the latter was used for evaluating and comparing the performance of competing models. The model development set was further split into training and validation sets (Barber, 2012). A model fitted on the training data was used to predict the responses for the observations in the validation set (Barber, 2012). This provided us with an unbiased evaluation of a model fit on the training dataset while tuning the hyperparameters of the model. For the validation procedure, we used leave-one-out cross-validation (LOOCV), which is k-fold cross-validation with k = n, where n is the number of observations (Elisseeff & Pontil, 2003). The final model evaluation was conducted on the held-out testing set, which had not been used previously either for training the model or for tuning the model’s parameters.
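The data-handling steps described in this paragraph can be illustrated with a short sketch. It assumes a simple random 90/10 partition and uses only the Python standard library; the helper names (`standardize`, `split_dev_test`, `loocv_folds`) and the seed are hypothetical and do not come from the study's implementation.

```python
import random
from statistics import mean, pstdev


def standardize(column):
    """Rescale one feature column to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(column), pstdev(column)
    return [(value - mu) / sigma for value in column]


def split_dev_test(n_records, test_fraction=0.10, seed=0):
    """Randomly partition record indices into development and test sets."""
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)
    n_test = round(n_records * test_fraction)
    return indices[n_test:], indices[:n_test]  # (development, test)


def loocv_folds(dev_indices):
    """Yield (training indices, held-out index) pairs: k-fold CV with k = n."""
    for position, held_out in enumerate(dev_indices):
        yield dev_indices[:position] + dev_indices[position + 1:], held_out
```

With 488 records, `split_dev_test(488)` would hold out 49 records for testing and leave 439 for model development; `loocv_folds` then produces one training/validation pair per development record.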

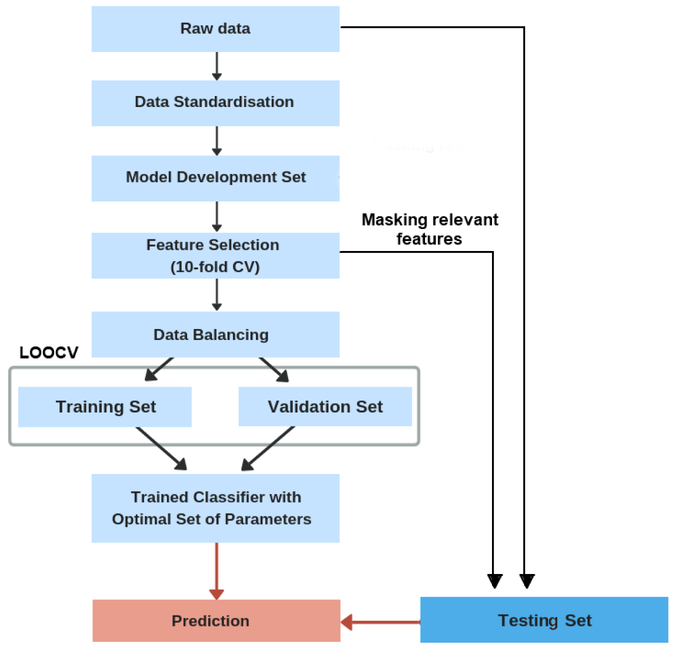

Since machine learning algorithms tend to produce biased models when dealing with imbalanced datasets, the Synthetic Minority Over-sampling Technique (SMOTE) was used to handle the class imbalance in the model development set by resampling original patient data and creating synthetic instances (Chawla, Bowyer, Hall & Kegelmeyer, 2002). For improved generalization performance of predictive models, feature selection was implemented to identify the most relevant subset of features for predicting AD severity. Three regression models (Kernel Ridge Regression (KRR), Support Vector Regression (SVR), and k-Nearest Neighbor Regression (kNNreg)) and three classification models (Support Vector Machine (SVM), Random Forest (RF), and k-Nearest Neighbor Classification (kNNclass)) were developed and their performance was tested for different modality types and their combinations. The selection of features that achieved high predictive accuracy for the best performing classification and regression models was later used as entry input for the CDSS. LOOCV was applied for hyper-parameter optimization. The overall procedure for model development and evaluation is shown in Fig. 1.
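The core idea of SMOTE, interpolating between a minority-class sample and one of its nearest minority-class neighbours, can be sketched as follows. This is a simplified illustration, not the implementation used in the study: the function name, the default k = 5, and the seed are assumptions, and established libraries handle details (such as per-class sampling ratios) that are omitted here.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Create synthetic minority-class points by neighbour interpolation.

    `minority` is a list of feature vectors (lists of floats) with at
    least two entries.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # The k nearest minority neighbours of the base point, excluding itself.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic
```

Each synthetic point lies on the line segment between two real minority samples, so the minority class is enlarged without simply duplicating records.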

Fig. 1.

Overview of the model development and validation procedure.

2.1.3.1. Feature selection

Previous studies typically used univariate filtering methods to filter out the least promising features before the development of a predictive model (Michalak & Kwaśnicka, 2006). However, such filtering approaches can lead to the loss of relevant features that are meaningless by themselves but, when considered together, can improve model performance (Perez-Riverol, Kuhn, Vizcaíno, Hitz & Audain, 2017). To overcome this, wrapper methods can be applied to assess the importance of specific feature sets. It has been shown that wrappers obtain subsets with better performance than filters. Wrappers use a search procedure to generate and evaluate different subsets of features in the space of possible feature subsets by training and testing a specific classification model (Hira & Gillies, 2015). The commonly used classification algorithms for identifying the most relevant input variables are: Naïve Bayes (Cortizo & Giraldez, 2006, Panthong & Srivihok, 2015), SVM (Maldonado & Weber, 2009, Maldonado, Weber & Famili, 2009), Random Forest (Rodin et al., 2009), Bagging (Panthong & Srivihok, 2015), AdaBoost (Panthong & Srivihok, 2015), and Extreme Learning Machines (Benoít, Van Heeswijk, Miche, Verleysen & Lendasse, 2013). These classification techniques combined with a greedy search algorithm allow for finding the optimal number of features by iteratively selecting features based on the classifier performance (Bengio et al., 2003).

Since the ADNI dataset is characterized by high dimensionality that increases the complexity of computation and analysis, we used a feature selection technique that was found to minimize redundancy and to identify features with the highest relevance to the disease class (Granitto, Furlanello, Biasioli & Gasperi, 2006). As such, we applied the Recursive Feature Elimination (RFE) method coupled with Random Forest for measuring variable importance (RFE-RF). The RFE technique has been widely applied in healthcare applications due to its efficiency in reducing complexity (Li, Xie, & Liu, 2018). Furthermore, studies demonstrated that RF-RFE outperformed SVM-RFE in finding small subsets of features with a high discrimination capability and required no parameter tuning to produce competitive results (Granitto, Furlanello, Biasioli & Gasperi, 2006). The RFE method with 10-fold cross-validation was applied on the model development set (Bengio et al., 2003). For better replicability, the 10-fold CV procedure was repeated 10 times with different partitions of the data to avoid any bias introduced by randomly partitioning the dataset in the cross-validation. The RFE technique searched for the optimal combination of predictors (among all possible subsets) that maximized model performance through backward feature elimination based on the predictor importance measure as a ranking criterion. At each iteration, the Random Forest (RF) algorithm, incorporating a hierarchical decision tree structure, was used to explore all possible subsets of the features and measure their importance with respect to the classification outcome (Gregorutti, Michel & Saint-Pierre, 2017). To assess the robustness of the RFE-RF process in selecting the optimal subset of features, we applied the RFE technique to another similar type of ensemble method, namely, bootstrap aggregated (bagged) trees (RFE-BT), and compared their results (Panthong & Srivihok, 2015). As with RFE-RF, the RFE-BT performance was evaluated in a 10-fold cross-validation repeated five times with different split positions.
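The backward elimination loop at the heart of RFE can be sketched generically. In the sketch below, `importance_fn` and `score_fn` are placeholders: in the procedure described above they would correspond to Random Forest variable importance and repeated 10-fold cross-validated accuracy, whereas here they are replaced by a toy stand-in with made-up weights so that the example stays self-contained. All names and values are hypothetical.

```python
def recursive_feature_elimination(features, importance_fn, score_fn):
    """Backward elimination: repeatedly drop the least important feature.

    importance_fn(subset) -> dict mapping each feature to its importance
    score_fn(subset)      -> performance of a model built on that subset
    Returns the best-scoring subset seen and its score.
    """
    current = list(features)
    best_subset, best_score = list(current), score_fn(current)
    while len(current) > 1:
        importances = importance_fn(current)
        weakest = min(current, key=lambda f: importances[f])
        current.remove(weakest)
        score = score_fn(current)
        if score >= best_score:  # on ties, prefer the smaller subset
            best_subset, best_score = list(current), score
    return best_subset, best_score


# Toy stand-in (made-up weights, not study data): two informative features
# and two noise features, with a small penalty per feature retained.
weights = {"signal_a": 3.0, "signal_b": 2.0, "noise_a": 0.0, "noise_b": 0.0}
best_subset, best_score = recursive_feature_elimination(
    list(weights),
    importance_fn=lambda subset: {f: weights[f] for f in subset},
    score_fn=lambda subset: sum(weights[f] for f in subset) - 0.1 * len(subset),
)
```

In this toy setting the two noise features are removed first and the procedure retains the two informative ones, mirroring how RFE trades subset size against performance.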

2.1.3.2. Development of predictive models

A number of ML techniques have been used for AD detection. Classification approaches have been derived using Random Forest (RF) (Gray et al., 2013, Sarica, Cerasa & Quattrone, 2017), Logistic Regression (Barnes et al., 2010, Bauer, Cabral & Killiany, 2018, Chary et al. 2013, Wolfsgruber et al., 2014), and SVM (Casanova, Hsu, & Espeland, 2015, Cui et al., 2011, Klöppel et al., 2008, Ritter et al., 2015, Weygandt et al., 2011). In particular, the SVM showed great promise in improving diagnosis and prognosis in AD, especially in the studies characterized by a relatively small number of participants and disparate and high-dimensional data types (Dyrba, Grothe, Kirste, & Teipel, 2015, Klöppel et al., 2008, Long, Chen, Jiang, Zhang, & Alzheimer’s Disease Neuroimaging Initiative, 2017, Magnin et al., 2009). Furthermore, the SVM often outperformed other machine learning algorithms used for AD classification (e.g. RF, logistic regression) (Samper-González et al., 2018, Tripoliti, Fotiadis, Argyropoulou, & Manis, 2010).

Compared to the ML classification methods, regression approaches focus on the estimation of continuous clinical variables along the continuum of disease severity (Wang, Fan, Bhatt & Davatzikos, 2010). Several regression methods have been applied in AD studies (Duchesne, Caroli, Geroldi, Collins, & Frisoni, 2009, Duchesne, Caroli, Geroldi, Frisoni, & Collins, 2005, Youssofzadeh et al., 2017). However, linear regression models have often been ineffective in capturing nonlinear relationships between biomarkers (e.g. neuroimaging data) and cognitive scores, especially when limited training examples of high dimensionality were used (Duchesne et al., 2009). On the other hand, nonparametric kernel regression methods yielded relatively robust estimations of continuous variables with good generalization ability (Liu, Cao, Yang, & Zhao, 2018, Wang et al., 2010). Regularized regression techniques, such as Ridge Regression, performed especially well given high dimensional and collinear AD data (Teipel et al., 2017, Youssofzadeh et al., 2017). In addition, Ridge Regression combined with the kernel trick demonstrated high predictive performance when applied to individual patient data (Youssofzadeh et al., 2017).

Our study built upon earlier findings and used six different non-parametric methods for the development of predictive models, namely SVM, RF, and kNNclass for classification and KRR, SVR, and kNNreg for regression. For each selected technique, we tested a series of values for the tuning process with the optimal parameters determined based on the model performance. The results of the best performing regression and classification algorithms are presented in the main text; the results of the remaining methods can be found in the Supplementary Material (Supplementary Tables A.2, A.3, and A.4).

The distinction between regression and classification models was reflected in the definition of the response variable (CDRSB). The regression models predicted a numerical value from a range of continuous values (i.e., 0 ≤ CDRSB ≤ 15.5) while the classification models predicted the target class, i.e., ‘Normal’ (CDRSB = 0), ‘QCI’ (0.5 ≤ CDRSB ≤ 4.0), ‘Mild/Moderate’ (4.5 ≤ CDRSB ≤ 15.5). Since the model performance greatly depends on the choice of a kernel function (Hainmueller & Hazlett, 2014, Matheny, Resnic, Arora & Ohno-Machado, 2007), we tested different types of kernels, i.e., linear, polynomial, and radial basis function, and selected the one that maximized the performance measure for each model type.

2.1.3.2.1. Kernel Ridge Regression

The KRR combines ridge regression with a kernel trick allowing for mapping the input space into a higher dimensional space of nonlinear functions of predictors (Murphy, 2014). The general form of the KRR is described by:

$$f(x) = \sum_{i=1}^{N_T} \alpha_i \, k(x_i, x) \tag{1}$$

where NT is the number of training points, k is the kernel function, and α are the weights obtained through the minimization of the cost function:

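$$C(\alpha) = \sum_{i=1}^{N_T} \left( f(x_i) - y_i \right)^2 + \lambda \, \alpha^{T} K \alpha \tag{2}$$

where α = (α_1, …, α_{N_T})^T, y_i is the observed response for training point x_i, K is the kernel matrix with entries K_ij = k(x_i, x_j), and λ controls the amount of regularization applied to the model (Vu et al., 2015). The best performance of the KRR model was achieved by applying a radial basis function (RBF) kernel defined as: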

$$k(x, x') = \exp\left( -\gamma \, \lVert x - x' \rVert^2 \right) \tag{3}$$

where x and x′ are input vectors, and γ > 0 is a width parameter (Murphy, 2014).
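To make the KRR description concrete, the sketch below fits and evaluates a small model with an RBF kernel. It assumes the standard squared-error cost with penalty λαᵀKα, for which the weights have the closed form α = (K + λI)⁻¹y, and an RBF kernel of the form exp(−γ‖x − x′‖²). The function names and the plain Gaussian elimination solver are illustrative choices, not the study's implementation.

```python
import math


def rbf_kernel(x, x_prime, gamma):
    """RBF kernel exp(-gamma * ||x - x'||^2) (assumed form)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, x_prime)))


def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def krr_fit(X, y, lam, gamma):
    """Return the weights alpha = (K + lam * I)^-1 y."""
    n = len(X)
    A = [
        [rbf_kernel(X[i], X[j], gamma) + (lam if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    return solve_linear_system(A, y)


def krr_predict(X_train, alpha, x_new, gamma):
    """Prediction f(x) = sum_i alpha_i * k(x_i, x)."""
    return sum(a * rbf_kernel(x_i, x_new, gamma) for a, x_i in zip(alpha, X_train))
```

With a very small λ the fitted function nearly interpolates the training responses, while a large λ shrinks predictions towards zero; λ and γ are the two hyper-parameters that would be tuned by cross-validation.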

2.1.3.2.2. Support Vector Machine and Support Vector Regression

SVM is a classification technique that maps the input vectors onto a higher dimensional space, denoted as Φ: R^d → H^f (d < f), where an optimal separating hyperplane is constructed using a kernel function k(xi, xj) (Ramírez et al., 2013). The performance of the SVM classifier was maximized using a polynomial kernel:

$$k(x_i, x_j) = \left( x_i^{T} x_j + c \right)^{d} \tag{4}$$

where xi and xj are vectors in the input space, c is a free parameter trading off the influence of higher-order versus lower-order terms in the polynomial, and d is the degree of polynomial (Cortes & Vapnik, 1995).
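The link between the polynomial kernel and the higher dimensional feature space can be checked numerically. The sketch below assumes the standard form (xᵢ·xⱼ + c)^d and, for the special case of two input features and d = 2, compares the kernel value with an explicit inner product in the corresponding six-dimensional feature space. The function names and example vectors are hypothetical.

```python
import math


def polynomial_kernel(x_i, x_j, c=1.0, d=2):
    """Polynomial kernel (x_i . x_j + c)^d (assumed standard form)."""
    return (sum(a * b for a, b in zip(x_i, x_j)) + c) ** d


def degree2_feature_map(x, c=1.0):
    """Explicit feature map for two input features and d = 2."""
    x1, x2 = x
    root_2, root_2c = math.sqrt(2.0), math.sqrt(2.0 * c)
    return [x1 * x1, x2 * x2, root_2 * x1 * x2, root_2c * x1, root_2c * x2, c]


u, v = [1.0, 2.0], [3.0, 4.0]
kernel_value = polynomial_kernel(u, v, c=1.0, d=2)
explicit_value = sum(
    a * b for a, b in zip(degree2_feature_map(u), degree2_feature_map(v))
)
```

Both quantities agree (up to floating-point rounding), which illustrates why the kernel lets the SVM operate in the higher dimensional space without computing the mapping explicitly.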

SVR is based on the same principles as SVM. In contrast to traditional regression techniques, SVR focuses on minimizing the bound of the generalization error instead of seeking to minimize the prediction error on the training set (training error) (Basak, Pal, & Patranabis, 2007). The objective of SVR is to find a regression function, y = f(x), such that it predicts the outputs {y} corresponding to a new input-output set {(x, y)} drawn from the same underlying joint probability distribution as the training set g = {(x1, y1), (x2, y2), …, (xp, yp)}, where xi ∈ R^N is the vector of input variables and yi ∈ R is the corresponding output value (Awad & Khanna, 2015). The basic concept of SVR is to non-linearly transform the original input space into a higher dimensional feature space and perform linear regression in this feature space using an ε-insensitive loss (Awad & Khanna, 2015). The SVR ε-insensitive loss function penalizes misestimates that are farther than ε from the desired output. The ε parameter determines the width of the ε-insensitive region (tube) around the function; a lower tolerance for error is reflected in a smaller ε value. If the predicted value is within the ε-zone, the loss is zero. If the predicted value is located outside the ε-zone, the loss is defined by the magnitude of the difference between the predicted value and the ε radius (Awad & Khanna, 2015).
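The ε-insensitive loss described above has a simple closed form, max(0, |y − f(x)| − ε). A minimal sketch follows; the function name and the example values are illustrative only.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon-tube, linear in the excess error outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)
```

For instance, with ε = 0.5 a prediction of 2.3 for a true value of 2.0 incurs no loss, whereas a prediction of 3.0 is penalized only for the part of the error that exceeds the tube.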

2.1.3.2.3. k-Nearest Neighbors

kNN is a non-parametric approach applied to both classification and regression problems. The prediction of values of any new data points uses the 'feature similarity' measure (Kramer, 2013). Accordingly, given a predefined number of neighbors k, a new point is assigned a value based on its distance to training examples. Here, the distance between two data points is determined using the Euclidean distance function computed on the normalized (standardized) features, defined as:

$$d(A, B) = \sqrt{\sum_{i=1}^{m} (x_i - y_i)^2} \tag{5}$$

where A and B are represented by feature vectors A = (x1, x2, …, xm), B = (y1, y2, …, ym), and m is the dimensionality of the feature space (Kramer, 2013). The kNN classification assigns a class label of the majority of the k-nearest patterns in the feature space while the kNN regression calculates the mean of the function values of its k-nearest neighbors (Kramer, 2013).
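Both kNN variants can be sketched in a few lines. The example assumes plain Euclidean distance on features that have already been standardized; the function names and the toy data in the usage note are hypothetical.

```python
import math
from collections import Counter


def k_nearest(train_X, query, k):
    """Indices of the k training points closest to the query."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    return order[:k]


def knn_classify(train_X, train_labels, query, k):
    """Majority class label among the k nearest neighbours."""
    votes = Counter(train_labels[i] for i in k_nearest(train_X, query, k))
    return votes.most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k):
    """Mean response of the k nearest neighbours."""
    neighbours = k_nearest(train_X, query, k)
    return sum(train_y[i] for i in neighbours) / k
```

As a usage example, with training points at 0.0, 0.1, 0.2, 1.0 and 1.1 on a single standardized feature and k = 3, a query at 1.05 takes its class from the two nearby points at 1.0 and 1.1 plus the next closest point at 0.2, and its regression estimate is the mean of those three responses.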

2.1.3.2.4. Random Forest

RF estimates the importance of features included in a model by constructing an ensemble of decision trees (Rodin et al., 2009). As a bagging-type ensemble algorithm, RF combines the outputs of many decision trees, each a relatively weak learner, to build a single, stronger predictor. It does so by training a specified number of decision trees and applying the following randomizing operations: 1) each tree is trained on a random bootstrap sample of the training data; 2) each node of a tree only uses a randomly selected subset of features. The trained decision trees then produce a single prediction by aggregating the individual tree estimates (majority vote for classification, averaging for regression). More detail about the theory and mechanisms of RF is given in Breiman (2001).

2.1.3.3. Model performance evaluation

The optimal subset of features identified during the feature selection process was subsequently used for training the selected regression and classification models. Both types of models were developed using 90% of the original data. The values of hyper-parameters used in constructing the models were optimized by applying grid search with LOOCV on the training data (Elisseeff & Pontil, 2003). The LOOCV technique is N-fold cross-validation, where N is the number of instances in the dataset. Although LOOCV is computationally intensive, choosing the number of folds equal to N gives a more accurate assessment as the true size of the training set is closely mimicked and hence the model bias is minimized (Elisseeff & Pontil, 2003). Accordingly, we tested each single held-out patient record (validation set) on the model trained on the remaining (N - 1) patient observations. Note that the optimal values of the parameters were determined separately for each model type and each modality type or their combinations (i.e., CFA, MRI, PET, CSF, Age). The predictive performance of trained models was later evaluated on an (unseen) test set randomly partitioned from the original data (10% of the original data). The test was performed once for each model constructed using different modality types and their combinations. This allowed us to identify a subset of features that was later used as entry input for the CDSS.

Two established measures for assessing the performance of regression models were used: the adjusted coefficient of determination (R2) and the Root Mean Square Error (RMSE) (Allen, 1997). For classification models, we calculated four metrics: multi-class classification accuracy (MCA), sensitivity, specificity, and area under the ROC curve (AUC) (Hand & Till, 2001). Since the simple form of AUC applies only to binary classification, we extended the definition of AUC to the multi-class problem by averaging pairwise comparisons (Hand & Till, 2001).
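The regression metrics and the multi-class accuracy can be computed as in the sketch below. It assumes the usual textbook definitions (R2 as one minus the ratio of residual to total sum of squares, with the standard adjustment for the number of predictors); the function names are hypothetical, and sensitivity, specificity, and the multi-class AUC are omitted for brevity.

```python
import math


def rmse(y_true, y_pred):
    """Root Mean Square Error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(y_true, y_pred, n_predictors):
    """R2 adjusted for the number of predictors in the model."""
    n = len(y_true)
    r2 = r_squared(y_true, y_pred)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - n_predictors - 1)


def multiclass_accuracy(labels_true, labels_pred):
    """Fraction of records assigned to the correct class."""
    correct = sum(t == p for t, p in zip(labels_true, labels_pred))
    return correct / len(labels_true)
```

The adjustment matters when models built on different numbers of features are compared, since the unadjusted R2 cannot decrease when predictors are added.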

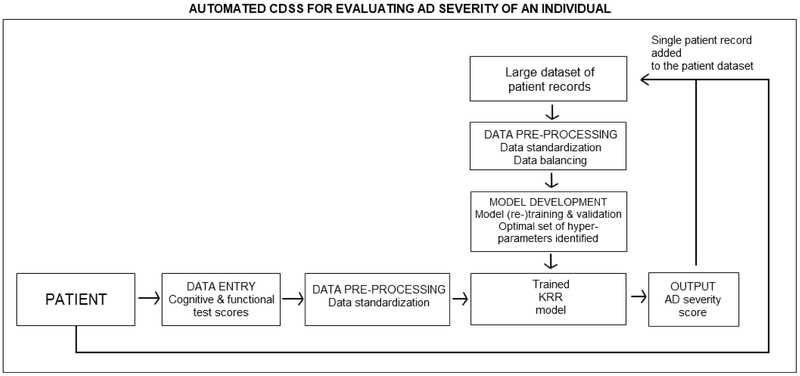

2.2. Development of clinical decision support system

The development of the computational framework described above allowed us to identify a subset of features with high discriminative power in evaluating levels of cognitive impairment in AD. These features were used as CDSS inputs for assessing AD severity of an individual (Bucholc et al., 2017, Bucholc et al., 2018). The CDSS workflow characteristics are shown in Fig. 2. The elements of the framework responsible for data pre-processing, modelling, and validation were automated and realized in the CDSS. The software prototype was developed using R version 3.4.1 and Shiny version 1.0.5. A team of domain experts including computer scientists and clinical experts was involved in the design process. To maximize system effectiveness and clarity, and to guarantee efficient interaction with clinical staff, the visual representations of clinical data were displayed in concise formats that did not increase the cognitive effort required to interpret them in a timely manner. Consultations with medical personnel enabled an understanding of the local context in which the system will be implemented. Furthermore, all involved parties became familiar with the rationale and methodological approach behind the development of our decision support tool. This closed-loop process between the computer scientists and clinicians helped us identify leading obstacles to the system’s adoption and routine use in clinical practice.

Fig. 2.

UML activity diagram of the computer-based clinical decision support system for predicting AD severity of an individual.

3. Results

3.1. Identification of AD features for the CDSS data entry

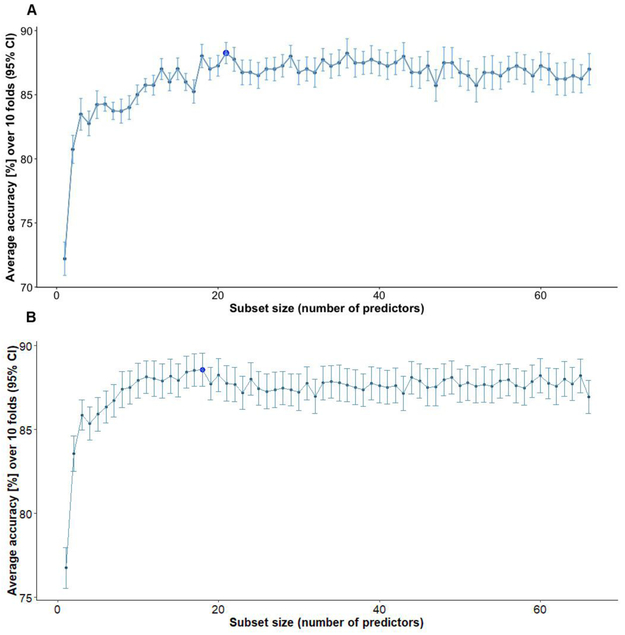

3.1.1. Dimensionality reduction of AD data

Both feature selection methods (RFE-RF and RFE-BT) we considered are variants of the recursive stepwise selection approach. Fig. 3 shows the performance profile across different subset sizes evaluated with the RFE-RF (Fig. 3A) and RFE-BT (Fig. 3B) techniques. The plotted values refer to the average accuracy measured using 10 repeats of 10-fold cross-validation. The accuracy of classifiers (RF and BT) was calculated for different combinations of features and the subset of features with the best performance was retained.

Fig. 3.

A) Performance profile across different subset sizes evaluated using the RFE-RF technique. Dark blue dot: the subset of features with the best performance. B) Resampling performance of the best subset of features across different folds.

Using RFE-RF, we found a combination of 21 features (LDELTOTAL, FAQ, MOCA, ADAS13, LIMMTOTAL, RAVLT Immediate, MMSE, Hippocampus, FDG, Angular Left, Whole Brain, Age, RAVLT Perc Forgetting, MidTemp, Angular Right, Temporal Left, SUMZ3, RAVLT Learning, TAU_ABETA, TAU, Entorhinal) to achieve the highest predictive accuracy (MCA = 88.9%, 95%CI = (88.2%, 89.6%)). The optimal subset of features identified with RFE-BT consisted of 18 features with MCA = 88.5%, 95%CI = (87.5%, 89.5%). All features (with the exception of SUMZ2) selected during the RFE-BT process were also identified with RFE-RF. Since the best subset of features determined using the RFE-RF approach was more comprehensive and yielded higher accuracy, we henceforth used it for training regression and classification models.

The features identified with RFE-RF were grouped into five modality types: 1) CFA (LDELTOTAL, FAQ, MOCA, ADAS13, LIMMTOTAL, MMSE, RAVLT Immediate, RAVLT Perc Forgetting, RAVLT Learning); 2) MRI (Hippocampus, MidTemp, Entorhinal, Whole Brain); 3) PET (FDG, Angular Left, Angular Right, Temporal Left, SUMZ3); 4) CSF (TAU_ABETA, TAU); and 5) Age. The features were grouped into modality types to determine whether cost-effective and non-invasive AD markers, which are therefore easier to implement in the CDSS, have high discriminative power in assessing the severity of AD. Accordingly, we analysed the performance of predictive models constructed using each data type (as well as their combinations).
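
The grouping above, and the enumeration of modality combinations that are then compared, can be written down compactly. The sketch below is illustrative Python; the feature names are taken from the text, while the dictionary name `MODALITIES` and the helper functions are assumptions introduced for the example.

```python
from itertools import combinations

# Feature names as listed in the text, grouped by modality type.
MODALITIES = {
    "CFA": ["LDELTOTAL", "FAQ", "MOCA", "ADAS13", "LIMMTOTAL", "MMSE",
            "RAVLT Immediate", "RAVLT Perc Forgetting", "RAVLT Learning"],
    "MRI": ["Hippocampus", "MidTemp", "Entorhinal", "Whole Brain"],
    "PET": ["FDG", "Angular Left", "Angular Right", "Temporal Left", "SUMZ3"],
    "CSF": ["TAU_ABETA", "TAU"],
    "Age": ["Age"],
}


def modality_combinations(names):
    """Return every non-empty combination of the given modality names."""
    return [combo
            for size in range(1, len(names) + 1)
            for combo in combinations(names, size)]


def features_for(combo):
    """Flatten the feature lists of the modalities in one combination."""
    return [feature for name in combo for feature in MODALITIES[name]]


# Combinations of the four multi-feature modalities (Age is handled separately).
combos = modality_combinations(["CFA", "MRI", "PET", "CSF"])
```

Enumerating the four multi-feature modalities this way yields 15 combinations; each would be passed to the same training and evaluation routine so that the results are directly comparable.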

3.1.2. Model performance

To test the robustness of our hypothesis, we used six different ML methods for the development of predictive models, namely KRR, SVR, and kNNreg for regression and SVM, RF, and kNNclass for classification. Our analysis showed that all models incorporating CFA into their design performed better than models based on a single biomarker modality or a combination of biomarker modalities. The results of the best performing regression and classification models (KRR and SVM respectively) are presented in the main text, while the performance measures for the remaining 4 models are included in the Supplementary Material (Tables A.2, A.3, A.4).

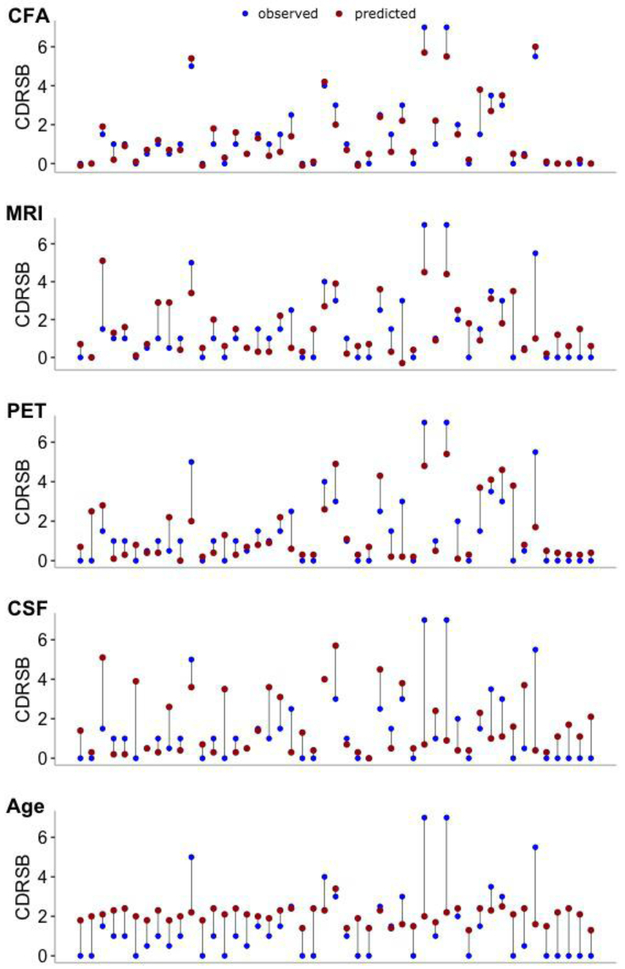

3.1.2.1. Kernel Ridge Regression model

The KRR model constructed for a combination of CFA and biomarkers performed consistently better than models incorporating only biomarkers (either a single modality type or their combinations) (Table 1). The best performance of the KRR model was observed for the combined CFA and MRI data, i.e., R2 = 0.874, 95%CI = (0.827, 0.922) (Table 1, asterisk). Of the two modalities, CFA features were the more discriminative, while MRI markers provided complementary information about AD severity, enhancing the predictive performance of the model. Taken together, CFA provided insight into the memory deficits and behavioural symptoms of AD while MRI features captured the structural degeneration associated with AD. Biomarker features achieved markedly lower performance, e.g., combined PET, MRI, and CSF data yielded R2 = 0.417, 95%CI = (0.256, 0.578) while for PET and MRI features, we reported R2 of 0.407, 95%CI = (0.237, 0.578). Given a single modality type, the model based on CFA (R2 = 0.866, 95%CI = (0.809, 0.922)) clearly outperformed models constructed with MRI (R2 = 0.317, 95%CI = (0.120, 0.513)), PET (R2 = 0.404, 95%CI = (0.215, 0.593)) and CSF (R2 = 0.024, 95%CI = (0, 0.105)) features. Models built using Age or CSF data alone achieved the worst performance. KRR predictions of AD severity of individual patients along with the expected diagnosis for each modality type are shown in Fig. 4.
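
For readers unfamiliar with kernel ridge regression, the following is a minimal sketch of the technique in plain Python. It is not the model reported here: the Gaussian (RBF) kernel, the values of `lam` and `gamma`, and the toy data are assumptions chosen only to show the mechanics, namely solving (K + λI)α = y for the dual coefficients and predicting with f(x) = Σ α_i k(x, x_i).

```python
import math


def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) kernel between two feature vectors (assumed kernel)."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def krr_fit(X, y, lam, gamma):
    """Return dual coefficients alpha solving (K + lam * I) alpha = y."""
    n = len(X)
    K = [[rbf_kernel(X[i], X[j], gamma) + (lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return solve(K, y)


def krr_predict(X_train, alpha, x_new, gamma):
    """Predict f(x_new) = sum_i alpha_i * k(x_new, x_i)."""
    return sum(a * rbf_kernel(x_new, xi, gamma) for a, xi in zip(alpha, X_train))


# Toy one-dimensional data (hypothetical, not ADNI data).
X_toy = [[0.0], [1.0], [2.0], [3.0]]
y_toy = [0.0, 1.0, 2.0, 3.0]
alpha = krr_fit(X_toy, y_toy, lam=1e-6, gamma=0.5)
prediction = krr_predict(X_toy, alpha, [1.5], gamma=0.5)
```

Because the output is a continuous value rather than a class label, a model of this kind can place a patient anywhere along the severity scale.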

Table 1.

KRR model performance measures for MRI, PET, CSF and cognitive function modalities retained for the training after feature selection. CFA represents 9 selected cognitive and functional assessments (LDELTOTAL, FAQ, MOCA, ADAS13, LIMMTOTAL, MMSE, RAVLT Immediate, RAVLT Perc Forgetting, RAVLT Learning), MRI – 4 features (Hippocampus, MidTemp, Entorhinal, Whole Brain), PET – 5 features (FDG, Angular Left, Angular Right, Temporal Left, SUMZ3), and CSF – 2 features (TAU_ABETA, TAU). ‘All’ features refer to a combination of MRI, PET, CSF, CFA, and Age. Performances of predictive models for each combination of modalities were recorded using an (unseen) testing set partitioned from the original data (10% of the original data). R2: adjusted coefficient of determination; RMSE: Root Mean Square Error. Asterisk (*): a subset of features with the highest R2. For more details on data types and their abbreviations, refer to Table A.1.

| Features | R2 | RMSE |
|---|---|---|
| All | 0.839, 95%CI (0.793,0.885) | 0.463 |
| CFA, PET, MRI, CSF | 0.847, 95%CI (0.802,0.892) | 0.442 |
| CFA, PET, MRI | 0.839, 95%CI (0.788,0.890) | 0.436 |
| CFA, PET, CSF | 0.850, 95%CI (0.798,0.903) | 0.400 |
| CFA, MRI, CSF | 0.865, 95%CI (0.817,0.913) | 0.402 |
| PET, MRI, CSF | 0.417, 95%CI (0.256,0.578) | 0.795 |
| CFA, PET | 0.821, 95%CI (0.757,0.884) | 0.429 |
| CFA, MRI * | 0.874, 95%CI (0.827,0.922) | 0.379 |
| CFA, CSF | 0.863, 95%CI (0.809,0.918) | 0.374 |
| PET, MRI | 0.407, 95%CI (0.237,0.578) | 0.800 |
| PET, CSF | 0.374, 95%CI (0.192,0.555) | 0.860 |
| MRI, CSF | 0.181, 95%CI (0.012,0.351) | 0.942 |
| CFA | 0.866, 95%CI (0.809,0.922) | 0.369 |
| PET | 0.404, 95%CI (0.215,0.593) | 0.810 |
| MRI | 0.317, 95%CI (0.120,0.513) | 0.854 |
| CSF | 0.024, 95%CI (0,0.105) | 1.215 |
| Age | 0.055, 95%CI (0,0.176) | 1.036 |
| Set of 4 cognitive/functional assessments (FAQ, ADAS13, MoCA, MMSE) | 0.832, 95%CI (0.754,0.910) | 0.423 |
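
The two measures reported in Table 1 can be computed as in the sketch below. It uses the standard textbook definitions of RMSE and the adjusted coefficient of determination; the function names and the toy values are assumptions, and the exact computation behind the reported figures may differ in detail.

```python
import math


def rmse(observed, predicted):
    """Root mean square error between observed and predicted outcomes."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(observed))


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(observed, predicted, n_features):
    """Adjust R2 for the number of predictors used by the model."""
    n = len(observed)
    r2 = r_squared(observed, predicted)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - n_features - 1)


# Hypothetical observed and predicted CDRSB values for five test subjects.
observed = [0.0, 1.0, 2.0, 3.0, 4.0]
predicted = [0.0, 1.0, 2.0, 3.0, 5.0]
```

The adjustment penalizes models with many predictors, which matters when comparing a 4-feature model against one built on all 21 features.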

Fig. 4.

KRR model predictions of medical diagnosis (CDRSB) of individual patients for 5 modality types: a) CFA, b) MRI, c) PET, d) CSF, and e) Age. Blue dots: observed values of CDRSB; red dots: predicted values of CDRSB; vertical lines: differences between observed and predicted values of the outcome. Models’ predictions for each set of considered markers were obtained using an (unseen) testing set partitioned from the original data (10%). CFA: functional and cognitive assessments; MRI: magnetic resonance imaging; PET: positron emission tomography; CSF: cerebrospinal fluid biomarkers.

3.1.2.2. Support Vector Machine

Three target disease classes associated with AD severity were used in SVM classification: ‘Normal’ (CDRSB = 0), ‘QCI’ (0.5 ≤ CDRSB ≤ 4.0), and ‘AD Mild/Moderate’ (4.5 ≤ CDRSB ≤ 15.5). The SVM MCA and multiclass AUC observed for a combination of all 5 modality types were 74.5%, 95%CI = (61.9%, 87.1%) and 91.6% respectively (Table 2). Again, combinations of features incorporating CFA yielded higher performance than models constructed using single or combined biomarker modalities. The best SVM performance was observed for a subset of 4 CFA features (FAQ, ADAS13, MoCA, MMSE), i.e., MCA of 83.0%, 95%CI = (72.1%, 93.8%) and AUC = 94.9% (Table 2, asterisk). Given individual modality types, the model built using CFA outperformed models constructed with MRI, PET, or CSF data. Fig. 5 shows the expected diagnosis along with the corresponding SVM predictions obtained for the 5 considered modality types. The best sensitivity and specificity in distinguishing Normal from QCI and Mild/Moderate AD cases was achieved for a combination of four CFA (FAQ, ADAS13, MoCA, MMSE) (sensitivity = 100% and specificity = 100%) (Table 2). The best sensitivity and specificity in identifying QCI from Normal and Mild/Moderate AD subjects was observed for combined CFA, PET, and MRI features (sensitivity = 80.8% and specificity = 85.7%). For all modality types (and their combinations), the QCI category had generally lower sensitivity than Normal and Mild/Moderate AD.

Table 2.

SVM model performance measures for MRI, PET, CSF and cognitive function modalities retained for the training after feature selection. CFA represents 9 selected cognitive and functional assessments (LDELTOTAL, FAQ, MOCA, ADAS13, LIMMTOTAL, RAVLT Immediate, MMSE, RAVLT Perc Forgetting, RAVLT Learning), MRI – 4 features (Hippocampus, MidTemp, Entorhinal, Whole Brain), PET – 5 features (FDG, Angular Left, Angular Right, Temporal Left, SUMZ3), and CSF – 2 features (TAU_ABETA, TAU). ‘All’ features refer to a combination of MRI, PET, CSF, CFA, and Age. Performances of predictive models for each combination of modalities were recorded using an (unseen) testing set partitioned from the original data (10% of the original data). MCA: multi-class classification accuracy. Multi-class AUC: multiclass area under the curve. Asterisk (*): a subset of features with the best predictive performance. For more details on data types and their abbreviations, refer to Table A.1.

| Features | MCA (%) | Sensitivity (%): Normal | Sensitivity (%): QCI | Sensitivity (%): Mild/Moderate | Specificity (%): Normal | Specificity (%): QCI | Specificity (%): Mild/Moderate | Multi-class AUC (%) |
|---|---|---|---|---|---|---|---|---|
| All | 74.5, 95%CI (61.9,87.1) | 82.4 | 65.4 | 100 | 80.0 | 85.7 | 93.0 | 91.6 |
| CFA, PET, MRI, CSF | 80.9, 95%CI (69.5,92.2) | 82.4 | 76.9 | 100 | 86.7 | 85.7 | 95.4 | 93.4 |
| CFA, PET, MRI | 82.9, 95%CI (72.1,93.8) | 82.4 | 80.8 | 100 | 90.0 | 85.7 | 95.4 | 94.1 |
| CFA, PET, CSF | 80.9, 95%CI (69.5,92.2) | 88.2 | 73.1 | 100 | 86.7 | 90.5 | 93.0 | 94.0 |
| CFA, MRI, CSF | 72.3, 95%CI (59.4,85.3) | 82.4 | 65.4 | 75.0 | 80.0 | 81.0 | 93.0 | 87.7 |
| PET, MRI, CSF | 61.7, 95%CI (47.7,75.8) | 70.6 | 57.7 | 50.0 | 60.0 | 71.4 | 100 | 72.5 |
| CFA, PET | 80.9, 95%CI (69.5,92.2) | 82.4 | 76.9 | 100 | 86.7 | 85.7 | 95.4 | 93.4 |
| CFA, MRI | 76.6, 95%CI (64.4,88.8) | 76.5 | 73.1 | 100 | 80.0 | 80.1 | 97.8 | 91.7 |
| CFA, CSF | 76.6, 95%CI (64.4,88.8) | 88.2 | 65.4 | 100 | 80.0 | 90.5 | 93.0 | 92.5 |
| PET, MRI | 44.7, 95%CI (30.3,59.1) | 58.8 | 34.6 | 50.0 | 43.3 | 61.9 | 97.8 | 67.7 |
| PET, CSF | 46.8, 95%CI (32.4,61.2) | 64.7 | 34.6 | 50.0 | 46.7 | 71.4 | 93.0 | 68.0 |
| MRI, CSF | 53.2, 95%CI (38.8,67.6) | 64.7 | 50.0 | 25.0 | 56.7 | 66.7 | 95.4 | 65.4 |
| CFA | 76.6, 95%CI (64.4,88.8) | 82.4 | 69.2 | 100 | 80.0 | 85.7 | 95.4 | 92.2 |
| PET | 46.8, 95%CI (32.4,61.2) | 70.6 | 26.9 | 75.0 | 43.3 | 80.1 | 90.7 | 71.7 |
| MRI | 55.3, 95%CI (41.0,69.7) | 82.4 | 34.6 | 50.0 | 50.0 | 90.1 | 90.1 | 73.5 |
| CSF | 42.6, 95%CI (28.3,56.8) | 64.7 | 23.1 | 75.0 | 56.7 | 85.7 | 74.4 | 68.6 |
| Age | 40.4, 95%CI (26.2,54.6) | 100 | 7.7 | 0 | 6.7 | 100 | 100 | 50.0 |
| Set of 4 cognitive/functional tests (FAQ, ADAS13, MoCA, MMSE)* | 83.0, 95%CI (72.1,93.8) | 100 | 69.2 | 100 | 76.7 | 100 | 97.7 | 94.9 |
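
The per-class sensitivity and specificity values in Table 2 follow a one-versus-rest reading of a three-class confusion matrix, with MCA as the share of correctly classified subjects. The sketch below illustrates that computation; the function name and the confusion counts are hypothetical and are not taken from the study.

```python
def one_vs_rest_metrics(confusion, labels):
    """Compute MCA plus per-class sensitivity and specificity.

    confusion[i][j] is the number of subjects whose true class is labels[i]
    and whose predicted class is labels[j].
    """
    n_classes = len(labels)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    metrics = {"MCA": correct / total}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp  # class k subjects predicted as another class
        fp = sum(confusion[i][k] for i in range(n_classes)) - tp
        tn = total - tp - fn - fp
        metrics[label] = {
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
        }
    return metrics


labels = ["Normal", "QCI", "Mild/Moderate"]
# Hypothetical counts for illustration only (rows: true class, columns: predicted).
confusion = [
    [8, 2, 0],
    [3, 15, 2],
    [0, 1, 9],
]
metrics = one_vs_rest_metrics(confusion, labels)
```

Read this way, a class can have perfect sensitivity yet imperfect specificity when subjects from a neighbouring class are assigned to it.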

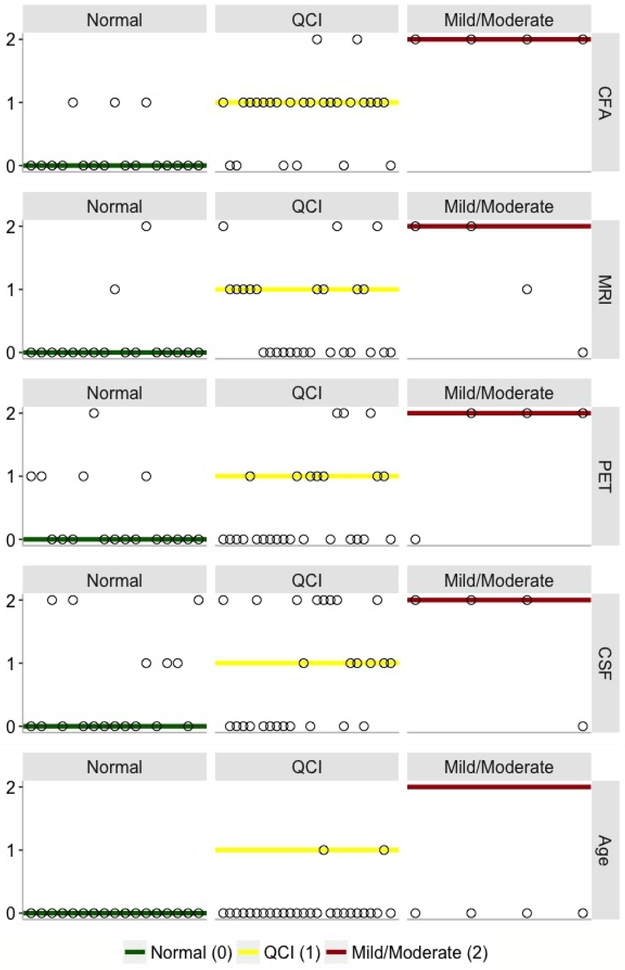

Fig. 5.

SVM model predictions of medical diagnosis of individual patients for 5 data types: a) CFA, b) MRI, c) PET, d) CSF, and e) Age. The vertical axis values and corresponding horizontal lines refer to the target CDRSB class, i.e., ‘Normal’ (green) = 0 (CDRSB = 0), ‘QCI’ (yellow) = 1 (0.5 ≤ CDRSB ≤ 4.0), and ‘Mild/Moderate’ (red) = 2 (4.5 ≤ CDRSB ≤ 15.5). Circles: predicted CDRSB class. CFA: functional and cognitive assessments; MRI: magnetic resonance imaging; PET: positron emission tomography; CSF: cerebrospinal fluid biomarkers.

3.2. Development of computer-based decision support tool

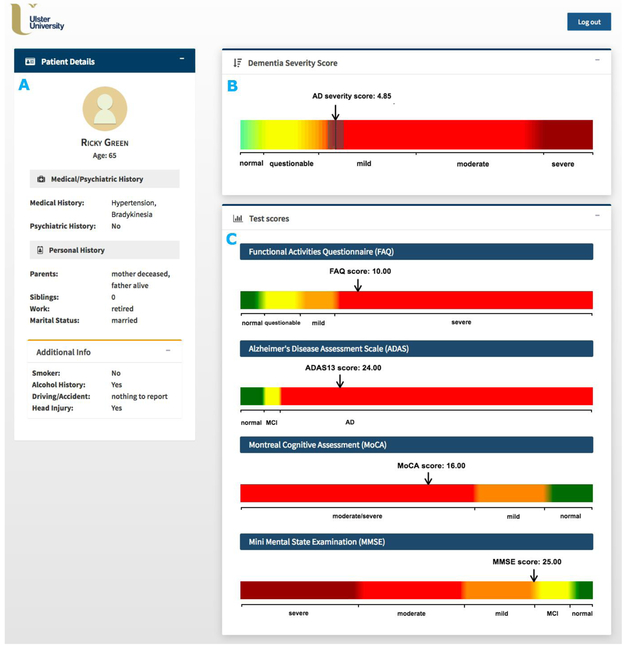

Given the high predictive power of CFA and their common use in clinical practice, we developed a prototype of the CDSS for assessing the severity of AD of an individual (based solely on CFA) to aid clinicians in diagnosing AD (Fig. 6). The feasibility of our CDSS was demonstrated by using the baseline data from ADNI to benchmark the ability of the AD severity score to model disease prediction. The system implements an automated machine learning approach for data pre-processing, modelling, and validation (as described in Section 2.1) and uses scores of selected cognitive measures as data entries. The disease outcome prediction is generated using the KRR model because it treats the course of disease as a continuous progression and therefore allows for discriminating between different ‘stages’ of the same AD category (e.g., a light-green colour in Fig. 6 indicates less probable QCI, whereas a light-orange colour indicates more probable QCI). Furthermore, the KRR model achieved the best predictive performance of all regression techniques considered.

Fig. 6.

Graphical user interface of the computer-based clinical decision support system for predicting severity of dementia of an individual patient. A) Patient information panel; B) AD severity measurement scale with AD severity score (black line) and its confidence interval (gray range); C) Measurement scales for the selected cognitive/functional assessments (FAQ, ADAS13, MoCA, MMSE). To allow quick interpretation, the AD severity measurement scale is divided into 5 classes based on the CDRSB score, i.e., ‘Normal’ (CDRSB = 0), ‘QCI’ (0.5 ≤ CDRSB ≤ 4.0), ‘AD Mild’ (4.5 ≤ CDRSB ≤ 9.0), ‘AD Moderate’ (9.5 ≤ CDRSB ≤ 15.5), and ‘AD severe’ (16 ≤ CDRSB ≤ 18).

The input panel of our CDSS is designed for a set of 4 CFA inputs, namely, the total scores for FAQ, ADAS13, MoCA, and MMSE. These 4 efficient AD markers achieved the highest performance for the SVM model (MCA of 83%, 95%CI = (72.1%, 93.8%)) while for the KRR model, their performance (R2 = 0.832, 95%CI = (0.754,0.910)) was only slightly lower than the best performance reported for the combined CFA and (more labour-intensive and costly) MRI data, i.e., R2 = 0.874, 95%CI = (0.827,0.922) (Tables 1 & 2). Although all four tests are commonly used to provide a measure of cognitive impairment in clinical, research, and community settings, to our knowledge they have not previously been used in combination for evaluating AD severity (Nasreddine et al., 2006, Skinner et al., 2012, Teng et al., 2010, Trzepacz et al., 2015). The MMSE is currently the most widely used screening assessment for general cognitive evaluation and staging of Alzheimer’s disease (Nasreddine et al., 2006, Vertesi et al., 2001). It assesses various cognitive areas including attention, memory, language, orientation, and visuospatial abilities (Vertesi et al., 2001). The MMSE has been frequently applied not only to scale the severity of cognitive impairment at a given point in time but also to document the overall progression of cognitive decline over time (de Souza, Sarazin, Goetz, & Dubois, 2009). When compared to the MMSE, the MoCA consists of more memory, structured language, and executive function items and demonstrates high discriminant potential for MCI patients who performed within the normal range of the MMSE (Nasreddine et al., 2006, Trzepacz et al., 2015, Whitney, Mossbarger, Herman, & Ibarra, 2012). In addition, the MoCA has been shown to exhibit superior sensitivity for amnestic MCI detection compared to the MMSE (Freitas, Simões, Alves, & Santana, 2013). The ADAS13 is mainly applied to evaluate the severity of cognitive and non-cognitive dysfunctions from mild to severe AD (Skinner et al., 2012). 
However, it has also been used as an outcome measure for trials of interventions in people with MCI and appeared to be able to discriminate between patients with MCI and mild AD (Kueper, Speechley, & Montero-Odasso, 2018). In contrast to MMSE, MoCA, and ADAS13, the FAQ is not used in everyday clinical routine (Ritter et al., 2015). However, its relevance for determining impairment in everyday functioning and ensuring accurate early diagnosis of AD has been well-documented (Devanand et al., 2008, Ding et al., 2018, Ritter et al., 2015). For instance, studies found the use of FAQ can significantly contribute to discerning MCI versus AD cases with MoCA scores overlapping in the MCI range (Trzepacz et al., 2015). Furthermore, the FAQ has been shown to be highly sensitive in detecting differences in cognitive functioning between healthy and MCI patients, mainly via the assessment of the ability of assembling documents and remembering appointments (Jekel et al., 2015).

Given the scores of the 4 CFA described above, our system is able to provide an evidence-based AD score reflecting the severity of AD in an individual subject. The score is generated by comparing selected CFA scores of an undiagnosed patient against a large database of existing patient records (Figs. 2 & 6). The data of a single patient, together with the predicted AD severity score, are later added to the clinical data warehouse, updating the database and initiating the retraining and validation procedure of the predictive model. To highlight the uncertainty inherent in the disease prediction, the system also provides a confidence interval for the predicted AD severity score based on the output from the individual sample validation procedure. Since our approach does not currently use input from clinicians for subsequent learning but uses its own predictions for reinforcing the existing model, further work is required to incorporate a self-training scheme that chooses only high-confidence predictions in the iterative process of model training.
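
The high-confidence selection step proposed as further work could take a form like the sketch below. It is purely illustrative: using the width of the confidence interval as the confidence criterion, the threshold `max_ci_width`, the record layout, and the example scores are all assumptions and not part of the current prototype.

```python
def self_training_update(training_set, new_scores, predicted, ci_low, ci_high,
                         max_ci_width=2.0):
    """Append a pseudo-labelled record only if its interval is narrow enough.

    Returns True when the record was added, signalling that the model
    should be retrained and revalidated; returns False otherwise.
    """
    if ci_high - ci_low > max_ci_width:
        return False  # low-confidence prediction: keep it out of the training data
    training_set.append({"scores": dict(new_scores), "cdrsb": predicted})
    return True


# Hypothetical patient record and prediction.
training_set = []
added = self_training_update(
    training_set,
    {"FAQ": 3, "ADAS13": 14.0, "MoCA": 24, "MMSE": 27},
    predicted=1.5, ci_low=1.0, ci_high=2.2,
)
rejected = self_training_update(
    training_set,
    {"FAQ": 12, "ADAS13": 25.0, "MoCA": 18, "MMSE": 22},
    predicted=5.0, ci_low=2.0, ci_high=8.0,
)
```

Filtering in this way limits how far uncertain predictions can feed back into the model, at the cost of slower growth of the training set.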

The CDSS patient profile includes only content that is relevant in the context of AD diagnosis, in a concise format to allow quick and unambiguous interpretation. It consists of: 1) the patient information section with the patient’s medical, psychiatric, and personal history details (Fig. 6A); 2) the AD severity measurement scale along with the predicted AD score and its confidence interval (Fig. 6B); and 3) CFA test scores together with their corresponding cut-off values for disease classes (Fig. 6C). The AD severity measurement scale is divided into 5 classes based on the CDRSB score, i.e., ‘Normal’ (CDRSB = 0), ‘QCI’ (0.5 ≤ CDRSB ≤ 4.0), ‘AD Mild’ (4.5 ≤ CDRSB ≤ 9.0), ‘AD Moderate’ (9.5 ≤ CDRSB ≤ 15.5), and ‘AD severe’ (16 ≤ CDRSB ≤ 18). The simple and user-friendly layout of the patient profile allows clinicians to easily assess how different CFA contribute to the predicted AD severity score (Bucholc et al., 2018).
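
A continuous severity score and its confidence interval can be mapped onto the five-class scale roughly as sketched below. Two assumptions are made and should be checked against the actual prototype: the predicted score is rounded to the nearest 0.5 (the CDRSB step size) before classification, and the mild class is taken to start at 4.5, consistent with the three-class definition used for the SVM. The names `SEVERITY_CLASSES`, `to_cdrsb_grid`, `severity_class`, and `classes_in_interval` are hypothetical.

```python
import math

# (class name, lowest CDRSB, highest CDRSB); boundaries as assumed in the lead-in.
SEVERITY_CLASSES = [
    ("Normal", 0.0, 0.0),
    ("QCI", 0.5, 4.0),
    ("AD Mild", 4.5, 9.0),
    ("AD Moderate", 9.5, 15.5),
    ("AD severe", 16.0, 18.0),
]


def to_cdrsb_grid(score):
    """Clamp a score to [0, 18] and round it to the nearest 0.5."""
    clamped = min(max(score, 0.0), 18.0)
    return math.floor(clamped * 2 + 0.5) / 2


def severity_class(score):
    """Return the severity class of a (possibly continuous) predicted score."""
    grid_score = to_cdrsb_grid(score)
    for name, low, high in SEVERITY_CLASSES:
        if low <= grid_score <= high:
            return name
    raise ValueError(f"score {score} is outside the CDRSB scale")


def classes_in_interval(ci_low, ci_high):
    """List the classes touched by a confidence interval, in order of severity."""
    low, high = to_cdrsb_grid(ci_low), to_cdrsb_grid(ci_high)
    return [name for name, c_low, c_high in SEVERITY_CLASSES
            if c_low <= high and c_high >= low]


# Hypothetical prediction with its confidence interval.
label = severity_class(4.3)
plausible = classes_in_interval(3.0, 5.5)
```

Reporting every class touched by the interval, rather than a single label, keeps the uncertainty of the prediction visible to the clinician.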

4. Discussion

In this study, we have developed a computational framework for identifying key measures in predicting the severity of AD using baseline data from ADNI, which led to the development of an efficient and practical CDSS prototype for evaluating the severity of AD of an individual on a continuous spectrum. It is efficient in that only a small subset of the data attributes with the highest predictive accuracy of AD severity level is chosen, and they consist of readily available CFA scores. It is practical in the sense that clinical decisions on AD rely relatively heavily on CFA scores. Furthermore, the system uses an automated machine learning approach for data pre-processing, modelling, and validation, making the clinical decision process more objective and accurate.

We showed that model predictions incorporating CFA were more accurate than those based solely on biomarker modalities (single or combinations) in this particular ADNI dataset. The KRR model performed best for the combined CFA and MRI data, i.e., R2 = 0.874, 95%CI = (0.827, 0.922) (Table 1). However, the KRR model incorporating only CFA scores (FAQ, ADAS13, MoCA, MMSE) achieved comparable performance, i.e., R2 = 0.832, 95%CI = (0.754, 0.910). The SVR achieved the highest performance for the combination of CFA and MRI, i.e., R2 = 0.790, 95%CI (0.715, 0.866) while kNNreg performed best for CFA, i.e., R2 = 0.750, 95%CI (0.653,0.847) (Table A.2). Given the SVM model, the optimal performance was reported for CFA data, i.e., MCA of 83.0%, 95%CI = (72.1%, 93.8%) for a subset of 4 CFA (FAQ, ADAS13, MoCA, MMSE) (Table 2). Again, the highest accuracy of the RF model was reported for all CFA with MCA of 80.0%, 95%CI (66.7%, 90.9%) while kNNclass performed best for the combinations of CFA, MRI and CSF, i.e., MCA of 89.7%, 95%CI (76.9%, 96.5%) (Table A.3, A.4). These results lend support to existing clinical practices that depend relatively heavily on CFAs (Grober, Wakefield, Ehrlich, Mabie & Lipton, 2017). Future analysis of individual tasks making up each of the considered CFAs can lead to building a single optimised CFA.

High predictive power of CFA has been demonstrated in previous studies (Chapman et al., 2011, Cui et al., 2011, Korolev et al., 2016). Cui et al. (2011) showed that single-modality predictive models based on CFA, namely FAQ, LM Delayed Recall, LM Immediate Recall, AVLT Delayed Recall and AVLT trials 1–5 (accuracy of 65%) outperformed those based on volumetric based CSF (accuracy of 60%) and MRI (accuracy of 62%) biomarkers in the task of early identification of MCI patients at risk of progressing to AD. In addition, incorporating multiple data modalities into the model, i.e., CFA, MRI, and CSF data, only slightly improved model performance (accuracy of 67%). Similar observations have been reported by Chapman et al. (2011) and Ewers et al. (2012). Cognitive measures (either alone or combined with other predictors) were also highly predictive in discriminating between stages of cognitive decline (Ewers et al., 2012, Nestor, Scheltens, & Hodges, 2004). In Ewers et al. (2012), the best statistical differentiation between AD and healthy subjects was reached for a combination of neuropsychological tests (RAVLT Immediate and RAVLT Delayed Recall) and CSF t-tau/Aβ1-42 ratio. However, a single-modality model incorporating cognitive measures showed a predictive accuracy comparable to that of the multi-predictor model. A few other studies reported relatively good predictive performance of models constructed using tests for memory impairment, abstract reasoning, and verbal fluency (Jacobs et al., 1995, Small, Herlitz, Fratiglioni, Almkvist, & Bäckman, 1997). Note that an increasing number of studies are based on the multimodal approach for either differentiating between stages of disease severity or identifying potential descriptors for the decline of cognition from MCI to AD (Bauer, Cabral, & Killiany, 2018, Ritter et al., 2015). Therefore, it is difficult to assess the individual contributions of modalities, such as CFA, to the accuracy of predictive models. 
Furthermore, differences in study designs reflected in different data types used, characteristics of patient populations, subject inclusion/exclusion criteria, diagnostic criteria for AD, classification frameworks and evaluation metrics make it challenging to compare results across studies. However, the discriminatory value of cognitive measures in the AD severity assessment or MCI-to-AD conversion has been repeatedly demonstrated.

Numerous predictive approaches have been developed for diagnosis of AD, most of them derived using Cox Regression (Barnes et al., 2014, Derby et al., 2013, Ewers et al., 2012, Okereke et al., 2012, Seshadri et al., 2010), and Logistic Regression (Barnes et al., 2010, Bauer et al., 2018, Chary et al., 2013, Wolfsgruber et al., 2014). In the past decade, there has also been growing interest in the application of SVM (Casanova et al., 2015, Cui et al., 2011, Klöppel et al., 2008, Ritter et al., 2015, Weygandt et al., 2011), RF (Gray et al., 2013, Sarica et al., 2017) as well as deep neural network models for AD diagnostics (Ortiz, Munilla, Gorriz, & Ramirez, 2016, Shen, Wu, & Suk, 2017). The SVM-based models have been developed for both differential diagnosis and assessment of AD severity using neuroimaging, genome-based, and blood-based biomarkers (Klöppel et al., 2008, Laske et al., 2011, Smith-Vikos & Slack, 2013, Weygandt et al., 2011). RF demonstrated advantages over other ML methods regarding the ability to handle highly non-linearly correlated data (Caruana & Niculescu-Mizil, 2006). While most deep learning models show great performance in diagnostic classification, their interpretation remains an emerging field of research (Che, Purushotham, Khemani, & Liu, 2016). Other machine learning approaches for assisted diagnosis of cognitive impairment and dementia include linear regression (Agosta et al., 2012, Bauer et al., 2018, Koch et al., 2012), penalized regression (Wang, Liu, & Shen, 2018), Bayesian networks (Ding et al., 2018), hidden Markov models (Wang et al., 2014), and probabilistic multiple kernel learning (MKL) classifiers (Korolev et al., 2016, Youssofzadeh et al., 2017). 
Despite the common use of machine learning techniques for disease diagnostics, controversy still exists regarding the effects of different combinations of explanatory variables, hyper-parameter tuning, sample size and class balance on the performance of predictive models (Du, Fu, & Calhoun, 2018, Finch & Schneider, 2007, Michie, Spiegelhalter, & Taylor, 1994). Different applications using different data sets (simulated or real) have failed to generate a model that performed best in all applications (Michie et al., 1994, Wolpert & MacReady, 1997). Empirical comparisons have often produced conflicting results, for example one study claiming that decision trees are superior to neural nets, and another making the opposite claim (Michie et al., 1994). In fact, Wolpert & MacReady (1997) demonstrated the danger of comparing performance of algorithms on a small sample of problems and showed that the best learning algorithm is always context dependent.

The integration of efficient, less invasive, and cost-effective clinical markers into CDSS for AD diagnosis of individuals can support prevention-related decision-making in clinical settings. So far, educational interventions aimed at improving GPs’ knowledge and skills in recognition and management of dementia made no significant impact on the number of dementia patients’ care reviews or newly diagnosed cases (Dodd et al., 2015). Despite this, the deployment of CDSSs for routine use in AD diagnostics, especially those incorporating machine learning methodologies, is still very rare. Furthermore, CDSSs currently used in dementia decision-making require information from expensive and labour-intensive biomarkers (e.g., PredictAD) (Antila et al., 2013) or make use of predictive methodologies based on binary classifications (e.g., CADi2 or CANTAB) (Fray, Robbins & Sahakian, 1996, Onoda & Yamaguchi, 2014). Such approaches are designed to differentiate between two disease categories, e.g., healthy patients and individuals with cognitive impairment. Our computational approach defines the disease in a more realistic manner, as a continuous progression rather than a sequence of discrete stages, conforming more closely to the pathology of the disease. Importantly, it also provides clinicians with an estimate of prediction reliability by adopting a validation procedure appropriate for individual participant data.

Our study has several limitations worth noting. First, our CDSS prototype does not yet include a mechanism for handling missing data. Work is currently in progress to develop an automated approach for missing data imputation that will be later incorporated into the system. Second, the current version of our CDSS provides clinicians with the predicted AD severity score of an individual with the corresponding confidence interval and CFA test scores together with cut-off values for disease classes; however, it does not provide any measures of predictive accuracy of the incorporated model or information regarding the relative importance of individual predictors in the model. We plan to address these issues in future work by making the model evaluation metrics available to clinicians. We also intend to provide the relative importance of individual features incorporated into the model based on the magnitude of standardized regression coefficients. The format of visual representations of performance metrics will be developed in consultation with clinical end-users. Third, the AD measurement scale in our CDSS covers all 5 disease classes, i.e., ‘normal’, ‘QCI’, ‘mild’, ‘moderate’, and ‘severe’. However, due to data unavailability, patients with the ‘severe’ type of AD have not been included in our model training set and therefore, such cases could not be learned from the data. The inclusion of the ‘severe’ disease class in the CDSS means the suitability of our KRR model for making predictions outside the range of data used to estimate the model must be further evaluated. The necessary follow-up step would be a testing phase, to establish the degree to which prediction for ‘severe’ cases is contextually valid and hence, clinically useful. This could be done when additional data for patients with the ‘severe’ AD type is obtained, for example from memory clinics.

It is also worth noting that the current computational approach implemented in our CDSS is based on an iterative method for semi-supervised learning that uses its own predictions to assign AD severity labels to new (unlabelled) patient data. Accordingly, our CDSS does not use input from clinicians for subsequent learning of the predictive model but uses its own predictions to reinforce the current model. We are aware that this may cause the model to overfit. Hence, for future work, we plan to enhance our computational framework by incorporating a self-training algorithm that adds only high-confidence predictions to the training set for the next iteration. Most importantly, we will develop interpretability of our models, either through development of algorithms to “peer” through the black box (Guidotti et al., 2018) or by complementing them with more interpretable models such as decision trees (Sokol & Flach, 2018). This will facilitate an easy explanation of the system’s content and allow for adjustment/correction of the AD severity class based on feedback from clinicians. A dynamic, easily interpretable predictive model interacting with decision makers to re-estimate predictions according to new clinical information could increase the clinical value of our CDSS. Finally, we acknowledge that the proposed CDSS requires further real-time testing and validation in a clinical setting to enhance the system’s reliability, stability and adoptability.

5. Conclusion

Our CDSS offers a platform to standardize diagnostics in AD and has the potential to address variations in the quality of GP services associated with the lack of experience or skills in dementia recognition. By taking full advantage of ML techniques, our system can develop, update, and visualize AD risk profiles of individual patients by utilizing only non-invasive and cost-effective AD markers. Although our CDSS has not been designed to provide a diagnosis, it can streamline a clinical workflow and assist with clinical decision-making. As our predictive, ML-based framework becomes more established and its performance better characterized and tested, it could be further upgraded to automate the care pathway for dementia. This process will require the active involvement of the medical community to ensure that developed algorithms are intelligently integrated into existing medical practice and are rigorously validated for clinical efficacy.

Supplementary Material

Highlights.

Integrated machine-learning methods can predict AD severity with high accuracy

Model validation procedure appropriate for processing individual participant data

Highly accessible cognitive and functional markers more accurate than biomarkers

Automated decision-support tool predicts individual AD severity on continuous scale

System assesses undiagnosed patient data against an existing dataset of patients

Acknowledgements

Data collection and sharing for this project was funded by the Alzheimer's Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Funding

This project was initially supported by Innovate UK (102161) (MB, XD, HYW, DHG, HW, GP, LPM, AJB, KWL) and subsequently by the EU’s INTERREG VA Programme, managed by the Special EU Programmes Body (SEUPB) (MB, LPM, AJB, PLM, ST, DPF, KWL), the Northern Ireland Functional Brain Mapping Facility (1303/101154803) funded by Invest NI and Ulster University (GP, LPM, AJB, KWL), Ulster University Research Challenge Fund (KWL, XD, PLM, ST), Global Challenges Research Fund (XD, KWL, PLM, ST), and the COST Action Open Multiscale Systems Medicine (OpenMultiMed) supported by COST (European Cooperation in Science and Technology) (KWL). The views and opinions expressed in this paper do not necessarily reflect those of the European Commission or the Special EU Programmes Body (SEUPB).

Footnotes

Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf

Declarations of interest

None

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Abikoff H, Alvir J, Hong G, Sukoff R, Orazio J, Solomon S & Saravay S (1987). Logical memory subtest of the Wechsler Memory Scale: age and education norms and alternate-form reliability of two scoring systems. Journal of clinical and experimental neuropsychology, 9(4), 435–448.

- 2. Agosta F, Pievani M, Geroldi C, Copetti M, Frisoni GB, & Filippi M (2012). Resting state fMRI in Alzheimer's disease: beyond the default mode network. Neurobiology of aging, 33(8), 1564–1578.

- 3. Allen MP (1997). The coefficient of determination in multiple regression. Understanding Regression Analysis, 91–95.

- 4. Antila K, Lotjonen J, Thurfjell L, Laine J, Massimini M, Rueckert D, Zubarev R, Oresic M, van Gils M, Mattila J, Hviid Simonsen A, Waldemar G & Soininen H (2013). The PredictAD project: development of novel biomarkers and analysis software for early diagnosis of the Alzheimer's disease. Interface Focus, 3(2), 20120072.

- 5. Awad M, & Khanna R (2015). Support vector regression. In Efficient Learning Machines (pp. 67–80). Apress, Berkeley, CA.

- 6. Barber D (2012). Bayesian reasoning and machine learning. Cambridge University Press.

- 7. Barnes DE, Beiser AS, Lee A, Langa KM, Koyama A, Preis SR, Neuhaus J, McCammon RJ, Yaffe K, Seshadri S & Haan MN (2014). Development and validation of a brief dementia screening indicator for primary care. Alzheimer's & Dementia, 10(6), 656–665.

- 8. Barnes DE, Covinsky KE, Whitmer RA, Kuller LH, Lopez OL, & Yaffe K (2010). Dementia risk indices: A framework for identifying individuals with a high dementia risk. Alzheimer's & Dementia, 6(2), 138.

- 9. Basak D, Pal S, & Patranabis DC (2007). Support vector regression. Neural Information Processing-Letters and Reviews, 11(10), 203–224.

- 10. Bauer CM, Cabral HJ, & Killiany RJ (2018). Multimodal Discrimination between Normal Aging, Mild Cognitive Impairment and Alzheimer’s Disease and Prediction of Cognitive Decline. Diagnostics, 8(1), 14.

- 11. Bengio Y, Delalleau O, Roux NL, Paiement JF, Vincent P, & Ouimet M (2003). Feature Extraction: Foundations and Applications, chapter Spectral Dimensionality Reduction. Springer.

- 12. Benoît F, Van Heeswijk M, Miche Y, Verleysen M, & Lendasse A (2013). Feature selection for nonlinear models with extreme learning machines. Neurocomputing, 102, 111–124.

- 13. Brodaty H, Woolf C, Andersen S, Barzilai N, Brayne C, Cheung K, Corrada M, Crawford J, Daly C, Gondo Y, Hagberg B, Hirose N, Holstege H, Kawas C, Kaye J, Kochan N, Lau B, Lucca U, Marcon G, Martin P, Poon L, Richmond R, Robine J, Skoog I, Slavin M, Szewieczek J, Tettamanti M, Viña J, Perls T & Sachdev P (2016). ICC-dementia (International Centenarian Consortium - dementia): an international consortium to determine the prevalence and incidence of dementia in centenarians across diverse ethnoracial and sociocultural groups. BMC Neurology, 16(1).

- 14. Brown SA (2016). Patient similarity: emerging concepts in systems and precision medicine. Frontiers in physiology, 7, 561.

- 15. Bucholc M*, Ding X*, Wang HY, Glass D, Wang H, Prasad G, Maguire LP, Bjourson AJ, McClean PL, Todd S, Finn DP & Wong-Lin K (2018, March). Development of a computer-based clinical decision support tool for identifying individuals with different levels of cognitive impairment. Poster session presentation at the meeting of Alzheimer's Research UK, London. *Joint first authors.

- 16. Bucholc M, Ding X, Wang H, Glass DH, Wang H, Bjourson AJ, Dowey LR, O’Kane M, Maguire L, Prasad G & Wong-Lin K (2017, September). Data analytics and computerised application for predicting Alzheimer’s disease severity and related outlier test scores. Poster session presentation at the meeting of Translational Medicine (TMED) 8 Conference, Derry-Londonderry.

- 17. Caruana R, & Niculescu-Mizil A (2006). An empirical comparison of supervised learning algorithms. In Proceedings of the 23rd international conference on Machine learning (pp. 161–168). ACM.

- 18. Casanova R, Hsu FC, Espeland MA, & Alzheimer's Disease Neuroimaging Initiative. (2012). Classification of structural MRI images in Alzheimer's disease from the perspective of ill-posed problems. PloS one, 7(10), e44877.

- 19. Castaneda C, Nalley K, Mannion C, Bhattacharyya P, Blake P, Pecora A, Goy A & Suh KS (2015). Clinical decision support systems for improving diagnostic accuracy and achieving precision medicine. Journal of clinical bioinformatics, 5(1), 4.

- 20. Cedarbaum JM, Jaros M, Hernandez C, Coley N, Andrieu S, Grundman M, Vellas B & Alzheimer's Disease Neuroimaging Initiative. (2013). Rationale for use of the Clinical Dementia Rating Sum of Boxes as a primary outcome measure for Alzheimer’s disease clinical trials. Alzheimer's & Dementia, 9(1), S45–S55.

- 21. Chapman RM, Mapstone M, McCrary JW, Gardner MN, Porsteinsson A, Sandoval TC, Guillily MD, DeGrush E & Reilly LA (2011). Predicting conversion from mild cognitive impairment to Alzheimer's disease using neuropsychological tests and multivariate methods. Journal of Clinical and Experimental Neuropsychology, 33(2), 187–199.

- 22. Chary E, Amieva H, Pérès K, Orgogozo JM, Dartigues JF, & Jacqmin-Gadda H (2013). Short-versus long-term prediction of dementia among subjects with low and high educational levels. Alzheimer's & Dementia, 9(5), 562–571.

- 23. Chawla NV, Bowyer KW, Hall LO, & Kegelmeyer WP (2002). SMOTE: synthetic minority over-sampling technique. Journal of artificial intelligence research, 16, 321–357.

- 24. Che Z, Purushotham S, Khemani R, & Liu Y (2016). Interpretable deep models for ICU outcome prediction. In AMIA Annual Symposium Proceedings (Vol. 2016, p. 371). American Medical Informatics Association.

- 25. Chen K, Langbaum JB, Fleisher AS, Ayutyanont N, Reschke C, Lee W, Liu X, Bandy D, Alexander GE, Thompson PM & Foster NL (2010). Twelve-month metabolic declines in probable Alzheimer's disease and amnestic mild cognitive impairment assessed using an empirically pre-defined statistical region-of-interest: findings from the Alzheimer's Disease Neuroimaging Initiative. Neuroimage, 51(2), 654–664.

- 26. Cortes C, & Vapnik V (1995). Support-vector networks. Machine learning, 20(3), 273–297.

- 27. Cortizo JC, & Giraldez I (2006). Multi criteria wrapper improvements to naive bayes learning. In International Conference on Intelligent Data Engineering and Automated Learning (pp. 419–427). Springer, Berlin.

- 28. Cui Y, Liu B, Luo S, Zhen X, Fan M, Liu T, Zhu W, Park M, Jiang T, Jin JS & Alzheimer's Disease Neuroimaging Initiative. (2011). Identification of conversion from mild cognitive impairment to Alzheimer's disease using multivariate predictors. PloS one, 6(7), e21896.

- 29. Dagliati A, Tibollo V, Sacchi L, Malovini A, Limongelli I, Gabetta M, Napolitano C, Mazzanti A, De Cata P, Chiovato L & Priori S (2018). Big Data as a driver for Clinical Decision Support Systems: a Learning Health Systems perspective. Frontiers in Digital Humanities, 5, 8.

- 30. de Souza LC, Sarazin M, Goetz C, & Dubois B (2009). Clinical investigations in primary care. Dementia in Clinical Practice, 24, 1–11.

- 31. Devanand DP, Liu X, Tabert MH, Pradhaban G, Cuasay K, Bell K, de Leon MJ, Doty RL, Stern Y & Pelton GH (2008). Combining early markers strongly predicts conversion from mild cognitive impairment to Alzheimer's disease. Biological psychiatry, 64(10), 871–879.

- 32. Derby CA, Burns LC, Wang C, Katz MJ, Zimmerman ME, L’italien G, Guo Z, Berman RM & Lipton RB (2013). Screening for predementia AD: time-dependent operating characteristics of episodic memory tests. Neurology, 80(14), 1307–1314.

- 33. Ding X, Bucholc M, Wang H, Glass DH, Wang H, Clarke DH, Bjourson AJ, Le Roy CD, O’Kane M, Prasad G, Maguire L & Wong-Lin K (2018). A hybrid computational approach for efficient Alzheimer’s disease classification based on heterogeneous data. Scientific reports, 8(1), 9774.

- 34. Dodd E, Cheston R, & Ivanecka A (2015). The assessment of dementia in primary care. Journal of psychiatric and mental health nursing, 22(9), 731–737.

- 35. Du Y, Fu Z, & Calhoun VD (2018). Classification and prediction of brain disorders using functional connectivity: Promising but challenging. Frontiers in neuroscience, 12.

- 36. Duchesne S, Caroli A, Geroldi C, Collins DL, & Frisoni GB (2009). Relating one-year cognitive change in mild cognitive impairment to baseline MRI features. Neuroimage, 47(4), 1363–1370.

- 37. Duchesne S, Caroli A, Geroldi C, Frisoni GB, & Collins DL (2005, October). Predicting clinical variable from MRI features: application to MMSE in MCI. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 392–399). Springer, Berlin, Heidelberg.

- 38. Dyrba M, Grothe M, Kirste T, & Teipel SJ (2015). Multimodal analysis of functional and structural disconnection in Alzheimer's disease using multiple kernel SVM. Human brain mapping, 36(6), 2118–2131.

- 39. Elisseeff A, & Pontil M (2003). Leave-one-out error and stability of learning algorithms with applications. NATO science series sub series iii computer and systems sciences, 190, 111–130.

- 40. Ewers M, Walsh C, Trojanowski JQ, Shaw LM, Petersen RC, Jack CR Jr, Feldman HH, Bokde AL, Alexander GE, Scheltens P & Vellas B (2012). Prediction of conversion from mild cognitive impairment to Alzheimer's disease dementia based upon biomarkers and neuropsychological test performance. Neurobiology of aging, 33(7), 1203–1214.

- 41. Farran B, Channanath AM, Behbehani K, & Thanaraj TA (2013). Predictive models to assess risk of type 2 diabetes, hypertension and comorbidity: machine-learning algorithms and validation using national health data from Kuwait—a cohort study. BMJ open, 3(5), e002457.

- 42. Finch H, & Schneider MK (2007). Classification accuracy of neural networks vs. discriminant analysis, logistic regression, and classification and regression trees. Methodology, 3(2), 47–57.

- 43. Folstein MF, Robins LN, & Helzer JE (1983). The mini-mental state examination. Archives of general psychiatry, 40(7), 812.

- 44. Forlenza OV, Radanovic M, Talib LL, Aprahamian I, Diniz BS, Zetterberg H, & Gattaz WF (2015). Cerebrospinal fluid biomarkers in Alzheimer's disease: Diagnostic accuracy and prediction of dementia. Alzheimer's & Dementia: Diagnosis, Assessment & Disease Monitoring, 1(4), 455–463.