Abstract

Purpose:

This study was designed to determine the extent to which high-frequency amplification helped or hindered speech recognition as a function of hearing loss, gain-frequency response, and background noise.

Method:

Speech recognition was measured monaurally under headphones for nonsense syllables low-pass filtered in one-third-octave steps between 2.2 and 5.6 kHz. Adults with normal hearing and with high-frequency thresholds ranging from 40 to 80 dB HL listened to speech in quiet processed with an identical “nonindividualized” gain-frequency response. Hearing-impaired participants also listened to speech in quiet and noise processed with gain-frequency responses individually prescribed according to the National Acoustic Laboratories-Revised (NAL-R) formula.

Results:

Mean speech recognition generally increased significantly with additional high-frequency speech bands. The one exception was that hearing-impaired participants’ recognition of speech processed by the nonindividualized response did not improve significantly with the addition of the highest frequency band. Significantly larger increases in scores with increasing bandwidth were observed for speech in noise than quiet.

Conclusions:

Given that decreases in scores with additional high-frequency speech bands for individual participants were relatively small and few and did not increase with quiet thresholds, no evidence of a degree of hearing loss was found above which it was counterproductive to provide amplification.

Keywords: amplification, hearing loss, speech perception

A widely debated issue concerning auditory function and its relation to hearing aids is the benefit and optimal degree of high-frequency amplification for individuals with high-frequency hearing loss. In recent years, improvements in technology have resulted in hearing aids with more effective feedback management, more precise spectral shaping, wider band frequency responses, and high-frequency directional benefit. These advances make it possible to provide audible higher frequency speech information to individuals with substantial high-frequency hearing loss and may be especially relevant given the growing popularity of “open-fit” strategies.

Some studies have reported that speech recognition remained constant or deteriorated as amplification was added in the higher frequencies (Amos & Humes, 2007; Baer, Moore, & Kluk, 2002; Ching, Dillon, & Byrne, 1998; Hogan & Turner, 1998; Turner & Cummings, 1999; Vickers, Moore, & Baer, 2001). These results led some to suggest that benefit of higher frequency speech audibility is related to the magnitude of high-frequency hearing loss and/or the presence of cochlear “dead regions.” Hogan and Turner concluded that caution is warranted when providing high-frequency amplification when hearing loss at 4.0 kHz and above exceeds 55 dB HL. Furthermore, Ching et al. concluded that amplifying speech above 0 dB sensation level was not beneficial for hearing losses at 4.0 kHz of 80 dB HL or greater.

Several explanations for limited benefit of high-frequency amplification have been proposed. First, high signal levels may contribute to poorer speech recognition—that is, for individuals with high-frequency hearing loss, high-frequency speech must be amplified to high levels to make it audible, which could create unwanted distortion or masking. For example, excessive downward spread of masking could reduce audibility for lower frequency speech information, thus negating any benefit of amplification (Rankovic, 1998). Also, with signal-to-noise ratio held constant, speech recognition in noise for normal-hearing and hearing-impaired individuals has been shown to decrease at signal levels just exceeding conversational levels (Studebaker, Sherbecoe, McDaniel, & Gwaltney, 1999), effects that are generally well predicted by masked thresholds (Dubno, Horwitz, & Ahlstrom, 2005a, 2005b, 2006).

Second, the consequences of high-frequency hearing loss may not be limited to a loss of high-frequency speech audibility, given the importance of the base of the cochlea to the encoding of lower frequency information (e.g., Horwitz, Dubno, & Ahlstrom, 2002; Joris, Smith, & Yin, 1994; Kiang & Moxon, 1974). Accordingly, providing amplification for high-frequency speech would not necessarily restore the contribution of the base of the cochlea for lower frequency cues.

Third, a source of reduced benefit of higher frequency amplification may relate to physiological changes in the cochlea that accompany high-frequency hearing loss. Specifically, it has been suggested that thresholds greater than about 60 dB HL reflect not only loss of outer hair cells but also some loss of inner hair cells and diminished afferent input (Liberman & Dodds, 1984). Under these circumstances, providing amplified speech to the damaged system may be, at best, ineffective and, at worst, deleterious to speech understanding for some individuals. Consistent with this hypothesis is the notion that providing amplified speech within such dead regions would likely not result in improved speech recognition (Moore, Huss, Vickers, Glasberg, & Alcantara, 2000). For a dead region, it may be necessary for spectral information corresponding to that region (and temporal information normally carried by those fibers) to be encoded by nerve fibers that typically respond to other frequencies. Given that these fibers may already be responding to other speech information and may be best able to encode information only within a region near their characteristic frequencies, processing of speech information within a dead region may be of limited benefit (Baer et al., 2002; Vickers et al., 2001). Such an explanation is consistent with reduced benefit of amplification over a frequency range where there is moderate-to-severe hearing loss, although differences in audibility between individuals with and without dead regions may also account for some differences in benefit of aided high-frequency speech (Mackersie, Crocker, & Davis, 2004; Rankovic, 2002).

Regardless of the explanation, individuals with high-frequency hearing loss must listen to amplified speech at high levels to improve speech audibility. Thus, it is important to determine if significant benefit to speech recognition is derived from amplifying higher frequency speech. Amplification that does not provide added benefit to speech recognition or that has negative consequences, such as increased feedback or distortion, or downward spread of masking, could result in reduced hearing-aid use. It is also of interest to better understand the factors underlying reduced benefit of high-frequency amplification. If normal hearing (NH) and hearing-impaired (HI) listeners have similar decreases in recognition with increases in bandwidth for high-level speech, then the cause would more likely be related to high-level effects (e.g., spread of masking) and less likely to consequences of hearing impairment, per se (e.g., inner hair-cell loss, dead regions). A more complete understanding could help direct further research, including signal processing to minimize these factors.

In contrast to declines in performance with high-frequency amplification, findings of other studies have revealed improved speech recognition with increased speech audibility in the higher frequencies (e.g., Hornsby & Ricketts, 2003, 2006; Pascoe, 1975; Plyler & Fleck, 2006; Skinner, 1980; Sullivan, Allsman, Nielsen, & Mobley, 1992; Simpson, McDermott, & Dowell, 2005; Turner & Henry, 2002). Contradictory outcomes regarding the benefit of high-frequency amplification may relate to (a) the degree of high-frequency hearing loss; (b) whether conclusions were drawn from individual or mean results; (c) differences across studies in gain-frequency responses, which varied from gain with no frequency shaping to “one-size-fits-all” approaches to individualized, but at times unrealistic, gain-frequency responses; (d) the extent to which increases in cutoff frequency or level led to increases in audible high-frequency speech, which was not clear in some studies; and (e) whether lower frequency speech cues were largely available (as when listening in quiet) or largely unavailable (as when listening in noise).

Some previous investigations of the benefits of high-frequency amplification compared speech recognition for NH and HI listeners at similar sensation levels (Ching et al., 1998; Hogan & Turner, 1998). Consequently, absolute levels differed considerably between subject groups. Therefore, the extent to which the detrimental effects of high-frequency amplification were due to effects of high speech levels or consequences of hearing impairment remained unclear. Thus, experiments designed to assess the benefit of high-frequency amplification using similar high signal levels for all participants were warranted.

To address these issues, three experiments were conducted that measured recognition of nonsense syllables as a function of low-pass-filter cutoff frequency; signals were spectrally shaped to provide high-frequency amplification according to two gain-frequency response strategies. Recognition of spectrally shaped nonsense syllables was measured monaurally under headphones for participants with normal hearing and for participants with impaired high-frequency hearing. HI listeners were primarily those with high-frequency hearing loss between 55 and 80 dB HL because of the large number of potential hearing-aid users with thresholds in that range and because some questions related to benefit of high-frequency amplification for these listeners have not been resolved. Incrementally increasing the low-pass-filter cutoff frequency in one-third-octave steps between 2.2 and 5.6 kHz revealed the extent to which increases in amplified high-frequency speech cues helped or hindered listeners’ speech recognition as a function of hearing loss, gain-frequency response strategy, and presence or absence of background noise. Further, comparisons between observed scores and scores predicted from articulation-index-based calculations of speech audibility revealed the extent to which changes in speech recognition may be expected due to changes in speech audibility.

More specifically, in Experiment 1, consonant recognition was measured for individuals with normal hearing (in noise) and impaired high-frequency hearing (in quiet) listening to high-level speech processed with an identical (nonindividualized) gain-frequency response to address the following question: Are changes in speech recognition with additional high-frequency speech bands similar for NH and HI participants listening to speech at the same high level?

In Experiment 2, consonant recognition was measured for individuals with impaired high-frequency hearing listening in quiet to speech processed with individualized gain-frequency responses to address the following question: Are changes in recognition with additional high-frequency speech bands similar for speech processed with nonindividualized (from Experiment 1) and individualized gain-frequency responses?

In Experiment 3, consonant recognition was measured for individuals with impaired high-frequency hearing listening to speech and noise processed with individualized gain-frequency responses to address the following question: Are changes in recognition with additional high-frequency speech bands similar for speech in noise and in quiet (from Experiment 2)?

Finally, each of the three experiments was designed to address the additional question of whether there is a frequency and degree of hearing loss above which it is counterproductive to provide high-frequency amplification.

Method

Participants

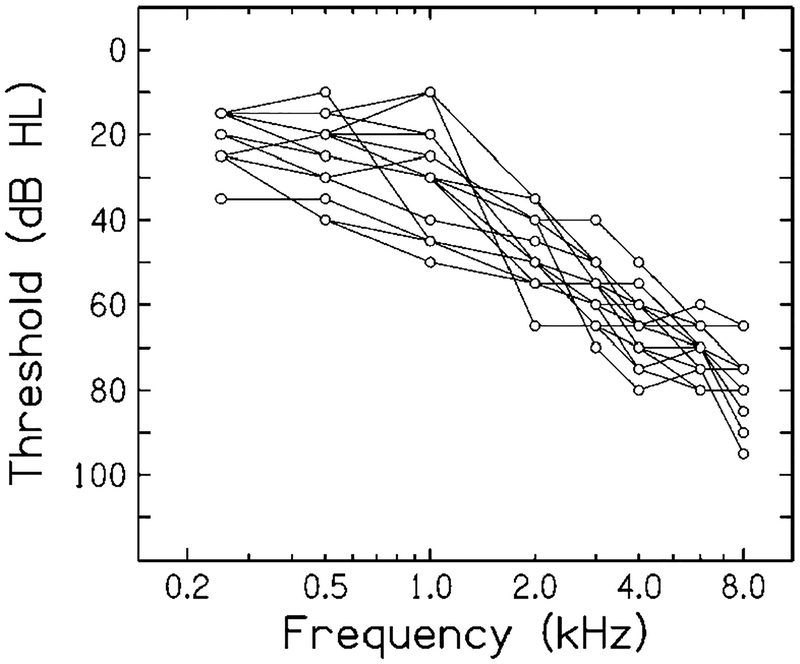

There were two groups of participants: (a) 18 younger adults with normal hearing (M = 21.3 years; range = 18–29 years); and (b) 16 older adults with sloping high-frequency sensorineural hearing loss (M = 76.4 years; range = 69–82 years). Younger participants with normal hearing had thresholds that were ≤20 dB HL (American National Standards Institute [ANSI], 1996) at octave frequencies from 0.25 to 8.0 kHz and immittance measures within normal limits. HI participants had adult-onset cochlear hearing loss (specific etiology unknown) and normal immittance measures. HI participants were selected so that the majority of pure-tone thresholds above 2.0 kHz ranged from 55 to 80 dB HL. The 80 dB HL limit was chosen, in part, so that speech audibility would be predicted to increase with the addition of high-frequency speech bands for Experiment 1 (see Procedures section for more details). Individual pure-tone thresholds (in dB HL) for HI listeners are shown in Figure 1. The number of participants with thresholds between 55 and 80 dB HL at 3, 4, and 6 kHz is 12, 15, and 16, respectively. These thresholds were obtained using clinical equipment and procedures (as explained in the Procedures section) and were used to determine individualized gain-frequency responses, consistent with the clinical practice of prescribing hearing-aid gain based on the audiogram. In addition, for all participants, pure-tone thresholds were obtained at one-third-octave frequencies using different equipment and procedures to provide more detailed data for use in audibility estimates (also explained more fully in the Procedures section). Participants received approximately 2 hr of practice with the various tasks. Data collection was completed in three (NH) or six (HI) 2-hr sessions. Participants were paid an hourly wage.

Figure 1.

Individual audiograms (dB HL) for hearing-impaired (HI) participants. Although there were 16 participants, fewer than 16 line segments are visible between adjacent frequencies because several participants had the same thresholds.

Apparatus and Stimuli

Tonal and Speech Signals

For thresholds in dB HL shown in Figure 1, pure tones were presented at audiometric frequencies using a Madsen OB922 audiometer equipped with TDH-39 headphones mounted in supra-aural cushions. For all other thresholds, signals were digitally generated (TDT DD1), 350-ms pure tones (including 10-ms rise/fall ramps) sampled at 50.0 kHz and low-pass filtered (TDT FT6) at 12.0 kHz and then delivered through one of a pair of TDH-49 headphones mounted in supra-aural cushions. Pure-tone levels were expressed as the output in dB SPL of the headphone as developed in an NBS-9/A coupler. Signal frequencies ranged from 0.2 to 6.3 kHz in 16 one-third-octave intervals.

Speech signals were nonsense syllables formed by combining the consonants /b, t∫, d, f, g, k, l, m, n, ŋ, p, r, s, ∫, t, θ, v, w, j, z/ with the vowels /α, i, u/. More specifically, the 57 consonant-vowel (19 × 3) and 54 vowel-consonant (3 × 18) syllables were each spoken by one male talker and one female talker without a carrier phrase (for a total of 222 syllables). Descriptions of the speech stimuli are in Dubno and Schaefer (1992) and Dubno, Horwitz, and Ahlstrom (2003). To provide a reasonable listening interval, the 222-item set was divided into four approximately equivalent lists such that each list contained 55 or 56 items and included all 20 consonants, three vowels, both talkers, and both consonant placements.
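The stimulus counts above can be checked with a few lines of arithmetic. The sketch below uses only the counts given in the text; the variable names are illustrative, and the text does not say which consonants were omitted from each syllable position, so no consonant inventory is modeled.

```python
# Counts taken from the text; names are illustrative only.
N_VOWELS = 3
N_CV_CONSONANTS = 19   # consonants used in consonant-vowel syllables
N_VC_CONSONANTS = 18   # consonants used in vowel-consonant syllables
N_TALKERS = 2          # one male and one female talker
N_LISTS = 4

# 57 consonant-vowel plus 54 vowel-consonant syllables per talker.
syllables_per_talker = (N_CV_CONSONANTS + N_VC_CONSONANTS) * N_VOWELS
total_syllables = syllables_per_talker * N_TALKERS

# Split the full set as evenly as possible across the four lists.
base, extra = divmod(total_syllables, N_LISTS)
list_sizes = [base + 1 if i < extra else base for i in range(N_LISTS)]

print(syllables_per_talker, total_syllables, list_sizes)
```

An even split of 222 items into four lists necessarily gives two lists of 56 and two of 55, which matches the "55 or 56 items" stated above.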

Spectral Shaping of Speech

Nonindividualized gain-frequency response (Experiments 1 and 2).

For NH and HI participants, speech was spectrally shaped to provide a gain-frequency response similar to the high-frequency emphasis used by Hogan and Turner (1998). Although not based on a specific prescriptive target, these authors reported that the response was most similar to NAL-R (Byrne & Dillon, 1986). Because all participants listened to speech with identical spectral shaping (i.e., amplification), this spectral shaping was called the nonindividualized gain-frequency response. Gain values at one-third-octave intervals from 0.2 to 6.3 kHz were estimated from Figure 1 of Hogan and Turner. Gain was added at 4.0, 5.0, and 6.3 kHz to provide some audible speech to listeners with thresholds up to 80 dB HL. (Further increases in gain would have allowed the inclusion of HI participants with more high-frequency hearing loss; however, none were included to avoid loudness discomfort for all participants.) These gain values were entered into a MATLAB (Version 5.3) program that generated coefficients for an FIR digital filter; nonsense syllables were then spectrally shaped by custom software using the MATLAB-generated coefficients. The syllables were output through a 16-bit digital-to-analog converter (TDT DA3–4) and low-pass filtered (TDT FT6) at 12.0 kHz prior to attenuation and mixing with other signals.

Individualized gain-frequency response (Experiments 2 and 3).

For each HI participant, nonsense syllables were spectrally shaped (amplified) using a gain-frequency response that was fit individually. Gain values at one-third-octave intervals were computed based on the pure-tone thresholds shown in Figure 1 and the NAL-R hearing-aid gain rule. Corrections were added to the insertion gain values to calculate the desired gain for TDH-49 supra-aural headphones calibrated in a 6-cc coupler (Dillon, 1997). For the speech input level used in this study (see Signal levels section), the resulting gain-frequency responses are very similar to those using the newer National Acoustic Laboratories-Non-Linear Version 1 (NAL-NL1) prescriptive fitting procedure for nonlinear hearing aids (Byrne, Dillon, Ching, Katsch, & Keidser, 2001). Apparatus for spectral shaping and speech output were the same as for the nonindividualized gain-frequency response.

Spectral Shaping of Noise

Threshold-matching noise (Experiment 1).

NH participants listened to nonsense syllables in the presence of a steady-state, broadband threshold-matching noise (TMN). The noise was digitally generated and its spectrum adjusted at one-third-octave intervals (Cool Edit Pro, Version 1.2, Syntrillium Software Corp.) to produce masked thresholds similar to quiet thresholds of listeners with sloping high-frequency hearing loss. The purpose of the TMN was to achieve (reduced) speech audibility for NH participants that was generally similar to speech audibility for HI participants, i.e., relatively good speech audibility in the low and middle frequencies and reduced, but measurable, speech audibility in the higher frequencies. The TMN was created and NH data were gathered prior to the recruitment of all HI participants. Thus, it was not possible to precisely match audibility for NH participants listening in TMN and HI participants listening in quiet. The TMN was output through a 16-bit digital-to-analog converter (TDT DA3–4), low-pass filtered at 12 kHz (TDT FT6), and recorded onto a CD for playback.

Speech-shaped noise (Experiment 3).

For HI participants, a broadband noise was spectrally shaped in one-third-octave intervals (Cool Edit Pro, Version 1.2, Syntrillium Software Corp.) to match the long-term spectrum of the (unshaped) nonsense syllables. This noise was then spectrally shaped (as discussed previously) for each participant’s individualized NAL-R gain-frequency response. This individualized speech-shaped noise was output through a 16-bit digital-to-analog converter (TDT DA3–4) and recorded onto a CD for playback.

Low-pass filtering.

In all three experiments, speech was low-pass filtered (two cascaded Stanford Research Dual Channel Filters Model 650) at 2.2, 2.8, 3.6, 4.5, and 5.6 kHz (3-dB down points). Low-pass-filtered speech was presented in quiet (HI in Experiments 1 and 2), with low-pass-filtered speech-shaped noise at a +5-dB signal-to-noise ratio (HI in Experiment 3), or with low-pass-filtered TMN (NH in Experiment 1).
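One way to read the five cutoff frequencies is as the upper edges of successive one-third-octave bands, so that each step in cutoff admits one more band. The sketch below checks that reading. It assumes exact base-2 band centers referenced to 1 kHz (nominal labels 2.0, 2.5, 3.15, 4.0, and 5.0 kHz) and an upper edge one-sixth octave above each center. These are illustrative assumptions, not details reported in the text.

```python
# Reported 3-dB-down cutoff frequencies (kHz), from the text.
REPORTED_CUTOFFS_KHZ = [2.2, 2.8, 3.6, 4.5, 5.6]

# Assumption: band n has an exact center of 2**(n/3) kHz (n = 0 is 1 kHz).
BAND_INDICES = [3, 4, 5, 6, 7]   # nominal 2.0, 2.5, 3.15, 4.0, 5.0 kHz


def center_khz(n):
    """Exact base-2 one-third-octave center frequency in kHz."""
    return 2.0 ** (n / 3.0)


def upper_edge_khz(n):
    """Upper band edge, one-sixth octave above the center."""
    return center_khz(n) * 2.0 ** (1.0 / 6.0)


upper_edges_khz = [upper_edge_khz(n) for n in BAND_INDICES]

for n, edge, cutoff in zip(BAND_INDICES, upper_edges_khz, REPORTED_CUTOFFS_KHZ):
    print(f"center {center_khz(n):.2f} kHz -> upper edge {edge:.2f} kHz "
          f"(reported cutoff {cutoff} kHz)")
```

Under these assumptions each computed edge falls within 0.1 kHz of the reported cutoff, so raising the cutoff from 4.5 to 5.6 kHz corresponds to adding the band centered near 5.0 kHz.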

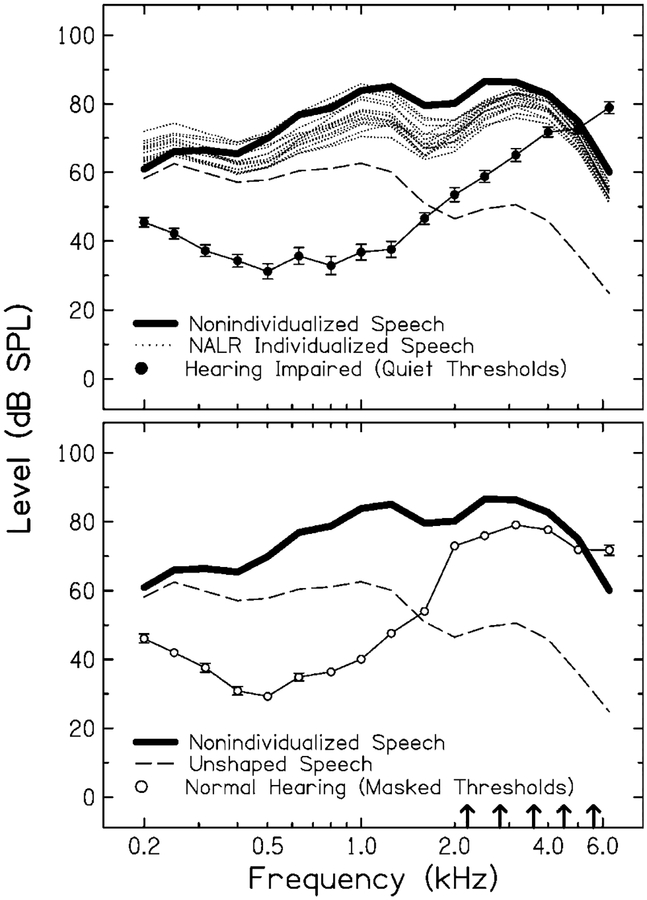

Signal levels.

The speech level was 70 dB SPL (i.e., the level of the unshaped speech) plus the gain provided by the nonindividualized or individualized gain-frequency response. The resulting overall rms levels in the broadband conditions were 93 dB SPL for the nonindividualized gain-frequency response and ranged from 82.1 to 91.5 dB SPL for the individualized gain-frequency responses (i.e., depending on the gain prescribed by NAL-R). Figure 2 shows the one-third-octave band spectrum of broadband speech shaped by the nonindividualized gain-frequency response (thick solid line in each panel). The dotted lines in the top panel represent the one-third-octave band spectra for the broadband NAL-R gain-frequency responses for each of the 16 HI participants. For reference, each panel also includes the broadband unshaped speech spectrum (dashed line).
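The relation between per-band levels and an overall rms level can be illustrated by summing band powers. The sketch below adds a gain to each one-third-octave band and combines the bands on a power basis. The band levels and gains are hypothetical placeholders, not the spectra in Figure 2, and the function names are illustrative.

```python
import math


def shaped_band_levels(unshaped_db, gains_db):
    """Add a per-band gain (dB) to each unshaped band level (dB SPL)."""
    return [level + gain for level, gain in zip(unshaped_db, gains_db)]


def overall_level_db(band_levels_db):
    """Combine band levels (dB SPL) into one overall level by summing power."""
    power_sum = sum(10.0 ** (level / 10.0) for level in band_levels_db)
    return 10.0 * math.log10(power_sum)


# Hypothetical values for illustration only (not data from this study).
unshaped = [60.0, 58.0, 55.0, 50.0]
gains = [0.0, 5.0, 15.0, 25.0]

shaped = shaped_band_levels(unshaped, gains)
print(round(overall_level_db(unshaped), 1), round(overall_level_db(shaped), 1))
```

Because the bands add in power rather than in decibels, the overall level is dominated by the strongest bands, which is why heavy high-frequency gain can raise the overall level well above the 70 dB SPL input.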

Figure 2.

One-third-octave band spectrum of speech (dashed line in each panel) and the same spectrum shaped by the nonindividualized gain-frequency response (thick solid line in each panel). Top panel: Mean (±1 SE) quiet thresholds for HI participants (filled circles). The thin dotted lines represent the one-third-octave band spectra for the individualized (NAL-R) gain-frequency responses for 16 HI participants. Bottom panel: Mean (±1 SE) thresholds in threshold-matching noise for NH participants (open circles). The arrows on the abscissa at 2.2, 2.8, 3.6, 4.5, and 5.6 kHz indicate the 3-dB down frequencies for the five low-pass filters.

The overall levels of the speech and noise were controlled individually using programmable and manual attenuators (TDT PA4). Following attenuation, the speech and noise were mixed (TDT SM3), low-pass filtered, and then delivered through one of a pair of TDH-49 headphones mounted in supra-aural cushions. Spectral characteristics of all signals were verified on an acoustic coupler and a signal analyzer (Stanford Research SR780).

Procedures

Thresholds for Pure Tones

Thresholds for audiometric frequencies (Figure 1) were measured using a standard clinical procedure (American Speech-Language-Hearing Association, 2005). Thresholds for one-third-octave frequencies were measured using a single-interval (yes-no) maximum-likelihood psychophysical procedure (Green, 1993; Leek, Dubno, He, & Ahlstrom, 2000). The slope factor (k) was 0.5 according to Green (1993). Each threshold was determined from 24 trials, including 4 catch trials. Signal level was varied adaptively with a minimum step size of 0.5 dB. Threshold was defined as the “sweet point” (Green, 1993), which was calculated based on the estimated m (the midpoint of the psychometric function) and α (the false alarm rate) after 24 trials. “Listen” and “vote” periods were displayed on a computer monitor. Participants responded by clicking one of two mouse buttons corresponding to the responses “yes, I heard the tone” and “no, I did not hear the tone.”
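The logic of a yes-no maximum-likelihood threshold estimate can be sketched as follows. The code assumes a logistic psychometric function with the slope factor k = 0.5 given above, a grid of candidate midpoints m and false-alarm rates α, and a closed-form sweet-point probability commonly attributed to Green (1993). The candidate grids, the sweet-point expression, and the trial data are illustrative assumptions to be checked against that paper; this is not the software used in the study.

```python
import math

K = 0.5  # slope factor reported in the text


def p_yes(level_db, midpoint_db, alpha, k=K):
    """Probability of a 'yes' response under a logistic psychometric function."""
    return alpha + (1.0 - alpha) / (1.0 + math.exp(-k * (level_db - midpoint_db)))


def sweet_point_probability(alpha):
    """Assumed sweet-point 'yes' probability for a given false-alarm rate."""
    root = math.sqrt(1.0 + 8.0 * alpha)
    return (2.0 * alpha + 1.0 + root) / (3.0 + root)


def sweet_point_level(midpoint_db, alpha, k=K):
    """Signal level at which p_yes equals the sweet-point probability."""
    p = sweet_point_probability(alpha)
    q = (p - alpha) / (1.0 - alpha)  # remove the false-alarm floor
    return midpoint_db + math.log(q / (1.0 - q)) / k


def log_likelihood(trials, midpoint_db, alpha, k=K):
    """Log-likelihood of (level, said_yes) trials; level None marks a catch trial."""
    total = 0.0
    for level_db, said_yes in trials:
        p = alpha if level_db is None else p_yes(level_db, midpoint_db, alpha, k)
        p = min(max(p, 1e-9), 1.0 - 1e-9)  # guard against log(0)
        total += math.log(p if said_yes else 1.0 - p)
    return total


def estimate_threshold(trials, midpoints_db, alphas, k=K):
    """Pick the most likely (m, alpha) pair and return its sweet-point level."""
    candidates = [(m, a) for m in midpoints_db for a in alphas]
    best_m, best_a = max(candidates, key=lambda c: log_likelihood(trials, c[0], c[1], k))
    return sweet_point_level(best_m, best_a, k), best_m, best_a


# Hypothetical responses: 'yes' above about 50 dB SPL, 'no' below, one catch trial.
example_trials = [(60, True), (55, True), (52, True),
                  (48, False), (45, False), (40, False), (None, False)]
threshold_db, m_hat, alpha_hat = estimate_threshold(
    example_trials, midpoints_db=range(30, 71), alphas=(0.0, 0.1, 0.2, 0.3, 0.4))
print(threshold_db, m_hat, alpha_hat)
```

In an adaptive run, the same estimate would be recomputed after every trial and the next signal placed at the current sweet-point level. The sketch shows only the final estimate after all trials.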

Thresholds for one-third-octave frequencies were measured in quiet (all participants), in the speech-shaped noise (HI participants), and in the TMN (NH participants). Figure 2 presents mean (±1 SE) quiet thresholds in dB SPL for HI participants (top panel, filled circles) and mean (±1 SE) masked thresholds for NH participants listening in the TMN (bottom panel, open circles). Thresholds were higher for NH than HI listeners, especially at higher frequencies. Comparing the two groups, thresholds differed by less than 4 dB through 1.0 kHz and by 10 dB at 1.25 kHz; the largest difference was 20 dB at 2.0 kHz. Given these threshold differences, audibility of high-frequency speech shaped by the nonindividualized gain-frequency response (solid lines) was less for NH listeners than for HI listeners.

Speech Recognition

For this closed-set nonsense syllable test, the set of possible consonants was presented on a computer monitor, and participants responded by pointing with a mouse. During data collection, the order of low-pass-filter cutoff frequency was determined as follows: For each participant and each condition (i.e., nonindividualized gain-frequency response, individualized gain-frequency response in quiet, and individualized gain-frequency response in noise), the order of the five filter cutoff frequencies was randomized (e.g., 2.8, 5.6, 3.6, 4.5, 2.2 kHz). Two of the four lists were presented in that order, and then the remaining two lists were presented in the reverse order (i.e., 2.2, 4.5, 3.6, 5.6, 2.8 kHz). The order of the filter conditions was balanced in this way to help compensate for possible learning effects during testing. For HI participants, order of gain-frequency response in quiet (nonindividualized vs. individualized) was counterbalanced. The individualized gain-frequency response in noise was always the final condition tested. Consonant-recognition scores for each condition were the percentage of correct responses to 222 syllables. Prior to data collection, participants practiced listening and responding to the syllables in each of the conditions (i.e., in quiet for the nonindividualized and NAL-R gain-frequency responses and in noise for the NAL-R responses). The narrowest and widest of the five low-pass-filter cutoffs were included during this practice period. The experimenter initially reinforced participants’ responses verbally. Following the initial familiarization period, no feedback was provided.
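The balanced ordering of filter conditions described above can be sketched as follows. The function names and the seeded random generator are illustrative; the text does not specify how the randomization was implemented or how the four lists were assigned to the two halves.

```python
import random

CUTOFFS_KHZ = [2.2, 2.8, 3.6, 4.5, 5.6]


def random_cutoff_order(rng):
    """Return the five cutoff frequencies in a random order."""
    return rng.sample(CUTOFFS_KHZ, k=len(CUTOFFS_KHZ))


def balanced_orders(first_order):
    """Two lists use first_order; the remaining two use the reverse order."""
    return list(first_order), list(reversed(first_order))


# Example from the text: one randomized order and its reverse.
forward, backward = balanced_orders([2.8, 5.6, 3.6, 4.5, 2.2])
print(forward, backward)

# A fresh randomized order for one participant and condition (seed is arbitrary).
rng = random.Random(1)
print(balanced_orders(random_cutoff_order(rng)))
```

Reversing the order for the second pair of lists places each cutoff at mirrored positions in the session, which is what offsets a steady learning trend across the run.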

Predicted consonant-recognition scores were computed from articulation-index (AI) values using procedures similar to ANSI (1969) and ANSI (1997). In particular, the frequency-importance function and AI-recognition transfer function developed for these materials were used along with the measured speech peaks (range: 8–13 dB above the long-term rms level at each one-third-octave band; Dirks, Dubno, Ahlstrom, & Schaefer, 1990). Quiet thresholds were used to quantify audibility for HI participants listening to speech in quiet (Experiments 1 and 2); masked thresholds were used to quantify audibility for NH participants (measured with the TMN in Experiment 1) and for HI participants (measured with the individualized speech-shaped noise in Experiment 3). For each one-third-octave band, pure-tone thresholds (dB SPL) were converted to equivalent internal noise levels by adding 10 times the logarithm of the bandwidth and subtracting the corresponding normal critical ratio. No additional corrections (e.g., elevated speech levels, elevated hearing threshold levels, or spread of masking) were included. Speech recognition that was poorer than predicted suggests that “effective” speech audibility was less than calculated by the AI; that is, compared with calculations, less speech was heard or usable by the listener, perhaps due to masking spread, distortion, or other reasons. Using rau-transformed observed and AI-predicted scores (Studebaker, 1985), effects of gain-frequency response, low-pass-filter cutoff frequency, noise background, and participant group were assessed by repeated measures analyses of variance. Post hoc tests were conducted when the main effects or interactions were significant. For all analyses, effects were significant with p < .05.
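The threshold-to-noise conversion and the band-by-band audibility sum can be sketched as follows. The conversion follows the description above. The 30-dB dynamic range, the band importance weights, and all numeric inputs are illustrative assumptions in the spirit of the ANSI procedures; they are not the frequency-importance function or transfer function developed for these materials.

```python
import math


def equivalent_internal_noise_db(threshold_db_spl, bandwidth_hz, critical_ratio_db):
    """Band-level internal noise: threshold + 10*log10(bandwidth) - critical ratio."""
    return threshold_db_spl + 10.0 * math.log10(bandwidth_hz) - critical_ratio_db


def band_audibility(speech_rms_db, peak_offset_db, noise_db, dynamic_range_db=30.0):
    """Proportion of the assumed speech dynamic range lying above the noise (0 to 1)."""
    peaks_db = speech_rms_db + peak_offset_db
    return min(max((peaks_db - noise_db) / dynamic_range_db, 0.0), 1.0)


def articulation_index(bands):
    """Importance-weighted sum of band audibility; importances should sum to 1."""
    return sum(b["importance"] * band_audibility(b["speech_rms_db"],
                                                 b["peak_offset_db"],
                                                 b["noise_db"])
               for b in bands)


# Hypothetical two-band example (values are placeholders, not study data).
noise_low = equivalent_internal_noise_db(30.0, 1000.0, 20.0)   # 40 dB band level
noise_high = equivalent_internal_noise_db(60.0, 1000.0, 20.0)  # 70 dB band level
example_bands = [
    {"importance": 0.5, "speech_rms_db": 70.0, "peak_offset_db": 10.0, "noise_db": noise_low},
    {"importance": 0.5, "speech_rms_db": 75.0, "peak_offset_db": 10.0, "noise_db": noise_high},
]
print(articulation_index(example_bands))
```

In this placeholder example the low band is fully audible and the high band half audible. A predicted score would then be read from a transfer function relating AI to recognition, which is not modeled here.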

Results

Experiment 1: Consonant Recognition for NH and HI Participants Using a Nonindividualized Gain-Frequency Response

Mean Consonant Recognition

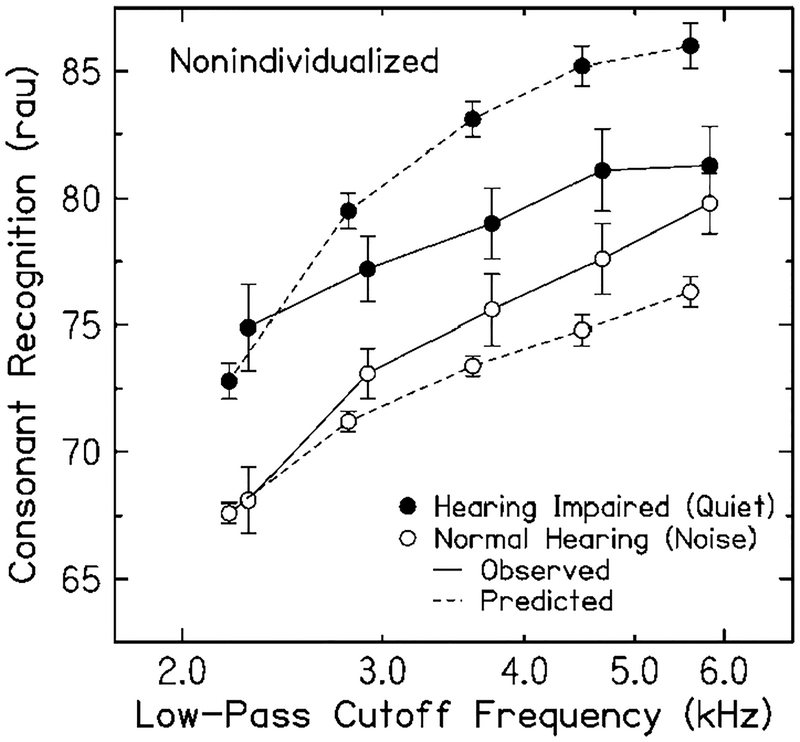

Mean consonant-recognition scores (in rau) are plotted in Figure 3 as a function of low-pass cutoff frequency for NH participants listening in TMN (open circles and solid lines) and for HI participants listening in quiet (filled circles and solid lines). Also shown are consonant-recognition scores predicted for NH and HI listeners (open circles and dashed lines, filled circles and dashed lines, respectively). Starting from the score for the lowest cutoff frequency (2.2 kHz) and the narrowest speech bandwidth, the change in each subsequent score is due to the presence of an additional one-third-octave band of high-level speech (see nonindividualized speech spectrum in Figure 2). For all listeners, scores generally increased as cutoff frequency and speech bandwidth increased (i.e., with additional high-frequency speech bands). However, for both NH and HI listeners, only relatively small increases in scores were observed with each additional band. This was expected for two reasons: (a) the addition of each amplified band of speech corresponded to a relatively narrow frequency range (i.e., one-third octave); and (b) despite the high overall speech level and high-frequency amplification, speech audibility was relatively low in each high-frequency band, due to the presence of background noise (for NH listeners) or elevated thresholds (for HI listeners).

Figure 3.

Mean (±1 SE) observed and predicted consonant-recognition scores (in rau) as a function of low-pass-filter cutoff frequency for speech shaped with the nonindividualized gain-frequency response. HI participants listened in quiet (filled), and NH participants listened in a threshold-matching noise (open). Observed scores are connected by solid lines and predicted scores are connected by dashed lines. For clarity, some data points are offset along the abscissa.
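Scores in this section are expressed in rationalized arcsine units (rau; Studebaker, 1985). As a reminder of what that scale does, the sketch below applies the rationalized arcsine transform, in the form generally given for it, to a count of correct responses out of 222 syllables. The function name and example counts are illustrative.

```python
import math


def rau(n_correct, n_items):
    """Rationalized arcsine transform of n_correct out of n_items."""
    theta = (math.asin(math.sqrt(n_correct / (n_items + 1.0)))
             + math.asin(math.sqrt((n_correct + 1.0) / (n_items + 1.0))))
    return (146.0 / math.pi) * theta - 23.0


# Illustrative counts out of the 222-syllable set.
for correct in (0, 111, 180, 222):
    print(correct, round(rau(correct, 222), 1))
```

The transform tracks percent correct closely in the middle of the range but stretches the extremes, so values can fall below 0 or above 100 near floor and ceiling. This stabilizes variance for the analyses of variance.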

Although the changes were small, observed and predicted scores for NH listeners increased significantly with each increase in bandwidth up to and including 5.6 kHz, the widest bandwidth; for example, with the addition of the highest frequency band, observed: F(1, 32) = 9.82, p = .004; predicted: F(1, 32) = 109.13, p < .0001. Scores for HI listeners were also predicted to increase significantly, up to and including 5.6 kHz; for example, with the addition of the highest frequency band, F(1, 32) = 25.203, p < .0001. However, observed increases in consonant recognition for HI listeners were significant only through 4.5 kHz. The predicted increase in mean scores for HI listeners with the addition of the 5.6-kHz band means that, on average, the high-level speech in that band was estimated to be partially audible for these listeners. Thus, the lack of significant improvement in observed scores for the HI listeners may be attributed to the limited ability of the impaired auditory system to make use of amplified speech in the band centered at 5.0 kHz.

Before concluding that HI listeners cannot benefit from the addition of the amplified speech band centered at 5.0 kHz, an additional explanation should be considered, related to the HI listeners’ relatively good speech recognition for this condition. Across cutoff frequency, mean observed and predicted scores were significantly better for HI than for NH listeners (observed: F(1, 32) = 4.50, p < .042; predicted: F(1, 32) = 112.86, p < .0001), which may be seen by comparing scores with filled symbols (HI) to scores with open symbols (NH) in Figure 3. These observed differences are consistent with the higher speech audibility estimated by the AI for HI than NH listeners, resulting in higher predicted scores. Recall that the TMN resulted in lower speech audibility for NH than HI listeners. One consequence of this audibility difference was that as cutoff frequency increased from 4.5 kHz to 5.6 kHz, the predicted increase in recognition, although small for all listeners, was larger for NH than for HI listeners. This result was expected based on the AI-recognition transfer function that relates predicted scores to speech audibility. Specifically, the lower AI values for NH listeners corresponded to a steeper range of the transfer function, so that the additional high-frequency band was predicted to result in a larger increase in score for NH than HI listeners. In other words, it may be more difficult to obtain significant improvement with additional high-frequency speech bands when scores are already high, as was the case for the HI participants in this condition.

Listeners’ abilities to benefit from amplified speech cues may be clarified by focusing on differences between observed and predicted scores (i.e., solid and dashed lines in Figure 3). At the narrowest bandwidth only, observed scores were higher than predicted for both groups of listeners, but this difference was significantly larger for the HI than NH listeners, F(1, 32) = 17.23, p < .001. In contrast, as high-frequency speech bands were added, scores were increasingly poorer than predicted for HI listeners, F(1, 32) = 36.60, p < .0001. This finding is consistent with previous reports of better-than-predicted recognition of low-pass-filtered speech for HI listeners (Ching et al., 1998; Hornsby & Ricketts, 2006; Vestergaard, 2003) and may relate to the configuration of hearing loss (i.e., best hearing sensitivity below 2.0 kHz). In contrast, across cutoff frequency, as high-frequency speech bands were added, scores were increasingly better than predicted for NH listeners, F(1, 32) = 8.37, p = .007 (i.e., listeners who are accustomed to receiving useful cues from across the full speech range). Taken together, these results suggest that, although amplification can increase speech audibility and improve speech recognition, listeners with hearing loss may not take advantage of audible speech cues to the same extent as listeners with normal hearing. Nevertheless, HI listeners may be more efficient at extracting information to which they have become accustomed and from the frequencies for which their hearing is best.

Consonant Recognition for Individual Participants

Given that mean changes in scores with increases in bandwidth were small, it was important to examine changes in scores for individual NH and HI participants to confirm that mean values appropriately represented the results for most participants in this experiment. Consonant-recognition scores for each low-pass cutoff frequency for individual participants are presented in Table 1; the highest score for each participant (regardless of cutoff frequency) is shown in boldface type. For 7 of 18 NH participants, the highest score was obtained at one of the narrower bandwidths (usually 4.5 kHz). However, differences between the highest score and the score at the widest bandwidth were small; the largest decrease from the highest score to the score at the widest bandwidth was 3.8 rau. Similarly, for 10 of 16 HI participants, the highest score was obtained at less than the widest bandwidth; again, the largest decrease was small (i.e., 4.3 rau).

Table 1.

Consonant-recognition scores (in rau) for individual HI and NH participants for low-pass-filtered speech at five cutoff frequencies.

| Participant | 2.2 kHz | 2.8 kHz | 3.6 kHz | 4.5 kHz | 5.6 kHz |
|---|---|---|---|---|---|
| Hearing impaired | | | | | |
| 1 | 82.6 | 86.6 | 83.7 | **90.3** | 87.8 |
| 2 | 77.9 | 79.4 | **81.0** | 77.4 | 80.0 |
| 3 | 66.2 | 72.1 | 74.0 | **75.4** | 73.0 |
| 4 | 78.9 | 80.5 | 79.4 | **84.3** | 80.0 |
| 5 | 71.6 | 72.5 | 77.4 | 77.9 | **80.5** |
| 6 | 81.5 | 79.4 | 86.0 | **91.6** | 88.4 |
| 7 | 83.2 | 83.2 | 84.3 | **87.2** | 84.3 |
| 8 | 67.9 | 68.4 | 69.2 | 73.5 | **74.5** |
| 9 | 76.4 | 75.9 | 77.9 | 80.0 | **84.3** |
| 10 | 68.9 | 71.6 | 71.6 | **74.5** | **74.5** |
| 11 | 81.0 | 81.5 | 81.5 | **87.8** | **87.8** |
| 12 | 62.2 | 70.7 | 70.7 | **72.5** | 71.1 |
| 13 | 65.7 | **75.4** | **75.4** | 72.5 | 74.5 |
| 14 | 76.4 | 81.4 | 84.9 | 87.8 | **89.0** |
| 15 | 77.4 | 80.5 | 83.7 | 82.1 | **86.0** |
| 16 | 80.0 | 75.9 | 83.2 | 82.6 | **85.4** |
| Normal hearing | | | | | |
| 1 | 74.0 | 76.9 | 82.1 | **90.9** | 87.2 |
| 2 | 73.0 | 76.4 | 77.9 | 81.5 | **86.0** |
| 3 | 71.1 | 77.4 | **79.4** | 76.4 | 77.4 |
| 4 | 69.8 | 76.4 | 78.4 | **80.5** | **80.5** |
| 5 | 70.7 | 74.0 | 71.1 | **79.4** | 77.9 |
| 6 | 60.9 | 67.5 | 67.1 | 69.3 | **72.1** |
| 7 | 63.5 | 68.9 | 72.5 | 73.0 | **77.4** |
| 8 | 57.1 | 64.0 | 66.6 | **67.9** | **67.9** |
| 9 | 65.3 | 74.9 | 77.4 | 80.5 | **81.0** |
| 10 | 70.2 | 75.9 | 83.7 | 81.5 | **87.2** |
| 11 | 67.5 | 73.5 | 74.9 | 78.4 | **79.4** |
| 12 | 65.3 | 72.1 | 76.7 | 77.4 | **78.9** |
| 13 | 67.1 | 71.1 | 72.5 | 72.5 | **78.4** |
| 14 | 77.4 | 75.9 | 84.9 | 83.7 | **86.0** |
| 15 | 60.5 | 67.1 | 64.8 | 68.4 | **78.4** |
| 16 | 66.2 | 70.2 | 77.9 | 80.0 | **82.6** |
| 17 | 69.3 | 73.5 | 73.0 | **78.4** | 77.9 |
| 18 | 76.4 | **80.5** | **80.5** | 77.4 | **80.5** |

Note. Speech was spectrally shaped with a nonindividualized gain-frequency response. For each participant, the highest score is shown in boldface type.
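The per-participant tallies reported for the HI group can be checked directly against the table. The sketch below is illustrative only: the helper name `summarize_peaks` is hypothetical, and the rule that a tie between the widest bandwidth and a narrower one counts as a peak at the narrower bandwidth is our assumption, adopted because it appears to be the counting rule that matches the reported 10 of 16.

```python
# Scores (rau) for the 16 HI participants in Table 1, ordered by cutoff
# frequency: 2.2, 2.8, 3.6, 4.5, and 5.6 kHz.
HI_TABLE_1 = [
    [82.6, 86.6, 83.7, 90.3, 87.8],
    [77.9, 79.4, 81.0, 77.4, 80.0],
    [66.2, 72.1, 74.0, 75.4, 73.0],
    [78.9, 80.5, 79.4, 84.3, 80.0],
    [71.6, 72.5, 77.4, 77.9, 80.5],
    [81.5, 79.4, 86.0, 91.6, 88.4],
    [83.2, 83.2, 84.3, 87.2, 84.3],
    [67.9, 68.4, 69.2, 73.5, 74.5],
    [76.4, 75.9, 77.9, 80.0, 84.3],
    [68.9, 71.6, 71.6, 74.5, 74.5],
    [81.0, 81.5, 81.5, 87.8, 87.8],
    [62.2, 70.7, 70.7, 72.5, 71.1],
    [65.7, 75.4, 75.4, 72.5, 74.5],
    [76.4, 81.4, 84.9, 87.8, 89.0],
    [77.4, 80.5, 83.7, 82.1, 86.0],
    [80.0, 75.9, 83.2, 82.6, 85.4],
]


def summarize_peaks(scores):
    """Tally participants whose best score is reached below the widest bandwidth.

    Assumption: a tie between the widest and a narrower bandwidth counts as a
    peak at the narrower bandwidth. Returns (count, largest decrease in rau
    from the highest score to the widest-bandwidth score).
    """
    count = 0
    largest_drop = 0.0
    for row in scores:
        best_narrower = max(row[:-1])  # best score at any narrower bandwidth
        widest = row[-1]               # score at the 5.6-kHz cutoff
        if best_narrower >= widest:
            count += 1
            largest_drop = max(largest_drop, best_narrower - widest)
    return count, round(largest_drop, 1)


count, largest_drop = summarize_peaks(HI_TABLE_1)
print(f"{count} of {len(HI_TABLE_1)} participants; largest decrease {largest_drop} rau")
```

The same function can be applied to the NH rows of the table; a stricter rule that ignores ties would give smaller counts.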

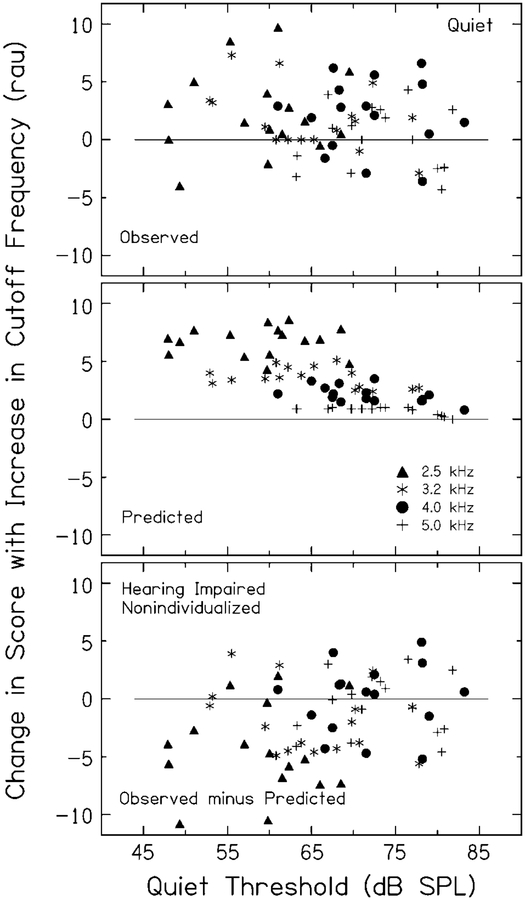

To explore further the question of whether adding amplified nonindividualized high-frequency speech is beneficial or counterproductive for individual HI listeners, Figure 4 shows each HI participant’s change in score with the addition of each high-frequency speech band plotted against the participant’s quiet threshold (in dB SPL) at the center frequency of each band (see Footnote 1). Presenting results in this manner helps to clarify whether there is a frequency and degree of hearing loss above which it is counterproductive to provide high-frequency amplification. The triangle, asterisk, circle, and plus symbols represent changes in score and thresholds measured for bands centered at 2.5, 3.2, 4.0, and 5.0 kHz, respectively. The top panel shows results for observed score changes. Scores increased more than decreased, with decreases (i.e., scores below the horizontal line at zero) distributed across all four bands and across quiet thresholds, providing no evidence of a frequency and/or degree of hearing loss above which it is counterproductive to provide amplification. The middle panel shows results for predicted score changes. A trend for smaller predicted improvements with increasing hearing loss and band frequency can be seen. This downward trend reflects the reduction in audible speech associated with both higher thresholds and the declining amplified speech spectrum (i.e., the amplification does not fully compensate for the elevated thresholds). The bottom panel shows the differences between observed changes and predicted changes in score. Although most scores improved (as shown in top panel), most did not improve as much as predicted (i.e., data points below the horizontal line at zero), consistent with mean results for HI participants in Figure 3.
Comparing observed score changes to score changes predicted based on audibility in this manner takes into account differences in high-frequency speech audibility due to quiet threshold differences—that is, predicted improvements may be large or small, depending in part on high-frequency speech audibility. A downward trend remaining after accounting for reduced audibility would suggest that some factor beyond reduced audibility resulted in less benefit with increasing high-frequency hearing loss and speech bands. Instead, the data points above and below the horizontal line in the bottom panel were generally distributed across all four bands and across quiet thresholds. This suggests that after accounting for audibility effects, changes in score were independent of degree of high-frequency hearing loss. Thus, for the range of thresholds included in this study, these results do not support the assumption that benefit of high-frequency amplification declines with increasing hearing loss at higher frequencies.

Figure 4.

For each HI participant listening to speech in quiet processed with the nonindividualized gain-frequency response, changes in score (in rau) with each additional third-octave band of speech are plotted in each panel as a function of the participant’s quiet threshold at the center frequency of that band. The triangle, asterisk, circle, and plus symbols represent data for thresholds measured and bands centered at 2.5, 3.2, 4.0, and 5.0 kHz, respectively. In some cases, multiple data points fall in the same location, resulting in fewer than 16 visible data points per condition. The top panel shows results for observed score changes. The middle panel shows results for predicted score changes. The bottom panel shows results for observed minus predicted score changes.
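The quantities plotted on the ordinate of the top panel can be derived from the tabled scores as differences between adjacent cutoff frequencies. The following sketch is a hedged illustration rather than the analysis code used in the study: the function names are hypothetical, and treating each band's center as the geometric mean of its two cutoff frequencies is an assumption that happens to reproduce the stated centers. Individual quiet thresholds are not listed in the tables, so only the score changes are computed.

```python
import math

CUTOFFS_KHZ = [2.2, 2.8, 3.6, 4.5, 5.6]


def band_centers(cutoffs):
    """Geometric-mean center (kHz) of each band between adjacent cutoffs."""
    return [round(math.sqrt(lo * hi), 1) for lo, hi in zip(cutoffs, cutoffs[1:])]


def band_changes(scores):
    """Change in score (rau) associated with each added one-third-octave band."""
    return [round(after - before, 1) for before, after in zip(scores, scores[1:])]


centers = band_centers(CUTOFFS_KHZ)
# Scores for HI participant 1 in Table 1 (nonindividualized response).
changes = band_changes([82.6, 86.6, 83.7, 90.3, 87.8])

for center, change in zip(centers, changes):
    print(f"band centered near {center} kHz: change of {change:+.1f} rau")
```

Each participant therefore contributes four points per panel, one for each added band.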

As described in the introduction, conflicting results from previous studies motivated the design of Experiment 1, which compared recognition of high-level speech as a function of low-pass-filter cutoff frequency for NH and HI listeners, using a gain-frequency response that was similar to a response used in a previously published study (Hogan & Turner, 1998). However, speech recognition for HI listeners using more realistic, individualized levels and gain-frequency responses is also of clinical and theoretical importance (as is the comparison of scores for both gain-frequency responses). Accordingly, two additional experiments were conducted that included only the 16 HI participants of Experiment 1 in which recognition of speech was measured in quiet and in speech-shaped noise using more realistic (NAL-R) gain-frequency responses.

Experiment 2: Consonant Recognition for HI Participants Using Nonindividualized and Individualized (NAL-R) Gain-Frequency Responses

Mean Consonant Recognition

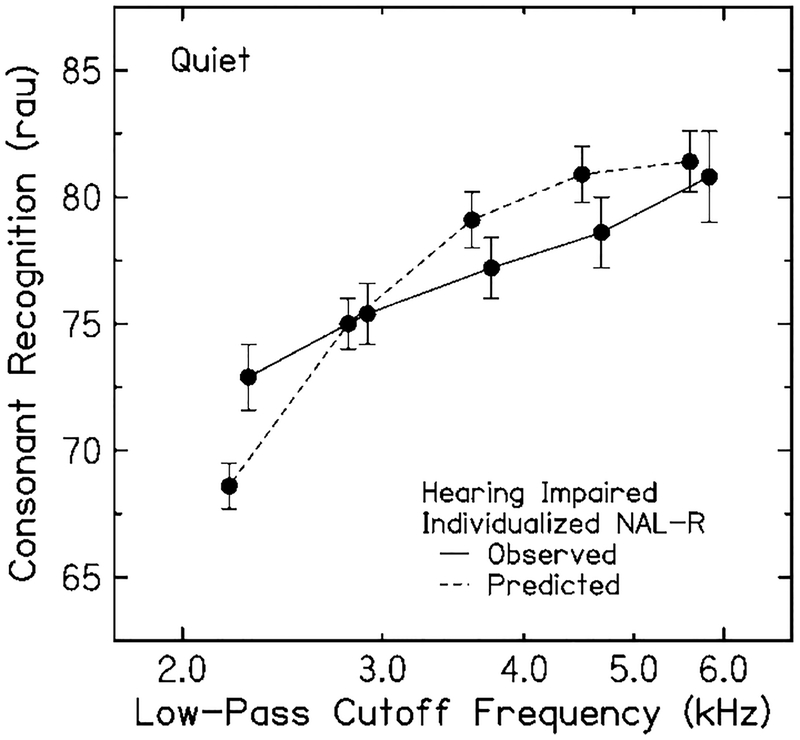

In Experiment 2, the 16 HI participants from Experiment 1 listened to nonsense syllables in quiet, monaurally, under headphones. Nonsense syllables were spectrally shaped (amplified) using a gain-frequency response that was fit individually for each participant based on the NAL-R hearing-aid gain rule. Mean consonant-recognition scores in quiet with NAL-R gain-frequency responses are plotted in Figure 5 as a function of low-pass cutoff frequency (solid line); also shown are predicted scores for the same condition (dashed line). Consonant recognition increased significantly with each additional high-frequency speech band up to and including 5.6 kHz—for example, with the addition of the highest frequency band, F(1, 32) = 8.32, p = .011. In contrast, HI listeners’ observed scores with the nonindividualized gain-frequency response increased significantly only through 4.5 kHz (filled circles and solid line in Figure 3). As was the case for the nonindividualized response, scores for NAL-R responses were predicted to increase significantly with each additional speech band—for example, with the addition of the highest frequency band, F(1, 32) = 25.88, p = .0001.

Figure 5.

Mean (±1 SE) consonant-recognition scores (in rau) as a function of low-pass-filter cutoff frequency for speech shaped with individualized (NAL-R) gain-frequency responses. HI participants listened in quiet. Observed scores are connected by solid lines, and predicted scores are connected by dashed lines. For clarity, some data points are offset along the abscissa.

Differences between observed and predicted scores may help clarify HI listeners’ ability to benefit from amplified speech cues. Across cutoff frequency, observed scores with NAL-R responses were not significantly different than predicted, F(1, 15) = .001, p = .980. However, the relationship between observed and predicted scores differed significantly as a function of cutoff frequency, F(4, 60) = 28.33, p < .0001. In addition, with regard to improvements in scores with the addition of high-frequency speech bands, across cutoff frequency, observed improvements were significantly smaller than predicted, F(1, 15) = 46.66, p < .0001, similar to improvements with the nonindividualized response. However, in contrast to results for the nonindividualized response, with the addition of the highest frequency band (4.5–5.6 kHz), the improvement in score was significantly larger than predicted with NAL-R responses, F(1, 15) = 5.05, p = .040.

Comparing differences in scores between nonindividualized and NAL-R responses may help clarify the influence of gain-frequency responses on HI listeners’ ability to benefit from amplified speech cues. Across cutoff frequency, predicted scores for HI listeners were significantly higher for the nonindividualized response (see Figure 3) than for NAL-R responses (see Figure 5), F(1, 15) = 115.40, p < .0001. Differences in predicted scores ranged from 4.1 to 4.6 rau across cutoff frequency. These differences were expected, given the higher overall level for the nonindividualized speech compared to levels for NAL-R responses (with level differences ranging from 1.5 to 11.9 dB across listeners). In contrast, although observed scores were also higher for the nonindividualized than for NAL-R responses, across cutoff frequency, observed differences between conditions were smaller (ranging from 0.5 to 2.4 rau) and not significant, F(1, 15) = 3.52, p = .080.

Consonant Recognition for Individual Participants

Table 2 includes observed consonant-recognition scores in quiet with NAL-R gain-frequency responses for each cutoff frequency and each HI participant, arranged in the same format as Table 1; the highest scores for each participant are shown in boldface type. For 4 of 16 HI participants, the highest score was obtained with the 4.5-kHz cutoff frequency rather than with the widest bandwidth. Further, as was the case for the nonindividualized response, differences between the highest score and the score at the widest bandwidth were small; the largest decrease from the highest score to the score at the widest bandwidth was 2.9 rau.

Table 2.

Consonant-recognition scores (in rau) for individual HI participants for low-pass-filtered speech at five cutoff frequencies.

| Participant | 2.2 kHz | 2.8 kHz | 3.6 kHz | 4.5 kHz | 5.6 kHz |
|---|---|---|---|---|---|
| Hearing impaired | | | | | |
| 1 | 76.4 | 81.5 | 80.0 | **84.3** | 82.6 |
| 2 | 73.5 | 78.4 | 75.9 | 75.4 | **79.4** |
| 3 | 66.6 | 69.3 | 67.9 | **69.8** | 68.9 |
| 4 | 65.3 | 70.7 | 69.3 | 70.2 | **75.4** |
| 5 | 77.4 | 76.9 | 78.4 | 78.4 | **78.9** |
| 6 | 80.5 | 82.1 | 81.0 | 86.6 | **91.6** |
| 7 | 74.5 | 75.4 | 80.0 | 81.0 | **82.1** |
| 8 | 72.5 | 69.8 | 74.0 | **75.9** | 73.0 |
| 9 | 69.8 | 72.5 | 75.4 | **75.9** | 74.9 |
| 10 | 67.9 | 68.4 | 76.4 | 75.4 | **76.9** |
| 11 | 77.9 | 78.9 | 83.2 | 86.6 | **92.3** |
| 12 | 61.8 | 69.8 | 72.1 | 73.5 | **74.0** |
| 13 | 73.0 | 74.9 | 75.9 | 75.9 | **78.4** |
| 14 | 77.9 | 82.1 | 84.9 | 87.2 | **89.7** |
| 15 | 75.9 | 75.9 | 80.0 | 79.4 | **86.0** |
| 16 | 74.9 | 80.0 | 81.5 | 82.6 | **88.4** |

Note. Speech was spectrally shaped with individualized (NAL-R) gain-frequency responses and presented in quiet. For each participant, the highest score is shown in boldface type.
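Scores in these tables are expressed in rationalized arcsine units (rau). As a reminder of what that unit does, the sketch below implements the rationalized arcsine transform in the form commonly attributed to Studebaker (1985). The function name and the item count of 100 are hypothetical choices for illustration; the number of syllables scored per condition in this study is not assumed here.

```python
import math


def to_rau(num_correct, num_items):
    """Convert a raw count of correct responses to rationalized arcsine units.

    theta = asin(sqrt(X / (N + 1))) + asin(sqrt((X + 1) / (N + 1)))
    rau   = (146 / pi) * theta - 23
    """
    if not 0 <= num_correct <= num_items:
        raise ValueError("num_correct must lie between 0 and num_items")
    theta = (math.asin(math.sqrt(num_correct / (num_items + 1)))
             + math.asin(math.sqrt((num_correct + 1) / (num_items + 1))))
    return (146.0 / math.pi) * theta - 23.0


# Hypothetical example with 100 scored items.
for correct in (0, 50, 90, 100):
    print(f"{correct:3d} of 100 correct corresponds to {to_rau(correct, 100):6.1f} rau")
```

The transform tracks percent correct closely in the middle of the range and stretches the extremes, so values slightly below 0 or above 100 rau are possible. That stretching is what makes a difference of a few rau comparable across low and high scores.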

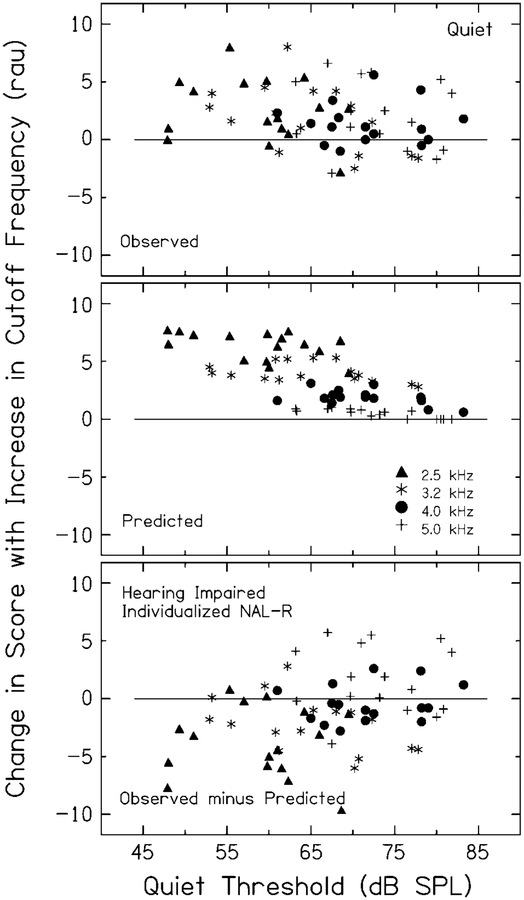

To explore further the benefit of adding high-frequency speech bands for individualized gain-frequency responses and levels, Figure 6 shows each HI participant’s change in score with the addition of each high-frequency speech band plotted against the participant’s quiet threshold at the center frequency of each band. The top panel shows results for observed score changes. As was seen with results for nonindividualized speech processing, observed scores increased more than decreased with additional high-frequency speech bands, with decreases distributed across all four bands and across quiet thresholds. Thus, as with nonindividualized speech processing, results for NAL-R responses also provide no evidence of a frequency and/or degree of hearing loss above which it is counterproductive to provide amplification. The middle panel shows results for predicted score changes. As in Figure 4, a trend for smaller predicted improvements with increasing hearing loss and band frequency can be seen, which reflects the reduction in audible speech associated with both higher thresholds and the declining amplified speech spectrum. The bottom panel shows the differences between observed changes and predicted changes in score. As shown for the nonindividualized response in Figure 4, here too, most scores improved, but not as much as predicted, consistent with mean results in Figure 5. The placement of these data points was distributed across all four bands and quiet thresholds. This suggests that, after accounting for audibility effects, changes in score were independent of degree of high-frequency hearing loss for the range of thresholds included in this study.

Figure 6.

This figure is the same as Figure 4, except that it is for HI participants listening to speech in quiet processed with individualized (NAL-R) gain-frequency responses.

Experiment 3: Consonant Recognition in Quiet and in Speech-Shaped Noise for HI Participants Using Individualized (NAL-R) Gain-Frequency Responses

Mean Consonant Recognition

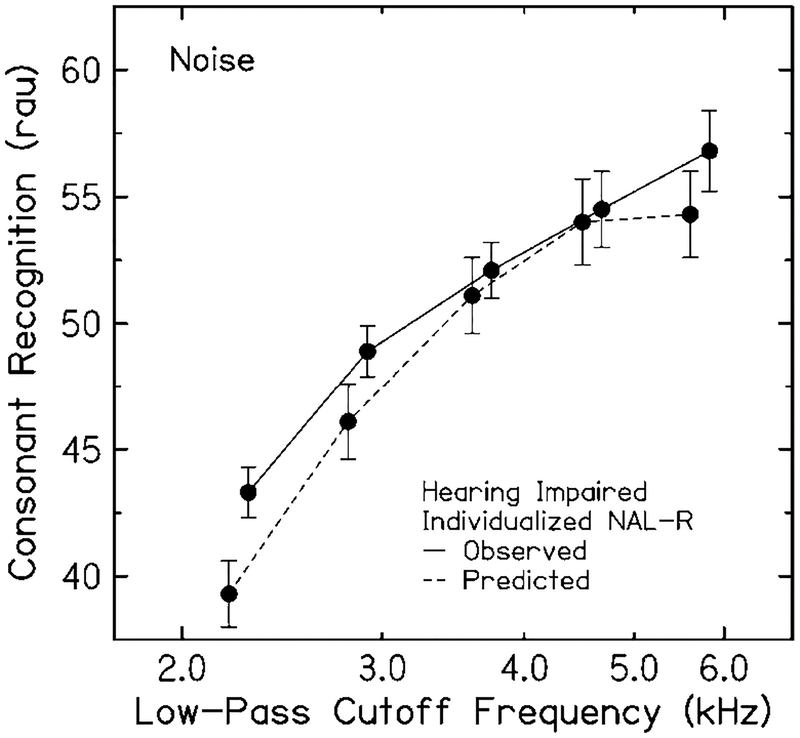

The participants and individualized gain-frequency responses in Experiment 3 were the same as those in Experiment 2. Here, recognition of nonsense syllables was measured in speech-shaped noise at a +5-dB signal-to-noise ratio. Mean consonant-recognition scores in noise with NAL-R gain-frequency responses are plotted in Figure 7 as a function of low-pass cutoff frequency (solid line); also shown are predicted scores for the same condition (dashed line). Similar to scores in quiet (Figure 5), mean observed and predicted consonant-recognition scores increased significantly with each additional high-frequency speech band up to and including 5.6 kHz (e.g., with the addition of the highest frequency band, observed: F(1, 32) = 13.51, p = .002; predicted: F(1, 32) = 4.73, p = .046). Indeed, increases in scores from the narrowest to the widest bandwidth were even larger in noise than in quiet, F(1, 15) = 39.12, p < .0001. Similar to differences in observed and predicted scores for NH and HI listeners in Experiment 1, differences in scores when listening in quiet and in noise were expected based on the AI-recognition transfer function that relates predicted scores to speech audibility. That is, the presence of speech-shaped noise reduced the amount of audible speech cues across the entire frequency range, resulting in lower AI values than when listening in quiet. These lower AI values correspond to lower scores and a steeper range of the transfer function, so that each additional high-frequency band was predicted to result in a larger increase in score in noise than in quiet.

Figure 7.

This figure is the same as Figure 5, except that it is for HI participants listening in speech-shaped noise. For clarity, some data points are offset along the abscissa.

Differences between observed and predicted scores again show that HI listeners generally benefited from amplified high-frequency speech cues. The results of the specific comparisons were all similar to those for the NAL-R responses in quiet, shown in Figure 5 as follows: Across cutoff frequency, observed scores with NAL-R responses for speech in noise were not significantly different than predicted, F(1, 15) = 2.75, p = .118. The relationship between observed and predicted scores for speech in noise differed significantly as a function of cutoff frequency, F(4, 60) = 115.40, p = .001. Finally, although across cutoff frequency, observed improvements in scores were significantly smaller than predicted with the addition of high-frequency speech bands, F(1, 15) = 5.17, p = .038, for the addition of the highest frequency band (4.5–5.6 kHz), observed scores increased significantly more than predicted, F(1, 15) = 9.02, p = .009.

Consonant Recognition for Individual Participants

Table 3 includes observed consonant-recognition scores in speech-shaped noise with NAL-R gain-frequency responses for each cutoff frequency, arranged in the same format as Table 2; the highest scores for each participant are shown in boldface type. For only 2 of 16 participants, the highest score was obtained with the 4.5-kHz cutoff frequency rather than with the widest bandwidth. Further, as was the case for speech in quiet, differences between the highest score and the score at the widest bandwidth were small; the largest decrease from the highest score to the score at the widest bandwidth was 2.1 rau.

Table 3.

Consonant-recognition scores (in rau) for individual HI participants for low-pass-filtered speech at five cutoff frequencies.

| Participant | 2.2 kHz | 2.8 kHz | 3.6 kHz | 4.5 kHz | 5.6 kHz |
|---|---|---|---|---|---|
| Hearing impaired | | | | | |
| 1 | 46.2 | 49.2 | 46.7 | 54.2 | **55.0** |
| 2 | 44.1 | 51.3 | 52.5 | 53.8 | **56.3** |
| 3 | 38.6 | 45.4 | 49.6 | 49.6 | **51.3** |
| 4 | 41.6 | 50.4 | 53.3 | 54.6 | **55.4** |
| 5 | 42.5 | 50.8 | 51.3 | **52.1** | 51.7 |
| 6 | 41.2 | 48.3 | 51.7 | 54.6 | **61.4** |
| 7 | 41.2 | 50.4 | 53.8 | 53.8 | **56.3** |
| 8 | 50.0 | 51.3 | 53.8 | 54.2 | **62.2** |
| 9 | 42.0 | 45.4 | 48.7 | 49.6 | **52.1** |
| 10 | 42.9 | 45.4 | 51.7 | 55.9 | **58.8** |
| 11 | 49.2 | 52.9 | 55.4 | 66.2 | **68.4** |
| 12 | 36.5 | 41.2 | 45.4 | 43.7 | **47.5** |
| 13 | 42.5 | 45.8 | 48.3 | **50.0** | 47.9 |
| 14 | 48.7 | 58.0 | 65.3 | 67.5 | **69.3** |
| 15 | 46.2 | 52.1 | 55.9 | 58.4 | **59.7** |
| 16 | 38.6 | 44.6 | 50.8 | 54.6 | **55.4** |

Note. Speech was spectrally shaped with individualized (NAL-R) gain-frequency responses and presented in speech-shaped noise. For each participant, the highest score is shown in boldface type.
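The larger effect of bandwidth in noise than in quiet can also be seen descriptively in the individual scores. The sketch below averages, across the 16 HI participants, the change in score from the narrowest (2.2 kHz) to the widest (5.6 kHz) cutoff using the values in Tables 2 and 3. It is a simple descriptive check with hypothetical variable and function names, not a substitute for the repeated-measures analysis reported in the text.

```python
from statistics import mean

# (score at 2.2 kHz, score at 5.6 kHz) in rau for HI participants 1-16.
QUIET_NAL_R = [  # Table 2
    (76.4, 82.6), (73.5, 79.4), (66.6, 68.9), (65.3, 75.4),
    (77.4, 78.9), (80.5, 91.6), (74.5, 82.1), (72.5, 73.0),
    (69.8, 74.9), (67.9, 76.9), (77.9, 92.3), (61.8, 74.0),
    (73.0, 78.4), (77.9, 89.7), (75.9, 86.0), (74.9, 88.4),
]
NOISE_NAL_R = [  # Table 3
    (46.2, 55.0), (44.1, 56.3), (38.6, 51.3), (41.6, 55.4),
    (42.5, 51.7), (41.2, 61.4), (41.2, 56.3), (50.0, 62.2),
    (42.0, 52.1), (42.9, 58.8), (49.2, 68.4), (36.5, 47.5),
    (42.5, 47.9), (48.7, 69.3), (46.2, 59.7), (38.6, 55.4),
]


def mean_bandwidth_gain(pairs):
    """Mean change in score (rau) from the narrowest to the widest bandwidth."""
    return mean(widest - narrowest for narrowest, widest in pairs)


quiet_gain = mean_bandwidth_gain(QUIET_NAL_R)
noise_gain = mean_bandwidth_gain(NOISE_NAL_R)
print(f"mean gain in quiet: {quiet_gain:.1f} rau")
print(f"mean gain in noise: {noise_gain:.1f} rau")
```

The mean gain is larger in noise than in quiet, in line with the significant difference between conditions reported for Experiment 3.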

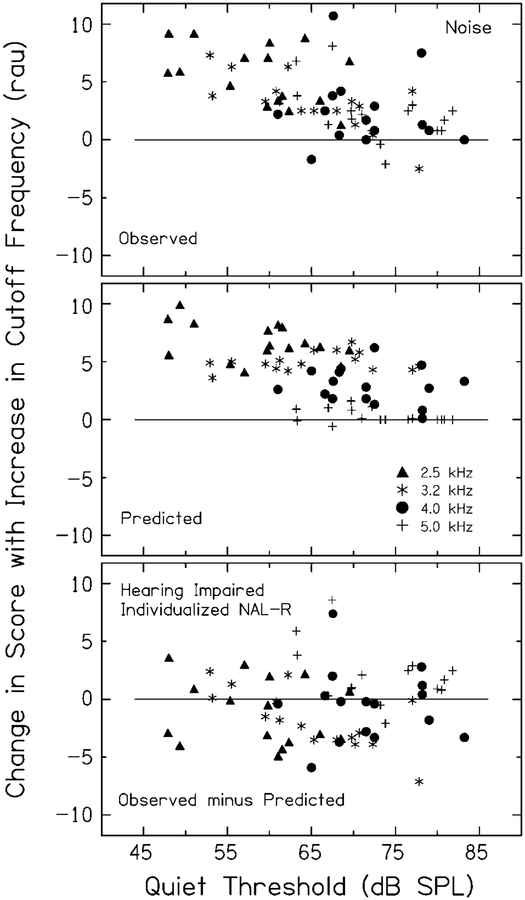

To explore further the benefit of adding high-frequency bands for speech in noise, Figure 8 shows each HI participant’s change in score with the addition of each high-frequency speech band plotted against the participant’s quiet threshold at the center frequency of each band. The top panel shows results for observed score changes. As was seen with results for speech in quiet, scores generally increased more than decreased. Indeed, for speech in noise, there were only four cases of declines in scores with increases in speech band. Further, the declines were small and distributed across three of the four bands. A general downward trend can be seen, reflecting smaller increases in observed scores with larger degrees of high-frequency hearing loss. The same trend is apparent in the middle panel, which shows results for predicted score changes and reflects the reduction in audible speech associated with both higher thresholds and the declining amplified speech spectrum. The bottom panel shows differences between observed changes and predicted changes in score. Note the relatively large number of larger-than-predicted changes in scores for the highest frequency band (+ symbols above the horizontal line), consistent with mean results in Figure 7. Again, although nearly all scores improved, most improvements were smaller than predicted. These data points were generally distributed across all four bands and across quiet thresholds, as was the case for speech in quiet (Figures 4 and 6). Thus, as seen for speech in quiet, after accounting for audibility effects, changes in score were independent of degree of high-frequency hearing loss. Therefore, for speech in noise and the range of thresholds included in this study, current results do not support the assumption that benefit of high-frequency amplification declines with increasing hearing loss at higher frequencies.

Figure 8.

This figure is the same as Figure 4, but it is for HI participants listening to speech in noise processed with individualized (NAL-R) gain-frequency responses.

Discussion

The current study was designed to replicate and extend previous research to allow more detailed comparisons across conditions for the same group of HI listeners. The results are generally consistent with data from other published studies obtained under similar conditions, although interpretation and conclusions may differ. The findings of the current study support the clinical practice of providing some degree of high-frequency amplification for listeners with high-frequency hearing loss between 55 and 80 dB HL.

The aim of Experiment 1 was to compare changes in HI listeners’ speech recognition with additional high-frequency speech bands for speech processed with a nonindividualized gain-frequency response to (a) results for NH participants listening to the identical high-level speech and (b) previous results for HI listeners. The presence of declines in recognition for some individual NH listeners suggests that detrimental effects of high-frequency amplification for HI listeners may be due, in part, to effects of listening at high signal levels and not solely due to consequences of hearing impairment. Current results for HI listeners showing small mean increases in recognition with additional high-frequency speech bands as well as some examples of individual declines in recognition are consistent with results for listeners with similar degrees of high-frequency hearing loss from some previous studies that reported less positive conclusions concerning benefits of high-frequency amplification (e.g., Amos & Humes, 2007; Baer, Moore, & Kluk, 2002; Hogan & Turner, 1998; Vickers, Moore, & Baer, 2001). For example, Hogan and Turner provided individual participants’ results in their Table 1 (p. 436). Other than Subject S9, the largest decrease in score with the addition of a one-third-octave band of speech was 6.6% for Subject S6. This decrease occurred as cutoff frequency increased from 2.8 to 3.5 kHz; for this participant, the next two increases in cutoff frequency resulted in increases in score of 5.1% and 2.7%. Therefore, Subject S6’s highest score occurred for the widest bandwidth, despite a decrease of 6.6% for an intermediate speech band. 
Further, although mean scores were not discussed by Hogan and Turner, averaging scores in their Table 1 across listeners shows that mean scores increased monotonically as cutoff frequency increased from 1.1 to 9.0 kHz; thus, different conclusions about the benefits of high-frequency amplification might be drawn depending on whether individual or mean results are considered.

Despite examples of individual declines in recognition and the lack of significance of the mean increase with the addition of the highest frequency speech band for the nonindividualized response, we do not interpret the current results as evidence against the provision of high-frequency amplification because declines were relatively small and few. Further, looking more broadly at increases in bandwidth wider than one-third-octave bands, recognition for all listeners increased as bandwidth increased from 2.2 to 5.6 kHz. More importantly, results from Experiment 1 are the least clinically relevant in the current study because the same gain-frequency response was provided to all participants. Similarly, Amos and Humes (2007) used a nonindividualized gain-frequency response that ensured full speech audibility for all HI participants. They found no significant mean improvements in HI listeners’ recognition as cutoff frequency increased from 3.2 to 6.4 kHz and acknowledged that data using individualized gain-frequency responses are needed to resolve discrepancies with previous investigations showing that HI listeners benefit from high-frequency speech information. Accordingly, Experiments 2 and 3, which used individualized gain-frequency responses prescribed by NAL-R (and which resulted in fewer individual declines and more cases of significant mean increases than in Experiment 1), provide more clinically relevant information.

The aim of Experiment 2 was to compare changes in recognition with additional high-frequency speech bands for speech processed with nonindividualized (from Experiment 1) and individualized gain-frequency responses. Results for the two responses were generally similar in that mean recognition increased with each additional high-frequency speech band; however, the mean increase in score with the addition of the highest-frequency speech band was significant only for the individualized responses. Consistent with that mean difference, most HI listeners achieved their highest scores at less than the widest bandwidth for speech processed with a nonindividualized gain-frequency response, whereas most achieved their highest scores at the widest bandwidth for speech processed according to the NAL-R prescription. It is, therefore, not unreasonable that different conclusions regarding the benefit of high-frequency amplification could be drawn from results for nonindividualized and individualized responses. Thus, caution is warranted in predicting benefit of high-frequency amplification with hearing aids from speech recognition measured under substantially different conditions, such as with unrealistic levels and/or gain-frequency responses.

The nonindividualized response resulted in higher speech levels than those prescribed by NAL-R. Comparing scores for these conditions provides additional evidence of diminished benefit associated with listening at high levels. Specifically, predicted scores for the nonindividualized response were significantly higher than those for NAL-R, although observed scores were not. Smaller-than-predicted increases in speech recognition with increases in speech level are consistent with previous findings (e.g., Ching et al., 1998) and with the well-accepted notion that maximizing speech audibility is not a reasonable goal in hearing-aid fittings (Rankovic, 1991; Skinner, 1980), which underlies NAL and other prescriptive rules (e.g., Byrne & Dillon, 1986; Moore & Glasberg, 1998).

The aim of Experiment 3 was to compare changes in recognition with additional high-frequency speech bands for speech in noise and in quiet processed with individualized (NAL-R) gain-frequency responses. Results were generally similar in that mean recognition increased with each additional high-frequency speech band for both. However, significantly larger increases in scores were observed for speech in noise than quiet, and even more HI participants achieved their highest scores at the widest bandwidth for speech in noise than in quiet. An explanation consistent with these results is that when listening in speech-shaped noise, fewer of the redundant speech cues are available in the lower frequency range where hearing is best for most HI listeners. Thus, additional cues made available with additional high-frequency bands have added value and result in larger increases in recognition. This is consistent with an AI-based explanation whereby smaller increases in audibility with increasing bandwidth correspond to greater increases in speech recognition in noise.

Our finding of larger benefit from additional high-frequency bands for speech in noise than for speech in quiet is similar to results from Turner and Henry (2002), who discussed these differences in terms of available speech cues. These authors used voicing cues as an example, given that they are available across a wide range of speech frequencies (Grant & Walden, 1996). When low-frequency speech is sufficiently audible, most voicing information is available to listeners so that adding audible higher frequencies does not provide additional voicing information. However, when low-frequency cues are restricted by the presence of noise, adding higher frequency bands of speech may benefit perception of voicing. Thus, when listening in noise, the benefit of high-frequency amplification may be more pronounced than in quiet, as was observed in the current study.

In each of the three current experiments, HI listeners’ speech recognition improved with additional high-frequency speech bands, although most improvements were smaller than predicted by a speech audibility metric. Smaller-than-predicted improvements have also been noted in several related studies (Amos & Humes, 2007; Ching et al., 1998; Hogan & Turner, 1998; Hornsby & Ricketts, 2006). The current results suggest that although listeners with hearing loss may not take advantage of audible speech cues to the same extent as listeners with normal hearing, amplification increased speech audibility and improved speech recognition. Final conclusions regarding the benefit of high-frequency amplification provided by hearing aids should be based on further research conducted under more realistic conditions, such as using hearing aids outside of the laboratory.

Acknowledgments

This work was supported (in part) by research grants K23 DC00158, P50 DC00422, and R01 DC00184 from the National Institute on Deafness and Other Communication Disorders and by Grant M01 RR01070 from the Medical University of South Carolina General Clinical Research Center. This investigation was conducted in a facility constructed with support from Research Facilities Improvement Program Grant Number C06 RR14516 from the National Center for Research Resources, National Institutes of Health. Helpful contributions from Fu-Shing Lee, Rebecca McDonald, Stefanie R. Reed, and Jillanne Schulte are gratefully acknowledged.

Footnotes

Mean quiet thresholds in Figures 4, 6, and 8 are approximately 6 dB higher than those shown in the audiograms in Figure 1. This comparison took into account differences in procedures for threshold determination (approximately 4 dB lower for maximum-likelihood than standard clinical procedure) and reference equivalent threshold sound pressure levels (approximately 10 dB higher for dB SPL than dB HL).

References

- American National Standards Institute. (1969). Methods for the calculation of the Articulation Index. (ANSI S3.5–1969). New York: Author. [Google Scholar]

- American National Standards Institute. (1996). Specifications for audiometers. (ANSI S3.6–1996). New York: Author. [Google Scholar]

- American National Standards Institute. (1997). Methods for calculation of the Speech Intelligibility Index. (ANSI S3.5–1997). New York: Author. [Google Scholar]

- American Speech-Language-Hearing Association. (2005). Guidelines for manual pure-tone threshold audiometry. Rockville, MD: Author. [Google Scholar]

- Amos NE, & Humes LE (2007). Contribution of high frequencies to speech recognition in quiet and noise in listeners with varying degrees of high-frequency sensorineural hearing loss. Journal of Speech, Language, and Hearing Research, 50, 819–834.

- Baer T, Moore BCJ, & Kluk K (2002). Effects of low pass filtering on the intelligibility of speech in noise for people with and without dead regions at high frequencies. The Journal of the Acoustical Society of America, 112, 1133–1143.

- Byrne D, & Dillon H (1986). The National Acoustic Laboratories (NAL) new procedure for selecting the gain and frequency response of a hearing aid. Ear and Hearing, 7, 257–265.

- Byrne D, Dillon H, Ching T, Katsch R, & Keidser G (2001). NAL-NL1 procedure for fitting nonlinear hearing aids: Characteristics and comparisons with other procedures. Journal of the American Academy of Audiology, 12, 37–51.

- Ching TY, Dillon H, & Byrne D (1998). Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification. The Journal of the Acoustical Society of America, 103, 1128–1140.

- Dillon H (1997). Converting insertion gain to and from headphone coupler responses. Ear and Hearing, 18, 346–348.

- Dirks DD, Dubno JR, Ahlstrom JB, & Schaefer AB (1990). Articulation index importance and transfer functions for several speech materials. Asha, 32, 91.

- Dubno JR, Horwitz AR, & Ahlstrom JB (2003). Recovery from prior stimulation: Masking of speech by interrupted noise for younger and older adults with normal hearing. The Journal of the Acoustical Society of America, 113, 2084–2094.

- Dubno JR, Horwitz AR, & Ahlstrom JB (2005a). Word recognition in noise at higher-than-normal levels: Decreases in scores and increases in masking. The Journal of the Acoustical Society of America, 118, 914–922.

- Dubno JR, Horwitz AR, & Ahlstrom JB (2005b). Recognition of filtered words in noise at higher-than-normal levels: Decreases in scores with and without increases in masking. The Journal of the Acoustical Society of America, 118, 923–933.

- Dubno JR, Horwitz AR, & Ahlstrom JB (2006). Spectral and threshold effects on recognition of speech at higher-than-normal levels. The Journal of the Acoustical Society of America, 120, 310–320.

- Dubno JR, & Schaefer AB (1992). Comparison of frequency selectivity and consonant recognition among hearing-impaired and masked normal-hearing listeners. The Journal of the Acoustical Society of America, 91, 2110–2121.

- Grant KW, & Walden BE (1996). Evaluating the articulation index for auditory-visual consonant recognition. The Journal of the Acoustical Society of America, 100, 2415–2424.

- Green DM (1993). A maximum-likelihood method for estimating thresholds in a yes–no task. The Journal of the Acoustical Society of America, 93, 2096–2105.

- Hogan CA, & Turner CW (1998). High-frequency audibility: Benefits for hearing-impaired listeners. The Journal of the Acoustical Society of America, 104, 432–441.

- Hornsby BW, & Ricketts TA (2003). The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. The Journal of the Acoustical Society of America, 113, 1706–1717.

- Hornsby BW, & Ricketts TA (2006). The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. II: Sloping hearing loss. The Journal of the Acoustical Society of America, 119, 1752–1763.

- Horwitz AR, Dubno JR, & Ahlstrom JB (2002). Recognition of low-pass-filtered consonants in noise with normal and impaired high-frequency hearing. The Journal of the Acoustical Society of America, 111, 409–416.

- Joris PX, Smith PH, & Yin TC (1994). Enhancement of neural synchronization in the anteroventral cochlear nucleus. II: Responses in the tuning curve tail. Journal of Neurophysiology, 70, 2533–2549.

- Kiang NY, & Moxon EC (1974). Tails of tuning curves of auditory-nerve fibers. The Journal of the Acoustical Society of America, 55, 620–630.

- Leek MR, Dubno JR, He N-J, & Ahlstrom JB (2000). Experience with a yes–no single-interval maximum-likelihood procedure. The Journal of the Acoustical Society of America, 107, 2674–2684.

- Liberman MC, & Dodds LW (1984). Single-neuron labeling and chronic cochlear pathology. III: Stereocilia damage and alterations of threshold tuning curves. Hearing Research, 16, 55–74.

- Mackersie CL, Crocker TL, & Davis RA (2004). Limiting high-frequency hearing aid gain in listeners with and without suspected cochlear dead regions. Journal of the American Academy of Audiology, 15, 498–507.

- Moore BCJ, & Glasberg BR (1998). Use of a loudness model for hearing-aid fitting. I: Linear hearing aids. British Journal of Audiology, 32, 317–335.

- Moore BCJ, Huss MT, Vickers DA, Glasberg BR, & Alcántara JI (2000). A test for the diagnosis of dead regions in the cochlea. British Journal of Audiology, 34, 205–224.

- Pascoe DP (1975). Frequency responses of hearing aids and their effects on the speech perception of hearing-impaired subjects. The Annals of Otology, Rhinology & Laryngology, 84(Suppl. 23), 5–40.

- Pavlovic CV (1989). Speech spectrum considerations and speech intelligibility predictions in hearing aid evaluations. Journal of Speech and Hearing Disorders, 54, 3–8.

- Plyler PN, & Fleck EL (2006). The effects of high-frequency amplification on the objective and subjective performance of hearing instrument users with varying degrees of high-frequency hearing loss. Journal of Speech, Language, and Hearing Research, 49, 616–627.

- Rankovic CM (1991). An application of the articulation index to hearing aid fitting. Journal of Speech and Hearing Research, 34, 391–402.

- Rankovic CM (1998). Factors governing speech reception benefits of adaptive linear filtering for listeners with sensorineural hearing loss. The Journal of the Acoustical Society of America, 103, 1043–1057.

- Rankovic CM (2002). Articulation index predictions for hearing-impaired listeners with and without cochlear dead regions. The Journal of the Acoustical Society of America, 111, 2545–2548.

- Simpson A, McDermott HJ, & Dowell RC (2005). Benefits of audibility for listeners with severe high-frequency hearing loss. Hearing Research, 210, 45–52.

- Skinner MW (1980). Speech intelligibility in noise-induced hearing loss: Effects of high-frequency compensation. The Journal of the Acoustical Society of America, 67, 306–317.

- Studebaker GA (1985). A “rationalized” arcsine transform. Journal of Speech and Hearing Research, 28, 455–462.

- Studebaker GA, Sherbecoe RL, McDaniel DM, & Gwaltney CA (1999). Monosyllabic word recognition at higher-than-normal speech and noise levels. The Journal of the Acoustical Society of America, 105, 2431–2444.

- Sullivan JA, Allsman CS, Nielsen LB, & Mobley JP (1992). Amplification for listeners with steeply sloping, high-frequency hearing loss. Ear and Hearing, 13, 35–45.

- Turner CW, & Cummings KR (1999). Speech audibility for listeners with high-frequency hearing loss. American Journal of Audiology, 8, 47–56.

- Turner CW, & Henry BA (2002). Benefits of amplification for speech recognition in background noise. The Journal of the Acoustical Society of America, 112, 1675–1680.

- Vestergaard MD (2003). Dead regions in the cochlea: Implications for speech recognition and applicability of articulation index theory. International Journal of Audiology, 42, 249–261.

- Vickers DA, Moore BCJ, & Baer T (2001). Effects of low-pass filtering on the intelligibility of speech in quiet for people with and without dead regions at high frequencies. The Journal of the Acoustical Society of America, 110, 1164–1175.