Abstract

Background:

Rigorous measurement of organizational performance requires large, unbiased samples to allow inferences to the population. Studies of organizations, including hospitals, often rely on voluntary surveys subject to nonresponse bias. For example, hospital administrators with concerns about performance are more likely to opt out of surveys about organizational quality and safety, which undermines inferences to the population of hospitals.

Objective:

The objective of this study was to describe a novel approach to obtaining a representative sample of organizations using individuals nested within organizations, and to demonstrate how resurveying nonrespondents can allay concerns about bias from low response rates at the individual level.

Methods:

We review and analyze common ways of surveying hospitals. We describe the approach and results of a double-sampling technique of surveying nurses as informants about hospital quality and performance. Finally, we provide recommendations for sampling and survey methods to increase response rates and evaluate whether and to what extent bias exists.

Results:

The survey of nurses yielded data on over 95% of hospitals in the sampling frame. Although the nurse response rate was 26%, comparisons of nurses’ responses in the main survey with those of resurveyed nonrespondents (a resurvey that achieved a response rate of nearly 90%) revealed no statistically significant differences at the nurse level on most measures of hospital quality and performance, suggesting little evidence of nonresponse bias.

Conclusions:

Surveying organizations via random sampling of front-line providers can avoid the self-selection issues caused by directly sampling organizations. Response rates are commonly misinterpreted as a measure of representativeness; however, findings from the double-sampling approach show that low response rates increase the potential for nonresponse bias but do not confirm it.

Keywords: survey, survey methods, response rate, nonresponse bias, representation, hospitals

Surveys of organizations and the individuals in them are a mainstay of data collection used to evaluate and inform the delivery of health care services and quality. Survey designs used to make inferences about a population call for probability samples and high response rates. However, given endemic difficulties with survey response rates in the 21st century,1–3 high response rates are increasingly difficult to achieve. High response rates are primarily desirable because they reduce the threat of nonresponse bias—the risk that samples of responding organizations and the individual respondents in them are not representative of the underlying populations. For this reason, journal editors and readers are often wary of research findings derived from surveys with low response rates.

However, a survey’s response rate provides limited information about representativeness and is “at best an indirect measure of the extent of nonresponse bias.”1 What matters most is the degree to which the sample represents the population of interest.4,5 In this article, we discuss the interpretation of survey response rates in the context of nonresponse bias and population representativeness. We illustrate with an example from the sociology of complex organizations, in which front-line providers serve as informants about care in their organizations.6–8

We use a novel survey design that constructs organizational units (in our case, hospitals) from an individual-level sample (in our case, nurses). Our primary analytic population, and thus our target for achieving representativeness, is the population of hospitals, with attention to the number of patients each hospital serves. Because nurses are nested within hospitals, they are well positioned to serve as primary informants about hospitals’ organizational properties. Because we are studying how hospital-specific features of the organization of nursing affect patient care, we are also interested in achieving representativeness of the population of patients. Thus hospitals, nurses, and patients are 3 interlocking populations, in which patients and nurses are nested within the higher-order population of hospitals.

We describe how we conducted a large survey of nurses to yield an unbiased, representative sample of hospitals and the patients within them. We further describe a double-sampling approach to evaluate the extent to which nonresponse bias exists at the level of data collection (ie, nurses). Our example relies on nurses as informants about hospitals, but the lessons from our model can be applied to studies of front-line workers across the spectrum of health care organizations.7–9

In the sections that follow, we:

Describe conventional methods of surveying organizations and their limitations.

Introduce an established double-sampling approach and describe its advantages.

Assess organizational representativeness and the extent of nonresponse bias.

Summarize recommendations for sampling and survey methods to increase representativeness and reduce nonresponse bias.

CONVENTIONAL METHODS OF SURVEYING ORGANIZATIONS

Conventional methods of surveying organizations rely on voluntary surveys to collect data. However, nonprobabilistic voluntary survey designs introduce the problem of organizational self-selection, because organizations may choose whether to participate based on what the researcher is studying. A survey of organizational performance is at risk if persons in authority within an organization perceive it to be a low performer and are therefore disinclined to have it shown in a bad light. Related problems arise when surveys of organizations rely on a single key informant: individual informants offer a singular perspective and often hold a vested interest in their organization’s performance. Even surveys based on the responses of multiple informants within an organization can be problematic, because researchers typically must obtain permission from each organization’s administration to access the lists of front-line workers that serve as the sampling frame at the secondary level (ie, front-line workers as informants). In situations of labor-management conflict, such permission may be difficult to obtain.10

Conventional methods of data collection, either 1-stage samples of organizations with appeals to key informants or 2-stage samples requiring within-organization sampling frames and institutional permissions,8 can work well at certain scales and in conditions where organizations have dedicated record systems, as with patient data,11,12 and/or where key organizational actors are “on board” with the research enterprise.13 However, at large scale and in the presence of organizational self-selection, these conventional designs may not yield unbiased data, in the sense of a representative sample of organizations such as hospitals. This was a driving motivation to rethink how organizations are surveyed: we bypassed sampling at the organizational (hospital) level and instead reached front-line providers (nurses) directly at their home addresses, as informants about the hospitals in which they were employed.

METHODS

Surveying Front-line Providers to Obtain a Representative Sample of Organizations

The first sample of our double-sampling approach to evaluating hospital care quality, organizational structure, and workforce characteristics involved a survey of registered nurses (RN4CAST-US) conducted in 2016 in California, Florida, New Jersey, and Pennsylvania. The 4 states were chosen for their diversity of urban and rural regions and because together they account for roughly 25% of Medicare beneficiary hospital discharges annually. The registered nurse sample was a 30% random sample of nurses drawn from state licensure lists. No single sampling fraction is recommended in all cases; the appropriate fraction depends on several factors, including the size of the sampling frame, rates of nonresponse, the number of ineligible elements, and the number of hospitals or other organizational units. In our case, we used a large sample of ~231,000 nurses because our primary research interest is hospital performance, and only roughly one third of registered nurses provide patient care in hospitals.14,15 Others have employed a similar survey approach with a smaller sampling frame, in part because they were able to target the survey to nurses working on particular hospital units.8 We also anticipated a low response rate given the lengthy 12-page questionnaire and our inability to offer financial incentives to such a large sample.15,16

On the basis of our experience estimating models with nurses grouped within hospitals,17 other investigations of the reliability of organizational-level indicators based on aggregated worker reports7 (which suggest a minimum of 10 nurses per hospital), and observations on the range of nurses per hospital in hospitals of differing size (Table 1), we arrived at the rough identity

$$\text{sample size} \approx 750 \times 25 \times 4 \times 3 = 225{,}000 \approx 230{,}000,$$

where ~750 is the target number of hospitals in the study area, 25 is the number of nurses per hospital (based on 10–40 per hospital), 4 is the inverse of a 25% response rate, and 3 is the inverse of a one-third eligibility rate. None of these terms were known precisely a priori, but they were posited accurately enough to prove quite reliable in drawing the sample. This calculus can be adjusted (researchers will need to scan the current literature as it pertains to their specific research sites, interests, and constraints), but it serves as a starting point for related applications of this design.
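The rough identity above can be turned into a small planning helper. The sketch below is ours, not part of the study’s toolchain; the function names are hypothetical, and the inverse check assumes respondents are spread evenly across hospitals, which Table 1 shows is far from true (large hospitals draw many more respondents than small ones).

```python
"""Back-of-envelope sample size for a nurse-as-informant design.

A sketch only: it multiplies out the rough identity described in the text.
All inputs are planning guesses, not known quantities.
"""


def required_sample_size(
    target_hospitals: int,
    respondents_per_hospital: float,
    response_rate: float,
    eligibility_rate: float,
) -> float:
    """Nurses to sample so that, on average, each target hospital gets
    `respondents_per_hospital` eligible respondents.

    Dividing by a rate is the same as multiplying by its inverse:
    a 25% response rate contributes a factor of 4, a one-third
    eligibility rate a factor of 3.
    """
    if not (0 < response_rate <= 1 and 0 < eligibility_rate <= 1):
        raise ValueError("rates must be in (0, 1]")
    return target_hospitals * respondents_per_hospital / (response_rate * eligibility_rate)


def expected_respondents_per_hospital(
    sample_size: int,
    response_rate: float,
    eligibility_rate: float,
    hospitals: int,
) -> float:
    """Inverse check: average eligible respondents per hospital implied by a
    sample size, assuming (unrealistically) an even spread across hospitals."""
    return sample_size * response_rate * eligibility_rate / hospitals


if __name__ == "__main__":
    # Planning values from the text: ~750 hospitals, 25 nurses each,
    # 25% response, one third of nurses working in hospitals.
    n = required_sample_size(750, 25, 0.25, 1 / 3)
    print(f"required sample ~ {n:,.0f}")  # 225,000, i.e. the ~230,000 in the text
    # Post hoc check using the realized 26% response among ~231,000 sampled nurses.
    avg = expected_respondents_per_hospital(231_000, 0.26, 1 / 3, 750)
    print(f"~{avg:.1f} eligible respondents per hospital")
```

Keeping the response and eligibility rates as separate arguments makes it easy to see how sensitive the required sample is to each guess; halving the expected response rate, for instance, doubles the sample.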

TABLE 1.

Hospital and Patient Representation by Hospital Size

| Measure | All hospitals (N = 766) | Small, ≤ 100 beds (N = 175) | Medium, 101–250 beds (N = 305) | Large, > 250 beds (N = 286) |
|---|---|---|---|---|
| Hospitals with ≥ 1 nurse respondent | | | | |
| Mean (SD; IQR) nurse respondents | 31.5 (31.1; 10–44) | 7.6 (5.5; 3–11) | 20.9 (14.1; 10–29) | 55.8 (35.6; 32–65) |
| Hospital representation (%) | 96.7 | 88.6 | 98.7 | 99.7 |
| Patient representation (%) | 99.5 | 94.8 | 99.2 | 100 |
| Hospitals with ≥ 2 nurse respondents | | | | |
| Mean (SD; IQR) nurse respondents | 32.4 (31.1; 11–44) | 8.2 (5.4; 4–12) | 21.3 (13.9; 11–30) | 55.8 (35.6; 32–65) |
| Hospital representation (%) | 94.1 | 81.1 | 96.4 | 99.7 |
| Patient representation (%) | 99.2 | 91.5 | 98.6 | 100 |
| Hospitals with ≥ 5 nurse respondents | | | | |
| Mean (SD; IQR) nurse respondents | 35.0 (31.1; 13–46) | 10.3 (5.0; 7–13) | 22.4 (13.5; 13–30) | 56.0 (35.5; 32.5–65) |
| Hospital representation (%) | 86.4 | 57.7 | 90.8 | 99.3 |
| Patient representation (%) | 97.6 | 74.6 | 95.5 | 99.98 |
| Hospitals with ≥ 10 nurse respondents | | | | |
| Mean (SD; IQR) nurse respondents | 40.3 (31.2; 19–50) | 14.5 (4.4; 12–16) | 25.6 (12.7; 16–31) | 56.5 (35.3; 33–65) |
| Hospital representation (%) | 72.7 | 26.3 | 75.4 | 98.3 |
| Patient representation (%) | 93.1 | 45.6 | 85.7 | 99.6 |

Patient representation is derived from state discharge databases for 2015. The interquartile range (IQR) spans the middle 50% of the distribution, from the 25th to the 75th percentile.

Data collection for the RN4CAST-US survey of nurses used a modified Dillman approach to obtain the main survey sample.15 Surveys were mailed to nurses’ home addresses with prepaid return envelopes, along with an option to complete the survey online or by phone. Nurses were asked to provide the name of their primary employer, allowing responses to be aggregated within hospitals and other health care organizations (eg, skilled nursing facilities, home health care agencies). First, a letter was sent by first-class mail to the home address of each randomly selected nurse describing the purpose of the survey and the expected arrival of the survey package. Next, the full-length survey was sent in an 8½ by 11 envelope with the survey logo on the outside. One week later, thank you/reminder postcards were sent. A second survey was sent to nurses who did not respond to the original mailing, followed by a second thank you/reminder postcard. Approximately 4 months after the first mailing, nurses who had not yet responded were mailed a third survey. The fourth and final mailing occurred 6 weeks later and consisted of a thank you postcard and an abbreviated version of the survey. Main survey data collection concluded after 6 months and yielded a response rate of 26% (52,510 nurses). The responses obtained during this main survey data collection are used in our program of research to describe hospitals’ organizational performance and nursing resources.

The second sample of our double-sampling approach involved an intensive resurvey of nonrespondents. Responses obtained in the second sample were used exclusively to evaluate nonresponse bias in our main survey. The resurvey involved a random sample of 1400 nonrespondents in California, Florida, and Pennsylvania, contacted using more intensive methods (eg, certified mail, phone calls, and financial incentives) that were not feasible in the initial sample of 231,000 nurses. The questionnaire for nonrespondents was substantially pared down from the main survey. We removed most questions, including the question asking respondents to name their employer, and retained the most critical items related to employment status, setting, workload, work environment, and ratings of overall care quality and safety.

To encourage sampled nonrespondents to open our next mailing, we designed a custom multi-layer wedding-style invitation that was personalized and assembled by hand. Outer envelopes were hand-addressed, stamped, and sealed. The invitation unfolded to reveal a short note explaining the significance of their selection for the survey and asking them to watch for a US Postal Service Priority Mail envelope containing an abbreviated version of the survey and a $10 bill. This was followed by a thank you/reminder postcard. For those who did not respond, a second survey was sent 4 weeks later by FedEx. After 4 more weeks, a third survey was sent by UPS to remaining nonrespondents with a $20 bill enclosed.

When the third nonrespondent survey was mailed, an in-house call center was activated (using VanillaSoft Call Center Software).18 The call center was staffed by nursing students trained by the research team. Where possible, callers were matched with nonrespondents from their own home state. At least 10 attempts were made to reach each nonrespondent by phone. The phone script included a brief version of the survey, similar to the postcards used in the final wave of the main survey and in the mailings to nonrespondents. Refusals and do-not-call requests were recorded and honored. The final response rate in the nonrespondent sample was 87% after accounting for people who had died or were unreachable by mail or phone.

RESULTS

To examine the results of our double-sampling survey approach, we considered 2 issues: (1) representativeness and (2) nonresponse bias. With respect to representativeness, we were concerned with the extent to which our main survey of nurses obtained data from a large, unbiased sample of hospitals—and by extension, the patients receiving care within those hospitals. With respect to nonresponse bias, we were concerned with the extent to which nurse respondents differed in important ways from nonrespondents.

To assess hospital representativeness, we used publicly available data on the number of hospitals in our sampling frame and calculated the percentage of hospitals for which we had at least 1, 2, 5, and 10 nurse respondents in the main survey. The average number of nurse respondents per hospital was 32. Most hospitals (96.7%) in the 4-state sampling frame were represented in the RN4CAST-US survey by at least 1 nurse respondent (Table 1). Among hospitals with > 250 beds, 99.7% were represented, compared with 88.6% of hospitals with ≤ 100 beds. With a threshold of at least 10 nurse respondents, the sample represented 72.7% of hospitals overall and 98.3% of hospitals with > 250 beds.

Because our research interest is primarily the outcomes of patients in hospitals,19 we assessed the extent to which patients in the 4 states were represented in our sample of hospitals. Patient representation was derived using state discharge databases from 2015. Hospitals with at least 10 nurse respondents (73% of hospital sample) account for 93% of hospitalized patients in the 4 states; and if we consider hospitals with at least 5 nurse respondents, 98% of eligible hospital patients are represented (Table 1).
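The hospital and patient representation percentages in Table 1 amount to a threshold count over a per-hospital file. A minimal sketch, assuming a hypothetical record that joins respondent counts (from nurses’ reported employers) to bed size and discharge counts (from a state discharge database); the `Hospital` class, its field names, and the toy numbers are illustrative only, not study data.

```python
from dataclasses import dataclass


@dataclass
class Hospital:
    """Hypothetical per-hospital record joining survey and administrative data."""
    name: str
    beds: int
    discharges: int   # annual discharges, e.g. from a state discharge database
    respondents: int  # nurse respondents who named this hospital as employer


def size_class(beds: int) -> str:
    """Bed-size strata used in Table 1: <= 100, 101-250, > 250 beds."""
    if beds <= 100:
        return "small"
    if beds <= 250:
        return "medium"
    return "large"


def representation(hospitals: list[Hospital], min_respondents: int) -> tuple[float, float]:
    """Percent of hospitals, and percent of discharges, accounted for by
    hospitals with at least `min_respondents` nurse respondents."""
    if not hospitals:
        raise ValueError("need at least one hospital")
    covered = [h for h in hospitals if h.respondents >= min_respondents]
    pct_hospitals = 100 * len(covered) / len(hospitals)
    total_discharges = sum(h.discharges for h in hospitals)
    pct_patients = 100 * sum(h.discharges for h in covered) / total_discharges
    return pct_hospitals, pct_patients


if __name__ == "__main__":
    toy = [  # made-up hospitals, not study data
        Hospital("A", 50, 500, 2),
        Hospital("B", 150, 4000, 12),
        Hospital("C", 400, 15000, 60),
        Hospital("D", 30, 500, 0),
    ]
    for k in (1, 2, 5, 10):
        print(k, representation(toy, k))
    small = [h for h in toy if size_class(h.beds) == "small"]
    print("small hospitals, >= 1 respondent:", representation(small, 1))
```

Because patient representation weights each hospital by its discharges, it exceeds hospital representation whenever the uncovered hospitals are the small ones, which is the pattern in Table 1.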

Did this high level of representation of hospitals and patients come at a cost? Unlike Tourangeau et al,8 we did not have a sampling frame of nurses within hospitals, and our massive-scale mail survey of nurses to generate multiple organizational measures of hospitals resulted in a modest response rate (26% of nurses), as is typical in such efforts. We therefore tested whether the response rate among nurses introduced bias into our estimates of hospital quality and performance. Following our double-sampling approach, we treated nurse respondents and nonrespondents as 2 distinct groups.20,21 The target population mean of any variable of interest can then be expressed as:

$$\bar{Y} = W_r \bar{Y}_r + W_m \bar{Y}_m \qquad (1)$$

where $\bar{Y}_r$ and $\bar{Y}_m$ are the means for the respondent and nonrespondent groups (the subscripts r for respondents and m for the missing), and $W_r$ and $W_m$ are the proportions of the combined sample in these 2 groups ($W_r + W_m = 1$). Because the proportion of sampled nonrespondents who were interviewed was very high (87%) but not 100%, our estimate of $\bar{Y}_m$ could still be slightly biased; we therefore follow Levy and Lemeshow22 in referring to $\bar{y} = W_r \bar{y}_r + W_m \bar{y}_m$ as a “nearly unbiased” estimator of a characteristic of nurses (or as judged by nurses) in this population.
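To make the weighted mean in Equation (1) concrete, here is a minimal sketch of the double-sampling estimator $\bar{y} = W_r\bar{y}_r + W_m\bar{y}_m$, together with the bias of a respondent-only mean, which works out to $\bar{y}_r - \bar{y} = W_m(\bar{y}_r - \bar{y}_m)$. It assumes the resurveyed nonrespondents are a simple random subsample of all nonrespondents and ignores those who did not answer the resurvey; the function names and example values are hypothetical.

```python
from statistics import mean


def double_sampling_mean(respondent_values, followup_values, w_r):
    """Estimate the population mean as W_r * ybar_r + W_m * ybar_m.

    respondent_values: item values from main-survey respondents
    followup_values:   item values from the resurveyed subsample of
                       nonrespondents (assumed to be a random subsample)
    w_r:               share of the sample in the respondent group; W_m = 1 - W_r
    """
    if not 0 <= w_r <= 1:
        raise ValueError("w_r must be a proportion")
    w_m = 1 - w_r
    return w_r * mean(respondent_values) + w_m * mean(followup_values)


def respondent_only_bias(ybar_r, ybar_m, w_r):
    """Bias of using the respondent mean alone:
    ybar_r - (W_r * ybar_r + W_m * ybar_m) = W_m * (ybar_r - ybar_m).
    Zero whenever the two group means are equal, whatever the response rate."""
    return (1 - w_r) * (ybar_r - ybar_m)
```

For instance, with an illustrative $W_r = 0.26$, a respondent mean of 4.5 patients per nurse, and a nonrespondent mean of 4.1 (round numbers borrowed from Table 2, not a reanalysis), `double_sampling_mean([4, 5], [4.1], 0.26)` gives 4.204, and the respondent-only mean would overstate it by `respondent_only_bias(4.5, 4.1, 0.26)`, or 0.296. The second function makes the argument of the text explicit: a low response rate (large $W_m$) only matters in proportion to the gap between the group means.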

Evaluating the effect of nonresponse bias thus reduces to the question of whether the respondent and nonrespondent means are equal ($\bar{y}_r \approx \bar{y}_m$): because $W_r + W_m = 1$, when they are equal the weighted mean collapses to the respondent mean, so $\bar{y}_r$, which we can observe via our general sampling design, is approximately equal to $\bar{Y}$, the population mean that is our target for inference (representation). We compared responses from the main RN4CAST-US survey (first sample) in 3 states with those from the corresponding nonrespondent survey (second sample) to test for differences in employment status and nurse demographics (including age, sex, and education), as well as in reports of hospital quality, safety, and resources. Responses are reported in aggregate (Table 2) and stratified by state (Table 3). Likelihood ratio χ2 tests and 1-way analysis of variance were used to test for significance.
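For the categorical items, a likelihood ratio χ2 test compares the answer distributions of the 2 surveys. The sketch below computes the G statistic for a 2 × 2 table (survey by favorable/unfavorable answer) using only the standard library, and gets the 1-degree-of-freedom p-value from the identity P(χ²₁ > g) = erfc(√(g/2)). It illustrates the test named above; it is not the software used in the study, and it omits the 1-way ANOVA used for continuous items.

```python
import math


def lr_chi2_2x2(table):
    """Likelihood ratio chi-square (G) test for a 2x2 table of counts.

    table: [[a, b], [c, d]], e.g. rows = (main survey, nonrespondent survey),
           columns = (favorable, not favorable).
    Returns (G statistic, p-value with 1 degree of freedom).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    g = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            if observed > 0:  # a zero cell contributes nothing to the sum
                g += observed * math.log(observed / expected)
    g = max(2 * g, 0.0)  # guard against tiny negative rounding error
    # For 1 df, P(chi2 > g) = P(|Z| > sqrt(g)) = erfc(sqrt(g / 2)).
    p = math.erfc(math.sqrt(g / 2))
    return g, p
```

For example, `lr_chi2_2x2([[20, 10], [10, 20]])` returns G ≈ 6.80 with p ≈ 0.009, and a table with identical row proportions returns G = 0 and p = 1. For larger tables, SciPy’s `scipy.stats.chi2_contingency` computes the same statistic when called with `lambda_="log-likelihood"` and `correction=False`.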

TABLE 2.

Comparison of Nurse Responses From the Main Survey and Nonrespondent Survey

| Survey item | Main survey sample (N = 52,510) | Nonrespondent survey sample (N = 1168) | P |
|---|---|---|---|
| All respondents | | | |
| Employment status (%) | | | |
| Employed in health care | 72.6 | 85.0 | < 0.001 |
| Employed, not in health care | 2.8 | 1.8 | 0.035 |
| Not currently employed | 5.7 | 6.3 | 0.461 |
| Retired | 17.3 | 6.5 | < 0.001 |
| Not reported | 1.7 | 0.43 | < 0.001 |
| Respondents employed in health care | N = 38,109 | N = 993 | |
| Setting of employment (%) | | | |
| Hospital | 62.4 | 64.0 | 0.326 |
| Long-term care | 4.7 | 5.5 | 0.244 |
| Home health care | 6.8 | 7.1 | 0.763 |
| Other | 22.6 | 23.0 | 0.789 |
| Not reported | 3.5 | 0.5 | < 0.001 |
| Respondents employed in hospitals as direct care staff nurses | N = 13,632 | N = 431 | |
| Nurse demographics | | | |
| Male (%) | 9.6 | 16.2 | < 0.001 |
| Age [mean (SD)] | 46.1 (12.5) | 43.3 (11.5) | < 0.001 |
| White, race (%) | 73.1 | 60.1 | < 0.001 |
| Hispanic/Latino (%) | 7.5 | 11.8 | 0.003 |
| BSN or higher (%) | 57.2 | 61.3 | 0.092 |
| Years as registered nurse [mean (SD)] | 17.6 (12.8) | 15.2 (11.5) | < 0.001 |
| Hospital characteristics as reported by nurses | | | |
| % satisfied with job | 78.7 | 79.1 | 0.847 |
| % who rate work environment favorably (single item) | 66.2 | 65.0 | 0.585 |
| 14-item work environment (1 = best to 4 = poorest) [mean (SD)] | 2.2 (0.6) | 2.2 (0.6) | 0.501 |
| % who rate quality of nursing care delivered to patients favorably | 85.7 | 80.7 | 0.005 |
| % who would recommend where they work to family or friends needing health care | 83.5 | 82.4 | 0.530 |
| % who are confident that their patients are able to manage care when discharged | 50.1 | 50.6 | 0.836 |
| % who are confident that management will act to resolve problems in patient care that they report | 45.9 | 48.7 | 0.241 |
| % who give a good overall grade on patient safety (A or B grade) | 69.9 | 65.9 | 0.079 |
| Patients assigned to you on your most recent shift [mean (SD)] | 4.5 (2.4) | 4.1 (2.1) | 0.008 |

The main survey sample results reported are data from nurses in the 3 states in which the nonresponse survey was also conducted.

P values are based on likelihood ratio χ2 tests and 1-way analysis of variance. The subgroup of respondents employed in hospitals as direct care staff nurses is limited to nurses who cared for ≤ 15 patients on their last shift.

TABLE 3.

Comparison of Nurse Responses Among Those Employed in Hospitals as Direct Care Staff Nurses From the Main Survey and the Nonrespondents Survey, by State

| Survey item | 2016 CA main survey (N = 5501) | 2016 CA nonrespondent survey (N = 200) | CA P | 2016 PA main survey (N = 4038) | 2016 PA nonrespondent survey (N = 94) | PA P | 2016 FL main survey (N = 4093) | 2016 FL nonrespondent survey (N = 137) | FL P |
|---|---|---|---|---|---|---|---|---|---|
| Nurse demographics | | | | | | | | | |
| Male (%) | 10.6 | 18.0 | 0.002 | 7.8 | 14.9 | 0.022 | 9.9 | 14.6 | 0.092 |
| Age [mean (SD)] | 46.4 (12.2) | 45.3 (11.0) | 0.234 | 45.6 (12.7) | 41.3 (11.9) | 0.001 | 46.1 (12.6) | 41.7 (11.5) | < 0.001 |
| White, race (%) | 57.8 | 46.0 | 0.001 | 91.6 | 88.3 | 0.287 | 75.4 | 61.3 | < 0.001 |
| Hispanic/Latino (%) | 9.8 | 11.6 | 0.414 | 1.4 | 1.1 | 0.819 | 10.7 | 19.3 | 0.004 |
| BSN or higher (%) | 62.2 | 61.0 | 0.734 | 57.3 | 62.8 | 0.285 | 50.4 | 60.6 | 0.018 |
| Years as registered nurse [mean (SD)] | 17.6 (12.5) | 17.1 (10.9) | 0.555 | 18.6 (13.0) | 14.1 (10.8) | 0.001 | 16.6 (12.8) | 13.3 (12.3) | 0.003 |
| Hospital characteristics as reported by nurses | | | | | | | | | |
| % satisfied with job | 84.1 | 81.0 | 0.252 | 73.8 | 73.4 | 0.932 | 76.4 | 80.3 | 0.281 |
| % who rate work environment favorably (single item) | 68.6 | 63.5 | 0.135 | 62.9 | 62.8 | 0.978 | 66.4 | 68.6 | 0.584 |
| 14-item work environment (1 = best to 4 = poorest) [mean (SD)] | 2.2 (0.6) | 2.2 (0.6) | 0.276 | 2.3 (0.6) | 2.3 (0.6) | 0.968 | 2.2 (0.6) | 2.2 (0.6) | 0.812 |
| % who rate quality of nursing care delivered to patients favorably | 86.4 | 81.0 | 0.036 | 86.4 | 80.9 | 0.142 | 84.1 | 80.3 | 0.246 |
| % who would recommend where they work to family or friends needing health care | 84.4 | 84.5 | 0.976 | 84.0 | 83.0 | 0.786 | 81.8 | 78.8 | 0.386 |
| % who are confident that their patients are able to manage care when discharged | 52.0 | 50.0 | 0.573 | 47.6 | 48.9 | 0.794 | 49.9 | 52.6 | 0.543 |
| % who are confident that management will act to resolve problems in patient care that they report | 48.9 | 54.5 | 0.118 | 41.1 | 41.5 | 0.941 | 46.5 | 45.3 | 0.775 |
| % who give a good overall grade on patient safety (A or B grade) | 72.1 | 66.0 | 0.064 | 68.0 | 57.5 | 0.034 | 68.7 | 71.5 | 0.482 |
| Patients assigned to you on your most recent shift [mean (SD)] | 4.0 (2.2) | 3.8 (1.9) | 0.169 | 4.7 (2.5) | 4.2 (2.2) | 0.054 | 4.7 (2.4) | 4.6 (2.1) | 0.402 |

This analysis includes nurse respondents employed in hospitals as direct care staff nurses caring for ≤ 15 patients on their last shift. The main survey sample results reported are data from nurses in the 3 states in which the nonresponse survey was also conducted.

CA indicates California; FL, Florida; PA, Pennsylvania.

Some 52,510 registered nurses responded to the main survey and 1168 responded to the nonrespondent survey (Table 2). Nonrespondents were significantly more likely to be employed in health care (85.0% vs. 72.6%, P < 0.001) and less likely to be retired (6.5% vs. 17.3%, P < 0.001). Among nurses employed in health care, setting of employment did not differ significantly between nonrespondents and respondents, apart from the share not reporting a setting. Most respondents employed in health care worked in hospitals (62.4% of main survey respondents; 64.0% of nonrespondents).

Among direct care staff nurses employed in hospitals, demographics differed significantly between the 2 sample groups. Nonrespondents were more likely to be male, younger, nonwhite, and Hispanic/Latino, and had fewer years of nursing experience. No significant difference was observed between the 2 groups in educational attainment.

Next, we examined whether the 2 groups of nurses differed in their reports of hospital quality and performance. Respondents and nonrespondents provided similar ratings on most hospital measures, including job satisfaction, work environment, the likelihood of recommending their hospital to family/friends, confidence in patients’ ability to manage care when discharged, confidence in management resolving problems in patient care, and overall grade on patient safety. Nonrespondents were less likely to give a favorable rating of the quality of nursing care in their hospital (80.7% vs. 85.7%, P = 0.005) and reported caring for fewer patients on their most recent shift (4.1 vs. 4.5, P = 0.008).

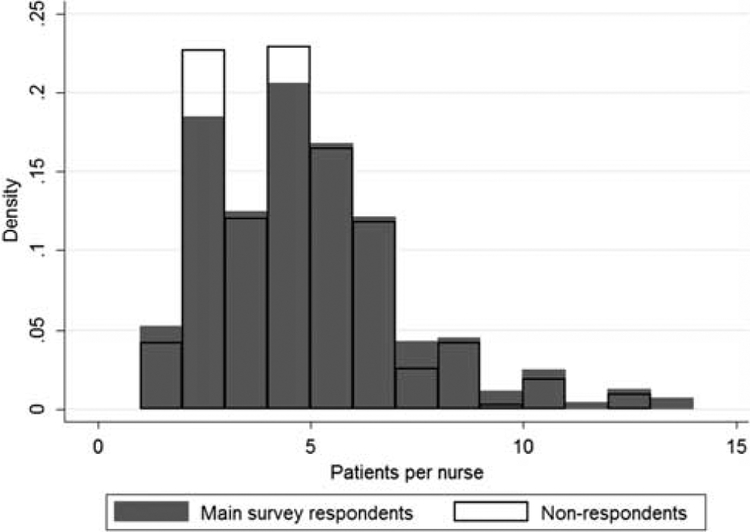

When we stratified the results by state to assess the extent to which the primary variables of interest were confounded by nurses’ geographic location of employment, we found large differences across states among both respondents and nonrespondents; within states, however, the 2 samples were largely similar (Table 3). Respondents and nonrespondents in California and Pennsylvania had similar levels of education, whereas nonrespondents in Florida were significantly more likely to have a BSN degree or higher (60.6% vs. 50.4%, P = 0.018). Within each state, the samples did not differ significantly on job satisfaction, ratings of the work environment, the likelihood of recommending their hospital to family/friends, confidence in patients’ ability to manage care when discharged, or confidence in management resolving problems in patient care. Nonrespondents in California were less likely to rate the quality of nursing care favorably (81.0% vs. 86.4%, P = 0.036), and those in Pennsylvania were less likely to give a good overall grade on patient safety (57.5% vs. 68.0%, P = 0.034). Unlike the aggregated data (Table 2), in which nonrespondents appear to care for fewer patients per shift, the within-state staffing means show no significant differences between the nonrespondent and main survey samples. Even when patient-per-nurse staffing is aggregated across the 3 states, the difference between respondent and nonrespondent reports is modest (Fig. 1).

FIGURE 1.

Distribution of the number of patients per nurse among main survey respondents and nonrespondents. This figure includes nurse respondents employed in hospitals as direct care staff nurses who cared for ≤ 15 patients on their last shift.

DISCUSSION

Using a novel method of surveying organizations, by directly sampling front-line providers nested within them, we demonstrate how organizational representativeness and minimal nonresponse bias can be achieved and evaluated. Our survey approach avoided self-selection of organizations by surveying front-line workers (in our case, nurses within hospitals) and reaching them outside their place of employment (in our case, by sending surveys to their home addresses). We obtained data on quality and safety from 96.7% of hospitals in our sampling frame, giving us confidence in the representativeness of our sample. Representativeness was greater among larger hospitals (99.7% representation among hospitals with > 250 beds). Our approach disadvantaged smaller hospitals, which employ fewer nurses. Among the smallest hospitals, those with ≤ 100 beds, 88.6% had at least 1 nurse respondent, 57.7% had at least 5 respondents, and 26.3% had at least 10 respondents.

Our use of multiple informants enhanced the reliability of our estimates, an improvement over more conventional approaches, which typically rely on a single key informant. The main survey yielded an average of 32 nurse responses per hospital, sufficient to produce reliable estimates of quality and safety measures.

Survey data were collected at the nurse-level, allowing us to calculate a nurse response rate, which, in the main survey, was 26%. However, because our research interests are principally in studying hospitals, and the patients receiving care within those hospitals, the nurse response rate holds little weight in determining the sample’s representativeness of our populations of interest. In summary, response rates should not be interpreted as a summary measure of a survey’s representativeness.2,23

However, response rates are not entirely meaningless. They provide insight into the potential for survey response bias—and in particular, nonresponse bias. In the case of our nurse survey, we wondered whether the respondents differed from the nonrespondents in their reports of hospital quality and performance. Our double-sampling approach, which included an intensive resurvey of nurses and yielded close to a 90% response rate, revealed no statistically significant differences on the majority of measures, suggesting no evidence of nonresponse bias at the nurse-level.

Double-sampling is considered the “gold standard” for evaluating the impact of nonresponse12,24–26; however, in some cases a double-sampling approach is not possible because of time and resource constraints. In these situations, researchers can employ alternatives. For example, differences in patterns of responses among early, mid, and late respondents can be analyzed. No differences between the “waves” of respondents indicate a low likelihood of nonresponse bias, assuming that late respondents share characteristics and attitudes with nonrespondents. If differences between waves are observed, the mean of the last wave can stand in for the nonrespondent group’s mean in the weighted-mean formula (Equation 1) to estimate the population mean.
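The wave-based alternative can be sketched the same way. Under the assumption stated above that late respondents resemble nonrespondents, the last wave’s mean replaces the unobserved nonrespondent mean in the weighted mean. The function names and toy waves are hypothetical, and the resulting estimate is only as good as that untestable assumption.

```python
from statistics import mean


def wave_means(waves):
    """Mean item value within each response wave (early, mid, late, ...)."""
    return [mean(w) for w in waves]


def late_wave_proxy_estimate(waves, response_rate):
    """Plug the last wave's mean in for the unobserved nonrespondent mean:
    W_r * ybar_r + (1 - W_r) * ybar_last_wave.

    waves:         list of lists of item values, ordered by time of response
    response_rate: W_r, the share of the sample that responded in any wave
    Assumes (hypothetically) that late respondents resemble nonrespondents.
    """
    if not 0 <= response_rate <= 1:
        raise ValueError("response_rate must be a proportion")
    respondents = [x for w in waves for x in w]
    ybar_r = mean(respondents)
    ybar_m_proxy = mean(waves[-1])
    return response_rate * ybar_r + (1 - response_rate) * ybar_m_proxy
```

With waves `[[1, 1, 1, 1], [1, 0], [0, 0]]` coded 1 for a favorable rating, the wave means fall from 1 to 0.5 to 0, a trend that would flag possible nonresponse bias; with a response rate of 0.4, the proxy estimate is 0.25, well below the respondent-only mean of 0.625.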

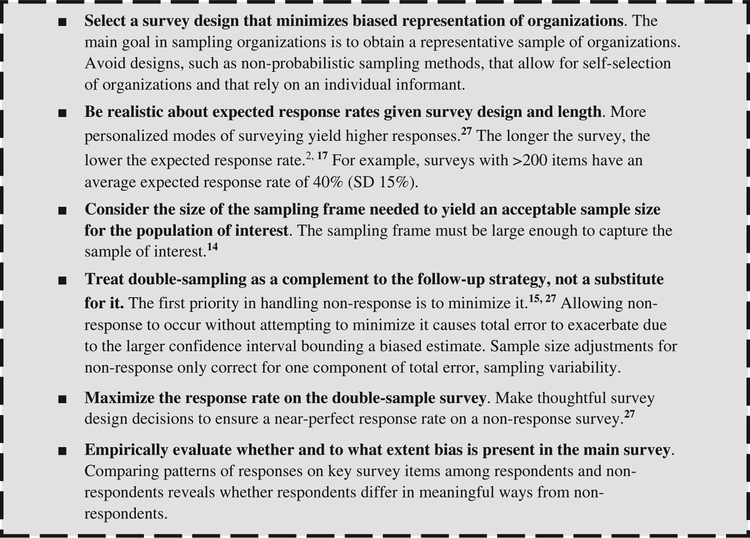

Finally, double-sampling should be used as a complementary strategy for dealing with nonresponse. The first priority in handling nonresponse is to minimize it through thoughtful sampling design decisions.15,27 Reducing nonresponse depends on the target population, the data collection method, and the funding that can be dedicated to data collection. As with most aspects of sampling design, strategies to minimize nonresponse should be developed before the design is finalized. It may be worthwhile to favor a smaller sample with minimal nonresponse over a larger sample with substantial nonresponse. In our case, we chose a 30% random sample of nurses to conserve funds for multiple waves of follow-up and for intensive outreach to a subsequent random sample of nonrespondents. To summarize sampling and survey method strategies to increase representativeness and reduce nonresponse in surveys of organizations, we provide several practical recommendations (Fig. 2).

FIGURE 2.

Recommendations for sampling and survey methods to increase representativeness and reduce nonresponse bias.

CONCLUSIONS

Our primary goal in surveying nurses was to obtain detailed information, not available from other sources, on a large, representative sample of hospitals. We conclude that it is feasible to use organization members as informants through direct surveys rather than asking permission from large numbers of organizations, which can be labor intensive and time-consuming and inevitably results in a nonrepresentative sample. A double-sampling approach that resurveys nonrespondents is a feasible strategy for evaluating nonresponse bias at the survey respondent level. Our novel approach to surveying organizations yielded a high degree of representativeness in a large population of hospitals compared with traditional methods of sampling organizations directly.

Acknowledgments

Funding for this study came from the National Institutes of Health (R01NR014855, L.H.A.; P2CHD044964, H.L.S.; R00-HS022406, O.F.J.) and the Robert Wood Johnson Foundation (71654, L.H.A.). The funding organizations did not participate in the research. The content of this article is solely the responsibility of the authors.

Footnotes

These data were presented on October 26, 2018 in Lyon, France at the 10th French-speaking Colloquium on Polls.

The authors declare no conflict of interest.

REFERENCES

- 1. Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol. 1997;50:1129–1136.

- 2. National Research Council (NRC). Tourangeau R, Plewes TJ, eds. Nonresponse in social science surveys: a research agenda. Panel on a Research Agenda for the Future of Social Science Data Collection, Committee on National Statistics, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies Press; 2013:1–166.

- 3. Spetz J, Cimiotti JP, Brunell ML. Improving collection and use of interprofessional health workforce data: progress and peril. Nurs Outlook. 2016;64:377–384.

- 4. Nathan G, Holt D. The effect of survey design on regression analysis. J R Stat Soc Ser B. 1980;42:377–386.

- 5. Holbrook A, Krosnick J, Pfent A. The causes and consequences of response rates in surveys by the news media and government contractor survey research firms. In: Lepkowski J, Tucker C, Brick JM, De Leeuw E, Japec L, Lavrakas P, Link M, Sangster R, eds. Advances in Telephone Survey Methodology. New York, NY: Wiley; 2008:499–678.

- 6. Aiken M, Hage J. Organizational interdependence and intra-organizational structure. Am Sociol Rev. 1968;6:912–930.

- 7. Marsden PV, Landon BE, Wilson IB, et al. The reliability of survey assessments of characteristics of medical clinics. Health Serv Res. 2005;41:265–283.

- 8. Tourangeau AE, Doran DM, McGillis Hall LM, et al. Impact of hospital nursing care on 30-day mortality for acute medical patients. J Adv Nurs. 2007;57:32–44.

- 9. Cleary PD, Gross CP, Zaslavsky AM, et al. Multilevel interventions: study design and analysis issues. J Natl Cancer Inst Monogr. 2012;44:49–55.

- 10. Coffman JM, Seago JA, Spetz J. Minimum nurse-to-patient ratios in acute care hospitals in California. Health Aff. 2002;21:53–64.

- 11. Meterko M, Mohr DC, Young GJ. Teamwork culture and patient satisfaction in hospitals. Med Care. 2004;42:492–498.

- 12. Young GJ, Meterko M, Desai KR. Patient satisfaction with hospital care: effects of demographic and institutional characteristics. Med Care. 2000;38:325–334.

- 13. Aiken LH, Sermeus W, Van den Heede K, et al. Patient safety, satisfaction, and quality of hospital care: cross sectional surveys of nurses and patients in 12 countries in Europe and the United States. BMJ. 2012;344:e1717.

- 14. Dutka S, Frankel LR. Measuring response error. J Advertising Res. 1997;37:33–40.

- 15. Dillman DA, Smyth JD, Christian LM. Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method. 4th ed. New York, NY: John Wiley & Sons Inc.; 2014.

- 16. Yu J, Cooper H. A quantitative review of research design effects on response rates to questionnaires. J Mark Res. 1983;20:36–44.

- 17. Sloane DM, Smith HL, McHugh MD, et al. Effect of changes in hospital nursing resources on improvements in patient safety and quality of care. Med Care. 2018;56:1001–1008.

- 18. The American Association for Public Opinion Research (AAPOR). Standard definitions: final dispositions of case codes and outcome rates for surveys, 8th edition; 2015. Available at: www.esomar.org/uploads/public/knowledge-and-standards/codes-and-guidelines/AAPOR_Standard-Definitions2015_8theditionwithchanges_April2015_logo.pdf. Accessed July 16, 2016.

- 19. Aiken LH, Cimiotti JP, Sloane DM, et al. The effects of nurse staffing and nurse education on patient deaths in hospitals with different nurse work environments. Med Care. 2011;49:1047–1053.

- 20. Kalton G. Introduction to Survey Sampling. New York, NY: Sage; 1983.

- 21. Smith HL. A Double Sample to Minimize Bias due to Non-response in a Mail Survey. Philadelphia, PA: Population Studies Center, University of Pennsylvania; 2009.

- 22. Levy P, Lemeshow S. Sampling of Populations: Methods and Applications. New York, NY: John Wiley & Sons, Inc; 1999.

- 23. Peytchev A. Consequences of survey nonresponse. Ann Am Acad Pol Soc Sci. 2013;645:88–111.

- 24. Hansen M, Hurwitz W. The problem of non-response in sample surveys. J Am Stat Assoc. 1946;41:517–529.

- 25. Elliott MR, Little RJ, Lewitzky S. Subsampling callbacks to improve survey efficiency. J Am Stat Assoc. 2000;95:730–738.

- 26. Valliant R, Dever JA, Kreuter F. Practical Tools for Designing and Weighting Survey Samples. New York, NY: Springer; 2013.

- 27. Henry GT. Practical Sampling. Vol 21. New York, NY: Sage; 1990.