Abstract

Phase-sensitive coherent imaging exploits changes in the phases of backscattered light to observe tiny alterations of scattering structures or variations of the refractive index. But moving scatterers or a fluctuating refractive index decorrelate the phases and speckle patterns in the images. It is generally believed that once the speckle pattern has changed, the phases are scrambled and any meaningful phase difference to the original pattern is removed. As a consequence, diffusion and tissue motion that cannot be resolved prevent phase-sensitive imaging of biological specimens. Here, we show that a phase comparison between decorrelated speckle patterns is still possible by utilizing a series of images acquired during decorrelation. The resulting evaluation scheme is mathematically equivalent to methods for astronomic imaging through the turbulent sky by speckle interferometry. We thus adapt the idea of speckle interferometry to phase-sensitive imaging in biological tissues and demonstrate its efficacy for simulated data and imaging of photoreceptor activity with phase-sensitive optical coherence tomography. We believe the described methods can be applied to many imaging modalities that use phase values for interferometry.

Subject terms: Microscopy, Applied optics, Imaging and sensing

Introduction

Phase-sensitive, interferometric imaging measures small changes in the time-of-flight of a light wave by detecting changes in its phase. But in many applications, statistically varying optical properties of the scattering structure randomize the phase of the backscattered light, resulting in a speckle pattern with random intensity and phase1. As a consequence, we can only extract meaningful phase differences from images with identical or at least almost identical speckle patterns2. If the detected wave’s speckle pattern changes over time, it inevitably impedes phase-sensitive imaging3,4. For example, holographic interferometry and electronic speckle pattern interferometry (ESPI) compare at least two states of backscattered light acquired at different times, and they can only be applied if the respective speckle patterns are still correlated2–5. While some techniques, such as dynamic light scattering6,7 or optical coherence angiography8, make use of these decorrelations, other imaging techniques are severely hindered by them.

Among the most important effects that decorrelate speckles with time are random motions and changes of the optical path length on a scale below the resolution, e.g., by diffusion6,7. These effects are impossible to prevent. But bulk sample motion can also cause the phase evaluation to compare different parts of the same speckle pattern. While in 3D imaging this effect of bulk motion can often be corrected to some extent by co-registration and suitable algorithms, in 2D sectional imaging we lack the data to correct it, as the acquired slices of the specimen change if the specimen moves perpendicularly to the imaging plane.

We recently demonstrated imaging of the activity of photoreceptors and neurons by phase-sensitive full-field swept-source optical coherence tomography (FF-SS-OCT)9,10. The optical path length of neurons and photoreceptors in the human retina changes by a few nanometers upon activation, e.g., after white light stimulation, which can be used as functional contrast for those cellular structures11–13. While most OCT systems lack the axial resolution required to resolve this, phases of the OCT signal encode small changes of the optical path length. Using the phases, FF-SS-OCT successfully measured nanometer changes in the optical path length of neurons and receptors due to activation over a few seconds. However, we could neither increase the measurement time nor determine tissue changes in single cross-sectional scans (B-scans). In both cases, speckle patterns changed after a few seconds due to diffusion, bulk tissue motion, or tissue deformations, and the phase information was lost, ultimately limiting the applicability of the method. Apart from this application, phase evaluation beyond the speckle decorrelation time can be of importance in other fields, such as optical coherence elastography (OCE)14, for which Chin et al. noticed the importance of phase correlation15. Finally, countless applications of OCT make use of the phase16–19 and might benefit from better phase evaluation schemes.

In this paper, we reconstruct phase changes over times significantly longer than the decorrelation time of the corresponding speckle patterns. The idea is to calculate phase differences in a series of consecutive images over small time differences (short-time phase differences) that still show sufficient correlation. If this is done for several measurements with different speckle patterns, averaging of these independent measurements will cancel the contributions of the disturbing phase. Integrating all these phase differences then yields the real time evolution of the phase. By averaging the phase in multiple speckles to obtain a single phase value, the real phase change can be obtained beyond the correlation time of the speckles. In essence, we combine the information from multiple speckles, each of which carries information on phase changes over a certain time (see Fig. 1a).

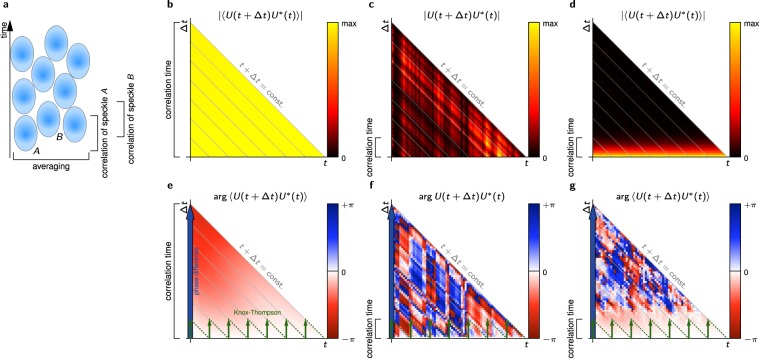

Figure 1.

(a) On average, each speckle (schematically represented by the blue ellipses) carries valid phase information only for the correlation time of the speckle pattern. Assuming that all speckles in the averaged area are subject to a common phase change in addition to random uncorrelated phase changes, one can use the phase of speckle A as long as it is valid and then continue with the phase of speckle B. Using multiple speckles, phase information for times significantly exceeding the correlation time can be extracted. (b,e) Exemplary cross-spectrum magnitude and phase assuming infinite correlation time. Total phase changes can either be computed directly (blue arrow) or using the Knox-Thompson path (green arrows). (c,f) Magnitude and phase of the cross-spectrum without averaging; neither method can extract the phase beyond the correlation time. (d,g) Magnitude and phase of the ensemble-averaged cross-spectrum. Direct phase differences (blue) cannot be used for phase extraction, but the Knox-Thompson method (green) can be applied since phase values for small Δt are valid.

Merely computing the phase differences of successive frames and summing them, as is commonly done for phase unwrapping16–20, accumulates the phase noise; apart from the unwrapping, it is mathematically equivalent to the direct computation of phase differences. Without averaging, calculating short-time phase differences will not yield any information about phase differences of uncorrelated speckle patterns. The averaging of short-time phase differences before integration is the essential step, as it effectively cancels the phase noise.

In the 1970s and 1980s the same idea, known as speckle interferometry, was originally used in astronomy for successful imaging through the turbulent atmosphere with diffraction limited resolution1,21–28. Short time exposures were used to reconstruct the missing phase of the Fourier transform of diffraction limited undisturbed images: By utilizing a large number of short-exposure images with different disturbances, true and undisturbed phase differences for small distances in the aperture plane could be computed. From these phase differences, the complete phase was reconstructed by integration. We will frame the similarities and the mathematical analogy between the two methods, which allows us to benefit from advances in astronomy for interferometric measurements during coherent imaging.

Methods that allow phase evaluation beyond the correlation time have also been developed for synthetic aperture radar (SAR) interferometry (InSAR)29,30. In InSAR, phase evaluation is used to monitor ground elevation, but small changes on the ground or turbulence in the sky interfere with an evaluation of the phase29 similar to interferometric measurements in biological imaging. For InSAR, two techniques have been developed which selectively evaluate the phase from only minimally affected images or structures. The first technique is referred to as small baseline subset technique (SBAS)31. It selects optimal images from a time series to compare phase values based on good correlation. The other method only compares phases of single selected scatterers that maintain good correlation over long times, so called permanent scatterers32.

In the first part of this paper, we lay down the basics and establish the commonality between coherent phase-sensitive imaging and astronomic speckle interferometry. Afterwards, we demonstrate the efficacy of the resulting methods by simulating simple phase resolved images in backscattering geometry and evaluating these with the proposed approach. Finally, we apply our method to in vivo data of phase-sensitive optical coherence tomography.

Theory

Mathematical formulation of the problem

We assume coherent imaging of a physical system in which a deterministic change of the optical path length, e.g., swelling or shrinking of cells, is superimposed by random changes. These may be caused by diffusion, fluctuations of the refractive index, or rapid uncorrelated micro motion. The complex amplitude in each pixel of a coherently acquired and focused image can be represented as a sum of (random) phasors (complex values), where each summand comprises amplitude and phase. Such a coherently acquired field U(x,t) has contributions from the random phases ϕi(x,t) and a systematic phase ϕ(x,t). U(x,t) may then be written as

$$U(x,t) = \sum_i A_i \exp\!\big(i\,[\phi(x,t) + \phi_i(x,t)]\big) \tag{1}$$

where Ai represents the unknown amplitude of each scatterer, and x and t denote location and time, respectively. Depending on the imaging scenario, U might not depend on the location x at all or x might be up to three-dimensional as in tomographic imaging.

We can now separate U into its systematic phase contribution U0 and a random modifier H, which yields

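$$U(x,t) = U_0(x,t)\,H(x,t), \qquad U_0(x,t) = e^{i\phi(x,t)}, \qquad H(x,t) = \sum_i A_i\, e^{i\phi_i(x,t)} \tag{2}$$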

The modifier H covers all effects that alter the speckle patterns over time, e.g., changing phase, changing amplitude, and scatterers moving out of or into the detection area. In this form, we have no way to distinguish H from U0. We can, however, compute the ensemble average by

$$\langle U(x,t) \rangle = U_0(x,t)\,\langle H(x,t) \rangle \tag{3}$$

when assuming that U0 (though not H) is constant over the averaged ensemble. The ensemble could consist of repeated measurements or certain pixels from a volume, in which U0 is constant. In this averaged expression, we can now obtain the time evolution of U0 for times where 〈H〉 remains approximately constant, i.e., where the contribution of the random phases is small. For t exceeding the correlation time of 〈H〉, however, the decorrelation of 〈H〉 still prevents us from computing changes in the systematic phase U0.
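The decomposition into a systematic factor and a random modifier can be illustrated with a minimal sketch for a single pixel. The function name `random_phasor_field` and all numerical values are hypothetical and chosen only for illustration; the sketch assumes the random-phasor model described above, with a unit-magnitude systematic factor.

```python
import cmath
import random

def random_phasor_field(phi_sys, amplitudes, random_phases):
    """Return (U, U0, H) for one pixel of the random-phasor model (illustrative only)."""
    U0 = cmath.exp(1j * phi_sys)  # systematic phase factor
    # Random modifier: sum of phasors with unknown amplitudes and random phases
    H = sum(a * cmath.exp(1j * p) for a, p in zip(amplitudes, random_phases))
    return U0 * H, U0, H

rng = random.Random(0)  # fixed seed so the sketch is reproducible
amps = [rng.random() for _ in range(50)]
phases = [rng.uniform(-cmath.pi, cmath.pi) for _ in range(50)]
U, U0, H = random_phasor_field(0.3, amps, phases)

# The measured phase arg(U) equals the systematic phase plus arg(H) (modulo 2*pi),
# so a single realisation cannot separate the two contributions.
print(cmath.phase(U), 0.3 + cmath.phase(H))
```

In a single realisation the two printed values agree up to a multiple of 2π, which is why H cannot be distinguished from U0 without further averaging.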

Solving the phase problem in astronomic speckle interferometry

The resolution of large ground-based telescopes is limited by atmospheric turbulence, which modifies the phase of the optical transfer function (OTF) and causes time-varying speckles in the image. During a long exposure these speckle patterns average out to a blurred image. Speckle interferometry is a way to obtain one diffraction limited image from several short exposure images. In each of these images the random atmospheric turbulence is constant and each image essentially contains one speckle pattern1. If the actual diffraction limited image of the object is I0(x) and has a Fourier spectrum $\tilde{I}_0(k)$, the Fourier spectra $\tilde{I}(k)$ of the short exposure images are given by

$$\tilde{I}(k) = \tilde{I}_0(k)\,\tilde{H}(k) \tag{4}$$

where $\tilde{H}(k)$ is the short exposure OTF1. $\tilde{H}(k)$ represents the disturbing effect of the atmosphere, changes randomly in each acquired image, and causes speckle noise in each image I(x). A single short exposure image I(x) will thus not yield the object information because phase and magnitude of $\tilde{H}(k)$ are not known. However, the magnitude of the spectrum $\tilde{I}_0(k)$ of the diffraction limited image is obtained by averaging the squared magnitude of all short exposure spectra $\tilde{I}(k)$,

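$$|\tilde{I}_0(k)|^2 = \frac{\langle |\tilde{I}(k)|^2 \rangle}{\langle |\tilde{H}(k)|^2 \rangle} \tag{5}$$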

since the squared short exposure OTF averaged over all measurements, $\langle |\tilde{H}(k)|^2 \rangle$, is larger than zero and can be computed on the basis of known statistical properties of $\tilde{H}(k)$1. But the magnitude of the Fourier spectrum only allows computation of the autocorrelation of I0. To obtain the full diffraction limited image, the phase of $\tilde{I}_0(k)$ is required too. This phase is contained in the Fourier transforms of the short time exposures, but it is corrupted by a random phase contribution of the atmospheric turbulence in the same way as the systematic phase in coherent imaging is corrupted by random phase noise.

Hence, retrieving diffraction limited images by speckle interferometry faces the same problem as retrieving the temporal phase evolution in coherent imaging. Only over small spatial frequency distances in the Fourier plane does the phase difference in a spectrum correspond to the phase difference in the spectrum of the diffraction limited image. Over larger distances, the phase information is lost due to atmospheric turbulence. The similarity of the problems is seen in the analogy of Eq. (4) with Eq. (2), where $\tilde{H}$ and $\tilde{I}$ correspond to H and U, respectively. However, while the astronomic problem has a two-dimensional vector k of spatial frequencies as the independent variable, the phase-sensitive imaging problem has only the time t. Both use a series of measurements in which only phase information over small Δk or Δt is contained, respectively. We will show that by analyzing a large number of measurements, in which the corrupting phase contribution changes, the full phase can be reconstructed.

Phase information in the cross-spectrum

In astronomy, it was Knox and Thompson who solved the recovery of phases from multiple, statistically varying images21 with short exposure times using the so-called cross-spectrum. Later, another approach, called the triple-correlation or bispectrum technique, further improved the achieved results22. One major advantage of the bispectrum over the cross-spectrum technique for astronomic imaging is that it is only sensitive to the non-linear phase contributions of the transfer function $\tilde{H}(k)$, since shifted images yield the same bispectrum and do not pollute the obtained phases (see, for example, ref. 23 for details). However, for phase-sensitive imaging, the linear part of the phase evolution of U0 is generally of interest and thus the bispectrum technique is not applicable here.

In the following, we apply the algorithm of Knox and Thompson to recover the phase beyond the speckle correlation time in coherent imaging. For convenience, we will retain the term cross-spectrum, even though in our scenario it is evaluated in the time domain as a function of t rather than in the (spatial) frequency domain as a function of k.

We define the cross-spectrum CH of the phase disturbing speckle modulation H by

$$C_H(x,t,\Delta t) = H(x,t)\,H^*(x,t+\Delta t).$$

It is subject to speckle noise since H itself merely contains speckle (Fig. 1c,f); but its ensemble average

$$\langle C_H(x,t,\Delta t) \rangle = \langle H(x,t)\,H^*(x,t+\Delta t) \rangle \tag{6}$$

has in general a magnitude larger than 0, and the speckles of H are averaged out, at least for small Δt. Most importantly, it is to a good approximation real-valued, i.e., its phase is zero, as long as Δt is within the correlation time of H. This real-valuedness was previously shown for the corresponding cross-spectrum in astronomic imaging1,27. For phase imaging, we show in the Methods section that 〈CH〉 is real-valued for small Δt, for which the autocorrelation is still strong. If Δt is larger than the correlation time, H(x,t) and H*(x,t + Δt) become statistically independent, the phase of 〈CH〉 gets scrambled, and CH follows speckle statistics.
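The behaviour of the averaged cross-spectrum of H can be explored with a small toy simulation. The sketch below is not the simulation used in this paper: it assumes, purely for illustration, that the speckle modulation of each ensemble member follows a complex first-order autoregressive process with a frame-to-frame correlation `rho`; the function names and parameter values are hypothetical.

```python
import cmath
import math
import random

def complex_gauss(rng):
    """Circular complex Gaussian sample with unit mean intensity."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)

def simulate_H(n_ensemble, n_frames, rho, rng):
    """Toy speckle modulation: one AR(1) time series per ensemble member (assumption)."""
    series = []
    for _ in range(n_ensemble):
        h = complex_gauss(rng)
        row = [h]
        for _ in range(n_frames - 1):
            # Keep a fraction rho of the old field and add fresh, independent speckle
            h = rho * h + math.sqrt(1.0 - rho * rho) * complex_gauss(rng)
            row.append(h)
        series.append(row)
    return series

def mean_cross_spectrum(series, t, dt):
    """Ensemble average of H(t) * conj(H(t + dt))."""
    return sum(row[t] * row[t + dt].conjugate() for row in series) / len(series)

rng = random.Random(1)  # fixed seed for reproducibility
H = simulate_H(n_ensemble=2000, n_frames=60, rho=0.9, rng=rng)
for dt in (1, 5, 50):
    c = mean_cross_spectrum(H, 0, dt)
    print(dt, abs(c), cmath.phase(c))
```

In this toy model, the averaged cross-spectrum for a single-frame step has a magnitude close to `rho` and a phase close to zero, whereas for time differences far beyond the correlation time its magnitude collapses and its phase becomes arbitrary.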

Now, if we again assume that U0, and thus the product $U_0(x,t)\,U_0^*(x,t+\Delta t)$, is constant over the ensemble, the averaged cross-spectrum of the measurements of U becomes

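$$\langle C_U(x,t,\Delta t) \rangle = \langle U(x,t)\,U^*(x,t+\Delta t) \rangle = U_0(x,t)\,U_0^*(x,t+\Delta t)\,\langle C_H(x,t,\Delta t) \rangle \tag{7}$$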

Knowing that 〈CH〉 is real-valued for small Δt, the phases of 〈CU〉 are determined only by the phases of $U_0(x,t)\,U_0^*(x,t+\Delta t)$. If we find phases of U0 that yield the phases of 〈CU〉 for these small Δt, we obtain the systematic phase ϕ(x,t) we are looking for, as introduced in Eq. (1).

Note that 〈CU(x,t,Δt)〉 is related to the time-autocorrelation of U, except that the averaging is performed over the ensemble instead of over the time t; it is thus a function not only of Δt but also of t. Due to this relation, the cross-spectrum maintains comparably large magnitudes for time differences Δt with large autocorrelation values. The magnitudes and phases of exemplary cross-spectra, in the decorrelation-free scenario and with strong decorrelation, with and without the ensemble averaging (obtained from simulations, see Results and Methods), are shown in Fig. 1b–g. The figures illustrate that 〈CU〉 yields a deterministic increase of the phases for small Δt (Fig. 1g) as long as its magnitude (Fig. 1d, related to the autocorrelation) remains large, but only if the ensemble averaging is performed. It can further be seen that the valid phase differences visible in Fig. 1g are small compared to the phases for large t in Fig. 1e, since they merely represent phase differences for small Δt. Nevertheless, when reconstructing the phase of U0 from the values of 〈CU〉, we need to ensure that only values for (small) Δt with strong autocorrelation are taken into account. This condition coincides with the real-valuedness of 〈CH〉.

Ensemble averaging needs multiple independent measurements, which cannot be performed easily in a real experiment. However, averaging over multiple lateral pixels or averaging over multiple detection apertures can be done from a single experiment and approximates the ensemble average. In our case, we either use an area over which we strive to obtain one mean phase-curve, or we use a Gaussian filter with a width determined as a compromise between spatial resolution and sufficient averaging statistics to obtain images of the phase evolution (see Methods).
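Approximating the ensemble average by a Gaussian-weighted lateral average can be sketched as follows. The example is deliberately reduced to one lateral dimension, and the function names, the truncation of the kernel at three standard deviations, and the border handling are assumptions made for illustration. The point it shows is that the complex cross-spectrum values are averaged, not their wrapped phases.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalised 1D Gaussian weights for offsets -radius ... +radius."""
    w = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    s = sum(w)
    return [v / s for v in w]

def smooth_complex(values, sigma):
    """Gaussian-weighted average of complex cross-spectrum values along one lateral axis."""
    radius = max(1, int(3 * sigma))
    kernel = gaussian_kernel(sigma, radius)
    n = len(values)
    out = []
    for i in range(n):
        acc = 0j
        norm = 0.0
        for k, w in enumerate(kernel):
            j = i + k - radius
            if 0 <= j < n:  # truncate the kernel at the borders and renormalise
                acc += w * values[j]
                norm += w
        out.append(acc / norm)
    return out

# Hypothetical usage: a row of cross-spectrum values sharing one systematic phase of 0.2 rad
row = [complex(math.cos(0.2), math.sin(0.2))] * 16
print(smooth_complex(row, sigma=2.0)[8])
```

A larger `sigma` averages more speckles and therefore suppresses more of the random phase, at the cost of lateral resolution, which is the compromise referred to above.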

Phase retrieval from the cross-spectrum

Having obtained the cross-spectrum 〈CU〉, the systematic phase function U0 needs to be extracted. We assume a given initial phase ϕ(x,t = t0) and evaluate methods to extract the systematic phase evolution ϕ(x,t) from the cross-spectrum: An approach equivalent to computing phase differences directly is given by

$$\phi(x,t) = \phi(x,t_0) - \arg \langle C_U(x,t_0,t-t_0) \rangle \tag{8}$$

for t ≥ t0 (blue arrows in Fig. 1b–g). However, it only yields phases within the correlation time. In astronomy, this approach never works, unless the image was not disturbed by turbulence in the first place. Instead, Knox and Thompson originally demonstrated diffraction limited imaging through the turbulent sky21 by iteratively walking in small increments of Δt (e.g., one time step) through the cross-spectrum, i.e.,

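$$\phi(x,t_0+n\Delta t) = \phi(x,t_0+(n-1)\Delta t) - \arg \langle C_U(x,t_0+(n-1)\Delta t,\Delta t) \rangle \tag{9}$$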

for integer n ≥ 1 with increasing iteration number n (green arrows in Fig. 1b–g). This method uses only phase values well within the correlation time. If 〈CU〉 is valid for the single time steps Δt, i.e., 〈CH〉 is real valued, the iterative formula will yield valid results, even for larger t. However, a single outlier at a time terr, i.e., one false step, ruins all following values for t ≥ terr.
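The difference between the direct evaluation and the iterative Knox-Thompson walk can be made concrete with a minimal sketch. It assumes the sign convention in which the cross-spectrum is the field at time t multiplied by the complex conjugate of the field at t + Δt, and it uses a hypothetical toy speckle model (a complex AR(1) process with frame-to-frame correlation `rho`) rather than the simulation described in the Methods section. All function names are illustrative.

```python
import cmath
import math
import random

def simulate_U(phi, n_ensemble, rho, rng):
    """U_m(t) = exp(i*phi(t)) * H_m(t), with H_m a toy AR(1) speckle process (assumption)."""
    def cg():
        return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)
    fields = []
    for _ in range(n_ensemble):
        h = cg()
        row = []
        for p in phi:
            row.append(cmath.exp(1j * p) * h)
            h = rho * h + math.sqrt(1.0 - rho * rho) * cg()  # speckle for the next frame
        fields.append(row)
    return fields

def avg_cross_spectrum(fields, t, dt):
    """Ensemble average of U(t) * conj(U(t + dt))."""
    return sum(r[t] * r[t + dt].conjugate() for r in fields) / len(fields)

def direct_phase(fields, t):
    """Direct estimate: one long step from frame 0 to frame t (wrapped to (-pi, pi])."""
    return -cmath.phase(avg_cross_spectrum(fields, 0, t))

def knox_thompson_phase(fields, n_frames):
    """Iterative estimate: accumulate the phases of single-frame steps, starting at 0."""
    phi = [0.0]
    for t in range(n_frames - 1):
        phi.append(phi[-1] - cmath.phase(avg_cross_spectrum(fields, t, 1)))
    return phi

rng = random.Random(2)  # fixed seed for reproducibility
truth = [0.05 * t for t in range(100)]  # hypothetical systematic phase in rad
fields = simulate_U(truth, n_ensemble=2000, rho=0.9, rng=rng)
kt = knox_thompson_phase(fields, len(truth))
print("truth:", truth[-1], "Knox-Thompson:", kt[-1], "direct:", direct_phase(fields, 99))
```

Because every step of the iterative walk stays well within the correlation time, its estimate follows the systematic phase over the whole series, while the direct estimate at the last frame is taken from an essentially decorrelated cross-spectrum entry. The sketch uses only single-frame steps and therefore inherits the sensitivity to individual false steps discussed above.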

The influence of these single events can be reduced by taking multiple paths with different Δt when stepping through the cross-spectrum. In astronomy, this is known as the extended Knox-Thompson method23,28. Most of the algorithms for finding the optimal paths have been developed for use with the bispectrum technique24–26, but in general they can be easily transferred to the cross-spectrum. Here, we minimized the sum of weighted squared differences between the measured cross-spectrum and the cross-spectrum resulting from the systematic phase ϕ(x,t). The algorithm is described step-by-step in the Methods section.
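A strongly simplified version of such a weighted fit is sketched below for a single pixel. It is not the implementation described in the Methods section: it assumes that the phases of the averaged cross-spectrum have already been unwrapped and converted to differences ϕ(t + Δt) − ϕ(t), that the weights are given, and that the first phase value is fixed to zero. The function name `fit_phase` and the dictionary layout are hypothetical. The normal equations of the weighted least-squares problem are solved by plain Gaussian elimination.

```python
def fit_phase(diffs, weights, n_frames):
    """Weighted least-squares phase from pairwise phase differences.

    diffs[(t, dt)]   : measured phi(t + dt) - phi(t), assumed to be unwrapped
    weights[(t, dt)] : non-negative quality weight of that entry
    phi(0) is fixed to 0 to remove the unknown global offset.
    """
    n = n_frames - 1  # unknowns phi(1) ... phi(n_frames - 1)
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for (t, dt), d in diffs.items():
        w = weights[(t, dt)]
        i, j = t - 1, t + dt - 1  # matrix indices; i == -1 denotes the fixed phi(0)
        A[j][j] += w
        b[j] += w * d
        if i >= 0:
            A[i][i] += w
            A[i][j] -= w
            A[j][i] -= w
            b[i] -= w * d
    # Gaussian elimination with partial pivoting (requires every frame to be
    # connected to frame 0 through entries with non-zero weight)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    phi = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = b[r] - sum(A[r][c] * phi[c] for c in range(r + 1, n))
        phi[r] = s / A[r][r]
    return [0.0] + phi

# Hypothetical usage: exact differences for steps of 1 to 3 frames, one corrupted entry
truth = [0.02 * t * t for t in range(10)]
diffs = {(t, dt): truth[t + dt] - truth[t]
         for t in range(10) for dt in (1, 2, 3) if t + dt < 10}
weights = {key: 1.0 for key in diffs}
diffs[(4, 1)] += 2.0     # a false single-frame step ...
weights[(4, 1)] = 0.0    # ... that the weights mark as unreliable
print(fit_phase(diffs, weights, 10))
```

In this example the corrupted single-frame step does not propagate into later time points, because the frames on either side of it remain connected through the entries with larger Δt.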

Realization of the algorithm

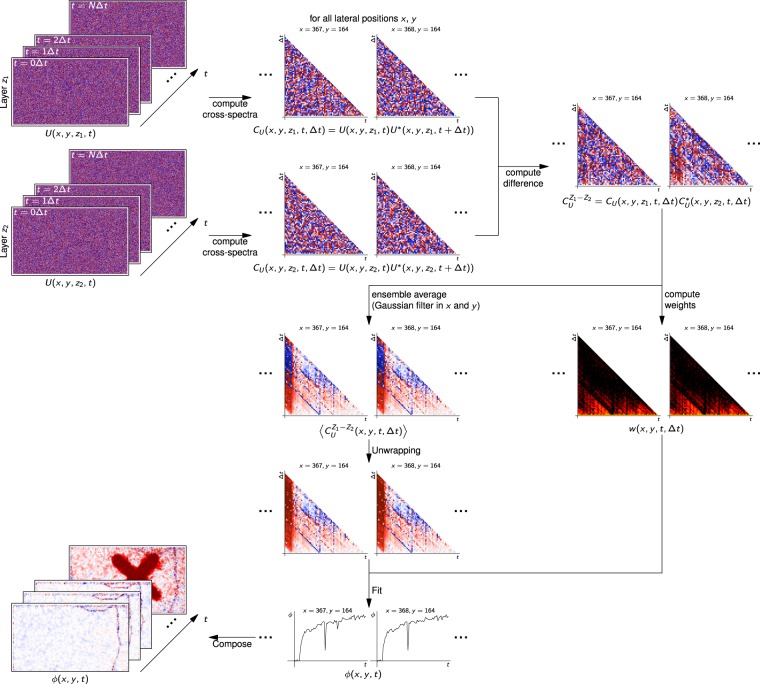

To implement the presented extended Knox-Thompson algorithm for phase-sensitive OCT imaging, we proceeded as follows and as illustrated in Fig. 2. A more detailed description of the required steps is given in the Methods section. We assume that the OCT data are reconstructed and co-registered and that the layers of interest are segmented and denoted z1 and z2, with the volumes being given by U(x,t). For example, these layers can be the inner segment/outer segment junction of the retina and the outer segment tips, respectively, in which case the phase difference will show elongation of the photoreceptor outer segments. We now compute the cross-spectra for all points in the respective depths z1 and z2 by

$$C_U(x,y,z,t,\Delta t) = U(x,y,z,t)\,U^*(x,y,z,t+\Delta t), \qquad z \in \{z_1, z_2\}.$$

To average signals over multiple depths, the complex cross-spectra CU can also be averaged over multiple z values here. Next, the phase difference between the cross-spectra in the two layers of interest is formed by multiplying the cross-spectrum of one layer with the complex conjugate of the cross-spectrum of the other layer.

Utilizing the difference of two layers makes the measurement almost independent of the phase stability of the system (see also Fig. S4 in the Supplementary Information). This step is followed by the actual ensemble average yielding 〈CU〉 as in Eq. (7). To create images, this can be done by applying a Gaussian filter in the x and y directions. In parallel, weights w(x,y,t,Δt) are computed that describe the quality of each entry in all cross-spectra and are required to perform a fit afterwards (see Methods section, Eqs (12) and (13)). After phase unwrapping the ensemble averaged cross-spectra 〈CU〉, a phase function is fitted to the phase of 〈CU〉 for each x and y coordinate, respecting the weights w. This yields the phase ϕ(x,y,t). These values then compose the resulting images. In case only a single curve is to be extracted, the dependence on x and y is dropped in the ensemble averaging step and only one curve is fitted.
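Why the difference of two layers suppresses a phase instability common to both can be seen in a short sketch for a single lateral position. The order of the conjugation (layer z1 multiplied by the conjugate of layer z2) and all names and values are assumptions made for illustration.

```python
import cmath

def cross_spectrum(u, t, dt):
    """C_U(t, dt) = U(t) * conj(U(t + dt)) for the time series u of one pixel."""
    return u[t] * u[t + dt].conjugate()

def layer_difference(u_z1, u_z2, t, dt):
    """Cross-spectrum of layer z1 times the conjugate cross-spectrum of layer z2."""
    return cross_spectrum(u_z1, t, dt) * cross_spectrum(u_z2, t, dt).conjugate()

# Hypothetical data: layer z1 is static, layer z2 gains 0.1 rad per frame, and both
# share an arbitrary frame-to-frame phase jitter of the system.
jitter = [0.0, 1.3, -0.7, 2.1, 0.4]
u_z1 = [cmath.exp(1j * (0.0 * t + jitter[t])) for t in range(5)]
u_z2 = [0.5 * cmath.exp(1j * (0.1 * t + jitter[t])) for t in range(5)]

# The common jitter cancels; only the relative phase change of 0.2 rad over
# two frames remains in the phase of the combined cross-spectrum.
print(cmath.phase(layer_difference(u_z1, u_z2, 1, 2)))
```

The same cancellation applies to any phase term that is identical in both layers at a given time, such as axial bulk motion of the whole sample.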

Figure 2.

Flow diagram of the actual implementation of the extended Knox-Thompson algorithm.

Acquisition and reconstruction parameters

The algorithm depends on several acquisition and reconstruction parameters. Among the obvious ones are the number of values used for (ensemble) averaging and the trade-off between obtainable signal-to-noise ratio, e.g., by increasing the integration time of the data acquisition, and the time distance between time-adjacent images. While it is difficult to give definite answers on how to choose these parameters, rough guidelines can be provided: Accuracy of obtained phases will improve if the averaging size is increased, provided that all averaged data points exhibit the same or at least similar systematic phase changes. In many cases, the practical consideration here will be related to the resolution loss that accompanies more averaging or larger filter sizes.

The choice of integration time is ultimately limited by the requirement that at least temporally adjacent images need to be well correlated. If this is not the case, an increased integration time no longer yields an increased signal-to-noise ratio; rather, phase wash-out diminishes the signals. In our cases, we were always limited by technical constraints that prevented us from decreasing the time between successive measurements and thereby limited the temporal sampling frequency. However, we are fairly certain that our phase signals would still benefit from more frequent measurements. We believe this holds for most applications since some inherent averaging is done when fitting data to the cross-spectrum in the extended Knox-Thompson method. Exceptions might occur when strong phase noise dominates the cross-spectra.

Implementation

We implemented the respective algorithms in C++ using the Clang compiler. Vectorization and OpenMP were used to increase performance where possible. Most importantly, to achieve good performance, all cross-spectra in single-precision complex floating point numbers had to fit in memory at the same time, requiring in our case about 10 GB of RAM. Parts requiring linear algebra were implemented using the Armadillo library33,34.

Results

Simulation

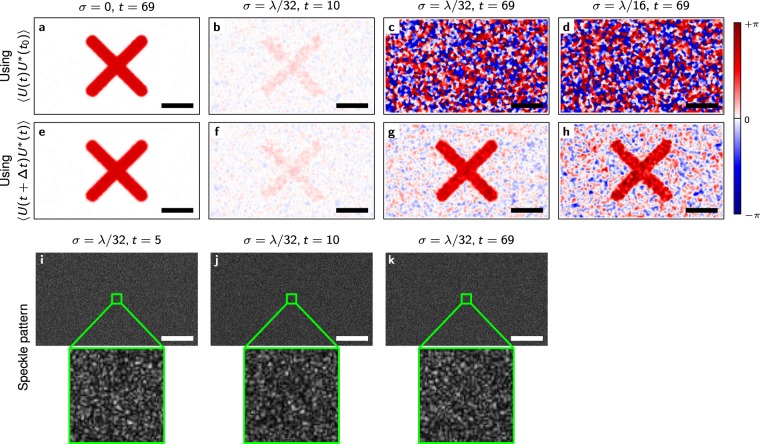

We simulated images of a large number of point scatterers that exhibit a random Gaussian-distributed 3-dimensional motion (with variance σ2) between frames in addition to a common axial motion in a specific “x”-shaped area. A simulation has the advantage that the actual phase change (ground-truth) is well known and that the speckle decorrelation can be tightly controlled. While both methods show indistinguishable results for the noise-free scenario (σ = 0), the simulation in the case of degrading speckle demonstrates the power of the extended Knox-Thompson method. For σ = λ/32, the phase evaluation with a simple phase difference to the first image and the extended Knox-Thompson method yield almost indistinguishable results for the first 10 steps (Fig. 3b,f). After 60 time steps, a simple phase difference to the first image yields only noise (Fig. 3c), while the “x”-shaped pattern is still well visible (Fig. 3g) using the extended Knox-Thompson method. The speckle patterns appear correlated after 10 steps but are completely changed after 69 steps (Fig. 3i–k). Doubling the scatterer motion to σ = λ/16, the “x”-shaped pattern begins to deteriorate after 69 steps even with the Knox-Thompson method. However, the “x” remains visible (Fig. 3h), whereas the phase difference again yields only phase noise (Fig. 3d).

Figure 3.

Phase images and speckle patterns obtained from the simulation. (a–d) Phase evaluated by phase differences for different σ and time steps. (e–h) Phase evaluated using the extended Knox-Thompson methods for the same σ and time steps as shown in (a–d). (i–k) Speckle patterns for σ = λ/32 after a different number of time steps. Scale bars are 500λ.

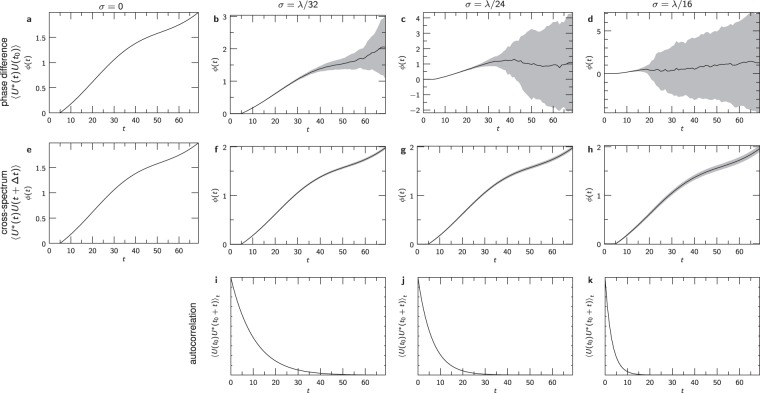

The curves extracted in multiple simulations from the “x” confirm the results of the images and demonstrate the reproducibility of the method. Without random scatterer motion (σ = 0, Figs 3a,e and 4a,e), the phase can be calculated by the phase difference to the first frame yielding the exact curve that was supplied to the simulation. But introducing random Gaussian-distributed motion of the scatterers with as little as σ = λ/32 between frames accumulates huge errors in the phase of the directed motion after 70 frames (Fig. 4b); the mean autocorrelation of the complex fields (Fig. 4i) in this case drops to one half in less than ten frames. Increasing motion amplitude (Fig. 4c,d) has devastating effects on the calculated phase differences. Retrieving the phase with the cross-spectrum based Knox-Thompson method yields the directed motion up to σ = λ/16 (Fig. 4f–h) even though the autocorrelation halves after a few frames (Fig. 4k).
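The reported decorrelation rates can be checked against a rough estimate. The sketch below assumes that only the axial component of the random Gaussian motion changes the phase, that the phase changes by 4πσ/λ per frame in backscattering (double pass), and that the phase increments of successive frames are independent, so that the field autocorrelation after n frames is exp(−n s²/2) with s = 4πσ/λ. Lateral motion and scatterers leaving the resolution volume are ignored. The function name is hypothetical.

```python
import math

def frames_to_half_correlation(sigma_over_lambda):
    """Frames until exp(-n * s**2 / 2) drops to 1/2, with s = 4*pi*sigma/lambda."""
    s = 4.0 * math.pi * sigma_over_lambda  # phase standard deviation per frame (rad)
    return 2.0 * math.log(2.0) / (s * s)

for denom in (32, 24, 16):
    n_half = frames_to_half_correlation(1.0 / denom)
    print(f"sigma = lambda/{denom}: about {n_half:.1f} frames")
```

Under these assumptions the autocorrelation halves after about 9 frames for σ = λ/32 and after about 2 frames for σ = λ/16, in line with the values observed in the simulation.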

Figure 4.

Extracting a single phase curve from simulated images of point scatterers that move between successive measurements by subtracting the phase of the first image (a–d) and extended Knox-Thompson evaluation of phases (e–h) while random 3D motion of the simulated scatterers is increased from 0 to λ/16. (i–k) Autocorrelation of temporal changes of the wave field. Each curve was simulated 100×; the grey areas indicate the standard deviation of the obtained values.

Finally, the dependency on the number of independent samples that are averaged is shown in the Supplementary Information. Fig. S1 shows that smaller averaging numbers increase the fluctuations of the results but do not lead to a general bias. However, for small averaging numbers, the fluctuations increase dramatically. In this simulation, the statistical fluctuations of these averaged and laterally adjacent points should be fully independent. Consequently, these points only share the systematic phase change that we aim to extract.

The resulting curves also show that deviations from the ground truth add up over time, i.e., they increase with time t. Although one might expect that, in particular, the extended Knox-Thompson method would be subject to this, it is also clear from Fig. 4 that the effect with the extended Knox-Thompson method is lower than when computing phase differences. The strength of this overall error depends on multiple factors, such as statistical and independent phase noise, how many values are averaged, and the strength of the (simulated) Brownian motion. Two kinds of effects might contribute to these phase deviations with increasing time t: uncorrelated phase noise that is statistically independent in all acquired or simulated images, and decorrelation effects that alter the phases and the speckle with time, but for which a correlation remains in successive images. The strong discrepancies observed in Fig. 4 result from decorrelating effects rather than from uncorrelated phase noise. The extended Knox-Thompson method cannot improve results if uncorrelated phase noise dominates the images or the data and should in many cases be more vulnerable to this phase noise than the standard phase differences.

To further quantify these results we looked at the average sum of all squared differences of the theoretical curves and the actual curves for all simulated datasets, as well as the difference of the final time-point to the ground-truth curve, which might show accumulated errors (Table 1). As soon as some degree of speckle decorrelation is introduced, the extended Knox-Thompson method outperformed the simple phase differences. For the extended Knox-Thompson method, deviations from the ground truth are orders of magnitude lower than for simple phase differences.

Table 1.

Quantitative comparison of the simulated results (compare Fig. 4).

| σ | Phase differences (mean over all t) | Extended Knox-Thompson (mean over all t) | Phase differences (final t) | Extended Knox-Thompson (final t) |
|---|---|---|---|---|
| σ = 0 | 0.0 ± 0.0 | 0.0 ± 0.0 | 0.0 ± 0.0 | 0.0 ± 0.0 |
| σ = λ/32 | 0.17 ± 0.38 | 0.00038 ± 0.00042 | 2.8 ± 6.6 | 0.0010 ± 0.0012 |
| σ = λ/24 | 2.1 ± 1.3 | 0.00068 ± 0.00082 | 7.3 ± 8.1 | 0.0015 ± 0.0020 |
| σ = λ/16 | 3.7 ± 1.3 | 0.0024 ± 0.0031 | 6.8 ± 7.8 | 0.0054 ± 0.0073 |

Values indicate the mean squared errors over the simulated datasets, taking into account either all values (mean over all t) or only the final values in a time series (final t).

In vivo experiments

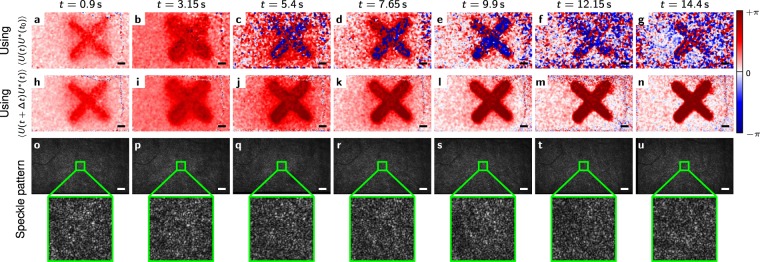

The in vivo experiments confirm the simulation results. Here, we imaged human retina with full-field swept-source optical coherence tomography (FF-SS-OCT, see Methods), which provides three-dimensional tomographic data comprising amplitude and phase, to show the elongation of photoreceptor cells in the living human retina. Phase differences between both ends of the photoreceptor outer segments were evaluated before and during stimulating the photoreceptors with an “x”-shaped light-stimulus, similar to the simulation (see Methods). Figure 5a–g show the extracted phase difference of the 5th frame (the beginning of the stimulus) to various other frames of the data set. The last frame was acquired 14.6 seconds later. After 5 seconds the phase differences are dominated by random phases and the speckle patterns of the compared images changed considerably after this time (Fig. 5o–u). Diffusion and uncorrected tissue motion are likely to be the dominant factors. The phase evaluation using the cross-spectrum is nevertheless able to reconstruct the phase difference and show the elongation of the photoreceptor outer segments (Fig. 5h–n). Even in the non-stimulated area of Fig. 5g, it is clearly seen that the phase difference gives random results, whereas the extended Knox-Thompson approach shows the expected small changes (Fig. 5n). It is also worth mentioning that the phase differences wrap causing the blue colour within the stimulated area in Fig. 5c–g. In contrast to this, the values of the extended Knox-Thompson do not wrap but are clipped at +π in the false-colour representation of Fig. 5j–n.

Figure 5.

Phase differences between the ends of the photoreceptor outer segment in the living human eye at different times compared to the initial phase at t = 0. The entire measurement ended at about t = 14.6 s after initiating a light stimulus. (a–g) Phase difference obtained by directly comparing the pre-stimulus phase with the phase at different times. (h–n) Phase difference obtained from the cross-spectrum as described in the Methods section (by minimizing Eq. (14)). (o–u) Speckle pattern of one of the involved layers before the stimulus and at the respective times. It can clearly be seen that the speckle pattern changes with time. Scale bars are 200 μm.

A second measurement with a brief stimulation lasting 200 ms that was taken over 8 seconds is shown in Fig. S3. This measurement demonstrates the relaxation of the signals. Both methods, simple phase differences and the extended Knox-Thompson method, yield comparable results here, although the extended Knox-Thompson method shows reduced noise in the resulting phase images. Small residual signals remain in either method.

Finally, we also compared phase results for different averaging parameters (Supplementary Document Fig. S2), represented by the size of the Gaussian filter in Eq. (11). The results show that even for small averaging of laterally adjacent pixels the extended Knox-Thompson method outperforms simple phase differences. Contrary to the simulated case, lateral pixels might still show some correlation in their phase fluctuations since the actual motion is unknown and there might be systematic motion that is correlated in regions smaller than the filter size. The results indicate, however, that this does not result in major problems for the extended Knox-Thompson algorithm.

While uncorrelated phase noise is inevitable when using FF-SS-OCT, it does not dominate the image degradation in Fig. 5a–g. Rather, the major improvements visible in the in vivo experiments when using the extended Knox-Thompson method indicate that decorrelating speckle patterns and phases dominate the imaging scenario and that these originate from the specimen, i.e., the retina in these images.

Processing times

Obviously, the extended Knox-Thompson method has additional computational cost compared to simple phase differences. Table 2 lists the execution times required for a single dataset as shown in Figs 3–5, measured on an Intel Core i7 8700 with 32 GB of RAM. Although the extended Knox-Thompson method requires a processing time exceeding that of the phase differences by up to 2 orders of magnitude, processing times remain manageable at under 2 minutes in all tested cases. For our specific imaging scenario, this time is negligible compared to the required pre-processing time (see Methods), comprising reconstruction, motion correction, registration and segmentation, which takes hours for one dataset.

Table 2.

Computational time required to evaluate single datasets of the data shown in Figs 3–5, for both simulated and experimental data.

Conclusion

Building the phase differences of successive frames and then summing or integrating the differences has been used in phase-sensitive imaging16–20. It removes the burden of phase unwrapping and thereby improves results. In contrast, including an average over multiple speckles after computing the phase differences and before integrating those differences again, as done by the Knox-Thompson method in astronomic speckle interferometry, allows phase-sensitive imaging over more than ten times the speckle decorrelation time. To our knowledge, this important step has never been fully realized in phase-sensitive imaging. The resulting method overcomes limitations from decorrelating speckle patterns and recovers phase differences even if the speckles are completely decorrelated. Using extended Knox-Thompson methods yields the optimized results presented here and removes the sensitivity to outliers. The method achieves this by attempting to separate random phase and speckle decorrelations (such as those caused by Brownian motion) from systematic phase changes. This requires assumptions about the data, e.g., about the spatial dependence of the systematic changes. These assumptions influence the results of the algorithm, and inaccurate assumptions introduce errors into the results. However, since simple phase differences do not yield any meaningful results after decorrelation of the speckles, the proposed extended Knox-Thompson method is an improved tool for phase-sensitive imaging in these scenarios.

Still, measurements and the algorithm can be further improved in several regards. We approximated the ensemble average either by averaging a certain area of lateral pixels or by a Gaussian filter. The former is suitable to obtain the mean expansion in a certain area, the latter is used to obtain images. But to get high-resolution images, using spatial filtering is not ideal. Other speckle averaging techniques, e.g., based on non-local means35,36, and time-encoded manipulation of the speckle pattern by deliberately manipulating the sample irradiation (similar to37) should preserve the full spatial resolution.

Increasing the sampling rate of the t axis of the cross-spectrum, i.e., decreasing the smallest Δt, should also improve results, since the correlation of the compared speckle patterns is then improved. The results presented here are limited by the finite sampling interval of the t axis and not by the overall measurement time; however, for very frequent sampling of t, errors are expected to add up at some point as the data size increases further. Alternatively, one could acquire two immediately successive frames with a small Δt, followed by a larger time gap to the next two frames (during which speckle patterns can begin to decorrelate). The closely spaced frames give the current rate of phase change (the first derivative of the phase), which can then be extrapolated linearly to the time of the next two frames, and so on. This can be extended to three or more frames with small Δt, giving the second or even higher-order local derivatives, respectively.

The presented method can also be extended. For example, the extraction of systematic phase changes in a dynamic, random speckle pattern can be combined with dynamic light scattering (DLS)6,7, essentially separating the random diffusion from systematic particle motion. This approach might give additional contrast compared to either method on its own.

Overall, applications of phase-sensitive imaging over long times are manifold. They range from biological phase imaging, for example to measure retinal pulse waves19 or to detect cellular activity9,10, all the way to visualizing deformations using electronic speckle pattern interferometry (ESPI). In particular, for OCT imaging of neuronal function in layers that decorrelate more severely and have a lower signal-to-noise ratio than the photoreceptors, the proposed method will be of importance.

Methods

Simulation

For the simulation, we created a complex-valued image series with 640 × 368 pixels and a pixel spacing of 4λ, where λ is the simulated light wavelength. To obtain an image series, we created a collection of 50 × 640 × 368 = 11,776,000 point scatterers, each with random x, y, and z coordinates as well as an amplitude A. While the x and y coordinates were distributed randomly over the image area, the z coordinate was restricted to values between 0 and 10λ, and the amplitude was uniformly distributed between 0 and 1. To create an image, we iterated over all scatterers and summed the values A exp(i2kz) for the pixel corresponding to the scatterer’s x and y coordinates, where z is the scatterer’s z-value and k = 2π/λ. This essentially corresponds to the phase one would obtain in reflection geometry when neglecting any possible defocus. Finally, the image was laterally filtered to simulate a limited numerical aperture (NA).
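The image formation described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used for the results: the function name `simulate_speckle_image`, the reduced default image size, and the ideal circular low-pass with a hypothetical `cutoff` (standing in for the NA filter, whose exact form is not specified here) are all assumptions.

```python
import numpy as np

def simulate_speckle_image(ny=64, nx=64, scatterers_per_pixel=50,
                           wavelength=1.0, z_max_in_wavelengths=10.0,
                           cutoff=0.25, seed=0):
    """Return a complex speckle image and the scatterer table (x, y, z, amp).

    Hypothetical helper: names, defaults and the low-pass are assumptions.
    """
    rng = np.random.default_rng(seed)
    n = scatterers_per_pixel * ny * nx
    # Lateral positions are drawn uniformly and stored as pixel indices.
    x = rng.integers(0, nx, size=n)
    y = rng.integers(0, ny, size=n)
    # Axial positions between 0 and 10 wavelengths, amplitudes between 0 and 1.
    z = rng.uniform(0.0, z_max_in_wavelengths * wavelength, size=n)
    amp = rng.uniform(0.0, 1.0, size=n)

    k = 2.0 * np.pi / wavelength
    phasors = amp * np.exp(1j * 2.0 * k * z)  # A exp(i 2 k z), reflection geometry

    # Sum all phasors that fall into the same pixel (unbuffered accumulation).
    field = np.zeros((ny, nx), dtype=complex)
    np.add.at(field, (y, x), phasors)

    # Assumed stand-in for the limited NA: ideal circular low-pass filter.
    fy = np.fft.fftfreq(ny)[:, None]
    fx = np.fft.fftfreq(nx)[None, :]
    pupil = (fx**2 + fy**2) <= cutoff**2
    image = np.fft.ifft2(np.fft.fft2(field) * pupil)
    return image, (x, y, z, amp)
```

Calling `simulate_speckle_image(ny=368, nx=640)` would reproduce the image size and scatterer count quoted in the text; the smaller default only keeps the sketch fast.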

For each simulation, we created a series of 70 images. Starting with the initial scatterers, we created each subsequent frame by moving every scatterer randomly according to a Gaussian distribution with a certain variance σ² in the x, y, and z directions. Furthermore, all scatterers whose current x and y coordinates lay within a pre-created “x”-shaped mask were additionally subjected to the movement

where t is the frame number and t0 = 5 in each frame.

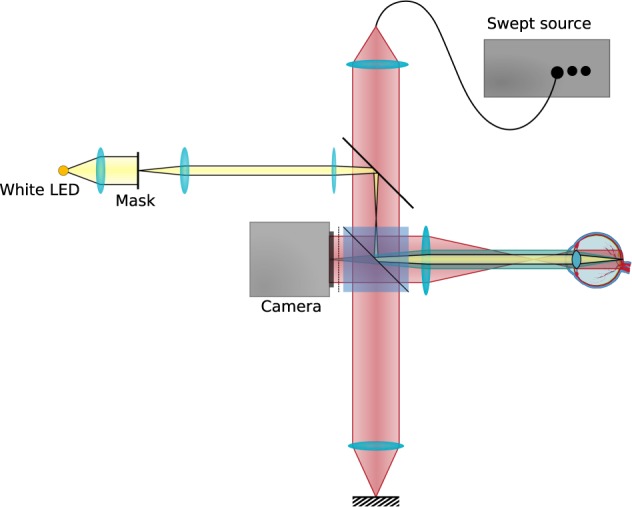

Experiments

In vivo data was acquired with a Michelson interferometer-based full-field swept-source optical coherence tomography system (FF-SS-OCT) as shown in Fig. 6. The setup is similar to the system previously used for full-field and functional imaging9,19,38. Light from a Superlum Broadsweeper BS-840-1 is collimated and split into reference and sample arm. The reference arm light is reflected from a mirror and then directed by a beam splitter onto a high-speed area camera (Photron FASTCAM SA-Z). The sample light is directed such that it illuminates the retina with a collimated beam; the light backscattered by the retina is imaged through the illumination optics and the beam splitter onto the camera, where it is superimposed with the reference light.

Figure 6.

Full-field swept-source optical coherence tomography setup used for acquiring in vivo data.

In addition, the retina was stimulated with a white LED; a plane conjugate to the retina contained an “x”-shaped mask. A low-pass optical filter in front of the camera ensured that the stimulation light did not reach the camera.

Swept laser, camera, and stimulation LED were synchronized by an Arduino Uno microcontroller board. It generates the trigger signals such that the laser sweeps 70 times for one dataset. During each sweep, the camera acquires 512 images with 640 × 368 pixels each at a frame rate of 60,000 frames/s. Each set of 512 images corresponds to one OCT volume. The stimulation LED is triggered after the first 5 volumes and remains active for the rest of the dataset. The time between volumes determines the overall measurement time. The number of 70 volumes was limited by the camera’s internal memory, since the camera acquires data too fast to stream it to a computer in real time.
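The acquisition figures above fix the duration of a single sweep and the number of camera samples per dataset. The short calculation below only restates the numbers given in the text; the variable names are illustrative, and no bit depth is assumed, so the result is a sample count rather than a memory size.

```python
# Acquisition parameters as stated in the text.
frames_per_volume = 512
frame_rate_hz = 60_000
volumes = 70
width, height = 640, 368

# Duration of one sweep (one OCT volume).
sweep_time_s = frames_per_volume / frame_rate_hz

# Total number of camera frames and pixel samples in one dataset.
frames_per_dataset = volumes * frames_per_volume
samples_per_dataset = frames_per_dataset * width * height

print(f"{sweep_time_s * 1e3:.2f} ms per volume")      # 8.53 ms per volume
print(f"{frames_per_dataset:,} frames per dataset")   # 35,840 frames per dataset
print(f"{samples_per_dataset:,} samples per dataset") # 8,441,036,800 samples per dataset
```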

Measurement light had a centre wavelength of 841 nm and a bandwidth of 51 nm. About 5 mW of this light reached the retina illuminating an area of about 2.6 mm × 1.5 mm, which is the area imaged onto the camera. The “x”-pattern stimulation had significantly less irradiant power, of about 20 μW white light.

Phase stability

Additionally, we characterized the phase stability of the setup by measuring a cover slip glass that contains two reflecting surfaces over almost 30 seconds. As shown in the Supplementary Information (Fig. S4), the phase observed in a single lateral position in one of the two surfaces of the cover slip fluctuates with time. As a consequence, the autocorrelation of this single layer on its own decreases significantly after a few seconds. Taking the phase difference of both layers shows excellent phase stability and autocorrelation over the entire measurement time. Our measurements are thus limited by phase decorrelation of the specimen and not by the phase stability of the system.

In vivo data acquisition

All investigations were done with healthy volunteers; written informed consent was obtained from all subjects. Compliance with the maximum permissible exposure (MPE) of the retina and all relevant safety rules was confirmed by the responsible safety officer. The study was approved by the ethics board of the University of Lübeck (ethics approval Ethik-Kommission Lübeck 16-080).

Data reconstruction

Data was reconstructed, registered and segmented as described in previous publications10. After background removal, the OCT signal was reconstructed by a fast Fourier transform (FFT) along the spectral axis. The next step was a dispersion correction by multiplication of the complex-valued volumes in the axial Fourier domain (after an FFT along the depth axis) with a correcting phase function that was determined iteratively by optimizing a sharpness metric of the OCT images38. Co-registering the volumes aligned the same structures in the same respective voxels of the data series as precisely as the present and changing speckle patterns permitted. This registration also took other layers of the retina into account, making use of all retinal structures. Finally, segmenting the average volume allowed the photoreceptor layers to be aligned at a constant depth for easier phase extraction.

Phase evaluation

Computing the cross-spectrum

To compute the cross-spectrum, we took the series of OCT volumes U(x,t) and computed the cross-spectrum by

Comparing phases of two different depths

For the in vivo experiments we additionally want to compare the phases between different ranges of layers. Let Z1 and Z2 denote the sets of layers, then we computed the effective phase difference cross-spectrum by

| 10 |

where we now represented the vector x by its components (x,y,z). For the simulation, there is only one layer to be compared, and thus we set the phase difference cross-spectrum equal to the cross-spectrum of this one layer, i.e., .

Approximating the ensemble average

To extract a curve, the ensemble average was approximated by averaging the cross-spectrum over the x and y coordinates belonging to a mask M, i.e.,

where |M| is the number of pixels in the mask M.

To obtain images instead, the ensemble average was approximated by applying a Gaussian filter (convolving with a Gaussian with variance σ²Gauss):

| 11 |

where * is the convolution in x and y direction. In effect we used a circular convolution by using a fast Fourier transform (FFT) based algorithm. For Figs 3 and 5 a Gaussian filter of width σGauss = 4 pixels was used. A comparison of different sizes is found in the Supplementary Information in Fig. S2. For Fig. 4 ensemble averaging was performed over the entire ‘x’-shaped pattern, containing 26,437 independent data points. The dependency on the number of data points for curves is shown in the Supplementary Information (Fig. S1).
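The two steps of this subsection, computing a cross-spectrum and approximating its ensemble average with an FFT-based circular Gaussian convolution, can be sketched as follows. The sign convention is an assumption: here the cross-spectrum is taken as conj(U(x,t))·U(x,t+Δt), so that its phase equals ϕ(t+Δt) − ϕ(t). The function names `cross_spectrum` and `gaussian_average` are hypothetical as well.

```python
import numpy as np

def cross_spectrum(u, max_lag):
    """Cross-spectrum of a complex image series u with shape (T, Y, X).

    Assumed convention: c[t, d] = conj(u[t]) * u[t + d].
    Entries with t + d >= T have no partner frame and stay zero.
    """
    n_t = u.shape[0]
    if not 0 <= max_lag < n_t:
        raise ValueError("max_lag must satisfy 0 <= max_lag < number of frames")
    c = np.zeros((n_t, max_lag + 1) + u.shape[1:], dtype=complex)
    for d in range(max_lag + 1):
        c[: n_t - d, d] = np.conj(u[: n_t - d]) * u[d:]
    return c

def gaussian_average(c, sigma_px):
    """Circular Gaussian convolution over the last two axes using the FFT."""
    ny, nx = c.shape[-2:]
    fy = np.fft.fftfreq(ny)[:, None]
    fx = np.fft.fftfreq(nx)[None, :]
    # Fourier transform of a unit-area Gaussian with standard deviation sigma_px.
    transfer = np.exp(-2.0 * np.pi**2 * sigma_px**2 * (fx**2 + fy**2))
    spectrum = np.fft.fft2(c, axes=(-2, -1)) * transfer
    return np.fft.ifft2(spectrum, axes=(-2, -1))
```

With `avg = gaussian_average(cross_spectrum(u, max_lag), sigma_px=4.0)`, the wrapped phase of the averaged cross-spectrum is `np.angle(avg)`. Averaging the complex values before taking the phase is what lets random speckle phases cancel while a systematic phase change survives.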

The weights

We additionally used weights to specify how reliable the respective value of the cross-spectrum 〈CU〉 is. Unfortunately, most algorithms that were used previously to compute weights in astronomy cannot be computed as efficiently as 〈CU〉, since we used a fast convolution to apply the Gaussian filter in Eq. (11). We therefore use the weights

| 12 |

for phase curve extraction and

| 13 |

for phase imaging; both could be computed as efficiently as 〈CU〉 itself. In these formulas, T is a cut-off function for negative values:

The truncation of negative values and the introduction of b are supposed to keep the expectation value for randomly distributed CU at 0. The sum in (12) and the convolution term in (13) follow speckle statistics if the phase of CU is random, since they are a sum of random phasors1. Consequently, a non-zero value is expected. This can be used to determine suitable values for b. The amplitude probability of a speckle pattern is given by a Rayleigh distribution1

Enforcing a certain threshold A0 gives a total probability

and its inverse

With this formula, we selected b to be the 99% threshold, i.e., b = A0(0.99), except for Fig. 3, for which b = A0(0.99999) was chosen.
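As an illustration of how such a threshold can be evaluated, the sketch below uses the standard Rayleigh distribution with parameter σ, whose cumulative probability is P(A ≤ A0) = 1 − exp(−A0²/(2σ²)) and whose inverse is A0(P) = σ·sqrt(−2 ln(1 − P)). That this standard parametrization matches the formulas intended here is an assumption, and the function names are hypothetical; σ itself has to be supplied from the speckle statistics of the respective averaging scheme.

```python
import math

def rayleigh_cdf(a0, sigma):
    """Probability that a Rayleigh-distributed amplitude stays below a0."""
    return 1.0 - math.exp(-a0**2 / (2.0 * sigma**2))

def rayleigh_threshold(p, sigma):
    """Amplitude a0 below which a fraction p of random-phasor sums falls."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must lie in [0, 1)")
    return sigma * math.sqrt(-2.0 * math.log(1.0 - p))

# Thresholds in units of sigma for the two probabilities quoted in the text.
b_99 = rayleigh_threshold(0.99, sigma=1.0)
b_99999 = rayleigh_threshold(0.99999, sigma=1.0)
```

For P = 0.99 the threshold is roughly 3.03σ, so only about 1% of purely random phasor sums exceed it and receive a non-zero weight.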

For the two scenarios of curve extraction and imaging with a Gaussian convolution, the parameter σ of the Rayleigh distribution remains to be determined. Starting with the derivation of speckle statistics1, it can be computed to yield

for curve extraction and

for imaging, when assuming unit magnitude phasors (as present in (12) and (13)) with completely random phases.

Phase unwrapping of the cross-spectrum

While alternate approaches to extract the phase from the cross-spectrum deal with the phase wrapping problem differently (e.g.26), for our scenario phases of the obtained cross-spectrum need to be unwrapped in the Δt axis in order to obtain good results. However, in 1D phase unwrapping, single outliers, i.e., a random phase for single specific Δt, can tremendously degrade results for all following values. We therefore slightly modified the standard 1D-phase unwrapping approach:

We assumed the initial D phases to be free of wrapping. Afterwards, we moved through the data set from small Δt to larger Δt. For each phase value to be determined, we computed the sum of the absolute values of its phase differences to the preceding D phases. We increased or decreased the respective phase value by 2π as long as this difference sum kept decreasing. Then we moved to the next Δt. This procedure was done once for curve extraction and repeated for each x and y value for imaging. In our scenario, we used D = 10.
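The modified unwrapping can be sketched as follows for a single one-dimensional phase sequence along Δt. This is a minimal interpretation of the description above; the function name `unwrap_robust` and its interface are assumptions.

```python
import numpy as np

def unwrap_robust(phase, d=10):
    """Unwrap a 1D phase sequence by comparing each value to its d predecessors.

    The first d values are assumed to be free of wrapping. Every later value
    is shifted by multiples of 2*pi as long as the sum of absolute differences
    to the preceding d (already unwrapped) values keeps decreasing.
    """
    out = np.array(phase, dtype=float)
    two_pi = 2.0 * np.pi
    for i in range(d, out.size):
        previous = out[i - d:i]

        def cost(value):
            return np.abs(value - previous).sum()

        value = out[i]
        for step in (two_pi, -two_pi):
            while cost(value + step) < cost(value):
                value += step
        out[i] = value
    return out
```

Because each value is compared with several predecessors rather than only the last one, a single outlier shifts the reference only slightly, and the values that follow it are still placed on the correct 2π branch.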

Obtaining the phase from the cross spectrum

Instead of using the approaches described by (8) or (9), we formulate the recovery of the phases from the cross-spectrum as a linear least squares problem. This also served as basis for many of the previously demonstrated approaches in astronomy. Assuming the phase of the cross-spectrum is unwrapped in its Δt axis, we can assume that

| 14 |

is minimal for the desired phase ϕ(x,t), provided the weights w represent the quality of the cross-spectrum for the respective parameters x, t, and Δt. However, the solution to this linear least squares problem is not unique: since the cross-spectrum only contains phase differences, there will be different solutions for different initial values ϕ(x,t = 0). In addition, all weights might be 0 for one specific t, which represents a gap in reliable data. Both problems can be solved by Tikhonov regularization with small parameters. For the former, one should force the resulting phase at one time point t0 to be small; the corresponding regularization parameter does not influence other results as long as it is chosen small, but not so small that it introduces numerical errors. For the latter, a difference regularization can be introduced. Again, the parameter can be small; it is only used to obtain a unique solution in case weights approach 0. Consequently, the entire approach can be formulated as a linear least squares problem and solved by regularized solution of the respective linear equation.

To solve the least squares problem (14) we first need to discretize it properly. To this end, we first create the discrete vectors and matrices cϕ corresponding to the unwrapped cross-spectrum phase arg〈(CU)〉, ϕ corresponding to the phases to be extracted, and a matrix S relating the two. Assume a given systematic phase ϕ′, then the corresponding cross-spectrum cϕ′ would be uniquely given by

We can write this as

Given the actually measured cross-spectrum cϕ and the corresponding phase ϕ to be computed and introducing the diagonal weight matrix W = diag(w0,w1, …, wN−1) as computed by (12) or (13) we can write the determination of ϕ as the regularized minimization problem by

The corresponding ϕ is found by

which needs to be performed for each curve or each lateral pixel (x,y) when doing phase imaging. In general, we chose

and

with μ1 = 0.02, μ2 = 0.0001, and μ3 = 0.02. Without the regularization terms, the resulting linear system has no unique solution: the global phase offset is undetermined, and gaps in the data leave further phase values undetermined.
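A compact sketch of such a regularized weighted least squares recovery for a single curve is given below. It is an illustration under assumptions rather than the formulation used for the results: the unwrapped cross-spectrum phase is taken to satisfy c[t, Δt] ≈ ϕ(t+Δt) − ϕ(t), the weights enter linearly, and the two regularization terms (an anchor at t0 and a first-difference penalty) with their hypothetical parameters `mu_anchor` and `mu_smooth` are generic Tikhonov choices that are not claimed to correspond to μ1, μ2, and μ3.

```python
import numpy as np

def recover_phase(c_phase, weights, t0=0, mu_anchor=1e-4, mu_smooth=1e-4):
    """Recover phi[t] from unwrapped cross-spectrum phases.

    c_phase[t, d - 1] is assumed to approximate phi[t + d] - phi[t] for
    lags d = 1..L; weights has the same shape and rates each entry.
    """
    n_t, n_lag = c_phase.shape
    rows, rhs, w = [], [], []
    for t in range(n_t):
        for d in range(1, n_lag + 1):
            if t + d >= n_t:
                continue  # no partner frame for this lag
            row = np.zeros(n_t)
            row[t + d], row[t] = 1.0, -1.0
            rows.append(row)
            rhs.append(c_phase[t, d - 1])
            w.append(weights[t, d - 1])
    s = np.array(rows)   # matrix relating phases to phase differences
    c = np.array(rhs)
    w = np.array(w)

    # Anchor term: pushes phi[t0] towards 0 and fixes the global offset.
    anchor = np.zeros((n_t, n_t))
    anchor[t0, t0] = 1.0
    # First-difference operator: bridges time points without reliable data.
    diff = np.diff(np.eye(n_t), axis=0)

    lhs = s.T @ (w[:, None] * s) + mu_anchor * anchor + mu_smooth * diff.T @ diff
    return np.linalg.solve(lhs, s.T @ (w * c))
```

For imaging, the same solve would be repeated for each lateral pixel. Since the matrix relating phases to differences has only two non-zero entries per row, a sparse representation would be the natural choice for larger problems.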

Specifying the initial value

The regularization term essentially forces the phase value corresponding to the 5th volume to 0, thereby specifying the initial value. Since, in both simulation and experiment, we only know that no (deliberate) phase change occurs in the frames <5, we can normalize the final result by

with N0 = 5.

Real-valuedness of the modulating cross-spectrum

For the entire approach to work, it remains to be shown that the modulation cross-spectrum 〈CH〉 is real-valued for small Δt. We assume the modulation cross-spectrum is given according to (2) and (6) by

Now, the second term is small compared to the first term since ϕi and ϕj are statistically independent making the sum and the average run over random phasors. The phases of the first term can be approximated by ϕi(t) − ϕi(t) + ∂t′ϕi(t′)|t′=t Δt = ∂t′ϕi(t′)|t′=t Δt and thus will be 0 for small Δt that are within good autocorrelation of the modulating function H. Thus 〈CH〉 is real for small Δt to a good approximation.

Phase evaluation by phase differences

In the alternative approach we compute merely phase differences. We can still formulate this by using the cross-spectrum of phase difference given by (10). For all values t ≥ t0, the phase is obtained by

for imaging and

for curve evaluation. For this direct phase computation, the phase-wrapped cross-spectrum was used. For curve extraction the phase was unwrapped in t afterwards.

Acknowledgements

This work was funded by the German Research Foundation (DFG), Project Holo-OCT HU 629/6-1.

Author Contributions

H.S. and D.H. conceived of the presented method and implemented the algorithm. C.P. and L.K. conducted the experiments. G.H. and D.H. supervised the project. All authors designed the experiments, discussed the results, and contributed to the final manuscript.

Data Availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Competing Interests

D.H. is an employee of Thorlabs GmbH, which produces and sells OCT systems. D.H. and G.H. are listed as inventors on a related patent application.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information accompanies this paper at 10.1038/s41598-019-47979-8.

References

- 1. Goodman, J. Speckle Phenomena in Optics: Theory and Applications (Roberts & Company, 2007).

- 2. Creath K. Phase-shifting speckle interferometry. Appl. Opt. 1985;24:3053–3058. doi: 10.1364/AO.24.003053.

- 3. Joenathan C, Haible P, Tiziani HJ. Speckle interferometry with temporal phase evaluation: influence of decorrelation, speckle size, and nonlinearity of the camera. Appl. Opt. 1999;38:1169–1178. doi: 10.1364/AO.38.001169.

- 4. Lehmann M. Decorrelation-induced phase errors in phase-shifting speckle interferometry. Appl. Opt. 1997;36:3657–3667. doi: 10.1364/AO.36.003657.

- 5. Jones R, Wykes C. De-correlation effects in speckle-pattern interferometry. Opt. Acta. 1977;24:533–550. doi: 10.1080/716099421.

- 6. Berne, B. & Pecora, R. Dynamic Light Scattering: With Applications to Chemistry, Biology, and Physics. Dover Books on Physics Series (Dover Publications, 2000).

- 7. Goldburg WI. Dynamic light scattering. Am. J. Phys. 1999;67:1152–1160. doi: 10.1119/1.19101.

- 8. Chen C-L, Wang RK. Optical coherence tomography based angiography [invited]. Biomed. Opt. Express. 2017;8:1056–1082. doi: 10.1364/BOE.8.001056.

- 9. Hillmann D, et al. In vivo optical imaging of physiological responses to photostimulation in human photoreceptors. Proc. Natl. Acad. Sci. USA. 2016;113:13138–13143. doi: 10.1073/pnas.1606428113.

- 10. Pfäffle, C. et al. Functional imaging of ganglion and receptor cells in living human retina by osmotic contrast. ArXiv e-prints 1809.02812, https://arxiv.org/abs/1809.02812 (2018).

- 11. Zhang P, Zawadzki RJ, Goswami M, Nguyen PT, Yarov-Yarovoy V, Burns ME, Pugh EN. In vivo optophysiology reveals that G-protein activation triggers osmotic swelling and increased light scattering of rod photoreceptors. Proc. Natl. Acad. Sci. 2017;114:E2937–E2946. doi: 10.1073/pnas.1620572114.

- 12. Zhang F, Kurokawa K, Lassoued A, Crowell JA, Miller DT. Cone photoreceptor classification in the living human eye from photostimulation-induced phase dynamics. Proc. Natl. Acad. Sci. 2019;116:7951–7956. doi: 10.1073/pnas.1816360116.

- 13. Azimipour M, Migacz JV, Zawadzki RJ, Werner JS, Jonnal RS. Functional retinal imaging using adaptive optics swept-source OCT at 1.6 MHz. Optica. 2019;6:300–303. doi: 10.1364/OPTICA.6.000300.

- 14. Kennedy BF, Kennedy KM, Sampson DD. A review of optical coherence elastography: Fundamentals, techniques and prospects. IEEE J. Sel. Top. Quantum Electron. 2014;20:272–288. doi: 10.1109/JSTQE.2013.2291445.

- 15. Chin L, et al. Analysis of image formation in optical coherence elastography using a multiphysics approach. Biomed. Opt. Express. 2014;5:2913–2930. doi: 10.1364/BOE.5.002913.

- 16. Wang RK, Kirkpatrick S, Hinds M. Phase-sensitive optical coherence elastography for mapping tissue microstrains in real time. Appl. Phys. Lett. 2007;90:164105. doi: 10.1063/1.2724920.

- 17. An L, Chao J, Johnstone M, Wang RK. Noninvasive imaging of pulsatile movements of the optic nerve head in normal human subjects using phase-sensitive spectral domain optical coherence tomography. Opt. Lett. 2013;38:1512–1514. doi: 10.1364/OL.38.001512.

- 18. Li P, et al. Phase-sensitive optical coherence tomography characterization of pulse-induced trabecular meshwork displacement in ex vivo nonhuman primate eyes. J. Biomed. Opt. 2012;17:076026. doi: 10.1117/1.JBO.17.7.076026.

- 19. Spahr H, et al. Imaging pulse wave propagation in human retinal vessels using full-field swept-source optical coherence tomography. Opt. Lett. 2015;40:4771–4774. doi: 10.1364/OL.40.004771.

- 20. van Brug, H. & Somers, P. A. A. M. Speckle decorrelation: Observed, explained, and tackled. In Jacquot, P. & Fournier, J.-M. (eds) Interferometry in Speckle Light, 11–18 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2000).

- 21. Knox KT, Thompson BJ. Recovery of images from atmospherically degraded short-exposure photographs. Astrophys. J. 1974;193:L45–L48. doi: 10.1086/181627.

- 22. Lohmann AW, Weigelt G, Wirnitzer B. Speckle masking in astronomy: triple correlation theory and applications. Appl. Opt. 1983;22:4028–4037. doi: 10.1364/AO.22.004028.

- 23. Ayers GR, Northcott MJ, Dainty JC. Knox–Thompson and triple-correlation imaging through atmospheric turbulence. J. Opt. Soc. Am. A. 1988;5:963–985. doi: 10.1364/JOSAA.5.000963.

- 24. Lannes A. Backprojection mechanisms in phase-closure imaging. Bispectral analysis of the phase-restoration process. Exp. Astron. 1989;1:47–76. doi: 10.1007/BF00414795.

- 25. Marron JC, Sanchez PP, Sullivan RC. Unwrapping algorithm for least-squares phase recovery from the modulo 2π bispectrum phase. J. Opt. Soc. Am. A. 1990;7:14–20. doi: 10.1364/JOSAA.7.000014.

- 26. Haniff CA. Least-squares Fourier phase estimation from the modulo 2π bispectrum phase. J. Opt. Soc. Am. A. 1991;8:134–140. doi: 10.1364/JOSAA.8.000134.

- 27. Roggemann, M., Welsh, B. & Hunt, B. Imaging Through Turbulence. Laser & Optical Science & Technology (Taylor & Francis, 1996).

- 28. Mikurda K, von der Lühe O. High resolution solar speckle imaging with the extended Knox–Thompson algorithm. Sol. Phys. 2006;235:31–53. doi: 10.1007/s11207-006-0069-6.

- 29. Bamler, R. & Just, D. Phase statistics and decorrelation in SAR interferograms. In Geoscience and Remote Sensing Symposium, 1993. IGARSS ’93. Better Understanding of Earth Environment., International, 980–984 vol.3, doi: 10.1109/IGARSS.1993.322637 (1993).

- 30. Zebker HA, Villasenor J. Decorrelation in interferometric radar echoes. IEEE T. Geosci. Remote. 1992;30:950–959. doi: 10.1109/36.175330.

- 31. Lanari, R. et al. An overview of the Small BAseline Subset algorithm: A DInSAR technique for surface deformation analysis. In Wolf, D. & Fernández, J. (eds) Deformation and Gravity Change: Indicators of Isostasy, Tectonics, Volcanism, and Climate Change, 637–661 (Birkhäuser Basel, Basel, 2007).

- 32. Ferretti A, Prati C, Rocca F. Permanent scatterers in SAR interferometry. IEEE T. Geosci. Remote. 2001;39:8–20. doi: 10.1109/36.898661.

- 33. Sanderson C, Curtin R. Armadillo: a template-based C++ library for linear algebra. J. Open Source Softw. 2016;1:26. doi: 10.21105/joss.00026.

- 34. Sanderson C, Curtin R. A user-friendly hybrid sparse matrix class in C++. Lect. Notes Comput. Sci. 2018;10931:422–430. doi: 10.1007/978-3-319-96418-8_50.

- 35. Deledalle C, Denis L, Tupin F. NL-InSAR: Nonlocal interferogram estimation. IEEE T. Geosci. Remote. 2011;49:1441–1452. doi: 10.1109/TGRS.2010.2076376.

- 36. Lucas A, et al. Insights into Titan’s geology and hydrology based on enhanced image processing of Cassini RADAR data. J. Geophys. Res. Planet. 2014;119:2149–2166. doi: 10.1002/2013JE004584.

- 37. Liba O, et al. Speckle-modulating optical coherence tomography in living mice and humans. Nat. Commun. 2017;8:15845. doi: 10.1038/ncomms15845.

- 38. Hillmann D, et al. Aberration-free volumetric high-speed imaging of in vivo retina. Sci. Rep. 2016;6:35209. doi: 10.1038/srep35209.
