Abstract

While all minimally invasive procedures involve navigating from a small incision in the skin to the site of the intervention, it has not been previously demonstrated how this can be done autonomously. To show that autonomous navigation is possible, we investigated it in the hardest place to do it – inside the beating heart. We created a robotic catheter that can navigate through the blood-filled heart using wall-following algorithms inspired by positively thigmotactic animals. The catheter employs haptic vision, a hybrid sense using imaging for both touch-based surface identification and force sensing, to accomplish wall following inside the blood-filled heart. Through in vivo animal experiments, we demonstrate that the performance of an autonomously-controlled robotic catheter rivals that of an experienced clinician. Autonomous navigation is a fundamental capability on which more sophisticated levels of autonomy can be built, e.g., to perform a procedure. Similar to the role of automation in fighter aircraft, such capabilities can free the clinician to focus on the most critical aspects of the procedure while providing precise and repeatable tool motions independent of operator experience and fatigue.

Introduction

Minimally invasive surgery reduces the trauma associated with traditional open surgery resulting in faster recovery times, fewer wound infections, reduced postoperative pain and improved cosmesis (1). The trauma of open-heart surgery is particularly acute since it involves cutting and spreading the sternum to expose the heart. Nonetheless, an important additional step to reducing procedural trauma and risk in cardiac procedures is to develop ways to perform repairs without stopping the heart and placing the patient on cardiopulmonary bypass.

To this end, many specialized devices have been designed that replicate the effects of open surgical procedures, but which can be delivered by catheter. These include transcatheter valves (2), mitral valve neochords (3), occlusion devices (4), stents (5) and stent grafts (6). To deploy these devices, catheters are inserted either into the vasculature (e.g., femoral vein or artery) or, via a small incision between the ribs, directly into the heart through its apex.

From the point of insertion, the catheter must be navigated to the site of the intervention inside the heart or its vessels. Beating-heart navigation is particularly challenging because the blood is opaque and the cardiac tissue is moving. Despite the difficulties of navigation, however, the most critical part of the procedure is device deployment. This is the stage at which the judgement and expertise of the clinician are most crucial. Much like the autopilot of a fighter jet, autonomous navigation can relieve the clinician from performing challenging but routine tasks so that they can focus on the mission-critical components of planning and performing device deployment.

To safely navigate a catheter, it is necessary to be able to determine its location inside the heart and to control the forces it applies to the tissue. In current clinical practice, forces are largely controlled by touch while catheter localization is performed using fluoroscopy. Fluoroscopy provides a projective view of the catheter, but does not show soft tissue and exposes the patient and clinician to radiation. Ultrasound, which enables visualization of soft tissue and catheters, is often used during device deployment, but the images are noisy and of limited resolution. In conjunction with heart motion, this makes it difficult to precisely position the catheter tip with respect to the tissue.

The limitations of existing cardiac imaging prompted us to seek an alternate approach. In nature, wall following – tracing object boundaries in one’s environment – is utilized by certain insects and vertebrates as an exploratory mechanism in low-visibility conditions to improve their localization and navigational capabilities in the absence of visual stimuli. Positively thigmotactic animals, which attempt to preserve contact with their surroundings, employ wall following in unknown environments as an incremental map-building function to construct a spatial representation of the environment. Animals initially localize new objects discovered by touch in an egocentric manner, i.e., the object’s position relative to the animal is estimated; later, however, more complex spatial relations can be learned, functionally resembling a map representation (33,34). These animals often sample their environment by generating contact, such as through rhythmically controlled whisker motion, as reported in rodents (7), or antennae manipulations in cockroaches (8) and blind crayfish (9).

Results

Inspired by this approach, we designed positively thigmotactic algorithms that achieve autonomous navigation inside the heart by creating low-force contact with the heart tissue and then following tissue walls to reach a goal location. To enable wall following while also locally recapturing the detailed visual features of open surgery, we introduce a novel sensing modality at the catheter tip that we call haptic vision. Haptic vision combines intracardiac endoscopy, machine learning and image processing algorithms to form a hybrid imaging and touch sensor – providing clear images of whatever the catheter tip is touching while also identifying what it is touching (e.g., blood, tissue, valve) and how hard it is pressing (Fig. 1A). We use haptic vision as the sole sensory input to our navigation algorithms to achieve wall following while also controlling the forces applied by the catheter tip to the tissue. We evaluated autonomous navigation through in vivo experiments and compared it with operator-controlled robot motion and with manual navigation.

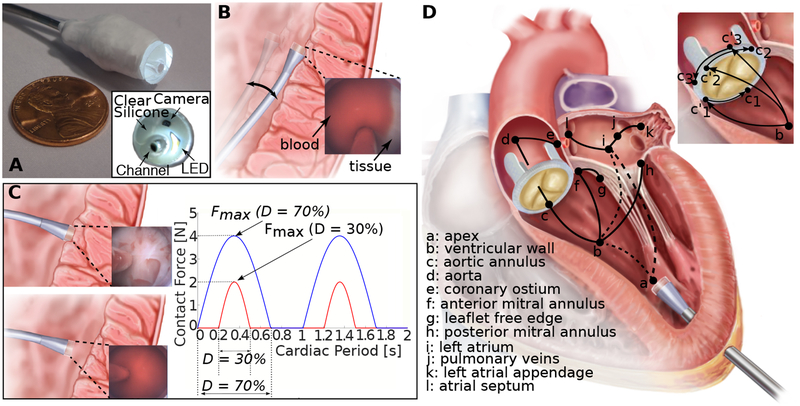

Fig. 1. Autonomous intracardiac navigation using haptic vision.

(A) Haptic vision sensor comprised of millimeter-scale camera and LED encased in silicone optical window with working channel for device delivery. Endoscope acts as a combined contact and imaging sensor with optical window displacing blood between camera and tissue during contact. (B) Continuous contact mode in which catheter tip is pressed laterally against heart wall over entire cardiac motion cycle. Contact force is controlled based on amount of tissue visible at edge of optical window as shown in inset. (C) Intermittent contact mode in which catheter is in contact with heart wall for a specified fraction, D, of the cardiac period (contact duty cycle). Insets show corresponding haptic vision images in and out of contact. Maximum contact force relates to contact duty cycle, D, as shown on plot and is controlled by small catheter displacements orthogonal to the heart wall. (D) Wall-following connectivity graph. Vertices, defined in legend, denote walls and cardiac features. Solid arcs indicate connectivity of walls and features while dashed arcs indicate that non-contact transition is required. Wall-following paths through heart can be constructed as sequence of connected vertices. Paravalvular leak closure experiments described in paper consist of paths a→b→ci’→ci, i={1,2,3}.

For wall following, we exploited the inherent compliance of the catheter to implement two control modes based on continuous and intermittent contact. Continuous contact can often be safely maintained over the cardiac cycle when the catheter tip is pressed laterally against tissue since catheters are highly compliant in this direction (Fig. 1B). Intermittent contact can be necessary when there is significant tissue motion and the catheter is pressed against the tissue along its stiffer longitudinal axis (Fig. 1C).

In both the continuous and intermittent contact modes, the robot acts to limit the maximum force applied to the tissue using a haptic-vision-based proxy for force. In the continuous contact mode, catheter position with respect to the tissue surface is adjusted to maintain a specified contact area on the catheter tip (Fig. 1B) corresponding to a desired force. In the intermittent contact mode, catheter position with respect to the tissue surface is adjusted to maintain a desired contact duty cycle – the fraction of the cardiac cycle during which the catheter is in tissue contact (Fig. 1C). The relationship between contact duty cycle and maximum force was investigated experimentally as described in the Supplementary Materials. Complex navigation tasks can be achieved by following a path through a connectivity graph (Fig. 1D) and selecting between continuous and intermittent contact modes along that path based on contact compliance and the amplitude of tissue motion.
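The intermittent contact mode described above can be illustrated with a minimal sketch. It assumes that every video frame in one cardiac period has already been labeled as in or out of contact, and it uses a clamped proportional rule to move the catheter along the wall normal. The function names, the gain and the step limit are hypothetical and are not taken from the controller used in the experiments.

```python
from typing import Sequence


def contact_duty_cycle(in_contact: Sequence[bool]) -> float:
    """Fraction of frames in one cardiac period classified as tissue contact."""
    if not in_contact:
        raise ValueError("need at least one frame per cardiac period")
    return sum(in_contact) / len(in_contact)


def advance_step_mm(measured_d: float, desired_d: float,
                    gain_mm: float = 2.0, max_step_mm: float = 0.5) -> float:
    """Clamped proportional correction along the wall normal.

    A positive result moves the tip toward the tissue (raising the duty
    cycle); a negative result retracts it. gain_mm and max_step_mm are
    illustrative values, not calibrated ones.
    """
    step = gain_mm * (desired_d - measured_d)
    return max(-max_step_mm, min(max_step_mm, step))


# One cardiac period sampled as 10 frames, 3 of them in contact.
frames = [False, False, True, True, True, False, False, False, False, False]
d = contact_duty_cycle(frames)
step = advance_step_mm(d, desired_d=0.4)
print(f"duty cycle = {d:.2f}, commanded step = {step:+.2f} mm")
```

Because the measured duty cycle (0.30) is below the desired value (0.40), the sketch commands a small advance toward the tissue; the clamp keeps any single correction small, in keeping with the small orthogonal displacements described in Fig. 1C.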

We have implemented autonomous navigation based solely on haptic-vision sensing and demonstrated the potential of the approach in the context of a challenging beating-heart procedure, aortic paravalvular leak closure. Paravalvular leaks occur when a gap opens between the native valve annulus and the prosthetic valve (10,11). Transcatheter leak closure involves sequentially navigating a catheter to the leak, passing a wire from the catheter through the gap and then deploying an expanding occluder device inside the gap (Fig. 2A). This procedure is currently manually performed using multi-modal imaging (electrocardiogram-gated computed tomographic angiography, transthoracic and transesophageal echo pre-operatively and echocardiography and fluoroscopy intraoperatively) and requires 29.9±24.5 minutes of fluoroscopic x-ray exposure (12).

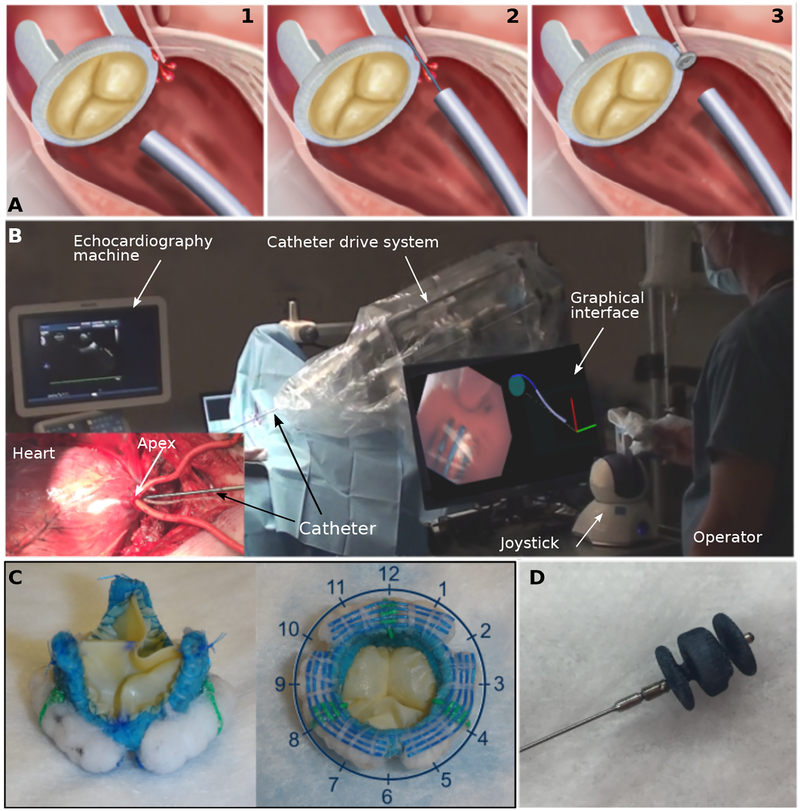

Fig. 2. Paravalvular leak closure experiments.

(A) Current clinical approach to paravalvular leak closure: (1) Catheter approaches valve. (2) Wire is extended from catheter to locate leak. (3) Vascular occluder is deployed inside leak channel. While transapical access is illustrated, approaching the valve from the aorta via transfemoral access is common. (B) Robotic catheter in operating room. Graphical interface displays catheter tip view and geometric model of robot and valve annulus. (C) Two views of bioprosthetic aortic valve designed to produce 3 paravalvular leaks at 2, 6 and 10 o’clock positions. Blue sutures are used to detect tangent to annulus. Green sutures are used to estimate valve rotation with respect to robot. (D) Vascular occluder (AMPLATZER Vascular Plug II, St. Jude Medical, Saint Paul, MN) used to plug leaks.

To perform paravalvular leak closure, we designed a robotic catheter (Fig. 2B) for entering through the apex of the heart into the left ventricle, navigating to the aortic valve and deploying an occluder into the site of a leak (Fig. 1D). We created a porcine paravalvular leak model by replacing the native aortic valve with a bioprosthetic valve incorporating three leaks (Fig. 2C). Using leak locations determined from pre-operative imaging, the catheter can either navigate autonomously to that location or the clinician can guide it there (Fig. 2B). Occluder deployment is performed under operator control.

During autonomous catheter navigation to the leak location, both continuous and intermittent contact modes are employed (Fig. 1D). For navigation from the heart’s apex to the aortic valve, the robot first locates the ventricular wall (a → b) and then follows it to the aortic valve using the continuous contact mode (b → c). Since the valve annulus displaces by several centimeters over the cardiac cycle along the axis of the catheter, the robot switches to the intermittent contact mode once it detects that it has reached the aortic valve. It then navigates its tip around the perimeter of the valve annulus to the leak location specified from preoperative imaging (ci’ → ci, Fig. 1D inset).
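As a rough illustration of how a wall-following plan could be read off a connectivity graph, the sketch below runs a breadth-first search over a small adjacency list and labels each edge with the contact mode described in this paragraph. The adjacency list is a hypothetical reconstruction from the paths named in the text (a → b → ci’ → ci), not a copy of Fig. 1D, and the function names are assumptions.

```python
from collections import deque

# Hypothetical adjacency list built only from the paths named in the text;
# the complete graph of Fig. 1D is not reproduced here.
GRAPH = {
    "a": ["b"],
    "b": ["a", "c1'", "c2'", "c3'"],
    "c1'": ["b", "c1"],
    "c2'": ["b", "c2"],
    "c3'": ["b", "c3"],
    "c1": ["c1'"],
    "c2": ["c2'"],
    "c3": ["c3'"],
}


def plan_path(graph, start, goal):
    """Breadth-first search returning a shortest vertex sequence, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None


def contact_mode(u):
    """Mode for an edge leaving vertex u, following the description in the text."""
    if u == "a":
        return "locate ventricular wall"
    if u == "b":
        return "continuous contact"
    return "intermittent contact"


path = plan_path(GRAPH, "a", "c2")
for u, v in zip(path, path[1:]):
    print(f"{u} -> {v}: {contact_mode(u)}")
```

For the leak c2 this yields the vertex sequence a, b, c2', c2, with continuous contact along the ventricular wall and intermittent contact on the annulus.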

Switching between continuous and intermittent contact modes depends on the robot recognizing the tissue type it is touching. We implemented the capability for the catheter to distinguish the prosthetic aortic valve from blood and tissue using a machine learning classification algorithm. The classification algorithm first identifies a collection of “visual words,” which consists of visual features shared between multiple images in a set of pre-labeled training images, and learns the relationship between how often these visual features occur and what the image depicts – in this case, the prosthetic valve or blood and tissue. Implementation details and performance evaluation of the classification algorithm can be found in the Supplementary Materials.

Navigation on the annulus of the aortic valve to the location of a leak requires two capabilities. The first is to maintain the appropriate radial distance from the center of the valve. The second is to be able to move to a specified angular location on the annulus. For robust control of radial distance, we integrated colored sutures into the bioprosthetic valve annulus that enable the navigation algorithm to compute the tangent direction of the annulus (Fig. 2C). Moving to a specific angular location requires the robot to estimate its current location on the annulus, to determine the shortest path around the valve to its target location and to detect when the target has been reached. We programmed the robot to build a geometric model of the valve as it navigates. Based on the estimated tangent direction of the valve annulus as well as basic knowledge of the patient and robot position on the operating table, the robot can estimate its clock face position on the valve (Fig. 2C). To account for valve rotation relative to the robot due to variability in patient anatomy and positioning, we incorporated radially oriented colored registration sutures spaced 120 degrees apart. As the catheter navigates along the annulus and detects the registration sutures, it updates its valve model to refine the estimate of its location on the valve.
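The angular part of this task can be sketched with a few lines of arithmetic. The example below converts clock-face positions to angles, computes the signed shortest arc to a target, and shows how detecting a registration suture could correct the estimated valve rotation. The suture's nominal angle and all function names are hypothetical; the paper states only that the registration sutures are spaced 120 degrees apart.

```python
def clock_to_deg(hour: float) -> float:
    """Clock-face position to an angle in degrees (12 o'clock = 0, clockwise)."""
    return (hour % 12) * 30.0


def shortest_arc_deg(current_deg: float, target_deg: float) -> float:
    """Signed shortest rotation from current to target, in [-180, 180).

    Positive values are clockwise, negative values counterclockwise.
    """
    return (target_deg - current_deg + 180.0) % 360.0 - 180.0


def rotation_correction_deg(estimated_deg: float, suture_nominal_deg: float) -> float:
    """Offset to add to the angle estimate when a registration suture is seen."""
    return shortest_arc_deg(estimated_deg, suture_nominal_deg)


# Catheter arrives at 12 o'clock; leaks sit at 2, 6 and 10 o'clock (Fig. 2C).
for leak_hour in (2, 6, 10):
    arc = shortest_arc_deg(clock_to_deg(12), clock_to_deg(leak_hour))
    print(f"leak at {leak_hour} o'clock: move {arc:+.0f} degrees")

# Hypothetical case: the model believes the tip is at 115 degrees when it
# detects a suture whose nominal position is 120 degrees.
print(rotation_correction_deg(115.0, 120.0))  # 5.0
```

The modulo form avoids special cases at the 12 o'clock wrap-around, which is where a naive subtraction would send the catheter the long way around the annulus.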

To evaluate the autonomous navigation algorithms, we performed in vivo experiments comparing autonomous navigation with teleoperated (i.e., joystick-controlled) robotic navigation. We also compared these two forms of robotic navigation with our results in (13) describing manual navigation of a handheld catheter. In all cases, the only sensing used consisted of the video stream from the tip-mounted endoscope, kinesthetic sensing of the robot / human and force sensing of the human (handheld). At the end of each experiment, we opened the heart and examined the ventricular walls for bruising or other tissue damage and found none.

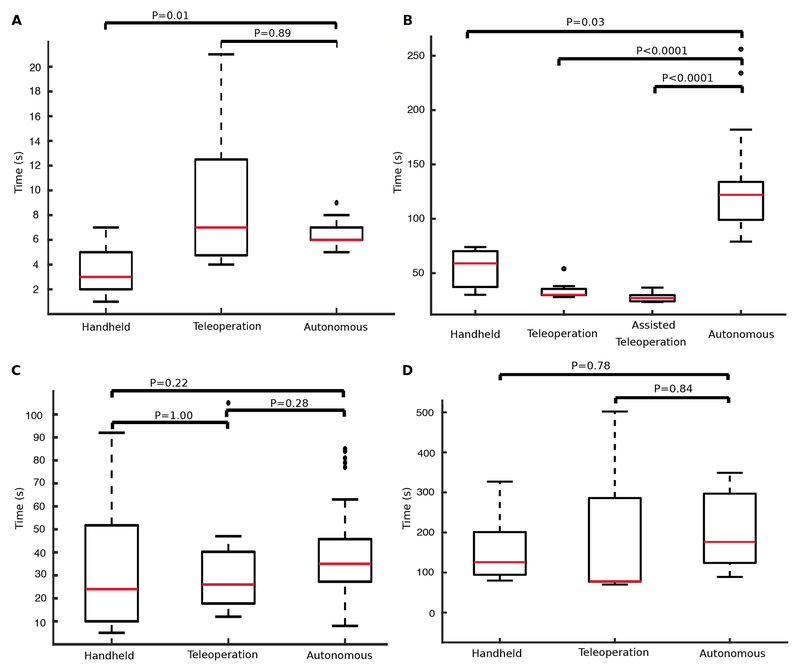

We first compared success rate and navigation time for autonomous navigation (Fig. 1D, a→b→ci’) from the apex of the left ventricle to the aortic annulus (5 animals, 90 trials) with teleoperated control (3 animals, 9 trials) and with manual control (3 animals, 13 trials) (Fig. 3A). Autonomous navigation consisted of first moving to a wall of the ventricle specified by clock position (Fig. 2C) and then following that wall to the valve using the continuous contact control mode. Autonomous navigation was successful 99% of the time (89/90 trials). Autonomous control was faster than teleoperated control and had a smaller variance, but was slower than manual control.

Fig. 3. In vivo navigation completion times.

(A) Navigation from apex of the left ventricle to the aortic annulus (Fig. 1D, a→b→c). (B) Circumnavigation of the entire aortic valve annulus (e.g., c1’→c1→c2→c3’→c2’→c3→c1’, Fig. 1D inset). (C) Navigation from apex to paravalvular leak (Fig. 1D, a→b→ci’→ci). (D) Deployment of vascular occluder. Red bars indicate median, box edges are 25th and 75th percentiles, whiskers indicate range and dots denote outliers. P values computed as described in Supplementary Materials.

Next, we investigated the ability of the controller to navigate completely around the valve annulus using the intermittent contact mode (e.g., c1’→c1→c2→c3’→c2’→c3→c1’, Fig. 1D inset). This is substantially more challenging than what is required for paravalvular leak closure since, for leak closure, our algorithms enable the catheter to follow the ventricular wall in a direction that positions the catheter at an angular position on the valve that is close to the leak. (For example, to reach c1 in Fig. 1D, the catheter could follow the path, a→b→c1’→c1.) For circumnavigation of the annulus, we compared autonomous control (3 animals, 65 trials) with a handheld catheter (3 animals, 3 trials) and with two forms of teleoperation (Fig. 3B). The first consisted of standard teleoperated control (1 animal, 9 trials). The second corresponds to autonomous operator assistance (1 animal, 10 trials). In the latter, the robot automatically controlled motion perpendicular to the plane of the valve to achieve a desired contact duty cycle while the human operator manually controlled motion in the valve plane. Autonomous valve circumnavigation was successful 66% of the time (43/65 trials). Manual and teleoperated control had 100% success rates since the human operator, a clinician, could interpret and respond to unexpected situations. For this task, teleoperation was faster than autonomous and manual navigation with assisted teleoperation being the fastest (Fig. 3B). Autonomous control was the slowest taking over twice as long as manual control.

We then compared controller performance for the complete paravalvular leak navigation task (Fig. 1D, a→b→ci’→ci) in which the catheter started at the heart’s apex, approached the ventricular wall in a user-provided direction, moved to the aortic annulus along the ventricular wall and then followed the annulus to the prespecified leak position (5 animals, 83 trials). We chose the direction along the ventricular wall so that the catheter would arrive on the valve at a point ci’, close to the leak ci, but such that it would still have to pass over at least one registration marker to reach the leak. Autonomous navigation was successful in 95% of the trials (79/83) with a total time of 39±17sec compared to times of 34±29sec for teleoperation (3 animals, 9 trials) and 31±27sec for manual navigation (3 animals, 13 trials). See Fig. 3C. Note that for teleoperated and manual navigation, the operator was not required to follow a particular path to a leak.

For autonomous navigation, we also evaluated how accurately the catheter was able to position its tip over a leak. In the first three experiments, valve rotation with respect to the robot was estimated by an operator prior to autonomous operation. In the last two experiments, valve rotation was estimated by the robot based on its detection of the registration sutures. The distance between the center of the catheter tip and the center of each leak was 3.0±2.0mm for operator-based registration (3 animals, 45 trials) and 2.9±1.5mm for autonomous estimation (2 animals, 38 trials) with no statistical difference between methods (p = 0.8262, Wilcoxon rank sum). This error is comparable to the accuracy with which a leak can be localized based on preoperative imaging.

To ensure that autonomous navigation did not affect occluder delivery, we performed leak closure following autonomous, teleoperated and manual navigation. The time to close a leak was measured from the moment either the robot or the human operator signaled that the working channel of the catheter was positioned over the leak. Any time required by the operator to subsequently adjust the location of the working channel was included in closure time (Fig. 3D). Leak closure was successful in 8/11 trials (autonomous navigation), 7/9 trials (teleoperation) and 11/13 trials (manual navigation). The choice of navigation method produced no statistical difference in closure success or closure time.

Discussion

Our primary result is that autonomous navigation in minimally invasive procedures is possible and can be successfully implemented using enhanced sensing and control techniques to provide results comparable to expert manual navigation in terms of procedure time and efficacy. Furthermore, our experiments comparing clinician-controlled robotic navigation with manual navigation echo the results obtained for many medical procedures – robots operated by humans often provide no better performance than manual procedures except for the most difficult cases and demanding procedures (14,15). Medical robot autonomy provides an alternative approach and represents the way forward for the field (16–18,35).

Benefits of Autonomous Navigation

Automating such tasks as navigation can provide important benefits to clinicians. For example, when a clinician is first learning a procedure, a significant fraction of their attention is allocated to controlling instruments (e.g., catheters, tools) based on multi-modal imaging. Once a clinician has performed a large number of similar procedures with the same instruments, the amount of attention devoted to instrument control is reduced. By using autonomy to relieve the clinician of instrument control and navigation, the learning curve involved in mastering a new procedure could be substantially reduced. While this would be of significant benefit during initial clinical training, it could also enable mid-career clinicians to adopt new minimally invasive techniques that would otherwise require too much re-training. And even after a procedure is mastered, there are many situations where an individual clinician may not perform a sufficient number of procedures to maintain their mastery of it. In all of these cases, autonomy could enable clinicians to operate as experts with reduced experience- and fatigue-based variability.

There are also many places in the world where clinical specialties are not represented. While medical robots can provide the capability for a specialist to perform surgery remotely (19), this approach requires dedicated high-bandwidth two-way data transfer. Transmission delays or interruptions compromise safety owing to loss of robot control. In these situations, autonomy could enable stable and safe robot operation even under conditions of low-bandwidth or intermittent communication. Autonomy could also enable the robot to detect and correct for changing patient conditions when communication delays preclude sufficiently fast reaction by the clinician.

Autonomy also enables to an unprecedented degree the capability to share, pool and learn from clinical data (20–22). With teleoperated robots, robot motion data can be easily collected, but motions are being performed by clinicians using different strategies and the information they are using to guide these strategies may not all be known let alone recorded. In contrast, the sensor data streaming to an autonomous controller is well defined as is its control strategy. This combination of well-defined input and output data together with known control strategies will make it possible to standardize and improve autonomous technique based on large numbers of procedural outcomes. In this way, robot autonomy can evolve by applying the cumulative experiential knowledge of its robotic peers to each procedure.

Limitations

We demonstrated that haptic vision combined with biologically-inspired wall following serves as an enabling method for autonomous navigation inside the blood-filled heart. The haptic vision sensor provides a high-resolution view of the catheter tip – which is exactly the view needed for both wall following and device deployment. The sensor, combined with its machine learning and image processing algorithms, enables the robot to distinguish what it is touching and to control its contact force.

After initial wall-following exploration, blinded animals as well as people have been observed to use their environmental map to create shortcuts through free space (33,34,36,37). While not formally studied, we observed similar behavior in our previously published experiments with a handheld instrument (13). During initial use of the instrument, the clinician navigated from the apex to the aortic annulus using wall following. Once familiar with the procedure, however, the clinician would usually attempt to move directly through the center of the ventricle to the annulus. If the annulus was not found where they expected it to be, they would retract the instrument, search for the ventricular wall and then follow it to the annulus. While we did not attempt this more advanced form of navigation, it is worthy of future study since it is likely to lead to faster performance and would facilitate safe navigation through valves.

The most challenging part of our in vivo navigation plan was moving along the aortic valve annulus. While the success rate of the overall navigation task was 95%, complete circumnavigation of the annulus was successful only 66% of the time. This lower success rate is not a limitation of wall following, but rather reflects our decision to make annular navigation only as robust as necessary to achieve high overall task success. In particular, a primary failure mode of circumnavigation corresponded to the catheter tip experiencing simultaneous lateral contact with the ventricular wall and tip contact with the valve. This uncontrolled lateral contact led to the catheter becoming stuck against the ventricular wall. By adding a new control mode considering simultaneous tip and lateral contacts, circumnavigation could be made much more robust. And while we limited navigational sensing to haptic vision in order to evaluate its capabilities, the use of additional sensing modalities and more sophisticated modeling and control techniques is warranted for clinical use.

We employed transapical access in our experiments because it allowed us to focus on the most challenging navigation problem – performing precise motions inside a pulsating 3D volume containing complex moving features. For clinical use, the proposed approach should be extended to enable autonomous navigation starting from the femoral artery. Such vascular navigation is a straightforward extension of our proposed approach and can be performed with our continuous contact control mode. It would involve following 1D curves using a wall-following connectivity graph (Fig. 1D) mapping the branching of the vascular network.

Future Directions

Wall-following autonomous navigation is extensible to many minimally invasive procedures including those in the vasculature, airways, gastrointestinal tract and the ventricular system of the brain. Even in the absence of blood or bleeding, haptic vision, potentially augmented with other sensing modalities, can be used to mediate tissue contact. The sequence of wall contacts defining a navigational plan is based on anatomical topology, but not on anatomical dimensions. Consequently, wall-following plans are largely patient independent, but can be adapted as needed for anatomical variants based on preoperative imaging. As demonstrated with our bioprosthetic aortic valve, previously deployed devices can serve as navigational waypoints and can incorporate visual features as navigational aids.

Clinical Translation

Clinical translation of autonomy does not require that the robot be capable of completing its task in every possible circumstance. Instead, it needs to satisfy the lesser requirement of knowing when it cannot complete a task and should ask for help. Initially, this framework would enable the robot to perform the routine parts of a procedure, as demonstrated here for navigation, and so enable clinicians to focus on planning and performing the critical procedural components. Ultimately, as autonomous technology matures, the robot can expand its repertoire into more difficult tasks.

Materials and Methods

The goal of the study was to investigate the feasibility of performing autonomous catheter navigation for a challenging intracardiac procedure in a pre-clinical porcine in vivo model. To perform this study, we designed and built a robotic catheter and haptic vision sensor. We also designed and wrote control algorithms enabling the catheter to navigate either autonomously or under operator control. For our in vivo experiments, we chose transapical paravalvular leak closure as a demonstration procedure and compared autonomous and operator-controlled navigation times with each other and with prior results using a handheld catheter. For autonomous navigation, we also measured the distance between the final position of the catheter tip and the actual location of the leak.

Robotic catheter

Catheter design

We designed the catheter using Concentric Tube Robot technology in which robots are comprised of multiple needle-sized concentrically-combined pre-curved superelastic tubes. A motorized drive system located at the base of the tubes rotates and telescopically extends the tubes with respect to each other to control the shape of the catheter as well as its tip position (23, 24) (Movie S1). The drive system is mounted on the operating room table using a passively adjustable frame that allows the catheter tip to be positioned and oriented for entry into the apex (Fig. 2B).

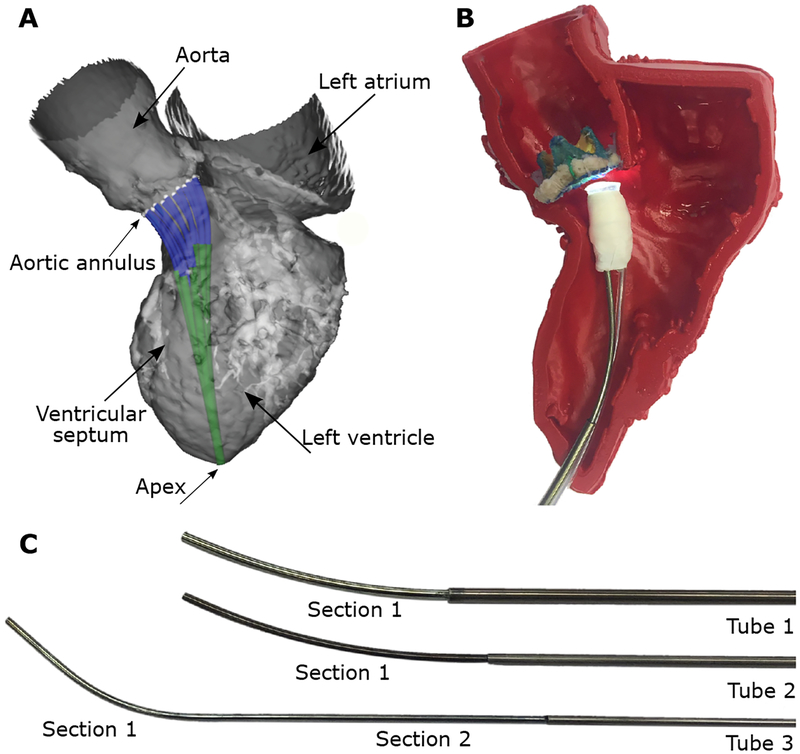

Tools and devices are delivered through the lumen of the innermost robot tube which incorporates a valve and flush system at its proximal end. This system enables the catheter lumen to be flushed with saline to prevent air entry into the heart and to prevent pressurized blood from the heart from entering the lumen of the catheter. We used a design optimization algorithm to solve for the tube parameters based on the anatomical constraints and clinical task (aortic paravalvular leak closure) (25). The anatomical and task constraints were defined using a 3D model of an adult human left ventricle (Fig. 4). Since the relative dimensions of the human and porcine hearts are similar, the resulting design was appropriate for our in vivo experiments. The design algorithm solved for the tube parameters enabling the catheter tip to reach from the apex of the heart to a set of twenty-five uniformly sampled points around the aortic valve annulus without the catheter contacting the ventricular wall along its length. The orientation of the catheter tip was further constrained at the 25 points to be within 10 degrees of orthogonal to the valve plane. The resulting design was comprised of 3 tubes forming 2 telescoping sections with motion as shown in Movie S1. The tube parameters of the robotic catheter are given in Table 1.
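The two reachability constraints stated above (25 uniformly sampled annulus points, tip within 10 degrees of orthogonal to the valve plane) can be expressed compactly. The following is a minimal sketch that only generates the target points and checks the orientation constraint; it does not reproduce the design optimization itself. The annulus radius, the choice of the z axis as the valve-plane normal and the function names are assumptions made for illustration.

```python
import math


def annulus_targets(radius_mm, n_points=25):
    """Uniformly spaced points on a circle lying in the valve plane (z = 0)."""
    return [
        (radius_mm * math.cos(2 * math.pi * k / n_points),
         radius_mm * math.sin(2 * math.pi * k / n_points),
         0.0)
        for k in range(n_points)
    ]


def angle_to_normal_deg(tip_dir, normal=(0.0, 0.0, 1.0)):
    """Angle between the tip direction and the valve-plane normal, in degrees."""
    dot = sum(t * n for t, n in zip(tip_dir, normal))
    mag = math.sqrt(sum(t * t for t in tip_dir)) * math.sqrt(sum(n * n for n in normal))
    cos_angle = max(-1.0, min(1.0, dot / mag))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def orientation_ok(tip_dir, limit_deg=10.0):
    """True if the tip is within limit_deg of orthogonal to the valve plane."""
    return angle_to_normal_deg(tip_dir) <= limit_deg


targets = annulus_targets(radius_mm=11.5)  # hypothetical annulus radius
tilt = math.radians(8.0)
tip_direction = (math.sin(tilt), 0.0, math.cos(tilt))  # tip tilted 8 degrees
print(len(targets), orientation_ok(tip_direction))
```

A candidate tube design would need to satisfy `orientation_ok` at every one of the sampled targets, in addition to the wall-clearance requirement along the catheter length, which this sketch does not model.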

Fig. 4. Algorithmic robotic catheter design.

(A) Computer model of optimized design comprised of 3 tubes shown in adult left ventricle. Robot enters heart through apex and is depicted at 12 locations on the aortic annulus. (B) Fabricated catheter with haptic vision sensor shown inside 3D printed model of heart shown in (A). (C) Disassembled catheter showing its three pre-curved superelastic tubes. Tube parameters are given in Table 1.

Table 1.

Optimized parameter values for 3 tubes comprising the robotic catheter. Tube sections are labeled in Fig. 4B.

| Parameter | Tube 1, Section 1 | Tube 2, Section 1 | Tube 3, Section 1 | Tube 3, Section 2 |
|---|---|---|---|---|
| Outer diameter (mm) | 2.77 | 2.40 | 1.875 | 1.875 |
| Inner diameter (mm) | 2.54 | 2.00 | 1.60 | 1.60 |
| Section length (mm) | 72.0 | 72.0 | 55.0 | 72.0 |
| Radius of curvature (mm) | 150 | 150 | 40.0 | ∞ (straight) |
| Relative bending stiffness | 0.995 | 0.995 | 0.338 | 0.338 |

Haptic vision sensor design

We fabricated the sensor (Fig. 1A) using a 1 mm³ CMOS camera (NanEye Camera System, Awaiba) and a 1.6×1.6mm LED (XQ-B LED, Cree Inc.) encased in an 8mm diameter silicone optical window (OW; QSil 218, Quantum Silicones LLC) molded onto a stainless steel body (13). The optical window diameter was selected to provide a field of view facilitating both autonomous and operator-guided navigation. The sensor also incorporated a 2.5mm diameter working channel for device delivery. The system clamps to the tip of the catheter such that the lumen of the innermost catheter tube aligns with the endoscope working channel. While we have also designed catheters in which the sensor wiring was run through the catheter lumen, in these experiments, the wiring for the camera and LED was run outside the catheter so that the sensor could be replaced without disassembling the robotic catheter.

Computer software design

The software is executed on two PCs. One is used for catheter motion control (Intel® Core™ Quad CPU Q9450@2.66GHz, 4GB RAM) while the second is used to acquire and process images from the haptic vision sensor (Intel® Core™ i7–6700HQ CPU @2.6GHz with 16GB RAM). The two computers exchange information at runtime via TCP/IP. The motion control computer receives real-time heart rate data by serial port (Surgivet, Advisor) and is also connected through USB to a 6DOF joystick (Touch, 3D Systems) that is used during teleoperated control of catheter motion. The motion control computer can execute either the autonomous navigation algorithms or joystick motion commands. In either case, catheter tip motion commands are converted to signals sent to the motor amplifiers of the catheter drive system.

Robotic catheter control

The catheter control code converting desired catheter tip displacements to the equivalent rotations and translations of the individual tubes was written in C++. The code is based on modeling the kinematics using a functional approximation (truncated Fourier series) that is calibrated offline using tip location data collected over the workspace (23). The calibrated functional approximation model has been previously demonstrated to predict catheter tip position more accurately (i.e., smaller average and maximum prediction error) over the workspace compared to the calibrated mechanics-based model (38). Catheter contact with tissue along its length produces unmodeled and unmeasured deformations which must be compensated for via tip imaging. A hierarchical control approach is used (26) to ensure that desired tip position is given a higher priority than desired orientation if both criteria cannot be satisfied simultaneously.

Haptic-vision-based contact classification

To perform wall following, we designed a machine-learning-based image classifier that can distinguish between blood (no contact), ventricular wall tissue, and the bioprosthetic aortic valve. The algorithm uses the bag-of-words approach (27) to separate images into groups (classes) based on the number of occurrences of specific features of interest. During training, the algorithm determines which features are of interest and the relationship between their number and the image class. For training, we used OpenCV to detect features in a set of manually labeled training images. Next, the detected features were encoded mathematically using LUCID descriptors for efficient online computation (28). To reduce the number of features, the optimal feature representatives were identified using k-means clustering. The resulting cluster centers were the representative features used for the rest of the training, as well as for runtime image classification. Having identified the set of representative features, we made a second pass through the training data to build a feature histogram for each image by counting how many times each representative feature appears in the image. The final step was to train a Support Vector Machine (SVM) classifier (29) that learned the relationship between the feature histogram and the corresponding class.

Using the trained algorithm, image classification proceeded by first detecting features and computing the corresponding LUCID descriptors. The features are then matched to the closest representative features and the resulting feature histogram is constructed. Based on the histogram, the SVM classifier predicts the tissue-based contact state. We achieved good results using a small set of training images (~2000 images) with training taking ~4 minutes. Since image classification takes 1ms, our haptic vision system estimates contact state at the frame rate of the camera (45 frames per second). The contact classification algorithm is accurate 97% of the time (tested on 7000 images not used for training) with type I error (false positive) of 3.7% and type II (false negative) of 2.3%.
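The histogram-building step of the bag-of-words pipeline can be sketched as follows. This is an illustration only: descriptors are represented as plain numeric tuples compared by squared Euclidean distance, which is an assumption (the actual LUCID descriptors and their distance measure are not reproduced), and the function names are hypothetical. Feature detection and the SVM stage are omitted.

```python
def nearest_center(descriptor, centers):
    """Index of the representative feature (cluster center) closest to the descriptor."""
    best_index, best_dist = 0, float("inf")
    for i, center in enumerate(centers):
        # Squared Euclidean distance; an assumed stand-in for the real descriptor metric.
        dist = sum((d - c) ** 2 for d, c in zip(descriptor, center))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index

def feature_histogram(descriptors, centers):
    """Count how many image descriptors fall on each representative feature."""
    hist = [0] * len(centers)
    for descriptor in descriptors:
        hist[nearest_center(descriptor, centers)] += 1
    return hist

# Toy example with two representative features and three image descriptors.
centers = [(0.0, 0.0), (10.0, 10.0)]
descriptors = [(1.0, 0.0), (9.0, 9.0), (11.0, 10.0)]
histogram = feature_histogram(descriptors, centers)
```

The resulting histogram is the vector that a trained classifier would map to a contact class (blood, ventricular wall tissue, or valve).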

Continuous-contact navigation algorithm

When the catheter is positioned laterally against cardiac tissue, the flexibility of the catheter can enable continuous contact to be maintained without applying excessive force to the tissue. We used haptic vision to control the amount of tissue contact by controlling catheter motion in the direction orthogonal to the tissue surface. Catheter motion in the plane of the tissue surface was independently controlled so as to produce wall following at the desired velocity and in the desired direction. The controller is initialized with an estimate of wall location so that if it is not initially in tissue contact, it moves toward the wall to generate contact. This occurred in our in vivo experiments during navigation from the apex to the aortic valve. The catheter started in the center of the apex with the haptic vision sensor detecting only blood. It would then move in the direction of the desired wall (Fig. 1D, a→b), specified using valve clock coordinates (Fig. 2C), to establish contact and then follow that wall until it reached the valve.

When the haptic vision sensor is pressed laterally against the tissue, the tissue deforms around the sensor tip so that it covers a portion of the field of view (Fig. 1B). The navigation algorithm adjusts the catheter position orthogonal to the tissue surface to maintain the centroid of the tissue contact area within a desired range on the periphery of the image, typically, 30–40%. We implemented tissue segmentation by first applying a Gaussian filter to reduce the level of noise in the image. Next, we segmented the tissue using color thresholding on the Hue-Saturation-Value (HSV) and the CIE L*a*b* representations of color images. The result was a binary image, where white pixels indicate tissue. Finally, we perform a morphological opening operation to remove noise from the binary image. After segmentation, the tissue centroid is computed and sent to the motion control computer. Image processing speed is sufficient to provide updates at the camera frame rate (45fps).
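A minimal sketch of the last two stages, computing statistics from the binary tissue mask and converting a contact measure into a motion correction normal to the wall, is given below. It assumes the mask has already been produced by the filtering, thresholding, and opening steps. Treating the 30–40% range as a scalar measure normalized to [0, 1], the deadband control law, the gain value, and the function names are all assumptions for illustration, not the implemented controller.

```python
def tissue_stats(mask):
    """Return (area_fraction, centroid) for a binary mask given as rows of 0/1 values.

    The centroid is (row, col) in pixels, or None when no tissue pixel is present.
    """
    count = total = 0
    sum_r = sum_c = 0
    for r, row in enumerate(mask):
        for c, value in enumerate(row):
            total += 1
            if value:
                count += 1
                sum_r += r
                sum_c += c
    if count == 0:
        return 0.0, None
    return count / total, (sum_r / count, sum_c / count)

def normal_step(measure, low=0.30, high=0.40, gain_mm=1.0):
    """Deadband correction along the wall normal; positive means move toward the wall."""
    if measure < low:
        return gain_mm * (low - measure)    # too little contact: press in
    if measure > high:
        return -gain_mm * (measure - high)  # too much contact: back off
    return 0.0                              # inside the desired range: hold

# Toy 2x4 mask in which the right half of the image is tissue.
fraction, centroid = tissue_stats([[0, 0, 1, 1], [0, 0, 1, 1]])
```

Because the correction is zero anywhere inside the desired range, small frame-to-frame fluctuations of the segmented region do not produce motion normal to the wall.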

Intermittent-contact navigation algorithm

When a catheter is stiff along its longitudinal axis and is positioned orthogonal to a tissue surface that moves significantly in the direction of this axis over the cardiac cycle, the contact forces can become sufficiently high so as to result in tissue damage or puncture. To maintain contact forces at safe levels, one approach is to design the catheter so that it can perform high-velocity trajectories that move the robotic catheter tip in synchrony with the tissue (30). We employ an alternative technique requiring only slow catheter motion so as to position the tip such that it is in contact with the tissue for a specified fraction of the cardiac cycle, the contact duty cycle, D, Fig. 1C. As described in the Supplementary Text below, the contact duty cycle is linearly related to the maximum contact force. The intermittent contact mode was used during navigation around the aortic valve annulus.

We implemented intermittent-contact navigation using haptic vision to detect tissue contact and, combined with heart rate data, to compute the contact duty cycle at the frame rate of the camera (45fps). We implemented a controller that adjusts catheter position along its longitudinal axis to drive the contact duty cycle to the desired value. Catheter motion in the plane of the tissue surface was performed either autonomously or by the operator (shared control mode). In the autonomous mode, catheter motion in the tissue plane was performed only during the fraction of the cardiac cycle when the haptic vision sensor indicated that the catheter was not touching tissue. This reduced the occurrence of the catheter tip sticking to the tissue surface during wall following.

Autonomous navigation on the valve annulus

Intermittent contact control was used to control catheter motion orthogonal to the plane of the annulus. The desired value of contact duty cycle was typically set to be ~40%. Thus, 40% of the cardiac cycle was available for image processing (during contact) while motion in the plane of the annulus was performed during the 60% non-contact portion of the cardiac cycle. During contact, the robot detects the blue tangent sutures on the valve (Fig. 2C) using color thresholding in the HSV color space and computes the centroid of the detected sutures. Next, a Hough transform on the thresholded image is used to estimate the tangent of the aortic annulus. During the non-contact portion of the cardiac cycle, the algorithm generates independent motion commands in the radial and tangential directions. In the radial direction, the catheter adjusts its position such that the centroid of the detected sutures is centered in the imaging frame. Motion in the tangential direction is performed at a specified velocity. While navigating around the valve, the robot incrementally builds a map of the location of the annulus in three-dimensional space based on the centroids of the detected sutures and the catheter tip coordinates as computed using the robot kinematic model. The model is initialized with the known valve diameter and the specified direction of approach. By comparing the current tangent with the model, the robot estimates its clock position on the annulus. While not implemented, this model could also be used to estimate the valve tangent and radial position in situations where the sutures are not well detected.
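The independent radial and tangential commands can be sketched in two dimensions as follows. The sketch assumes that image axes are aligned with the in-plane axes of the catheter tip frame, that the radial direction is the tangent rotated by 90 degrees, and that moving toward the suture centroid re-centers it in the image; the gain and the function name are hypothetical. The 2 mm/sec tangential speed and the zero command during contact follow the experimental settings reported for annular circumnavigation.

```python
import math

def annular_command(in_contact, suture_centroid_px, image_center_px,
                    tangent_dir, tangential_speed=2.0, radial_gain=0.01):
    """In-plane velocity command (vx, vy) in mm/sec.

    tangent_dir is the annulus tangent estimated from the image (need not be unit length).
    suture_centroid_px may be None when no sutures were detected.
    """
    if in_contact:
        return (0.0, 0.0)  # in-plane motion only during the non-contact phase
    tx, ty = tangent_dir
    norm = math.hypot(tx, ty)
    tx, ty = tx / norm, ty / norm
    rx, ry = -ty, tx  # radial direction: tangent rotated by 90 degrees (assumed sign)
    vx, vy = tangential_speed * tx, tangential_speed * ty
    if suture_centroid_px is not None:
        ex = suture_centroid_px[0] - image_center_px[0]
        ey = suture_centroid_px[1] - image_center_px[1]
        radial_error = ex * rx + ey * ry  # centroid offset projected on the radial axis
        vx += radial_gain * radial_error * rx
        vy += radial_gain * radial_error * ry
    return (vx, vy)

# Centroid 10 pixels off-center in the radial direction, tangent along image x.
command = annular_command(False, (125, 135), (125, 125), (1.0, 0.0))
```

Projecting the centroid offset onto the radial axis keeps the two commands decoupled: tangential progress is unaffected by how far the sutures sit from the image center.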

Registration of valve rotation with respect to the robot

We assume that paravalvular leaks have been identified in pre-operative imaging, which also indicates their location relative to the features of the bioprosthetic valve, e.g., leaflet commissures. In the ventricular view of the valve annulus provided by haptic vision, such features are hidden. While the model built during annular navigation defines the coordinates of the annulus circle in three-dimensional space, there is no means to refine the initial estimate of where 12 o’clock falls on the circle, i.e., to establish the orientation of the valve about its axis. To enable the robot to refine its orientation estimate, we introduced registration features into the annulus comprised of green sutures located at 4, 8 and 12 o’clock. During annular navigation, whenever the robot detects one of these features, it compares its actual location with the current prediction of the model and updates its estimate of valve rotation accordingly.
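The rotation update can be illustrated with a short sketch. It assumes clock positions are converted to angles at 30 degrees per hour measured from 12 o'clock, and that the detected suture is matched to the nearest of the three registration positions; the function names are hypothetical. When two registration sutures are equally near, the sketch returns `None` because the match is ambiguous.

```python
REGISTRATION_CLOCKS = (4, 8, 12)  # green registration sutures

def clock_to_deg(clock):
    """Clock position to angle in degrees (12 o'clock = 0, 30 degrees per hour)."""
    return (clock % 12) * 30.0

def wrap_deg(angle):
    """Wrap an angle to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def rotation_correction_deg(predicted_deg):
    """Signed correction from the model-predicted angle to the nearest registration suture.

    predicted_deg is where the current model places the catheter when a registration
    suture is detected. Returns None if two sutures are equally close.
    """
    errors = sorted(
        (wrap_deg(clock_to_deg(c) - predicted_deg) for c in REGISTRATION_CLOCKS),
        key=abs,
    )
    if abs(abs(errors[0]) - abs(errors[1])) < 1e-9:
        return None
    return errors[0]

# The model predicts 130 degrees; the nearest suture (4 o'clock) is at 120 degrees.
correction = rotation_correction_deg(130.0)
```

With sutures 120 degrees apart, nearest-suture matching is unambiguous only while the rotation error stays strictly below 60 degrees in magnitude.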

Endothelialization of blue and green sutures on the valve annulus

In clinical use, the sutures would remain visible for several months prior to endothelialization. Thus, they could be used for autonomous repair of paravalvular leaks that occur at the time of valve implantation or soon after – as is the case for transcatheter valves (10).

Autonomous navigation for paravalvular leak closure

The algorithm inputs consisted of the clock-face leak location and the desired ventricular approach direction, also specified as a clock-face position. Starting from just inside the apex of the left ventricle, the catheter moves in the desired approach direction until it detects tissue contact. It then switches to continuous-contact mode and performs wall following in the direction of the valve. When the classifier detects the bioprosthetic valve in the haptic vision image, the controller switches to intermittent-contact mode and computes the minimum-distance direction around the annulus to the leak location based on its initial map of the annulus. As the catheter moves around the annulus in this direction, its map is refined based on the detection of tangent and registration sutures. Once the leak location is reached, the robot controller acts to maintain its position at this location and sends an alert to the operator. Using joystick control, the operator can then reposition the working channel over the leak as needed and then the occluder can be deployed.
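The mode switching and the choice of direction around the annulus can be sketched as a small state machine. The mode and class labels, the tie-breaking rule (clockwise when both directions are equal), and the function names are assumptions for illustration.

```python
def shortest_direction(current_clock, target_clock):
    """Direction and distance (in clock hours) of the shorter path around the annulus."""
    clockwise = (target_clock - current_clock) % 12.0
    counterclockwise = (current_clock - target_clock) % 12.0
    if clockwise <= counterclockwise:
        return ("clockwise", clockwise)
    return ("counterclockwise", counterclockwise)

def next_mode(mode, image_class):
    """Advance the navigation mode based on the classifier output.

    mode is one of 'approach', 'continuous', 'intermittent';
    image_class is one of 'blood', 'tissue', 'valve'.
    """
    if mode == "approach" and image_class == "tissue":
        return "continuous"    # wall reached: start wall following
    if mode in ("approach", "continuous") and image_class == "valve":
        return "intermittent"  # valve reached: switch to annular navigation
    return mode

# Arriving at 11 o'clock with the leak at 2 o'clock.
direction, hours = shortest_direction(11, 2)
```

For example, arriving at 9 o'clock with the leak at 6 o'clock yields a counterclockwise path of three clock hours.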

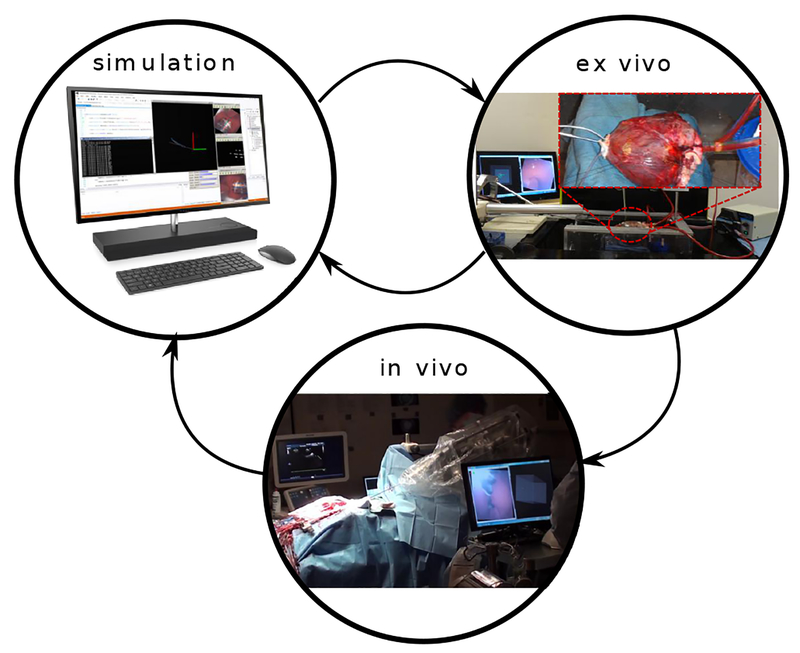

Software development cycle

To develop and test our autonomous navigation algorithms, we implemented a development cycle comprised of three steps: in silico simulation, ex vivo experiments and in vivo experiments (Fig. 5). We created a simulation engine that can replay time-stamped data, comprising haptic vision images and robot trajectories, recorded during in vivo cases. We used the simulation engine to implement new software functionality and to troubleshoot unexpected results from in vivo experiments. After simulation, we tested new functionality on an ex-vivo model comprising an explanted porcine heart, pressurized using a peristaltic pump (Masterflex Pump, 115 VAC). We immobilized the pressurized heart by using sutures to attach it to a fixture. Based on the outcome of the ex-vivo tests, we would either perform additional simulations to refine the software implementation or proceed to in vivo testing. This process was repeated iteratively as each algorithm was developed.

Fig. 5. Software Development Cycle.

In simulation, we replayed data from prior in vivo experiments to evaluate and debug software. New features were first implemented in the simulator to either address previously identified in vivo challenges or to extend robot capabilities. New software was then tested in the ex-vivo model to check the desired functionality and to ensure code stability. Identified problems were addressed by iterating between in silico and ex vivo testing. New software features were then assessed with in vivo testing. The design cycle was then completed by importing the in vivo data into the simulator and evaluating algorithm performance.

In vivo experiments

Surgical Procedure

We created a porcine paravalvular leak model by implanting a custom bioprosthetic device (Fig. 2C) into the aortic valve position in 84.3±4.7 kg Yorkshire swine. The device is designed with three sewing ring gaps evenly distributed around its circumference (120° apart) to produce the areas of paravalvular leakage. The bioprosthetic valve consists of a titanium frame covered by a non-woven polyester fabric. A polypropylene felt sewing ring is sutured to the frame around the annulus. Suture is passed through this ring when the valve is sewn in place inside the heart. Finally, glutaraldehyde-fixed porcine pericardium leaflets are sutured to the frame (31).

Animal care followed procedures prescribed by the Institutional Animal Care and Use Committee. To implant the bioprosthetic valve, we pre-medicated the swine with atropine 0.04 mg/kg IM followed by Telazol 4.4 mg/kg and Xylazine 2.2 mg/kg IV, and we accessed the thoracic cavity through a median sternotomy incision. We acquired epicardial echocardiographic images to determine the size of the valve to be implanted. Next, we initiated cardiopulmonary bypass by placing purse-string sutures for cannulation, cross-clamping the aorta, and infusing cardioplegia solution to induce asystole. We incised the aorta to expose the valve leaflets, which were then removed, and the artificial valve was implanted using nine 2–0 Ethibond valve sutures supra-annularly. At this point, we re-sutured the aortotomy incision, started rewarming, and released the aortic cross-clamp. We maintained cardiopulmonary bypass to provide 35–50% of normal cardiac output to ensure hemodynamic and cardiac rhythm stability. The function of the implanted valve, as well as the leak locations and sizes, was determined by transepicardial short- and long-axis 2D and color Doppler echocardiography. Apical ventriculotomy was then performed, with prior placement of purse-string sutures to stabilize the cardiac apex for the introduction of the robotic catheter. The catheter was introduced through the apex and positioned such that its tip was not in contact with the ventricular walls. All experiments in a group were performed using the same apical catheter position. Throughout the procedure, we continuously monitored arterial blood pressure, central venous pressure, heart rate, blood oxygenation, temperature, and urine output. At the end of the experiment a euthanasia solution was injected, and we harvested the heart for postmortem evaluation.

Autonomous navigation from apex to valve

We performed experiments on 5 animals. For each animal, navigation was performed using three valve approach directions corresponding to 6 o’clock (posterior ventricular wall), 9 o’clock (ventricular septal wall) and 12 o’clock (anterior ventricular wall) (Fig. 2C). Of the 90 total trials, the number performed in the 6, 9 and 12 o’clock directions were 31, 32 and 27, respectively.

Autonomous circumnavigation of aortic valve annulus

Experiments were performed on 3 animals. In the first experiment, a range of contact duty cycles was tested, while in the latter two experiments the contact duty cycle was maintained between 0.3 and 0.4. In all experiments, the tangential velocity was specified as 2 mm/sec during those periods when the tip was not in contact with the valve and 0 mm/sec when in contact.

Autonomous navigation from apex to paravalvular leaks

We performed experiments on 5 animals. As an initial step for all experiments, we built a 3D spatial model of the valve by exploring the valve with the catheter under operator control. We used this model, which is separate from the model built by the autonomous controller, to monitor autonomous navigation. For 3 animals, we also used this model to estimate valve rotation with respect to the robot and provided this estimate as an input to the autonomous navigation algorithm. In 2 animals, valve rotation was estimated autonomously based on the valve model built by the navigation algorithm and its detection of registration sutures.

In each experiment, navigation trials were individually performed for each of the 3 leaks located at 2 (n=28), 6 (n=27) and 10 (n=28) o’clock (Fig. 2C). For each leak location, we selected a clock direction to follow on the ventricular wall such that the catheter would arrive at the valve annulus close to the leak, but far enough away that it would have to pass over registration sutures to reach the leak. In general, this corresponded to approaching the valve at 11, 9 and 1 o’clock to reach the leaks at 2 (clockwise), 6 (counter clockwise) and 10 (counter clockwise) o’clock, respectively. If we observed that along these paths the annulus was covered by valve tissue or a suturing pledget, we instructed the navigation algorithm to approach the leak from the opposite direction. Note that the mitral valve is located from 2 to 5 o’clock; the ventricular wall cannot be followed in these directions to reach the aortic valve. Thus, in one experiment involving operator-specified valve registration, the clockwise approach path was covered by tissue and we chose to approach the leak directly from the 2 o’clock direction rather than start farther away at 6 o’clock.

We designed the registration sutures to be 120° apart under the assumption that valve rotation with respect to the robot would be less than ±60° from the nominal orientation. In one animal in which valve rotation was estimated autonomously, however, the rotation angle was equal to 60°. In this situation, it is impossible for either man or machine to determine whether the error is +60° or −60°. For these experiments, we shifted the approach direction for the leak at 6 o’clock from 9 to 8 o’clock so that the catheter would only see one set of registration sutures along the path to the leak. This ensured that it would navigate to the correct leak.

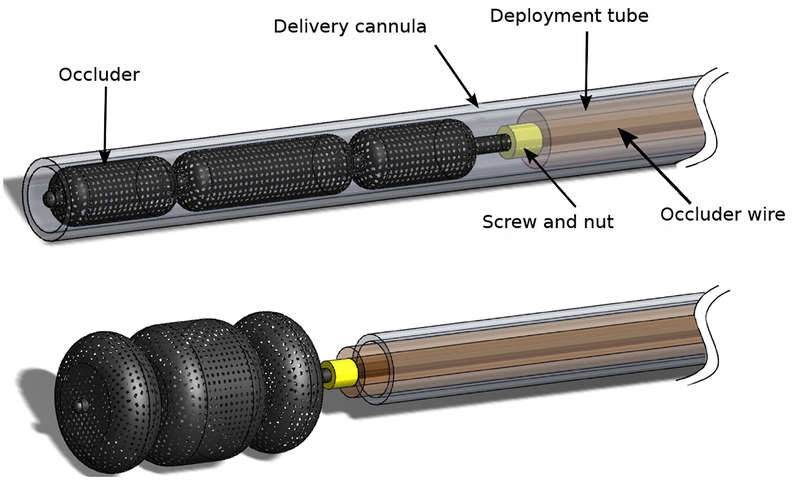

Occluder deployment

After navigation to the desired leak location, the operator took control of the catheter and, as needed, centered the working channel over the leak. A three-lobed vascular occluder (AMPLATZER Vascular Plug II, AGA Medical Corporation), attached to a wire and preloaded inside a delivery cannula, was advanced ~3mm into the leak channel (Fig. 6). The cannula was then withdrawn allowing the occluder to expand inside the leak channel. We then retracted the wire and robotic catheter until the proximal lobe of the occluder was positioned flush with the valve annulus and surrounding tissue. If positioning was satisfactory, the device was released by unscrewing it from the wire. If not, the device was retracted back into the delivery cannula and the procedure was repeated as necessary.

Fig. 6. Occluder deployment system.

The occluder, attached to a wire via a screw connection, is pre-loaded inside a flexible polymer delivery cannula. The delivery cannula is inserted through the lumen of the catheter into the paravalvular leak. A polymer deployment tube is used to push the occluder out of the delivery cannula. Once positioned, the occluder is released by unscrewing the wire.

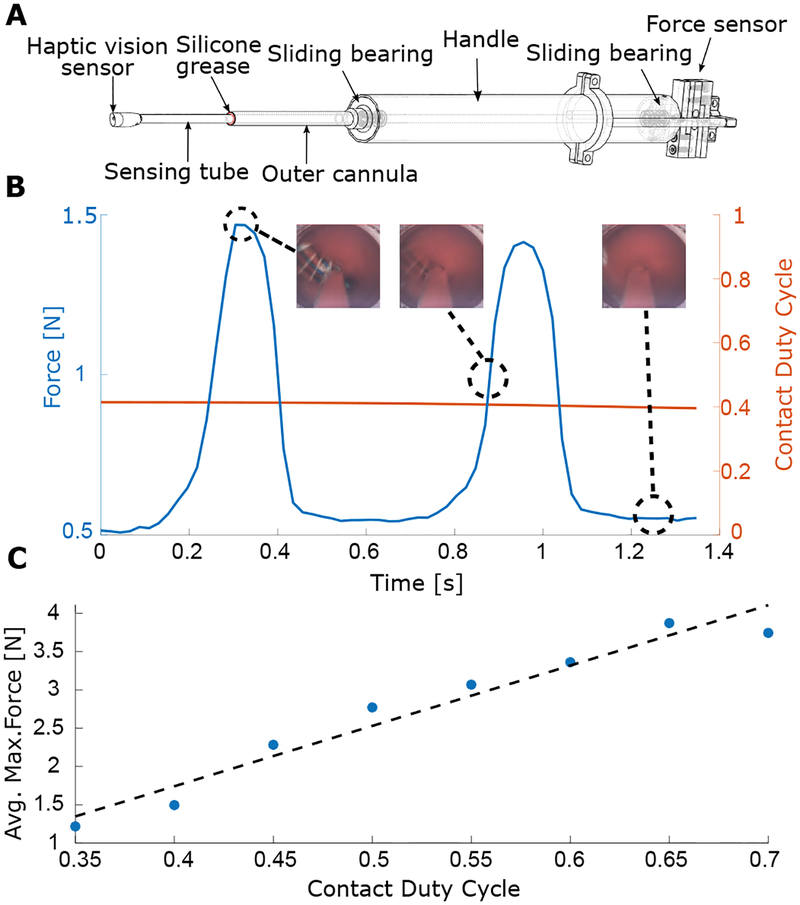

In-vivo calibration of contact duty cycle versus maximum tissue force

To investigate the relationship between maximum contact force and contact duty cycle, we designed a handheld instrument integrating haptic vision and force sensing (Fig. 7). Force sensor integration was inspired by (32). The haptic vision sensor is mounted on a stiff tube that is supported by two polymer sliding bearings mounted inside the proximal handle. The proximal end of the shaft is connected to a force sensor. An outer cannula encloses the sensing shaft and extends from the handle to about 6cm from the sensing tip. When the instrument is inserted into the apex of the heart, the outer cannula is in contact with the apical tissue, but the sensing tube is not. The gap between the outer cannula and sensing tube is filled with silicone grease to prevent blood flow from the heart into the instrument while generating minimal friction on the sensing tube. Calibration experiments indicated that friction due to the bearings and grease is less than ±0.2N.

Fig. 7. Maximum tissue force as a function of contact duty cycle.

(A) We constructed a handheld instrument for the simultaneous measurement of tip contact force and contact duty cycle that combines a haptic vision sensor with a force sensor. (B) We made in vivo measurements of the temporal variations in contact force as a function of contact duty cycle on the aortic valve annulus. The insets show images from the haptic vision sensor at 3 points in the cardiac cycle. Note that the minimum force value is not necessarily zero because a small amount of tip contact with ventricular tissue can occur during systole when the valve moves away from the tip, but the ventricle contracts around it. This white (septal) ventricular tissue can be seen on the left side of the rightmost inset. (C) Maximum contact force as a function of duty cycle. Average values of maximum force are linearly related to contact duty cycle for duty cycles in the range of 0.35–0.7.

We performed in vivo experiments in which we positioned the haptic vision sensor on the bioprosthetic valve annulus in locations where we could be sure that the sensor was experiencing contact only on its tip. At these locations, we collected force, heart rate and haptic vision data (Fig. 7B). By manually adjusting instrument position along its axis, we were able to obtain data for a range of duty cycle values. The image and force data were collected at 45 Hz, and contact duty cycle based on valve contact was computed at each sampling time using a data window of width equal to the current measured cardiac period (~36 images). To remove high-frequency components not present in the force data, the computed duty cycle was then filtered using a 121-sample moving average filter corresponding to ~3.4 heartbeats. The filtered input data and the output data (e.g., Fig. 7B) were then binned using duty cycle intervals of 0.05. Finally, we computed the relationship between filtered contact duty cycle and maximum applied force by averaging the maximum forces for each binned duty cycle value (Fig. 7C). We computed the Pearson correlation coefficient as a measure of the linear relationship between the contact duty cycle and the maximum annular force. The Pearson coefficient was equal to 0.97, which indicates a strong linear relationship. The plot of Fig. 7C indicates that the contact duty cycle range of 0.35–0.45 that we used in most of our experiments corresponded to a maximum force of 1.25–2.3 N.
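The binning and correlation steps can be sketched as follows. The moving average filter is omitted, the bin labels are taken as the lower edge of each 0.05-wide interval (an assumption), the data values are invented solely to exercise the functions, and the function names are hypothetical.

```python
import math
from collections import defaultdict

def bin_mean_max_force(duty_cycles, max_forces, bin_width=0.05):
    """Average the maximum force within each duty cycle bin.

    Returns a dict mapping the lower edge of each bin to the mean force in that bin.
    """
    bins = defaultdict(list)
    for duty, force in zip(duty_cycles, max_forces):
        # Small offset guards against floating-point values just below a bin edge.
        bins[math.floor(duty / bin_width + 1e-9)].append(force)
    return {round(k * bin_width, 10): sum(v) / len(v) for k, v in sorted(bins.items())}

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Invented sample values, not measured data.
binned = bin_mean_max_force([0.36, 0.38, 0.41, 0.44], [1.0, 2.0, 2.0, 4.0])
r = pearson(list(binned.keys()), list(binned.values()))
```

The window length quoted in the text is consistent with the sampling figures: 121 samples divided by ~36 samples per cardiac period gives ~3.4 heartbeats.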

Statistical analysis

MATLAB® (version R2017b) statistical subroutines were used to analyze the data and perform all statistical tests. We compared time duration for each navigation mode (i.e., handheld, teleoperated, autonomous) for the tasks of navigating from the apex to the aortic annulus, navigating around the valve annulus, and navigating from the apex to the leak. We also compared occluder deployment times for each navigation mode. Groups, corresponding to different navigation modes, have unequal sample sizes and sample variances. We used Levene’s test to evaluate equality of variances. With no evidence of normally distributed time duration and more than two groups, we used the Kruskal-Wallis non-parametric test to check whether there are statistically significant time differences among groups. In experiments with statistical significance, we compared pairs of groups using the Mann-Whitney U test with Bonferroni correction. Data lying more than 1.5×IQR below the 25th percentile (Q1) or above the 75th percentile (Q3), where IQR=Q3–Q1 is the interquartile range, are considered outliers. Fisher’s exact test was used to compare success rates between different groups in the case of paravalvular leak closure. Statistical significance was assessed at the 5% significance level (p<0.05).
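The outlier rule and the Bonferroni adjustment can be illustrated with a short sketch. The analysis itself was performed in MATLAB; the Python version below is only an illustration, and its quartile convention (the "inclusive" method of the standard library) may differ slightly from MATLAB's. The sample durations are invented and the function names are hypothetical.

```python
import statistics

def iqr_outliers(data):
    """Values lying more than 1.5 x IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [x for x in data if x < low or x > high]

def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold after Bonferroni correction."""
    return alpha / n_comparisons

# Invented durations in seconds; 100 is flagged as an outlier.
outliers = iqr_outliers([1, 2, 3, 4, 5, 6, 7, 8, 100])

# Three navigation modes give three pairwise comparisons.
pairwise_alpha = bonferroni_alpha(0.05, 3)
```

With three navigation modes compared pairwise, each Mann-Whitney U test would therefore be judged against a threshold of 0.05/3 rather than 0.05.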

Supplementary Material

Acknowledgments:

We thank R. Howe for his suggestions on how to improve the manuscript. We thank A. Nedder, C. Pimental, M. Woomer, K. Connor, M. Bryant, W. Regan, and N. Toutenel for assistance with the in vivo experiments.

Funding: This work was supported by the National Institutes of Health under grant R01HL124020. B.R. was partially supported by ANR/Investissement d’avenir program (Labex CAMI, ANR-11-LABX-0004).

Footnotes

Competing interests: P.E.D., M.M., B.R., and Z.M. are inventors on a U.S. Patent application 15/158,475 held by Boston Children’s Hospital that covers the optical imaging technique.

Data and materials availability: All the data needed to evaluate the study are in the main text or in the Supplementary Materials. Contact P.E.D. for additional data or materials.

References and Notes:

- 1. Cooper MA, Hutfless S, Segev DL, Ibrahim A, Lyu H, Makary MA, Hospital level under-utilization of minimally invasive surgery in the United States: retrospective review. Br. Med. J 349, g4198 (2014).

- 2. Reardon MJ, Van Mieghem NM, Popma JJ, Kleiman NS, Søndergaard L, Mumtaz M, Adams DH, Deeb GM, Maini B, Gada H, Chetcuti S, Gleason T, Heiser J, Lange R, Merhi W, Oh JK, Olsen PS, Piazza N, Williams M, Windecker S, Yakubov SJ, Grube E, Makkar R, Lee JS, Conte J, Vang E, Nguyen H, Chang Y, Mugglin AS, Serruys PWJC, Kappetein AP, Surgical or Transcatheter Aortic-Valve Replacement in Intermediate-Risk Patients. N. Engl. J. Med 376, 1321–1331 (2017).

- 3. Kiefer P, Meier S, Noack T, Borge MA, Ender J, Hoyer A, Mohr FW, Seeburger J, Good 5-Year Durability of Transapical Beating Heart Off-Pump Mitral Valve Repair With Neochordae. Ann. Thorac. Surg 106, 440–445 (2018).

- 4. Tobis J, Shenoda M, Percutaneous treatment of patent foramen ovale and atrial septal defects. J. Am. Coll. Cardiol 60, 1722–1732 (2012).

- 5. Stefanini GG, Holmes DR, Drug-Eluting Coronary-Artery Stents. N. Engl. J. Med 368, 254–265 (2013).

- 6. Lederle FA, Freischlag JA, Kyriakides TC, Matsumura JS, Padberg FT Jr., Kohler TR, et al., Long-term comparison of endovascular and open repair of abdominal aortic aneurysm. N. Engl. J. Med 367, 1988–1997 (2012).

- 7. Mitchinson B, Martin CJ, Grant RA, Prescott TJ, Feedback control in active sensing: rat exploratory whisking is modulated by environmental contact. Proc. R. Soc. Lond. B Biol. Sci 274, 1035–1041 (2007).

- 8. Creed R, Miller J, Interpreting animal wall-following behavior. Experientia 46, 758–761 (1990).

- 9. Patullo B, Macmillan D, Corners and bubble wrap: the structure and texture of surfaces influence crayfish exploratory behaviour. J. Exp. Biol 209, 567–575 (2006).

- 10. Eleid MF, Cabalka AK, Malouf JF, Sanon S, Hagler DJ, Rihal CS, Techniques and outcomes for the treatment of paravalvular leak. Circ. Cardiovasc. Interv 8, e001945 (2015).

- 11. Nietlispach F, Maisano F, Sorajja P, Leon MB, Rihal C, Feldman T, Percutaneous paravalvular leak closure: chasing the chameleon. Euro. Heart J 37, 3495–3502 (2016).

- 12. Ruiz C, Jelnin V, Kronzon YI, Dudiy R, Del Valle-Fernandez BN, Einhorn TLP, Chiam C, Martinez R, Eiros G, Roubin H, Cohen A, Clinical outcomes in patients undergoing percutaneous closure of periprosthetic paravalvular leaks. J. Am. Coll. Cardiol 58, 2210–2217 (2011).

- 13. Rosa B, Machaidze Z, Mencattelli M, Manjila S, Shin B, Price K, Borger M, Thourani V, Del Nido P, Brown D, Baird C, Mayer JE Jr, Dupont PE, Cardioscopically guided beating heart surgery: paravalvular leak repair. Ann. Thorac. Surg 104, 1074–1079 (2017).

- 14. Barbash GI, Glied SA, New technology and health care costs—the case of robot-assisted surgery. N. Engl. J. Med 363, 701–704 (2010).

- 15. Hu JC, Gu X, Lipsitz SR, Barry MJ, D’Amico AV, Weinberg AC, Keating NL, Comparative effectiveness of minimally invasive vs open radical prostatectomy. J. Am. Med. Assoc 302, 1557–1564 (2009).

- 16. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PCW, Supervised autonomous robotic soft tissue surgery. Sci. Transl. Med 8, 337ra364–337ra364 (2016).

- 17. Yang G-Z, Cambias J, Cleary K, Daimler E, Drake J, Dupont PE, Hata N, Kazanzides P, Martel S, Patel RV, Santos VJ, Taylor RH, Medical robotics—Regulatory, ethical, and legal considerations for increasing levels of autonomy. Sci. Robotics 2, 8638 (2017).

- 18. Yang G-Z, Bellingham J, Dupont PE, Fischer P, Floridi L, Full R, Jacobstein N, Kumar V, McNutt M, Merrifield R, Nelson BJ, Scassellati B, Taddeo M, Taylor R, Veloso M, Wang ZL, Wood R, The grand challenges of Science Robotics. Sci. Robotics 3, eaar7650 (2018).

- 19. Marescaux J, Leroy J, Gagner M, Rubino F, Mutter D, Vix M, Butner SE, Smith MK, Transatlantic robot-assisted telesurgery. Nature 413, 379–380 (2001).

- 20. Obermeyer Z, Emanuel EJ, Predicting the Future – Big Data, Machine Learning, and Clinical Medicine. N. Engl. J. Med 375, 1216–1219 (2016).

- 21. Porter ME, Larsson S, Lee TH, Standardizing Patient Outcomes Measurement. N. Engl. J. Med 374, 504–506 (2016).

- 22. Maier-Hein L, Vedula SS, Speidel S, Navab N, Kikinis R, Park A, Eisenmann M, Feussner H, Forestier G, Giannarou S, Hashizume M, Katic D, Kenngott H, Kranzfelder M, Malpani A, März K, Neumuth T, Padoy N, Pugh C, Schoch N, Stoyanov D, Taylor R, Wagner M, Hager GD, Jannin P, Surgical data science for next-generation interventions. Nat. Biomed. Eng 1, 691–696 (2017).

- 23. Dupont PE, Lock J, Itkowitz B, Butler E, Design and control of concentric tube robots. IEEE Trans. Robot 26, 209–225 (2010).

- 24. Webster R, Romano J, Cowan N, Mechanics of precurved tube continuum robots. IEEE Trans. Robot 25, 67–78 (2009).

- 25. Bergeles C, Gosline AH, Vasilyev NV, Codd PJ, del Nido PJ, Dupont PE, Concentric tube robot design and optimization based on task and anatomical constraints. IEEE Trans. Robot 31, 67–84 (2015).

- 26.Sentis L, Khatib O, Synthesis of whole-body behaviors through hierarchical control of behavioral primitives. Int. J. Hum. Robot 2, 505–518 (2005).

- 27.Fei-Fei L, Perona P, A Bayesian hierarchical model for learning natural scene categories. Computer Vision and Pattern Recognition, IEEE Computer Society Conference on, 2, 524–531 (2005).

- 28.Ziegler A, Christiansen E, Kriegman D, Belongie SJ, Locally uniform comparison image descriptor. Adv. Neural. Inf. Process. Syst 1–9 (2012).

- 29.Cortes C, Vapnik V, Support-vector networks. Mach. Learn 20, 273–297 (1995).

- 30.Kesner S, Howe RD, Robotic catheter cardiac ablation combining ultrasound guidance and force control. Int. J. Rob. Res 33, 631–644 (2014).

- 31.Rosa B, Machaidze Z, Shin B, Manjila S, Brown D, Baird C, Mayer J Jr, Dupont PE, A low cost bioprosthetic semilunar valve for research, disease modeling and surgical training applications. Interact. Cardiovasc. Thorac. Surg ivx189 (2017).

- 32.Kesner SB, “Robotic Catheters for Beating Heart Surgery,” thesis, Harvard University, Cambridge, MA (2012).

- 33.Avni R, Tzvaigrach Y, Eilam D, Exploration and navigation in the blind mole rat (Spalax ehrenbergi): global calibration as a primer of spatial representation. J. Exp. Biol 211, 2817–2826 (2008).

- 34.Sharma S, Coombs S, Patton P, Burt de Perera T, The function of wall-following behaviors in the Mexican blind cavefish and a sighted relative, the Mexican tetra (Astyanax). J. Comp. Physiol. A 195, 225–240 (2009).

- 35.Patronik N, Ota T, Zenati M, Riviere C, A Miniature Mobile Robot for Navigation and Positioning on the Beating Heart. IEEE Trans. Robot 25, 1109–1124 (2009).

- 36.Kallai J, Makany T, Csatho A, Karadi K, Horvath D, Nadel L, Jacobs J, Cognitive and Affective Aspects of Thigmotaxis Strategy in Humans. Behav. Neurosci 121, 21–30 (2007).

- 37.Yaski O, Portugali J, Eilam D, The dynamic process of cognitive mapping in the absence of visual cues: human data compared with animal studies. J. Exp. Biol 212, 2619–2626 (2009).

- 38.Ha J, Fagogenis G, Dupont PE, Effect of Path History on Concentric Tube Robot Model Calibration. Proc. Hamlyn Symposium on Medical Robots 77–78 (2017).
