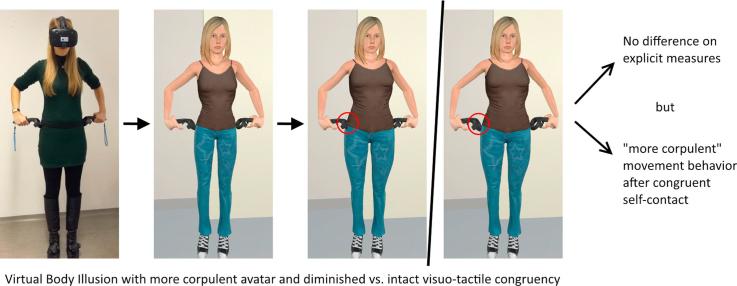

Graphical abstract

Keywords: Full body ownership illusion, Visuo-tactile congruency, Body schema, Movement behavior

Highlights

- Visuo-tactile congruency influences the body schema, but not the body image.
- Movement behavior may provide a window into the unconscious body schema.
- We present novel methods to distort avatars and Euclidean space in virtual reality.

Abstract

Previous research showed that full body ownership illusions in virtual reality (VR) can be robustly induced by providing congruent visual stimulation, and that congruent tactile experiences provide a dispensable extension to an already established phenomenon. Here we show that visuo-tactile congruency indeed does not add to already high measures for body ownership on explicit measures, but does modulate movement behavior when walking in the laboratory. Specifically, participants who took ownership over a more corpulent virtual body with intact visuo-tactile congruency increased safety distances towards the laboratory’s walls compared to participants who experienced the same illusion with deteriorated visuo-tactile congruency. This effect is in line with the body schema more readily adapting to a more corpulent body after receiving congruent tactile information. We conclude that the action-oriented, unconscious body schema relies more heavily on tactile information compared to more explicit aspects of body ownership.

1. Introduction

Virtual body illusions, i.e. the perception that a fake or virtual body is one’s own, have attracted attention in psychology, neurology, neuroscience and philosophy (Lenggenhager et al., 2007, Blanke, 2012, Blanke and Metzinger, 2009) as a tool to investigate the body image and bodily self-consciousness. The field originated in the induction of illusory ownership over artificial hands (the so-called rubber hand illusion) using physical hand models (Botvinick and Cohen, 1998, Tsakiris et al., 2006), but has since extended to inducing ownership over whole bodies (Petkova & Ehrsson, 2008) and to employing virtual reality technology to vary aspects of virtual bodies and experimental conditions more flexibly (Slater, 2009, Kilteni et al., 2012).

With regard to ownership over fake hands, several studies emphasized the sense of touch and reported clear effects of this modality even on implicit dimensions of the ownership experience: when the fake and real hand were stroked in synchrony and participants were later asked to point to a certain location in space, they did so as if their hand were now located at the position of the fake hand (Botvinick and Cohen, 1998, Ehrsson et al., 2004, Austen et al., 2004, Tsakiris and Haggard, 2005). Such proprioceptive drift was absent, or weaker, when the fake and real hand were stroked asynchronously, i.e. when visuo-tactile congruency was broken. Moreover, self-touch was found to elicit stronger feelings of ownership over a virtual hand than being touched by another person (Hara et al., 2015).

Research on illusory ownership over whole bodies further examined the role of touch in the strength of the illusion. In one study (Lenggenhager et al., 2007), participants saw a mannequin or their own body from behind using a head mounted display (HMD). If the back of the body presented in front of them was stroked synchronously with their physical back, participants reported the location of their self to drift towards the body seen in front of them. Furthermore, when participants were passively displaced after the stroking and subsequently asked to return to their initial position, they chose a position closer to the fake body after synchronous than after asynchronous stroking, again demonstrating a drift in self-location on a behavioral level. Interestingly, drifts in self-location can also occur in neurological conditions and have been linked to the temporo-parietal junction (TPJ) both in patients and in the healthy population (Ionta et al., 2011).

While these studies showed a drift in the location of the hand or the self as a result of identification with a touched body or body part, relatively little is known about the role of tactile sensations for other aspects of the bodily self. Instead, several studies employing full body ownership illusions (where the artificial body is seen from a first-person perspective, not a third-person perspective) found that congruent visual perspective was sufficient to induce the illusion, and that congruent touch did not provide any further enhancement (Slater et al., 2010, Maselli and Slater, 2013, Piryankova et al., 2014, Jong et al., 2017). By contrast, Normand, Giannopoulos, Spanlang, and Slater (2011) as well as Bovet, Debarba, Herbelin, Molla, and Boulic (2018) did find that participants reported lower ownership over a virtual body after asynchronous touch. In these studies, however, the asynchronous condition was designed to be grossly asynchronous, with touch occurring at a visual distance of 7.5 cm, or with no correlation whatsoever between the real and virtual hand’s movement. Furthermore, synchronous and asynchronous experiences were alternated within each participant, which may have induced social desirability effects and calls into question the generalizability of these findings to more subtle experimental variations in between-group designs.

Importantly, most studies investigating body ownership did so by explicitly asking participants to rate their sense of ownership on a scale or to adjust the size of an aperture until a virtual body would fit through (Piryankova et al., 2014). In some cases, heart-rate deceleration in response to a threat cue in virtual reality was used as an implicit test of body ownership (Slater et al., 2010). What remains mostly unexplored, however, is the influence of body ownership on action-related representations of the body known as the body schema (de Vignemont, 2010). The concept of a (largely unconscious) body schema, which is more widely agreed upon than the vaguely defined notion of the (more conscious) body image, has fruitfully guided research in neurology and neuropsychology for decades, but has not yet been fully embraced in the study of virtual body illusions. A clear example of the distinction between body image and body schema comes from patients suffering from Anorexia Nervosa (AN), a disorder known to encompass body representation disturbances (Stice, 2002) which may be alleviated using full body ownership illusions (Keizer, van Elburg, Helms, & Chris Dijkerman, 2016). In an intriguing study by Keizer et al. (2013), AN patients and (to a lesser extent) healthy controls overestimated their shoulder width when asked to draw it on a whiteboard, a common measure of the body image. A larger difference between AN patients and healthy controls was, however, found on an implicit behavioral task in which participants had to pass through a narrow door-like opening. Here, AN patients rotated their shoulders in a manner consistent with having wider shoulders than healthy controls, demonstrating a tangible distortion in the unconscious body schema.

The present study generally follows the approach used by Keizer et al. (2013), but transfers it to a virtual scenario in which (healthy) participants take ownership over a virtual body. The virtual body was designed to be more corpulent than the physical body for all participants – an alteration which is known not to deteriorate the strength of the illusion (Piryankova et al., 2014). In one experimental group, this distortion led to a reduced visuo-tactile congruency when participants touched their own hips or belly, while in the other group, we used a novel geometric procedure to keep visuo-tactile congruency intact in spite of an enlargement of the virtual body. We hypothesized that the experience of visuo-tactile congruency would lead to higher levels of body ownership. We furthermore hypothesized that this heightened level of ownership would be less evident in explicit measures, but rather become tangible in behavioral markers as a result of an updated body schema.

2. Materials and methods

2.1. Participants

Forty participants (twenty female) took part in this study. The sample size and experimental procedure were determined a priori and were pre-registered (AsPredicted #11746, see https://aspredicted.org/8mj7g.pdf). We used stratified sampling to achieve even distributions of gender in the two experimental groups. Specifically, we defined prior to the experiment that both experimental groups should consist of 10 female and 10 male participants. Participants from both genders were randomly assigned to one of the remaining experiment slots, 10 of which were reserved for each condition. For both genders separately, enrollment for the experiment was closed after a sufficient number of participants was tested. Two participants had a thinness grade 1 (Body Mass Index (BMI) = 17.58 and 18.36), which is defined as a BMI below 18.5 (Kopelman, 2000). No participant had thinness grade 2 or 3 (BMI below 17 and 16, respectively). One participant had light adiposity (BMI = 31.91), defined as BMI above 30. All participants had normal or corrected-to-normal vision. The study conformed to the principles expressed in the Declaration of Helsinki (version 2008) and all participants provided written informed consent. Participants in the two experimental groups did not differ in age, weight, height, BMI, waist circumference, hip circumference, waist-to-hip-ratio (WHR) or shoulder width (see Table 1).
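The BMI cut-offs used above can be made concrete in a short sketch. It applies only the thresholds stated in this paragraph (after Kopelman, 2000); the function names are hypothetical, and values between 18.5 and 30 are deliberately left unclassified because the text does not name those categories.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body Mass Index: weight in kilograms divided by squared height in meters."""
    return weight_kg / height_m ** 2


def bmi_category(value: float) -> str:
    """Label a BMI value using only the cut-offs named in the text."""
    if value < 16:
        return "thinness grade 3"
    if value < 17:
        return "thinness grade 2"
    if value < 18.5:
        return "thinness grade 1"
    if value > 30:
        return "adiposity"
    # 18.5 to 30: categories not reported in this study.
    return "not classified here"


if __name__ == "__main__":
    # The three participants singled out in the text.
    for value in (17.58, 18.36, 31.91):
        print(value, bmi_category(value))
```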

Table 1.

Demographics and body measures in the two experimental groups. VTC = visuo-tactile congruency. Groups were compared using a t-test for variables which are normally distributed (determined using the Shapiro–Wilk test) and using a Wilcoxon rank-sum test for variables which are not normally distributed. Note: BMI = Body Mass Index, WHR = Waist-To-Hip Ratio. Age is given in years, weight in kg, BMI in kg/m², and height, circumferences and shoulder width in cm; WHR is unitless.

| Measure | VTC lost: Range | VTC lost: M | VTC lost: SD | VTC preserved: Range | VTC preserved: M | VTC preserved: SD | Group comparison |
|---|---|---|---|---|---|---|---|
| Age | [20.00, 30.00] | 24.15 | 2.68 | [19.00, 28.00] | 22.80 | 2.48 | t(38) = 1.65, p = .11 |
| BMI | [17.58, 31.91] | 22.86 | 3.84 | [19.03, 29.70] | 22.73 | 2.69 | W = 183.00, p = .66 |
| WHR | [1.02, 1.44] | 1.21 | 0.10 | [0.97, 1.41] | 1.20 | 0.11 | t(38) = 0.52, p = .61 |
| Hips | [82.00, 114.00] | 98.05 | 8.62 | [83.00, 114.00] | 95.40 | 7.26 | t(38) = 1.05, p = .30 |
| Waist | [69.00, 111.00] | 81.45 | 10.91 | [68.00, 112.00] | 80.65 | 11.97 | W = 215.00, p = .69 |
| Shoulders | [38.00, 51.00] | 44.25 | 4.48 | [37.00, 51.00] | 44.65 | 4.22 | W = 189.50, p = .79 |
| Weight | [45.00, 96.00] | 68.00 | 15.04 | [53.00, 92.00] | 68.10 | 11.66 | t(38) = −0.02, p = .98 |
| Height | [155.00, 186.00] | 171.75 | 8.93 | [153.00, 186.00] | 172.65 | 7.99 | t(38) = −0.34, p = .74 |
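Table 1 reports Student’s t with df = 38 (20 participants per group) for normally distributed measures and W for the others. As a sketch of how these two statistics are computed, assuming W follows the convention of R’s `wilcox.test` (the analyses were run in R, see Section 2.4), the functions below compute the pooled-variance t and the rank-sum W, with midranks for ties. The function names are hypothetical; the Shapiro–Wilk decision step and the p-values are left to a statistics package and are not shown.

```python
import math
from statistics import mean, variance


def midranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of positions i..j, as 1-based rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def rank_sum_w(x, y):
    """Rank-sum W: rank sum of x in the pooled sample minus n_x(n_x + 1)/2."""
    ranks = midranks(list(x) + list(y))
    r_x = sum(ranks[: len(x)])
    return r_x - len(x) * (len(x) + 1) / 2


def pooled_t(x, y):
    """Student's two-sample t with pooled variance; df = n_x + n_y - 2."""
    nx, ny = len(x), len(y)
    sp2 = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / math.sqrt(sp2 * (1 / nx + 1 / ny))
    return t, nx + ny - 2
```

With two groups of 20, `pooled_t` returns df = 38, matching the t(38) entries in the table.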

2.2. Apparatus and software

The VR equipment consisted of an HTC Vive system. The system’s HMD had a resolution of 2160 × 1200 pixels and a 110° field of view. One HTC Vive tracker was attached to each participant’s back and one to each foot in order to continuously track their position and rotation in space, while the head and both hands were tracked by the HMD itself and by the Vive controllers which participants held in their hands. We used a TPCAST Wireless Adapter instead of the standard cable to handle communication between the VR system and the stimulus PC, allowing participants to move more freely in the laboratory room. The area of the laboratory in which participants were able to move covered about 4.50 m × 4.80 m. Within this area, two tables were placed as obstacles.

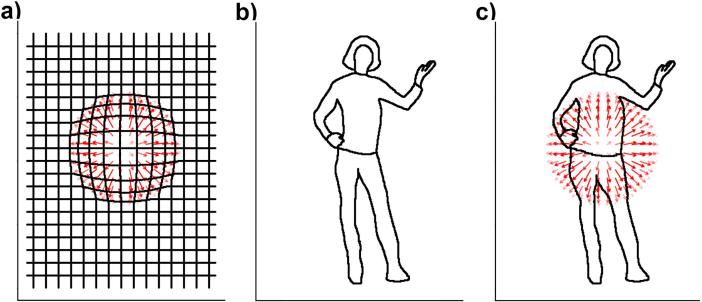

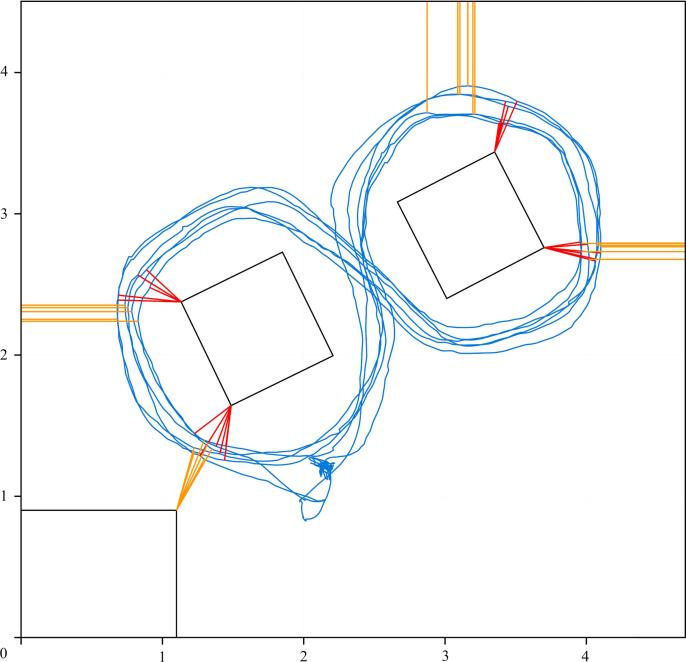

The experimental software was developed using Unity 3D (unity3d.com). Sixteen virtual characters varying in gender, race and physical appearance were designed using Autodesk Character Generator (charactergenerator.autodesk.com/). Real-time motion capture was achieved by mapping the positions of all trackers onto the virtual characters using an inverse kinematics algorithm (Aristidou & Lasenby, 2011, root-motion.com/). The characters’ proportions were adapted within Unity 3D using in-house software available at github.com/mariusrubo/Unity-Humanoid-AdjustProportions. This software allows adapting a humanoid character’s total size, leg length, arm length, waist width and hip width; here, it was used to align the proportions of a virtual avatar with those of a participant’s physical body, but also to make avatars more corpulent at the cost of visuo-tactile congruency. Distortions of Euclidean space in the virtual scenario were performed using in-house software available at github.com/mariusrubo/Unity-Vertex-Displacements and described in Rubo and Gamer (2018). This software allows altering the proportions of a virtual body while keeping the coherence of the arrangement (i.e., the information on which body parts or objects are touching) in place, i.e. it preserves visuo-tactile congruency. The underlying algorithm can be described as a lens effect displacing all points which make up the shape of an avatar (or, more generally, all points in a virtual scenario) within a certain range from the center of the lens. Fig. 1 demonstrates this effect schematically in 2D, Eq. (1) provides a mathematical description of the lens effect in 3D space, and Fig. 2 compares the distortion effects obtained from the two approaches as they appear in the virtual scenario.

(1)

where

- $P$ is the center of the lens,
- $Q$ =
- $s$ describes the strength of the lens effect,
- $t$ describes an inverse of the size of the lens.
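To illustrate why distorting space (rather than the avatar’s mesh) keeps self-contact intact, the sketch below implements a radial lens with the same ingredients named above: a center P, a strength s and an inverse size t. It is not Eq. (1): the Gaussian fall-off, the function name `lens_displace` and all numeric values are hypothetical assumptions chosen for illustration.

```python
import math

Vec3 = tuple[float, float, float]


def lens_displace(q: Vec3, p: Vec3, s: float, t: float) -> Vec3:
    """Push point q radially away from the lens center p.

    Hypothetical Gaussian fall-off (not Eq. (1)): the displacement grows with
    the strength s and fades with squared distance from p; a larger t gives a
    smaller lens.
    """
    d = [qi - pi for qi, pi in zip(q, p)]
    r2 = sum(di * di for di in d)
    gain = 1.0 + s * math.exp(-t * r2)
    return tuple(pi + gain * di for pi, di in zip(p, d))


if __name__ == "__main__":
    center = (0.0, 1.0, 0.0)    # hypothetical lens center inside the torso (m)
    contact = (0.18, 1.0, 0.0)  # hand touching the hip: one physical point
    s, t = 0.15, 8.0            # hypothetical strength and inverse lens size

    # Space distortion: hip vertex and controller share the same mapping.
    hip_space = lens_displace(contact, center, s, t)
    hand_space = lens_displace(contact, center, s, t)

    # Mesh distortion: only the avatar's hip vertex is displaced.
    hip_mesh = lens_displace(contact, center, s, t)
    hand_mesh = contact

    print("gap with space distortion:", math.dist(hip_space, hand_space))
    print("gap with mesh distortion:", math.dist(hip_mesh, hand_mesh))
```

Because every point of the scene, including the tracked controllers, passes through the same function, a hand and a hip that coincide physically also coincide virtually, whereas deforming only the mesh opens a gap (the controller appears inside the body). For this particular fall-off, the radial profile stays monotonic as long as s is below roughly 2.2, so the mapping does not fold space onto itself.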

Fig. 1.

2D visualization of the space distortion effect. Red arrows indicate offsetting of points in the lens effect. The grid in (a) shows the effect of the lens on originally straight lines, which are bent but remain continuous. The schematic human body shown in (b) is adapted along the same logic in (c). Here, the lens effect results in the body appearing more corpulent, but the contact between the hand and the hip is preserved. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

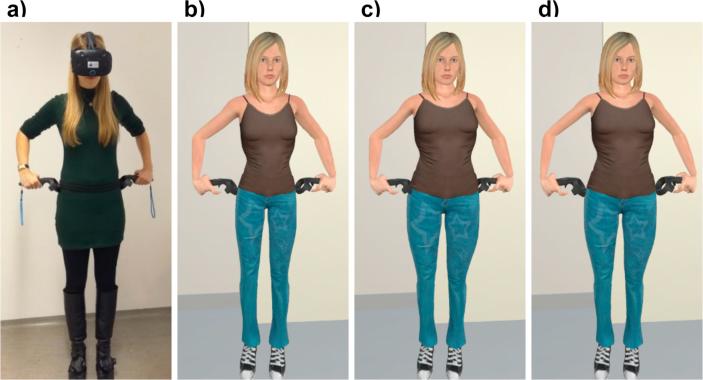

Fig. 2.

Comparison of the two types of distortions used to make virtual avatars more corpulent than participants’ physical bodies. In an intermediate step which is not visible to the participant, the participant’s body posture (a) is matched on a virtual avatar with corresponding proportions (b). The avatar is then made more corpulent than the participant’s physical body either by distorting the virtual body’s torso (c), which results in the controllers intruding into the avatar’s hips (impaired visuo-tactile congruency), or by distorting Euclidean space (d), which displaces the controllers accordingly and thereby keeps visuo-tactile congruency intact.

The virtual scenario consisted of a copy of the real laboratory room, with the walls and tables positioned in space accordingly. This means that any action involving tracked objects resulted in a congruent action in the virtual scenario. For instance, if a (physical) controller was placed on one of the (physical) tables, the virtual controller representation matched this movement. Unlike the physical laboratory room, the virtual room was furthermore equipped with a mirror which allowed participants to view their entire virtual body and which could be switched on and off by the experimenter during the different phases of the experiment.

2.3. Procedure

Participants were invited to the laboratory individually and informed about the purpose of the study. The explanation given to the participants was accurate, with the exception that they were led to believe that the main outcome measure was the sensation of balance problems during walking in virtual reality, whereas the true main outcome measure was their movement behavior. We misled participants in this respect because awareness of the true main outcome measure might lead some participants to deviate from their natural, unadulterated behavioral patterns.

Upon completing an informed consent form, participants were connected to the VR equipment. With all trackers in place, but not yet wearing the HMD, participants were instructed to walk around the two tables in the room in the shape of an “8”, i.e. by first walking counter-clockwise around the table on their right, and then walking clockwise around the second table. This was performed three times and then repeated in the reverse direction, resulting in a total of 6 orbits around both tables. Participants were told that the purpose of this warm-up phase was to familiarize themselves with the dimensions of the lab environment. While this is true, the warm-up phase also served as a baseline measurement of participants’ movement behavior when not immersed in a virtual scenario (baseline round).

Next, participants additionally put on the HMD, which allowed them to view the virtual copy of the laboratory room but no longer the real laboratory room. The experimenter chose an avatar which best resembled the participant’s physical appearance in terms of gender, skin color, hair color and body shape. The avatar’s position and body posture were aligned with the participant, but the avatar remained visible only on the experimenter’s computer screen until calibration was finished. To calibrate the avatar, participants were asked to stand up straight, stretch out their arms horizontally, and then touch their hips and their stomach with both controllers. The experimenter then adjusted the avatar’s body proportions until each posture was precisely replicated. Note that in this procedure, the avatar’s body dimensions are implicitly aligned with those of the participant. For instance, if the avatar is shorter than the participant, the inverse kinematics algorithm makes it rise onto its toes in order to bring its head to the same position as the participant’s. Conversely, if the avatar is taller, it arches its back to align its head with the participant’s, again giving the experimenter a clear cue for readjusting the avatar’s body dimensions until they precisely match the participant. The calibration furthermore included defining the body’s center of mass in relation to the tracker placed on the back (which could not always be placed in exactly the same spot, depending on participants’ clothing). The position of the body center was recorded at 60 Hz during the whole experiment (and reconstructed from the Vive tracker’s position for the baseline round, which took place prior to calibration). After the calibration phase, any touch of the participants’ hips or belly with the controllers resulted in a corresponding touch of the virtual avatar’s hips or belly by the virtual controller representations, i.e. visuo-tactile congruency was established.
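The text does not specify how the body center was derived from the back tracker. One plausible approach, stated here purely as an assumption, is to store a fixed offset in the tracker’s local frame during calibration and to re-apply it to every tracker sample, which also works for the baseline round because it needs nothing but tracker data. The function names and the (w, x, y, z) quaternion convention below are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (expanded form of q * v * q^-1)."""
    w, u = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(u, v))
    ut = cross(u, t)
    return tuple(v[i] + w * t[i] + ut[i] for i in range(3))


def conjugate(q: Quat) -> Quat:
    """Inverse rotation of a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])


def calibrate_offset(tracker_pos: Vec3, tracker_rot: Quat, center_pos: Vec3) -> Vec3:
    """Hypothetical calibration: express the body center in the tracker's frame."""
    world_offset = tuple(c - p for c, p in zip(center_pos, tracker_pos))
    return rotate(conjugate(tracker_rot), world_offset)


def body_center(tracker_pos: Vec3, tracker_rot: Quat, local_offset: Vec3) -> Vec3:
    """Reconstruct the body center from one tracker sample (e.g. one 60 Hz frame)."""
    world_offset = rotate(tracker_rot, local_offset)
    return tuple(p + o for p, o in zip(tracker_pos, world_offset))


if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    offset = calibrate_offset((0.0, 1.0, 0.0), identity, (0.0, 1.0, 0.1))
    h = math.sqrt(0.5)
    yaw_90 = (h, 0.0, h, 0.0)  # 90 degrees about the vertical y axis
    print(body_center((2.0, 1.0, 0.0), yaw_90, offset))
```

Storing the offset in the tracker’s frame rather than in world coordinates keeps the reconstructed center correct when the participant turns, since the offset rotates together with the back.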

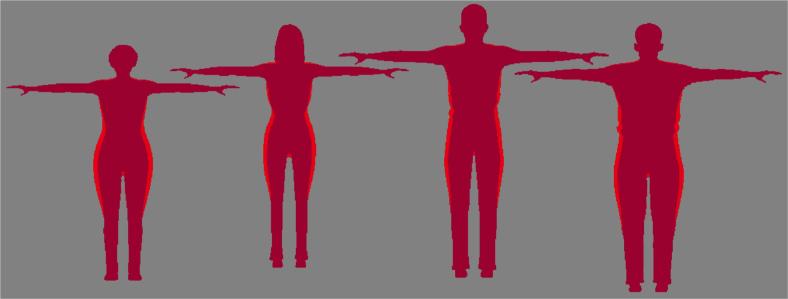

In an additional and critical step, the avatar was made more corpulent than the participant’s physical body in one of two ways: either the avatar itself (i.e. its mesh) was deformed, which resulted in a loss of the previously achieved visuo-tactile congruency during touch of the hips or belly (VTC lost condition), or a lens effect distorting Euclidean space as a whole was applied (VTC preserved condition). Space distortion produced an effect indistinguishable from mesh distortion when participants had their arms outstretched, but, unlike mesh distortion, preserved visuo-tactile congruency in spite of the differences in proportion relative to the participant’s physical body. For all participants, we chose the same coefficient s in the distortion algorithm described in Eq. (1). Based on our previous experience, we chose a coefficient that roughly results in a change in corpulence comparable to a change from one silhouette number to the next in a popular figure rating scale (Bulik et al., 2001). Fig. 3 illustrates the extent to which avatars were made more corpulent in the present study. Post-hoc analysis revealed that, when viewed as a silhouette, avatars gained on average 7.09% (SD = 0.71%) in area compared to when they were matched to the participant’s actual body dimensions. The Pearson correlation between silhouette sizes before and after avatars were made more corpulent was r = .99, p < .001. For comparison, we furthermore calculated the area of the figures in the rating scale by Bulik et al. (2001). Here, each figure increases in area by 8.31% on average compared with the preceding silhouette number.
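The area comparison above can be sketched as follows, assuming that silhouette area is measured by counting foreground pixels in a binary image; the text does not state how areas were obtained, and the function names are hypothetical. Per-avatar gains are summarized by mean and SD, while the rating-scale figure is the mean gain from each silhouette to the next one.

```python
from statistics import mean, stdev


def silhouette_area(mask) -> int:
    """Area of a binary silhouette image: the number of foreground pixels."""
    return sum(1 for row in mask for px in row if px)


def percent_gain(before: float, after: float) -> float:
    """Relative area increase in percent."""
    return (after - before) / before * 100.0


def avatar_gains(areas_before, areas_after):
    """Mean and SD of per-avatar gains (matched vs. more corpulent avatar)."""
    gains = [percent_gain(b, a) for b, a in zip(areas_before, areas_after)]
    return mean(gains), stdev(gains)


def mean_successive_gain(scale_areas):
    """Average gain from each figure of a rating scale to the next one."""
    steps = [percent_gain(b, a) for b, a in zip(scale_areas, scale_areas[1:])]
    return mean(steps)


if __name__ == "__main__":
    # Hypothetical pixel counts, not data from the study.
    before = [10_000, 12_500, 9_800]
    after = [10_710, 13_380, 10_490]
    print(avatar_gains(before, after))
```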

Fig. 3.

Silhouettes of virtual avatars from four participants in the present study after they were aligned with the actual body dimensions in an intermediate step (inner outlines, dark red) and after they were made more corpulent (outer outlines; increase shown in light red). Avatar size increase was achieved using either a method preserving visuo-tactile congruency or using a method resulting in the loss of visuo-tactile congruency, but both procedures lead to identical increases in silhouette size in the shown body posture. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Distorting space as described in Eq. (1) algorithmically solves the problem of transferring body postures from one body to another while preserving self-contact, a problem which naturally arises whenever the proportions or posture of two bodies (i.e. the physical body and a virtual body) do not match. Take, for instance, a person touching her physical hips while immersed in a virtual scenario in which her body movements are transferred to a virtual avatar. For the avatar to touch its hips in a similar manner, thus establishing visuo-tactile congruency, the avatar’s hands and hips must be located at coordinates in virtual space that correspond to their coordinates in real space. This correspondence is, of course, most naturally achieved when the virtual body matches the participant’s body both in proportions and in posture. If the avatar’s hips are wider or narrower than, or located above or below, the participant’s hips, touch will occur at a displaced location on the virtual body, breaking visuo-tactile congruency. To hide such touch incongruency from the participant, virtual body distortions are typically used in conjunction with restrictions in movement. For example, Normand et al. (2011) provided participants with a virtual body with an enlarged belly and touched them with a rod. Visuo-tactile congruency was achieved by restricting the rod’s movement to back-and-forth sliding while linearly offsetting the rod’s virtual representation. While this procedure does establish visuo-tactile congruency in the pre-defined use case, it cannot easily be generalized to other forms of self-contact. Molla, Debarba, and Boulic (2018) solved the problem more generally by defining positions in space in egocentric coordinates relative to individual bodies with specific sizes and proportions. While this approach can transfer body postures seamlessly between a wide range of body shapes, the approach used in the present study additionally provides congruent touch with external objects and is comparatively easy to set up.

After the calibration phase was completed, the avatar was made visible to the participants. Standing in front of the virtual mirror, participants could now view their virtual avatar and were asked to move their bodies and hands naturally for about 20 s to familiarize themselves with this new experience. To facilitate identification with the virtual avatar as much as possible, the avatar’s gaze was furthermore directed at its own eyes in the mirror. While this goes unnoticed when participants gaze at other body parts like their hands, it matches participants’ gaze in moments when they look into their own virtual eyes in the mirror, providing another cue for visuo-motor congruency. Participants were then asked to repeatedly touch their hips with both controllers for 10 s and then repeatedly touch their stomachs with both controllers for 10 s (body examination phase). In the VTC lost condition, participants saw the controllers slightly penetrate their virtual bodies while touching their physical bodies. By contrast, visuo-tactile congruency remained intact in the VTC preserved condition.

Participants were then asked to walk around both tables six times just as they had before (walking phase), which completed round 1. The body examination phase and the walking phase were repeated two more times (round 2 and round 3). After each body examination phase as well as after the last walking phase, participants were asked to verbally report, on a scale from 1 to 7, how congruent they experienced their self-touch (“How well did place and time of the self-contact fit together in terms of what you saw and what you felt?”) and how difficult they found balancing their body. During the walking phase, the mirror was switched off to avoid distracting the participants in their walking.

After the last walking phase, all VR equipment was removed and participants were given several questionnaires about their subjective experience during the experiment. Presence, the sense of “being there” in the virtual environment (Skarbez, Brooks, & Whitton, 2017), was assessed using the German version of the Igroup Presence Questionnaire (IPQ; Schubert, Friedmann, & Regenbrecht, 2001). Simulator sickness, a possible problematic side effect in virtual reality experiments, was assessed using the Simulator Sickness Questionnaire (SSQ, Kennedy, Lane, Berbaum, & Lilienthal, 1993). The sense of ownership over the virtual body was assessed using the Illusion of Virtual Body Ownership Questionnaire (IVBO, Roth, Lugrin, Latoschik, & Huber, 2017), a questionnaire which comprises the three scales acceptance (of the virtual body as one’s own body), control (of the virtual body during movement) and change (of one’s bodily experience due to the ownership illusion). Participants furthermore filled out a sociodemographic questionnaire. Finally, participants were asked to report their body weight, and we measured their hip circumference, waist circumference, height and shoulder width. Participants were then debriefed about the purpose of the experiment and thanked for their participation.

2.4. Data processing

Movement data were analyzed using R for statistical computing (version 3.2; R Development Core Team, 2015). During the walking phase in each round, participants walked the pre-defined itinerary six times (three times in each direction), each time passing all the corners of both tables placed in the middle of the laboratory room. At the same time, participants passed by the walls of the room as well as the appliance carrying the laboratory’s computer and screen. Following the pre-registered procedure, we extracted the minimal distance, or safety distance, kept during each passing of the table corners facing outwards of the arrangement. We furthermore extracted the safety distances kept towards the laboratory walls and the appliance positioned at the wall. We refer to the table corners which mark the itinerary as the internal boundaries of the laboratory room, and to the boundaries positioned on the opposite sides as its external boundaries. We generally assumed that participants would aim to walk the pre-defined itinerary in a relatively straightforward manner while making sure to avoid contact with any of the boundaries, thereby adapting the safety distances kept towards the boundaries to their body’s dimensions. Fig. 4 schematically shows the laboratory arrangement in a top view, with movement data from one exemplary participant in one of the four walking phases as well as the safety distances kept to each boundary. 1.91% of the individual safety distances could not be identified because participants omitted one round of walking around the tables or turned around prematurely for the next itinerary in the reverse direction. To exclude moments in which participants strayed off the course or accidentally came closer to a boundary than planned, we excluded safety distances more than 3 standard deviations above (0.41%) or below (0.32%) the mean of the distribution within each individual boundary. We then aggregated the data as mean safety distances for each participant, boundary and round.
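The exclusion and aggregation steps just described can be sketched as below. The record layout (dicts with `participant`, `boundary`, `round` and `distance` keys) and the function names are hypothetical; the sketch also assumes that mean and SD are computed once per boundary, pooling participants and rounds, which the text does not spell out. Safety distances that could not be identified are simply absent from the input.

```python
from collections import defaultdict
from statistics import mean, stdev


def exclude_outliers(records, k=3.0):
    """Drop distances more than k SDs from the mean of their boundary."""
    by_boundary = defaultdict(list)
    for r in records:
        by_boundary[r["boundary"]].append(r["distance"])
    stats = {b: (mean(v), stdev(v)) for b, v in by_boundary.items()}
    kept = []
    for r in records:
        m, sd = stats[r["boundary"]]
        if abs(r["distance"] - m) <= k * sd:
            kept.append(r)
    return kept


def aggregate(records):
    """Mean safety distance per (participant, boundary, round) cell."""
    cells = defaultdict(list)
    for r in records:
        cells[(r["participant"], r["boundary"], r["round"])].append(r["distance"])
    return {key: mean(values) for key, values in cells.items()}
```

Computing the statistics within each boundary matters because boundaries differ in how close participants can comfortably get, so a single global cut-off would flag ordinary passings at the tighter boundaries.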

Fig. 4.

Schematic top-view of the laboratory arrangement. The blue line shows the position data of one exemplary participant (i.e. her body’s center) as she walked around the two tables positioned in the center of the room. Red lines indicate where the distance to each table corner (the laboratory’s internal boundaries) was smallest during each passing by. Orange lines indicate safety distances towards the laboratory’s external boundaries. Distances on both axes are measured in meters. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)
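The minimal distances marked in Fig. 4 can be computed from the top-view trajectory of the body center. The sketch below assumes that each passing has already been segmented into its own list of samples and that walls are represented as 2D line segments; neither detail is specified in the text, and the function names are hypothetical. Because the distance is taken from the body center, it includes the extent of the body itself, which is why body width can show up in these measures.

```python
import math

Point = tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from p to the segment a-b (e.g. a wall in top view)."""
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length2 = dx * dx + dy * dy
    if length2 == 0.0:
        return math.dist(p, a)
    # Project p onto the segment's line and clamp to the end points.
    u = ((p[0] - ax) * dx + (p[1] - ay) * dy) / length2
    u = max(0.0, min(1.0, u))
    return math.dist(p, (ax + u * dx, ay + u * dy))


def safety_distance_to_corner(samples, corner: Point) -> float:
    """Minimal distance between the body-center samples of one passing and a corner."""
    return min(math.dist(s, corner) for s in samples)


def safety_distance_to_wall(samples, wall_start: Point, wall_end: Point) -> float:
    """Minimal distance between the body-center samples of one passing and a wall."""
    return min(point_segment_distance(s, wall_start, wall_end) for s in samples)
```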

3. Results

3.1. Explicit measures of self-report

We first compared self-report measures between the two groups. Perceived congruency during self-touch and balance problems (the latter recorded to divert attention from the study’s main purpose) were each assessed with a single question at four points during the VR phase of the experiment. To trace changes in these measures over time, we computed linear models with experimental condition, point in time and the condition × time interaction as predictors. No predictor reached statistical significance in predicting touch congruency (condition: F(152) = 0.59, p = .44, = .00, time: F(152) = 0.21, p = .89, = .00, condition × time: F(152) = 0.82, p = .48, = .02) or balance problems (condition: F(152) = 0.16, p = .69, = .00, time: F(152) = 1.74, p = .16, = .03, condition × time: F(152) = 0.26, p = .85, = .02). For better comparability with the other explicit measures recorded after the VR phase, we aggregated the touch congruency and balance problem ratings across the four measurement points.

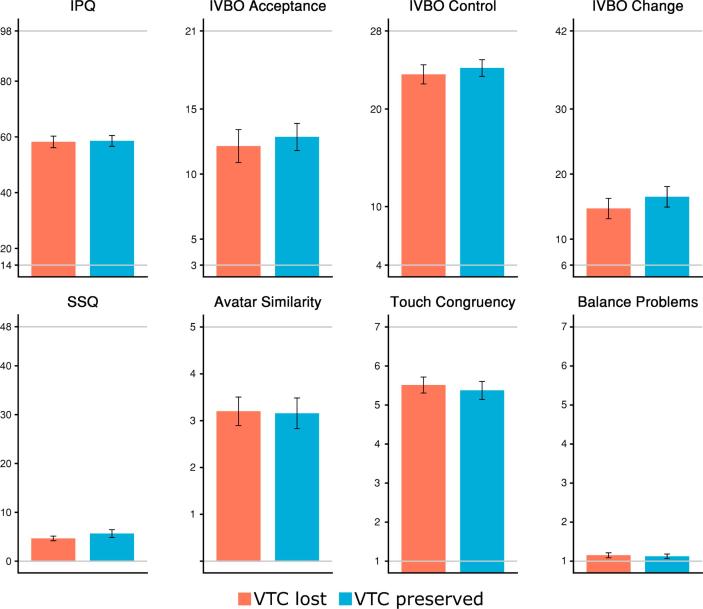

Self-report measures of presence (IPQ), the three facets of body ownership (IVBO), simulator sickness (SSQ), perceived physical similarity of the avatar, perceived touch congruency and balance problems are depicted in Fig. 5. Comparisons between the two groups were again made using a t-test for normally distributed variables (which was only the case for “IVBO Change”) and a Wilcoxon rank-sum test for non-normally distributed variables. There were no significant differences between the VTC lost and the VTC preserved conditions on any of these measures. Overall, participants in both experimental groups gave medium to high ratings for presence, virtual body acceptance, virtual body control, similarity of the virtual body to the physical body and touch congruency, and low ratings for perceived change of the body image, simulator sickness and balance problems.

Fig. 5.

Results from questionnaires in both experimental groups. Error bars indicate SEM. Gray lines at the bottom and top of each graph indicate minimum and maximum values on the specific measure.

3.2. Walking behavior in real-life

In analyzing participants’ movement patterns while walking in the laboratory, we first examined our core assumption that body dimensions modulate the safety distances kept towards the laboratory’s boundaries. In addition to the body measures we had directly obtained from the participants, we computed the Body Mass Index (BMI, Kopelman, 2000) and the waist-to-hip ratio (WHR), an index found to be especially diagnostic of health risks related to obesity (Sahakyan et al., 2015). We computed a linear model with safety distances towards the boundaries (z-standardized within each boundary) as the dependent variable and each of the body measures (height, weight, shoulder width, hip circumference, waist circumference, BMI, WHR) as well as their interactions with boundary type (external vs. internal) as predictors. The significant predictors were the boundary type × shoulder width interaction (F(304) = 44.03, p < .001, = .13), the boundary type × weight interaction (F(304) = 5.58, p = .02, = .02), the boundary type × waist circumference interaction (F(304) = 10.75, p < .01, = .03) and the boundary type × WHR interaction (F(304) = 27.30, p < .001, = .08). None of the other predictors reached statistical significance (hip width: F(304) = 0.08, p = .78, = .00, shoulder width: F(304) = 0.01, p = .92, = .00, weight: F(304) = 0.25, p = .62, = .00, height: F(304) = 0.54, p = .46, = .00, waist: F(304) = 0.37, p = .54, = .00, BMI: F(304) = 0.00, p = .98, = .00, WHR: F(304) = 0.00, p = .98, = .00, boundary type × hip width: F(304) = 0.14, p = .71, = .00, boundary type × height: F(304) = 0.24, p = .62, = .00, boundary type × BMI: F(304) = 3.77, p = .05, = .01).

To follow up on the significant interactions with the boundary type (internal vs. external), we computed simple two-sided Pearson correlations between all body measures and the safety distances kept towards the boundaries, now aggregated across the four boundaries within each boundary type. As shown in Table 2, only shoulder width correlated clearly with the safety distances kept towards the internal and external boundaries when tested in a bivariate correlation. Specifically, wider shoulders were associated both with larger distances towards the external boundaries and with smaller distances towards the internal boundaries. None of the other measures that reached statistical significance in interaction with the boundary type in the full linear model (weight, waist circumference and WHR) remained statistically significant in the simple correlations, hinting at complex suppressor effects among the individual body measures and behavioral markers. In short, in the specific experimental setup used here, wider shoulders were associated with keeping larger distances towards the external boundaries of the room and, consequently, smaller distances towards the opposing internal boundaries.
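The table does not state how its confidence intervals were obtained; a common choice is Fisher's z-transformation, sketched below in plain Python as an assumption rather than as the authors' procedure. The sample size of n = 40 is likewise an assumption, inferred from the cross-validation procedure in Section 3.3 (ten participants per group for training, 20 for testing).

```python
import math
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, level=0.95):
    """Confidence interval for a correlation r from n pairs, via Fisher's z."""
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Shoulder width vs. distance to internal boundaries (r from Table 2; n = 40 assumed).
low, high = fisher_ci(-0.37, 40)
print(f"[{low:.2f}, {high:.2f}]")
```

With n = 40 this yields roughly [−0.61, −0.07], close to the tabulated interval; the small difference in the upper bound is plausibly due to r being rounded to two decimals. The interval is asymmetric around r because it is symmetric on the z scale, not on the r scale.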

Table 2.

Correlations between body measures and the distances kept to the internal and external boundaries during walking in the laboratory.

| Body measure | Internal boundaries: r [95% CI] | p | External boundaries: r [95% CI] | p |
|---|---|---|---|---|
| Hips | −0.04 [−0.34, 0.28] | .82 | 0.01 [−0.31, 0.32] | .98 |
| Shoulders | −0.37 [−0.61, −0.06] | .02 | 0.36 [0.06, 0.61] | .02 |
| Weight | −0.10 [−0.40, 0.21] | .52 | 0.11 [−0.21, 0.41] | .50 |
| Height | −0.11 [−0.41, 0.21] | .51 | 0.18 [−0.14, 0.46] | .28 |
| Waist | −0.08 [−0.38, 0.24] | .62 | 0.08 [−0.24, 0.38] | .65 |
| BMI | −0.06 [−0.36, 0.26] | .73 | 0.02 [−0.30, 0.33] | .92 |
| WHR | 0.06 [−0.26, 0.36] | .71 | −0.08 [−0.38, 0.24] | .63 |

3.3. Modification of walking behavior in VR

Next, we compared how the safety distances developed in both experimental groups across the three rounds in VR. Since the distances kept towards the internal and external boundaries of the scenario are partly reciprocal and redundant, we focused on the distances kept towards the external boundaries, which were associated with wider shoulders (the analogous model for the internal boundaries showed a corresponding pattern).

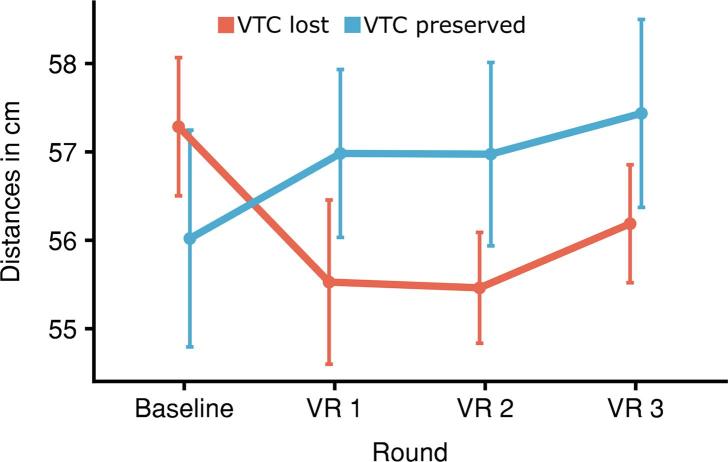

Fig. 6 shows the distances aggregated across all four external boundaries, kept in metric units for better tangibility. We compared these trajectories statistically by means of a linear model with the safety distances in the three VR rounds (again z-standardized within individual boundaries and then aggregated) as dependent variable. Safety distances in the baseline round (aggregated in the same way), experimental group, round, as well as the group × round interaction were used as predictors. All parameters were tested for significance using F-tests.
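Written out, the model can be sketched as follows. This is a reconstruction under assumptions: group is coded as a 0/1 indicator and round enters as a numeric predictor with one degree of freedom, which is consistent with the reported 115 residual degrees of freedom if 40 participants each contribute three VR rounds (120 observations) and five parameters are estimated. The symbols are introduced here for illustration only.

$$
\bar{d}_{ir} = \beta_0 + \beta_1\,\bar{d}_{i0} + \beta_2\,g_i + \beta_3\,r + \beta_4\,(g_i \times r) + \varepsilon_{ir}, \qquad r \in \{1, 2, 3\}
$$

Here $\bar{d}_{ir}$ is participant $i$'s z-standardized safety distance in VR round $r$, aggregated across the four external boundaries, $\bar{d}_{i0}$ is the corresponding baseline value, and $g_i = 1$ for intact visuo-tactile congruency. Under these assumptions the residual degrees of freedom are $120 - 5 = 115$; a main effect of group then corresponds to $\beta_2 \neq 0$ and a change of the group difference over rounds to $\beta_4 \neq 0$.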

Fig. 6.

Safety distances kept towards the external boundaries in the two experimental groups in the baseline round and the three consecutive rounds in VR. Error bars indicate SEM.

We found a main effect of the baseline (F(115) = 61.26, p = .001, ηp² = .35) and of the experimental group (F(115) = 12.71, p = .001, ηp² = .10), but no effect of round (F(115) = 0.66, p = .42, ηp² = .01) and no group × round interaction (F(115) = 0.02, p = .88, ηp² = .00). While behavior in the baseline round was the strongest predictor of behavior in the VR rounds, participants who experienced intact visuo-tactile congruency in VR additionally kept larger safety distances than participants who experienced flawed visuo-tactile congruency. This difference was present across all three VR rounds, with no evidence for an increase or decline over time.

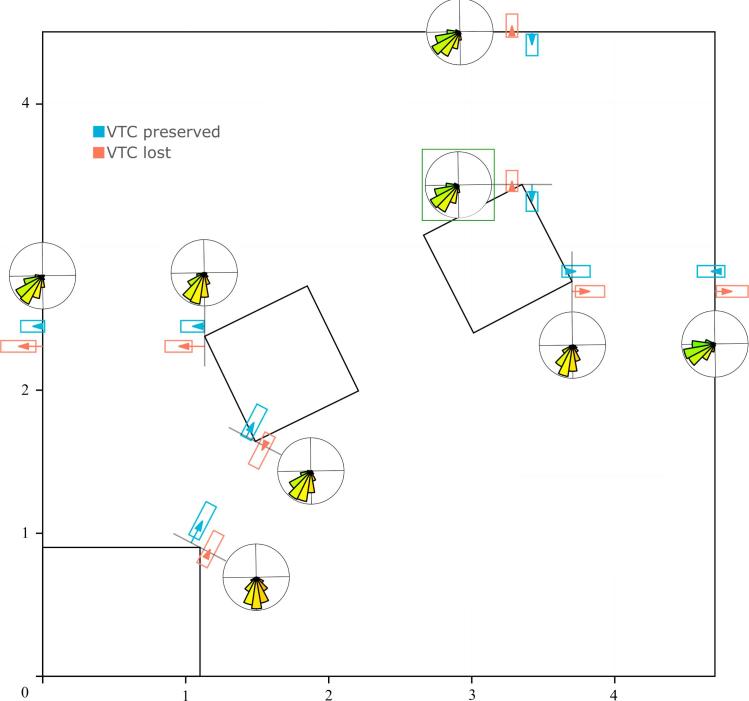

Next, we attempted to estimate the robustness of behavioral changes due to (loss of) visuo-tactile congruency across participants and across the individual boundaries in the scenario. Since the analysis above showed a linear relationship between the safety distances in the baseline round and those in the VR rounds, but no evidence for a modulation across the individual VR rounds, we condensed the data by subtracting the safety distances in the baseline round from the safety distances aggregated across the three VR rounds within each boundary (both external and internal). At the same time, we now kept the safety distances towards the individual boundaries separate without any further aggregation. As can be seen in Fig. 8, we found the same descriptive pattern in the behavior towards all eight boundaries: a relative increase of safety distances towards the external boundaries in participants who experienced intact visuo-tactile congruency compared to those who did not, and the reverse pattern towards the internal boundaries.
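The condensation step can be sketched as follows, assuming a hypothetical nested-dictionary layout and made-up distances: for each participant and boundary, the mean over the three VR rounds minus the baseline round, followed by a per-group average for each boundary, i.e. the kind of per-boundary summary shown in Fig. 8. The function names are illustrative, not the study's code.

```python
from collections import defaultdict
from statistics import mean


def change_scores(distances):
    """Mean distance over the VR rounds minus the baseline distance.

    distances: {(participant, boundary): {"baseline": float, "vr": [float, ...]}}
    Returns {(participant, boundary): change}; no aggregation across boundaries.
    """
    return {key: mean(d["vr"]) - d["baseline"] for key, d in distances.items()}


def mean_change_by_group(changes, group_of):
    """Average change per (group, boundary); group_of maps participant -> label."""
    buckets = defaultdict(list)
    for (participant, boundary), delta in changes.items():
        buckets[(group_of[participant], boundary)].append(delta)
    return {key: mean(values) for key, values in buckets.items()}


# Hypothetical distances in metres for two participants at one external boundary.
distances = {
    (1, "external_1"): {"baseline": 0.50, "vr": [0.58, 0.61, 0.60]},
    (2, "external_1"): {"baseline": 0.52, "vr": [0.51, 0.54, 0.50]},
}
changes = change_scores(distances)
print(mean_change_by_group(changes, {1: "congruent", 2: "incongruent"}))
```

Averaging the three VR rounds before subtracting the baseline is justified here only because the model above found no round effect; with a trend over rounds, the rounds would need to be kept separate.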

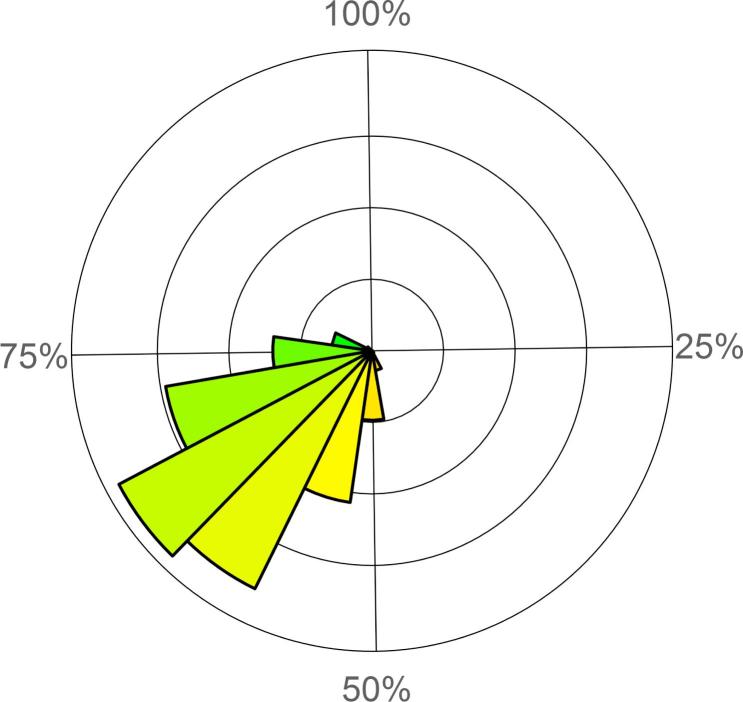

Fig. 8.

Schematic top view of the laboratory arrangement with changes in safety distances (visualized as arrows) kept towards each boundary in the room, defined as the difference between the VR condition and the baseline condition. Changes are in metric units like the rest of the graph, but exaggerated fivefold for better visibility. Boxes around each arrow represent the 95% CI of the distance change. Polar histograms next to each boundary represent the predictive accuracy in estimating the presence of visuo-tactile congruency based only on changes in safety distances kept towards the specific boundary. The polar histogram highlighted with a green frame is also shown in Fig. 7. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

We then attempted to predict the presence of visuo-tactile congruency in each participant from the changes in safety distances towards each boundary (here again z-standardized within each boundary) using a generalized linear model (GLM) with a probit link function. Starting with a full model incorporating all eight boundaries, we used backward elimination to find the most parsimonious model as defined by the Akaike information criterion (AIC). The selected model retained only one internal boundary (the top table corner on the right table in Fig. 8), which contributed significantly to predicting the presence of visuo-tactile congruency (β = 0.20, SE = 0.07, p = .01). As a litmus test for robustness across participants, we then predicted the presence of visuo-tactile congruency in a cross-validated fashion. To this end, we randomly selected ten participants from each group, fitted a GLM with the selected predictor, and used this model to predict the presence of visuo-tactile congruency in the remaining 20 participants. This procedure was repeated 10,000 times. We recorded the predictive accuracy in each iteration and computed 95% confidence intervals (CI) directly from this distribution as the range between its 2.5th and 97.5th percentiles. The model correctly predicted the presence of visuo-tactile congruency in 63.60% of the cases [95%-CI = 50%, 80%] and thus provides predictive power above the chance level of 50% (see Fig. 7).
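A minimal sketch of the repeated split-sample cross-validation described above, in plain Python. Assumptions: a single predictor (the change score towards the selected boundary), a probit model fitted by Fisher scoring rather than with a statistics package, a classification threshold of Φ = 0.5, and simple nearest-rank percentiles. The function names are hypothetical, the data are synthetic, and the AIC-based backward elimination is not reproduced.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def fit_probit(x, y, max_iter=50, tol=1e-8):
    """Fit P(y = 1) = Phi(a + b * x) by Fisher scoring; returns (a, b)."""
    a = b = 0.0
    for _ in range(max_iter):
        u0 = u1 = i00 = i01 = i11 = 0.0
        for xi, yi in zip(x, y):
            eta = a + b * xi
            p = min(max(STD_NORMAL.cdf(eta), 1e-10), 1 - 1e-10)
            dens = STD_NORMAL.pdf(eta)
            score = dens * (yi - p) / (p * (1 - p))
            weight = dens * dens / (p * (1 - p))  # expected information weight
            u0 += score
            u1 += score * xi
            i00 += weight
            i01 += weight * xi
            i11 += weight * xi * xi
        det = i00 * i11 - i01 * i01
        if abs(det) < 1e-12:  # information matrix (near-)singular, e.g. separation
            break
        # Fisher-scoring step: inverse of the 2x2 expected information times score.
        da = (i11 * u0 - i01 * u1) / det
        db = (i00 * u1 - i01 * u0) / det
        a, b = a + da, b + db
        if abs(da) + abs(db) < tol:
            break
    return a, b


def predict(a, b, x):
    """Predict class 1 when Phi(a + b * x) > 0.5, i.e. when a + b * x > 0."""
    return [1 if a + b * xi > 0 else 0 for xi in x]


def repeated_split_cv(x, y, n_train_per_group=10, repeats=10_000, seed=1):
    """Train on n_train_per_group cases per class, test on the rest; repeat.

    Returns (mean accuracy, (2.5th percentile, 97.5th percentile)).
    """
    rng = random.Random(seed)
    cases = [i for i, yi in enumerate(y) if yi == 1]
    controls = [i for i, yi in enumerate(y) if yi == 0]
    accuracies = []
    for _ in range(repeats):
        train = rng.sample(cases, n_train_per_group) + rng.sample(controls, n_train_per_group)
        held_out = sorted(set(range(len(y))) - set(train))
        a, b = fit_probit([x[i] for i in train], [y[i] for i in train])
        pred = predict(a, b, [x[i] for i in held_out])
        hits = sum(p == y[i] for p, i in zip(pred, held_out))
        accuracies.append(hits / len(held_out))
    accuracies.sort()
    # Simple nearest-rank percentiles of the accuracy distribution.
    low = accuracies[int(0.025 * (repeats - 1))]
    high = accuracies[int(0.975 * (repeats - 1))]
    return sum(accuracies) / repeats, (low, high)


# Synthetic data (NOT the study's data): 20 participants per group, one predictor.
data_rng = random.Random(0)
labels = [1] * 20 + [0] * 20
scores = [data_rng.gauss(0.5 if yi else -0.5, 1.0) for yi in labels]
mean_acc, (low, high) = repeated_split_cv(scores, labels, repeats=1000)
print(f"accuracy {mean_acc:.1%} [{low:.0%}, {high:.0%}]")
```

Passing each boundary's change scores in turn mirrors the check on the remaining seven boundaries. If a training subset happens to be perfectly separated, the probit coefficients have no finite maximum and the loop simply stops at `max_iter` or when the information matrix becomes singular; a production analysis would handle this more carefully, e.g. with a penalized fit.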

Fig. 7.

Polar histogram of the predictive accuracy when estimating the presence of visuo-tactile congruency only based on safety distances kept towards one of the internal boundaries (highlighted with a green framing in Fig. 8). Predictive accuracy values were obtained by running 10,000 split-half cross-validated GLMs. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

However, since performing cross-validation on a model selected via the AIC is partly circular (Varma and Simon, 2006, van Houwelingen and Sauerbrei, 2013), we performed the same cross-validation procedure on the other seven boundaries as well. For the remaining three internal boundaries, predictive accuracy was 54.90% [95%-CI = 30%, 70%], 56.60% [35%, 75%] and 52.25% [30%, 65%], and for the external boundaries, predictive accuracy was 67.19% [95%-CI = 50%, 80%], 63.59% [45%, 80%], 49.47% [30%, 65%] and 59.81% [25%, 75%]. The distribution of predictive accuracies for all boundaries is displayed in Fig. 8 in the same fashion as in Fig. 7, but stripped of all labels for better clarity. Altogether, alterations in movement behavior were found to be robust across individual boundaries in the test environment and across individual participants. When averaged, participants showed the same descriptive pattern in movement alterations towards all boundaries in the experimental setup.

4. Discussion

The present study aimed to investigate the influence of congruent self-touch on the experience of ownership over a virtual body. In line with previous studies (Slater et al., 2010, Maselli and Slater, 2013, Piryankova et al., 2014), congruent touch did not further enhance any dimension of the body ownership illusion as measured by explicit questionnaires, nor did it enhance the sense of presence. This may not be surprising given that both groups in the present experiment experienced the virtual body from a first-person perspective together with visuo-sensorimotor congruency, and that previous research (Maselli & Slater, 2013) found a ceiling effect in reported body ownership when only a first-person perspective was provided.

Moreover, participants who experienced congruent self-touch did not even report higher levels of perceived touch congruency; instead, both groups reported experiencing touch as relatively congruent. While this finding may be interpreted as a failed manipulation check, it is also in line with previous findings showing that a strong ownership illusion can modulate the way touch is perceived and allow incongruent touch to be experienced as congruent. Maselli and Slater (2013) found that the same asynchronous stimulation was perceived as more synchronous when participants experienced intact versus impeded visuo-sensorimotor congruency, with median scores of 4 versus 1, respectively, on a scale from 0 to 7. Nonetheless, taken together, the explicit measures showed no sign that visuo-tactile congruency as manipulated in the present study affected how participants experienced their virtual body, or that it was even perceived at all.

By contrast, the experience of visuo-tactile congruency had marked consequences for participants’ movement behavior while walking in the virtual laboratory. In line with their body schema updating to a larger body, participants who experienced intact visuo-tactile congruency with a larger body increased their safety distances towards the external boundaries of the laboratory compared to participants who experienced an enlarged virtual avatar with defective visuo-tactile congruency. This effect was not only demonstrated at the group level and for the entire experimental setup, but was also relatively robust across individual participants and individual marker points within the laboratory room. We therefore conclude that the experience of visuo-tactile congruency when touching one’s own body is a crucial stream of information which influences the body schema in coordinating body movements with constraints in the outside world.

In the specific laboratory setup used here, only the width of participants’ shoulders predicted safety distances towards the boundaries while participants walked in the laboratory room prior to the VR illusion. Specifically, wider shoulders were associated with keeping larger safety distances towards the external boundaries, and consequently smaller distances towards the table corners opposing the walls along the route. Admittedly, we had not predicted this relationship prior to the experiment, and it seems conceivable to us that the relationship between spatial setups and body movements is modulated by a variety of properties of a specific setup. For instance, the tables used in our setup are lower than people’s shoulders and, unlike the external boundaries, do not pose a threat of collision with the shoulders. In our view, future research should investigate more thoroughly how an environment’s dimensions and body measures interact in modulating movement behavior, and establish guidelines for experimental setups to make experiments more comparable across laboratories. Interestingly, a special role of the shoulders in the body image or body schema has been reported before. Serino et al. (2016) provided participants with a virtual body with a skinny belly, showed stimulation on the virtual body’s belly either congruently or incongruently with physical stimulation, and asked participants to estimate various dimensions of their body size. Participants reported lower shoulder width estimates after synchronous compared to asynchronous stimulation, but no differences were found for any other measure of body proportions. The authors speculate that the shoulders were especially prone to being updated in the body image because they are the only body parts that are mostly invisible to the participants, so that estimates need to rely more heavily on imagination.

Another interesting aspect of the study by Serino et al. (2016), as well as of the present study, is an apparent spreading of ownership from the stimulated to other, non-stimulated body parts. Participants moved as if their shoulders had become wider, although the visuo-tactile illusion of being more corpulent was initially created at the hips and the belly. Such a spreading effect has also been found in several previous studies and is consistent with the idea that the illusion, although triggered through only a subset of the perceptual streams available to us, nonetheless encompasses the entire body (Petkova & Ehrsson, 2008).

The profound effect of visuo-tactile congruency on the body schema, along with the absence of effects at the explicit level, can perhaps be seen in parallel with findings on body image disturbances in AN patients. Here it has been argued that explicit questions regarding one’s bodily sensations are difficult to pose unambiguously: while some AN patients may refer to the body they see, others may refer to their body as they feel it (Smeets, 1997). Indeed, Caspi et al. (2016) found stronger overestimation when AN patients were asked whether they felt that a distorted photo of themselves represented their true proportions than when they were asked whether they thought so, indicating that the effect is vulnerable to seemingly subtle differences in linguistic framing. Overall, while the majority of studies did find an overestimation of body size in AN on explicit measures (Guardia et al., 2010, Guardia et al., 2012, Gardner and Brown, 2014), several did not (Cornelissen et al., 2013, Mölbert et al., 2017). Mölbert, Klein, et al. (2017) furthermore found that both AN and bulimia nervosa (BN) patients showed stronger body size overestimation when more deictic (although still explicit) spatial measures were used than when more abstraction was required, i.e. in evaluating pictures of bodies. In our view, this finding provides further support for the assumption that body image (or body schema) disturbances in AN and BN might be especially prominent in less abstract, more action-oriented tasks guided by the body schema (Keizer et al., 2013).

At the same time, the precise mechanisms behind body image disturbances in AN are still debated. One prominent model emphasizes a failure to update the allocentric, autobiographical memory of one’s own body (Riva, 2012, Riva and Dakanalis, 2018). In this model, the internal image of one’s body as seen from the outside is kept at a certain state of corpulence even when novel visual or sensory input points towards a thinner body. Such an overemphasis on past information, and a reluctance to update a model in response to novel sensory stimuli, has also been found at a lower perceptual level: when judging the corpulence of figural images of bodies, individuals with higher eating disorder symptomatology biased their ratings more strongly towards previously seen images (serial dependence) than individuals with lower eating disorder symptomatology (Alexi, Palermo, Rieger, & Bell, 2019). Along these lines, AN patients were shown to bias their estimates of the gravitational vertical towards their body axis in different body orientation conditions (Guardia, Carey, Cottencin, Thomas, & Luyat, 2013), again overemphasizing memorized information over novel perceptual input.

Taken together, the present study documents a profound impact of touch congruency on the operation of the body schema during a full body ownership illusion. Participants who took ownership over a more corpulent virtual body with intact visuo-tactile congruency showed movement behavior consistent with a body schema operating for a more corpulent body, compared to participants who experienced the same illusion with deteriorated visuo-tactile congruency. At the same time, none of the common explicit measures of body ownership or presence differentiated between the two conditions. We conclude that the body schema constitutes a more central component of body ownership than is often acknowledged in research on virtual body illusions.

More generally, the present study provides further evidence that bodily self-consciousness (BSC) does not arise from a unitary process, but instead relies on several subsystems — only some of which build on perceptual data processed in real-time, while others rely more heavily on top-down predictions from memorized information about the body. Intriguingly, the multisensory integration processes combining the variety of signals into a sense of BSC seem to allow for interactions between information channels, as observed in an inability to detect deteriorations in visuo-tactile congruency when visuo-sensorimotor congruency is intact.

Although the basic distinction between an action-oriented, egocentric body schema and a more conscious, allocentric body image has proven practical both in research and clinical practice (de Vignemont, 2010, Longo, 2015), recent accounts assume a more fine-grained differentiation of subsystems to better account for such cross-channeling of information. Riva (2018) subdivides the body schema into a sentient body (the most basic experience of being in a body), a spatial body (allowing for a sense of self-location) and an active body (allowing for a sense of agency), while the ontogenetically later-developing body image is subdivided into a personal body (the integration of percepts into the representation of a whole body), an objectified body (allowing for a sense of being perceived by others) and a social body (allowing one to reflect on one’s body’s appearance relative to social norms). The model posits that input from different channels of perception, as well as proprioception and interoception, is interpreted in the light of information stored in any of the subsystems, and that, in turn, each subsystem can be selectively updated according to sensory input. With regard to this model, the present study suggests that components of the body image rely more heavily, and more rigidly, on memorized information and are not quickly updated after conflicting sensory input, even when vision and touch provide incongruent cues. By contrast, body memory referring to the active body appears to be more readily updated when multisensory integration results in prediction errors. Note that the explicit questions posed to participants in the present study most likely addressed the personal body; it remains unclear how the objectified body and the social body respond to or influence updates in the active body. Future research may attempt to target and measure changes in the different subsystems of BSC more specifically, e.g. by allowing for interactions with other participants in the same virtual environment in order to stimulate the objectified body, or by incorporating measures of body dissatisfaction as an expression of the social body. Furthermore, future studies should investigate changes in the body image and body schema over longer timescales, possibly triggering changes in BSC that are too inert to be activated within the short timescale of the present experiment. With regard to body image disturbances as observed in AN, it should be investigated whether the reluctance to update the objectified body and the social body according to novel input is present in the active body as well, or whether manipulations targeted at the active body may even open a novel pathway to stimulate the objectified and the social body.

Data Availability

The data that support the findings of this study are available at https://osf.io/v8qtr/.

Declaration of Competing Interest

The authors declare no conflicts of interest.

Acknowledgement

This work was supported by the European Research Council (ERC-2013-StG #336305). The authors thank Aki Schumacher for her help during data collection and Aleya Flechsenhar for her help in generating Fig. 2.

Footnotes

Supplementary data associated with this article can be found, in the online version, at https://doi.org/10.1016/j.concog.2019.05.006.

Contributor Information

Marius Rubo, Email: marius.rubo@uni-wuerzburg.de.

Matthias Gamer, Email: matthias.gamer@uni-wuerzburg.de.

Appendix A. Supplementary material


References

- Alexi J., Palermo R., Rieger E., Bell J. Evidence for a perceptual mechanism relating body size misperception and eating disorder symptoms. Eating and Weight Disorders – Studies on Anorexia, Bulimia and Obesity. 2019 doi: 10.1007/s40519-019-00653-4. https://doi.org/10.1007/s40519-019-00653-4 February. Springer Nature. [DOI] [PubMed] [Google Scholar]

- Aristidou A., Lasenby J. FABRIK: A fast, iterative solver for the inverse kinematics problem. Graphical Models. 2011;73(5):. 243–. 260. Elsevier BV. [Google Scholar]

- Austen E.L., Soto-Faraco S., Enns J.T., Kingstone A. Mislocalizations of touch to a fake hand. Cognitive, Affective, & Behavioral Neuroscience. 2004;4(2):. 170–. 181. doi: 10.3758/cabn.4.2.170. Springer Nature. [DOI] [PubMed] [Google Scholar]

- Blanke O. Multisensory brain mechanisms of bodily self-consciousness. Nature Reviews Neuroscience. 2012;13(8):556–571. doi: 10.1038/nrn3292. Springer Nature. [DOI] [PubMed] [Google Scholar]

- Blanke O., Metzinger T. Full-body illusions and minimal phenomenal selfhood. Trends in Cognitive Sciences. 2009;13(1):. 7–.13. doi: 10.1016/j.tics.2008.10.003. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Botvinick M., Cohen J. Rubber hands ‘Feel’ touch that eyes see. Nature. 1998;391(6669) doi: 10.1038/35784. 756–56, Springer Nature. [DOI] [PubMed] [Google Scholar]

- Bovet S., Debarba H.G., Herbelin B., Molla E., Boulic R. The critical role of self-contact for embodiment in virtual reality. IEEE Transactions on Visualization and Computer Graphics. 2018;24(4):. 1428–. 1436. doi: 10.1109/TVCG.2018.2794658. Institute of Electrical; Electronics Engineers (IEEE) [DOI] [PubMed] [Google Scholar]

- Bulik C.M., Wade T.D., Heath A.C., Martin N.G., Stunkard A.J., Eaves L.J. Relating body mass index to figural stimuli: Population-based normative data for Caucasians. International Journal of Obesity. 2001;25(10):1517–1524. doi: 10.1038/sj.ijo.0801742. Springer Nature. [DOI] [PubMed] [Google Scholar]

- Caspi A., Amiaz R., Davidson N., Czerniak E., Gur E., Kiryati N.…Stein D. Computerized assessment of body image in Anorexia Nervosa and Bulimia Nervosa: Comparison with standardized body image assessment tool. Archives of Womens Mental Health. 2016;20(1):139–147. doi: 10.1007/s00737-016-0687-4. Springer Nature. [DOI] [PubMed] [Google Scholar]

- Cornelissen P.L., Johns A., Tovée M.J. Body size over-estimation in women with Anorexia Nervosa is not qualitatively different from female controls. Body Image. 2013;10(1):103–111. doi: 10.1016/j.bodyim.2012.09.003. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Henrik Ehrsson H., Spence C., Passingham R.E. That’s My Hand! activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305(5685):875–877. doi: 10.1126/science.1097011. American Association for the Advancement of Science. [DOI] [PubMed] [Google Scholar]

- Gardner R.M., Brown D.L. Body size estimation in Anorexia Nervosa: A brief review of findings from 2003 through 2013. Psychiatry Research. 2014;219(3):407–410. doi: 10.1016/j.psychres.2014.06.029. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Guardia D., Carey A., Cottencin O., Thomas P., Luyat M. Disruption of spatial task performance in Anorexia Nervosa.” Edited by Alessio Avenanti. PLoS ONE. 2013;8(1):e54928. doi: 10.1371/journal.pone.0054928. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guardia D., Conversy L., Jardri R., Lafargue G., Thomas P., Dodin V.…Luyat M. Imagining one’s own and someone else’s body actions: Dissociation in Anorexia Nervosa.” Edited by Manos Tsakiris. PLoS ONE. 2012;7(8):e43241. doi: 10.1371/journal.pone.0043241. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guardia D., Lafargue G., Thomas P., Dodin V., Cottencin O., Luyat M. Anticipation of body-scaled action is modified in Anorexia Nervosa. Neuropsychologia. 2010;48(13):3961–3966. doi: 10.1016/j.neuropsychologia.2010.09.004. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Hara M., Pozeg P., Rognini G., Higuchi T., Fukuhara K., Yamamoto A.…Blanke O. Voluntary self-touch increases body ownership. Frontiers in Psychology. 2015;6(October) doi: 10.3389/fpsyg.2015.01509. Frontiers Media SA. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van Houwelingen H.C., Sauerbrei W. Cross-validation, shrinkage and variable selection in linear regression revisited. Open Journal of Statistics. 2013;03(02):79–102. Scientific Research Publishing Inc. [Google Scholar]

- Ionta S., Heydrich L., Lenggenhager B., Mouthon M., Fornari E., Chapuis D.…Blanke O. Multisensory mechanisms in temporo-parietal cortex support self-location and first-person perspective. Neuron. 2011;70(2):363–374. doi: 10.1016/j.neuron.2011.03.009. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- de Jong J.R., Keizer A., Engel M.M., Chris Dijkerman H. Does affective touch influence the virtual reality full body illusion? Experimental Brain Research. 2017;235(6):1781–1791. doi: 10.1007/s00221-017-4912-9. Springer Nature. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keizer A., van Elburg A., Helms R., Chris Dijkerman H. A virtual reality full body illusion improves body image disturbance in Anorexia Nervosa.” Edited by Andreas Stengel. PLOS ONE. 2016;11(10):e0163921. doi: 10.1371/journal.pone.0163921. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keizer A., Smeets M.A.M., Chris Dijkerman H., Uzunbajakau S.A., van Elburg A., Postma A. Too fat to fit through the door: First evidence for disturbed body-scaled action in Anorexia Nervosa during locomotion. Edited by Manos Tsakiris. PLoS ONE. 2013;8(5):e64602. doi: 10.1371/journal.pone.0064602. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kennedy R.S., Lane N.E., Berbaum K.S., Lilienthal M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. The International Journal of Aviation Psychology. 1993;3(3):203–220. Informa UK Limited: [Google Scholar]

- Kilteni K., Normand J.-M., Sanchez-Vives M.V., Slater M. Extending body space in immersive virtual reality: A very long arm illusion. Edited by Manos Tsakiris. PLoS ONE. 2012;7(7):e40867. doi: 10.1371/journal.pone.0040867. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kopelman P.G. Obesity as a medical problem. Nature. 2000;404(6778):. 635–. 643. doi: 10.1038/35007508. Springer Nature. [DOI] [PubMed] [Google Scholar]

- Lenggenhager B., Tadi T., Metzinger T., Blanke O. Video ergo sum: Manipulating bodily self-consciousness. Science. 2007;317(5841):1096–1099. doi: 10.1126/science.1143439. American Association for the Advancement of Science (AAAS) [DOI] [PubMed] [Google Scholar]

- Longo M.R. Implicit and explicit body representations. European Psychologist. 2015;20(1):6–15. Hogrefe Publishing Group. [Google Scholar]

- Maselli A., Slater M. The building blocks of the full body ownership illusion. Frontiers in Human Neuroscience. 2013;7 doi: 10.3389/fnhum.2013.00083. Frontiers Media SA. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Molla E., Debarba H.G., Boulic R. Egocentric mapping of body surface constraints. IEEE Transactions on Visualization and Computer Graphics. 2018;24(7):2089–2102. doi: 10.1109/TVCG.2017.2708083. [DOI] [PubMed] [Google Scholar]

- Mölbert S., Thaler A., Mohler B., Streuber S., Black M., Karnath H.O.…Giel K. Assessing body image disturbance in patients with anorexia nervosa using biometric self-avatars in virtual reality: Attitudinal components rather than visual body size estimation are distorted. Journal of Psychosomatic Research. 2017;97(June):162. doi: 10.1017/S0033291717002008. Elsevier BV. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mölbert S., Klein L., Thaler A., Mohler B., Brozzo C., Martus P.…Giel K. Depictive and metric body size estimation in anorexia nervosa and bulimia nervosa: A systematic review and meta-analysis. Clinical Psychology Review. 2017;57(November):21–31. doi: 10.1016/j.cpr.2017.08.005. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Normand J.-M., Giannopoulos E., Spanlang B., Slater M. Multisensory stimulation can induce an illusion of larger belly size in immersive virtual reality. Edited by Martin Giurfa. PLoS ONE. 2011;6(1):e16128. doi: 10.1371/journal.pone.0016128. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petkova V.I., Ehrsson H.H. If I Were You: Perceptual illusion of body swapping. Edited by Justin Harris. PLoS ONE. 2008;3(12):e3832. doi: 10.1371/journal.pone.0003832. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Piryankova I.V., Wong H.Y., Linkenauger S.A., Stinson C., Longo M.R., Bülthoff H.H.…Mohler B.J. Owning an overweight or underweight body: Distinguishing the physical, experienced and virtual body. PLoS ONE. 2014;9(8):e103428. doi: 10.1371/journal.pone.0103428. Public Library of Science (PLoS) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Riva G. Neuroscience and eating disorders: The allocentric lock hypothesis. Medical Hypotheses. 2012;78(2):254–257. doi: 10.1016/j.mehy.2011.10.039. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Riva G. The neuroscience of body memory: From the self through the space to the others. Cortex. 2018;104(July):241–260. doi: 10.1016/j.cortex.2017.07.013. Elsevier BV. [DOI] [PubMed] [Google Scholar]

- Riva G., Dakanalis A. Altered processing and integration of multisensory bodily representations and signals in eating disorders: A possible path toward the understanding of their underlying causes. Frontiers in Human Neuroscience. 2018;12 doi: 10.3389/fnhum.2018.00049. https://doi.org/10.3389/fnhum.2018.00049 (February). Frontiers Media SA. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roth D., Lugrin J.-L., Latoschik M.E., Huber S. Proceedings of the 2017 CHI conference extended abstracts on human factors in computing systems - CHI EA 17. ACM Press; 2017. Alpha IVBO - Construction of a scale to measure the illusion of virtual body ownership. [Google Scholar]

- Rubo M., Gamer M. 2018 IEEE conference on virtual reality and 3d user interfaces (Vr) IEEE; 2018. Using vertex displacements to distort virtual bodies and objects while preserving visuo-tactile congruency during touch; pp. 673–674. [Google Scholar]

- Sahakyan K.R., Somers V.K., Rodriguez-Escudero J.P., Hodge D.O., Carter R.E., Sochor O.… Normal-weight central obesity: Implications for total and cardiovascular mortality. Annals of Internal Medicine. 2015;163(11):827. doi: 10.7326/M14-2525.

- Schubert T., Friedmann F., Regenbrecht H. The experience of presence: Factor analytic insights. Presence: Teleoperators and Virtual Environments. 2001;10(3):266–281.

- Serino S., Pedroli E., Keizer A., Triberti S., Dakanalis A., Pallavicini F.…Riva G. Virtual reality body swapping: A tool for modifying the allocentric memory of the body. Cyberpsychology, Behavior, and Social Networking. 2016;19(2):127–133. doi: 10.1089/cyber.2015.0229.

- Skarbez R., Brooks F., Whitton M. A survey of presence and related concepts. ACM Computing Surveys. 2017;50(6):1–39.

- Slater M. Inducing illusory ownership of a virtual body. Frontiers in Neuroscience. 2009;3(2):214–220. doi: 10.3389/neuro.01.029.2009.

- Slater M., Spanlang B., Sanchez-Vives M.V., Blanke O. First person experience of body transfer in virtual reality. PLoS ONE. 2010;5(5):e10564. doi: 10.1371/journal.pone.0010564.

- Smeets M.A.M. The rise and fall of body size estimation research in anorexia nervosa: A review and reconceptualization. European Eating Disorders Review: The Professional Journal of the Eating Disorders Association. 1997;5(2):75–95.

- Stice E. Risk and maintenance factors for eating pathology: A meta-analytic review. Psychological Bulletin. 2002;128(5):825–848. doi: 10.1037/0033-2909.128.5.825.

- Tsakiris M., Haggard P. The rubber hand illusion revisited: Visuotactile integration and self-attribution. Journal of Experimental Psychology: Human Perception and Performance. 2005;31(1):80–91. doi: 10.1037/0096-1523.31.1.80.

- Tsakiris M., Prabhu G., Haggard P. Having a body versus moving your body: How agency structures body-ownership. Consciousness and Cognition. 2006;15(2):423–432. doi: 10.1016/j.concog.2005.09.004.

- Varma S., Simon R. Bias in error estimation when using cross-validation for model selection. BMC Bioinformatics. 2006;7(1):91. doi: 10.1186/1471-2105-7-91.

- de Vignemont F. Body schema and body image – pros and cons. Neuropsychologia. 2010;48(3):669–680. doi: 10.1016/j.neuropsychologia.2009.09.022.

Data Availability Statement

The data that support the findings of this study are available at https://osf.io/v8qtr/.