Abstract

Objective:

To systematically review original user evaluations of patient information displays relevant to critical care and understand the impact of design frameworks and information presentation approaches on decision-making, efficiency, workload, and preferences of clinicians.

Methods:

We included studies that evaluated information displays designed to support real-time care decisions in critical care or anesthesiology using simulated tasks. We searched PubMed and IEEExplore from 1/1/1990 to 6/30/2018. The search strategy was developed iteratively with calibration against known references. Inclusion screening was completed independently by two authors. Extraction of display features, design processes, and evaluation method was completed by one and verified by a second author.

Results:

Fifty-six manuscripts evaluating 32 critical care and 22 anesthesia displays were included. Primary outcome metrics included clinician accuracy and efficiency in recognizing, diagnosing, and treating problems. Implementing user-centered design (UCD) processes, especially iterative evaluation and redesign, resulted in positive impact in outcomes such as accuracy and efficiency. Innovative display approaches that led to improved human-system performance in critical care included: (1) improving the integration and organization of information, (2) improving the representation of trend information, and (3) implementing graphical approaches to make relationships between data visible.

Conclusion:

Our review affirms the value of key principles of UCD. Improved information presentation can facilitate faster information interpretation and more accurate diagnoses and treatment. Improvements to information organization and support for rapid interpretation of time-based relationships between related quantitative data are warranted. Designers and developers are encouraged to involve users in formal iterative design and evaluation activities in the design of electronic health records (EHRs), clinical informatics applications, and clinical devices.

Keywords: Information display, Clinical decision support, Electronic medical record, User-centered design, User-computer interface, Critical care

1. Background and significance

Critical care patients generate a large amount of data due to the complexity of their conditions and therapy [1,2]. In this dynamic, high-risk, and information-rich environment, clinicians increasingly rely on the electronic health record (EHR) and bedside information displays to monitor patients and make care decisions. However, the ability of information displays to support these activities has been criticized [3–5], and their use has been linked with errors that compromise patient safety [6,7].

An important factor that can influence the success of information displays is the quality of clinicians’ interactions with them. Common challenges with EHR use include locating high priority information, customizing information presentation, and integrating relevant information to form a mental model of the patient [8,9]. Additional challenges include user interfaces that do not match clinical workflow, information overload, and poor usability [3,4,10]. Some interruptive decision support tools for standardizing care and improving adherence have been perceived by clinicians as a nuisance, leading to alert fatigue and delayed responses [11]. In light of this, it is not surprising that physicians identified EHRs as one of the primary contributors to professional dissatisfaction [10].

Applying user-centered methods to the design of information displays may reduce the likelihood that important information will be missed or misunderstood. In user-centered design (UCD) frameworks, systems are designed around the needs and capabilities of users. The process typically begins with an in-depth study of the work environment and the needs of the intended end users. An iterative process of design, evaluation, and redesign with representative users then follows, progressing from design concepts to increasingly detailed designs and prototypes [12–15]. By considering the users’ perspective, displays can integrate into users’ workflow, facilitate productivity, and support situation awareness [4,8,16–18].

Another design framework is ecological interface design (EID), in which “an interface has been designed to reflect the constraints of the work environment in a way that is perceptually available to the people who use it” [19]. EID involves a work domain analysis to develop an abstraction hierarchy of form and function, which is then used to inform the design of the interface to make the form and functional relationships visible to end users. There is overlap in some of the details of EID and UCD. Important differences are that UCD emphasizes iterative design and evaluation with end users and EID focuses on defining functional relationships of data and designing displays to support visualization of those relationships. A 2008 review recommended future display design work to follow a theoretical framework such as UCD or EID and called for reviews to evaluate specific features of successful displays [20].

2. Objective

We systematically reviewed articles that evaluated novel patient information displays for critical care to: (1) understand the use and impact of specific design processes in the context of design frameworks such as UCD, (2) survey and classify the variety of display innovations being developed and evaluated, and (3) evaluate the impact of display innovations on human-system performance. Regarding outcomes, we sought to understand the impact of display design on clinicians’ decision-making accuracy, efficiency, workload, and preferences. In particular, we explored what design processes were used, and whether specific design processes, features, or principles were associated with positive outcomes.

3. Methods

3.1. Scope

Our review complements other related reviews [4,20–30] with a focus on the presentation of patient information relevant to critical care patient management and decision-making. Critical care settings are of interest because of the complex and dynamic nature of patient information. We also included evaluations of anesthesia care displays because of similarities in patient monitoring practices (e.g., the need for continuous electrocardiogram and ventilator monitoring).

This review includes evaluations of display design prototypes outside of live clinical practice, using methods such as evaluation of performance in the context of simulated clinical tasks. These types of evaluations are useful because they frequently include a description of the design process, are able to test multiple hypotheses in a controlled setting, and can include novel display designs that may be difficult to implement in live clinical environments. We expected conceptual or pre-implementation user evaluation studies to place greater emphasis on describing design approaches and to use different methods to evaluate displays than clinical trials. This review was conducted in parallel with a review to evaluate the impact of novel display designs on outcomes in live clinical settings, which is reported in Waller et al. [31]. The Waller et al. [31] review included 22 studies evaluating 17 novel displays, which were grouped into 4 categories: comprehensive integrated displays, multi-patient dashboards, physiologic and laboratory monitoring, and expert systems. Twelve of the studies reported significant improvements in patient and other outcome measures, primarily in the evaluation of comprehensive integrated displays and multi-patient dashboards. We compare our findings to those of Waller et al. [31] to learn whether design innovations that were successful in pre-implementation user evaluations also improved outcomes in real clinical care.

3.2. Approach

We were guided in the development of our protocol by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [32] and the Institute of Medicine Standards for Systematic Reviews [33]. Study procedures were based on formal processes and instruments defined a priori by the authors.

3.3. Eligibility criteria

We included studies that completed conceptual or pre-implementation user evaluations of information displays designed to support real-time decisions in critical care or anesthesiology. We excluded data entry forms, imaging displays, simple interfaces involving few data points, interfaces not designed for clinical care, non-visual (e.g., auditory or tactile) interfaces, studies that were not focused on evaluating the user interface, studies that did not involve evaluation by humans as potential users of the system, and papers not published in English. (See Supplement for full inclusion and exclusion criteria and search terms.)

3.4. Information sources

We searched PubMed and IEEExplore from January 1, 1990 to June 30, 2018. The search strategy for each database was developed iteratively with calibration against a set of known references. We manually checked the reference lists of included articles and relevant review papers [20,26] to validate our search strategy and to identify candidate citations from sources other than PubMed and IEEExplore (book chapters, conference proceedings, or non-PubMed journals).

3.5. Study selection

Two authors independently reviewed titles and abstracts to determine if the paper met the inclusion criteria. Disagreements were resolved through consensus with a third author. If the abstract had insufficient information to make a confident decision, the article was included for full-text review. A similar process was followed for papers selected for full-text screening. To calculate inter-rater reliability, we used a bias- and prevalence-adjusted kappa, which adjusts for a high proportion of papers being excluded [34].
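To illustrate why a prevalence adjustment matters when most screened papers are excluded, the following minimal sketch computes the two-category form of the bias- and prevalence-adjusted kappa, PABAK = 2·Po − 1 (where Po is the observed proportion of agreement), alongside Cohen's kappa. The screening counts and function names are hypothetical and are not taken from this review.

```python
def observed_agreement(both_in, both_out, a_only, b_only):
    """Proportion of papers on which the two reviewers made the same decision."""
    total = both_in + both_out + a_only + b_only
    return (both_in + both_out) / total


def pabak(both_in, both_out, a_only, b_only):
    """Two-category bias- and prevalence-adjusted kappa: 2 * Po - 1."""
    return 2 * observed_agreement(both_in, both_out, a_only, b_only) - 1


def cohen_kappa(both_in, both_out, a_only, b_only):
    """Cohen's kappa, which is pulled down when one category dominates."""
    total = both_in + both_out + a_only + b_only
    po = observed_agreement(both_in, both_out, a_only, b_only)
    a_in = (both_in + a_only) / total   # reviewer A inclusion rate
    b_in = (both_in + b_only) / total   # reviewer B inclusion rate
    pe = a_in * b_in + (1 - a_in) * (1 - b_in)  # agreement expected by chance
    return (po - pe) / (1 - pe)


# Hypothetical screening result: 1000 abstracts, most excluded by both reviewers.
counts = dict(both_in=20, both_out=900, a_only=40, b_only=40)
print(round(pabak(**counts), 2))        # 0.84
print(round(cohen_kappa(**counts), 2))  # 0.29
```

With 92% raw agreement, the unadjusted kappa in this hypothetical example is low only because exclusions dominate; the adjusted statistic depends on observed agreement alone.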

3.6. Data extraction process

For each paper that met the inclusion criteria, we extracted information about display characteristics, design processes, and evaluation methods. A primary reviewer extracted data and a second reviewer checked for accuracy of the extraction. Disagreements were reconciled through consensus.

Display characteristics extracted included the type of display, clinical data shown, display features used to organize or prioritize the data (see Supplement for a full list), design approach, and design activities. For design processes, we reviewed both the full text of the article and references to prior publications. Where possible, we used the language of the authors to classify the design processes, including references to specific frameworks such as EID, UCD, and other processes. Because UCD is a relatively broad and flexible framework and because there can be overlap across frameworks, we specifically documented whether the design process: (1) involved users in early conceptualization of the design, (2) used an iterative process of evaluating human-system performance with users and subsequently improving the design based on findings, (3) involved attention to specific design principles (e.g., parsimony, making deviations visually apparent [35–43]), and (4) in keeping with EID, made functional relationships between data visible.

Evaluation methods information extracted included the method (i.e., comparison to a baseline display, other comparison, usability test, or design activity), number and type of participants, tasks and scenarios they completed, primary and secondary measures and findings, and the comparison display. We were unable to identify validated tools for evaluating the quality of pre-implementation or conceptual user evaluations. Therefore, the review team developed study quality criteria through a consensus among human factors and clinical informatics experts. Study quality was classified based on criteria including sample size, fidelity of the evaluation environment and relevant comparisons, whether study participants were representative of actual users, whether tasks and scenarios were representative of clinical work, use of objective or validated performance measures, and design balance for experimental comparisons. For usability tests, additional quality criteria included use of validated survey tools. For qualitative evaluations, quality criteria included coding by more than one person, description of an approach to validating findings, and description of an approach to evaluate comprehensiveness. Studies were categorized as high quality if they met at least 75% of criteria, medium quality if they met 50–75% of criteria, or low quality if they met less than 50% of criteria (see Supplement).
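The quality thresholds can be expressed as a small classification rule. This is only a sketch: the function name is hypothetical, and assigning a study that meets exactly 75% (or exactly 50%) of criteria to the higher category is an assumption, since the stated ranges do not say where boundary cases fall.

```python
def classify_quality(criteria_met, criteria_applicable):
    """Map the share of quality criteria met to a high/medium/low label.

    Assumption: boundary values (exactly 75% or exactly 50%) are placed
    in the higher category.
    """
    if criteria_applicable <= 0:
        raise ValueError("at least one quality criterion must apply")
    fraction = criteria_met / criteria_applicable
    if fraction >= 0.75:
        return "high"
    if fraction >= 0.50:
        return "medium"
    return "low"


# Hypothetical studies, each scored against eight applicable criteria.
for met in (7, 5, 3):
    print(met, classify_quality(met, 8))
```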

3.7. Summary measures

Common human-system performance outcome measures included accuracy (e.g., number of correct answers, number of successful trials, number of errors) and efficiency (e.g., time to detect an event or abnormal variable, time to diagnose, time to treat). Other measures included satisfaction, usability, and workload surveys. We classified findings of comparisons of novel to baseline displays based on results for the primary measure(s). Where authors did not specify which measures were of primary importance, we selected accuracy or efficiency as primary measures or, when these were not used, other measures, ranked by objectivity. We categorized findings as positive (novel display is favored in half or more of tasks), mixed (at least one finding favoring the novel display), neutral (no differences between novel and control displays), or negative (findings favoring the control display) (see Supplement).

3.8. Analysis

Two reviewers evaluated the key objectives and design features of included displays to identify overarching design innovations that were common across many included studies. Depending on the number of included studies, we aimed to identify two or more design innovations to compare regarding impact on human-system performance. We calculated descriptive statistics of the number of studies that applied different design innovations (e.g., improve visualization of trends) and design processes (e.g., involve users early in design). We compared the number of studies with positive findings for different design innovations using exact 90% binomial confidence intervals for proportions for the given n and proportion [44]. We used 90% based on the rationale that binomial confidence intervals have poor power among small numbers of trials for meta-analysis [45]. For comparisons between two proportions (e.g., whether or not a specific design process was used), we used Fisher’s exact test of independence to compare the effect on human-system performance outcomes. This test was selected because it is appropriate for small sample sizes. A p-value below 0.05 was considered significant. Finally, we conducted a descriptive comparison of our findings to the findings of the parallel review of evaluations conducted in live clinical settings [31].
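As an illustration of an exact binomial interval, the sketch below computes a 90% interval by inverting the binomial tail probabilities with bisection. It assumes the Clopper–Pearson construction, which is the usual meaning of "exact" but is not named in the text; the function names are hypothetical.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def exact_binomial_ci(k, n, level=0.90, iters=200):
    """Clopper-Pearson interval for k successes in n trials (assumed method)."""
    tail = (1 - level) / 2

    # Lower bound: the p at which P(X >= k) equals the tail probability.
    if k == 0:
        lower = 0.0
    else:
        lo, hi = 0.0, 1.0
        for _ in range(iters):
            mid = (lo + hi) / 2
            if 1 - binom_cdf(k - 1, n, mid) < tail:
                lo = mid   # upper tail too small, so p must be larger
            else:
                hi = mid
        lower = (lo + hi) / 2

    # Upper bound: the p at which P(X <= k) equals the tail probability.
    if k == n:
        upper = 1.0
    else:
        lo, hi = 0.0, 1.0
        for _ in range(iters):
            mid = (lo + hi) / 2
            if binom_cdf(k, n, mid) > tail:
                lo = mid   # lower tail too large, so p must be larger
            else:
                hi = mid
        upper = (lo + hi) / 2

    return lower, upper


# Example: 13 positive findings out of 22 comparisons, 90% interval.
print(exact_binomial_ci(13, 22))
```

When every trial is positive (k = n), the lower bound reduces to the closed form (α/2)^(1/n) and the upper bound is 1, which offers a quick check of the bisection.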

4. Results

4.1. Inclusion flow

After removing duplicates, our initial query included 6742 articles. Interrater agreement was 0.86 for title and abstract screening and 0.78 for full-text review. Twenty-two excluded studies met inclusion criteria except that they were evaluated in clinical settings; these studies are reviewed in Waller et al. [31]. Following additional review of articles from citation lists, 56 publications were included in this review (Fig. 1 and Supplement 1). These articles describe 56 novel display designs (see Supplement eTable 1 and eTable 2) and 79 unique evaluations or comparisons (see Supplement eTable 3 and eTable 4). Among the 56 display designs, 33 were designed for the intensive care unit (ICU) setting (5 specifically focused on neonatal or pediatric ICU) and 23 for the anesthesia care setting.

Fig. 1.

Inclusion flow.

4.2. Display types and features

See Supplement 1 for characteristics of the 56 novel display designs with respect to intended care setting, design processes, display purposes, design innovations, data displayed, and display features (eTable 1 and eTable 2). Supplement 2 provides extracted data in an Excel spreadsheet format to provide opportunity for additional data exploration. The stated purposes for the display were most often either to: (1) improve the speed and accuracy of detecting problems or (2) improve the interpretation of information to support more accurate or faster clinical decisions. The most common applications of displays were for monitoring and response related to anesthesia or critical care in general or, more specifically, for hemodynamic or respiratory monitoring and response. Displays also were designed for specific applications such as antibiotic prescribing decisions and anesthesia drug delivery. Displays used a variety of techniques (see Supplement) to convey: (1) quantitative information (e.g., bar plots, area plots, x-y plots, quantitative color coding), (2) relationships between variables (e.g., metaphor graphics, area plots, x-y plots, star diagrams), (3) change over time (e.g., linear trend, small multiples, change indicator, text), and (4) deviation from a reference range (e.g., change indicator, visual comparison, emergent feature, color code, risk indicator).

Based on two reviewers’ analyses of the purpose and features of the displays, we identified the following five common design innovations: (1) improve information organization and integration, (2) improve the presentation of trend information, (3) implement simple graphic objects to improve information interpretation, (4) implement more complex configural or metaphor object displays to support information interpretation and diagnosis (commonly resulting from EID), and (5) improve performance through the implementation of new functionality such as decision support, interactive visualization of data, or access to new information (see Figs. 2–5). For each display, we documented whether any of these five innovations were represented by the display (see Table 1).

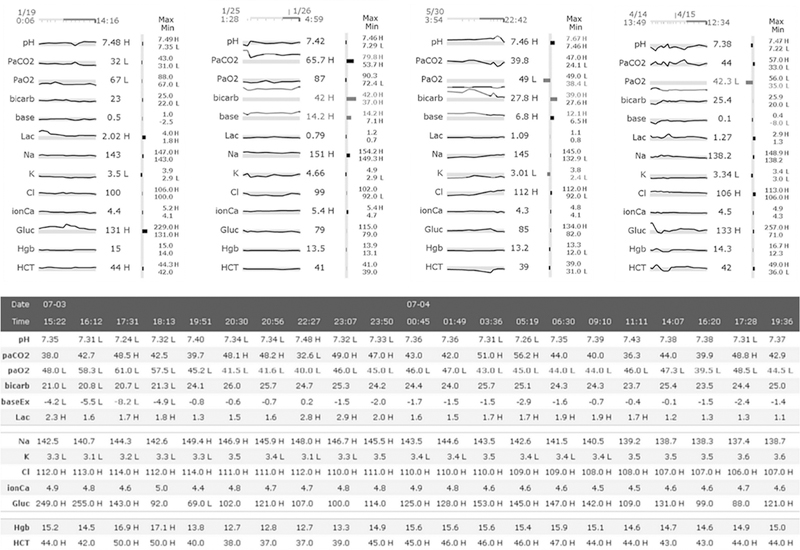

Fig. 2.

Integrated trend display with data presented on a common time axis; Anders et al. [47]. Reprinted with permission from Elsevier. This display incorporates four of the five design approaches: (1) improved information organization and integration, (2) improved depiction of trends, (3) cardiovascular metaphor graphic display (bottom left) (see also Agutter et al. [65] and Albert et al. [66]), and (4) a graphical object (bottom right) (see Doig et al. [69]).

Fig. 5.

Novel trend representation of lab data; Bauer et al. [94]. Reprinted with permission from Oxford University Press. Data represented as small trend sparklines (top) and in enhanced tables (bottom) that maximize data-ink ratios for ease of visibility [37]. This display includes novel trend representations.

Table 1.

Number of display designs including each of five design innovations by decade. Also see Supplement eTable 2.

| Design innovation | 1990–1999 | 2000–2009 | 2010–2018 | Total |
|---|---|---|---|---|
| Improve information organization and integration | 7 (70%) | 20 (87%) | 16 (70%) | 43 (77%) |
| Improve the display of trend information | 4 (40%) | 9 (39%) | 13 (57%) | 26 (46%) |
| Implement simple graphic objects | 9 (90%) | 8 (35%) | 15 (65%) | 32 (57%) |
| Implement configural or metaphor graphic objects | 5 (50%) | 10 (43%) | 3 (13%) | 18 (32%) |
| Other new functionality | 0 | 3 (13%) | 4 (17%) | 7 (13%) |

Examples of displays that were designed to combine information from different sources to support more meaningful grouping of related information included: (1) displays that integrated a broad range of critical care data to be presented in a high resolution display (see Fig. 2 for an example) [46–55], (2) displays that integrated data from bedside devices and monitors (see Fig. 3) [56–61], (3) displays that integrated data for the purpose of antibiotic selection and management [62–64], and (4) displays that integrated information into a novel graphical presentation [65–68]. Display designers frequently described an intention to organize the information in more clinically meaningful ways, for example, by human body systems, medical concepts, or provider tasks. In some of these, multiple variables are displayed as trend lines over time, with a common time axis that supports comparison of changes in patient state across different data and time points [47–49,51,53,55].

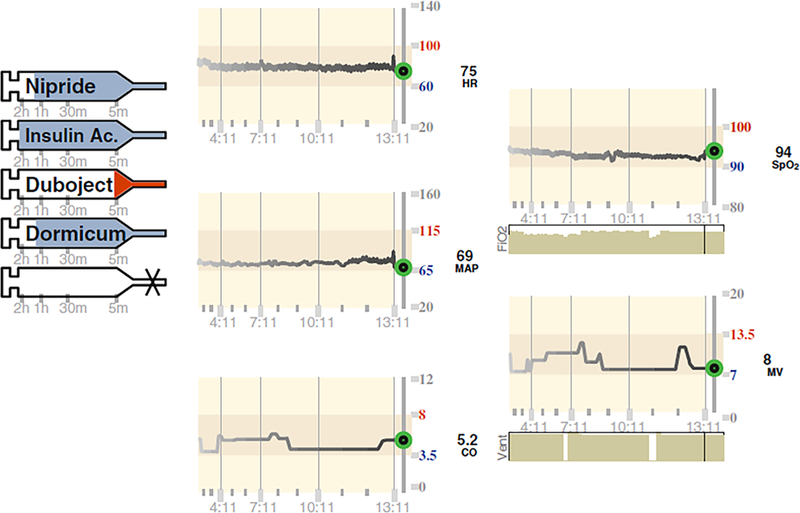

Fig. 3.

Integrated bedside display of vital sign trends and infusions; Görges et al. [57]. Reprinted with permission from Oxford University Press. This display includes: information integration and organization, improved trends, and simple graphic objects.

Configural or metaphor objects present multiple variables as objects (e.g., rectangles, stars, polygons) or as configural or metaphor graphics intended to make relationships between variables visually apparent (see Fig. 4). In addition to a variety of displays with conventional geometric objects [67,68,70–78], more complex graphical objects or metaphor objects were designed specifically to present emergent visualization of problems such as shock, cardiovascular problems, and pulmonary problems [65,66,79–83]. For example, blood flow was modeled as a pipe with a heart object or pump [47,65,66] (incorporated into the integrated display in Fig. 2, bottom left) and pulmonary function was modeled as lung objects with bellows [81,82]. Simple graphic objects include bar plots and dials for representing single or multiple quantitative variables (see Fig. 2, bottom right) [69,84–91].

Fig. 4.

Object display (at right) displaying blood pressure and heart rate in a rectangular object display; Drews and Doig [72]. Reprinted with permission from Sage. A reference range of values is represented by the gray outline. The orange rectangle at the right represents the current value. The white rectangle displays variability in heart rate and blood pressure over the past hour. This display includes: (1) improved information organization, (2) improved trends, and (3) a configural graphic object.

Several approaches were evaluated to improve the presentation of trend information. These included: (1) using statistical or modeling methods to detect clinically meaningful change which was represented with a change indicator [92,93], (2) using sparklines for trend display of lab data (see Fig. 5) [94], (3) text descriptions of change over time [95,96], and (4) integrating modeling to display predicted future trends [97,98]. Some displays provided more in-depth visualization of data relationships over time [48,49,55,91].

4.3. Design framework and processes

Table 3 presents the number of displays designed using any of the four design processes defined by our methods. Designers implemented 0, 1, 2, or 3–4 design processes for 7%, 34%, 29%, and 30% of the included displays, respectively. Specific design activities to support these processes included user interviews, observations, focus groups, and usability or other evaluations driving iterative redesign. Design activities involving representative users were described for 29 of the 56 displays (52%).

Table 3.

Number of displays in which the design incorporated any of the four design processes by decade. Also see Supplement 1 eTable 2.

| Design process | 1990–1999 | 2000–2009 | 2010–2018 | Total |
|---|---|---|---|---|
| Involved users in early conceptualization of the design | 2 (20%) | 14 (61%) | 16 (70%) | 32 (57%) |
| Iterative evaluation of human-system performance with users followed by redesign | 0 | 8 (35%) | 15 (65%) | 23 (41%) |
| Using EID methods, making functional relationships between data visible | 1 (10%) | 10 (43%) | 3 (13%) | 14 (25%) |
| Attention to other specific design principles | 4 (40%) | 14 (61%) | 8 (35%) | 26 (46%) |

4.4. Study designs

Evaluation methods included comparisons to a baseline display, comparisons between design alternatives, usability tests, and other design evaluations (see Table 2 and eTables 3 and 4). Displays used for baseline comparisons varied and included: (1) current or conventional EHRs and anesthesia monitors, (2) simulated EHRs, anesthesia monitors, strip charts, and digital displays, and (3) paper-based information presentation (see eTable 3). Among comparative studies, the most frequently missed quality criterion was high environment fidelity (evaluated in the context of a realistic task environment with appropriate distractors such as other clinicians and displays). Among usability and design activities, most studies met criteria of adequate sample size but rarely met criteria of using standard or validated scales and applying principles of rigorous qualitative data analysis.

Table 2.

Evaluation methods and study quality. Also see Supplement, eTable 3 and eTable 4.

| Quality | Comparison to baseline | Comparison across design alternatives | Usability tests and other design evaluations |
|---|---|---|---|
| High | 38 | 11 | 1 |
| Medium | 11 | 4 | 3 |
| Low | 2 | 0 | 9 |

4.5. Study findings by display innovations

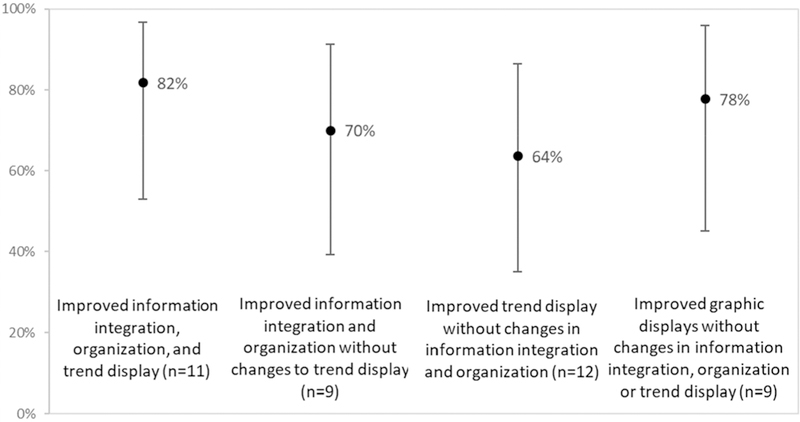

We identified 41 experiments of medium or high quality that supported comparison of outcomes by display innovations. For these 41 comparisons, 30 (73%) showed improved accuracy, faster response, or lower workload in comparison to control displays. Among the 41 novel displays evaluated, 23 incorporated improved trend presentation, 20 incorporated improved integration and organization, 19 implemented new simple graphical improvements, and 16 implemented new complex or metaphor graphics. There was overlap between display approaches, and few studies incorporated simple graphical objects or configural or metaphor graphical objects independent from other design approaches. Thus, we elected to compare outcomes across four mutually exclusive groups of studies that compared a control display to a novel display with: (1) improved information integration and organization and improved trend representation (with or without novel graphic objects) (n = 11), (2) improved integration and organization (but no trend improvements; with or without novel graphic objects) (n = 9), (3) improved trend representation (but no information integration and organization improvements; with or without novel graphic objects) (n = 12), and (4) implementation of novel graphic objects (but no changes in information content or representation of trend display) (n = 9). The proportion of positive studies for each group and 90% binomial confidence intervals are presented in Fig. 6. There were no significant differences in proportion of positive findings based on display approach.

Fig. 6.

Proportion of positive human-system performance outcomes resulting from different combinations of information display innovations for critical care. 90% confidence intervals for proportions are also shown.

Multiple studies supported the concept of grouping related information, dating back to 1997 for both anesthesia [52] and critical care [99] applications. In 2009, Miller et al. showed that, in comparison to paper charts, clinician accuracy improved with an electronic display that presented multiple related ICU trend line plots on a common time axis [51]. This concept was expanded to integrate more flexible interaction and trend visualization in MIVA (medical information visualization system) [48,49] and to incorporate a common timeline trend depiction with a cardiovascular metaphor display in Anders et al. [47]. The AWARE™ displays, which had positive findings, focus on organizing a parsimonious set of key critical care data in meaningful body-system groupings but display only the latest data value (trend plots for an individual data element can be accessed with a click) [46].

For geometric (rectangular, circular, polygon, ellipse, star) object displays of cardiovascular and respiratory data, positive findings in comparisons to baseline displays appear to be more common for solutions that involved simpler (e.g., rectangular) geometric representations that display only 2–3 related variables [72,75,79,80]. Two variations of metaphor graphic displays for cardiovascular data [65,66,100,101] were compared to baseline displays. One of these [65,66] led to faster time to detect and diagnose events and greater treatment accuracy compared to baseline displays. This display concept was subsequently included in an integrated display design (see Fig. 2) [47]. One pulmonary metaphor graphic display provided evidence to support faster time to treat ventilation problems in anesthesia [82].

Four evaluations compared displays with trend line plots to tabular EHR presentation of data over time, and better performance was observed with trend line plots [47,84,90,94]. Bauer et al. found trend line plots to be more effective for noticing trends and fluctuations, while tables supported detection of values just beyond a normal reference range [94]. There is also limited support for natural language text descriptions of patient changes over time. In comparison to trend line plots of key ICU data, two studies found that human-generated text descriptions led to higher accuracy of clinician responses [95,96]. However, a comparison of text descriptions generated by natural language processing to trend line plots resulted in no differences in accuracy of responses [95].

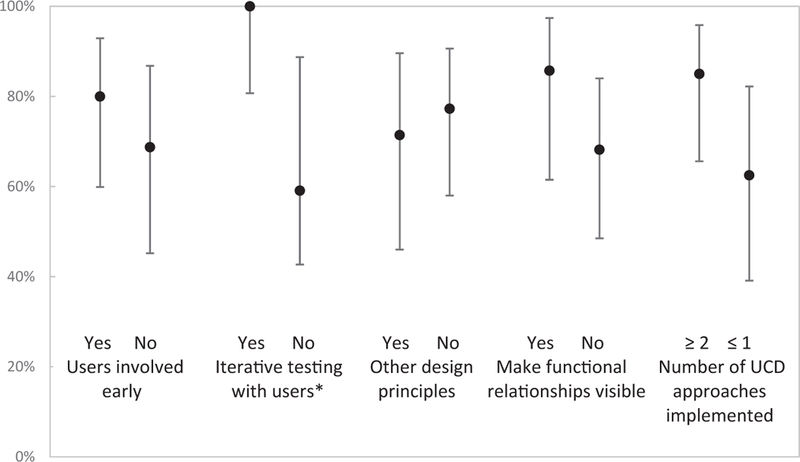

4.6. Study findings by design process

We identified 36 experiments of medium or high quality that supported comparison of outcomes by design processes. Among this sample, 27 (75%) novel displays showed improved accuracy, faster response, or lower workload in comparison to baseline displays. Using Fisher’s exact tests, we compared the impact of involving users early in the design process, iterative evaluation with users, making functional relationships visible (EID), and implementation of other design principles on the proportion of experiments with positive findings. Among these, there was a significant effect of iterative evaluation with end users (p < 0.01). Displays designed using iterative evaluation processes had higher proportions of positive outcomes (14 of 14 positive) than displays designed without iterative evaluation processes (13 of 22 positive). We also compared the outcomes of studies involving display designs resulting from implementation of 2 or more design processes (17 of 20 positive) to studies involving display designs resulting from implementing 1 or none of our classified design processes (10 of 16 positive). The difference was not significant (p = 0.15). Fig. 7 presents the proportion of positive findings and 90% binomial confidence intervals for these comparisons.
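The two comparisons above can be checked with a short Fisher's exact test written against the reported counts. This is a sketch rather than the authors' analysis code: it assumes a two-sided test that sums the probabilities of all 2×2 tables no more likely than the observed one (the text does not state sidedness), and the function name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups; columns are positive / not positive findings.
    Assumed convention: sum every table whose hypergeometric probability
    does not exceed that of the observed table.
    """
    row1 = a + b
    col1 = a + c
    n = a + b + c + d

    def weight(x):
        # Unnormalized hypergeometric probability of x positives in row 1.
        return comb(col1, x) * comb(n - col1, row1 - x)

    x_min = max(0, row1 - (n - col1))
    x_max = min(row1, col1)
    observed = weight(a)
    # Integer weights allow an exact "no more likely than observed" comparison.
    extreme = sum(weight(x) for x in range(x_min, x_max + 1)
                  if weight(x) <= observed)
    return extreme / comb(n, row1)


# Iterative evaluation: 14 of 14 positive vs. 13 of 22 positive.
print(round(fisher_exact_two_sided(14, 0, 13, 9), 3))   # about 0.006
# Two or more design processes: 17 of 20 positive vs. 10 of 16 positive.
print(round(fisher_exact_two_sided(17, 3, 10, 6), 2))   # about 0.15
```

Under these assumptions the sketch agrees with the reported results (p < 0.01 and p = 0.15).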

Fig. 7.

Proportion of positive outcomes in human-system performance based on design processes applied to displays designed to improve critical care information displays. For all pairs of comparisons, n = 36. 90% confidence intervals for proportions are also shown. *Significant at p < 0.01 using Fisher’s exact test.

4.7. Comparison to evaluations of novel displays in clinical settings

Positive outcomes in the parallel systematic review of clinical trials [31] were less frequent (9 of 17 displays, 53%) than in experiments involving simulated care activities. For the displays evaluated in clinical trials, there was evidence of implementation of formal design processes (early involvement of users, iterative evaluations, make functional relationships visible, apply other design principles) for 7 of the 17 included displays: four provided evidence of using two or more design processes and three provided evidence of using one design process.

Among clinical trial-based novel displays, positive outcomes were most prevalent for integrated EHR displays and multi-patient dashboards. This corroborates our findings of the value of information integration and organization. Multi-patient dashboards were not present in our set of displays evaluated using simulated care tasks. However, multi-patient dashboards integrate and organize information for specific purposes including patient prioritization or compliance tracking.

Although the review of evaluations using prototype displays in simulated settings supported design innovations for physiologic monitoring, this was not strongly corroborated in the review of clinical trials. Among the five physiologic displays evaluated, one had positive findings [102], one had positive findings in one of two studies [103,104], and the remaining three had mixed or neutral findings. Three displays targeted improving representation of trends [102–105], one targeted improving information integration and organization [106], and one targeted the use of an EID-motivated configural display to improve anesthetic monitoring [107]. The two positive outcome studies were associated with improved trend representations.

Three of the 17 displays included from clinical trial evaluations were represented in prior user evaluations or design activities from our review [103,105,108]. Two of these generated positive clinical outcomes in at least one evaluation.

5. Discussion

Common innovations to information displays intended to improve human-system performance in critical care include: (1) improving the integration and organization of information, (2) improving the representation of trend information, and (3) implementing graphical approaches to make relationships between data visible. These approaches were associated with improvements in human-system performance in 73% of evaluations. Our analysis did not reveal a significant advantage of one of these innovations over another.

There was convergent evidence to support gains in provider efficiency and accuracy by grouping conceptually-related information on a common display. Although there are examples of translation of improved information organization displays into commercial products (AWARE™) [109,110], there is substantial opportunity to improve the presentation of information in EHRs through integrated displays [3,60,111]. For example, response time to simulated realistic clinical decisions was 12% faster when clinicians used a display that integrated data from a conventional patient monitor with infusion pump data than when clinicians used conventional, separated, patient monitors and infusion pump displays [57].

There was also support for providing explicit trend information. Traditional bedside monitors provide continuous cardiac cycle waveforms that provide only very short (seconds) visual representations of trends. Longer term trends, such as changes in heart rate or oxygen saturation over minutes or hours, are not typically explicitly represented on the display. This review supports the value of providing trend information at the bedside, either through display of graphical trend lines [57,93] or through the display of a simple graphical indicator when statistical or modeling algorithms detect clinically meaningful change in a monitored variable [92,93].

In current EHRs, trend information is typically either absent from screens that provide a snapshot view of the patient, is presented in complex tabular form on multiple screens, or requires complex user interaction to generate. Our review provides support for improvements to EHRs that provide rapid access to real-time trend representations of related information (including treatments and related responses which may require integrating information from different sources) over a common timeline (Fig. 2) [47,51].

Our study found that implementing UCD processes and, in particular, iterative redesign based on evaluations involving end users, leads to a higher likelihood of display designs that succeed in improving human-system performance in simulated care tasks. Although displays developed without reference to specific design processes continue to be common, increased use of UCD appears to coincide with the timing of recommendations in the review by Görges and Staggers in 2008 [20]. The work by Koch and colleagues to design and test an integrated critical care bedside display provides a good example of a UCD approach progressing from participatory design activities to iterative evaluation and redesign [58–60]. Wachter and colleagues also exemplify a comprehensive approach in the design of a pulmonary metaphor graphic display using EID principles while also integrating UCD processes of early design evaluations with end users [81] and summative evaluation [82].

Recent designs evaluated in clinical trials (mostly multi-patient displays) appear to have been developed without explicit attention to UCD processes. It is not clear whether this represents a lack of attention to UCD or if design processes simply were not reported. There may be an opportunity to enhance the potential for success in improving clinical outcomes through integration of processes such as early involvement of users in interviews and observations, heuristic inspection of early display concepts, and intentionally planning for design refinement following pilot implementation and evaluation with end users. The use of these processes need not be limited to simulation or laboratory settings.

5.1. Study design and quality

The majority of comparative evaluations met our definition of high quality studies. Although few studies evaluated displays in high fidelity environments, the environment fidelity was typically equivalent between the novel display and the comparison conditions. We did not formally critique the quality of the comparison display. Future studies may be improved by providing greater attention to presenting a high standard for comparison or attention to controlled manipulation of specific display features of interest. An example of a study with strong potential for applicability to future designs and generalizability is that by Bauer et al. [94], which compared optimized (in terms of applying relevant design principles) versions of a tabular display to sparkline (small trend line) displays (Fig. 5). The tabular display version serves not only as a current system comparator and targets a specific design question, but also sets a high standard which may be relevant to future system enhancements.

There were few usability and design activity evaluations, and study quality was lower for these. Because there are limited approaches to assessing these evaluation methods, we defined our criteria based on recommendations for usability testing and by applying key principles of qualitative research to design activities such as interviews and heuristic evaluations [104,112–114]. It is not clear, particularly in the application of guidance for qualitative research as criteria for evaluating design activities, that this is a meaningful assessment of quality. It also is not clear whether failure to meet these criteria is due to lack of reporting or to methods not being applied. Our review suggests that there is a need to develop approaches to evaluating the rigor of formative and iterative design activities and their reporting for clinical informatics display design work.

5.2. Limitations

Our search strategy included only two databases. The high number of studies captured through citation review raises concerns about selection bias, which searching a third database such as CINAHL might have mitigated. The reviewed studies are likely subject to publication bias favoring evaluations with positive findings. Substantial differences in the design of experiments, variations in display design features, and small sample sizes in the context of our meta-analyses limit our interpretations and conclusions.

6. Conclusion

Our review identified 56 publications describing user evaluations of human-system performance associated with novel information display concepts for patient care in anesthesia and critical care settings. Implementing UCD processes, particularly iterative evaluation and re-design, resulted in positive impact in outcomes such as accuracy and efficiency. While the use of UCD is becoming more common, there is a concern that many displays continue to be designed and implemented in clinical settings without attention to formal design processes. There is an opportunity to facilitate faster interpretation of critical care information and more accurate diagnoses and treatment decisions through improved information displays. In particular, integrating meaningful groups of information and supporting an understanding of changes in related quantitative data over a common timeframe are warranted.

Acknowledgments

The authors wish to thank and acknowledge the contributions of Atilio Barbeito, Jonathan Mark, Eugene Moretti, and Rebecca Schroeder of Duke University; Bruce Bray, Farrant Sakaguchi, Joe Tonna, and Charlene Weir of University of Utah; Brekk Macpherson of Virginia Commonwealth University; Anthony Faiola, University of Illinois; Kathleen Harder, University of Minnesota; and Leanne Vander Hart, Trinity Health. These individuals participated as expert panel reviewers and provided ongoing critical appraisal of our work. Jonathan Mark and Eugene Moretti also contributed to critical revision of the manuscript. Thanks to Sydney Radcliffe of Saint Alphonsus Regional Medical Center for assistance with table manipulation, presentation, and preparation of the Supplement. Thanks to Matthias Gorges, University of BC; Anders Shilo, Vanderbilt University; Stephanie Guerlain, University of Virginia; and Frank Drews, University of Utah for assistance with figure reprint content. This manuscript does not necessarily reflect the opinions or views of Trinity Health.

Funding statement

This work was supported by the National Library of Medicine of the National Institutes of Health R56LM011925 and T15LM007124.

Footnotes

Declaration of Competing Interest

The authors have no competing interests to declare.

Appendix A. Supplementary material

Supplementary data to this article can be found online at https://doi.org/10.1016/j.yjbinx.2019.100041.

References

- [1]. Donchin Y, et al., A look into the nature and causes of human errors in the intensive care unit, Quality Safety Health Care 12 (2) (2003) 143–147.

- [2]. Manor-Shulman O, et al., Quantifying the volume of documented clinical information in critical illness, J. Crit. Care 23 (2) (2008) 245–250.

- [3]. Wright MC, et al., Toward designing information display to support critical care. A qualitative contextual evaluation and visioning effort, Appl. Clin. Inform. 7 (4) (2016) 912–929.

- [4]. Zahabi M, Kaber DB, Swangnetr M, Usability and safety in electronic medical records interface design: a review of recent literature and guideline formulation, Hum. Factors 57 (5) (2015) 805–834.

- [5]. Zhang J, Walji MF, TURF: toward a unified framework of EHR usability, J. Biomed. Inform. 44 (6) (2011) 1056–1067.

- [6]. Magrabi F, et al., An analysis of computer-related patient safety incidents to inform the development of a classification, J. Am. Med. Inform. Assoc. 17 (6) (2010) 663–670.

- [7]. Meeks DW, et al., An analysis of electronic health record-related patient safety concerns, J. Am. Med. Inform. Assoc. 21 (6) (2014) 1053–1059.

- [8]. Russ AL, et al., Perceptions of information in the electronic health record, in: Proceedings of the 2009 Human Factors and Ergonomics Society Annual Meeting, vol. 53, no. 11, 2009, pp. 635–639.

- [9]. Weir CR, Nebeker JR, Critical issues in an electronic documentation system, AMIA Annual Symposium Proceedings, 2007, pp. 786–790.

- [10]. Zhou YY, et al., Improved quality at Kaiser Permanente through e-mail between physicians and patients, Health Aff. 29 (7) (2010) 1370–1375.

- [11]. Görges M, Markewitz BA, Westenskow DR, Improving alarm performance in the medical intensive care unit using delays and clinical context, Anesth. Analg. 108 (5) (2009) 1546–1552.

- [12]. Endsley MR, Designing for Situation Awareness: An Approach to User-centered Design, CRC Press, Boca Raton, FL, 2011.

- [13]. Hollnagel E, Handbook of Cognitive Task Design, Lawrence Erlbaum Publishers, Mahwah, N.J., 2003.

- [14]. Holtzblatt K, Wendell JB, Wood S, Rapid Contextual Design: A How-to Guide to Key Techniques for User-centered Design, Elsevier/Morgan Kaufmann, San Francisco, 2005.

- [15]. Vicente KJ, Rasmussen J, Ecological interface design: theoretical foundations, IEEE Trans. Syst. Man Cybern. 22 (4) (1992) 589–606.

- [16]. Saleem JJ, et al., Exploring the persistence of paper with the electronic health record, Int. J. Med. Inf. 78 (9) (2009) 618–628.

- [17]. Scott JT, et al., Kaiser Permanente’s experience of implementing an electronic medical record: a qualitative study, BMJ 331 (7528) (2005) 1313–1316.

- [18]. Sittig DF, Singh H, Eight rights of safe electronic health record use, JAMA 302 (10) (2009) 1111–1113.

- [19]. Burns CM, Hajdukiewicz JR, Ecological Interface Design, CRC Press, Boca Raton, FL, 2004.

- [20]. Görges M, Staggers N, Evaluations of physiological monitoring displays: a systematic review, J. Clin. Monit. Comput. 22 (1) (2008) 45–66.

- [21]. Brenner SK, et al., Effects of health information technology on patient outcomes: a systematic review, J. Am. Med. Inform. Assoc. 23 (5) (2016) 1016–1036.

- [22]. Buntin MB, et al., The benefits of health information technology: a review of the recent literature shows predominantly positive results, Health Aff. 30 (3) (2011) 464–471.

- [23]. Chaudhry B, et al., Systematic review: impact of health information technology on quality, efficiency, and costs of medical care, Ann. Intern. Med. 144 (10) (2006) 742–752.

- [24]. Ellsworth MA, et al., An appraisal of published usability evaluations of electronic health records via systematic review, J. Am. Med. Inform. Assoc. (2016).

- [25]. Drews FA, Westenskow DR, The right picture is worth a thousand numbers: data displays in anesthesia, Hum. Factors 48 (1) (2006) 59–71.

- [26]. Kamaleswaran R, McGregor C, A review of visual representations of physiologic data, JMIR Med. Inform. 4 (4) (2016) e31.

- [27]. Sanderson PM, Watson MO, Russell WJ, Advanced patient monitoring displays: tools for continuous informing, Anesth. Analg. 101 (1) (2005) 161–168.

- [28]. Schmidt JM, De Georgia M, Multimodality monitoring: informatics, integration data display and analysis, Neurocritical Care 21 (Suppl 2) (2014) S229–38.

- [29]. Khairat SS, et al., The impact of visualization dashboards on quality of care and clinician satisfaction: integrative literature review, JMIR Hum. Factors 5 (2) (2018) e22.

- [30]. Segall N, et al., Trend displays to support critical care: a systematic review, in: IEEE International Conference on Healthcare Informatics, Park City, UT, 2017.

- [31]. Waller R, et al., Novel displays of patient information in critical care settings: a systematic review, J. Am. Med. Inform. Assoc. 26 (5) (2019) 479–489.

- [32]. Liberati A, et al., The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration, PLoS Med. 6 (7) (2009) e1000100.

- [33]. Institute of Medicine (US) Committee on Standards for Systematic Reviews of Comparative Effectiveness Research, et al., Finding What Works in Health Care: Standards for Systematic Reviews, 2011.

- [34]. Byrt T, Bishop J, Carlin JB, Bias, prevalence and kappa, J. Clin. Epidemiol. 46 (5) (1993) 423–429.

- [35]. Bertin J, Semiology of Graphics, University of Wisconsin Press, Madison, 2010.

- [36]. Helander MG, Handbook of Human-Computer Interaction, Elsevier Science Publishing Company Inc., New York, NY, 1988.

- [37]. Tufte ER, The Visual Display of Quantitative Information, Graphics Press, Cheshire, CT, 2001.

- [38]. Endsley MR, Bolte B, Jones DG, Designing for Situation Awareness: An Approach to Human-Centered Design, Taylor and Francis, London, 2003.

- [39]. Few S, Information Dashboard Design, second ed., Analytics Press, Burlingame, 2013.

- [40]. Holtzblatt K, Wendell J, Wood S, Rapid Contextual Design, Elsevier Inc., San Francisco, 2005.

- [41]. Mayhew DJ, Principles and Guidelines in Software User Interface Design, Prentice Hall, Englewood Cliffs, NJ, 1992.

- [42]. Schlatter T, Levinson D, Visual Usability: Principles and Practices for Designing Digital Applications, Elsevier, Boston, 2013.

- [43]. Smith SL, Mosier JN, Guidelines for Designing User Interface Software, ESD-TR-86-278. 1986. [cited 2007 October 11]; Available from: http://www.hcibib.org/sam/index.html.

- [44]. Conover WJ, Practical Nonparametric Statistics, John Wiley & Sons, New York, 1999.

- [45]. Haidich AB, Meta-analysis in medical research, Hippokratia 14 (Suppl 1) (2010) 29–37.

- [46]. Ahmed A, et al., The effect of two different electronic health record user interfaces on intensive care provider task load, errors of cognition, and performance, Crit. Care Med. 39 (7) (2011) 1626–1634.

- [47]. Anders S, et al., Evaluation of an integrated graphical display to promote acute change detection in ICU patients, Int. J. Med. Inf. 81 (12) (2012) 842–851.

- [48]. Faiola A, Newlon C, Advancing Critical Care in the ICU: A Human-Centered Biomedical Data Visualization Systems, in: Lecture Notes in Computer Science, Springer Berlin Heidelberg, Berlin, Heidelberg, 2011, pp. 119–128.

- [49]. Faiola A, Srinivas P, Duke J, Supporting Clinical Cognition: A Human-Centered Approach to a Novel ICU Information Visualization Dashboard, AMIA ... Annual Symposium proceedings/AMIA Symposium, AMIA Symposium, 2015, pp. 560–569.

- [50]. Pickering BW, et al., Novel representation of clinical information in the ICU: developing user interfaces which reduce information overload, Appl. Clin. Inform. 1 (2) (2010) 116–131.

- [51]. Miller A, Scheinkestel C, Steele C, The effects of clinical information presentation on physicians’ and nurses’ decision-making in ICUs, Appl. Ergon. 40 (4) (2009) 753–761.

- [52]. Michels P, Gravenstein D, Westenskow DR, An integrated graphic data display improves detection and identification of critical events during anesthesia, J. Clin. Monit. 13 (4) (1997) 249–259.

- [53]. Flohr L, et al., Clinician-driven design of VitalPAD-an intelligent monitoring and communication device to improve patient safety in the intensive care unit, IEEE J. Transl. Eng. Health Med. 6 (2018) 3000114.

- [54]. Lin YL, et al., Heuristic evaluation of data integration and visualization software used for continuous monitoring to support intensive care: a bedside nurse’s perspective, Nursing Care 4 (6) (2015).

- [55]. Lin YL, et al., Usability of data integration and visualization software for multi-disciplinary pediatric intensive care: a human factors approach to assessing technology, BMC Med. Inf. Decis. Making 17 (1) (2017) 122.

- [56]. Gorges M, et al., A far-view intensive care unit monitoring display enables faster triage, Dimens. Crit. Care Nurs. 30 (4) (2011) 206–217.

- [57]. Gorges M, Westenskow DR, Markewitz BA, Evaluation of an integrated intensive care unit monitoring display by critical care fellow physicians, J. Clin. Monit. Comput. 26 (6) (2012) 429–436.

- [58]. Koch S, Sheeren A, Staggers N, Using personas and prototypes to define nurses’ requirements for a novel patient monitoring display, Stud. Health Technol. Inform. 146 (2009) 69–73.

- [59]. Koch SH, et al., ICU nurses’ evaluations of integrated information displays on user satisfaction and perceived mental workload, Stud. Health Technol. Inform. 180 (2012) 383–387.

- [60]. Koch SH, et al., Evaluation of the effect of information integration in displays for ICU nurses on situation awareness and task completion time: a prospective randomized controlled study, Int. J. Med. Inform. 82 (8) (2013) 665–675.

- [61]. Doesburg F, et al., Improved usability of a multi-infusion setup using a centralized control interface: a task-based usability test, PLoS ONE 12 (8) (2017) e0183104.

- [62]. Forsman J, et al., Integrated information visualization to support decision making for use of antibiotics in intensive care: design and usability evaluation, Inform. Health Soc. Care 38 (4) (2013) 330–353.

- [63]. Thursky KA, Mahemoff M, User-centered design techniques for a computerised antibiotic decision support system in an intensive care unit, Int. J. Med. Inform. 76 (10) (2007) 760–768.

- [64]. Gil M, et al., Co-Design of a computer-assisted medical decision support system to manage antibiotic prescription in an ICU ward, Stud. Health Technol. Inform. 228 (2016) 499–503.

- [65]. Agutter J, et al., Evaluation of graphic cardiovascular display in a high-fidelity simulator, Anesth. Analg. 97 (5) (2003) 1403–1413.

- [66]. Albert RW, et al., A simulation-based evaluation of a graphic cardiovascular display, Anesth. Analg. 105 (5) (2007) 1303–1311.

- [67]. Effken JA, Improving clinical decision making through ecological interfaces, Ecol. Psychol. 18 (2006) 283–318.

- [68]. Effken JA, et al., Clinical information displays to improve ICU outcomes, Int. J. Med. Inform. 77 (11) (2008) 765–777.

- [69]. Doig AK, et al., Graphical arterial blood gas visualization tool supports rapid and accurate data interpretation, Comput. Inform. Nurs. 29 (4 Suppl) (2011) TC53–60.

- [70]. Cole WG, Stewart JG, Human performance evaluation of a metaphor graphic display for respiratory data, Methods Inf. Med. 33 (4) (1994) 390–396.

- [71]. Deneault LG, et al., An integrative display for patient monitoring, in: 1990 IEEE International Conference on Systems, Man, and Cybernetics Conference Proceedings, IEEE, 1990, pp. 515–517.

- [72]. Drews FA, Doig A, Evaluation of a configural vital signs display for intensive care unit nurses, Hum. Factors 56 (3) (2014) 569–580.

- [73]. Green CA, et al., Aberdeen polygons: computer displays of physiological profiles for intensive care, Ergonomics 39 (3) (1996) 412–428.

- [74]. Jungk A, et al., Ergonomic evaluation of an ecological interface and a profilogram display for hemodynamic monitoring, J. Clin. Monit. Comput. 15 (7–8) (1999) 469–479.

- [75]. Jungk A, et al., Evaluation of two new ecological interface approaches for the anesthesia workplace, J. Clin. Monit. Comput. 16 (4) (2000) 243–258.

- [76]. Liu Y, Osvalder AL, Usability evaluation of a GUI prototype for a ventilator machine, J. Clin. Monit. Comput. 18 (5–6) (2004) 365–372.

- [77]. Ordonez P, et al., Visualization of multivariate time-series data in a neonatal ICU, IBM J. Res. Dev. 56 (5) (2012) 7:1–7:12.

- [78]. van Amsterdam K, et al., Visual metaphors on anaesthesia monitors do not improve anaesthetists’ performance in the operating theatre, Br. J. Anaesth. 110 (5) (2013) 816–822.

- [79]. Blike GT, Surgenor SD, Whalen K, A graphical object display improves anesthesiologists’ performance on a simulated diagnostic task, J. Clin. Monit. Comput. 15 (1) (1999) 37–44.

- [80]. Blike GT, et al., Specific elements of a new hemodynamics display improves the performance of anesthesiologists, J. Clin. Monit. Comput. 16 (7) (2000) 485–491.

- [81]. Wachter SB, et al., The employment of an iterative design process to develop a pulmonary graphical display, J. Am. Med. Inform. Assoc. 10 (4) (2003) 363–372.

- [82]. Wachter SB, et al., The evaluation of a pulmonary display to detect adverse respiratory events using high resolution human simulator, J. Am. Med. Inform. Assoc. 13 (6) (2006) 635–642.

- [83]. Zhang Y, et al., Effects of integrated graphical displays on situation awareness in anaesthesiology, Cogn. Technol. Work 4 (2) (2002) 82–90.

- [84]. Charabati S, et al., Comparison of four different display designs of a novel anaesthetic monitoring system, the integrated monitor of anaesthesia (IMA), Br. J. Anaesth. 103 (5) (2009) 670–677.

- [85]. Elouni J, et al., A visual modeling of knowledge for decision-making, in: International Conference on Image and Vision Computing New Zealand (IVCNZ), 2016, IEEE, 2016.

- [86]. Frize M, Bariciak E, Gilchrist J, PPADS: Physician-PArent decision-support for neonatal intensive care, Stud. Health Technol. Inform. 192 (2013) 23–27.

- [87]. Gorges M, et al., An evaluation of an expert system for detecting critical events during anesthesia in a human patient simulator: a prospective randomized controlled study, Anesth. Analg. 117 (2) (2013) 380–391.

- [88]. Gurushanthaiah K, Weinger MB, Englund CE, Visual display format affects the ability of anesthesiologists to detect acute physiologic changes. A laboratory study employing a clinical display simulator, Anesthesiology 83 (6) (1995) 1184–1193.

- [89]. Mitchell PH, Burr RL, Kirkness CJ, Information technology and CPP management in neuro intensive care, Acta Neurochir. Suppl. 81 (2002) 163–165.

- [90]. Sharp TD, Helmicki AJ, The application of the ecological interface design approach to neonatal intensive care medicine, Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 1998, pp. 350–354.

- [91]. Stubbs B, Kale DC, Das A, Sim*TwentyFive: an interactive visualization system for data-driven decision support, AMIA Annu. Symp. Proc. 2012 (2012) 891–900.

- [92]. Tappan JM, et al., Visual cueing with context relevant information for reducing change blindness, J. Clin. Monit. Comput. 23 (4) (2009) 223–232.

- [93]. Kennedy RR, Merry AF, The effect of a graphical interpretation of a statistic trend indicator (Trigg’s Tracking Variable) on the detection of simulated changes, Anaesth. Intensive Care 39 (5) (2011) 881–886.

- [94]. Bauer DT, Guerlain S, Brown PJ, The design and evaluation of a graphical display for laboratory data, J. Am. Med. Inform. Assoc. 17 (4) (2010) 416–424.

- [95]. Hunter J, et al., Summarising complex ICU data in natural language, in: AMIA ... Annual Symposium proceedings/AMIA Symposium, AMIA Symposium, 2008, pp. 323–327.

- [96]. Law AS, et al., A comparison of graphical and textual presentations of time series data to support medical decision making in the neonatal intensive care unit, J. Clin. Monit. Comput. 19 (3) (2005) 183–194.

- [97]. Drews FA, et al., Drug delivery as control task: improving performance in a common anesthetic task, Hum. Factors 48 (1) (2006) 85–94.

- [98]. Syroid ND, et al., Development and evaluation of a graphical anesthesia drug display, Anesthesiology 96 (3) (2002) 565–575.

- [99]. Ireland RH, et al., Design of a summary screen for an ICU patient data management system, Med. Biol. Eng. Comput. 35 (4) (1997) 397–401.

- [100]. Effken JA, Kim NG, Shaw RE, Making the relationships visible: testing alternative display design strategies for teaching principles of hemodynamic monitoring and treatment, in: Proc. Annu. Symp. Comput. Appl. Med. Care, 1994, pp. 949–953.

- [101]. Effken JA, Kim NG, Shaw RE, Making the constraints visible: testing the ecological approach to interface design, Ergonomics 40 (1) (1997) 1–27.

- [102]. Giuliano KK, et al., The role of clinical decision support tools to reduce blood pressure variability in critically ill patients receiving vasopressor support, Comput. Inform. Nurs. 30 (4) (2012) 204–209.

- [103]. Kennedy RR, McKellow MA, French RA, The effect of predictive display on the control of step changes in effect site sevoflurane levels, Anaesthesia 65 (8) (2010) 826–830.

- [104]. Kennedy T, Lingard L, Making sense of grounded theory in medical education, Med. Educ. (2006) 101–108.

- [105]. Kirkness CJ, et al., The impact of a highly visible display of cerebral perfusion pressure on outcome in individuals with cerebral aneurysms, Heart Lung 37 (3) (2008) 227–237.

- [106]. Bansal P, et al., A computer based intervention on the appropriate use of arterial blood gas, Proc. AMIA Symp. (2001) 32–36.

- [107]. Sondergaard S, et al., High concordance between expert anaesthetists’ actions and advice of decision support system in achieving oxygen delivery targets in high-risk surgery patients, Br. J. Anaesth. 108 (6) (2012) 966–972.

- [108]. Olchanski N, et al., Can a novel ICU data display positively affect patient outcomes and save lives? J. Med. Syst. 41 (11) (2017) 171.

- [109]. Dziadzko MA, et al., User perception and experience of the introduction of a novel critical care patient viewer in the ICU setting, Int. J. Med. Inform. 88 (2016) 86–91.

- [110]. Pickering BW, et al., The implementation of clinician designed, human-centered electronic medical record viewer in the intensive care unit: a pilot step-wedge cluster randomized trial, Int. J. Med. Inform. 84 (5) (2015) 299–307.

- [111]. Reese T, et al., Patient information organization in the intensive care setting: expert knowledge elicitation with card sorting methods, J. Am. Med. Inform. Assoc. 25 (8) (2018) 1026–1035.

- [112]. Nielsen J, Mack R, Usability Inspection Methods, John Wiley & Sons Inc., New York, 1994.

- [113]. Charmaz K, Constructing Grounded Theory, Sage Publications Inc., Thousand Oaks, CA, 2006.

- [114]. Kushniruk AW, Patel VL, Cimino JJ, Usability testing in medical informatics: cognitive approaches to evaluation of information systems and user interfaces, in: Proceedings: a conference of the American Medical Informatics Association/AMIA Annual Fall Symposium AMIA Fall Symposium, 1997, pp. 218–22.
