Abstract

The National Institutes of Health requires data sharing plans for projects with five hundred thousand dollars or more in direct costs in a single year and has recently released new guidance on rigor and reproducibility in grant applications. The National Science Foundation requires Data Management Plans (DMPs) outright as part of applications for funding. However, there is no general and definitive list of topics that a DMP for a research project should cover. We identified and reviewed DMP requirements from research funders. Forty-three DMP topics were identified. The review uncovered inconsistent requirements for written DMPs as well as high variability in required or suggested DMP topics among funder requirements. DMP requirements were found to emphasize post-publication data sharing rather than upstream activities that impact data quality, provide traceability or support reproducibility. With the emphasis balanced between upstream and post-publication activities, the forty-three identified topics can help Data Managers systematically generate comprehensive DMPs that support research project planning and funding application evaluation as well as data management conduct and post-publication data sharing.

Keywords: Data management, Data management plan, Data management planning, Reproducibility, Traceability, Data quality

1. Introduction

Data are the building blocks of scientific inquiry. Data management is the process by which observations and measurements are defined, made, and documented, together with the methods by which the resulting data are subsequently processed and maintained. As such, data management is an essential part of every research endeavor. Historically, many research data management practices stem directly from what is needed to support research reproducibility.

Research reproducibility has been variously defined [1–6]. For the purposes of this discussion, we adopt the Peng (2009) definitions of replication and reproducibility: “The replication of scientific findings using independent investigators, methods, data, equipment, and protocols has long been, and will continue to be, the standard by which scientific claims are evaluated. However, in many fields of study there are examples of scientific investigations that cannot be fully replicated because of a lack of time or resources. In such a situation, there is a need for a minimum standard that can fill the void between full replication and nothing. One candidate for this minimum standard is “reproducible research”, which requires that data sets and computer code be made available to others for verifying published results and conducting alternative analyses.” [7] Similarly, the Institute of Medicine, in the workshop report on Assuring Data Quality and Validity in Clinical Trials for Regulatory Decision Making (1999), defines quality data as “data that support the same conclusions as error free data” [8].

A second concept, traceability, is important to replicability and reproducibility. Traceability of research data is the ability to reproduce the raw data from the analysis datasets and vice versa. Traceability requires a record of all operations performed on data and all changes to data, and it is required, for example, of data submitted to the United States Food and Drug Administration for regulatory decision-making. The regulatory basis for traceability is found in Title 21 CFR 58.130 (c) and (e) and 58.35 (b), and Title 42 CFR Part 93 [9].¹ Title 21 CFR Part 11, the Electronic Records; Electronic Signatures rule, goes further and requires audit trails as a mechanism for maintaining traceability in systems using electronic signatures. Traceability of data demonstrates that all changes to data are documented and that no undocumented changes occurred. Through traceability, every change is attributable to the individual (the individual's user account) or machine that made it, and every change is detectable. Where a replication or reproduction of a study has failed, or other questions arise about how the data were handled, traceability and the associated documentation are the only credible support. For this reason, traceability is a central concern of professional data managers in regulated industries and, increasingly, in scientific inquiry more broadly. The study reported here was primarily motivated by a perceived lack of emphasis on traceability, replicability and reproducibility in the data management and sharing plan requirements stated by research funders.
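To make the traceability requirement concrete, the following is a minimal Python sketch of an append-only audit trail, assuming a record is a simple dictionary of field values. Each change is logged with who made it, when and why before it is applied, so the originally captured (raw) values can be rebuilt from the current values and the current values regenerated from the raw ones. The class and field names are hypothetical, and the sketch is not a 21 CFR Part 11 compliant implementation; a real system would also protect the trail itself from alteration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

_MISSING = object()  # marks a field that did not exist before a change


@dataclass(frozen=True)
class AuditEntry:
    """One immutable audit-trail entry: what changed, old and new values, who, when, why."""
    timestamp: datetime
    user: str
    field_name: str
    old_value: object
    new_value: object
    reason: str


@dataclass
class AuditedRecord:
    """A record whose changes are made only through update(), which logs each one."""
    values: dict
    trail: list = field(default_factory=list)

    def update(self, field_name, new_value, user, reason):
        # Log before changing, so every change is attributable and detectable.
        old_value = self.values.get(field_name, _MISSING)
        self.trail.append(AuditEntry(datetime.now(timezone.utc), user,
                                     field_name, old_value, new_value, reason))
        self.values[field_name] = new_value

    def reconstruct_raw(self):
        """Undo logged changes newest-first to recover the values as first captured."""
        raw = dict(self.values)
        for entry in reversed(self.trail):
            if entry.old_value is _MISSING:
                raw.pop(entry.field_name, None)
            else:
                raw[entry.field_name] = entry.old_value
        return raw

    def replay(self, raw):
        """Re-apply logged changes oldest-first to regenerate the current values."""
        current = dict(raw)
        for entry in self.trail:
            current[entry.field_name] = entry.new_value
        return current


# Usage with made-up values: a transcription error is corrected, and the
# originally captured value remains recoverable from the trail.
record = AuditedRecord({"subject": "S-001", "systolic_bp": 1400})
record.update("systolic_bp", 140, user="jdoe", reason="transcription error")
print(record.reconstruct_raw())  # {'subject': 'S-001', 'systolic_bp': 1400}
```

The design choice here is that values are never overwritten silently: the only way to change a field is through a method that records the prior value, which is what makes the raw-to-analysis path reversible.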

2. Background

Plans for data management have been variously named and composed since their early uses. These plans are often written for a specific project and differ in the aspects of data management they address. Common variants include data management plans, data handling plans or protocols, research data security plans, data sharing plans, manuals of procedures, and manuals of operations. As defined by the Society for Clinical Data Management (SCDM), a data management plan comprehensively describes data from definition, collection and processing to final archival or disposal. Similarly comprehensive, the Data Management Association (DAMA) defines data management as “the business function that develops and executes plans, policies, practices and projects that acquire, control, protect, deliver and enhance the value of data and information.” [10] Other conceptualizations of data management and data management plans, however, focus more narrowly on data preservation and archival [11,12]. The Clearinghouse for the Open Research of the United States (CHORUS) and the Association of Research Libraries' Shared Access Research Ecosystem (SHARE) proposals, as well as extensions of PubMed Central and journal sites to facilitate sharing research data, exemplify an emphasis on management of data post-publication, i.e., curation, discoverability, and preservation for sharing.

The National Institutes of Health (NIH) and National Science Foundation (NSF) share a similar focus on post-publication data management. Since October 1, 2003, the NIH has required that any investigator submitting a grant application seeking direct costs of $500,000 or more in any single year include a plan to address data sharing in the application or state why data sharing is not possible [13]. More recently, since January 18, 2011, proposals submitted to the NSF have been required to include a Data Management Plan [14]. Continuing the focus on post-publication management of data, in February 2013 the White House Office of Science and Technology Policy (OSTP) issued a memorandum directing Federal agencies that provide significant research funding to develop plans to expand public access to research [15]. Among other requirements, the plans must “Ensure that all extramural researchers receiving Federal grants and contracts for scientific research and intramural researchers develop data management plans, as appropriate, describing how they will provide for long-term preservation of, and access to, scientific data in digital formats resulting from federally funded research, or explaining why long-term preservation and access cannot be justified” [15].

Likewise, the DataRes Project, a two-year study funded by the Institute of Museum and Library Services (IMLS), focused on the perceptions of library professionals regarding management of research data after publication or study completion. Its findings included a lack of academic preparation in research data management; a tendency of researchers to maximize the portion of grant funds expended on “research proper” rather than on tasks perceived as “outside the scope of research” or as “secondary support functions”; no established expectation for long-term data management by individual researchers or institutions; and a resulting lack of institutional mandates for managing research data effectively [12]. The DataRes project conceptualized data management as post-publication preservation and data sharing. For example, its categories for the stages of the data management process (data management plan development, data storage during research, data preservation after research, data access and sharing, and other) do not address data collection and processing, the two components of the data management process that almost completely determine data quality. Nevertheless, the study was robust in its mixed methods approach and in the breadth of knowledge sources consulted, and we find its findings applicable to these critical upstream aspects of data management.

Others share a broader perspective of data management that necessarily includes research observations, data collection and data processing. As early as 1967, the Greenberg Report brought attention to the need for monitoring and controlling performance in large multi-centre clinical studies [16]. The Greenberg Report was followed in 1995 by a special issue of Controlled Clinical Trials (now Clinical Trials) containing a collection of five review papers documenting practice at the time [17–22]. Organizations and individuals have implemented this broader conceptualization of data management plans to increase consistency in the performance of tasks, to supplement organizational standard operating procedures (SOPs) with documentation of study-specific processes, and to communicate processes to other functions or contractors [23–25]. In fact, the tasks associated with this broader conceptualization are included in the national Certified Clinical Data Manager (CCDM™) exam. With this research, we set out to identify the aspects of data management addressed by current funder requirements for data management plans, and the extent to which those requirements include upstream data management: actions that seek to affect the quality of research data, or that document how research observations were made and how research data were collected and processed, i.e., actions likely to affect the quality of the data analyzed, published or shared.

The narrower conceptualization of data management as handling of data post-publication originated in response to international and domestic governmental policy on increasing access to research results and resources generated with public funds, and it has the laudable goal of increasing public availability of research results and data. However, it misses important upstream data handling. Upstream data collection and handling processes largely determine data quality. Further, when a replication or reproduction fails, differences in the upstream operations performed on data should always be examined. As such, a focus on post-publication data management processes alone tells only part of the story.

In addition to the DataRes project published in 2013, four other reviews of data management or sharing plan content have been reported in the literature. In 2011, Jones, Bicarregui and Singleton synthesized data management planning documentation from Medical Research Council (MRC) funded studies, developed a data management planning template and guidelines for completing it, and piloted the brief template on three studies [26]. Also concerned with clinical studies, and similarly to the Society for Clinical Data Management, they define a data management plan as covering “the entire life-cycle of the project and the data it generates” [26]. Their synthesis was limited to MRC funded studies, and the template, by design, provides a high-level summary intended to accompany an application for research funding rather than comprehensive planning and documentation to support study conduct and associated traceability. The synthesis method and the decisions about what was ultimately included in the template were not described in the report. A second DMP-related review was reported in 2012 by Dietrich, Adamus, Miner and Steinhart [27]. It synthesized Data Management Plan requirements from the top ten US funding agencies among those funding research at Cornell University and thus was not limited to any particular industry or discipline. The authors classified each DMP requirements document according to seventeen criteria adapted from those previously reported by the Digital Curation Centre (DCC), which focus on data sharing, curation and preservation. In summary, they found that no single policy addressed all criteria and that data policies were missing a significant number of elements [27]. A third review, by Knight and similar to the DCC overview, was also reported in 2012 [28]. The Knight review included funding agencies supporting research projects at the London School of Hygiene and Tropical Medicine and was similarly focused on post-publication data management [28]. The fourth review, by Brandt et al. (2015), was limited to DMPs from several pharmaceutical and medical device companies and academic research institutions; it resulted in a guideline for writing a standard operating procedure for a DMP that contained recommended topics to be covered in a DMP [29].

Soon most grants funded by Federal agencies in the United States will require some form of data management plan. The DMPTool (https://dmptool.org), developed collaboratively to facilitate authoring Data Management Plans for a broad range of funders, has been adopted by more than 100 institutions. The DMPTool, however, appears largely focused on creating DMPs to accompany funding applications rather than a DMP that comprehensively specifies all operations to be performed on data for a study, serves as a reference and job aid throughout the study, and results in explanatory documentation to be archived with study data.

The large differences in the aspects of data management addressed in the aforementioned policies, requirements and studies demonstrate a need for a comprehensive approach to, and a common vocabulary for, data management planning, and for education and training on basic principles of data-related procedures for research and their relationship to data quality, traceability, replicability and reproducibility. We therefore undertook, and report here, a review of funder requirements for data management and data sharing plans (DMP/DSPs). The objective of the review was to examine funder requirements for data management or sharing plans more comprehensively, over the entire data lifecycle, including their component topics and the variability of topics across requirements. Our goal was to synthesize current practice and to inform more comprehensive coverage of, and support for, the data lifecycle in data management requirements and in data management plans themselves.

3. Materials and methods

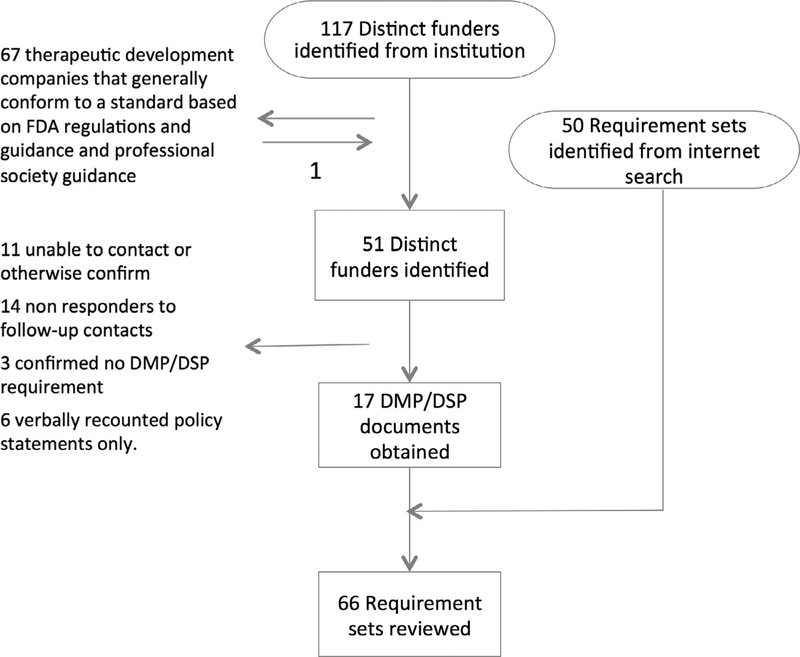

Data management or sharing plan requirements were identified via two mechanisms for this review. First, similar to Dietrich et al., we requested a list of organizations funding research at the lead author’s institution for the calendar years 2010–2014. This list contained 117 distinct funders providing over one million U.S. dollars in direct cost funding in one or more calendar years. We subsequently compared this list to the ten funders included in the Dietrich et al. review; the comparison added no new funders. We separated therapeutic development companies from this list because an industry-specific practice standard exists for data management planning in the therapeutic development industry. This practice standard, the Good Clinical Data Management Practices (GCDMP), is based on regulation and guidance and represents professional society consensus on the topic [24]. The GCDMP document was included and counted once as representative of all 67 therapeutic development companies identified in our organizational search, and no further information was sought from these organizations. We did this to prevent bias from the preponderance of therapeutic development industry funders relative to the low frequency of identified funders in other disciplines. In total, our organizational search contributed 51 organizations, which were subsequently contacted to ascertain whether they had DMP/DSP requirements and to obtain them where they existed. A total of seventeen requirements documents resulted from this first search strategy (Fig. 1).

Fig. 1.

Disposition and sources of identified research funders.

Our second source of research funders was internet searching. We conducted a web search to identify data management plan requirements from United States federal funding agencies such as NIH and NSF, as well as colloquia, foundations, societies and for- and not-for-profit organizations funding research. Collaborative efforts at data management or sharing plan guidelines or templates were also included if identified, but individual university policies were not included. The web-based search identified an additional 50 data management or sharing plan requirements documents (Fig. 1).

Where sub-organizations had different requirements statements (e.g., each NSF Directorate has a directorate-specific data management plan requirements statement), the separate documents were included and counted as separate funder documents. In total, sixty-six data management/sharing plan requirements documents were reviewed. Federal organizations comprised the largest proportion (47%), followed by industry (21%) and other organizations (18%), primarily foundations. Twelve percent of the organizations were consortia or federations of academic institutions, and two percent were collaborations involving institutions from more than one sector (Table 1).

Table 1.

Types of organizations with requirements for data management or data sharing.

| Organization type | Number (%) |
|---|---|
| Academicᵃ | 8 (12%) |
| Federal | 31 (47%) |
| Industry | 14 (21%) |
| Otherᵇ | 12 (18%) |
| Multiple | 1 (2%) |

ᵃ Academic organizations were primarily consortia or federations of academic organizations collaborating in some way on data management or sharing plan requirements.

ᵇ Organizations in the other category tended to be foundations.

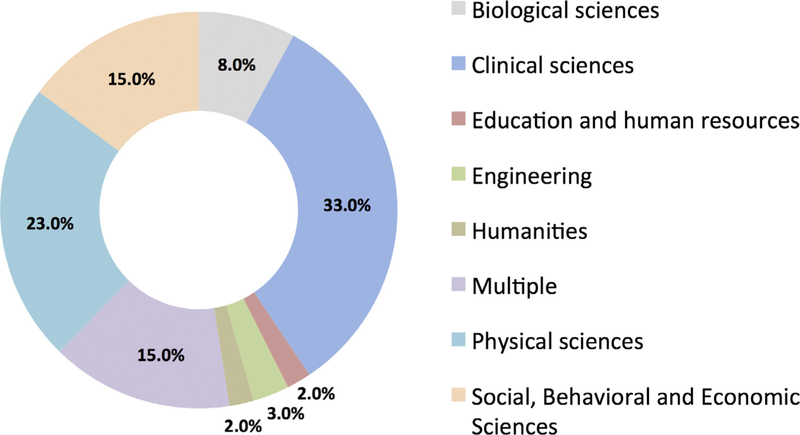

The sixty-six reviewed DMP/DSP requirements documents were also classified according to scientific discipline of the intended audience, e.g., physical sciences, biological sciences, clinical sciences (Fig. 2).

Fig. 2.

Research disciplines covered by the review. Fig. 2 shows the distribution across disciplines of the identified funders with data management or data sharing plan requirements.

Our approach to identifying funders with data management or sharing plan requirements was similar to that of Dietrich et al. in that we selected the top funders at an example institution; however, we diverged in coding approach. Dietrich et al. coded the DMP/DSP requirements with an existing set of seventeen codes focused on data curation, sharing, and preservation. We instead created a code for each mention of any data management or sharing related requirement in the included documents, purposefully not imposing external codes on the reviewed corpus. To let codes arise from the data, all source documents were loaded into NVivo qualitative analysis software in a shared project repository. The documents were then independently read and coded by two coders. Coding discrepancies were initially adjudicated by the coders, with ties broken by the senior author. For coding, every mention of a requirement or guideline related to the definition, observation, collection, processing, curation, storage, or archival of data, i.e., the broadest conceptualization of data management, was assigned a code indicating its purpose. As new codes were identified, they were defined and added to the code list (nodes in NVivo). After the first ten documents were coded, the codes were reviewed and codes were added for completeness to facilitate coding the remaining documents; for example, when the code for data accuracy was noted, popular co-occurring terms such as attributable, legible, contemporaneous, and original were added. Throughout the project, coding was reviewed; code categories were split where conflation of multiple concepts became evident, and merged where two separate codes were later found to contain similar content that should not be separated. Later coding discrepancies were reviewed by the two coders and resolved by consensus. A duplicate of one of the DMP requirements documents was randomly and blindly included and was thus subjected to the full coding and adjudication process twice. The intra-rater reliability (intra with respect to the same document having been subjected to the full coding process twice) was 82.4% for coding whether a topic was required versus suggested and 73% for coding how well described the requirement was.
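The reliability figures above are reported as percentages. Assuming they are simple percent agreement (the share of coding decisions that matched across the two passes over the duplicated document) rather than a chance-corrected statistic, the calculation can be sketched in Python as follows; the function name and the example codes are illustrative only and do not reproduce the study's data.

```python
def percent_agreement(first_pass, second_pass):
    """Percentage of items given the same code in two independent coding passes."""
    if len(first_pass) != len(second_pass) or not first_pass:
        raise ValueError("both passes must code the same non-empty list of items")
    matches = sum(a == b for a, b in zip(first_pass, second_pass))
    return 100.0 * matches / len(first_pass)


# Hypothetical required (R) / suggested (S) codes for the same five topics,
# assigned on the two passes through the duplicated document.
pass_1 = ["R", "R", "S", "R", "S"]
pass_2 = ["R", "S", "S", "R", "S"]
print(percent_agreement(pass_1, pass_2))  # 80.0
```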

4. Results

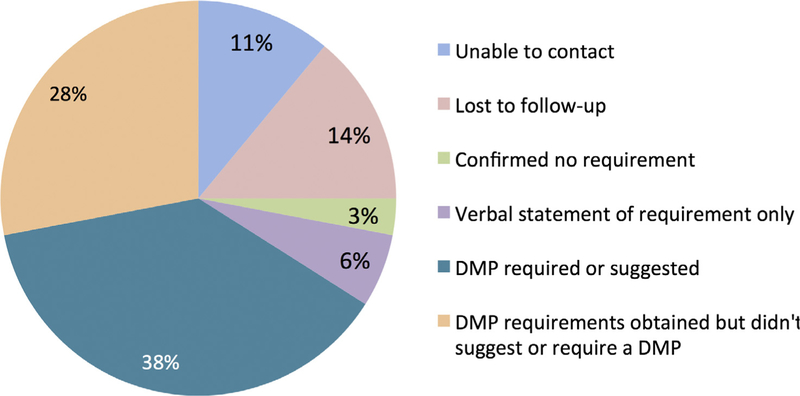

Of the 51 institutionally identified funders, we were able to establish contact with 40, for a total of ninety funders contacted (40 institutionally identified funders and 50 additional funders whose data management plan requirements were identified through internet searching). Of the 40 institutionally identified funding organizations with which we were able to establish contact, fourteen were unresponsive to two follow-up contacts, three stated that there was no data management or sharing plan requirement, and six verbally stated some requirement, mostly around making data or research products public, but had no written policy that could be shared (Fig. 3). Further, of the 66 organizations from which we received DMP requirements, thirty-eight (58%) either required or suggested a written DMP. Of the 89 organizations with which at least one contact was made, 43% either required or suggested a written DMP. Thus, formal or written DMPs are not required by a majority of identified or contacted research funders.

Fig. 3.

Disposition of the contacted research funders. Contacted research funders include those identified through the institutional database for sponsored research as well as those identified as having data management or sharing plan requirements through internet searches. The peach and teal areas denote the proportion of contacted funders from whom data management or sharing plan requirements were obtained – this area is further demarcated into those having data management or sharing plan requirements but not suggesting or requiring a written DMP (peach) versus those who explicitly suggested or required a written DMP in their requirements statement (teal).

The remainder of the analysis is based on the sixty-six received and reviewed data management or sharing plan requirements documents. Of these, thirty-eight (58%) either required or suggested a data management plan (DMP), twenty-nine (44%) required a DMP as part of the application, and eight (12%) required creation of a DMP after award. Five (8%) required a data management plan both before and after award. Ten included documents (15%) mentioned some sort of institutional collaboration on the data management plan, involving either the funder or collaborators. Four (6%) required such collaboration, while six (9%) suggested that it might occur. Likewise, four (6%) suggested some type of standardization of the data management plan; in all cases, the standardization was only somewhat described.

Fifty-three (80%) of the included documents suggested (8%) or required (73%) data sharing or a justification of why data sharing was not possible. Nine included documents (14%) mentioned a data sharing plan (eight [12%] requiring and one [2%] suggesting it), and nine (14%) mentioned a data sharing agreement (six [9%] requiring and three [5%] suggesting it), with one organization requiring both a data sharing plan and a data sharing agreement.

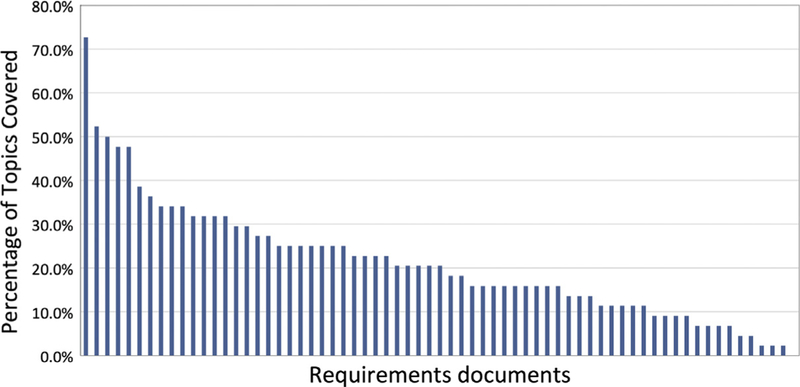

Forty-three distinct DMP topics were identified in the sixty-six reviewed data management or sharing plan requirements documents. Seven additional topics pertained to the DMP or DSP itself, for example, requiring a data sharing plan, requiring a data management plan, or requiring versioning of the DMP. The requirements document covering the most topics mentioned thirty-one (72%) of the identified topics. Fifty-two (79%) of the included requirements documents covered 30% or fewer of the 43 identified topics (Fig. 4), and all but one of the sixty-six reviewed documents covered less than 60% of them. These figures indicate incomplete and inconsistent coverage of important DMP topics by funder requirements documents.

Fig. 4.

Percent of topics covered by data management or sharing plan requirements documents. The data summarization in Fig. 4 shows the percentage of identified topics covered by each data management or sharing plan requirements document. The majority of requirements documents covered 30% or fewer of the identified data management plan topics.
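The per-document coverage summarized in Fig. 4 is the number of distinct topics a requirements document mentions divided by the 43 identified topics. A minimal Python sketch, assuming each document's codes have already been reduced to a set of topic labels (the labels T1 to T43, the function names and the example documents are hypothetical):

```python
TOTAL_TOPICS = 43  # distinct DMP topics identified in this review


def coverage_percent(topics_mentioned, total_topics=TOTAL_TOPICS):
    """Percentage of all identified topics that one requirements document mentions."""
    return 100.0 * len(set(topics_mentioned)) / total_topics


def summarize_coverage(doc_topics, threshold=30.0, total_topics=TOTAL_TOPICS):
    """Per-document coverage, plus the count of documents at or below the threshold."""
    coverage = {doc: coverage_percent(topics, total_topics)
                for doc, topics in doc_topics.items()}
    at_or_below = sum(1 for pct in coverage.values() if pct <= threshold)
    return coverage, at_or_below


# Hypothetical documents, with topics labelled T1..T43.
docs = {
    "doc_a": {f"T{i}" for i in range(1, 32)},  # 31 topics
    "doc_b": {f"T{i}" for i in range(1, 11)},  # 10 topics
    "doc_c": {f"T{i}" for i in range(1, 14)},  # 13 topics
}
coverage, at_or_below = summarize_coverage(docs)
print(round(coverage["doc_a"]), at_or_below)  # 72 1
```

With 31 of 43 topics, the first hypothetical document reproduces the 72% maximum reported above; the comparison uses "at or below" to match the "30% or fewer" wording, so a document with 13 topics (about 30.2%) is not counted.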

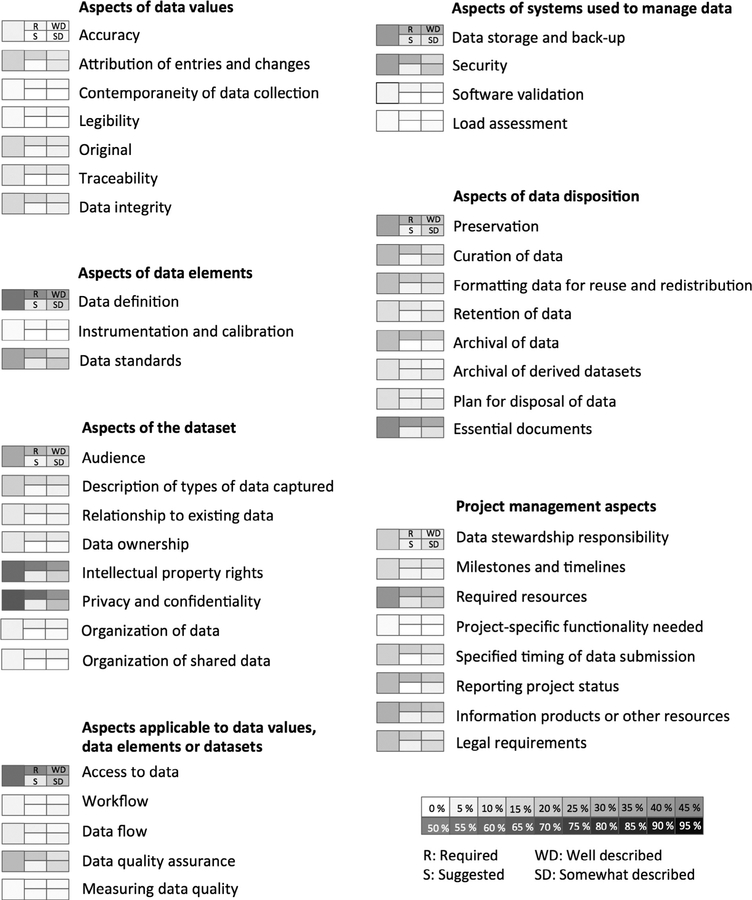

Fig. 5 lists the forty-three DMP topics identified in the reviewed DMP requirements documents, grouped by subject into seven broad categories. The first four categories (left-hand side of Fig. 5) span topics relevant to data values, topics relevant to data elements, topics relevant to whole datasets, and topics that could be addressed at one or more of these levels of abstraction. The last three categories (right-hand side of Fig. 5) cover aspects of the computer systems used to store and manage data, aspects of data disposition, and aspects of how the data management work itself is managed.

Fig. 5.

Coverage for each of the forty-three identified data management plan topics. R – explicitly stated as required, S – suggested but not explicitly stated as required, WD – well described such that a mid-career data manager could implement the requirement with little or no additional clarification, SD – somewhat described such that a mid-career data manager would require additional clarification to implement the requirement.

The graphic next to each identified topic (Fig. 5) represents the overall coverage of the topic by the reviewed DMP requirements documents (larger leftmost square), the extent to which the included documents required (R) or suggested (S) the topic (middle set of rectangles), and the extent of description (WD for well described and SD for somewhat described; rightmost set of rectangles). Darker shading indicates higher coverage: black would indicate a topic covered by all included documents (which did not occur), and white a topic mentioned by none. The coverage frequency (Fig. 4) shows that fifty-two (79%) of the included documents covered 30% or fewer of the forty-three identified topics. Fig. 5 shows which topics were addressed more frequently, which tended to be required versus suggested, and, of those, which tended to be well versus only somewhat described. For example, software testing, description of instrumentation and instrumentation calibration, measurement of data quality, and organization of data were among the least well covered topics, each mentioned by 10% or fewer of the data management and sharing plan requirements documents. The standard for the therapeutic development industry, the Good Clinical Data Management Practices document, covered 72% of the identified topics. Although this was the highest coverage observed, 72% is far from comprehensive.
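The leftmost square and the R/S rectangles in Fig. 5 are per-topic percentages across all reviewed documents. Assuming each document's codes are stored as a mapping from topic to its status (R for required, S for suggested), these percentages could be tallied as in the following Python sketch; the function name, topic names and documents are made up for illustration, and the WD/SD description levels could be tallied the same way.

```python
from collections import Counter


def topic_coverage(doc_codes, all_topics):
    """Per-topic percentages of documents that mention, require (R) or suggest (S) it.

    doc_codes maps each document to {topic: "R" or "S"} for the topics it mentions.
    Topics in all_topics that no document mentions get 0% everywhere.
    """
    n_docs = len(doc_codes)
    counts = {topic: Counter() for topic in all_topics}
    for codes in doc_codes.values():
        for topic, status in codes.items():
            counts[topic][status] += 1
    return {
        topic: {
            "covered": 100.0 * (c["R"] + c["S"]) / n_docs,
            "required": 100.0 * c["R"] / n_docs,
            "suggested": 100.0 * c["S"] / n_docs,
        }
        for topic, c in counts.items()
    }


# Hypothetical codes for four documents and three topics.
topics = ["data sharing", "software testing", "instrument calibration"]
docs = {
    "doc1": {"data sharing": "R", "software testing": "S"},
    "doc2": {"data sharing": "R"},
    "doc3": {},
    "doc4": {"data sharing": "S"},
}
summary = topic_coverage(docs, topics)
print(summary["data sharing"])
# {'covered': 75.0, 'required': 50.0, 'suggested': 25.0}
```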

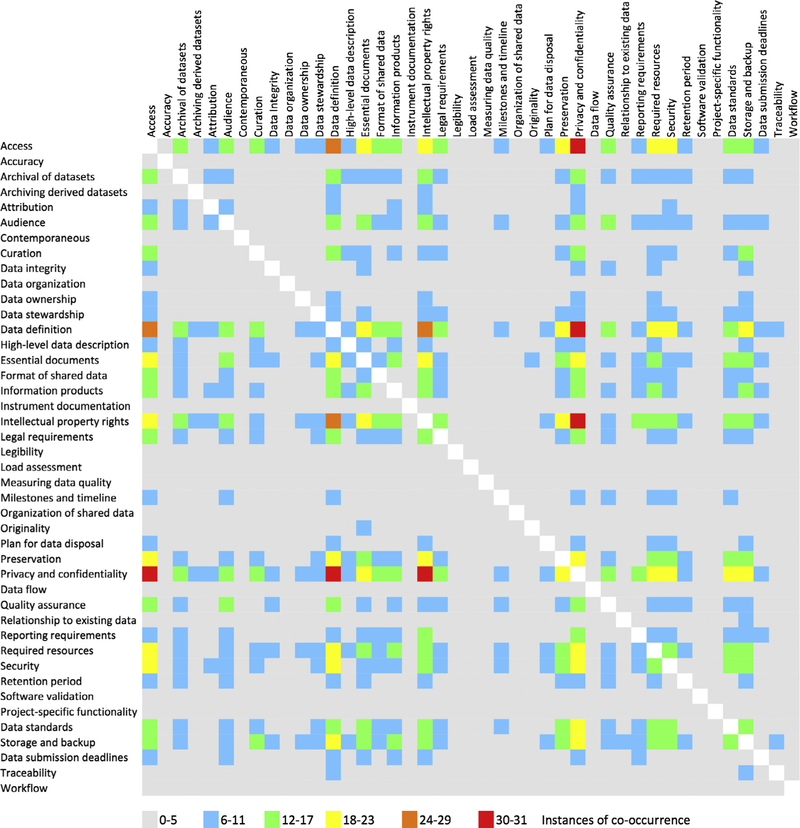

Lastly, we generated a co-occurrence matrix (Fig. 6) that tallies the number of times each DMP topic was mentioned in the same DMP requirements document as every other DMP topic, to see whether some topics tended to occur together. Of particular note, the DMP topics associated with post-publication management of data tended to co-occur at high frequency and to have higher coverage in general. Topics applying to upstream data collection and processing tasks, however, had lower coverage in general.

Fig. 6.

Co-occurrence of the forty-three identified data management topics.
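A co-occurrence matrix like the one in Fig. 6 can be built by counting, for every pair of topics, the documents that mention both. The following Python sketch assumes each document has been reduced to a set of topic labels; the function name, topics and documents are hypothetical, and placing per-topic document counts on the diagonal is a convention chosen here, since what the diagonal of Fig. 6 shows is not stated.

```python
from itertools import combinations


def cooccurrence_matrix(doc_topics, topics):
    """Symmetric matrix: cell [i][j] counts documents mentioning both topics i and j.

    The diagonal holds the number of documents mentioning each topic.
    """
    index = {topic: i for i, topic in enumerate(topics)}
    matrix = [[0] * len(topics) for _ in topics]
    for mentioned in doc_topics.values():
        present = sorted({index[t] for t in mentioned})
        for i in present:
            matrix[i][i] += 1
        for i, j in combinations(present, 2):  # each unordered pair once per document
            matrix[i][j] += 1
            matrix[j][i] += 1
    return matrix


topics = ["data sharing", "preservation", "instrument calibration"]
docs = {
    "d1": {"data sharing", "preservation"},
    "d2": {"data sharing", "preservation"},
    "d3": {"data sharing", "instrument calibration"},
}
m = cooccurrence_matrix(docs, topics)
print(m)  # [[3, 2, 1], [2, 2, 0], [1, 0, 1]]
```

In this toy example the two post-publication topics co-occur in two of three documents, the kind of pattern the paragraph above describes.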

5. Discussion

Thirty-eight (58%) of the organizations from which we received DMP requirements either required or suggested a written DMP. This is 42% of the contacted funders and 37% of the identified funders. Thus, outside the therapeutic development industry, written DMPs are not required by a majority of research funders. Yet data sharing plans, or a justification of why data sharing was not possible, were required or suggested by 80% of the funders from which we received DMP requirements, highlighting the disparity in emphasis between pre- and post-publication management of data.

That twenty-nine funders required a DMP prior to award suggests acknowledgement that data management planning is important to the credibility of a research proposal or to the intended results. However, the low number (eight) requiring a DMP after award is particularly disheartening. The data management plan necessarily changes throughout the planning and conduct phases of a study: changes to procedures, and the handling of exceptions to procedures, accumulate in the data management plan documentation throughout the life of a study. After study completion, this documentation supports the conclusions by providing a complete explanation of how data were collected and processed, as well as by providing traceability. Failing to maintain the data management plan documentation through the active phase of a study risks leaving research results without supporting documentation; thus, maintenance of the data management plan after award should be an important consideration for research funders. Treated as a few-page summary of data curation, sharing, and preservation plans for a grant application, a DMP is merely a few extra speculative pages that an investigator must add to an application. When instead conceptualized and operationalized as comprehensive documentation of the data lifecycle for a study, a data management plan is a powerful tool and an integral component of the data management quality system. Best practice would be for research funders to require maintenance of the data management plan after award and during the active phase of a study.

We offer several possible reasons for the lack of requirements for DMPs after award. First, most doctoral programs do not include training in data management; thus, scientists in leadership positions at funding institutions may not be aware of the DMP as a tool for data-related quality assurance and control. Second, the differences in language and in the scope of data management tasks between the library science community and the therapeutic development and information technology communities indicate that data management terminology is not consistent across disciplines, which may also play a role. For example, while some see DMPs as the mechanism for data-related quality assurance and control, others may not conceptually group the tasks associated with data collection, processing and storage as data management, and may refer to or provide for them in some other way.

The low coverage of identified DMP topics, with 79% of the included documents covering 30% or fewer of the identified topics, further demonstrates the lack of consistency in DMP requirements. Further, tasks initiated prior to, or associated with, upstream data collection and processing received the lowest coverage compared with topics pertaining to post-publication data preservation, curation or sharing. The lower emphasis on these topics in data management planning requirements suggests that upstream tasks do not command the attention of research funders. If this is the case, the situation should be rectified. Providing for data sharing while neglecting upstream data collection and processing is somewhat akin to the well pump in the London cholera outbreak of 1854: the means of distribution worked, but what it distributed was contaminated. While specifying the information to be shared with data certainly aids reproducibility and replication, when questions arise, documentation of how data were collected and processed, and the traceability of the data themselves, greatly aid the investigator [31].

Perhaps this lack of consistency in the DMP topics covered is due to the absence of an accepted list of topics that a DMP should cover. Our searching did not identify any frameworks or standards for DMP contents outside of the therapeutic development industry. We therefore offer a list of topics for data management plans (Table 2). The list is based inductively on the DMP topics identified here and deductively on the documentation required for traceability of data in research contexts. In research contexts, data are observed, elicited by asking, or measured; they are then processed in some way, transferred from one system or location to another, and often integrated with other data for the same study. We view the topics in Table 2 as necessary for traceability and re-use of data from studies. Further, we recommend the DMP as a tool for assuring and controlling data quality on studies. As a planning tool, the DMP communicates the planned data flow and workflow, the procedures for all operations performed on data, and details of the software used to manage data. As such, the DMP specifies the artifacts that evidence adherence to data processing procedures. These artifacts can be inspected as part of an audit or otherwise used to understand the operations that occurred. Finally, the DMP itself and the artifacts it specifies are what fully document the data, and they should be archived with the data. The list in Table 2 will aid researchers in comprehensively and systematically addressing the management of research data.
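The traceability argument can be illustrated with a minimal audit-trail record: each operation performed on a data value (recording, transcription, conversion, integration) is logged with who performed it, when, under which documented procedure, and the values before and after, so that any value can be traced back to its origin. This is only a sketch under assumptions: the `AuditEntry` fields, the `trace` and `chain_is_unbroken` helpers, and the procedure identifiers such as `SOP-DC-01` are hypothetical illustrations, not a structure prescribed by this paper or by any funder.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class AuditEntry:
    """One operation performed on one data value (hypothetical record layout)."""
    data_element: str   # which data element was affected
    operation: str      # e.g. "record", "transcribe", "convert", "integrate"
    procedure_id: str   # hypothetical pointer to the documented procedure
    performed_by: str   # person or system responsible for the operation
    timestamp: datetime
    old_value: Any      # value before the operation (None at the point of origin)
    new_value: Any      # value after the operation


def trace(log: list[AuditEntry], data_element: str) -> list[AuditEntry]:
    """Return every logged operation on one data element, oldest first."""
    return sorted((e for e in log if e.data_element == data_element),
                  key=lambda e: e.timestamp)


def chain_is_unbroken(history: list[AuditEntry]) -> bool:
    """True if every operation starts from the value the previous operation produced."""
    return all(prev.new_value == cur.old_value for prev, cur in zip(history, history[1:]))


# Hypothetical example: a body weight recorded in pounds, later converted to kilograms.
t0 = datetime(2016, 3, 1, 9, 0, tzinfo=timezone.utc)
log = [
    AuditEntry("weight", "convert_lb_to_kg", "SOP-DP-02", "etl_job",
               t0.replace(hour=11), 154, 69.9),
    AuditEntry("weight", "record", "SOP-DC-01", "coordinator_1", t0, None, 154),
]

history = trace(log, "weight")
for e in history:
    print(e.timestamp.isoformat(), e.operation, e.procedure_id, e.old_value, "->", e.new_value)
print("unbroken chain:", chain_is_unbroken(history))
```

Sorting by timestamp recovers the order of operations even when entries are logged out of order, and the chain check flags any step whose input does not match the previous step's output, which is the kind of gap an auditor reconstructing the factual record would need to explain.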

Table 2.

Data management plan framework.

| DMP component | Rationale for inclusion |
|---|---|
| Project personnel, the duration of their association with the project, their roles, responsibilities, data access, training, other qualifications and identifiers | |
| Description of all data sources | Documents and describes the origin of the data. |
| Data and workflow diagrams | Graphically depicts at a high level the data sources, operations performed on the data and the path taken by the data through information systems and operations. |
| Definition of data elements; planned data model during data collection and processing; planned data model for data sharing | Provides unambiguous definition of the data and associates the definition with data values and location of data values in data models used for the study. |
| Procedures for or pointers to all operations performed on data: observation and measurement; data recording; data processing; data integration; data quality control definitions and acceptance criteria | Describes operations through which data should be traceable. Provides algorithms needed for traceability. |
| Description of software and devices used for data, including: calibration plans; configuration specifications for the project; validation or testing plans; scheduled maintenance; plan for handling unscheduled maintenance; change control plans; security plan; data back-up and schedule | |
| Privacy and confidentiality plan | Documents steps taken to comply with privacy and confidentiality requirements and facilitates identification of mismatches between such requirements and project plans. |
| Project management plan (deliverables, timeline, tracking and reporting plan, and resource estimates) | |
| Data retention, archival, and disposal plan; data sharing plan | |

While reviewing data management and sharing plan requirements across multiple disciplines inevitably loses discipline-specific detail, doing so allows generalization of the common principles and methods required to provide traceability and documentation for research data in any discipline. The latter is our focus here. As the results showed, none of the discipline-specific requirements comprehensively covered the aspects necessary for traceability. Thus, a general guideline for the topics to be addressed in data management and sharing plans, such as Table 2, is likely to promote more comprehensive data management and sharing documentation across disciplines.

Is adherence to the documentation suggested in Table 2 achievable for the average investigator? Because the level of documentation in Table 2 is routinely maintained throughout regulated clinical trials intended for submission to the United States Food and Drug Administration, some of which are conducted in academia, it is certainly possible. However, industry-funded clinical studies explicitly budget for data collection and management. In grant- and foundation-funded studies, it is up to the investigator to apportion an appropriate part of the budget to data-related tasks, including their documentation. Keralis et al. found that researchers seek to maximize the portion of grant funds expended on “research proper” rather than on tasks perceived as “secondary support functions” such as data management [12]. Thus, to some, documentation tasks compete directly with research aims rather than being viewed as critical to achieving them. Without funder requirements for allocating resources to these tasks, relegation to secondary status is likely. Keralis et al. also identified a lack of academic preparation in research data management among surveyed investigators; without such training, these perceptions will likely persist. Lastly, in academic institutions the autonomy of the investigator is highly regarded, and institutions rarely have cross-cutting procedures in place for data management and related documentation. Investigators who pursue comprehensive data management documentation therefore write procedures from scratch rather than referencing existing procedures, as is commonly done in industry-funded research, and so must make a greater investment. Lessening these challenges will require better data-related training for investigators, supporting institutional infrastructure, and support from funders in the form of resources for data-related documentation.
Thus, while we hope to prompt funders and researchers toward systematic and comprehensive data management plans and related documentation, such a move in the absence of the aforementioned support will increase the burden on researchers.

6. Limitations

This work was exploratory in nature. It is possible that the funders identified through our institution and through web searching are not representative of all funders. However, our methods identified the funders from the Dietrich paper [27] and expanded the set from ten to sixty-six. Because the therapeutic development industry is regulated with respect to data management (e.g., traceability is required), we treated all funders from the therapeutic development sector as homogeneous with respect to DMP requirements. Further, because so many therapeutic development funders were identified, to avoid skewing the results we reviewed the industry standard and counted it once, as one funder. Thus, the results, while a fair representation of funder practices across sectors, do not represent the actual distribution of DMP requirements or the likelihood that any one project will require a DMP. The methods reported here are qualitative in nature; although double independent coding was used to identify DMP topics, the results of the single inter-rater reliability case suggest that different coders may arrive at different topic frequencies.
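The paper does not state which agreement statistic was used in its inter-rater reliability case. As one common choice for two coders making present/absent judgments, Cohen's kappa corrects raw agreement for the agreement expected by chance, κ = (p_o − p_e) / (1 − p_e). The sketch below is an illustration under assumptions: each coder's output is taken to be a 0/1 vector over the topics for one document, and the example vectors are hypothetical.

```python
def cohens_kappa(coder_a: list[int], coder_b: list[int]) -> float:
    """Cohen's kappa for two coders' categorical judgments on the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("codings must be non-empty and of equal length")
    n = len(coder_a)
    # Observed agreement: share of items on which the coders gave the same code.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal proportions, summed over codes.
    categories = set(coder_a) | set(coder_b)
    p_expected = sum((coder_a.count(c) / n) * (coder_b.count(c) / n) for c in categories)
    if p_expected == 1.0:
        # Both coders used one identical code for every item; kappa is undefined,
        # so this sketch reports perfect agreement by convention.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical presence (1) / absence (0) codes for ten topics in one document.
coder_a = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
coder_b = [1, 0, 0, 0, 1, 0, 0, 1, 1, 0]
print(f"kappa = {cohens_kappa(coder_a, coder_b):.2f}")
```

For these vectors the coders agree on 8 of 10 topics (p_o = 0.80), chance agreement is 0.4 × 0.4 + 0.6 × 0.6 = 0.52, and the script prints `kappa = 0.58`, showing how moderately high raw agreement can still leave room for coders to differ in the topic frequencies they report.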

7. Conclusions

The low percentage of funders requiring DMPs relative to those requiring data sharing plans indicates a greater emphasis on sharing and reuse of data than on data collection and processing. This disproportionate emphasis should be reexamined, and equal weight should be given to the upstream processes that determine data quality and support research results. The least-required items in a data management plan were aspects of data collection and processing. This is unfortunate, because data collection, including the original observation, and data processing are not only major determinants of data quality but also the determinants for which the opportunity to intervene is lost as time passes [30]. The significant variability in expectations for the contents of data management and sharing plans suggests a lack of knowledge of, and guidance on, the topics that DMPs should cover. As such, we synthesized a set of topics that data management plans should cover, i.e., those that would meet the requirements of any of the funders whose requirements were reviewed and those that provide for traceability and support the data-related aspects of reproducibility and replication.

Acknowledgements

This work was partially supported through Institutional Commitment from Duke University to grant R00-LM011128 from the National Institutes of Health (NIH) National Library of Medicine (NLM). The work and opinions presented here are those of the authors and do not necessarily represent the NIH.

Appendix A

List of Organizations and Requirements Reviewed

Source 1

Entity: National Science and Technology Council’s Committee on Science formed the Interagency Working Group on Digital Data, USA

Title: Harnessing the power of digital data for science and society

Year: 2009

Source 2

Entity: Biotechnology and Biological Sciences Research Council (BBSRC), UK

Title: BBSRC Data Protection Policy

Year: 2012

Source 3

Entity: Medical Research Council, UK

Title: MRC Policy and Guidance on Sharing of Research Data from Population and Patient Studies, v01–00

Year: 2011

Source 4

Entity: Engineering and Physical Sciences Research Council (EPSRC), UK

Title: Clarifications of EPSRC expectations on research data management

Year: 2014

Source 5

Entity: Natural Environment Research Council (NERC), UK

Title: NERC Data Policy

Year: no date, Reviewed document on website as of December 2016

Source 6

Entity: Science and Technology Facilities Council (STFC), UK

Title: STFC Scientific Data Policy

Year: no date, Reviewed document on website as of December 2016

Source 7

Entity: Wellcome Trust

Title: Guidance for researchers: Developing a data management and sharing plan

Year: no date, Reviewed document accessed July 2, 2015

Source 8

Entity: Mayo Clinic Biobank Access Committee

Title: Individualized Medicine Mayo Clinic

Year: no date, Accessed July 9, 2015

Source 9

Entity: Agency for Healthcare Research and Quality (AHRQ)

Title: AHRQ Public Access to Federally Funded Research

Year: no date, Accessed July 20, 2015

Source 10

Entity: Bill and Melinda Gates Foundation

Title: Evaluation Policy

Year: 2015

Source 11

Entity: Andrew W. Mellon Foundation

Title: Intellectual Property Policy

Year: 2011

Source 12

Entity: The Alliance

Title: Guidelines for availability of data sets, Policy number 15.1; Request procedures, Policy 15.2; Regulatory considerations, Policy 15.3; Genomic data sharing, Policy 15.4; Release conditions and disclaimer, Policy 15.5; Appeals process, Policy 15.6; Fees, Policy 15.7

Year: 2013

Source 13

Entity: Susan G. Komen Research Programs

Title: Policies and procedures for research and training grants

Year: 2015

Source 14

Entity: Patient Centered Outcomes Research Institute (PCORI)

Title: Research plan template: improving methods

Year: 2014

Source 15

Entity: CHDI Foundation (Huntington’s disease)

Title: Data, reagents, and biomaterials sharing policy

Year: no date, accessed October 19, 2015

Source 16

Entity: Gordon and Betty Moore Foundation

Title: Data sharing philosophy

Year: 2008

Source 17

Entity: Bristol-Myers Squibb (see Footnotes)

Title: Clinical Trials Disclosure Commitment

Year: 2015

Source 18

Entity: Fred Hutchinson Foundation

Title: Openness in research

Year: no date, Accessed August 17, 2015

Source 19

Entity: U.S. and European pharmaceutical trade associations, PhRMA and EFPIA

Title: Principles for Responsible Clinical Trial Data Sharing (Principles)

Year: 2013

Source 20

Entity: Henry M. Jackson Foundation for the Advancement of Military Medicine

Title: Code of Ethics

Year: 2012

Source 21

Entity: 3ie

Title: 3ie Impact Evaluation Practice: a guide for grantees

Year: no date, Accessed August 18, 2015

Source 22

Entity: U.S. Army Medical Research Acquisition Activity (USAMRAA)

Title: Research terms and conditions agency specific requirements

Year: 2011

Source 23

Entity: Department of Defense, USA

Title: DoD Grant and Agreement Regulations, DoD 3210.6-R; Part 32

Year: 1998

Source 24

Entity: Department of Homeland Security (DHS)

Title: Healthcare and public health sector-specific plan an annex to the national infrastructure protection plan

Year: 2010

Source 25

Entity: Inter-university Consortium for Political and Social Research (ICPSR)

Title: ICPSR Guidelines for effective data management plans

Year: no date, Accessed April 22, 2015

Source 26

Entity: National Science Foundation (NSF) Directorate for Biological Sciences

Title: UPDATED Information about the Data Management Plan Required for all Proposals (2/20/13)

Year: 2013

Source 27

Entity: National Science Foundation (NSF) Directorate for Computer & Information Science & Engineering (CISE)

Title: Data management guidance for proposals and awards

Year: 2011

Source 28

Entity: National Science Foundation (NSF) Directorate for Education & Human Resources

Title: Data Management for NSF EHR Directorate Proposals and Awards

Year: 2011

Source 29

Entity: National Science Foundation (NSF) Engineering Directorate

Title: Data Management for NSF Engineering Directorate Proposals and Awards

Year: no date, Accessed April 23, 2015

Source 30

Entity: National Science Foundation (NSF) Directorate of Mathematical and Physical Sciences Division of Astronomical Sciences (AST)

Title: Advice to PIs on Data Management Plans

Year: no date, Accessed April 23, 2015

Source 31

Entity: National Science Foundation (NSF) Directorate of Mathematical and Physical Sciences Division of Chemistry (CHE)

Title: Advice to PIs on Data Management Plans

Year: no date, Accessed April 23, 2015

Source 32

Entity: National Science Foundation (NSF) Directorate of Mathematical and Physical Sciences Division of Materials Research (DMR)

Title: Advice to PIs on Data Management Plans

Year: no date, Accessed April 23, 2015

Source 33

Entity: National Science Foundation (NSF) Directorate of Mathematical and Physical Sciences Division of Mathematical Sciences (DMS)

Title: Advice to PIs on Data Management Plans

Year: no date, Accessed April 23, 2015

Source 34

Entity: National Science Foundation (NSF) Directorate of Mathematical and Physical Sciences Division of Physics (PHY)

Title: Advice to PIs on Data Management Plans

Year: no date, Accessed April 23, 2015

Source 35

Entity: National Science Foundation (NSF) Directorate of Social, Behavioral & Economic Sciences (SBE)

Title: Data management for NSF SBE directorate proposals and awards

Year: 2010

Source 36

Entity: United States Geological Survey (USGS)

Title: USGS data management – data management plans

Year: 2015

Source 37

Entity: National Aeronautics and Space Administration (NASA)

Title: Guidelines for Development of a Data Management Plan (DMP) Earth Science Division NASA Science Mission Directorate

Year: 2011

Source 38

Entity: National Institutes of Health (NIH)

Title: NIH data sharing policy and implementation guidance

Year: 2003

Source 39

Entity: World Agroforestry Centre

Title: Research data management training manual

Year: 2012

Source 40

Entity: Course developed for the Office of Research Integrity (ORI) US Department of Health and Human Services

Title: Guidelines for responsible data management in scientific research

Year: 2006

Source 41

Entity: DMP Tool Partnership

Title: Data Management general guidance

Year: Reviewed document current as of December 12, 2016

Source 42

Entity: Inter-university Consortium for Political and Social Research (ICPSR)

Title: Guide to social science data preparation and archiving: best practice throughout the data life cycle (5th ed.)

Year: 2012

Source 43

Entity: Department of Energy (DOE) Biological and Environmental Research (BER) Climate and Environmental Sciences Division (CESD)

Title: Data management

Year: no date, Accessed April 20, 2015

Source 44

Entity: Society for Clinical Data Management (SCDM)

Title: Good Clinical Data Management Practices

Year: 2013

Source 45

Entity: Digital Curation Centre (DCC)

Title: How to Develop a Data Management and Sharing Plan

Year: 2011

Source 46

Entity: National Oceanic and Atmospheric Administration (NOAA)

Title: Community Best Practices for Dissemination and Sharing of Research Results

Year: no date, Accessed April 23, 2015

Source 47

Entity: National Oceanic and Atmospheric Administration (NOAA)

Title: NOAA data management planning procedural directive version 1.0

Year: 2011

Source 48

Entity: National Endowment for the Humanities (NEH) Office of Digital Humanities

Title: Data Management Plans for NEH Office of Digital Humanities Proposals and Awards

Year: no date, Accessed April 20, 2015

Source 49

Entity: United States Department of Health and Human Services Office of Research Integrity

Title: Responsible conduct in data management

Year: no date, Accessed April 23, 2015

Source 50

Entity: Environmental Protection Agency (EPA)

Title: Open government data quality plan

Year: 2010

Source 51

Entity: Australian National Data Service (ANDS)

Title: Data management planning

Year: 2011

Source 52

Entity: European Commission, EU Framework Programme for Research and Innovation, Horizon 2020

Title: Guides in data management, horizon 2020

Year: 2013

Source 53

Entity: National Institutes of Health (NIH), National Institute of Mental Health

Title: Research Domain Criteria Initiative (RDoC) Database (RDoCdb) Data Sharing Plan

Year: 2014

Source 54

Entity: Economic and Social Research Council, UK

Title: ESRC research data policy

Year: 2015

Source 55

Entity: National Institutes of Health (NIH)

Title: National institutes of health plan for increasing access to scientific publications and digital scientific data from NIH funded scientific research

Year: 2015

Source 56

Entity: Arts and Humanities Research Council

Title: Research funding guide

Year: 2015

Source 57

Entity: California Digital Library (CDL)

Title: Primer on data management: what you always wanted to know

Year: 2012

Source 58

Entity: Online Computer Library Center (OCLC)

Title: Starting the conversation: university-wide research data management policy

Year: 2013

Source 59

Entity: Association of Southeastern Research Libraries (ASERL) / Southeastern Universities Research Association (SURA)

Title: Model language for research data management policies

Year: no date, Accessed April 20, 2015

Source 60

Entity: Council on Library and Information Resources (CLIR)

Title: Data management plans, collaborative research governance, and the consent to share

Year: 2014

Source 61

Entity: US Army Research Office

Title: General terms and conditions for cooperative agreement awards to educational institutions and other nonprofit organizations

Year: 2009

Source 62

Entity: Engineering and Physical Sciences Research Council (EPSRC)

Title: Clarifications of EPSRC expectations on research data management

Year: 2014

Source 63

Entity: Department of Homeland Security (DHS)

Title: Information sharing strategy

Year: 2008

Source 64

Entity: University of Reading Statistical Services Centre

Title: Data management guidelines for experimental projects

Year: 2000

Source 65

Entity: Research Councils UK (RCUK)

Title: Response to the royal society’s study into science as a public enterprise

Year: 2011

Source 66

Entity: U.S. Agency for International Development

Title: Freedom of Information Act (FOIA) Regulations Part 212 – Public Information

Year: 2012, Accessed August 20, 2015

Footnotes

Conflict of Interest statement

The authors report no conflicts, financial or otherwise.

Included even though it is a therapeutic development company, because the document covered topics outside the scope of the current version of the industry practice standard, Good Clinical Data Management Practices.

While Title 42 CFR 93 does not specifically call for traceability of all operations performed on data, all institutions receiving Public Health Service (PHS) funding are required to have written policies and procedures for addressing allegations of research misconduct. In misconduct investigations, traceability of data from its origin through all operations performed on the data to the analysis is necessary for developing the factual record.

References

- [1]. Young S, Karr A, Deming, data and observational studies: a process out of control and needing fixing. Significance (September) (2011) 116–120.

- [2]. Bissell M, The risks of the replication drive, Nature 503 (21) (2013) 333–334.

- [3]. Young SS, Miller HI, Are medical articles true on health, disease? Sadly, not as often as you might think, Genet. Eng. Biotechnol. News 34 (9) (2014). May 1.

- [4]. Freedman LP, Cockburn IM, Simcoe TS, The economics of reproducibility in preclinical research, PLoS Biol 13 (6) (2015) e1002165.

- [5]. Plant A, Parker GC, Translating stem cell research from the bench to the clinic: a need for better quality data, Stem Cells Develop. 22 (18) (2013).

- [6]. Hayden EC, The automated lab, Nature 516 (2014) 131–132.

- [7]. Peng RD, Reproducible research and biostatistics, Biostatistics 10 (3) (2009) 405–408.

- [8]. Division of Health Sciences Policy, Institute of Medicine, Assuring Data Quality and Validity in Clinical Trials for Regulatory Decision Making: Workshop Report: Roundtable on Research and Development of Drugs, Biologics, and Medical Devices, National Academy Press, Washington, DC, 1999.

- [9]. Woollen SW, Data quality and the origin of ALCOA, The Compass – Summer 2010. Newsletter of the Southern Regional Chapter Society of Quality Assurance, 2010 <http://www.southernsqa.org/newsletters/Summer10.DataQuality.pdf> (accessed November 17, 2015).

- [10]. Mosley M (Ed.), Data Management Association of America (DAMA), The DAMA Dictionary of Data Management, first ed., Technics Publications LLC, New Jersey, 2008.

- [11]. Van den Eynden V, Corti L, Woollard M, Bishop L, Horton L, Managing and Sharing Data The UK Data Archive, third ed., University of Essex, Wivenhoe Park, Colchester Essex, 2011.

- [12]. Keralis SDC, Stark S, Halbert M, Moen WE, Research data management in policy and practice: the DATARES project, in: Research data management principles, practices, and prospects, Council on Library and Information Resources (CLIR), CLIR Publication No. 160, Washington DC, November 2013.

- [13]. NIH Data Sharing Policy and Implementation Guidance (Updated: March 5, 2003) <http://grants.nih.gov/grants/policy/data_sharing/data_sharing_guidance.htm>.

- [14]. National Science Foundation (NSF), NSF Grant Proposal Guide, Chapter 11.C.2.j. NSF 11–1 January 2011 <http://www.nsf.gov/pubs/policydocs/pappguide/nsf11001/gpg_index.jsp> (accessed June 30, 2015).

- [15]. Holdren JP, Office of Science and Technology Policy (OSTP), Memorandum for the heads of executive departments and agencies, February 22, 2013 <https://www.whitehouse.gov/sites/default/files/microsites/ostp/ostp_public_access_memo_2013.pdf> (accessed July 7, 2016).

- [16]. No author, Organization, review, and administration of cooperative studies (Greenberg Report): a report from the Heart Special Project Committee to the National Advisory Heart Council, May 1967. Control Clin Trials 9(2) (1988) 137–148.

- [17]. McBride R, Singer SW, Introduction to the 1995 clinical data management special issue of controlled clinical trials, Control. Clin. Trials 16 (1995) 1S–3s.

- [18]. McBride R, Singer SW, Interim reports, participant closeout, and study archives, Control. Clin. Trials 16 (1995) 1S–3s.

- [19]. Gassman JJ, Owen WW, Kuntz TE, Martin JP, Amoroso WP, Data quality assurance, monitoring, and reporting, Control. Clin. Trials 16 (1995) 1S–3s.

- [20]. Hosking JD, Newhouse MM, Bagniewska A, Hawkins BS, Data collection and transcription, Control. Clin. Trials 16 (1995) 1S–3s.

- [21]. McFadden ET, LoPresti F, Bailey LR, Clarke E, Wilkins PC, Approaches to data management, Control. Clin. Trials 16 (1995) 1S–3s.

- [22]. Blumenstein BA, James KE, Lind BK, Mitchell HE, Functions and organization of coordinating centers for multicenter studies, Control. Clin. Trials 16 (1995) 1S–3s.

- [23]. Association for Clinical Data Management (ACDM), ACDM Guidelines to Facilitate Production of a Data Handling Protocol. November 1, 1996 <http://www.acdm.org.uk/resources.aspx> (accessed April 30, 2016).

- [24]. Society for Clinical Data Management (SCDM), Good Clinical Data Management Practices (GCDMP) October 2013 <http://www.scdm.org> (Accessed April 30, 2016).

- [25]. Muraya P, Garlick C, Coe R, Research Data Management, The World Agroforestry Centre, Nairobi, Kenya, 2002.

- [26]. Jones C, Bicarregui J, Singleton P, JISC/MRC Data Management Planning: Synthesis Report. Science and Technology Facilities Council (STFC) Open archive for STFC research publications, November 20, 2011 <http://purl.org/net/epubs/work/62544> (accessed April 30, 2016).

- [27]. Dietrich D, Adamus T, Miner A, Steinhart G, Demystifying the data management plan requirements of research funders, Iss. Sci. Technol. Librarian, (Summer) (2012), doi: 10.5062/F44M92G2.

- [28]. Knight G, Funder Requirements for Data Management and Sharing Project Report. London School of Hygiene and Tropical Medicine, London: (Unpublished), 2012 <http://researchonline.lshtm.ac.uk/208596/> (accessed April 30, 2016).

- [29]. Brand S, Bartlett D, Farley M, Fogelson M, Hak JB, Hu G, Montana OD, Pierre JH, Proeve J, Qureshi S, Shen A, Stockman P, Chamberlain R, Neff K, A model data management plan standard operating procedure: results from the DIA clinical data management community, committee on clinical data management plan, Ther. Innov. Regul. Sci 49 (5) (2015) 720–729.

- [30]. Nahm M, Bonner J, Reed PL, Howard K, Determinants of accuracy in the context of clinical study data, in: Proceedings of the international conference on information quality (ICIQ) November 2012 <http://mitiq.mit.edu/ICIQ/2012> (accessed February 19, 2017).

- [31]. GCP Inspectors Working Group (GCP IWG) European Medicines Agency (EMA), EMA/INS/GCP/454280/2010, Reflection paper on expectations for electronic source data and data transcribed to electronic data collection tools in clinical trials, June 9, 2010.