Abstract

The purpose of this study was to examine the neural substrates of frequency change detection in cochlear implant (CI) recipients using the acoustic change complex (ACC), a type of cortical auditory evoked potential elicited by acoustic changes in an ongoing stimulus. A psychoacoustic test and electroencephalographic (EEG) recording were administered to 12 post-lingually deafened adult CI users. The stimuli were pure tones containing different magnitudes of upward frequency changes. Results showed that the mean frequency change detection threshold (FCDT) was 3.79% in the CI users, with large variability. The ACC N1’ latency was significantly correlated with the FCDT and with the clinically collected speech perception score. The results suggest that the ACC evoked by frequency changes can serve as a useful objective tool for assessing frequency change detection capability and predicting speech perception performance in CI users.

Keywords: Acoustic Change Complex, Cochlear Implant, Cortical Auditory Evoked Potential, Frequency Change Detection, Electroencephalography

1. INTRODUCTION

Cochlear implants (CIs) are prosthetic devices that have been widely used to provide hearing sensation to individuals with severe to profound hearing loss. CIs bypass the damaged inner ear and directly stimulate auditory nerve fibers with electrical pulses. Unlike normal-hearing individuals, who have approximately 3,500 inner hair cells and 12,000 outer hair cells to provide fine-grained spectral resolution, CI users rely on the sound information conveyed by electrical stimulation through up to 22 electrodes. Because of this technical limitation, a CI device can provide only coarse frequency information about the input signal.

The capability of discerning small frequency changes and fine cues in the spectral dimension plays a crucial role in speech understanding (Gifford et al., 2014; Won et al., 2014; Kenway et al., 2015). Numerous studies reported that post-lingual CI users demonstrate poor frequency discrimination (Gfeller et al., 2007; Kang et al., 2009; Limb & Roy, 2014; Turgeon et al., 2015). Moreover, the frequency discrimination performance varies substantially among patients, who typically have heterogeneous demographic variables (e.g., duration of deafness, age of implantation, and the degree of residual hearing etc.). For example, using a pitch direction discrimination task that requires the participant to identify which of the two stimuli was higher in pitch, Kang et al. (2009) reported that normal hearing (NH) listeners can detect a 1-semitone difference (approximately 6% frequency change) while CI users demonstrated a much poorer frequency discrimination capability with an enormous variance (from one to eight semitones).

It has been well documented that CI users’ speech perception performance varies significantly (Leung et al., 2005; Finley et al., 2008; Gfeller et al., 2008; Holden et al., 2013). Multiple psychoacoustic studies have shown that the speech outcome of CI users is strongly associated with the capability of frequency change detection (Litvak et al., 2007; Gifford et al., 2014; Won et al., 2014; Kenway et al., 2015; Sheft et al., 2015; Turgeon et al., 2015; Won et al., 2015). Detecting frequency changes may play an important role in pitch-based tasks that are typically challenging for CI users, including melody contour identification, talker gender identification, and speech perception in noise (Stickney et al., 2007; Cullington & Zeng, 2011; Gfeller et al., 2012; Zeng et al., 2014).

Understanding the neural substrates of the variability in CI speech perception outcomes is critical for future efforts to improve speech outcomes by reducing that variability; it will also help identify promising neural responses that can serve as diagnostic tools to objectively assess potential benefits (or problems) in difficult-to-test patients. Much evidence has shown that cortical function is more closely related to CI outcomes than neural responses from lower levels of the auditory system (e.g., responses from the auditory nerve, brainstem, or subcortical levels; Firszt et al., 2002; Lazard et al., 2012; Anderson et al., 2017). Therefore, there is a pressing need to examine how the auditory brain of CI users processes frequency changes in order to understand the large variability in CI speech outcomes.

Cortical auditory evoked potentials (CAEPs) have been widely used to evaluate the neural detection of sound in the central auditory system (Kelly et al., 2005). CAEPs can be recorded in a passive listening condition that does not require the participant’s attention or voluntary responses, and thus can serve as a suitable tool for difficult-to-test subjects (Sharma & Dorman, 2006; Small & Werker, 2012). CAEPs can be elicited by stimulus onset (onset CAEP), stimulus offset (offset CAEP), and an acoustic change embedded in an ongoing stimulus (acoustic change complex, ACC), with similar waveform morphologies.

The ACC has attracted strong interest among researchers in recent years as an objective tool for assessing auditory discrimination capability. The ACC can be elicited by a perceivable change in an acoustic feature of an ongoing stimulus, including frequency, intensity, and duration. The ACC has been studied in non-CI users (Harris et al., 2007; Dimitrijevic et al., 2008; He et al., 2015). Data from non-CI users have shown that the ACC threshold (the minimum magnitude of acoustic change required to evoke the ACC) agrees with the behavioral auditory discrimination threshold and that the ACC amplitude is related to the salience of the perceived acoustic change (He et al., 2012; Liang et al., 2016).

The ACC differs from, and has advantages over, the Mismatch Negativity (MMN), another type of auditory evoked response reflecting auditory discrimination (Tremblay et al., 1997; Pakarinen et al., 2007; Sandmann et al., 2010; Itoh et al., 2012). First, in the stimulus paradigm for the ACC, every trial contributes to the ACC response. In MMN recordings, a large number of standard stimuli are required in order to embed a sufficient number of deviant stimuli. Thus, the recording time for the ACC is much shorter than that for the MMN. Second, the ACC has a much larger amplitude (higher signal-to-noise ratio) than the MMN, which enables accurate identification of ACC peaks for latency and amplitude calculation (Martin & Boothroyd, 1999). Third, the MMN is derived by subtracting the response to the standard stimuli from the response to the deviant stimuli, while the ACC is a response directly recorded from the participant.

The few existing ACC studies involving CI users do not allow a firm conclusion about the correlation between the ACC and behavioral performance in frequency change detection. For example, one study using synthetic vowels with a change in the second formant reported agreement between the ACC and behavioral discrimination capability based on data from one CI user (Martin, 2007); another study reported different waveform patterns in the ACC evoked by speech syllables between good and poor performers (Friesen & Tremblay, 2006). Note that different types of acoustic changes have been used in previous ACC studies (e.g., electrical stimuli delivered via the CI electrode or speech stimuli delivered in the sound field; Friesen & Tremblay, 2006; Martin, 2007; Brown et al., 2008; Kim et al., 2009; Hoppe et al., 2010; Brown et al., 2015; Scheperle & Abbas, 2015). These stimuli contain changes in multiple dimensions. For instance, the electric stimuli in electrical ACC studies (Brown et al., 2008; Hoppe et al., 2010) were electrical pulse trains with the first half of each stimulus delivered from one electrode and the second half from another electrode. Such a stimulus contains both a change in the stimulating electrode and the resulting change in perceived frequency. Likewise, the speech stimuli in acoustic ACC studies (e.g., the vowel /ui/, which has a frequency change in the 2nd formant; Martin, 2007; Brown et al., 2015) contain changes in spectral envelope, amplitude, and periodicity at the transition in consonant-vowel syllables. Therefore, it is unclear which components of the acoustic change contributed to the ACCs. It is necessary to further examine the ACC elicited by a change in the frequency dimension alone and its correlation with behavioral performance in frequency change detection in CI users.

In this study, we examined how the auditory cortex processes frequency changes in CI users and how the neural measures correlate with frequency change detection capability, using pure tones containing only frequency changes. Pure tones were used instead of complex tones for the following reason: a pure tone stimulates one or two electrodes, while a complex tone stimulates several electrodes. Therefore, a pure tone allows better detection of frequency changes based on a single excitation site than a complex tone does. Previous studies showed that melody recognition is significantly better with pure tones than with complex tones, suggesting that current CIs cannot code complex pitch (Singh et al., 2009). Our primary goals were: 1) to characterize the ACC features in CI users, and 2) to determine whether ACC measures can be used to predict the frequency change detection threshold in CI users. Additionally, we explored the correlation between the ACC measures and clinically collected speech perception performance.

2. MATERIALS AND METHODS

2.1. Subjects

Twelve post-lingually deafened CI users (7 females and 5 males; 43-75 years old, with a mean age of 63 years) participated in this study. All participants were right-handed, native English speakers with no history of neurological disorders. The CI users wore devices from Cochlear (Sydney, Australia), and all had bilateral severe-to-profound sensorineural hearing loss prior to implantation. Of the 12 CI subjects, 7 had bilateral CIs, 4 had bimodal devices (a CI in one ear and a hearing aid in the other ear), and one had a unilateral CI. All subjects used the CI for their daily communication. Each CI ear was tested individually. One bilateral CI user was tested with only one CI ear because the other CI ear was not able to detect the maximum magnitude of frequency change in the psychoacoustic test. Therefore, a total of 18 CI ears underwent both psychoacoustic and EEG tests. Individual CI subjects’ demographic information is provided in Table 1. All participants gave written informed consent prior to their participation. This study was approved by the Institutional Review Board of the University of Cincinnati. The authors did not have any conflicts of interest.

Table 1.

Cochlear implant (CI) users’ demographics.

| CI user | Gender | Age (years) | Ear | Duration of severe-to-profound deafness (years) | Duration of CI use (years) | Etiology |
|---|---|---|---|---|---|---|
| SCI44 | M | 54 | L | 6 | 3.00 | Noise induced |
| SCI44 | M | 54 | R | 3 | 0.25 | Noise induced |
| SCI45 | F | 48 | L | 43 | 6 | Unknown |
| SCI45 | F | 48 | R | 43 | 6 | Unknown |
| SCI46 | F | 64 | L | 38 | 1.5 | Virus infection |
| SCI47 | F | 66 | R | 36 | 2.5 | Cochlear otosclerosis |
| SCI48 | F | 43 | R | 41 | 4.00 | Meningitis |
| SCI49 | M | 62 | L | 3 | 4.08 | Noise exposure |
| SCI49 | M | 62 | R | 3 | 4.33 | Presbycusis |
| SCI50 | F | 75 | R | 4 | 3 | Unknown |
| SCI50 | F | 75 | L | 4 | 1.58 | Unknown |
| SCI51 | M | 75 | R | 20 | 0.92 | Noise induced |
| SCI52 | F | 62 | R | 10 | 1.83 | Genetic |
| SCI52 | F | 62 | L | 10 | 0.42 | Genetic |
| SCI53 | M | 73 | L | 12 | 0.58 | Presbycusis |
| SCI54 | F | 75 | R | 20 | 3.42 | Virus infection |
| SCI55 | F | 75 | R | 41 | 20.17 | Congenital |
| SCI55 | F | 63 | L | 41 | 6.17 | Congenital |

2.2. Stimuli

2.2.1. Stimuli for psychoacoustic tests

A tone of 1 sec duration at a base frequency of 160 Hz was used as the standard tone. The target tones were the same as the standard tone except that they contained upward frequency changes at 500 ms after the tone onset, with the magnitude of frequency change varying from 0.05% to 65%. These tones were generated using Audacity 1.2.5 (http://audacity.sourceforge.net) at a sample rate of 44.1 kHz. To avoid abrupt onsets and offsets, the amplitude of the 1-sec tones was ramped to and from zero over 10 ms. The frequency change occurred after an integer number of cycles of the base frequency and at 0 phase (zero crossing). Therefore, the onset cue of the frequency change was removed and the change did not produce audible transients (Dimitrijevic et al., 2008). The amplitudes of all stimuli were normalized. The stimuli were calibrated using a Brüel & Kjær (Investigator 2260) sound level meter set on linear frequency and slow time weighting with a 2 cc coupler.
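The construction described above can be illustrated with a minimal sketch. It is not the Audacity procedure used in the study: the function name `make_tone`, the linear ramp shape, and the unit peak amplitude are assumptions made here for illustration. The sketch only shows why a change placed after an integer number of base-frequency cycles lands on a zero crossing.

```python
import math


def make_tone(base_hz=160.0, change_pct=5.0, fs=44100, dur_s=1.0,
              change_at_s=0.5, ramp_s=0.010):
    """Return samples of a tone whose frequency steps upward at change_at_s."""
    cycles = base_hz * change_at_s
    if abs(cycles - round(cycles)) > 1e-9:
        # The change must fall after a whole number of base-frequency cycles
        raise ValueError("change point is not at a zero crossing")
    n_total = round(dur_s * fs)
    n_change = round(change_at_s * fs)
    new_hz = base_hz * (1.0 + change_pct / 100.0)
    samples = []
    for n in range(n_total):
        if n < n_change:
            phase = 2.0 * math.pi * base_hz * n / fs
        else:
            # Restart the new frequency at phase 0 from the change point
            phase = 2.0 * math.pi * new_hz * (n - n_change) / fs
        samples.append(math.sin(phase))
    # Linear onset and offset ramps (the ramp shape is an assumption)
    n_ramp = round(ramp_s * fs)
    for i in range(n_ramp):
        gain = i / n_ramp
        samples[i] *= gain
        samples[n_total - 1 - i] *= gain
    return samples


tone = make_tone(160.0, 5.0)   # 160 Hz tone stepping to 168 Hz at 500 ms
```

At 160 Hz, 500 ms corresponds to exactly 80 cycles, so the first segment ends at phase 0 and the second segment starts there without a discontinuity.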

2.2.2. Stimuli for EEG recording

Tones of 1 sec duration at base frequencies of 160 Hz and 1200 Hz that contained 3 types of frequency change (0%, 5%, and 50%) were used to evoke the event-related-potentials (ERPs). We used the above two different base frequencies because 160 Hz is in the frequency range of the fundamental frequency (F0), while 1200 Hz is in the frequency range of the 2nd formant of vowels. The F0 and 2nd formant frequency are important spectral cues in speech processing (Blamey et al., 1987; Stickney et al., 2007). Examining the ACC at these two base frequencies would help understand how the auditory cortex processes the frequency change near the F0 and the 2nd formant of vowels. A total of six stimuli (3 types of frequency changes x 2 base frequencies) were used for the EEG recording. The inter-stimulus interval was 0.8 sec.

2.3. Procedure

All participants underwent a psychoacoustic test of frequency change detection and EEG recording. Stimuli were presented in the sound field via a single loudspeaker placed at ear level, 50 cm in front of the participant. The stimuli were presented to the tested CI ear at an intensity corresponding to loudness level 7 (most comfortable level) on a 0-10-point (inaudible to too loud) numerical scale (Hoppe et al., 2001). This stimulus presentation approach has been widely used in CI users. The device (either the CI or hearing aid) in the non-test ear was turned off and the non-test ear was blocked with an earplug.

2.3.1. Psychoacoustic test of frequency change detection

An adaptive, 2-alternative forced-choice procedure with an up-down stepping rule, implemented in APEX software (Francart et al., 2008), was employed to measure the minimum frequency change the participant was able to detect. Each trial included a target stimulus and a standard stimulus. The standard stimulus was the tone without a frequency change and the target stimulus was the tone with a frequency change. The order of the standard and target stimuli was randomized and the interval between the stimuli in a trial was 0.5 sec. The initial frequency change between the standard and target was 5% from 10-65%, 0.5% from 0.5-10%, and 0.05% from 0.05-0.5%. The participant was instructed to choose the target signal by pressing the button on the computer screen and was given visual feedback regarding the response. Each run generated a total of 5 reversals. The asymptotic amount of frequency change (the average of the last 3 trials) was used as the frequency change detection threshold (FCDT).
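As a rough illustration of such an adaptive track, the sketch below runs a simple staircase against a simulated listener. Several details are assumptions rather than the APEX configuration used in the study: the three percentage ranges are read here as range-dependent step sizes, the rule is 1-down/1-up, the track starts at 65%, and the listener is deterministic (correct whenever the change is at or above a hypothetical true threshold). All function names are hypothetical.

```python
def step_size(delta_pct):
    """Step size by range, reading the text's three ranges as step sizes (assumption)."""
    if delta_pct > 10.0:
        return 5.0
    if delta_pct > 0.5:
        return 0.5
    return 0.05


def run_staircase(is_correct, start_pct=65.0, n_reversals=5, n_avg=3,
                  lo=0.05, hi=65.0, max_trials=200):
    """Run a 1-down/1-up track; return (threshold estimate, tested levels)."""
    delta = start_pct
    direction = None          # -1 after a correct trial, +1 after an incorrect one
    reversals = 0
    track = []
    while reversals < n_reversals and len(track) < max_trials:
        track.append(delta)
        new_dir = -1 if is_correct(delta) else 1
        if direction is not None and new_dir != direction:
            reversals += 1    # the track changed direction
        direction = new_dir
        if new_dir < 0:
            delta = max(lo, delta - step_size(delta))
        else:
            delta = min(hi, delta + step_size(delta))
        delta = round(delta, 2)
    # Threshold: mean of the last n_avg tested levels, as described in the text
    return sum(track[-n_avg:]) / n_avg, track


# Hypothetical deterministic listener with a true threshold of 3%
estimate, levels = run_staircase(lambda d: d >= 3.0)
```

A real listener guesses correctly half the time below threshold in a 2-alternative task, so actual tracks are noisier than this deterministic example.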

2.3.2. EEG recording

Participants wore a 40-channel Neuroscan quick-cap (NuAmps, Compumedics Neuroscan, Inc., Charlotte, NC) for the EEG recording. The cap was placed according to the International 10-20 system. Electro-ocular activity (EOG) was monitored so that eye movement artifacts could be identified and rejected during the offline analysis. Electrodes located directly over or closely surrounding the implant transmission coil were not used. Electrode impedances for the remaining electrodes were kept at or below 5 kΩ during EEG recordings using the SCAN software (version 4.3, Compumedics Neuroscan, Inc., Charlotte, NC) with a band-pass filter setting from 0.1 to 100 Hz and an analog-to-digital converter sampling rate of 1000 Hz.

CI users were presented with 400 trials for each type of stimulus. A previous study showed that 400 trials were adequate for obtaining a good signal-to-noise ratio in the recording of cortical evoked potentials in CI users (Han et al., 2016). During testing, participants were instructed to avoid excessive eye and body movements. Participants read self-selected magazines to stay alert and were asked to ignore the acoustic stimuli. The total recording time was approximately 1.4 hours for each CI ear.
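The stimulation time implied by these parameters can be checked with a short calculation. It assumes that the 0.8 sec inter-stimulus interval is measured from tone offset to the next tone onset, which the text does not state explicitly.

```python
n_stimuli = 6     # 3 frequency changes x 2 base frequencies
n_trials = 400    # trials per stimulus type
tone_s = 1.0      # tone duration in seconds
isi_s = 0.8       # inter-stimulus interval, assumed offset-to-onset

total_s = n_stimuli * n_trials * (tone_s + isi_s)
total_h = total_s / 3600.0
print(f"{total_s:.0f} s of stimulation = {total_h:.1f} h")
```

Under this assumption the stimulation alone takes about 1.2 hours, so the reported total of approximately 1.4 hours would leave roughly 0.2 hours, presumably for breaks between blocks.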

2.4. EEG Data Processing

Electrophysiological data were initially processed using Scan software (Compumedics Neuroscan, Inc., Charlotte, NC). Continuous EEG data were digitally filtered using a band-pass filter (0.1 to 30 Hz). The data were then segmented into epochs over a window of 1100 ms (including a 100 ms pre-stimulus period). Following segmentation, the baseline was corrected by subtracting the mean amplitude in the 100 ms pre-stimulus time window. Data were then imported into the EEGLAB toolbox (Delorme & Makeig, 2004) in MATLAB (Mathworks Inc.) for further analysis. The data were re-referenced to the common average reference. Bad channels near the CI coil that were not injected with recording gel (up to 3 channels for unilateral CI users and 6 channels for bilateral CI users) were removed and interpolated. Epochs containing non-stereotyped artifacts were identified by visual inspection and discarded. At least 200 epochs remained for each participant.

Independent component analysis (ICA, Delorme & Makeig, 2004) was performed to identify and remove stereotyped artifacts, including CI artifacts. The success of artifact removal using the ICA method is demonstrated in Figure 1, and detailed information can be found in the EEGLAB manual and our previously published papers (Delorme & Makeig, 2004; Zhang et al., 2009). After the independent components (ICs) related to artifacts were linearly subtracted from the EEG data, the remaining ICs were used to reconstruct the dataset. Data epochs were then averaged separately for each of the 6 types of stimuli (2 base frequencies x 3 types of frequency changes) in each participant.
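The segmentation, baseline correction, and averaging steps can be sketched for a single channel as follows. This is an illustrative stand-in, not the Scan or EEGLAB implementation: filtering, re-referencing, and ICA are omitted, and the function names are hypothetical. The 1000 Hz sampling rate and the 100 ms pre-stimulus window are taken from the text.

```python
def extract_epoch(signal, onset_idx, fs=1000, pre_ms=100, post_ms=1000):
    """Cut one epoch around a stimulus onset and subtract the pre-stimulus mean."""
    pre = round(pre_ms * fs / 1000)     # samples before stimulus onset
    post = round(post_ms * fs / 1000)   # samples from stimulus onset onward
    if onset_idx < pre or onset_idx + post > len(signal):
        raise ValueError("epoch window falls outside the recording")
    epoch = signal[onset_idx - pre:onset_idx + post]
    baseline = sum(epoch[:pre]) / pre   # mean of the 100 ms pre-stimulus window
    return [v - baseline for v in epoch]


def average_epochs(epochs):
    """Point-by-point mean across equally long epochs of one stimulus type."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


# Toy recording: a 5 uV offset that steps to 7 uV at the stimulus onset
recording = [5.0] * 600 + [7.0] * 1400
epoch = extract_epoch(recording, onset_idx=600)
```

Each epoch spans 1100 samples at 1000 Hz, with index 100 corresponding to stimulus onset.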

Figure 1.

The example of event-related potential (ERP) waveforms at Cz evoked by a tone with 0% (black line), 5% (green line), and 50% (red line) frequency change. The waveforms before artifact removal (left) and after artifact removal (right) are displayed.

We performed further waveform analysis on the responses from electrode Cz, where the response amplitudes were the largest. The presence of the onset CAEP and ACC response was determined based on two criteria: (i) an expected wave morphology within the expected time window for the specific response, based on mutual agreement between two researchers (He et al., 2015), and (ii) a visible difference in the waveforms between the frequency change conditions and the no-change condition when evaluating the ACC. Then, the wave peaks of the onset CAEP and ACC response were identified. In the onset CAEP, the response peaks were labeled using the standard nomenclature of N1 and P2. The ACC response peaks were labeled N1’ and P2’ (Figure 1). For each averaged response, the N1 was identified as the maximum negative peak in the latency range of 100-200 ms and the P2 as the maximum positive peak in the range of 150-300 ms after stimulus onset (Näätänen & Picton, 1987; Budd et al., 1998). The N1’ and P2’ peaks of the ACC were identified in latency ranges of 600-700 ms and 650-800 ms, respectively, after the stimulus onset (or 100-200 ms and 150-300 ms after the starting point of the frequency change, respectively).

The measures used for statistical analysis include: N1 and P2 peak latencies (the time interval between the peaks and the stimulus onset), N1-P2 peak-to-peak amplitude (the voltage difference between N1 and P2) for the onset CAEP and the corresponding measures (N1’ and P2’ peak latencies and N1’-P2’ amplitude) for the ACC responses.
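A window-based peak search of this kind can be sketched as below. It is a simplified stand-in for the procedure described above, which also relied on visual judgment by two researchers: here the extreme value in each latency window is taken as the peak. The function names and the dictionary layout are hypothetical; the windows, the 1000 Hz sampling rate, the 100 ms pre-stimulus period, and the 500 ms change point come from the text.

```python
# Latency windows in ms after stimulus onset, with the expected polarity
WINDOWS = {
    "N1": (100, 200, "neg"), "P2": (150, 300, "pos"),
    "N1'": (600, 700, "neg"), "P2'": (650, 800, "pos"),
}


def pick_peak(wave, t0_ms, t1_ms, polarity, fs=1000, pre_ms=100):
    """Return (latency in ms, amplitude) of the extreme value in [t0_ms, t1_ms]."""
    i0 = round((t0_ms + pre_ms) * fs / 1000)
    i1 = round((t1_ms + pre_ms) * fs / 1000)
    window = wave[i0:i1 + 1]
    value = min(window) if polarity == "neg" else max(window)
    idx = i0 + window.index(value)
    return idx * 1000 / fs - pre_ms, value


def measure(wave, change_ms=500):
    """Latency and peak-to-peak measures for the onset CAEP and the ACC."""
    p = {name: pick_peak(wave, *w) for name, w in WINDOWS.items()}
    return {
        "N1_latency": p["N1"][0],
        "P2_latency": p["P2"][0],
        "N1P2_amplitude": p["P2"][1] - p["N1"][1],
        # ACC latencies re-expressed relative to the frequency change
        "N1'_latency": p["N1'"][0] - change_ms,
        "P2'_latency": p["P2'"][0] - change_ms,
        "N1'P2'_amplitude": p["P2'"][1] - p["N1'"][1],
    }
```

Subtracting the 500 ms pre-change duration puts the ACC latencies on the same scale as the onset CAEP latencies.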

2.5. Statistical Analysis

For behavioral testing, the dependent variable is the FCDT. For EEG testing, the dependent variables include: 1) the measures in the onset CAEP (N1 latency, P2 latency, and N1-P2 amplitude); 2) the measures in the ACC (N1’ latency, P2’ latency, and N1’-P2’ amplitude). The mean values and standard errors of amplitude and latency measures for each type of stimuli were obtained. Repeated measures analysis of variance (ANOVA) procedures were performed to examine the difference between the ACC and onset CAEP for different base frequencies. The Pearson product-moment correlation analysis was performed to examine correlations between FCDT and the ACC measures as well as the correlation between speech perception performance and the ACC measures. Statistical analysis was performed with SPSS 13.0. A p-value of 0.05 was used as the significance level for all analyses.

3. RESULTS

3.1. Electrophysiology Results

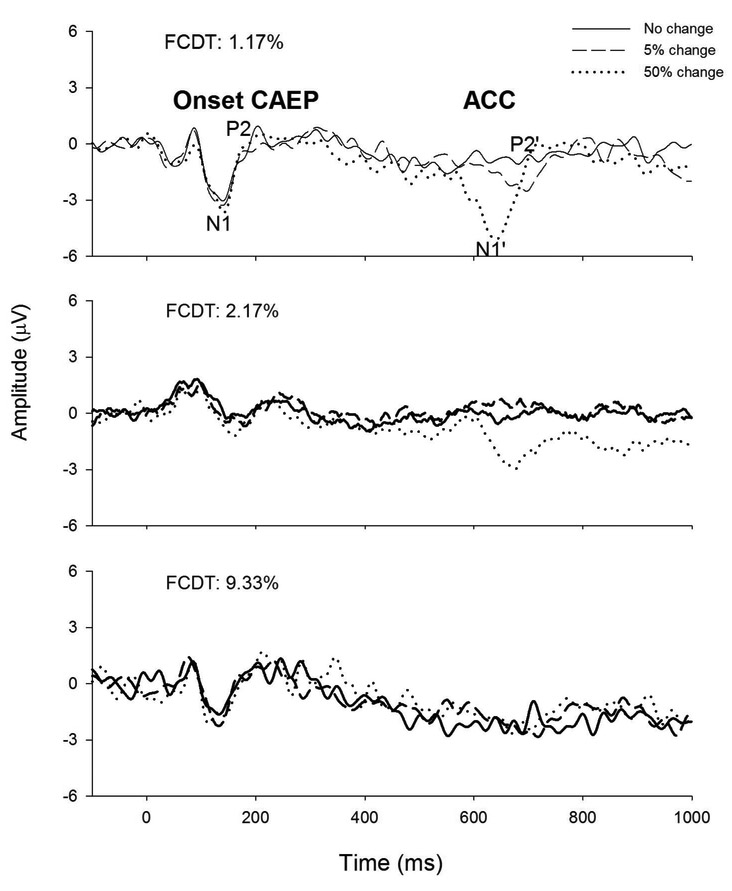

Figure 2 shows the individual ERPs evoked by tones containing no change (solid line), 5% change (dashed line), and 50% change (dotted line) in 3 CI users whose FCDT was good (1.17%), moderate (2.17%), and poor (9.33%), respectively. There were two types of responses in the ERP waveforms. One is onset CAEP, characterized as the N1-P2 complex with peak latencies of approximately 100-300 ms after the onset of stimuli; the other is the ACC, labeled as N1’-P2’ complex, with the latency at approximately 600-800 ms after stimulus onset or 100-300 ms after the occurrence of the frequency change. As seen in this figure, the ACC is smaller for moderate and poor performers than for the good performer. Compared to the ACC, the onset CAEP does not appear to indicate the FCDT of the participant.

Figure 2.

Individual ERPs evoked by 160 Hz tones containing no change (solid line), 5% change (dashed line), and 50% change (dotted line) in 3 CI users whose FCDT was good (1.17%), moderate (2.17%), and poor (9.33%), respectively.

Figure 3 shows the grand mean of the ERPs evoked by tones of 160 Hz (left plots) and 1200 Hz (right plots) containing no change (solid line), 5% change (dashed line), and 50% change (dotted line) in CI users. This figure shows that, while the onset CAEPs evoked by the tones with and without frequency changes are similar, the ACC exists only when there is a frequency change, and the ACC amplitude is larger for the 50% frequency change than for the 5% change. The trend is the same for both the 160 Hz and 1200 Hz base frequencies, although the same percentage of frequency change evoked larger ACCs at 1200 Hz.

Figure 3.

Group average waveforms in CI users evoked by tones (160 Hz in the left panel and 1200 Hz in the right panel) with 0% (solid line), 5% (dashed line), and 50% change (dotted line).

The ACC was present in 61-78% of CI ears for the 5% change, depending on the base frequency, and in 100% of CI ears for the 50% change. Table 2 shows the means and standard deviations of the peak amplitudes and latencies in the onset CAEP and the ACC evoked by tones with the 50% frequency change. Note that the ACC N1’ and P2’ latencies were adjusted by subtracting the pre-frequency change duration of 500 ms for comparison purposes. It can be seen that the ACC peak latencies are longer than those of the onset CAEP peaks.

Table 2.

Latencies and amplitudes of the responses elicited by tones with 50% change.

Values are mean (SD). N1, P2, and N1-P2 refer to the onset CAEP; N1’, P2’, and N1’-P2’ refer to the ACC response.

| Group | Base frequency | N1 latency (ms) | P2 latency (ms) | N1-P2 amplitude (μV) | N1’ latency (ms) | P2’ latency (ms) | N1’-P2’ amplitude (μV) |
|---|---|---|---|---|---|---|---|
| CI | 160 Hz | 138 (18.9) | 233.8 (20.2) | 1.8 (0.8) | 157.2 (19.2) | 250.8 (24.5) | 1.62 (1.0) |
| CI | 1200 Hz | 129.6 (15.3) | 227.4 (35.1) | 2.0 (1.0) | 135.4 (21.4) | 245.4 (22.4) | 1.9 (1.1) |

A series of two-way repeated-measures ANOVAs was conducted to examine the effects of Base Frequency (160 Hz vs. 1200 Hz) and Response Type (onset CAEP vs. ACC), as within-subject factors, on each measure (peak latency and amplitude). For the N1 (N1’) peak latency, there were significant effects of Base Frequency [F(1,71)=17.9, p<0.01] and Response Type [F(1,71)=11.21, p<0.01], with no interaction between the two factors [F(1,71)=2.45, p>0.05]. Thus, the N1 (N1’) latency was significantly longer for 160 Hz than for 1200 Hz, and the ACC N1’ latency was significantly longer than the onset CAEP N1 latency. For the P2 (P2’) peak latency, there was a significant effect of Response Type [F(1,71)=10.82, p<0.01], but no significant effect of Base Frequency [F(1,71)=1.75, p>0.05] and no interaction [F(1,71)=0.004, p>0.05]. Thus, the ACC P2’ latency was significantly longer than the onset CAEP P2 latency. For the N1-P2 (N1’-P2’) amplitude, there were no significant effects of Base Frequency [F(1,71)=2.03, p>0.05] or Response Type [F(1,71)=1.05, p>0.05], and no interaction [F(1,71)=0.07, p>0.05]. In summary, the peak latencies of the ACC were significantly longer than those of the onset CAEP, and the N1 (N1’) latency was significantly longer for 160 Hz than for 1200 Hz.

3.2. Correlations Between ACC Measures and Psychoacoustic FCDT

The CI group had a mean FCDT of 3.79%. The variability in the CI group was substantial, with FCDTs ranging from 0.67% to 9.66%. The ACC responses were related to behavioral performance in frequency change detection, as evidenced by the following analyses. The first analysis was based on the ACC data evoked by the 5% change. The ACCs were missing in some CI ears when evoked by the 5% frequency change. Therefore, the CI users were separated into two subgroups: CI users without the ACC and CI users with the ACC for the 5% change. Figure 4 shows the mean values of the FCDT in the two subgroups. The mean FCDT was 5.34% in CI users without the ACC and 3.09% in CI users with present ACCs.

Figure 4.

Mean FCDTs in 2 subgroups of CI ears (CI ears without ACCs and CI ears with present ACC evoked by the 5% frequency change). Error bars indicate the standard error.

A t-test was performed to determine whether the FCDT differed significantly between the CI subgroup with present ACCs (M=3.09%, SD=1.86) and the CI subgroup without ACCs (M=5.34%, SD=3.27) for the 5% change. The difference between these two subgroups did not reach statistical significance [t=−1.87, p=0.08]. Nevertheless, the fact that the subgroup with present ACCs had a mean FCDT smaller than 5% and the subgroup with absent ACCs had a mean FCDT greater than 5% suggests that ACC measures for the 5% change were in agreement with behavioral measures.
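The reported t value can be approximately reproduced from the summary statistics with a pooled-variance (Student) t-test. The subgroup sizes are not stated in the text; the values of 11 ears with present ACCs and 7 ears without are assumptions, chosen to be consistent with ACCs being present in about 61% of the 18 CI ears. The function name is hypothetical.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups, computed from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / se, df


# Present-ACC subgroup (mean 3.09%, SD 1.86) vs. absent-ACC subgroup (mean 5.34%, SD 3.27)
# Group sizes 11 and 7 are assumed, not reported in the text
t_value, df = pooled_t(3.09, 1.86, 11, 5.34, 3.27, 7)
print(round(t_value, 2), df)
```

With these assumed group sizes the statistic comes out at about −1.87 with 16 degrees of freedom, which matches the reported value.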

The second analysis was based on the ACC data evoked by the 50% change. Pearson’s product-moment correlation analysis was used to evaluate the correlation between FCDTs and the ACC measures for the 50% frequency change. Figure 5 shows a significant positive correlation between N1’ latency and FCDT (R²=0.23, p<0.05). This correlation seems to be driven by the two subjects who have FCDTs around 9-10%; future studies with a larger sample size will help determine whether it holds. Although there was a trend for CI users with smaller N1’-P2’ amplitudes and longer P2’ latencies to have a larger FCDT (poorer performance), these correlations were not statistically significant (not shown).

Figure 5.

The FCDT as the function of ACC N1’ latency in CI users. The solid line shows linear regression fit to the data. The stimulus used to evoke the ACC was a 160 Hz tone containing 50% frequency change.

3.3. ACC Measures vs. Speech Perception Outcomes in CI Users

The speech perception data collected by an audiologist, one of the co-authors, at the latest clinical follow-up (within 6 months of our experiment) in the Department of Otolaryngology-Head and Neck Surgery at UC were used to examine the correlation between the ACC and speech perception performance. Although both consonant-nucleus-consonant (CNC) monosyllabic words and AzBio sentences (presented at the most comfortable level) were used for clinical assessment, only the CNC score was found to be significantly correlated with the ACC N1’ latency. Figure 6 shows the correlation between ACC N1’ latency and the CNC score (R²=0.36, p<0.05). The CNC scores from 15 individual CI ears were included in this figure. The CNC scores from the other 3 CI ears were either unavailable or did not meet the criterion for the clinical testing time.

Figure 6.

ACC N1’ latency as the function of CNC score in CI users. The solid line shows linear regression fit to the data. The stimulus used to evoke the ACC was a 160 Hz tone containing 50% frequency change.
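As a consistency check on the two reported correlations, an R² value can be converted to a t statistic with n − 2 degrees of freedom and compared with the two-tailed critical value at the 0.05 level. The sample size of 15 ears for the CNC analysis is stated in the text; the sample size of 18 ears for the FCDT analysis is an assumption, based on ACCs being present in all ears for the 50% change. The critical values are taken from a standard t table, and the function name is hypothetical.

```python
import math


def t_from_r2(r2, n):
    """t statistic for a Pearson correlation with coefficient of determination r2."""
    return math.sqrt(r2 * (n - 2) / (1.0 - r2))


# Two-tailed critical t values at alpha = 0.05, keyed by degrees of freedom
T_CRIT = {16: 2.120, 13: 2.160}

checks = [
    ("N1' latency vs. FCDT", 0.23, 18),   # n = 18 is an assumption
    ("N1' latency vs. CNC", 0.36, 15),    # n = 15 is stated in the text
]
for label, r2, n in checks:
    t_value = t_from_r2(r2, n)
    significant = t_value > T_CRIT[n - 2]
    print(f"{label}: t({n - 2}) = {t_value:.2f}, significant = {significant}")
```

Under these sample sizes both statistics exceed their critical values, in line with the reported p<0.05, although the FCDT correlation does so only narrowly.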

4. DISCUSSION

This study used tones containing frequency changes for psychoacoustic tests of frequency change detection and to evoke ACC responses in post-lingually deafened CI users. The ACC showed longer peak latencies than the onset CAEP. There was an effect of base frequency on the ACC N1’ latency. The ACC was in agreement with the FCDT results and was correlated with the CNC score.

4.1. Frequency Discrimination in Post-Lingual Cochlear Implant Users

Numerous studies have reported that post-lingual CI users have significantly poorer frequency discrimination ability than NH listeners (Gfeller et al., 2007; Kang et al., 2009; Limb & Roy, 2014; Turgeon et al., 2015). Several factors have been reported to negatively affect the ability to discriminate frequencies in CI users. First, the auditory system of CI users typically suffers from pervasive neural degeneration due to long-term deafness before the implantation. This inadequate peripheral input to the auditory cortex results in weaker cortical responses to sound, which in turn leads to poorer frequency discrimination capability compared to their NH peers. Second, the spectral cues of the sound transmitted by CI devices are degraded. In CI hearing, the perception of frequency change is achieved by the change of electrical stimulation rate and the change of the location of the stimulated electrodes. The poor spectral resolution is the result of the small number of electrodes (6-22), the interference between adjacent electrodes when several electrodes are activated concurrently, and a possible mismatch between the acoustic input and electrode location in the cochlea (Oxenham et al., 2004; Fu & Nogaki, 2005). Third, the auditory cortex reorganization (intra- and cross-modal reorganization as the result of long-term deafness) may be so resistant to change that it cannot be reversed in some CI users even after years of CI use (Hung et al., 2010; Sandmann et al., 2012; also see review by Anderson et al., 2017).

In this study, the results support the poor capability of frequency discrimination in CI users. The mean FCDT of CI users was 3.79%, with a range from 0.67% to 9.66% across the CI ears. An ad hoc analysis of the correlation between the FCDT and the duration of deafness was conducted. Figure 7 shows that the FCDT is significantly correlated with the duration of deafness, suggesting that the degree of deafness-related neural deficit is a significant factor in the variance of the FCDT.

Figure 7.

The FCDT as the function of the duration of deafness in CI users. The solid line shows linear regression fit to the data.

These FCDT results are consistent with those reported in previous studies (Gfeller et al., 2007; Kang et al., 2009; Limb & Roy, 2014; Kenway et al., 2015; Turgeon et al., 2015). Goldsworthy (2015) reported that CI users’ pitch discrimination thresholds for pure tones (0.5, 1, and 2 kHz) ranged between 1.5% and 9.9%, while the thresholds for complex tones (with fundamental frequencies at 110, 220, and 440 Hz) ranged between 2.6% and 28.5%. Gfeller et al. (2002) reported that NH listeners’ mean discrimination threshold for complex tones (F0 between 73 and 553 Hz) was 1.12 semitones (range of 1-2 semitones), while CI users had a mean threshold of 7.56 semitones (range of 1-24 semitones). Note that the exact values of frequency discrimination may vary among these studies, possibly due to: 1) the varying degrees of heterogeneity in the demographic variables of the CI subjects recruited, 2) differences in the psychoacoustic tasks used (pitch discrimination, pitch ranking, frequency change detection, etc.), 3) differences in the stimulus type used (pure tone vs. complex tone, acoustic vs. electric stimuli), and 4) differences in the base frequency.
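Because these studies report thresholds in different units, a conversion between semitones and percent frequency change helps when comparing them. The sketch below assumes equal-tempered semitones, in which one semitone is a frequency ratio of 2^(1/12); the function names are hypothetical.

```python
import math


def semitones_to_percent(semitones):
    """Percent frequency increase for an interval given in equal-tempered semitones."""
    return (2.0 ** (semitones / 12.0) - 1.0) * 100.0


def percent_to_semitones(percent):
    """Interval in equal-tempered semitones for a percent frequency increase."""
    return 12.0 * math.log2(1.0 + percent / 100.0)


for st in (1.0, 1.12, 7.56):
    print(f"{st} semitones = {semitones_to_percent(st):.1f}% frequency change")
print(f"3.79% = {percent_to_semitones(3.79):.2f} semitones")
```

On this scale one semitone is roughly a 6% change, 7.56 semitones is roughly a 55% change, and the mean FCDT of 3.79% in the present study corresponds to about 0.64 semitones. The tasks and stimuli differ across studies, so such numbers are not directly comparable.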

Previous evidence showed that CI users with poorer auditory frequency discrimination also have poorer speech recognition than CI users with better frequency discrimination ability (Kenway et al., 2015; Turgeon et al., 2015). The capability of spectral ripple discrimination was more strongly correlated with the identification of formant transitions and word recognition than the capability of temporal discrimination (Winn et al., 2016). Deficient frequency perception is a major cause of poor speech perception in CI users (Finley et al., 2008; Kenway et al., 2015; Zeng et al., 2014).

4.2. ACC is an Objective Tool for Assessing Frequency Change Detection Capability and Speech Perception Score in CI Users

In this study, the correlation between the ACC measures and the FCDT is reflected in the following observations. First, the subgroup of CI users with missing ACCs evoked by the 5% change exhibited an FCDT greater than 5%, while the subgroup of CI users with present ACCs had an FCDT of less than 5%. Second, the ACC is larger with a more salient frequency change (50% vs. 5% change). Third, there was a significant positive correlation between ACC N1’ latency and FCDT (p<0.05). There was also a trend for other ACC components to be correlated with the FCDT: individuals with lower FCDTs (better performance) had a shorter P2’ latency and a larger N1’P2 amplitude (not shown). These correlations were not statistically significant, however, possibly due to the small sample size and large inter-subject variability.

The correlation between behaviorally measured auditory discrimination ability and ACC measures has been reported in several ACC studies. Hoppe et al. (2010) reported that the N1’ latency of the EACC response was significantly correlated with the perceptual difference between two electrodes. The findings in the current study provide further support that ACC measures can serve as an objective indicator of frequency change detection capacity in CI users.

The correlation between the ACC response and speech performance remains uncertain in the existing CI studies. Friesen & Tremblay (2006) reported that CI users with good syllable identification scores showed a different pattern in the ACC evoked by a speech token change than those with poor scores. Scheperle & Abbas (2015) reported that the spectral ACC (evoked by ripple-noise stimuli presented directly via the CI processor) was significantly correlated with speech perception in noise. Other investigators reported no correlation between the ACC response and speech performance (Brown et al., 2015). This discrepancy among previous studies may be related to the different stimuli used (electric vs. acoustic stimuli). In this study, the stimuli for both psychoacoustic tests and ACC recordings were pure tones containing frequency changes, with the onset amplitude cues of the frequency change removed (Dimitrijevic et al., 2008). The ACC is therefore evoked by the change of frequency only. The finding that the ACC is correlated with both behaviorally measured frequency change detection ability and CNC scores indicates that the ACC can be used as an objective tool to predict CI outcomes.
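One common way to introduce a frequency change into an ongoing tone without an amplitude discontinuity is to keep the waveform phase-continuous across the change. The sketch below illustrates that idea only; whether it matches the exact stimulus construction used in this study is an assumption, and the function name, duration, change time, and sample rate are hypothetical defaults.

```python
import math


def tone_with_frequency_change(base_hz, change_percent, duration_s=1.0,
                               change_time_s=0.5, sample_rate=44100):
    """Return samples of a unit-amplitude tone whose frequency steps up once.

    The phase is accumulated sample by sample, so the waveform remains
    continuous at the instant the frequency changes.
    """
    changed_hz = base_hz * (1.0 + change_percent / 100.0)
    n_samples = int(round(duration_s * sample_rate))
    change_index = int(round(change_time_s * sample_rate))
    samples = []
    phase = 0.0
    for i in range(n_samples):
        samples.append(math.sin(phase))
        # Use the base frequency before the change point, the higher one after.
        freq = base_hz if i < change_index else changed_hz
        phase += 2.0 * math.pi * freq / sample_rate
    return samples


# Example: a 1200 Hz tone with a 50% upward change halfway through.
stimulus = tone_with_frequency_change(1200.0, 50.0)
```

Because only the phase increment changes at the transition, the sample-to-sample step never exceeds what the higher frequency alone would produce, so no click-like transient is added by the construction itself.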

Previous ACC studies have suggested that the ACC is a promising tool for objectively assessing auditory discrimination for several reasons: (1) the EEG recording is non-invasive and the ACC is a pre-attentive response, which makes it suitable for hard-to-test populations who cannot reliably perform behavioral tasks (He et al., 2015); (2) changes in the ACC’s features reflect changes in stimulus properties (Friesen & Tremblay, 2006); and (3) the ACC shows excellent test-retest reliability (Tremblay et al., 2003).

4.3. Cortical Responses to Frequency Changes Differ between Low and High Base Frequencies

The current study showed that the ACC response exhibited longer latencies for the 160 Hz base frequency than for the 1200 Hz base frequency. In acoustic hearing, it takes a longer time for low-frequency sounds to be transmitted in the peripheral auditory system (e.g., a longer traveling wave time along the basilar membrane for low frequencies). The time delay for low-frequency sounds also exists at the cortical processing stage, where the subcortical temporal codes need to be transformed into central rate codes (Dimitrijevic et al., 2008).

In the current study, the traveling wave time difference does not exist since the CI bypasses the damaged cochlea and directly stimulates the auditory nerve fibers, yet the response time for processing frequency changes at the low base frequency is longer than that at the high base frequency. We speculate that this neural timing difference between low and high frequencies is due to the difference in the temporal window of integration (Dimitrijevic et al., 2008). A low-frequency sound has a longer duration for each cycle. To capture the number of cycles necessary for frequency change detection, the integration window needs to be longer for low frequencies than for high frequencies. Thus, the ACC displayed longer latencies for the 160 Hz than for the 1200 Hz base frequency.
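The arithmetic behind the integration-window argument can be made concrete. The sketch below computes the cycle period at each base frequency and the time needed to accumulate a given number of cycles; the cycle count `n_cycles` is a hypothetical parameter chosen for illustration, since the number of cycles actually required for detection is not specified here.

```python
def cycle_period_ms(frequency_hz):
    """Duration of one cycle of a tone, in milliseconds."""
    return 1000.0 / frequency_hz


def time_for_cycles_ms(frequency_hz, n_cycles):
    """Time needed to accumulate n_cycles of a tone, in milliseconds."""
    return n_cycles * cycle_period_ms(frequency_hz)


low_period = cycle_period_ms(160.0)    # 6.25 ms per cycle
high_period = cycle_period_ms(1200.0)  # about 0.83 ms per cycle

# For any fixed (hypothetical) cycle count, the 160 Hz tone needs
# 1200 / 160 = 7.5 times longer than the 1200 Hz tone.
n_cycles = 4
ratio = time_for_cycles_ms(160.0, n_cycles) / time_for_cycles_ms(1200.0, n_cycles)
```

The ratio of 7.5 is independent of the chosen cycle count, which is why the argument does not depend on knowing the exact number of cycles the auditory system integrates.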

An alternative explanation for the latency difference in the ACC evoked by frequency changes at 160 Hz and 1200 Hz may be related to the different cues perceived by the participants at these two base frequencies. At the CI speech processing stage, the acoustic signal is separated based on its frequency components by a number of contiguous band-pass filters before further processing. In Cochlear devices, the lower cutoff of the first frequency band is above 160 Hz, and therefore 160 Hz falls on the filter slope of the first band. The detection of a frequency change from 160 Hz thus relies on the different intensities in the CI output and on temporal rate cues (different intensities result in different neural activation patterns and neural timing; Eggermont, 2001). On the other hand, 1200 Hz is assigned to a middle electrode, and thus the frequency change from 1200 Hz would be perceived mainly based on the electrode place cue (Pretorius & Hanekom, 2008). It is possible that the cue for frequency change detection at 1200 Hz is more salient than the cue at 160 Hz, resulting in a larger ACC amplitude and a shorter peak latency (Fig. 2 and Table 2).

5. LIMITATIONS AND FUTURE STUDIES

One limitation of this study is that the participants had heterogeneous demographic features. Specifically, bilateral, bimodal, and unilateral CI users were included. Future studies will recruit patients of each category separately. Moreover, because the position of the reference frequency relative to the frequency response of the filters in the CI users’ clinical map would affect the frequency discrimination threshold (Pretorius & Hanekom, 2008), future studies will explore which frequency position results in the best behavioral-neurophysiological relationships.

6. CONCLUSION

This study found that the onset CAEP and the ACC differ in their features, indicating different neural substrates for coding acoustic onset and frequency changes. The ACC measures are correlated with behavioral frequency change detection in CI users. The ACC is also related to the clinically collected CNC scores of the CI users. The ACC can be used as an objective tool for assessing frequency discrimination and predicting speech perception performance.

7. ACKNOWLEDGMENTS

We would like to thank all participants for their participation in this research. This research was partially supported by the University Research Council (URC) at the University of Cincinnati, the Center for Clinical and Translational Science and Training (CCTST), and the National Institutes of Health (NIH 1R15DC011004-01). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

List of Abbreviations

- ACC: Acoustic Change Complex

- CAEP: Cortical Auditory Evoked Potential

- CI: Cochlear Implant

- CNC: Consonant-Nucleus-Consonant

- EEG: Electroencephalography

- ERP: Event-Related Potential

- FCDT: Frequency Change Detection Threshold

- ICA: Independent Component Analysis

Footnotes

DISCLOSURE STATEMENT

RS and LH received research and travel funding from Cochlear Ltd. The other authors report no conflict of interest.

REFERENCES

- Anderson CA, Lazard DS, & Hartley DEH (2017). Plasticity in bilateral superior temporal cortex: Effects of deafness and cochlear implantation on auditory and visual speech processing. Hear Res, 343, 138–149. 10.1016/j.heares.2016.07.013

- Blamey PJ, Dowell RC, Clark GM, & Seligman PM (1987). Acoustic parameters measured by a formant-estimating speech processor for a multiple-channel cochlear implant. The Journal of the Acoustical Society of America, 82(1), 38–47. 10.1121/1.395542

- Brown CJ, Etler C, He S, O'Brien S, Erenberg S, Kim J-R, … Abbas PJ (2008). The Electrically Evoked Auditory Change Complex: Preliminary Results from Nucleus Cochlear Implant Users. Ear & Hearing, 29(5), 704–717. 10.1097/AUD.0b013e31817a98af

- Brown CJ, Jeon EK, Chiou L-K, Kirby B, Karsten SA, Turner CW, & Abbas PJ (2015). Cortical Auditory Evoked Potentials Recorded From Nucleus Hybrid Cochlear Implant Users. Ear and Hearing, 36(6), 723–732. 10.1097/AUD.0000000000000206

- Budd TW, Barry RJ, Gordon E, Rennie C, & Michie PT (1998). Decrement of the N1 auditory event-related potential with stimulus repetition: Habituation vs. refractoriness. International Journal of Psychophysiology, 31(1), 51–68. 10.1016/S0167-8760(98)00040-3

- Cullington HE, & Zeng ZF-G (2011). Comparison of bimodal and bilateral cochlear implant users on speech recognition with competing talker, music perception, affective prosody discrimination and talker identification. Ear and Hearing Journal, 32(1), 16–30. 10.1097/AUD.0b013e3181edfbd2

- Delorme A, & Makeig S (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21. 10.1016/j.jneumeth.2003.10.009

- Dimitrijevic A, Michalewski HJ, Zeng FG, Pratt H, & Starr A (2008). Frequency changes in a continuous tone: Auditory cortical potentials. Clinical Neurophysiology, 119(9), 2111–2124. 10.1016/j.clinph.2008.06.002

- Eggermont JJ (2001). Between sound and perception: Reviewing the search for a neural code. Hearing Research, 157(1–2), 1–42.

- Finley CC, Skinner MW, Holden TA, Holden LK, Whiting BR, Chole RA, … Hullar TE (2008). Role of electrode placement as a contributor to variability in cochlear implant outcomes. Otology and Neurotology, 29(7), 920–928. 10.1097/MAO.0b013e318184f492

- Firszt JB, Chambers RD, & Kraus N (2002). Neurophysiology of cochlear implant users II: comparison among speech perception, dynamic range, and physiological measures. Ear and Hearing, 23(6), 516–531. 10.1097/01.AUD.0000042154.70495.DE

- Francart T, van Wieringen A, & Wouters J (2008). APEX 3: a multi-purpose test platform for auditory psychophysical experiments. Journal of Neuroscience Methods, 172(2), 283–293. 10.1016/j.jneumeth.2008.04.020

- Friesen LM, & Tremblay KL (2006). Acoustic change complexes recorded in adult cochlear implant listeners. Ear and Hearing, 27(6), 678–685. 10.1097/01.aud.0000240620.63453.c3

- Fu Q-J, & Nogaki G (2005). Noise susceptibility of cochlear implant users: The role of spectral resolution and smearing. JARO - Journal of the Association for Research in Otolaryngology, 6(1), 19–27. 10.1007/s10162-004-5024-3

- Gfeller K, Jiang D, Oleson J, Driscoll V, Olszewski C, Knutson JF, … Gantz B (2012). The Effects of Musical and Linguistic Components in Recognition of Real-World Musical Excerpts by Cochlear Implant Recipients and Normal-Hearing Adults. Journal of Music Theory, 49(1), 68–101. 10.1038/nmeth.2250.Digestion

- Gfeller K, Oleson J, Knutson JF, Breheny P, Driscoll V, & Olszewski C (2008). Multivariate Predictors of Music Perception and Appraisal by Adult Cochlear Implant Users. Journal of the American Academy of Audiology, 19(2), 120–134. 10.3766/jaaa.19.2.3

- Gfeller K, Turner C, Mehr M, Woodworth G, Fearn R, Knutson JF, … Stordah J (2002). Recognition of familiar melodies by adult cochlear implant recipients and normal-hearing adults. Cochlear Implants International, 3(1), 29–53. 10.1179/cim.2002.3.1.29

- Gfeller K, Turner C, Oleson J, Zhang X, Gantz B, Froman R, & Olszewski C (2007). Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear and Hearing, 28(3), 412–23. 10.1097/AUD.0b013e3180479318

- Gifford RH, Hedley-Williams A, & Spahr AJ (2014). Clinical assessment of spectral modulation detection for adult cochlear implant recipients: A non-language based measure of performance outcomes. International Journal of Audiology, 53(3), 159–164. 10.3109/14992027.2013.851800

- Goldsworthy RL (2015). Correlations between pitch and phoneme perception in cochlear implant users and their normal hearing peers. Journal of the Association for Research in Otolaryngology : JARO, 16(6), 797–809.

- Han J-H, Zhang F, Kadis DS, Houston LM, Samy RN, Smith ML, & Dimitrijevic A (2016). Auditory cortical activity to different voice onset times in cochlear implant users. Clinical Neurophysiology, 127(2), 1603–17. 10.1016/j.clinph.2015.10.049

- Harris KC, Mills JH, & Dubno JR (2007). Electrophysiologic correlates of intensity discrimination in cortical evoked potentials of younger and older adults. Hearing Research, 228(1–2), 58–68. 10.1016/j.heares.2007.01.021

- He S, Grose JH, Teagle HFB, Woodard J, Park LR, Hatch DR, … Buchman CA (2015). Acoustically Evoked Auditory Change Complex in Children With Auditory Neuropathy Spectrum Disorder: A Potential Objective Tool for Identifying Cochlear Implant Candidates. Ear and Hearing, 36(3), 289–301. 10.1097/AUD.0000000000000119

- Holden LK, Finley CC, Firszt JB, Holden TA, Brenner C, Potts LG, … Skinner MW (2013). Factors Affecting Open-Set Word Recognition in Adults With Cochlear Implants. Ear and Hearing, 34(3), 342–360. 10.1097/AUD.0b013e3182741aa7

- Hoppe U, Wohlberedt T, Danilkina G, & Hessel H (2010). Acoustic change complex in cochlear implant subjects in comparison with psychoacoustic measures. Cochlear Implants International, 11 Suppl 1(January 2017), 426–430. https://doi.org/10.1179/146701010X12671177204101

- Hoppe U, Rosanowski F, Iro H, & Eysholdt U (2001). Loudness perception and late auditory evoked potentials in adult cochlear implant users. Scand Audiol, 30(2), 119–125. Retrieved from http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=11409789

- Hung T-V, Evelyne V, Arnaud N, Jeanne G, & Lionel C (2010). Plasticity of tonotopic maps in humans: influence of hearing loss, hearing aids and cochlear implants. Acta Oto-Laryngologica, 130(3), 333–337. 10.3109/00016480903258024

- Itoh K, Okumiya-Kanke Y, Nakayama Y, Kwee IL, & Nakada T (2012). Effects of musical training on the early auditory cortical representation of pitch transitions as indexed by change-N1. European Journal of Neuroscience, 36(11), 3580–3592. 10.1111/j.1460-9568.2012.08278.x

- Kang R, Nimmons GL, Drennan W, Longnion J, Ruffin C, Nie K, … Rubinstein J (2009). Development and Validation of the University of Washington Clinical Assessment of Music Perception Test. Ear and Hearing, 30(4), 411–418. 10.1097/AUD.0b013e3181a61bc0

- Kelly AS, Purdy SC, & Thorne PR (2005). Electrophysiological and speech perception measures of auditory processing in experienced adult cochlear implant users. Clinical Neurophysiology, 116(6), 1235–1246. 10.1016/j.clinph.2005.02.011

- Kenway B, Tam YC, Vanat Z, Harris F, Gray R, Birchall J, … Axon P (2015). Pitch Discrimination: An Independent Factor in Cochlear Implant Performance Outcomes. Otology & Neurotology, 36(9), 1472–9. 10.1097/MAO.0000000000000845

- Kim J-R, Brown CJ, Abbas PJ, Etler CP, & O'Brien S (2009). The Effect of Changes in Stimulus Level on Electrically Evoked Cortical Auditory Potentials. Ear and Hearing, 30(3), 320–329. 10.1097/AUD.0b013e31819c42b7

- Lazard DS, Giraud AL, Gnansia D, Meyer B, & Sterkers O (2012). Understanding the deafened brain: Implications for cochlear implant rehabilitation. European Annals of Otorhinolaryngology, Head and Neck Diseases. 10.1016/j.anorl.2011.06.001

- Leung J, Wang N-Y, Yeagle JD, Chinnici J, Bowditch S, Francis HW, & Niparko JK (2005). Predictive models for cochlear implantation in elderly candidates. Archives of Otolaryngology--Head & Neck Surgery, 131(12), 1049–1054. 10.1016/S1041-892X(07)70049-5

- Limb CJ, & Roy AT (2014). Technological, biological, and acoustical constraints to music perception in cochlear implant users. Hearing Research, 308, 13–26. 10.1016/j.heares.2013.04.009

- Litvak LM, Spahr AJ, Saoji AA, & Fridman GY (2007). Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners. The Journal of the Acoustical Society of America, 122(2), 982–991. 10.1121/1.2749413

- Martin BA (2007). Can the acoustic change complex be recorded in an individual with a cochlear implant? Separating neural responses from cochlear implant artifact. Journal of the American Academy of Audiology, 18(2), 126–140.

- Martin BA, & Boothroyd A (1999). Cortical, auditory, event-related potentials in response to periodic and aperiodic stimuli with the same spectral envelope. Ear and Hearing, 20(1), 33–44. 10.1097/00003446-199902000-00004

- Näätänen R, & Picton T (1987). The N1 Wave of the Human Electric and Magnetic Response to Sound: A Review and an Analysis of the Component Structure. Psychophysiology, 24(4), 375–425. 10.1111/j.1469-8986.1987.tb00311.x

- Oxenham AJ (2008). Pitch perception and auditory stream segregation: implications for hearing loss and cochlear implants. Trends in Amplification, 12(4), 316–331.

- Oxenham AJ, Bernstein JGW, & Penagos H (2004). Correct tonotopic representation is necessary for complex pitch perception. Proceedings of the National Academy of Sciences of the United States of America, 101(5), 1421–1425. 10.1073/pnas.0306958101

- Pakarinen S, Takegata R, Rinne T, Huotilainen M, & Näätänen R (2007). Measurement of extensive auditory discrimination profiles using the mismatch negativity (MMN) of the auditory event-related potential (ERP). Clinical Neurophysiology, 118(1), 177–185. 10.1016/j.clinph.2006.09.001

- Pretorius LL, & Hanekom JJ (2008). Free field frequency discrimination abilities of cochlear implant users. Hearing Research, 244(1–2), 77–84. 10.1016/j.heares.2008.07.005

- Sandmann P, Dillier N, Eichele T, Meyer M, Kegel A, Pascual-Marqui RD, … Debener S (2012). Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. Brain, 135(2), 555–568. 10.1093/brain/awr329

- Sandmann P, Kegel A, Eichele T, Dillier N, Lai W, Bendixen A, … Meyer M (2010). Neurophysiological evidence of impaired musical sound perception in cochlear-implant users. Clinical Neurophysiology, 121(12), 2070–2082. 10.1016/j.clinph.2010.04.032

- Scheperle RA, & Abbas PJ (2015). Relationships Among Peripheral and Central Electrophysiological Measures of Spatial and Spectral Selectivity and Speech Perception in Cochlear Implant Users. Ear and Hearing, 36(4), 441–453. 10.1097/AUD.0000000000000144

- Sharma A, & Dorman MF (2006). Central Auditory Development in Children with Cochlear Implants: Clinical Implications. Adv Otorhinolaryngol, 64, 66–88. 10.1159/000094646

- Sheft S, Min-Yu C, & Shafiro V (2015). Discrimination of Stochastic Frequency Modulation by Cochlear Implant Users. Journal of the American Academy of Audiology, 26, 572–581. 10.3766/jaaa.14067

- Singh S, Kong YY, & Zeng FG (2009). Cochlear implant melody recognition as a function of melody frequency range, harmonicity, and number of electrodes. Ear and Hearing, 30(2), 160–168. 10.1097/AUD.0b013e31819342b9

- Small SA, & Werker JF (2012). Does the ACC Have Potential as an Index of Early Speech-Discrimination Ability? A Preliminary Study in 4-Month-Old Infants With Normal Hearing. Ear and Hearing, 33(6), e59–e69. 10.1097/AUD.0b013e31825f29be

- Stickney GS, Assmann PF, Chang J, & Zeng FG (2007). Effects of cochlear implant processing and fundamental frequency on the intelligibility of competing sentences. The Journal of the Acoustical Society of America, 122(2), 1069–1078.

- Tremblay K, Kraus N, Carrell TD, & McGee T (1997). Central auditory system plasticity: Generalization to novel stimuli following listening training. Journal of the Acoustical Society of America, 102(6), 3762–3773. 10.1121/1.420139

- Tremblay KL, Friesen L, Martin BA, & Wright R (2003). Test-Retest Reliability of Cortical Evoked Potentials Using Naturally Produced Speech Sounds. Ear and Hearing, 24(3), 225–232. 10.1097/01.AUD.0000069229.84883.03

- Turgeon C, Champoux F, Lepore F, & Ellemberg D (2015). Deficits in auditory frequency discrimination and speech recognition in cochlear implant users. Cochlear Implants International, 16(2), 88–94. 10.1179/1754762814Y.0000000091

- Winn MB, Won JH, & Moon IJ (2016). Assessment of Spectral and Temporal Resolution in Cochlear Implant Users Using Psychoacoustic Discrimination and Speech Cue Categorization. Ear and Hearing, 1. 10.1097/AUD.0000000000000328

- Won JH, Humphrey EL, Yeager KR, Martinez AA, Robinson CH, Mills KE, … Woo J (2014). Relationship among the physiologic channel interactions, spectral-ripple discrimination, and vowel identification in cochlear implant users. The Journal of the Acoustical Society of America, 136(5), 2714. 10.1121/1.4895702

- Won JH, Moon IJ, Jin S, Park H, Woo J, Cho YS, … Hong SH (2015). Spectrotemporal modulation detection and speech perception by cochlear implant users. PLoS ONE, 10(10), 1–24. 10.1371/journal.pone.0140920

- Zeng FG, Tang Q, & Lu T (2014). Abnormal pitch perception produced by cochlear implant stimulation. PLoS ONE, 9(2). 10.1371/journal.pone.0088662

- Zhang F, Samy RN, Anderson JM, & Houston L (2009). Recovery function of the late auditory evoked potential in cochlear implant users and normal-hearing listeners. Journal of the American Academy of Audiology, 20(7), 397–408. 10.3766/jaaa.20.7.2