Abstract

In 2007 the US National Research Council (NRC) published a vision for toxicity testing in the 21st century that emphasized the use of in vitro high throughput screening (HTS) methods and predictive models as an alternative to in vivo animal testing. Here we examine the state of the science of HTS, the progress that has been made in implementing and expanding on the NRC vision, and the challenges to implementation that remain. Overall, significant progress has been made with regard to the availability of HTS data, the aggregation of chemical property and toxicity information into online databases, and the development of various models and frameworks to support extrapolation of HTS data. However, HTS data and associated predictive models have not yet been widely applied in risk assessment. Major barriers include (1) the disconnect between the endpoints measured in HTS assays and the assessment endpoints considered in risk assessments, and (2) the contrast between the rapid pace at which new tools and models are evolving and the slow pace at which regulatory structures change. Nonetheless, there are opportunities for environmental scientists and policy makers alike to take an impactful role in the ongoing development and implementation of the NRC vision. Six specific areas for scientific, coordination, and/or policy engagement are identified.

Keywords: Computational toxicology, adverse outcome pathway, effects-based monitoring, in vitro to in vivo extrapolation, risk assessment, mixtures

Environmental risk assessment of chemicals

Government authorities, industries, and the public they serve have a shared interest in protecting both human health and ecosystems from adverse effects of exposure to chemicals entering the environment as a result of human activities. The framework for environmental risk assessment (US EPA Risk Assessment Forum 1998; 2014) provides a systematic process for evaluating the likelihood that adverse effects may occur as the result of exposure to chemicals in the environment. The primary elements of that process include (1) clear formulation of a problem statement in light of an appropriate protection goal or management decision; (2) assessment of the probable routes, severity, duration, and frequency of exposure; (3) assessment of the adverse or toxic effects that may result when exposure occurs; and (4) a final risk characterization that brings the first three elements together. Risk assessments are conducted in a wide range of regulatory and commercial contexts, but may be broadly categorized as prospective (i.e., assessing risks before any release or exposure takes place) or retrospective (i.e., evaluating risks associated with exposures that have already occurred and/or are still occurring). Regardless of context, high-quality risk assessments depend on the availability of data and/or models for estimating exposure and probable effects, and a lack of appropriate data and models can be a significant impediment to environmental safety decision-making. The lack of data available for evaluating the safety of many chemicals in commerce was a significant driver for considering new approaches (NRC, 2007; Judson et al. 2009).

A vision for modernizing chemical safety evaluation, ca. 2007

In a landmark report published in 2007, the US National Research Council (NRC) identified the lack of toxicity data for most chemicals in commerce as a major barrier to efficient and cost-effective risk assessment (NRC, 2007; Judson et al. 2009). The authors laid out a vision that emphasized the use of predictive, high throughput screening (HTS) assays to characterize the ability of chemicals to perturb biological pathways critical to health. High throughput assays were defined as those that can be “automated and rapidly performed to measure the effect of substances on a biological process of interest” (NRC, 2007). Broadly speaking, this refers to assays that can be run in 96-, 384-, or 1536-well plate formats. Such formats allow hundreds or thousands of chemicals to be screened over a wide concentration range, and also facilitate the use of many different assays encompassing biological endpoints from different organs and systems. The NRC envisioned that data from these HTS assays would be complemented by two further kinds of information: chemical-specific information on physicochemical properties, important functional groups, key structural features, and commercial production and use(s), to identify exposure potential and critical aspects of uptake, distribution, metabolism, elimination, and/or accumulation; and a fundamental understanding of biological pathways that could support quantitative extrapolation modeling.
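The plate arithmetic behind “hundreds or thousands of chemicals over a wide concentration range” can be sketched as below. This is an illustrative calculation only: the 8-point, 3-fold dilution series starting at 100 µM and the numbers of wells reserved for controls are hypothetical choices, not the layout of any particular screening program.

```python
import math

def dilution_series(top_conc_um: float, n_points: int, fold: float) -> list[float]:
    """Concentrations (µM) starting at top_conc_um, each step divided by `fold`."""
    return [top_conc_um / fold ** i for i in range(n_points)]

def chemicals_per_plate(wells: int, n_points: int, replicates: int = 1, control_wells: int = 0) -> int:
    """How many chemicals fit on one plate if each needs n_points * replicates wells."""
    return (wells - control_wells) // (n_points * replicates)

# Hypothetical 8-point, 3-fold series from 100 µM down to about 0.046 µM.
series = dilution_series(100.0, n_points=8, fold=3.0)
span = math.log10(series[0] / series[-1])  # orders of magnitude covered (about 3.3)

# Hypothetical layout: 16 control wells on a 96-well plate, 32 on the denser formats.
for wells, controls in ((96, 16), (384, 32), (1536, 32)):
    print(wells, chemicals_per_plate(wells, n_points=8, control_wells=controls))
# prints 96 10, 384 44, 1536 188 (one pair per line)
```

Screening thousands of chemicals therefore means tens to hundreds of plates per assay, which is why automation is central to the NRC definition.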
The NRC identified critical needs for implementation of their overall vision, which included: (1) development of appropriate suites of HTS assays, (2) availability of targeted whole organism data to complement and provide an interpretive context for the HTS results, (3) computational extrapolation models that could predict which exposures may result in adverse changes, (4) infrastructure to support the basic and applied research needed to develop the assays and models, (5) validation of the assays and data for incorporation into guidance regarding their interpretation and use, and (6) evidence that the new approaches are adequately predictive of adverse outcomes (NRC, 2007). While that vision focused entirely on human health risk assessment, and primarily on prospective chemical assessments, it was expected that many aspects of it could translate to ecological and retrospective risk assessments as well (Villeneuve and Garcia-Reyero, 2011). It was also understood that realizing the vision would require the development of biologically based extrapolation tools or models that allow cell- or tissue-level data to be applied to outcomes for individuals or populations (Ankley et al. 2010).

High throughput screening, state of the science, ca. 2018

The science of HTS has come a long way since publication of the NRC report in 2007. In April 2018, over 100 international participants from academia, government, industry, and other sectors came together to take stock of the progress made in HTS science and its application in environmental risk assessment. Participants also identified the challenges that remain in implementing the NRC’s vision and in broadening its scope to encompass the full range of environmental risk assessment contexts. Building from the critical needs for implementation identified by the NRC, we detail the state of the science and ongoing challenges in applying HTS in risk assessment. While the topics, activities, and examples described below are by no means a comprehensive account of everything happening in the field, they provide a general view of how far the science has come and how far it still needs to go for HTS to play a routine role in environmental risk assessment.

HTS bioassays and data

A first major need for implementation of an HTS assay-based paradigm was the availability of the assays themselves and associated data. The ToxCast™ and Tox21 initiatives (Kavlock et al. 2012; Tice et al. 2013) were landmark achievements in that regard. Together, the ToxCast and Tox21 datasets represent the largest publicly accessible libraries of HTS data currently available. Most of the assays used by ToxCast were performed under contract by commercial vendors (Kavlock et al. 2012), allowing a wide range of biological endpoints (hundreds) to be interrogated relatively rapidly using assays that had already been developed. Since 2007, over 4700 chemicals have been screened in at least a portion of the ToxCast assays (https://comptox.epa.gov/dashboard/chemical_lists/toxcast; Richard et al. 2016). The Tox21 program (Tice et al. 2013) has used the infrastructure of the National Toxicology Program to screen over 8900 chemicals (https://comptox.epa.gov/dashboard/chemical_lists/tox21sl), although in a smaller number of assays (tens) than ToxCast. All data from these pioneering HTS programs are accessible through EPA’s CompTox Chemistry Dashboard (https://comptox.epa.gov/dashboard/; Williams et al. 2017). In addition to these large-scale efforts, targeted HTS assay development, followed by screening of the large chemical libraries available through the ToxCast and Tox21 programs, is being used to gradually expand the biological pathway space covered by the assays (e.g., Paul-Friedman 2016). However, with the exception of the incorporation of zebrafish embryo toxicity assays (e.g., Reif et al. 2016) and some research-scale attempts to incorporate cross-species testing platforms (e.g., Arini et al. 2017), assay and data coverage remains strongly mammalian-biased, reflecting the human health-centric nature of the ToxCast and Tox21 efforts to date.

Complementary chemical property characterization

Along with the rapid expansion and availability of HTS data sets, there has been a complementary explosion in the resources available for chemical property characterization. Modern computing power, together with widely available high-speed internet, has brought chemical property information for hundreds of thousands of structures to our computers and even our mobile phones. For example, the US EPA Chemistry Dashboard (https://comptox.epa.gov/dashboard/) attempts not only to link together many external databases and prediction models, but also to provide as much model documentation as possible via direct hyperlinks, thereby offering one-stop access to a wide range of chemical-specific information. The widespread availability of chemical structure and property information, as well as of protein crystal structures, has made construction of in silico 3D docking models nearly routine. Likewise, the combination of chemical property information and HTS-based biological effects data now provides, in many cases, libraries of biologically active and inactive chemical structures large enough to employ machine learning approaches that identify structural alerts and define chemical categories likely to interact with specific biological targets and/or pathways. These resources and tools are developing so rapidly that it is extremely challenging for scientists and risk assessors to keep pace with new structure-based prediction models and databases. Consequently, deficiencies in the documentation and validation of structure-based prediction models, and in the training and outreach needed to build confidence in their fit-for-purpose use, are perhaps the greatest current barrier to application. Arguably, as with other types of computational models, the scientific community needs to develop a set of rules and best practices that ensure the quality and applicability of the models, decrease uncertainty, and increase acceptance.
For instance, the Interagency Coordinating Committee on the Validation of Alternative Methods (ICCVAM) sponsored a global project to develop in silico models that predict specific acute oral systemic toxicity endpoints (https://ntp.niehs.nih.gov/go/tox-models). More than 130 models were submitted, and at a workshop (https://ntp.niehs.nih.gov/go/atwksp-2018) participants discussed developing a consensus model as well as next steps to encourage appropriate use of the models in a regulatory context.
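One of the simplest structure-based approaches of the kind described above is a k-nearest-neighbor read-across on binary structural fingerprints scored with Tanimoto similarity. The sketch below is a minimal illustration under stated assumptions: the fingerprints are hypothetical sets of bit indices (in practice they would come from a cheminformatics toolkit) and the activity labels are hypothetical HTS hit calls. The mean neighbor similarity is returned as a crude applicability-domain indicator, in the spirit of documenting when a prediction is fit for purpose.

```python
from collections import Counter

def tanimoto(a: frozenset, b: frozenset) -> float:
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def knn_read_across(query: frozenset, training: list, k: int = 3):
    """Predict activity of `query` by majority vote of its k most similar training structures.

    training: list of (fingerprint_bits, is_active) pairs.
    Returns (predicted_active, mean_similarity); a low mean similarity suggests the query
    lies outside the model's applicability domain. Ties are resolved as inactive."""
    neighbors = sorted(training, key=lambda item: tanimoto(query, item[0]), reverse=True)[:k]
    votes = Counter(is_active for _, is_active in neighbors)
    predicted_active = votes[True] > votes[False]
    mean_similarity = sum(tanimoto(query, bits) for bits, _ in neighbors) / len(neighbors)
    return predicted_active, mean_similarity

# Hypothetical fingerprints (sets of bit indices) labeled active/inactive in one HTS assay.
TRAINING = [
    (frozenset({1, 2, 3, 4}), True),
    (frozenset({1, 2, 3, 5}), True),
    (frozenset({1, 2, 6}), True),
    (frozenset({7, 8, 9}), False),
    (frozenset({7, 8, 10}), False),
]
print(knn_read_across(frozenset({1, 2, 3}), TRAINING))  # (True, 0.666...)
```

Real models would add validated descriptors, cross-validation, and documented applicability domains; the point here is only the similarity-then-vote logic.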

Accessing whole organism data to complement HTS results

The aggregation of targeted, whole organism data is another area where significant progress toward implementation has been made. For example, US EPA’s CompTox Chemistry Dashboard (https://comptox.epa.gov/dashboard/) aggregates toxicity data from a wide variety of sources and presents them in viewable and downloadable tables linked to chemical structures. Likewise, the US EPA ECOTOX knowledgebase has been modernized to greatly improve search capabilities as well as data visualization and interpretation (https://cfpub.epa.gov/ecotox-new/). The ECOTOX knowledgebase has also been dynamically linked to the Chemistry Dashboard so that both ecological and human health related effects data, where available, can be readily accessed. Computational tools for searching the literature and for automated and semi-automated extraction of data and information from various online sources are developing so rapidly that it is difficult to stay abreast of the strengths and critical limitations of different approaches. Consequently, for computer-assisted literature and data extraction, documenting the problem statement, search strategy, retrieval and extraction approach, and subsequent clean-up and formatting of the extracted data has become critical for transparent application of literature-derived data in risk assessments (Vandenberg et al. 2016).

Extrapolation models

Ultimately, the use of HTS data in environmental risk assessment relies on a range of different extrapolation models (Figure 1). In vitro to in vivo extrapolation (IVIVE) models are needed to translate relevant in vitro concentrations into equivalent blood or tissue doses in exposed organisms. Biologically based extrapolation models are needed to understand how pathway perturbations, typically measured in a cell or even a cell-free system, may translate into higher-level impacts in an intact organism. The integrated impacts on multiple physiological processes in an intact organism, such as growth, maintenance, reproduction, and resilience to environmental change, need to be translated into estimates of individual fitness and, at least in the case of ecological risk assessment, into relevant effects on populations or ecosystem services. Finally, both human and ecological risk assessments often involve cross-species extrapolation from the model systems represented in toxicity tests or HTS assays to the species of concern for a given assessment. Consequently, many types of extrapolation models are needed to use HTS data effectively in risk assessment.

Figure 1:

Integration of extrapolation models and frameworks required for more effective use of high throughput screening data in environmental risk assessment.

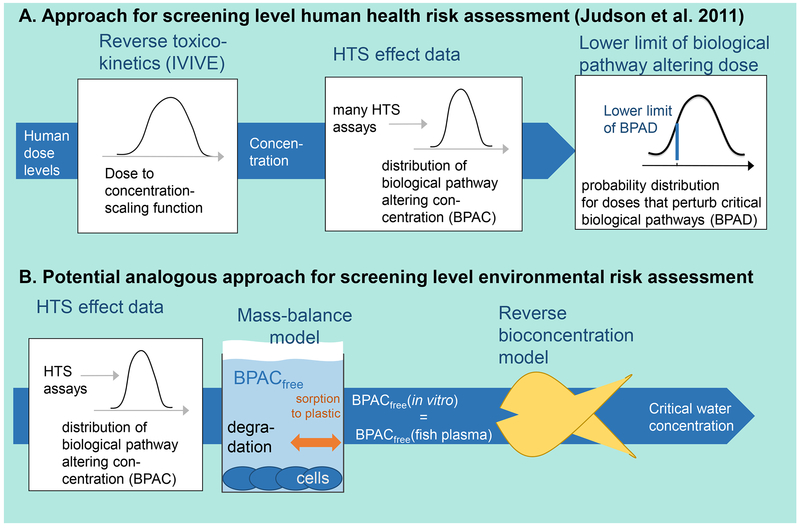

Considerable progress has been made in the development of extrapolation models. For example, several tools and frameworks have been developed to support IVIVE of HTS data for health risk assessment (Judson et al. 2014), and some generalized models for IVIVE/reverse toxicokinetics have been proposed (Wetmore et al. 2015). These allow at least screening-level estimation of the human doses that would produce plasma concentrations equivalent to a nominal in vitro effect concentration. Likewise, dose-response data for many different endpoints for a given chemical can be combined into a distribution of “biological pathway activating concentrations” (BPAC), which can be converted into “biological pathway activating doses” (BPAD) for diverse human populations by reverse toxicokinetic modeling (Judson et al. 2011). From the resulting BPAD distribution, a lower confidence limit (BPADL) can be selected as a conservative exposure limit (Figure 2A).
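The reverse-dosimetry step can be sketched as follows, assuming linear kinetics so that the oral equivalent dose (mg/kg/day) is the in vitro activity concentration (µM) divided by the steady-state plasma concentration produced by 1 mg/kg/day (Css, µM). The AC50 values, the lognormal population distribution of Css, and the use of the 5th percentile as a stand-in for BPADL are hypothetical simplifications, not the exact procedure of Judson et al. (2011).

```python
import math
import random
import statistics

def oral_equivalent_dose(activity_conc_um: float, css_um_per_mg_kg_day: float) -> float:
    """Reverse dosimetry assuming linear kinetics: the daily dose (mg/kg/day) whose
    steady-state plasma concentration equals the in vitro activity concentration."""
    return activity_conc_um / css_um_per_mg_kg_day

def bpad_distribution(ac50s_um, css_median, css_gsd, n=10_000, seed=1):
    """Monte Carlo distribution of pathway-activating doses.

    ac50s_um: AC50s (µM) for one chemical across assays (the BPAC distribution).
    css_median, css_gsd: median and geometric SD of a hypothetical lognormal
    population distribution of Css (µM per mg/kg/day)."""
    rng = random.Random(seed)
    mu, sigma = math.log(css_median), math.log(css_gsd)
    doses = []
    for _ in range(n):
        ac50 = rng.choice(ac50s_um)          # sample a pathway-activating concentration
        css = rng.lognormvariate(mu, sigma)  # sample an individual's steady-state plasma level
        doses.append(oral_equivalent_dose(ac50, css))
    return doses

# Hypothetical inputs for a single chemical.
ac50s = [1.5, 3.0, 8.0, 20.0, 45.0]  # µM
doses = bpad_distribution(ac50s, css_median=1.2, css_gsd=2.0)
bpad_l = statistics.quantiles(doses, n=20)[0]  # 5th percentile as a conservative BPADL stand-in
print(f"median BPAD = {statistics.median(doses):.2f} mg/kg/day; BPADL ~ {bpad_l:.2f} mg/kg/day")
```

Sensitive individuals (high Css) and sensitive pathways (low AC50) both push doses toward the lower tail, which is why a lower percentile serves as the conservative limit.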

Figure 2:

Applications of HTS data in (A) screening potential human health risk(s) (Judson et al. 2011) and (B) screening ecological risk to fish as an example.

This BPAD model is based on nominal activity concentrations in in vitro assays. However, generalizable mass balance/partitioning models for predicting free versus bound chemical fractions in vitro (Armitage et al. 2014; Fischer et al. 2017) are also available. These mass balance/partitioning models can more accurately estimate the fraction of the total administered mass that is available to act on the cells or organisms in an HTS assay. To be widely employed, however, they require assay-specific parameters, such as the lipid and protein content of the cells, the volume and composition of the microplate wells, the properties of the carrier solvent(s) used, and liposome-water and protein-water partition coefficients, to be measured and made available (e.g., Fischer et al. 2017). More widespread reporting of in vitro activity/effect concentrations as freely dissolved concentrations would allow HTS data to be translated more directly into freely dissolved concentrations in the blood of diverse biological species.
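The core of an equilibrium mass balance model can be sketched in a few lines. This is a deliberately simplified sketch, assuming instantaneous equilibrium between medium water, protein, and lipid only (no sorption to plastic, headspace losses, or uptake kinetics), and using hypothetical well contents and partition coefficients; published models such as those cited above include more compartments.

```python
def free_fraction(v_water_l: float, m_protein_kg: float, m_lipid_kg: float,
                  k_protein_water: float, k_lipid_water: float) -> float:
    """Freely dissolved fraction at equilibrium in a single well.

    Mass balance: n_total = C_free * (V_w + K_pw * m_protein + K_lw * m_lipid),
    with partition coefficients in L/kg and sorbent masses in kg, so the free
    fraction is the water volume divided by the total sorptive capacity (L)."""
    capacity_l = v_water_l + k_protein_water * m_protein_kg + k_lipid_water * m_lipid_kg
    return v_water_l / capacity_l

# Hypothetical well: 40 µL medium with serum protein and lipid; K values for a
# hypothetical moderately hydrophobic chemical.
WELL = dict(v_water_l=40e-6, m_protein_kg=1.5e-7, m_lipid_kg=1.0e-8,
            k_protein_water=1e3, k_lipid_water=1e4)

f_free = free_fraction(**WELL)  # about 0.14
nominal_um = 10.0               # nominal concentration = total mass / medium volume
free_um = nominal_um * f_free   # about 1.4 µM freely dissolved
```

Even in this toy case most of the chemical is bound, so reporting only the nominal concentration would overstate the concentration available to the cells roughly sevenfold.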

With appropriate assay-specific parameters and in vitro toxicokinetic models available, an approach similar to the BPAD approach used for human health could be applied in ecological risk assessment as well. Specifically, HTS activity data expressed as freely dissolved concentrations in an assay well could be equated to free concentrations in fish plasma and then, using reverse bioconcentration models, converted to critical water concentrations (Figure 2B) that could be used for criteria setting. To support this process, parameter estimation tools and databases of physiological parameter values for different species are being assembled to facilitate the use of physiologically based toxicokinetic models for IVIVE (Bessems et al. 2014). However, more work remains. Bell and collaborators (2018) proposed that the suitability of currently available IVIVE models for regulatory use will vary depending on whether they are used for prioritization or hazard assessment, and that transparency and reporting standards are critical during implementation.
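The chain from a free in vitro activity concentration to a critical water concentration can be sketched as below. This minimal sketch assumes (1) the free in vitro concentration equals the free concentration in fish plasma, (2) a known unbound fraction in plasma, and (3) a steady-state total plasma-to-water concentration ratio standing in for a reverse bioconcentration model. All three inputs are hypothetical values; a real application would derive them from fish toxicokinetic models.

```python
def critical_water_conc_um(free_activity_um: float, fu_plasma: float,
                           plasma_water_ratio: float) -> float:
    """Back-calculate the water concentration expected to yield a given free plasma concentration.

    free_activity_um: freely dissolved in vitro activity concentration (µM), assumed equal
        to the free concentration in fish plasma.
    fu_plasma: fraction of chemical unbound in plasma (hypothetical input).
    plasma_water_ratio: steady-state total plasma / water concentration ratio (L/L),
        a plasma-based bioconcentration factor (hypothetical input)."""
    total_plasma_um = free_activity_um / fu_plasma
    return total_plasma_um / plasma_water_ratio

def um_to_ug_per_l(conc_um: float, mol_weight_g_per_mol: float) -> float:
    """µmol/L multiplied by g/mol gives µg/L."""
    return conc_um * mol_weight_g_per_mol

c_w = critical_water_conc_um(0.5, fu_plasma=0.05, plasma_water_ratio=50.0)  # about 0.2 µM
print(um_to_ug_per_l(c_w, mol_weight_g_per_mol=300.0))  # about 60 µg/L
```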

With regard to extrapolation across levels of biological organization, the adverse outcome pathway (AOP) framework was developed to organize scientifically credible support for extrapolating from pathway perturbations commonly measured in HTS assays to impacts on survival, growth, reproduction, or individual health that are more widely considered relevant to risk assessment (Ankley et al. 2010). Since initial publication of the NRC’s vision for toxicity testing in the 21st century, the AOP framework has evolved from an informal concept into an internationally coordinated program supporting development of a harmonized AOP knowledgebase (aopwiki.org; aopkb.org) and accompanying guidance for AOP documentation and weight of evidence evaluation. Recognizing that real-world chemical exposures are likely to perturb multiple pathways in an organism concurrently, and that those pathways are highly interconnected, researchers are using both AOP networks and dynamic energy budget (DEB) theory to help integrate specific pathway perturbations into overall estimates of organism fitness (Murphy et al. 2018).
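An AOP network is naturally represented as a directed graph whose nodes are key events and whose edges are key event relationships. The sketch below uses illustrative event names loosely based on the commonly discussed fish aromatase-inhibition example; the events and edges are simplified for illustration and are not copied from AOP-Wiki. It enumerates the paths linking HTS-detectable molecular initiating events to an adverse outcome, which shows where pathways converge.

```python
from collections import defaultdict

# Illustrative key event relationships (upstream event, downstream event).
KERS = [
    ("aromatase inhibition", "reduced estradiol synthesis"),
    ("reduced estradiol synthesis", "reduced plasma vitellogenin"),
    ("estrogen receptor antagonism", "reduced plasma vitellogenin"),
    ("reduced plasma vitellogenin", "impaired oocyte development"),
    ("impaired oocyte development", "reduced fecundity"),
    ("reduced fecundity", "population decline"),
]

def build_network(edges):
    """Adjacency list: event -> list of directly downstream events."""
    graph = defaultdict(list)
    for upstream, downstream in edges:
        graph[upstream].append(downstream)
    return graph

def paths_between(graph, start, goal, path=()):
    """All acyclic paths from start to goal (depth-first search)."""
    path = path + (start,)
    if start == goal:
        return [list(path)]
    found = []
    for nxt in graph.get(start, []):
        if nxt not in path:  # skip events already on this path to avoid cycles
            found.extend(paths_between(graph, nxt, goal, path))
    return found

network = build_network(KERS)
for mie in ("aromatase inhibition", "estrogen receptor antagonism"):
    for p in paths_between(network, mie, "population decline"):
        print(" -> ".join(p))
```

Both initiating events converge on reduced vitellogenin, so an HTS hit for either one points to the same downstream key events and adverse outcome.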

Dynamic energy budget theory describes the metabolism of an organism throughout its life cycle; DEB models are multi-compartment dynamical systems models of organism performance (growth, development, reproduction, and mortality) (Kooijman 2010). DEB models are built on assumptions of conservation of energy and elemental matter, and they intersect with physiology through assumptions about homeostasis. The theory is general enough to cover a wide diversity of organisms, including unicellular, multicellular, ectothermic, and endothermic life histories, and it accommodates both autotrophic and heterotrophic energy systems. The theory uses food availability and environmental conditions as forcing functions and can accommodate multiple stressors (Kooijman 2010). Parameterizing DEB models requires time-resolved data for multiple endpoints collected under different temperature and food regimes (Jager et al. 2016). The link to outcomes from high throughput assays will likely make use of damage variables (e.g., quantitative representations of damaged membranes, faulty proteins, or oxidative stress) that can be correlated with, or mechanistically linked to, DEB fluxes (Murphy et al. 2018; Muller et al. 2018). With some directed experimental studies, it may be possible to validate quantitative linkages between the cytotoxicity burst (e.g., Judson et al. 2016), or particular molecular initiating events (MIEs) and key events (KEs), and physiological modes of action within DEB (Murphy et al. 2018).
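How a damage variable could feed into growth can be illustrated with a highly simplified model in the spirit of DEBtox-type approaches; it is not a full DEB model. Scaled damage follows first-order kinetics toward the exposure concentration, damage above a threshold produces a stress factor that reduces assimilation, and length follows von Bertalanffy growth (the form DEB predicts under constant food) toward a correspondingly reduced ultimate length. All parameter values are hypothetical, and effects on the growth rate constant and on reproduction are ignored.

```python
def simulate_length(c_exposure, days=200.0, dt=0.01, k_d=0.5, threshold=1.0, b=0.2,
                    f=1.0, l_max=10.0, r_b=0.1, l_start=1.0):
    """Euler integration of a simplified damage -> stress -> growth model.

    dD/dt = k_d * (c_exposure - D)              scaled damage (concentration units)
    s     = min(1, b * max(0, D - threshold))   stress factor on assimilation
    dL/dt = r_b * (f * (1 - s) * l_max - L)     growth toward a reduced ultimate length
    All parameter values are hypothetical."""
    damage, length = 0.0, l_start
    for _ in range(int(round(days / dt))):
        stress = min(1.0, b * max(0.0, damage - threshold))
        damage += dt * k_d * (c_exposure - damage)
        length += dt * r_b * (f * (1.0 - stress) * l_max - length)
    return length

for conc in (0.0, 0.8, 3.0):
    print(conc, round(simulate_length(conc), 2))  # 10.0, 10.0 (below threshold), 6.0
```

The threshold term means sub-threshold damage has no effect, while damage of 3 units yields a stress of 0.4 and a 40% reduction in ultimate length.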

However, while a number of case studies have been developed, quantitative application of AOPs and AOP networks integrated within organism-specific DEB models has not yet moved from concept into regulatory practice. Integrating AOPs with DEB would greatly assist ecological risk assessment because DEB models are more amenable to incorporation into population, community, and ecosystem effects models (Murphy et al. 2018; Figure 1).

Cross-species extrapolation of pathway-based data is being facilitated by US EPA’s Sequence Alignment to Predict Across Species Susceptibility (SeqAPASS) tool (LaLone et al. 2016; seqapass.epa.gov). This tool leverages the rapidly growing database of protein sequence information to generate quantitative comparisons of protein conservation across species. While protein conservation is by no means the only determinant of a species’ probable susceptibility to a chemical that acts via a specific protein target or pathway, it provides an important line of evidence that can inform appropriate cross-species extrapolation of HTS data, as well as strategic development of new HTS assays intended to provide broader coverage than the current human-centric assay space. Additionally, embedding HTS data in the AOP framework and developing concrete linkages to DEB also facilitates cross-species extrapolation, because DEB models have been parameterized for over 1000 species (http://www.bio.vu.nl/thb/deb/deblab/add_my_pet) (Murphy et al. 2018). Although by no means comprehensive, these examples illustrate focused research activities aimed at building the theory, tools, and models needed to extrapolate HTS data for use in risk assessment for multiple ecologically relevant species.
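A basic quantitative comparison of protein conservation is percent identity between aligned sequences. The sketch below computes it for hypothetical, pre-aligned sequence fragments and ranks species by similarity to the query; it illustrates the idea only. SeqAPASS itself relies on alignment tools and additional comparisons that are not reproduced here.

```python
def percent_identity(aligned_a: str, aligned_b: str, gap: str = "-") -> float:
    """Percent identical residues between two pre-aligned protein sequences.
    Columns with a gap in either sequence are excluded from the denominator."""
    if len(aligned_a) != len(aligned_b):
        raise ValueError("aligned sequences must have equal length")
    compared = identical = 0
    for a, b in zip(aligned_a, aligned_b):
        if a == gap or b == gap:
            continue
        compared += 1
        if a == b:
            identical += 1
    return 100.0 * identical / compared if compared else 0.0

# Hypothetical aligned fragments of a target protein (query species first).
query = "MKTAYIAKQR"
others = {"species_1": "MKTAHIAKQR", "species_2": "MKT-YIAKQK", "species_3": "LRSAWVGEQK"}
ranked = sorted(others, key=lambda sp: percent_identity(query, others[sp]), reverse=True)
print(ranked)  # ['species_1', 'species_2', 'species_3']
```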

Infrastructure

Technologies are not self-implementing and obstacles are not self-solving (N. Kleinstreuer, quoted 04/16/2018). The development of institutes, organizations, and consortia of various kinds has been critical to coordinating and supporting the basic and applied research needed to bring about the envisioned paradigm shift in toxicity testing. In North America, the ongoing support and growth of US EPA’s National Center for Computational Toxicology and continued engagement in Tox21 by multiple federal agencies have been foundational. Similar efforts have been mounted worldwide. The SEURAT-1 (http://www.seurat-1.eu/) and Solutions (https://www.solutions-project.eu/solutions/) projects are just two examples involving European Union and global partners in the scientific endeavor of transforming our approach to chemical safety assessment. Through consortia like ICCVAM, strategic research efforts are being coordinated among multiple agencies and organizations (e.g., https://ntp.niehs.nih.gov/go/natl-strategy). Notably for the SETAC (Society of Environmental Toxicology and Chemistry) community, ICCVAM has also initiated an EcoToxicology working group, recognizing the need to provide coordination and infrastructural support for the development of transformative approaches in ecological toxicity testing and risk assessment as well.

Validation

Validation of assays and resulting data has generally been viewed as a prerequisite for regulatory application. Validation typically involves establishing that an assay is specific, sensitive, and reliable for its intended purpose. It also entails identifying reference compounds (positive and negative controls) that can be used to demonstrate that the assay is being conducted and is functioning as intended, and that the data generated are of high quality. A primary goal of validation, particularly when coordinated through the Organisation for Economic Co-operation and Development (OECD), is the mutual acceptance of data among different regulatory domains.
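Sensitivity and specificity against reference chemicals can be computed directly from an assay's active/inactive calls. The sketch below uses hypothetical reference chemicals and calls, and reports balanced accuracy as one common summary; it does not address reliability (reproducibility across runs or laboratories), which requires replicate data.

```python
def performance_metrics(observed: dict, reference: dict) -> dict:
    """Sensitivity, specificity and balanced accuracy of assay calls against reference chemicals.

    observed, reference: dicts mapping chemical name -> True (active) / False (inactive).
    The reference set must contain at least one positive and one negative control."""
    tp = fn = tn = fp = 0
    for chem, expected_active in reference.items():
        called_active = observed[chem]
        if expected_active and called_active:
            tp += 1
        elif expected_active:
            fn += 1
        elif called_active:
            fp += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need both positive and negative reference chemicals")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "balanced_accuracy": (sensitivity + specificity) / 2}

# Hypothetical reference classifications and assay calls.
REFERENCE = {"pos_1": True, "pos_2": True, "pos_3": True, "neg_1": False, "neg_2": False}
CALLS = {"pos_1": True, "pos_2": True, "pos_3": False, "neg_1": False, "neg_2": True}
metrics = performance_metrics(CALLS, REFERENCE)  # sensitivity 2/3, specificity 1/2
```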

High throughput assays and data have presented some challenges for traditional assay validation. First, because many of the early HTS datasets (e.g., ToxCast) relied on commercial assay providers, and largely on assays intended for pharmaceutical candidate screening, many of the methods were at least partially proprietary and not intended to be transferred to or run in other laboratories (e.g., they required specialized equipment, reagents, cell types, or infrastructure). Consequently, the round-robin testing characteristic of many traditional validation processes was not feasible. Additionally, for many multiplexed assays that measure multiple endpoints reflecting the activation of different pathways in a single assay, using reference chemicals to establish performance-based standards was also problematic. Furthermore, distinguishing true positives and negatives from false positives and negatives relies on the availability of orthogonal data or on comparison of results with a “founder assay”, which in many cases was not available for HTS data sets. Thus, while validation is viewed as critical for acceptance and application of HTS data, conventional approaches to assay validation do not always apply, and new standards for mutual acceptance of data need to be developed.

A major step forward for HTS assay validation has been improved documentation of assay methods. Following OECD guidance document 211, “Guidance document for describing non-guideline in vitro test methods” (OECD 2014), test method descriptions are now being developed for assays employed in ToxCast and Tox21. This work includes development of a bioassay ontology that organizes assay information by assay, by assay component (e.g., the entities that are measured), and by component endpoint (e.g., an increase or decrease in those entities, indicative of activation or inhibition of a particular pathway/process). Where appropriate to the assay, performance measures and reference chemicals are being identified. Acceptance is also being fostered through full transparency regarding the data themselves and their processing. For example, the ToxCast Pipeline, a data storage and statistical analysis platform, is organized into seven levels of data processing/analysis. The assay annotations, raw and processed data, curve fits, and other summary information are publicly available (US EPA 2015; Filer et al. 2017). Additional statistical methods aimed at providing uncertainty estimates for values derived from curve fitting, such as a confidence interval for the concentration at which 50% of maximal activity is observed (AC50), are under development (Watt and Judson 2018). Consequently, although the information needed to assure the validity of HTS data and foster acceptance was often lacking when the data were first released, that information (i.e., detailed assay information, structured data releases with transparent and reproducible analysis methods, and uncertainty estimates) is emerging, and appropriate guidance for the validation of HTS assays is taking shape.
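One generic way to attach a confidence interval to an AC50 is a bootstrap. The sketch below fits a deliberately simplified Hill model (zero baseline, top fixed at 100% activity, Hill slope fixed at 1) by grid-search least squares and then resamples centered residuals to obtain a 95% percentile interval. It is not the ToxCast pipeline's curve-fitting procedure, which compares several candidate models, nor necessarily the method of Watt and Judson (2018); the concentration-response data are hypothetical.

```python
import math
import random
import statistics

TOP = 100.0                                         # assumed maximal response (% activity), held fixed
LOG10_GRID = [-2.0 + 0.01 * i for i in range(501)]  # candidate log10(AC50) from 0.01 to 1000 µM

def hill(conc: float, ac50: float) -> float:
    """Hill curve with zero baseline, fixed top and a Hill slope of 1."""
    return TOP / (1.0 + ac50 / conc)

def fit_ac50(concs, responses) -> float:
    """Least-squares AC50 by grid search over log10(AC50)."""
    def sse(log10_ac50):
        ac50 = 10.0 ** log10_ac50
        return sum((r - hill(c, ac50)) ** 2 for c, r in zip(concs, responses))
    return 10.0 ** min(LOG10_GRID, key=sse)

def bootstrap_ac50_ci(concs, responses, n_boot=200, seed=0):
    """Point estimate and 95% percentile-bootstrap interval for the AC50 (residual resampling)."""
    rng = random.Random(seed)
    ac50_hat = fit_ac50(concs, responses)
    fitted = [hill(c, ac50_hat) for c in concs]
    residuals = [r - f for r, f in zip(responses, fitted)]
    mean_res = sum(residuals) / len(residuals)
    residuals = [e - mean_res for e in residuals]  # center residuals before resampling
    estimates = [fit_ac50(concs, [f + rng.choice(residuals) for f in fitted])
                 for _ in range(n_boot)]
    cuts = statistics.quantiles(estimates, n=40)   # cut points at 2.5%, 5%, ..., 97.5%
    return ac50_hat, cuts[0], cuts[-1]

# Hypothetical concentration-response data (µM, % activity) for one assay endpoint.
CONCS = [0.1, 0.3, 1.0, 3.0, 10.0, 30.0, 100.0]
RESPONSES = [4.1, 4.2, 19.7, 35.1, 67.9, 84.9, 97.1]
print(bootstrap_ac50_ci(CONCS, RESPONSES))
```

Fixing the top and slope keeps the example short; freeing them would widen the interval and is closer to practice.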

State of application to environmental risk assessment, ca. 2018

A view on the state of the science ca. 2018 suggests that considerable progress has been made in developing the scientific and technical foundations needed to implement the NRC’s vision. However, adoption and acceptance in the chemical risk assessment process have been limited. The disconnect between what is measured in most HTS assays (e.g., receptor binding, enzyme inhibition, gene expression, changes in cell growth or morphology) and the outcomes of concern from a risk management perspective (e.g., human health; survival, growth, and reproduction of wildlife; sustainability of populations; impacts on ecosystem services) remains one of the greatest impediments to their use. In concept, the AOP framework was developed to help address this disconnect (Ankley et al. 2010). However, in most cases well-developed AOPs to aid interpretation of these data do not exist, and even where they do, they provide only a qualitative connection rather than quantitative extrapolation into terms relevant for risk assessment.

The challenge posed by inadequate anchoring to AOPs is compounded by the legacy nature of much of the legislation and regulatory structures under which risk assessments are currently conducted. Both the risk assessment framework itself and much of the major legislation under which chemical risk assessments are conducted predate the NRC vision. Consequently, statutes often do not allow for the acceptance or use of data from alternatives to traditional whole-animal toxicity tests. These regulatory structures may need to be updated before the use of HTS data in environmental risk assessment becomes common. That said, a number of newer regulatory frameworks have begun to foster a transition toward alternatives to animal testing data and methods. Some of the earliest drivers came from European initiatives such as the Registration, Evaluation, Authorisation and Restriction of Chemicals regulation (REACH; EC No. 1907/2006), the European Cosmetics Regulation (EC No. 1223/2009), and the EU Water Framework Directive (Directive 2000/60/EC), with the first two in particular advocating or requiring the reduction, refinement, and replacement of animal testing. More recently, the Frank R. Lautenberg Chemical Safety for the 21st Century Act (2016) became the first US chemical safety legislation to promote the use of non-animal alternative testing methods (https://www.epa.gov/assessing-and-managing-chemicals-under-tsca/alternative-test-methods-and-strategies-reduce; US EPA 2018). Thus, regulatory frameworks are starting to create opportunities to consider HTS data in risk assessments.

Nonetheless, there is a perception that more work is needed. Although HTS data sets are large and cover far more chemicals than conventional testing, gaps remain in both the biological and chemical space they encompass. Furthermore, HTS datasets are complex. By and large, regulators are not confident in how to interpret and extrapolate these data, and it remains unclear how to place the data into proper context for decision-making. Consequently, even broader data sets, application case studies, and training are all needed to foster comfort, confidence, and competence in using these data appropriately. This can all be viewed as part of the sixth element required for implementation of the NRC vision: developing evidence that the new approaches are adequately predictive of adverse outcomes and provide scientific reliability and quality at least equivalent to those achieved using traditional methods (US EPA 2018).

Opportunities

Currently, application of HTS data in environmental risk assessment of chemicals is very limited. However, there is considerable awareness of the availability of the data themselves and broad recognition of the many ways in which they might be used in the future (Box 1). Nearer-term opportunities for application fall in the realm of screening, prioritization, and mode of action identification, where knowing a chemical’s mode of action or “lead activity” could inform subsequent testing and data gathering activities. Programs accustomed to “data poor” risk assessments that already rely heavily on structure-activity relationships, read-across, and other modeling approaches (e.g., assessment of new chemicals under the Toxic Substances Control Act [TSCA], emergency management scenarios) are expected to be earlier adopters than “data rich” programs with authority to require extensive whole-animal toxicity testing. Nonetheless, eventual replacement of animal testing is a long-term, yet potentially achievable, goal.

Box 1. Opportunities for the application of HTS in environmental risk assessment.

Use of (existing) single-chemical HTS data from the Chemistry Dashboard for chemical risk assessment

- Identification of a chemical’s mode of action
- Identification of the “lead activity” of a chemical or sample that exhibits multiple biological activities
- Ranking/prioritization of chemicals that should be moved into conventional testing pipelines
- Assessment of predicted metabolites via read-across approaches
- Establishing the potency/relative potency of chemicals that interact with specific pathways
- Organizing and integrating complex data sets
- Informing bioassay development
- Identifying data gaps
- Chemical read-across/QSARs
- Using AOP/MOA knowledge to waive testing requirements that are likely to be irrelevant to the risk assessment
- Informing study design
- Use in combination with integrated approaches to testing and assessment (IATA) to reduce animal testing
- Supporting weight-of-evidence evaluations
- Informing development of new AOPs (cheaper, faster, more efficient)
- Informing scoping documents

Use of (existing) single-chemical HTS data to link to environmental applications

- Derivation of bioassay-based equivalency factors
- Informing mixture modeling
- Cumulative risk assessments
- Environmental assessment using the exposure-activity ratio (EAR)
- Derivation of effect-based trigger values for water quality

Application of HTS data to environmental samples

- Testing of water, soil, and sediment samples with HTS bioassays
- Biomonitoring using HTS assays
- Linking measured mixture effects to predicted mixture effects from iceberg modelling

Too early? Any data are viewed as better than no data

Not using it: waiting for the science to mature; may be good for mixtures

May eventually be good for species extrapolations

Broadening the scope

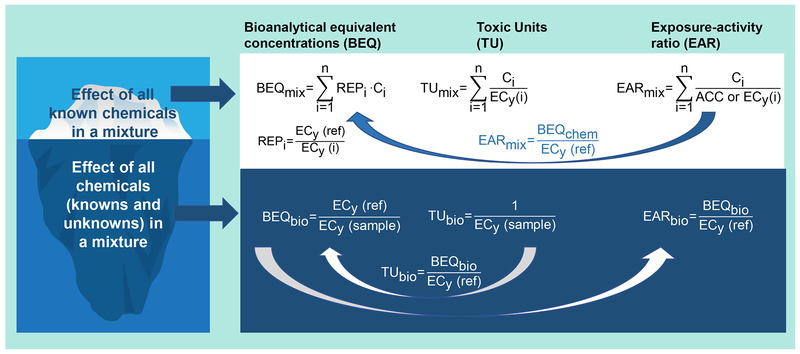

While the vision of the NRC was focused on the prospective assessment of chemical risks to human health, current ambitions for modernizing chemical risk assessment are broader. Given the significant conservation of biological pathways across organisms, many of the current mammalian-focused HTS data can reasonably be extrapolated to a diversity of vertebrates, and in some cases even invertebrates (LaLone et al. 2018). Likewise, AOPs available for translating HTS responses to potential in vivo hazards are as abundant for ecological toxicity outcomes as for human health focused pathways (aopwiki.org). Moreover, some of the strongest application case studies for HTS data and tools to date have been in retrospective environmental monitoring. For example, HTS data available for individual chemicals are being used to better understand the toxicological significance of chemical monitoring data, such as water quality monitoring data. Building on traditional risk assessment concepts like hazard quotients and hazard indices, exposure-activity ratios (EARs), the ratios of detected concentrations of chemicals to their activity cut-off concentrations in HTS assays, are being summed for all detected chemicals to yield EARmix (Figure 3), a proxy for the relative risk that the known components of a water sample contribute to specific types of biological effects (Blackwell et al. 2017). In this way, both the relative exposure concentrations and the relative potencies of chemicals tested in a specific bioassay are accounted for, allowing the total bioanalytical equivalent concentration (BEQ) to be estimated and expressed as an equivalent concentration of a reference chemical. When derived from measured chemical concentrations, this is termed BEQchem, or BEQmix when summed across multiple chemicals. Although different nomenclatures have been used, EARmix and BEQmix are interconvertible (Figure 3).

Figure 3.

Practical applications of HTS data for risk assessment using the EAR and BEQ approaches, and how the different approaches relate to each other. ECy(i) = effect concentration of compound/sample i at effect level y; ACC = activity cut-off concentration (often close to EC20(i)).
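The EAR and BEQchem calculations described above reduce to a few lines of arithmetic. The Python sketch below is illustrative only: the chemical names, concentrations, and ACC values are hypothetical, concentration addition is assumed, and the ACC is used as the effect metric for both the detected chemicals and the reference chemical so that the two summary metrics can be compared directly.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One chemical detected in a water sample (all values hypothetical)."""
    name: str
    conc_um: float  # measured concentration in the sample (µM)
    acc_um: float   # activity cut-off concentration in one HTS assay (µM)


def exposure_activity_ratios(detections):
    """EAR_i = C_i / ACC_i for every detected chemical."""
    return {d.name: d.conc_um / d.acc_um for d in detections}


def ear_mix(detections):
    """EARmix: sum of the individual EARs (assumes concentration addition)."""
    return sum(exposure_activity_ratios(detections).values())


def beq_mix(detections, acc_ref_um):
    """BEQmix = sum_i C_i * REP_i, with relative potency REP_i = ACC_ref / ACC_i.

    The result is expressed as a concentration of the reference chemical (µM).
    """
    return sum(d.conc_um * acc_ref_um / d.acc_um for d in detections)


sample = [
    Detection("chem_A", conc_um=0.002, acc_um=0.1),
    Detection("chem_B", conc_um=0.05, acc_um=10.0),
    Detection("chem_C", conc_um=0.0001, acc_um=0.001),
]
ACC_REF_UM = 0.01  # hypothetical ACC of the reference chemical in the same assay

ears = exposure_activity_ratios(sample)
print(ears)                          # chem_C has the largest EAR (about 0.1)
print(ear_mix(sample))               # about 0.125
print(beq_mix(sample, ACC_REF_UM))   # about 0.00125 µM reference equivalents
```

Because REP_i is defined here from the same ACC values, BEQmix equals EARmix multiplied by ACC_ref, which is one way to see why the two metrics are interconvertible. The conversion holds exactly only when the same effect metric is used on both sides.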

Additionally, the same types of assays used for single-chemical evaluation in ToxCast and Tox21 are increasingly being used for environmental samples, including water, sediment, and even tissues and biofluids (Figure 3). The EU SOLUTIONS project (https://www.solutions-project.eu/solutions/), for example, involving over 100 scientists from nearly 40 institutions, conducted pioneering studies on the application of HTS assays for water quality monitoring and effect-directed analysis, including bioassay-based screening of water samples for bioactive contaminants. Direct analysis of environmental samples in HTS assays allows BEQbio to be determined by comparing the concentration-response curve of the sample with that of an appropriate reference compound. BEQbio represents the effects of the totality of chemicals in a sample, including the contribution of chemicals that are unknown or present at concentrations below the detection limit. Comparison of the biological activities observed in these assays (BEQbio) with those predicted from known chemical composition (BEQchem or BEQmix) reveals what had long been anticipated: unknown or undetected constituents of environmental samples often contribute large fractions of their overall biological activity (Neale et al. 2015).
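As a sketch of how a BEQbio could be obtained, suppose that concentration-response curves for both the reference compound and the sample extract have been fitted with a two-parameter log-logistic (Hill) model with a fixed 100% maximum. The effect concentration at effect level y is then found by inverting the model, and BEQbio is the ratio of the reference ECy to the sample ECy. Expressing the sample ECy as a relative enrichment factor (REF, the factor by which the extract is concentrated relative to the original water) is an assumption borrowed from common bioanalytical practice, and the fitted parameters below are hypothetical.

```python
def ec_y(ec50, hill_slope, y):
    """Invert a Hill model E(c) = 100 / (1 + (EC50 / c)**slope).

    Returns the concentration producing y % effect (0 < y < 100).
    """
    if not 0 < y < 100:
        raise ValueError("effect level y must lie strictly between 0 and 100")
    return ec50 * (y / (100.0 - y)) ** (1.0 / hill_slope)


def beq_bio(ref_fit, sample_fit, y=10.0):
    """BEQbio = ECy(reference) / ECy(sample).

    ref_fit: (EC50, slope) for the reference compound, EC50 in ng/L.
    sample_fit: (EC50, slope) for the sample extract, EC50 in REF units.
    The result is in ng/L of reference-compound equivalents.
    """
    return ec_y(*ref_fit, y) / ec_y(*sample_fit, y)


REFERENCE = (1.0, 1.0)  # hypothetical fit: EC50 = 1.0 ng/L, slope 1
SAMPLE = (20.0, 1.0)    # hypothetical fit: EC50 = 20 REF, slope 1

print(ec_y(*REFERENCE, 10.0))      # EC10 of the reference, about 0.111 ng/L
print(beq_bio(REFERENCE, SAMPLE))  # about 0.05 ng/L reference equivalents
```

With parallel curves (equal slopes, as here), BEQbio is the same at any effect level. When slopes differ, the result depends on the chosen y, which is one reason the effect level should be reported alongside any BEQ.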

The comparison of BEQbio with BEQmix has been termed “iceberg modelling.” BEQmix constitutes the visible tip of the iceberg, BEQbio the entire iceberg, and BEQbio minus BEQmix is a measure of the effect triggered by (as yet) unknown chemicals (Figure 3). A more classic approach is the use of toxic units (TU), which for a sample are simply the inverse of its effect concentration (Figure 3). TUs allow the same comparison but are defined in a slightly different way (Figure 3), and it is possible to convert TUs to BEQs and vice versa. The EAR approach has so far only been applied to defined mixtures, but an EARbio could also be derived (Figure 3). The variety of terms used to express the effects of mixtures of known and unknown chemicals has hampered comparison between studies. The summary of definitions and their interrelationships given in Figure 3 will hopefully make future efforts easier to compare.
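The iceberg comparison and the TU-to-BEQ conversion described above can be sketched as follows. The relationships assumed here are TU_bio = 1/ECy(sample), BEQ = TU × ECy(reference), and an explained fraction of BEQmix/BEQbio. All numbers are hypothetical.

```python
def toxic_units_bio(ec_y_sample_ref):
    """TU_bio = 1 / ECy(sample), with ECy expressed in REF units."""
    return 1.0 / ec_y_sample_ref


def beq_from_tu(tu, ec_y_reference):
    """Convert toxic units to a bioanalytical equivalent: BEQ = TU * ECy(ref)."""
    return tu * ec_y_reference


def iceberg(beq_bio, beq_mix):
    """Split the measured effect into explained and unexplained parts.

    Returns (fraction explained by detected chemicals, BEQ attributable to
    unknown or undetected chemicals). A fraction above 1 would mean the
    mixture prediction exceeds the measured effect.
    """
    return beq_mix / beq_bio, beq_bio - beq_mix


EC10_REFERENCE = 0.1  # hypothetical EC10 of the reference compound (ng/L)
EC10_SAMPLE = 2.0     # hypothetical EC10 of the sample extract (REF)
BEQ_MIX = 0.004       # hypothetical BEQmix from chemical analysis (ng/L)

tu = toxic_units_bio(EC10_SAMPLE)        # 0.5 TU
bio = beq_from_tu(tu, EC10_REFERENCE)    # 0.05 ng/L
explained, unknown = iceberg(bio, BEQ_MIX)
print(f"{explained:.0%} explained; {unknown:.3f} ng/L from unknowns")
```

In this hypothetical case, detected chemicals account for only a small fraction of the measured activity, which is the pattern the iceberg metaphor is meant to convey.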

In cases where unknowns contribute to observed biological activities, high throughput tools also offer distinct advantages for effect-directed analyses (Brack et al. 2016; Muschket et al. 2018) aimed at identifying the sources and drivers of biological activity. Specifically, large numbers of fractions or samples can be analyzed rapidly and efficiently using high throughput platforms. Given the number of useful applications in environmental monitoring and retrospective mixture assessment, an even larger consortium, organized via the NORMAN network, has been actively engaged in developing performance-based criteria for benchmarking bioassays for use in water quality monitoring (Neale et al. 2017) and in developing effect-based trigger values (Escher et al. 2018). Derivation of effect-based trigger values, along with guidance on their interpretation and significance, offers real opportunities for practical application of HTS assays and data in environmental monitoring. Similar efforts are under way in North America. Collectively, these efforts paint a picture of vigorous scientific development of HTS applications that lie well beyond the scope articulated in the NRC’s vision for toxicity testing in the 21st century.

What’s next?

In their proposed timeline for implementation of the vision for toxicity testing in the 21st century, the NRC envisioned that “new knowledge and technology generated from the proposed research program will be translated to noticeable changes in toxicity-testing practices within 10 years” (NRC, 2007). Certainly, significant progress, only a fraction of which was noted here, has been made in the areas of need that the NRC identified. The NRC further envisioned that “within 20 years, testing approaches will more closely reflect the proposed vision than current approaches,” assuming adequate and sustained funding. Taking stock of the state of the science as of April 2018, what are the critical challenges that lie ahead?

At least at some level, the commitment, collaboration, and communication needed to support the establishment of new approaches and methodologies, including HTS, are in place. Within the US, 16 federal agencies have contributed to and agreed on a strategic plan to move these efforts ahead (https://ntp.niehs.nih.gov/go/natl-strategy). The Tox21 collaboration has been sustained for over a decade, and a road map is under way for expanding the chemical and biological space addressed by the HTS programs and for addressing known challenges, such as the limited metabolic competence and unknown chemical disposition of in vitro systems (Thomas et al. 2018). The OECD remains committed to an AOP development programme, and Europe remains dedicated to the effort through activities like the NORMAN network, EU-ToxRisk (http://www.eu-toxrisk.eu/), and a variety of other programs. Consequently, global support for seeing through this transformation in chemical safety assessment appears strong. Nonetheless, many and varied challenges remain to be addressed. Each of these represents an area where SETAC and its members could take an impactful role in advancing the vision for 21st century chemical safety assessment.

HTS assays for non-mammalian physiology

The human health research community has made it a priority to continue filling gaps in the toxicological space covered by the major HTS programs. To that end, assays for under-represented modes of action, such as neurotoxicity, are being developed. Likewise, HTS assays that query the entire human transcriptome are being employed to maximize pathway coverage. However, to date there has been no systematic or parallel effort to develop HTS assays and testing infrastructure for pathways that are important in ecological risk assessment but are neither conserved with mammalian physiology nor represented in current HTS programs. Prominent examples include photosynthetic pathways in plants and invertebrate endocrine systems. Plants and invertebrates often drive ecological risk assessments, and a variety of pesticide classes are specifically designed to interact with these systems, yet HTS assays for these systems are currently unavailable. In principle, many of these should be “low-hanging fruit” for development. A coordinated effort to map the major gaps in the current mammalian-centric HTS assay space relative to screening for ecologically relevant hazards would allow a strategic, coordinated, and efficient approach to ecological HTS assay design and development.

Accessible testing infrastructure

To date, most HTS data have been generated by large consortia through government funding and contracting mechanisms. At present, it is generally not feasible for an individual investigator, manufacturer, or regulatory body to rapidly have a chemical or sample screened through a well-established and validated battery of HTS assays. Likewise, new HTS assays are often developed in individual laboratories that may screen a library of several hundred to a few thousand compounds and then move on to a new assay. While such efforts have succeeded in placing a large amount of data into the public domain, the assays themselves are not readily accessible for the assessment of new chemicals or environmental samples. The lack of access to HTS testing platforms limits both the amount and scope of the data generated and their broader acceptance and uptake in risk assessment. If there are roughly 80,000 chemicals in commerce and data are still available for only about 1 in 10 of them, let alone their relevant transformation products, mixtures, etc., HTS data may still be useful in only a small minority of risk assessments. Creating and investing in an infrastructure in which researchers, regulators, and industry, including those from developing countries, could submit chemicals or samples for testing in a standardized and validated set of HTS assays would broaden the available data while distributing the costs of testing across the community. Establishment of one or more certified, accessible HTS testing facilities would also have substantial benefits for validation and mutual acceptance of data. The lack of accessibility could be addressed through creative public-private partnerships that make it possible both to expand the amount of data in the public domain and to protect confidential business information, for example through substantially discounted pricing for users who agree to make their data public.
At the same time, additional effort should be invested in developing guidelines, performance-based measures, controlled vocabularies, and databases that could be used to establish the quality, validity, and comparability of low- to medium-throughput assays that individual investigators can feasibly run in their own labs and that would be accepted as comparable to those available through commercial HTS services.

Enhanced international coordination

Stakeholders around the globe would stand to benefit from greater accessibility to HTS infrastructure. In particular, the environmental monitoring community has been one of the first to seize on the potentially transformative nature of these testing platforms (Neale et al. 2015; Schroeder et al. 2016; Blackwell et al. 2017; Muschket et al. 2018). However, a recognized limitation to date has been the relatively limited interaction and awareness among the various global efforts to develop the approaches, models, and tools that support the application of HTS in environmental monitoring. This has led, to some extent, to the use of inconsistent terminologies and approaches and to less efficient use of limited research and development resources. Given its role as a global organization, SETAC is uniquely positioned to help coordinate a truly global research strategy to tackle both the scientific and policy hurdles related to the application of HTS in this domain, and to help provide developing countries with access to, and training in, HTS technology and its application.

Response-response, not just dose-response

Considering the state of the science, there is little skepticism that HTS data can be generated. As noted previously, however, a major barrier to application is the disconnect between what is measured in an HTS assay and the assessment endpoints considered meaningful for decision-making. The AOP framework can address this to some extent, but quantitative extrapolation along AOPs is currently limited by a lack of data that address the critical question: how much perturbation of a key event is too much? Toxicologists have been trained to think in terms of dose-response: what magnitude of exposure is needed to elicit an effect? However, the quantitative understanding needed to extrapolate along an AOP requires a more general understanding of how much change in an upstream key event or biological pathway is needed to evoke a given degree of downstream change. At what point do those biological changes become so severe that an organism’s ecological fitness is compromised? While this question may seem closely aligned with traditional toxicology experiments, answering it often involves investigating multiple endpoints at multiple time points to understand the progression of different key events along an AOP. Thus, a shift in mind-set is required with regard to the kinds of experimental designs needed to support the AOP framework and its use for more quantitative applications of HTS in risk assessment.

This shift could also coincide with a concerted effort to collaborate with the broader biological research community (cell and molecular biologists, physiologists, ecologists, etc.), as the study of both unperturbed biological systems and responses to non-chemical stressors can add to key event inventories and augment understanding of response-response relationships.
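One way to make the question “how much perturbation of a key event is too much?” concrete is to chain a concentration-response model for an upstream key event with a response-response model linking it to a downstream key event, and then invert the chain. The Python sketch below uses generic Hill functions for both steps. The functional form, the parameter values, and the 20% downstream point of concern are all hypothetical and chosen only for illustration, since quantitative response-response data of this kind are precisely what is currently scarce.

```python
def hill(x, half_max, slope, top=100.0):
    """Generic Hill function: response (0..top) as a function of x >= 0."""
    if x <= 0:
        return 0.0
    return top / (1.0 + (half_max / x) ** slope)


def inverse_hill(response, half_max, slope, top=100.0):
    """Input x that yields the given response (0 < response < top)."""
    return half_max * (response / (top - response)) ** (1.0 / slope)


# Hypothetical parameters for a two-step AOP segment.
CONC_TO_UPSTREAM = {"half_max": 2.0, "slope": 1.5}         # µM -> % change in upstream KE
UPSTREAM_TO_DOWNSTREAM = {"half_max": 40.0, "slope": 3.0}  # % upstream -> % downstream


def downstream_response(conc_um):
    """Dose-response followed by response-response: concentration -> downstream KE."""
    upstream = hill(conc_um, **CONC_TO_UPSTREAM)
    return hill(upstream, **UPSTREAM_TO_DOWNSTREAM)


# If a 20 % downstream change is taken as the point of concern (hypothetical),
# how much upstream perturbation does that require, and what exposure
# concentration produces that perturbation?
DOWNSTREAM_LIMIT = 20.0
upstream_limit = inverse_hill(DOWNSTREAM_LIMIT, **UPSTREAM_TO_DOWNSTREAM)
conc_limit = inverse_hill(upstream_limit, **CONC_TO_UPSTREAM)
print(f"upstream KE change of concern: {upstream_limit:.1f} %")
print(f"corresponding concentration: {conc_limit:.2f} µM")
```

The inversion step is where response-response data, rather than dose-response data alone, become essential; the same chaining could in principle be extended to further key events along an AOP.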

Ecosystem relevance

However, even if AOPs can be successfully developed and applied to translate HTS data to relevant endpoints at the individual level, there remain deficiencies in our ability to translate individual-based endpoints into predicted population- or ecosystem-level consequences (Forbes et al. 2017). Ecological effects are typically measured in sensitive individuals of a few well-studied model organisms. Yet, unlike in human health risk assessment, the concern is for hazards at the population level, which makes the gap between measured effects and protection goals even larger, particularly when HTS and/or mechanistic data are used to inform decisions. Furthermore, the large number of species considered in ecological risk assessment, with their different life histories, strategies, and environments, significantly increases the challenge of extrapolation and the level of uncertainty when in vitro or in silico tools are used for hazard prediction. Predicted effects on survival, growth, development, and reproduction at the individual level still need to be integrated in the context of life history traits to understand their overall significance to a population. Likewise, effects that may propagate across trophic levels to have ecosystem-scale impacts should not be ignored. A simple example is an herbicide affecting photosystem II of plants. While the chemical may be completely innocuous to animal life, if it were to eliminate the base of the food chain, populations of a broad range of taxa could nonetheless be affected. Consequently, ongoing efforts to link ecological modeling frameworks, such as dynamic energy budget models, with integrated approaches like the AOP framework represent another area where a strategic research effort could make a powerful impact (Forbes et al. 2017; Murphy et al. 2018).
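To illustrate why individual-level effects must be interpreted in the context of life history, the sketch below uses a simple age-structured (Leslie) matrix model. This is a swapped-in, textbook technique, not the dynamic energy budget approaches cited above. With hypothetical survival and fecundity values, a 50% reduction in fecundity, of the kind an AOP-based prediction might supply, shifts the asymptotic population growth rate (the dominant eigenvalue, lambda) from above 1 (growing) to below 1 (declining). Power iteration is written in plain Python to keep the example self-contained.

```python
def leslie(fecundities, survivals):
    """Build a Leslie matrix: age-specific fecundities on the first row,
    survival probabilities from one age class to the next on the sub-diagonal."""
    n = len(fecundities)
    matrix = [[0.0] * n for _ in range(n)]
    matrix[0] = [float(f) for f in fecundities]
    for i, s in enumerate(survivals):
        matrix[i + 1][i] = s
    return matrix


def dominant_eigenvalue(matrix, iterations=1000):
    """Power iteration for the dominant eigenvalue (asymptotic growth rate,
    lambda) of a non-negative, primitive matrix such as the one below."""
    n = len(matrix)
    v = [1.0 / n] * n
    lam = 0.0
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(w)  # v sums to 1, so sum(w) converges to lambda
        v = [x / lam for x in w]
    return lam


# Hypothetical three-age-class life history
FECUNDITY = [0.0, 1.5, 2.0]  # offspring per individual in age classes 1-3
SURVIVAL = [0.5, 0.8]        # survival from class 1 to 2 and from class 2 to 3

control = dominant_eigenvalue(leslie(FECUNDITY, SURVIVAL))
exposed = dominant_eigenvalue(leslie([0.5 * f for f in FECUNDITY], SURVIVAL))
print(f"lambda, control: {control:.3f}")  # above 1: population grows
print(f"lambda, exposed: {exposed:.3f}")  # below 1: population declines
```

The same individual-level effect could have a much smaller or larger population-level consequence for a species with a different life history, which is the extrapolation challenge described above.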

Aggregation of ecological exposure datasets

Finally, although this article focuses primarily on what HTS could bring to the hazard or effects side of the risk assessment equation, exposure is critical and constitutes a large source of variability when extrapolating from laboratory to field studies. The temporal and spatial variability of exposure concentrations in the field, and their intersection with the different life histories, behaviors, and physiological attributes of different species, is an aspect of ecological risk assessment that cannot be ignored. While investment in HTS tools for biological effects assessment is important, there needs to be parallel investment in the aggregation of exposure and toxicokinetic information. Human exposure and ADME (absorption, distribution, metabolism, and elimination) data are being aggregated through sources like EPA’s Chemistry Dashboard. However, to date there are no ongoing parallel efforts to aggregate ecological exposure data or the parameters needed to develop robust toxicokinetic models for a wide range of vertebrate and invertebrate wildlife and plants.

Conclusions

It is apparent that the science needed to support implementation of a broad vision of toxicity testing in the 21st century has advanced considerably over the decade since initial publication of the NRC report. Many of the objectives defined by the NRC have been at least partially achieved. However, the uptake of these approaches into risk assessment practice has been limited. The reasons for that limited uptake are multi-factorial, but the challenges involved have been articulated and defined and are not insurmountable. At the same time, none are trivial, and well-coordinated strategic research, development, and investment will be required to address them effectively and efficiently. While several cross-agency and global partnerships have already laid out strategic goals and road maps for addressing some of the critical science challenges, there remain a number of key areas where SETAC leadership, and/or effective coordination of the SETAC community with the efforts of other institutes and societies, could make highly impactful contributions. The authors encourage SETAC and its membership to take up the charge.

Acknowledgements

This article was prepared by the steering committee for the SETAC Focused Topic Meeting on High Throughput Screening and Environmental Risk Assessment, Durham, April 2018. The authors acknowledge all the presenters and participants at the meeting for the work and insight reflected in this paper; all of its content was inspired and informed by their contributions. American Chemistry Council; American Petroleum Institute; B&C Consortia Management; Bayer; Colgate-Palmolive; CropLife America; The Dow Chemical Company; ER2; Exponent; Global Silicones Council; Michigan State University; Mississippi State University; Monsanto; Tessenderlo Kerley; The Humane Society of the United States; U.S. Army Research and Development Center; and the United States Environmental Protection Agency provided financial and/or in-kind support for the meeting. K. Paul-Friedman provided comments on an earlier draft of this manuscript. The mention of products and/or trade names does not constitute endorsement or recommendation for use. Likewise, all views expressed are the personal views of the authors and neither constitute nor necessarily reflect the positions or policies of their organizations.

References

- Ankley GT, Bennett RS, Erickson RJ, Hoff DJ, Hornung MW, Johnson RD, Mount DR, Nichols JW, Russom CL, Schmieder PK, Serrrano JA, Tietge JE, Villeneuve DL. 2010. Adverse outcome pathways: a conceptual framework to support ecotoxicology research and risk assessment. Environ Tox Chem. 29: 730–741. [DOI] [PubMed] [Google Scholar]

- Arini A, Mittal K, Dornbos P, Head J, Rutkiewicz J, Basu N. 2017. A cell-free testing platform to screen chemicals of potential neurotoxic concern across twenty vertebrate species. Environ Toxicol Chem. 36:3081–3090. doi: 10.1002/etc.3880. [DOI] [PubMed] [Google Scholar]

- Armitage JM, Wania F, and Arnot JA. 2014. Application of mass balance models and the chemical activity concept to facilitate the use of in vitro toxicity data for risk assessment. Environ Sci Technol. 48: 9770–9779. doi: 10.1002/etc.2020 [DOI] [PubMed] [Google Scholar]

- Bell SM, Chang X, Wambaugh JF, Allen DG, Bartels M, Brouwer KLR, Casey WM, Choksi N, Ferguson SS, Fraczkiewicz G, Jarabek AM, Ke A, Lumen A, Lynn SG, Paini A, Price PS, Ring C, Simon TW, Sipes NS, Sprankle CS, Strickland J, Troutman J, Wetmore BA, Kleinstreuer NC. 2018. In vitro to in vivo extrapolation for high throughput prioritization and decision making. Toxicol In Vitro. 47:213–227. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bessems JG, Loizou G, Krishnan K, Clewell HJ 3rd, Bernasconi C, Bois F, Coecke S, Collnot EM, Diembeck W, Farcal LR, Geraets L, Gundert-Remy U, Kramer N, Küsters G, Leite SB, Pelkonen OR, Schröder K, Testai E, Wilk-Zasadna I, Zaldívar-Comenges JM. 2014. PBTK modelling platforms and parameter estimation tools to enable animal-free risk assessment: recommendations from a joint EPAA--EURL ECVAM ADME workshop. Regul Toxicol Pharmacol. 68:119–39. doi: 10.1016/j.yrtph.2013.11.008. [DOI] [PubMed] [Google Scholar]

- Blackwell BR, Ankley GT, Corsi SR, DeCicco LA, Houck KA, Judson RS, Li S, Martin MT, Murphy E, Schroeder AL, Smith ER, Swintek J, Villeneuve DL. 2017. An “EAR” on environmental surveillance and monitoring: a case study on the use of exposure-activity ratios (EARs) to prioritize sites, chemicals, and bioactivities of concern in Great Lakes waters. Environ Sci Technol. 51:8713–8724. doi: 10.1021/acs.est.7b01613. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brack W, Ait-Aissa S, Burgess RM, Busch W, Creusot N, Di Paolo C, Escher Bl, Mark Hewitt L, Hilscherova K, Hollender J, Hollert H, Jonker W, Kool J, Lamoree M, Muschket M, Neumann S, Rostkowski P, Ruttkies C, Schollee J, Schymanski EL, Schulze T, Seiler TB, Tindall AJ, De Aragao Umbuzeiro G, Vrana B, Krauss M. 2016. Effect-directed analysis supporting monitoring of aquatic environments—An in-depth overview. Sci Total Environ. 544:1073–118. doi: 10.1016/j.scitotenv.2015.11.102. [DOI] [PubMed] [Google Scholar]

- Bl Escher, Aït-Aïssa S, Behnisch PA, Brack W, Brion F, Brouwer A, Buchinger S, Crawford SE, Du Pasquier D, Hamers T, Hettwer K, Hilscherová K, Hollert H, Kase R, Kienle C, Tindall AJ, Tuerk J, van der Oost R, Vermeirssen E, Neale PA. 2018. Effect-based trigger values for in vitro and in vivo bioassays performed on surface water extracts supporting the environmental quality standards (EQS) of the European Water Framework Directive. Sci Total Environ. 628–629:748–765. doi: 10.1016/j.scitotenv.2018.01.340. [DOI] [PubMed] [Google Scholar]

- Filer DL, Kothiya P, Setzer RW, Judson RS, Martin MT. 2017. Tcpl: the ToxCast pipeline for high-throughput screening data. Bioinformatics 33: 618–620. [DOI] [PubMed] [Google Scholar]

- Fischer FC, Henneberger L, König M, Bittermann K, Linden L, Goss KU, Escher Bl (2017). Modeling exposure in the Tox21 in vitro bioassays. Chem Res Toxicol. 30:1197–1208. doi: 10.1021/acs.chemrestox.7b00023 [DOI] [PubMed] [Google Scholar]

- Forbes VE, Salice CJ, Birnir B, Bruins RJ, Calow P, Ducrot V, Galic N, Garber K, Harvey BC, Jager H, Kanarek A, Pastorok R, Railsback SF, Rebarber R, Thorbek P. 2017. A framework for predicting impacts on ecosystem services from (sub)organismal responses to chemicals. Environ Toxicol Chem. 36:845–859. doi: 10.1002/etc.3720. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huggett DB, Cook JC, Ericson JF, and Williams RT. 2003. A theoretical model for utilizing mammalian pharmacology and safety data to prioritize potential impacts of human pharmaceuticals to fish. Human and Ecological Risk Assessment, 9:1789–1799. [Google Scholar]

- Jager T 2016. Predicting environmental risk: A road map for the future. J Tox Environ Health. Part A 79: 572–584. [DOI] [PubMed] [Google Scholar]

- Judson R, Houck K, Martin M, Richard AM, Knudsen TB, Shah I, Little S, Wambaugh J, Setzer RW, Kothiya P, Phuong J, et al. 2016. Editor’s Highlight: analysis of the effects of cell stress and cytotoxicity on in vitro assay activity across a diverse chemical and assay space. Toxicological Sciences, 152(2), pp.323–339. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Judson R, Richard A, Dix DJ, Houck K, Martin M, Kavlock R, Dellarco V, Henry T, Holderman T, Sayre P, Tan S, Carpenter T, Smith E. 2009. The toxicity landscape for environmental chemicals. Environ Health Perspect. 117:685–695. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Judson R, Houck K, Martin M, Knudsen T, Thomas RS, Sipes N, Shah I, Wambaugh J, and Crofton K. 2014. In vitro and modelling approaches to risk assessment from the US Environmental Protection Agency ToxCast programme. Basic & Clin Pharmacol & Toxicol. 115: 69–76. [DOI] [PubMed] [Google Scholar]

- Judson RS, Kavlock RJ, Setzer RW, Hubal EAC, Martin MT, Knudsen TB, Houck KA, Thomas RS, Wetmore BA, and Dix DJ. 2011. Estimating toxicity-related biological pathway altering doses for high-throughput chemical risk assessment. Chem Res Toxicol. 24: 451–462. [DOI] [PubMed] [Google Scholar]

- Kavlock R, Chandler K, Houck K, Hunter S, Judson R, Kleinstreuer N, Knudsen T, Martin M, Padilla S, Reif D, Richard A, Rotroff D, Sipes N, Dix D. 2012. Update on EPA’s ToxCast program: providing high throughput decision support tools for chemical risk management. Chem Res Toxicol. 25:1287–302. doi: 10.1021/tx3000939. [DOI] [PubMed] [Google Scholar]

- Kooijman SALM. 2010. Dynamic Energy Budget theory for Metabolic Organization. Third Edition Cambridge University Press; Cambridge, UK. [Google Scholar]

- LaLone CA, Villeneuve DL, Lyons D, Helgen HW, Robinson SL, Swintek JA, Saari TW, Ankley GT. 2016. Editor’s highlight: Sequence Alignment to Predict Across Species Susceptibility (SeqAPASS): a web-based tool for addressing the challenges of cross-species extrapolation of chemical toxicity. Toxicol Sci. 153:228–45. doi: 10.1093/toxsci/kfw119. [DOI] [PubMed] [Google Scholar]

- LaLone CA, Villeneuve D, Doering JA, Blackwell BR, Transue TR, Simmons CW, Swintek JA, Degitz SJ, Williams AJ, and Ankley GT. 2018. Defining the taxonomic domain of applicability for mammalian-based high-throughput screening assays. Environ. Sci. Technol In Review. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Muller EB, Klanjscek T, Nisbet RM. 2018. Inhibition and damage to synthesizing units. J Sea Res. (in press) [Google Scholar]

- Muschket M, Di Paolo C, Tindall AJ, Touak G, Phan A, Krauss M, Kirchner K, Seiler TB, Hollert H, Brack W. 2018. Identification of unknown antiandrogenic compounds in surface waters by effect-directed analysis (EDA) using a parallel fractionation approach. Environ Sci Technol. 52:288–297. doi: 10.1021/acs.est.7b04994. [DOI] [PubMed] [Google Scholar]

- National Research Council. 2007. Toxicity Testing in the 21st Century: A Vision and a Strategy. Washington, DC: The National Academies Press; 10.17226/11970. [DOI] [Google Scholar]

- Murphy CA, Nisbet RM, Antczak P, Garcia-Reyero N, Gergs A, Lika K, Mathews T, Muller EB, Nacci D, Peace A, Remien CH, Shultz IR, Stevenson LM, Watanabe K. 2018. Incorporating sub-organismal processes into dynamic energy budget models for ecological risk assessment. Integr Environ Assess Manag. (in press) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Neale PA, Ait-Aissa S, Brack W, Creusot N, Denison MS, Deutschmann B, Hilscherová K, Hollert H, Krauss M, Novák J, Schulze T, Seiler TB, Serra H, Shao Y, Escher BI. 2015. Linking in vitro effects and detected organic micropollutants in surface water using mixture-toxicity modeling. Environ Sci Technol. 49:14614–14624. doi: 10.1021/acs.est.5b04083. [DOI] [PubMed] [Google Scholar]

- Neale PA, Altenburger R, Ait-Aissa S, Brion F, Busch W, de Aragão Umbuzeiro G, Denison MS, Du Pasquier D, Hilscherova K, Hollert H, Morales DA, Novac J, Schlichting R, Seiler T-B, Serra H, Shao Y, Tindall AJ, Tollefsen KE, Williams TD, Escher BI 2017. Development of a bioanalytical test battery for water quality monitoring: Fingerprinting identified micropollutants and their contribution to effects in surface water. Water Res. 123: 734–750. [DOI] [PubMed] [Google Scholar]

- Oki NO, Nelms MD, Bell SM, Mortensen HM, Edwards SW. 2016. Accelerating adverse outcome pathway development using publicly available data sources. Curr Environ Health Rep 3:53–63. [DOI] [PubMed] [Google Scholar]

- Paul Friedman K, Watt ED, Hornung MW, Hedge JM, Judson RS, Crofton KM, Houck KA, Simmons SO. 2016. Tiered high-throughput screening approach to identify thyroperoxidase inhibitors within the ToxCast phase I and II chemical libraries. Toxicol Sci. 151:160–80. doi: 10.1093/toxsci/kfw034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Reif DM, Truong L, Mandrell D, Marvel S, Zhang G, Tanguay RL. 2016. High-throughput characterization of chemical-associated embryonic behavioral changes predicts teratogenic outcomes. Arch Toxicol. 90:1459–70. doi: 10.1007/s00204-015-1554-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Richard AM, Judson RS, Houck KA, Grulke CM, Volarath P, Thillainadarajah I, Yang C, Rathman J, Martin MT, Wambaugh JF, Knudsen TB, Kancherla J, Mansouri K, Patlewicz G, Williams AJ, Little SB, Crofton KM, Thomas RS. 2016. ToxCast chemical landscape: paving the road to 21st century toxicology. Chem Res Toxicol. 29:1225–51. doi: 10.1021/acs.chemrestox.6b00135. [DOI] [PubMed] [Google Scholar]

- Schroeder AL, Ankley GT, Houck KA, Villeneuve DL. 2016. Environmental surveillance and monitoring--The next frontiers for high-throughput toxicology. Environ Toxicol Chem. 35:513–25. doi: 10.1002/etc.3309. [DOI] [PubMed] [Google Scholar]

- Schmolke A, Thorbek P, DeAngelis DL, Grimm V. 2010. Ecological models supporting environmental decision making: a strategy for the future. TREE 25:479–486. [DOI] [PubMed] [Google Scholar]

- Thomas RS, Paules RS, Simeonov A, Fitzpatrick SC, Crofton KM, Casey WM, Mendrick DL. 2018. The US Federal Tox 21 Program: a strategic and operational plan for continued leadership. ALTEX. 35: 163–168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tice RR, Austin CP, Kavlock RJ, Bucher JR. 2013. Improving the human hazard characterization of chemicals: a Tox21 update. Environ Health Perspect. 121:756–65. doi: 10.1289/ehp.1205784. [DOI] [PMC free article] [PubMed] [Google Scholar]

- US EPA Risk Assessment Forum. 1998. Guidelines for ecological risk assessment. U.S. Environmental Protection Agency, Washington, DC: EPA/630/R-95/002F.

- US EPA Risk Assessment Forum. 2014. Framework for human health risk assessment to inform decision-making. U.S. Environmental Protection Agency, Washington, DC: EPA/100/R-14/001.

- US EPA. 2015. ToxCast & Tox21 Summary Files from invitrodb_v2. Retrieved from https://www.epa.gov/chemical-research/toxicity-forecaster-toxcasttm-data on October 28, 2015. Data released October 2015.

- US EPA. 2018. Strategic plan to promote the development and implementation of alternative test methods within the TSCA program. US EPA Office of Chemical Safety and Pollution Prevention; EPA-740-R1-8004.

- Vandenberg LN, Ågerstrand M, Beronius A, Beausoleil C, Bergman Å, Bero LA, Bornehag CG, Boyer CS, Cooper GS, Cotgreave I, Gee D, Grandjean P, Guyton KZ, Hass U, Heindel JJ, Jobling S, Kidd KA, Kortenkamp A, Macleod MR, Martin OV, Norinder U, Scheringer M, Thayer KA, Toppari J, Whaley P, Woodruff TJ, Rudén C. 2016. A proposed framework for the systematic review and integrated assessment (SYRINA) of endocrine disrupting chemicals. Environ Health. 15:74. doi: 10.1186/s12940-016-0156-6.

- Watt ED, Judson RS. 2018. Uncertainty Quantification in ToxCast High Throughput Screening. PLOS One. (in press).

- Wetmore BA, Wambaugh JF, Allen B, Ferguson SS, Sochaski MA, Setzer RW, Houck KA, Strope CL, Cantwell K, Judson RS, LeCluyse E, Clewell HJ, Thomas RS, Andersen ME. 2015. Incorporating high-throughput exposure predictions with dosimetry-adjusted in vitro bioactivity to inform chemical toxicity testing. Toxicol Sci. 148:121–136. doi: 10.1093/toxsci/kfv171.

- Williams AJ, Grulke CM, Edwards J, McEachran AD, Mansouri K, Baker NC, Patlewicz G, Shah I, Wambaugh JF, Judson RS, Richard AM. 2017. The CompTox Chemistry Dashboard: a community data resource for environmental chemistry. J Cheminform. 61. doi: 10.1186/s13321-017-0247-6.