Abstract

Goals and methods

A simulation study investigated how ceiling and floor effects (CFE) affect the performance of Welch’s t-test, F-test, Mann-Whitney test, Kruskal-Wallis test, Scheirer-Ray-Hare test, trimmed t-test, Bayesian t-test, and the “two one-sided tests” equivalence testing procedure. The effect of CFE on the estimate of the group difference and its confidence interval, and on Cohen’s d and its confidence interval, was also evaluated. In addition, the parametric methods were applied to data transformed with the log or logit function, and their performance was evaluated. The notion of essential maximum from abstract measurement theory was used to formally define CFE, and the principle of maximum entropy was used to derive probability distributions with an essential maximum/minimum. These distributions allow the magnitude of CFE to be manipulated through a parameter. Beta, Gamma, Beta prime and Beta-binomial distributions were obtained in this way, with the CFE parameter corresponding to the logarithm of the geometric mean. The Wald distribution and ordered logistic regression were also included in the study due to their measurement-theoretic connection to CFE, even though these models lack an essential minimum/maximum. Performance in two-group, three-group and 2 × 2 factor design scenarios was investigated by fixing the group differences in terms of the CFE parameter and by adjusting the base level of CFE.

Results and conclusions

In general, bias and uncertainty increased with CFE. Most problematic were occasional instances of biased inference, which became more certain and more biased as the magnitude of CFE increased. The bias affected the estimate of the group difference, the estimate of Cohen’s d and the decisions of the equivalence testing methods. Statistical methods worked best with transformed data, although this depended on the match between the choice of transformation and the type of CFE. The log transform worked well with the Gamma and Beta prime distributions, while the logit transform worked well with the Beta distribution. Rank-based tests showed the best performance with discrete data, but it was demonstrated that even there a model derived with measurement-theoretic principles may show superior performance. The trimmed t-test showed poor performance. In the factor design, CFE prevented the detection of main effects as well as the detection of interactions. Irrespective of CFE, the F-test misidentified main effects and interactions on multiple occasions. Five different constellations of main effects and interactions were investigated for each probability distribution, and the weaknesses of each statistical method were identified and reported. As part of the discussion, the use of generalized linear models based on abstract measurement theory is recommended to counter CFE. Furthermore, the necessity of measure validation/calibration studies, which provide the knowledge of CFE needed to design and select an appropriate statistical tool, is stressed.

1 Introduction

In 2008, Schnall investigated how participants rate moral dilemmas after they had been presented with words related to the topic of cleanliness, as opposed to neutral words [1]. The study reported that participants who were primed with the concept of cleanliness found moral transgressions less bad than participants who were not primed. [2] conducted a replication study using the same methods and materials. In contrast to [1], [2] found that the mean ratings of the two groups in their study did not differ. [3] pointed out that in [2] participants provided overall higher ratings than in the original study. [3] argued that the failure of the replication study to establish a difference between the two groups was due to a ceiling effect. Since substantial proportions of both groups provided maximum ratings, it was not possible to determine a difference in rating between these two groups. [4] provided their own analyses of ceiling effects in both the original and the replication study and concluded that the ceiling effect cannot account for the failure to replicate the original finding. Other researchers shared their analyses of the ceiling effects in these two studies on their websites and on social media. Here is a quick overview of the variety of the suggested analyses: [3] showed that the mean ratings in the replication study were significantly higher than those in the original study. Furthermore, she showed that the proportion of the most extreme ratings on the 10-point scale was significantly higher in the replication study than in the original study. [4] argued that the rank-based Mann-Whitney test provides results that are identical to an analysis with Analysis of Variance (ANOVA). Furthermore, analyses without extreme values failed to reach significance as well. [5] did not find the above-mentioned analyses satisfactory and suggested an analysis with a Tobit model, which showed a non-significant effect. 
[6] argued that Schnall’s analyses do not support her conclusions. [7] investigated how ceiling effects would affect the power of a t-test. He used a graded response model to simulate data that were affected by a ceiling, similar to those obtained in the replication study. The effect size was set to the value obtained in the original study. He found that, depending on the model parametrization, the power of a t-test in the simulated replication study ranges from 70% to 84%, which should be sufficient to detect the effect. [8] performed a Bayes factor analysis and compared the quantiles. Both analyses suggested an absence of an effect in the replication study.

In our opinion, this discussion about the presence and impact of ceiling effects illustrates how relevant, yet elusive, the concept of ceiling effect is. Apparently, the only point regarding ceiling effects on which all parties agreed is that the application of parametric analyses such as ANOVA or the t-test is problematic in the presence of a ceiling effect. Yet the authors disagreed on how to demonstrate and measure the impact of ceiling effects, which makes the default application of ANOVA problematic per se. Motivated by these concerns, the current work presents a computer simulation study that investigates how various methods of statistical inference perform when the measurements are affected by ceiling and/or floor effects (CFE). The main focus is on the performance of the textbook methods: Welch’s version of the t-test, ANOVA and rank-based tests. In addition, the performance of potential candidate methods, some of which were already encountered in the discussion of the study by [1], is investigated. The hallmark of the current work is the theoretical elaboration of the concept of CFE with the help of formal measurement theory [9]. This theoretical embedding provides a backbone for the simulations and, as we further point out, the lack of such a theoretical embedding may be one of the reasons why the number and scope of simulation studies of CFE have so far been limited.

Measurement-theoretic definitions of CFE are discussed in section 1.1. Section 1.2 reviews the literature on the robustness of the textbook methods. Only a few robustness studies explicitly consider CFE. However, numerous studies investigate other factors, which may combine to create CFE. These studies in particular are considered in section 1.2. Taking stock of the material presented in the preceding sections, sections 1.3.1 and 1.3.2 justify the choice of statistical methods and the choice of the data-generating mechanism utilized by the simulations. While section 2 provides additional details on the methods and procedures, the description provided in sections 1.3.1 and 1.3.2 should be sufficient to follow the presentation of the results, and a reader interested in the results may skip directly to sections 3 and 4.

1.1 Formal definition of CFE

Consider first some informal notions of CFE. The dictionary of statistical terms by [10] provides the following entry on CFE. “Ceiling effect: occurs when scores on a variable are approaching the maximum they can be. Thus, there may be bunching of values close to the upper point. The introduction of a new variable cannot do a great deal to elevate the scores any further since they are virtually as high as they can go.” The dictionary entry in [11] (see also [12]) says: “Ceiling effect: A term used to describe what happens when many subjects in a study have scores on a variable that are at or near the possible upper limit (‘ceiling’). Such an effect may cause problems for some types of analysis because it reduces the possible amount of variation in the variable.”

We identify two crucial aspects of CFE in these quotes. First, CFE causes a “bunching” of measured values, such that the measure becomes insensitive to changes in the latent variable that it is supposed to measure. Second, CFE affects not only the expected change in the measured variable but also its other distributional properties, which may in turn affect the performance of some statistical methods. [11] mentions the variability, which one may interpret as the variance of the measured variable. [13] hypothesized that skew is the crucial property that characterizes CFE. Importantly, the informal descriptions lack a precise rationale and risk excluding less obvious and less intuitive phenomena from the definition of CFE. In section 1.1.1 we show that formal measurement theory allows us to make these (and many other) informal notions precise. Historically, research in measurement theory has been concerned with deterministic variables, and despite multiple attempts a principled extension to random variables has not been achieved. In sections 1.1.3 and 1.1.4 we review the derivation of maximum entropy distributions, which provides an extension of measurement theory to random variables and in particular allows us to derive distributions that can be used to simulate CFE and to manipulate its magnitude.

1.1.1 Measurement theory

A function ϕ from a set A of empirical objects or events to a subset of ℝ describes the assignment of numbers to these objects or events. In the context of measurement theory, ϕ is referred to as a scale. It is crucial that ϕ is chosen such that the numerical values retain the relations and properties of the empirical objects in A (i.e. ϕ is a homomorphism, see section 1.2.2 in [9]). For instance, if the empirical objects are ordered, such that a ⪯ b for some a, b ∈ A, then it is desirable that ϕ satisfies a ⪯ b if and only if (iff) ϕ(a) ≤ ϕ(b). Measurement theory describes various scale types and the properties of the empirical events that are necessary and sufficient to construct the respective scale type. In addition, given a set of properties of empirical objects, multiple choices of ϕ may be possible, and measurement theory delineates the set of such permissible functions. A scale that preserves the order, i.e. a ⪯ b iff ϕ(a) ≤ ϕ(b), is referred to as an ordinal scale. If ϕ is an ordinal scale, then ϕ′(a) = f(ϕ(a)) is also an ordinal scale for all a ∈ A and for any strictly increasing f (ibid. p. 15). Note that the set of possible scales is described as a set of possible transformations f of some valid scale ϕ. Other notable instances are the ratio scale and the interval scale. In addition to order, a ratio scale preserves a concatenation operation ∘ such that ϕ(a ∘ b) = ϕ(a) + ϕ(b). A ratio scale is specified up to a choice of unit, i.e. ϕ′(a) = αϕ(a) with α > 0. The required structure of empirical events is called an extensive structure (ibid. chapter 3). In some situations it is not possible to take direct measurements of the empirical objects of interest; however, one may measure pairwise differences or intervals between the empirical objects, say a ⊖ b or c ⊖ d. Then one may construct an interval scale given that a ⊖ b ⪯ c ⊖ d iff ϕ(a ⊖ b) ≤ ϕ(c ⊖ d) and ϕ(a ⊖ c) = ϕ(a ⊖ b) + ϕ(b ⊖ c) for all a, b, c, d ∈ A (ibid. p. 147). The set of permissible transformations is given by ϕ′(a) = αϕ(a) + β with β ∈ ℝ and α > 0.
The corresponding structure is called a difference structure (ibid. chapter 4).
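The difference between the three scale types can be made concrete with a small numerical check. The sketch below is purely illustrative: the objects a–d and their values are hypothetical, and the transformations (a cubed logarithm, 3ϕ and 3ϕ − 10) are arbitrary members of the respective permissible sets. It checks which numerical statements survive which transformation.

```python
import math

# Hypothetical values of four objects a-d on a ratio scale phi.
phi = {"a": 1.0, "b": 2.5, "c": 4.0, "d": 7.0}

def transform(scale, f):
    """Apply a candidate transformation f to every scale value."""
    return {k: f(v) for k, v in scale.items()}

def same_order(s1, s2):
    """True if both scales order every pair of objects identically."""
    return all((s1[x] <= s1[y]) == (s2[x] <= s2[y]) for x in s1 for y in s1)

def ratio_of(s):
    """A ratio statement: how many times larger b is than a."""
    return s["b"] / s["a"]

def interval_ratio(s):
    """A ratio of intervals: (d - c) relative to (b - a)."""
    return (s["d"] - s["c"]) / (s["b"] - s["a"])

ordinal = transform(phi, lambda v: math.log(v) ** 3)  # a strictly increasing f
ratio = transform(phi, lambda v: 3.0 * v)             # alpha * phi with alpha > 0
interval = transform(phi, lambda v: 3.0 * v - 10.0)   # alpha * phi + beta

print(same_order(phi, ordinal))                                      # True: order survives
print(math.isclose(ratio_of(phi), ratio_of(ratio)))                  # True: ratios survive rescaling
print(math.isclose(ratio_of(phi), ratio_of(interval)))               # False: ratios do not survive a shift
print(math.isclose(interval_ratio(phi), interval_ratio(interval)))   # True: ratios of intervals survive
```

Only ratios of intervals are meaningful on an interval scale, which is why the shift β destroys the plain ratio b/a but not (d − c)/(b − a).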

Consider concatenation again. The concatenation operation in length measurement can be performed by placing two rods end to end. In weight measurement the concatenation may be performed by placing two objects on the same pan of a balance scale. As [9] (chap. 3.14) point out, finding and justifying a concatenation operation in the social sciences often poses difficulties. Furthermore, it is not necessary to map concatenation to addition. For instance, taking ψ = exp(ϕ), with interval scale ϕ, translates addition on ℝ into multiplication on ℝ⁺. More generally, any strictly monotonic function f may be used to obtain a valid numerical representation ψ(a) = f−1(ϕ(a)) with a (possibly non-additive) concatenation formula ψ(a ∘ b) = f−1(f(ψ(a)) + f(ψ(b))). [9] (chap. 3.7.1) make use of this fact when considering the measurement of relativistic velocity (the corresponding additive quantity is known as rapidity). Relativistic velocity is of interest in the present work because it is bounded: it cannot exceed the speed of light. The upper bound poses difficulties for the additive numerical representation, since an extensive structure assumes positivity of concatenation, i.e. a ≺ a ∘ b for all a, b ∈ A (axiom 5 in definition 1 on p. 73 ibid.). However, if z is the velocity of light, we have z ∼ z ∘ a, which violates positivity. [9] resolve this issue by mapping velocity from a bounded range to an unbounded range, performing addition there and mapping the result back to the bounded range. Formally, concatenation is given by ([9] chapter 3, theorem 6)

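$$\phi(a \circ b) = \phi(z_u)\, f_u^{-1}\left[f_u\left(\frac{\phi(a)}{\phi(z_u)}\right) + f_u\left(\frac{\phi(b)}{\phi(z_u)}\right)\right] \tag{1}$$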

where fu is a strictly increasing function from [0, 1] to ℝ⁺ that is unique up to a positive multiplicative constant. As [9] point out, taking the transformation fu = tanh−1 results in the velocity-addition formula of relativistic physics. However, for our purposes this choice is arbitrary, and Eq 1 provides us with the general result, which we will use in the current work. [9] call an element zu which satisfies zu ∼ zu ∘ a (for all a ∈ A) an essential maximum. [9] further show that, given an extensive structure with an essential maximum, there always exists a strictly increasing function fu such that Eq 1 is satisfied.
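To see that the choice fu = tanh−1 reproduces relativistic velocity addition, the sketch below assumes that Eq 1 has the form ϕ(a ∘ b) = ϕ(zu) fu−1(fu(ϕ(a)/ϕ(zu)) + fu(ϕ(b)/ϕ(zu))), with ϕ(zu) set to the speed of light, and compares it with the textbook formula (u + v)/(1 + uv/c²). It also checks that fu matters only up to a positive multiplicative constant. The function names are ours and purely illustrative.

```python
import math

C = 299_792_458.0  # speed of light in m/s, playing the role of phi(z_u)

def concat_bounded(u, v, z=C, f=math.atanh, f_inv=math.tanh):
    """Bounded concatenation: rescale to [0, 1), add in the range of f, map back."""
    return z * f_inv(f(u / z) + f(v / z))

def einstein_addition(u, v, c=C):
    """Relativistic addition of two collinear velocities."""
    return (u + v) / (1 + u * v / c**2)

u, v = 0.6 * C, 0.7 * C
print(round(concat_bounded(u, v) / C, 4))     # 0.9155
print(round(einstein_addition(u, v) / C, 4))  # 0.9155

# f_u is unique only up to a positive factor: rescaling it leaves the result unchanged.
f5 = lambda x: 5.0 * math.atanh(x)
f5_inv = lambda y: math.tanh(y / 5.0)
print(math.isclose(concat_bounded(u, v, f=f5, f_inv=f5_inv), concat_bounded(u, v)))  # True
```

The concatenated velocity stays below C for any inputs below C, because tanh maps the unbounded sum back into [0, 1).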

Next, we consider several straightforward extensions of the result by [9]. First, we wish to introduce extensive structures with an essential minimum zl. Note that even though velocity has a lower bound at zero, this lower bound is not an essential minimum, because the concatenation is positive and a repeated concatenation results in increasing numerical values. As a consequence, essential maximum or essential minimum is a property related to a concatenation operation rather than a property of the numerical range of a scale. If we distinguish between zu and zl we need to distinguish between ∘u and ∘l and in turn between fu and fl. Of course, this does not preclude the possibility that in some particular application it may be true that fu = fl.

Consider a modification of Eq 1 to describe an essential minimum. Qualitatively, an essential minimum manifests, similar to an essential maximum, the property zl ∼ zl ∘l a. In this case, however, ∘l is a negative operation in the sense that a ≻ a ∘l b for all a, b ∈ A. The results are then analogous to those of the velocity derivation. The main difference is that the scale ϕl maps to [ϕl(zl), 0] rather than to [0, ϕu(zu)]. However, both expressions ϕl(a)/ϕl(zl) and ϕu(a)/ϕu(zu) map into the range [0, 1], and hence the only modification of Eq 1 is to add subscripts:

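$$\phi_l(a \circ_l b) = \phi_l(z_l)\, f_l^{-1}\left[f_l\left(\frac{\phi_l(a)}{\phi_l(z_l)}\right) + f_l\left(\frac{\phi_l(b)}{\phi_l(z_l)}\right)\right] \tag{2}$$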

Thus, if a structure has an essential minimum, then a strictly increasing function fl exists such that Eq 2 is satisfied.

Second, we wish to extend Eq 1 to situations in which the minimal element zl (irrespective of whether it is essential minimum or not) is non-zero. We do so by first translating the measured values from range [ϕ(zl), ϕ(zu)] to [0, ϕ(zu) − ϕ(zl)] and then to the domain of fu i.e. [0, 1]:

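$$\phi_u(a \circ_u b) = \phi_u(z_l) + \big(\phi_u(z_u) - \phi_u(z_l)\big)\, f_u^{-1}\left[f_u\left(\frac{\phi_u(a) - \phi_u(z_l)}{\phi_u(z_u) - \phi_u(z_l)}\right) + f_u\left(\frac{\phi_u(b) - \phi_u(z_l)}{\phi_u(z_u) - \phi_u(z_l)}\right)\right] \tag{3}$$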

Third, analogously to the second step, we modify Eq 2 to apply in situations with a non-zero maximal element zu:

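$$\phi_l(a \circ_l b) = \phi_l(z_u) + \big(\phi_l(z_l) - \phi_l(z_u)\big)\, f_l^{-1}\left[f_l\left(\frac{\phi_l(a) - \phi_l(z_u)}{\phi_l(z_l) - \phi_l(z_u)}\right) + f_l\left(\frac{\phi_l(b) - \phi_l(z_u)}{\phi_l(z_l) - \phi_l(z_u)}\right)\right] \tag{4}$$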

Above, we distinguished between a scale with an essential minimum ϕl and a scale with an essential maximum ϕ, which was labelled more accurately as ϕu in Eq 3. This distinction was necessary because the two scales in Eqs 3 and 4 map to different number ranges. However, and this is the fourth extension, we need to consider a single scale which has both an essential minimum and an essential maximum. To do so, consider a scale ϕ with range [ϕ(zl), ϕ(zu)]. Then both Eqs 3 and 4 apply, and we simply set ϕl = ϕu = ϕ.

Fifth, a further simplification can be achieved by assuming that essential minima and essential maxima affect concatenation in an identical manner, i.e. f = αu fu = αl fl for some positive constants αl and αu. Eqs 3 and 4 then simplify respectively to:

$$g[\phi(a \circ_u b)] = f^{-1}\big(f(g[\phi(a)]) + f(g[\phi(b)])\big) \tag{5}$$

$$1 - g[\phi(a \circ_l b)] = f^{-1}\big(f(1 - g[\phi(a)]) + f(1 - g[\phi(b)])\big) \tag{6}$$

for all a, b ∈ A. To simplify the notation, we introduced the function g[x] = (x − ϕ(zl))/(ϕ(zu) − ϕ(zl)), which maps the range [ϕ(zl), ϕ(zu)] onto [0, 1]. This notation highlights that, in terms of the measurement ϕ, the operations ∘l and ∘u are symmetric around the line g[x] = 0.5. To illustrate this with an example, consider the popular choice fl(x) = fu(x) = f(x) = −log(1 − x) with x restricted to [0, 1]. This is a strictly increasing function from [0, 1] to ℝ⁺ and hence provides a valid choice. The left panel in Fig 1 shows f(1 − g[ϕ(a)]) = −log(g[ϕ(a)]) and f(g[ϕ(a)]) = −log(1 − g[ϕ(a)]) as a function of g[ϕ(a)] ∈ [0, 1]. As noted, the two curves manifest symmetry around g[ϕ(a)] = 0.5.

Fig 1. Examples of fu and fl.

The panels show the functions fl(1 − g[ϕ]) and fu(g[ϕ]). In the left panel fl(x) = fu(x) = −log(1 − x), while in the right panel fl(x) = fu(x) = log(x/(1 − x)).
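For the choice f(x) = −log(1 − x), the two concatenations have a simple closed form on the rescaled measurement g[ϕ], assuming g[x] = (x − ϕ(zl))/(ϕ(zu) − ϕ(zl)) as implied by the construction of Eqs 3 and 4. Since f−1(y) = 1 − exp(−y), concatenation towards the ceiling gives g[ϕ(a ∘u b)] = 1 − (1 − g[ϕ(a)])(1 − g[ϕ(b)]), while concatenation towards the floor gives g[ϕ(a ∘l b)] = g[ϕ(a)] g[ϕ(b)]. The sketch below implements both operations on a hypothetical 1–10 rating scale and checks the mirror symmetry around g = 0.5; the function names are ours.

```python
import math

def f(x):
    """f(x) = -log(1 - x), strictly increasing from [0, 1) onto [0, inf)."""
    return -math.log1p(-x)

def f_inv(y):
    """Inverse of f: 1 - exp(-y)."""
    return -math.expm1(-y)

def g(x, lo, hi):
    """Rescale a measurement from [phi(z_l), phi(z_u)] to [0, 1]."""
    return (x - lo) / (hi - lo)

# Inputs must lie strictly inside (lo, hi) so that f(g) and f(1 - g) are finite.
def concat_upper(x, y, lo, hi):
    """Concatenation towards the essential maximum (ceiling), on the phi scale."""
    gz = f_inv(f(g(x, lo, hi)) + f(g(y, lo, hi)))
    return lo + (hi - lo) * gz

def concat_lower(x, y, lo, hi):
    """Concatenation towards the essential minimum (floor), on the phi scale."""
    one_minus_gz = f_inv(f(1 - g(x, lo, hi)) + f(1 - g(y, lo, hi)))
    return hi - (hi - lo) * one_minus_gz

lo, hi = 1.0, 10.0   # hypothetical 10-point rating scale
print(round(concat_upper(7.0, 4.0, lo, hi), 6))  # 8.0
print(round(concat_lower(7.0, 4.0, lo, hi), 6))  # 3.0

# Mirror symmetry: reflecting both inputs about the scale midpoint swaps the two operations.
mirror = lambda x: lo + hi - x
print(math.isclose(concat_lower(7.0, 4.0, lo, hi),
                   mirror(concat_upper(mirror(7.0), mirror(4.0), lo, hi))))  # True
```

Repeated concatenation with ∘u pushes values towards 10 without ever reaching it, and ∘l does the same towards 1.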

Above, we assumed that ϕ is a ratio scale. As a final modification we consider the case when ϕ is an interval scale. The result for an interval scale and the corresponding difference structure is provided in chapter 4.4.2 in [9]. The result is identical to Eq 1 except that f is a function to ℝ rather than to ℝ⁺ and that f is unique up to a linear transformation. Hence, if ϕ is an interval scale and ∘l = ∘u, then f = αu fu + βu = αl fl + βl (with αu, αl > 0 and βu, βl ∈ ℝ). Again, to provide an example of an interval scale with an essential maximum and an essential minimum, consider a case with the logit function fl(x) = fu(x) = f(x) = log(x/(1 − x)). The right panel in Fig 1 shows f(1 − g[ϕ(a)]) and f(g[ϕ(a)]) as a function of g[ϕ(a)] ∈ [0, 1]. The logit function is a strictly increasing function from [0, 1] to ℝ and is hence a valid model for a difference structure with an essential maximum and an essential minimum. The set of permissible transformations of f is given by f(x) = α log(x/(1 − x)) + β.

1.1.2 Structure with Tobit maximum

The measurement structures discussed so far have the notable property that it is not possible to obtain the maximal element by concatenation of two non-maximal elements. [9] note that “we do not know of any empirical structure in which the concatenation of two such elements is an essential maximum”. [9] (theorem 7 on pp. 95–96) nevertheless provide the results for such a case, which we refer to as an extensive structure with a Tobit maximum. This measurement structure is implicit in the popular Tobit model, which is sometimes discussed in connection with CFE, and hence we briefly present it. The scale ϕ must satisfy order monotonicity and monotonicity of concatenation when the concatenation result is not equal to the Tobit maximum. In the remaining case, i.e. when zu ∼ a ∘ b, the concatenation is represented numerically as ϕ(zu) = inf(ϕ(a) + ϕ(b)), where inf is the infimum over all a, b ∈ A which satisfy zu ∼ a ∘ b. The scale ϕ is unique up to multiplication by a positive constant. Extensions to extensive structures with a Tobit minimum and to difference structures with a Tobit maximum and/or minimum are straightforward and follow the rationale presented in the previous section.

1.1.3 Random variables with CFE

The extensive and difference structures with essential minimum/maximum introduce a crucial aspect of CFE that was exemplified by the dictionary entry on the ceiling effect by [10]. Formally, we may interpret the “introduction of a new variable” that elevates the previous level of the variable as a concatenation with some other element. Then we may look at the difference between the new level f−1(f(x) + f(h)) and the old level x. Notably, |f−1(f(x) + f(h)) − x| → 0 as x → 1 (for h ≠ 0), which may be seen as a formal notion of “bunching”. Crucially, measurement theory suggests that the concept of a boundary effect implicitly assumes the existence of a concatenation operation which has a non-additive numerical representation.
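The bunching limit can be checked numerically. Assuming f(x) = −log(1 − x), which is one admissible choice among many, the increment caused by concatenating an element of fixed value h works out to f−1(f(x) + f(h)) − x = 1 − (1 − x)(1 − h) − x = h(1 − x), which shrinks to zero as x approaches the ceiling at 1. The value h = 0.3 is arbitrary.

```python
import math

def increment(x, h, f, f_inv):
    """Change of the rescaled measurement x after concatenation with an element of value h."""
    return f_inv(f(x) + f(h)) - x

f = lambda x: -math.log1p(-x)      # f(x) = -log(1 - x)
f_inv = lambda y: -math.expm1(-y)  # f^{-1}(y) = 1 - exp(-y)

h = 0.3
for x in (0.5, 0.9, 0.99, 0.999):
    print(x, round(increment(x, h, f, f_inv), 6))
# 0.5 0.15
# 0.9 0.03
# 0.99 0.003
# 0.999 0.0003
```

The same element that raises a mid-range value by 0.15 barely moves a value close to the ceiling, which is exactly the insensitivity described in the dictionary entries.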

The measurement-theoretic account fits well with the description of CFE by [10]. However, it misses the other highlighted aspects of CFE: the distributional properties of the measured variable, such as the variance reduction or the increased skew. To formally approach the concept of reduced variation, and the influence of CFE on the distribution of the measured values more generally, we need to introduce the concept of random variables (RVs). Recall that the scale ϕ maps from the set of empirical events to some interval. Instead, we consider ϕ to map from empirical events to a set of RVs over the same interval. We are not aware of work that explores such a probabilistic formulation of measurement theory, nor do we wish to explore such an approach in detail in the current work. Our plan is to point out that the above-listed results from measurement theory, along with a few straightforward assumptions about the probabilistic representation, allow us to derive many of the most widely used probability distributions. Crucially, the derivation determines which parameters, and under what transformation, represent the concatenation operation. This in turn allows us to specify and justify the choice of data generators used in the simulations and the choice of the metrics used to evaluate the performance on the simulated data.

When the scale maps to a set of RVs, constraints such as ϕ(a ∘ b) = ϕ(a) + ϕ(b) or a ⪯ b iff ϕ(a) ≤ ϕ(b) are in general not sufficient to determine the distribution of ϕ. The first step is to formulate the constraints in terms of summaries of RVs. We choose the expected value E[X] as data summary. The expected value is linear in the sense that E[aX + b] = aE[X] + b and E[X] + E[Y] = E[X + Y] (where X, Y are RVs with finite expectations, not necessarily independent, and a, b are constants). Due to the linearity property, we view the expected value as a data summary that is applicable to scales which represent concatenation by addition. See chapter 2 in [14] and chapter 22 in [15] for similar views on the role of expectation in additive numerical representations.

As a consequence, we modify the constraint a ⪯ b iff ϕ(a) ≤ ϕ(b) to a ⪯ b iff E[ϕ(a)] ≤ E[ϕ(b)]. We modify the constraint ϕ(a ∘ b) = ϕ(a) + ϕ(b) to E[ϕ(a ∘ b)] = E[ϕ(a)] + E[ϕ(b)]. Effectively, the above constraints state that ϕ is a parametric distribution with a parameter equal to E[ϕ(a)] for each a ∈ A and in which the parameter satisfies monotonicity, additivity, or some additional property required by the structure.

Above, we saw that in the presence of CFE, concatenation can’t be represented by addition. Instead, we apply the expectation to the values transformed with f which supports addition. For instance Eq 3 translates into

$$E\big[f_u(g[\phi(a \circ_u b)])\big] = E\big[f_u(g[\phi(a)])\big] + E\big[f_u(g[\phi(b)])\big] \tag{7}$$

Thus, we require that the distribution is parametrized by cu = E[fu(g[ϕ(a)])] and/or cl = E[fl(1 − g[ϕ(a)])], depending on whether the structure has an essential maximum, an essential minimum or both.

Consider again the dictionary descriptions of CFE. One may interpret these in terms of random variables as follows. With repeated concatenation, the expected value of the measured values approaches the boundary. Furthermore, as it approaches the boundary, a concatenation of an equivalent object/event results in an increasingly smaller adjustment to the expected value. Finally, [11] stated that the variability decreases as the values approach the boundary, which one may interpret as the variance of the random variable approaching zero upon repeated concatenation.

Consider a random variable Y(cu) ∈ [yl, yu] with a ceiling effect at yu and parameter cu. We may investigate whether the stated requirements are satisfied by checking whether the following formal conditions of a ceiling effect hold:

for every x > 0

.

Instead of conditions 3 and 4, one may alternatively consider whether Var[Y(cu)] and Skew[Y(cu)] are respectively increasing and decreasing functions of cu. Eq 7 implies the existence of a sequence of random variables Y(cu) that converges to ϕ(zu) as cu → ∞. By the dominated convergence theorem (chapter 9.2 in [16]), the second condition then holds, but instead of the first condition we obtain E[Y(cu)] → E[ϕ(zu)] as cu → ∞. By additionally assuming E[ϕ(zu)] = yu, one obtains both the first and the third condition. Indeed, the third condition is a direct consequence of the first condition. Analogous results follow for a variable with a floor effect at yl with the limiting process cu → −∞. To conclude, the second condition follows immediately from the measurement-theoretic considerations; however, to determine the remaining conditions one has to consider specific distributions of Y, which we do next.
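The step from the first to the third condition can be made explicit, assuming (as the informal description suggests) that the third condition requires Var[Y(cu)] → 0. For any RV Y on [yl, yu] with mean μ, the Bhatia-Davis inequality gives Var[Y] ≤ (yu − μ)(μ − yl), so the variance is squeezed to zero once the mean approaches yu. The sketch below illustrates this with Beta(a, b) distributions on [0, 1] that move towards the ceiling as a grows while b = 2 is held fixed; this path through the parameter space is chosen for illustration only and does not follow the cu parametrization.

```python
def beta_mean_var(a, b):
    """Mean and variance of a Beta(a, b) random variable."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, var

def bhatia_davis_bound(mean, lo=0.0, hi=1.0):
    """Upper bound on the variance of any random variable on [lo, hi] with this mean."""
    return (hi - mean) * (mean - lo)

b = 2.0
for a in (1.0, 10.0, 100.0, 1000.0):
    mean, var = beta_mean_var(a, b)
    bound = bhatia_davis_bound(mean)
    print(f"a={a:6.0f}  mean={mean:.4f}  var={var:.2e}  bound={bound:.2e}")
```

As the mean approaches 1, the bound (and with it the variance) collapses to zero, whichever distribution generates the values.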

1.1.4 Maximum entropy distributions with CFE

In this section we present an approach that allows us to derive the probability distribution Y(cl, cu) given the functions fl and fu. We adapt the principle of maximum entropy (POME, [17–19]) to obtain probability distributions with the desired parametrization. According to POME, if nothing else is known about the distribution of Y except a set of N constraints of the form ci = E[gi(Y)], the domain [yl, yu] of Y, and the fact that it is a probability distribution (i.e. ∫ p(y) dy = 1 over [yl, yu]), then one should select the distribution that maximizes the entropy subject to the stated constraints. Mathematically, this is achieved with the help of the calculus of variations ([20] chapter 12). The POME derivation results in a distribution with N parameters, and the derivation fails if the constraints are inconsistent. The procedure is similar to, but somewhat more general than, the alternative method of deriving a parametric distribution that is a member of the exponential family with the help of constraints (for applications of this method see for instance [21]). POME allows one to derive distributions that are not part of the exponential family. To mention some general examples, the uniform distribution is the maximum entropy distribution of an RV on a closed interval without any additional constraints. The normal distribution is the maximum entropy distribution of an RV Y on the real line with constraints cm = E[Y] and cv = E[(Y − cm)²] ([19] section 3.1.1).
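The POME recipe can be sketched numerically for a discrete support. With constraints on E[log Y] and E[log(1 − Y)], the maximum entropy solution has the form p(y) ∝ exp(λ1 log y + λ2 log(1 − y)) = y^λ1 (1 − y)^λ2, and the Lagrange multipliers λ can be found by minimizing the convex dual function log Z(λ) − λ1 cl − λ2 cu. The sketch below does this with a damped Newton iteration on the grid {1/n, …, (n − 1)/n}. The grid size, the target multipliers (1.5, 3) and the solver itself are our illustrative assumptions; they are not the analytic derivation used in [19] or [22].

```python
import math

def stats(y):
    """Constraint functions T(y) = (log y, log(1 - y))."""
    return (math.log(y), math.log1p(-y))

def gibbs(lams, grid):
    """Maximum entropy form p(y) ∝ exp(lams . T(y)) and its log normalizer log Z."""
    logw = [lams[0] * t0 + lams[1] * t1 for t0, t1 in map(stats, grid)]
    m = max(logw)
    w = [math.exp(v - m) for v in logw]
    z = sum(w)
    return [wi / z for wi in w], m + math.log(z)

def moments(p, grid):
    """Mean vector and covariance matrix of T(Y) under p."""
    T = [stats(y) for y in grid]
    mu = [sum(pi * t[j] for pi, t in zip(p, T)) for j in range(2)]
    cov = [[sum(pi * (t[i] - mu[i]) * (t[j] - mu[j]) for pi, t in zip(p, T))
            for j in range(2)] for i in range(2)]
    return mu, cov

def dual(lams, c, grid):
    """Convex dual objective: log Z(lams) - lams . c."""
    return gibbs(lams, grid)[1] - lams[0] * c[0] - lams[1] * c[1]

def pome_discrete(c, grid, iters=100, tol=1e-9):
    """Find multipliers such that E[log Y] = c[0] and E[log(1 - Y)] = c[1]."""
    lams = [0.0, 0.0]                      # start from the uniform distribution
    for _ in range(iters):
        p, _ = gibbs(lams, grid)
        mu, H = moments(p, grid)           # gradient of log Z is mu, Hessian is Cov[T]
        grad = [mu[0] - c[0], mu[1] - c[1]]
        if max(abs(gj) for gj in grad) < tol:
            break
        det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
        step = [(H[1][1] * grad[0] - H[0][1] * grad[1]) / det,
                (H[0][0] * grad[1] - H[1][0] * grad[0]) / det]
        t, f0 = 1.0, dual(lams, c, grid)   # backtracking keeps the dual decreasing
        while t > 1e-8:
            cand = [lams[0] - t * step[0], lams[1] - t * step[1]]
            if dual(cand, c, grid) <= f0:
                break
            t *= 0.5
        lams = cand
    return lams

n = 50
grid = [k / n for k in range(1, n)]        # endpoints excluded: log(0) is undefined
target, _ = gibbs([1.5, 3.0], grid)        # hypothetical "true" distribution
c, _ = moments(target, grid)               # its constraint values (c_l, c_u)
lams = pome_discrete(c, grid)
print([round(l, 6) for l in lams])         # recovers the multipliers 1.5 and 3.0
```

The recovered distribution is a discrete Beta-type distribution on the grid, which anticipates the discrete case discussed at the end of this section.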

Table 1 provides an overview of maximum entropy distributions found in the POME literature that are derived from constraints posed by structures with an essential minimum and/or essential maximum and are thus relevant in the current context. For more details on the derivation of the listed maximum entropy distributions see [19] and [22]. We make the following observations. First, the popular choice fu = fl = −log(1 − Y) translates into the constraints cu = E[log(1 − Y)] and cl = E[log Y], which correspond to the logarithm of the geometric mean of 1 − Y and of Y, respectively. The sole exception in the table is the Logit-normal distribution, which uses fu = fl = log(Y/(1 − Y)) to model CFE.

Table 1. Maximum entropy distributions derived from constraints posed by structures with essential minimum and/or essential maximum.

| distribution | range | constraints on cu and cl | additional constraint | PDF p(Y = y) | parameters |
|---|---|---|---|---|---|
| Beta | Y ∈ [0, 1] | cu = E[log(1 − Y)], cl = E[log(Y)] | | B(a, b)^−1 y^(a−1) (1 − y)^(b−1) | cu = ψ(b) − ψ(a + b), cl = ψ(a) − ψ(a + b) |
| Logit-normal | Y ∈ [0, 1] | cu = cl = E[log(Y/(1 − Y))] | cv = Var[log(Y/(1 − Y))] | | |
| Truncated Gamma | Y ∈ [0, 1] | cl = E[log(Y)] | cm = E[Y] | | |
| Generalized Gamma | Y ∈ [0, ∞] | cl = E[log(Y)] | cn > 0 | | cl = log b + ψ(a)/cn |
| Beta Prime | Y ∈ [0, ∞] | cl = E[log(Y)] | cn = E[log(1 + Y)] | B(a, b)^−1 y^(a−1) (1 + y)^(−a−b) | cl = ψ(a) − ψ(b), cn = ψ(a + b) − ψ(b) |
| Log-normal | Y ∈ [0, ∞] | cl = E[log(Y)] | cv = Var[log(Y)] | | |
| Generalized Geometric | Y ∈ {0, 1, 2, …} | cl = E[log(Y)] | cm = E[Y] | D(a, b)^−1 a^y y^b | |
| Discrete Beta | Y ∈ {0, 1, …, cn} | cu = E[log(1 − Y)], cl = E[log(Y)] | | D(a, b)^−1 y^a (1 − y)^b | |
| Beta-binomial | Y ∈ {0, 1, …, cn} | cu = E[log(1 − Q)], cl = E[log(Q)] | E[Y] = q, q ∼ Beta(a, b) | | cu = ψ(b) − ψ(a + b), cl = ψ(a) − ψ(a + b) |

PDF: probability density function (probability mass function for discrete distributions). B is the beta function, ψ is the digamma function, and D(a, b) is the normalizing constant of the respective discrete distribution.
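The digamma expressions for the Beta and Beta prime rows can be checked by simulation using only the Python standard library. The sketch below assumes the standard Beta(a, b) density B(a, b)−1 y^(a−1)(1 − y)^(b−1), for which E[log Y] = ψ(a) − ψ(a + b) and E[log(1 − Y)] = ψ(b) − ψ(a + b), and obtains a Beta prime(a, b) variate as X/(1 − X) with X ∼ Beta(a, b). The `digamma` helper is our own implementation (recurrence plus asymptotic series), not a library function, and a = 2, b = 5 are arbitrary.

```python
import math
import random

def digamma(x):
    """Digamma psi(x) for x > 0: shift x up to >= 6 by recurrence, then use the asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x
        x += 1.0
    f = 1.0 / (x * x)
    series = f * (1 / 12 - f * (1 / 120 - f * (1 / 252 - f * (1 / 240 - f / 132))))
    return acc + math.log(x) - 0.5 / x - series

random.seed(0)
a, b, n = 2.0, 5.0, 100_000
xs = [random.betavariate(a, b) for _ in range(n)]

# Beta(a, b): E[log Y] and E[log(1 - Y)].
mc_log_y = sum(math.log(x) for x in xs) / n
mc_log_1my = sum(math.log1p(-x) for x in xs) / n
print(mc_log_y, digamma(a) - digamma(a + b))        # analytic value: psi(2) - psi(7) = -1.45
print(mc_log_1my, digamma(b) - digamma(a + b))      # analytic value: psi(5) - psi(7) = -11/30

# Beta prime(a, b) as X / (1 - X): E[log Y'] and E[log(1 + Y')], where log(1 + Y') = -log(1 - X).
mc_log_yp = sum(math.log(x / (1 - x)) for x in xs) / n
mc_log_1pyp = sum(-math.log1p(-x) for x in xs) / n
print(mc_log_yp, digamma(a) - digamma(b))
print(mc_log_1pyp, digamma(a + b) - digamma(b))
```

Each pair of printed numbers should agree up to Monte Carlo error, which is of the order of 0.003 for this sample size.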

Second, the maximum entropy distributions include a large portion of the most popular distributions on [0, 1] and [0, ∞]. The Exponential, Weibull, Gamma, F, Log-logistic and Power function distributions are included in the Generalized Gamma family, in the Beta Prime family, or in both [23]. A generalized version of the Beta prime distribution can be obtained with a constraint . [22] went further and showed that a maximum entropy distribution with constraints cl = E[log(Y)] and includes the Generalized Gamma family (for cp → 0) and the generalized Beta Prime family (cp = 1). While [22] do not consider the case cp = −1, it is straightforward to see that assuming results in a generalized form of the Beta distribution. The resulting distributions and the relations between them are discussed in more detail by [23].

Third, the parameters cv may be interpreted as variance parameters, or more generally as constraints that introduce a distance metric. Notably, the variance is formulated over f(Y). Thus, while cl can be interpreted as the expected log-odds (Logit-normal) or as the logarithm of the geometric mean (Log-normal), cv can respectively be interpreted as the log-odds variance or the geometric variance. The introduction of these parameters is consistent with the measurement-theoretic framework, except that the constraints are expressed in terms of the variance cv = Var[f(Y)] rather than in terms of the expectation cl = E[f(Y)].

Fourth, the interpretation of the cm parameters is interesting as well. One possibility is to view cm as an additional constraint unrelated to CFE. As [24] discuss in the case of gamma distribution, the parameter cl controls the generation of small values while cm controls the generation of large values. This interpretation is similar to the interpretation of cl and cu of beta distribution even though there is no essential maximum in the former case as opposed to the latter case.

Fifth, one may illustrate the similarity between cu of Beta and cm of Gamma distribution in one additional way. Consider a generalization of Beta distribution with a scaling parameter b = ϕ(zu) such that Y ∈ [0, b]. As detailed in [23] Gamma and some other distributions can be obtained from a generalized Beta by constructing the limit b → ∞. The parameter cu of Beta translates into cm of Gamma.

Sixth, it’s possible for two notationally distinct constraints to result in the same probability distribution, albeit with different parametrization. For instance, constraints and imply a Generalized Gamma distribution with a somewhat different parametrization than that of the Generalized Gamma distribution listed in the table: cl = cn log b + ψ(a).

Seventh, recall that fl and fu are defined up to a scaling constant (extensive structure) or up to a linear transformation (difference structure). As a consequence, cl and cu are known only up to a scale or up to a linear transformation, i.e. cl = βl + αlE[fl(Y)]. In a similar manner, one may modify the constraints cu or even cm so that the set of permissible transformations of f is explicit. α and β are not identifiable in addition to cl, and their introduction does not affect the derivation of the maximum entropy distribution. Nevertheless, it may be possible to parametrize the distribution with α and/or β instead of some nuisance parameter. Consider the Generalized Gamma distribution with constraints and . Note that we may introduce the transform cl = cnE[log(Y)] so that cn can be interpreted as the scale/unit of cl. Finally, one may sacrifice the parameter cm and introduce a shift parameter, say cβ, such that cl = cβ + cnE[log(Y)]. From the formula for cl in Table 1 it follows that cβ = cn log b = log cm − log a.

Eighth, as illustrated with the case of the truncated Gamma distribution, it is straightforward to introduce a maximum to the distribution while maintaining the floor effect. Note, though, that the maximum thus introduced is not an essential maximum, and truncation cannot be used to introduce CFE. This is perhaps best seen in the case of the truncated normal distribution with range [0, ∞), which does not satisfy the CFE conditions listed in the previous section.

Finally, it is straightforward to apply the above ideas to discrete measurement. While the values of Y are discrete, the values E[f(Y)] that are part of the constraints are continuous. The maximum entropy derivation provides results analogous to those for continuous distributions. Constraints cl = E[log Y] and cm = E[Y] with Y ∈ {0, 1, …} lead to the generalized geometric distribution ([19] section 2.1d). Constraints cl = E[log Y] and cu = E[log(1 − Y)] result in a discrete version of the Beta distribution with Y ∈ {0, 1/n, 2/n, …, (n − 1)/n, 1} and . The discrete Beta distribution is seldom used in applied work, perhaps because p(Y = 0) = p(Y = 1) = 0. As a more popular and more plausible alternative, we included the Beta-binomial distribution, which can be obtained by sampling q from a Beta distribution parametrized by cl and cu and then sampling y ∈ {0, 1, …, n} from a Binomial distribution with proportion parameter q and . As described in [25], the binomial distribution can be seen as a maximum entropy distribution with E[Y] = q and with additional assumptions about the discretization process.
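The two-stage construction of the Beta-binomial distribution can be sketched as follows. The values of α, β and n are illustrative; cl and cu are computed from the standard Beta identities cl = ψ(α) − ψ(α + β) and cu = ψ(β) − ψ(α + β), and the simulated frequencies are compared with SciPy's closed-form `betabinom` pmf.

```python
import numpy as np
from scipy import stats
from scipy.special import digamma

def beta_constraints(alpha, beta):
    """cl = E[log q] and cu = E[log(1 - q)] for q ~ Beta(alpha, beta)."""
    total = digamma(alpha + beta)
    return digamma(alpha) - total, digamma(beta) - total

alpha, beta, n = 0.8, 3.0, 10          # illustrative values
rng = np.random.default_rng(42)

# Stage 1: q ~ Beta(alpha, beta); stage 2: y | q ~ Binomial(n, q).
q = rng.beta(alpha, beta, size=100_000)
y = rng.binomial(n, q)

# Empirical frequencies versus the closed-form Beta-binomial pmf.
k = np.arange(n + 1)
empirical = np.bincount(y, minlength=n + 1) / y.size
exact = stats.betabinom(n, alpha, beta).pmf(k)

cl, cu = beta_constraints(alpha, beta)
print(f"cl={cl:.3f}  cu={cu:.3f}")
print(np.round(np.c_[k, empirical, exact], 4))
```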

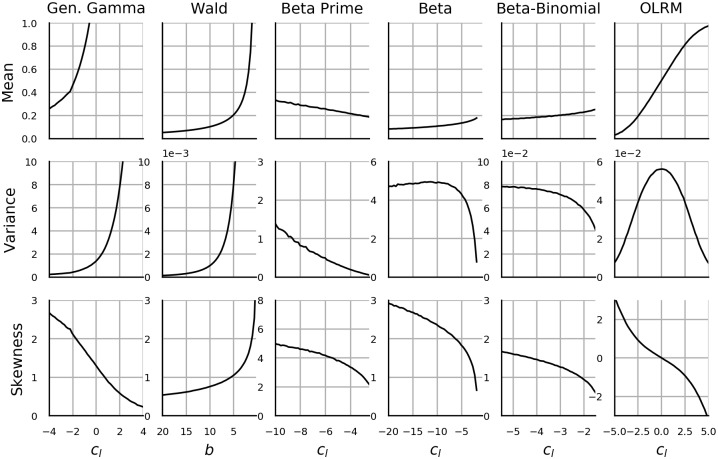

Having presented the maximum entropy distributions, we now consider to what extent they satisfy the informal CFE conditions. As already mentioned, the second CFE condition is incorporated as an assumption in the derivation of the maximum entropy distributions, but the remaining three conditions must be checked separately. In principle, this is an exercise in looking up the formulas for the expectation, variance or skew and then computing the limit. Unfortunately, the analytic formulas for these quantities are either not known or do not exist (Generalized Gamma, Log-logistic), or the available formulas do not use the current parametrization (Beta, Beta prime, Beta-binomial, Generalized geometric). As a consequence, only a few results are readily available; they are discussed next. The remaining results are obtained through simulation and presented in section 3.9.

In the case of the Log-normal distribution, the first and the third condition are satisfied, while the skew is independent of cl. In the case of the Beta distribution, we set cl → −∞ while cu is held constant (and vice versa). As a consequence, cl − cu = ψ(a) − ψ((1/E[Y] − 1)a) → −∞. Since ψ is increasing and concave, cl − cu → −∞ when E[Y] → 0 or when both a → 0 and b → 0. In the latter case, however, cu → −∞, which contradicts our assumptions, and hence only the former case is valid. Thus, the first condition is satisfied by the Beta distribution.
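Both statements can be checked numerically. The sketch below, with parameter values of our own choosing, first lowers cl = E[log Y] = μ of a Log-normal distribution with σ fixed, and then, for the Beta distribution, holds cu = −1 fixed while driving cl to increasingly negative values, solving for (a, b) by root finding. The helper names are hypothetical.

```python
import numpy as np
from scipy import stats
from scipy.optimize import root
from scipy.special import digamma

# Log-normal: cl = E[log Y] = mu with sigma fixed (SciPy: s=sigma, scale=exp(mu)).
sigma = 0.5
ln_means, ln_vars, ln_skews = [], [], []
for cl in [0.0, -1.0, -2.0, -4.0]:
    m, v, sk = map(float, stats.lognorm(s=sigma, scale=np.exp(cl)).stats(moments="mvs"))
    ln_means.append(m)
    ln_vars.append(v)
    ln_skews.append(sk)
    print(f"log-normal cl={cl:5.1f}  mean={m:.4f}  var={v:.6f}  skew={sk:.4f}")

def beta_constraints(a, b):
    """cl = E[log Y] and cu = E[log(1 - Y)] for Y ~ Beta(a, b)."""
    total = digamma(a + b)
    return digamma(a) - total, digamma(b) - total

def beta_from_constraints(cl, cu, guess):
    """Solve for (a, b) given (cl, cu); works in log space to keep both positive."""
    def residual(log_ab):
        l, u = beta_constraints(*np.exp(log_ab))
        return [l - cl, u - cu]
    sol = root(residual, np.log(guess))
    if not sol.success:
        raise RuntimeError(sol.message)
    return np.exp(sol.x)

cu = -1.0                      # held constant; Beta(1, 1) has cl = cu = -1
guess = np.array([1.0, 1.0])
beta_means = []
for cl in [-1.5, -2.5, -4.0, -6.0]:
    a, b = beta_from_constraints(cl, cu, guess)
    guess = np.array([a, b])   # continuation: start from the previous solution
    beta_means.append(a / (a + b))
    print(f"Beta cl={cl:5.1f}  a={a:.3f}  b={b:.3f}  E[Y]={beta_means[-1]:.3f}")
```

The Log-normal mean and variance shrink while the skew stays constant, and the Beta mean decreases as cl decreases with cu fixed.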

Regarding the Generalized Gamma distribution, note that the result depends on the choice of nuisance parameters. Trivially, if we hold cm = E[Y] constant, then cl → −∞ will not affect the expected value. Instead, we propose to hold cn and cn log b constant, where the latter term may be conceived of as the offset of cl. Then cl → −∞ implies ψ(a) → −∞, hence a → 0, and as a consequence E[Y] = exp(cn log b)a → 0. The first condition is thus satisfied by the Generalized Gamma distribution.
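A quick numerical check of this limit, assuming SciPy's `gengamma` parametrization (shape a, power cn, scale b), in which E[Y] = bΓ(a + 1/cn)/Γ(a). With cn and b, and hence the offset cn log b, held fixed, letting a → 0 drives cl = cn log b + ψ(a) towards −∞ and E[Y] towards 0. The parameter values are illustrative.

```python
import numpy as np
from scipy import stats
from scipy.special import digamma, gamma as gamma_fn

cn, b = 1.5, 2.0              # held constant, so the offset cn*log(b) is constant too
offset = cn * np.log(b)
results = []
for a in [2.0, 0.5, 0.1, 0.02]:
    cl = offset + digamma(a)                               # cl = cn*log b + psi(a)
    mean = float(stats.gengamma(a, cn, scale=b).mean())    # SciPy's E[Y]
    closed = b * gamma_fn(a + 1 / cn) / gamma_fn(a)        # b*Gamma(a + 1/cn)/Gamma(a)
    results.append((a, cl, mean, closed))
    print(f"a={a:5.2f}  cl={cl:8.3f}  E[Y]={mean:.4f}  closed form={closed:.4f}")
```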

1.1.5 Additional distributions with CFE

We discuss two additional distributions that are popular in the literature, mostly satisfy the CFE conditions and offer a plausible connection to measurement theory, but whose maximum entropy derivation is not available in the literature.

Boundary hitting times of Brownian motion and its extensions are popular models of response times and response choice in psychology [26]. The Wald distribution describes the first boundary hitting time of a one-dimensional Wiener process with one boundary located at distance a > 0 from the starting position of the particle, which moves with drift b > 0 and diffusion rate s > 0. The probability density function of the hitting time is

$$p(t \mid a, b, s) = \frac{a}{s\sqrt{2\pi t^{3}}}\exp\left(-\frac{(a - b t)^{2}}{2 s^{2} t}\right), \qquad t > 0.$$

The three parameters are not jointly identifiable: multiplying a, b and s by the same positive constant leaves the distribution of the hitting time unchanged. The common choice in the psychological literature is to fix s = 1 [26], although we prefer a = 1 because s and b are independent while a and b are not. Concatenation can then be represented numerically by adding positive quantities to b while holding s constant. The increments to b increase the speed of the particle and result in a faster response.

A more recent approach to the conceptualization of maximum velocity by [27] considers the conjoint structure of velocity, duration and distance. Such a conjoint structure is inherent in the process definition of the Wald distribution, which suggests a possible measure-theoretic derivation of the Wald distribution. We are not aware of such a derivation in the literature, and we limit this section to checking whether the Wald distribution satisfies the CFE conditions. With a = 1, the expected value, variance and skewness of the Wald distribution are 1/b, s²/b³ and 3s/√b. All three quantities converge to zero for b → ∞, and thus the Wald distribution satisfies all four CFE conditions.
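The Wald distribution is the inverse Gaussian distribution with mean a/b and shape a²/s². The sketch below maps it onto SciPy's `invgauss` (whose mean is mu·scale and whose shape is scale), checks the closed-form moments for a = 1, and verifies the non-identifiability under a common rescaling of a, b and s. The helper names and the parameter values are our own.

```python
import numpy as np
from scipy import stats

def wald(a, b, s):
    """Hitting time of a Wiener process (boundary a, drift b, diffusion s) as an
    inverse Gaussian with mean a/b and shape a**2/s**2 (SciPy: mean = mu*scale)."""
    return stats.invgauss(mu=s**2 / (a * b), scale=a**2 / s**2)

def wald_pdf(t, a, b, s):
    """The Wald density written out directly, for comparison with SciPy."""
    return a / (s * np.sqrt(2 * np.pi * t**3)) * np.exp(-(a - b * t) ** 2 / (2 * s**2 * t))

t = np.linspace(0.05, 3.0, 60)
s = 1.3
for b in [1.0, 4.0, 16.0, 64.0]:
    m, v, sk = map(float, wald(1.0, b, s).stats(moments="mvs"))
    print(f"b={b:5.1f}  mean={m:.4f}  var={v:.6f}  skew={sk:.4f}")

# Multiplying a, b and s by the same factor (here 3) leaves the density unchanged.
same = np.allclose(wald(1.0, 2.0, s).pdf(t), wald(3.0, 6.0, 3 * s).pdf(t))
print("rescaled parameters give an identical density:", same)
```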

Two additional popular models for Y ∈ {0, 1, …, N} are the Polytomous Rasch model and ordered logistic regression. The Polytomous Rasch model is defined by

where cu = −cl represents concatenation and are threshold weights. [28] and [29] show that the Polytomous Rasch model is an extension of the Boltzmann distribution, and they use Boltzmann’s method of the most probable distribution in their derivation. [19] (chapter 9) shows that this method provides results identical to those of POME. [18] (see section 10.5) provides a POME derivation for the case N = 3. All these derivations utilize the constraint cm = E[Y], even though a parametrization in terms of cm is rarely used in applied research. In any case, with cl → −∞ and ck constant, p(Y = 0) → 1, and hence E[Y] = cm → 0 and Var[Y] → 0. The Rasch model thus satisfies the first three CFE conditions.
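The limit can be illustrated with a short sketch. Since the threshold notation is not reproduced here, we assume the common partial-credit form p(Y = k) ∝ exp(k·cl − Σ_{j≤k} τ_j), with cl entering with a positive sign, as the limit stated above implies; the threshold values τ_j are hypothetical and may differ in notation from the model above.

```python
import numpy as np

def rasch_probs(cl, tau):
    """Category probabilities p(Y = k), k = 0..N, of a polytomous Rasch model in the
    ASSUMED form p(Y = k) ∝ exp(k*cl - sum_{j<=k} tau_j)."""
    k = np.arange(len(tau) + 1)
    logits = k * cl - np.concatenate(([0.0], np.cumsum(tau)))
    logits -= logits.max()            # stabilise the exponentials
    p = np.exp(logits)
    return p / p.sum()

tau = np.array([-1.0, 0.0, 1.5])      # hypothetical thresholds, N = 3
k = np.arange(len(tau) + 1)
for cl in [2.0, 0.0, -3.0, -10.0]:
    p = rasch_probs(cl, tau)
    mean = p @ k
    var = p @ (k - mean) ** 2
    print(f"cl={cl:6.1f}  p(Y=0)={p[0]:.4f}  E[Y]={mean:.4f}  Var[Y]={var:.4f}")
```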

A related model, known in the literature as ordered logistic regression, can be formulated as a latent variable model ([30], p. 120):

with the latent variable z generated from the logistic distribution, z ∼ Logistic(cu, cv), and thresholds for k ∈ {0, 1, …, N}. The parameter cu = −cl provides an opportunity to manipulate the CFE. In particular and . Thus, ordered logistic regression satisfies the first three CFE conditions.
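A minimal sketch of the category probabilities of ordered logistic regression: the latent z ∼ Logistic(location, scale) is cut at increasing thresholds, and p(Y = k) is a difference of logistic CDF values. The threshold values are hypothetical; the location plays the role of cu. Moving the location far below the lowest threshold concentrates the mass at Y = 0 (floor), and moving it far above the highest threshold concentrates it at Y = N (ceiling).

```python
import numpy as np
from scipy.special import expit

def ordered_logit_probs(location, thresholds, scale=1.0):
    """p(Y = k) for a latent z ~ Logistic(location, scale) with Y = k if
    thresholds[k-1] < z <= thresholds[k] (open-ended first and last categories)."""
    cuts = np.concatenate(([-np.inf], thresholds, [np.inf]))
    cdf = expit((cuts - location) / scale)     # logistic CDF at each cut point
    return np.diff(cdf)

thresholds = np.array([-2.0, -0.5, 0.5, 2.0])  # hypothetical thresholds, N = 4
for loc in [-8.0, -2.0, 0.0, 2.0, 8.0]:
    print(f"location={loc:5.1f}  p={np.round(ordered_logit_probs(loc, thresholds), 3)}")
```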

The current section showed how measurement theory along with POME allows us to derive the most popular distributions with bounded or semi-bounded range. Crucially, these derivations suggest the appropriate parametrization and tell us which parameters must be manipulated to create CFE. Informal CFE conditions were considered, and two additional distributions were suggested based on these conditions. This review of probability distributions will facilitate the review of the robustness literature in the subsequent section and the choice and justification of the data-generating process used in the simulations, as described in section 1.3.2.

1.2 Robustness of t-test and F-test

The previous section suggested that CFE affects the mean, variance and other distributional properties of the measured quantity. These, in turn, may lead to violations of the assumption of normal error distribution and of the assumption of variance homogeneity, which underlie the derivation of the t-test and F-test. To what extent does violation of these assumptions affect the conclusions obtained with the t-test and ANOVA? The early analytic derivations and initial computer simulations came to the prevailing conclusion that the t-test and F-test are surprisingly robust to violations, perhaps with the exception of homogeneity violations in unbalanced designs (see [31] for a review of this early work). More recent literature, however, suggests that the early studies underestimated the magnitude of normality and variance homogeneity violations in data [32]. In addition, the question of robustness depends on the choice of performance measure, and the decision to use a particular method depends on the costs and benefits of the alternatives. Early literature stressed the advantage of parametric over non-parametric methods in terms of power and computational simplicity. However, more recent research suggests that the power loss of non-parametric methods is often negligible, and the advent of computer technology has made non-parametric methods readily available.

Apart from the parametric/non-parametric distinction, a large share of robustness studies, especially those utilizing discrete RVs, have been concerned with the danger of applying parametric methods to data with an ordinal scale. As highlighted by [33], for various reasons formal measurement theory did not become popular and is rarely used in applied research. Instead, a limited version of measurement theory, to which we refer as Stevens’ scale types [34, 35], has become prominent. The tools of formal measurement theory provide the flexibility to design an infinite variety of structures that capture the apparently infinite variety of data-generating processes encountered by the researcher. [34] proposed to reduce this complexity to four structures (and the corresponding scales), which he considered essential. An interval scale preserves intervals and allows the researcher to make statements about the differences between empirical objects. An ordinal scale preserves ranks and allows the researcher to make statements about the order of empirical objects. With an ordinal scale, any transformation that preserves order is permitted and does not alter the conclusions obtained from the data. With an interval scale, however, only linear transformations are permitted. Since the t-test and F-test compute means and variances, which in turn imply an additive representation, a controversy arose as to whether the application of parametric methods to data with an ordinal scale is meaningful. Some of the related simulation studies are described below; for a review of the issue and the arguments, see [36–40]. A more recent and more specialized branch of this controversy concerned the application of parametric methods to data obtained with Likert scales [41–44].

Below we review the robustness studies relevant to the present topic with data generated from continuous distributions. Discrete distributions are covered in the subsequent section which is followed by our discussion of this literature.

1.2.1 Continuous distributions

The robustness studies of the t-test [45–51] show a type I error change of up to 3 percentage points and a power loss of up to 20 percentage points. Most studies used the t-test for groups with equal variances instead of Welch’s t-test for unequal variances [52], which appears to be unequivocally recommended in its place and is more robust [53–57]. For instance, [58] compared the type I error rate of the t-test, Welch test and Wilcoxon test on variates with Exponential, Log-normal, Chi-squared, Gumbel and Power function distributions. The Welch test showed the best performance, with error rates ranging up to 0.083 for two Log-normal distributions with unequal variances. In this case, the Wilcoxon test showed an error rate of 0.491, even though it performed on par with the Welch test when the variances were equal. In their meta-analytic review of the literature, though, [59] noted “the apparent sensitivity of the Welch test to distinctly non-normal score distributions” (p. 334). Most simulation studies on the performance of the F-test were performed either with normal variates (see table 2 in [59]) or with discrete variates (e.g. [60–63]). The few available studies with non-normal continuous distributions used the exponential, Log-normal or double exponential distribution. In their meta-analysis, [59] concluded that “the Type I error rate of the F-test was relatively insensitive to the effects of the explanatory variables SKEW and KURT”, which corresponded to the skew and kurtosis of the distribution from which the data were generated in the respective studies. [56] reported similar results, but also provided results for particular distribution types (table 12 in their work). The average type I error rate across studies ranged from .048 for the Log-normal to .059 for the exponential distribution. At the same time, the type I error rate of the KW test was .035 with the Log-normal and .055 with the exponential distribution.
Regarding the power of the F-test, [56] did not provide an analysis, as they concluded that the number of studies was not sufficient, and they pointed to additional conceptual difficulties of power estimation. In particular, with non-normal distributions it is not straightforward to compute power [55]. While [59] provided an analysis of power, their analysis was inconclusive.
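For reference, a minimal sketch of Welch’s test (t statistic, Welch–Satterthwaite degrees of freedom, two-sided p value) checked against SciPy, followed by a small Monte Carlo estimate of the type I error rate of Student’s and Welch’s t-tests when the smaller group has the larger variance. The distributions, group sizes and variance ratio are our own illustrative choices, not those of the cited studies.

```python
import numpy as np
from scipy import stats

def welch_t(x, y):
    """Welch's t statistic, Welch-Satterthwaite df and two-sided p value."""
    vx, vy = np.var(x, ddof=1) / len(x), np.var(y, ddof=1) / len(y)
    t = (np.mean(x) - np.mean(y)) / np.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx**2 / (len(x) - 1) + vy**2 / (len(y) - 1))
    return t, df, 2 * stats.t.sf(abs(t), df)

rng = np.random.default_rng(7)
x = rng.lognormal(mean=0.0, sigma=0.4, size=25)
y = rng.lognormal(mean=0.2, sigma=0.9, size=40)
t, df, p = welch_t(x, y)
ref = stats.ttest_ind(x, y, equal_var=False)
print(f"by hand: t={t:.4f} df={df:.2f} p={p:.4f}; SciPy: t={ref.statistic:.4f} p={ref.pvalue:.4f}")

# Type I error with equal means, unequal variances and unequal group sizes
# (larger variance in the smaller group).
reps = 4000
g1 = rng.normal(0.0, 3.0, size=(reps, 10))
g2 = rng.normal(0.0, 1.0, size=(reps, 40))
student = np.mean(stats.ttest_ind(g1, g2, axis=1).pvalue < 0.05)
welch = np.mean(stats.ttest_ind(g1, g2, axis=1, equal_var=False).pvalue < 0.05)
print(f"type I error at alpha = 0.05: Student={student:.3f}  Welch={welch:.3f}")
```

In this configuration, the pooled variance underestimates the standard error of the mean difference, so Student’s test rejects far too often, whereas Welch’s test stays close to the nominal level.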

The studies discussed so far suggest that skew, kurtosis and the choice of distribution do not notably affect the performance of the t-test, F-test and KW test, and that other factors, such as group size homogeneity and variance homogeneity of the groups, are more important. These studies and reviews are not very informative in the present context, as they mostly manipulate variance (type I error) or mean and variance (power), and only in a few cases are skew and kurtosis manipulated. As such, it is difficult to draw conclusions regarding robustness with respect to CFE. Some researchers claim that the simulations are not representative of the data that actually occur in research. [32] presented a survey of 440 large-sample achievement and psychometric measures. He reported that “no distributions among those investigated passed all tests of normality, and very few seem to be even reasonably close approximations to the Gaussian.” and he pointed out that “The implications of this for many commonly applied statistics are unclear because few robustness studies, either empirical or theoretical, have dealt with lumpiness or multimodality.” (p. 160). [64] investigated the performance of the t-test on data sampled from distributions that resembled those reported by [32] and concluded that the t-test is robust; in particular, the “multi-modality and lumpiness and digit preference, appeared to have little impact”. They further concluded that “a dominant factor bringing about nonrobustness to Type I error was extreme skew” (p. 359). [64] discussed the practice of manipulating group variances and group means in isolation in simulation studies intended to test type I and type II errors. They state that “we have spent many years examining large data sets but have never encountered a treatment or other naturally occurring condition that produces heterogeneous variances while leaving population means exactly equal. While the impact of some treatments may be seen primarily in measures of scale, they always (in our experience) impact location as well.” We suggest that CFE is one possible mechanism that explains the dependence between mean and variance.

1.2.2 Discrete distributions

Numerous studies investigated the type I error rate of the t-test and F-test when data were generated from discrete distributions [60–63, 65–67]. The error rate ranged from 0.02 to 0.055 (α = 0.05), depending on the choice of data-generating mechanism. These studies lack a measurement-theoretic embedding. As a consequence, multiple factors such as discreteness, nonlinearity, skewness or variance heterogeneity were confounded. However, some insight can be obtained from graphical and numerical summaries of the data-generating process.

[60] first generated a latent discrete variable in the range [1, 30] with an approximately normal, uniform or exponential distribution. This variable was then transformed using a set of 35 nonlinear monotonic transformations. Notably, one set of transformations qualitatively resembled a floor effect (see Figure 3 in [60]) and, compared with the other three sets of transformations, this set manifested the worst performance, both in terms of the t-test’s type I error rate (2.8 to 5.1% with α = 0.05) and in terms of the correlation between the t values of the transformed and raw latent values (see Table 4 in [60]).

[63] generated values from uniform, normal and normal mixture distributions, which were then transformed to integers from 1 to 5 with the help of predetermined sets of thresholds. [63] focused mainly on the type I error rate of the Wilcoxon test and of a CI procedure based on the t-test. Of most interest for the present purpose is the test of the difference between one group with a symmetric normal distribution and another group with a shifted and skewed normal distribution. The t-test showed higher power than the Wilcoxon test for small sample sizes. For large sample sizes, both methods reached 100% power. Again, it is not possible to judge the magnitude of the power reduction due to a change of distributional properties such as skewness, since a comparable control condition with a shifted but not skewed distribution is missing.

[67] investigated the type I error rate of the t-test with group values randomly sampled from over 140 thousand scores obtained from trauma patients with the Glasgow Coma Scale. The scores were integers ranging from 3 to 15 points, and their distribution manifests a strong ceiling and floor effect, with two modes at 3 and 15. The error rate was 0.018 for a two-tailed test with a group size of 10, but the deviation from the nominal rate was negligible for the next larger group size of 30.

In addition to [63], only a couple of studies investigated the power of statistical methods with discrete distributions. We think that the reasons are conceptual: such a study requires a formulation with the help of measure-theoretic concepts. As a consequence, the few available studies are the ones that are conceptually most similar to the present study, even though they are not explicitly concerned with CFE. Hence, these studies are reviewed in some detail.

[68] explicitly discussed formal measurement theory in relation to the controversy over the application of parametric methods to ordinal scales. [68] (p. 392) argued that the question of invariance of results under a permissible scale transformation was crucial: “A test of this question would seem to require a comparison of the statistical decision made on the underlying structure with that of the statistical decision made on the observed numerical representational structure. […] If the statistical decision based on the underlying structure is not consistent with the decision made on the representational structure then level of measurement is important when applying statistical tests. Since statistical statements are probabilistic, consistency of the statistical decision is reflected by the power functions for the given test.” The authors generated a normal RV, which was then transformed to ranks, pseudo-ranks and ranks with added continuous normal error. The authors compared the power of the t-test between the transformed and untransformed variables. The power loss due to the transformation was negligible, at most two percentage points for ranks and pseudo-ranks. The power loss for ranks with noise was up to ten percentage points.

[69] generated data from uniform, normal, exponential or triangular distributions and then discretized the values with thresholds to obtain a discrete RV with 2 to 10 levels. The authors compared the power of linear and probit regression and concluded that linear regression (OLSLR) performed well. The exponential distribution, which most resembled a ceiling effect, received a special mention (p. 383): “As an exception, we note OLSLR-based power was decreased relative to that of probit models for specific scenarios in which violation of OLSLR assumptions was most noticeable; namely, when the OCR had 7 or more levels and a frequency distribution of exponential shape.”

[70] generated two-group samples from an ordered probit regression model and showed that a normal model may lead to incorrect inferences compared with the ordered probit model fitted to these data. The ordered probit model is similar to the ordered logistic regression model described in section 1.1.5, except that the latent variable z follows a normal rather than a logistic distribution. Of all the reviewed studies, the design of this study is the most similar to the current work. Note that the authors compared the performance of parametric methods with the performance of the model that was used to generate the data. Trivially, in terms of likelihood, the model that was used to generate the data will, on average, fit those data better than any other non-nested model. The current work will try to avoid such a situation by using distinct and non-overlapping models for the purposes of method evaluation and data generation.

1.2.3 Robustness research explicitly concerned with ceiling effect

Research on the statistical ceiling effect is difficult to find due to the abundance of research on an identically named phenomenon in managerial science. Hence, the review in this section, which focuses on research that explicitly mentions CFE in the statistical sense, is likely to be incomplete.

The majority of this literature considers the Tobit model and the relative performance of linear regression and Tobit regression. The Tobit model assumes that an RV Y is generated as y = yu if z ≥ yu and y = z if z < yu, where z ∼ Normal(μ, σ). yu is fixed and finite, while μ and σ are model parameters. The procedure used by Tobit, in which values below or above some fixed threshold are replaced with the threshold value, is called censoring (e.g. see the entry on censored observations in [10]). Censoring should be distinguished from truncation, in which the values above some ceiling are discarded. The distinction between the observed Y and the latent Z is similar to the distinction between the empirical structure and the numerical representation in which concatenation is not represented by addition. In the Tobit model, Z is an additive numerical representation while Y is not. In particular, the Tobit model is an instance of a structure in which the maximal element can be obtained by concatenation, which was briefly mentioned in section 1.1.1. Contrary to the claim by [9] that such a structure is not relevant to research, a considerable literature on the Tobit model and CFE has emerged in the past two decades.
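A minimal sketch of censoring from above and of a Tobit fit by maximum likelihood. The settings (yu = 1.5, μ = σ = 1, n = 5000) and the function name are our own illustrative choices. Observations below the ceiling contribute the normal log-density, and observations at the ceiling contribute log P(z ≥ yu). The naive mean of the censored Y is pulled below μ, while the Tobit estimate recovers it.

```python
import numpy as np
from scipy import stats
from scipy.optimize import minimize

def tobit_negloglik(params, y, ceiling):
    """Negative log-likelihood of z ~ Normal(mu, sigma) censored from above at `ceiling`."""
    mu, log_sigma = params
    sigma = np.exp(log_sigma)              # log scale keeps sigma positive
    at_ceiling = y >= ceiling
    ll_obs = stats.norm.logpdf(y[~at_ceiling], mu, sigma).sum()
    ll_cens = stats.norm.logsf(ceiling, mu, sigma) * at_ceiling.sum()
    return -(ll_obs + ll_cens)

rng = np.random.default_rng(3)
mu_true, sigma_true, ceiling = 1.0, 1.0, 1.5
z = rng.normal(mu_true, sigma_true, size=5000)   # latent additive variable Z
y = np.minimum(z, ceiling)                        # censoring: values above yu are set to yu
# (truncation would instead discard them, e.g. z[z < ceiling])

fit = minimize(tobit_negloglik, x0=[y.mean(), np.log(y.std())], args=(y, ceiling))
mu_hat, sigma_hat = fit.x[0], np.exp(fit.x[1])
print(f"share at ceiling={np.mean(y >= ceiling):.2f}")
print(f"naive mean={y.mean():.3f}  Tobit mu={mu_hat:.3f}  Tobit sigma={sigma_hat:.3f}")
```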

[71] considered how the ceiling effect affects the growth estimates of a linear model applied to longitudinal data generated from a multivariate normal model, which were then subjected to a threshold, similar to how Z is treated in the Tobit model. Up to 42% of the data were subject to the ceiling effect. The authors compared the performance of the Tobit model with that of a linear model. The linear model was applied after omitting, or list-wise deleting, the data at ceiling, which was intended to improve its performance. However, the linear model performed worse and underestimated the growth magnitude. In particular, “the magnitude of the biases was positively related to the proportion of the ceiling data in the data set.” ([71] p. 491).

[13] investigated how the ceiling effect changes the value-added estimates of a linear method compared with those of a Tobit model. Similar to [71], this was an educational research scenario with a longitudinal structure, but unlike the former study, [13] used test scores obtained with human participants, which were then censored. The authors concluded that the Tobit model considerably improved estimation, but also that the linear method performed reasonably well up to a situation in which the ceiling affected more than half of the values. See [72–75] for additional studies on the relative performance of the Tobit and linear methods with similar results.

The aforementioned studies rely on censoring to generate data. The sole exception is the study by [13], who distinguish between a hard ceiling and a soft ceiling. The hard ceiling was generated using the Tobit procedure and corresponds to the empirical structure in which the maximal element can be obtained through concatenation of two non-maximal elements. The soft ceiling corresponds to the structure with an essential maximum, which we described in detail in section 1.1.1 and which is the focus of the current work. [13] considered skewness to constitute the crucial feature of a soft ceiling and used a spline to simulate RVs with skewed distributions. The choice of a spline model is ad hoc and, as [13] concede, there are other valid choices. [13] found that the effects of the soft ceiling were somewhat less severe.

The focus on data generated with censored normal or empirical distributions limits the conclusions of the literature discussed so far in this section. Apart from the exclusion of the soft ceiling, as mentioned by [71] (p. 492), there are other potential choices to model the latent variable Z, such as the Weibull or Log-normal distribution. Another class of excluded models with similar distributions are zero-inflated models, the most notable instance being the zero-inflated Poisson model [76] used in survival analysis. Furthermore, censoring is readily recognizable, especially with continuous measurements: it manifests as a distinct mode at the maximum/minimum. Thus, the Tobit model provides a very specific way to model CFE, which raises concerns about the generality of the results obtained with this model.

A few additional studies on CFE compare the performance of a linear regression model with that of a generalized linear model on discrete data. [77] used a logistic model to study attrition in gerontological research and claimed that the choice of a generalized linear model helped them avoid incorrect inferences caused by CFE. [78] compared how well four statistical models fit the data. The simulated data modelled the distributions of four psychometric tests popular in epidemiological studies. The four tested models were: linear regression, linear regression applied to square-root-transformed data, linear regression applied to data transformed with the CDF of the Beta distribution, and a threshold model similar to the ordered logistic regression reviewed in section 1.1.5. The last two models provided a better fit than linear regression with no data transformation. [79] compared linear regression with a generalized linear model based on the binomial distribution with a logit link function. They analysed a large data set obtained with the Health of the Nation Outcome Scales for Children and Adolescents. The scale has integer outcomes ranging from 0 to 52. The linear method provided considerably larger effect size estimates (change between two time points of magnitude −2.75 vs. −0.49).

The three studies of CFE on discrete data demonstrated that generalized linear models and linear regression provide different effect size estimates. Strictly, this does not imply that the estimates from the linear model are incorrect or biased. The authors reached this conclusion by assuming that the bounded values obtained with the test or the scale reflect an unbounded latent trait, which seems plausible. The assumption of a continuous latent trait and the discrepancy between the latent trait and the measured values highlight the conceptual link of these studies to the measure-theoretic framework. Unlike the Tobit studies, these studies investigated a soft ceiling. All the studies reviewed in this section focused on the estimation of effect sizes, either regression coefficients or differences (between groups or between repeated measurements), and on how this estimation is affected by CFE. The effect of CFE on hypothesis testing, p values or confidence intervals was rarely discussed.

1.3 Goals and scope

1.3.1 Selection of statistical methods for investigation

The main goal driving the selection of statistical analyses is to complement the literature by providing additional results for previously untested modern methods. We focus on the performance of the t-test and ANOVA due to their popularity. As already mentioned in the previous section, the estimation of regression coefficients in linear regression under CFE has been repeatedly investigated; hence, we omit the regression setting and focus mainly on hypothesis testing. In particular, we investigate the performance of the Welch test, as it has been recommended in favor of the standard t-test without any additional drawbacks. We consider a one-way ANOVA with three levels and a 2 × 2 omnibus ANOVA, in which case we present results from two F-tests for the two respective main effects and the results from the F-test for the interaction. The following alternatives to the t-test and ANOVA are included.

First, while the robustness of non-parametric methods has been investigated extensively, these investigations did not manipulate or account for the magnitude of CFE. Instead, the focus was on the ordinality of measurement and on distributional properties such as variance or skew. We look at the performance of the Mann-Whitney (MW) test, the Kruskal-Wallis (KW) test and the Scheirer-Ray-Hare test (SRHT).
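The MW and KW tests are available as `scipy.stats.mannwhitneyu` and `scipy.stats.kruskal`. To our knowledge, SciPy offers no Scheirer-Ray-Hare function, so we sketch its standard textbook form for a balanced two-way design: all observations are ranked jointly, the two-way ANOVA sums of squares are computed on the ranks, each is divided by the total mean square of the ranks, and the result is referred to a χ² distribution with the effect's degrees of freedom. Balanced cells and the absence of a tie correction are assumptions of this sketch, and the data are hypothetical.

```python
import numpy as np
from scipy import stats

def scheirer_ray_hare(data):
    """Scheirer-Ray-Hare test for a balanced two-way design (sketch, no tie correction).
    data: array of shape (I, J, n) = levels of A x levels of B x replicates.
    Returns {effect: (H, df, p)} with H = SS_effect / MS_total computed on ranks."""
    I, J, n = data.shape
    ranks = stats.rankdata(data.ravel()).reshape(I, J, n)  # rank all observations jointly
    N, grand = I * J * n, ranks.mean()
    ms_total = np.sum((ranks - grand) ** 2) / (N - 1)
    ss_a = J * n * np.sum((ranks.mean(axis=(1, 2)) - grand) ** 2)
    ss_b = I * n * np.sum((ranks.mean(axis=(0, 2)) - grand) ** 2)
    ss_cells = n * np.sum((ranks.mean(axis=2) - grand) ** 2)
    ss_ab = ss_cells - ss_a - ss_b
    out = {}
    for name, ss, df in [("A", ss_a, I - 1), ("B", ss_b, J - 1), ("AxB", ss_ab, (I - 1) * (J - 1))]:
        h = ss / ms_total
        out[name] = (h, df, stats.chi2.sf(h, df))
    return out

rng = np.random.default_rng(11)
data = rng.gamma(shape=2.0, scale=1.0, size=(2, 2, 30))
data[1] *= 3.0                    # hypothetical main effect of A only
for effect, (h, df, p) in scheirer_ray_hare(data).items():
    print(f"{effect:3s}  H={h:7.3f}  df={df}  p={p:.4g}")
```

Without ties, the statistic for factor A coincides with the Kruskal-Wallis H computed on the levels of A alone, which offers a quick sanity check of the implementation.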

Second, [80, 81] argued for a wider use of so-called robust statistical methods, i.e. methods that were designed to tolerate violations of normality and the presence of outliers. [82] and [83] recommended the trimmed t-test by [84], in which a predefined proportion of the smallest and largest data values is discarded. The trimmed t-test is included in the present study.
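A minimal sketch of a trimmed two-sample t-test, written here as Yuen's procedure: trimmed means, winsorized variances and Welch-type degrees of freedom. Whether this matches [84] in every detail is an assumption, as are the 20% trimming proportion and the heavy-tailed example data. Recent SciPy versions (1.7 and later) also expose a trimmed test through the `trim` argument of `scipy.stats.ttest_ind`.

```python
import numpy as np
from scipy import stats

def yuen_t(x, y, trim=0.2):
    """Yuen's trimmed t-test (two-sided): trimmed means, winsorized variances."""
    def parts(v):
        v = np.sort(np.asarray(v, dtype=float))
        n = len(v)
        g = int(trim * n)                          # observations trimmed from each tail
        h = n - 2 * g                              # effective sample size
        w = v.copy()
        w[:g], w[n - g:] = v[g], v[n - g - 1]      # winsorize both tails
        d = (n - 1) * np.var(w, ddof=1) / (h * (h - 1))
        return v[g:n - g].mean(), d, h
    mx, dx, hx = parts(x)
    my, dy, hy = parts(y)
    t = (mx - my) / np.sqrt(dx + dy)
    df = (dx + dy) ** 2 / (dx**2 / (hx - 1) + dy**2 / (hy - 1))
    return t, df, 2 * stats.t.sf(abs(t), df)

rng = np.random.default_rng(5)
x = rng.standard_t(df=2, size=30)                  # heavy-tailed groups (illustrative)
y = rng.standard_t(df=2, size=35) + 0.8
t, df, p = yuen_t(x, y, trim=0.2)
print(f"Yuen (20% trimming): t={t:.3f} df={df:.1f} p={p:.4f}")
```

With `trim=0`, no values are trimmed or winsorized and the procedure reduces to Welch's t-test.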

Third, Bayesian hypothesis testing has been recommended for diverse reasons [85–88]. Notably, it allows researchers to both confirm and reject hypotheses, and the results are easier to interpret than p values, since they describe the probability of hypotheses. One difficulty with Bayesian methods is that they require the specification of the data distribution under the alternative hypothesis. Another difficulty is computational: nuisance parameters are handled by integrating the data likelihood over their prior probability, which, depending on the choice of prior and the amount of data, can be computationally intensive. As a consequence, various approaches to Bayesian hypothesis testing have been recommended [86, 89, 90]. The Bayesian t-test presented by [86] was selected in the current study due to its computational simplicity as well as its close relation to the frequentist t-test, which facilitates comparison. The Bayesian t-test provides the probability of the null hypothesis that the groups are equal relative to the alternative hypothesis that the groups differ.
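To show how a Bayesian t-test can be computed from the frequentist t statistic alone, here is a sketch of the widely used JZS Bayes factor, which places a Cauchy prior (scale r) on the standardized effect size and is written as a one-dimensional integral over a normal-mixture variable g. That this is exactly the variant in [86] is an assumption; r = 1 and the function name are our own choices. With equal prior odds, BF01/(1 + BF01) is the posterior probability of the null hypothesis.

```python
import numpy as np
from scipy import integrate, stats

def jzs_bf01(t, n1, n2, r=1.0):
    """JZS Bayes factor BF01 (null over alternative) for a two-sample t statistic,
    assuming a Cauchy(0, r) prior on the effect size under H1."""
    n_eff = n1 * n2 / (n1 + n2)
    nu = n1 + n2 - 2
    null_lik = (1 + t**2 / nu) ** (-(nu + 1) / 2)

    def integrand(g):
        k = 1 + n_eff * g * r**2
        # inverse-gamma(1/2, 1/2) density of g; the Cauchy prior is a normal mixture over g
        prior_g = np.exp(-1.5 * np.log(g) - 1 / (2 * g)) / np.sqrt(2 * np.pi)
        return k ** -0.5 * (1 + t**2 / (k * nu)) ** (-(nu + 1) / 2) * prior_g

    alt_lik, _ = integrate.quad(integrand, 0, np.inf)
    return null_lik / alt_lik

rng = np.random.default_rng(2)
x, y = rng.normal(0, 1, 30), rng.normal(0, 1, 30)
res = stats.ttest_ind(x, y)
bf01 = jzs_bf01(res.statistic, len(x), len(y))
print(f"t={res.statistic:.3f}  BF01={bf01:.3f}  P(H0 | data, equal prior odds)={bf01 / (1 + bf01):.3f}")
```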

Fourth, a frequentist alternative that offers the flexibility to confirm a hypothesis is equivalence testing. In particular, the “two one-sided tests” (TOST) procedure by [91] has been advocated by [92]. The procedure asks the researcher to specify an equivalence interval around the effect size value suggested by the null hypothesis. Two one-sided t-tests are performed to determine whether the effect lies above the lower bound and below the upper bound of the equivalence interval, respectively. Equivalence is concluded if both null hypotheses (effect at or below the lower bound; effect at or above the upper bound) can be rejected. We are not aware of any robustness studies of the TOST procedure.
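A minimal sketch of the TOST logic for a difference of two means. It assumes a Welch-type standard error and degrees of freedom (the original procedure of [91] may use the pooled-variance t-test) and an equivalence interval on the raw mean-difference scale. The TOST p value is the larger of the two one-sided p values, and the data and bounds are illustrative.

```python
import numpy as np
from scipy import stats

def tost_welch(x, y, low, high):
    """Two one-sided Welch t-tests of H0: diff <= low and H0: diff >= high,
    with diff = mean(x) - mean(y). Returns (diff, TOST p value)."""
    vx, vy = np.var(x, ddof=1) / len(x), np.var(y, ddof=1) / len(y)
    se = np.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx**2 / (len(x) - 1) + vy**2 / (len(y) - 1))
    diff = np.mean(x) - np.mean(y)
    p_low = stats.t.sf((diff - low) / se, df)      # evidence that diff > low
    p_high = stats.t.cdf((diff - high) / se, df)   # evidence that diff < high
    return diff, max(p_low, p_high)

rng = np.random.default_rng(9)
x, y = rng.normal(0.0, 1.0, 200), rng.normal(0.05, 1.0, 200)
for bound in [0.1, 0.5]:
    diff, p = tost_welch(x, y, -bound, bound)
    print(f"interval=±{bound}  diff={diff:.3f}  TOST p={p:.4f}  equivalent={p < 0.05}")
```

With a standard error of about 0.1, the narrow interval ±0.1 can never be declared equivalent at α = 0.05, whereas the wider interval ±0.5 is.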

Fifth, a shift from null-hypothesis significance testing to the reporting of (standardized) effect sizes along with confidence intervals was advocated by numerous authors (see e.g. [93–95]). Since a confidence interval can be constructed by inverting a hypothesis test, decisions based on confidence intervals fail under conditions similar to those under which the corresponding test, here the t-test, fails. [96] showed with data generated from skewed distributions that the capture rate of the parametric CI does not correspond to the nominal coverage. As an alternative, they recommended a CI of a bias-corrected effect size computed with a bootstrap procedure. In the current work, we present effect size estimates along with CI estimates in a research scenario with two groups. We present both the unstandardised effect size, which corresponds to the mean difference in the observed values, and its standardized version, Cohen’s d. The effect size estimates and CIs can be viewed as an inferential alternative to the t-test, but in the present context they also highlight how CFE affects the mean, variance and standard error, i.e. the elementary quantities used in the t-test computation.
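A minimal sketch of the two effect sizes with bootstrap confidence intervals. For simplicity we use a percentile bootstrap that resamples each group independently, which is not the bias-corrected procedure recommended by [96]. Cohen's d uses the pooled standard deviation, and the Beta-distributed groups near a floor are hypothetical.

```python
import numpy as np

def cohens_d(x, y):
    """Mean difference divided by the pooled standard deviation."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * np.var(x, ddof=1) + (ny - 1) * np.var(y, ddof=1)) / (nx + ny - 2)
    return (np.mean(x) - np.mean(y)) / np.sqrt(pooled)

def bootstrap_ci(x, y, stat, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap CI, resampling each group independently with replacement."""
    rng = np.random.default_rng(seed)
    boots = np.empty(n_boot)
    for i in range(n_boot):
        boots[i] = stat(rng.choice(x, len(x)), rng.choice(y, len(y)))
    tail = (1 - level) / 2
    return np.quantile(boots, [tail, 1 - tail])

def mean_diff(a, b):
    """Unstandardized effect size: difference of the group means."""
    return np.mean(a) - np.mean(b)

rng = np.random.default_rng(4)
x = rng.beta(2.0, 8.0, 60)     # hypothetical groups close to a floor at 0
y = rng.beta(1.0, 9.0, 60)
diff, d = mean_diff(x, y), cohens_d(x, y)
print(f"mean difference={diff:.4f}  95% CI={np.round(bootstrap_ci(x, y, mean_diff), 4)}")
print(f"Cohen's d={d:.3f}  95% CI={np.round(bootstrap_ci(x, y, cohens_d), 3)}")
```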

Sixth, the literature review suggested that extreme skew and heterogeneous variances are (in addition to unequal group sizes) crucial factors leading to poor performance of the t-test. The review of measurement theory as well as some publications [13] suggest that the two are related; we investigate the issue further by providing estimates of the mean, variance and skew of the generated data. This also facilitates the discussion of the results, as it helps us relate them to the results of robustness studies that focused on manipulating skew.
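The link between skew and heterogeneous variances can be illustrated with a plain Gamma distribution with shape a and rate b. This is an assumption for illustration, since the study itself uses the generalized Gamma. Its standard moments are mean a/b, variance a/b² and skewness 2/√a. The log of the geometric mean, E[log X] = ψ(a) − log b, plays the role of cl. In the sketch, b is held fixed and the shape is lowered. The group closer to the floor then has lower cl, smaller variance and larger skew, so the two properties change together. The digamma function is approximated by a central difference of `math.lgamma`. That helper and `gamma_summary` are hypothetical names.

```python
import math


def digamma_approx(a, h=1e-5):
    """Central-difference derivative of log-Gamma; adequate for illustration."""
    return (math.lgamma(a + h) - math.lgamma(a - h)) / (2 * h)


def gamma_summary(shape, rate=1.0):
    """Moments of Gamma(shape, rate) together with cl = E[log X]."""
    return {
        "cl": digamma_approx(shape) - math.log(rate),  # log of the geometric mean
        "mean": shape / rate,
        "variance": shape / rate ** 2,
        "skew": 2.0 / math.sqrt(shape),
    }


for shape in (0.5, 1.0, 2.0, 4.0, 8.0):
    s = gamma_summary(shape)
    print(f"shape={shape:4.1f} cl={s['cl']:7.3f} mean={s['mean']:4.1f} "
          f"var={s['variance']:4.1f} skew={s['skew']:5.2f}")
```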

Finally, our goal was to evaluate a statistical inferential method that would specifically target CFE and provide an alternative to the established methods. In selecting such a method we had two concerns. First, the method should be reasonably easy to apply in terms of both computation and interpretation. Second, we wanted to avoid the obvious but not very helpful choice of using models identical to the data-generating process. This would mean, for instance, comparing the performance of a generalized gamma model with, say, a t-test when the data are generated from a generalized gamma distribution. Such a setup has been used previously (e.g. [70]), and it may be useful for demonstrating drawbacks of the linear method, since fitting a model identical to the data-generating mechanism yields an upper bound on performance. However, as an alternative to established methods, it is not helpful to recommend a model that precisely matches the data-generating distribution, as the main difficulty is that the data-generating mechanism is not known, or only very rough knowledge of it is available. Such rough knowledge may be a suspicion that CFE affects the measure. The candidate statistical methods can be justified by considering the measurement theory behind CFE that was discussed in section 1.1.1. There we considered the appropriate models of CFE, and for each distribution we distinguished the nuisance parameters from the parameters describing the magnitude of CFE. The latter correspond to cu and cl in Table 1. We saw that in most cases CFE is described by a logarithmic function that is further modified by the nuisance parameters, as in the case of the Gamma distribution. Thus, one may assume that the logarithmic function provides an accurate description of CFE even though its precise shape, determined by the nuisance parameters, may not be known.
We think that this situation better describes the applied case, in which the researcher may suspect an influence of a ceiling effect due to the bounded measurement range or due to skew, but may not know exactly how CFE affects the measurement.

Given these considerations, we decided to use the Log-normal model and the Logit-normal model in scenarios with one and two bounds, respectively. Since these models correspond to applying the normal linear model to data transformed by the log or logit function, we make the comparison more direct by comparing the t-test with the t-test applied to log- or logit-transformed data. The data transformation is also applied with one-way ANOVA, two-way ANOVA, CIs, the Bayesian t-test and equivalence testing, thereby yielding an additional version of each statistical method. In our opinion, such a design helps us determine to what extent the performance of each statistical method depends on the assumption of normality.
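Applying a method to transformed data only changes its input, as the following sketch shows for Welch's t statistic. It is a minimal illustration: the Beta parameters used to generate the example data are arbitrary and are not the study's values, and the function names are hypothetical. The logit transform requires values strictly inside (0, 1), and the log transform requires strictly positive values. Observations exactly at a bound would make `math.log` raise an error. Such observations can occur with discrete data, or when Beta draws with very small shape parameters underflow to 0. How the study handled such cases is not described here, so the sketch leaves it out.

```python
import math
import random
import statistics


def logit(p):
    """Log-odds transform; p must lie strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(x), len(y)
    v1, v2 = statistics.variance(x) / n1, statistics.variance(y) / n2
    t = (statistics.mean(x) - statistics.mean(y)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def welch_t_transformed(x, y, transform):
    """Same test, applied after transforming every observation."""
    return welch_t([transform(v) for v in x], [transform(v) for v in y])


rng = random.Random(2)
group_a = [rng.betavariate(0.5, 2.0) for _ in range(50)]  # illustrative parameters
group_b = [rng.betavariate(1.0, 2.0) for _ in range(50)]
print("raw  ", welch_t(group_a, group_b))
print("logit", welch_t_transformed(group_a, group_b, logit))
print("log  ", welch_t_transformed(group_a, group_b, math.log))
```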

To conclude, the statistical methods used in the current work are Welch’s t-test, one-way ANOVA, two-way ANOVA, the Bayesian t-test and TOST. Each method is applied to the raw data as well as to log-transformed or logit-transformed data. In addition, the t-test is compared with the trimmed t-test and the rank-based MW test, while one-way ANOVA is compared with the KW test and two-way ANOVA is compared with the SRHT.

1.3.2 Selection of data-generating process and performance metric

The present work uses the Beta distribution, generalized Gamma distribution, Beta prime distribution, Wald distribution, Beta-Binomial distribution and the Ordered Logit Regression model (OLRM). We excluded the Log-normal and Logit-normal distributions, as these would coincide with the models whose performance is being investigated. The discrete Beta distribution and the generalized geometric distribution listed in Table 1 are excluded for lack of applied interest in these models. In addition, the theoretical results needed to simulate such random variables are not available in the statistical literature.

Even more important than the choice of distributions is the choice of their parametrization. All distributions are parametrized with ci as described in section 1.1.3. The Gamma distribution is the sole exception, for which we use parameter b instead of cm. Parametrizing with cm would hold the expected value cm constant, which in turn would result in a trivial failure of any statistical method that uses the difference between means for inference. We gave priority to OLRM over the polytomous Rasch model, as the former appears to be more popular, being included in textbooks such as [30], and has generated some prior research interest relevant to the current topic [70, 78, 79].

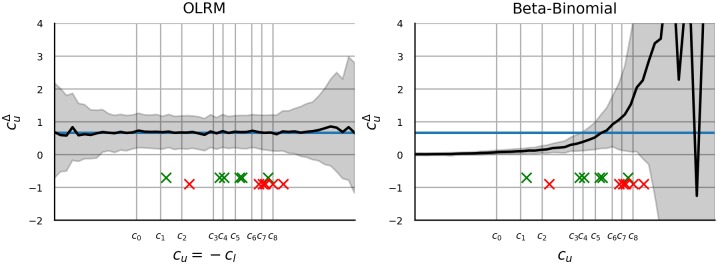

Since all three distributions that manifest both ceiling and floor effect use the same function for ceiling and floor effect (fu = fl), without loss of generality we restrict the investigation to a floor effect and the related parameter cl. The goal is then to investigate the ability of statistical methods to detect a constant difference in cl as the offset of cl goes to −∞. In particular, we investigate seven research scenarios. First, two groups (A and B) of 50 values each are generated from the respective distributions with and . We manipulate the magnitude of CFE by varying , while (which may be interpreted as an effect size) and all remaining parameters are held constant. The two-group scenario is used to investigate inference with confidence intervals, Cohen’s d, Welch’s t-test, the trimmed t-test, the MW test, TOST, the Bayesian t-test and Welch’s t-test on transformed data. In the second scenario, we introduce a third group with and the performance of one-way ANOVA, the KW test and one-way ANOVA on transformed data is investigated. The remaining five scenarios assume the presence of two factors with two levels each and and the performance of 2 × 2 ANOVA applied to raw and transformed data and the performance of the rank-based SRHT is investigated. Fig 2 illustrates the five different types of interactions that are investigated. The choice of the scenarios is motivated by research on how CFE affects linear regression, which showed that the linear method has problems identifying interactions [78]. In the first two cases we are interested in whether the statistical methods are able to correctly distinguish between a main effect and an interaction. In the first case, one main effect occurs and there is no interaction. The second case describes a situation with an interaction but without a main effect. The last three cases include two main effects and an interaction on cl, but differ in the order of the cl mean values of the four groups.
We label these uncrossed, crossed and double-crossed interactions. Assuming that CFE affects performance, the double-crossed interaction should pose the fewest problems for statistical methods, since CFE preserves order information and the inferential methods should be able to pick out this information. In contrast, the uncrossed interaction should pose the most problems. As in previous scenarios, the group differences are held constant, while . In each interaction case the group-specific cl is computed as with d shown in Fig 2. The procedure is repeated for various combinations of nuisance parameters. Notably, for each distribution we look at how different values of affect performance. One additional nuisance parameter is investigated for each distribution. The identities of these nuisance parameters as well as the ranges of all parameters are listed in Table 2.

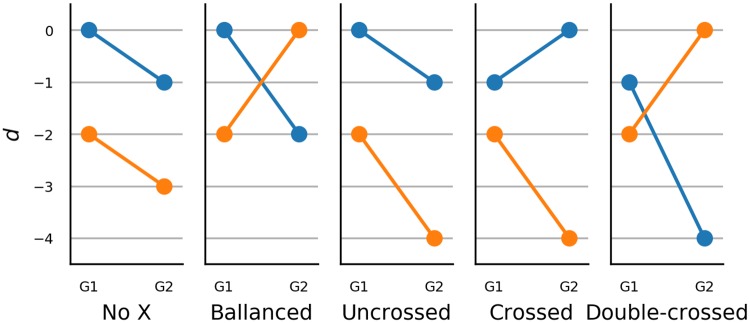

Fig 2. Types of interactions.

Each panel shows a qualitatively different outcome of a 2 × 2 factorial design. The first panel (from left) shows two main effects without an interaction. The second panel shows an (additive) interaction but no main effect. The remaining three panels show different constellations of two main effects accompanied by an interaction. Crucially, the relative order of the conditions differs across these three constellations. The five displayed outcomes are used in the simulations.

Table 2. Parameter values used in simulation.

| Distribution | Range cl | value | range | NP label | NP value | NP range |
|---|---|---|---|---|---|---|
| Gen. Gamma | [−4, 4] | 0.25 | [0, 1] | cn | 1.0 | [0, 2] |
| Wald | [20, 0] | 1.0 | [0, 4] | σ | 1.0 | [0, 2] |
| Beta Prime | [−10, −2] | 2.0 | [0, 8] | cm | 0.155 | [0, 0] |
| Beta | [−20, 0] | 3 | [1, 5] | cu | −0.2 | [0, 0] |
| Beta-Binomial | [−5, −1] | 4 | [1, 8] | n | 7 | [3, 15] |
| OLRM | [−5, 5] | 0.8 | [0, 1] | n | 7 | [3, 15] |

NP: nuisance parameter

Regarding the robustness metric, we repeat each simulation 10000 times and present the proportion of cases in which a group difference was detected. Assuming a binomial model, the width of the 95% CI of this proportion is less than 0.02. As an exception, when reporting the performance of confidence intervals, Cohen’s d and the Bayesian t-test, we report median values. With the Bayesian t-test we report the median probability of the hypothesis that the two groups differ, as opposed to the hypothesis that the two groups are equal.
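The bound of 0.02 on the CI width can be checked with a short calculation. The sketch assumes the normal-approximation (Wald) interval for a binomial proportion, because the text does not say which interval was used. The width 2·z·√(p(1 − p)/n) is largest at p = 0.5. With n = 10000 and z ≈ 1.96, it equals 2 × 1.96 × 0.005 ≈ 0.0196. The function name is hypothetical.

```python
from statistics import NormalDist


def max_ci_width(n_reps, level=0.95):
    """Largest width of the normal-approximation CI for a binomial proportion.

    The width 2 * z * sqrt(p * (1 - p) / n) is maximal at p = 0.5.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return 2 * z * (0.25 / n_reps) ** 0.5


print(round(max_ci_width(10000), 4))  # 0.0196
```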

2 Materials and methods

2.1 Design

For each of the six distributions and each of the seven scenarios, two, three or four groups of data were generated with 50, 33 or 25 samples per group, respectively. The statistical methods were then applied, and the inferential outcome was determined and recorded. This was repeated 10000 times, and the performance metrics were computed over repetitions. Furthermore, the procedure was repeated for 25 combinations of five values of and five values of a distribution-specific nuisance parameter. The distribution-specific nuisance parameter is listed in Table 2 (column NP label). The five values of and of the nuisance parameter were selected from the ranges listed in Table 2 with equally spaced intervals.
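The 5 × 5 design grid can be sketched for the Gen. Gamma row of Table 2. In this version of the text, the symbol of the parameter whose range is [0, 1] in the fourth column is missing, so `param_values` below is a hypothetical name for it. The nuisance parameter is cn with range [0, 2]. Five equally spaced values per range give 25 combinations. With 10000 repetitions each, that amounts to 250000 simulated data sets per distribution and scenario.

```python
from itertools import product


def equally_spaced(lo, hi, k=5):
    """k equally spaced values from lo to hi, both endpoints included."""
    step = (hi - lo) / (k - 1)
    return [lo + i * step for i in range(k)]


# Gen. Gamma row of Table 2 (param_values is a hypothetical name)
param_values = equally_spaced(0.0, 1.0)
cn_values = equally_spaced(0.0, 2.0)
grid = list(product(param_values, cn_values))

n_reps = 10000
print(len(grid))            # 25
print(len(grid) * n_reps)   # 250000
```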

2.2 Software implementation

The implementation relied on the stats module of Python’s SciPy package (Python 3.7.3, NumPy 1.16.3, SciPy 1.2.1). The translation from cl and/or cu to the more common parametrization of the Gamma and Beta distributions (in terms of a and b in Table 1) was obtained iteratively with Newton’s method. The formulas for computing the gradient are provided in [97] (chapter 7.1) and [98] (chapter 25.4), respectively. To compute the initial guess we used the approximation ψ(x) ≈ log(x − 0.5).
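The Newton translation from cl and cu to a and b can be sketched as follows. The sketch assumes, following the abstract, that cl is the log of the geometric mean, i.e. cl = E[log X]. For the Beta distribution it also assumes cu = E[log(1 − X)]. This gives ψ(a) − ψ(a + b) = cl and ψ(b) − ψ(a + b) = cu, where ψ is the digamma function and its derivative ψ′ (trigamma) supplies the gradient. For the Gamma distribution the sketch assumes a shape/rate parametrization, so cl = ψ(a) − log b can be solved for a. For Beta, the initial guess is derived from ψ(x) ≈ log(x − 0.5). The inverse digamma switches to x ≈ −1/(y − ψ(1)) for y < −2.22. That switch follows Minka's notes on Dirichlet estimation and goes beyond what the text states. All function names are hypothetical, and digamma/trigamma are hand-written here only to keep the sketch dependency-free. `scipy.special.digamma` and `scipy.special.polygamma` provide them in SciPy.

```python
import math


def digamma(x):
    """psi(x) for x > 0: recurrence up to x >= 10, then the asymptotic series."""
    acc = 0.0
    while x < 10.0:
        acc -= 1.0 / x
        x += 1.0
    r2 = 1.0 / (x * x)
    return (acc + math.log(x) - 0.5 / x
            - r2 * (1 / 12 - r2 * (1 / 120 - r2 * (1 / 252 - r2 * (1 / 240 - r2 / 132)))))


def trigamma(x):
    """psi'(x) for x > 0, same strategy as digamma."""
    acc = 0.0
    while x < 10.0:
        acc += 1.0 / (x * x)
        x += 1.0
    r2 = 1.0 / (x * x)
    return acc + 1.0 / x + 0.5 * r2 + r2 / x * (1 / 6 - r2 * (1 / 30 - r2 * (1 / 42 - r2 / 30)))


def inv_digamma(y, tol=1e-13, max_iter=100):
    """Solve psi(x) = y for x > 0 with Newton's method."""
    x = math.exp(y) + 0.5 if y >= -2.22 else -1.0 / (y - digamma(1.0))
    for _ in range(max_iter):
        step = (digamma(x) - y) / trigamma(x)
        x_new = x - step
        x = x_new if x_new > 0 else x / 2   # keep the iterate positive
        if abs(step) < tol * max(1.0, x):
            break
    return x


def gamma_shape_from_cl(cl, rate):
    """Shape a of Gamma(shape=a, rate) such that psi(a) - log(rate) = cl."""
    return inv_digamma(cl + math.log(rate))


def beta_from_log_means(cl, cu, tol=1e-12, max_iter=100):
    """Solve psi(a) - psi(a+b) = cl and psi(b) - psi(a+b) = cu for a, b > 0."""
    gap = 1.0 - math.exp(cl) - math.exp(cu)
    if gap <= 0:
        raise ValueError("need exp(cl) + exp(cu) < 1 (Jensen's inequality)")
    s = 0.5 / gap                        # initial guess from psi(x) ~ log(x - 0.5)
    a, b = 0.5 + s * math.exp(cl), 0.5 + s * math.exp(cu)
    for _ in range(max_iter):
        t_ab = trigamma(a + b)
        f1 = digamma(a) - digamma(a + b) - cl
        f2 = digamma(b) - digamma(a + b) - cu
        j11, j22 = trigamma(a) - t_ab, trigamma(b) - t_ab  # off-diagonals are -t_ab
        det = j11 * j22 - t_ab * t_ab
        da = (f1 * j22 + t_ab * f2) / det
        db = (j11 * f2 + t_ab * f1) / det
        lam = 1.0
        while a - lam * da <= 0 or b - lam * db <= 0:
            lam *= 0.5                   # damp the step to keep a, b positive
        a, b = a - lam * da, b - lam * db
        if lam * max(abs(da), abs(db)) < tol * max(1.0, a, b):
            break
    return a, b


print(beta_from_log_means(digamma(2.0) - digamma(5.0), digamma(3.0) - digamma(5.0)))
print(gamma_shape_from_cl(digamma(2.5) - math.log(3.0), 3.0))
```

The usage lines run a round trip: they compute cl and cu from known parameters and then recover them.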

The confidence intervals were computed with the normal-based procedure (theorem 6.16 in [99]). The standard error was that of the Welch test. To compute the standard error of Cohen’s d we used the approximation in [100] (Eq 12.14): where n is the sample size of each group. The denominator in the computation of Cohen’s d was (Eq 12.12 [100]): where vi is the group variance. The TOST thresholds were fixed across cl to correspond to the median estimate of the group difference obtained with a cl located three quarters of the way from the lower to the upper bound of the cl range. For example, the cl used to obtain the threshold for the Gamma distribution was (4 − (−4)) × 0.75 − 4 = 2. Separate thresholds were obtained for transformed and raw data. The threshold estimates were obtained with a Monte Carlo simulation with 5000 samples in each group. The code used to run the simulations and to generate the figures is available from https://github.com/simkovic/CFE.
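The interval computations can be sketched as follows. The equations cited from [100] are not reproduced in this version of the text, so the sketch assumes the standard large-sample formulas for two groups of equal size n. The denominator of Cohen's d is taken as √((v1 + v2)/2). The standard error of d is taken as √(2/n + d²/(4n)). Both are assumptions, not necessarily the exact form of Eq 12.12 and Eq 12.14. The mean-difference CI uses the Welch standard error with a normal quantile, as the text describes. `threshold_cl` reproduces the arithmetic of the TOST example above. All names are hypothetical.

```python
import math
import statistics

Z_975 = statistics.NormalDist().inv_cdf(0.975)


def mean_diff_ci(x, y, z=Z_975):
    """Mean difference with a normal-based CI using the Welch standard error."""
    diff = statistics.mean(x) - statistics.mean(y)
    se = math.sqrt(statistics.variance(x) / len(x) + statistics.variance(y) / len(y))
    return diff, (diff - z * se, diff + z * se)


def cohens_d_ci(x, y, z=Z_975):
    """Cohen's d for equal group sizes with a normal-based CI (assumed formulas)."""
    n = len(x)
    if len(y) != n:
        raise ValueError("this sketch assumes equal group sizes")
    s_within = math.sqrt((statistics.variance(x) + statistics.variance(y)) / 2)
    d = (statistics.mean(x) - statistics.mean(y)) / s_within
    se = math.sqrt(2 / n + d * d / (4 * n))
    return d, (d - z * se, d + z * se)


def threshold_cl(lo, hi, frac=0.75):
    """cl located a fraction frac of the way from lo to hi."""
    return lo + frac * (hi - lo)


print(mean_diff_ci([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]))
print(cohens_d_ci([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]))
print(threshold_cl(-4, 4))  # 2.0
```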

2.3 Parameter recovery with ordered logistic regression and Beta-binomial distribution

This section describes the methods used in section 3.8, which also explains the motivation behind this supplementary investigation. In the first step, OLRM was fitted to ratings of moral dilemmas reported in [1] and [2]. The data are labelled , where y ∈ {0, 1, …, 9} is the rating, ns indexes the participant, i ∈ {1, …, 6} indexes the item, g ∈ {0, 1} indicates whether the data come from the experimental group or from the control group, and s ∈ {0, 1} indicates whether the data come from the original or from the replication study. OLRM is then given by for the control group and for the experimental group it is where is the set of nine thresholds, is item difficulty and ds is the group difference.

In the second step, a set of data points was generated with , where d0 and ck were fixed to the median estimates of the corresponding parameters obtained in the previous step. The parameter cu was fixed to one of 50 equally spaced values in the range [−7.7, 4.8]. In the third step, another OLRM was fitted with which estimates of , and were obtained.
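Generating ratings from an ordered logit model can be sketched as follows. The model equations are missing from this version of the text, so the sketch assumes the common cumulative-logit form P(Y ≤ k) = logistic(c_k − η). Here c_k are increasing thresholds and η is a linear predictor, which in the study would combine item difficulty and group difference. With nine thresholds, ratings take values 0 to 9, matching the rating scale above. The threshold values, the sign convention and the function names are illustrative assumptions.

```python
import math
import random


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def category_probs(eta, thresholds):
    """Category probabilities of a cumulative-logit model (K = len(thresholds) + 1)."""
    cum = [logistic(c - eta) for c in thresholds] + [1.0]
    return [cum[0]] + [cum[k] - cum[k - 1] for k in range(1, len(cum))]


def sample_rating(eta, thresholds, rng):
    """Draw one rating by inverting the cumulative distribution."""
    u = rng.random()
    for k, c in enumerate(thresholds):
        if u <= logistic(c - eta):
            return k
    return len(thresholds)


thresholds = [-4.0, -3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0, 4.0]  # illustrative values
rng = random.Random(3)
means = []
for eta in (-3.0, 0.0, 3.0):
    ratings = [sample_rating(eta, thresholds, rng) for _ in range(2000)]
    means.append(sum(ratings) / len(ratings))
    print(eta, means[-1])
```

Shifting η moves probability mass across categories, but the ratings remain bounded at 0 and 9. This is how an ordered logit model produces floor and ceiling effects on the observed scale.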

The estimation was performed with PyStan 2.19 [101], a software package for statistical inference with MCMC sampling. In each analysis, six chains were sampled and a total of 2400 samples was obtained. Convergence was checked by estimating the potential scale reduction ([102], p. 297) of the parameters. In all analyses and for all parameters where upon convergence.
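The potential scale reduction factor compares between-chain and within-chain variance. The sketch below computes its classic, non-split form. Stan's own diagnostic uses refinements such as split chains, and the exact variant used with [102] is not stated here, so this is an illustration only. With six chains of 400 draws, one assumed split of the 2400 samples, well-mixed chains give a value close to 1. Chains stuck in different regions give a clearly larger value. The function name is hypothetical.

```python
import math
import random
import statistics


def potential_scale_reduction(chains):
    """Classic (non-split) potential scale reduction factor R-hat."""
    n = len(chains[0])
    if any(len(c) != n for c in chains):
        raise ValueError("chains must have equal length")
    w = statistics.mean(statistics.variance(c) for c in chains)        # within-chain
    b = n * statistics.variance([statistics.mean(c) for c in chains])  # between-chain
    var_plus = (n - 1) / n * w + b / n
    return math.sqrt(var_plus / w)


rng = random.Random(4)
mixed = [[rng.gauss(0, 1) for _ in range(400)] for _ in range(6)]
stuck = [[rng.gauss(k, 1) for _ in range(400)] for k in range(6)]
print(round(potential_scale_reduction(mixed), 3))
print(round(potential_scale_reduction(stuck), 3))
```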

3 Results

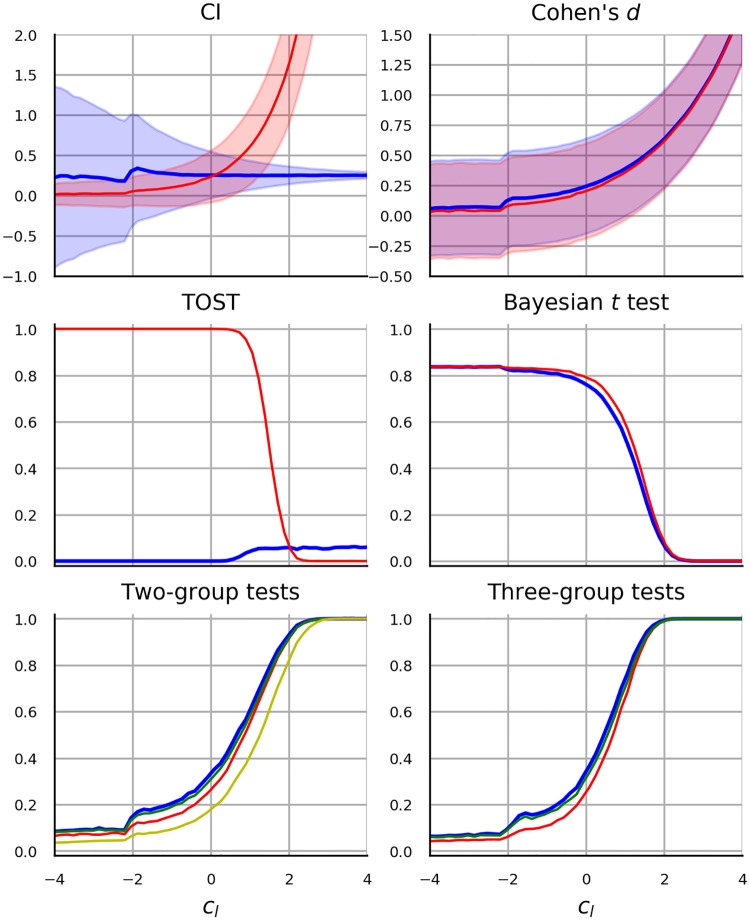

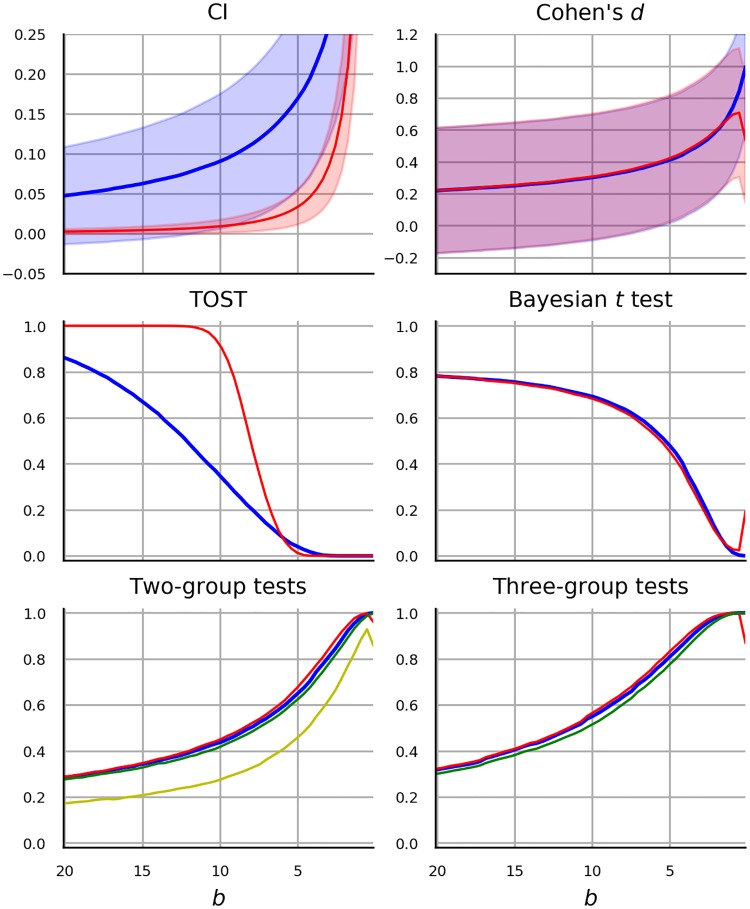

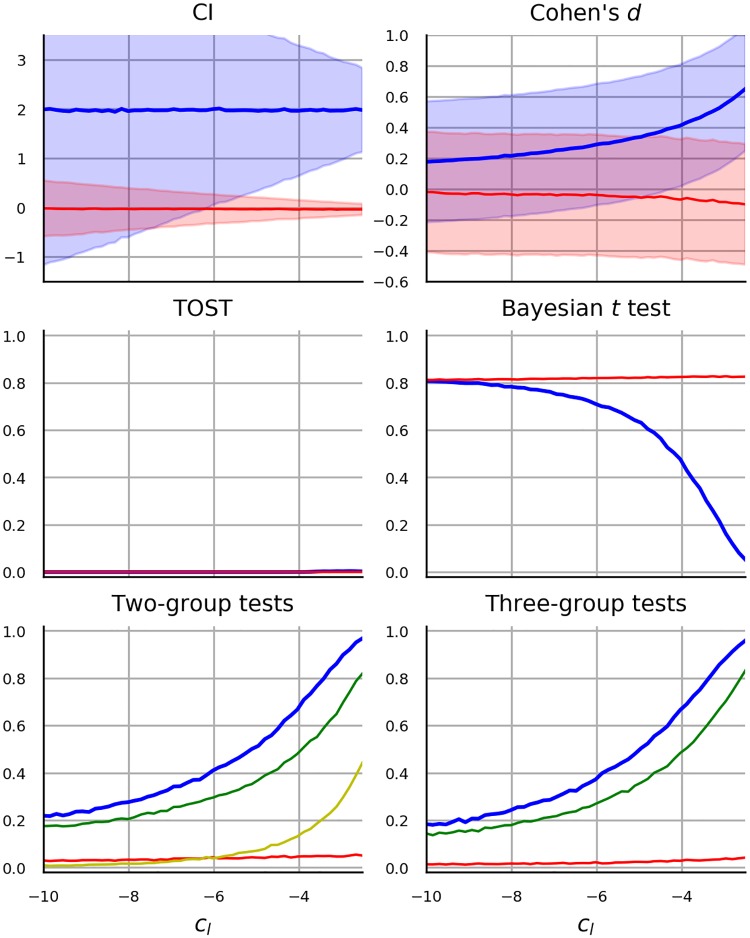

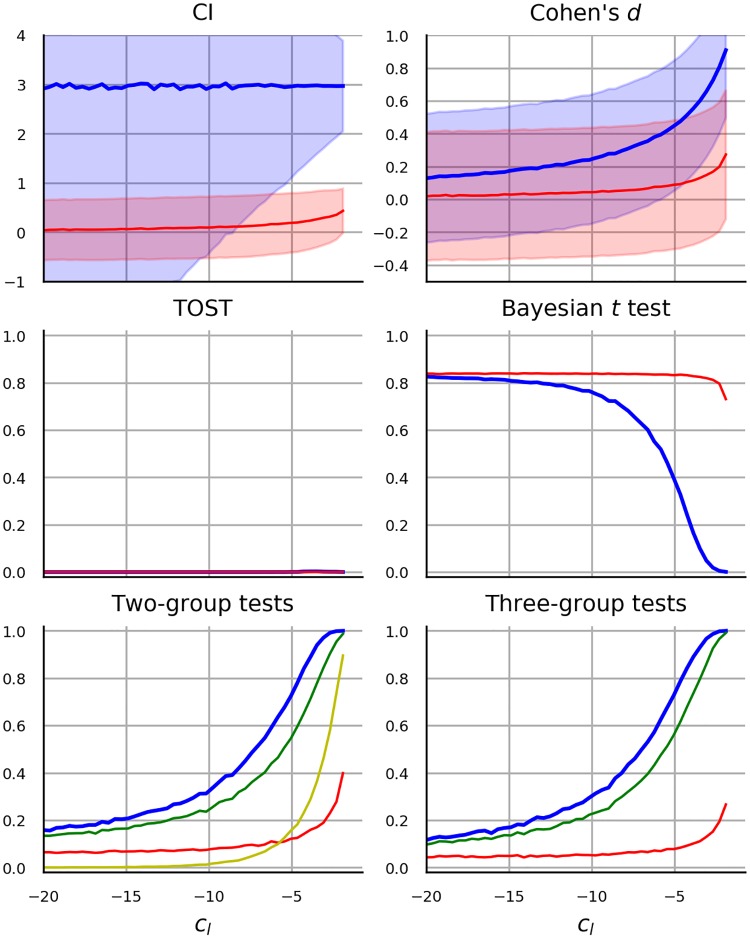

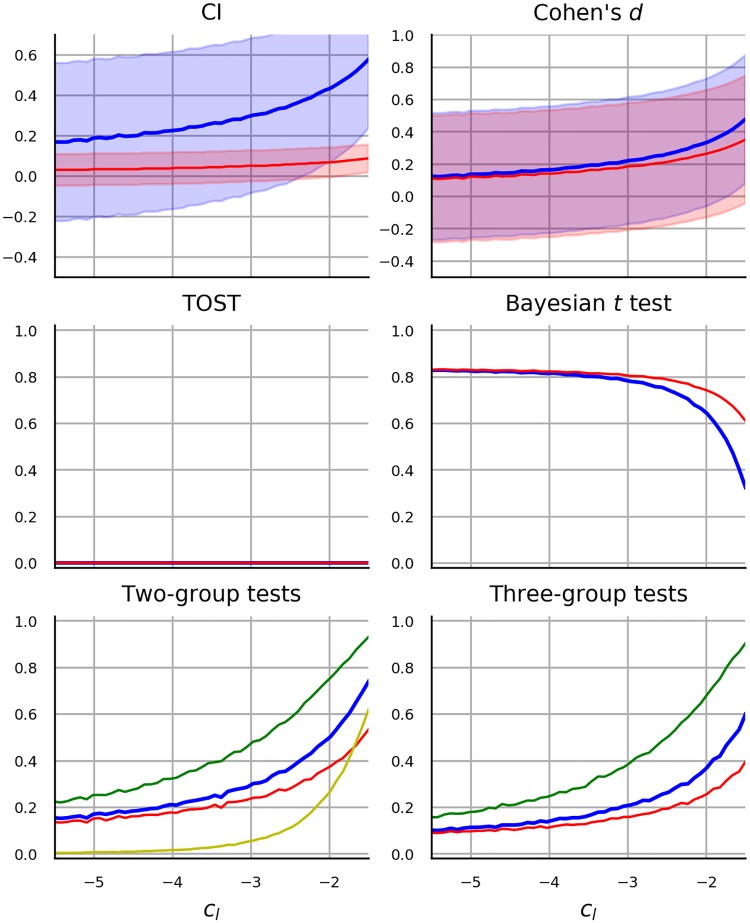

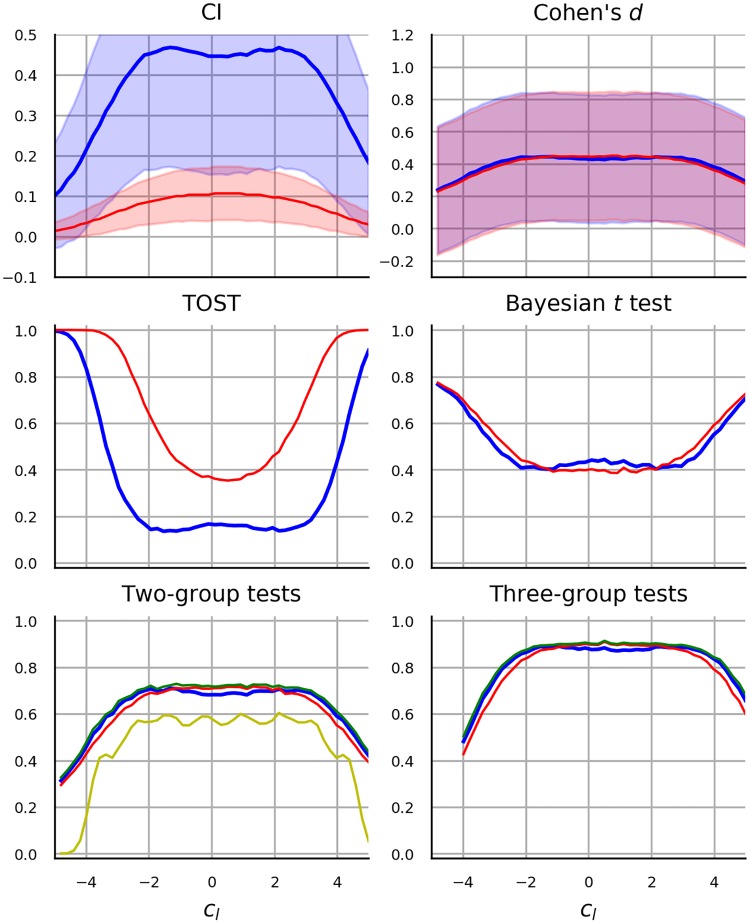

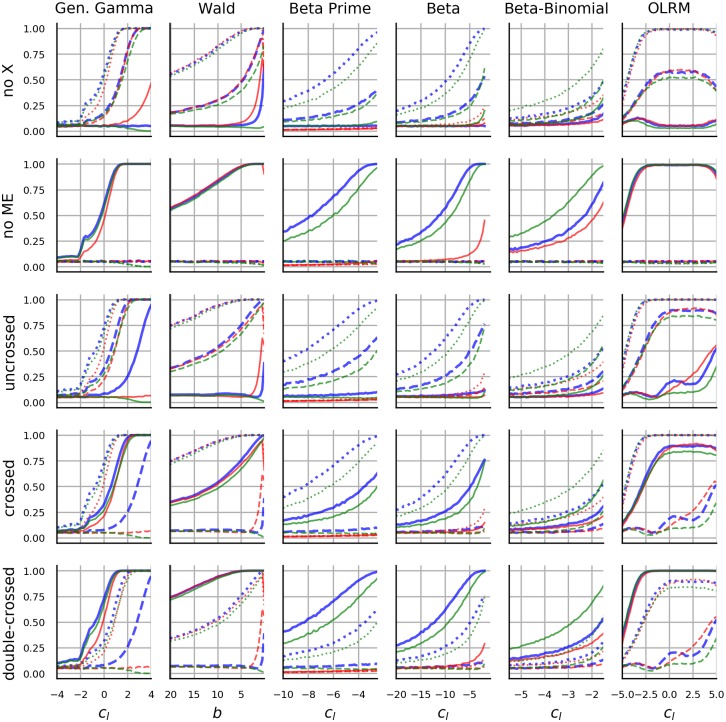

The results are presented as graphs with on the horizontal axis and the performance metric on the vertical axis. To facilitate comparison, statistical methods that were applied to the same data, in the same scenario and with the same metric are presented together in the same graph. Excluding 2 × 2 ANOVA, this results in a total of 1050 graphs, which we structure as 42 figures with 5 × 5 panels. It is neither feasible nor instructive to present all figures in this report; they are included in S1 Appendix. Most of the graphs show qualitatively similar patterns, and in the results section we present what we deem to be a fair and representative selection. In particular, we present for each distribution the results for a particular pair of values of and of the nuisance parameter. These values are listed in the third and the sixth column of Table 2.

3.1 Generalized gamma distribution