Abstract

A seven-dimension framework, introduced by Baer, Wolf, and Risley in an iconic 1968 article, has become the de facto gold standard for identifying “good” work in applied behavior analysis. We examine the framework’s historical context and show how its overarching attention to social relevance first arose and then fueled the growth of applied behavior analysis. Ironically, however, in contemporary use the framework serves as a bottleneck that prevents many socially important problems from receiving adequate attention in applied behavior analysis research. The core problem lies in viewing the framework as a conjoint set in which “good” research must reflect all seven dimensions at equally high levels of integrity. We advocate a bigger-tent version of applied behavior analysis research in which, to use Baer and colleagues’ own words, “The label applied is determined not by the procedures used but by the interest society shows in the problem being studied.” Because the Baer-Wolf-Risley article expressly endorses the conjoint-set perspective and devalues work that falls outside the seven-dimension framework, pitching the big tent may require moving beyond that article as a primary frame of reference for defining what ABA should be.

Keywords: Applied behavior analysis, History, Historiography, Translation, Social validity

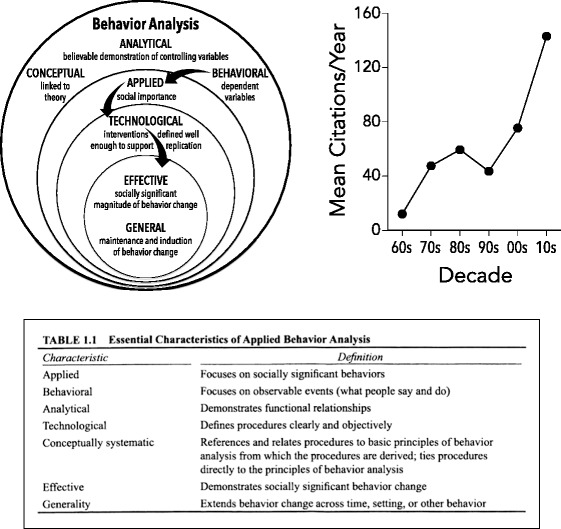

Before long, a half-century will have elapsed since the first issue of Journal of Applied Behavior Analysis (JABA) featured Baer, Wolf, and Risley’s (1968) iconic article, “Some current dimensions of applied behavior analysis” (hereafter we will refer to the article as BWR, and the authors as Baer et al.). In the years since the article was published, the seven-dimension framework that it described (top, left panel of Fig. 1) has become deeply ingrained in the fabric of applied behavior analysis (ABA; see Carpintero Capell, De Barrio, & Mabubu, 2014). The framework is invoked frequently in ABA scholarship, with BWR garnering nearly 3400 scholarly citations (Google Scholar search conducted October 6, 2016) and its citation rate increasing across the decades (top, right panel of Fig. 1). The BWR framework also is routinely used to initiate future professionals into the field of ABA. At the time of this writing, we examined how 12 behavior analysis textbooks, published in the years 2000–2015, defined ABA. Eleven of the books referenced BWR for this purpose, with 9 specifically describing the seven dimensions (see Fig. 1, bottom, for an example). Similarly, according to the Behavior Analyst Certification Board’s list of required competencies, a Board Certified Behavior Analyst® must be able to “Define and describe the dimensions of applied behavior analysis (Baer, Wolf, & Risley, 1968)” (Behavior Analyst Certification Board Fifth Edition Task List, retrieved February 13, 2017, from http://bacb.com/wp-content/uploads/2017/01/170113-BCBA-BCaBA-task-list-5th-ed-english.pdf).

Fig. 1.

Top, left: the seven dimensions of applied behavior analysis as described by Baer, Wolf, and Risley (1968). All of behavior analysis is Behavioral, Analytical, and Conceptual. ABA’s focus on problems of social importance is a subset of Behavioral, since only selected behaviors are bound up in social concerns. ABA creates Technological interventions that are Effective under the conditions in which they are implemented and also produce General outcomes. Top, right: mean number of scholarly citations per year of the Baer et al. article. Bottom: example of a textbook summary of the seven dimensions. Reproduced from Mayer et al. (2012) by permission of the Sloan Publishing Company

As the preceding examples document, BWR has become the de facto frame of reference for describing what ABA is, or should be, at the level of both research and practice. Both domains are important, but increasingly they are separate domains. At the time BWR was composed, seminal practitioners were also, for the most part, ABA’s seminal scientists (Rutherford, 2009), but in recent years, the roles of scientist and practitioner have tended to be filled by different individuals (e.g., Marr, 1991; Rider, 1991; Sidman, 2011). The present article focuses on research and examines how BWR achieved its status as the benchmark definition of scholarship in ABA. This part of our appraisal examines some general factors that lead people to regard a publication as iconic and the specific historical conditions under which BWR was created.

In recognition of the fact that iconic works have wildly varied shelf lives,1 we also explore BWR’s continuing qualifications as the gold-standard description of ABA research. Central to this part of our appraisal is the mission that Baer et al. defined back in 1968: the capacity of ABA research to achieve broad social relevance (defined in terms of “the interest which society shows in the problems being studied;” BWR, p. 92).2 Among the points of discussion is a risk that an overly strict criterion for what qualifies as ABA may focus attention on specific methods and away from many informative lines of investigation. Because research of societal importance is framed primarily in terms of topics or problems rather than methods, we suggest that there is no one-size-fits-all definition of ABA research. Our core proposition will be that ABA research should be thought of as a fuzzy concept with many possible features, not all of which must be present in any single investigation. Throughout, we make no attempt to thoroughly summarize BWR (its wide circulation suggests that readers will require no such assistance), although we quote from it as necessary to show that our observations are not subjective.

Iconic Publications Articulate a Discipline’s Rules of Engagement

People tend to value publications that shaped the discipline to which they are committed. Branches of geology, biology, and psychology, respectively, were forever altered when Hutton (1788) proposed that rock layers hold clues to events of the distant past; when Darwin (1859) derived the principle of natural selection; and when Skinner (1938) showed how to reveal regularities by focusing on behavior-consequence relations. Iconic publications like these alter a discipline by describing rules for engaging with a particular subject matter, and such rules of engagement are appreciated because they save the reader a considerable amount of contingency shaping. Skinner (1956) colorfully described his early career as a series of laboratory false starts that gradually shaped an investigatory repertoire. This repertoire proved crucial to later successes of both Skinner and those who followed his lead. Those who followed had it easier than Skinner, of course, because Skinner shared not only what had been discovered but also some of the rules of engagement that fueled his discoveries (e.g., Ferster & Skinner, 1957; Skinner, 1938).

By disseminating rules of engagement, iconic publications establish a network of “apprentices,” with repertoires similar to those of the mentor-author, who subsequently come into contact with significant professional reinforcers (e.g., see Skinner’s [1957] account of scientific behavior). This outcome helps to explain the esteem with which many disciplines regard their “first textbooks,” such as Thorpe’s (1898) in chemical engineering, and Keller and Schoenfeld’s (1950) Principles of Psychology in behavior analysis. In reflecting on his own impressive career accomplishments, for example, Murray Sidman (1995) was reminded of Principles of Psychology, to which he was exposed fairly early in his training:

I found that much of my own behavior that I had assumed was the result of interactions with my own data, assisted by my own creative thought processes, actually came from [Principles of Psychology]; the behavior had become part of my repertoire. (unpaginated preface)

Sidman’s comment precisely captures how, for newly indoctrinated “apprentices,” an iconic publication “does not just present what is known but points out what we need to know, and suggests how we might find out” (Sidman, 1995, unpaginated preface). As the next section describes, BWR may be assumed to have served this role for early generations of ABA investigators.

BWR’s Pivotal Role in the Development of ABA

BWR is appreciated today for providing the first systematic rules of engagement for extending behavior analysis, which began in the laboratory, to the field. These rules of engagement had taken form gradually, and through considerable trial and error, during the 1940s through the early 1960s, when procedures devised for studying the behavior of rats and pigeons first were adapted for use with humans (e.g., Rutherford, 2009). As some of these efforts shifted from laboratory to field, by the late 1950s several published reports documented the emergence of something not very different from ABA as Baer et al. would later describe it (Ayllon & Michael, 1959; Azrin & Lindsley, 1956; Williams, 1959; see also Morris, Altus, & Smith, 2013).

Once initiated, ABA grew rapidly. Barely a decade after those seminal reports of the 1950s, a sufficient body of work existed to found JABA, which provides as good a marker as any of ABA becoming distinct from basic research in the experimental analysis of behavior (Morris et al., 2013). Thus, BWR, as a principal component of JABA’s first issue, appeared just as the efforts of a modest-sized community of pioneers were coalescing into a scholarly movement. Later, Baer et al. would acknowledge a debt to this seminal community by referring to BWR as “an anthropologist’s account of the group calling its culture Applied Behavior Analysis” (Baer, Wolf, & Risley, 1987, p. 313).

The rules of engagement articulated in BWR placed a heavy emphasis on addressing problems of societal importance (hereafter we will refer to this as a social-validity perspective). Subsequently, ABA developed strongly along these lines, with attendant growth in the number of individuals practicing ABA as BWR described it (Baer et al., 1987), in the total number of pages published in JABA, and in the proportion of JABA articles describing BWR-style research (Hayes, Rincover, & Solnick, 1980).

At least part of this growth can be credited directly to the rules of engagement that BWR articulated. With its clear specification of an agenda and its common-sense explanation of defining dimensions, BWR would have proven immensely useful in training new generations of “apprentices” according to the social-validity model. As Baer et al. (1987) later pointed out (though BWR, surprisingly, did not), such an emphasis on social validity is essential to harnessing contingencies of survival that allow professional disciplines to flourish. No professional field can proceed without resources, and a field that transparently addresses society’s interests may be rewarded with fee-for-service arrangements, grant funds, positions for its researchers and practitioners, and so forth (e.g., Critchfield, 2011a; Mace & Critchfield, 2010; Stokes, 1997). BWR’s emphasis on social validity helped to link ABA to such tangible benefits, and those who followed its rules tended to profit accordingly. In recent years especially, this has been evident in fervent public demand for ABA services and, in many jurisdictions, regulatory embrace of professional ABA certification in fee-for-service arrangements (e.g., Johnston, Carr, & Mellichamp, in press; Shook & Favell, 2008).

Iconic Publications Are Creations of Their Time

Like all behavior, that which creates publications is a product of past and current influences. Whatever a publication’s subsequent impact on a professional field, it first arises, as per Baer et al.’s (1987) “anthropologist” comment, as a snapshot of the period in which it was created and must be evaluated partly in this context. For example, Watson’s classic (1913) article, “Psychology as the behaviorist views it,” may be a seminal description of behaviorism (Harzem, 2004), but it did not spring forth unprovoked. Rather, Watson penned the article to address specific problems of his day, prominent among which were the prevalence of biological nativism and subjective measurement methods (introspection). Watson’s influence has been so durable that it is easy to overlook this historical context and uncritically accept once-revolutionary ideas that have become part of a diffuse contemporary zeitgeist, “like a cube of sugar dissolved in tea; it has no major distinct existence but is everywhere” (Harzem, 2004, p. 11).

BWR and the Battle for the Soul of ABA

So it may be with BWR. Baer et al.’s seven-dimension framework has become so familiar that contemporary observers may overlook the circumstances that gave rise to the source article. During the early days of extending behavior analysis to human behavior, there was considerable friction between two competing camps (Rutherford, 2009). One group (which we will call the experimental-control camp) believed that responsible application required preliminary explorations of the generality to humans of basic behavior principles that had been derived from animal research, or as Wolf (2001) put it:

Some were of the opinion that ... a leap [from lab to field] was premature and unwise because we didn’t know enough, that we needed to wait for more human operant research. (p. 290).3

At the least, it was asserted that field extensions should retain laboratory-like procedures in order to preserve experimental control:

At the time… many of the operant conditioning people were very strongly methodologically oriented to the point where [they felt] you could not possibly collect reasonable data unless it was automatically collected. (Jack Michael, quoted in Rutherford, 2009, pp. 60–61)

Those with strong backgrounds in the experimental analysis of behavior therefore were uncomfortable with what they regarded as rather unstructured tinkering in field settings. In their view, some of the laboratory’s most powerful analytic tools were being abandoned, at best costing opportunities for investigation, and at worst possibly eroding the field’s conceptual core (Rutherford, 2009). For instance, in observing the dissemination of Skinner’s air crib (an automated “baby tender”), Ogden Lindsley remarked that

It amazed me that 250 children had been reared in air cribs … and no one had put in a lever. No one had put in a lever.4 (quoted in Rutherford, 2009, p. 61)

Skinner (1959) might have captured the essence of the experimental-control camp’s worries when, writing about mainstream clinical psychology, he warned that clinicians might be more strongly influenced by social factors (“gratitude is a powerful generalized reinforcer;” p. 450) than by the reinforcers associated with understanding behavior’s controlling variables. Deitz (1982) has explained how the development of professional medicine was slowed by forgoing experimental analyses in hurried pursuit of socially important outcomes, and Skinner (1959) illustrated his own argument by invoking Albert Schweitzer: “If he had worked as energetically for as many years in a laboratory of tropical medicine, he would almost certainly have made discoveries which in the long run would help -- not thousands -- but literally billions of people” (p. 451). A parallel with the concerns of the experimental-control camp in behavior analysis is obvious.5

By contrast, members of the social-validity camp were impatient with laboratory-esque extensions6 and instead sought to change behavior that had been selected for practical importance rather than laboratory convenience. To their way of thinking, basic principles of reinforcement were straightforward and dependable “like gravity” (Jack Michael, quoted in Rutherford, 2009, p. 59), and consequently “findings from human-sized Skinner boxes held little interest for this new breed of behavior-shaper” (Rutherford, 2009, p. 60). As Risley (2001) wrote:

We saw too many studies… whose importance to human affairs were [sic] highlighted in their introduction and discussion sections but absent in their procedures and results sections. (p. 271)

A leading member of the social-validity camp was Montrose Wolf, who, in the early 1960s, was hired at the University of Washington to run a human operant laboratory, but instead spent most of his time consulting on child behavior problems in natural settings:

Wolf came to consider laboratory research on human behavior to be mostly a ‘dead end in scientific trappings’ and… when he created the editorial policy of the new Journal of Applied Behavior Analysis, he explicitly excluded laboratory purported-analogues, in favor of the in-context observation and investigation of real-world phenomena. (Todd Risley, quoted in Rutherford, 2009, p. 60)

Manifesto: All Social Validity, All the Time

These were the times in which BWR was composed. With a formative battle underway about whether the “extension to human behavior” would be defined more by society’s practical concerns or by a methodological debt to the experimental analysis of animal behavior, any credible observer would have been compelled to address the controversy. In doing so, Baer et al. took a decisive stand in favor of the social-validity camp. BWR’s first two pages were devoted to distinguishing clearly between ABA and the experimental analysis of behavior, and its subsequent explanation of the dimension Applied was, not surprisingly, grounded almost exclusively in social-validity arguments:

• Applied research is constrained to examining behaviors which are socially important, rather than convenient for study. (BWR, p. 92, italics added).

• In behavioral application, the behavior, stimuli, and/or organism under study are chosen because of their importance to man and society, rather than their importance to theory. (BWR, p. 92, italics added)

• The label applied is … determined by the … interest which society shows in the problem being studied. (BWR, p. 92, italics added)

Further affirmation of the social-validity mission suffused BWR’s discussion of the dimensions Behavioral, Analytic, Effective, and General (Table 1). For good measure, the central point was reiterated in BWR’s concluding comments:

• An applied behavior analysis will make obvious the importance of the behavior changed (p. 97, italics in original)

Table 1.

Emphasis on social importance in BWR’s description of several dimensions of applied behavior analysis

| Dimension | Relevant statements |
|---|---|
| Behavioral | “Applied research is eminently pragmatic. It asks how it is possible to get someone to do something... [and the behavior analyst must] defend that goal as socially important.” (BWR, p. 93, italics added) |
| Analytic | “Application typically means producing valuable behavior.” (BWR, p. 94, italics added) |
| Effective | “If an application of behavioral techniques does not produce large enough effects for practical value, then application has failed.” (BWR, p. 96, italics added) |
| | “In application, the theoretical importance of a variable is usually not an issue. Its practical importance, specifically its power in altering behavior enough to be socially important, is the essential criterion.” (BWR, p. 96, italics added) |
| | “In evaluating whether a given application has changed behavior enough to deserve the label, a pertinent question can be, how much did that behavior need to be changed? Obviously, that is not a scientific question, but a practical one. Its answer is likely to be supplied by people who must deal with the behavior.” (BWR, p. 96, italics added) |
| General | “Application means practical improvement in important behaviors.” (BWR, p. 96, italics added) |

From the vantage point of contemporary ABA, in which socially important problems are routinely addressed, these remonstrances seem excessive, but BWR was a product of its era. With social validity as the prime directive and ABA’s status as an independent enterprise not yet solidified, Baer et al. sought not just to identify, but also to defend and promote, those characteristics that, in their view, most distinguished ABA from the experimental analysis of behavior.

Because BWR was a creation of its time, some of its arguments, while technically correct, may no longer merit the special emphasis that BWR gave them. For instance, BWR defended direct observation (as contrasted with automated behavior recording) as a viable means of measuring behavior in the field. Today, of course, observational methods are an integral part of every student’s introduction to ABA (e.g., Cooper, Heron, & Heward, 2007). In selected instances, BWR’s arguments seem not only dated but also stylistically emblematic of the period’s prickly relations between more basic researchers and what Baer et al. might have regarded as “truly applied” behavior analysts. For example, when explaining how a concern for social validity might place practical constraints on experimental design, Baer et al. noted that:

Society rarely will allow its important behaviors, in their correspondingly important settings, to be manipulated repeatedly for the merely logical comfort of a scientifically skeptical audience. (BWR, p. 92, italics added)

[A laboratory] experimenter has achieved an analysis of behavior when he can exercise control over it. By common laboratory standards, that has meant an ability to turn the behavior on and off, or up and down, at will. Laboratory standards usually have made this control clear by demonstrating it repeatedly, even redundantly, over time. Applied research... cannot approach this arrogantly frequent clarity of being in control.... (BWR, p. 94, italics added)

Today, it is widely understood that practical considerations in field settings can limit the number of observations that may be made and reduce the opportunity to reverse experimental effects. The multiple baseline design, which BWR went to some lengths to justify as an alternative to potentially troublesome reversal designs (see p. 94), is now an uncontroversial standard component of the ABA investigatory arsenal (Cooper et al., 2007).

Upon close inspection of such details, it becomes evident that BWR is not, as Baer et al. (1987) suggested, merely an “anthropologist’s account of ... [1968] Applied Behavior Analysis” (p. 313). It is a polemic,7 a Declaration of Independence from the experimental analysis of behavior. While the BWR framework (Fig. 1) included three dimensions that describe behavior analysis as a whole (Behavioral, Conceptual, and Analytical), within an overarching concern for social validity these were embraced only uneasily. Baer et al. took pains to emphasize that not every Behavior is socially important; that in applied work not all Analytical tools are suitable; and that Conceptual analysis is not the primary goal but rather a possible tool for arriving at socially important behavior change (“In application, the theoretical importance of a variable is usually not an issue. Its practical importance... is the essential criterion;” BWR, p. 96). The framework’s remaining dimensions (Technological, Effective, and General) were explained almost entirely within the context of the social-validity mission.

Viewing BWR as a “Declaration of Independence” makes it possible to extend several observations about the article’s influence upon the early development of ABA. First, BWR may have helped to tip the balance of power between the early social-validity and experimental-control camps not only because it articulated the former model with exceptional clarity but also because, in its edgier sections, it portrayed the alternative view as misguided and perhaps even foolish (i.e., “redundant,” “arrogant”). Years later, when reflecting upon the development of ABA, Risley (2001) emphasized that it had spread far and wide “except peculiarly in those places where operant laboratory research was strongest” (p. 270, italics added). This phrasing suggests a persistent view that ABA and other kinds of human research were locked in a zero-sum game.

Second, the experimental-control camp produced no equivalent manifesto, and predictably, perhaps, it produced relatively few intellectual “apprentices.” In fact, a generation and more later, the descendants of this camp, now calling their work human operant research or the Experimental Analysis of Human Behavior (EAHB), were still struggling to achieve critical mass, to standardize their methods, to make sense of their findings, and to identify their proper place in the broader discipline of behavior analysis (e.g., Baron, Perone, & Galizio, 1991; Buskist & Johnston, 1988; Dinsmoor, 1991; Etzel, 1987; Hake, 1982; Harzem & Williams, 1983; Michael, 1984; Perone, 1985; Perone, Galizio, & Baron, 1988).

Third, BWR served as an explicit mission statement for the newly launched JABA, which according to Montrose Wolf’s design explicitly excluded the kinds of investigations being conducted by the experimental-control camp (Rutherford, 2009). As Risley (2001) has noted:

The Baer, Wolf, and Risley (1968) article was written…as an attempt to differentially prompt certain types of submissions….[What] most concerned Wolf and me: the encouragement of field research; the insistence that you should seek lawfulness in the everyday activities of people; and the pursuit of the invention (and documentation) of new behavioral technology. (p. 270)

Investigators from the social-validity camp therefore had a natural outlet for their work, while those from the opposing camp did not. Even a generation later, with basic science journals still featuring primarily research with nonhumans and JABA, by design, unfriendly to laboratory research with humans, those working in EAHB found it difficult to publish their research (e.g., Hake, 1982).

A Crisis of Confidence and a Translational Pivot

A further noteworthy development is that, roughly a decade after BWR’s publication, ABA’s heady beginnings gave way to a crisis of confidence during which observers worried that the field had lost contact with its scholarly foundations and therefore had become overly Technological (e.g., Birnbrauer, 1979; Branch & Malagodi, 1980; Cullen, 1981; Deitz, 1978; Michael, 1980; Moxley, 1989; Pierce & Epling, 1980; Poling, Picker, Grossett, Hall-Johnson, & Holbrook, 1981). “We are becoming less concerned with basic principles of behavior and more concerned with techniques per se,” wrote Hayes, Rincover, and Solnick (1980, p. 283), and Morris (1991) bemoaned the rise of research that “demonstrates the effects of behavioral interventions at the expense of discovering... actual controlling relationships” (p. 413).

In support of these concerns, Hayes et al. (1980) surveyed the first 10 volumes of JABA and determined that its contents were growing less Analytical and Conceptual. Regarding Analytical, they found a decrease over time in the percentage of JABA empirical articles that described component analyses (which determine the features of an intervention package that are responsible for behavior change) and parametric analyses (which map dose-response relationships between interventions and behavior changes). Regarding Conceptual, Hayes et al. described an increase over time in the percentage of JABA articles that were purely methodological (e.g., development-of-procedures exercises that might today be referred to as R&D; Johnston, 2000) or Technological (describing an intervention without reference to behavior principles). There was a concomitant decrease in the percentage of articles that today might be called “translational” (i.e., “shows an effort to advance our basic understanding of some behavioral phenomena;” Hayes et al., p. 278).

It is difficult to view these trends as unrelated to BWR’s ardent focus on distinguishing ABA from basic research. BWR presented seven dimensions but passionately defended one core idea: that application means creating socially appreciated behavior change. The dimensions of greatest concern during “The Crisis” (Conceptual and Analytical) were two of the three that are most central to behavior analysis generally—that is, they are dimensions along which ABA is particularly at risk for being confused with the experimental analysis of behavior. We suggest, therefore, that “The Crisis” arose when a newly minted army of “apprentices” took BWR’s rules of engagement very literally by emphasizing social importance above all else, and by taking pains not to look too much like the experimental analysis of behavior. As Hayes et al. (1980) commented:

The data show that applied behavior analysis is increasingly following Baer et al.’s recommendation that applied studies directly manipulate the problem behaviors, in natural settings, and with the troubled populations. However, this definition of applied has been labeled a “structural” one because it is based strictly upon the nature of the subjects, settings, and behavioral topography being studied [rather than increasing] the applied workers’ ability to predict, understand, and control socially important behavior in the settings and with the clients they serve (p. 281).

“The Crisis” shows that BWR was less a final statement on ABA than a developmental marker in a continuing debate over what ABA should become. Prior to BWR’s publication, members of the social-validity camp had been impatient with conceptually and methodologically cautious research-to-practice translation. Following an additional decade or so of field successes, it was perhaps logical for the same individuals to exhibit “less and less interest in conceptual questions” (Hayes et al., 1980, p. 289)—or, stated more positively, to conclude that the technology of dissemination was sufficiently advanced that field practitioners had little need to understand the underlying theoretical principles (e.g., see Baer, 1981; Wolf, 2001).8 To be clear, Baer et al. did not advance this view explicitly and even acknowledged that

The field of applied behavior analysis will probably advance best if published descriptions of its procedures... strive for relevance to principle... This can have the effect of making a body of technology into a discipline rather than a collection of tricks. (p. 96)

But BWR may have contributed to “The Crisis” by damning with faint praise. In particular, its endorsement of the Conceptual dimension was cursory (one paragraph of a 6.5-page article) and lukewarm (in the above quotation, note the autoclitics “probably” and “can” rather than, say, “certainly” and “will”). This, combined with the article’s dismissive treatment of the Analytical conventions of laboratory research, may have left the impression that the Conceptual and Analytical dimensions were optional considerations or even, consistent with the pre-BWR views of some members of the social-validity camp whom we quoted previously, inconvenient hurdles to the development and dissemination of Technological interventions.

Eventually, more Conceptual and Analytical heads prevailed in ABA research. At roughly the same time that “The Crisis” became a matter of public discussion, there was a development that would dramatically inflect ABA’s trajectory. Functional analysis methodology (Iwata, Dorsey, Slifer, Bauman, & Richman, 1982) provided a relatively user-friendly way to examine socially important behavior in the context of basic principles. Its procedures qualified as Technological (easily replicated) but were explicitly Analytical in design (demonstrated clear experimental control), and in a standardized way led directly to Conceptual insights (linking problem behavior to behavior principles). Functional analysis thus provided a vivid illustration of how to integrate the practice-friendly Technological dimension with dimensions that characterize behavior analysis as a whole (e.g., Mace & Critchfield, 2010; Wacker, 2000).

The Iwata et al. (1982) functional analysis report was reprinted in JABA in 1994. Within a few years, that journal, which Hayes et al. (1980) had previously regarded as part of the Technological problem, became part of the solution through shifts in editorial policy that placed greater emphasis on the theoretical roots of application. Beginning around the mid-1990s, ABA scholarship took on an increasingly translational tenor that continues to this day (e.g., Virues-Ortega, Hurtado-Parrado, Cox, & Pear, 2014; for a brief history, see Mace & Critchfield, 2010; for more extensive surveys, see DiGennaro Reed & Reed, 2015; Madden, 2013).

Section Conclusion

Darwin is reputed to have said that in scholarly discourse there are “lumpers” who emphasize the similarities among things and “splitters” who emphasize differences.9 In composing BWR, Baer et al. operated primarily as splitters. Although their spirited defense of ABA as a unique enterprise makes sense in historical perspective, the approach can be linked to both desirable and undesirable disciplinary outcomes (ABA’s dramatic growth and “The Crisis,” respectively). With this mixed track record as context, we now consider how well the BWR framework characterizes contemporary ABA research.

BWR and Contemporary Applied Research

It is reasonable to suggest that investigations that incorporate all of the BWR dimensions (Fig. 1) remain as well positioned to contribute to ABA’s social-validity mission as they were 50 years ago. An investigation that is Analytical, Conceptual, and Behavioral is good behavior analysis and therefore capable of advancing the understanding of a problem, whether practical (Applied) or theoretical. An intervention that is Technological, Effective, and General shows how to use this understanding to create socially valued change. In this general sense, the BWR framework holds up well as a template for ABA research. We said “a template” rather than “the template” because, upon careful consideration of the mission of applied research, two kinds of ambiguities about the BWR framework can be identified.

The first ambiguity concerns how individual BWR dimensions are to be interpreted when examining specific research activities. For illustrative purposes, consider the dimension Behavioral in the context of the study of childhood accidents that cause injury, death, loss of physical functioning, and substantial health care costs. Baer et al. asserted that, “the behavior of an individual is composed of physical events” (BWR, p. 93) and admonished against anything other than direct observation of those events. Child accidents, however, pose considerable challenges to direct observation. They take place rarely and unpredictably, almost always out of an investigator’s scope of observation. Consequently, research aimed at identifying the antecedents and consequences of accident-related behaviors often employs interviews with caregivers and other people who have observed these behaviors (e.g., Alwash & McCarthy, 1987; Peterson, Farmer, & Mori, 1987). Baer et al. strongly objected to using verbal reports as a substitute for direct observation, so according to a strict application of the BWR framework, this research must be considered uninformative, despite the fact that it has led to Effective strategies for preventing injuries (e.g., Finney, Christophersen, Friman, Kalnins, Maddux, Peterson, Roberts, & Wolraich, 1993). Or rather, it has led to apparently Effective strategies, because the primary dependent variable in intervention research is the number of child injuries (e.g., see Kaung, Hausman, Fisher, Donaldson, Cox, Lugo, & Wiskow, 2014), and injuries are not behavior per se but rather a byproduct of behaviors and environments that place children at risk (see Johnston & Pennypacker, 1980, for a critique of measuring behavior products instead of behavior). Thus, according to a strict application of the BWR framework, this research can support no confident conclusions about improvements in child welfare.

Never mind that markers of “general functioning,” like child injuries, directly address problems as society conceives of them (e.g., see Strosahl, Hayes, Bergan, & Romano, 1999) or that most observers would consider an intervention to be pointless if it changed child behavior without reducing injuries (e.g., Wolf, 1978). In short, by common sense, standard research on child injuries qualifies as socially important, but within a strict application of the BWR framework, it cannot be useful to ABA because it is insufficiently Behavioral.

The preceding anticipates a second ambiguity about the BWR framework, which concerns whether every study must exhibit all seven of its dimensions in order to qualify as ABA. Although putatively “the label applied is not determined by the research procedures used but by the interest which society shows in the problem being studied” (BWR, p. 92), Baer et al. presented their framework as a conjoint set in which six dimensions were described as necessary components of the seventh (Applied = social importance). Thus:

An applied behavior analysis will make obvious the importance of the behavior changed, its quantitative characteristics, the experimental manipulations which analyze with clarity what was responsible for the change, the technologically exact description of all procedures contributing to that change, the effectiveness of those procedures in making sufficient change for value, and the generality of that change (BWR, p. 97; italics in original)

This passage makes clear that, in the view of Baer et al., no investigation qualifies as ABA if it lacks any one of the seven dimensions. Thus, just as child-accident research is insufficiently Behavioral, thoughtful analyses of the variables controlling acts of terrorism (Nevin, 2003) and consumer demand for indoor tanning services (Reed, Partington, Kaplan, Roma, & Hursh, 2013) fall short on three dimensions (Effective, General, and Technological) because they lack an intervention.

Similarly, Lovaas’ (1987) seminal randomized controlled trial on early intensive autism intervention may be insufficiently Analytical because it employs a group-statistical experimental design. A common objection is that group-comparison research is not, in BWR terminology, Analytical because behavioral functional relations always are within-individual effects (for representative arguments see Bailey & Burch, 2002; Branch & Pennypacker, 2013; Cooper et al., 2007; Hurtado-Parrado & Lopez-Lopez, 2015; Johnston & Pennypacker, 1980, 1986; and Sidman, 1960).10 The BWR article did not directly disparage group-based designs, but Baer (1977) subsequently did and, tellingly, when Baer et al. introduced the dimension Analytical, they mentioned only single-subject designs. Despite the central role of randomized controlled trials in contemporary public policy deliberations (e.g., Drake, Latimer, Leff, McHugo, & Burns, 2004), presenting only single-subject designs remains a common strategy for illustrating the ABA research agenda (see Haegele & Hodge, 2015; Malott & Shane, 2014; Martin & Pear, 2015; Miltenberger, 2016; Poling & Grossett, 1986). Overall, while the interpretation that group designs are incompatible with ABA cannot be traced exclusively to BWR, it is fair to call it a standard component of contemporary appeals to the BWR framework (see Bailey & Burch, 2002; Cooper et al., 2007; Johnston & Pennypacker, 1986).

If the examples just mentioned do not qualify as Applied, then a state of affairs exists in which, in order to guarantee the BWR-defined purity of ABA, potentially informative work on important problems must be excluded from consideration. We did not manufacture this conundrum for dramatic effect. Throughout the history of ABA, journal editorial teams have wrestled with the determination of what counts as Applied, and in the process, they often have considered more than “the interest which society shows in the problem being studied” (BWR, p. 92). Montrose Wolf’s insistence that JABA would reject “laboratory purported-analogues, in favor of the in-context observation and investigation of real-world phenomena” (Todd Risley, quoted in Rutherford, 2009, p. 60), partially illustrates this point. During ABA’s formative years, it was observed that “some JABA reviewers will now reject articles [focusing on socially important phenomena] because the result does not appear to be of social significance to the clients” (Birnbrauer, 1979, p. 17), and “a common lament is that ... applied journals consider [laboratory models of socially important phenomena] as not applied enough and suggest a basic journal” (Hake, 1982, p. 23).

Because journals do not make public their peer review files, it is difficult to know how relevant such concerns may be to contemporary ABA journals, and one might hope that, in our enlightened present, journals no longer resist work that does not conform to the BWR framework.11 For want of systematic data that might bear on this hypothesis, we must rely on anecdote in the form of our own peer review experiences over the past two decades or so. Though of unknown generality, these experiences suggest that things have not changed much since ABA’s formative years. On multiple occasions, a reviewer has remarked that our work was interesting and socially relevant (topics included dermatological health, politics, and elite sport competition). The primary objection to these studies was that they deviated from the BWR framework along at least one dimension (e.g., by using descriptive rather than experimental methods, or by employing measurement that was not deemed Behavioral). The recurring refrain, in the verbatim words of some of our reviewers, has been:

not applied (Baer, Wolf, & Risley, 1968)

not applied... in the Baer, Wolf, & Risley sense

not reflecting [the journal’s] emphasis on the Baer et al. (1968) dimensions of ABA

not suitable for [the journal] because it does not meet the Baer et al. definition of ‘applied’12

Sometimes our feedback has referenced one or more of the seven dimensions without invoking BWR by name. At the risk of sounding like sore losers in the editorial game, we illustrate with the following comments from an action editor of one prominent journal, who wrote that the research “addresses an important question,” commended the authors “for exploring a relatively non-traditional research subject for applied behavior analysis,” and acknowledged that, “I take great interest in the work reported here.” The decision letter indicated that “the reviews are generally positive, the recommendations for revisions are doable, and the content of the manuscript might be of interest to readers.” This may sound like the preface to a slam-dunk acceptance, but instead the conclusion was that, “The manuscript does not meet the minimum requirements to be accepted for publication ... because the research relies exclusively on self-report measures [and because] the presentation and analysis of the data are in aggregate form and rely exclusively on the use of inferential statistics.” Thus, a conceptually informative manuscript of transparent social significance was not deemed sufficiently Behavioral or Analytical for inclusion in an Applied behavior analysis journal—although, we were assured, “these data will be of great interest, and very appropriate, for a different journal and audience.” This is not the only time that we have seen the BWR framework used to direct work on interesting topics away from behavior analysis journals.

If the translational movement in behavior analysis has demonstrated anything, it is that no single means exists to advance the understanding of socially important behaviors (see Critchfield, Doepke, & Campbell, 2015; Mace & Critchfield, 2010; see also Birnbrauer, 1979; Dietz, 1983; Fawcett, 1985, 1991; Hake, 1982; Moxley, 1989; Stokes, 1997; Wacker, 1996, 2000, 2003). Although ABA could not progress without research that reflects all seven BWR dimensions, it makes little sense to restrict the pursuit of social relevance, which Baer et al. so valued, to topics that lend themselves to study within the BWR framework.

This point is so crucial that we now bolster it by examining several further examples of research that could be judged as inadequate based on one or more BWR dimensions. These examples are intended to be illustrative rather than exhaustive, and the reader is invited to identify further examples from personal experience. As contrasted with “pure behavioral research” (Johnston & Pennypacker, 1986, p. 36) that incorporates a full complement of traditionally expected features, these examples can be considered “quasi-behavioral research” (p. 38) that intermingles some of those features with features that are associated with other research traditions. The challenge we present to the reader is not to determine whether any particular instance should count as “pure” ABA research, but instead to decide holistically whether ABA, as a general enterprise, would be more interesting, vibrant, intellectually engaged, and socially relevant with such instances included within versus excluded from its core literature.13

Experimental Evaluation of Effective Interventions

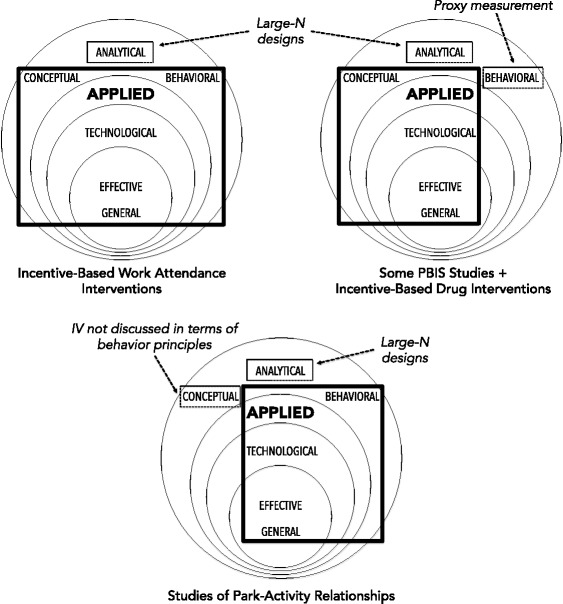

Some clinical experiments, despite being well grounded in the Conceptual framework of behavior principles and examining Effective, General, and Technological interventions, may raise questions concerning the extent to which they are Analytical and/or Behavioral. For instance, clinical experiments have shown Technological voucher-based incentive interventions (Fig. 2, top panel) to be Effective in improving workplace attendance by drug abusers with poor attendance records (e.g., Jones, Haug, Silverman, Stitzer, & Svikis, 2001). The voucher system is derived from the Conceptual framework of behavioral economics theory. Measurement of attendance is strictly Behavioral and there is evidence that attendance improvements are General. However, effectiveness often has been evaluated in large N designs that many behavior analysts would regard as insufficiently Analytical.

Fig. 2.

Examples of Effective interventions that can be questioned on at least one of Baer et al.’s (1968) seven dimensions of applied behavior analysis. Solid rectangles identify dimensions that are clearly reflected in the research. Dashed rectangles identify dimensions about which some behavior analysts might have concerns. See text for further explanation

The voucher system described above also reduces drug taking (Higgins, Budney, Bickel, Foerg, Donham, & Badger, 1994; Jones et al., 2001; Lussier, Heil, Mongeon, Badger, & Higgins, 2006), as demonstrated in putatively non-Analytical group design experiments (e.g., Lussier et al., 2006; Prendergast, Podus, Finney, Greenwell, & Roll, 2006). Daily monetary incentives are contingent on providing a drug-free urine sample so, according to the BWR framework, this research might not be considered Behavioral because its primary dependent measure is a marker of general functioning (the chemical composition of urine) that is a byproduct of behavior and not behavior per se (Fig. 2, middle panel). A similar concern could be raised about research on the effectiveness of Positive Behavioral Interventions and Supports (PBIS) in which school office referrals were measured rather than behaviors of individual students that lead to office referrals (e.g., Horner & Sugai, 2015; see middle panel of Fig. 2), and about research on dental compliance in which investigator-rated levels of plaque buildup were measured instead of the behaviors to which plaque formation presumably was related (Iwata & Becksfort, 1981; not shown in Fig. 2).

The potentially objectionable features of these examples reflect not poor scientific judgment but rather topic-specific strategic investigatory decisions. For example, proxy measures are used in lieu of direct behavioral observation when behaviors of interest (like drug-taking, actions that get students into trouble at school, and dental hygiene habits) are difficult to track and observe in the field on a moment-to-moment basis. Moreover, the target audience for research on voucher systems, PBIS, and dental health includes mainstream researchers, funding agencies, and policy makers for whom markers of general functioning, assessed at the large-group level, will be most persuasive as outcome measures.

Some intervention research is Behavioral without being especially Conceptual (Fig. 2, bottom panel). For example, a recent series of studies has examined the effects of creating or renovating public parks on the activity levels of community members. The interventions were defined well enough to support replication (Technological), and when evaluated through well-validated Behavioral observation systems (e.g., McKenzie, Cohen, Sehgal, Williamson, & Golinelli, 2006), they were found to be Effective and General across several settings (Cohen, Han, Derose, Williamson, Marsh, & McKenzie, 2013; Cohen, Han, Isacoff, Shulaker, Williamson, Marsh, McKenzie, Weir, & Bhatia, 2015; Cohen, Marsh, Williamson, Han, Derose, Golinelli, & McKenzie, 2014). Yet some behavior analysts might consider this work to be insufficiently Conceptual because the independent variable (access to parks) was not discussed in terms of behavior principles, and insufficiently Analytical because the results were examined through large N designs that pooled the behavior of many community members. Nevertheless, in light of an epidemic of obesity (Wang & Baydoun, 2007) and the near-absence of a behavior analysis literature on how public spaces can promote physical activity, the studies just described provide a start at unpacking an important societal problem.

Experimental Evaluation of Ineffective Interventions

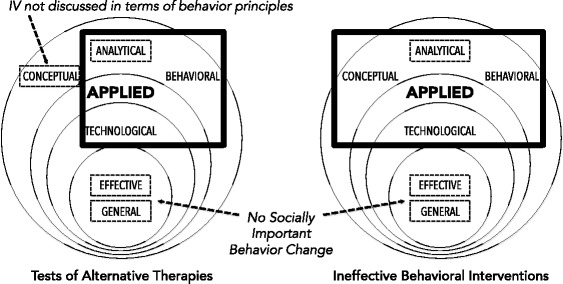

According to the BWR framework, an intervention experiment that does not produce socially important behavior change “is not an obvious example of applied behavior analysis” (BWR, p. 96), even if it is textbook perfect in other ways (Applied, Conceptual, and Technological). This is relevant to the present discussion because not all experiments aimed at socially important problems create socially important behavior change. For instance, the Analytical, Behavioral, and Technological research strategies of behavior analysis have been used to evaluate interventions with origins outside of the Conceptual system of behavior analysis (Fig. 3, top panel). Example topics of investigation include the effects of hyperbaric chamber exposure on task engagement and rates of problem behavior (Lerman, Sansbury, Hovanetz, Wolever, Garcia, O’Brien, & Adedipe, 2008), of wearing ambient prism lenses on motor skills (Chok, Reed, Kennedy, & Bird, 2010), and of facilitated communication (e.g., Montee, Miltenberger, & Wittrock, 1995) and “therapeutic” horseback riding (Jenkins & DiGennaro Reed, 2013) on autism-related behaviors. In none of these cases was the intervention found to be Effective, so there were no effects that might prove to be General.

Fig. 3.

Examples of clinical interventions that are not Effective. Solid rectangles identify dimensions from Baer et al. (1968) that are clearly reflected in the research. Dashed rectangles identify dimensions about which some behavior analysts might have concerns. See text for further explanation

These examples make clear that, although the BWR dimensions place a premium on creating behavior change, sometimes there is value in knowing what does not work (e.g., Normand, 2008). A topic is “socially important” if members of society think it is. Non-behavioral therapies can be popular with consumers (Green, 1996; Shute, 2010) and in this sense, they qualify as indisputably “important.” From the Conceptual perspective of a behavior analyst, alternative therapies may not seem promising, but they are worthy of experimental attention in part because of their capacity to waste consumers’ limited time and money (e.g., Lilienfeld, 2002; Normand, 2008) or even to make existing problems worse (e.g., Mercer, Sarner, & Rosa, 2003). Thus, Normand (2008) has asserted that, “Detection of and protection from pseudoscientific practices is an important service for those in need who have limited ability to detect such foolery themselves” (p. 48).

But that is not all. Even interventions that are rooted in behavior principles do not necessarily create socially meaningful behavior change in all settings and for all kinds of clients. Baer et al. (1987) distinguished between “theoretical failure” (which results from basing interventions on incorrect principles, as per many alternative therapies) and “technological failure” (which results from tailoring interventions poorly to the demands of a given situation), in the latter case acknowledging that in the pursuit of Effective interventions, there will be instructive failures:

Quite likely, technological failure is an expected and indeed important event in the progress of any applied field, even those whose underlying theory is thoroughly valid. (p. 342, italics in original)

The publication of failures to achieve socially important behavior change (Fig. 3, bottom panel) thus is consistent with the BWR emphasis on the Analytical dimension, and with the general notion of science as self-correcting. It is, however, inconsistent with a strict, conjoint-set reading of the BWR dimensions, which allows that only Effective interventions can be of interest. That interpretation is especially illogical in the case of experiments that show not just that an intervention failed but why:

...Failures teach... Surely our journals should begin to publish not only [reports of] our field’s successes but also those of its failures done well enough to let us see the possibility of better designs. (Baer et al., 1987, p. 325; italics added)

Recent examples of this approach have illustrated how promising approaches to intervention were subverted by unexpected punishment contrast effects (Roane, Fisher, & McDonough, 2003), by discriminative properties of reinforcement (Tiger & Hanley, 2005), and by events outside of the intervention setting (Critchfield & Fienup, 2013). Such empirical evaluations of “failure” are part of what separates science from pseudoscience (Normand, 2008) and thus are an essential component of any program of research.

Translational Studies Without a Clinical Intervention

Possibly more controversial than the examples described so far are studies that are designed to shed light on socially important problems without attempting to create socially valued behavior change.

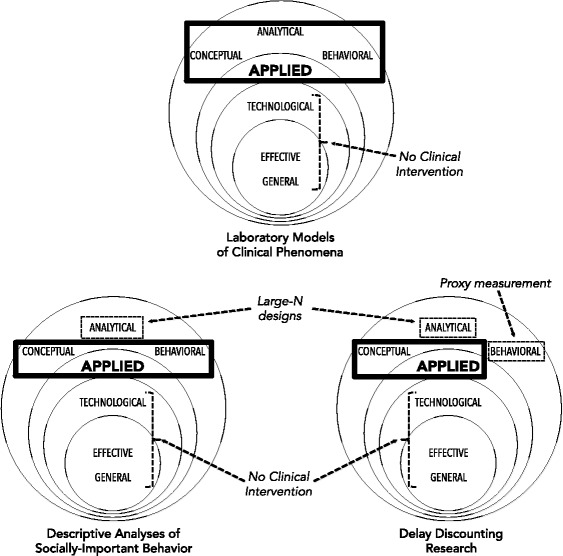

Laboratory Models of Clinical Phenomena

One such category of study involves what Baer et al. derisively labeled “laboratory purported-analogues” (Todd Risley, quoted in Rutherford, 2009, p. 60), that is, experiments that, for convenience of observation and experimental control, model socially important phenomena outside of clinical settings (Fig. 4, top panel). For example, several laboratory experiments have explored the role of derived stimulus relations in such problems as false memory (e.g., Guinther & Dougher, 2010, 2014), consumer product preferences (Barnes-Holmes, Keane, Barnes-Holmes, & Smeets, 2000), clinically relevant fear and avoidance (e.g., Augustson & Dougher, 1997; Dymond, Schlund, Roche, De Houwer, & Freegard, 2012), and social categorization and stereotyping (Lillis & Hayes, 2007; Roche & Barnes, 1996). Similarly, laboratory simulations have been developed to study gambling behavior (Dixon & Schreiber, 2002; Maclin, Dixon, & Hayes, 1999), demand by pregnant women for nicotine and other drugs (e.g., Higgins, Reed, Redner, Skelly, Zvorski, & Kurti, 2017), and behaviors that may contribute to child accidents (e.g., Cataldo, Finney, Richman, Riley, Hook, Brophy, & Nau, 1992).

Fig. 4.

Examples of research on socially important topics that lack a clinical intervention. Solid rectangles identify dimensions from Baer et al. (1968) that are clearly reflected in the research. Dashed rectangles identify dimensions about which some behavior analysts might have concerns. See text for further explanation

Laboratory models do not examine only human behavior (Davey, 1983). Perhaps the most familiar example of a laboratory model comes from behavioral pharmacology, where animal drug self-administration experiments have proven exceptionally useful in predicting human drug use and abuse in natural environments (e.g., Meisch & Carroll, 1987). But there are many other examples. For instance, some of the dynamics of say-do correspondence have been examined in pigeons (da Silva & Lattal, 2010; Lattal & Doepke, 2001), and clinically important predictions of behavioral momentum theory have been tested first in rats (Mace et al., 2010, Experiment 2).

All of these cases fall outside of the BWR framework because the setting is artificial, the participants may be selected at least partly for convenience, and the behavior under study may bear only partial similarity to that seen in everyday circumstances. Nevertheless, the relevant experiments may illuminate mechanisms that matter in everyday settings and clinical interventions, including in ways that field studies and theoretically driven basic research have not previously illuminated (e.g., Mace, McComas, Mauro, Progar, Taylor, Ervin, & Zangrillo, 2010, Experiment 2). Such studies therefore share many features with clinical functional analyses conducted under analog circumstances, which, despite not reproducing the exact behavior-environment relations that exist in everyday circumstances, provide valuable insights about behavior control that generalize to those circumstances (Hanley, Iwata, & McCord, 2003).

Descriptive Analyses of Naturally Occurring Behaviors

A second category of non-intervention research involves the study of problems that are widely recognized as socially important but for which field interventions (and experiments to evaluate them) are beyond the practical reach of the investigator, leaving descriptive studies as the only logical approach (Fig. 4, middle panel). Though anticipating this problem (“The analysis of socially important problems becomes experimental only with difficulty;” BWR, p. 92), Baer et al. apparently concluded that no investigation at all is better than one employing descriptive methods (“a non-experimental analysis is a contradiction in terms;” p. 92).

Subsequent investigators have not always agreed, in a number of instances preferring a non-experimental analysis of important problems to no analysis at all. Previously, we mentioned analyses of terrorist acts (Nevin, 2003) and of consumer demand for indoor tanning (Reed et al., 2013). Other investigations have examined whether matching theory can reveal “deviance” in youth conversation patterns (McDowell & Caron, 2010a, 2010b); whether reinforcement principles shed light on the legislative behavior of members of the US Congress (Critchfield, Haley, Sabo, Colbert, & Macropoulis, 2003; Critchfield, Reed, & Jarmolowicz, 2015; Weisberg & Waldrop, 1972); whether public policy changes affected use of child-adapted automobile seating (Seekins, Fawcett, Cohen, Elder, Jason, Schnelle, & Winett, 1988); and whether behavior during elite sport competition is explained by various aspects of behavior theory (e.g., Critchfield & Stilling, 2015; Mace, Lalli, Shea, & Nevin, 1992; Poling, Weeden, Redner, & Foster, 2011; Reed, Critchfield, & Martens, 2006; Seniuk, Williams, Reed, & Wright, 2015; Vollmer & Bourret, 2000).

Studies like these examine face-valid topics of great social interest and—unlike the narrative interpretation strategy employed often by Skinner (e.g., 1953)—test behavior analytic interpretations against empirical evidence. Unlike most experiments, they can operate at massive scales of analysis (e.g., for an entire branch of government or for entire nations; Critchfield, Reed, & Jarmolowicz, 2015; Reed et al., 2013). The standard objection to descriptive analyses is that they reveal only correlations and therefore provide, at best, unsubstantiated clues about possible causal relations. Until behavior analysts conduct experimental analyses of the relevant problems, however, the reader must decide whether such clues are better than no empirical clues whatsoever.

Using Verbal Behavior as Proxy for Socially Important Behavior

In the quest for socially valuable behavior change, Baer et al. saw nothing quite so threatening as using verbal responses as a measurement proxy for other behavior:

A subject’s verbal description of his own non-verbal behavior usually would not be accepted as a measure of his actual behavior unless it were independently substantiated. Here there is little applied value in the demonstration that an impotent man can be made to say that he is no longer impotent. The relevant question is not what he can say, but what he can do. Application has not been achieved until this question has been answered satisfactorily. (BWR, p. 93; see Baer et al., 1987, pp. 316-317, for elaboration)

Yet many socially important behaviors, such as those implicated in child accidents, drug use, and sexual relations, tend to occur outside of a researcher’s scope of observation, creating a potentially uncomfortable choice between ignoring these behaviors and studying them with alternatives to direct observation.

The use of verbal reports for this purpose is illustrated in a series of studies using structured interviews to collect information about naturally occurring alcohol consumption and environmental events to which it might be related. The goal was to test predictions of behavioral economic theory for such clinically significant behaviors as spontaneous help-seeking and post-treatment relapse. A careful process of validation showed that while self-reports did not necessarily indicate exact amounts of important events like drinking, they correlated well with those amounts (e.g., Gladsjo, Tucker, Hawkins, & Vuchinich, 1992). Based on self-reports, help-seeking and relapse were found to covary with rates of alternative (i.e., non-alcohol-related) sources of reinforcement, thereby linking alcohol use in the everyday environment to the findings of experiments on incentive-based interventions (e.g., Tucker, Vuchinich, & Pukish, 1995; Tucker, Vuchinich, & Rippens, 2002).

This is not intervention research (Effective, General), but for present purposes the pertinent issue is that, within the BWR framework, it is insufficiently Behavioral due to reliance on self-report measurement. As Baer et al. implied, the rub with self-reports is that they can deviate from the reported behavior, but often overlooked is that this problem is not unique to self-report measurement. The “reports” of external observers also can deviate substantially from what actually occurs with target behavior and, as BWR emphasized, are useful in research only under conditions that establish good correspondence. By the same token, self-reports are inherently neither informative nor uninformative. They are good measurement under some conditions and not under others, and the trick, as with using external observers, is to identify and instantiate the useful conditions (Critchfield & Epting, 1998; Critchfield, Tucker, & Vuchinich, 1998). This is a point that Baer (1981) subsequently came to appreciate when considering how to evaluate consumer satisfaction with behavioral interventions: “20 years [of experience in the field] had shown a use for clients’ statements that things were or were not better now…. Suddenly we were in the ironic position of needing truthful talk about behavior from the very people we had earlier not trusted to talk truthfully about behavior” (pp. 257–258).

A different point of contention arises with research procedures that ask participants to respond verbally to hypothetical scenarios. For example, delay discounting tasks (Fig. 4, bottom panel) present a series of choices between unequal outcomes (often, but not always, amounts of money), each pitting a smaller amount available sooner against a larger amount available later. These tasks usually are administered in large N designs, which by BWR standards are insufficiently Analytical, and typically without a clinical intervention, so they cannot be Technological, Effective, or General. From these choices can be derived an “index of impulsivity” that varies across individuals and across selected external contexts, and that also correlates with engagement in a wide variety of socially important impulsive behaviors (for introductions, see Critchfield & Kollins, 2001; Odum, 2011).
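To make concrete how a single index might be derived from such choices, here is a minimal Python sketch under stated assumptions. It assumes that a participant’s choices have already been reduced to indifference points (the immediate amount judged equivalent to the delayed amount at each delay), and it scores them in two ways that are common in this literature but are not specified in the text above: a hyperbolic model, V = A / (1 + kD), in which a larger fitted k indicates steeper discounting, and a model-free area under the curve (AUC), in which a smaller value indicates steeper discounting. The data, the function names, and the grid-search fitting procedure are hypothetical illustrations only.

```python
import math


def hyperbolic_value(amount, delay, k):
    """Discounted value under the assumed hyperbolic model V = A / (1 + k*D)."""
    return amount / (1.0 + k * delay)


def fit_k(delays, indifference_points, amount, k_min=1e-4, k_max=1.0, steps=2000):
    """Hypothetical helper: choose the k on a log-spaced grid that minimizes the
    sum of squared deviations between observed and predicted indifference points."""
    best_k, best_sse = k_min, math.inf
    log_lo, log_hi = math.log(k_min), math.log(k_max)
    for i in range(steps + 1):
        k = math.exp(log_lo + (log_hi - log_lo) * i / steps)
        sse = sum((v - hyperbolic_value(amount, d, k)) ** 2
                  for d, v in zip(delays, indifference_points))
        if sse < best_sse:
            best_k, best_sse = k, sse
    return best_k


def area_under_curve(delays, indifference_points, amount):
    """Hypothetical helper: trapezoidal AUC with delays scaled by the longest delay
    and values scaled by the delayed amount. Delays must be in ascending order.
    The point (0, 1) is prepended (no discounting at zero delay), so values near 0
    indicate steep discounting and 1 indicates no discounting."""
    d_max = max(delays)
    xs = [0.0] + [d / d_max for d in delays]
    ys = [1.0] + [v / amount for v in indifference_points]
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]))


# Hypothetical participant: noise-free indifference points generated with k = 0.02
# for a delayed amount of 100, with delays expressed in days.
AMOUNT = 100.0
DELAYS = [1, 7, 30, 90, 180, 365]
points = [hyperbolic_value(AMOUNT, d, 0.02) for d in DELAYS]

k_hat = fit_k(DELAYS, points, AMOUNT)
auc = area_under_curve(DELAYS, points, AMOUNT)
print(f"fitted k = {k_hat:.4f}, AUC = {auc:.3f}")
```

The grid search keeps the sketch free of dependencies. An actual analysis would more likely use a nonlinear least-squares routine and would need to handle noisy or nonsystematic response patterns, which this sketch does not attempt.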

As Odum (2011) has pointed out, behavior analysts object to delay discounting research on at least two grounds. First, consistent with Baer et al.’s mistrust of verbal data, there is the uncertain ontological status of “hypothetical verbal behavior,” which is not the same thing as physical behavioral responses. Second, the concept of “impulsivity” might suggest a personality construct, and historically, behavior analysts have not looked fondly upon constructs that equate behavior patterns with an unchanging trait. In light of these “shortcomings,” it might seem curious that both Journal of the Experimental Analysis of Behavior and The Psychological Record have recently devoted special issues to delay discounting (Bickel, MacKillop, Madden, Odum, & Yi, 2015; Green, Myerson, & Critchfield, 2011). According to the logic often employed in conjunction with the BWR framework, these projects undermine those journals’ status as Behavioral.

Yet, as employed by most behavior analysts, “impulsivity” is merely a way of summarizing behavior patterns in one domain (e.g., delay discounting tasks) that predict patterns in others. As Odum notes:

Whatever delay discounting may be, its study has provided the field of behavior analysis and other areas measures with robust generality and predictive validity for a variety of significant human problems (p. 427)....Due to the scope and impact of research in the area, the study of delay discounting can be considered as one of the successes of the field of behavior analysis. Skinner (1938) maintained that the appropriate level of analysis was one that produced orderly and repeatable results.... Delay discounting is related to a host of maladaptive behaviors, including drug abuse, gambling, obesity... poor college performance, personal safety, and self care. (pp. 435–436)

Thus, standard critiques of hypothetical delay discounting tasks as not Behavioral confuse construct validity with predictive validity. At issue is not whether verbal responses are “true” but rather whether they provide insights into other objectively verifiable and socially important behaviors and their eventual clinical modification. They often do (Ayres, 2010; Bickel, Moody, & Higgins, 2016; Bickel & Vuchinich, 2000; Critchfield & Kollins, 2001; Odum, 2011).

Section Conclusion: ABA Research as a Fuzzy Concept

A great deal of interesting research on socially important problems is being conducted without fully representing the dimensions of ABA that BWR specified. Even though behavior analysts conducted much of the research just described, very different procedures were employed across studies and research areas, and it is reasonable to ask why. We suggest that as behavior analysis extends to new domains of social importance, a few key individuals modify normative rules of engagement based on trial-and-error experience with new problems. As a result of this inductive process, it is a virtual certainty that, for good and unavoidable reasons, emergent rules of engagement for studying Social Problem X will differ from those for studying Social Problem Y. For example, studies conducted in residential facilities may allow for long-term observation of individual behavior within the context of elaborate, steady-state within-subject experimental designs (e.g., Piazza, Hanley, & Fisher, 1996). The same luxuries may not be available in everyday settings such as public schools and business organizations, or for behaviors that occur too infrequently and unpredictably to be analyzed in terms of individual-client response rates (e.g., suicidal actions; Richa, Fahed, Khoury, & Mishara, 2014). In short, investigators tackling problems of societal importance that have not been the focus of previous systematic investigation within applied behavior analysis have little recourse but to embrace Baer et al.’s frequently overlooked assertion that, “The label applied is determined not by the procedures used but by the interest society shows in the problem being studied” (BWR, p. 92).

As a means of understanding ambiguous cases like those described here, it may be productive to think about ABA research as a fuzzy concept rather than a conjoint set of dimensions. A concept exists “when a group of [stimuli] gets the same response, when they form a class the members of which are reacted to similarly” (Keller & Schoenfeld, 1950, p. 154). For instance, there are many different kinds of chairs, but we sit in all of them and tact them with the same verbal label. In a fuzzy concept, exemplars of a class have multiple defining stimulus features, but not all of these features must be present in each exemplar (e.g., Herrnstein, 1990; Rosch & Mervis, 1975). For example, a chair might be said to consist of (a) a surface for supporting the buttocks, (b) three or four legs on which that surface is perched, (c) a vertical surface for supporting the back, and (d) additional horizontal surfaces for supporting the arms. Although a “stadium seat” lacks leg and arm supports, and a bar stool lacks back and arm supports, both are generally recognized as chairs. A beanbag chair, despite containing “pure” versions of none of these features, also is recognized as a chair because it functions adequately for sitting.

A concept implies “generalization within classes and discrimination between classes” (Keller & Schoenfeld, 1950, p. 155, italics in original), so in a fuzzy concept, there may be generalization along multiple stimulus dimensions. While ABA research must reflect social relevance in some transparent fashion, Figs. 2, 3, and 4 make clear that ABA’s other defining features will be present to varying degrees in different programs of investigation. A common finding in the study of concept learning is that the more class-defining stimulus features that are present in an exemplar, the more likely it is to be responded to as part of the class (Rosch & Mervis, 1975). It is therefore understandable that research incorporating all of the BWR dimensions is most readily recognized as ABA while other research is sometimes viewed with ambivalence. But this ambivalence is a property of observers, not of the relevant research. It makes little sense to impose categorical distinctions (“applied” versus “not applied”) on studies that, despite varying in degree of similarity to ABA research as BWR described it, in all cases aim to advance the understanding of socially important problems.

During the time of “The Crisis,” Iwata (1991) argued that the “too technological” research that troubled other observers was a valid component of a discipline that by its very nature should encompass a wide variety of research topics and styles. What he wrote then is equally applicable to contemporary research that does not necessarily meet every requirement of the BWR framework:

The most serious problem evident in applied behavior analysis today is not the type of research being conducted; it is that not enough good research -- of all types -- is being conducted.... To reduce the frequency of one type of research by denigrating it or by punishing those who do it well seems foolish. (p. 424).

This is the case because opportunities exist

[t]o conduct many varieties of empirical investigation that should qualify as applied behavior analysis because they speak to the functional properties of socially important behavior, even if they deviate from the field’s procedural norms.... These are, to be sure, serious compromises in the modus operandi of applied behavior analysis, and they should not be embraced lightly or routinely. But neither should topics of considerable interest in the everyday world be excluded from analysis. (Critchfield et al., 2003, pp. 482-482)

Limitations of Boundaries

Whatever else may be said of the BWR framework, its strict interpretation creates tidy boundaries between ABA and other pursuits (as was Baer et al.’s intent). We have attempted to raise the question of what is achieved, in the post-1968 world of contemporary ABA, by drawing such distinctions. Once there might have existed uncertainty about the goals to emphasize in extending behavior analysis to the empirical study of everyday human behavior, and Baer et al.’s “Declaration of Independence” was an attempt to ensure that the nascent field of ABA did not devolve into something “too basic” for face-valid attention to important social problems or too casual in approach to achieve behavior change when necessary. A half-century later, with ABA’s traditions well established, neither concern seems terribly compelling.

One place where firm distinctions matter is in the development of a profession (according to Google.com, “a paid occupation, especially one that involves prolonged training and a formal qualification”). Members of a profession typically control who may join as a means of protecting both access to a marketplace of consumers and the quality of services delivered; credentialing is one mechanism for doing this (Critchfield, 2011b). In the case of ABA-as-profession, therefore, boundaries exclude unqualified practitioners and help qualified practitioners find work. The BWR dimensions, as a conjoint set, may be as good a means as any for determining who is qualified to practice. But with one exception, to be noted shortly, the present discussion focuses on research, not practice.

Research is a process of inquiry, not a guild, so what of firm distinctions between one research tradition and another? Because our advocacy for “quasi-behavioral” studies blurs the distinction between “pure” ABA research and other research traditions, the reader will be justified in pointing out that not all methods are created equal. Classical introspection, for instance, had fatal flaws that impeded scholarly progress, and it was justifiably relegated to the scrap heap of investigatory tools (e.g., Boring, 1953). Are “quasi-behavioral” methods, like those of the studies described in the previous section, similarly bankrupt? One perspective, which echoes a strict embrace of the BWR conjoint framework, is that “The standards for pure behavioral research are as uncompromising as the behavioral nature that dictates them… Their violation may not doom an experiment to utter worthlessness, but it must suffer in direct proportion to the trespass” (Johnston & Pennypacker, 1986, p. 32). In response to this proposition, we merely repeat a theme from the last section. Do “quasi-behavioral” studies like those we have described provide useful insights into socially relevant behavior? And would including those studies in ABA’s core literature help the field become more interesting, vibrant, intellectually engaged, and socially relevant? The answers to these questions, rather than conceptual arguments, should dictate our collective appraisal of “quasi-behavioral” methods.

A final way in which distinctions might matter is in delineating the mission of academic journals. In penning the BWR article for this purpose, Baer et al. did not just promote attention to social relevance; given the times in which they wrote, they also promoted a particular means of achieving social relevance. If our hypothesis is correct—if topics of societal importance are being marginalized in ABA because they have not been examined within the BWR framework—then an interesting irony emerges. The method that Baer et al. recommended for pursuing social relevance now sometimes helps to limit the number of domains in which ABA, as depicted in its core journals, is socially relevant.

For our money, applied journals, like the applied studies they feature, exist to promote insights into problems of societal importance, not to promote a specific method. Only academicians organize the world according to methods used to study it, and when the goal is to understand a particular problem, firm methodological and disciplinary boundaries rarely are productive. Many important scientific advances trace to an investigator who gained insight by drawing on disparate influences (Root-Bernstein, 1988). Pavlov learned how to measure salivation (and ultimately to describe classical conditioning) by reading case reports of an American physician from the French and Indian War era (Harre, 2009). Field’s (e.g., 1993) development of therapeutic massage for preterm infants arose partly from an accidental encounter with research about pup-rearing practices in rats. Several prominent behavior analysts have similar stories of unexpected inspiration (Holdsambeck & Pennypacker, 2015, 2016). We suggest that an essential purpose of problem-focused journals is to pull together disparate influences that might yield the next important insight. This is not to propose that, in a perfect world of journal operations, anything goes, only that the bar for what pertains to a behavioral analysis should not be set so high as to discourage clearly relevant work that does not fit a preconceived research mold.

Because insight is not the sole province of scientists, we conclude the present section as it began, by focusing on practice. Baer et al. acknowledged, and observers during “The Crisis” emphasized, that practitioners should understand both intervention techniques (“a collection of tricks”; BWR, p. 96) and the behavior principles that guide their design. Because most of today’s field workers are trained at the master’s level or below (Shook & Favell, 2008), observers have worried that practitioner training may include too little grounding in Conceptual foundations. The result could be a “Second Crisis” of technological excess, involving uncertain quality of implementation and dissemination by practitioners who may be ill-equipped to link practice to basic principles that “have the effect of making a body of technology into a discipline rather than a collection of tricks” (BWR, p. 96). The stakes are considerable. Practitioners who do not understand the field’s Conceptual foundations may not be “able to place their particular problem in a more general context and thereby deal with it ... successfully” (Sidman, 2011, p. 973), and any resulting ineffectiveness may undermine the public demand that now exists for ABA services (Critchfield, Doepke, & Campbell, 2015; Dixon et al., 2015; Sidman, 2011).