Abstract

Impulsivity has traditionally been thought to involve various behavioral traits that can be measured using different laboratory protocols. Whereas some authors regard different measures of impulsivity as reflecting fundamentally distinct and unrelated behavioral tendencies (fragmentation approach), others regard those different indexes as analogue forms of the same behavioral tendency, only superficially different (unification approach). Unifying accounts range from mere intuitions to more sophisticated theoretical systems. Some of the more complete attempts at unifying are intriguing but have validity weaknesses. We propose a new unifying attempt based on theoretical points posed by other authors and supplemented by theory and research on associative learning. We then apply these assumptions to characterize the paradigms used to study impulsivity in laboratory settings and evaluate their scope as an attempt at unification. We argue that our approach possesses a good balance of parsimony and empirical and theoretical grounding, as well as a more encompassing scope, and is more suitable for experimental testing than previous theoretical frameworks. In addition, the proposed approach is capable of generating a new definition of impulsivity and outlines a hypothesis of how self-control can be developed. Finally, we examine the fragmentation approach from a different perspective, emphasizing the importance of finding similarities among seemingly different phenomena.

Keywords: Conditioned reinforcement, Delay discounting, Impulsiveness, Negative contingency learning, Pavlovian–operant interactions, Response inhibition

Many examples of behavior may be deemed impulsive. For instance, a student who knows that she must be attentive in class in order to achieve satisfactory grades and is trying to pay attention to what the teacher says may be distracted by the flight of a strange insect that passes by, causing her to lose the thread of the discourse. As a result, she does not fully understand the subject. Another familiar example involves a man who has been diagnosed with diabetes and has been instructed to reduce his glucose intake. Nonetheless, when walking by a shelf full of cream-filled donuts in the market, he cannot resist the temptation to buy and eat a couple. Another situation that is surely related to impulsivity is a young man with drug abuse problems: Although he is likely aware of the potentially undesirable consequences of taking drugs (e.g., health problems, social rejection, loss of money, and being arrested), he continues to do it.

The concept of impulsivity has long attracted attention because, as these examples show, it is related to socially significant human behaviors such as delinquency, accidents, substance abuse, eating disorders, poverty, and educational and occupational difficulties (e.g., Barkley, 1997; Mannuzza, Klein, Bessler, Malloy, & Heynes, 1997; McReynolds, Green, & Fisher, 1983; Schubiner et al., 2000). The concepts of impulsivity and its opposite, self-control, have often been considered the two ends of a behavioral continuum (Logue, 1988; Rachlin, 1995) and have long been discussed and analyzed by authors from various empirical and theoretical perspectives (e.g., psychodynamics, behavioral psychology, psychiatry, psychopharmacology, personality psychology, etc.; see Ainslie, 1975; Arce & Santistevan, 2006; Odum, 2011b).

Recently, there seems to be renewed interest in this topic (Madden & Bickel, 2010; Odum, 2011a), but despite the widespread use of this concept, there is no consensus on a precise definition. To make matters worse, it has been linked to several behavioral measures that could be obtained under different procedures. For example, in the behavioral literature, impulsivity has been equated with preference for relatively immediate rewards over delayed (but more valuable) ones (e.g., Madden & Bickel, 2010). However, some authors have argued that various other behavioral traits (i.e., interindividual differences that are relatively constant across time; Odum & Baumann, 2010) could be representative of this concept as well, such as inattention, poor response inhibition, novelty seeking, and so on (e.g., Bari & Robbins, 2013; Richards, Gancarz, & Hawk, 2011). Moreover, there are different protocols to evaluate each one of these behavioral traits associated with impulsivity.

Although the word impulsivity is used to label different types of measures, it is not yet clear whether (a) these indexes are fundamentally distinct and therefore represent behavioral tendencies unrelated to a more general phenomenon or (b) they evaluate analogous behavioral tendencies that appear to be only superficially different (Miller, Joseph, & Tudway, 2004; Richards et al., 2011). We will refer to this matter as the fragmentation versus unification issue. In this article, we review some of the evidence and arguments used by both sides in this discussion and then put forward our own account based on basic conditioning principles. We believe that clarifying this issue will nourish our understanding of impulsive behavior and will consequently help us to improve its management. For example, if impulsivity is unitary, then it may suffice to tackle one single index of this construct to make a diagnosis or to design an intervention. Conversely, if impulsivity is fragmented, every index would have to be tackled in order to have a valid diagnosis or an effective intervention. The main goal of this work is to provide insights that could open up a dialog among authors from different research traditions who study what we conceive as closely related phenomena. We will first review some of the procedures commonly used to study impulsivity in laboratory settings.

Laboratory Measures of Impulsivity/Self-Control

Several laboratory protocols aim to measure specific, operationalized, behavioral traits associated with impulsivity/self-control. One of the advantages of these standardized procedures over observations of behavior in natural settings and self-report measures is that one can easily compare analogue behaviors across species (Richards et al., 2011). In addition, studying nonhuman animals’ behavior in the laboratory allows the researcher to considerably reduce extraneous variables, such as learning history, and to make manipulations that would be implausible with humans (e.g., Kerlinger & Lee, 2000). In this section, we will review two major families of measures of impulsivity/self-control. Our discussion of laboratory measures of impulsivity will be limited to those suitable to be used across a wide range of taxa (e.g., human and nonhuman primates, rodents, birds, etc.). Therefore, we have excluded some prominent measures of impulsivity, such as the Barratt Impulsiveness Scale (Barratt, 1959) and the Balloon Analogue Risk Task (Lejuez et al., 2002), that are designed for exclusive application in humans.

Intertemporal Choice Procedures

Intertemporal choice procedures are designed to measure the tendency that individuals have to discount the value of delayed consequences. In these procedures, subjects are typically exposed to a trade-off between a small immediate reward (smaller sooner [SS]) and a larger delayed one (larger later [LL]). These alternatives are usually made available simultaneously and are contingent on topographically similar, but mutually exclusive, responses. There is always an intertrial interval between choices in order to prevent successive choices of the SS alternative that would allow individuals to obtain rewards more frequently by choosing that alternative (Richards et al., 2011); thus, the function of the intertrial interval is to equalize reward rates. Similar choice procedures are used to assess risk proneness—another putative component of impulsivity. In these procedures, the trade-off consists of certain small rewards versus a probabilistic larger reward that represents less gain in the long run. Although both types of choice procedures are typically grouped together, a large body of research suggests that they reflect quite different processes (see Green & Myerson, 2010). For the sake of brevity, we will limit our discussion to intertemporal choice procedures. Two types of intertemporal choice procedures are described in the following sections.
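The role of the intertrial interval described above can be illustrated with a short sketch. It assumes one common arrangement, in which the interval is adjusted so that every trial lasts the same total time regardless of the alternative chosen. The function names, the 60-s trial length, and the 20-s delay are hypothetical values chosen for illustration and are not taken from any of the cited studies.

```python
def compensating_iti(trial_length_s, delay_s):
    """Return the intertrial interval that keeps total trial time constant.

    trial_length_s: fixed total trial duration (hypothetical value).
    delay_s: delay to reward on the chosen alternative.
    """
    if delay_s > trial_length_s:
        raise ValueError("delay cannot exceed the fixed trial length")
    return trial_length_s - delay_s


def trials_per_hour(delay_s, iti_s):
    """Number of choice opportunities per hour for a given delay and ITI."""
    return 3600 / (delay_s + iti_s)


TRIAL_S = 60    # hypothetical fixed trial length, in seconds
SS_DELAY = 0    # smaller sooner: immediate reward
LL_DELAY = 20   # larger later: reward after 20 s

# With a compensating ITI, both alternatives yield the same number of trials.
ss_iti = compensating_iti(TRIAL_S, SS_DELAY)
ll_iti = compensating_iti(TRIAL_S, LL_DELAY)
ss_rate_equalized = trials_per_hour(SS_DELAY, ss_iti)
ll_rate_equalized = trials_per_hour(LL_DELAY, ll_iti)

# With the same 40-s ITI after both alternatives, exclusive SS choice
# would produce more reward deliveries per hour than exclusive LL choice.
ss_rate_fixed_iti = trials_per_hour(SS_DELAY, 40)
ll_rate_fixed_iti = trials_per_hour(LL_DELAY, 40)
```

Under these assumed values, the compensating interval removes the advantage in reward frequency that the SS alternative would otherwise carry, so that preference can be attributed to delay and amount rather than to the number of trials completed.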

Simple Intertemporal Choice Procedure

This type of procedure is typically used in research whose main objective is to evaluate changes in preference due to a specific type of treatment (e.g., pharmacological, surgical, motivational, etc.) or to compare the preference of two independent intact samples. This is a relatively brief and simple procedure that entails the simultaneous presentation of both alternatives (SS and LL) and the measurement of the proportion of choices or responses (for discrete or free operant trials, respectively). Individuals are considered more impulsive or more self-controlled by comparing their choices to those observed in a previous stage of the experiment or to those of another individual or experimental group (or even another species). A subject’s behavior is usually considered more self-controlled when the proportion of LL choices is greater than 0.5 (the indifference point). However, even if the proportion of LL choices is less than 0.5, behavior may be considered more self-controlled. Interpreting this measure requires a reference point (Killeen, 2015). For example, if an individual chooses the LL alternative in a proportion of 0.3, he or she would be considered more self-controlled than someone who chooses LL in a proportion of 0.12. Thus, the proportion by itself is insufficient. The opposite trend—a smaller proportion of LL choices—would be considered a more impulsive pattern of responding. For illustration, Kirk and Logue (1997) compared the preferences in an intertemporal choice situation with food as a reward for women in different deprivation conditions. Participants’ preferences were tested in two conditions: (a) food and water deprivation and (b) food preload; all of the participants were tested in both conditions. These authors found that the proportion of LL choices was greater when the participants received the food preload treatment. 
Another study that used this type of method was conducted by Orduña (2015), who compared the preferences of two rat strains in an intertemporal choice procedure: Wistar rats and spontaneously hypertensive rats (SHRs; an animal model of impulsive behavior). In this study, the SS and LL rewards were the terminal links in a concurrent-chains schedule of reinforcement; that is, subjects had to satisfy a response requirement before they could have access to either the immediate or the delayed alternative. Results showed that Wistar rats allocated a greater proportion of their responses to the LL alternative than the SHRs did, which supported the use of the latter strain as an appropriate model of impulsivity in nonhuman animals.

Adjusting Intertemporal Choice Procedure

This type of procedure was proposed by Mazur (1987) as an adaptation of psychophysical escalations (Stevens, 1951) in which the value of a parameter (delay or amount of reward) is changed within a session in order to determine indifference points between two alternatives when one remains fixed with respect to delay and reward magnitude. As a result, the magnitudes of the delay and amount that make the LL alternative equivalent to the SS alternative—or vice versa—can be determined. Plotting several indifference points against the delay to the LL alternative produces a decreasing function, usually hyperbolic in form. From this mathematical function, a temporal discount index (i.e., the k parameter) can be inferred, whose value is presumed to be directly proportional to the degree of impulsivity (and therefore inversely proportional to the degree of self-control). This index has proven to be quite useful in predicting maladaptive behaviors in humans, such as substance abuse (Madden, Petry, Badger, & Bickel, 1997; Vuchinich & Tucker, 1983) and gambling (Dixon, Jacobs, & Sanders, 2006).
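As a rough illustration of how a discount index can be obtained from indifference points, the sketch below assumes the hyperbolic form commonly attributed to Mazur (1987), V = A / (1 + kD), where V is the indifference point, A is the amount of the delayed reward, and D is its delay. The equation is not spelled out in the text above, and the estimator used here (a least-squares line through the origin on the transformed values A/V - 1) is a simplification chosen to keep the example self-contained; published analyses typically rely on nonlinear regression. All function names and data are hypothetical.

```python
def hyperbolic_value(amount, delay, k):
    """Discounted value of a delayed reward under V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def estimate_k(amount, delays, indifference_points):
    """Estimate k from indifference points.

    Rearranging V = A / (1 + k * D) gives A / V - 1 = k * D, so k is the
    slope of a least-squares line through the origin relating (A / V - 1)
    to D. This is a simplified estimator used only for illustration.
    """
    numerator = sum(d * (amount / v - 1.0)
                    for d, v in zip(delays, indifference_points))
    denominator = sum(d * d for d in delays)
    return numerator / denominator


# Hypothetical indifference points generated from two known discount rates.
AMOUNT = 100
delays = [1, 5, 10, 20, 40]
steep_points = [hyperbolic_value(AMOUNT, d, 0.20) for d in delays]
shallow_points = [hyperbolic_value(AMOUNT, d, 0.02) for d in delays]

k_steep = estimate_k(AMOUNT, delays, steep_points)      # recovers about 0.20
k_shallow = estimate_k(AMOUNT, delays, shallow_points)  # recovers about 0.02
```

In this toy example, the individual whose indifference points fall more quickly with delay yields the larger k, which is the pattern that would be read as greater impulsivity.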

Omission Procedures

In addition to intertemporal choice procedures, other approaches also claim to study behavioral tendencies related to impulsivity, although they are not explicitly designed to study choice. In omission procedures, also known as response inhibition or action impulsivity tasks, performance is deemed impulsive if subjects fail to refrain from, or omit, a specific response that cancels or postpones reward delivery. Three such procedures are described in the following sections.

Go/No-Go Paradigm and Stop Signal Task (SST)

The go/no-go paradigm and the SST are two procedures that are usually used to measure the response inhibition component of impulsivity. In both paradigms, subjects are exposed to a situation in which “go” and “no-go” trials are interspersed unpredictably. Go trials are signaled with a particular stimulus, and no-go trials can be signaled by either a different stimulus or by a compound of the go stimulus and a “stop” signal. Subjects can receive a reward if they respond quickly in go trials and if they successfully omit responding in no-go trials. The crucial difference between these two protocols is the temporal location of the stop signal: The stop signal is presented simultaneously with the go stimulus in the go/no-go paradigm, whereas the stop signal is presented when a certain length of time has elapsed from the go stimulus onset in the SST (Bari & Robbins, 2013). Correspondingly, different dependent variables are used in each paradigm. In the go/no-go paradigm, the typical dependent variable is the proportion of commission errors (i.e., failure to omit responding in no-go trials). In the SST procedure, the probability of responding in no-go trials is plotted against several durations of the interstimulus interval or stop-signal delay, creating an inhibition function (e.g., Logan & Cowan, 1984). All else being equal, inhibition functions tending toward the left are indicative of poor inhibitory capacity (but see Verbruggen & Logan, 2009, for alternative explanations) and are thus indicative of impulsivity.
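The two dependent variables just described can be computed in a few lines. The sketch below is only an illustration: the data format (one record per trial), the function names, and the trial outcomes are hypothetical and do not correspond to any cited data set.

```python
from collections import defaultdict


def commission_error_rate(no_go_responses):
    """Proportion of no-go trials on which a response was made.

    no_go_responses: list of booleans, True when the subject responded.
    """
    return sum(no_go_responses) / len(no_go_responses)


def inhibition_function(stop_trials):
    """Probability of responding on stop trials at each stop-signal delay.

    stop_trials: list of (stop_signal_delay_ms, responded) pairs.
    Returns a dict mapping each delay to P(respond | stop signal),
    ordered by increasing delay.
    """
    counts = defaultdict(lambda: [0, 0])  # delay -> [responses, trials]
    for delay_ms, responded in stop_trials:
        counts[delay_ms][1] += 1
        if responded:
            counts[delay_ms][0] += 1
    return {delay: r / n for delay, (r, n) in sorted(counts.items())}


# Hypothetical go/no-go data: responses on 2 of 5 no-go trials.
go_no_go = [True, False, False, True, False]
error_rate = commission_error_rate(go_no_go)

# Hypothetical SST data: four stop trials at each of three delays.
sst = [
    (100, False), (100, False), (100, True), (100, False),
    (200, True), (200, False), (200, True), (200, False),
    (300, True), (300, True), (300, True), (300, False),
]
function = inhibition_function(sst)
```

With these made-up trials, the probability of responding grows as the stop signal is delayed. A subject whose function reaches high probabilities at shorter delays (i.e., is shifted to the left) would be the one described in the text as showing poorer inhibition.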

Although the differences between these paradigms are subtle, theorists have suggested that performance on each reflects distinct behavioral processes. Performance in the go/no-go paradigm is assumed to measure the ability to refrain from emitting a response before it starts, whereas performance in the SST is supposed to measure the ability to cancel an already-initiated response (Schachar et al., 2007). There is some evidence indicating that those processes have different (yet overlapping, to some extent) neural substrates (Eagle, Bari, & Robbins, 2008). Similar to other impulsivity measures, poor performance in these paradigms has been related to a number of psychiatric disorders, such as attention deficit/hyperactivity disorder (ADHD), bipolar disorder, and substance abuse (see Wright, Lipszyc, Dupuis, Thayapararajah, & Schachar, 2014). In addition, a mouse strain that is a model of impulsive behavior has shown poorer performance in these types of protocols (Richards et al., 2011).

Differential Reinforcement of Low Rates (DRL) Procedures

DRL schedules are free operant procedures in which the reward is delivered when subjects produce a certain response following a specific interval with no response (e.g., Doughty & Richards, 2002). Here, premature responses are taken as an index of impulsivity. Children categorized as impulsive by their teachers (Gordon, 1979) and those diagnosed with ADHD (Mancebo, 2002), as well as selectively bred impulsive rats (Orduña, Valencia-Torres, & Bouzas, 2009; Sagvolden & Berger, 1996), have all been shown to be less efficient than control groups in this procedure, thus offering evidence of its external validity as a measure of impulsivity. In this task, factors other than impulsivity (e.g., motivational, motor, timing related) may also account for performance, but analytical strategies have been developed for dissociating some of these factors, and complementary procedures have been proposed for discarding alternative explanations. For instance, Richards, Sabol, and Seiden (1993) have developed a peak deviation analysis of interresponse time distributions to differentiate time estimation and response inhibition. In a similar vein, Sanabria and Killeen (2008) used a response-withholding task to complement DRL performance analysis, which rules out confounding factors such as motivational and motor variables.
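The DRL contingency can be made concrete with a minimal scoring sketch. It assumes that a response is rewarded when the time since the previous response meets or exceeds the criterion, that every response resets the timer, and that the start of the session counts as the previous response for the first interval. The efficiency index (rewarded responses divided by total responses) is one simple summary among several in use. Function names and response times are hypothetical.

```python
def score_drl(response_times_s, criterion_s):
    """Score a DRL session.

    response_times_s: times (in seconds from session start) of each response.
    criterion_s: minimum interresponse time required for reward.
    Returns (rewarded, premature) response counts.
    """
    rewarded = 0
    premature = 0
    previous = 0  # assumption: session start acts as the previous response
    for t in response_times_s:
        interresponse_time = t - previous
        if interresponse_time >= criterion_s:
            rewarded += 1
        else:
            premature += 1
        previous = t  # every response, rewarded or not, resets the timer
    return rewarded, premature


def efficiency(rewarded, premature):
    """Proportion of responses that were rewarded."""
    total = rewarded + premature
    return rewarded / total if total else 0.0


CRITERION_S = 10  # hypothetical DRL 10-s schedule

# Hypothetical subject that waits long enough before every response.
patient = score_drl([12, 24, 35, 47, 60], CRITERION_S)

# Hypothetical subject that often responds before the criterion elapses.
hasty = score_drl([4, 9, 21, 25, 38, 41], CRITERION_S)

patient_efficiency = efficiency(*patient)
hasty_efficiency = efficiency(*hasty)
```

In this example the second subject emits more responses but earns fewer rewards, because each premature response restarts the required waiting period.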

Autoshaping Procedures

Tomie (1995, 1996) argued that autoshaping procedures may also provide measures of impulsivity. These procedures involve presenting an unconditioned appetitive stimulus (e.g., food, water, heat, etc.) preceded by the presentation of a spatially localized conditioned stimulus. Because the appearance of the stimuli is unrelated to the subject’s behavior, this might be conceived as a Pavlovian procedure. When subjects approach and/or make contact with the conditioned stimulus, they are said to be performing autoshaped responses (Brown & Jenkins, 1968). It is common to observe large individual differences in the propensity to approach the localized cue; subjects that tend to approach the cue are usually labeled as sign trackers, whereas subjects that approach the food site instead of the cue upon cue presentation are labeled as goal trackers (Robinson & Flagel, 2009). Tomie, Aguado, Pohorecky, and Benjamin (1998) argued that autoshaped responses, like impulsive choice, occur in anticipation of an imminent reward and that their emission is “compulsive.” To assess the relation between autoshaped behavior and impulsive choice, those authors conducted a study in which they measured the correlation between performance in intertemporal choice and autoshaping procedures and found that subjects that showed a stronger preference for SS rewards in choice situations were also more likely to acquire responses through autoshaping. This result lends support to their idea of a link between these two types of behavioral protocols. They further evaluated the validity of their argument using other indirect measures of impulsivity and determined, for example, that autoshaped behavior is related to voluntary ethanol consumption and higher levels of corticosterone release induced by aversive stimulation. 
Ethanol intake has been linked to impulsivity when measured by other procedures (e.g., Poulos, Le, & Parker, 1995), whereas stress-related corticosterone release has been shown to be a predictor of drug abuse in both rats (Piazza, Demeniere, Le Moal, & Simon, 1989; Prasad & Prasad, 1995) and nonhuman primates (Higley & Linnoila, 1997). Drug abuse is, in turn, related to other measures of impulsivity (Critchfield & Kollins, 2001).

Fragmentation Versus Unification

So far, we have described some of the most commonly used procedures for measuring impulsivity/self-control at a behavioral level. A widely held view is that impulsivity is a multifactorial trait and that each measure accounts for a distinct component of this construct. Many authors believe that the behavior observed in each type of procedure may not necessarily represent the same behavioral tendency (i.e., fragmentation) and that the concept of impulsivity should not be considered a “monolithic whole” (e.g., Evenden, 1999; Ho, Al-Zahrani, Al-Ruwaitea, Bradshaw, & Szabadi, 1998; Sagvolden & Berger, 1996; Winstanley, Dalley, Theobald, & Robbins, 2004). These claims rest upon evidence of the absence of correlation and covariation between different measures of impulsivity/self-control.

For example, Winstanley et al. (2004) infused a serotonin neurotoxin in rats intracerebroventricularly (serotonin depletion is known to increase impulsive behavior; Soubrié, 1986), measured their performance under several procedures that are assumed to assess impulsivity/self-control, and compared the results with those of a control group. They found between-group differences in performance in autoshaping, in conditioned locomotor activity, and on a visual attention task but no significant differences in performance on an intertemporal choice procedure. They also assessed the correlation between performances in each procedure and found a relation between autoshaped behavior and conditioned locomotor activity, possibly indicating a link between these two types of behavior. Nevertheless, they failed to find any significant correlation among other measures of impulsivity. The absence of correlation among most measures was interpreted as evidence refuting the idea that impulsivity is a unitary construct. They further concluded that not all types of impulsivity are equally sensitive to serotonin depletion.

There are several other studies reporting the absence of correlations between performances on different families of behavioral procedures to assess impulsivity in humans (e.g., McDonald, Schleifer, Richards, & de Wit, 2003; Reynolds, Ortengren, Richards, & de Wit, 2006) and nonhuman animals (e.g., Dellu-Hagedorn, 2006). This absence of correlations seems to suggest that the behavioral tendencies measured by each family of procedures are not easily explained by a unitary process. Nevertheless, caution must be exercised in interpreting these findings. Green and Myerson (2010) suggest that if one measure is not predictable from another measure (i.e., they do not correlate), “then either the two do not share a common process or, at the very least, differences in other processes exist and are more powerful determinants than the shared process” (p. 81). Thus, in order to demonstrate the existence of the shared process, one ought to reduce the influence of the other processes. Then, perhaps, the expression of the relationship between two measures of impulsivity would be parameter dependent and not every arrangement of a given procedure may serve to predict performance in another procedure.

Few studies have found correlations between measures of different families of procedures used to assess impulsivity. The aforementioned study by Tomie et al. (1998) is an example. Another example is a study conducted by Anker, Zlebnik, Gliddon, and Carroll (2009) in which impulsive choice by rats was predictive of no-go responses in a go/no-go paradigm. Interestingly, this correlation was observed when saccharin pellets—but not cocaine infusions—were used as the reward in the go/no-go task. The absence of correlation observed in the cocaine condition may have been due to a ceiling effect that masked the interindividual variability necessary for the detection of any correlation between two measures.

In a more recent study, El Massioui et al. (2016) evaluated DRL performance and impulsive choice in a rat transgenic model of Huntington disease (tgHD rats) and compared them with those of a control rat strain. They found that the control strain outperformed tgHD rats in both choice and DRL tasks. Furthermore, El Massioui et al. (2016) evaluated the relationship between the two measures for each strain and found a strong correlation, but only for tgHD rats. The finding that impulsive choice and DRL performance were statistically independent in the control animals was reported by the authors as being in line with previous studies in which no correlations were found between omission and choice measures of impulsivity. By contrast, the authors interpreted the positive correlation in the tgHD rats as being indicative of a parallel deterioration of the neural substrates underlying both measures. Nevertheless, a closer examination of El Massioui et al.’s (2016) data shows that the relative variability was greater in tgHD rats for both measures. As in the study of Anker et al. (2009), the condition with lower interindividual variation could have obscured a potential correlation.
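The statistical point made above, that low interindividual variability can hide a relation between two measures, can be illustrated with a toy simulation. The sketch assumes a simple latent-trait model in which both measures equal a shared trait plus independent measurement noise. Under that assumption the expected correlation is the trait variance divided by the sum of trait and noise variance. The model, the parameter values, and the function names are hypothetical and are not drawn from the data of the cited studies.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def expected_r(trait_sd, noise_sd):
    """Expected correlation when both measures are trait plus noise."""
    return trait_sd ** 2 / (trait_sd ** 2 + noise_sd ** 2)


def simulate_measures(n, trait_sd, noise_sd, seed):
    """Simulate two measures that share one latent trait (assumed model)."""
    rng = random.Random(seed)
    first, second = [], []
    for _ in range(n):
        trait = rng.gauss(0, trait_sd)
        first.append(trait + rng.gauss(0, noise_sd))
        second.append(trait + rng.gauss(0, noise_sd))
    return first, second


# Same shared process and same noise; only the spread of the trait differs.
variable_group = simulate_measures(2000, trait_sd=2.0, noise_sd=1.0, seed=1)
uniform_group = simulate_measures(2000, trait_sd=0.5, noise_sd=1.0, seed=2)

r_variable = pearson_r(*variable_group)
r_uniform = pearson_r(*uniform_group)
```

Both simulated groups depend on the shared trait in exactly the same way, yet the group with little spread in that trait produces a much weaker correlation. A null correlation in a homogeneous sample is therefore weak evidence against a shared process, which is the caution raised in the text.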

Small-sample (e.g., n < 100) correlational studies must be interpreted with caution, as they can be conceived as low-resolution analyses. Two recent studies used relatively large samples to study the relationship between different families of impulsivity measures in humans using structural equation modeling. Stahl et al. (2014) found that some of the different families of measures correlated. Nevertheless, as these correlations were not perfect, the authors interpreted those measures as reflecting separable factors. By contrast, MacKillop et al. (2016) found a virtually nil (r = .01) correlation between choice and response inhibition measures, which was interpreted as evidence that different measures pertain to largely different categories. In both studies, the authors made cautionary notes about the possibility that features embedded within different tasks (e.g., requirement of quick motor responses) may have biased results. Whereas Stahl et al. (2014) warned that their correlations could have been artificially inflated by the similarity of task modalities across different measures, MacKillop et al. (2016) cautioned that differences in assessment format across the measures may have contributed to the unexpected near-zero correlation in their study. These insights point to the importance of embedded differences and similarities in task formats among different measures, and this might explain the results of a study conducted by Brucks, Marshall-Pescini, Wallis, Huber, and Range (2017) in which no significant correlations were found between several measures even within the same family (response inhibition) in a moderately sized sample (n = 67) of domestic dogs.

In spite of the absence of a substantial body of evidence supporting the notion of a unitary construct of impulsivity, several authors have devoted their efforts to building theoretical bridges between different indicators of impulsive behavior. Some, for instance, claim that the underlying factor of several manifestations of impulsive behaviors is a deficit in inhibitory capacity (e.g., Bari & Robbins, 2013; Buss & Plomin, 1975; Poulos et al., 1995). Others have suggested that the underlying feature of impulsive behavior in several tasks is a steep delay discounting. For example, after reviewing a handful of procedures that other authors have considered measures of impulsivity/self-control, Logue (1988) asserted that “[it] is possible to redefine all of these indicators of self-control and impulsiveness as choices between larger, more delayed and smaller, less delayed reinforcers” (p. 665). Although Logue clearly expressed a unifying intuition, she did not say exactly how this might be accomplished.

More recently, Monterosso and Ainslie (1999) made an effort to develop an approach similar to the one suggested by Logue (1988). These authors attempted to unify impulsivity in autoshaping, DRL, and intertemporal choice procedures in a sophisticated delay discounting framework. They argued that the differences among these procedures are misleading and that the discounting rate is sufficient to characterize impulsivity as measured in any of them. On this view, performance under all three types of procedures could be reduced to the effects of delay upon the value of rewards, and impulsivity would reflect a steep discounting of reward value as a function of the delay to its receipt.

Although this might be taken as a considerably parsimonious unifying account, Monterosso and Ainslie (1999) were forced to make assumptions for each procedure above and beyond those typically used to characterize intertemporal choice performance. Moreover, they had to postulate different assumptions for each type of procedure. For the purpose of accounting for impulsive behavior in DRL schedules, for instance, they stated the following:

In order to view poor performance on a DRL schedule as reflective of a steep underlying discount function, it is necessary to view premature responses as the choice of a smaller more immediate reward over a larger later one. This requires only the reasonable assumption [emphasis added] that the imperfect time sense makes the organism’s estimate of reward vary as a function of delay. (p. 342)

Accordingly, when an amount of time shorter than the criterion to reward elapses, subjects could have some expectancy with respect to the occurrence of a reward with the following response and, as time elapses, this expectancy should increase. Therefore, when an individual produces a premature response, this could be understood as a choice of a smaller and sooner expectancy of reward over a larger, more delayed expectancy. However, regarding autoshaping procedures, they make a quite different assumption:

The case of autoshaping requires further explanation, but we believe it can also be incorporated into the discounting framework, at least in the negative automaintenance variant. Again, the temporal discount rate conception of impulsivity requires viewing impulsivity as the choice of a smaller, more immediate reward over a later, larger alternative. Given a narrow definition of reward, autoshaped behavior has no measurable reward. As such, automaintenance behaviors have typically been treated as principally Pavlovian rather than instrumental . . . therefore [these behaviors could] not be viewed as the choice of a smaller, more immediate reward since they are not choice behaviors at all. However, we almost certainly make a mistake if we assume that all or even most of what rewards an organism’s choice is observable. . . . The operant (reward-driven) nature of that behavior is evident from the fact that its value is in competition with the value of observable rewards. . . . Pecking may be intrinsically rewarding for a pigeon that is anticipating food. (Monterosso and Ainslie, 1999, p. 342)

Thus, autoshaping could be conceived as the choice of a smaller, sooner reward (because it is claimed that autoshaping responses are intrinsically rewarding) over a larger but temporally remote reward (i.e., access to the actual reward).

Monterosso and Ainslie’s (1999) account seems to be moot for two reasons. First, despite their attempt to unify three different paradigms under one common characterization (delay discounting), they need to incorporate ad hoc assumptions for each paradigm and thus fail to provide a parsimonious view of impulsive behavior. Second, although the additional assumptions proposed in each case (i.e., expectancies and intrinsic rewards) are easy to understand intuitively, they have questionable ontological status.

Another remarkable attempt to unify different measures of impulsivity under a single framework was made by Tomie et al. (1998). These authors highlighted certain similarities between impulsivity in choice situations and autoshaping procedures. In the former, impulsive choices reduce the amount of the rewards obtained per time unit, whereas impulsive behavior in the latter situation (i.e., stimulus-approaching responses) does not improve the probability of obtaining rewards but, quite to the contrary, could delay their reception or even cause the individual to lose the opportunity to receive them. Moreover, in so-called negative automaintenance procedures—a variety of the autoshaping paradigm—approach responses actually cancel reward delivery that would otherwise be obtained. Nevertheless, even in such adverse situations as these, subjects have been shown to develop and maintain autoshaped behavior despite long-lasting experiences with those contingencies (Williams & Williams, 1969; but see Sanabria, Sitomer, & Killeen, 2006). Hence, it is argued that one important similarity of behavior in these two situations is persistence in spite of the potential reduction in overall gain.

Although these authors omitted DRL schedules and abstention procedures from their analysis, their reasoning could easily be applied to such situations. In DRL schedules, for example, premature responses cause the clock that times the temporal requirement without responses to reset, which necessarily causes overall reward density to decrease. In a go/no-go procedure, in turn, inappropriate approach responses also result in an overall reduction in the rewards obtained because each approach response cancels reward delivery in a particular trial. Thus, Tomie et al.’s (1998) characterization of impulsivity fits DRL and go/no-go procedures with no problem, which increases its external validity. In other words, in this characterization, impulsivity appears as a noxious behavior in the sense that an organism that emits it will not be able to maximize the rewards available in its environment.

This conceptualization seems capable of encompassing the dimension of impulsivity/self-control in several types of procedures. Nonetheless, it remains quite loose because it does not help differentiate impulsivity from suboptimal behavior observed in other situations. One could recognize several ways in which an organism might fail to reach the maximum gain in almost any instrumental conditioning situation. For example, suppose we were to expose two rats to a situation in which they have to press a lever 10 times in order to obtain a food reward and that one rat obtains an average of 80 rewards every half hour, whereas the other only obtains 62. Based on the fact that the second rat obtained fewer rewards than the first, can we say that it is more impulsive? According to Tomie et al.’s approach, the answer would be yes, but these relatively simple reward arrangements were not particularly designed to evaluate impulsivity/self-control. This approach does not specify which conditions are suitable for assessing impulsivity/self-control.

Another perspective that could contribute to the unifying efforts is the competing neurobehavioral systems approach. This account posits that individuals will behave impulsively when the relative strength of the so-called impulsive decision system exceeds that of the so-called executive decision system (Bickel, Jarmolowicz, Mueller, & Gatchalian, 2011a). Such a claim is supported by evidence that choices for immediate rewards are associated with relatively high levels of neural activation in the limbic and paralimbic areas (i.e., the impulsive system), whereas choices for delayed rewards are associated with relatively high levels of neural activation in prefrontal brain regions (i.e., the executive system; see McClure, Ericson, Laibson, Loewenstein, & Cohen, 2007). Koffarnus, Jarmolowicz, Mueller, and Bickel (2013) explain that

the evolutionarily older impulsive system, made up of portions of the limbic and paralimbic regions (the amygdala, nucleus accumbens, ventral pallidum, and related structures), values immediate reinforcers. By contrast, the more recently evolved executive system, made up of portions of the prefrontal cortex, may be needed for the inhibition of the impulsive system and the associated valuation on delayed reinforcers. (p. 36)

Given that support for this neuroeconomic account comes mostly from correlational studies, no causal inference can be made based on these data. Nevertheless, some recent experimental studies using transcranial magnetic stimulation techniques suggest that the dorsolateral prefrontal cortex modulates impulsive choice in humans (e.g., Cho et al., 2010), thus providing more reliable support for this approach.

Interestingly, evidence to support the notion that competing neurobehavioral systems account for impulsivity/self-control is also provided by other kinds of procedures, such as go/no-go tasks (e.g., Nieuwenhuis, Yeung, van den Wildenberg, & Ridderinkhof, 2003; Silbersweig et al., 2007). Thus, this account could be considered to possess a unifying potential. A recent review of the neural mechanisms involved in impulsivity by Dalley and Robbins (2017) reported that although there are subtly different neural substrates related to different forms of impulsivity (which may indicate dissociation in controlling mechanisms), there is also considerable overlap in some of those neural substrates. This implies that the postulates made by the competing neurobehavioral systems approach hold, at least at the macrocircuit scale. Clearly, this approach does not attempt to characterize impulsivity/self-control behaviorally but rather neurobehaviorally; nevertheless, it could serve to complement purely behavioral-based approaches. In other words, although it corresponds to a different level of analysis, this approach could become a resource for understanding some aspects of the behavioral level (see Killeen, 2001).

In conclusion, although convincing evidence for a monolithic construct of impulsivity is sparse, some authors have made laudable efforts to unify different forms of impulsivity into a single theoretical framework. Nevertheless, as discussed in this section, such efforts have noticeable validity weaknesses. In the next section, we outline our own unifying attempt, which incorporates some of the assumptions of previous attempts and amends them with additional assumptions grounded in basic learning processes.

The Present Approach

Our approach could be framed as an attempt to view impulsive performance under different paradigms as analogous forms of one sole behavioral tendency. We argue that our proposal has fewer weaknesses than other unifying attempts, given that (a) we appeal to a single set of explanatory sources, which makes it more parsimonious; (b) its assumptions are well grounded in evidence or coherent theoretical formulations, which makes them more plausible; (c) our theory may account for different measures of impulsivity, which makes it more unifying; (d) it may also account for both the formal descriptions of impulsive behavior and the ontogenesis of self-control, which makes it more encompassing; and (e) it may stipulate a priori conditions for measuring impulsivity/self-control, which facilitates establishing experimental conditions for testing.

The core assumption of our approach is the possibility that a stimulus may acquire a different function depending on its temporal and statistical (i.e., Pavlovian) relation to primary rewards. This is not new in the impulsivity/self-control literature. It has long been suggested that Pavlovian conditioned stimuli could play a role in impulsivity. Lopatto and Lewis (1985) asserted that signals related to reward may partly account for the impulsivity observed in pigeons under experimental procedures. Other authors have demonstrated that manipulating the function of cues related to SS and LL alternatives in choice procedures alters the performance of pathological gamblers (Dixon & Holton, 2009). Ainslie (1975), meanwhile, hypothesized that if individuals are able to detect signals that predict reward delivery in the LL alternative, those cues could become secondary rewards due to the temporal proximity between the stimuli and the reward delivered after the delay. Moreover, based on the idea that stimuli associated with rewards acquire a conditioned rewarding value, Mazur (1995) generated a mathematical model that aimed to determine the value of conditioned rewards as a function of delay.

Secondary rewards increase the frequency of a response that produces them, maintain a previously acquired response, and can even make a subject choose one of two alternatives that deliver the same amount of primary reward but different rates of secondary rewards (e.g., Mazur, 1995; Prével, Rivière, Darcheville, & Urcelay, 2016). Correspondingly, and more important for our approach, stimuli that are negatively correlated with a primary reward may acquire an aversive function (Leitenberg, 1965); such a conditioned aversive stimulus may punish ongoing responses (i.e., decrease their frequency when presented contingently), and the organism will tend to avoid or escape from it. There is recent evidence for this claim in the field of choice behavior. For example, Laude, Stagner, and Zentall (2014) exposed pigeons to a choice procedure with different stimuli associated with the delivery of food and with the absence of food in the poorer of two probabilistic alternatives. Using a summation test (a routine test for conditioned inhibition), these authors found that the conditioned inhibitory properties of the stimulus related to the absence of food covaried with the choice of the poor (suboptimal) alternative. The stronger the inhibitory properties of the stimulus that signaled the absence of food, the less the animals preferred the poor alternative (for similar results with rats as subjects, see Trujano, López, Rojas-Leguizamón, & Orduña, 2016). This was interpreted as evidence not only that acquired appetitive stimuli (conditioned rewards) can influence performance but also that acquired aversive properties of stimuli (arising from the prediction of no reward) may be important determinants of choice behavior.

Therefore, our first assumption is that a Pavlovian process is an important factor in behavior classified as impulsivity. This kind of approach is not specific to either impulsive behavior or the paradigms described herein. Several authors have attempted to explain other instances of behavior in different paradigms by unifying Pavlovian and operant processes, such as avoidance (e.g., Mowrer, 1956), autoshaped behavior (Hearst & Jenkins, 1974), behavior sequences (Enquist, Lind, & Ghirlanda, 2016), or behavioral contrast (Schwartz, 1978).

Second, we assume that such cues exert an effect that is independent of primary rewards and that they are commensurable in the sense that they could be traded. For instance, primary rewards often control behavior at the expense of secondary rewards, but there may be cases in which the latter also override the former. Most of the time, behavior is the result of a joint contribution of primary and secondary rewards (or conditioned aversive stimuli). Indeed, Enquist et al. (2016) recently modeled this phenomenon mathematically, accounting for various cases of misbehavior and suboptimal performance. A very neat demonstration of this assumption was presented in an experiment conducted by Cronin (1980) in which pigeons showed a greater preference for secondary rewards over delayed primary rewards. The birds were exposed to a condition in which pecking one of two available keys produced the delivery of food after a 60-s delay. During the first 10 s of the delay to the primary reward, a yellow light came on, whereas a blue light was presented during the last 10 s of the delay so that this light was paired with the primary reward. When subjects chose the other alternative, the blue light came on for 10 s, followed by a 40-s blackout and, finally, the presentation of the yellow light (but with no primary reward). Unexpectedly, the pigeons showed a greater preference for the alternative that produced the blue light immediately, although it did not deliver any food reward. We may interpret this result as evidence that secondary reinforcement can override the value of available primary rewards, at least under certain conditions.
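To make the temporal structure of this arrangement easier to inspect, the two alternatives can be written out as timelines. The sketch below encodes only the durations given in the description above; the names `Event`, `FOOD_KEY`, `NO_FOOD_KEY`, and `onset` are hypothetical, and whatever filled the middle 40 s of the delay on the food alternative is not specified here and is therefore left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str     # "yellow", "blue", "blackout", or "food"
    start: float  # seconds after the choice response
    end: float    # seconds after the choice response


# Alternative that ends in food after a 60-s delay.
FOOD_KEY = [
    Event("yellow", 0, 10),  # first 10 s of the delay
    Event("blue", 50, 60),   # last 10 s of the delay, paired with food
    Event("food", 60, 60),
]

# Alternative that never ends in food.
NO_FOOD_KEY = [
    Event("blue", 0, 10),
    Event("blackout", 10, 50),
    Event("yellow", 50, 60),
]


def onset(timeline, name):
    """Delay from the choice to the first occurrence of `name`, or None if absent."""
    starts = [e.start for e in timeline if e.name == name]
    return min(starts) if starts else None


for label, timeline in (("food key", FOOD_KEY), ("no-food key", NO_FOOD_KEY)):
    print(label, "| blue after", onset(timeline, "blue"), "s",
          "| food after", onset(timeline, "food"), "s")
```

Laid out this way, the contrast is that the stimulus paired with food (the blue light) follows the choice immediately on the alternative without food but only after 50 s on the alternative with food.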

A third assumption is that an organism’s responses produce stimuli of which the experimenters may not be aware or over which they may have no control but that actually can play a critical role in observed performance. Sensory feedback can be classified into two categories: extrinsic and intrinsic (not to be confounded with external and internal). Extrinsic sensory feedback comprises consequences of behavior that are controlled by the experimenter, whereas intrinsic feedback includes those cues that are inevitably produced by the response itself (Greenwald, 1970). Examples of intrinsic sensory feedback are changes in the visual field due to ocular or cephalic rotation, auditory perception of one’s own vocalizations, proprioceptive cues contingent upon specific motor movements of an extremity, tactile cues contingent upon contact with an object, or vestibular cues contingent upon postural changes. The idea that sensory feedback can acquire stimulus control was developed earlier by such classic authors as Guthrie (1940). More recently, Dinsmoor (2001) further developed this notion, arguing that the stimuli scheduled by the experimenter should not have any special status in theoretical analysis, emphasizing the role of such response-dependent stimuli as tactile and proprioceptive cues, and claiming that, far from being entirely hypothetical, as some authors have suggested (e.g., Herrnstein, 1969), these response-dependent stimuli are just as material and observable as the presentation of a light or tone. Dinsmoor (2001) concluded that “the only difference worthy of note is that this stimulus is under an immediate control of the subject, rather than under the control of the experimenter” (p. 315). Thus, neglecting these stimuli and their dynamic properties when describing organism–environment interactions would constitute a serious error.

Killeen (2014) also took a similar approach. He suggested that access to behaviors that anticipate an appetitive situation could reinforce actions leading to them. For example, Killeen, Sanabria, and Dolgov (2009) conducted a study using an intermittently reinforced Pavlovian appetitive procedure with a key–light preceding food availability. In this autoshaping task, key–peck responding on a given trial was found to depend on whether the previous trial involved (a) light–food pairings or (b) peck–food pairings. This finding is in line with the idea that although the scheduled probabilities of the stimulus–reinforcer pairings can be fully controlled by the experimenters, the random sampling of trials with responses causes the acquired properties of the stimulus to continually vary. The authors stated that “the [conditioned] strengths of the context, the key, and peck state [i.e., response feedback] could be continuously varying, each in their own way” (p. 467).

A fourth assumption is the well-known idea that consequences lose their value (appetitive or aversive) as the delay to their presentation is lengthened (e.g., Lattal, 2010). This is a common source of explanations of impulsive behavior found in the literature. Although we agree with this assertion, we also believe that, on its own, it is insufficient to understand impulsive behavior in some conditions, and it adds nothing to our understanding of how self-control develops. Therefore, we believe that this idea may have enormous explicative potential, but only if it is supplemented by the other assumptions mentioned in this section.

The final assumption concerns choice. Similar to Monterosso and Ainslie (1999), we claim that anything an organism does could be regarded as choice, whether or not it involves a situation in which there are two or more explicitly arranged symmetrical alternatives. Thus, organisms always perform one of various alternative behaviors that are mutually exclusive and that result in mutually exclusive consequences.

To sum up, there is a great deal of empirical support for the idea that stimuli positively correlated to appetitive events become secondary rewards and for the notion that stimuli negatively correlated to appetitive events become conditioned aversive stimuli. Moreover, there is support for the idea that the values of primary and secondary rewards add up algebraically. In addition, there is sufficient theoretical elaboration to consider the idea that stimuli not explicitly scheduled by an experimenter may play an important role in observed performance under some experimental arrangements. Of course, the assumption that has the greatest support concerns the delay discounting of reward value. Finally, the notion that we can regard any kind of behavior as choice only requires a minor conceptual shift. Assuming that it is plausible for these ideas to operate simultaneously, we can build a theoretical system that is able to account for some examples of impulsive behavior in laboratory settings. Let us see how, if well assembled, these fragments of scientific knowledge can account for all the aforementioned procedures.

Applying our Assumptions to Impulsivity/Self-Control Paradigms

We will now attempt to apply the aforementioned theoretical assumptions to some of the most common paradigms used to measure impulsivity. First, we tackle DRL procedures. Applying the first and third assumptions allows us to deduce that kinesthetic and tactile feedback plus the contextual cues produced by the response (e.g., lever pressing) could acquire secondary reward properties under DRL procedures because every single reward is preceded by the response (and therefore by this stimulation). Although this logic could apply to almost all positive reinforcement situations, DRL procedures constitute a peculiar case in which, paradoxically, the same response that produces a reward may also postpone the opportunity to obtain the reward if it is emitted before a specified period of time.

Our second assumption is that a secondary reward could be comparable to a primary one in the sense that both increase the probability of a response that precedes them, although not equally. It is clear that, all things being equal, a primary reward would be much more effective in altering response frequencies than a secondary reward. However, when subjects emit premature responses in a DRL schedule, these responses could be under the control of immediate secondary rewards at the expense of delayed primary rewards. Thus, subjects make choices in the sense that emitting the premature response and waiting for the opportunity to respond successfully are exclusive behaviors that produce exclusive outcomes. Responding prematurely results in a considerable amount of secondary rewards (i.e., tactile or kinesthetic feedback, contextual cues), whereas withholding that response would reliably lead to a primary reward, but the subject would have to give up the secondary reward at that moment. Accordingly, it is also easy to frame impulsive performance under DRL procedures with the delay discounting approach of impulsivity by considering it as the choice of an immediate secondary reward over a primary delayed one. Given that the primary reward is delayed, at some point its subjective value may be less than that of the secondary immediate reward.
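This comparison can be sketched numerically. The sketch assumes a hyperbolic discounting function, V = A / (1 + kD), which is a common choice in the delay discounting literature but is not specified in the argument above; the function names, the discounting parameter `k`, and all numeric values are hypothetical and in arbitrary units.

```python
def discounted_value(amount, delay, k):
    """Hyperbolic discounting, V = A / (1 + k * D). The form and k are assumptions."""
    return amount / (1.0 + k * delay)


def premature_response_predicted(secondary_value, primary_amount, remaining_wait, k):
    """True when the immediate secondary reward outweighs the delayed primary reward."""
    return secondary_value > discounted_value(primary_amount, remaining_wait, k)


def indifference_wait(secondary_value, primary_amount, k):
    """Remaining wait at which both options have equal value.

    Solves secondary_value = primary_amount / (1 + k * D) for D.
    """
    return (primary_amount / secondary_value - 1.0) / k


# Illustrative values only: a primary reward worth 10, response feedback worth 1.
secondary, primary, k = 1.0, 10.0, 0.5
print(indifference_wait(secondary, primary, k))                   # wait at which values are equal
print(premature_response_predicted(secondary, primary, 30.0, k))  # long remaining wait
print(premature_response_predicted(secondary, primary, 5.0, k))   # short remaining wait
```

With these illustrative values, the sketch predicts a premature response only while the remaining wait exceeds the indifference point; a steeper discounting parameter or a more valuable secondary reward would shorten that point and so widen the range of waits over which premature responding is predicted.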

With respect to autoshaping and automaintenance procedures, the fact that a neutral stimulus is paired with an appetitive unconditioned stimulus (i.e., one that would function as a reward if presented contingently on a response) may cause that neutral stimulus to function as a secondary reward. The problem here is that such a stimulus is presented regardless of the subject’s behavior, so in this case it would be hard for the experimenter to track a response–outcome relation. Nevertheless, we can assume that approaching the focalized stimulus is maintained by the increased salience of that presumed secondary reward as it gets closer to the optical field. Therefore, by withholding the autoshaped response, subjects would be stimulated by a mild secondary reward, but when they actively approach the focus of that stimulation, the outcome is an intense (and presumably more effective) secondary reward (for similar interpretations, see Enquist et al., 2016; Williams, 1994). In fact, there is evidence that individual differences in the propensity to approach a localized cue predict the susceptibility of that cue to acquire conditioned reinforcement properties. In a study conducted by Robinson and Flagel (2009), a localized cue previously paired with a food reward functioned as a conditioned reinforcer when it was made contingent upon a new response (nose poke), but interestingly this effect was only observed for subjects classified as sign trackers.

Indeed, among other possible explanations, Wasserman, Franklin, and Hearst (1974) offered a secondary reward (or conditioned reinforcement) interpretation of autoshaped behavior that is quite similar to this one. However, they objected to this interpretation because autoshaped responses are maintained even when reward presentation is prevented or reward delivery is canceled (in the case of negative automaintenance procedures). In our framework, the fact that autoshaped responses persist despite potential reward loss does not effectively refute a secondary reward interpretation; rather, it is an argument that supports an interpretation based on delay discounting supplemented by the assumption that secondary and primary rewards compete for the control of behavior. This approach would characterize autoshaped responses as being controlled by immediate secondary rewards at the expense of delayed (and therefore less valued) primary rewards. Additionally, the rewarding value of the conditional stimulus can hardly be extinguished, inasmuch as it is continuously (in autoshaping) or occasionally (in automaintenance) paired with the unconditioned stimulus (see Killeen et al., 2009).

The case of go/no-go procedures can be interpreted in a similar manner. In this sort of procedure, a cue sets the occasion for the response (e.g., magazine approach) that is to be rewarded. However, this procedure has one particularly salient feature: The stop cue can be presented unexpectedly and, if so, the subject must refrain from responding in order to obtain a reward. Impulsive performance under these procedures could also be interpreted as behavior maintained by immediate secondary rewards. In this case, a cue-prompted approaching response always precedes a reward, so we can consider that sensory feedback (visual, tactile, kinesthetic) of the approaching response (in the presence of the cue [therefore conditionally]) would function as a secondary reward.

In a typical laboratory intertemporal choice procedure, the deliveries of the SS and LL rewards have many stimuli in common, for example, the sounds and vibrations emitted by the food magazine (cues that are virtually identical regardless of the amount of reward delivered). Therefore, those stimuli may acquire similar secondary rewarding properties. If the value of the secondary reward is proportional to the magnitude of the primary reward that it signals (e.g., D’Amato, 1955), then the secondary reward value of cues involved in the SS alternative may be disproportionately strong because these cues are paired with the larger reward as well. Thus, the similarity between the stimuli involved in the SS and LL reward deliveries (or, more precisely, the generalization between those stimuli) could arguably be a critical variable in the maintenance of impulsive behavior in intertemporal choice procedures.

This characterization is similar to the one offered by Killeen et al. (2009) to explain autoshaping performance. These authors assert that “key-food pairings elicits key pecking (conditioning), which, in turn, eliminates the key-food pairings, reducing key pecking (extinction), which reestablishes key-food pairings (conditioning), and so on. This generates alternating epochs of responding and nonresponding” (p. 448). In our view, choices of the LL alternative endow reward delivery–associated stimuli with oversized conditioned reward properties. When the subject incidentally chooses the SS alternative, these inflated conditioned rewards add up to the smaller reward subjective value, making it relatively probable that the subject will choose that alternative in the next trial and so on, until the oversized conditioned value of the reward delivery–associated stimuli eventually deflates by its association with the smaller reward. This assertion could be tested empirically by making the SS and LL rewards qualitatively distinct or at least signaling them with different cues in order to make them more distinguishable. Our prediction is that impulsivity would be a function of the similarity of the rewards (and/or their accompanying stimuli) provided by the SS and LL alternatives.
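One way to make this inflation and deflation concrete is a simple linear-operator update in which the conditioned value of the SS delivery cues moves toward the magnitude of whichever reward they have just accompanied. This is only an illustrative formalization under our own assumptions, not a model taken from Killeen et al. (2009) or fitted to data; the learning rate, the reward magnitudes, and the `similarity` parameter (how strongly an LL delivery also conditions the SS cues) are hypothetical.

```python
def update(value, reward_magnitude, rate):
    """Linear-operator update: move `value` a fraction `rate` toward the reward magnitude."""
    return value + rate * (reward_magnitude - value)


def ss_cue_values(choices, similarity, small=1.0, large=4.0, rate=0.2):
    """Track the conditioned value of the SS delivery cues across a choice sequence.

    `choices` is a string of "S" (smaller-sooner) and "L" (larger-later) trials.
    `similarity` in [0, 1] scales how much an LL delivery also conditions the
    SS cues: 1.0 means identical delivery cues, 0.0 means fully distinct cues.
    """
    value = small  # assumption: SS cues start at the value of the small reward
    history = []
    for choice in choices:
        if choice == "S":
            value = update(value, small, rate)               # deflates toward the small reward
        else:
            value = update(value, large, rate * similarity)  # inflates through generalization
        history.append(value)
    return history


sequence = "LLLLLSSS"  # five LL choices followed by three SS choices
for similarity in (1.0, 0.5, 0.0):
    values = ss_cue_values(sequence, similarity)
    print(similarity, [round(v, 2) for v in values])
```

In this sketch, the SS cues are inflated by preceding LL choices in proportion to `similarity`, deflate over consecutive SS choices, and are not inflated at all when the cues are fully distinct, which mirrors the direction of the prediction stated above.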

A New Definition of Impulsivity

So far, we have presented the assumptions that guide our view of impulsivity. We hope that the preceding section was sufficient to clarify how these assumptions can be applied to some of the procedures currently used to study the concept of impulsivity. The next step is to identify common elements in those various (and apparently different) forms of behavior in order to characterize them all as one sole behavioral tendency. According to our approach, the defining feature of impulsivity (at least in the procedures described herein) is that this behavioral tendency is maintained largely by secondary rewards and involves responses that are negatively related to primary reward delivery and hence diminish overall gain. Although this idea is formulated in a purely behavioral manner, it can be integrated with the neuroeconomic perspective of impulsivity, which posits that impulsivity emerges whenever the neural activation of the impulsive system outweighs the activation of the executive system. For instance, there is ample evidence from rodents and nonhuman primates showing that some structures (e.g., nucleus accumbens, amygdala) and neurochemical substrates (e.g., dopamine system) known to underlie the so-called impulsive system are also associated with the acquisition and expression of secondary reward properties by a stimulus (e.g., Chow, Nickell, Darna, & Beckmann, 2016; Parkinson et al., 2001; Parkinson, Olmstead, Burns, Robbins, & Everitt, 1999).

Impulsivity as a Trait and the Ontogenesis of Self-Control

Why is impulsive behavior maintained despite its undesirable consequences? Why is this behavioral tendency observed in some, but not all, individuals? What kinds of experiences could reliably make an individual more likely to display greater self-control across various situations? Although we have no conclusive answers to these questions, we can present some thoughts based on the aforementioned approach that might guide our search for answers.

If our claim that impulsive responses are maintained by secondary rewards holds, then a reduction in the secondary reward properties of those stimuli should weaken impulsive behavior and allow other responses to be emitted and reinforced. In all the procedures described, impulsive responses initiate a time-out during which the reward (or the larger reward, in choice procedures) is not available. Leitenberg (1965) asserted that stimuli related to a time-out from reward will acquire an aversive function (i.e., the probability of a response that produces them would decrease and the probability of one that cancels or postpones them would increase). If the stimuli related to impulsive responding in each one of these procedures (namely, the secondary rewards described for each case) indeed acquired an aversive function (because of their positive relation to time-outs or their negative relation to larger rewards), then subjects would avoid producing them. Interestingly, this only occurs in a few subjects.

Development of self-control might depend on how subjects deal with the competing strengths of the positive and negative relations between stimuli and rewards. It is easier to see how subjects might learn the negative relation between responses (and the stimuli related to them) and rewards under omission procedures in which each inappropriate response is “punished” by canceling the reward delivery. However, it is not so easy to conceive how stimuli related to the SS alternative would acquire aversive properties through their negative relation with the LL reward delivery because they are continuously paired with immediate reward. The first problem here is how to explain self-controlled behavior, given that self-controlled responses typically do not produce immediate primary reward. This has led various theorists to argue that we need a secondary reward explanation of self-controlled behavior in choice procedures (e.g., Ainslie, 1975; Killeen, 1982; Mazur, 1995). According to this view, cues present in the delay to the larger reward would acquire secondary reward properties that maintain the choice of this alternative. We largely agree with this notion as a partial explanation of self-controlled behavior. However, stimuli related to an SS alternative could arguably acquire aversive properties as well based on their negative relation with a relatively richer condition (i.e., LL reward delivery). Each choice of the SS reward eliminates the possibility of consuming the large reward for at least the intertrial interval plus the delay to the LL alternative if the individual chooses that alternative in the next trial. If it is true that stimuli related to the SS alternative acquire aversive properties, then the number of SS choices would eventually decrease, resulting in a greater proportion of LL choices (self-control).

There is some evidence to suggest that a negative relationship between a stimulus and a richer reward condition might cause this stimulus to acquire an aversive function, even when it is associated with some amount of reward. For example, Jwaideh and Mulvaney (1976) exposed pigeons to an observing response procedure that assessed the function of stimuli related to “richer” or “poorer” reward densities. In their study, two unsignaled schedules alternated, with one delivering rewards more frequently than the other. During successive conditions, two observing keys were available. Pecking one of them produced stimuli related to both the rich and poor schedules, whereas pecking the other produced only the stimulus related to the rich schedule or only the stimulus related to the poor one. When pecking the observing key produced only the rich stimulus, response rates were higher compared to the key that produced both stimuli. Conversely, when pecking the observing key produced only the poor stimulus, the response rate increased for the key that produced both. This was interpreted as evidence that a stimulus related to a poorer reward density acquires aversive properties because its presentation suppresses, to some extent, the responses that produce it. This finding is important for our approach because it reveals the relativity of secondary reward value. Whereas in other situations the poor stimulus would have acquired rewarding properties (e.g., if it was contrasted to an even poorer density of reward or a nonreward condition), here a stimulus of this kind acquired aversive properties, presumably due to the contrast to a richer schedule.

Following this reasoning, we can attempt to explain the causes of individual differences in impulsive performance by taking into account that such behavior may be due to a discrepancy between the way in which organisms learn positive and negative relations between events. If their behavior is controlled more by positive than negative relations, then they would be more prone to behave impulsively. In contrast, if their behavior is controlled relatively more by negative than positive relations between events, then they would be more likely to behave in a self-controlled manner. Experiments on so-called learning sets have shown that subjects exposed to arrangements in which stimuli are positively associated learn a positive relation with a different set of stimuli more quickly (e.g., Kehoe & Holt, 1984). This suggests that the way in which individuals learn positive relations with a novel set of stimuli depends, in part, on their experience. If the ways in which animals learn positive and negative relations from their environment are independent, then we could expose subjects to different sets of negatively related stimuli and later test their performance in one of the aforementioned procedures. In such a setting, we would expect to find a decrease in impulsive performance compared to naive subjects. However, these ideas remain untested.

Some support for the idea that aversive properties are acquired by stimuli related to impulsive responses comes from studies that manipulated serotonergic systems in nonhuman animals. In a review, Soubrié (1986; as cited in Evenden, 1999) reported that operations (e.g., with drugs, brain lesions) that decrease serotonergic activity generally attenuate punishment-induced suppression of responding, whereas serotonin agonists tend to enhance punishment-induced suppression. Also, numerous studies have shown that reduced levels of serotonin promote impulsive behavior in both omission and choice tasks designed to test impulsivity (Cardinal, 2006; Pattij & Vanderschuren, 2008; but see Miyazaki, Miyazaki, & Doya, 2012, for a review and analysis of mixed results). If indeed the same brain system is related to both punishment control and omission training, one could posit that there is a formal similarity between these two task types or that some kind of aversive control is required to behave optimally in a self-control task.

Limitations and Future Directions

Other Laboratory Procedures

Although we believe that our approach may adequately explain the procedures described previously, we are aware that many other different procedures are used to test impulsivity/self-control in humans and nonhuman animals (some of them mentioned elsewhere in the text), including the Barratt Impulsiveness Scale (Barratt, 1959), the Balloon Analogue Risk Task (Lejuez et al., 2002), the five-choice serial reaction-time task (Robbins, 2002), operant hoarding (e.g., Cole, 1990), and conditioned locomotion (e.g., Winstanley et al., 2004). We chose to apply our idea to a few procedures that seemed more readily amenable to this analysis, but further theoretical development of the approach might attempt to deal with those paradigms or discard them for lack of construct validity.

Reliance on Response Feedback as an Intervening Variable

It is known that there are sensory receptors that allow organisms to detect changes produced by their own responses. It is difficult to know for certain, however, whether response-produced cues acquire stimulus functions in the same way as the exteroceptive signals typically manipulated by researchers. In our account, the conditioned reward properties of intrinsic feedback have the status of an intervening variable (MacCorquodale & Meehl, 1948). Our characterizations of impulsivity rely to a large degree on the assumption that these feedback stimuli can acquire a conditioned reward value by being associated with the responses’ consequences. To our knowledge, there is no solid experimentation supporting this proposition. One of the reasons for this absence of empirical support may be that isolating intrinsic feedback to study its functional relations with responding is inherently difficult (Greenwald, 1970) or, by definition, impossible. It is hard to separate the performance of a response from its mechanical outcomes and, similarly, it is difficult to artificially present to the organisms the feedback that is experienced when the response is performed but without the response actually being performed.

One field that may potentially help to clarify this issue is research on brain–machine interfaces. An outstanding example comes from a study conducted by Suminski, Tkach, Fagg, and Hatsopoulos (2010). In this study, rhesus macaques were implanted with a recording microelectrode array in the motor cortex and were trained in an operant task in which they had to locate a visual cursor in a target site on a screen in order to receive a reward. The cursor’s trajectory was controlled by neural activity, detected as electric spikes through the microelectrode array. In one condition, subjects had to move the cursor aided only by the visual feedback of the cursor moving across the screen. In another condition, an additional source of feedback was added: While subjects’ neural activity moved the cursor, proprioceptive feedback was provided, moving their arms with a robotic exoskeleton in a congruent fashion with cursor movements. This latter condition was observed to improve subjects’ performance in the task, measured as time-to-target and path length. This study can be regarded as a successful demonstration that responding can be isolated from proprioceptive feedback, which is a prototypical example of intrinsic sensory feedback. Moreover, the finding that proprioceptive feedback improved performance when made contingent upon responding supports the idea that stimuli from this frequently disregarded sensory modality may acquire conditioned properties.

In a complementary study, Dadarlat, O’Doherty, and Sabes (2014) implanted rhesus macaques with a stimulating microelectrode array in the primary somatosensory cortex and trained them in a task in which specific hand movements were required in order to obtain a reward. In the first condition, the only available feedback source was a moving dot projected on a screen. Then, artificial patterns of brain stimulation pulses were delivered upon hand movements in addition to visual feedback; these patterns of stimulation encoded both distance and direction of hand movements. In the latter condition, behavioral performance improved and was maintained at high levels of optimality, even as visual feedback was faded out. However, in this study, natural proprioception related to hand movement was left intact. Despite this shortcoming, the results of this study provide valuable insight into how multiple sensory modalities combine synergistically to guide skillful performance, even when one of the added cues is an arbitrary artificial signal. Interestingly, an analogue version of this additive effect has been documented in the conditioning literature. In short, organisms learn more rapidly from a multimodal stimulus than from a stimulus from a single sensory modality (e.g., Kehoe, 1982; Siddall & Marples, 2008). This parallelism may point to a shared process between learning from intrinsic and extrinsic sensory feedback.

Although they are quite compelling, the studies described previously cannot ascertain whether the stimulus function of intrinsic feedback is informative, motivating, rewarding (e.g., Greenwald, 1970), or some combination of these. Proper control conditions (e.g., Sosa, Santos, & Flores, 2011) should be added in order to unambiguously demonstrate that sensory feedback has improved performance through a conditioned reward function. Unfortunately, these kinds of brain–machine interface studies require expensive instrumentation and delicate surgical techniques, which have a prohibitive cost for the typical behavior laboratory. Nevertheless, several models that account for different operant behavior phenomena, such as habitual (Nafcha, Higgins, & Eitam, 2016) and goal-directed behavior (de Wit & Dickinson, 2009), incorporate sensory feedback as a key part of their theorizing. We acknowledge that articulating a conceptual framework founded on this intervening variable may not convince everyone. However, we hope that the information and the arguments provided in this section allow readers to make a more thorough judgment.

Toward an Understanding of Impulsivity/Self-Control Outside the Laboratory

We also acknowledge that the applicability of the approach presented herein seems limited to the behavior of nonhuman animals observed in laboratory settings. A legitimate question would be: What about naturally occurring human behaviors that are considered impulsive, such as the ones described at the beginning of this article? First, we must consider that there are important differences between behavioral measures of impulsivity/self-control from humans and other animals. For example, some authors (Hackenberg, 2005; Richards et al., 2011; Stevens & Stephens, 2010) have pointed out that the differences in reward type (e.g., edible, token, imaginary), the amount of direct experience with rewards, and other motivational factors may drive substantial differences in intertemporal choice across species. Indeed, such differences decrease drastically if the procedural conditions are equated (e.g., Rosati, Stevens, Hare, & Hauser, 2007; see Hackenberg, 2005, for a review).

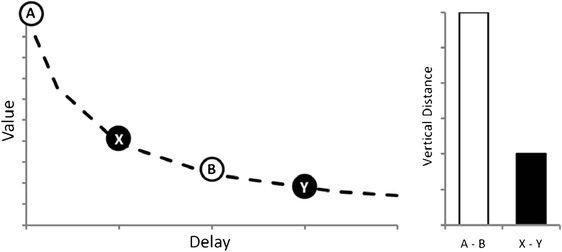

Notably, a robust effect has been reported in the discount rate depending on the type of reward. For instance, Estle, Green, Myerson, and Holt (2007) found that money is discounted less steeply than edible rewards by humans, and similar findings exist in the nonhuman literature using token rewards (e.g., Jackson & Hackenberg, 1996; but see Evans, Beran, Paglieri, & Addessi, 2012). Money has long been regarded as a hallmark example of secondary reward (e.g., Skinner, 1951), and tokens have been conceived as a model of money in nonhuman animals. We can try to explain these findings from a conditioned reinforcement approach, considering some basic assumptions. First, money gets its rewarding value by being exchanged for primary or other secondary rewards. The delay imposed by the exchange period makes money (or tokens) less valuable, even if its receipt is not delayed (see Jackson & Hackenberg, 1996). Then, delayed money would have a discounted value over the already-discounted value of immediate money. The rate of discounting when both alternatives are delayed is less than the rate of discounting when one option is immediate (Green, Myerson, & Macaux, 2005). Therefore, the rate of discounting when primary rewards are used should be greater than the rate of discounting when conditioned rewards are used because in the latter case both options are actually delayed by the exchange period. That could explain why money and token rewards are discounted less steeply (see Fig. 1 for an illustration). This reasoning is congruent with the fact that neuroimaging studies have reported that monetary rewards activate relatively more areas associated with self-control, such as the orbitofrontal cortex, than other types of reinforcers (see Sescousse, Caldú, Segura, & Dreher, 2013).

Fig. 1.

Comparison of value decay from different points of the hyperbolic function for equivalent delays. Points A and B represent immediate and delayed primary rewards, respectively. Points X and Y represent immediate (already discounted by the exchange period) and delayed conditioned rewards (e.g., money or token), respectively. The left panel illustrates the coordinates of each point, whereas the right panel shows the difference in value induced by equivalent delays within different types of rewards. Note that even when the horizontal distance from A to B is equal to that from X to Y, the vertical distance between the former points is about 3 times greater than that between the latter.
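The argument that an exchange period flattens discounting for conditioned rewards can be illustrated numerically. The following is a minimal sketch, assuming the simple hyperbolic form V = A / (1 + kD) that is commonly used in the discounting literature. The function names, the discount parameter `K`, and the delay values are hypothetical choices made for illustration; they are not taken from Fig. 1 or from any of the cited studies.

```python
def hyperbolic_value(amount, delay, k=1.0):
    """Discounted value under the assumed hyperbolic form V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def value_drop(amount, start_delay, added_delay, k=1.0):
    """Value lost when `added_delay` is appended to an existing `start_delay`."""
    sooner = hyperbolic_value(amount, start_delay, k)
    later = hyperbolic_value(amount, start_delay + added_delay, k)
    return sooner - later


# Illustrative (assumed) parameters, in arbitrary units.
AMOUNT = 1.0          # undiscounted reward magnitude
K = 1.0               # discount-rate parameter
ADDED_DELAY = 1.0     # delay separating the sooner and later options
EXCHANGE_DELAY = 1.0  # delay between receiving a token/money and exchanging it

# Primary reward: the sooner option is truly immediate (A -> B).
primary_drop = value_drop(AMOUNT, 0.0, ADDED_DELAY, K)

# Conditioned reward: even the "immediate" option is already delayed
# by the exchange period (X -> Y).
conditioned_drop = value_drop(AMOUNT, EXCHANGE_DELAY, ADDED_DELAY, K)

ratio = primary_drop / conditioned_drop
print(f"primary drop:     {primary_drop:.3f}")
print(f"conditioned drop: {conditioned_drop:.3f}")
print(f"ratio:            {ratio:.2f}")
```

With these assumed values, the same added delay removes more value from the primary reward than from the conditioned reward, because the conditioned reward starts from a flatter region of the curve. Other choices of `K` or `EXCHANGE_DELAY` would change the size of the ratio, but under this form the primary drop remains the larger one for any positive exchange delay.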

In spite of the apparently parallel effects of varying the type of reward, there are large differences in the discount rates of humans and nonhuman animals. These differences are likely underpinned by verbal behavior (Hackenberg, 2005). Language may give rise to qualitatively distinct techniques to cope with delays from those exhibited by nonverbal animals. However, in spite of the large differences in the rate of discounting, there remain similarities in the discount function (i.e., hyperbola-like) in humans and other animals (Richards et al., 2011). This might suggest that even if the human way of discounting were unique, discounting processes across species would at least be analogous (i.e., different ontogenetical pathways leading to similar behavioral patterns; Hackenberg, 2005). If so, understanding impulsive behavior in laboratory settings would provide valuable insights into the field of human behavior.

Because of all these important differences between human and nonhuman animals, we are aware that we cannot uncritically stretch our approach to clearly more complex instances of behavior. However, we can attempt to shed light on some complex environment–behavior relations by examining the factors that distinguish them from behavior in laboratory settings. We have asserted that impulsivity is said to occur when an individual’s behavior is controlled by conditioned rewards at the expense of primary rewards. In all the aforementioned experimental paradigms, the putative secondary rewards acquired their rewarding properties by being paired with the same primary reward that impulsive responses prevent. We believe that this detail is the main feature that differentiates impulsivity in laboratory settings from naturally occurring impulsivity. In the case of DRL procedures, proprioceptive conditioned reinforcers that maintain maladaptive responses presumably acquire their value through their relation to the presentation of food, although in effect they prevent the presentation of a food reward. Conversely, conditioned stimuli (sight, taste, smell, texture, etc.) related to cream-filled donuts do not acquire their value through an association with enjoying good health or with looking good in a swimsuit, nor was the sight of a flying insect paired with achieving an academic goal. Stimuli maintaining impulsive behavior in naturally occurring situations rarely acquire their properties by being paired with the desirable consequence that they prevent; therefore, these situations could be conceived as cross-commodity choices (see Bickel, Landes, et al., 2011b).

Another important aspect to consider is that “maximizing overall gains,” which is a crucial element in our approach, is much easier to operationalize in the laboratory than in daily life. For instance, in laboratory settings, the time window in which behavior is recorded is discretized, whereas in daily life, time could be segmented using widely different scales, which would potentially affect the putative measure of maximization. Another difference is the value of reward, which is controlled to a great extent by deprivation in the animal laboratory, whereas for humans the value of a good may vary considerably depending on several factors, such as budget, mood, or subjective preference. Nonetheless, the core feature of our approach can be identified in the three examples of real-life impulsive behaviors described earlier if we define maximization as achievement of explicitly targeted goals. In all three cases, impulsive behavior (and the stimuli associated with it) prevents (i.e., is negatively associated with) intensely desired goals. Paying attention to intrusive stimuli during a class would impede obtaining a satisfactory grade, eating donuts would preclude healthy blood sugar levels if one has diabetes, and taking drugs would prevent obtaining (or maintaining) a decent job. People who readily learn that such behaviors undermine achieving their goals would therefore begin to avoid any stimuli associated with impulsive action; thus, we would say that they exhibit self-control. But we often see people who, in spite of adverse experiences, continue to behave in a manner that sabotages their long-term goals. In those cases, individuals may be under the control of stimuli that should have lost their rewarding properties because of their negative relation with long-term goals but, for some reason, have not. Of course, additional theoretical and empirical work will help clarify these admittedly speculative assertions.

Fragmentation of Impulsivity Revisited