Abstract

Hallucinations, including auditory verbal hallucinations (AVH), occur both in the healthy population and in psychotic conditions such as schizophrenia (often developing after a prodromal period). In addition, hallucinations can be in-context (consistent with the environment, such as when one hallucinates the end of a sentence that has been repeated many times) or out-of-context (such as the bizarre hallucinations associated with schizophrenia). In previous work, we introduced a model of hallucinations as false (positive) inferences based on a (Markov decision process) formulation of active inference. In this work, we extend this model to include content, in order to disclose the computational mechanisms behind in- and out-of-context hallucinations. In active inference, sensory information is used to disambiguate alternative hypotheses about the causes of sensations. Sensory information is balanced against prior beliefs, and when this balance is tipped in favor of prior beliefs, hallucinations can occur. We show that in-context hallucinations arise when (simulated) subjects cannot use sensory information to correct prior beliefs about hearing a voice, but their beliefs about content (i.e. the sequential order of a sentence) remain accurate. When hallucinating subjects also have inaccurate beliefs about state transitions, out-of-context hallucinations occur; i.e. their hallucinated speech content is disordered. Note that out-of-context hallucinations in this setting do not refer to inference about context, but rather to false perceptual inference that emerges when the confidence in (or precision of) sensory evidence is reduced. Furthermore, subjects with inaccurate beliefs about state transitions but an intact ability to use sensory information do not hallucinate, and are reminiscent of prodromal patients.
This work demonstrates the different computational mechanisms that may underlie the spectrum of hallucinatory experience–from the healthy population to psychotic states.

Introduction

Hallucinations, including auditory verbal hallucinations (AVH), are a key component of the nosology and phenomenology of schizophrenia [1]. Despite decades of phenomenological, neurobiological and computational research, it is still not clear precisely how AVH develop and what mechanism underwrites them. Some investigators have turned to computational modelling to better understand the information processing deficits underlying AVH [2,3,4,5,6]. Models based on Bayesian inference (i.e. inference via a combination of prior beliefs and sensory information) [2,3,6] support the notion that an overweighting of prior beliefs (e.g. that a certain word or sentence will be heard) relative to sensory information is necessary for hallucinations to emerge. We have shown that hallucinations emerge when an overweighting of prior beliefs is coupled with a decrease in the precision of, or confidence in, sensory information [2]. In other words, computational agents hallucinate when they believe they should be hearing something and are unable to use sensory information (e.g. silence) to update this belief. Empirical evidence supports this model. For example, using a hierarchical Bayesian model (the Hierarchical Gaussian Filter [7]) to fit data from a task in which participants were conditioned to hear certain tones, [3] found that patients with hallucinations in the context of schizophrenia, and healthy voice-hearers, placed greater weight on their prior (i.e. conditioned) beliefs about the tone-checkerboard pairing. [8] found that individuals in early psychosis, and healthy individuals with psychosis-like experiences, performed better than controls in a visual task, owing to greater contributions of prior knowledge. Finally, [9] found that healthy participants who were more hallucination-prone were more likely to hallucinate a predictable word (given the semantic context) when the stimulus presented was white noise or an unpredictable word partially masked by white noise (i.e. a stimulus of low precision).

This body of work, which has investigated the genesis of hallucinations resulting from an imbalance between prior beliefs and sensory information, is well-suited to describe ‘conditioned’ hallucinations [3], those hallucinations resulting from a prior that a subject acquires through recent experience, such as a pattern of tones. However, clinical hallucinations tend to have some additional properties: i) they are often ‘voices’ with semantic content and ii) they are often not coherent with the current environmental context. Semantic content can be derived from–or coloured by–a patient’s memory or feelings of fear [10,11]. Conditioned hallucinations are necessarily coherent with some aspect of the environmental context (it is this context from which the prior is inherited). Hallucinations in patients with schizophrenia are often emphatically out of context, however, such as a frightening voice that is heard in an otherwise safe room or hearing the voices of world leaders whom one has never met.

In this paper, we explore the computational mechanisms that could underwrite the difference between hallucinations that are in-context (like a conditioned hallucination) and out-of-context (closer to a true clinical hallucination). Our purpose in doing so is twofold. First, we aim to determine whether the same computational mechanism [2] is appropriate for describing hallucinations in and out of context. This is important for understanding the relevance of empirical work on conditioned hallucinations to the clinical phenomena. Our second aim is to understand, if the underlying mechanism is the same, what sort of additional computational deficits might be in play to give rise to out-of-context hallucinatory percepts. We have previously argued that prior beliefs, when combined with reduced confidence in sensations, could underwrite hallucinations (operationalised as false positive inferences). In the present work, we investigated (through simulation) whether a similar mechanism could influence a subject’s beliefs not just about whether they hear sound, but also about the content of that sound. We hoped that the form of these hallucinations might enable us to draw parallels with known AVH phenomenology, and to show whether common mechanisms could underwrite both AVH and other psychotic symptoms, such as thought disorder. Note that in this treatment, “out-of-context hallucinations” does not refer to the result of aberrant inference about context, but rather to hallucinatory content that is inconsistent with environmental context. In this setup, hallucinations arise from underlying (aberrant) beliefs about state transitions when the confidence in (or precision of) sensory information is reduced. One hypothetical mechanism that could lead to this state of affairs is that beliefs about transition probabilities could depend upon higher-level beliefs about the context we find ourselves in.
This would require additional hidden states in our generative model, at a higher hierarchical level, that effectively select transition matrices, which are inferred to be appropriate in specific contexts. A failure of this contextual inference could result in an aberrant model that is a poor fit for the current sensory context (i.e., likelihood precision). We do not model this contextual inference, but make the simplifying assumption that aberrant beliefs about transition probabilities are always present and are revealed in the absence of precise sensory information.

Methods

To address the questions above, we simulated belief-updating in a simple in silico subject who could make inferences about the content they were hearing and could choose to speak or listen to another agent. To accomplish this, we used a Markov Decision Process (MDP) implementation of active inference [12,13]. We opted for an MDP because it furnishes a generative model of discrete states and time, and the recognition and generation of words are thought to occur in a discrete manner [14]. Working within the active inference framework allows us to treat perception as an active process (i.e., active listening), in which an agent’s decisions (e.g. choosing to speak or listen) impact upon its perceptions [13]. This is a crucial difference from previous models of AVH, which only consider passive perception [4,5]. It matters because priors engendering hallucinations may concern action plans (or policies) that an agent chooses to engage in, where these policies are ill-suited to the current context. For example, if the agent strongly believes it should be listening to another agent speaking, this prior is inappropriate if there is nothing to listen to. In this section, we describe active inference and the MDP formalism, and then specify the implementation of our in silico agent.

Active inference

Under active inference, biological systems use a generative (internal) model to infer the causes of their sensory experiences, and act to gather evidence for their beliefs about those causes. More formally, agents act to minimize their variational free energy [15]: a variational upper bound on the negative log (marginal) likelihood of an observation, under an internal model of the world [16]. This quantity (the negative log likelihood), also known as surprise, surprisal or self-information, is the negative log Bayesian model evidence; as such, minimizing variational free energy is equivalent to maximizing the evidence for one’s model of the world. This is also often termed “self-evidencing”. The free energy can be written as:

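$$F = \mathbb{E}_{Q(\tilde{s})}\left[\ln Q(\tilde{s}) - \ln P(\tilde{o}, \tilde{s})\right] = D_{KL}\left[Q(\tilde{s}) \,\|\, P(\tilde{s} \mid \tilde{o})\right] - \ln P(\tilde{o}) \qquad \text{(Eq 1)}$$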

In this equation, F is the free energy, õ is the sequence of observations over time (note that in our equations, the tilde over variables denotes ‘over time’), s̃ are the unobserved or hidden states that cause sensations, and Q is an approximate probability distribution over s̃. P is the generative model that expresses beliefs about how sensory data are generated; it is the model that attempts to link observations with the hidden states that generate them.
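To make Eq 1 concrete, the short Python sketch below evaluates the free energy for a hypothetical two-state, single-observation model; the prior and likelihood values are invented for illustration and are not taken from our simulations. It checks the decomposition in Eq 1 numerically: when Q equals the exact posterior, the KL term vanishes and F equals the surprise, -ln P(o), while any other Q gives a larger F.

```python
import math

def free_energy(q, prior, lik_o):
    """F = E_Q[ln Q(s) - ln P(o, s)] for a single observed outcome o (Eq 1).

    q      -- approximate posterior Q(s), one entry per hidden state
    prior  -- prior P(s)
    lik_o  -- likelihood P(o | s) of the outcome actually observed
    """
    return sum(qs * (math.log(qs) - math.log(ps * lo))
               for qs, ps, lo in zip(q, prior, lik_o) if qs > 0)

# Hypothetical two-state example (values chosen for illustration only).
prior = [0.5, 0.5]
lik_o = [0.9, 0.2]

evidence = sum(p * l for p, l in zip(prior, lik_o))            # P(o)
exact_post = [p * l / evidence for p, l in zip(prior, lik_o)]  # P(s | o)

F_exact = free_energy(exact_post, prior, lik_o)   # KL term is zero here
F_rough = free_energy([0.5, 0.5], prior, lik_o)   # a cruder approximate posterior

print(F_exact, -math.log(evidence))  # the two agree: F equals surprise
print(F_rough > F_exact)             # True: F upper-bounds surprise
```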

Markov decision process and generative model

MDPs allow us to model the beliefs of agents who must navigate environments in which they have control over some, but not all, variables. These agents must infer two types of hidden variables: hidden states and policies. Hidden variables are those variables that cannot be directly observed. The hidden states sτ represent hypotheses about the causes of sensations. In other words, these hidden states represent states of affairs in the world that generate the sensations we experience; for example, a conversational partner. For our agent, there are three sorts of hidden states: listening (or not); speaking (or not); and lexical content (i.e., the words that the agent may say or listen to). These hidden states have been constructed such that the lexical content does not depend directly on whether the agent is listening or speaking. Intuitively, this kind of structure can be thought of as analogous to the factorization [17] of visual processing into ‘what’ and ‘where’ streams [18]. Just as an object retains its identity no matter where it appears in space, the content of speech is the same regardless of who is speaking.

This generative model is closely analogous to that used in previous treatments of pre-linguistic (continuous) auditory processing [5]. These simulations relied upon the generation and perception of synthetic birdsong. Crucially, this birdsong had a pre-defined trajectory that depended upon internally generated dynamics. Similarly, our simulations depend upon an internal conversational trajectory; i.e., a narrative. This comprises the six words in the short song “It’s a small world after all!” but does not specify which word is spoken by which agent. The hidden states are inferred from the sensory observations oτ (where τ indicates the time-step). Our agent has access to two outcome modalities: audition and proprioception. The auditory outcome includes each of the six words in the song, as well as a seventh ‘silent’ outcome, which is generated when the agent is neither listening nor speaking.

As previously [2], our subject’s generative model is equipped with beliefs about the probability of a sensory observation, given a hidden state (i.e. the probability of observing some data, given a state). These probabilities can be expressed as a likelihood matrix with elements P(oτ = i| sτ = j) = Aij. For the proprioceptive modality, this was simply an identity matrix (mapping speech to its proprioceptive consequences). For the auditory modality the likelihood depended on whether the agent believed it was listening or speaking (in which case it did not consider silence to be possible and had non-zero probabilities for the six content states, see Fig 1) or if it believed no sound was present (in which case it assigned a probability of 1 to the silent outcome). The resulting likelihood matrix was then passed through the following equation:

$$\bar{A}^{a} = \sigma\left(\zeta \cdot \ln A^{a}\right) \qquad \text{(Eq 2)}$$

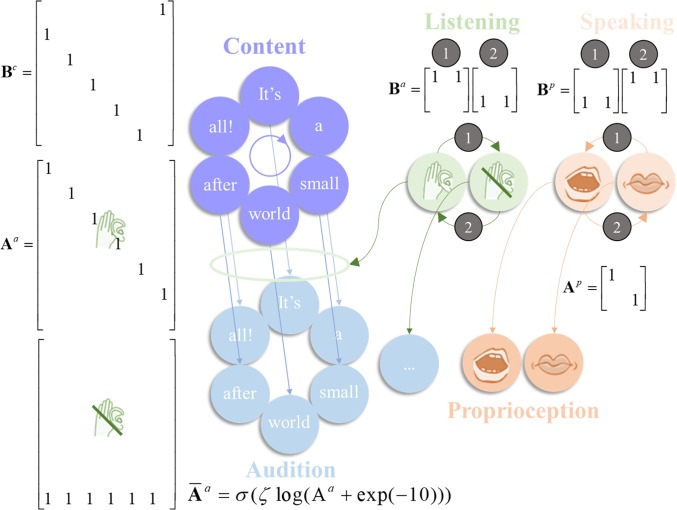

Fig 1. The generative model.

This schematic illustrates the specification of the (partially observed Markov decision process) generative model used in the simulations. There are three sorts of hidden state: those that determine the content of speech (i.e. the words in the song), whether the agent is currently listening to anything, and whether the agent is speaking. The first deterministically transitions from each word to the next in the song, and repeats this sequence indefinitely, as shown in the B-matrix for this content state, Bc. The latter two hidden state factors depend upon the agent’s choices, with actions shown in small numbered circles. The policies are defined such that, when action 1 (Speaking) is taken for the listening state (leading to ‘I am not listening’), action 1 is also taken for the speaking state (leading to ‘I am speaking’), and similarly for action 2 (Listening). There is no such constraint in the generative process, so this belief can be violated. When listening, the (lexical) content states map to auditory outcomes representing the same words. This is shown in the first of the auditory A-matrices, Aa, for which columns represent content states and rows represent content outcomes. The second (lower) version of this matrix is employed when not listening to anything and gives rise to a ‘silent’ auditory outcome (7th row). There is additionally a proprioceptive outcome modality, op, that depends only upon whether the agent is speaking. The equation at the bottom of this Figure shows how we equip the auditory likelihood with a precision (inverse temperature) parameter ζ. The bar above the A indicates that the matrix has been normalised, following precision modulation.

Here, σ is the softmax function, which normalizes the matrix. The softmax function takes a vector of real numbers and transforms it into a probability distribution that sums to one [19]; applied to each column of the likelihood matrix, it ensures that each column is a distribution over outcomes. The bar notation means that Aa has been normalised (the superscript denotes the auditory modality). We have added a very small amount of uncertainty to the elements of Aa, via the addition of exp(-10) to each element, in order to model inherent uncertainty in perceptual processes. ζ is the likelihood precision (when the outcomes are sensory samples, the likelihood precision plays the role of sensory precision in predictive coding formulations). As ζ increases, this mapping tends towards an identity matrix (that is, the mapping between causes and sensations becomes more deterministic). As it decreases, the probabilities become close to uniform and the mapping becomes very uncertain: i.e., even if one knew the hidden state of the world, all outcomes would be equally likely. In other words, modulating the likelihood precision modifies the fidelity of the mapping between states and outcomes; the lower the precision, the ‘noisier’, or more stochastic, the mapping becomes. In continuous state space formulations of active inference, optimising the equivalent quantity is synonymous with the process of directing attention; by attending to a sensory channel, an agent increases the precision of information it receives from that channel [20]. We have previously shown that synthetic agents tend to ‘disregard’ low-precision mappings [13] (cf. the ‘Streetlight effect’ [21], which states that the sensible place to look for information is where it is most precise; i.e. under a streetlamp in an otherwise dark street).
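The precision-weighting of the auditory likelihood can be sketched in a few lines of Python. This is an illustrative reimplementation, not the code used for our simulations: the matrix layout (rows are the six words plus silence, columns are content states), the exp(-10) offset and the column-wise softmax of ζ·ln A follow the description above, while the two ζ values are arbitrary choices.

```python
import math

WORDS = ["It's", "a", "small", "world", "after", "all!"]
SILENCE = len(WORDS)  # row index of the seventh, 'silent' auditory outcome

def auditory_likelihood(listening):
    """Unnormalised A^a: rows are outcomes (6 words + silence), columns are content states."""
    A = [[0.0] * len(WORDS) for _ in range(len(WORDS) + 1)]
    for s in range(len(WORDS)):
        if listening:
            A[s][s] = 1.0        # hear the word given by the content state
        else:
            A[SILENCE][s] = 1.0  # nothing to hear: silent outcome
    return A

def apply_precision(A, zeta, eps=math.exp(-10)):
    """Abar = softmax(zeta * ln(A + eps)), normalising each column over outcomes."""
    n_out, n_states = len(A), len(A[0])
    Abar = [[0.0] * n_states for _ in range(n_out)]
    for s in range(n_states):
        logits = [zeta * math.log(A[o][s] + eps) for o in range(n_out)]
        m = max(logits)                          # subtract the max for numerical stability
        w = [math.exp(x - m) for x in logits]
        z = sum(w)
        for o in range(n_out):
            Abar[o][s] = w[o] / z
    return Abar

A = auditory_likelihood(listening=True)
for zeta in (2.0, 0.1):
    Abar = apply_precision(A, zeta)
    # P(hear "It's" | content "It's") and P(silence | content "It's")
    print(zeta, round(Abar[0][0], 3), round(Abar[SILENCE][0], 3))
```

With ζ = 2 the listening matrix is effectively an identity mapping, whereas with ζ = 0.1 the correct word receives only about a third of the probability mass and every other outcome, including silence, remains plausible.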

Policies are the second type of hidden variable. Policies are sequences of actions (e.g., listening or speaking) that an agent can employ. Crucially, the currently pursued policy must be inferred from sensory data. This is known as planning as inference [22]. For our agent, policies are sequences of actions that generate a conversational exchange. Specifically, our agent has three allowable policies: always listen, always speak, or alternate between speaking and listening. These three policies were selected because they represent a reasonable a priori ‘toolbox’ for the agent: it can believe it is always listening, always speaking, or taking turns (speaking and listening). Because the agent must infer the policy it is following at each time step, this produces an accommodating agent that should be able to, for example, listen or speak and then engage in turn-taking. At any given time, the policies are mutually exclusive, and the actions of listening and speaking within each policy are mutually exclusive. In other words, our subject believes she is listening or speaking, but not doing both at the same time. This is a simple approximation of sensory attenuation; namely, the attenuation of the precision of auditory information while speaking [23, 24, 25]. As explained below, we deliberately do not model the failure of sensory attenuation, in order to focus on cognitive processes (i.e., belief updating) deeper or higher up in the hierarchy. The hidden states change over time according to a probability transition matrix, conditioned upon the previous state and the current policy, π. This transition matrix is defined as B(u)ij = P(sτ+1 = i | sτ = j, u = π(τ)), where τ represents a timestep. The policy influences the probability that a state at a given time transitions to a new state (e.g. if I choose to listen, I am more likely to end up in the ‘listening’ state at the next time-step).
Heuristically, we consider ‘listening’ as an action to be a composite of mental and physical actions: all the things one might do to prepare to listen when expecting to hear someone speak (e.g., pay attention, turn your head to hear better, etc.) [26]. While listening and speaking are actions, and are therefore selected as a function of the inferred policy, the lexical content of the song (from beginning to end) is not determined by the policy but by the transition matrix Bc. In other words, the order of the words in the song (i.e. the transitions between different content states) is a prior stored in Bc (the superscript denotes ‘content’), with the transition probabilities encoding some level of latent narrative (e.g., a song). The associated transition matrix could take one of two forms, as can be seen in Fig 1: a veridical form, where the song progresses in a standard fashion, starting at “It’s” and ending at “all!”; or an anomalous form, which provides a ‘private’ narrative that is not shared with other people. This alternative perceptual hypothesis will form the basis of the anomalous inference we associate with hallucinations. It is important to note that the specific anomalies induced in these simulations are not intended as a literal account of schizophrenia; rather, the point we attempt to make is that an account of psychotic hallucinations requires a disruption of internally generated dynamics to account for the often bizarre narratives characteristic of such hallucinations. This does not necessarily influence whether a hallucination takes place, but profoundly alters what is hallucinated.
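The content factor can be sketched as follows. This Python fragment builds a deterministic, cyclic Bc from a word order and rolls a one-hot belief forward through it. The veridical order is the song itself; the anomalous order is a hypothetical permutation chosen purely for illustration and is not the anomalous matrix used in our simulations (Fig 5).

```python
WORDS = ["It's", "a", "small", "world", "after", "all!"]

def transition_matrix(order):
    """B^c[i][j] = P(next word = i | current word = j) for a deterministic, repeating narrative."""
    n = len(WORDS)
    B = [[0.0] * n for _ in range(n)]
    for k, word in enumerate(order):
        j = WORDS.index(word)
        i = WORDS.index(order[(k + 1) % n])  # wrap around: the song repeats indefinitely
        B[i][j] = 1.0
    return B

def predicted_sequence(B, start="It's", steps=6):
    """Roll a one-hot belief forward with B^c and read off the most probable word at each step."""
    belief = [1.0 if w == start else 0.0 for w in WORDS]
    seq = []
    for _ in range(steps):
        seq.append(WORDS[max(range(len(belief)), key=belief.__getitem__)])
        belief = [sum(B[i][j] * belief[j] for j in range(len(WORDS))) for i in range(len(WORDS))]
    return seq

B_veridical = transition_matrix(WORDS)
# Hypothetical 'private' narrative, for illustration only.
B_anomalous = transition_matrix(["It's", "after", "all!", "a", "world", "small"])

print(predicted_sequence(B_veridical))  # the song in its usual order
print(predicted_sequence(B_anomalous))  # the same six words in the private order
```

Because Bc is not under the agent's control, the same matrix applies under every policy; only the listening and speaking factors have policy-dependent transitions.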

The subject was paired with another agent (i.e., a generative process) that determined the sensory input experienced: a partner that produces alternating sounds and silences, always following the normal content of the song (i.e. it produces every second word, beginning with ‘It’s’). Whenever our subject chose to speak, a sound was generated. Our subject could also choose to listen to, or sing with, their conversational partner. In this way, our subject interacted with the (simple) generative process, generating sequences of sounds and silences that depended on both the environment and its own actions. We chose a dialogic setup to provide the subject with context, which allows us to demonstrate out-of-context hallucinations. In addition, a dialogic structure allowed us to more closely mimic hallucinatory phenomena, which can take the form of dialogue [10].

The subject began with a prior probability distribution over possible initial hidden states, Di = P(s1 = i). In our simulations, our subject always listened at the first timestep, as if they were ‘getting their cue’. The subject was also equipped with a probability distribution over possible outcomes, which sets the prior preferences, Cτi = P(oτ = i). These prior preferences influence policy choices, because agents select policies that are likely to lead to preferred outcomes. Here, we used flat priors over outcomes, ensuring no outcome was preferred relative to any other. This means that action selection (i.e. talking and listening) was driven purely by epistemic affordance: the imperative to resolve uncertainty about the world.

To make active inference tractable, we adopt a mean-field approximation [17]:

$$Q(\tilde{s}, \pi) = Q(\pi) \prod_{\tau=1}^{T} Q(s_\tau \mid \pi) \qquad \text{(Eq 3)}$$

Here Q represents approximate posterior beliefs about hidden variables. This formulation (which simply says that approximate posterior beliefs about hidden variables factorise into the approximate probability over policies and the product, over time steps, of the approximate probabilities of states given policies) allows for independent optimization of each factor on the right-hand side of Eq 3. The expression of the free energy under a given policy is:

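$$F(\pi) = \mathbb{E}_{Q(\tilde{s} \mid \pi)}\left[\ln Q(\tilde{s} \mid \pi) - \ln P(\tilde{o}, \tilde{s} \mid \pi)\right] \qquad \text{(Eq 4)}$$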

Free energy scores the evidence afforded to a model by an observation. This poses a problem when selecting policies, as these should be chosen to reduce future–not current or past–free energy (and we do not yet have access to future observations). In this setting, policies are treated as alternative models, and are chosen via Bayesian model selection, where the policy selected is the one that engenders the lowest expected free energy:

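$$G(\pi) = \sum_{\tau} G(\pi, \tau), \qquad G(\pi, \tau) = -\mathbb{E}_{Q(o_\tau, s_\tau \mid \pi)}\left[\ln Q(s_\tau \mid o_\tau, \pi) - \ln Q(s_\tau \mid \pi)\right] - \mathbb{E}_{Q(o_\tau \mid \pi)}\left[\ln P(o_\tau)\right] \qquad \text{(Eq 5)}$$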

Here G(π) is the expected free energy under a given policy; it allows for predictions about what is likely to happen if one pursues a given policy, that is, how much uncertainty will be resolved under the policy (and, when prior preferences are used, how likely the policy is to fulfil them). Note that the free energy F describes the surprise, or negative log Bayesian model evidence, at a given moment, whereas the expected free energy G is an expectation of the free energy in the future, given a particular policy an agent may pursue. It allows an agent to project itself into the future and evaluate the consequences, in terms of free energy minimization, of pursuing different policies. Simply put, free energy is used to optimize beliefs in relation to current data, while expected free energy is used to select policies that optimize data assimilation, in relation to current beliefs.
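As a rough illustration of the expected free energy, the Python sketch below computes G for a single future time step under two simplified, hypothetical action outcomes: listening (content states map to words) and not listening (every content state maps to silence). It assumes flat prior preferences, as in our simulations, so the extrinsic term is the same constant for both; the epistemic term is computed as the mutual information between states and outcomes, H[Q(o)] - E_Q(s)[H[P(o|s)]], which is the expected information gain about hidden states. The factorised state space of the full model is deliberately ignored, and the ζ values are arbitrary.

```python
import math

N_WORDS = 6
SILENCE = N_WORDS  # index of the seventh, 'silent' outcome

def likelihood(listening, zeta, eps=math.exp(-10)):
    """Return cols[s][o] = P(o | s) after precision-weighting with softmax(zeta * ln(A + eps))."""
    cols = []
    for s in range(N_WORDS):
        hit = s if listening else SILENCE
        logits = [zeta * math.log((1.0 if o == hit else 0.0) + eps) for o in range(N_WORDS + 1)]
        m = max(logits)
        w = [math.exp(x - m) for x in logits]
        z = sum(w)
        cols.append([x / z for x in w])
    return cols

def entropy(p):
    return -sum(x * math.log(x) for x in p if x > 0)

def expected_free_energy(cols, q_s, log_c):
    """G(pi, tau) = -(epistemic value) - (extrinsic value) for one future time step."""
    n_out = len(log_c)
    q_o = [sum(q_s[s] * cols[s][o] for s in range(len(q_s))) for o in range(n_out)]
    epistemic = entropy(q_o) - sum(q_s[s] * entropy(cols[s]) for s in range(len(q_s)))
    extrinsic = sum(q_o[o] * log_c[o] for o in range(n_out))
    return -epistemic - extrinsic

q_s = [1.0 / N_WORDS] * N_WORDS                          # uncertain about the upcoming word
log_c = [math.log(1.0 / (N_WORDS + 1))] * (N_WORDS + 1)  # flat preferences: ln P(o)

for zeta in (2.0, 0.1):
    g_listen = expected_free_energy(likelihood(True, zeta), q_s, log_c)
    g_silent = expected_free_energy(likelihood(False, zeta), q_s, log_c)
    print(zeta, round(g_listen, 3), round(g_silent, 3))
```

In this toy setting, listening has a much lower G than not listening under high precision, because it promises to resolve uncertainty about the upcoming word; under low precision, its epistemic advantage almost disappears.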

Formally, the distribution over policies can be expressed as

$$Q(\pi) = \sigma\left(-F(\pi) - \gamma \cdot G(\pi)\right) \qquad \text{(Eq 6)}$$

This equation demonstrates how policy selection depends on the minimization of expected free energy. This is equivalent to saying that agents choose policies that are most likely to resolve uncertainty. Here, the expected free energy under alternative policies is multiplied by a scalar, γ, and normalized via a softmax function. This scalar plays the role of an inverse temperature parameter that signifies the precision, or confidence, the agent has about its beliefs about policies. This confidence affects the relative weighting of sensory information when the agent tries to infer its policy: the more confident an agent is about its policies, the less it relies upon sensory information to infer its course of action. The γ parameter has previously been linked to the actions of midbrain dopamine [27]. We have previously shown that an increased prior precision over policies can facilitate hallucinations [2], but in this paper we do not modulate this policy precision.
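The role of γ can be illustrated with hypothetical numbers. The Python sketch below assumes the posterior over policies takes the form Q(π) = σ(-F(π) - γ·G(π)), with the current evidence F(π) favouring one policy and the expected free energy G(π) favouring another; all values are invented for illustration. Raising γ shifts the posterior towards the policy favoured by G, i.e. the agent relies less on sensory evidence when inferring what it is doing.

```python
import math

def softmax(x):
    m = max(x)
    w = [math.exp(v - m) for v in x]
    z = sum(w)
    return [v / z for v in w]

def policy_posterior(F, G, gamma):
    """Assumed form Q(pi) = softmax(-F(pi) - gamma * G(pi))."""
    return softmax([-f - gamma * g for f, g in zip(F, G)])

# Hypothetical values for (always listen, always speak, alternate).
F = [2.0, 0.5, 1.0]   # current evidence favours 'always speak'
G = [0.2, 1.5, 0.8]   # expected free energy favours 'always listen'

for gamma in (0.5, 4.0):
    print(gamma, [round(q, 3) for q in policy_posterior(F, G, gamma)])
```

With γ = 0.5 the most probable policy is ‘always speak’ (the one supported by current evidence); with γ = 4 it becomes ‘always listen’.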

In the above, posterior beliefs about states are conditioned upon the policy pursued, which is consistent with the interpretation of planning as model selection. Through Bayesian model averaging, we can marginalize out this dependence to recover a belief about hidden states, irrespective of beliefs about the policy pursued:

$$Q(s_\tau) = \sum_{\pi} Q(\pi)\, Q(s_\tau \mid \pi) \qquad \text{(Eq 7)}$$

This equation is crucial because it means that beliefs about states (to the left of the equation) depend upon beliefs about the policy. The terms on the right correspond to the approximate probability distribution over policies, and over states, given policies. This fundamental observation further ties perception to action–and frames the development of hallucinations as an enactive process, which was the focus of our previous work [2]. In that work, we showed that policy spaces–prior beliefs about action sequences–which did not match sequences of events in the environment led to hallucinations when external information could not be used to correct perceptions (because of low likelihood precision). In this paper, we use anomalous policies to characterise perceptual inference (about lexical content) during hallucinations.
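The Bayesian model average is simply a weighted sum over policies. The Python sketch below uses hypothetical beliefs about the listening factor at a single time step: because the policies that call for listening are believed to be likely, the averaged belief favours ‘listening’ before any sensory evidence has been taken into account, which is the route by which beliefs about policies can shape perception.

```python
def bayesian_model_average(q_pi, q_s_given_pi):
    """Q(s_tau) = sum over pi of Q(pi) * Q(s_tau | pi)."""
    n_states = len(q_s_given_pi[0])
    return [sum(qp * qs[i] for qp, qs in zip(q_pi, q_s_given_pi)) for i in range(n_states)]

# Hypothetical beliefs about the listening factor, ('listening', 'not listening'),
# at one time step under each policy.
q_s_given_pi = [
    [0.95, 0.05],  # always listen
    [0.05, 0.95],  # always speak
    [0.95, 0.05],  # alternate (assumed to call for listening at this step)
]
q_pi = [0.6, 0.1, 0.3]  # hypothetical posterior over policies

print(bayesian_model_average(q_pi, q_s_given_pi))  # approximately [0.86, 0.14]
```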

The transition probabilities from one time step to the next depend upon which policy is selected. In other words, each policy may be seen as an alternative hypothesis for the probabilistic transitions over time. For those hidden factors over which an agent has no control (here, the ‘content’ factor), there is only one possible transition probability matrix. Note that the factorization we have employed means we do not specify probabilities for transitions from speaking to listening, or vice versa. Instead, there are transitions from speaking to not speaking, and from not listening to listening. The only thing that precludes simultaneous speaking and listening is the sequence of transitions implied by the available policies.
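The following Python sketch illustrates this point under stated assumptions. The listening and speaking factors each have their own deterministic, control-dependent matrices B(u), with action 1 (Speaking) and action 2 (Listening) as in Fig 1; nothing in these matrices forbids a ‘listening and speaking’ combination, yet rolling out each of the three policies never produces one. The exact action sequences are hypothetical: we assume the alternating policy starts with ‘Listen’ (consistent with the Fig 5 caption) and that the agent starts out listening and not speaking.

```python
SPEAK, LISTEN = 1, 2   # action labels as in Fig 1

# Hypothetical encoding of the three policies over five transitions (six time steps).
POLICIES = {
    "always listen": [LISTEN] * 5,
    "always speak": [SPEAK] * 5,
    "alternate": [LISTEN, SPEAK, LISTEN, SPEAK, LISTEN],
}

# B(u)[i][j] = P(s_{t+1} = i | s_t = j, u), one matrix per factor and action.
# Listening factor: 0 = 'listening', 1 = 'not listening'.
# Speaking factor:  0 = 'speaking',  1 = 'not speaking'.
B_LISTENING = {LISTEN: [[1, 1], [0, 0]], SPEAK: [[0, 0], [1, 1]]}
B_SPEAKING = {SPEAK: [[1, 1], [0, 0]], LISTEN: [[0, 0], [1, 1]]}

def step(B, belief):
    """Propagate a belief vector one time step: s_{t+1} = B s_t."""
    return [sum(B[i][j] * belief[j] for j in range(len(belief))) for i in range(len(B))]

def roll_out(policy, listening0=(1.0, 0.0), speaking0=(0.0, 1.0)):
    """Predicted (P(listening), P(speaking)) at each time step under one policy."""
    lis, spk = list(listening0), list(speaking0)
    trajectory = [(lis[0], spk[0])]
    for u in POLICIES[policy]:
        lis, spk = step(B_LISTENING[u], lis), step(B_SPEAKING[u], spk)
        trajectory.append((lis[0], spk[0]))
    return trajectory

for name in POLICIES:
    print(name, roll_out(name))
```

Keeping the two factors separate mirrors the factorisation described above; the exclusivity of listening and speaking lives entirely in the policy definitions, not in the transition matrices.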

This model simulated the beliefs (in terms of both perception and action) of an in silico subject. This licenses us to make an important theoretical point; namely, lesions that disrupt the balance between confidence in prior beliefs and sensory evidence can cause false positive inference, but disordered hallucinatory content additionally implies aberrant internal models of dynamics or narrative. As such, our simulations are not meant to directly simulate empirical data. However, we hope to test the principles disclosed by these simulations, using empirical behavioral and self-report data.

Hypotheses: The generation of in-context and out-of-context hallucinations

To summarise, we have an agent with policies for active listening, who is tasked with singing a duet with another agent. Our agent can be equipped with a veridical narrative (i.e., transition matrix), namely the belief that the song will always proceed as “It’s a small world after all!”. Alternatively, it can be equipped with an anomalous transition matrix, under which it believes that the words should be sung in a different order. A plausible mechanism for switching between transition matrices could call on a higher (contextual) level of the generative model, representing different beliefs about lexical transitions. When likelihood or sensory precision is low, an anomalous matrix may be selected by default. It is interesting to consider that this default matrix may in fact be optimal in some situations but not in others and, as such, may precipitate out-of-context hallucinations when changes in context are improperly inferred. An alternative hypothesis is that pathology may directly disrupt the transition probabilities, perhaps through dysconnections in the connectivity that encodes the implicit conditional distributions [28, 29]. This view is motivated by the fact that any change to the transition probability matrix (acquired under ideal Bayesian observer assumptions) will provide an impoverished account of environmental dynamics.

Crucially, the presence of the other agent–who always sings the song in the standard order–provides a context for our agent. Our objective was to produce in and out-of-context hallucinations, and to see which factors disambiguate between the two.

From our previous work, we hypothesized that our agent would hallucinate when the likelihood precision was reduced relative to prior beliefs. We further hypothesized that, when this occurred with a veridical transition matrix, the agent would hallucinate perceptual content consistent with the context. This is because the agent’s beliefs about lexical content are entirely consistent with the narrative being followed in the environment. In contrast, we hypothesized that the agent would hallucinate out of context when equipped with the anomalous transition matrix. This is because, when the likelihood precision is low, our agent is unable to use sensory information to correct its anomalous beliefs regarding lexical transitions. For the same reason, we expected the agent with the anomalous transition matrix to infer the standard word order when likelihood (i.e., sensory) precision was high, because it would be able to use the information present in the environment to correct its biased prior beliefs about content state transitions. In short, with this relatively straightforward setup, we hoped to demonstrate a dissociation in terms of context-sensitive hallucinations and active listening.
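These hypotheses can be illustrated with a deliberately reduced, single-time-step sketch in Python. It is not our simulation code: it assumes that a ‘Listen’ action induces a prior probability of 0.9 of listening, that Bc has already produced a content prior peaked (0.9) on the predicted word, and it uses two arbitrary precision values. The predicted words (“a” for the veridical narrative; “after” and “all!” for the anomalous one) are chosen to mirror the Fig 5 caption; `peaked` and `summarise` are hypothetical helpers.

```python
import math

WORDS = ["It's", "a", "small", "world", "after", "all!"]
SILENCE = len(WORDS)  # index of the seventh, 'silent' auditory outcome

def likelihood_column(hit, zeta, eps=math.exp(-10)):
    """P(o | s) for one hidden state: softmax(zeta * ln(A + eps)) over the 7 outcomes."""
    logits = [zeta * math.log((1.0 if o == hit else 0.0) + eps) for o in range(SILENCE + 1)]
    m = max(logits)
    w = [math.exp(x - m) for x in logits]
    z = sum(w)
    return [x / z for x in w]

def peaked(word, mass=0.9):
    """Hypothetical content prior putting `mass` on the word predicted by B^c."""
    rest = (1.0 - mass) / (len(WORDS) - 1)
    return [mass if w == word else rest for w in WORDS]

def posterior_after(obs, p_listen, content_prior, zeta):
    """Joint posterior over (listening?, word) after a single auditory outcome `obs`."""
    joint = {}
    for listening in (True, False):
        for s, p_s in enumerate(content_prior):
            hit = s if listening else SILENCE  # not listening -> silent outcome
            prior = (p_listen if listening else 1.0 - p_listen) * p_s
            joint[(listening, s)] = prior * likelihood_column(hit, zeta)[obs]
    z = sum(joint.values())
    return {k: v / z for k, v in joint.items()}

def summarise(post):
    """Return (P(listening), percept): the most probable heard word, or '(silence)'."""
    p_listen = sum(v for (listening, _), v in post.items() if listening)
    best = max(range(len(WORDS)), key=lambda s: post[(True, s)])
    return round(p_listen, 3), (WORDS[best] if p_listen > 0.5 else "(silence)")

scenarios = [
    # (label, observed outcome, content prior predicted by B^c)
    ("t2 silence, veridical B^c", SILENCE, peaked("a")),
    ("t2 silence, anomalous B^c", SILENCE, peaked("after")),
    ("t3 partner sings 'small', anomalous B^c", WORDS.index("small"), peaked("all!")),
]
for zeta in (2.0, 0.1):  # hypothetical 'normal' and 'reduced' likelihood precision
    for label, obs, prior in scenarios:
        print(zeta, label, summarise(posterior_after(obs, 0.9, prior, zeta)))
```

In this toy setting, high precision lets silence overrule the belief in listening, and lets the partner's “small” override an anomalous prediction. With low precision, the agent still believes it is listening during silence and hears whatever its narrative predicts: in context with the veridical Bc, out of context with the anomalous one.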

Results

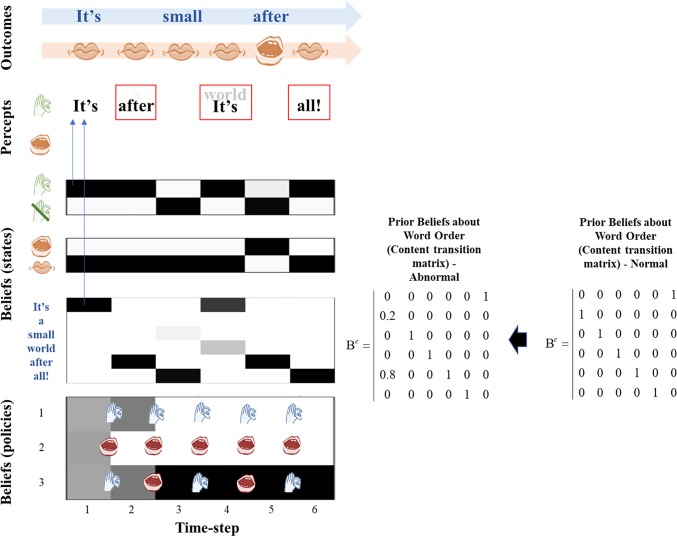

Here, we present the results of our simulations. These are presented in Figs 2–5 and represent the results of four in silico ‘experiments’. In Experiment 1, we showcase a subject that demonstrates normal behavior and non-hallucinatory beliefs. This subject is equipped with a veridical transition matrix and as such believes that the song will unfold in the usual way. This is reflected in the purple ring of circles (and its adjacent matrix) in Fig 1. The agent is also equipped with a normal likelihood precision, and as such can accurately use sensory information to infer hidden states. For example, it accurately infers the silent state at the second and fourth timesteps. At other times, it chooses to sing or listen to the other agent singing, but in all cases its beliefs about the lexical narrative map perfectly onto auditory outcomes.

Fig 2. Normal state.

This figure depicts the normal state of the agent, without any induced pathology. The figure has four parts: Outcomes, Percepts, Beliefs (about States) and Beliefs (about Policies). The figure represents the simulation’s evolution over six time-steps, from left to right. Beliefs are expressed probabilistically: as indicated by the colorbar, grayscale shading indicates the strength (probability) of a given belief the agent holds, with darker shading representing stronger beliefs. Outcomes describe the data presented to the agent. The top blue line contains information from the auditory modality, with each word that is actually spoken by either agent written out, and empty space representing silence. The bottom line represents information from the proprioceptive modality, representing the sensory consequences of the agent’s executed action: either speaking or not speaking. The ‘percepts’ section of the figure provides a qualitative summary of the ‘belief’ plots below. Together, these represent the inferred state of the world. This includes the percept of the word, or silence, regardless of which agent it believes produced the word, and whether this word is heard or spoken (or both). These percepts are illustrated quantitatively in the belief plots, which separate into beliefs about states and about policies. The former comprise beliefs about whether the agent is in a ‘listening’ state or a ‘not listening’ state, the agent’s belief about whether or not it is speaking, and the agent’s beliefs about content, with the six words of the song being represented. Note that this represents the agent’s posterior belief about word order; it does not necessarily represent the agent’s prior belief about word order, which is represented beside the figure in the form of a transition matrix. Beliefs about policies are expressed similarly, with each row of the plot describing one of the three policies.
The top row is “always listen”, the middle row is “always speak”, and the bottom row is “alternate speaking and listening”. The actions called for by each policy at each time step is illustrated by the icons overlaid on the matrix. Note that these icons are placed at transitions between time steps, which is when actions are selected. The same structure outlined here will apply to each subsequent figure. In this figure the agent hears words in the order of the song and does not experience any hallucinations. The sequence of events in this figure is as follows: (1) The agent is hears ‘It’s’ in the absence of proprioceptive input, and infers that it was listening to this word. (2) The agent samples an action from the most likely policy given the previous time-step, which is ‘Listening’. This leads to no proprioceptive input, but also no auditory input (because the other agent sings only on timesteps 1, 3 and 5), so it infers its state is ‘I am not listening’. It is not speaking so it also infers ‘I am not speaking’. Given these states, its posterior beliefs about its policy are equivocal between the listening and speaking policies (all are unlikely). (3) The agent samples an action from policy 2 or 3 (both are ‘Speaking’). It speaks and infers ‘I am speaking’ because its mouth is moving. It also hears a sound, so it infers ‘I am listening’. (4) At the start of this timestep it is equivocal between policies 2 and 3 as both were speaking at the last timestep. It samples an action–‘listen’–so its mouth does not move, and it correctly infers ‘I am not speaking’. However, it hears only silence, so it also infers its state is ‘I am not listening’. (5&6) The agent samples from the most probable policy ‘Speaking’. It speaks, its mouth moves, and so it infers its state to be ‘I am speaking’. It also hears sounds at each time point which make it infer ‘I am listening’ with the correct content.

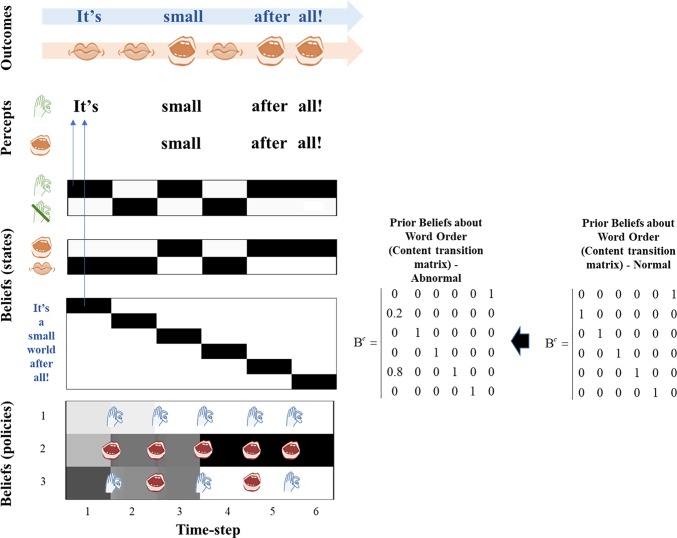

Fig 5. Out-of-context hallucinations.

In this figure, the agent has been equipped with an anomalous transition matrix as well as a reduced likelihood precision. The anomalous transition matrix is presented alongside the figure, for comparison with the normal matrix employed in Experiment 2. The agent again experiences hallucinations at the 2nd, 4th, and 6th time steps, but these are ‘out of context’ (i.e., out of the usual order of the song) at the 2nd and 4th timesteps. Here the agent hears “after” at the second time step instead of “a”, and “It’s” at the 4th time step instead of “world”. It also fails to hear the other agent (the generative process) at the 3rd time step, and believes “all!” should be present instead of “small”. It fails to hear its own voice at the 5th timestep. Lighter colored font indicates lower certainty that a word is being heard, and darker font higher certainty. As an example of how an out-of-context hallucination emerges: going into the 2nd timestep, the agent samples a “listening” action from policy 1 or 3. This induces a prior belief that it will be hearing something at timestep 2, even though no sound is present at this time. The low likelihood precision leads it to hallucinate, as it cannot use the silence to correct this prior belief. Because the anomalous transition matrix is in play, the agent hears “after” instead of “a”: it is unable to use the lexical cues to predict the correct word order. The critical point of this figure is that anomalous posterior beliefs about content emerge only in the context of a low likelihood precision. The agent in Experiment 3 has the same anomalous transition matrix (prior beliefs) as the agent in this experiment, but has normal posterior beliefs about content because it is able to use the cues provided by its environmental partner. This demonstrates that out-of-context hallucinations are not caused solely by having fixed false prior beliefs. 
As in the previous figure, Bayesian smoothing is in play, which allows the agent to have beliefs about the correct song order at the 2nd and 4th time steps.

In Experiment 2, we reduced the likelihood precision (from ζ = 3 to ζ = 1), which engendered hallucinations at the second, fourth and sixth timesteps. As discussed in our previous paper [2], these hallucinations are driven by priors derived from the policies selected by the agent: each of these timesteps was immediately preceded by selection of the ‘listening’ action, so the agent expected to be listening (i.e., attending) to sounds. Given reduced confidence in its sensations, the agent disregarded its observations (of silence) and instead inferred that sound was present, as expected. Critically, what it heard was determined by its beliefs about lexical content and word order. As such, all the hallucinations were in-context, in the sense that they reproduced the sounds expected in the sequence present in the environment. At the third timestep, this subject failed to hear its partner, and at the fifth timestep it failed to hear its own voice. This is again a symptom of selecting a policy ill-suited to the context: i.e. one that did not cohere with states of affairs in the environment. At the third timestep, the subject is unsure whether to listen or speak (i.e., whether to follow policy 1 or policy 3), and at the fifth timestep it has selected the third policy, which leads it to speak and to believe it will not be listening. Under normal likelihood precision, the agent would be able to use sensory information to correct that belief; i.e. to infer that it was listening even if it was speaking or did not expect to hear anything (as seen in Experiment 1, when the subject hears its own voice at the last timestep even though it is speaking). It should be noted that [4] found that misperception, including the failure to perceive certain sounds, is a feature of hallucinations.
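The mechanism described above can be illustrated with a two-state toy model: a hidden state (‘listening’ vs ‘not listening’), an auditory outcome (sound vs silence), and a prior over the hidden state derived from the selected policy. This is a minimal sketch, not the SPM/DEM implementation used for the simulations. It assumes that the precision ζ enters as a column-wise softmax of ζ·ln A, a common way of parameterising likelihood precision in active inference models; the base likelihood values and the prior probabilities are illustrative choices. With ζ = 3, silence overturns a policy-derived expectation of listening; with ζ = 1, the prior wins and sound is inferred (a hallucination). The same change makes the agent miss genuine sound when its policy predicts that it will not be listening.

```python
import numpy as np

# Rows: auditory outcome (sound, silence); columns: hidden state (listening, not listening).
# Illustrative values, not the parameters of the published model.
A0 = np.array([[0.8, 0.2],
               [0.2, 0.8]])
SOUND, SILENCE = 0, 1

def precise_likelihood(zeta):
    """Column-wise softmax of zeta * ln(A0): zeta > 1 sharpens, zeta < 1 flattens."""
    A = np.exp(zeta * np.log(A0))
    return A / A.sum(axis=0, keepdims=True)

def p_listening(prior_listening, outcome, zeta):
    """Posterior probability of the 'listening' state after a single outcome."""
    prior = np.array([prior_listening, 1.0 - prior_listening])
    joint = precise_likelihood(zeta)[outcome] * prior
    return float(joint[0] / joint.sum())

for zeta in (3.0, 1.0):
    # Policy predicts listening (prior 0.9), but the world is silent.
    false_positive = p_listening(0.9, SILENCE, zeta)
    # Policy predicts speaking (prior 0.1 on listening), but the partner sings.
    missed_sound = p_listening(0.1, SOUND, zeta)
    print(f"zeta={zeta}: P(listening | silence)={false_positive:.2f}, "
          f"P(listening | sound)={missed_sound:.2f}")
```

With these illustrative values, the posterior on listening given silence rises from about 0.12 (ζ = 3) to about 0.69 (ζ = 1), while the posterior on listening given real sound falls from about 0.88 to about 0.31: the same loss of precision yields both false positives and misperceptions.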

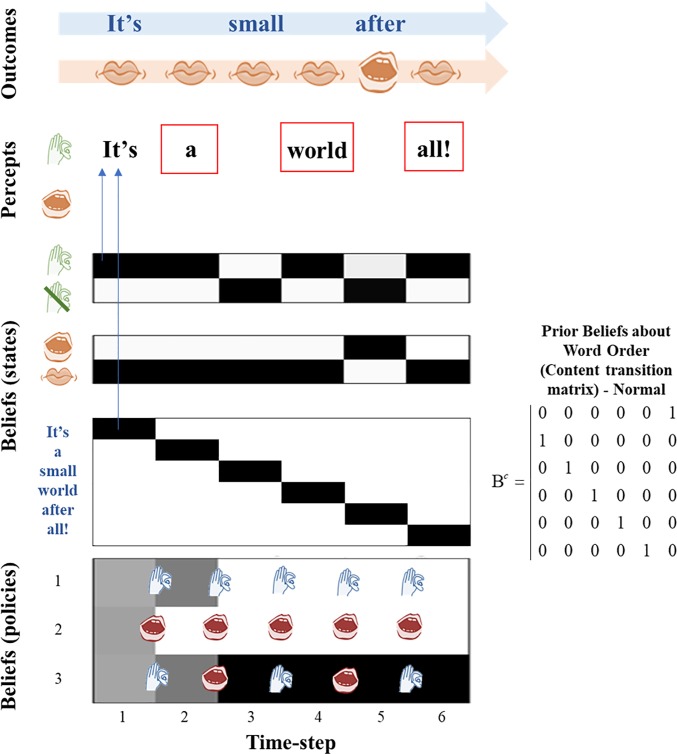

In Experiment 3, we equipped our subject with an anomalous transition matrix and a normal likelihood precision. If the subject’s beliefs about lexical content were solely determined by its priors, we would expect it to believe that the song was being sung in an anomalous order. However, because it is coupled to an environment that provides context, it can use this context to correct its priors and infer that the song is, in fact, following its usual order. The purpose of this experiment was to simulate an agent that could be considered to be ‘prodromal’; i.e. an agent having some out-of-context beliefs but not yet experiencing the full psychotic phenotype. The prodromal state is observed in many people who go on to be diagnosed with schizophrenia.

In Experiment 4, we removed the ability to correct anomalous perception by reducing the likelihood precision, which leads to the development of hallucinations and to an anomalous perceptual experience. Instead of the song progressing as “It’s a small world after all!”, the subject now perceives “It’s after It’s all!”. Note that the policy selection, and therefore the priors that drive the hallucinations, are the same as in Experiment 2. The only thing that has changed is the agent’s beliefs about lexical (i.e., hidden state) transitions. However, the hallucinations are still driven by the reduction in likelihood precision and do not occur in its absence (see Experiment 3, where hallucinations did not occur despite an anomalous content transition matrix). Because the percepts experienced during the hallucinations do not match the environment, this agent experiences out-of-context hallucinations.

Discussion

In this paper, we simulate an agent who can experience both in- and out-of-context hallucinations. Replicating our previous work, we show that hallucinations emerge when likelihood precision is low relative to prior beliefs. This induces false positive inferences that are consistent with the perceptual content expected in the presence of a perceptual narrative (e.g., dyadic singing or conversation). This may be thought of as analogous to the sorts of hallucinations induced in (often neurotypical) subjects in empirical studies. To investigate whether this mechanism generalises to the hallucinations that characterise the clinical phenomenology of psychosis, we asked what minimal computational pathology is required for the genesis of out-of-context hallucinations. Inducing anomalous prior beliefs about the trajectory of lexical content was enough to reproduce this phenomenon. This conceptual and computational analysis supports the relevance of empirical studies that induce in-context hallucinations for understanding the basic mechanisms of hallucinogenesis in psychopathology, but additionally implicates abnormalities in internally generated narratives in determining what is hallucinated.

In previous work [2], we demonstrated that hallucinations could be generated when prior beliefs predominated over sensory evidence. Specifically, we illustrated the sorts of prior beliefs that might lead someone to infer they have heard something in the absence of confirmatory sensory data. The key advance in this paper is the introduction of content into the generative model, such that the agent infers not only whether something has been said, but what has been said. This affords an opportunity to examine the conditions required not just to hallucinate, but to hallucinate absurd or incongruent content. This is important in distinguishing ‘out-of-context’ hallucinations, which resemble the often bizarre or frightening hallucinations found in schizophrenia, from ‘in-context’ hallucinations, which resemble the conditioned hallucinations often used in experimental investigations of hallucinations (e.g. [3]). Showing that both in- and out-of-context hallucinations can be induced by the same computational mechanism, but that additional mechanisms may be at play in generating the content of out-of-context hallucinations, endorses the use of conditioned hallucinations in empirical research and opens new avenues for inquiry, especially in relation to out-of-context content in clinical hallucinations.

The policy selections that drove hallucinations in Experiments 2 and 4 were identical. The difference between the two cases lies in what the subject hallucinated, not in how the hallucinations came about. This dissociation between hallucination onset and hallucination content illustrates the difference between in-context and out-of-context hallucinations. The content of in-context hallucinations is governed by appropriate context (i.e., it matches the environmental context), whereas the content of out-of-context hallucinations is governed by priors that are not apt for a normal dyadic exchange. It is also interesting to note that the agent continues to attempt to use environmental cues to correct its beliefs about content, even under low likelihood precision. As can be seen in Fig 5, at the fourth timestep the subject still has a weak belief that it is hearing the word that would be encountered under the veridical song order. This may represent the uncertainty that some patients feel about the reality of their hallucinations [11].

What these results have in common is that out-of-context hallucinations occur when an agent has a poor model of reality (i.e., anomalous priors) and a dysfunctional method for checking the predictions of that model (relatively low likelihood precision). This is in keeping with a long tradition of understanding the psychotic state as a loss of contact with reality [30]. It is also reminiscent of Hoffman’s computational model [4]. In that model, hallucinations were produced in the presence of degraded phonetic input (analogous to our imprecise likelihoods) and an overly pruned working memory layer, which made anomalous sentence structures possible (analogous to our anomalous transition matrix). By simulating a subject that can hallucinate content outside of the appropriate context, we are tapping into the disconnection from reality (and from sensory evidence) that is central to schizophrenia, where patients can have sensory experiences that do not cohere with environmental context (hallucinations) and hold beliefs that are not supported by evidence (delusions). These beliefs may be strange and far outside the realm of normal experiential context (bizarre delusions, such as those about extraterrestrials). The fact that these experiences feel very real to patients [11], while lying far outside the context provided by everyday experience, speaks to a mechanism that allows for both a detachment from, and an altered model of, reality, such as the one we propose involving reduced sensory (i.e., likelihood) precision.

It is interesting to consider the lexical content perceived in Experiment 4. The subject hears a ‘nonsense’ version of the song, one in which the correct order of the words has been lost and repetition sets in. While this is a result of the set-up of this simulation–which makes it possible for the agent to experience only this song or silence–it is interesting to note that 21% of patients with hallucinations do in fact experience nonsense hallucinations [11]. In addition, this out-of-order song bears a striking resemblance to another important feature of psychosis: thought disorder. Thought disorder can include thought blocking, loosening of associations between thoughts, repeating thoughts and perseveration, and extreme disorganization of thought (such as the classical ‘word salad’) [31]. Our synthetic subject appears to experience thought disorder in the form of partially repeating nonsense phrases, caused by anomalous prior beliefs about lexical transitions, in conjunction with a reduced likelihood precision.

While thought disorder and hallucinations cluster separately in some symptom-cluster analyses [32], our aberrant transition matrix determines only the type of hallucination, not its presence or absence. As such, our simulations do not contravene the results of these cluster analyses. Furthermore, some theoretical models of hallucination link cognitive dysfunction and thought disorder in the prodromal phase to the later development of hallucinations, which may themselves be shaped by these disordered thoughts. For example, as reviewed by [33], Klosterkotter [34] noted that many patients described elements of thought disorder in the prodromal phase and hypothesized that these could transform into audible thoughts. Conrad (reviewed in [35]) described “trema”, a state of fear and anxiety as well as cognitive and thought disturbances during the prodromal period, which is succeeded by frank psychosis and the development of hallucinations and delusions; he conceptualized certain thought disturbances, such as pressured thinking or thought interference, as transforming into hallucinations.

The central message of this paper is that false positive inference is not sufficient to account for the phenomenology of many verbal hallucinations. Specifically, it is not enough for prior beliefs to overwhelm evidence from our sensorium; we also require abnormal or disordered priors that generate perceptual content that would seem implausible to healthy agents. We have highlighted one way in which aberrant priors could explain verbal hallucinations, one that rests upon the transitions of hidden states. A more complete model could consider reducing the precision of the transition matrix, in addition to attenuating likelihood precision. This would produce out-of-context hallucinations that were less predictable, but with the same phenomenology as in our simulations.
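One way to read ‘reducing the precision of the transition matrix’ is by analogy with likelihood precision: raise each column of the transition matrix to a power ω and renormalise, i.e. take a column-wise softmax of ω·ln B. The sketch below assumes that parameterisation; ω is a hypothetical parameter name, and the six-word matrix is an illustrative stand-in for the song’s veridical transitions rather than the matrix used in the simulations. Lowering ω raises the entropy of every column, so the next word becomes less predictable, which is the sense in which such a model would produce less predictable out-of-context content.

```python
import numpy as np

N = 6  # six words of the song
# Illustrative transitions B[next, current]: follow the song with probability 0.9.
B = np.full((N, N), 0.1 / (N - 1))
for cur in range(N):
    B[(cur + 1) % N, cur] = 0.9

def temper_transitions(transitions, omega):
    """Column-wise softmax of omega * ln(B); omega < 1 flattens each column."""
    tempered = np.exp(omega * np.log(transitions))
    return tempered / tempered.sum(axis=0, keepdims=True)

def column_entropy(transitions):
    """Shannon entropy (nats) of P(next word | current word) for each column."""
    return -(transitions * np.log(transitions)).sum(axis=0)

for omega in (1.0, 0.5, 0.25):
    H = column_entropy(temper_transitions(B, omega))
    print(f"omega={omega}: mean entropy {H.mean():.3f} nats")
```

The upper bound on each column’s entropy is ln 6 (a uniform distribution over the six words), which tempering approaches but never reaches for ω > 0.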

Note that our computational anatomy does not rest on an anomalous transition matrix being engaged when precision is lowered. Rather, we have modelled the pathophysiology of hallucinations in terms of aberrant transition probabilities that are ‘revealed’ when the precision of sensory input falls. When agents are equipped with a normal transition matrix (Figs 2 and 3), perceptual inference is veridical, regardless of sensory precision. Conversely, with an anomalous matrix (Figs 4 and 5), out-of-context hallucinations ensue when precision is decreased. A more complete account of hallucinations in this setting could consider a switch between normal and anomalous beliefs about transitions, based upon inferred sensory (i.e., likelihood) precision. This (outstanding) challenge is particularly interesting, given that the deployment of precision during perceptual inference is closely related to attention and mental action (e.g., [36]).

Fig 3. In-context hallucinations.

In this figure, the agent has been given a reduced likelihood precision but has access to the same policies and the same transition matrices as the agent in Fig 2 (the ‘normal state’ agent). With this lowered precision, the agent hallucinates at the 2nd, 4th, and 6th time steps (red boxes), hearing the words of the song it expects to be present at those time steps based on its transition matrix. It also fails to hear its own voice at the 5th time step, and the voice of the other agent at the 3rd time step. At time-step 1, the agent expects to be ‘cued’. Auditory input is present, but there is no proprioception, and so the agent correctly infers it is listening but not speaking; all the policies are roughly equally probable at this point. At the second time-step, there is no audition or proprioception and the agent infers that it is not speaking (due to high proprioceptive precision). This is consistent with policy 1 or 3 having been engaged at the previous time-step, but inconsistent with policy 2. As the agent infers policies 1 and 3 with high confidence (though it is still uncertain as to which one to choose), this provides a strong prior belief that it must be listening during this time-step. As there is low likelihood precision, the agent hallucinates because it cannot use the information in the environment (i.e. the absence of sound) in order to correct this prior belief. At the 3rd time-step, there is auditory information but no proprioception; the agent should infer that it is listening but not speaking, which would be consistent with both modalities. However, the low likelihood precision leads the agent to fail to hear the sound and the agent infers it is not listening. This is inconsistent with policy 1, so this is eliminated, and the agent becomes confident in policy 3, which it then employs for the rest of the simulation and which dictates an alternating pattern of speaking and listening. 
This means that at the 4th and 6th timesteps, the agent is very confident it is listening, but its low likelihood precision means it cannot accurately perceive the absence of sound, so it hallucinates–just as in timestep 2.
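The policy reasoning in this caption can be read as sequential Bayesian elimination of hypotheses. The sketch below is a deliberate simplification, not the SPM implementation: it treats each policy as a hypothesis, scores it only by whether it predicted the state the agent inferred, and ignores expected free energy and the coupling between hidden-state factors. It uses only the evidence the caption cites at each step (no proprioception at timestep 2; the inferred ‘not listening’ state at timestep 3). The predicted-state tables and the ‘reliability’ values are hypothetical.

```python
import numpy as np

POLICIES = ["always listen", "always speak", "alternate"]

# Hypothetical predictions read off the Fig 3 caption, one entry per policy.
PRED_SPEAKING_T2 = np.array([0, 1, 0])   # only "always speak" moves the mouth at t2
PRED_LISTENING_T3 = np.array([1, 0, 0])  # only "always listen" is listening at t3

def update_policies(prior, predicted, observed, reliability):
    """One Bayesian update of beliefs over policies.

    P(observed | policy) = reliability if the policy predicted `observed`,
    otherwise 1 - reliability.
    """
    likelihood = np.where(predicted == observed, reliability, 1.0 - reliability)
    posterior = prior * likelihood
    return posterior / posterior.sum()

q_t1 = np.full(len(POLICIES), 1.0 / len(POLICIES))  # roughly equal at t1
# t2: mouth not moving; proprioception is assumed highly precise.
q_t2 = update_policies(q_t1, PRED_SPEAKING_T2, observed=0, reliability=0.99)
# t3: the agent infers it is not listening.
q_t3 = update_policies(q_t2, PRED_LISTENING_T3, observed=0, reliability=0.9)

for label, q in (("t2", q_t2), ("t3", q_t3)):
    print(label, {p: round(float(v), 3) for p, v in zip(POLICIES, q)})
```

After timestep 2, policies 1 and 3 share almost all the probability mass while policy 2 is effectively eliminated; after timestep 3, policy 1 is penalised and policy 3 dominates, matching the qualitative sequence described in the caption.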

Fig 4. “Prodromal” agent.

In this figure, the agent has been equipped with an anomalous transition matrix for the lexical state, but it retains a normal likelihood precision. The anomalous transition matrix is shown alongside the figure, with the ‘normal’ transition matrix for comparison. The normal likelihood precision enables the agent to avoid any hallucinations, and it perceives the word order expected given the song as sung by the generative process (i.e. the normal song order), even though it has been biased to expect a different transition structure. This can be considered a ‘prodromal’ agent because it has anomalous beliefs that are kept from being expressed or experienced as hallucinations by its ability to use sensory information to correct its beliefs. Note that at the end of the third timestep, the agent has equal probabilities for policies 2 and 3 and could sample either a speaking or a listening action. At the fourth timestep, there is no sound, as the agent does not sample a speaking action and the other agent does not speak; because the agent has a normal likelihood precision, it is able to correctly infer silence. The correct inferences about states for which there are no sensory data (e.g. ‘a’ at the second time-step) may seem a little strange, particularly given the anomalous transition matrix that favors ‘after’ following ‘It’s’. However, this arises from the Bayesian smoothing that occurs by virtue of reciprocal message passing from beliefs about the past to those about the future and vice versa. In this instance, it is the postdictive (as opposed to predictive) influence that lets the agent infer ‘a’ instead of ‘after’: there are precise sensory data indicating that the word at the third time step is ‘small’, and even the anomalous transition matrix favors ‘a’ before ‘small’.
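The postdictive effect described in this caption can be reproduced qualitatively with standard forward-backward smoothing on a hidden Markov model. This sketch is our own, not the DEM/SPM routine used for the figures. The six-word state space follows the song, but the anomalous matrix (in which only the transition out of ‘It’s’ is altered), the base likelihood, and the precision transform (a column-wise softmax of ζ·ln A) are hypothetical; silence is treated as carrying no lexical information. Under ζ = 3 the smoothed belief at the second timestep favours ‘a’, because the precise ‘small’ at timestep 3 propagates backwards; under ζ = 1 the anomalous prior wins and ‘after’ is inferred, qualitatively mirroring Figs 4 and 5.

```python
import numpy as np

WORDS = ["It's", "a", "small", "world", "after", "all!"]
N = len(WORDS)

def transition_matrix(successor, q=0.9):
    """B[next, current]: move to successor[current] with prob q, else uniformly elsewhere."""
    B = np.full((N, N), (1.0 - q) / (N - 1))
    for cur, nxt in enumerate(successor):
        B[nxt, cur] = q
    return B

B_NORMAL = transition_matrix([1, 2, 3, 4, 5, 0])

# Hypothetical anomaly: after "It's", expect "after" (0.8) rather than "a" (0.1).
B_ANOMALOUS = B_NORMAL.copy()
B_ANOMALOUS[:, 0] = 0.025
B_ANOMALOUS[4, 0], B_ANOMALOUS[1, 0] = 0.8, 0.1

def likelihood(zeta, p=0.5):
    """P(heard word | lexical state), sharpened or flattened by precision zeta."""
    A0 = np.full((N, N), (1.0 - p) / (N - 1))
    np.fill_diagonal(A0, p)
    A = np.exp(zeta * np.log(A0))
    return A / A.sum(axis=0, keepdims=True)

def smooth(observations, B, A):
    """Forward-backward smoothing; None marks silence (no lexical evidence)."""
    T = len(observations)
    lik = [np.ones(N) if o is None else A[o] for o in observations]
    alpha, beta = np.zeros((T, N)), np.ones((T, N))
    for t in range(T):  # forward (predictive) messages
        predicted = np.full(N, 1.0 / N) if t == 0 else B @ alpha[t - 1]
        alpha[t] = predicted * lik[t]
        alpha[t] /= alpha[t].sum()
    for t in range(T - 2, -1, -1):  # backward (postdictive) messages
        beta[t] = B.T @ (lik[t + 1] * beta[t + 1])
        beta[t] /= beta[t].sum()
    posterior = alpha * beta
    return posterior / posterior.sum(axis=1, keepdims=True)

# Timestep 1: "It's" heard; timestep 2: silence; timestep 3: "small" heard.
OBS = [0, None, 2]
for label, B in (("normal", B_NORMAL), ("anomalous", B_ANOMALOUS)):
    for zeta in (3.0, 1.0):
        post = smooth(OBS, B, likelihood(zeta))
        print(label, zeta, WORDS[int(post[1].argmax())])
```

With the normal matrix, the second word is inferred as ‘a’ at both precisions (content stays in context); only the combination of the anomalous matrix and ζ = 1 yields ‘after’, echoing the point that anomalous content is revealed only when sensory precision falls.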

Our simulations do not include any affective or interoceptive aspects and, as such, we are not able to make any claims regarding the ‘mood’ or ‘anxiety’ the subject could be experiencing (i.e. we cannot say whether it is experiencing trema). However, it is interesting to consider the clinical analogies from Experiment 3 in light of Klosterkotter’s and Conrad’s models. This agent would be experiencing some odd internal beliefs that must be reconciled with external reality (the environmental context), but it is still able to do this successfully and make correct state inferences. In Experiment 4, when the likelihood precision is decreased, we see an ‘unmasking’ effect, akin to the onset of psychosis: hallucinations occur, and the out-of-context beliefs that had been suppressed now manifest as out-of-context hallucinations. While this remains speculative, one might conceptualize the subject from Experiment 3 as being in the prodromal phase; namely, a phase characterized by odd, context-incongruent prior beliefs in which normal inference is nevertheless maintained. When this subject loses the ability to correct odd prior beliefs by reference to the environment, it enters a true psychotic state, not only hallucinating but doing so in an out-of-context manner.

These simulations allow us to hypothesize about the neurobiological mechanisms that could underlie these computational states. An altered generative model of the world, e.g. one containing imprecise or (in the real world) improbable transitions, could arise from genetic and/or neurodevelopmental changes in synaptic and circuit function. Yet these changes may manifest in abnormal inference only once likelihood precision is compromised; for example, in a disordered hyperdopaminergic state. A key question is therefore: what might cause the onset of such a state? There may be purely neurobiological reasons for this transition. Another possibility is that this change is at least in part computationally motivated; i.e., Bayes-optimal, given some (pathological) constraints. In some circumstances, it may be optimal for an agent to reduce its sensory (likelihood) precision: for example, if sensory evidence conflicts with a prior belief held with great precision (see [18] for a related simulation). Such a precise prior may be the result of powerful affects (e.g. an overwhelming sense of paranoia or grandiosity) or of parasitic attractors in dysfunctional cortical networks [37].
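The claim that lowering sensory precision can be Bayes-optimal when evidence conflicts with a precise prior can be illustrated with a toy, empirical-Bayes style calculation: choose the ζ on a grid that maximises the marginal likelihood of a single outcome, with the prior over hidden states held fixed. This is our own illustration, not the simulation reported in [18]; the base likelihood matrix, the grid, the prior of 0.99 and the softmax form of precision are all assumptions. When the outcome agrees with the prior, the highest precision on the grid is preferred; when it conflicts, the lowest is, because discounting the senses makes the conflicting outcome less surprising under an unshakeable prior.

```python
import numpy as np

A0 = np.array([[0.8, 0.2],    # P(sound | listening), P(sound | not listening)
               [0.2, 0.8]])   # P(silence | listening), P(silence | not listening)
SOUND, SILENCE = 0, 1
ZETAS = np.linspace(0.1, 3.0, 30)  # hypothetical grid of candidate precisions

def precise_likelihood(zeta):
    """Column-wise softmax of zeta * ln(A0)."""
    A = np.exp(zeta * np.log(A0))
    return A / A.sum(axis=0, keepdims=True)

def evidence(outcome, prior_listening, zeta):
    """Marginal likelihood P(outcome | zeta) under a fixed prior over states."""
    prior = np.array([prior_listening, 1.0 - prior_listening])
    return float(precise_likelihood(zeta)[outcome] @ prior)

def best_zeta(outcome, prior_listening):
    """Grid-search the precision that makes the outcome least surprising."""
    scores = [evidence(outcome, prior_listening, z) for z in ZETAS]
    return float(ZETAS[int(np.argmax(scores))])

# A very precise prior that the agent is listening (i.e. that there is sound):
print("agreement:", best_zeta(SOUND, 0.99))   # evidence fits the prior
print("conflict:", best_zeta(SILENCE, 0.99))  # silence contradicts the prior
```

Because the prior is fixed, precision is the only free quantity that can absorb the conflict; a fuller treatment would infer states and precision jointly.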

Therefore, a person beginning to experience thought disorder might at first try to use information from the environment to check or update their beliefs. Should these thoughts remain impervious to updating by contradictory data (for the pathological reasons suggested above), the person might begin to decrease the precision afforded to external information and adopt a new transition structure. These aberrant priors may manifest as thought disorder, eventually leading to frank psychosis whose content is determined by this new transition structure and potentially themed according to the prevailing affect. As noted in [11], memories and fearful emotions are frequently the content of hallucinations, which would seem to implicate medial temporal structures such as the hippocampus and amygdala; the amygdala-hippocampal complex has also been shown to be abnormal in schizophrenia [38]. Indeed, in those with visual hallucinations and schizophrenia, hyperconnectivity of the amygdala and visual cortex has been observed [39]. While our model does not explicitly include memory or emotional states, future work could simulate belief updating that shapes hallucination content through the impact of memories or fear. The aim of such work would be to sketch a computational account of the prodromal phase and its subsequent development into frank psychosis.

It may be useful to contrast this model with the ‘inner speech’ model, which proposes that verbal hallucinations occur when the sensory consequences of one’s inner speech fail to be attenuated, due to dysfunctional efference copy mechanisms [40]. Both models posit abnormal interactions between predictions and sensory data, but in different forms. First, in the inner speech model, the predictions are about one’s own inner speech, whereas in this model, the agent’s priors are about the speech of another. Thus, the inner speech model is arguably best equipped to explain thought echo or hearing one’s thoughts out loud, but most hallucinated voices are those of other agents. Second, the inner speech model holds that inner speech dominates sensations because an efference copy of that inner speech fails to ‘cancel out’ the inner speech itself. In contrast, this model proposes that priors dominate because the likelihood is imprecise: i.e., the agent has low confidence in, and therefore attenuates, sensory input. This makes the additional prediction that subjects with schizophrenia ought to show deficits in hearing genuine voices, e.g., misperceptions or failures to detect words, which they do [4].

Limitations

Our model has several limitations. The first is that it assumes a dialogic context, in which the environment takes the form of another agent singing at every second time-step. This singing provides evidence for the environmental context. This context is rather simplistic and contrived, but it is intended to provide a minimalist illustration of contextual and non-contextual hallucinations. It is not clear whether agents would respond in the same way to other forms of environmental context, such as visual cues, or whether these would serve as the context for verbal hallucinations. In addition, we selected certain types of priors (those pertaining to action selection and state transitions), and these are not the only possible sources of priors for hallucinations. For example, an agent might simply have a strong preference for hearing certain content (e.g., caused by strong affective states), which could bias policy selection towards hearing that content, but this is not something we have explored in this simulation.

For simplicity, and to reinforce the dialogic structure of the simulation, we restricted our policy spaces so that speaking and listening were mutually exclusive during action selection. However, the purpose of the simulation was not to comment on specific sequences of inferred actions that may exist in vivo, and as such this set-up does not affect the interpretation of our simulations. In addition, we only employed two modalities (proprioception and audition), and we assumed no errors in proprioception, given the absence of gross sensory deficits in schizophrenia.

We also omitted a key phenomenon in schizophrenia from our model: the failure of sensory attenuation. Sensory attenuation is the reduction of the precision afforded to self-generated sensory stimuli, intuitively exemplified by the inability to tickle oneself [23,24]. Impairments in sensory attenuation have long been implicated in theoretical models of hallucinations and delusions [41, 42], as well as in other phenomena in schizophrenia, such as improved performance in the force-matching paradigm [43] and a loss of N1 suppression during speech [44]. We did not include such failures in our model because failures of sensory attenuation in schizophrenia may best be described as occurring at lower levels of the sensorimotor hierarchy [43, 45], whereas our model deals with very high-level functions, namely the recognition of linguistic content. We therefore felt that including failure of low-level sensory attenuation would overcomplicate the model by mixing mechanisms operating at different hierarchical levels. As such, our model is unlikely to capture certain aspects of AVH phenomenology that are thought to be related to failure of sensory attenuation, such as the attribution of external agency to voices, or the phenomenon of thought insertion [46]. However, our generative model does allow us to examine, in isolation, the mechanisms that operate at higher levels, where content is inferred.

While we have not directly simulated empirical data, our model provides insight into the possible belief states of a hallucinating agent. These could be investigated experimentally through behavioral and self-report data from subjects experiencing hallucinations. For example, one might construct an enactive version of Vercammen’s task [9]. In this paradigm, subjects engage in an artificial conversation, the precision of sensory information is reduced, and the content of any hallucination is assessed for its agreement with the semantic context. One could also ask whether subjects with schizophrenia are more likely to experience out-of-context hallucinations, even when context is provided by the conversation.

Another limitation of our model is that it does not model the selection of anomalous transition matrices. In other words, it does not infer the context established by sensory precision. In future work, we will consider hierarchical generative models that accommodate inference about both content and precision, to provide a more complete picture of the computational anatomy that underwrites hallucinations.

An interesting future direction will be to allow selection between different lexical policies, such that our agent could choose what to say and not just whether to say it. This would afford an opportunity to examine the factors that make a given lexical policy more or less plausible.

Conclusion and future directions

In this paper we have demonstrated simulations of agents experiencing hallucinations that are, or are not, consistent with the environmental context. On a Bayesian (active inference) reading of perception, when likelihood precision was reduced, agents experienced hallucinations congruent with the environmental context. When we additionally induced anomalous prior beliefs about trajectories (i.e., the sequence of words in a narrative), the agent experienced out-of-context hallucinations. These out-of-context hallucinations were reminiscent of disordered thought in psychotic subjects, suggesting that there may be common mechanisms underlying hallucinations and thought disorder. This work suggests that we may distinguish between two, possibly interconnected, aspects of computational psychopathology. First, the genesis of hallucinatory percepts depends upon an imbalance between prior beliefs and sensory evidence. This idea generalises across both psychotic hallucinations and the false positive inferences induced in healthy subjects in empirical studies. Second, disordered content associated with a false positive inference may depend upon additional pathology that affects prior beliefs about the trajectories of hidden states. Beliefs about unusual transitions may be a more latent trait in schizophrenia, one that becomes fully manifest only when the precision of sensory evidence is compromised.

Data Availability

All simulations were run using the DEM toolbox included in the SPM12 package. This is open-access software provided and maintained by the Wellcome Centre for Human Neuroimaging and can be accessed here: http://www.fil.ion.ucl.ac.uk/spm/software/spm12/. The scripts for this simulation can be found at the following GitHub account: https://github.com/dbenrimo/Auditory-Hallucinations---Active-Inference---Content-.git.

Funding Statement

DB is supported by a Richard and Edith Strauss Fellowship (McGill University) and the Fonds de Recherche du Québec - Santé (FRQS). TP is supported by the Rosetrees Trust (Award Number 173346). RAA was supported by an NIHR University College London Hospitals Biomedical Research Centre Postgraduate Fellowship and is now an MRC Skills Development Fellow (MR/S007806/1). KJF is a Wellcome Principal Research Fellow (Ref: 088130/Z/09/Z).

References

- 1. American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Arlington, VA: American Psychiatric Publishing.

- 2. Benrimoh D., Parr T., Vincent P., Adams R. A., & Friston K. (2018). Active Inference and Auditory Hallucinations. Computational Psychiatry, 2, 183–204. 10.1162/cpsy_a_00022

- 3. Powers A. R., Mathys C., & Corlett P. R. (2017). Pavlovian conditioning–induced hallucinations result from overweighting of perceptual priors. Science, 357(6351), 596–600. 10.1126/science.aan3458

- 4. Hoffman R. E., & McGlashan T. H. (2006). Using a Speech Perception Neural Network Computer Simulation to Contrast Neuroanatomic versus Neuromodulatory Models of Auditory Hallucinations. Pharmacopsychiatry, 39, 54–64.

- 5. Adams R. A., Stephan K. E., Brown H. R., Frith C. D., & Friston K. J. (2013). The computational anatomy of psychosis. Frontiers in Psychiatry, 4, 47. 10.3389/fpsyt.2013.00047

- 6. Jardri R., & Denève S. (2013). Circular inferences in schizophrenia. Brain, 136(11), 3227–3241.

- 7. Mathys C., Daunizeau J., Friston K. J., & Stephan K. E. (2011). A Bayesian foundation for individual learning under uncertainty. Frontiers in Human Neuroscience, 5, 39. 10.3389/fnhum.2011.00039

- 8. Teufel C., Subramaniam N., Dobler V., Perez J., Finnemann J., Mehta P. R., et al. (2015). Shift toward prior knowledge confers a perceptual advantage in early psychosis and psychosis-prone healthy individuals. Proceedings of the National Academy of Sciences of the United States of America, 112(43), 13401–13406. 10.1073/pnas.1503916112

- 9. Vercammen A., & Aleman A. (2010). Semantic expectations can induce false perceptions in hallucination-prone individuals. Schizophrenia Bulletin, 36(1), 151–156. 10.1093/schbul/sbn063

- 10. McCarthy-Jones S., & Fernyhough C. (2011). The varieties of inner speech: links between quality of inner speech and psychopathological variables in a sample of young adults. Consciousness and Cognition, 20(4), 1586–1593. 10.1016/j.concog.2011.08.005

- 11. McCarthy-Jones S., Trauer T., Mackinnon A., Sims E., Thomas N., & Copolov D. L. (2014). A New Phenomenological Survey of Auditory Hallucinations: Evidence for Subtypes and Implications for Theory and Practice. Schizophrenia Bulletin, 40(1), 231–235. 10.1093/schbul/sbs156

- 12. Mirza M. B., Adams R. A., Mathys C. D., & Friston K. J. (2016). Scene Construction, Visual Foraging, and Active Inference. Frontiers in Computational Neuroscience, 10. 10.3389/fncom.2016.00056

- 13. Parr T., & Friston K. J. (2017). Uncertainty, epistemics and active inference. Journal of the Royal Society Interface, 20170376.

- 14. Dietrich E., & Markman A. B. (2003). Discrete Thoughts: Why Cognition Must Use Discrete Representations. Mind & Language, 18(1), 95–119.

- 15. Friston K., Kilner J., & Harrison L. (2006). A free energy principle for the brain. Journal of Physiology-Paris, 100, 70–87. 10.1016/j.jphysparis.2006.10.001

- 16. Friston K. (2012). Prediction, perception and agency. International Journal of Psychophysiology, 83(2), 248–252. 10.1016/j.ijpsycho.2011.11.014

- 17. Friston K., & Buzsáki G. (2016). The Functional Anatomy of Time: What and When in the Brain. Trends in Cognitive Sciences.

- 18. Parr T., Benrimoh D. A., Vincent P., & Friston K. J. (2018). Precision and False Perceptual Inference. Frontiers in Integrative Neuroscience, 12. 10.3389/fnint.2018.00039

- 19. Goodfellow I. J., Bengio Y., & Courville A. (2016). Deep Learning (p. 184). MIT Press.

- 20. Feldman H., & Friston K. (2010). Attention, uncertainty, and free-energy. Frontiers in Human Neuroscience, 4.

- 21. Demirdjian D., et al. (2005). Avoiding the "streetlight effect": tracking by exploring likelihood modes. In Tenth IEEE International Conference on Computer Vision (ICCV'05), Volume 1.

- 22. Botvinick M., & Toussaint M. (2012). Planning as inference. Trends in Cognitive Sciences, 16(10), 485–488. 10.1016/j.tics.2012.08.006

- 23. Blakemore S. J., Wolpert D., & Frith C. (2000). Why can't you tickle yourself? NeuroReport, 11(11), R11–R16.

- 24. Shergill S. S., Bays P. M., Frith C. D., & Wolpert D. M. (2003). Two eyes for an eye: the neuroscience of force escalation. Science, 301, 187.

- 25. Wang J., Mathalon D. H., Roach B. J., Reilly J., Keedy S. K., Sweeney J. A., & Ford J. M. (2014). Action planning and predictive coding when speaking. NeuroImage, 91, 91–98. 10.1016/j.neuroimage.2014.01.003

- 26. Holzman P. S. (1972). Assessment of perceptual functioning in schizophrenia. Psychopharmacologia, 24(1), 29–41.

- 27. Schwartenbeck P., FitzGerald T. H. B., Mathys C., Dolan R., & Friston K. (2015). The Dopaminergic Midbrain Encodes the Expected Certainty about Desired Outcomes. Cerebral Cortex, 25(10), 3434–3445. 10.1093/cercor/bhu159

- 28. Friston K., Brown H. R., Siemerkus J., & Stephan K. E. (2016). The dysconnection hypothesis (2016). Schizophrenia Research, 176(2–3), 83–94. 10.1016/j.schres.2016.07.014

- 29. Pettersson-Yeo W., Allen P., Benetti S., McGuire P., & Mechelli A. (2011). Dysconnectivity in schizophrenia: Where are we now? Neuroscience and Biobehavioral Reviews, 35, 1110–1124. 10.1016/j.neubiorev.2010.11.004

- 30. Bentall R. P., Baker G. A., & Havers S. (1991). Reality monitoring and psychotic hallucinations. The British Journal of Clinical Psychology, 30 (Pt 3), 213–222.

- 31. Andreasen N. C. (1986). Scale for the assessment of thought, language, and communication (TLC). Schizophrenia Bulletin, 12(3), 473. 10.1093/schbul/12.3.473

- 32. Arndt S., Alliger R. J., & Andreasen N. C. (1991). The Distinction of Positive and Negative Symptoms: The Failure of a Two-Dimensional Model. The British Journal of Psychiatry, 158(3), 317–322. 10.1192/bjp.158.3.317

- 33. Handest P., Klimpke C., Raballo A., & Larøi F. (2016). From Thoughts to Voices: Understanding the Development of Auditory Hallucinations in Schizophrenia. Review of Philosophy and Psychology, 7(3), 595–610. 10.1007/s13164-015-0286-8

- 34. Klosterkötter J. (1992). The meaning of basic symptoms for the development of schizophrenic psychoses. Neurology, Psychiatry and Brain Research, 1, 30–41.

- 35. Sass L. A., & Parnas J. (2003). Schizophrenia, consciousness, and self. Schizophrenia Bulletin, 29, 427–444. 10.1093/oxfordjournals.schbul.a007017

- 36. Limanowski J., & Friston K. (2018). ‘Seeing the Dark’: Grounding Phenomenal Transparency and Opacity in Precision Estimation for Active Inference. Frontiers in Psychology, 9, 643.

- 37. Rolls E. T., Loh M., Deco G., & Winterer G. (2008). Computational models of schizophrenia and dopamine modulation in the prefrontal cortex. Nature Reviews Neuroscience, 9(9), 696–709. 10.1038/nrn2462

- 38. Lawrie S. M., Whalley H. C., Job D. E., & Johnstone E. C. (2003). Structural and functional abnormalities of the amygdala in schizophrenia. Annals of the New York Academy of Sciences, 985, 445–460. 10.1111/j.1749-6632.2003.tb07099.x

- 39. Ford J. M., Palzes V. A., Roach B. J., Potkin S. G., van Erp T. G. M., Turner J. A., … Mathalon D. H. (2015). Visual hallucinations are associated with hyperconnectivity between the amygdala and visual cortex in people with a diagnosis of schizophrenia. Schizophrenia Bulletin, 41(1), 223–232. 10.1093/schbul/sbu031

- 40. Feinberg I. (1978). Efference Copy and Corollary Discharge: Implications for Thinking and Its Disorders. Schizophrenia Bulletin, 4(4), 636–640. 10.1093/schbul/4.4.636

- 41. Frith C. D., & Done D. J. (1989). Experiences of alien control in schizophrenia reflect a disorder in the central monitoring of action. Psychological Medicine, 19(2), 359–363.

- 42. Fletcher P. C., & Frith C. D. (2009). Perceiving is believing: a Bayesian approach to explaining the positive symptoms of schizophrenia. Nature Reviews Neuroscience, 10, 48–58. 10.1038/nrn2536

- 43. Shergill S. S., Samson G., Bays P. M., Frith C. D., & Wolpert D. M. (2005). Evidence for sensory prediction deficits in schizophrenia. American Journal of Psychiatry, 162, 2384–2386. 10.1176/appi.ajp.162.12.2384

- 44. Ford J. M., Roach B. J., Faustman W. O., & Mathalon D. H. (2007). Synch Before You Speak: Auditory Hallucinations in Schizophrenia. American Journal of Psychiatry, 164(3), 458–466. 10.1176/ajp.2007.164.3.458

- 45. Cassidy C. M., Balsam P. D., Weinstein J. J., Rosengard R. J., Slifstein M., Daw N. D., … Horga G. (2018). A Perceptual Inference Mechanism for Hallucinations Linked to Striatal Dopamine. Current Biology, 28(4), 503–514.e4. 10.1016/j.cub.2017.12.059

- 46. Sterzer P., Mishara A. L., Voss M., & Heinz A. (2016). Thought Insertion as a Self-Disturbance: An Integration of Predictive Coding and Phenomenological Approaches. Frontiers in Human Neuroscience, 10. 10.3389/fnhum.2016.00502
