Abstract

Study design:

Repeated measures.

Introduction:

The Kinect is widely used for tele-rehabilitation applications including rehabilitation games and assessment.

Purpose:

To determine effects of the Kinect location relative to a person on measurement accuracy of upper-limb joint angles.

Methods:

Kinect error was computed as the difference in upper-limb joint range of motion (ROM) during target reaching motion between the Kinect and the 3D Investigator™ Motion Capture System, and was compared across nine Kinect locations.

Results:

The ROM error was smallest when the Kinect was elevated 45° in front of the subject and tilted toward the subject. This error was 54% less than that for the conventional location in front of a person without elevation or tilting. The ROM error was largest when the Kinect was located 60° contralateral to the moving arm, at shoulder height, facing the subject. Across joint angles, the ROM error was smallest for shoulder elevation and largest for the wrist angle.

Conclusion:

This information facilitates implementation of Kinect-based upper-limb rehabilitation applications with adequate accuracy.

Keywords: Kinect location, accuracy, motion capture, arm movement, upper limb joint angle range of motion

1. Introduction

The Kinect is a low-cost motion detection device, originally developed for gaming purposes. The Kinect provides kinematic data that used to be accessible only through traditional research-purpose motion capture systems.1–6 Yet, the Kinect costs only a fraction of traditional motion capture systems, is portable, and is less technically demanding to use. In addition, while typical research-purpose motion capture systems require a person to wear markers over the body to track the person’s limb motion, the Kinect captures limb motion without the need to wear any equipment on the body. This easy-to-use aspect of the Kinect is also complemented by user-friendly interfaces for obtaining processed data, once developed for a specific application. These practical benefits of the Kinect have fueled development of Kinect-based applications for telemedicine. These applications include Kinect-based assessment tools to objectively quantify patient movements, evaluate rehabilitation progress, and aid planning of rehabilitation.1, 7–14 In addition, Kinect-based virtual reality rehabilitation games have been developed to motivate patients to continue therapeutic movements in the comfort of their home or typical environments such as school.15–22 These Kinect-based rehabilitation applications have been shown to be well liked by both patients and therapists.17, 18, 23 With its increasing popularity, a knowledge translation resource has been developed to support clinical decision making about selection and use of Kinect games in physical therapy.24 Thus, the Kinect is considered a promising tool to aid rehabilitation.25, 26

When the Kinect sensor is used for movement assessment and/or rehabilitation games, the manufacturer recommendation is to place the Kinect horizontally in front of a person.27 While this Kinect location may work well for detecting movements in the frontal plane, accuracy of the Kinect sensor may decrease for movements in the sagittal plane, because the Kinect’s measurement error is the largest for the depth direction (i.e., direction from the Kinect sensor to a person) compared to the horizontal and vertical directions: the root mean square errors for the Kinect sensor are 6.5, 5.7, and 10.9 mm in the horizontal, vertical, and depth directions, respectively.28 In other words, accuracy of the Kinect depends on its location relative to a person and on the movements being captured, and the Kinect accuracy may be improved by modifying the Kinect sensor location. For this reason, researchers have used different Kinect locations relative to the movement of interest. For example, Pfister et al. placed the Kinect 45° to the left of the person in the hope of best capturing the knee and hip motion during treadmill walking.29 However, the optimal placement of the Kinect sensor has not been systematically investigated. The knowledge of optimal Kinect placement may contribute to increasing accuracy of joint angle measurements and utility of the Kinect. The likely reason that the optimal Kinect placement has not been established is that accuracy of the Kinect changes depending on the movements30 due to the non-uniform measurement errors in the 3 axes, and thus the optimal Kinect placement may vary depending on the movement of interest.

One of the movements of interest for upper limb therapy is target reaching.22, 31–42 Target reaching motion is typically used in upper limb rehabilitation settings as follows. First, people with movement disorders, such as due to stroke22, 31, 32 and burn injury,33 practice target reaching motion for therapy since it is one of the most important abilities for activities of daily living.43 In addition, target reaching motion is used as part of outcome assessments of rehabilitation therapy programs for those with movement disorders such as post stroke31, 34–36 and peripheral nerve injury.37 Likewise, target reaching motion has been used to characterize movement disorders for patients such as those with stroke38–41 and muscular dystrophy,42 because of its ability to distinguish kinematic characteristics of patients from healthy controls or the unaffected side as well as its importance in our understanding of motor control.44, 45 While target reaching motion is frequently used in upper limb rehabilitation settings, information regarding accuracy of the Kinect sensor in measuring all upper limb joint angles during target reaching motion is limited for varying Kinect sensor locations.25

Therefore, the objective of this study was to examine measurement accuracy of upper limb joint angles during target reaching movement using the Kinect, and to determine the impact of adjusting the location of the Kinect sensor relative to a person on the measurement accuracy. Specifically, Kinect error in the range of motion measurement was assessed as the difference in the upper limb joint range of motion detected by the Kinect using Kinect for Windows Software Development Kit (SDK) (Microsoft, Redmond, WA, USA) and by 3D Investigator™ Motion Capture System (NDI, Waterloo, ON, Canada). 3D Investigator was used as a research-grade motion capture system as it has been used for research involving upper limb46–49 and other motion analysis.50 A smaller difference in the measurement between the two systems would indicate better agreement of the Kinect to the research-grade motion capture system and thus accuracy. The error in the range of motion measurement was compared across nine Kinect sensor locations to examine the extent to which this error changed with varying Kinect sensor locations and to determine if the error in the range of motion could be reduced by modifying the Kinect sensor location as compared to the standard location of being horizontally in front of a person. This study intends to contribute to improving Kinect positioning relative to a patient for better measurement accuracy and standardizing a Kinect-based measurement protocol for an upper limb rehabilitation setting, which is a necessary step for implementation in clinical practice.

2. Method

2.1. Subjects

Ten right-handed healthy subjects (age range 20–37 years, 5 males and 5 females) participated in this study. The study protocol was approved by the Institutional Review Board and all subjects signed the informed consent forms.

2.2. Procedure

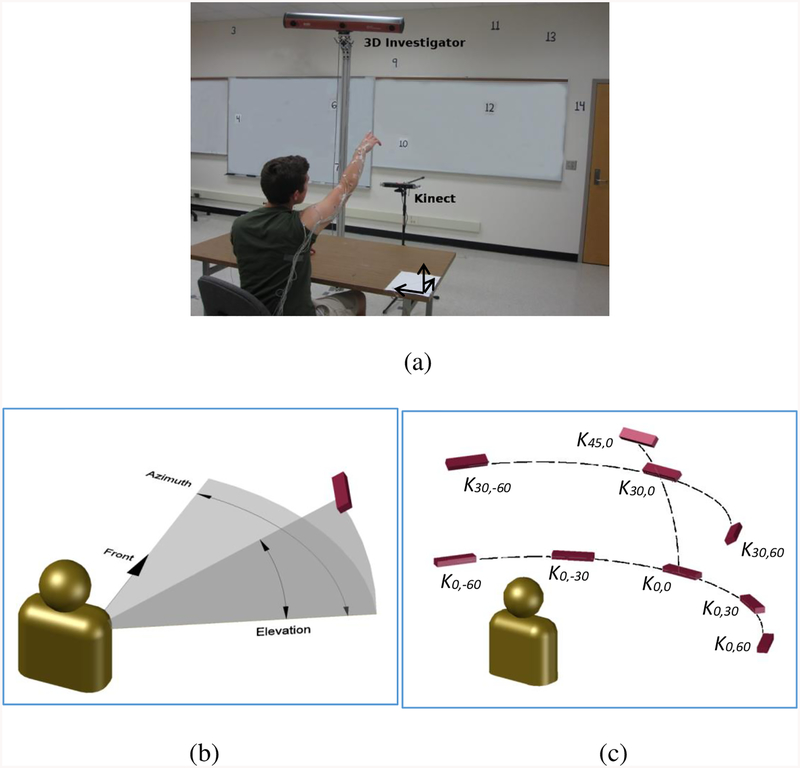

An experiment was conducted to quantify the difference in the ranges of motion for the upper limb joint angles determined using the Kinect as compared to a research-grade motion tracking system, the 3D Investigator, and to compare the difference across multiple Kinect sensor locations. Subjects were seated with the right forearm resting on a table. Upon computer-generated cues, subjects were asked to lift their right arm, point their index finger toward a prescribed target, and return to the initial position at a comfortable speed (Figure 1a), similar to previous studies.31, 32, 37 Twenty-one targets labeled from 1 to 21 were presented on the wall in front of the subject to cover the upper limb workspace in front of a person at or above the shoulder level (Figure 1a). Subjects’ upper limb joint positions were recorded using the Kinect and the 3D Investigator simultaneously. Each target for each Kinect location was prescribed at least twice. The order of testing the targets was randomized within a Kinect location. The order of testing Kinect locations was randomized across subjects. Consecutive reaches were separated by 5 seconds. Subjects were provided with rest breaks between Kinect location conditions.

Figure 1:

(a) A subject performing a reaching motion toward a target. Their right upper limb motion was detected using Kinect (K0,0 location shown in the picture) as well as 3D Investigator using active markers placed on the right upper limb. Numbered targets were placed on the walls in front of the subject. (b) Kinect location was specified by its elevation and azimuth angles relative to the subject’s right shoulder. (c) The 9 Kinect locations tested are labeled by their elevation angle and azimuth angle.

Nine Kinect sensor locations were tested. The nine locations differed by the elevation and azimuth angle of the Kinect sensor relative to the right shoulder (Figure 1b–c): directly in front of the right shoulder at 45° elevation (denoted by K45,0 in Figure 1c), 30° elevation and directly in front of the right shoulder (K30,0), 30° elevation and 60° to the left (K30,−60) or 60° to the right (K30,60), at the shoulder level directly in front of the right shoulder (K0,0), 30° to the left (K0,−30) or to the right (K0,30), or 60° to the left (K0,−60) or right (K0,60). For all locations, the Kinect sensor was tilted such that the sensor faced the subject’s right shoulder. The Kinect sensor was placed 1.5 m away from the right shoulder to ensure that the right shoulder and hand were within the capture range recommended by Kinect specifications51 while minimizing the distance between Kinect and the subject since the depth accuracy of Kinect decreases with increasing distance.52 Any shiny or dark objects such as a watch were removed from subjects to prevent interference with Kinect’s motion detection.28, 52 The position data for the right shoulder, elbow, and wrist joints in addition to hand in 3-D space were obtained using custom-developed software with Kinect for Windows SDK.

During all reaching tasks, 3D Investigator recorded positions of the infrared light emitting markers placed on the subject’s upper limb to determine the shoulder, elbow, and wrist joint positions as well as hand position in 3-D space. The markers were placed on the right upper limb: 3 markers on the dorsum of the right hand, 2 markers on the right wrist (medial and lateral), 3 markers on the right forearm, 2 markers on the right elbow (medial and lateral), 3 markers on the right upper arm, and 1 marker on the right shoulder. Such marker placement of using 2 markers to estimate a joint center with additional markers to allow detection of rotational orientation is conventional in upper limb motion analysis.42, 53 In the present study, the positions of the 3 markers on the hand were averaged to find the hand position. The midpoints of the pairs of markers on the wrist and elbow joints were used as the positions of the wrist and elbow joints, respectively. The extra markers on the forearm and upper arm were used to estimate the positions of other markers if their view was obstructed during movement. In addition, three markers were placed on the desk on predetermined spots to enable transformation of the position data to the reference coordinate system.

2.3. Data analysis

The range of motion for each of five upper limb joint angles during a target reaching motion was determined using data obtained from each device. The five joint angles were: shoulder elevation, shoulder plane of elevation, shoulder axial rotation, elbow, and wrist angles. The shoulder elevation angle was the angle between the upper arm and the vertical axis and the shoulder plane of elevation angle was the angle between the sagittal plane and the projection of the upper arm on the horizontal plane (the yaw angle representing a rotation of the arm about the vertical axis), while the shoulder axial rotation angle represented a rotation of the forearm about the axis of the upper arm according to the International Society of Biomechanics standard definition54 and literature.55 The shoulder axial rotation angle was defined to be zero when the forearm had the largest vertical component. The elbow angle was the angle between the upper arm and the forearm. The wrist angle was the angle between the forearm and the hand.
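The angle definitions above can be illustrated with a minimal Python sketch. It is not the authors' software. It assumes a reference frame with x pointing to the subject's right, y forward, and z up, takes the downward vertical as the zero reference for shoulder elevation, and measures the elbow and wrist angles between segment direction vectors (so a fully straight joint reads 0°). These conventions and all function names are assumptions made for illustration; shoulder axial rotation is omitted because its computation depends on details not given in the text.

```python
import numpy as np

# Assumed reference frame (not specified in the paper):
# x = subject's right, y = forward (anterior), z = up.
DOWN = np.array([0.0, 0.0, -1.0])  # assumed zero reference for elevation


def angle_between(u, v):
    """Unsigned angle in degrees between two 3-D vectors."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    cos_theta = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip guards against rounding slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


def shoulder_elevation(shoulder, elbow):
    """Angle between the upper arm and the (downward) vertical axis."""
    upper_arm = np.asarray(elbow, dtype=float) - np.asarray(shoulder, dtype=float)
    return angle_between(upper_arm, DOWN)


def shoulder_plane_of_elevation(shoulder, elbow):
    """Angle between the sagittal plane and the horizontal projection
    of the upper arm (0 deg = straight ahead, positive to the right)."""
    upper_arm = np.asarray(elbow, dtype=float) - np.asarray(shoulder, dtype=float)
    return float(np.degrees(np.arctan2(upper_arm[0], upper_arm[1])))


def elbow_angle(shoulder, elbow, wrist):
    """Angle between the upper arm and forearm direction vectors."""
    upper_arm = np.asarray(elbow, dtype=float) - np.asarray(shoulder, dtype=float)
    forearm = np.asarray(wrist, dtype=float) - np.asarray(elbow, dtype=float)
    return angle_between(upper_arm, forearm)


def wrist_angle(elbow, wrist, hand):
    """Angle between the forearm and hand direction vectors."""
    forearm = np.asarray(wrist, dtype=float) - np.asarray(elbow, dtype=float)
    hand_vec = np.asarray(hand, dtype=float) - np.asarray(wrist, dtype=float)
    return angle_between(forearm, hand_vec)
```

Because the wrist-to-hand segment is short, a position error of a few centimetres in either point changes `wrist_angle` far more than the same error changes `shoulder_elevation`, which is one way to see why distal angles are more sensitive to tracking noise.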

To enable the shoulder joint angle computations relative to the horizontal and sagittal planes, all Kinect and 3D Investigator position data were transformed into the reference coordinate system. The reference coordinate system aligned with the horizontal, sagittal, and frontal planes. For the Kinect, the transformation matrix from the local coordinate system to the reference coordinate system was derived from the elevation and azimuth angles of the Kinect. The transformation matrix for the 3D Investigator was found from the 3 markers placed on the desk (horizontal plane), forming a right triangle with one side parallel to the sagittal plane and another side parallel to the frontal plane.
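The text states that the Kinect transformation was derived from the sensor's elevation and azimuth angles but does not give the matrix. The following Python sketch shows one possible construction under stated assumptions: the reference frame has its origin at the right shoulder with x to the subject's right, y forward, and z up; the sensor sits 1.5 m from the shoulder with its optical (z) axis pointing at the shoulder and no roll about that axis; and azimuth is positive toward the subject's right. The function names and axis conventions are hypothetical and may differ from those of the Kinect SDK or the authors' software.

```python
import numpy as np

UP = np.array([0.0, 0.0, 1.0])  # reference-frame vertical (assumed z-up)


def kinect_pose(elevation_deg, azimuth_deg, distance=1.5):
    """Return (rotation, position) of the sensor in the reference frame.

    Assumes the sensor faces the shoulder (origin) with no roll.
    Not valid at 90 deg elevation, where the optical axis is vertical.
    """
    e, a = np.radians([elevation_deg, azimuth_deg])
    position = distance * np.array(
        [np.cos(e) * np.sin(a), np.cos(e) * np.cos(a), np.sin(e)]
    )
    # Optical axis points from the sensor toward the shoulder.
    z_axis = -position / np.linalg.norm(position)
    # Horizontal sensor axis, perpendicular to the optical axis.
    x_axis = np.cross(UP, z_axis)
    x_axis /= np.linalg.norm(x_axis)
    # Completes a right-handed frame (x cross y = z).
    y_axis = np.cross(z_axis, x_axis)
    rotation = np.column_stack([x_axis, y_axis, z_axis])
    return rotation, position


def to_reference(points_local, elevation_deg, azimuth_deg, distance=1.5):
    """Map sensor-frame points (n x 3) into the reference frame."""
    rotation, position = kinect_pose(elevation_deg, azimuth_deg, distance)
    points_local = np.atleast_2d(np.asarray(points_local, dtype=float))
    return points_local @ rotation.T + position
```

As a sanity check on this construction, a point 1.5 m along the optical axis should map to the shoulder origin for every tested location, for example `to_reference([0, 0, 1.5], 0, -60)` for K0,−60.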

The start of data recording for the Kinect and the 3D Investigator on two different computers was synchronized via an external trigger signal. Both systems recorded the sample time in addition to position information, and the sampling frequency was 16–20 Hz for the Kinect and 100 Hz for the 3D Investigator. Since the two data sets were sampled at different frequencies, all joint angle data for both the Kinect and the 3D Investigator were re-sampled at the mean sampling frequency of the Kinect, and the re-sampled data were used for computing ranges of motion. The range of motion was computed as the difference between the maximum and minimum of the joint angle observed during each target reaching motion. The error of the Kinect in the range of motion measurement was determined as the difference in the range of motion between the Kinect and 3D Investigator (the range of motion computed using the Kinect minus the range of motion computed using the 3D Investigator), for each Kinect location, each target, each joint angle, and each subject.
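The re-sampling and range-of-motion steps described above can be sketched in Python as follows. This is an illustrative sketch only: the text does not state the interpolation method, so linear interpolation with `numpy.interp` is an assumption, as are the function names.

```python
import numpy as np


def mean_sampling_frequency(t):
    """Mean sampling frequency (Hz) from a vector of sample times (s)."""
    t = np.asarray(t, dtype=float)
    return (len(t) - 1) / (t[-1] - t[0])


def resample(t, angle, fs):
    """Linearly interpolate a joint-angle trace onto a uniform grid at fs Hz."""
    t = np.asarray(t, dtype=float)
    # Small epsilon avoids dropping the last sample to floating-point rounding.
    n = int(np.floor((t[-1] - t[0]) * fs + 1e-9)) + 1
    grid = t[0] + np.arange(n) / fs
    return grid, np.interp(grid, t, angle)


def range_of_motion(angle):
    """Maximum minus minimum joint angle during one reaching motion."""
    return float(np.max(angle) - np.min(angle))


def rom_error(t_kinect, angle_kinect, t_ref, angle_ref):
    """Kinect ROM minus reference ROM, both re-sampled at the Kinect's mean rate."""
    fs = mean_sampling_frequency(t_kinect)
    _, kinect_resampled = resample(t_kinect, angle_kinect, fs)
    _, ref_resampled = resample(t_ref, angle_ref, fs)
    return range_of_motion(kinect_resampled) - range_of_motion(ref_resampled)
```

With this sign convention, a positive `rom_error` means the Kinect reported a larger range of motion than the reference system for that reach.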

2.4. Statistical analysis

Repeated measures ANOVA was conducted to determine whether the Kinect’s range of motion error significantly changed with the 9 Kinect locations, 5 joint angles, 21 targets, and their second-order interactions. A significance level of 0.05 was used. For significant factors, Tukey post-hoc analysis was used for pairwise comparisons. In addition, the bias and variability of the difference between the Kinect and 3D Investigator for the shoulder elevation and elbow range of motion data were further examined using Bland-Altman plots. Lastly, the correlative relationship between the measurements from the two systems was further examined using intraclass correlation (ICC).
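For readers unfamiliar with Bland-Altman analysis, the bias and 95% limits of agreement can be computed as in the Python sketch below. It uses the standard formulation (mean difference ± 1.96 times the standard deviation of the differences) and treats all observations as independent; whether the authors adjusted the limits for repeated observations per subject is not stated, so this is an assumption. The function name is hypothetical.

```python
import numpy as np


def bland_altman(kinect_rom, reference_rom):
    """Return (bias, lower_limit, upper_limit) for paired ROM measurements.

    bias  = mean of (Kinect - reference); positive means overestimation.
    limits = bias +/- 1.96 * sample standard deviation of the differences.
    """
    diff = np.asarray(kinect_rom, dtype=float) - np.asarray(reference_rom, dtype=float)
    bias = float(np.mean(diff))
    sd = float(np.std(diff, ddof=1))  # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd
```

In a Bland-Altman plot, each difference is drawn against the mean of the two measurements, with horizontal lines at the three returned values.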

3. Results

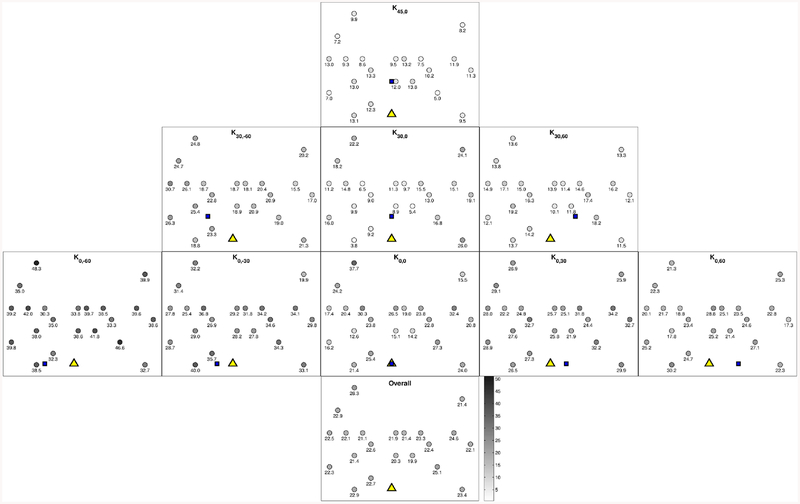

The mean error in the ranges of motion for each Kinect location and for each target is shown in Figure 2 (joint angles pooled). The ANOVA results indicate that the error in the range of motion was significantly dependent upon Kinect location (p < 0.001), joint angle (p < 0.001), and the interaction between Kinect location and joint angle (p < 0.001). The target, interaction between Kinect location and target, and interaction between joint angle and target were found to be not significant (p = 0.32, 0.46, and 0.82, respectively).

Figure 2:

Mean error in the upper limb joint ranges of motion (in degrees) for each target for each Kinect location (first three rows) as well as averaged for all Kinect locations (bottom row), averaged for the five joint angles. Within each Kinect location, the mean errors in the range of motion (in degrees) are noted below each target location as well as with the gray scale. The triangle in the bottom center of each Kinect location denotes the right shoulder location, while the filled square denotes the Kinect location. The least mean error in the range of motion measurement was observed for K45,0 and the largest error was observed for K0,−60.

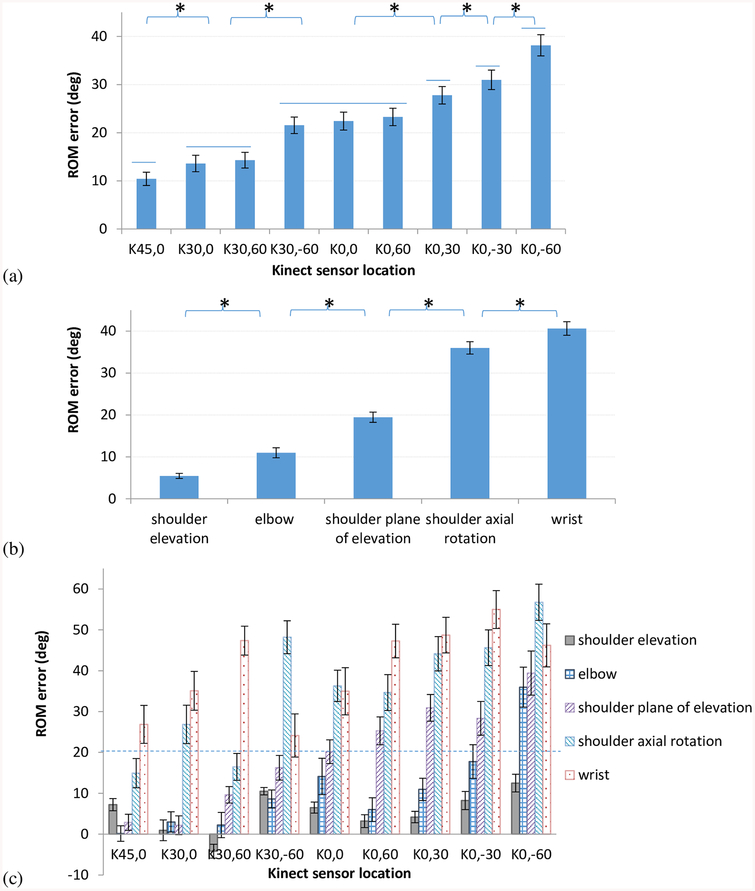

The K45,0 location resulted in the least mean error in the range of motion (mean ± 95% confidence interval (CI) = 10° ± 1°) among all 9 locations (Figure 3a, Tukey’s post-hoc p < 0.05), followed by the K30,0 location. On the other hand, the K0,−60 location was found to result in the largest mean error in the range of motion (38° ± 2°) (Figure 3a, Tukey’s post-hoc p < 0.05), followed by the K0,−30 location. The conventional K0,0 location was associated with the median error in the range of motion among all Kinect locations (22° ± 2°).

Figure 3:

(a) Mean error in the range of motion (ROM) significantly varied by Kinect locations (p < 0.001). The mean error in the range of motion was the least when the Kinect was located 45° elevated in front of the subject (K45,0) and the largest when the Kinect was located at the shoulder level and 60° to the left (K0,−60). (b) Mean error in the range of motion significantly varied by joint angles (p < 0.001). The mean error in the range of motion was the least for shoulder elevation angle. Stars indicate groups with statistically significant differences. (c) Mean error in the range of motion for each joint angle and Kinect location. All error bars indicate 95% confidence interval.

The shoulder elevation angle was found to have the least mean error in the range of motion (5° ± 1°), followed by the elbow, shoulder plane of elevation, and shoulder axial rotation angles (Figure 3b, Tukey’s post-hoc, p < 0.05). The largest mean error in the range of motion was found for the wrist angle (41° ± 2°, Figure 3b, Tukey’s post-hoc, p < 0.05).

The mean errors in the ranges of motion for individual joints for each Kinect location are shown in Figure 3c. The mean error in the shoulder elevation range of motion was less than 15° for all Kinect locations. For the elbow, shoulder plane of elevation, and shoulder axial rotation angles, the mean error in the ranges of motion varied substantially depending on the Kinect location. The mean error in the wrist range of motion was greater than 20° for all Kinect locations.

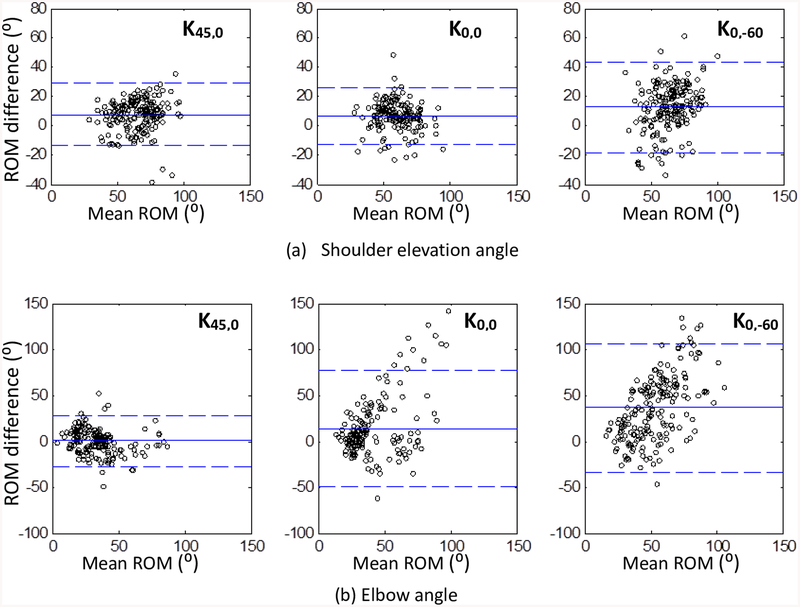

The mean ± 95% CI Kinect errors in the ranges of motion were mostly positive (Figure 3c), indicating that the Kinect tended to overestimate the range of motion compared to the 3D Investigator. For instance, the shoulder elevation angle was overestimated for the K45,0, K0,0, and K0,−60 locations by the mean ± 95% CI of 7° ± 1°, 7° ± 1°, and 12° ± 2°, respectively (statistically different from zero). The elbow angle was neither overestimated nor underestimated for the K45,0 location (0° ± 2°, not statistically different from zero), while it was overestimated for the K0,0 and K0,−60 locations by 14° ± 4° and 36° ± 5°, respectively. These overestimation biases can be seen again in the Bland-Altman plots for the shoulder elevation and elbow angles (Figure 4a and b). In addition to the bias, the CI of the elbow range of motion difference between the two systems was 2.3 and 2.6 times greater for the K0,0 and K0,−60 locations, respectively, compared to the K45,0 location (Figure 3c). This larger variability can be clearly seen in the Bland-Altman plots (Figure 4b).

Figure 4:

Bland-Altman plots comparing the range of motion (ROM) from the Kinect and the 3D Investigator for the shoulder elevation angle (a) and the elbow angle (b) for the three Kinect locations (K45,0, K0,0, and K0,−60). The solid horizontal line indicates the mean difference, with a positive value indicating an overestimation. The segmented horizontal lines indicate the 95% limits of agreement.

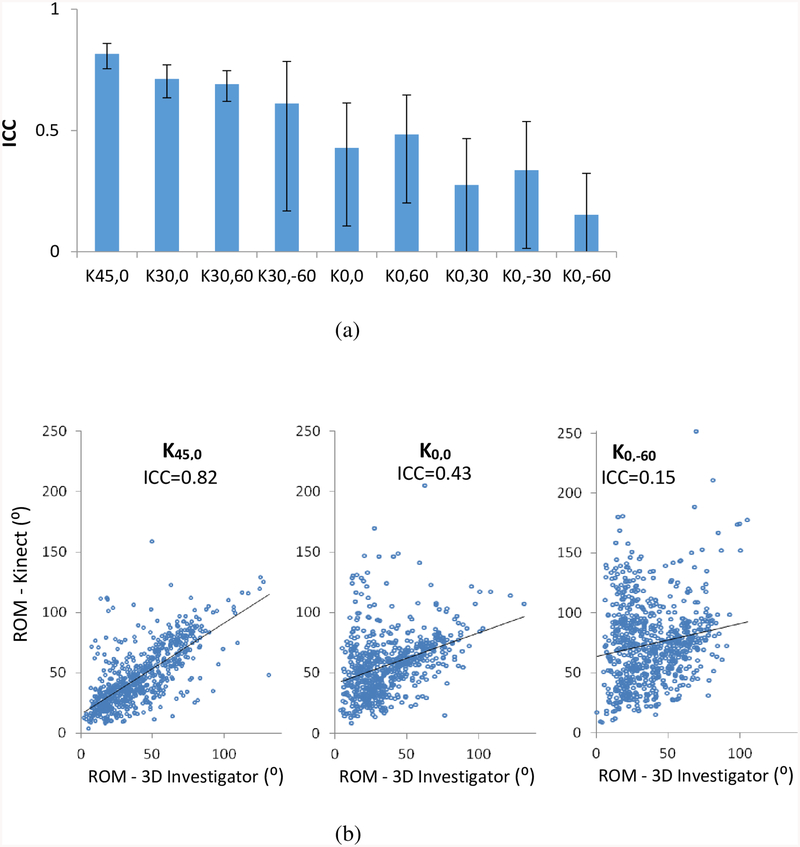

The agreement between the Kinect and 3D Investigator was further examined using ICC (Figure 5). Since the wrist range of motion error was greater than 20° for all Kinect locations which was deemed excessive for clinical assessment purposes (please see the detailed rationale provided in Discussion, Clinical Implication), ICC was computed using range of motion data of the three shoulder angles and the elbow angle, without the wrist data. The ICC was the highest for the K45,0 location and the lowest for the K0,−60 location (Figure 5a). The correlation plots (Figure 5b) illustrate the relationship between the range of motion measurements from the two systems for the K45,0 location with the highest ICC, the standard K0,0 location, and the K0,−60 location with the lowest ICC.

Figure 5:

Intraclass correlation (ICC) between the Kinect and 3D Investigator in the range of motion (ROM) measurement for all shoulder and elbow angles is shown for each Kinect location (a). The error bars indicate 95% confidence interval. Correlation plots between the Kinect and 3D Investigator for the ROM measurement of all shoulder and elbow angles are shown for three Kinect locations (K45,0, K0,0, and K0,−60) (b).

4. Discussion

Effects of the Kinect placement

This study demonstrated that accuracy of the Kinect sensor for detecting upper limb joint ranges of motion during target reaching motion depends on its location relative to a subject. Specifically, the least mean error in the range of motion measurement with the highest ICC was obtained by placing the Kinect at an elevation angle of 45° in front of the subject and tilting Kinect to directly face the subject (Figure 3a,c, Figure 5a). The conventional Kinect location of right in front, facing the subject (K0,0 with 0° azimuth angle and 0° elevation angle27), was outperformed by the Kinect locations of K45,0, K30,0, and K30,60 (Figure 3a, Figure 5a). By changing the Kinect location from the conventional K0,0 to K45,0, the mean error in the range of motion decreased approximately by half (Figure 3a). The largest error in the range of motion was obtained with the Kinect sensor placed on the left side of the subject at the elevation angle of 0° while the right upper limb motion was tracked.

In the absence of these data, one might postulate that the least error would be obtained by positioning the Kinect to the side of the reaching arm (e.g., K0,−60 or K0,60 locations), so that joint angles are captured primarily with the Kinect’s RGB camera, which is more accurate than the Kinect’s depth sensor.28 However, that postulation was not supported by the data. In particular, the largest error in the range of motion as well as the lowest ICC for the K0,−60 location (on the left side of the subject) may have occurred because the Kinect’s view of the right arm could have been obstructed by the trunk and the left arm, resulting in poor detection of the right arm motion.56 On the other hand, the least error in the range of motion along with the highest ICC was achieved when the Kinect was located in the center of the targets (K45,0, Figure 2). As most targets were at or above the shoulder level, elevation of the Kinect sensor above the shoulder level (K45,0 and K30,_) resulted in less error in the range of motion compared to the Kinect placed at the shoulder level (K0,_) (Figure 3a). This Kinect location in the center of the targets may have resulted in the least error in the range of motion because the upper extremity came closer to the Kinect sensor during pointing, and a shorter distance from the Kinect sensor is associated with less error in depth estimation.28, 52

Comparison to previous studies

Consistent with previous studies, the Kinect detected movements of the shoulder and elbow joints more accurately than the wrist.2, 30, 57 Specifically, the mean error in the range of motion was the least for the shoulder elevation angle followed by the elbow angle, while the mean error in the range of motion was the largest for the wrist angle followed by the shoulder axial rotation angle (Figure 3b). The largest error in the range of motion for the wrist angle may be associated with a challenge in detecting the hand, which is small compared to the other upper limb parts, and/or with the small distance between the wrist and the hand, with which a small error in wrist or hand position estimation may result in a large error in the angle. Also, it is possible that during reaching, the hand may have reached closer to the boundary of the Kinect’s capture volume than the proximal upper limb did, which could increase estimation error for the hand position and thus the wrist joint angle.28 The second largest error in the range of motion for the shoulder axial rotation angle may be related to involvement of three vectors in the joint angle computation as opposed to only two vectors for all other joint angles, since including a larger number of error-prone estimates in a calculation results in greater accumulated error.

The error in the range of motion observed in this study was similar in magnitude to that of previous studies. For instance, the mean error in the range of motion and standard deviation of the shoulder elevation of 7° ± 10° for the K0,0 location found in the present study was comparable to the mean shoulder elevation error of 10° ± 6° across previous studies.1, 3–5, 58, 59 The mean error in the range of motion for the elbow angle of 14° ± 32° for the K0,0 location in the present study was also comparable to the mean elbow angle error of 10° ± 10° across previous studies.1, 4–6, 58 In addition, the Kinect’s tendency to overestimate joint angles seen in the present study is consistent with previous studies.1, 29

Clinical implication

The present study provides an objective dataset that can be used in designing a Kinect setup for upper limb rehabilitation applications. The need for accuracy changes depending on specific applications and goals. For example, larger Kinect error may be tolerated for applications that motivate patients to move the upper limb repeatedly by engaging them in an interesting virtual reality game environment. Yet, too large a Kinect error (e.g., > 45°) could be frustrating rather than motivating to patients, as they may feel that the Kinect does not detect their motion well and the system does not work well. For assessment of rehabilitation recovery, Kinect error less than 20° may be desired, as the inter-rater standard deviation in upper limb joint angle estimation is up to 20°60 and additional shoulder elevation needed to reach one higher level of a standard kitchen shelf is approximately 20° based on anthropometry data.61 For that limit, the present study suggests the following. The Kinect appears to be adequate for detecting the shoulder elevation range of motion, as the error in the range of motion was less than 15° for all Kinect locations. However, if the target measure includes the elbow and shoulder plane of elevation angles, the Kinect sensor may be placed with elevation to minimize error in detecting the upper limb joint range of motion during target reaching motion. In addition, the Kinect may not be adequate for assessing the wrist range of motion, as this error was greater than 20° for all Kinect locations. For example, it may be inadequate to use the Kinect as a tool to monitor if a patient becomes eligible for a constraint-induced movement therapy that requires 30° wrist extension, as the 95% CI of the wrist range of motion error includes or exceeds 30°. In summary, having this detailed information about joint angle estimation error helps guide use of the Kinect for upper limb reaching rehabilitation applications.

Limitations

There are ways to increase accuracy of the Kinect such as use of a Kalman filter,40 calibration against conventional research-purpose motion capture data to adjust the Kinect data,62, 63 and sensor fusion.41, 64 However, the present study used the manufacturer-provided Kinect for Windows SDK to obtain joint position data and did not employ additional calibration procedures. Using the same physical sensor with another SDK with a different detection algorithm may lead to different magnitudes of error. Secondly, the error in the upper limb joint range of motion reported in this study may be specific to upper limb reaching motion toward targets at or above the shoulder height of seated persons. Generalizability to other specific motions was not examined in the present study. Thirdly, the present study tested healthy adults in order to cover wide joint ranges of motion observed during reaching. Patients with severe upper limb spasticity due to neurologic disorders may not have wide joint ranges of motion and the Kinect may have difficulty distinguishing upper limb segments from each other or from the trunk when the limb is tight. Lastly, a small sample size was used in this study, and the generalizability to the healthy population at large may be limited.

5. Conclusion

The location of the Kinect sensor relative to a subject can affect its accuracy in detection of upper limb joint angle ranges of motion. The detailed information regarding the measurement error can be used to evaluate how much error is expected for each Kinect location and for each joint angle. This finding can be used for better placement of the Kinect sensor and understanding of its accuracy in future studies using the Kinect for upper limb motion detection. The results of this study have implications for low-cost virtual reality applications such as rehabilitation games, assessment, and telemedicine.

Acknowledgment

This project was supported by the grant number 5R24HD065688–04 from the Eunice Kennedy Shriver National Institute of Child Health and Human Development, as part of the Medical Rehabilitation Research Infrastructure Network.

Footnotes

Conflicts of interest: none

Bibliography

- 1.Kurillo G, Chen A, Bajcsy R, Han JJ. Evaluation of upper extremity reachable workspace using kinect camera. Technol. Health Care. 2013;21:641–656 [DOI] [PubMed] [Google Scholar]

- 2.Mobini A, Behzadipour S, Saadat Foumani M. Accuracy of kinect’s skeleton tracking for upper body rehabilitation applications. Disabil Rehabil Assist Technol. 2014;9:344–352 [DOI] [PubMed] [Google Scholar]

- 3.Fern’ndez-Baena A, Susin A, Lligadas X. Biomechanical validation of upper-body and lower-body joint movements of kinect motion capture data for rehabilitation treatments. Intelligent Networking and Collaborative Systems (INCoS), 2012 4th International Conference on. 2012:656–661 [Google Scholar]

- 4.Hawi N, Liodakis E, Musolli D, Suero EM, Stuebig T, Claassen L, et al. Range of motion assessment of the shoulder and elbow joints using a motion sensing input device: A pilot study. Technology and health care: official journal of the European Society for Engineering and Medicine. 2014;22:289–295 [DOI] [PubMed] [Google Scholar]

- 5.Bonnechere B, Jansen B, Salvia P, Bouzahouene H, Omelina L, Moiseev F, et al. Validity and reliability of the kinect within functional assessment activities: Comparison with standard stereophotogrammetry. Gait Posture. 2014;39:593–598 [DOI] [PubMed] [Google Scholar]

- 6.Tao G, Archambault PS, Levin MF. Evaluation of kinect skeletal tracking in a virtual reality rehabilitation system for upper limb hemiparesis. 2013 International Conference on Virtual Rehabilitation (ICVR), Philadelphia, PA 2013:164–165 [Google Scholar]

- 7.Han JJ, Kurillo G, Abresch RT, Nicorici A, Bajcsy R. Validity, reliability, and sensitivity of a 3d vision sensor-based upper extremity reachable workspace evaluation in neuromuscular diseases. PLoS Curr. 2013;5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Kurillo G, Han JJ, Obdrzalek S, Yan P, Abresch RT, Nicorici A, et al. Upper extremity reachable workspace evaluation with kinect. Stud. Health Technol. Inform 2013;184:247–253 [PubMed] [Google Scholar]

- 9.Oskarsson B, Joyce NC, de Bie E, Nicorici A, Bajcsy R, Kurillo G, et al. Upper extremity 3D reachable workspace assessment in ALS by Kinect sensor. Muscle Nerve. 2015.

- 10.Han JJ, Kurillo G, Abresch RT, De Bie E, Nicorici A, Bajcsy R. Upper extremity 3-dimensional reachable workspace analysis in dystrophinopathy using Kinect. Muscle Nerve. 2015;52:344–355.

- 11.Han JJ, Kurillo G, Abresch RT, de Bie E, Nicorici A, Bajcsy R. Reachable workspace in facioscapulohumeral muscular dystrophy (FSHD) by Kinect. Muscle Nerve. 2015;51:168–175.

- 12.Stone EE, Skubic M. Passive in-home measurement of stride-to-stride gait variability comparing vision and Kinect sensing. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:6491–6494.

- 13.Lowes LP, Alfano LN, Yetter BA, Worthen-Chaudhari L, Hinchman W, Savage J, et al. Proof of concept of the ability of the Kinect to quantify upper extremity function in dystrophinopathy. PLoS Curr. 2013;5.

- 14.Nixon ME, Howard AM, Chen Y-P. Quantitative evaluation of the Microsoft Kinect™ for use in an upper extremity virtual rehabilitation environment. 2013 International Conference on Virtual Rehabilitation (ICVR), Philadelphia, PA. 2013:222–228.

- 15.Brokaw EB, Eckel E, Brewer BR. Usability evaluation of a kinematics focused Kinect therapy program for individuals with stroke. Technol. Health Care. 2015.

- 16.Bao X, Mao Y, Lin Q, Qiu Y, Chen S, Li L, et al. Mechanism of Kinect-based virtual reality training for motor functional recovery of upper limbs after subacute stroke. Neural Regen Res. 2013;8:2904–2913.

- 17.Seo NJ, Arun Kumar J, Hur P, Crocher V, Motawar B, Lakshminarayan K. Usability evaluation of low-cost hand and arm virtual reality rehabilitation games. J. Rehabil. Res. Dev. 2016;53:in press.

- 18.Chang YJ, Chen SF, Huang JD. A Kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. Res. Dev. Disabil. 2011;32:2566–2570.

- 19.Lee G. Effects of training using video games on the muscle strength, muscle tone, and activities of daily living of chronic stroke patients. Journal of Physical Therapy Science. 2013;25:595–597.

- 20.Lange B, Chang CY, Suma E, Newman B, Rizzo AS, Bolas M. Development and evaluation of low cost game-based balance rehabilitation tool using the Microsoft Kinect sensor. Conf Proc IEEE Eng Med Biol Soc. 2011:1831–1834.

- 21.Chang C-Y, Lange B, Zhang M, Koenig S, Requejo P, Somboon N, et al. Towards pervasive physical rehabilitation using Microsoft Kinect. 6th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth), San Diego, CA. 2012:159–162.

- 22.Dukes PS, Hayes A, Hodges LF, Woodbury M. Punching ducks for post-stroke neurorehabilitation: System design and initial exploratory feasibility study. 3D User Interfaces (3DUI), 2013 IEEE Symposium on. 2013:47–54.

- 23.Anton D, Goni A, Illarramendi A. Exercise recognition for Kinect-based telerehabilitation. Methods Inf. Med. 2015;54:145–155.

- 24.Levac D, Espy D, Fox E, Pradhan S, Deutsch JE. “Kinect-ing” with clinicians: A knowledge translation resource to support decision making about video game use in rehabilitation. Phys. Ther. 2015;95:426–440.

- 25.Hondori H, Khademi M. A review on technical and clinical impact of Microsoft Kinect on physical therapy and rehabilitation. Journal of Medical Engineering. 2014;2014.

- 26.Morrison C, Culmer P, Mentis H, Pincus T. Vision-based body tracking: Turning Kinect into a clinical tool. Disabil Rehabil Assist Technol. 2014:1–5.

- 27.Microsoft. More about Kinect sensor placement, http://support.xbox.com/en-us/xbox-360/kinect/sensor-placement. 2015; March 24, 2015.

- 28.Dutta T. Evaluation of the Kinect sensor for 3-D kinematic measurement in the workplace. Appl. Ergon. 2012;43:645–649.

- 29.Pfister A, West AM, Bronner S, Noah JA. Comparative abilities of Microsoft Kinect and Vicon 3D motion capture for gait analysis. J. Med. Eng. Technol. 2014;38:274–280.

- 30.Galna B, Barry G, Jackson D, Mhiripiri D, Olivier P, Rochester L. Accuracy of the Microsoft Kinect sensor for measuring movement in people with Parkinson’s disease. Gait Posture. 2014;39:1062–1068.

- 31.Subramanian SK, Lourenco CB, Chilingaryan G, Sveistrup H, Levin MF. Arm motor recovery using a virtual reality intervention in chronic stroke: Randomized control trial. Neurorehabil Neural Repair. 2013;27:13–23.

- 32.Levin MF, Knaut LA, Magdalon EC, Subramanian S. Virtual reality environments to enhance upper limb functional recovery in patients with hemiparesis. Stud. Health Technol. Inform. 2009;145:94–108.

- 33.Parry I, Carbullido C, Kawada J, Bagley A, Sen S, Greenhalgh D, et al. Keeping up with video game technology: Objective analysis of Xbox Kinect and PlayStation 3 Move for use in burn rehabilitation. Burns. 2014;40:852–859.

- 34.de Oliveira Cacho R, Cacho EW, Ortolan RL, Cliquet AJ, Borges G. Trunk restraint therapy: The continuous use of the harness could promote feedback dependence in poststroke patients: A randomized trial. Medicine (Baltimore). 2015;94:e641.

- 35.Exell T, Freeman C, Meadmore K, Kutlu M, Rogers E, Hughes A-M, et al. Goal orientated stroke rehabilitation utilising electrical stimulation, iterative learning and Microsoft Kinect. Rehabilitation Robotics (ICORR), 2013 IEEE International Conference on. 2013:1–6.

- 36.Kim CY, Lee JS, Lee JH, Kim YG, Shin AR, Shim YH, et al. Effect of spatial target reaching training based on visual biofeedback on the upper extremity function of hemiplegic stroke patients. Journal of Physical Therapy Science. 2015;27:1091–1096.

- 37.Brown SH, Napier R, Nelson VS, Yang LJ. Home-based movement therapy in neonatal brachial plexus palsy: A case study. J. Hand Ther. 2014.

- 38.Hingtgen B, McGuire JR, Wang M, Harris GF. An upper extremity kinematic model for evaluation of hemiparetic stroke. J. Biomech. 2006;39:681–688.

- 39.Cirstea MC, Levin MF. Compensatory strategies for reaching in stroke. Brain. 2000;123 (Pt 5):940–953.

- 40.Adams RJ, Lichter MD, Krepkovich ET, Ellington A, White M, Diamond PT. Assessing upper extremity motor function in practice of virtual activities of daily living. Neural Systems and Rehabilitation Engineering, IEEE Transactions on. 2015;23:287–296.

- 41.Hondori HM, Khademi M, Lopes CV. Monitoring intake gestures using sensor fusion (Microsoft Kinect and inertial sensors) for smart home tele-rehab setting. 2012 1st Annual IEEE Healthcare Innovation Conference. 2012.

- 42.Bergsma A, Murgia A, Cup EH, Verstegen PP, Meijer K, de Groot IJ. Upper extremity kinematics and muscle activation patterns in subjects with facioscapulohumeral dystrophy. Arch. Phys. Med. Rehabil. 2014;95:1731–1741.

- 43.Hudak PL, Amadio PC, Bombardier C. Development of an upper extremity outcome measure: The DASH (disabilities of the arm, shoulder and hand) [corrected]. The Upper Extremity Collaborative Group (UECG). Am. J. Ind. Med. 1996;29:602–608.

- 44.Apker GA, Buneo CA. Contribution of execution noise to arm movement variability in three-dimensional space. J. Neurophysiol. 2012;107:90–102.

- 45.van Beers RJ, Haggard P, Wolpert DM. The role of execution noise in movement variability. J. Neurophysiol. 2004;91:1050–1063.

- 46.Fernandes HL, Albert MV, Kording KP. Measuring generalization of visuomotor perturbations in wrist movements using mobile phones. PLoS One. 2011;6:e20290.

- 47.Newkirk JT, Tomšič M, Crowell CR, Villano MA, Stanišić MM. Measurement and quantification of gross human shoulder motion. Applied Bionics and Biomechanics. 2013;10:159–173.

- 48.Zabihhosseinian M, Holmes MW, Murphy B. Neck muscle fatigue alters upper limb proprioception. Exp. Brain Res. 2015;233:1663–1675.

- 49.Hur P, Wan Y-H, Seo NJ. Investigating the role of vibrotactile noise in early response to perturbation. IEEE Transactions on Biomedical Engineering. 2014;61:1628–1633.

- 50.Kaipust JP, McGrath D, Mukherjee M, Stergiou N. Gait variability is altered in older adults when listening to auditory stimuli with differing temporal structures. Ann. Biomed. Eng. 2013;41:1595–1603.

- 51.Microsoft. Coordinate spaces, https://msdn.microsoft.com/en-us/library/hh973078.aspx#depth_ranges. 2015; March 24, 2015.

- 52.Khoshelham K, Elberink SO. Accuracy and resolution of Kinect depth data for indoor mapping applications. Sensors (Basel). 2012;12:1437–1454.

- 53.Mackey AH, Walt SE, Stott NS. Deficits in upper-limb task performance in children with hemiplegic cerebral palsy as defined by 3-dimensional kinematics. Arch. Phys. Med. Rehabil. 2006;87:207–215.

- 54.Wu G, van der Helm FC, Veeger HE, Makhsous M, Van Roy P, Anglin C, et al. ISB recommendation on definitions of joint coordinate systems of various joints for the reporting of human joint motion--part II: Shoulder, elbow, wrist and hand. J. Biomech. 2005;38:981–992.

- 55.Soechting JF, Buneo CA, Herrmann U, Flanders M. Moving effortlessly in three dimensions: Does Donders’ law apply to arm movement? J. Neurosci. 1995;15:6271–6280.

- 56.Microsoft. Skeletal tracking, http://msdn.microsoft.com/en-us/library/hh973074.aspx. 2015; March 24, 2015.

- 57.van Diest M, Stegenga J, Wortche HJ, Postema K, Verkerke GJ, Lamoth CJ. Suitability of Kinect for measuring whole body movement patterns during exergaming. J. Biomech. 2014;47:2925–2932.

- 58.Kitsunezaki N, Adachi E, Masuda T, Mizusawa J-i. Kinect applications for the physical rehabilitation. Medical Measurements and Applications Proceedings (MeMeA), 2013 IEEE International Symposium on. 2013:294–299.

- 59.Choppin S, Wheat J. The potential of the Microsoft Kinect in sports analysis and biomechanics. Sports Technology. 2013;6:78–85.

- 60.Coenen P, Kingma I, Boot CR, Bongers PM, van Dieen JH. Inter-rater reliability of a video-analysis method measuring low-back load in a field situation. Appl. Ergon. 2013;44:828–834.

- 61.Wickens CD, Lee J, Liu Y, Becker S. An introduction to human factors engineering. 2004.

- 62.Bonnechere B, Jansen B, Salvia P, Bouzahouene H, Sholukha V, Cornelis J, et al. Determination of the precision and accuracy of morphological measurements using the Kinect sensor: Comparison with standard stereophotogrammetry. Ergonomics. 2014;57:622–631.

- 63.Clark RA, Pua YH, Bryant AL, Hunt MA. Validity of the Microsoft Kinect for providing lateral trunk lean feedback during gait retraining. Gait Posture. 2013;38:1064–1066.

- 64.Bo A, Hayashibe M, Poignet P. Joint angle estimation in rehabilitation with inertial sensors and its integration with Kinect. EMBC’11: 33rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 2011:3479–3483.