Abstract

Our attention is strongly influenced by reward learning. Stimuli previously associated with monetary reward have been shown to automatically capture attention in both behavioral and neurophysiological studies. Stimuli previously associated with positive social feedback similarly capture attention; however, it is unknown whether such social facilitation of attention relies on similar or dissociable neural systems. Here, we used the value-driven attentional capture paradigm in an fMRI study to identify the neural correlates of attention to stimuli previously associated with social reward. The results reveal learning-dependent priority signals in the contralateral visual cortex, posterior parietal cortex, and caudate tail, similar to studies using monetary reward. An additional priority signal was consistently evident in the right middle frontal gyrus (MFG). Our findings support the notion of a common neural mechanism for directing attention on the basis of selection history that generalizes across different types of reward.

Keywords: Selective attention, value-driven attention, social reward, fMRI

Introduction

Attention is a mechanism that selectively filters perceptual information to determine what is represented in the brain (Desimone & Duncan, 1995). Attention can at times be goal-directed, prioritizing information according to context-specific goals, such as when someone searches through a desk for misplaced keys (Corbetta & Shulman, 2002; Folk et al., 1992; Wolfe et al., 1989). Stimuli that possess high informational value, such as the eyes and mouth of a face, tend to be preferentially attended (Dupierrix et al., 2014; Emery, 2000; Janik et al., 1978; McKelvie, 1976). However, what we attend to is not always under our control. Physically salient stimuli can automatically capture attention (Theeuwes, 1992, 2010), and how we direct attention is also shaped by associative learning. Stimuli that signal the availability of reward draw attention (Anderson & Yantis, 2013; Della Libera & Chelazzi, 2006; Engelmann & Pessoa, 2007; Hickey et al., 2010), as do stimuli previously associated with aversive outcomes (Schmidt et al., 2015; Wang et al., 2013). The influence of reward history on attention has been shown to persist even when previously reward-associated stimuli are inconspicuous, currently task-irrelevant, and no longer predictive of reward, suggesting that attention can be fundamentally value-driven (Anderson et al., 2011).

Studies of value-driven attention have typically examined the consequences of associative learning on information processing using monetary reward as feedback (e.g., Anderson, 2016a; Anderson & Yantis, 2013; Della Libera & Chelazzi, 2006; Failing & Theeuwes, 2017). Only a few studies have used other types of reward, such as food (Pool et al., 2014), water (Seitz et al., 2009), and pleasant sounds (Miranda & Palmer, 2014), all of which produce similar attentional biases toward the stimuli with which they were paired during training. Stimuli associated with drug reward also capture attention in drug-dependent patients (Field & Cox, 2008), which is further consistent with a general principle by which associative learning modifies the attentional priority of cues for reward (Anderson, 2016c).

Recent evidence extends the principle of value-driven attention to learning from social outcomes. Stimuli reliably followed by a face bearing a positive (happy; Anderson, 2016b; Anderson & Kim, 2018) or negative (angry; Anderson, 2017a) expression come to automatically capture attention, and spatial cues predicting social reward (credit earned for another person) more effectively guide attention (Hayward et al., 2018). Such evidence fits with the idea that positive and negative social outcomes evoke reward and punishment signals that are similarly capable of shaping attention.

Several studies have probed the neural mechanisms of value-driven attention using monetary reward. The neural correlates of attentional capture by reward cues include early visual cortex (Hopf et al., 2015; MacLean & Giesbrecht, 2015; Serences, 2008; Serences & Saproo, 2010), ventral visual cortex (Anderson, 2017b; Anderson et al., 2014; Barbaro et al., 2017; Donohue et al., 2016; Hickey & Peelen, 2015, 2017), posterior parietal cortex (Anderson, 2017b; Anderson et al., 2014; Barbaro et al., 2017; Lee & Shomstein, 2013; Qi et al., 2013), and the caudate tail (Anderson et al., 2014, 2016; Kim & Hikosaka, 2013; Yamamoto et al., 2013), which have collectively been referred to as the value-driven attention network (Anderson, 2017b). The caudate tail in particular has not been implicated in goal-directed or stimulus-driven attention (Corbetta et al., 2008; Corbetta & Shulman, 2002; Moore & Zirnsak, 2017) and may be specific to value-driven attentional orienting (Anderson, 2016a). Whether social reward similarly recruits this attention network, or whether distinct neural systems are engaged in directing attention on the basis of social utility, is not known, and evidence suggestive of either possibility can be found in the literature.

On the one hand, some neuroimaging studies exploring social reward in the context of reputation and verbal praise have found reward-related activation of the striatum (Izuma et al., 2008, 2010; Zink et al., 2008). One study directly comparing monetary gain and reputation as a social reward found common activation in the striatum for both types of outcomes (Wake & Izuma, 2017). Such evidence is consistent with a “common neural currency” for reward that both money and positive social outcomes can recruit, which could serve as input to the attention system. On the other hand, studies in the field of social neuroscience have implicated multiple brain regions that appear to be preferentially recruited by social information processing, including the superior temporal sulcus (Deen et al., 2015), the ventromedial prefrontal cortex (vmPFC) in social evaluation (Moor et al., 2010), the superior frontal gyrus and paracingulate gyrus in social norm processing (Bas-Hoogendam et al., 2017), and a region of the fusiform gyrus in the perception of faces and emotional pictures with human forms (Geday et al., 2003; Kanwisher et al., 1997).

In the present study, we probed whether the value-driven attention network consistently recruited by monetary reward cues would be similarly recruited following training with social reward, which would suggest that positive social outcomes bias attention via a “common currency” for reward. We were also interested in whether evidence could be found for a distinctly social component of value-driven attention not previously observed in attention studies using monetary reward. To this end, we adapted the paradigm previously used by Anderson et al. (2014) to study value-driven attention, substituting images of faces bearing positive and neutral expressions for monetary reward (as in Anderson, 2016b). Participants were trained to associate two color-defined targets with different probabilities of positive social feedback, and these same colors then served as distractors in a shape-search task in which color was explicitly task-irrelevant. We measured neural responses and decrements in behavioral performance (indicative of attentional capture) associated with the presence of the critical distractors.

Methods

Participants

Twenty-eight participants were recruited from the Texas A&M University community. One participant withdrew from the experiment before completing the brain scans, and three participants were not scanned because they did not meet the performance criteria for the behavioral task during their in-lab visit. Twenty-four participants were fully scanned (10 female, ages 18–29 [M = 21.9 y]), and eye-tracking data were collected from 22 of these participants. All participants reported normal or corrected-to-normal visual acuity and normal color vision. All procedures were approved by the Texas A&M University Institutional Review Board and were conducted in accordance with the principles expressed in the Declaration of Helsinki. Written informed consent was obtained from each participant.

Task Procedure

Participants were scheduled for an initial 1-hr in-lab visit and a scan-center visit on the following day. During the initial appointment, participants provided informed consent, completed MRI safety screening, and were screened for adequate performance on the behavioral task. Participants first practiced and then completed the test phase of the task, which included built-in performance checks requiring at least 85% accuracy and a mean response time below 1000 ms to continue. Next, participants practiced the training phase of the task, with social feedback, for three runs; they were instructed that a mean accuracy of 85% across the three runs was required to be eligible for scanning. Each eligible participant underwent fMRI in a single 1.5-hr session the following day, completing two runs of the training phase, three runs of the test phase, an anatomical scan, and finally one additional run of the training phase followed by two additional runs of the test phase. The final “top-up” training run was completed to re-instantiate the color–outcome associations and protect against possible extinction (see, e.g., Lee & Shomstein, 2014).

Apparatus

During the initial in-lab visit, all tasks were completed on a Dell OptiPlex 7040 computer running MATLAB with Psychophysics Toolbox extensions (Brainard, 1997). Stimuli were presented on a Dell P2717H monitor, which participants viewed from a distance of approximately 70 cm in a dimly lit room. Eye tracking was conducted using an EyeLink 1000 Plus system, and head position was maintained using a manufacturer-provided chin rest (SR Research Ltd.). For the fMRI portion of the experiment, stimulus presentation was controlled by an Invivo SensaVue display system, and the eye-to-screen distance was approximately 125 cm. Responses were entered using a Cedrus Lumina two-button response pad, and an EyeLink 1000 Plus system was again used to track eye position.

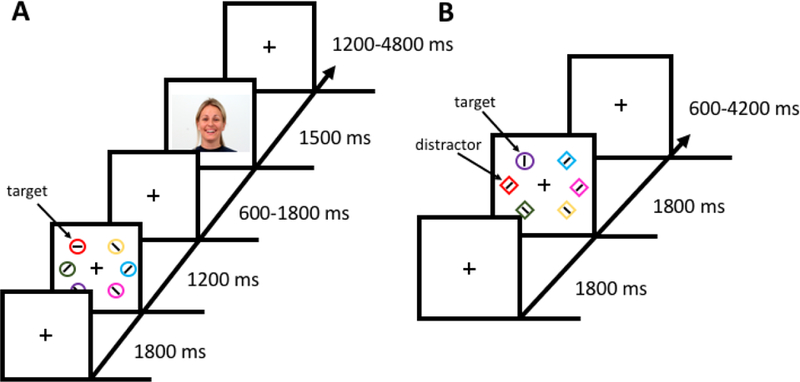

Training Phase

Each run of the training phase consisted of 60 trials. Each trial began with a fixation display (1800 ms), followed by a search array (1200 ms), an inter-stimulus interval (ISI), a social feedback display (1500 ms), and an inter-trial interval (ITI) (see Fig. 1A). The fixation display consisted of a fixation cross (0.7° × 0.7° visual angle) at the center of the screen. During the search array, participants searched for a target circle that was unpredictably red or green and reported the orientation of a bar within the target (vertical or horizontal) via button press. Half of the trials in each run contained a red target and half contained a green target. Each target color appeared equally often at every position across trials, and trial order was randomized for each run. Each circle in the search array was 4.5° visual angle in diameter. Stimuli on the left and right sides were 8.2° and 10.6° visual angle from the vertical meridian. Vertically, stimuli were 8.2° visual angle above and below the horizontal meridian. The colors of the non-targets were drawn from the set [blue, cyan, pink, orange, yellow, white] without replacement. The ISI lasted 600, 1200, or 1800 ms (equally distributed). Positive-valence (happy) and neutral-valence faces for the social feedback were taken from the Warsaw Set of Emotional Facial Expression Pictures (Olszanowski et al., 2015). The faces were those of 12 male and 12 female models, each contributing both a positive and a neutral expression. One target color predicted a positive-valence face during the social feedback display on 80% of trials and a neutral face on 20% (high-valence target), whereas for the other (low-valence) target color these percentages were reversed. The color-to-valence mapping was counterbalanced across participants. Feedback was unrelated to task performance; it could be predicted only from the target color.
Finally, the ITI lasted 1200, 3000, or 4800 ms (exponentially distributed). The fixation cross disappeared for the last 200 ms of the ITI to signal to the participant that the next trial was about to begin.
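The counterbalancing constraints for a training run can be made concrete with a short trial-list generator. This is a hypothetical Python sketch, not the authors' experiment code: the position indices, the function name `make_training_run`, and the choice to fix the contingency at exactly 24/6 positive/neutral faces per color within each run (rather than sampling feedback probabilistically) are all assumptions. The exponentially distributed ITI is omitted because its exact proportions are not reported.

```python
import random
from collections import Counter

# Hypothetical sketch of one 60-trial training run; not the original task code.
N_POSITIONS = 6                      # six search-array locations (indices assumed)
NONTARGET_COLORS = ["blue", "cyan", "pink", "orange", "yellow", "white"]
ISIS_MS = [600, 1200, 1800]          # equally distributed across the run


def make_training_run(high_color="red", low_color="green", seed=None):
    rng = random.Random(seed)
    trials = []
    for color in (high_color, low_color):
        # 30 trials per target color, 5 at each of the 6 positions.
        positions = [pos for pos in range(N_POSITIONS) for _ in range(5)]
        # Assumption: the 80/20 contingency is fixed exactly within each run.
        n_positive = 24 if color == high_color else 6
        feedback = ["positive"] * n_positive + ["neutral"] * (30 - n_positive)
        rng.shuffle(feedback)
        for pos, fb in zip(positions, feedback):
            trials.append({
                "target_color": color,
                "target_pos": pos,
                "feedback": fb,
                # Colors for the five non-targets, drawn without replacement.
                "nontarget_colors": rng.sample(NONTARGET_COLORS, 5),
            })
    rng.shuffle(trials)              # randomize trial order for the run
    isis = ISIS_MS * 20              # 20 trials at each ISI
    rng.shuffle(isis)
    for trial, isi in zip(trials, isis):
        trial["isi_ms"] = isi
    return trials


run = make_training_run(seed=1)
print(Counter((t["target_color"], t["feedback"]) for t in run))
```

Swapping `high_color` and `low_color` implements the counterbalanced color-to-valence mapping.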

Figure 1.

Sequence and time course of trial events. (A) In the training phase, participants searched for a color-defined target (red or green) and reported the orientation of the bar within the target as vertical or horizontal. One target color yielded a reward in the form of a positive-valence face on 80% of trials and a neutral-valence face on 20% of trials (high-valence target), whereas the other target color yielded a positive-valence face on only 20% of trials and a neutral-valence face on 80% of trials (low-valence target). (B) In the test phase, participants searched for a shape-singleton target (a diamond among circles or a circle among diamonds), and the color of the shapes was irrelevant to the task. On a subset of trials, one of the non-targets was rendered in the previously trained high-valence or low-valence target color (high-valence or low-valence distractor).

Test Phase

Each run of the test phase consisted of 60 trials. Each trial began with a fixation display (1800 ms), followed by a search array (1800 ms) and an ITI (see Fig. 1B). During the search array, participants reported the orientation of a bar within the uniquely shaped target (vertical or horizontal) via button press. The color of the shapes was irrelevant to the task. On one-third of trials, one of the non-target shapes was rendered in the color of the previously high-valence target (high-valence distractor trials); on another third, it was rendered in the color of the previously low-valence target (low-valence distractor trials); and on the remaining third, none of the shapes was red or green (distractor-absent trials). The target shape was never red or green. The target appeared equally often in each position in each run. When present, a color distractor appeared on the side of the screen opposite the target on 3/5 of trials and on the same side on the remaining 2/5 (i.e., each non-target position was equally likely). Trial order was randomized for each run. The size and positions of the stimuli, as well as the set of non-target colors, were identical to the training phase. Finally, the ITI lasted 600, 2400, or 4200 ms (equally distributed). As in training, the fixation cross disappeared for the last 200 ms of the ITI to signal to the participant that the next trial was about to begin.
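The 3/5 versus 2/5 split follows directly from placing the distractor uniformly at random among the five non-target locations, three of which lie in the hemifield opposite the target. A minimal Python check, assuming a hypothetical layout with three locations per hemifield (consistent with the left/right placement described for the training phase) and an exact three-way split of the 60 trials in a run:

```python
from fractions import Fraction

# Hypothetical layout: positions 0-2 in the left hemifield, 3-5 in the right.
SIDE = {0: "left", 1: "left", 2: "left", 3: "right", 4: "right", 5: "right"}


def p_opposite_side(target_pos):
    """Probability that a distractor placed uniformly at random among the
    five non-target positions lands in the hemifield opposite the target."""
    nontargets = [p for p in SIDE if p != target_pos]
    opposite = [p for p in nontargets if SIDE[p] != SIDE[target_pos]]
    return Fraction(len(opposite), len(nontargets))


trials_per_condition = 60 // 3       # high-valence, low-valence, absent
print(p_opposite_side(0))            # 3/5
print(trials_per_condition * p_opposite_side(0))  # 12
```

Under these assumptions, about 12 of the 20 trials per distractor condition in a run place the distractor opposite the target, which is the subset used for the contralateral contrasts.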

Analysis of Behavioral Data

Only correct trials were included in the RT analyses. RTs more than 2.5 standard deviations above or below the mean for a given condition for a given participant were trimmed (as in Anderson et al., 2014; Anderson & Yantis, 2012).
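A minimal sketch of the trimming rule in Python, assuming a single pass (no iteration) and the sample standard deviation; the text specifies neither, and the function name `trim_rts` is hypothetical. It is meant to be applied to the correct-trial RTs of one condition for one participant.

```python
import statistics


def trim_rts(rts, criterion=2.5):
    """Keep RTs within `criterion` standard deviations of the condition mean.

    Single pass; uses the sample SD (both are assumptions)."""
    mean = statistics.mean(rts)
    sd = statistics.stdev(rts)
    return [rt for rt in rts if abs(rt - mean) <= criterion * sd]


# Toy example: twenty 500-ms responses and one 2000-ms outlier.
rts = [500] * 20 + [2000]
print(len(trim_rts(rts)))   # 20
```

Because the outlier inflates the SD it is tested against, very small samples cannot exceed the criterion; with 21 trials the maximum attainable z is about 4.4, so the rule still applies here.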

MRI data acquisition

Images were acquired using a Siemens 3-Tesla MAGNETOM Verio scanner with a 32-channel head coil at the Texas A&M Institute for Preclinical Studies (TIPS), College Station, TX. High-resolution whole-brain anatomical images were acquired using a T1-weighted magnetization-prepared rapid gradient echo (MPRAGE) pulse sequence [150 coronal slices, voxel size = 1 mm isotropic, repetition time (TR) = 7.9 ms, echo time (TE) = 3.65 ms, flip angle = 8°]. Whole-brain functional images were acquired using a T2*-weighted echo planar imaging (EPI) pulse sequence [56 axial slices, TR = 600 ms, TE = 29 ms, flip angle = 52°, image matrix = 96 × 96, field of view = 240 mm, slice thickness = 2.5 mm with no gap]. Each EPI pulse sequence began with dummy pulses to allow the MR signal to reach steady state and concluded with an additional 6-s blank epoch.
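As an arithmetic check on how the acquisition parameters relate to the design: the in-plane resolution is the field of view divided by the matrix size (240 mm / 96 = 2.5 mm), so with 2.5-mm slices the functional voxels were 2.5 mm isotropic, and 56 slices cover 140 mm. The sketch below also estimates the length of one test-phase run, assuming each of the three ITIs occurred on exactly 20 of the 60 trials; it ignores the dummy pulses, whose number is not reported.

```python
# Arithmetic check of acquisition and test-run timing (illustrative only).
FOV_MM, MATRIX = 240, 96
SLICE_MM, N_SLICES = 2.5, 56
TR_MS = 600

in_plane_mm = FOV_MM / MATRIX          # 2.5 mm in-plane resolution
coverage_mm = SLICE_MM * N_SLICES      # 140 mm slab, no gap

# Test phase: fixation (1800 ms) + search array (1800 ms) + ITI.
# Assumption: each ITI value occurs on exactly 20 of the 60 trials.
trial_ms = [1800 + 1800 + iti for iti in (600, 2400, 4200) for _ in range(20)]
run_ms = sum(trial_ms) + 6000          # plus the 6-s blank epoch at the end
n_volumes = run_ms // TR_MS            # excludes the unreported dummy pulses

print(in_plane_mm, coverage_mm, run_ms, n_volumes)   # 2.5 140.0 366000 610
# Every trial duration is a whole number of TRs, so onsets stay TR-locked.
print(all(t % TR_MS == 0 for t in trial_ms))          # True
```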

MRI Data Analyses

Preprocessing

All preprocessing was conducted using the AFNI software package (Cox, 1996). Each EPI run for each participant was motion corrected using the last image acquired prior to the anatomical scan as a reference. EPI images were then coregistered to the corresponding anatomical image for each participant. These images were then nonlinearly warped to the Talairach brain (Talairach & Tournoux, 1988) using 3dQwarp. Finally, the EPI images were converted to percent signal change normalized to the mean of each run and spatially smoothed to a final smoothness of 5 mm full width at half maximum (FWHM) using 3dBlurToFWHM.
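Run-wise percent-signal-change scaling can be sketched in a few lines of NumPy. This assumes the common convention in which each voxel's time series is divided by its run mean and multiplied by 100 (so the run mean maps to 100); subtracting the mean so that values are centered on zero is an equally common variant, and the text does not say which was used. Setting voxels with a non-positive mean (e.g., outside the brain) to zero is also an assumption.

```python
import numpy as np


def to_percent_signal_change(run_data):
    """Scale one EPI run (shape: n_timepoints x n_voxels) so that each
    voxel's time series is expressed as a percentage of its run mean."""
    run_data = np.asarray(run_data, dtype=float)
    run_mean = run_data.mean(axis=0)
    scaled = np.zeros_like(run_data)
    valid = run_mean > 0                       # skip empty / out-of-brain voxels
    scaled[:, valid] = 100.0 * run_data[:, valid] / run_mean[valid]
    return scaled


run = np.array([[90.0, 0.0], [110.0, 0.0]])    # 2 timepoints, 2 voxels
print(to_percent_signal_change(run))
```

Scaling each run separately removes run-to-run differences in baseline intensity, so FIR betas can be read directly as percent signal change.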

Statistical Analyses

All statistical analyses were performed using the AFNI software package. We used a general linear model (GLM) approach to analyze the test-phase data. The GLM included the following regressors of interest (as in Anderson et al., 2014): (1) target on left, no distractor; (2) target on right, no distractor; (3) target on left, high-valence distractor on same side; (4) target on left, high-valence distractor on opposite side; (5) target on right, high-valence distractor on same side; (6) target on right, high-valence distractor on opposite side; (7) target on left, low-valence distractor on same side; (8) target on left, low-valence distractor on opposite side; (9) target on right, low-valence distractor on same side; (10) target on right, low-valence distractor on opposite side. Each regressor of interest was modeled using 16 finite impulse response (FIR) functions beginning at stimulus onset. The six head-motion parameters and drift in the scanner signal were modeled as nuisance regressors. Trials on which the participant failed to make a motor response were excluded from the analyses. The peak beta value for each condition from 3–6 s after stimulus onset was extracted, and paired-samples t-tests compared the peak response on trials with a distractor present versus absent, separately for distractors in the left and right hemifields (as in Anderson et al., 2014). We focused these analyses on trials on which the target was presented in the hemifield opposite the distractor, thereby isolating the response to task-irrelevant stimuli in the contralateral hemifield as a function of reward history. The results were corrected for multiple comparisons using the AFNI program 3dClustSim, with the smoothness of the data estimated using the ACF method (clusterwise α < 0.05, voxelwise p < 0.005).
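The FIR approach and the 3–6-s peak window can be illustrated with a toy example. This is a hypothetical NumPy sketch rather than the AFNI model: it assumes one stick-function FIR regressor per TR (so the 16 regressors span 16 × 0.6 s = 9.6 s), onsets expressed in whole TRs, and a single noiseless condition with no nuisance regressors; AFNI's own FIR bases may be parameterized differently. With a 600-ms TR, the 3–6-s window covers lags 5 through 10.

```python
import numpy as np

TR_S = 0.6
N_LAGS = 16   # FIR regressors per condition, starting at stimulus onset


def fir_design(onsets_tr, n_volumes, n_lags=N_LAGS):
    """Design matrix for one condition: column k is 1 at volume onset + k."""
    X = np.zeros((n_volumes, n_lags))
    for onset in onsets_tr:
        for k in range(n_lags):
            if onset + k < n_volumes:
                X[onset + k, k] = 1.0
    return X


def peak_beta(betas, t_min=3.0, t_max=6.0, tr=TR_S):
    """Largest FIR beta whose post-onset time (lag * TR) lies in [t_min, t_max] s."""
    lag_times = np.arange(len(betas)) * tr
    in_window = (lag_times >= t_min - 1e-9) & (lag_times <= t_max + 1e-9)
    return betas[in_window].max()


# Toy check: simulate a noiseless response and recover it by least squares.
onsets = [0, 20, 40]
X = fir_design(onsets, n_volumes=60)
true_betas = np.sin(np.linspace(0, np.pi, N_LAGS))   # hypothetical response shape
y = X @ true_betas
betas, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.allclose(betas, true_betas), peak_beta(betas))
```

Because the FIR model estimates the response shape without assuming a canonical hemodynamic function, the peak can be read off whichever lag in the window is largest.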

Eye-tracking

During the fMRI scan, head position was restricted using foam padding within the head coil, and eye-tracking was conducted using the reflection of the participant’s face on the mirror attached to the head coil. Eye position was calibrated prior to each run of trials using 9-point calibration (Anderson & Yantis, 2012), and was manually drift corrected by the experimenter as necessary during the fixation display.

Results

Behavior

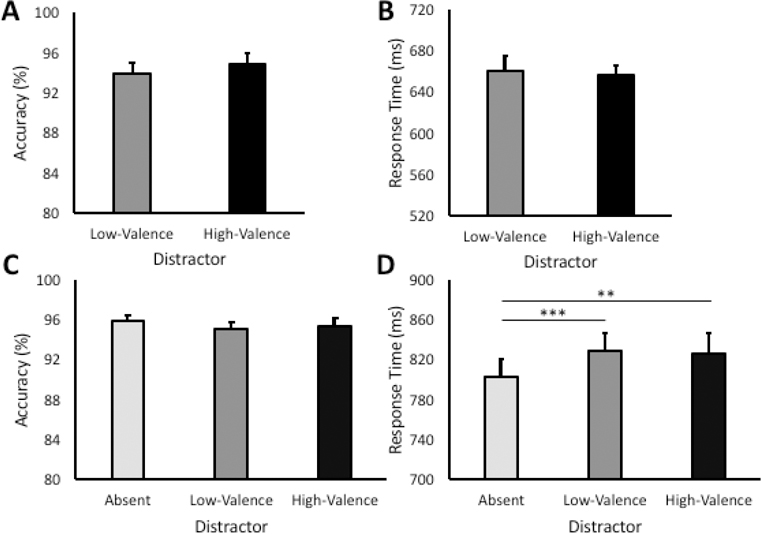

During the training phase, there were no reliable differences between high-valence and low-valence target trials in either accuracy, t(23) = 0.87, p = 0.392, or RT, t(23) = 0.53, p = 0.603 (see Fig. 2A and 2B). This is consistent with prior reports (Anderson, 2016b, 2017a; Anderson & Kim, 2018) and suggests that participants could easily manage the task of searching for two color-defined targets, with performance near ceiling.

Figure 2.

Behavioral results in the training and test phase. (A) Accuracy in the training phase by target condition. (B) Response time in the training phase by target condition. (C) Accuracy during the test phase by distractor condition. (D) Response time in the test phase by distractor condition. Error bars reflect the standard error of the mean. **p < 0.005, ***p < 0.001

During the test phase, there was a highly reliable main effect of distractor condition on RT, F(2,46) = 9.06, p < 0.001, η² = 0.283 (see Fig. 2C and 2D). Post hoc comparisons revealed that RTs were significantly slower when either a high-valence, t(23) = 3.40, p = 0.002, d = 0.69, or a low-valence, t(23) = 3.83, p = 0.001, d = 0.78, distractor was present, compared to distractor-absent trials. RT did not differ between the high- and low-valence distractor conditions, however, t(23) = −0.75, p = 0.463. Accuracy was similarly high across the three distractor conditions, F(2,46) = 1.01, p = 0.373. Across participants, an eye movement from fixation to one of the six shape stimuli was registered within the timeout limit on 49.8% of all trials (SD = 34.3%, range = 3.3%–92.7%).
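The reported effect sizes can be reproduced from the test statistics, which suggests (though the text does not state it) that d was computed as d_z = t/√n for the paired comparisons and that the reported η² is partial η² derived from F. A short Python check under those assumptions, with hypothetical helper names:

```python
import math


def cohens_dz(t, n):
    """Effect size for a paired comparison: d_z = t / sqrt(n)."""
    return t / math.sqrt(n)


def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f * df_effect) / (f * df_effect + df_error)


n = 24
print(round(cohens_dz(3.40, n), 2))                 # 0.69 (high-valence vs. absent)
print(round(cohens_dz(3.83, n), 2))                 # 0.78 (low-valence vs. absent)
print(round(partial_eta_squared(9.06, 2, 46), 3))   # 0.283
```

The same formula reproduces the d values reported in the Neuroimaging section (e.g., t(23) = 6.07 gives d ≈ 1.24).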

Neuroimaging

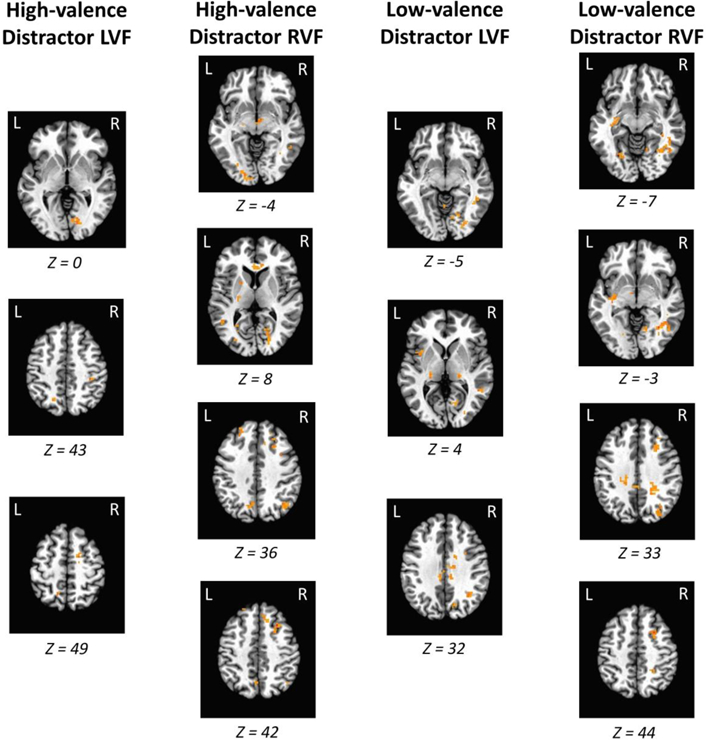

Consistent with the behavioral data, direct contrasts between the high-valence and low-valence distractor conditions did not reveal any significant clusters of activation. However, the presence of each distractor evoked a highly reliable and replicable pattern of activation across the contralateral visual cortex and caudate tail, in addition to posterior parietal cortex and the right middle frontal gyrus (MFG; see Fig. 3). A complete list of all regions activated across all contrasts is provided in Supplemental Table 1. Since our paradigm involved fMRI concurrent with eye-tracking, we were able to determine whether activated brain regions were eye-movement contingent. We performed the identical contrasts but included the number of trials on which distractors drew eye movements as a covariate. We found that the visual cortex, caudate tail, and MFG activations all remained significant when individual differences in oculomotor capture by the distractors were accounted for, suggesting that our findings do not reflect purely overt attentional selection and are consistent with the deployment of covert attention.
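One common way to include such a covariate is a group-level regression of each participant's contrast value on an intercept plus the mean-centered covariate; the intercept then estimates the distractor effect at the group-average level of oculomotor capture. The text does not specify how the covariate entered the AFNI model, so the NumPy sketch below (with the hypothetical function `covariate_adjusted_effect`) illustrates the idea only and omits voxelwise inference and cluster correction.

```python
import numpy as np


def covariate_adjusted_effect(effects, covariate):
    """Regress per-participant contrast values on an intercept plus a
    mean-centered covariate (e.g., number of trials on which the distractor
    drew an eye movement). Returns (intercept, slope)."""
    y = np.asarray(effects, dtype=float)
    c = np.asarray(covariate, dtype=float)
    X = np.column_stack([np.ones_like(c), c - c.mean()])
    (intercept, slope), *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(intercept), float(slope)


# Toy data constructed so that effect = 1.5 + 0.25 * (count - mean count).
counts = [0, 2, 4, 6]
effects = [0.75, 1.25, 1.75, 2.25]
print(covariate_adjusted_effect(effects, counts))
```

Centering the covariate is what makes the intercept interpretable as the average effect; without centering, it would instead estimate the effect for a participant with zero distractor-driven eye movements.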

Figure 3.

Regions of the value-driven attention network and middle frontal gyrus that were significantly more active in response to the critical distractors (RVF = right visual field; LVF = left visual field). Each contrast depicted is against distractor-absent trials, with the target presented in the visual field opposite the distractor. Activations are overlaid on an image of the Talairach brain. A complete list of all regions showing significant activation is provided in Supplemental Table 1.

For some of the contrasts, regions of the value-driven attention network (caudate tail, early visual cortex, lateral occipital cortex, and posterior parietal cortex; see Anderson, 2017b) were evident bilaterally. Therefore, to determine whether these regions were indeed sensitive to the position of the distractor, as has been argued in prior studies (e.g., Anderson et al., 2014; Anderson, 2017b), we further probed the distractor-evoked responses in these regions. Specifically, for each of the aforementioned contrasts (see Fig. 3 and Supplemental Table 1), we created a mask of the value-driven attention network regions in the hemisphere contralateral to the distractor and, using this mask, contrasted all trials on which the distractor was presented in the contralateral vs. ipsilateral hemifield, collapsing across the position of the target ([distractor contra/target contra and distractor contra/target ipsi] vs. [distractor ipsi/target contra and distractor ipsi/target ipsi]). We then collapsed across the four contrasts to obtain an overall estimate of the response to the distractor when presented in the contralateral vs. ipsilateral hemifield. This analysis revealed a significantly stronger response to the distractor when presented in the contralateral hemifield, t(23) = 6.07, p < 0.001, d = 1.24, confirming sensitivity to stimulus position. Further consistent with the positional sensitivity of these regions, on distractor-absent trials each individual region showed a stronger response to targets in the contralateral vs. ipsilateral hemifield, ts > 3.33, ps < 0.003, ds > 0.68.
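The collapse-then-compare step can be sketched as follows: average each participant's masked response across the four contrasts, separately for contralateral and ipsilateral distractors, then run a paired-samples t-test on the two averages. The array layout and function names are hypothetical, and the masking itself is assumed to have been done already; dividing the resulting t by √n gives the d_z-style effect size consistent with the reported d = 1.24 for t(23) = 6.07.

```python
import numpy as np


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(n))
    return float(t), n - 1


def contra_vs_ipsi(contra, ipsi):
    """contra, ipsi: arrays of shape (n_participants, n_contrasts) with the
    mean masked response when the distractor was contralateral or ipsilateral.
    Collapses across contrasts, then compares within participants."""
    return paired_t(np.mean(contra, axis=1), np.mean(ipsi, axis=1))


# Toy data: 3 participants x 4 contrasts.
contra = np.array([[1.0, 2.0, 3.0, 2.0], [2.0, 2.0, 2.0, 2.0], [3.0, 3.0, 3.0, 3.0]])
ipsi = np.zeros((3, 4))
print(contra_vs_ipsi(contra, ipsi))
```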

In previous studies using monetary rather than social reward in the training phase, elevated distractor-evoked responses were observed in the visual cortex, parietal cortex, and caudate tail (e.g., Anderson, 2017b; Anderson et al., 2016; Anderson et al., 2014). However, no studies of value-driven attention that we are aware of have reported activation in the right MFG (e.g., Anderson, 2017b; Anderson et al., 2014, 2016; Barbaro et al., 2017; Donohue et al., 2016; Hickey & Peelen, 2015, 2017; Hopf et al., 2015; MacLean & Giesbrecht, 2015). Unlike the other regions identified, the MFG was evident only in the right hemisphere. To determine whether activation in this region is consistent with an attentional priority signal, or whether it instead shares an antagonistic relationship with distractor-evoked activity in the visual system consistent with the inhibition of value-driven attentional priority, we correlated activation in the MFG with activation in the contralateral visual cortex (mean of peak beta values across voxels within the corresponding regions identified in the whole-brain analysis). If the MFG represents the attentional priority of the distractor, its activity should be positively correlated with contralateral distractor-evoked activity within visual areas; if it instead serves to inhibit priority signals in the visual system, a negative correlation should be evident. Across participants, right MFG activation was positively correlated with activation in the contralateral visual cortex, r = 0.405, p = 0.050. This result is consistent with the priority-signaling account and inconsistent with an account in which MFG activity suppresses attentional priority in the visual system, although the MFG could still play a more general inhibitory role, because the hemodynamic response is not by itself diagnostic of a facilitatory or inhibitory signal.
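The reported p = 0.050 can be checked by converting r to a t statistic, t = r√(n − 2)/√(1 − r²). Assuming n = 24 (the scanned sample; the text says only that the correlation was computed across participants), r = 0.405 gives t(22) ≈ 2.08, right at the two-tailed .05 critical value of about 2.07. A minimal Python sketch, with hypothetical helper names:

```python
import math

import numpy as np


def pearson_r(x, y):
    """Pearson correlation across participants, e.g., right-MFG peak betas (x)
    against contralateral visual-cortex peak betas (y)."""
    return float(np.corrcoef(x, y)[0, 1])


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing a Pearson r against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


print(round(r_to_t(0.405, 24), 2))   # 2.08
```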

Discussion

We investigated the neural correlates of attention to stimuli previously associated with social reward. Our behavioral results replicate performance costs associated with the presence of stimuli previously associated with valent social outcomes (Anderson, 2016b, 2017a; Anderson & Kim, 2018). Such value-based distraction was accompanied by distractor-evoked attentional priority signals in the caudate tail, visual cortex, and posterior parietal cortex, producing a pattern of results very similar to that previously observed using cues for monetary reward (Anderson, 2017b; Anderson et al., 2014, 2016; Barbaro et al., 2017; Donohue et al., 2016; Hickey & Peelen, 2015; Hopf et al., 2015; Lee & Shomstein, 2013; MacLean & Giesbrecht, 2015). These findings support the presence of a core value-driven attention network in the brain that generalizes across different types of reward, taking input from a “common neural currency” for reward to which social outcomes contribute. Furthermore, by accounting for distractor-evoked eye movements, we show for the first time that these reward-general attentional priority signals are not particular to oculomotor capture (Anderson & Yantis, 2012; Theeuwes & Belopolsky, 2012), reflecting significant contributions from covert attentional priority.

In contrast to neuroimaging studies of value-driven attention using non-social reward, we identified an additional attentional priority signal in the right MFG. Unlike the value-driven priority signals evident in prior studies, the representation in this region was not retinotopically organized, being observed only in the right hemisphere regardless of the distractor position. The right MFG has been found to be activated in studies of goal-contingent attentional capture to target-colored distractors (Serences et al., 2005), and is thought to play a role in attentional reorienting (Corbetta et al., 2008). Specifically, activation of the right MFG is hypothesized to serve as a circuit-breaker for the endogenous control of attention, diverting control to exogenous input and allowing unexpected but potentially goal-relevant stimuli to capture attention. In social neuroscience, activation of the right MFG has been observed in tasks probing social hierarchy (Zink et al., 2008), social competition (Decety et al., 2004), social norm violations (Bas-Hoogendam et al., 2017), emotional maintenance (Waugh et al., 2014), and social incentive delay (Rademacher et al., 2010). The broad involvement of the right MFG in social information processing, coupled with its role in reorienting attention to unexpected but potentially relevant stimuli, places it in a unique position to facilitate the reorienting of attention on the basis of social utility.

In addition to the right MFG, the right temporoparietal junction (TPJ) has also been implicated in the goal-contingent capture of attention and has been hypothesized to play a potentially similar circuit-breaker function (Corbetta & Shulman, 2002; Corbetta et al., 2008). As with prior studies examining the neural correlates of value-driven attention (see Anderson, 2019, for a review), we did not find any evidence for the involvement of the TPJ in value-driven attentional capture. The TPJ is thought not to play a role in the capture of attention by physically salient stimuli that are not potentially goal-relevant (Corbetta et al., 2008), so its absence here fits with evidence that value-driven attentional priority can be represented in a manner similar to a change in the physical salience of an object (Anderson, 2019; Anderson & Kim, in press). The recruitment of the MFG but not the TPJ in the present study suggests possibly distinct roles for these regions in supporting the exogenous reorienting of attention, although direct comparison with a situation in which attentional capture does recruit the TPJ would be needed to explore this possibility further.

Unlike in a pair of previous behavioral studies using this same experimental paradigm (Anderson, 2016b; Anderson & Kim, 2018), we observed significant attentional capture by both high- and low-valence distractors. In these prior studies, only the high-valence distractor produced behavioral evidence of attentional capture, resulting in a significant effect of differential valence. The neural correlates of capture we observed here mirrored our behavioral result, with high- and low-valence distractors producing a similar neural profile. The reasons for this discrepancy are not clear, although one potentially important difference was the amount of training between studies. In the present study, participants completed training both the day before the brain scans and the day of scanning, with the initial training consolidated over a night’s sleep. This greater overall amount of training, spread out over two days, may have provided the low-valence stimulus with enough reinforcement to similarly reach significant levels of attentional capture. Consistent with this, the effect size of attentional capture was generally larger in the present study compared to prior studies using valenced social outcomes (Anderson, 2016b, 2017a; Anderson & Kim, 2018).

Unfortunately, because the high- and low-valence distractor conditions did not differ significantly, the current dataset cannot distinguish valence-dependent effects of associative learning from effects of search history more broadly (i.e., status as a former target; Grubb & Li, 2018; Sha & Jiang, 2016). The results of the present study do, however, support the idea that what has been referred to as the value-driven attention network (Anderson, 2017b) plays a more general role in learning-dependent attentional priority and is similarly recruited following training with fundamentally different kinds of outcomes. Such recruitment potentially reflects some combination of value-dependent influences (see Anderson & Halpern, 2017) and more general influences of selection history. This consideration is especially relevant to the right MFG, which has not previously been implicated in value-driven attention: to the degree that the present study recruited value-independent mechanisms of selection history more strongly than prior studies of value-driven attention, this stronger value-independent influence may be mediated in part by the MFG.

Taken in the context of the broader literature on learning-dependent attentional priority, however, our findings are more consistent with a specific role for learned value in the observed pattern of activation. Prior studies have demonstrated more convincingly that the regions of the value-driven attention network observed in the present study are selectively recruited by valuable stimuli (e.g., Anderson, 2017b; Anderson et al., 2014, 2016; Barbaro et al., 2017; Donohue et al., 2016; Hickey & Peelen, 2015; Qi et al., 2013). Furthermore, a study explicitly examining the neural correlates of attentional capture by unrewarded former target colors (following multiday search training) detected primarily visual cortical influences, with no evidence for corresponding priority signals in the striatum or MFG (Kim & Anderson, 2019). The influences of reward history and of outcome-independent selection history on attentional orienting are fundamentally dissociable (Kim & Anderson, in press), suggesting distinct underlying neural mechanisms. In light of this prior evidence, our findings seem more consistent with a "common currency" interpretation of the value-driven attention network than with an interpretation that assumes a pure selection history-dependent priority signal with no influence of associated value.

Collectively, our findings support the idea that social outcomes shape attention by recruiting both reward-general mechanisms of information processing and mechanisms particular to social reward. Monetary and social rewards are represented by overlapping neural populations (Izuma et al., 2008), and our findings suggest that such “common currency” representations of value serve as the teaching signals to the value-driven attention network (Anderson et al., 2014; Anderson, 2017b). In this sense, social reward affects attention much like any other reward. At the same time, social reward cues appear to recruit the attention system’s circuit-breaking capacity in a way not previously observed for monetary reward cues, which may stem from the important role of the right MFG in both social information processing (Bas-Hoogendam et al., 2017; Decety et al., 2004; Rademacher et al., 2010; Waugh et al., 2014; Zink et al., 2008) and the control of attention (Corbetta et al., 2008; Serences et al., 2005). Such a mechanism could serve to better ensure that opportunities for positive social outcomes do not go unnoticed, interrupting goal-directed information processing in order to more fully evaluate such opportunities. Our findings expand our understanding of both the value-driven attention network and the role of the right MFG in the control of attention.

Supplementary Material

Acknowledgements

This research was supported by a start-up package from Texas A&M University to BAA and grants from the Brain & Behavior Research Foundation [NARSAD 26008] and NIH [R01-DA046410] to BAA.

Footnotes

The authors report no conflicts of interest.

Contributor Information

Andy Jeesu Kim, Texas A&M University.

Brian A. Anderson, Texas A&M University.

References

- Anderson BA (2016a). The attention habit: how reward learning shapes attentional selection. Annals of the New York Academy of Sciences, 1369, 24–39.

- Anderson BA (2016b). Social reward shapes attentional biases. Cognitive Neuroscience, 7(1–4), 30–36.

- Anderson BA (2016c). What is abnormal about addiction-related attentional biases? Drug and Alcohol Dependence, 167, 8–14.

- Anderson BA (2017a). Counterintuitive effects of negative social feedback on attention. Cognition & Emotion, 31(3), 590–597.

- Anderson BA (2017b). Reward processing in the value-driven attention network: reward signals tracking cue identity and location. Social Cognitive and Affective Neuroscience, 12(3), 461–467.

- Anderson BA (2019). Neurobiology of value-driven attention. Current Opinion in Psychology, 29, 27–33.

- Anderson BA, Kuwabara H, Wong DF, Gean EG, Rahmim A, Brasic JR, . . . Yantis S (2016). The role of dopamine in value-based attentional orienting. Current Biology, 26(4), 550–555.

- Anderson BA, Laurent PA, & Yantis S (2011). Value-driven attentional capture. Proceedings of the National Academy of Sciences of the United States of America, 108(25), 10367–10371.

- Anderson BA, Laurent PA, & Yantis S (2014). Value-driven attentional priority signals in human basal ganglia and visual cortex. Brain Research, 1587, 88–96.

- Anderson BA, & Halpern M (2017). On the value-dependence of value-driven attentional capture. Attention, Perception, & Psychophysics, 79, 1001–1011.

- Anderson BA, & Kim H (2018). Relating attentional biases for stimuli associated with social reward and punishment to autistic traits. Collabra: Psychology, 4(1), article 10.

- Anderson BA, & Kim H (in press). On the relationship between value-driven and stimulus-driven attentional capture. Attention, Perception, & Psychophysics.

- Anderson BA, & Yantis S (2012). Value-driven attentional and oculomotor capture during goal-directed, unconstrained viewing. Attention, Perception, & Psychophysics, 74(8), 1644–1653.

- Anderson BA, & Yantis S (2013). Persistence of value-driven attentional capture. Journal of Experimental Psychology: Human Perception and Performance, 39(1), 6–9.

- Barbaro L, Peelen MV, & Hickey C (2017). Valence, not utility, underlies reward-driven prioritization in human vision. Journal of Neuroscience, 37(43), 10438–10450.

- Bas-Hoogendam JM, van Steenbergen H, Kreuk T, van der Wee NJA, & Westenberg PM (2017). How embarrassing! The behavioral and neural correlates of processing social norm violations. PLoS ONE, 12(4).

- Brainard DH (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436.

- Corbetta M, Patel G, & Shulman GL (2008). The reorienting system of the human brain: From environment to theory of mind. Neuron, 58(3), 306–324.

- Corbetta M, & Shulman GL (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3(3), 201–215.

- Cox RW (1996). AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research, 29(3), 162–173.

- Decety J, Jackson PL, Sommerville JA, Chaminade T, & Meltzoff AN (2004). The neural bases of cooperation and competition: an fMRI investigation. Neuroimage, 23(2), 744–751.

- Deen B, Koldewyn K, Kanwisher N, & Saxe R (2015). Functional organization of social perception and cognition in the superior temporal sulcus. Cerebral Cortex, 25(11), 4596–4609.

- Della Libera C, & Chelazzi L (2006). Visual selective attention and the effects of monetary rewards. Psychological Science, 17(3), 222–227.

- Desimone R, & Duncan J (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18, 193–222.

- Donohue SE, Hopf JM, Bartsch MV, Schoenfeld MA, Heinze HJ, & Woldorff MG (2016). The rapid capture of attention by rewarded objects. Journal of Cognitive Neuroscience, 28(4), 529–541.

- Dupierrix E, de Boisferon AH, Meary D, Lee K, Quinn PC, Di Giorgio E, . . . Pascalis O (2014). Preference for human eyes in human infants. Journal of Experimental Child Psychology, 123, 138–146.

- Emery NJ (2000). The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews, 24(6), 581–604.

- Engelmann JB, & Pessoa L (2007). Motivation sharpens exogenous spatial attention. Emotion, 7(3), 668–674.

- Failing M, & Theeuwes J (2017). Don't let it distract you: how information about the availability of reward affects attentional selection. Attention, Perception, & Psychophysics, 79(8), 2275–2298.

- Field M, & Cox WM (2008). Attentional bias in addictive behaviors: A review of its development, causes, and consequences. Drug and Alcohol Dependence, 97(1–2), 1–20.

- Folk CL, Remington RW, & Johnston JC (1992). Involuntary covert orienting is contingent on attentional control settings. Journal of Experimental Psychology: Human Perception and Performance, 18(4), 1030–1044.

- Geday J, Gjedde A, Boldsen AS, & Kupers R (2003). Emotional valence modulates activity in the posterior fusiform gyrus and inferior medial prefrontal cortex in social perception. Neuroimage, 18(3), 675–684.

- Grubb MA, & Li Y (2018). Assessing the role of accuracy-based feedback in value-driven attentional capture. Attention, Perception, & Psychophysics, 80, 822–828.

- Hayward DA, Pereira EJ, Otto AR, & Ristic J (2018). Smile! Social reward drives attention. Journal of Experimental Psychology: Human Perception and Performance, 44(2), 206–214.

- Hickey C, Chelazzi L, & Theeuwes J (2010). Reward changes salience in human vision via the anterior cingulate. Journal of Neuroscience, 30(33), 11096–11103.

- Hickey C, & Peelen MV (2015). Neural mechanisms of incentive salience in naturalistic human vision. Neuron, 85(3), 512–518.

- Hickey C, & Peelen MV (2017). Reward selectively modulates the lingering neural representation of recently attended objects in natural scenes. Journal of Neuroscience, 37(31), 7297–7304.

- Hopf JM, Schoenfeld MA, Buschschulte A, Rautzenberg A, Krebs RM, & Boehler CN (2015). The modulatory impact of reward and attention on global feature selection in human visual cortex. Visual Cognition, 23(1–2), 229–248.

- Izuma K, Saito DN, & Sadato N (2008). Processing of social and monetary rewards in the human striatum. Neuron, 58(2), 284–294.

- Izuma K, Saito DN, & Sadato N (2010). Processing of the incentive for social approval in the ventral striatum during charitable donation. Journal of Cognitive Neuroscience, 22(4), 621–631.

- Janik SW, Wellens R, Goldberg ML, & Dellosso LF (1978). Eyes as the center of focus in the visual examination of human faces. Perceptual and Motor Skills, 47(3), 857–858.

- Kanwisher N, McDermott J, & Chun MM (1997). The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience, 17(11), 4302–4311.

- Kim H, & Anderson BA (2019). Dissociable neural mechanisms underlie value-driven and selection-driven attentional capture. Brain Research, 1708, 109–115.

- Kim H, & Anderson BA (in press). Dissociable components of experience-driven attention. Current Biology.

- Kim HF, & Hikosaka O (2013). Distinct basal ganglia circuits controlling behaviors guided by flexible and stable values. Neuron, 79(5), 1001–1010.

- Lee J, & Shomstein S (2013). The differential effects of reward on space- and object-based attentional allocation. Journal of Neuroscience, 33(26), 10625–10633.

- Lee J, & Shomstein S (2014). Reward-based transfer from bottom-up to top-down search tasks. Psychological Science, 25(2), 466–475.

- MacLean MH, & Giesbrecht B (2015). Neural evidence reveals the rapid effects of reward history on selective attention. Brain Research, 1606, 86–94.

- McKelvie SJ (1976). Role of eyes and mouth in memory of a face. American Journal of Psychology, 89(2), 311–323.

- Miranda AT, & Palmer EM (2014). Intrinsic motivation and attentional capture from gamelike features in a visual search task. Behavior Research Methods, 46(1), 159–172.

- Moor BG, van Leijenhorst L, Rombouts SARB, Crone EA, & Van der Molen MW (2010). Do you like me? Neural correlates of social evaluation and developmental trajectories. Social Neuroscience, 5(5–6), 461–482.

- Moore T, & Zirnsak M (2017). Neural mechanisms of selective visual attention. Annual Review of Psychology, 68, 47–72.

- Olszanowski M, Pochwatko G, Kuklinski K, Scibor-Rylski M, Lewinski P, & Ohme RK (2015). Warsaw set of emotional facial expression pictures: a validation study of facial display photographs. Frontiers in Psychology, 5.

- Pool E, Brosch T, Delplanque S, & Sander D (2014). Where is the chocolate? Rapid spatial orienting toward stimuli associated with primary rewards. Cognition, 130(3), 348–359.

- Qi SQ, Zeng QH, Ding C, & Li H (2013). Neural correlates of reward-driven attentional capture in visual search. Brain Research, 1532, 32–43.

- Rademacher L, Krach S, Kohls G, Irmak A, Grunder G, & Spreckelmeyer KN (2010). Dissociation of neural networks for anticipation and consumption of monetary and social rewards. Neuroimage, 49(4), 3276–3285.

- Schmidt LJ, Belopolsky AV, & Theeuwes J (2015). Attentional capture by signals of threat. Cognition & Emotion, 29(4), 687–694.

- Seitz AR, Kim D, & Watanabe T (2009). Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron, 61(5), 700–707.

- Serences JT (2008). Value-based modulations in human visual cortex. Neuron, 60(6), 1169–1181.

- Serences JT, & Saproo S (2010). Population response profiles in early visual cortex are biased in favor of more valuable stimuli. Journal of Neurophysiology, 104(1), 76–87.

- Serences JT, Shomstein S, Leber AB, Golay X, Egeth HE, & Yantis S (2005). Coordination of voluntary and stimulus-driven attentional control in human cortex. Psychological Science, 16(2), 114–122.

- Sha LZ, & Jiang YV (2016). Components of reward-driven attentional capture. Attention, Perception, & Psychophysics, 78, 403–414.

- Talairach J, & Tournoux P (1988). Co-planar stereotaxic atlas of the human brain. New York: Thieme.

- Theeuwes J (1992). Perceptual selectivity for color and form. Perception & Psychophysics, 51(6), 599–606.

- Theeuwes J (2010). Top-down and bottom-up control of visual selection. Acta Psychologica, 135(2), 77–99.

- Theeuwes J, & Belopolsky AV (2012). Reward grabs the eye: Oculomotor capture by rewarding stimuli. Vision Research, 74, 80–85.

- Wake SJ, & Izuma K (2017). A common neural code for social and monetary rewards in the human striatum. Social Cognitive and Affective Neuroscience, 12(10), 1558–1564.

- Wang LH, Yu HB, & Zhou XL (2013). Interaction between value and perceptual salience in value-driven attentional capture. Journal of Vision, 13(3).

- Waugh CE, Lemus MG, & Gotlib IH (2014). The role of the medial frontal cortex in the maintenance of emotional states. Social Cognitive and Affective Neuroscience, 9(12), 2001–2009.

- Wolfe JM, Cave KR, & Franzel SL (1989). Guided search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance, 15(3), 419–433.

- Yamamoto S, Kim HF, & Hikosaka O (2013). Reward value-contingent changes of visual responses in the primate caudate tail associated with a visuomotor skill. Journal of Neuroscience, 33(27), 11227–11238.

- Zink CF, Tong YX, Chen Q, Bassett DS, Stein JL, & Meyer-Lindenberg A (2008). Know your place: Neural processing of social hierarchy in humans. Neuron, 58(2), 273–283.
