Summary

Recent studies have reported a strong interaction between spatial and temporal representation when visual experience is missing: blind people use temporal representation of events to represent spatial metrics. Given the superiority of audition in time perception, we hypothesized that when audition is not available complex temporal representations could be impaired, and spatial representation of events could be used to build temporal metrics. To test this hypothesis, deaf and hearing subjects were tested with a visual temporal task where conflicting and non-conflicting spatiotemporal information was delivered. As predicted, we observed a strong deficit in deaf participants when only temporal cues were useful and space was uninformative with respect to time. However, the deficit disappeared when coherent spatiotemporal cues were presented and increased for conflicting spatiotemporal stimuli. These results highlight that spatial cues influence time estimations in deaf participants, suggesting that deaf individuals use spatial information to infer temporal environmental coordinates.

Subject Areas: Disability, Biological Sciences, Neuroscience, Cognitive Neuroscience

Graphical Abstract

Highlights

- Deaf individuals are not able to build complex temporal representations

- Their deficit disappears when coherent temporal and spatial cues are presented

- In some cases, deaf people use spatial cues to infer temporal coordinates

- There exists a strong interaction between spatial and temporal representation


Introduction

Time perception is inherently part of everyday life. It occurs while we stare at the hands of the clock slowly moving when we are bored, but also while listening to our favorite song or listening to speech unfolding in time. To perceive a coherent temporal representation and successfully interact with our environment, we need to combine information derived from our sensory modalities. In 1963, Paul Fraisse stated that “hearing is the main organ through which we perceive change: it is considered as the ‘time sense’.” (Fraisse, 1963). Recent studies support his idea, showing that different sensory modalities are more appropriate to process specific environmental properties, and specifically that the auditory system is the most accurate one to represent temporal information (e.g., Guttman et al., 2005, Bresciani and Ernst, 2007, Burr et al., 2009, Barakat et al., 2015).

Behavioral results showed that audition prevails in audiovisual temporal tasks. For instance, a single flash is perceived as two flashes when presented with two concurrent beeps (Shams et al., 2000) and the perceived frequency of flickering lights is influenced by an auditory stimulus presented simultaneously at a different rate (Gebhard and Mowbray, 1959, Shipley, 1964). Similarly, neuroimaging studies on hearing individuals highlighted a crucial role of the auditory cortex in time representation. For example, activation of the superior temporal gyrus has been observed during temporal processing of visual stimuli with functional Magnetic Resonance Imaging (Coull et al., 2004, Ferrandez et al., 2003, Lewis and Miall, 2003), and transcranial magnetic stimulation (TMS) over the auditory cortex has been shown to affect time estimation of both auditory and visual stimuli (Kanai et al., 2011), as well as tactile events (Bolognini et al., 2010).

Given the superiority of audition over the other sensory systems for time perception, the auditory modality might offer a temporal background for calibrating other sensory information. Converging evidence suggests that the development of multisensory interactions between audition and other senses depends on early perceptual experience (e.g., Merabet and Pascual-Leone, 2010, Cardon et al., 2012, Lazard et al., 2014) and the lack of auditory experience might interfere with the development of a temporal representation of the environment (Gori et al., 2017).

Deafness is a natural condition that offers valuable insight into the role of audition in temporal representation (see Bavelier et al., 2006, Pavani and Bottari, 2012). Research in both animals and humans suggests that a deficit in one sensory modality, such as audition, can induce compensatory mechanisms leading to increased abilities in spared sensory modalities, such as vision or touch (Strelnikov et al., 2013, Allman et al., 2009, Barone et al., 2013, Lomber et al., 2010). At the neurophysiological level, large-scale reorganization occurs after this sensory loss (e.g., Bola et al., 2017, Auer et al., 2007, Finney et al., 2003, Benetti et al., 2017). The auditory cortex, deprived of auditory input, starts to be recruited by tactile and visual stimuli (e.g., Finney et al., 2001, Kok et al., 2014, Campbell and Sharma, 2016, Bottari et al., 2014, Karns et al., 2012), and changes within the early visual pathway in the absence of auditory input have also been reported in deaf individuals (e.g., Bottari et al., 2011). However, other studies reported only little change of the auditory neural structures in deaf animals (e.g., Clemo et al., 2016) and very few new connections between visual and auditory cortices as a result of deafness (e.g., Chabot et al., 2015, Butler et al., 2016). As for the ability to process temporal information under auditory deprivation, behavioral results are conflicting and seem to vary with the type of task and stimuli. When asked to estimate and reproduce the duration of visual stimuli, for instance, deaf participants are often found to perform similarly to or better than controls in the range of milliseconds (Bross and Sauerwein, 1980, Poizner and Tallal, 1987), but not in the range of seconds (Kowalska and Szelag, 2006). However, Bolognini et al. (2012) observed low abilities to reproduce tactile durations in the range of milliseconds. In addition, tactile perceptual thresholds in a simultaneity judgment task are significantly higher in deaf compared with hearing individuals regardless of the spatial location of the stimuli (Heming and Brown, 2005), but opposite results were obtained for a visual temporal order judgment task (Nava et al., 2008).

Developmental results showed that both typical children and adults exhibit strong auditory dominance during audiovisual temporal bisection, which involves judging the relative presentation timings of three stimuli and requires building complex temporal representations (Gori et al., 2012). Interestingly, deaf children with restored hearing do not show the same auditory dominance (Gori et al., 2017). In light of these findings, here we hypothesized that the lack of audition should affect the development of the complex temporal representation underlying visual temporal bisection skills. Moreover, we recently reported a strong interaction between spatial and temporal representation when visual experience is missing (Gori et al., 2018). Specifically, we showed that when vision is not available, such as in blindness, subjects are not able to build complex spatial representations and are strongly attracted by temporal cues. Based on the evidence showing a strong link between space and time representation in the absence of vision, we hypothesized that when audition is not available, not only could complex temporal representations be impaired but spatial representation of events could also be used to build a temporal metric.

To test our hypotheses, we asked hearing and deaf individuals to perform visual bisection tasks, where conflicting and non-conflicting temporal and spatial information was delivered. Participants saw three stimuli and judged whether the second stimulus was temporally (i.e., temporal bisection) or spatially (i.e., spatial bisection) closer to the first one or the third one. Specifically, the second stimulus was randomly and independently delivered at different spatial positions with different temporal lags, giving rise to coherent (i.e., identical space and time) and incoherent (i.e., opposite space and time) spatiotemporal information, as well as independent spatiotemporal information (i.e., space not informative about time, and vice versa). As predicted, deaf individuals were not able to perform the temporal bisection when only temporal and not spatial cues were informative. However, the temporal bisection deficit disappeared when coherent temporal and spatial cues were presented (e.g., short time associated with short space) and increased when conflicting temporal and spatial information was presented (e.g., short time associated with long space). Our results suggest that deaf individuals rely strongly on spatial cues when inferring temporal metric information. These findings support the idea that the temporal and spatial domains tightly interact, and sensory experience is fundamental for the development of independent temporal and spatial representations.

Results

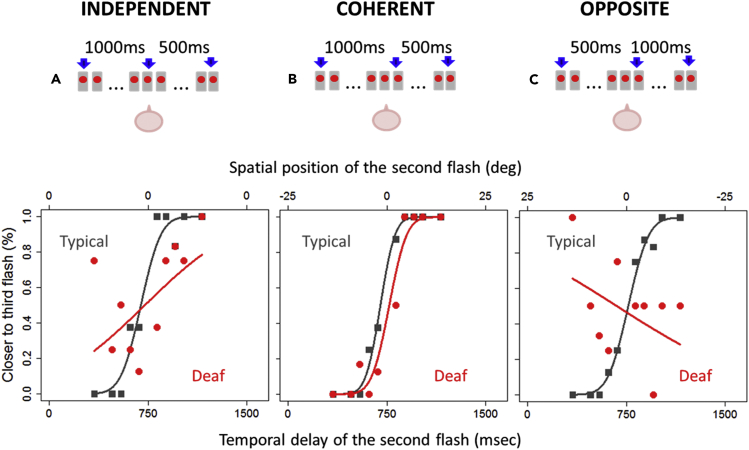

Seventeen deaf adults (see Table S1 for details) and seventeen age-matched controls performed four visual bisection tasks: three temporal bisection tasks and one spatial bisection task as a control experiment. In the three temporal bisection tasks (independent space, coherent space, and opposite space), aimed to measure thresholds for temporal bisection, three consecutive flashes were presented (see Figure 2, upper panels), and subjects judged whether the second flash was temporally closer to the first (displayed at −25°, left of center) or to the third (+25°, right of center) flash. To evaluate the role of spatial cues on temporal bisection performance of deaf individuals, the spatial distance between the three flashes was manipulated in the three temporal tasks. In the independent space temporal bisection task, the three flashes were delivered with the same distance between the first and the second flash and between the second and the third flash (25°, as in previous work, Gori et al., 2014). In this case, only temporal cues were relevant to compute the task as the spatial distance between the three flashes was the same and space coordinates were uninformative about time coordinates (Figure 2A top). In the coherent space temporal bisection task, temporal intervals and spatial distances between the three flashes were directly proportional: a longer time delay between the first and the second flash was associated with a longer spatial distance between the two flashes, and the reverse for shorter intervals (Figure 2B top). In the opposite space temporal bisection task, temporal intervals and spatial distances between the three flashes were inversely proportional: a longer time delay between the first and the second flash was associated with a shorter spatial distance between the two flashes, and the reverse for shorter intervals (Figure 2C top). 
In the spatial bisection task, performed as a control, subjects attended to three similar flashes presented with the same temporal delay between the first and second flashes and between the second and third flashes, and reported whether the second flash was closer in space to the first or to the last one, thus using spatial cues.
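The three temporal conditions can be summarized as a mapping from the delay of the second flash to its position. The sketch below is only an illustration: the linear mapping, the 1.5 s total duration, and the names `second_flash_position`, `FIRST_DEG`, `THIRD_DEG`, and `TOTAL_MS` are assumptions made for this example, not the authors' stimulus code (the actual procedure is in the Transparent Methods).

```python
# Illustrative sketch only: the linear time-to-space mapping and the 1.5 s
# total duration are assumptions, not the authors' stimulus code.

FIRST_DEG = -25.0   # position of the first flash (from the text)
THIRD_DEG = 25.0    # position of the third flash (from the text)
TOTAL_MS = 1500.0   # assumed time between the first and the third flash


def second_flash_position(delay_ms: float, condition: str) -> float:
    """Return the position (deg) of the second flash for a given delay."""
    if not 0.0 <= delay_ms <= TOTAL_MS:
        raise ValueError("delay must lie between the first and third flash")
    span = THIRD_DEG - FIRST_DEG
    fraction = delay_ms / TOTAL_MS  # share of the total interval elapsed
    if condition == "independent":
        # Always midway: space carries no information about time.
        return (FIRST_DEG + THIRD_DEG) / 2.0
    if condition == "coherent":
        # Longer delay -> second flash farther from the first flash.
        return FIRST_DEG + span * fraction
    if condition == "opposite":
        # Longer delay -> second flash closer to the first flash.
        return THIRD_DEG - span * fraction
    raise ValueError(f"unknown condition: {condition}")


for cond in ("independent", "coherent", "opposite"):
    print(cond, round(second_flash_position(1000.0, cond), 2))
```

Under these assumptions, a delay longer than half the total interval places the second flash right of center in the coherent condition and left of center in the opposite condition, which is the conflict the task exploits.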

Figure 2.

Visual Temporal Bisection Tasks

Results of the three conditions for a deaf participant showing strong spatial attraction (red symbols) and a typical hearing control (gray symbols). Subjects sat in front of an array of 23 light-emitting diodes, illustrated by the sketches above the graphs.

(A) Independent space temporal bisection. Top: the space distance between the first (-25°) and the second (0°) flashes was equal to the space distance between the second (0°) and the third (+25°) flashes. Bottom: proportion of trials judged “closer to the third flash source” plotted against the temporal delay for the second flash. Both sets of data are fitted with the Gaussian error function.

(B) Coherent space temporal bisection. Top: temporal intervals and spatial distances between the three flashes were directly proportional (e.g., a long temporal interval of 1,000 ms is associated with a longer spatial distance). Bottom: same as for (A).

(C) Opposite space temporal bisection. Top: temporal intervals and spatial distances between the three flashes were inversely proportional (e.g., a short temporal interval of 500 ms is associated with a longer spatial distance). Bottom: same as for (A) and (B).

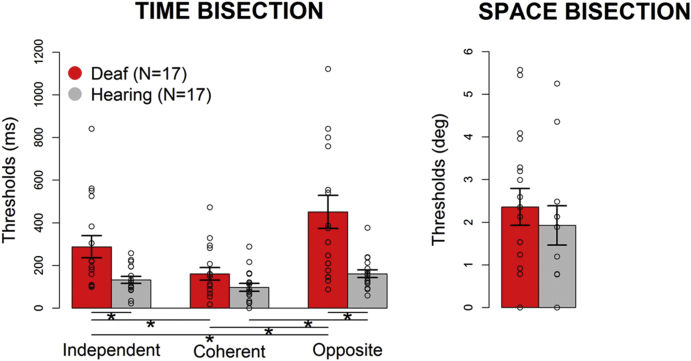

Averages and individual data for the three temporal bisection tasks and for the spatial bisection task are reported for deaf (in red) and hearing (in gray) individuals in Figure 1. The two-way ANOVA with temporal thresholds as dependent variable shows a significant interaction (F2,64 = 9.39, p < 0.001, generalized eta squared = 0.2) between group (hearing, deaf) and task (independent space, coherent space, opposite space). Post-hoc comparisons were conducted with follow-up one-way ANOVAs and two-tailed t tests, with probabilities treated as significant when lower than 0.05 after Bonferroni correction. Post-hoc t tests reveal that deafness impairs temporal bisection abilities, as evident from the higher thresholds of deaf people in the independent space temporal task compared with hearing participants (deaf versus hearing: t19.7 = 2.86, p = 0.03). Moreover, whereas for hearing individuals (in gray) the manipulation of the spatial cue during time bisection only slightly influences the response (i.e., similar performance for the three temporal conditions, see Figure 1), it strongly affects the response of deaf participants (in red). Indeed, from follow-up one-way ANOVAs significant differences among tasks emerge for both deaf (F2,34 = 14.96, p < 0.001, generalized eta squared = 0.2) and hearing participants (F2,34 = 6.53, p = 0.004, generalized eta squared = 0.01), but post-hoc t tests reveal only a small difference between the coherent and the opposite conditions for hearing participants (t16 = 2.87, p = 0.03), whereas the performance of deaf individuals is significantly more impaired in the opposite space bisection task compared with the independent space (t16 = 3.29, p = 0.01) and coherent space (t16 = 4.84, p < 0.001) conditions. These findings indicate a strong reduction of precision in the conflict condition after auditory deprivation. Still, performance of deaf individuals significantly improves from the independent space to the coherent space condition (t16 = 3.71, p = 0.005), suggesting that deaf individuals benefit from the spatial cue during temporal judgments. The average threshold of deaf participants (red bar) is also higher than that of hearing participants for the opposite space time bisection (deaf versus hearing: t17.8 = 3.66, p = 0.005), but average thresholds become low and similar between the groups for the coherent space time bisection (deaf versus hearing: t26.9 = 1.82, p = 0.2), in which spatial cues can be used by deaf participants to succeed at the task. The timing of sign language exposure does not affect the results of deaf participants, as no significant differences across the tasks emerge between early and late sign language learners from the permutation ANOVA (n. permutation1,45 = 429, p = 0.2).
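The fractional degrees of freedom in the between-group comparisons (e.g., t19.7) are what an unequal-variance (Welch) t test would yield, although the text does not name the test. The following sketch, assuming that test, shows how such a statistic and a Bonferroni-adjusted probability could be computed; the function names and the example thresholds are hypothetical and are not data from the study.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance t test on two samples."""
    # Squared standard error of each sample mean.
    se2_a = variance(a) / len(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation; generally not an integer.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


def bonferroni(p, n_comparisons):
    """Bonferroni-adjusted p value, capped at 1."""
    return min(1.0, p * n_comparisons)


# Made-up thresholds (ms), for illustration only; not data from the study.
deaf = [210.0, 340.0, 150.0, 420.0, 280.0]
hearing = [120.0, 135.0, 110.0, 140.0, 125.0]
t, df = welch_t(deaf, hearing)
print(f"t = {t:.2f}, df = {df:.1f}")
print(bonferroni(0.01, 3))
```

When the two groups differ in variance, as the spread of the deaf thresholds suggests, the Welch degrees of freedom fall below the pooled value of n1 + n2 − 2.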

Figure 1.

Group Performance in Visual Bisection Tasks

Average thresholds (±SEM) of the three temporal bisection tasks (left panel) and the spatial bisection task (right panel) for deaf (red; see also Table S1) and hearing (gray) participants. Dots represent individual data; ∗p < 0.01 after Bonferroni correction.

As expected, all participants were able to perform the spatial bisection task and similar precision is observed between hearing and deaf groups (Figure 1 right panel; F1,32 = 0.47, p = 0.5, generalized eta squared = 0.01). However, we can exclude that deaf subjects performed better at the coherent space temporal bisection task simply because they performed a spatial task using the easier discriminable dimension for them (i.e., space) as no correlation appeared between performance in the coherent space temporal bisection and performance in the spatial bisection (r = 0.11, p = 0.7), and between performance in the opposite space temporal bisection and performance in the spatial bisection (r = 0.11, p = 0.6). Similarly, there is no correlation between the independent space temporal bisection task and the spatial bisection task (r = 0.08, p = 0.7), supporting the interpretation that the spatial cue was not influencing the performance in the independent space temporal bisection.

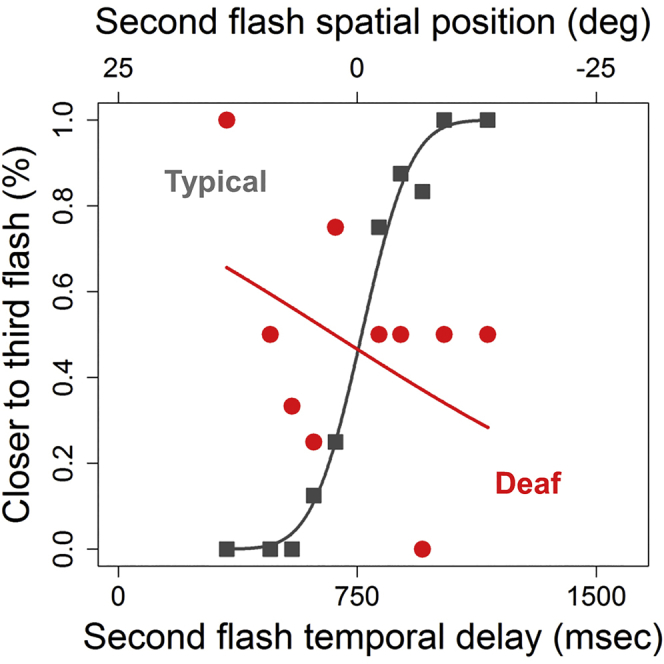

Figure 2 (lower panels) plots the proportion of “second flash closer to the third flash” answers as a function of the temporal delay of the second flash, for one deaf subject (in red) and one age-matched hearing control (in gray). Figure 2A reports the results for the independent bisection condition, Figure 2B for the coherent bisection condition, and Figure 2C for the opposite bisection condition. As suggested by group data, in the independent bisection condition (Figure 2A) the hearing individual shows the typical psychometric function. In contrast, the deaf subject shows more random responses without a well-shaped psychometric function, reflecting for the first time an impairment of deaf people in this task. In the coherent bisection task (Figure 2B), the results are quite different: here the psychometric function for the deaf individual is present and as steep as that of the hearing participant, meaning similar precision. This result suggests that a spatial cue can be used by deaf individuals to improve their performance in the time bisection task. In the opposite temporal bisection task (Figure 2C), the response of the hearing subject is identical to the response in the other two conditions. In contrast, the deaf individual not only fails to show a clear psychometric function, but his pattern of responses also runs in the direction opposite to the expected one (in red). The performance of the deaf individual reveals a strong spatial influence on the time bisection task under this condition, suggesting that in this deaf subject, but not in the hearing one, the spatial cue is attracting the temporal visual response.
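A psychometric function of this kind can be fitted with a cumulative Gaussian, whose mean estimates the point of subjective equality and whose standard deviation can serve as the threshold. The sketch below uses a plain least-squares grid search on synthetic data; the fitting procedure, the parameter grids, and the names `cumulative_gaussian` and `fit_psychometric` are assumptions for illustration, not the authors' analysis code.

```python
import math


def cumulative_gaussian(x, mu, sigma):
    """Probability of answering 'closer to the third flash' at delay x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def fit_psychometric(delays, proportions, mus, sigmas):
    """Least-squares grid search for the best (mu, sigma) pair."""
    best = None
    for mu in mus:
        for sigma in sigmas:
            err = sum(
                (cumulative_gaussian(x, mu, sigma) - p) ** 2
                for x, p in zip(delays, proportions)
            )
            if best is None or err < best[0]:
                best = (err, mu, sigma)
    return best[1], best[2]


# Synthetic observer (not study data): true mean 750 ms, true sigma 120 ms.
delays = [300, 450, 600, 750, 900, 1050, 1200]
proportions = [cumulative_gaussian(x, 750, 120) for x in delays]

mus = range(600, 901, 10)     # candidate points of subjective equality (ms)
sigmas = range(20, 401, 10)   # candidate thresholds (ms)
pse, threshold = fit_psychometric(delays, proportions, mus, sigmas)
print(pse, threshold)
```

A steep function corresponds to a small fitted sigma (high precision), whereas near-random responding, as described for the deaf subject in the independent condition, would produce a shallow function and a large sigma.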

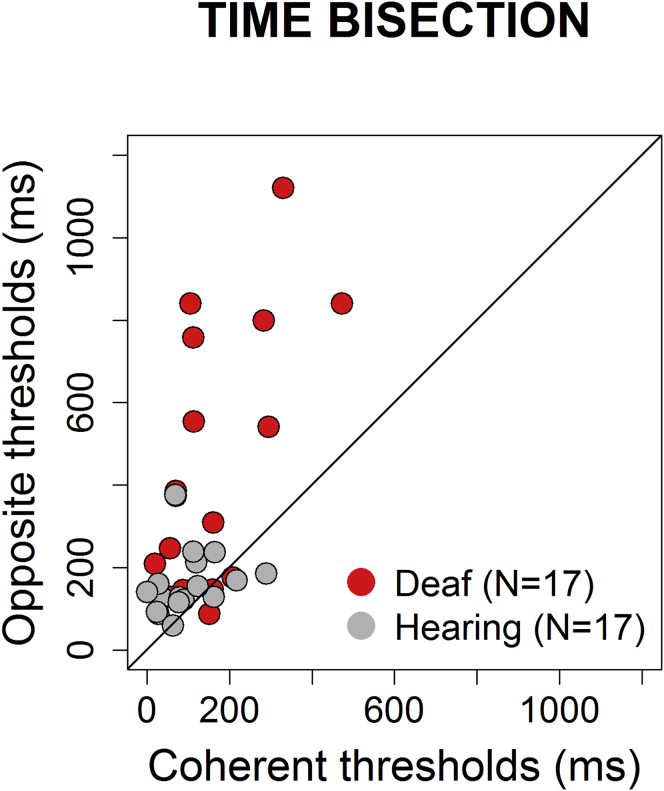

In Figure 3, individual thresholds in the coherent space temporal bisection task are plotted against individual thresholds in the opposite space temporal bisection task for the hearing (in gray) and deaf (in red) groups. Hearing participants show similar performance in both tasks, with all the individual data lying on the equality line, whereas deaf participants display discrepancies between thresholds in the two tasks. In this latter group, almost all dots lie above the equality line, suggesting lower performance for the opposite space than the coherent space task.

Figure 3.

Relationship between Coherent and Opposite Temporal Bisection Tasks

Individual data, plotting opposite thresholds against coherent thresholds (calculated from the width of individual psychometric functions). Red and gray dots represent deaf and hearing individuals, respectively.

Discussion

Here we studied whether space influences time for individuals with auditory impairment. In particular, we hypothesized that in deaf individuals, for whom the auditory input is missing, the construction of complex temporal metrics could be impaired and spatial cues could be used to determine the temporal relationships of events. Deaf and hearing subjects were tested with a visual task where conflicting and not conflicting temporal and spatial information was delivered. As predicted, deaf individuals showed a deficit in complex temporal representation, and we observed a strong attraction toward spatial cues during time bisection in deaf but not in hearing individuals. Indeed, deaf participants were not able to perform the temporal bisection task when space distances between the flashes were always the same and independent with respect to the time delay (i.e., independent space temporal bisection task). However, the temporal bisection deficit disappeared when coherent temporal and spatial cues were presented (i.e., coherent space temporal bisection task) and increased for conflicting temporal and spatial stimuli (i.e., opposite space temporal bisection task). On the contrary, hearing participants were unaffected by the cross-domain coherence or conflict, showing similar performances for the three conditions.

A first important result from the present study is that deaf participants showed a specific deficit for the temporal bisection task, which required subjects to encode the presentation timings of three flashes, keep them in mind over a period of 1.5 s, extract the relative time intervals between them, and compare these estimates. By contrast, there was no impairment in the spatial bisection task, which required the evaluation of spatial distances between three flashes. This suggests that our results did not originate merely from attentional or mnemonic deficits per se and that the deficit of deaf subjects did not encompass all aspects of visual processing but was limited to visual temporal representations. Although literature about temporal skills following auditory deprivation is inconsistent (see Introduction), this result is in line with our expectation. The temporal bisection is a complex task in terms of temporal memory and attention; it involves the construction of complex temporal metrics, and we know from previous studies that audition plays a strong dominant role in it. When performing an audiovisual multisensory temporal bisection task, both young children and adults use only auditory information to estimate the multisensory temporal position of the stimulus (Gori et al., 2012). Interestingly, deaf children with restored hearing did not show this auditory dominance during the same task, further confirming that the auditory experience has a crucial influence on temporal bisection skills (Gori et al., 2017). In addition, the temporal bisection deficit we observed in deaf participants is in agreement with existing literature showing that auditory experience is necessary for the development of timing abilities in other modalities. Deaf adults were found to be impaired in estimating visual temporal durations in the range of seconds (Kowalska and Szelag, 2006) and tactile temporal durations in the range of milliseconds (Bolognini et al., 2012).

Most importantly, this study allowed us to describe the influence of spatial features on time estimations in deaf individuals. By naturally combining temporal and spatial representations (as both spatial distances and temporal intervals are determined by the first and the third stimuli and the spatial and temporal coordinates of the second stimulus can be independently modulated with respect to the other two stimuli), the bisection task gives the opportunity to investigate the interaction between space and time. In line with our study in blindness (Gori et al., 2018), the current findings provide further evidence that temporal and spatial representations are strictly linked in the human brain and sensory experience is crucial for the development of independent spatial and temporal representations. Indeed, we have previously demonstrated that the gross deficit of blind people in auditory spatial bisection (Amadeo et al., 2019, Gori et al., 2014, Campus et al., 2019) disappears when coherent temporal cues are delivered, and increases when conflicting spatiotemporal information is presented (Gori et al., 2018). As vision is the most apt sense for spatial representation, blind people are impaired in building complex spatial representations and show a temporal attraction of space. Here, we show the opposite: deaf people are impaired in building complex temporal representations and show a spatial attraction of time. Thus the modification of spatiotemporal cues alters temporal and spatial bisection performance in deafness and blindness, respectively, whereas in both tasks control subjects can easily dissociate the spatial and temporal cues.

To better understand the strategy used by deaf participants, we ran some additional correlational analyses. The lack of correlation between spatial performance (i.e., space bisection) and temporal performance when coherent (i.e., coherent space temporal bisection) and conflicting (i.e., opposite space temporal bisection) spatiotemporal cues were presented suggests that the improved and impaired temporal performance of deaf individuals under these conditions was not simply due to the use of the spatial cue. Indeed, if they just performed the spatial task with temporal information injecting noise, at least their performance in the coherent space temporal bisection should be as good as in the independent space temporal bisection. Furthermore, if deaf participants were simply performing a spatial task even though asked about time, in the opposite space temporal bisection the psychometric functions should always be perfectly inverted. Instead, in the opposite space condition we observed a biased and incomplete inversion, suggesting that the strategy of the group was not exclusively based on the spatial cue but that there exists a dominance of spatial over temporal information. Also, performance in the independent space temporal bisection task did not correlate with that in the pure spatial bisection, further supporting the lack of weight assigned to the spatial cue in the independent space temporal task. Thus, although we cannot completely exclude that deaf participants used space because it was the more easily discriminable dimension, our results suggest that participants were not simply performing a spatial task instead of a temporal judgment.
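The argument about inversion can be made concrete with a toy cue-weighting model. This is purely an illustration of the reasoning, not an analysis performed in the study: the linear weighting, the weight `w_space`, the noise parameter `sigma_ms`, and the 1.5 s total interval are all hypothetical choices.

```python
import math

TOTAL_MS = 1500.0  # assumed interval between the first and the third flash


def p_third(delay_ms, condition, w_space, sigma_ms=150.0):
    """Toy probability of answering 'closer to the third flash'.

    w_space is a hypothetical weight (0..1) given to the spatial cue; both
    cues are expressed as a fraction of the way from first to third flash.
    """
    time_cue = delay_ms / TOTAL_MS
    if condition == "coherent":
        space_cue = time_cue          # space agrees with time
    elif condition == "opposite":
        space_cue = 1.0 - time_cue    # space contradicts time
    elif condition == "independent":
        space_cue = 0.5               # second flash always in the middle
    else:
        raise ValueError(f"unknown condition: {condition}")
    combined = w_space * space_cue + (1.0 - w_space) * time_cue
    # Distance of the combined estimate from the midpoint, in noise units.
    z = (combined - 0.5) * TOTAL_MS / sigma_ms
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Second flash objectively closer in time to the third flash (delay 1000 ms).
for w in (0.0, 0.5, 0.8, 1.0):
    print(w, round(p_third(1000.0, "opposite", w), 3))
```

In this toy model a purely spatial strategy (`w_space = 1`) mirrors the psychometric function in the opposite condition, a purely temporal strategy (`w_space = 0`) leaves it unchanged, and intermediate weights flatten or only partly invert it, which is the pattern the paragraph above describes as spatial dominance without exclusive reliance on space.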

In the literature, two main theories address how the concepts of space and time are linked in the human mind. According to the Metaphor Theory (MT, Lakoff and Johnson, 1999), temporal representations depend asymmetrically on spatial representations, meaning that space unilaterally affects time, whereas the opposite is not possible. Metaphorical language is cited in support of this hypothesis (Boroditsky, 2000, Clark, 1973), suggesting that spatial metaphors are necessary to think and talk about time. By contrast, Walsh (2003) introduced a different perspective by proposing A Theory of Magnitude (ATOM), which does not predict any cross-domain asymmetry. ATOM states that space and time, together with numbers, are represented in the brain by a common magnitude system and are thus symmetrically interrelated (Bueti and Walsh, 2009, Burr et al., 2010, Lambrechts et al., 2013). At first glance, our results seem to support the MT as we show a spatial attraction on time. However, when our mirror-image study on blindness (Gori et al., 2018) is also considered, a strong interaction between space and time emerges, with space influencing time estimations, and vice versa, which strongly supports the ATOM. Several other behavioral (e.g., Bueti and Walsh, 2009, Dormal et al., 2008) and neuroimaging studies (e.g., Fias et al., 2003, Pinel et al., 2004, Dormal and Pesenti, 2009) agree with this theory, highlighting interferences between the two domains and the overlapping activation of areas in the parietal lobe during magnitude processing.

Our results also provide strong support to previous studies showing that the visual system is fundamental for space perception (Alais and Burr, 2004), whereas the auditory system is essential for temporal discrimination (Burr et al., 2009). In this context, McGovern et al. (2016) recently demonstrated that benefits derived from training on a spatial task in the visual modality transfer to the auditory modality, and benefits derived from training on a temporal task in the auditory modality transfer to the visual modality. As the converse patterns of transfer were absent, they suggested a unidirectional transfer of perceptual learning across sensory modality, from the dominant to the non-dominant sensory modality. In line with this, we previously suggested that, as audition is fundamental for time perception, the temporal sequence of events could be at the base of the development of auditory spatial metric understanding (Gori et al., 2018). Symmetrically, as vision is fundamental for space perception, the spatial relationship of events could be at the base of the development of visual temporal metric understanding. Therefore, on the one hand, temporal metric representations seem to be mediators for the development of auditory spatial metric representations, and the visual experience is crucial for this mediation to occur (Gori et al., 2018). On the other hand, spatial metric representations seem to be mediators for the development of visual temporal metric representations, and the auditory experience is crucial for this mediation too.

However, how space and time are represented in the brain and how different sensory modalities shape the development of these representations is still an open issue. Further research is necessary to better understand whether spatial attraction of time is a general mechanism of time perception in deafness, or whether it is specific to the temporal bisection task. The present data are also in agreement with the theory of cross-sensory calibration (Gori, 2015), which states that during development the most robust, accurate sensory modality for a given perceptual task (i.e., audition for temporal judgments, Gori et al., 2012) can be used to calibrate the other sensory channels. From our studies in deafness and blindness, we can add that not only do sensory modalities interact during development but the spatial and the temporal domains also seem to interact. It might be that the auditory system is used to calibrate visual temporal representation, transferring the visual processing from a spatial to a temporal coordinate system, thanks to a prior of constant velocity, which may represent a channel of communication between the two sensory systems. The brain may implicitly assume the constant velocity of the stimuli and consequently use spatial maps to solve visual temporal metric analysis. This hypothesis would explain why, when the auditory input is absent, such as in deafness, people are attracted by spatial cues to make specific temporal estimations.

These findings open important opportunities for new rehabilitation strategies following sensory loss, and for the development of new sensory substitution devices. If space attracts time estimations when the auditory input is absent, we should think of new techniques where spatial and temporal cues could be simultaneously manipulated to convey richer information, taking advantage of spatial cues to recalibrate temporal representation in deaf individuals.

Limitations of the Study

One limitation of the study is sample size due to difficulties in recruiting deaf individuals.

Methods

All methods can be found in the accompanying Transparent Methods supplemental file.

Acknowledgments

The authors would like to thank Alessia Tonelli for her valuable help in data collection and Lucilla Cardinali for her suggestions during discussion. Moreover, the authors thank the deaf and hearing adults for their willing participation in this research.

Author Contributions

M.G., M.B.A., F.P., and C.C. conceived the study and designed the experiments. M.B.A. and C.C. carried out experiments and analyzed data. M.G., M.B.A., F.P., and C.C. wrote the manuscript, prepared figures, and reviewed the manuscript.

Declaration of Interests

The authors declare no competing interests.

Published: September 27, 2019

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.isci.2019.07.042.


References

- Alais D., Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029.

- Allman B.L., Keniston L.P., Meredith M.A. Not just for bimodal neurons anymore: the contribution of unimodal neurons to cortical multisensory processing. Brain Topogr. 2009;21:157–167. doi: 10.1007/s10548-009-0088-3.

- Amadeo M.B., Campus C., Gori M. Impact of years of blindness on neural circuits underlying auditory spatial representation. Neuroimage. 2019;191:140–149. doi: 10.1016/j.neuroimage.2019.01.073.

- Auer E.T., Jr., Bernstein L.E., Sungkarat W., Singh M. Vibrotactile activation of the auditory cortices in deaf versus hearing adults. Neuroreport. 2007;18:645–648. doi: 10.1097/WNR.0b013e3280d943b9.

- Barakat B., Seitz A.R., Shams L. Visual rhythm perception improves through auditory but not visual training. Curr. Biol. 2015;25:R60–R61. doi: 10.1016/j.cub.2014.12.011.

- Barone P., Lacassagne L., Kral A. Reorganization of the connectivity of cortical field DZ in congenitally deaf cat. PLoS One. 2013;8:e60093. doi: 10.1371/journal.pone.0060093.

- Bavelier D., Dye M.W., Hauser P.C. Do deaf individuals see better? Trends Cogn. Sci. 2006;10:512–518. doi: 10.1016/j.tics.2006.09.006.

- Benetti S., van Ackeren M.J., Rabini G., Zonca J., Foa V., Baruffaldi F., Rezk M., Pavani F., Rossion B., Collignon O. Functional selectivity for face processing in the temporal voice area of early deaf individuals. Proc. Natl. Acad. Sci. U S A. 2017;114:E6437–E6446. doi: 10.1073/pnas.1618287114.

- Bola L., Zimmermann M., Mostowski P., Jednorog K., Marchewka A., Rutkowski P., Szwed M. Task-specific reorganization of the auditory cortex in deaf humans. Proc. Natl. Acad. Sci. U S A. 2017;114:E600–E609. doi: 10.1073/pnas.1609000114.

- Bolognini N., Cecchetto C., Geraci C., Maravita A., Pascual-Leone A., Papagno C. Hearing shapes our perception of time: temporal discrimination of tactile stimuli in deaf people. J. Cogn. Neurosci. 2012;24:276–286. doi: 10.1162/jocn_a_00135.

- Bolognini N., Papagno C., Moroni D., Maravita A. Tactile temporal processing in the auditory cortex. J. Cogn. Neurosci. 2010;22:1201–1211. doi: 10.1162/jocn.2009.21267.

- Boroditsky L. Metaphoric structuring: understanding time through spatial metaphors. Cognition. 2000;75:1–28. doi: 10.1016/s0010-0277(99)00073-6.

- Bottari D., Caclin A., Giard M.H., Pavani F. Changes in early cortical visual processing predict enhanced reactivity in deaf individuals. PLoS One. 2011;6:e25607. doi: 10.1371/journal.pone.0025607.

- Bottari D., Heimler B., Caclin A., Dalmolin A., Giard M.H., Pavani F. Visual change detection recruits auditory cortices in early deafness. Neuroimage. 2014;94:172–184. doi: 10.1016/j.neuroimage.2014.02.031.

- Bresciani J.P., Ernst M.O. Signal reliability modulates auditory-tactile integration for event counting. Neuroreport. 2007;18:1157–1161. doi: 10.1097/WNR.0b013e3281ace0ca.

- Bross M., Sauerwein H. Signal detection analysis of visual flicker in deaf and hearing individuals. Percept. Mot. Skills. 1980;51:839–843. doi: 10.2466/pms.1980.51.3.839.

- Bueti D., Walsh V. The parietal cortex and the representation of time, space, number and other magnitudes. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2009;364:1831–1840. doi: 10.1098/rstb.2009.0028.

- Burr D., Banks M.S., Morrone M.C. Auditory dominance over vision in the perception of interval duration. Exp. Brain Res. 2009;198:49–57. doi: 10.1007/s00221-009-1933-z.

- Burr D.C., Ross J., Binda P., Morrone M.C. Saccades compress space, time and number. Trends Cogn. Sci. 2010;14:528–533. doi: 10.1016/j.tics.2010.09.005.

- Butler B.E., Chabot N., Lomber S.G. Quantifying and comparing the pattern of thalamic and cortical projections to the posterior auditory field in hearing and deaf cats. J. Comp. Neurol. 2016;524:3042–3063. doi: 10.1002/cne.24005.

- Campbell J., Sharma A. Visual cross-modal re-organization in children with cochlear implants. PLoS One. 2016;11:e0147793. doi: 10.1371/journal.pone.0147793.

- Campus C., Sandini G., Amadeo M.B., Gori M. Stronger responses in the visual cortex of sighted compared to blind individuals during auditory space representation. Sci. Rep. 2019;9:1935. doi: 10.1038/s41598-018-37821-y.

- Cardon G., Campbell J., Sharma A. Plasticity in the developing auditory cortex: evidence from children with sensorineural hearing loss and auditory neuropathy spectrum disorder. J. Am. Acad. Audiol. 2012;23:396–411; quiz 495. doi: 10.3766/jaaa.23.6.3.

- Chabot N., Butler B.E., Lomber S.G. Differential modification of cortical and thalamic projections to cat primary auditory cortex following early- and late-onset deafness. J. Comp. Neurol. 2015;523:2297–2320. doi: 10.1002/cne.23790.

- Clark H.H. Space, time, semantics, and the child. In: Moore T.E., editor. Cognitive Development and the Acquisition of Language. Academic Press; 1973. pp. 37–63.

- Clemo H.R., Lomber S.G., Meredith M.A. Synaptic basis for cross-modal plasticity: enhanced supragranular dendritic spine density in anterior ectosylvian auditory cortex of the early deaf cat. Cereb. Cortex. 2016;26:1365–1376. doi: 10.1093/cercor/bhu225.

- Coull J.T., Vidal F., Nazarian B., Macar F. Functional anatomy of the attentional modulation of time estimation. Science. 2004;303:1506–1508. doi: 10.1126/science.1091573.

- Dormal V., Andres M., Pesenti M. Dissociation of numerosity and duration processing in the left intraparietal sulcus: a transcranial magnetic stimulation study. Cortex. 2008;44:462–469. doi: 10.1016/j.cortex.2007.08.011.

- Dormal V., Pesenti M. Common and specific contributions of the intraparietal sulci to numerosity and length processing. Hum. Brain Mapp. 2009;30:2466–2476. doi: 10.1002/hbm.20677.

- Ferrandez A.M., Hugueville L., Lehericy S., Poline J.B., Marsault C., Pouthas V. Basal ganglia and supplementary motor area subtend duration perception: an fMRI study. Neuroimage. 2003;19:1532–1544. doi: 10.1016/s1053-8119(03)00159-9.

- Fias W., Lammertyn J., Reynvoet B., Dupont P., Orban G.A. Parietal representation of symbolic and nonsymbolic magnitude. J. Cogn. Neurosci. 2003;15:47–56. doi: 10.1162/089892903321107819.

- Finney E.M., Clementz B.A., Hickok G., Dobkins K.R. Visual stimuli activate auditory cortex in deaf subjects: evidence from MEG. Neuroreport. 2003;14:1425–1427. doi: 10.1097/00001756-200308060-00004.

- Finney E.M., Fine I., Dobkins K.R. Visual stimuli activate auditory cortex in the deaf. Nat. Neurosci. 2001;4:1171–1173. doi: 10.1038/nn763.

- Fraisse P. The Psychology of Time. Harper & Row; Oxford, England: 1963.

- Gebhard J.W., Mowbray G.H. On discriminating the rate of visual flicker and auditory flutter. Am. J. Psychol. 1959;72:521–529.

- Gori M. Multisensory integration and calibration in children and adults with and without sensory and motor disabilities. Multisens. Res. 2015;28:71–99. doi: 10.1163/22134808-00002478.

- Gori M., Amadeo M.B., Campus C. Temporal cues influence space estimations in visually impaired individuals. iScience. 2018;6:319–326. doi: 10.1016/j.isci.2018.07.003.

- Gori M., Chilosi A., Forli F., Burr D. Audio-visual temporal perception in children with restored hearing. Neuropsychologia. 2017;99:350–359. doi: 10.1016/j.neuropsychologia.2017.03.025.

- Gori M., Sandini G., Burr D. Development of visuo-auditory integration in space and time. Front. Integr. Neurosci. 2012;6:77. doi: 10.3389/fnint.2012.00077.

- Gori M., Sandini G., Martinoli C., Burr D.C. Impairment of auditory spatial localization in congenitally blind human subjects. Brain. 2014;137:288–293. doi: 10.1093/brain/awt311.

- Guttman S.E., Gilroy L.A., Blake R. Hearing what the eyes see: auditory encoding of visual temporal sequences. Psychol. Sci. 2005;16:228–235. doi: 10.1111/j.0956-7976.2005.00808.x.

- Heming J.E., Brown L.N. Sensory temporal processing in adults with early hearing loss. Brain Cogn. 2005;59:173–182. doi: 10.1016/j.bandc.2005.05.012.

- Kanai R., Lloyd H., Bueti D., Walsh V. Modality-independent role of the primary auditory cortex in time estimation. Exp. Brain Res. 2011;209:465–471. doi: 10.1007/s00221-011-2577-3.

- Karns C.M., Dow M.W., Neville H.J. Altered cross-modal processing in the primary auditory cortex of congenitally deaf adults: a visual-somatosensory fMRI study with a double-flash illusion. J. Neurosci. 2012;32:9626–9638. doi: 10.1523/JNEUROSCI.6488-11.2012.

- Kok M.A., Chabot N., Lomber S.G. Cross-modal reorganization of cortical afferents to dorsal auditory cortex following early- and late-onset deafness. J. Comp. Neurol. 2014;522:654–675. doi: 10.1002/cne.23439.

- Kowalska J., Szelag E. The effect of congenital deafness on duration judgment. J. Child Psychol. Psychiatry. 2006;47:946–953. doi: 10.1111/j.1469-7610.2006.01591.x.

- Lakoff G., Johnson M. Philosophy in the Flesh: The Embodied Mind and its Challenge to Western Thought. University of Chicago Press; 1999.

- Lambrechts A., Walsh V., van Wassenhove V. Evidence accumulation in the magnitude system. PLoS One. 2013;8:e82122. doi: 10.1371/journal.pone.0082122.

- Lazard D.S., Innes-Brown H., Barone P. Adaptation of the communicative brain to post-lingual deafness. Evidence from functional imaging. Hear. Res. 2014;307:136–143. doi: 10.1016/j.heares.2013.08.006.

- Lewis P.A., Miall R.C. Brain activation patterns during measurement of sub- and supra-second intervals. Neuropsychologia. 2003;41:1583–1592. doi: 10.1016/s0028-3932(03)00118-0.

- Lomber S.G., Meredith M.A., Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat. Neurosci. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- McGovern D.P., Astle A.T., Clavin S.L., Newell F.N. Task-specific transfer of perceptual learning across sensory modalities. Curr. Biol. 2016;26:R20–R21. doi: 10.1016/j.cub.2015.11.048.

- Merabet L.B., Pascual-Leone A. Neural reorganization following sensory loss: the opportunity of change. Nat. Rev. Neurosci. 2010;11:44–52. doi: 10.1038/nrn2758.

- Nava E., Bottari D., Zampini M., Pavani F. Visual temporal order judgment in profoundly deaf individuals. Exp. Brain Res. 2008;190:179–188. doi: 10.1007/s00221-008-1459-9.

- Pavani F., Bottari D. Visual abilities in individuals with profound deafness: a critical review. In: Murray M.M., Wallace M.T., editors. The Neural Bases of Multisensory Processes. CRC Press/Taylor & Francis; Boca Raton (FL): 2012. Chapter 22. Available from: https://www.ncbi.nlm.nih.gov/books/NBK92865/

- Pinel P., Piazza M., Le Bihan D., Dehaene S. Distributed and overlapping cerebral representations of number, size, and luminance during comparative judgments. Neuron. 2004;41:983–993. doi: 10.1016/s0896-6273(04)00107-2.

- Poizner H., Tallal P. Temporal processing in deaf signers. Brain Lang. 1987;30:52–62. doi: 10.1016/0093-934x(87)90027-7.

- Shams L., Kamitani Y., Shimojo S. Illusions. What you see is what you hear. Nature. 2000;408:788. doi: 10.1038/35048669.

- Shipley T. Auditory flutter-driving of visual flicker. Science. 1964;145:1328–1330. doi: 10.1126/science.145.3638.1328.

- Strelnikov K., Rouger J., Demonet J.F., Lagleyre S., Fraysse B., Deguine O., Barone P. Visual activity predicts auditory recovery from deafness after adult cochlear implantation. Brain. 2013;136:3682–3695. doi: 10.1093/brain/awt274.

- Walsh V. A theory of magnitude: common cortical metrics of time, space and quantity. Trends Cogn. Sci. 2003;7:483–488. doi: 10.1016/j.tics.2003.09.002.
