Abstract

Short-term synaptic plasticity (STP) is widely thought to play an important role in information processing. This major function of STP has recently been challenged, however, by several computational studies indicating that transmission of information by dynamic synapses is broadband, i.e., frequency independent. Here we developed an analytical approach to quantify time- and rate-dependent synaptic information transfer during arbitrary spike trains using a realistic model of synaptic dynamics in excitatory hippocampal synapses. We found that STP indeed increases information transfer in a wide range of input rates, which corresponds well to the naturally occurring spike frequencies at these synapses. This increased information transfer is observed both during Poisson-distributed spike trains with a constant rate and during naturalistic spike trains recorded in hippocampal place cells in exploring rodents. Interestingly, we found that the presence of STP in low release probability excitatory synapses leads to optimization of information transfer specifically for short high-frequency bursts, which are indeed commonly observed in many excitatory hippocampal neurons. In contrast, more reliable high release probability synapses that express dominant short-term depression are predicted to have optimal information transmission for single spikes rather than bursts. This prediction is verified in analyses of experimental recordings from high release probability inhibitory synapses in mouse hippocampal slices and fits well with the observation that inhibitory hippocampal interneurons do not commonly fire spike bursts. We conclude that STP indeed contributes significantly to synaptic information transfer and may serve to maximize information transfer for specific firing patterns of the corresponding neurons.

Introduction

Short-term plasticity (STP) acts on millisecond-to-minute timescales to modulate synaptic strength in an activity-dependent manner. STP is widely believed to play an important role in synaptic computations and to contribute to many essential neural functions, particularly information processing (Abbott and Regehr, 2004; Deng and Klyachko, 2011). Specific computations performed by STP are often based on various types of filtering operations (Fortune and Rose, 2001; Abbott and Regehr, 2004). This generally accepted role of STP in information processing has been challenged recently by several computational studies aimed at directly computing STP influence on information transfer using an information-theoretic framework (Lindner et al., 2009; Yang et al., 2009). Although earlier studies have shown that information transfer is dependent on release probability (Zador, 1998), which is directly modified by STP, Lindner et al. (2009) used a generalized model of STP to show that information transfer by dynamic synapses is frequency independent, no matter whether synapses express dominant facilitation or depression. Similar results obtained using more detailed models of the calyx of Held synapse also demonstrated that information transmission is predominately broadband (Yang et al., 2009). These studies suggested that STP does not contribute to frequency-dependent information filtering and raised the question of what specific roles STP plays in synaptic information transmission.

One common feature of these computational studies, however, is that they considered only steady-state conditions that synapses reach after prolonged periods of high-frequency stimulation. Although this is a physiologically plausible condition, it reflects the strained state of the synapses when significant amounts of their resources, such as the readily releasable pool (RRP) of vesicles, have been exhausted. This is not representative, for instance, of excitatory CA3–CA1 synapses, which typically experience rather short 15- to 25-spike-long high-frequency bursts separated by relatively long periods of lower activity (Fenton and Muller, 1998). In fact, less than half of the RRP is typically used at any time during such naturalistic activity (Kandaswamy et al., 2010) with nearly complete RRP recovery between the bursts. This discrepancy between the strained state of synapses used in analytical calculations of information transmission and the realistic state of the synapses during natural activity suggests that more representative conditions need to be considered to evaluate STP contributions to information processing.

We therefore developed an analytical approach to calculate the time and rate dependence of synaptic information transmission using a realistic model of STP in excitatory hippocampal synapses. Indeed, using more realistic conditions, we found that STP contributes significantly to increasing information transfer over a wide frequency range. Furthermore, our time-dependent analysis indicated that STP optimizes information transmission specifically for short high-frequency spike bursts in low release probability synapses, and that this optimization shifts from bursts to single spikes in high release probability synapses. We verified these predictions using recordings in excitatory and inhibitory hippocampal synapses with corresponding properties. Our study thus directly establishes the role of STP in information transmission within the information-theoretic framework and shows that STP works to optimize information transmission for specific firing patterns of the corresponding neurons.

Materials and Methods

Animals and slice preparation.

Horizontal hippocampal slices (350 μm) were prepared from 15- to 25-d-old mice using a vibratome (VT1200 S, Leica). Both male and female animals were used for recordings. Dissections were performed in ice-cold solution that contained the following (in mM): 130 NaCl, 24 NaHCO3, 3.5 KCl, 1.25 NaH2PO4, 0.5 CaCl2, 5.0 MgCl2, and 10 glucose, saturated with 95% O2 and 5% CO2, pH 7.3. Slices were incubated in the above solution at 35°C for 1 h for recovery and then kept at room temperature (∼23°C) until use. All animal procedures conformed to the guidelines approved by the Washington University Animal Studies Committee.

Electrophysiological recordings.

Whole-cell patch-clamp recordings were performed using an Axopatch 200B amplifier (Molecular Devices) from CA1 pyramidal neurons visually identified with infrared video microscopy (BX50WI, Olympus; Dage-MTI) and differential interference contrast optics. All recordings were performed at near-physiological temperatures (33–34°C). The recording electrodes were filled with the following (in mM): 130 K-gluconate, 0.5 EGTA, 2 MgCl2, 5 NaCl, 2 ATP2Na, 0.4 GTPNa, and 10 HEPES, pH 7.3. The extracellular solution contained the following (in mM): 130 NaCl, 24 NaHCO3, 3.5 KCl, 1.25 NaH2PO4, 2 CaCl2, 1 MgCl2, and 10 glucose, saturated with 95% O2 and 5% CO2, pH 7.3. In all experiments, NMDA receptors were blocked with AP5 (50 μM) to prevent long-term effects. EPSCs were recorded from CA1 pyramidal neurons at a holding potential of −65 mV by stimulating Schaffer collaterals with a bipolar electrode placed in the stratum radiatum ∼300 μm (range ∼200–500 μm) from the recording electrode. EPSCs recorded in this configuration represent an averaged synaptic response across a population of similar CA3–CA1 synapses. The same recording configuration was previously used to provide experimental support for the realistic model of STP (Kandaswamy et al., 2010) used in the current study. Note that under our experimental conditions, receptor desensitization and saturation are insignificant, and voltage-clamp errors are also small and do not provide a significant source of nonlinearity (Wesseling and Lo, 2002; Klyachko and Stevens, 2006b). Therefore, postsynaptic responses can be used as a linear readout of transmitter release in the relevant frequency range.

Data were filtered at 2 kHz, digitized at 20 kHz, acquired using custom software written in LabVIEW, and analyzed using programs written in MATLAB. EPSCs during the stimulus trains were normalized to an average of five low-frequency (0.2 Hz) control responses preceding each train to provide relative changes in synaptic strength. Each stimulus train was presented four to six times in each cell, and each presentation was separated by ∼2 min of low-frequency (0.1–0.2 Hz) control stimuli to allow complete EPSC recovery to the baseline. To correct for the overlap of EPSCs at short interspike intervals (ISIs), a normalized template of the EPSC waveform was created for each stimulus presentation by averaging all EPSCs within a given train that were separated by at least 100 ms from their neighbors and normalized to their peak values. Every EPSC in the train was then approximated by a template waveform scaled to the peak of the current EPSC, and its contribution to the synaptic response was digitally subtracted.

The natural stimulus trains used in this study represent the firing patterns recorded in vivo from the hippocampal place cells of awake, freely moving rats (generously provided by Drs. A. Fenton and R. Muller, State University of New York, Brooklyn, NY) (Fenton and Muller, 1998). Spikes with ISIs <10 ms were treated as a single stimulus, because the delay between the action potential firing and the peak of postsynaptic currents/potentials prevented resolution of individual synaptic responses at shorter ISIs. Such treatment does not significantly affect synaptic responses to natural stimulus trains, as we have shown previously (Klyachko and Stevens, 2006a).
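As an illustration of this preprocessing step, the following Python sketch merges spikes separated by less than 10 ms into a single stimulus. The function name `merge_close_spikes` is hypothetical, and measuring each interval from the last retained spike (rather than from the immediately preceding raw spike) is an assumption made for illustration; the exact rule used in the original MATLAB analysis is not specified here.

```python
def merge_close_spikes(spike_times_ms, min_isi_ms=10.0):
    """Keep a spike only if it follows the last kept spike by >= min_isi_ms.

    Assumption: intervals are measured from the last retained spike, so a
    run of closely spaced spikes collapses onto its first spike.
    """
    merged = []
    for t in sorted(spike_times_ms):
        if not merged or t - merged[-1] >= min_isi_ms:
            merged.append(t)
    return merged


# Spikes at 4 and 8 ms fall within 10 ms of the spike at 0 ms and are merged.
example = merge_close_spikes([0.0, 4.0, 8.0, 12.0, 30.0])
```

Under this rule the example train reduces to three stimuli, at 0, 12, and 30 ms.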

Analytical framework for analysis of information transmission by dynamic synapses.

Information theory provides a general framework to quantify information transfer in any system based on the principles of Shannon (1948). Our approach described below is an extension of the previous work of Zador (1998) to analytically compute the contributions of STP to information transfer based on these principles. Synaptic information transmission can be measured by how much information the output spike train provides about the input train, which is termed “mutual information” (Shannon, 1948). Within the information-theoretic framework, this property is defined formally in terms of ensemble entropies. The entropy is a basic measure in information theory and is given by the following:

$$H(x) = -\sum_i P(x_i)\,\log_2 P(x_i) \tag{1}$$

where P(xi) is the probability of variable x to have the value xi. The synaptic mutual information Im depends on the input (also termed “source”) spike train's entropy, H(s), the entropy of output spike trains (or of synaptic responses) H(r), and their joint entropy H(r, s), and by definition is given by the following:

$$I_m = H(r) + H(s) - H(r, s) = H(r) - H(r \mid s) \tag{2}$$

where H(r | s) is a conditional entropy of the output given the inputs, which reflects variability of output for repeated presentations of the same input. In practical terms, this means that variability of synaptic output for the multiple presentations of the same input represents an inherent “noise” in transmission and does not carry information, because it cannot distinguish between two different inputs.
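The definition of mutual information in terms of the response, source, and joint entropies can be checked numerically on a single time bin. The sketch below is illustrative only: the function names and the example values R = 0.1 and Pr = 0.2 are assumptions, and the joint distribution describes a synapse that can release only when a spike is present.

```python
import numpy as np


def entropy_bits(prob):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    p = np.asarray(prob, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())


def mutual_information_bits(joint):
    """I = H(r) + H(s) - H(r, s) for a joint table with rows s and columns r."""
    joint = np.asarray(joint, dtype=float)
    h_s = entropy_bits(joint.sum(axis=1))  # marginal over the source
    h_r = entropy_bits(joint.sum(axis=0))  # marginal over the response
    h_rs = entropy_bits(joint)             # joint entropy
    return h_r + h_s - h_rs


# Illustrative values (assumptions): spike probability per bin and release probability.
R, Pr = 0.1, 0.2
# Rows: s = 0, 1. Columns: r = 0, 1. No release is possible without a spike.
joint = np.array([[1 - R, 0.0],
                  [R * (1 - Pr), R * Pr]])
info = mutual_information_bits(joint)
```

For this table the result coincides with H(r) − H(r | s), where H(r | s) is R times the binary entropy of Pr, because bins without a spike have a fully determined response.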

The realistic model of STP we used to describe synaptic dynamics in the hippocampal synapses (Kandaswamy et al., 2010) is formulated to predict changes in synaptic release probability during a random spike input. The term “release probability” (Pr) is commonly used to describe the probability of vesicle release given a presynaptic spike. If we describe the existing/nonexisting presynaptic spike as s = 1/0, and denote a vesicle that is released/not released as r = 1/0, then the release probability is Pr ≡ P(r = 1 | s = 1). In our calculations we will distinguish between the term Pr, the synaptic release probability, and another probability variable, p ≡ P(r = 1), which simply represents the probability of synaptic response at a given time. The relation between these two variables is determined by the stimulation rate, p = R · Pr, where R is the presynaptic firing rate P(s = 1). The advantage of the chosen STP formulation is that it allows direct comparison to experimental measurements (Kandaswamy et al., 2010) and provides a useful framework for the calculation of the information transmission. Specifically, when an ensemble of source spike trains with the same properties (e.g., constant rate) is used as an input to the STP model, a resulting distribution of release probabilities, f(p, t), at each point in time can be calculated. The output of individual synapses is determined by the release of a vesicle, which is controlled stochastically by the release probability p at any given point in time. Since the synapse either releases a vesicle or it does not, the synaptic response (r) is thus a binary-state system at each point in time. Applying Equation 1 to this simplified binary-state system gives its entropy as follows:

$$H = -p\,\log_2 p - (1 - p)\,\log_2(1 - p) \tag{3}$$

where p is the probability to be in one state and (1 − p) is the probability to be in the other (notice that the expression is symmetrical with respect to the assignment of the two states, p → 1 − p). Also note that the above formulation is derived under the assumption of monovesicular release. Our previous computational analyses (Kandaswamy et al., 2010) indicated that models of STP in hippocampal synapses described the experimental data equally well under the assumption of either monovesicular release or multivesicular release, provided that the number of active release sites does not change significantly during elevated activity levels. Given these previous results, and because the extent of multivesicular release in hippocampal synapses and the quantitative relationship between multivesicular release and release probability have not been established, we will limit our analysis to the monovesicular release framework.
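The symmetry of the binary-state entropy under p → 1 − p, and its vanishing at p = 0 and p = 1, can be verified with a short Python sketch. The function name `binary_entropy` is hypothetical, and the convention 0 · log2(0) = 0 is assumed.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a two-state system with state probabilities p and 1 - p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # convention: 0 * log2(0) = 0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


# The two states are interchangeable, so p = 0.2 and p = 0.8 give the same entropy.
low, high = binary_entropy(0.2), binary_entropy(0.8)
```

The entropy peaks at 1 bit for p = 0.5 and falls to zero as the outcome becomes certain in either direction.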

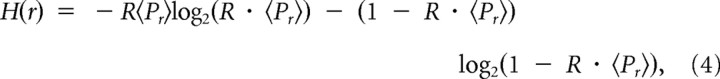

For synaptic transmission, the entropy of vesicle release and thus of synaptic response, H(r), is determined by the release probability of the synaptic ensemble, and is given by the following:

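$$H(r) = -R\langle P_r\rangle\,\log_2\!\big(R\langle P_r\rangle\big) - \big(1 - R\langle P_r\rangle\big)\,\log_2\!\big(1 - R\langle P_r\rangle\big) \tag{4}$$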

where 〈…〉 denotes the ensemble averaged value, and R is the input stimulus rate.

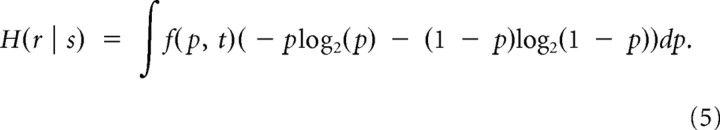

To calculate the second term, H(r | s), in Equation 2, we take into account that for each individual response in a given train, a single value of p is drawn from the distribution. Therefore, the expression for H(r | s) is the average of Equation 3 with the distribution function f(p, t). This means that for each point in time a different distribution f(p, t) is calculated, the release probability p is randomly selected for that time, and then the binary-state entropy (Eq. 3) is calculated for that time point. The resulting expression for conditional entropy is then given by the following:

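$$H(r \mid s) = \big\langle -p\,\log_2 p - (1 - p)\,\log_2(1 - p) \big\rangle = -\int_0^1 f(p, t)\,\big[p\,\log_2 p + (1 - p)\,\log_2(1 - p)\big]\,dp \tag{5}$$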

Note that the averaging in Equation 5 is the ensemble averaging over available input spike trains. Expression 5 can be further simplified by noticing that if p = 0 is randomly selected, then H(r) in Equation 4 is exactly 0; then we can exclude the case of no stimulation from Equation 5. Formally, this can be done by expressing the release probability distribution function f(p, t) as a sum of two contributions given by the following:

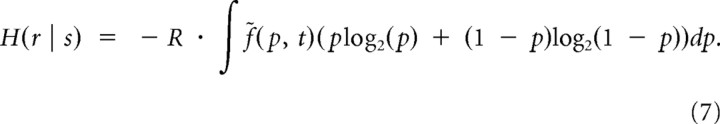

$$f(p, t) = (1 - R)\,\delta(p) + R\,\tilde{f}(p, t) \tag{6}$$

where the first component represents a contribution when there is no stimulation and the second component represents a contribution when stimulation is present. From Equations 5 and 6 a simplified expression for the conditional entropy can thus be derived as follows:

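$$H(r \mid s) = -R\int_0^1 \tilde{f}(p, t)\,\big[p\,\log_2 p + (1 - p)\,\log_2(1 - p)\big]\,dp \tag{7}$$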

In an individual input train, there will be R stimuli per unit of time, and each of these will contribute according to the release probability at that time. In the case of ensemble entropy, each time point contributes equally, because within the complete ensemble of inputs there is an equal probability to randomly pick an input spike train that contains a spike at that time point. The main difference between full ensemble entropy and conditional entropy is then determined by the choice of specific firing times. This means that in this calculation the main source of information carried by the input train is determined by the spike timing within a train.
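In discretized form, the conditional entropy at a given time bin can be estimated from the release probabilities of those trains in the ensemble that contain a spike in that bin. The sketch below is one possible reading of this calculation, not the original implementation: the function names are hypothetical, `rate_per_bin` is assumed to be the spike probability per time bin, and the 0.1 quantization step follows the discretization described in the computational analysis.

```python
import numpy as np


def binary_entropy(p):
    """Element-wise binary entropy in bits, with 0 * log2(0) taken as 0."""
    p = np.atleast_1d(np.asarray(p, dtype=float))
    out = np.zeros_like(p)
    inside = (p > 0) & (p < 1)
    q = p[inside]
    out[inside] = -q * np.log2(q) - (1 - q) * np.log2(1 - q)
    return out


def conditional_entropy(pr_samples, rate_per_bin, step=0.1):
    """Rate times the average binary entropy over the quantized Pr distribution."""
    samples = np.asarray(pr_samples, dtype=float)
    levels = np.round(np.round(samples / step) * step, 10)  # quantize to the step grid
    values, counts = np.unique(levels, return_counts=True)
    f_tilde = counts / counts.sum()                          # empirical distribution
    return rate_per_bin * float(np.sum(f_tilde * binary_entropy(values)))


# Illustrative ensemble (assumption): Pr values at one time bin across trains.
h_cond = conditional_entropy([0.2, 0.3, 0.3, 0.4], rate_per_bin=0.096)
```

When all trains share the same release probability, the estimate reduces to the rate multiplied by the binary entropy of that single value.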

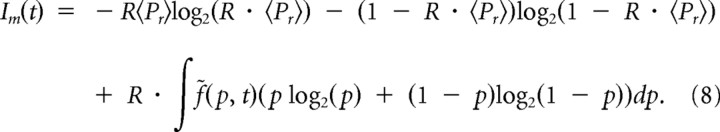

The mutual information, a measure of transferred information, can now be simply calculated using Equations 4 and 7 as follows:

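$$I_m = H(r) - H(r \mid s) = -R\langle P_r\rangle\,\log_2\!\big(R\langle P_r\rangle\big) - \big(1 - R\langle P_r\rangle\big)\,\log_2\!\big(1 - R\langle P_r\rangle\big) + R\int_0^1 \tilde{f}(p, t)\,\big[p\,\log_2 p + (1 - p)\,\log_2(1 - p)\big]\,dp \tag{8}$$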

To verify this derivation, we can check our formalism for a few simple, time-independent cases. For the perfectly reliable synapse (Pr = 1), f̃(p, t) = δ(p − 1), which leads to zero conditional entropy. This is exact for this simple case because the output is fully determined by the input: although different trains produce different responses, a given stimulus always produces the same response. The mutual information is then the full entropy of the input, given by Im = H(r) = H(s) = − Rlog2(R) − (1 − R)log2(1 − R).

For the opposite case of Pr = 0, the response entropy is zero since there is only one response to any input. When 〈Pr〉 = 0 is used in Equation 4, the resulting entropy is 0.

For any other constant release probability Pr = p0, we can calculate the rate-dependent entropy as H(r) = − (p0 · R)log2(p0 · R) − (1 − p0 · R)log2(1 − p0 · R). The conditional entropy has a simplified expression in this case, because the integration of Equation 7 can be performed exactly as follows: H(r | s) = − R[(p0)log2(p0) + (1 − p0)log2(1 − p0)].
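These limiting cases can be reproduced with a few lines of Python. This is a minimal sketch of the static-synapse expressions given above; the function names are hypothetical, and `rate_per_bin` is assumed to be the probability of a presynaptic spike in one time bin.

```python
import math


def binary_entropy(p):
    """Binary entropy in bits, with 0 * log2(0) taken as 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def static_mutual_information(rate_per_bin, p0):
    """Mutual information per time bin for a synapse with constant Pr = p0."""
    h_r = binary_entropy(rate_per_bin * p0)              # response entropy
    h_r_given_s = rate_per_bin * binary_entropy(p0)      # conditional entropy
    return h_r - h_r_given_s


R = 0.1  # illustrative spike probability per bin (assumption)
reliable = static_mutual_information(R, 1.0)   # equals the input entropy H(s)
silent = static_mutual_information(R, 0.0)     # no release, no information
partial = static_mutual_information(R, 0.2)    # lies between the two limits
```

For Pr = 1 the result equals the input entropy, for Pr = 0 it is zero, and intermediate release probabilities give intermediate values.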

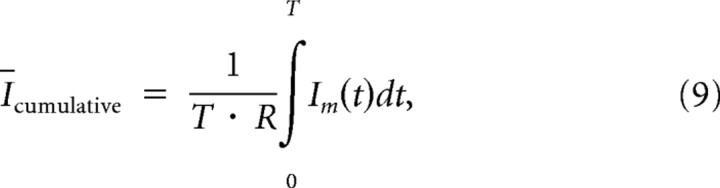

Because all of the above analysis was performed for a single point in time (binned time), the resulting Equation 8 thus measures the mutual information per unit time. We can also define mutual information per spike by dividing Equation 8 by the stimulation rate R and define the average cumulative mutual information as the following:

$$I_{\mathrm{cumulative}}(T) = \frac{1}{T}\int_0^T I_m(t)\,dt \tag{9}$$

which measures the average information transfer per unit time, within a period of time from 0 to T. It is important to point out that in the case of a static, constant Pr synapse mutual information and average cumulative mutual information are exactly the same because they are time independent.
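On a discrete time grid, the average cumulative mutual information becomes a running mean of the per-bin mutual information, and the per-spike measure is obtained by dividing by the rate. The following Python sketch illustrates both operations; the function names are hypothetical and the input is assumed to be an array of per-bin mutual information values starting at the first bin of the train.

```python
import numpy as np


def cumulative_average(im_per_bin):
    """Running mean of the per-bin mutual information from the first bin to each bin."""
    im = np.asarray(im_per_bin, dtype=float)
    return np.cumsum(im) / np.arange(1, im.size + 1)


def per_spike(im_per_bin, rate_per_bin):
    """Mutual information per spike: per-bin information divided by the rate."""
    return np.asarray(im_per_bin, dtype=float) / rate_per_bin


# A static synapse has time-independent information, so the running mean is flat.
static_trace = cumulative_average([0.07] * 5)
# A rising trace (illustrative values) yields a cumulative average that lags behind it.
rising_trace = cumulative_average([0.0, 2.0, 4.0])
```

The lag of the running mean behind the instantaneous value is the reason a peak in cumulative information is expected to occur later in the train than the peak in instantaneous information.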

Note that the exact values of bits of information transmitted are dependent on the chosen bin width of time and the release probability, so that if we assume a more precise Pr or spike-timing measurements, their information contents will increase. We will therefore consider relative changes in functional behavior due to the presence of STP by comparing information transmission in a model of a dynamic synapse to information transmission by a synapse with a constant Pr (i.e., no STP); both are analyzed using exactly the same procedures.

In addition, the chosen model does not consider multivesicular release or the natural variability in the amount of released neurotransmitter by a single vesicle. Because there are no clearly established mechanisms that control these processes, they can only be modeled as randomly distributed effects. All such processes therefore would not contribute to information transfer, and in the information theory formalism their contribution will be subtracted as part of H(r | s).

Computational analysis.

For simulation of the effects of STP on the information transfer of the CA3–CA1 excitatory synapse, we used our recently developed realistic model of STP, which shows a close agreement with experimental measurements at this synapse in rat hippocampal slices (Kandaswamy et al., 2010). This model accounts for three components of short-term synaptic enhancement (two components of facilitation and one component of augmentation) and depression, which is modeled as the depletion of the readily releasable vesicle pool using a sequential two-pool model. To determine the model parameters in a wide frequency range, we performed an extensive set of recordings of synaptic responses in the CA1 neurons in mouse hippocampal slices at stimulus frequencies of 2–100 Hz. Model parameters were then determined by fitting this expanded experimental dataset as described by Kandaswamy et al. (2010). The model was then able to successfully predict synaptic responses for arbitrary stimulus patterns.

In the first part of our study, constant-rate Poissonian spike trains were used. An ensemble of 6400 short trains was simulated for each rate. Train duration was chosen to achieve the ensemble average number of spikes in the train equal to 100. This timescale was chosen to match the existing experimental data on constant frequency and natural spike train responses (Klyachko and Stevens, 2006a; Kandaswamy et al., 2010). Since the quantitative information theory analysis requires discretization of continuous parameters, we chose release probability steps of 0.1 and time steps of 3 ms. Time steps were chosen to limit the minimum allowed ISI to experimentally and physiologically realizable cases.
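A constant-rate ensemble of this kind can be generated on a 3 ms grid as sketched below in Python. This is an illustration under stated assumptions rather than the original MATLAB code: the function name `poisson_ensemble` is hypothetical, and the Poisson process is approximated by an independent Bernoulli draw in each bin, which enforces the 3 ms minimum ISI by construction.

```python
import numpy as np


def poisson_ensemble(rate_hz, n_trains=6400, mean_spikes=100, dt_s=0.003, seed=0):
    """Boolean array (trains x bins); True marks a spike in that time bin.

    Assumption: one Bernoulli draw per bin with probability rate_hz * dt_s,
    and a train duration chosen so the expected spike count is mean_spikes.
    """
    p_spike = rate_hz * dt_s
    if not 0.0 < p_spike <= 1.0:
        raise ValueError("rate_hz * dt_s must lie in (0, 1]")
    n_bins = int(round(mean_spikes / p_spike))
    rng = np.random.default_rng(seed)
    return rng.random((n_trains, n_bins)) < p_spike


# A smaller ensemble than in the study, to keep the example light.
trains = poisson_ensemble(32.0, n_trains=200)
mean_count = trains.sum(axis=1).mean()
```

At 32 Hz the spike probability per bin is 0.096, so trains of about 1042 bins (roughly 3.1 s) contain 100 spikes on average.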

Results

Analytical calculation of information transmission by a dynamic synapse

Careful experimental analysis of synaptic information transmission requires testing a prohibitively large number of possible input spike trains. As a result, direct experimental measurements of information transfer are not currently feasible. Rather, studies of synaptic information transmission are performed mostly by computer simulations using models of synaptic dynamics (Markram et al., 1998b; Zador, 1998; Fuhrmann et al., 2002; Silberberg et al., 2004b; Lindner et al., 2009; Yang et al., 2009). Computationally, synaptic information transmission can be estimated within the information-theoretic framework by calculating the mutual information (Shannon, 1948) that reflects how much information the output spike train provides about the input train. To examine the contributions of STP to synaptic information transfer, we developed an analytical approach to calculate both the rate and time dependence of mutual information in a dynamic synapse in terms of the entropy of the synaptic response itself H(r) (Eq. 4) and the conditional entropy of the synaptic response given the input (Eq. 7). This approach is an extension of the earlier formalism originally developed by Zador (1998).

To examine synaptic dynamics that closely approximate the experimental data, we derived the entropy terms as a function of the input spike rate and synaptic release probability (Eq. 8). The release probability during input spike trains was determined based on a realistic model of STP that we developed previously for excitatory hippocampal synapses (Kandaswamy et al., 2010). To determine the model parameters in a wide frequency range, we performed a set of recordings of synaptic responses in the CA1 neurons in mouse hippocampal slices at stimulus frequencies of 2–100 Hz. The observed synaptic dynamics closely followed our previous recordings in the rat slices (Klyachko and Stevens, 2006a,b) (data not shown). Parameters of the model were determined as we previously described (Kandaswamy et al., 2010). With this optimal set of parameters, the model has been shown to accurately predict all key features of synaptic dynamics during natural spike trains recorded in exploring rodents (Kandaswamy et al., 2010). The basal Pr value in the model was set to 0.2, which represents the mean release probability in these synapses (Murthy et al., 1997). It is important to note that although Pr is typically low in these synapses, it is distributed across a significant range of values in the population of hippocampal synapses. We therefore assumed the average value of Pr = 0.2 in our first set of calculations, but then performed a detailed robustness analysis of the information transmission and of our results as a function of all major model parameters, including the range of Pr values from 0.05 to 0.4, which includes a large proportion of the synaptic population (Dobrunz and Stevens, 1997; Murthy et al., 1997) (see text and Fig. 4).

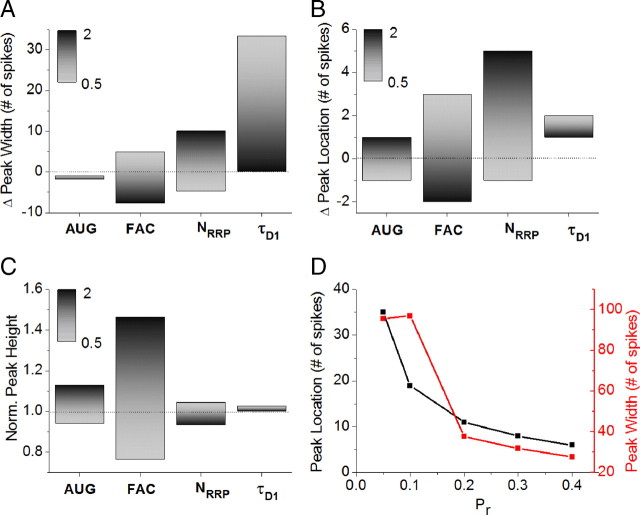

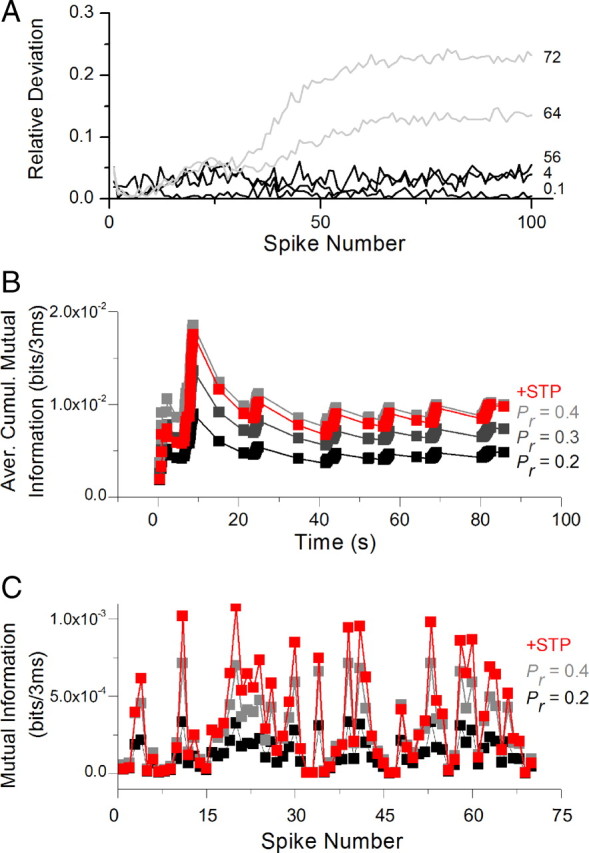

Figure 4.

Robustness of information transmission optimization. A, Changes in peak width of average cumulative mutual information with the 2× change of model parameters (augmentation amplitude, facilitation amplitude, number of vesicles in RRP and recycling (depression) timescale). The peak half-width above the steady-state level was taken as a measure for peak width. The stimulus rate of 32 Hz was used in this robustness analysis. B, C, Same as A for the changes in peak location (B) and height (C). D, Changes in peak width with the changes of basal release probability.

Previous analyses of synaptic information transfer considered the steady-state conditions that synapses reach after prolonged high-frequency stimulation (Lindner et al., 2009; Yang et al., 2009) and were thus time independent. Because such steady-state conditions might not be fully representative of the state in which synapses operate during natural activity levels, the contribution of STP could have been obscured in such time-independent analyses if this contribution has a strong temporal component. We thus focused on deriving and using a time-dependent formalism to capture such time-dependent effects.

The role of STP in information transfer during constant-rate Poisson spike trains

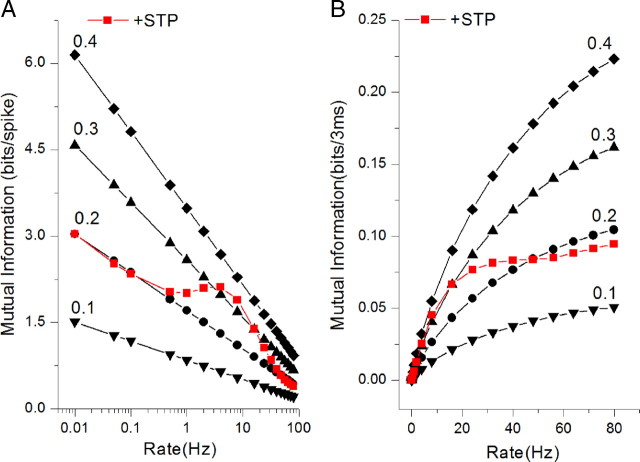

We first applied our time-dependent formalism to examine information transmission by a dynamic synapse during constant-rate, Poisson-distributed spike trains. As expected, the information transmission showed a clear dependence on the input rate (Fig. 1) similarly to the previous report (Zador, 1998). Most importantly, we found that information transfer was greater in the presence of STP than for the constant basal Pr (i.e., no STP present) for a wide range of input rates, ∼1–40 Hz (Fig. 1). This is the case for both the mutual information per spike (Fig. 1A) and the mutual information per unit of time (Fig. 1B). At low input rates, 0.01 < R < 0.1, at which STP contribution is small and does not significantly alter release probability, information transmission follows the same line as that for constant basal Pr = 0.2 value; however, as input rate increases, information transfer grows faster in the presence of STP, reaching levels that nearly double information transmission at basal Pr value. The range of input rates at which STP contributes to information transfer is comparable to the range of frequencies found in natural spike trains (Fenton and Muller, 1998; Leutgeb et al., 2005). Thus, STP clearly increases information transfer in a rate-dependent manner, unlike the findings in previous reports (Lindner et al., 2009; Yang et al., 2009). This result arises in part from using a realistic model of STP that closely approximates synaptic dynamics in excitatory hippocampal synapses and in part from performing time-dependent analysis. The latter has shown that the steady-state synaptic response commonly analyzed in information transmission studies may not be representative, at least in the case of the hippocampal excitatory synapses, of the state that synapses assume during physiologically relevant activity.

Figure 1.

Information transmission for constant-rate Poisson-distributed input spike trains. A, B, Mutual information per spike (A) or per unit of time (B) for a static synapse for a range of Pr values shown (black traces) and the average cumulative mutual information Icumulative for a dynamic synapse with a basal Pr = 0.2 (red traces). Icumulative was determined for 100-spike-long trains at each rate (see Materials and Methods for details). Note that in the case of a static, constant Pr synapse, mutual information and Icumulative are exactly the same since they are time independent. The presence of STP increases information transferred by the dynamic synapse in a wide frequency range above that of a static synapse with the same basal release probability.

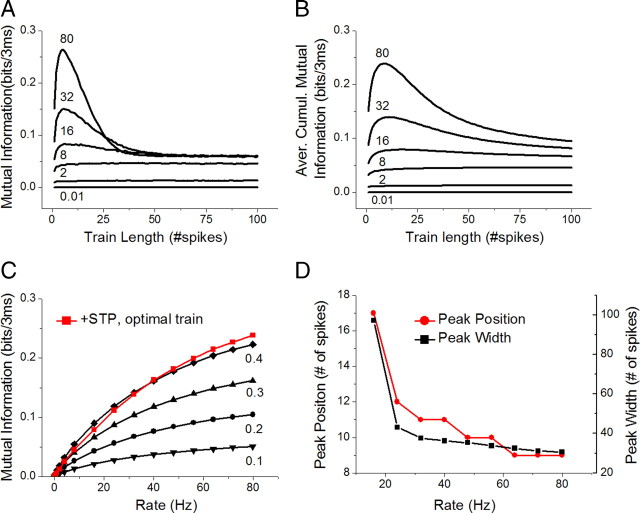

Optimization of information transmission by STP in unreliable synapses

If STP plays a role in information processing, then the time dependence of the information transfer may determine the optimal length and structure of the input spike train. Indeed, analysis of short input train lengths (Fig. 2A) showed a clear peak of information transmission for trains of ∼4–20 spikes (with a peak at 4 to 6 spikes) at all rates above ∼16 Hz. Lower rates showed an increase toward the steady-state mutual information value, but under these conditions mutual information grew with rate independently of the train length. Higher rates and long train lengths showed convergence to a common universal value, suggesting that under the conditions approaching the steady state the mutual information is broadband, as previously reported (Yang et al., 2009). The same results were also obtained when cumulative information transfer was examined for different input rates (Fig. 2B). For cumulative information transfer, the peak shifted to 9–12 spikes (Fig. 2B,D), as expected when we factored in the added contribution from the several initial spikes that occurred when information transfer was low.

Figure 2.

Time dependence of synaptic information transmission. A, Time-dependent mutual information for a dynamic synapse with a basal Pr = 0.2 is plotted versus spike number in the train. Information transmission is optimal for the short high-frequency spike bursts and converges to a universal steady-state value at longer train durations. Numbers shown above each trace represent the rate of synaptic input. B, Same as in A for the average cumulative mutual information Icumulative. The peak of optimal train length shifted toward the larger number of spikes, but the same overall optimization behavior is seen. Numbers shown above each trace represent the rate of synaptic input. C, The benefit of optimizing the train length for the chosen firing rate. If optimal train length is chosen, the dynamic synapse with a basal Pr = 0.2 can transfer information as efficiently as a static synapse with Pr = 0.4. D, Peak position and width (calculated as a half-width above the steady-state level) of the average cumulative mutual information Icumulative. The peak location corresponds to optimal burst length and the width determines the specificity of this optimization.

To evaluate the benefits of such optimization, we compared mutual information for the optimal train length at a given firing rate for dynamic synapses versus static synapses with a range of Pr values. In the case of dynamic synapses with a basal Pr = 0.2, information transmission for the optimal length spike train was equivalent to that of a static synapse with a twofold higher Pr of 0.4 (Fig. 2C). Together, these results show that STP in low release probability excitatory synapses not only increases information transmission in a rate-dependent manner, but also leads to optimization of information transfer for short spike bursts that are indeed commonly observed in excitatory hippocampal neurons (Leutgeb et al., 2005).

Information transmission during natural spike trains

To examine whether these information transmission principles play a role in a more realistic situation, we examined information transmission in a model of excitatory hippocampal synapses during natural spike patterns recorded in hippocampal place cells of freely moving and exploring rodents (Fenton and Muller, 1998). These spike trains represent the patterns of inputs that the excitatory hippocampal synapses are likely to encounter in vivo (Leutgeb et al., 2005). To be able to apply our formalism to an arbitrary spike train with varying rates, we needed to transform the input train into a time-dependent rate r(t) and produce a corresponding ensemble of input spike trains, making the precise analysis of information transfer very time intensive. The analysis of information transfer can be simplified, however, by eliminating the need for ensemble measurements if the expression for mutual information can be formulated only as a function of measured values, such as Pr. We thus used an approximation for the conditional entropy expression in Equation 7 by replacing the averaging over values of release probability p, by its average, i.e., 〈Pr〉, as follows:

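$$H(r \mid s) \approx -R\,\big[\langle P_r\rangle\,\log_2\langle P_r\rangle + \big(1 - \langle P_r\rangle\big)\,\log_2\!\big(1 - \langle P_r\rangle\big)\big] \tag{10}$$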

We then determined the accuracy of this approximation by comparing the exact amount of information transfer (given by Eq. 8) for constant-rate trains using the precise expression for H(r | s) in Equation 7 versus its approximation in Equation 10. This approximation resulted in 95% accuracy or better in estimating information transfer for stimulus trains shorter than ∼40 spikes at all rates tested (0.01–72 Hz), and for 100-spike-long trains at all rates ≤56 Hz (Fig. 3A). The only input regimes in which larger deviations were seen were outside the physiologically relevant range of stimuli for these synapses. The accuracy of this approximation suggests that the entropy held in the distribution f(p, t) of p values is relatively small with respect to the main contribution to entropy, which is due to the spike timing in the train. This is not surprising considering that the probability of an action potential firing at any given time point is very small. This notion can be seen more easily using a simple example at a 1 Hz rate. Since we chose time steps of 3 ms for our analysis, there were 333 time points in 1 s and therefore 333 possible different spike trains all having the 1 Hz rate. The synapse can release with 1 of 10 possible values of release probability (since it is quantized with 0.1 steps), and in reality the values are constrained by the model so the actual spectrum is even smaller. This explains why in our approximation the variability arising from the spike timing is much greater than that of the release probability distribution. Estimation of information transfer during a natural spike train using this simplification is based on the assumption that the properties of a specific natural spike train used are representative of an ensemble of natural spike trains and that variability within this ensemble is relatively small. 
Under this assumption, our analysis shows that information transfer by a dynamic synapse increases several-fold during spike bursts in the presence of STP (Fig. 3B,C). The synaptic information transfer due to STP in dynamic synapses with the basal Pr = 0.2 is comparable to that of a static synapse with Pr = 0.4. This effect of STP is very similar to the results seen for the optimal length spike train (Fig. 2C), suggesting that natural spike trains in hippocampal neurons may be optimized to transmit maximal information given the specific dynamics of their synapses. It is important to point out that a real synapse with a basal release probability of Pr = 0.4 is more likely to have depression-dominated STP (Dobrunz and Stevens, 1997; Murthy et al., 1997), leading to an overall decrease of transferred information (see Fig. 5 and text below). It is thus the tuning between the natural spike train structure and the dynamic properties of excitatory hippocampal synapses that allows the enhancement of information transfer during natural spike trains.
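The 1 Hz example above can be checked with a few lines of arithmetic. The sketch below is only an illustration under simplifying assumptions that are ours, not part of the original analysis: the single spike is treated as equally likely to fall in any of the 3 ms bins, and the 10 quantized release probability levels are treated as equally likely, which gives an upper bound on the entropy of the p distribution. The names `DT_MS`, `N_P_LEVELS`, `timing_bits`, and `p_level_bits` are hypothetical.

```python
import math

DT_MS = 3.0       # time step used in the analysis (from the text)
N_P_LEVELS = 10   # release probability quantized in steps of 0.1 (from the text)

# Number of time bins in 1 s, i.e. the number of distinct one-spike trains at 1 Hz.
n_bins = int(1000.0 / DT_MS)

# Entropy of the spike position if every bin is equally likely (assumption).
timing_bits = math.log2(n_bins)

# Upper bound on the entropy of the release probability values,
# reached only if all quantized levels were equally likely (assumption).
p_level_bits = math.log2(N_P_LEVELS)

print(f"bins per second:        {n_bins}")
print(f"spike timing entropy:   {timing_bits:.2f} bits")
print(f"release level entropy:  at most {p_level_bits:.2f} bits")
```

Even with the most generous bound on the release probability term, the spike timing term is more than twice as large, which is in line with the argument that the timing variability dominates.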

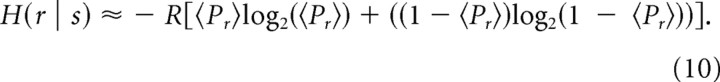

Figure 3.

Information transmission for a natural spike train. A, Relative deviation of approximation for mutual information from exact numerical calculations. The approximation presented holds true with 95% accuracy for all firing rates between 0.01 and 56 Hz and train durations of 100 spikes, as well as for spike trains shorter than ∼40 spikes at all rates tested. For spike trains longer than ∼40 spikes at rates of 64 Hz and above, significant deviations appear. The approximation accuracy is reduced when the model is stressed to the point when the release probability during prolonged high-rate stimulation approaches zero. B, The average cumulative mutual information Icumulative for a natural spike train. Icumulative shows rapid changes during natural spike trains with peaks corresponding to spike bursts and decays corresponding to periods of low activity. Information transfer in a dynamic synapse based on measured data starts as low as for a static synapse with a Pr = 0.2, but then increases during bursts, due to STP, to reach the performance of a static synapse with a Pr = 0.4. C, Mutual information per unit time for the first 70 spikes in the train plotted for a dynamic synapse with a basal Pr = 0.2 and static synapse with Pr from 0.2 to 0.4. The dynamic synapse expresses a wide range of transferred information values during the natural spike train from that similar to a static Pr = 0.2 synapse to above that of static Pr = 0.4 synapse.

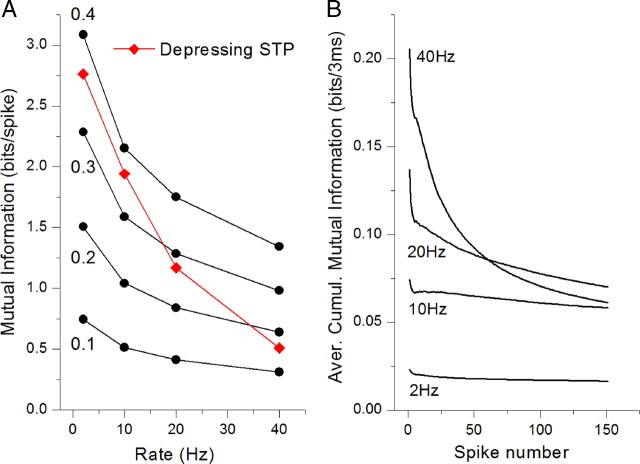

Figure 5.

Time and rate dependence of mutual information in a high release probability depressing synapse. A, Mutual information per spike for a static synapse for a range of Pr values shown (black traces) and the average cumulative mutual information Icumulative for a depression-dominated high release probability synapse (Pr = 0.5) (red trace) plotted as a function of the input rate. Icumulative shows a strong decay as the input rate increases. At all rates above 1 Hz, the depressing synapse (Pr = 0.5) transfers less information during a 150-stimulus-long train than the static synapse with Pr = 0.4. B, Average cumulative mutual information was calculated directly from the previous experimental measurements of synaptic dynamics in CA1 inhibitory hippocampal synapses (Klyachko and Stevens, 2006a). This mutual information is plotted as a function of spike number in the train and shows a steep decrease with the train length. No optimization for spike bursts is observed at any of the frequencies tested (2–40 Hz), and maximal information transmission is achieved at the first spike in the train.

Robustness of information transfer optimization

Although hippocampal excitatory synapses have a low average release probability, release probability is widely distributed across the synaptic population (Murthy et al., 1997). The expression of individual STP components is interdependent with the release probability and varies in amplitude as a function of Pr (Zucker and Regehr, 2002; Abbott and Regehr, 2004). It is therefore important to determine to what extent our findings are robust to changes in release probability as well as in individual model parameters. We thus performed the same analysis as described above while scaling individual model parameters from ×0.5 to ×2 of their original values: facilitation amplitude, augmentation amplitude, the time course of RRP recovery that effectively controls depression amplitude, and the size of the RRP (Fig. 4A–C). We found that all of our observations regarding the role of STP in increasing information transfer, as well as the optimization of information transfer for spike bursts, were not strongly dependent on the model parameters within these ranges and held true for all values tested.

This analysis also allowed us to examine the roles of different forms of STP in optimization of information transfer. Specifically, we used three metrics to quantify information transfer optimization: the peak position (Fig. 4A), the peak width (Fig. 4B), and the peak height (Fig. 4C). We found that the largest decrease in both the peak position and width occurred when facilitation amplitude was increased. This effect was presumably due to faster use of synaptic resources (vesicles), leading to faster and stronger depression. In contrast to these effects of facilitation, we found that the largest increase in peak width occurred when vesicle recycling time was decreased (Fig. 4A), increasing vesicle availability and leading to a much slower onset of depression. Similarly, increasing the size of the RRP produced the largest increase in peak position (Fig. 4B) by effectively extending vesicle availability and thus delaying the onset of depression. We further considered the contributions of STP components to the peak height (Fig. 4C), which represents one way of quantifying optimization strength. Our analysis revealed that peak height received its largest contribution from the facilitation amplitude. This effect of facilitation is expected since the peak occurs early during the stimulus train, when synaptic dynamics is indeed dominated by facilitation.

We also examined the robustness of information transmission regarding changes in basal release probability in a range from 0.05 to 0.4 (Fig. 4D), which includes the majority of excitatory hippocampal synapses (Murthy et al., 1997). While the optimization of information transmission was observed at all Pr values within this range, we found a strong inverse dependence between optimization and Pr such that the optimal length of the bursts decreased rapidly with increasing Pr (from 35 spikes at Pr = 0.05, to 11 spikes at Pr = 0.2, to 6 spikes at Pr = 0.4). It is important to note that this analysis represents a lower-bound approximation in the sense that in real synapses this dependence between the optimal peak position and Pr is likely to be even stronger. This is because most of the current STP model parameters have been determined from experimental data that represent the averaged behavior of CA3–CA1 synapses, i.e., a synapse with Pr ≈ 0.2. Since functional interdependences between Pr and STP parameters are not currently known, performing this analysis at significantly higher Pr values would require determining a new set of model parameters based on experimental data recorded at these increased Pr values. As Pr increases, the experimentally determined amplitudes of facilitation and augmentation would decrease and the amplitude of depression would increase. These indirect effects of increasing Pr would further accentuate the dependence of optimization on release probability, but they are not taken into account in our current analysis, because we vary only one parameter (in this case Pr) at a time. Based on these considerations, we limited our analysis to a lower range of Pr values (0.05–0.4), within which synaptic dynamics remains qualitatively similar, before it shifts from a facilitation- to a depression-dominated mode at higher Pr values (Dobrunz and Stevens, 1997).

Together, these results indicate that STP-mediated optimization of information transmission in unreliable synapses is robust within a relevant range of model parameters and within a lower range of release probabilities that are predominant in excitatory hippocampal synapses.
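The inverse relationship between basal Pr and the position of the optimum can be illustrated qualitatively with a toy model. The sketch below is not the model used in this study: it is a generic facilitation-plus-depletion update in the style of Tsodyks and Markram, with no augmentation and no explicit RRP, and it tracks per-spike release probability rather than mutual information. The function names and the time constants `tau_facil_s` and `tau_rec_s` are hypothetical values chosen only for illustration, so the peak positions it prints are not expected to match the 35, 11, and 6 spikes reported above.

```python
import math

def release_per_spike(basal_pr, rate_hz, n_spikes,
                      tau_facil_s=0.5, tau_rec_s=0.8):
    """Per-spike release probability in a toy facilitation-depletion model."""
    dt = 1.0 / rate_hz
    u = basal_pr   # utilization of resources, raised by facilitation
    x = 1.0        # fraction of resources currently available
    trace = []
    for _ in range(n_spikes):
        trace.append(u * x)                 # release at this spike
        x_after = x * (1.0 - u)             # depletion by release
        # Resources recover toward 1 during the interspike interval.
        x = 1.0 - (1.0 - x_after) * math.exp(-dt / tau_rec_s)
        # Facilitation decays toward baseline, then jumps at the next spike.
        u = basal_pr + u * (1.0 - basal_pr) * math.exp(-dt / tau_facil_s)
    return trace

def peak_spike(basal_pr, rate_hz=40.0, n_spikes=100):
    """1-based index of the spike with the highest release probability."""
    trace = release_per_spike(basal_pr, rate_hz, n_spikes)
    return 1 + max(range(n_spikes), key=trace.__getitem__)

for pr in (0.05, 0.2, 0.4):
    print(f"basal Pr = {pr:.2f}: release peaks at spike {peak_spike(pr)}")
```

In this toy setting the peak moves toward the first spike as basal Pr grows, because a higher starting utilization depletes resources before facilitation can build up. This is the same qualitative trend as in Fig. 4D, obtained here under much cruder assumptions.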

Prediction for information transmission in high release probability synapses and its verification

The above analysis suggests that STP-mediated optimization of information transmission for spike bursts holds for unreliable synapses, but might not be present in high release probability synapses, which are expected to have a dominant short-term depression. Indeed, analysis of depression-dominated dynamic synapses with Pr = 0.5 (and no facilitation/augmentation) shows a strong monotonic decay of average cumulative mutual information with the input rate (Fig. 5A). Even at 2 Hz, the depressing synapse with Pr = 0.5 transfers less information during a 150-spike-long train than the static synapse with Pr = 0.4, and at 40 Hz the dynamic synapse transfers less information than a static synapse with Pr = 0.2.

Based on the above analysis, we predicted that in high release probability synapses single spikes rather than bursts would be expected to carry maximal information, as the optimal burst length would approach a value of 1 (Fig. 4D). To verify this prediction, we took advantage of the fact that a large proportion of inhibitory hippocampal synapses in the CA1 area have a high release probability (Mody and Pearce, 2004; Patenaude et al., 2005) and express dominant short-term depression (Maccaferri et al., 2000). To examine information transfer in these synapses, we used a series of measurements we previously performed in CA1 inhibitory hippocampal synapses with constant-frequency stimulation (Klyachko and Stevens, 2006a). The actual measured values of synaptic strength during trains were used in these calculations. We found that the average cumulative mutual information decreased monotonically with the length of the train, and no optimization peak was observed at any of the frequencies examined (Fig. 5B). This result confirms the prediction of our analysis and suggests that for inhibitory hippocampal neurons, optimal information transfer would take place when the train is composed of single spikes rather than bursts. This fits well with the observation that inhibitory hippocampal interneurons, unlike excitatory pyramidal cells, do not typically fire spike bursts (Connors and Gutnick, 1990).
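One simple way to see why a falling release probability reduces transmitted information is to treat each time bin as a binary channel. The sketch below is our own simplified illustration and does not reproduce Equations 7 and 8: it assumes a spike occurs in a bin with probability q = rate × Δt, that release occurs with probability Pr only when a spike is present, and that there is no spontaneous release. Under those assumptions the mutual information per bin is h(q·Pr) − q·h(Pr), where h is the binary entropy. The function names are hypothetical.

```python
import math

def binary_entropy(x):
    """Entropy in bits of a binary variable with P(1) = x."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)

def bits_per_bin(spike_prob, release_prob):
    """Spike-to-release mutual information per time bin.

    Assumes release can only follow a spike (no spontaneous release),
    so I = H(release) - H(release | spike) = h(q * Pr) - q * h(Pr).
    """
    return (binary_entropy(spike_prob * release_prob)
            - spike_prob * binary_entropy(release_prob))

DT_S = 0.003  # 3 ms time step, as in the text

for rate_hz in (2.0, 40.0):
    q = rate_hz * DT_S
    row = ", ".join(f"Pr={p}: {bits_per_bin(q, p):.4f}" for p in (0.2, 0.4, 0.5))
    print(f"{rate_hz:>4.0f} Hz -> {row} bits/bin")
```

Within this simplified picture the information per bin grows monotonically with Pr at a fixed rate, so any process that lowers Pr during a train, such as depression, lowers the information carried by later spikes, whereas facilitation has the opposite effect.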

Discussion

We have examined the role of synaptic dynamics in information transmission by estimating the mutual information between synaptic drive and the output synaptic gain changes in a realistic model of STP in excitatory hippocampal synapses. Our analysis shows that the presence of STP leads to an increase in information transfer in a wide frequency range. Furthermore, considerations of the time dependence of information transmission revealed that STP also determines the optimal number of spikes in a train that maximizes information transmission. Specifically, in these low release probability synapses, information transmission is optimal for the short high-frequency spike bursts that are indeed common in the firing patterns of excitatory hippocampal neurons. When an optimal spike pattern is used as an input, the information transfer by the dynamic synapse is equivalent to that of a static synapse with twice the basal release probability. Our analysis further showed a strong dependence of this optimization on the basal release probability and predicted that the optimal burst length will reach unity (so that a single spike is optimal for information transmission) at large values of Pr, when synaptic dynamics is dominated by short-term depression. We verified these key observations using analyses of experimental recordings in low release probability excitatory and high release probability inhibitory hippocampal synapses in brain slices. Our findings thus demonstrate that STP contributes significantly to synaptic information processing and works to optimize information transmission for specific firing patterns of the corresponding neurons.

The role of STP in information transmission

The function of STP in information processing has been suggested by numerous studies of visual and auditory processing (Chance et al., 1998; Taschenberger and von Gersdorff, 2000; Chung et al., 2002; Cook et al., 2003; DeWeese et al., 2005; MacLeod et al., 2007) and of cortical/hippocampal circuit operations (Abbott et al., 1997; Markram et al., 1998a; Silberberg et al., 2004a; Klyachko and Stevens, 2006a; Kandaswamy et al., 2010). Specific computations performed by STP are often based on frequency-dependent filtering operations and include, but are not limited to, detection of transient inputs, such as spike bursts (Lisman, 1997; Richardson et al., 2005; Klyachko and Stevens, 2006a) and abrupt changes in input rate (Abbott et al., 1997; Puccini et al., 2007), synaptic gain control (Abbott et al., 1997), input redundancy reduction (Goldman et al., 2002), and processing of population bursts (Richardson et al., 2005).

Information theory provides a robust quantitative framework to analyze the role of STP in information transmission at synapses and has been successfully used in several studies of synaptic processing (Tsodyks and Markram, 1997; Varela et al., 1997; Markram et al., 1998b; Tsodyks et al., 1998; Zador, 1998; Maass and Zador, 1999; Natschläger et al., 2001; Fuhrmann et al., 2002; Goldman et al., 2002; Loebel and Tsodyks, 2002). The main complication of applying information theory to address physiological questions is its reliance on the analysis of large ensembles of input spike patterns, which require either prohibitively large sets of measurements or a commitment to simplifying assumptions. In the pioneering work of Zador (1998), calculations based on ISI distribution were used to significantly reduce the number of simulations needed. This simplification assumes the time independence of synaptic responses and works well in approximation of steady-state synaptic conditions. This methodology, however, does not allow the correct analysis of time-dependent information transmission by dynamic synapses with rapidly changing release probability. By developing an extension of this previous information theory formalism to include time-dependent analysis, we were able to clearly demonstrate the role of STP in increasing information transfer in a wide range of input frequencies (Fig. 1).

This result would not be apparent in analyses of the steady-state conditions that were used in previous studies of information transmission by dynamic synapses (Lindner et al., 2009; Yang et al., 2009), which indeed led to different conclusions. Both studies assumed time-independent information transfer, an assumption that we found obscures the contributions of STP, which have a strong temporal component. In fact, we have shown, in agreement with Yang et al. (2009), that for sufficiently long trains, when synapses reach a steady state, information transmission indeed converges to a common level, and there is a wide range of stimulation rates that all exhibit the same information transfer. Analysis of synaptic dynamics during natural spike trains (Fig. 3) revealed that this regime is not common during physiologically relevant activity levels, at least in the case of excitatory hippocampal synapses. In addition, performing simulations under steady-state conditions reduces the dynamic range of synaptic strength, which might contribute to the lack of frequency dependence.

It is also important to note that our calculations were simplified by avoiding a postsynaptic neuron firing model, which is usually introduced as a final stage of calculations. Our goal was to keep our calculations as close to the experimental data as possible. The most commonly used model in similar studies is the leaky integrate-and-fire neuron, which introduces a large number of free parameters, neglects the nonlinear properties of dendritic integration, and is difficult to verify experimentally (Burkitt, 2006; Brette et al., 2007; Paninski et al., 2007). A more realistic approach to generating output neuron spiking could be based on a previous study of the dendrite-to-soma input/output function of CA1 pyramidal neurons, demonstrating that this input–output relationship could be modeled as a linear filter followed by an adapting static-gain function (Cook et al., 2007). Application of such an approach would also require precise knowledge of how multiple heterogeneous synaptic inputs interact and are spatially integrated in the dendrites. Given the intricate spatiotemporal dendritic processing (Spruston, 2008) and the complexity of intersynaptic interactions over various timescales (Remondes and Schuman, 2002; Dudman et al., 2007), the problem of linking individual synaptic dynamics to the actual spiking output of a neuron remains largely unresolved. We therefore chose not to use a neuronal-spiking model. Moreover, if any neuronal-spiking model changes information-transfer properties in a rate- or time-dependent manner, it would be advantageous to study these effects independently of the choice of synaptic STP model. It thus remains to be determined how information transmission at individual synapses is modified by complex dendritic processing in the postsynaptic neuron.
However, given that an STP-dependent increase in synaptic information transfer is observed over a wide frequency range and is highly robust, we predict that the effects of STP we observed at the level of synaptic output will also be qualitatively present at the level of actual spiking output of a neuron, unless dendritic filtering strongly attenuates synaptic signals over this entire frequency range.

Optimization of information transfer

The key finding of our study is the optimization of information transmission by STP. In low release probability synapses, information transmission is maximal for short high-frequency spike bursts (Fig. 2). This result demonstrates that the short timescale, ∼30 spikes, during which the synapse reaches its steady state, has a nontrivial time- and rate-dependent contribution of STP to information transfer. Our numerical calculations show that synapses can modulate the information they transfer with respect to the length and the rate of the input spike pattern. This may lead to the optimization of information transfer for variable rate trains, such as natural spike trains, if they are composed of constant-rate trains of a length that maximizes information transfer at that rate. Our calculations thus predict that a mixture of input rates would be optimal for information transmission when low-frequency trains of any length are mixed with short bursts of high-rate firing to maximize the information transfer of a synapse. This is indeed in agreement with the experimentally observed firing patterns of excitatory hippocampal neurons (Fenton and Muller, 1998; Leutgeb et al., 2005).

Based on the same optimization considerations, our analyses predicted that high release probability synapses would have maximal information transmission when single spikes rather than bursts are used as synaptic input. This effect arises from the switch in synaptic dynamics from facilitation/augmentation to depression at high release probabilities (Figs. 1, 5). This interpretation is in agreement with a previous study (Goldman et al., 2002) showing that depressing synapses reduce information redundancy in spike trains. Indeed, when natural spike trains were used as an input to depressing stochastic synapses, they exhibited reduced autocorrelation of spike timing, which is equivalent to our finding of optimization by single spikes. It is tempting to speculate that STP expression might have evolved in part to optimize information transmission in the firing patterns of the corresponding neurons, as seems to be the case for both excitatory and inhibitory hippocampal synapses. Alternatively, it is possible that the adaptation properties of neurons, which determine their bursty firing patterns, might have evolved in part to optimize information transfer given the existence of STP. Future studies of information transmission using more detailed models of synaptic dynamics in other neural systems will reveal the extent to which this principle applies to other types of synapses, or whether it is specific to a subset of circuits or to certain types of information transmitted. These analyses will also require a better understanding of information encoding, which currently limits application of information theory to a wider variety of synapses and circuits.

Footnotes

This work was supported in part by a grant to V.K. from the Whitehall Foundation. We thank Dr. Valeria Cavalli and Diana Owyoung for their constructive comments on the manuscript.

References

- Abbott LF, Regehr WG. Synaptic computation. Nature. 2004;431:796–803. doi: 10.1038/nature03010.

- Abbott LF, Varela JA, Sen K, Nelson SB. Synaptic depression and cortical gain control. Science. 1997;275:220–224. doi: 10.1126/science.275.5297.221.

- Brette R, Rudolph M, Carnevale T, Hines M, Beeman D, Bower JM, Diesmann M, Morrison A, Goodman PH, Harris FC, Jr, Zirpe M, Natschläger T, Pecevski D, Ermentrout B, Djurfeldt M, Lansner A, Rochel O, Vieville T, Muller E, Davison AP, et al. Simulation of networks of spiking neurons: a review of tools and strategies. J Comput Neurosci. 2007;23:349–398. doi: 10.1007/s10827-007-0038-6.

- Burkitt AN. A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol Cybern. 2006;95:1–19. doi: 10.1007/s00422-006-0068-6.

- Chance FS, Nelson SB, Abbott LF. Synaptic depression and the temporal response characteristics of V1 cells. J Neurosci. 1998;18:4785–4799. doi: 10.1523/JNEUROSCI.18-12-04785.1998.

- Chung S, Li X, Nelson SB. Short-term depression at thalamocortical synapses contributes to rapid adaptation of cortical sensory responses in vivo. Neuron. 2002;34:437–446. doi: 10.1016/s0896-6273(02)00659-1.

- Connors BW, Gutnick MJ. Intrinsic firing patterns of diverse neocortical neurons. Trends Neurosci. 1990;13:99–104. doi: 10.1016/0166-2236(90)90185-d.

- Cook DL, Schwindt PC, Grande LA, Spain WJ. Synaptic depression in the localization of sound. Nature. 2003;421:66–70. doi: 10.1038/nature01248.

- Cook EP, Guest JA, Liang Y, Masse NY, Colbert CM. Dendrite-to-soma input/output function of continuous time-varying signals in hippocampal CA1 pyramidal neurons. J Neurophysiol. 2007;98:2943–2955. doi: 10.1152/jn.00414.2007.

- Deng PY, Klyachko VA. The diverse functions of short-term plasticity components in synaptic computations. Commun Integr Biol. 2011;4:543–548. doi: 10.4161/cib.4.5.15870.

- DeWeese MR, Hromádka T, Zador AM. Reliability and representational bandwidth in the auditory cortex. Neuron. 2005;48:479–488. doi: 10.1016/j.neuron.2005.10.016.

- Dobrunz LE, Stevens CF. Heterogeneity of release probability, facilitation, and depletion at central synapses. Neuron. 1997;18:995–1008. doi: 10.1016/s0896-6273(00)80338-4.

- Dudman JT, Tsay D, Siegelbaum SA. A role for synaptic inputs at distal dendrites: instructive signals for hippocampal long-term plasticity. Neuron. 2007;56:866–879. doi: 10.1016/j.neuron.2007.10.020.

- Fenton AA, Muller RU. Place cell discharge is extremely variable during individual passes of the rat through the firing field. Proc Natl Acad Sci U S A. 1998;95:3182–3187. doi: 10.1073/pnas.95.6.3182.

- Fortune ES, Rose GJ. Short-term synaptic plasticity as a temporal filter. Trends Neurosci. 2001;24:381–385. doi: 10.1016/s0166-2236(00)01835-x.

- Fuhrmann G, Segev I, Markram H, Tsodyks M. Coding of temporal information by activity-dependent synapses. J Neurophysiol. 2002;87:140–148. doi: 10.1152/jn.00258.2001.

- Goldman MS, Maldonado P, Abbott LF. Redundancy reduction and sustained firing with stochastic depressing synapses. J Neurosci. 2002;22:584–591. doi: 10.1523/JNEUROSCI.22-02-00584.2002.

- Kandaswamy U, Deng PY, Stevens CF, Klyachko VA. The role of presynaptic dynamics in processing of natural spike trains in hippocampal synapses. J Neurosci. 2010;30:15904–15914. doi: 10.1523/JNEUROSCI.4050-10.2010.

- Klyachko VA, Stevens CF. Excitatory and feed-forward inhibitory hippocampal synapses work synergistically as an adaptive filter of natural spike trains. PLoS Biol. 2006a;4:e207. doi: 10.1371/journal.pbio.0040207.

- Klyachko VA, Stevens CF. Temperature-dependent shift of balance among the components of short-term plasticity in hippocampal synapses. J Neurosci. 2006b;26:6945–6957. doi: 10.1523/JNEUROSCI.1382-06.2006.

- Leutgeb S, Leutgeb JK, Moser MB, Moser EI. Place cells, spatial maps and the population code for memory. Curr Opin Neurobiol. 2005;15:738–746. doi: 10.1016/j.conb.2005.10.002.

- Lindner B, Gangloff D, Longtin A, Lewis JE. Broadband coding with dynamic synapses. J Neurosci. 2009;29:2076–2088. doi: 10.1523/JNEUROSCI.3702-08.2009.

- Lisman JE. Bursts as a unit of neural information: making unreliable synapses reliable. Trends Neurosci. 1997;20:38–43. doi: 10.1016/S0166-2236(96)10070-9.

- Loebel A, Tsodyks M. Computation by ensemble synchronization in recurrent networks with synaptic depression. J Comput Neurosci. 2002;13:111–124. doi: 10.1023/a:1020110223441.

- Maass W, Zador AM. Dynamic stochastic synapses as computational units. Neural Comput. 1999;11:903–917. doi: 10.1162/089976699300016494.

- Maccaferri G, Roberts JD, Szucs P, Cottingham CA, Somogyi P. Cell surface domain specific postsynaptic currents evoked by identified GABAergic neurones in rat hippocampus in vitro. J Physiol. 2000;524:91–116. doi: 10.1111/j.1469-7793.2000.t01-3-00091.x.

- MacLeod KM, Horiuchi TK, Carr CE. A role for short-term synaptic facilitation and depression in the processing of intensity information in the auditory brain stem. J Neurophysiol. 2007;97:2863–2874. doi: 10.1152/jn.01030.2006.

- Markram H, Pikus D, Gupta A, Tsodyks M. Potential for multiple mechanisms, phenomena and algorithms for synaptic plasticity at single synapses. Neuropharmacology. 1998a;37:489–500. doi: 10.1016/s0028-3908(98)00049-5.

- Markram H, Gupta A, Uziel A, Wang Y, Tsodyks M. Information processing with frequency-dependent synaptic connections. Neurobiol Learn Mem. 1998b;70:101–112. doi: 10.1006/nlme.1998.3841.

- Mody I, Pearce RA. Diversity of inhibitory neurotransmission through GABA(A) receptors. Trends Neurosci. 2004;27:569–575. doi: 10.1016/j.tins.2004.07.002.

- Murthy VN, Sejnowski TJ, Stevens CF. Heterogeneous release properties of visualized individual hippocampal synapses. Neuron. 1997;18:599–612. doi: 10.1016/s0896-6273(00)80301-3.

- Natschläger T, Maass W, Zador A. Efficient temporal processing with biologically realistic dynamic synapses. Network. 2001;12:75–87.

- Paninski L, Pillow J, Lewi J. Statistical models for neural encoding, decoding, and optimal stimulus design. Prog Brain Res. 2007;165:493–507. doi: 10.1016/S0079-6123(06)65031-0.

- Patenaude C, Massicotte G, Lacaille JC. Cell-type specific GABA synaptic transmission and activity-dependent plasticity in rat hippocampal stratum radiatum interneurons. Eur J Neurosci. 2005;22:179–188. doi: 10.1111/j.1460-9568.2005.04207.x.

- Puccini GD, Sanchez-Vives MV, Compte A. Integrated mechanisms of anticipation and rate-of-change computations in cortical circuits. PLoS Comput Biol. 2007;3:e82. doi: 10.1371/journal.pcbi.0030082.

- Remondes M, Schuman EM. Direct cortical input modulates plasticity and spiking in CA1 pyramidal neurons. Nature. 2002;416:736–740. doi: 10.1038/416736a.

- Richardson MJ, Melamed O, Silberberg G, Gerstner W, Markram H. Short-term synaptic plasticity orchestrates the response of pyramidal cells and interneurons to population bursts. J Comput Neurosci. 2005;18:323–331. doi: 10.1007/s10827-005-0434-8.

- Shannon CE. A mathematical theory of communication. Bell Sys Tech J. 1948;27:379–423.

- Silberberg G, Wu C, Markram H. Synaptic dynamics control the timing of neuronal excitation in the activated neocortical microcircuit. J Physiol. 2004a;556:19–27. doi: 10.1113/jphysiol.2004.060962.

- Silberberg G, Bethge M, Markram H, Pawelzik K, Tsodyks M. Dynamics of population rate codes in ensembles of neocortical neurons. J Neurophysiol. 2004b;91:704–709. doi: 10.1152/jn.00415.2003.

- Spruston N. Pyramidal neurons: dendritic structure and synaptic integration. Nat Rev Neurosci. 2008;9:206–221. doi: 10.1038/nrn2286.

- Taschenberger H, von Gersdorff H. Fine-tuning an auditory synapse for speed and fidelity: developmental changes in presynaptic waveform, EPSC kinetics, and synaptic plasticity. J Neurosci. 2000;20:9162–9173. doi: 10.1523/JNEUROSCI.20-24-09162.2000.

- Tsodyks MV, Markram H. The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc Natl Acad Sci U S A. 1997;94:719–723. doi: 10.1073/pnas.94.2.719.

- Tsodyks M, Pawelzik K, Markram H. Neural networks with dynamic synapses. Neural Comput. 1998;10:821–835. doi: 10.1162/089976698300017502.

- Varela JA, Sen K, Gibson J, Fost J, Abbott LF, Nelson SB. A quantitative description of short-term plasticity at excitatory synapses in layer 2/3 of rat primary visual cortex. J Neurosci. 1997;17:7926–7940. doi: 10.1523/JNEUROSCI.17-20-07926.1997.

- Wesseling JF, Lo DC. Limit on the role of activity in controlling the release-ready supply of synaptic vesicles. J Neurosci. 2002;22:9708–9720. doi: 10.1523/JNEUROSCI.22-22-09708.2002.

- Yang Z, Hennig MH, Postlethwaite M, Forsythe ID, Graham BP. Wide-band information transmission at the calyx of Held. Neural Comput. 2009;21:991–1017. doi: 10.1162/neco.2008.02-08-714.

- Zador A. Impact of synaptic unreliability on the information transmitted by spiking neurons. J Neurophysiol. 1998;79:1219–1229. doi: 10.1152/jn.1998.79.3.1219.

- Zucker RS, Regehr WG. Short-term synaptic plasticity. Annu Rev Physiol. 2002;64:355–405. doi: 10.1146/annurev.physiol.64.092501.114547.