Abstract

Objects in the visual world can be represented in both egocentric and allocentric coordinates. Previous studies have found that allocentric representation can affect the accuracy of spatial judgment relative to an egocentric frame, but not vice versa. Here we asked whether egocentric representation influenced the processing speed of allocentric perception. We measured the manual reaction time of human subjects in a position discrimination task in which the behavioral response relied purely on the target's allocentric location, independent of its egocentric position. We used two conditions of stimulus location: the compatible condition (allocentric left and egocentric left, or allocentric right and egocentric right) and the incompatible condition (allocentric left and egocentric right, or allocentric right and egocentric left). We found that egocentric representation markedly influenced allocentric perception in three ways. First, at a given egocentric location, allocentric perception was significantly faster in the compatible condition than in the incompatible condition. Second, as the target became more eccentric in the visual field, allocentric perception gradually slowed in the incompatible condition but remained unchanged in the compatible condition. Third, egocentric-allocentric incompatibility slowed allocentric perception more on the left egocentric side than on the right egocentric side. These results cannot be explained by interhemispheric visuomotor transformation or by stimulus-response compatibility theory. Our findings indicate that each hemisphere preferentially processes and integrates contralateral egocentric and allocentric spatial information, and that the right hemisphere receives more ipsilateral egocentric inputs than the left hemisphere does.

Introduction

Spatial locations of objects in the surrounding environment are not only perceived based on an observer-centered (egocentric) coordinate (Goodale and Milner, 1992; Kalaska and Crammond, 1992; Andersen, 1995; Carey, 2000; Connolly et al., 2003; Medendorp et al., 2005), but also on an object-centered (allocentric) coordinate (Olson, 2003; Burgess, 2006; Dean and Platt, 2006; Moorman and Olson, 2007; Ward and Arend, 2007). Human imaging studies found that different cortical regions were active when the subjects processed ego- or allocentric information (Galati et al., 2000, 2010; Gramann et al., 2006; Neggers et al., 2006; Zaehle et al., 2007). However, it is not clearly known whether an object's representation in one coordinate system influences a subject's perception in the other. In an earlier study, Roelofs (1935) found an influence of allocentric representation on egocentric spatial perception, i.e., when the center of a large frame was offset to the left or right of the sagittal middle line of an observer, its edge was mislocalized in the opposite direction in an egocentric coordinate system. This phenomenon has been called the “Roelofs effect,” and it was confirmed by later investigations (Bridgeman et al., 1997). More recently, interactions between ego- and allocentric representation were systematically investigated by independently varying ego- and allocentric representation (Neggers et al., 2005). Results from this study showed that allocentric representation influenced the accuracy of spatial judgment in egocentric coordinate, but no reverse interaction was found. The authors suggested that the interactions between two coordinate frames were most likely only unidirectional with allocentric representation influencing egocentric judgment, but not vice versa.

However, these studies evaluated accuracy but not reaction time (RT). In the present study, we asked whether a conflict between a target's allocentric and egocentric positions affected reaction time. We used a position discrimination task, in which the behavioral response purely relied on the visual target's allocentric location (2 allocentric locations: left, right), not on its egocentric position (8 egocentric locations: 4 in up-left quadrant and 4 in up-right quadrant). We found that the egocentric position of the target affected the speed of an allocentric decision, and that interhemispheric visuomotor transformation and stimulus-response compatibility (SRC) theory could not fully explain our data. We suggest that each hemisphere preferentially processes and integrates contralateral ego- and allocentric information.

Materials and Methods

In the present study, we tested 10 subjects (age 21–48 years, 4 male, 6 female) in the main task. All subjects were right-handed. Nine of the 10 subjects were naive to the project and one was an author (Z.M.). All subjects had normal or corrected-to-normal vision. Nine of the 10 subjects were available to participate in the control experiments. All experiments followed the guidelines of the Animal Care and Ethics Committee of the Institute of Neuroscience, Shanghai Institute of Biological Sciences, China Academy of Sciences. The subjects received compensation for their participation.

Experimental setup

The subject sat in front of a monitor with the head restrained on a chin rest. All visual stimuli were presented on a 21-inch fast phosphor CRT monitor (Sony Multiscan G520, 1280 × 960 pixels, 100 Hz vertical refresh rate) at a distance of 80 cm from the subject's eyes. Eye position was recorded by an infrared image eye tracker (EyeLink 2000 Desktop Mount, SR Research). The key press device was modified from a computer keyboard. It had three buttons, one at each end and one in the middle of a 30 cm board, so that neighboring buttons were 15 cm apart. During the experiments, the key device was positioned in front of the subject so that the middle button was aligned with the sagittal midline. We used MATLAB (MathWorks) with Psychtoolbox running on a PC to control stimulus presentation and collect manual reaction time data.

Behavioral tasks

Position discrimination task (Main task).

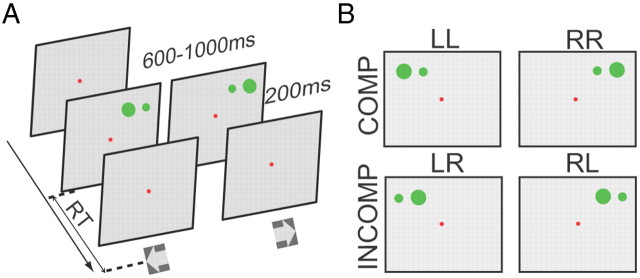

We used a task that independently varied the allocentric and egocentric locations of visual stimuli. The task began with a red fixation spot appearing in the center of a CRT screen. The subjects had to fixate the spot for a random interval of 600–1000 ms, after which a pair of horizontally aligned green dots 2.5° apart appeared on the screen, a large one (1.5°) and a small one (0.6°). The subjects had to determine whether the larger dot was on the right or left relative to the small one and press the right or left key accordingly as fast as possible (an allocentric decision). The pair of dots could be placed in one of eight positions in the visual field. The 8 egocentric locations were 7° above the fixation point on the y-axis; on the x-axis they ranged from 8.75° left to 8.75° right of the fixation point with equal spacing (2.5°) between adjacent locations. The dots remained on the screen for 200 ms (Fig. 1A). In this design eight of the displays had compatible egocentric and allocentric locations (both on the left, LL, or both on the right, RR; Fig. 1B). The other eight displays had incompatible locations (a right allocentric target in the left visual field, LR, or a left allocentric target in the right visual field, RL).
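The display geometry described above can be sketched in a few lines of Python. This is an illustrative reconstruction, not the MATLAB/Psychtoolbox code used in the study; the function and variable names are hypothetical, and only the spacing, the number of locations, and the LL/RR/LR/RL labels come from the text.

```python
from itertools import product

SPACING_DEG = 2.5   # spacing between adjacent egocentric locations (from the text)
N_LOCATIONS = 8     # number of egocentric locations (from the text)


def egocentric_locations():
    """Horizontal offsets (deg) of the 8 display positions, symmetric about fixation."""
    half_span = SPACING_DEG * (N_LOCATIONS - 1) / 2  # 8.75 deg
    return [-half_span + i * SPACING_DEG for i in range(N_LOCATIONS)]


def classify(ego_x_deg, allo_side):
    """Label a display: egocentric side first, allocentric side second."""
    ego_side = "L" if ego_x_deg < 0 else "R"
    return ego_side + allo_side


def is_compatible(label):
    """Compatible displays have matching egocentric and allocentric sides."""
    return label in ("LL", "RR")


# All 16 displays: 8 egocentric locations x 2 allocentric sides of the large dot.
displays = [(x, side, classify(x, side))
            for x, side in product(egocentric_locations(), "LR")]
```

Enumerating the displays this way makes it easy to confirm that the design is balanced: half of the 16 displays are compatible and half are incompatible.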

Figure 1.

Main task and types of trials. A, Schematic of the allocentric position discrimination task (main task). The red spot indicates the central fixation point, which remained visible throughout the trial. Subjects were required to fixate it throughout the trial. After a random delay (600–1000 ms), the visual cue, composed of two green dots (one small and one big), appeared at one of 8 egocentric locations. Subjects had to press either the left key or the right key, based on the position of the bigger dot (target) relative to the smaller dot (reference). B, Based on the ego- and allocentric representations of the target, trials could be classified into two groups (compatible, incompatible). Compatible: egocentric left and allocentric left (LL), or egocentric right and allocentric right (RR); incompatible: egocentric left but allocentric right (LR), or egocentric right but allocentric left (RL).

Crossed-hand task.

The visual display was exactly the same as in the main task. However, subjects were required to cross their arms and to use the opposite hand to press a key, i.e., the left hand pressed the right key and the right hand pressed the left key.

Single-hand task.

The visual display was exactly the same as in the main task, but subjects used only their right hand to press a key that was located on their sagittal midline. Subjects were instructed to respond to one allocentric representation (allocentric left or right) in a given session, and to the other allocentric representation in another session. Since the response location was on the midline, there was no spatial compatibility between stimulus and response. If the RT difference between the compatible and incompatible conditions had been caused purely by SRC, we should have seen no RT difference in this task even though we saw a difference in the main task.

Colored-cue task.

To render the spatial location of the big cue irrelevant to the task, we used a colored-cue task in which the time sequence and patterns of the visual display were the same as in the main task, but the bigger dot was either an isoluminant red or blue (17.64 ± 0.3 cd/m², measured by Konica Minolta LS-110). The subjects had to press the left key whenever the big dot was red and the right key when it was blue. In this task, although the spatial location of the target was task irrelevant, in half of the trials the spatial correlation between the target's representation and the response was the same as in our main task. If the RT difference between the compatible and incompatible stimulus locations in the main task was merely caused by stimulus-response compatibility, independent of the need for a spatial decision, we expected to see an RT difference in this task similar to that in our main task.

RT calculation

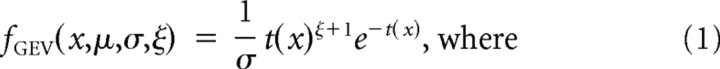

We excluded error trials (wrong key pressed) and fixation breaks (eyes moved out of the fixation window before the key press) from the analysis. The proportion of excluded trials was 2.34% (1467 of 62,720 trials). We also excluded outlier trials in which the RT differed by >3 SDs from the mean (1.49%, 913 of 61,253 for all the tasks). Because RT distributions are skewed rather than normal, we used a generalized extreme value (GEV) distribution model to fit the RT distribution data and calculated the mean RT in each allo- and egocentric location of visual stimuli (Guan et al., 2012). The GEV distribution is:

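$$
f(x;\mu,\sigma,\xi) = \frac{1}{\sigma}\left[1+\xi\left(\frac{x-\mu}{\sigma}\right)\right]^{-\frac{1}{\xi}-1}\exp\left\{-\left[1+\xi\left(\frac{x-\mu}{\sigma}\right)\right]^{-\frac{1}{\xi}}\right\}, \qquad 1+\xi\left(\frac{x-\mu}{\sigma}\right) > 0
$$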

In the GEV distribution, μ, σ, and ξ represent location, scale and skewness of the curve, respectively. The mean of this distribution was determined by:

$$
\mathrm{mean} = \mu + \sigma\,\frac{\Gamma(1-\xi)-1}{\xi}, \qquad \xi \neq 0,\ \xi < 1
$$
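The outlier criterion and the GEV mean can be sketched as follows. This is a minimal illustration under stated assumptions rather than the analysis code used in the study: the standard GEV density and mean are assumed, the 3 SD criterion is applied in a single pass with the sample SD, the parameters μ, σ, and ξ are taken as already fitted (the fitting step itself is not shown), and all function names and example values are hypothetical.

```python
import math
from statistics import mean, stdev

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant, needed when xi == 0


def drop_outliers(rts_ms, n_sd=3.0):
    """Keep RTs within n_sd sample SDs of the mean (single pass; an assumption)."""
    m, s = mean(rts_ms), stdev(rts_ms)
    return [rt for rt in rts_ms if abs(rt - m) <= n_sd * s]


def gev_pdf(x, mu, sigma, xi):
    """Standard GEV density with location mu, scale sigma, and shape xi."""
    z = (x - mu) / sigma
    if xi == 0:
        log_t = -z
    else:
        base = 1.0 + xi * z
        if base <= 0:        # outside the support of the distribution
            return 0.0
        log_t = -math.log(base) / xi
    if log_t > 700:          # exp(-t) underflows to zero here; avoids overflow
        return 0.0
    return math.exp((xi + 1) * log_t - math.exp(log_t)) / sigma


def gev_mean(mu, sigma, xi):
    """Mean of the GEV distribution; infinite when xi >= 1."""
    if xi >= 1:
        return math.inf
    if xi == 0:
        return mu + sigma * EULER_GAMMA
    return mu + sigma * (math.gamma(1 - xi) - 1) / xi


# Hypothetical fitted parameters in ms, for illustration only.
example_mean = gev_mean(mu=300.0, sigma=40.0, xi=0.1)
```

For a right-skewed distribution (ξ > 0) the GEV mean lies above the location parameter μ, which is why the mean rather than μ is reported as the RT at each location.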

Because the RT of the dominant hand often differs from non-dominant hand in humans (intrinsic RT difference) (Boulinguez et al., 2001), we set the compatible condition as a baseline condition in the main task. The intrinsic RT difference between the two hands was calculated by subtracting the mean RT of the right hand in the compatible condition from the mean RT of the left hand in the compatible condition of each subject. The postadjusted RTs were then calculated by subtracting the intrinsic RT difference from the RTs of left hand. The data presented were postadjusted, with the exception of “egocentric difference index” (EDI) analysis.

In the single-hand task, the subjects used only the right hand to press a key in response to the two opposite allocentric locations of the target in separate sessions. Therefore, there was no intrinsic RT difference between the two hands as in the other tasks. However, the general RT performance of subjects might have varied randomly across sessions, and this behavioral variation might confound the RT comparison between the two allocentric locations. To solve this problem, we set the RT in the compatible condition when the visual target was closest to the sagittal midline as the baseline in each session, and then calculated the intersession RT difference by subtracting the baseline RT in the allocentric right session from the baseline RT in the allocentric left session. The postadjusted mean RTs were computed by subtracting the intersession RT difference from the RTs in the allocentric left session.
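Both adjustments described above (the intrinsic hand difference and the intersession difference) amount to estimating an offset from two baseline conditions and subtracting it from one set of RTs. The sketch below illustrates this with arithmetic means for simplicity, whereas the study used GEV-based means; the function names and all RT values are hypothetical.

```python
from statistics import mean


def baseline_offset(baseline_rts_a, baseline_rts_b):
    """Mean RT of baseline set A minus mean RT of baseline set B (ms)."""
    return mean(baseline_rts_a) - mean(baseline_rts_b)


def subtract_offset(rts, offset):
    """Shift every RT in the list by the estimated offset."""
    return [rt - offset for rt in rts]


# Hypothetical example for the two-hand case: left-hand and right-hand RTs
# in the compatible (baseline) condition.
left_compatible = [318.0, 322.0]
right_compatible = [299.0, 301.0]
intrinsic = baseline_offset(left_compatible, right_compatible)

# Postadjusted left-hand RTs: the intrinsic difference is removed.
adjusted_left = subtract_offset([320.0, 350.0], intrinsic)
```

For the single-hand task the same two functions would apply, with the baseline sets taken from the allocentric left and allocentric right sessions at the location closest to the midline.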

Normalized measurements

To determine the effect of the compatible and incompatible conditions for a given egocentric location, we calculated a “compatibility difference index” (CDI) as the mean RT difference between incompatible and compatible trials for a given egocentric location, divided by the mean RT of all of a given subject's trials:

$$
\mathrm{CDI} = \frac{\overline{RT}_{\mathrm{incompatible}} - \overline{RT}_{\mathrm{compatible}}}{\overline{RT}_{\mathrm{all}}}
$$

To determine the effect of target eccentricity on either compatible or incompatible trials, we computed an “egocentric difference index” (EDI) by subtracting the mean RT at the egocentric location closest to the sagittal midline from the mean RT when the target was at an off-midline location, and dividing the result by the mean RT at the location closest to the midline, separately for compatible and incompatible trials.
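The two indices defined above can be written compactly as follows. This is a sketch that uses arithmetic means in place of the GEV-based means of the study; the function names and the example RTs are hypothetical.

```python
from statistics import mean


def cdi(incompatible_rts, compatible_rts, all_subject_rts):
    """Compatibility difference index at one egocentric location."""
    return (mean(incompatible_rts) - mean(compatible_rts)) / mean(all_subject_rts)


def edi(rts_at_location, rts_nearest_midline):
    """Egocentric difference index relative to the location closest to the midline."""
    reference = mean(rts_nearest_midline)
    return (mean(rts_at_location) - reference) / reference


# Hypothetical RTs (ms) for illustration only.
example_cdi = cdi([330.0, 350.0], [300.0, 320.0], [300.0, 320.0, 330.0, 350.0])
example_edi = edi([330.0, 350.0], [310.0, 330.0])
```

Both indices are dimensionless, so they can be compared across subjects whose overall RTs differ.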

Results

To make the comparison between different experiments more reliable, data from one subject (WX) are used as the example throughout this paper, even though in some experiments WX's data were not the best among the subjects. The results of statistical tests are shown with asterisks in figures (*p < 0.05, **p < 0.01, ***p < 0.001).

Reaction times are longer in the incompatible condition than in the compatible condition

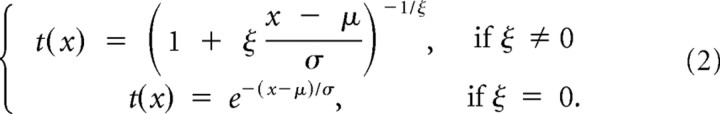

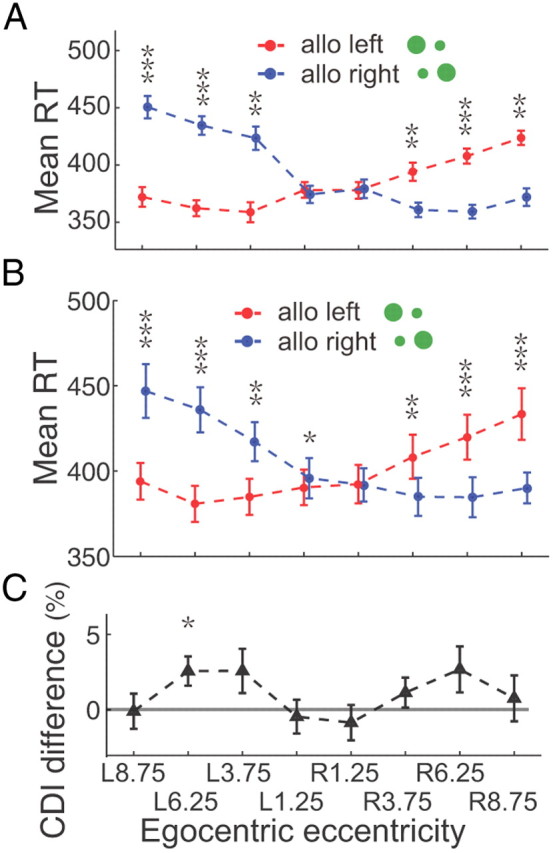

Figure 2A shows the profile of the RTs of an individual subject in the main task. RTs in the compatible condition (LL, RR) were significantly shorter than those in the incompatible condition (LR, RL) at the peripheral egocentric locations (the maximum p = 0.0395, Wilcoxon test). Also, RTs in the incompatible condition gradually increased as the target became more eccentric, whereas RTs in the compatible condition showed little change across eccentricities. Finally, the RT difference between compatible and incompatible conditions was greater in egocentric left than in egocentric right. The averaged population data (Fig. 2B) are consistent with the results of the example subject. The RT difference reached statistical significance at all 6 off-center locations (the maximum p = 0.0019, two-tailed t test). These results were confirmed by a two-way ANOVA (p = 0.0001, main effect, compatible vs incompatible).

Figure 2.

RTs in the main task. A, RTs from an example subject. Dots and short vertical bars represent the average RTs and the SEM, and are displayed as a function of the target's egocentric location (x-axis). The two allocentric representations are plotted in different colors: red for allocentric left and blue for allocentric right. B, The RTs of 10 subjects are shown in the same format as in A. Asterisks denote the results of statistical tests: *p < 0.05, **p < 0.01, ***p < 0.001.

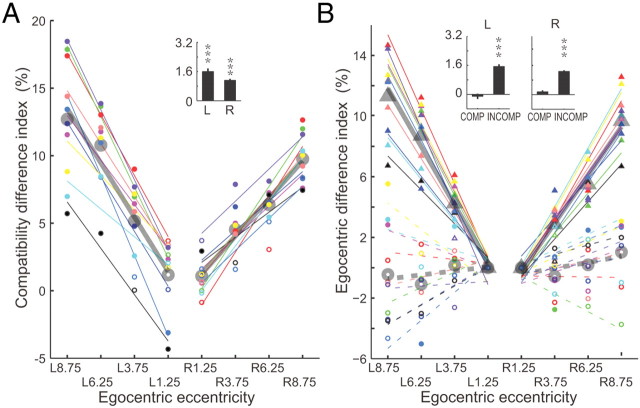

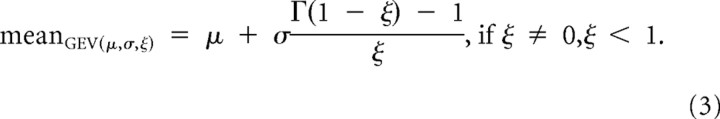

The magnitude of the RT affected the difference in RT between compatible and incompatible conditions among subjects. To eliminate a bias induced by the mean reaction time of a given subject, we normalized the RT difference between compatible and incompatible conditions for each allocentric position by dividing the difference by the mean reaction time for all trials, which we term the compatibility difference index (CDI). The CDI values increased with egocentric eccentricity and were well fitted by a least-squares linear regression against the target position (Fig. 3A). The values of the coefficient of determination (R²) ranged from 0.39 to 0.91, with a mean value of 0.82 in egocentric left and 0.69 in egocentric right, and the slopes of the fits for the population data (k = 1.608 in egocentric left and k = 1.116 in egocentric right) were significantly different from zero (p = 2.7981 × 10⁻⁶ for left; p = 4.5120 × 10⁻⁷ for right, two-tailed t test, see inset in Fig. 3A).
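The regression of CDI against target position can be illustrated with an ordinary least-squares fit that returns the slope k and R². This is a generic sketch, not the authors' fitting code; the function name is hypothetical, and the example values are constructed for illustration rather than taken from the study.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line fit; returns (slope, intercept, r_squared)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2 = 1 - residual sum of squares / total sum of squares
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Hypothetical CDI values at the four eccentricities of one hemifield (deg).
eccentricities = [1.25, 3.75, 6.25, 8.75]
cdi_values = [0.010, 0.035, 0.055, 0.085]
k, b, r2 = linear_fit(eccentricities, cdi_values)
```

A positive slope k indicates that the compatibility effect grows as the target moves away from the midline, and R² describes how well a straight line captures that growth.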

Figure 3.

CDI and EDI. A, CDI of 10 subjects. Symbols with the same color represent data from one subject. Filled circles indicate that the RT is significantly greater (p < 0.05, Wilcoxon test) in the incompatible condition than in the compatible condition, whereas open circles indicate the difference is not significant. Each subject's CDIs were fitted by a least-square linear regression shown with a matching color line. The thick gray circles and lines represent the population data and the linear regression fitting. B, EDI of 10 subjects. Circles depict EDIs in compatible condition and triangles represent EDIs in incompatible condition. Filled symbols indicate that the RT difference between the location closest to the sagittal midline and location more peripheral is significant (p < 0.05, Wilcoxon test) whereas the open symbols mean the difference is not significant (p > 0.05, Wilcoxon test). Thin colored lines represent the regression linear fitting for each subject, whereas thick gray lines depict fittings for the population data.

The effect of increasing target eccentricity on reaction time was limited to the incompatible case. We normalized the difference between the reaction time at a given location and the reaction time at the location closest to the sagittal midline separately for the compatible and incompatible cases, which we term the egocentric difference index (EDI, Fig. 3B), and regressed the normalized values against the target's egocentric position using a least-squares linear regression. For the incompatible case the fits were excellent for both individual subjects (R² between 0.7875 and 0.9586) and the population (R² = 0.88 in egocentric left and R² = 0.93 in egocentric right), and the slopes of the fits for the population data (k = 1.536 in left and k = 1.268 in right) were significantly different from zero (p = 1.0241 × 10⁻⁸ for left and p = 5.4371 × 10⁻⁷ for right, two-tailed t test; see inset histograms in Fig. 3B). In contrast, the distributions of EDI in the compatible condition were less consistent among subjects, with some subjects having negative slopes that were much smaller than in the incompatible case, and other subjects showing positive slopes. The population had a slight negative trend in egocentric left and a slight positive trend in egocentric right (R² = 0.49 in egocentric left and R² = 0.39 in egocentric right), but the slope of the population fit (k = −0.26 in left and k = 0.39 in right) was not different from zero (p = 0.4838 in left and p = 0.0917 in right, two-tailed t test).

Prolonged RT in the incompatible condition was not due to interhemispheric transfer

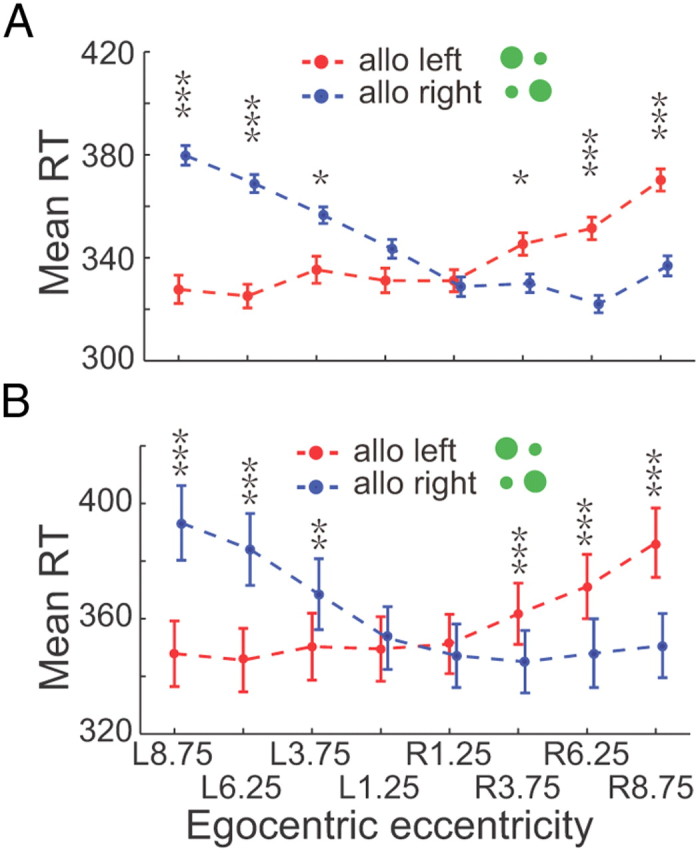

In the incompatible case the visual analysis is done by one hemisphere and the motor planning by the other, whereas both processes are done within one hemisphere in the compatible condition. Previous studies have shown that interhemispheric transfer has a cost of about 10 ms in reaction time (Velay et al., 2001), a much smaller difference than the one we found. Nevertheless, we did a control experiment to rule out the role of interhemispheric transfer in our data. We asked the subjects to cross their arms in front of their bodies and use their left hands to press the right key and vice versa. In this experiment the interhemispheric transfer takes place in the compatible condition but not in the incompatible condition. Despite the change in interhemispheric transfer, the incompatible case had significantly longer reaction times for both the single subject (the maximum p = 0.0044, Wilcoxon test, Fig. 4A) and the population (the maximum p = 0.0013 for egocentric left and 0.0406 for egocentric right, two-tailed t test, Fig. 4B). Reaction times were longer on average in the crossed-hand task, but there was no consistent difference in the effect of target eccentricity on the CDI. Figure 4C shows the averaged population CDI difference between these two tasks. The CDI difference was computed by subtracting the CDI in the uncrossed-hand task (main task) from the CDI in the crossed-hand task. While 1 of 8 data points showed a significant difference from zero (p = 0.0368, two-tailed t test), the other 7 data points were not different from zero (the minimum p = 0.1347, t test). Furthermore, a three-way ANOVA (task, condition, and target eccentricity) confirmed that the task (crossed-hand vs uncrossed-hand) did not have a significant influence on the CDI of reaction time between the two conditions (compatible vs incompatible) in the population data (p = 0.4525).
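The CDI difference between tasks and its test against zero can be sketched as below. The example assumes a one-sample t statistic on per-subject differences at a single egocentric location, which is one plausible reading of the test described above; it returns the t value and degrees of freedom only (converting them to a p value requires the t distribution, which is not implemented here). Function names and values are hypothetical.

```python
import math
from statistics import mean, stdev


def cdi_differences(cdi_crossed, cdi_uncrossed):
    """Per-subject CDI in the crossed-hand task minus CDI in the main task."""
    return [c - u for c, u in zip(cdi_crossed, cdi_uncrossed)]


def one_sample_t(values, mu0=0.0):
    """t statistic and degrees of freedom for H0: population mean equals mu0."""
    n = len(values)
    standard_error = stdev(values) / math.sqrt(n)
    return (mean(values) - mu0) / standard_error, n - 1


# Hypothetical per-subject CDIs at one egocentric location.
crossed = [0.06, 0.04, 0.07, 0.05, 0.03]
uncrossed = [0.05, 0.06, 0.07, 0.02, 0.04]
t_value, dof = one_sample_t(cdi_differences(crossed, uncrossed))
```

A t value near zero, as in this constructed example, would correspond to the case reported for 7 of the 8 locations, where crossing the hands did not change the CDI.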

Figure 4.

RT in the Crossed-hand task. A, The example subject's averaged RTs with SEM are plotted in red (allocentric left) and blue (allocentric right) against the target's egocentric location. RTs are significantly shorter in the compatible condition than in the incompatible condition at 6 peripheral egocentric locations (the maximum p = 0.0044, Wilcoxon test). B, The average RTs of 9 subjects. The population data show significantly shorter RTs in the compatible condition than in the incompatible condition at 7 of 8 egocentric locations (the maximum p = 0.0013 for egocentric left and 0.0406 for egocentric right, two-tailed t test). C, CDI difference between the crossed-hand task and the uncrossed-hand (main) task. The CDI differences are close to 0 at 7 of 8 egocentric locations (the minimum p = 0.1347, two-tailed t test).

Smaller RT in the compatible condition was not only due to stimulus-response compatibility

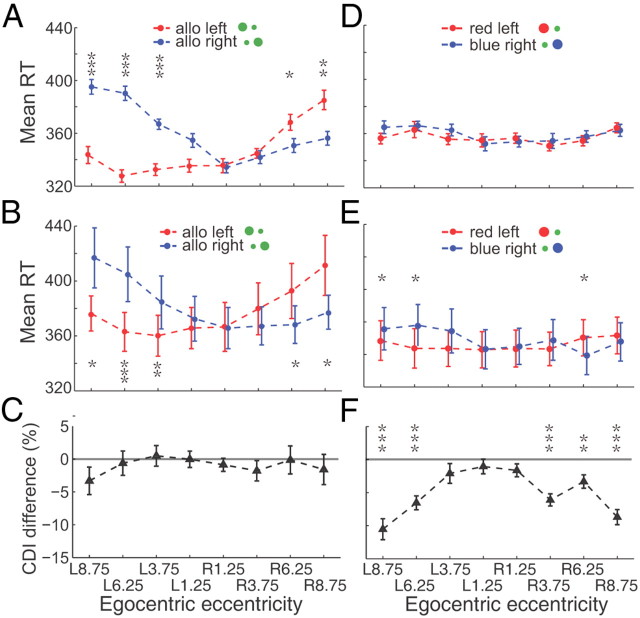

There are similarities in the behavioral protocol between our position discrimination task and the conventional stimulus-response compatibility task. In both tasks subjects are required to press either the left key or the right key based on a spatial property of a visual target. The compatible condition in our main task resembles the compatible condition in the stimulus-response compatibility task, whereas the incompatible condition is a kind of stimulus-response incompatible condition. Could the stimulus-response compatibility theory fully explain our data? Although the RT asymmetry between the two egocentric hemifields argues against the SRC theory, we did two control experiments that broke down the spatial correlation between stimulus and response. The first was the “Single-hand task,” in which the visual display was exactly the same as in the main task, but with a different response rule. In a given block, the subject was required to use only his/her right hand to press the central key, which was located on the sagittal midline, when the target was in one allocentric location (e.g., allocentric right) and to withhold the response for the opposite allocentric location (e.g., allocentric left). In the next block the response rule was reversed (pressing the key only if the visual target was in allocentric left). There was no spatial compatibility between stimulus and hand movement in this task. The results in the Single-hand task were similar to those in the main task, both for the single subject (Fig. 5A) and across the population (Fig. 5B). The example subject's RTs in the compatible condition were markedly shorter than those in the incompatible condition at 5 peripheral eccentricities in both egocentric hemifields (the maximum p = 0.0291, Wilcoxon test). The population data from the 9 tested subjects showed consistent results (the maximum p = 0.0331, two-tailed t test). RTs in the single-hand task were ∼30 ms longer than in the main task (compare Fig. 2B with Fig. 5B), but the CDI was similar between the two tasks at all target positions (the minimum p = 0.1705, two-tailed t test, Fig. 5C). Furthermore, a three-way ANOVA (task, condition, and target eccentricity) confirmed that the task (single-hand task vs main task) did not have a significant influence on the RT difference between the two conditions (p = 0.8951).

Figure 5.

RT in the Single-hand task and in the Colored-cue task. A, The example subject's RTs in the Single-hand task. The RTs in the compatible condition are significantly shorter than in the incompatible condition at 5 peripheral egocentric locations (the maximum p = 0.0291, Wilcoxon test). B, Population data of the 9 tested subjects show significantly shorter RTs in the compatible condition than in the incompatible condition at 5 peripheral egocentric locations (the maximum p = 0.0331, two-tailed t test). C, The CDI difference between the Single-hand task and the Main task. At all egocentric locations, CDI differences are close to 0 (the minimum p = 0.1705, two-tailed t test). D, The example subject's RTs in the Colored-cue task. The RT difference between compatible and incompatible conditions becomes markedly smaller and does not reach significance (the minimum p = 0.2224, two-tailed t test) at any egocentric location. E, The averaged RT data of 9 subjects. The RT difference between the compatible and incompatible conditions is smaller, and it reaches significance at only 3 of 8 egocentric locations (the maximum p = 0.0427, two-tailed t test). F, The CDI difference between the main task and the colored-cue task. CDI differences are smaller than 0 at all egocentric locations and reach significance at 5 of 8 locations (the maximum p = 0.0097, two-tailed t test).

The second control experiment was the “Colored-cue task,” in which the spatial configuration of the cue was the same as in the main task except that the color of the big dot was either blue or red. In this task, the color, not the allocentric representation of the big dot, instructed the subject's response: red meant press the left key using the left hand; blue meant press the right key with the right hand. In this task both the allocentric and egocentric locations of the target were task-irrelevant. Nevertheless, depending upon the spatial relationship of the two dots, there were trials in which the spatial configuration of the target was compatible, and others in which it was incompatible, as in the main task. In the Colored-cue task, when the subject did not have to make a spatial judgment, the RT difference between the compatible and incompatible conditions was not significant for the example subject (the minimum p = 0.2224, Wilcoxon test, Fig. 5D). For the population data (Fig. 5E), there were small but significant (the maximum p = 0.0427, two-tailed t test) differences at 3 of 8 locations, but no significant differences by two-way ANOVA (condition and target eccentricity, p = 0.395). The values of the CDI difference were smaller than 0 at all 8 eccentricities, and the CDI difference was significantly <0 at 5 of 6 peripheral locations (the maximum p = 0.0097, two-tailed t test). A three-way ANOVA showed a significant interaction between task and condition (p = 0.0274). The results from these two control experiments indicate that stimulus-response compatibility or incompatibility is not the major cause of the RT difference between the compatible and incompatible conditions in our study.

Interhemispheric asymmetry in the integration of two coordinates

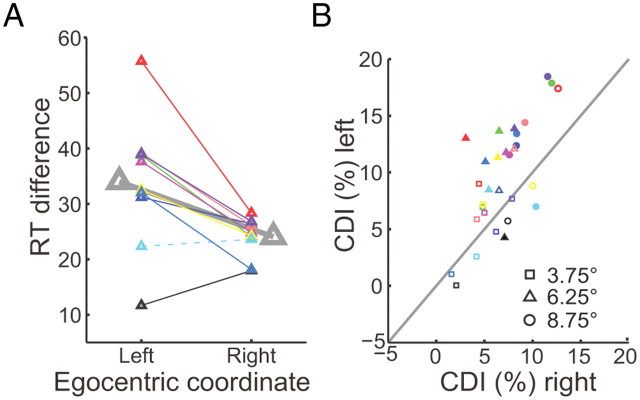

The influence of egocentric representation on allocentric perception also differed between the two egocentric hemifields. Stimuli in the left hemifield evoked slower reaction times in the incompatible condition than those in the right hemifield. Figure 6A shows the mean RT difference at peripheral locations on the two egocentric sides. Eight of 10 subjects had significantly larger RT differences in the egocentric left visual field than in the egocentric right (the maximum p = 0.0453, Wilcoxon test). One subject showed the opposite result, i.e., a larger RT difference in egocentric right (p = 0.0092, Wilcoxon test), while another subject did not show a significant RT difference (p = 0.9544, Wilcoxon test) between egocentric left and right. The paired CDI values at equal eccentricity on the two egocentric sides are compared for each subject (Fig. 6B). Most data points are biased toward the egocentric left side. These results indicate that the two hemispheres asymmetrically integrate ego- and allocentric spatial information.

Figure 6.

Comparing CDI between egocentric left and right. A, Small triangles represent the mean RT difference between compatible and incompatible conditions for individual subjects, and big triangles represent the population data. Solid lines indicate that the RT difference between egocentric left and right is significant (p < 0.05, Wilcoxon test), whereas the dashed line indicates that it is not. B, Comparison of each subject's CDIs between egocentric left and right at equal eccentricity. Different symbols represent different egocentric positions of the target. Filled symbols indicate that the CDI difference reaches significance (p < 0.05, two-tailed t test), whereas open symbols indicate that it does not.

Discussion

In the present study, we showed that when human subjects were asked to detect a visual target's allocentric location, the reaction time became longer if the target's representations in ego- and allocentric coordinates were incompatible. This difference was not due to interhemispheric transformation because in the “Crossed-hand task,” despite the longer visuomotor pathway in the compatible condition, subjects still showed significantly shorter RTs in that condition. Also, this difference depended upon the subjects having to make a spatial decision, because when subjects merely had to report the color of the target regardless of its spatial representation (Colored-cue task), there was no reaction time difference between the compatible and incompatible conditions. Finally, this difference was not merely a result of stimulus-response compatibility, because in the Single-hand task the RT was still significantly longer in the incompatible condition than in the compatible condition even though there was no stimulus-response compatibility. Altogether, our data indicate that each hemisphere preferentially integrates and processes contralateral ego- and allocentric information, with the right hemisphere receiving more ipsilateral egocentric inputs than the left hemisphere does.

Possible explanations for RT difference between compatible and incompatible cases

There are several possible causes of the RT difference between the compatible and incompatible cases in our experiment. First, the different eccentricity of the target between the two opposite allocentric locations at a given egocentric location might cause the RT difference. Reaction time increases with retinal eccentricity (Hufford, 1964; Payne, 1966). However, the geometry of the experiment forces the target always to be farther from the central fixation point in the compatible condition than in the incompatible condition (Fig. 1A), so an eccentricity account would predict longer RTs in the compatible condition, the opposite of what we observed. Therefore, the RT difference was not simply due to the different eccentricity of the target.

Second, interhemispheric transfer may have lengthened the reaction time in the incompatible case because the progression from stimulus to response was completed within one hemisphere in compatible condition but across two hemispheres in the incompatible condition. We tested this possibility by asking subjects to cross their arms (right hand pressing left key, and left hand pressing right key) to perform the position discrimination task. In this case, the interhemispheric transfer happened in the compatible condition but not in the incompatible condition, but the RTs were similar to the case when the hands were uncrossed, so interhemispheric transfer could not explain our results.

Third, the results might have been explained by the advantage conferred when the stimulus and the motor response were in the same egocentric field. In both our main task and the conventional stimulus-response compatibility task, subjects were required to press either the left key or the right key based on the spatial instruction of a visual target. Fitts and Deininger (1954) discovered that subjects' performance was faster and more accurate when stimulus and response were compatible in space than when they were incompatible. To rule out the importance of stimulus-response compatibility in our study, we measured the subjects' manual RT in a control experiment in which the subjects had to press a button on the sagittal midline, thus eliminating the problem of spatial compatibility with the target. Nonetheless, all subjects still showed shorter RTs in the compatible condition than in the incompatible condition, which indicated that stimulus-response compatibility was not the major cause of the RT difference in our study.

Comparison between previous studies and the present study

Previous studies have found an influence of allocentric representation on the accuracy of spatial judgments in egocentric coordinates, but no evidence has been found for the reverse interaction (Roelofs, 1935; Bridgeman et al., 1997; Neggers et al., 2005). Based on these findings, Neggers and his colleagues proposed that the interaction between ego- and allocentric coordinates was unidirectional: allocentric representation influences egocentric perception, but not vice versa. In the present study, however, we found that task-irrelevant egocentric representation could markedly influence the process of allocentric perception. Two possible reasons could explain these different observations. First, different measures of spatial perception were studied: spatial accuracy in the previous studies, and the time course of spatial perception in our study. Second, the visual stimuli were close to the central fixation point (<3° visual angle) in previous studies, whereas the farthest location of the visual stimuli in our study was 8.75°. Furthermore, our results showed that the farther the target was from the central fixation point, the more egocentric representation influenced the process of allocentric perception. When the target was closest to the midline of the subject's body (1.25° to the left or right), most subjects did not show a significant RT difference between the two allocentric locations. The influence of egocentric representation on the process of allocentric perception might therefore occur only at peripheral locations.

Hemispheric asymmetry in egocentric processing

A striking result of our study is that the incompatible representation of the target between allo- and egocentric coordinates is processed more efficiently in the right visual field than in the left. It has long been known that each hemisphere of the human brain preferentially processes contralateral egocentric spatial information (Tootell et al., 1982). More recently, supplementary eye field neurons coding the object-centered direction of saccades were found to be distributed mainly in the contralateral hemisphere (Olson and Gettner, 1995), which indicates that each hemisphere mainly processes contralateral allocentric representation (Olson and Gettner, 1996).

While each hemisphere preferentially receives and processes contralateral ego- and allocentric spatial information, it also receives and processes some ipsilateral egocentric spatial information from locations near the midline. It has been reported that the ipsilateral representation in area LIP of monkeys is restricted to within ∼5° of the vertical meridian (Ben Hamed et al., 2001). The farther a location is from the center of vision, the less ipsilateral input is received. The similar RTs in the compatible condition between egocentric left and right (Fig. 2) indicate that each hemisphere preferentially and equally receives and processes spatial information from contralateral ego- and allocentric coordinates. However, the difference between the hemispheres in the incompatible condition may be due to an asymmetry between the two hemispheres in receiving and processing ipsilateral egocentric information: the right hemisphere may receive more ipsilateral inputs than the left hemisphere does. Such an asymmetry would be consistent with the clinical pattern of hemineglect. After a lesion in the left frontoparietal cortex, the right hemisphere could get sufficient visual input from the right visual field to compensate for the impaired spatial function of the left hemisphere, so that there is no severe right hemineglect. Conversely, after a lesion in the right frontoparietal cortex, the left hemisphere could not get enough visual input from the left visual field to compensate for the impaired spatial function of the right hemisphere, so that patients show severe left hemineglect.

Footnotes

This study was supported by the following foundations: The Hundred Talent Program, Chinese Academy of Sciences; Pujiang Program, Shanghai government; and the State Key Laboratory of Neuroscience, Chinese government. We especially thank Dr. Michael E. Goldberg for his help with manuscript preparation. We also thank Dr. Sara Steenrod and Dr. Suresh Krishna for their helpful comments.

The authors declare no competing financial interests.

References

- Andersen RA. Encoding of intention and spatial location in the posterior parietal cortex. Cereb Cortex. 1995;5:457–469. doi: 10.1093/cercor/5.5.457.

- Ben Hamed S, Duhamel JR, Bremmer F, Graf W. Representation of the visual field in the lateral intraparietal area of macaque monkeys: a quantitative receptive field analysis (Retracted Article. See vol 146, pg 127, 2002). Exp Brain Res. 2001;140:127–144. doi: 10.1007/s002210100785.

- Boulinguez P, Nougier V, Velay JL. Manual asymmetries in reaching movement control. I: Study of right-handers. Cortex. 2001;37:101–122. doi: 10.1016/s0010-9452(08)70561-6.

- Bridgeman B, Peery S, Anand S. Interaction of cognitive and sensorimotor maps of visual space. Percept Psychophys. 1997;59:456–469. doi: 10.3758/bf03211912.

- Burgess N. Spatial memory: how egocentric and allocentric combine. Trends Cogn Sci. 2006;10:551–557. doi: 10.1016/j.tics.2006.10.005.

- Carey DP. Eye-hand coordination: eye to hand or hand to eye? Curr Biol. 2000;10:R416–R419. doi: 10.1016/s0960-9822(00)00508-x.

- Connolly JD, Andersen RA, Goodale MA. FMRI evidence for a ‘parietal reach region’ in the human brain. Exp Brain Res. 2003;153:140–145. doi: 10.1007/s00221-003-1587-1.

- Dean HL, Platt ML. Allocentric spatial referencing of neuronal activity in macaque posterior cingulate cortex. J Neurosci. 2006;26:1117–1127. doi: 10.1523/JNEUROSCI.2497-05.2006.

- Fitts PM, Deininger RL. S-R compatibility: correspondence among paired elements within stimulus and response codes. J Exp Psychol. 1954;48:483–492. doi: 10.1037/h0054967.

- Galati G, Lobel E, Vallar G, Berthoz A, Pizzamiglio L, Le Bihan D. The neural basis of egocentric and allocentric coding of space in humans: a functional magnetic resonance study. Exp Brain Res. 2000;133:156–164. doi: 10.1007/s002210000375.

- Galati G, Pelle G, Berthoz A, Committeri G. Multiple reference frames used by the human brain for spatial perception and memory. Exp Brain Res. 2010;206:109–120. doi: 10.1007/s00221-010-2168-8.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Gramann K, Müller HJ, Schönebeck B, Debus G. The neural basis of ego- and allocentric reference frames in spatial navigation: evidence from spatio-temporal coupled current density reconstruction. Brain Res. 2006;1118:116–129. doi: 10.1016/j.brainres.2006.08.005.

- Guan S, Liu Y, Xia R, Zhang M. Covert attention regulates saccadic reaction time by routing between different visual-oculomotor pathways. J Neurophysiol. 2012;107:1748–1755. doi: 10.1152/jn.00082.2011.

- Hufford LE. Reaction time and the retinal area-stimulus intensity relationship. J Opt Soc Am. 1964;54:1368–1373. doi: 10.1364/josa.54.001368.

- Kalaska JF, Crammond DJ. Cerebral cortical mechanisms of reaching movements. Science. 1992;255:1517–1523. doi: 10.1126/science.1549781.

- Medendorp WP, Goltz HC, Crawford JD, Vilis T. Integration of target and effector information in human posterior parietal cortex for the planning of action. J Neurophysiol. 2005;93:954–962. doi: 10.1152/jn.00725.2004.

- Moorman DE, Olson CR. Impact of experience on the representation of object-centered space in the macaque supplementary eye field. J Neurophysiol. 2007;97:2159–2173. doi: 10.1152/jn.00848.2006.

- Neggers SFW, Scholvinck ML, van der Lubbe RHJ, Postma A. Quantifying the interactions between allo- and egocentric representations of space. Acta Psychol. 2005;118:25–45. doi: 10.1016/j.actpsy.2004.10.002.

- Neggers SFW, Van der Lubbe RHJ, Ramsey NF, Postma A. Interactions between ego- and allocentric neuronal representations of space. Neuroimage. 2006;31:320–331. doi: 10.1016/j.neuroimage.2005.12.028.

- Olson CR. Brain representation of object-centered space in monkeys and humans. Annu Rev Neurosci. 2003;26:331–354. doi: 10.1146/annurev.neuro.26.041002.131405.

- Olson CR, Gettner SN. Object-centered direction selectivity in the macaque supplementary eye field. Science. 1995;269:985–988. doi: 10.1126/science.7638625.

- Olson CR, Gettner SN. Brain representation of object-centered space. Curr Opin Neurobiol. 1996;6:165–170. doi: 10.1016/s0959-4388(96)80069-9.

- Payne WH. Reaction time as a function of retinal location. Vision Res. 1966;6:729–732. doi: 10.1016/0042-6989(66)90085-x.

- Roelofs C. Optische localization. Archives fur Augenheilkunde. 1935;109:395–415.

- Tootell RB, Silverman MS, Switkes E, De Valois RL. Deoxyglucose analysis of retinotopic organization in primate striate cortex. Science. 1982;218:902–904. doi: 10.1126/science.7134981.

- Velay JL, Daffaure V, Raphael N, Benoit-Dubrocard S. Hemispheric asymmetry and interhemispheric transfer in pointing depend on the spatial components of the movement. Cortex. 2001;37:75–90. doi: 10.1016/s0010-9452(08)70559-8.

- Ward R, Arend I. An object-based frame of reference within the human pulvinar. Brain. 2007;130:2462–2469. doi: 10.1093/brain/awm176.

- Zaehle T, Jordan K, Wüstenberg T, Baudewig J, Dechent P, Mast FW. The neural basis of the egocentric and allocentric spatial frame of reference. Brain Res. 2007;1137:92–103. doi: 10.1016/j.brainres.2006.12.044.