Abstract

Differential Privacy (DP) has received increasing attention as a rigorous privacy framework. Many existing studies employ traditional DP mechanisms (e.g., the Laplace mechanism) as primitives to continuously release private data for protecting privacy at each time point (i.e., event-level privacy), which assume that the data at different time points are independent, or that adversaries do not have knowledge of correlation between data. However, continuously generated data tend to be temporally correlated, and such correlations can be acquired by adversaries. In this paper, we investigate the potential privacy loss of a traditional DP mechanism under temporal correlations. First, we analyze the privacy leakage of a DP mechanism under temporal correlation that can be modeled using Markov Chain. Our analysis reveals that, the event-level privacy loss of a DP mechanism may increase over time. We call the unexpected privacy loss temporal privacy leakage (TPL). Although TPL may increase over time, we find that its supremum may exist in some cases. Second, we design efficient algorithms for calculating TPL. Third, we propose data releasing mechanisms that convert any existing DP mechanism into one against TPL. Experiments confirm that our approach is efficient and effective.

Keywords: Differential privacy, correlated data, Markov model, time series, streaming data

1. INTRODUCTION

With the development of IoT technology, vast amount of temporal data generated by individuals are being collected, such as trajectories and web page click streams. The continual publication of statistics from these temporal data has the potential for significant social benefits such as disease surveillance, real-time traffic monitoring and web mining. However, privacy concerns hinder the wider use of these data. To this end, differential privacy under continual observation [1], [2], [3], [4], [5], [6], [7], [8] has received increasing attention because differential priavcy provides a rigorous privacy guarantee. Intuitively, differential privacy (DP) [9] ensures that the change of any single user’s data has a “slight” (bounded in ϵ) impact on the change in outputs. The parameter ϵ is defined to be a positive real number to control the level of privacy guarantee. Larger values of ϵ result in larger privacy leakage. However, most existing works on differentially private continuous data release assume data at different time points are independent, or attackers do not have knowledge of correlation between data. Example 1 shows that temporal correlations may degrade the expected privacy guarantee of a DP mechanism.

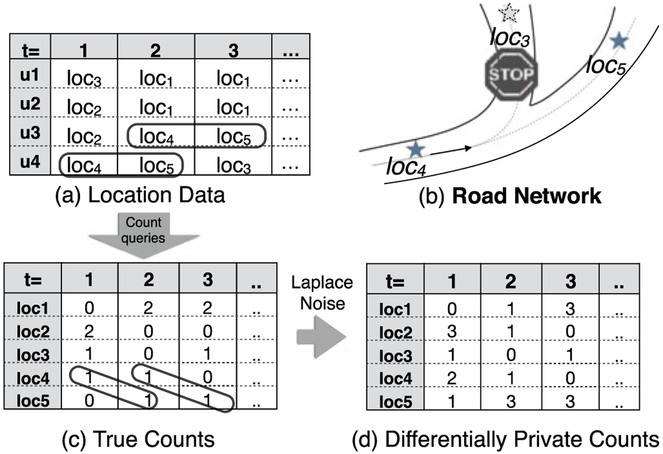

Example 1. Consider the scenario of continuous data release with DP illustrated in Fig. 1. A trusted server collects users’ locations at each time point in Fig. 1a and tries to continuously publish differentially private aggregates (i.e., the private counts of people at each location). Suppose that each user appears at only one location at each time point. According to the Laplace mechanism [10], adding Lap(2/ϵ) noise1 to perturb each count in Fig. 1c can achieve ϵ-DP at each time point. It is because the modification of each cell (raw data) in Fig. 1a affects at most two cells (true counts) in Fig. 1c, i.e., the global sensitivity is 2. However, it may not be true under the existence of temporal correlations. For example, due to the nature of road networks, user may have a particular mobility pattern such as “always arriving at loc5 after visiting loc4” (shown in Fig. 1b), leading to the patterns illustrated in solid lines of Figs. 1a and 1c. Such temporal correlation can be represented as Pr(lt = loc5|lt−1 = loc4) = 1 where lt is the location of a user at time t. In this case, adding Lap(2/ϵ) noise only achieves 2ϵ-DP because the change of one cell in Fig. 1a may affect four cells in Fig. 1c in the worst case, i.e., the global sensitivity is 4.

Fig. 1.

Continuous data release with DP under temporal correlations.

Few studies in the literature investigated such potential privacy loss of event-level ϵ-DP under temporal correlations as shown in Example 1. A direct method (without finely utilizing the probability of correlation) involves amplifying the perturbation in terms of group differential privacy [10], [11], i.e., protecting the correlated data as a group. In Example 1, for temporal correlation Pr(lt = loc5|lt−1 = loc4) = 1, we can protect the counts of loc4 at time t − 1 and loc5 at time t in a group by increasing the scale of the perturbation (using Lap(4/ϵ) at each time point. However, this technique is not suitable for probabilistic correlations to finely prevent privacy leakage and may over-perturb the data as a result. For example, regardless of whether Pr(lt = loc5|lt−1 = loc4) = 1 is 1 or 0.1, it always protects the correlated data in a bundle.

Although a few studies investigated the issue of differential privacy under probabilistic correlations, existing works are not appropriate for continuous data release because of two reasons. First, most of existing works focused on correlations between users (i.e., user-user correlation) rather than correlation between data at different time points (i.e., temporal correlations). Yang et al. [12] proposed Bayesian differential privacy, which measures the privacy leakage under probabilistic correlations between users using a Gaussian Markov Random Field without taking time factor into account. Liu et al. [13] proposed dependent differential privacy by introducing two parameters of dependence size and probabilistic dependence relationship between tuples. It is not clear whether we can specify these parameters for temporally correlated data since it is not commonly used probabilistic model. A concurrent work [14] proposed Markov Quilt Mechanism when data correlation is represented by a Bayesian Network (BN). Although BN is possible to model both user-user correlation (when the nodes in BN are individuals) and temporal correlation (when the nodes in BN are individual data at different time points), [17] assumes the private data are released only at one time. This is the second major difference between our work and all above works: they focus on the setting of “one-shot” data release, which means the private data are released only at one time. To the best of our knowledge, no study reported the dynamical change of the privacy guarantee in continuous data release.

In this work, we call the adversary with knowledge of probabilistic temporal correlations . Rigorously quantifying and bounding the privacy leakage against in continuous data release remains a challenge. Therefore, our goal is to solve the following problems:

How do we analyze the privacy loss of DP mechanisms against ? (Section 3)

How do we calculate such privacy loss efficiently? (Section 4)

How do we bound such privacy loss in continuous data release? (Section 5)

1.1. Contributions

Our contributions are summarized as follows.

First, we show that the privacy guarantee of data release at a single time is not on its own or static; instead, due to the existence of temporal correlation, it may be increasing with previous release and even future release. We first adopt a commonly used model Markov Chain to describe temporal correlations between data at different time points, which includes backward and forward correlations, i.e., and where denotes the value (e.g., location) of user i at time t. We then define Temporal Privacy Leakage (TPL) as the privacy loss of a DP mechanism at time t against who has knowledge of the above Markov model and observes the continuous data release. We show that TPL includes two parts: Backward Privacy Leakage (BPL) and Forward Privacy leakage (FPL). Intuitively, BPL at time t is affected by previously releases that are from time 1 to t − 1, and FPL at time t will be affected by future releases that are from time t + 1 to the end of release. We define α-differential privacy under temporal correlation, denoted as , to formalize the privacy guarantee of a DP mechanism against , which implies the temporal privacy leakage should be bounded in α. We prove a new form of sequential composition theorem for , which reveals interesting connections among event-level DP, w-event DP [7] and user-level DP [4], [5].

Second, we design efficient algorithms to calculate TPL under given backward and forward temporal correlations. Our idea is to transform such calculation into finding an optimal solution of a Linear-Fractional Programming problem. This type of optimization can be solved by well-studied methods, e.g., simplex algorithm, in exponential time. By exploiting the constraints of this problem, we propose fast algorithms to obtain the optimal solution without directly solving the problem. Experiments show our algorithms outperform the off-the-shelf optimization software (CPLEX) by several orders of magnitude with the same optimal answer.

Third, we design novel data release mechanisms against TPL effectively. Our scheme is to carefully calibrate the privacy budget of the traditional DP mechanism at each time point to make them satisfy . A challenge is that TPL is dynamically changing due to previous and even future allocated privacy budgets so that is hard to achieve. In our first solution, we prove that, even though TPL may increase over time, its supremum may exist when allocating a calibrated privacy budget at each time. That is to say, TPL will never be greater than such supremum α no matter when the release ends. However, when the releases are too short (TPL is far from reaching its supremum), we may over-perturb the data. In our second solution, we design another budget allocation scheme that exactly achieves at each time point.

Finally, experiments confirm the efficiency and effectiveness of our TPL quantification algorithms and data release mechanisms. We also demonstrate the impact of different degree of temporal correlations on privacy leakage.

2. PRELIMINARIES

2.1. Differential Privacy

Differential privacy[9] is a formal definition of data privacy. Let D be a database and D′ be a copy of D that is different in any one tuple. D and D′ are neighboring databases. A differentially private output from D or D′ should exhibit little difference.

Definition 1 (ϵ-DP). is a randomized mechanism that takes as input D and outputs r, i.e.,. satisfies ϵ-differential privacy if the following inequality is true for any pair of neighboring databases D, D′ and all possible outputs r

| (1) |

The parameter ϵ, called the privacy budget, represents the degree of privacy offered. A commonly used method to achieve ϵ-DP is the Laplace mechanism as shown below.

Theorem 1 (Laplace Mechanism). Let be a statistical query on database D. The sensitivity of Q is defined as the maximum L1 norm between Q(D) and Q(D′), i.e., Δ = maxD,D′ ‖Q(D) – Q(D′)‖1. We can achieve ϵ-DP adding Laplace noise with scale Δ/ϵ, i.e., Lap(Δ/ϵ).

2.2. Privacy Leakage

In this section, we define the privacy leakage of a DP mechanism against a type of adversaries Ai, who targets user i’s value in the database, i.e., li ∈ [loc1, …, locn], and has knowledge of . The adversary Ai observes the private output r and attempts to distinguish whether user i’s value is locj or lock where locj, lock ∈ [loc1, …, locn]. We define the privacy leakage of a DP mechanism w.r.t. such adversaries as follows.

Definition 2. The privacy leakage of a DP mechanism against one Ai with a specific i and all Ai, i ∈ U are defined, respectively, as follows in which li and are two different possible values of ith data in the database

In other words, the privacy budget of a DP mechanism can be considered as a metric of privacy leakage. The larger ϵ, the larger the privacy leakage. We note that a ϵ′-DP mechanism automatically satisfies ϵ-DP if ϵ′ < ϵ. For convenience, in the rest of this paper, when we say that satisfies ϵ-DP, we mean that the supremum of privacy leakage is ϵ.

2.3. Problem Setting

We attempt to quantify the potential privacy loss of a DP mechanism under temporal correlations in the context of continuous data release (e.g., releasing private counts at each time as shown in Fig. 1). For the convenience of analysis, let us assume the length of release time is T. Note that we do not need to know the exact T in this paper. Users in the database, denoted by U, are generating data continuously. Let loc = {loc1, …, locn} be all possible values of user’s data. We denote the value of user i at time t by . A trusted server collects the data of each user into the database at each time t (e.g., the columns in Fig. 1a). Without loss of generality, we assume that each user contributes only one data point in Dt. DP mechanisms release differentially private outputs rt independently at different time t. For simplicity, we let to be the same DP mechanism but may with different privacy budgets at each t ∈ [1, T]. Our goal is to quantify and bound the potential privacy loss of against adversaries with knowledge of temporal correlations. We note that while we use location data in Example 1, the problem setting is general for temporally correlated data. We summarize notations used in this paper in Table 1.

TABLE 1.

Summary of Notations

| U | The set of users in the database |

| i | The ith user where i ∈ [1, |U|] |

| loc | Value domain {loc1, …, locn} of all user’s data |

| , | The data of user i at time t, , |

| Dt | The database at time t, |

| Differentially private mechanism over Dt | |

| rt | Differentially private output at time t |

| Ai | Adversary who targets user i without temporal correlations |

| Adversary Ai with temporal correlations | |

| Transition matrix that represents , i.e., backward temporal correlation, known to | |

| Transition matrix that represents , i.e., forward temporal correlation, known to |

|

| The subset of database known to |

Our problem setting is identical to differential privacy under continual observation in the literature [1], [2], [3], [4], [5], [6], [7], [8]. In contrast to “one-shot” data release over a static database, the attackers can observe multiple private outputs, i.e., r1, …, rt. There are typically two different privacy goals in the context of continuous data release: event-level and user-level [4], [5]. The former protects each user’s single data point at time t (i.e., the neighboring databases are Dt and Dt′), whereas the latter protects the presence of a user with all her data on the timeline (i.e., the neighboring databases are {D1, …, Dt} and {D1′, …, Dt′}). In this work, we start from examining event-level DP under temporal correlations, and we also extend the discussion to user-level DP by studying the sequential composability of the privacy leakage.

3. Analyzing Temporal Privacy Leakage

3.1. Adversay with Knowledge of Temporal Correlations

Markov Chain for Temporal Correlations.

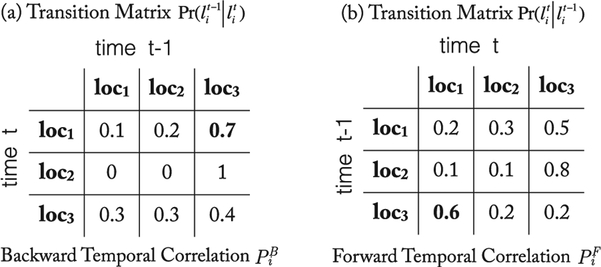

The Markov chain (MC) is extensively used in modeling user mobility. For a time-homogeneous first-order MC, a user’s current value only depends on the previous one. The parameter of the MC is the transition matrix, which describes the probabilities for transition between values. The sum of the probabilities in each row of the transition matrix is 1. A concrete example of transition matrix and time-reversed one for location data is shown in Fig. 2. As shown in Fig. 2a, if user i is at loc1 now (time t); then, the probability of coming from loc3 (time t − 1) is 0.7, namely, . As shown in Fig. 2b, if user i at loc3 at the previous time t − 1, then the probability of being at loc1 now (time t) is 0.6; namely, . We call the transition matrices in Figs. 2a and 2b as backward temporal correlation and forward temporal correlation, respectively.

Fig. 2.

Examples of temporal correlations.

Definition 3 (Temporal Correlations). The backward and forward temporal correlations between user i’s data and are described by transition matrices , representing and , respectively.

It is reasonable to consider that the backward and/or forward temporal correlations could be acquired by adversaries. For example, the adversaries can learn them from user’s historical trajectories (or the reversed trajectories) by well studied methods such as Maximum Likelihood estimation (supervised) or Baum-Welch algorithm (unsupervised). Also, if the initial distribution of is known (i.e., ), the backward temporal correlation (i.e., ) (can be derived from the forward temporal correlation (i.e., ) by Bayesian inference. We assume the attackers’ knowledge about temporal correlations is given in our framework.

We now define an enhanced version of Ai (in Definition 2) with knowledge of temporal correlations.

Definition 4 . is a class of adversaries who knowledge of (1) all other users’ data at each time t except the one of the targeted victim, i.e., , and (2) backward and/or forward temporal correlations represented as transition matrices and . We denote who targets user i by .

There are three types of : (i) , (ii) , (iii) , where ∅ denotes that the corresponding correlations are not known to the adversaries. For simplicity, we denote types (i) and (ii) as and , respectively. We note that is the same as the traditional DP adversary Ai without any knowledge of temporal correlations.

3.2. Temporal Privacy Leakage

We now define the privacy leakage w.r.t. . The adversary observes the differentially private outputs rt, which is released by a traditional differentially private mechanisms (e.g., Laplace mechanism) at each time point t ∈ [1, T], and attempts to infer the possible value of user i’s data at t, namely . Similar to Definition 2, we define the privacy leakage in terms of event-level differential privacy in the context of continual data release as described in Section 2.3.

Definition 5 (Temporal Privacy Leakage, TPL). Let Dt′ be a neighboring database of Dt. Let be the tuple knowledge of . We have and where and are two different valuesgof user i’s data at time t. Temporal Privacy Leakage of w.r.t. a single and all , i ∈ U are defined, respectively, as follows:

| (2) |

| (3) |

| (4) |

We first analyze (i.e., Equation (2)) because it is key to solve Equation (3) or (4).

Theorem 2. We can rewrite as follows:

| (5) |

It is clear that because PL0 indicates the privacy leakage w.r.t. one output r (refer to Definition 2). As annotated in the above equation, we define backward and forward privacy leakage as follows.

Definition 6 (Backward Privacy Leakage, BPL). The privacy leakage of caused by r1, …, rt w.r.t. is called backward privacy leakage, defined as follows:

| (6) |

| (7) |

Definition 7 (Forward Privacy Leakage, FPL). The privacy leakage of caused by rt, …, rT w.r.t. is called forward privacy leakage, defined by follows:

| (8) |

| (9) |

By substituting Equations (6) and (8) into (5), we have

| (10) |

Since the privacy leakage is considered as the worst case among all users in the database, by Equations (7) and (9), we have

| (11) |

Intuitively, BPL, FPL and TPL are the privacy leakage w.r.t. the adversaries , and , respectively. In Equation (11), we need to minus because it is counted in both BPL and FPL. In the following, we will dive into the analysis of BPL and FPL.

BPL Over Time.

For BPL, we first expand and simplify Equation (6) by Bayesian theorem, is equal to

| (12) |

We now discuss the three annotated terms in the above equation. The first term indicates BPL at the previous time t − 1, the second term is the backward temporal correlation determined by , and the third term is equal to the privacy leakage w.r.t. adversaries in traditional DP (see Definition 2). Hence, BPL at time t depends on (i) BPL at time t − 1, (ii) the backward temporal correlations, and (iii) the (traditional) privacy leakage of which is related to the privacy budget (which is related to the privacy budget allocated to ). By Equation (12), we know that if t = 1, ; if t > 1, we have the following, where (·) is a backward temporal privacy loss function for calculating the accumulated privacy loss

| (13) |

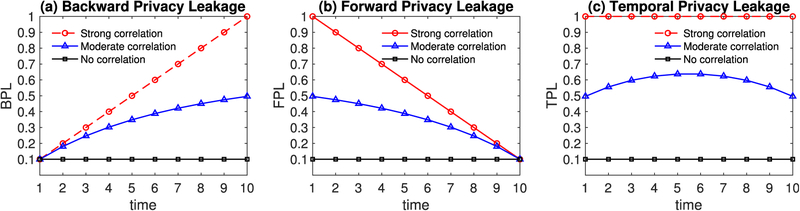

Equation (13) reveals that BPL is calculated recursively and may accumulate over time, as shown in Example 2 (Fig. 3a).

Fig. 3.

Backward (BPL), Forward (FPL), and Temporal Privacy Leakage (TPL) of a 0.1-DP mechanism at each time point for Examples 2 and 3.

Example 2 (BPL Due to Previous Releases). Suppose that a DP mechanism satisfies for each time t ∈ [1, T], i.e., 0.1-DP at each time point. We now discuss BPL at each time point w.r.t. with knowledge of backward temporal correlations . In an extreme case, if indicates the strongest correlation, say, , then, at time t, knows , i.e., Dt = Dt−1 = · · · = D1 because of for any t ∈ [1, T]. Hence, the continuous data release r1, …, rt is equivalent to releasing the same database multiple times; the privacy leakage at each time point will accumulate from previous time points and increase linearly (the red line with circle marker in Fig. 3a). In another extreme case, if there is no backward temporal correlation that is known to (e.g., for the Ai in Definition 2 or ), BPL at each time point is , as shown in Fig. 3a, the black line with rectangle marker. The blue line with triangle marker in Fig. 3a depicts the backward privacy leakage caused by , which can be finely quantified using our method (Algorithm 1) in Section 4.

FPL Over Time.

For FPL, similar to the analysis of BPL, we expand and simplify Equation (6) by Bayesian theorem, is equal to

| (14) |

By Equation (14), we know that if t = T, ; if t < T, we have the following, where is a forward temporal privacy loss function for calculating the increased privacy loss due to FPL at the next time

| (15) |

Equation (15) reveals that FPL is calculated recursively and may increase over time, as shown in Example 3 (Fig. 3b).

Example 3 (FPL Due to Future Releases). Considering the same setting in Example 2, we now discuss FPL at each time point w.r.t. with knowledge of forward temporal correlations . In an extreme case, if indicates the strongest correlation, say, , then, at time t, knows , i.e., Dt = Dt+1 = · · · = Dt because of for any t ∈ [1, T]. Hence, the continuous data release rt, …, rT is equivalent to releasing the same database multiple times; the privacy leakage at time t will increase when every time new release (i.e., rt+1, rt+2,…) happens, as shown in the red line with circle marker in Fig. 3b. We see that contrary to BPL, the FPL at time 1 is the highest (due to future releases at time 1 to 10) while FPL at time 10 is the lowest (since there is no future release with respect to time 10 yet). If r11 is released, all FPL at time t ∈ [1, 10] will be updated. In another extreme case, if there is no forward temporal correlation that is known to (e.g., for the Ai in Definition 2 or ), then the forward privacy leakage at each time point is , as shown in the black line with rectangle marker Fig. 3b. The blue line with triangle marker in Fig. 3b depicts the forward privacy leakage caused by , which can be finely quantified using our method (Algorithm 1) in Section 4.

Remark 1. The extreme cases shown in Examples 2 and 3 are the upper and lower bound of BPL and FPL. Hence, the temporal privacy loss functions and in Equations (13) and (15) satisfy , where x is BPL at the previous time, and , where x is FPL at the next time, respectively.

From Examples 2 and 3, we know that: backward temporal correlation (i.e., ) does not affect FPL, and forward temporal correlation (i.e., ) does not affect BPL. In other words, adversary only causes BPL; only causes FPL; while poses a risk on both BPL and FPL. The composition of BPL and FPL is shown in Equations (10) and (11). Fig. 3c shows TPL, which can be calculated using Equation (11).

3.3. and Its Composability

In this section, we define (differential privacy under temporal correlations) to provide a privacy guarantee against temporal privacy leakage. We prove its sequential composition theorem and discuss the connection between and ϵ-DP in terms of event-level/user-level privacy [4], [5] and w-event privacy [7].

Definition 8 (, differential privacy under temporal correlations). If TPL of a differentially private mechanism is less than or equal to α, we say that such mechanism satisfies α-Differential Privacy under Temporal correlation, i.e., .

is an enhanced version of differential privacy on time-series data. If the data are temporally independent (i.e., for all user i, both and are ∅), an ϵ-DP mechanism satisfies . If the data are temporally correlated (i.e., existing user i whose or is not ∅), an ϵ-DP mechanism satisfies where α is the increased privacy leakage and can be quantified in our framework.

One may wonder, for a sequence of mechanisms on the timeline, what is the overall privacy guarantee. In the following, we suppose that is a ϵt-DP mechanism at time t ∈ [1, T] and poses risks of BPL and FPL as and , respectively. That is, satisfies at time t according to Equation (11). Similar to definition of TPL w.r.t. a DP mechanism at a single time point, we define TPL of a sequence of DP mechanisms at consecutive time points as follows.

Definition 9 (TPL of a sequence of DP mechanisms). The temporal privacy leakage of DP mechanisms where j ≥ 0 is defined as follows:

It is easy to see that, if j = 0, it is event-level privacy; if t = 1 and j = T − 1, it is user-level privacy.

Theorem 3 (Sequential Composition under Temporal Correlations). A sequence of DP mechanisms satisfies

| (16) |

In Theorem 3, when t = 1 and j = T − 1, we have Corollary 1.

Corollary 1. The temporal privacy leakage of a combined mechanism is where ϵk is the privacy budget of .

It shows that temporal correlations do not affect the user-level privacy (i.e., protecting all the data on the timeline of each user), which is in line with the idea of group differential privacy: protecting all the correlated data in a bundle.

Comparison Between DP and .

As we mentioned in Section 2.3, there are typically two privacy notions in continuous data release: event-level and user-level [4], [5]. Recently, w-event privacy [7] is proposed to merge the gap between event-level and user-level privacy. It protects the data in any w-length sliding window by utilizing the sequential composition theorem of DP.

Theorem 4 (Sequential composition on independent data[15]). Suppose that satisfies ϵt-DP for each t ∈ [1, T]. A combined mechanism satisfies .

For ease of exposition, suppose that satisfies ϵ-DP for each t ∈ [1, T]. According to Theorem 4, it achieves Tϵ-DP on user-level and wϵ-DP on w-event level. We compare the privacy guarantee of on independent data and temporally correlated data in Table 2.

TABLE 2.

The Privacy Guarantee of ϵ-DP Mechanisms on Different Types of Data

It reveals that temporal correlations may blur the boundary between event-level privacy and user-level privacy. As shown in Table 2, the privacy leakage of a ϵ-DP mechanism at a single time point (event-level) on temporally correlated data may range from ϵ to Tϵ which depends on the strength of temporal correlation. For example, as shown in Fig. 3c, TPL of a 0.1-DP mechanism under strong temporal correlation at each time point (event-level) is T * 0.1, which is equal to the privacy leakage of a sequence of 0.1-DP mechanisms that satisfies user-level privacy. When the temporal correlations is moderate, TPL of a 0.1-DP mechanism at each time point (event-level) is less than T * 0.1 but still larger than 0.1, as shown in the blue line with triangle markers in Fig. 3c.

3.4. Connection with Pufferfish Framework

is highly related to Pufferfish framework [16], [17] and its instantiation [14]. Pufferfish provides rigorous and customizable privacy framework which consists of three components: a set of secrets, a set of discriminative pairs and data evolution scenarios (how the data were generated, or how the data are correlated). Note that the data evolution scenarios is essentially the adversary’s background knowledge about data, such as data correlations. In Pufferfish framework [16], [17], they prove that when secrets to be all possible values of tuples, discriminative pairs to be the set of all pairs of potential secrets, and tuples to be independent, it is equivalent to DP; hence, Pufferfish becomes under the above setting of secrets and discriminative pairs, but with temporally correlated tuples.

A recent work [14] proposes Markov Quilt Mechanism for Pufferfish framework when data correlations can be modeled by Bayesian Network. They further design efficient mechanisms MQMExact and MQMApprox when the structure of BN is Markov Chain. Although we also use Markov Chain as temporal correlation model, the settings of two studies are essentially different. In the motivating example of [14], i.e., Physical Activity Monitoring, the private histogram is “one-shot” (see Section 2.3) release: adapting to our setting in Fig. 1, their example is equivalent to release location access statistics for a one-user database only at a given time T. Whereas, we focus on quantifying the privacy leakage in continuous data release. One important insight of our study is that, the privacy guarantee of one-shot data release is not on its own or static; instead, it may be affected by previous release and even future release, which are defined as Backward and Forward Privacy Leakage in our work.

3.5. Discussion

We make a few important observations regarding our privacy analysis. First, the temporal privacy leakage is defined in a personalized way. That is, the privacy leakage may be different for users with distinct temporal patterns (i.e., and ). We define the overall temporal privacy leakage as the maximum one for all users, so that is compatible with the traditional ϵ-DP mechanisms (using one parameter to represent the overall privacy level) and we can convert them to protect against TPL. On the other hand, our definitions is also compatible with personalized differential privacy (PDP) mechanisms[18], in which the personalized privacy budgets, i.e., a vector [ϵ1, …, ϵn], are allocated to each user. In other words, we can convert a PDP mechanism to bound the temporal privacy leakage for each user.

Second, in this paper, we focus on the temporally correlated data and assume that the adversary has knowledge of temporal correlations modeled by Markov chain. However, it is possible that the adversary has knowledge about more sophisticated temporal correlation model or other types of correlations. Especially, if the the assumption about Markov model is not the “ground truth”. We may not protect against TPL appropriately. Song et al. [14] provided a formalization of the upper bound of privacy leakage if a set of possible data correlations Θ is given, which is max-divergence between any two conditional distributions of and θ ∈ Θ given any secret that we want to protect. In their Markov Quilt Mechanism that models correlation using Bayesian Network, the privacy leakage can be represented by max-influence between nodes (tuples). Hence, given a set of BNs as possible data correlations, the upper bound of privacy leakage is the largest max-influence under different BNs. Similarly, in our case, given a set of Transition Matrices (TM), we can calculate the maximum TPL w.r.t. different TMs. However, it remains an open question how to find a set of possible data correlations Θ that includes the adversarial knowledge or the ground truth in a high probability, which may depend on the application scenarios. We believe that this question is also related to “how to identify appropriate concepts of neighboring databases in different scenarios” since properly defined neighboring databases can circumvent the affect of data correlations on privacy leakage in a certain extent (as we show in Section 3.3, the difference between event-level privacy, w-event privacy, and user-level privacy).

4. CALCULATING TEMPORAL PRIVACY LEAKAGE

In this section, we design algorithms for computing backward privacy leakage and forward privacy leakage. We first show that both of them can be transformed to the optimal solution of a linear-fractional programming problem [19] in Section 4.1. Traditionally, this type of problem can be solved by simplex algorithm in exponential time [19]. By exploiting the constraints in this problem, we then design a polynomial algorithm to calculate it in Section 4.2. Further, based on our observation of some repeated computation when the polynomial algorithm runs on different time points, we precompute such common results and design quasi-quadratic and sub-linear algorithms for calculating temporal privacy leakage at each time point in Sections 4.3 and 4.4, respectively.

4.1. Problem Formulation

According to the privacy analysis of BPL and FPL in Section 3.2, we need to solve the backward and forward temporal privacy loss functions and in Equations (13) and (15), respectively. By observing the structure of the first term in Equations (12) and (14), we can see that the calculations for recursive functions and are virtually in the same way. They calculate the increment of the input values (the previous BPL or the next FPL) based on temporal correlations (backward or forward). Although different degree of correlations result in different privacy loss functions, the methods for analyzing them are the same.

We now quantitatively analyze the temporal privacy leakage. In the following, we demonstrate the calculation of , i.e., the first term

| (17) |

We now simplify the notations in the above formula. Let two arbitrary (distinct) rows in be vectors q = (q1, …, qn) and d = (d1, …, dn). For example, suppose that q is the first row in the transition matrix of Fig. 2b; then, the elements in q are: , , , etc. Let x = (x1, …, xn)T be a vector whose elements indicate with distinct values of , e.g., x1 denotes . We obtain the following by expanding in Equation (17)

| (18) |

Next, we formalize the objective function and constraints. Suppose that . According the definition of BPL (as the supremum), for any xj, xk ∈ x, we have . Given x as the variable vector and q, d as the coefficient vectors, is equal to the logarithm of the objective function (19) in the following maximization problem

| (19) |

| (20) |

| (21) |

The above is a form of Linear-Fractional Programming [19] (i.e., LFP), where the objective function is a ratio of two linear functions and the constraints are linear inequalities or equations. A linear-fractional programming problem can be converted into a sequence of linear programming problems and then solved using the simplex algorithm in time O(2n) [19]. According to Equation (18), given as an n × n matrix, finding involves solving n(n– 1) such LFP problems w.r.t. different permutation of choosing two rows q and d from the transition matrix . Hence, the overal time complexity using simplex algorithm is O(n22n).

As we mentioned previously, the calculations of and are identical. For simplicity, in the following part of this paper, we use to represent the privacy loss function or , use α to denote or , and use Pi to denote or .

4.2. Polynomial Algorithm

In this section, we design an efficient algorithm to calculate TPL, i.e., to solve the linear-fractional program in Equations (19), (20), and (21). Intuitively, we prove that the optimal solutions always satisfy some conditions (Theorem 5), which enable us to design an efficient algorithm to obtain the optimal value without directly solving the optimization problem.

Properties of the Optimal Solutions.

From Inequalities (20) and (21), we know that the feasible region of the constraints are not empty and bounded; hence, the optimal solution exists. We prove Theorem 5, which enables the optimal solution to be found in time O(n2).

We first define some notations that will be frequently used in our theorems. Suppose that the variable vector x consists of two parts (subsets): x+ and x−. Let the corresponding coefficients vectors be q+, d+ and q−, d−. Let q = ∑q+ and d = ∑d+. For example, suppose that x+ = [x1, x3] and x+ = [x2, x4, x5]. Then, we have q+ = [q1, q3], d+ = [d1, d3], q− = [q2, q4, q5], and d− = [d2, d4, q5]. In this case, q = q1 + q3 and d = d1 + d3.

Theorem 5. If the following Inequalities (22) and (23) are satisfied, the maximum value of the objective function in the problem (19), (20), and (21) is

| (22) |

| (23) |

According to Equation (18), the increment of temporal privacy loss, i.e., , is the maximum value among the n(n– 1) LFP problems, which are defined by different 2-permutations q and d chosen from n rows of Pi

| (24) |

Further, we give Corollary 2 for finding q+ and d+.

Corollary 2. If Inequalities (22) and (23) are satisfied, we have qj > dj in which qj ∈ q+ and dj ∈ d+.

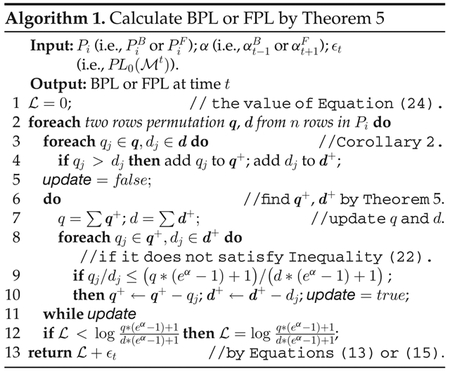

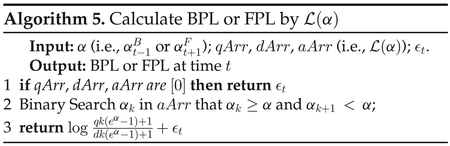

Now, the question is how do we find q and D (or, q+ and d+) in Theorem 5 that give the maximum value of objective function. Inequalities (22) and (23) are sufficient conditions for obtaining such optimal value. Corollary 2 gives a necessary condition for satisfying Inequalities (22) and (23). Based on the above analysis, we design Algorithm 1 for computing BPL or FPL.

Algorithm Design.

According to the definition of BPL and FPL, we need to find the maximum privacy leakage (Line 12) w.r.t. any 2-permutations selected from n rows of the given transition matrix Pi (Line 2). Lines 3~11 are to solve a single linear-fractional programming problem w.r.t two specific rows chosen from Pi. In Lines 3 and 4, we divide the variable vector x into two parts according to Corollary 2, which gives the necessary condition for finding the maximum solution: if a pair of coefficients with the same subscript qj ≤ dj, they are not in q+ and d+ that satisfy Inequalities (22) and (23). In other words, if qj > dj, they are “candidates” in q+ and d+ that gives the maximum objective function. In Lines 5~11, we check the candidates q+ and d+ whether or not satisfying Inequalities (22) and (23). In Line 7, we update the values of q and d because they may be changed in the context. In Lines 8~10, we check each bit in q+ and d+ whether or not satisfying Inequality (22). If any bit is removed, we set a flag updated to true and do the loop again until every pairs of q+ and d+ satisfy Inequality (22).

A subtle question may arise regarding such “update”. In Lines 8~10, the algorithm may remove several pairs of qj and dj, say, {q1, d1} and {q2, d2}, that do not satisfy Inequality (22) in one loop. However, one may wonder if it is possible that, after removing {q1, d2} from q+ and d+, Inequality (22) can be satisfied for {q2, d2} due to the update of q and d, i.e., . We show that this is impossible. If , we have . Hence, . Therefore, we can remove multiple pairs of qj and dj that do not satisfy Inequality (22) at one time.

Theorems 6 and 7 provide insights on transition matrices that lead to the extreme cases of temporal privacy leakage, which are in accordance with Remark 1.

Theorem 6. If for any two rows q and d chosen from Pi that satisfy qi = di for i ∈ [1, n], we have .

Theorem 7. If there exist two rows q and d in Pi that satisfy qi = 1 and di = 0 for a certain index i, in an identical function, i.e, .

Complexity.

The time complexity for solving one linear-fractional programming problem (Lines 3~11) w.r.t. two specific rows of the transition matrix is O(n2) because Line 9 may iterate n(n – 1) times in the worst case. The overall time complexity of Algorithm 1 is O(n4) since there are n(n – 1) permutations of different pairs of q and d.

4.3. Quasi-Quadratic Algorithm

When Algorithm 1 runs on different time points for continuous data release, we have a constant Pi and different α as inputs at each time point. Our observation is that, there may exist some common computations when Algorithm 1 takes different inputs of α. More specifically, we want to know that, for given q and d which are two rows chosen from Pi, whether or not the final q and d (when stopping update in Line 11 in Algorithm 1) keep the same for any input of α, so that we can precompute q and d and do not need Lines 5~11 at next run with a different α. Since q, d and α indicate a specific LFP problem in Equations (19), (20), and (21), we attempt to directly obtain the q and p in Theorem 5. Unfortunately, we find that such q and d do not keep the same for different α w.r.t. given q and d. However, interestingly we find that, for any input α, there are only several possible pairs of q and d when the update in Lines 6~11 of Algorithm 1 is terminated, as shown in Theorem 8.

Theorem 8. Let q and d be two vectors drawn from rows of a transition matrix. Assume qi ≠ di, i ∈ [1, n], for each qi ∈ q and di ∈ d.2 Without loss of generality, we assume , then there are only k pairs of q and d that satisfy Inequalities (22) and (23) for given q and d

More interestingly, we find that the values of q and d are monotonically decreasing with the increase of α. That is, when α is increasing from 0 to ∞, the pairs of q and d is transiting from case 1 to case 2,…, until to case k. In other words, the final q and d is constant in a certain range of α. Hence, the mapping from a given α to the optimal solution of a LFP problem w.r.t. q and d can be represented by a piecewise function as shown in Theorem 9.

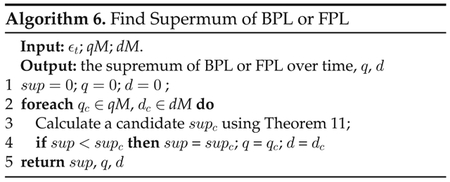

Theorem 9. We can represent the function of the optimal solution of LPF problem w.r.t. given q and d by a piecewise function as follows, where qi and di are the ith elements of q and d, respectively; and for j ∈ [1, k − 1]. We call α1, …, αk−1 in the sub-domains as transition points; q1, …, qk and d1, …, dk as coefficients of the piecewise function

| (25) |

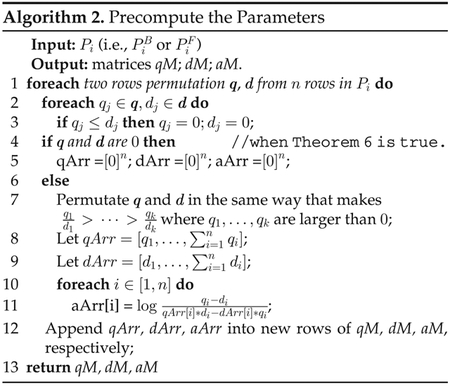

For convenience, we let qArr = [q1, …, qk], dArr = ‘[d1, …, dk], and aArr = [αk−1, …, 0]. Then, qArr, dArr, aArr determine a piecewise function in Equation (25). Also, we let qM, dM and aM be n(n – 1) × n matrices in which rows are qArr, dArr and aArr w.r.t. distinct 2-permutations of q and d from n rows of Pi. In other words, the three matrices determine n(n – 1) piecewise functions.

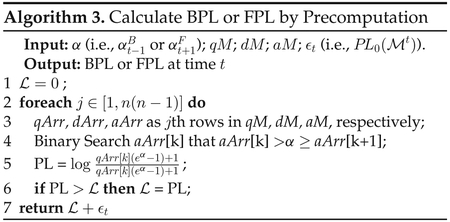

In the following, we first design Algorithm 2 to obtain qM, dM and aM. We then design Algorithm 3 to utilize the precomputed qM, dM, aM for calculating backward or forward temporal privacy leakage at each time point.

We now use an example of q = [0:2, 0:3, 0:5] and d = [0.1, 0, 0:9] to demonstrate two notable points in Algorithm 2. First, with such q and d, Line 7 results in q = [0:3, 0:2, 0] and q = [0, 0.1, 0] since only q1 = 0:3 > 0 and q2 = 0:2 > 0. Second, after the operation of Lines 10 and 11, we have aArr= [Inf,1.47,NaN].

Algorithm 2 needs O(n3) time for precomputing the parameters qM, dM and aM, which only needs to be run one time and can be done offline. Algorithm 3 for calculating privacy leakage at each time point needs O(n2 log n) time.

4.4. Sub-Linear Algorithm

In this section, we further design a sub-linear privacy leakage quantification algorithm by investigating how to generate a function of , so that, given an arbitrary α, we can directly calculate the privacy loss.

Corollary 3. Temporal privacy loss function can be represented as a piecewise function: maxq,d∈Pi log fq,d(α).

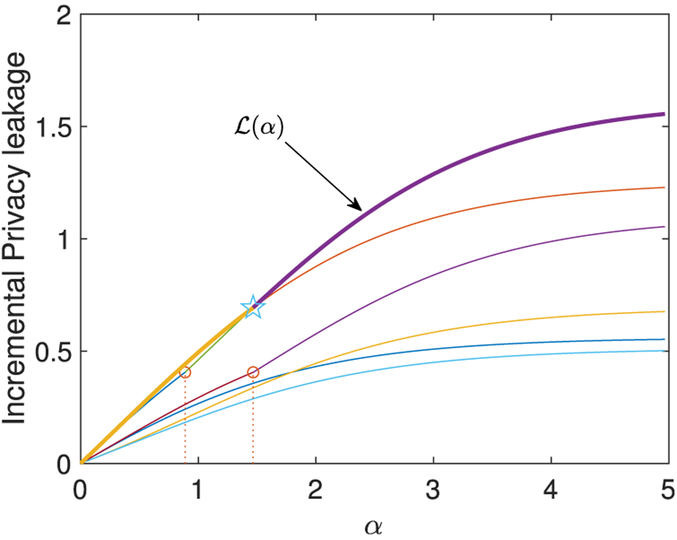

Example 4. Fig. 4 shows the function w.r.t. . Each line represents a piecewise function fq,d(α) w.r.t. distinct pairs of q and d chosen from Pi. Accoding to the definition of BPL and FPL, function is a piecewise function whose value is not less than any other functions for any α, e.g., the bold line in Fig. 4. The pentagram, which is not any transition point, indicates an intersection of two piecewise functions.

Fig. 4.

Illustration of function w.r.t. a 3 × 3 transition matrix in Example 4. Each lines is fq,d(α) w.r.t. distinct pairs of q and d. The bold line is . The circle marks are transition points.

Now, the challenge is how to find the “top” function which is larger than or equal to other piecewise functions. A simple idea is to compare the piecewise functions in every sub-domains. First, we list all transition points α1, …, αm of n(n – 1) functions fq,d(α) w.r.t. distinct pairs of q and d (i.e., all distinct values in aM); then, we find the “top” piecewise function on each range between two consecutive transition points, which requires computation time O(n3). Despite the complexity, this idea may not be correct because two piecewise functions may have an intersection between two consecutive transition points, such as the pentagram shown in Fig. 4. Hence, we need a way to find such additional transition points. Our finding is that, if the top function is the same one at a1 and a2, then it is also the top function for any α ∈ [a1, a2], which is formalized as the following theorem.

Theorem 10. Let and . If f(a1) ≥ f′(a1) and f(a2) ≥ f′(a2) in which 0 < a1 < a2, we have f(α) ≥ f′(α) for any a1 ≤ α ≤ a2.

Based on Theorem 10, we design Algorithm 4 to generate a privacy loss function w.r.t. a given transition matrix. Algorithm 4 outputs a piecewise function of , dArr are coefficients of the sub-functions, and aArr contains the corresponding sub-domains.

We now analyze Algorithm 4, which generate the privacy loss function in given a domain [a1, am] by recursively find its sub-functions in sub-domains [a1, ak] and [a1, am]. In Line 1, we check whether the parameters qM, dM and aM are [0], which implied that Pi is uniform and is 0 (see Lines 4 and 5 in Algorithm 2). For convenience of the following analysis, we denote the definition of at point α = aj by with coefficients qj and dj. In Lines 2, we obtain the definition of with coefficients q1 and d1. In Line 3, we obtain the definition of with coefficients qm and dm. From Line 4 to 20, we attempt to find the definition of at every point in [a1, am]. There are two modules in this part. The first one is from Line 5 to 12, in which we check that the definition of in (a1, am) is or . The second module is from Line 13 to 19, in which we exam the definition of at point ak (which may be the intersection of two sub-functions and ) and in the sub-domains [a1, ak] and [a1, ak]. Now, we dive into some details in these modules. In Line 5, the condition is true when and are the same, or they intersect at α = am. Then, is the top function in [a1, am] by Theorem 10 because of and . In Line 8, the condition is true when and intersect at a1 or they have no intersection in [a1, am] (this implies q1 = qm and d1 = dm). Then, is the top function in [a1, am] by Theorem 10. In Lines 6~8 and 10~12, we store the coefficients and sub-domains in arrays. From Line 14 to 19, we deal with the case of two functions intersecting at αk that a1 < αk < am by recursively invoking Algorithm 4 to generate the sub-function of in [α1, αk] and [α1, αm].

When obtaining function , we can directly calculate BPL or FPL as shown in Algorithm 5. In Line 1 of Algorithm 5, we can perform a binary search because aArr is sorted.

Complexity.

The complexity of Algorithm 5 for calculating privacy leakage at one time point is O(log n), which makes it very efficient even for large n and T. Algorithm 4 itself requires O(n2 log n + m log m) time where m is the amount of transition points in [a1, am], and its parameters qM, dM and aM need to be calculated by Algorithm 2 which requires O(n3) time; hence, in total, generating needs O(n3 + m log m) times.

5. Bounding Temporal Privacy Leakage

In this section, we design two privacy budget allocation strategies that can be used to convert a traditional DP mechanism into one protecting against TPL.

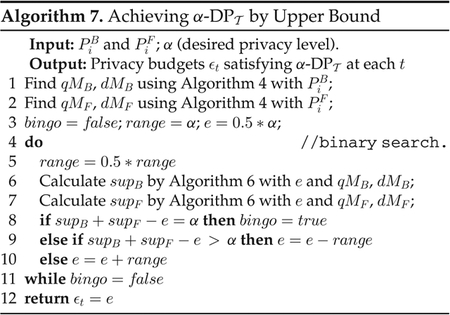

We first investigate the upper bound of BPL and FPL. We have demonstrated that BPL and FPL may increase over time as shown in Fig. 3. A natural question is that: is there a limit of BPL and FPL over time.

Theorem 11. Given a transition matrix (or ), let q and d be the ones that give the maximum value in Equation (24) and q ≠ d. For that satisfies ϵ-DP at each t ∈ [1, T], there are four cases regarding the supremum of BPL (or FPL) over time

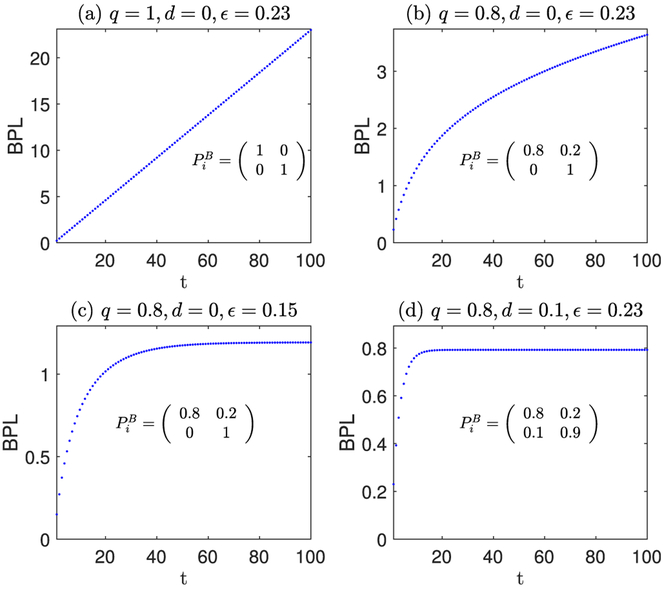

Example 5 (The supremum of BPL over time). Suppose that satisfies ϵ-DP at each time point. In Fig. 5, it shows the maximum BPL w.r.t. different ϵ and different transition matrices of . In (a) and (b), the supremum does not exist. In (c) and (d), we can calculate the supremum using Theorem 11. The results are in line with the ones from computing BPL step by step at each time point using Algorithm 1.

Fig. 5.

Examples of the maximum BPL over time.

Algorithm 6 for finding supreme of BPL or FPL is correct because, according to Theorem 8, all possible q and d that give the maximum value in Equation (24) are in the matrices qM and dM. Algorithm 6 is useful not only for designing privacy budget allocation strategies in this section, but also for setting an appropriate parameter am as the input of Algorithm 4 because we will show in experiments that a larger am makes Algorithm 4 time-consuming (while, too small am may result in failing to calculate privacy leakage from if the input α may be larger than am).

Achieving by Limiting Upper Bound.

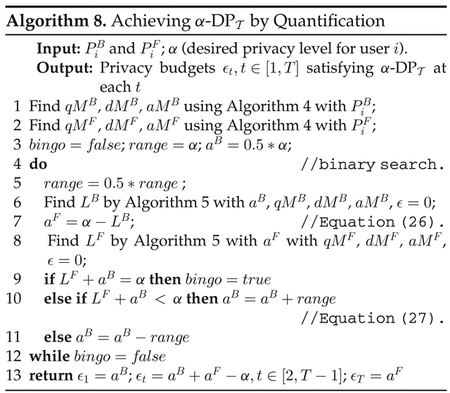

We now design a privacy budget allocation strategy utilizing Theorem 11 to bound TPL. Theorem 11 tells us that, if it is not the strongest temporal correlation (i.e., d = 0 and q = 1), we may bound BPL or FPL within a desired value by allocating an appropriate privacy budget to a traditional DP mechanism at each time point. In other words, we want a constant ϵt that guarantee the supremum of TPL, which is equal to the sum of the supremum of BPL and the supremum of BPL subtracting ϵt by Equation (11), will not larger than α. Based on this idea, we design Algorithm 7 for solving such ϵt.

Achieving by Privacy Leakage Quantification.

Algorithm 7 allocates privacy budgets in a conservative way: when T is short, the privacy leakage may not be increased to the supremum. We now design Algorithm 8 to overcome this drawback. Observing the supremum of backward privacy loss in Figs. 5c and 5d, BPL at the first time point is much less than the supremum. Similarly, it is easy to see that FPL at the last time point is much less than its supremum. Hence, we attempt to allocate more privacy budgets to and so that the temporal privacy leakage at every time points are exactly equal to the desired level. Specifically, if we want that BPL at two consecutive time points are exactly the same value αB, i.e., , we can derive that ϵt = ϵt+1 for t ≥ 2 (it is true for t = 1 only when ). Applying the same logic to FPL, we have a new strategy for allocating privacy budgets: assigning larger privacy budgets at time points 1 and T, and constant values at time [2, T] to make sure that BPL at time points [1, T − 1] are the same, denoted by αB, and FPL at time [2, T] are the same, denoted by αF. Hence, we have ϵ1 = αB and ϵT = αF. Let the value of privacy budget at [2, T] be ϵm. We have (i) , (ii) and (iii) αB + αF − ϵm = α Combing (i) and (iii), we have Equation (26); Combing (ii) and (iii), we have Equation (27)

| (26) |

| (27) |

Based on the above idea, we design Algorithm 8 to solve αB and αF. Since αB should be in [0, α], we heuristically initialize αB with 0:5 * α in Line 3 and then use binary search to find appropriate αB and αF that satisfy Equations (26) and (27).

6. EXPERIMENTAL EVALUATION

In this section, we design experiments for the following: (1) verifying the runtime and correctness of our privacy leakage quantification algorithms, (2) investigating the impact of the temporal correlations on privacy leakage and (3) evaluating the data release Algorithms 7 and 8. We implemented all the algorithms3 in Matlab2017b and conducted the experiments on a machine with an Intel Core i7 2.6 GHz CPU and 16G RAM running macOS High Sierra.

6.1. Runtime of Privacy Quantification Algorithms

In this section, we compare the runtime of our algorithms with IBM ILOG CPLEX,4 which is a well-known software for solving optimization problems, e.g., the linear-fractional programming problem (19), (20), and (21) in our setting.

For verifying the correctness of three privacy quantifying algorithms, Algorithms 1, 3 and 5, we generate 100 random transition matrices with dimension size n = 30 and comparing the calculation results with the one solving LFP problem using CPLEX. We verified that all results obtained from our algorithms are identical to the one using CPLEX w.r.t. the same transition matrix.

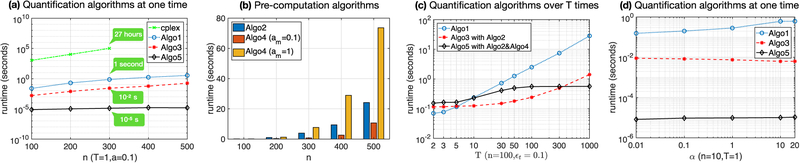

For testing the runtime of our algorithms, we run them 30 times with randomly generated transition matrices, and run CPLEX one time (because it is very time-consuming), and then calculate the average runtime for each of them. Since Algorithm 3 needs parameters that are precomputed by Algorithm 2, and Algorithm 5 needs that can be obtained using Algorithm 4, we also test the runtime of these precomputations. The results are shown in Fig. 6.

Fig. 6.

Runtime of temporal privacy leakage quantification algorithms.

Runtime versus n.

In Figs. 6a and 6b, we show the runtime of privacy quantification algorithms and precomputation algorithms, respectively, In Fig. 6a, each algorithm takes inputs of α = 0.1 and n × n random probability matrices. The runtime of all algorithms increase along with n because the number of variables in our LFP problem is n. The proposed Algorithms 1, 3 and 5 significantly outperform CPLEX. In Fig. 6b, we test precomputation procedures. We observed that all algorithms are increasing with n, but Algorithm 4 is more susceptible to am. Algorithm 4 with a larger am results in higher runtime because it performs binary search in [0, am].

These results are in line with our complexity analysis, which shows the complexity of Algorithms 2 and 4 are O(n3) and O(n2 log n + m log m) (m is the amount of transition points and increasing with am), respectively. However, when n or am is large, pre-computation Algorithms 2 and 4 are time-consuming because we need to find optimal solutions for n *(n – 1) LFP problems given a n-dimension transition matrix. This can be improved by advanced computation tools such as parallel computing because here each LFP problem is independently solved, and such computation only needs to be run one time before starting to release private data. Another interesting way to improve the runtime is to find the relationship between the optimal solutions of different LFP problems given a transition matrix (so that we can prune some computations). We defer this to future study.

Runtime versus T.

In Fig. 6c, we test the runtime of each privacy quantification algorithm integrated with their precomputations over different length of time points. We want to know how can we benefit from these precomputations over time. All algorithms take inputs of 100 × 100 matrices and ϵt = 0.1 for each time point t. The parameters of Algorithm 4 need to be initialized by Algorithm 2, so we take them as an integrated module along with Algorithm 5. It shows that, Algorithm 1 runs fast if T is small. Algorithm 3 becomes the most preferable if T is in [5, 300]. However, when T is larger than 300, Algorithm 5 with its precomputation (Algorithm 4 with am = (T + 1) * ϵt which is the worst case of supremum) is the fast one and its runtime is almost constant with the increase of T. Therefore, there is no best algorithm in efficiency without a known T, but we can choose appropriate algorithm adaptively.

Runtime versus α.

In Fig. 6d, we show that, a larger previous BPL (or the next FPL), i.e., α, may lead to higher runtime of Algorithm 1, whereas other algorithms are relatively stable for varying a. The reason is that, when a is large, Algorithm 1 may take more time in Lines 9 and 10 for updating each pair of qj ∈ q+ and dj ∈ d+ to satisfy Inequality (22). An update in Line 10 is more likely to occur due to a large α because is increasing with α. However, such growth of runtime along with α will not last so long because the update happens n times in the worse case. As shown in Fig. 6b, when α > 10, the runtime of Algorithm 1 becomes stable.

6.2. Impact of Temporal Correlations on TPL

In this section, for the ease of exposition, we only present the impact of temporal correlations on BPL because the growth of BPL and FPL are in the same way.

The Setting of Temporal Correlations.

To evaluate if our privacy loss quantification algorithms can perform well under different degrees of temporal correlations, we find a way to generate the transition matrices to eliminate the effect of different correlation estimation algorithms or datasets. First, we generate a transition matrix indicating the “strongest” correlation that contains probability 1:0 in its diagonal cells (this type of transition matrix will lead to an upper bound of TPL). Then, we perform Laplacian smoothing [20] to uniformize the probabilities of Pi (the uniform transition matrix will lead to an low bound of TPL). Let pjk be an element at the jth row and kth column of the matrix Pi. The uniformized probabilities are generated using Equation (28), where s is a positive parameter that controls the degrees of uniformity of probabilities in each row. Hence, a smaller s means stronger temporal correlation. We note that, different s are only comparable under the same n

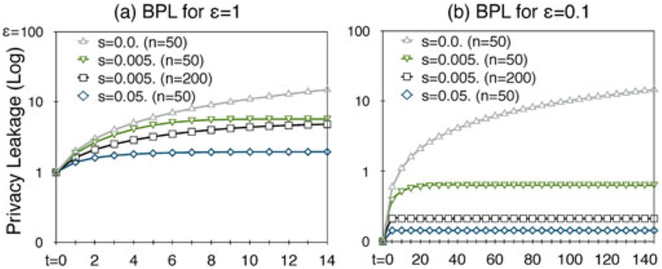

| (28) |

We examined s values ranging from 0.005 to 1 and set n to 50 and 200. Let ε be the privacy budget of at each time point. We test ε = 1 and 0.1. The results are shown in Fig. 7 and are summarized as follows.

Fig. 7.

Evaluation of BPL under different degrees of correlations.

Privacy Leakage versus s.

Fig. 7 shows that the privacy leakage caused by a non-trivial temporal correlation will increase over time, and such growth first increases sharply and then remains stable because it is gradually close to its supremum. The increase caused by a stronger temporal correlations (i.e., smaller s) is steeper, and the time for the increase is longer. Consequently, stronger correlations result in higher privacy leakage.

Privacy Leakage versus ε.

Comparing Figs. 7a and 7b, we found that 0.1-DP significantly delayed the growth of privacy leakage. Taking s = 0:005, for example, the noticeable increase continues for almost 8 timestamps when ε = 1 (Fig. 7a), whereas it continues for approximately 80 time-stamps when ε = 0.1 (Fig. 7b). However, after a sufficient long time, the privacy leakage in the case of ε = 0.1 is not substantially lower than that of ε = 1 under stronger temporal correlations. This is because, although the privacy leakage is eliminated at each time point by setting a small privacy budget, the adversaries can eventually learn sufficient information from the continuous releases.

Privacy Leakage versus n.

Under the same s, TPL is smaller when n (dimension of the transition matrix) is larger, as shown in the lines s = 0.005 with n = 50 and n = 200 of Fig. 7. This is because the transition matrices tend to be uniform (weaker correlations) when the dimension is larger.

6.3. Evaluation of Data Releasing Algorithms

In this section, we first show a visualization of privacy allocation of Algorithms 7 and 8, then we compare the data utility in terms of Laplace noise.

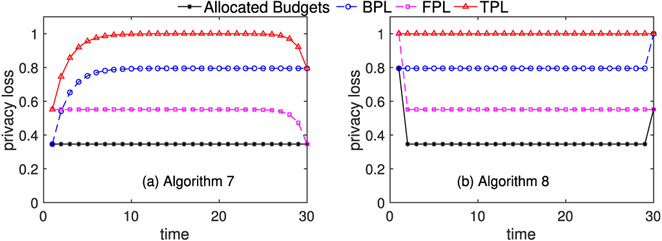

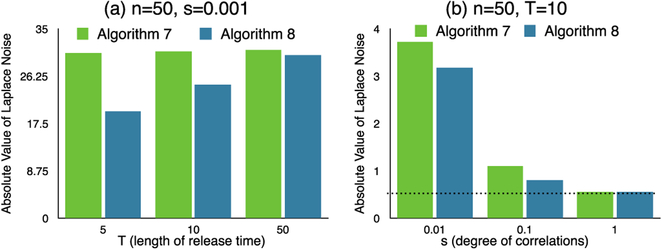

Fig. 8 shows an example of budget allocation, w.r.t. and . The goal is . It is easy to see that Algorithm 8 has better data utility because it exactly achieves the desired privacy level.

Fig. 8.

Privacy budget allocation schemes for .

Fig. 9 shows the data utility of Algorithms 7 and 8 with . We calculate the absolute value of the Laplace noise with the allocated budgets (as shown in Fig. 8). Higher value of noise indicates lower data utility. In Fig. 9a, we test the data utility under backward and forward temporal correlation both with parameter s = 0:001, which means relatively strong correlation. It shows that, when T is short, Algorithm 8 outperforms Algorithm 7. In Fig. 9b, we investigate the data utility under different degree of correlations. The dash line indicates the absolute value of Laplace noise if no temporal correlation exists (privacy budget is 2). It is easy to see that the data utility significantly decays under strong correlation s = 0:01.

Fig. 9.

Data utility of mechanisms.

7. RELATED WORK

Dwork et al. first studied differential privacy under continual observation and proposed event-level/user-level privacy [4], [5]. A plethora of studies have been conducted to investigate different problems in this setting, such as high dimensional data [1], [8], infinite sequence [7], [21], [22], and real-time publishing [6], [23]. To the best of our knowledge, no study has reported the risk of differential privacy under temporal correlations for the continuous aggregate release setting. Although [24] have considered a similar adversarial model in which the adversaries have prior knowledge of temporal correlations represented by Markov chains, they proposed a mechanism extending differential privacy for releasing a private location, whereas we focus on the scenario of continuous aggregate release with DP.

Several studies have questioned whether differential privacy is valid for correlated data. Kifer and Machanavajjhala [16], [17], [25] first raised the important issue that differential privacy may not guarantee privacy if adversaries know the data correlations between tuples. They [25] argued that it is not possible to ensure any utility in addition to privacy without making assumptions about the data-generating distribution and the background knowledge available to an adversary. To this end, they proposed a general and customizable privacy framework called PufferFish [16], [17], in which the potential secrets, discriminative pairs, and data generation need to be explicitly defined. Song et al. [14] proposed Markov Quilt Mechanism when the correlations can be modeled by Bayesian Network. Yang et al. [12] investigated differential privacy on correlated tuples described using a proposed Gaussian correlation model. The privacy leakage w.r.t. adversaries with specified prior knowledge can be efficiently computed. Zhu et al. [26] proposed correlated differential privacy by defining the sensitivity of queries on correlated data. Liu et al. [13] proposed dependent differential privacy by introducing dependence coefficients for analyzing the sensitivity of different queries under probabilistic dependence between tuples. Most of the above works dead with correlations between users in the database, i.e., user-user correlations, in the setting of one-shot data release, whereas we deal with the correlation among single user’s data at different time points, i.e., temporal correlations, and focusing on the dynamic change of privacy guarantee in continuous data release.

On the other hand, it is still controversial [27] what should be the guarantee of DP on correlated data. Li et al. [27] proposed Personal Data Principle for clarifying the privacy guarantee of DP on correlated data. It states that an individual’s privacy is not violated if no data about the individual is used. By doing this, one can ignore any correlation between this individual’s data and other users’ data, i.e., user-user correlations. On the other hand, the question of “what is individual’s data”, or “what should be an appropriate notion of negiboring databases” is tricky in many application scenarios such as genomic data. If we apply Personal Data Principle to the setting of continuous data release, event-level privacy is not a good fit for protecting individual’s privacy because a user’s data at each time point is only a part of his/her whole data in the streaming database. Our work shares the same insight with Personal Data Principle on this point: we show that the privacy loss of event-level privacy may increase over time under temporal correlation, while the guarantee of user-privacy is as expected. We note that, when the data stream is infinite or the end of data release is unknown, we can only resort to event-level privacy or w-event privacy [7]. Our work provides useful tools against TPL in such setting.

8. CONCLUSIONS

In this paper, we quantified the risk of differential privacy under temporal correlations by formalizing, analyzing and calculating the privacy loss against adversaries who have knowledge of temporal correlations. Our analysis shows the privacy loss of event-level privacy may increase over time, while the privacy guarantee of user-level privacy is as expected. We design fast algorithms for quantifying temporal privacy leakage which enables private data release in real-time. This work opens up interesting future research directions, such as investigating the privacy leakage under temporal correlations combining with other type of correlation models, and use our methods to enhance previous studies that neglected the effect of temporal correlations in continuous data release.

ACKNOWLEDGMENTS

This work was supported by JSPS KAKENHI Grant Number 16K12437, 17H06099, JSPS Core-to-Core Program, A. Advanced Research Network, the National Institute of Health (NIH) under award number R01GM114612, the Patient-Centered Outcomes Research Institute (PCORI) under contract ME-1310–07058, and the US National Science Foundation under award CNS-1618932.

Biographies

Yang Cao received the BS degree from the School of Software Engineering, Northwestern Polytechnical University, China, in 2008, and the MS and PhD degrees from the Graduate School of Informatics, Kyoto University, Japan, in 2014 and 2017, respectively. He is currently a postdoctoral fellow with the Department of Math and Computer Science, Emory University. His research interests include privacy preserving data publishing and mining.

Masatoshi Yoshikawa received the BE, ME, and PhD degrees from the Department of Information Science, Kyoto University, in 1980, 1982, and 1985, respectively. Before joining the Graduate School of Informatics, Kyoto University, as a professor in 2006, he was a faculty member of Kyoto Sangyo University, the Nara Institute of Science and Technology, and Nagoya University. His general research interest include the area of databases. His current research interests include multi-user routing algorithms and services, theory and practice of privacy protection, and medical data mining. He is a member of the ACM and IPSJ.

Yonghui Xiao received the three BS degrees from Xi’an Jiaotong University, China, in 2005, the MS degree from Tsinghua University, in 2011 after spending two years working in industry, and the PhD degree from the Department of Math and Computer Science, Emory University, in 2017. He is currently a software engineer with Google.

Li Xiong received the BS degree from the University of Science and Technology of China, the MS degree from Johns Hopkins University, and the PhD degree from the Georgia Institute of Technology, all in computer science. She is a Winship distinguished research professor of computer science (and biomedical informatics) with Emory University. She and her research group, Assured Information Management and Sharing (AIMS), conduct research that addresses both fundamental and applied questions at the inter face of data privacy and security, spatiotemporal data management, and health informatics.

Footnotes

Lap(b) denotes a Laplace distribution with variance 2b2.

The case of qi = di is proven in Theorem 6.

Souce code: https://github.com/brahms2013/TPL

http://www-01.ibm.com/software/commerce/optimization/cplex-optimizer/. We use version 12.7.1.

Contributor Information

Yang Cao, Department of Math and Computer Science, Emory University, Atlanta, GA 30322..

Masatoshi Yoshikawa, Department of Social Informatics, Kyoto University, Kyoto 606-8501, Japan..

Yonghui Xiao, Google Inc., Mountain View, CA 94043..

Li Xiong, Department of Math and Computer Science, Emory University, Atlanta, GA 30322..

REFERENCES

- [1].Acs G and Castelluccia C, “A case study: Privacy preserving release of spatio-temporal density in paris,” in Proc. ACM SIGKDD Int. Conf. Knowl. Discovery Data Mining, 2014, pp. 1679–1688. [Google Scholar]

- [2].Bolot J, Fawaz N, Muthukrishnan S, Nikolov A, and Taft N, “Private decayed predicate sums on streams,” in Proc. Int. Conf. Database Theory, 2013, pp. 284–295. [Google Scholar]

- [3].Chan T-HH, Shi E, and Song D, “Private and continual release of statistics,” ACM Trans. Inf. Syst. Secur, vol. 14, no. 3, pp. 26:1–26:24, 2011. [Google Scholar]

- [4].Dwork C, “Differential privacy in new settings,” in Proc. Annu. ACM-SIAM Symp. Discrete Algorithms, 2010, pp. 174–183. [Google Scholar]

- [5].Dwork C, Naor M, Pitassi T, and Rothblum GN, “Differential privacy under continual observation,” in Proc. Annu. ACM Symp. Theory Comput, 2010, pp. 715–724. [Google Scholar]

- [6].Fan L, Xiong L, and Sunderam V, “FAST: Differentially private real-time aggregate monitor with filtering and adaptive sampling,” in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2013, pp. 1065–1068. [Google Scholar]

- [7].Kellaris G, Papadopoulos S, Xiao X, and Papadias D, “Differentially private event sequences over infinite streams,” Proc. VLDB Endowment, vol. 7, no. 12, pp. 1155–1166, 2014. [Google Scholar]

- [8].Xiao Y, Xiong L, Fan L, Goryczka S, and Li H, “DPCube: Differentially private histogram release through multidimensional partitioning,” Trans. Data Privacy, vol. 7, no. 3, pp. 195–222, 2014. [Google Scholar]

- [9].Dwork C, “Differential privacy,” in Proc. Int. Colloq. Automata Languages Program, 2006, pp. 1–12. [Google Scholar]

- [10].Dwork C, McSherry F, Nissim K, and Smith A, “Calibrating noise to sensitivity in private data analysis,” in Proc. 3rd Conf. Theory Cryptography, 2006, pp. 265–284. [Google Scholar]

- [11].Chen R, Fung BC, Yu PS, and Desai BC, “Correlated network data publication via differential privacy,” Int. J. Very Large Data Bases, vol. 23, no. 4, pp. 653–676, 2014. [Google Scholar]

- [12].Yang B, Sato I, and Nakagawa H, “Bayesian differential privacy on correlated data,” in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2015, pp. 747–762. [Google Scholar]

- [13].Liu C, Chakraborty S, and Mittal P, “Dependence makes you vulnerable: Differential privacy under dependent tuples,” in Proc. Netw. Distrib. Syst. Secur. Symp, vol. 16, pp. 21–24, 2016. [Google Scholar]

- [14].Song S, Wang Y, and Chaudhuri K, “Pufferfish privacy mechanisms for correlated data,” in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2017, pp. 1291–1306. [Google Scholar]

- [15].McSherry FD, “Privacy integrated queries: An extensible platform for privacy-preserving data analysis,” in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2009, pp. 19–30. [Google Scholar]

- [16].Kifer D and Machanavajjhala A, “A rigorous and customizable framework for privacy,” in Proc. ACM SIGMOD-SIGACT-SIGART Symp. Principles Database Syst, 2012, pp. 77–88. [Google Scholar]

- [17].Kifer D and Machanavajjhala A, “Pufferfish: A framework for mathematical privacy definitions,” ACM Trans. Database Syst, vol. 39, no. 1, pp. 3:1–3:36, 2014. [Google Scholar]

- [18].Jorgensen Z, Yu T, and Cormode G, “Conservative or liberal? Personalized differential privacy,” in Proc. IEEE Int. Conf. Data Eng, 2015, pp. 1023–1034. [Google Scholar]

- [19].Bajalinov EB, Linear-Fractional Programming Theory, Methods, Applications and Software. Berlin, Germany: Springer, 2003. [Google Scholar]

- [20].Sorkine O, Cohen-Or D, Lipman Y, Alexa M, Rössl C, and Seidel H-P, “Laplacian surface editing,” in Proc. Eurographics Symp. Geometry Process, 2004, pp. 175–184. [Google Scholar]

- [21].Cao Y and Yoshikawa M, “Differentially private real-time data release over infinite trajectory streams,” in Proc. Int. Conf. Mobile Data Manage, 2015, pp. 68–73. [Google Scholar]

- [22].Cao Y and Yoshikawa M, “Differentially private real-time data publishing over infinite trajectory streams,” IEICE Trans. Inf. Syst, vol. E99-D, no. 1, pp. 163–175, 2016. [Google Scholar]

- [23].Li H, Xiong L, Jiang X, and Liu J, “Differentially private histogram publication for dynamic datasets: An adaptive sampling approach,” in Proc. ACM Int. Conf. Inf. Knowl. Manage, 2015, pp. 1001–1010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [24].Xiao Y and Xiong L, “Protecting locations with differential privacy under temporal correlations,” in Proc. ACM SIGSAC Conf. Comput. Commun. Secur, 2015, pp. 1298–1309. [Google Scholar]

- [25].Kifer D and Machanavajjhala A, “No free lunch in data privacy,” in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2011, pp. 193–204. [Google Scholar]

- [26].Zhu T, Xiong P, Li G, and Zhou W, “Correlated differential privacy: Hiding information in non-IID data set,” IEEE Trans. Inf. Forensics Secur, vol. 10, no. 2, pp. 229–242, February 2015. [Google Scholar]

- [27].Li N, Lyu M, Su D, and Yang W, “Differential privacy: From theory to practice,” Synthesis Lectures Inf. Secur. Privacy Trust, vol. 8, pp. 1–138, 2016. [Google Scholar]

- [28].Dinkelbach W, “On nonlinear fractional programming,” Manage. Sci, vol. 13, no. 7, pp. 492–498, 1967. [Google Scholar]