Abstract

Azimuthal beam scanning eliminates the uneven excitation field arising from laser interference in through-objective total internal reflection fluorescence (TIRF) microscopy. The same principle can be applied to scanning angle interference microscopy (SAIM), where precision control of the scanned laser beam presents unique technical challenges for the builders of custom azimuthal scanning microscopes. Accurate synchronization between the instrument computer, beam scanning system and excitation source is required to collect high quality data and minimize sample damage in SAIM acquisitions. Drawing inspiration from open-source prototyping systems, like the Arduino microcontroller boards, we developed a new instrument control platform to be affordable, easily programmed, and broadly useful, but with integrated, precision analog circuitry and optimized firmware routines tailored to advanced microscopy. We show how the integration of waveform generation, multiplexed analog outputs, and native hardware triggers into a single central hub provides a versatile platform for performing fast circle-scanning acquisitions, including azimuthal scanning SAIM and multiangle TIRF. We also demonstrate how the low communication latency of our hardware platform can reduce image intensity and reconstruction artifacts arising from synchronization errors produced by software control. Our complete platform, including hardware design, firmware, API, and software, is available online for community-based development and collaboration.

Subject terms: Optical imaging, Interference microscopy

Introduction

Modern imaging applications typically require the cooperative action of several independent devices, such as stages, filters, excitation modulators, and many others. Control of peripheral devices directly from the instrument computer requires independent connections, communication protocols and manufacturer-provided drivers and application programming interfaces (APIs). Integration of several peripherals in this way is technically challenging, and while software packages exist from both commercial and open-source1 suppliers, they each have a limited set of supported devices. Moreover, the latency introduced by serial communications, the operating system (OS), application overhead and suboptimal API implementations can have a significant effect on synchronization accuracy. Because of these limitations, the time taken to update the experimental parameters across multiple peripherals can be much greater than the individual devices’ response times, limiting the effective acquisition speed and increasing the risk of synchronization errors that could cause artifacts in the collected data.

To overcome these challenges, hardware controllers centralize device control within a single unit, typically using digital triggers or analog voltages to step peripheral devices through a series of predefined states. Hardware controllers can decrease communications overhead and implementation complexity by eliminating serial communications during an experiment. For devices that support digital triggering or analog control, the update time is reduced to the controller’s response time, eliminating the additional delay of serial data transfer and decoding. A controller with sufficient speed and memory can have the entire acquisition sequence pre-programmed, allowing autonomous operation from the instrument computer, eliminating communication overhead and processing time delays at runtime.

Universal microcontroller breakout boards, such as the Arduino devices2, have provided individuals and communities with a flexible platform to prototype and create interactive hobbyist-grade electronic devices. However, these controller platforms lack the appropriate I/O and optimizations to control many of the high precision, scientific-grade peripherals in state-of-the-art microscopes. General-purpose PC bus and USB data acquisition (DAQ) cards are popular alternatives that offer more powerful general-purpose I/O and system integration. However, they suffer the drawbacks of implementation complexity at hardware and software levels3,4. While the underlying controller or DAQ may be general purpose, it is implemented and developed in a single, highly specialized application. Because of this the completed systems generally lack portability, limiting transferability and community support.

Here we describe an open-source instrument-control platform that is designed with flexibility, ease of adoption, performance, and cost in mind. Throughout the development of our device, we have committed to three major design principles: (1) the device should be hardware independent and support a wide range of peripherals, (2) the device should not limit data acquisition rate and (3) the device should be an open-source community resource. While the instrument controller is general purpose by design, specific I/O and routines have been included to provide a low-cost, high-performance solution for rapid peripheral device synchronization in advanced microscopes, including laser-scanning systems. We demonstrate the advantages of hardware control in a custom azimuthal beam scanning microscope by characterization of the effects of excitation quality and timing accuracy in a pair of complementary axial localization techniques: scanning angle interference microscopy (SAIM)5,6 and multiangle total internal reflection fluorescence microscopy (MA-TIRF)7.

Results

Controller design

To effectively provide a centralized hub we integrated both analog and digital functions on a single printed circuit board (PCB) (Fig. 1a,b, Supplementary Fig. 1), simplifying connector wiring and providing protection against unwanted electrical interference. Our design further subdivided the analog circuitry into waveform generation (2x) by independent direct digital synthesizers (DDS), high-speed bipolar analog outputs (2x) with adjustable references, and a multiplexed 8-channel analog output (Fig. 1b, Supplementary Note 1), allowing us to optimize the design of each subcircuit for its intended task. We incorporated full-speed USB connectivity, 8 digital I/O lines and a serial communication port in the hardware design. Given the high component and trace density required while considering cost and availability, we opted for a 4-layer PCB design with minimum 6 mil trace width and separation. This design could be affordably sourced, unpopulated, from many custom PCB manufacturers (i.e. OSH Park, 3 boards $197.90). Following the same reasoning we selected components for our design that were actively being produced and available from multiple suppliers (i.e. Digikey, complete component set $270.90 per board) in package sizes that could be placed and soldered by hand without the need for specialized equipment. We then populated the PCBs using basic surface mount and through-hole soldering tools such as a hot air rework station, soldering iron and tweezers, commonly available in most electronics labs. The completed, populated PCBs could be built by hand in around 6 hours apiece (~$200 and 2 additional hours of labor were required to assemble the enclosure and internal connectors). The PCB layout incorporated a 0.1 inch 6-pin header for connection to an in-circuit serial programmer and debugger (ICD-U64, Custom Computer Services Inc., $89), which facilitated rapid development of updates to the controller firmware.

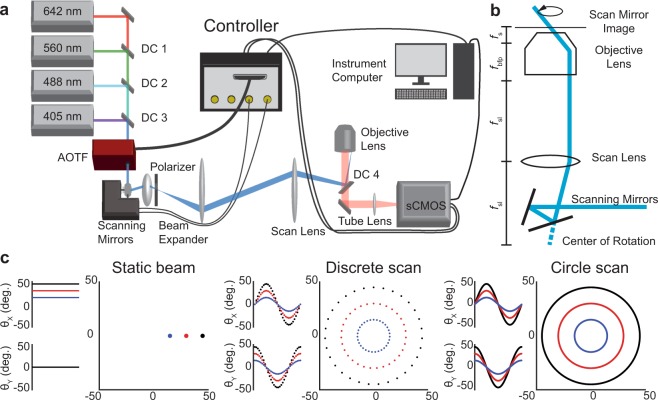

Figure 1.

Hardware control integration facilitates the cooperation of a variety of peripheral devices. (a) Completed PCB assembly with key I/O features and dimensions. (b) Conceptual system topology wherein the microcontroller (MCU) acts as an intermediary between the instrument computer and multiple peripherals. (c) Timing waveforms from a maximum framerate acquisition sequence. The camera’s exposure acts as the timing signal to synchronize peripheral device updates in a typical circle-scanning experiment. The experiment was defined as a series of three repeating scan radii (mirror waveform amplitude) for each of two excitation wavelengths (Lasers 1 and 2).

For communications between the computer and instrument controller we utilized the microcontroller’s native full-speed universal serial bus (USB) human interface device (HID) protocol. This protocol was supported on all major computer operating systems (OS) and eliminated the need for OS-specific USB drivers, making the controller “plug and play”. A limitation of the full-speed USB HID protocol was the 64 kB/s bandwidth and 1 ms frame length. Even with higher frequency transfers in faster USB protocols (the high-speed USB standard operated on 0.125 ms microframes) there was no mechanism for synchronizing USB transfers with events coming from the peripheral devices such as the camera. The round-trip time for the controller to recognize a synchronization signal from a peripheral, notify the instrument computer via USB, wait for the computer to process the signal and respond to the controller with the appropriate action to take was too long for accurate synchronization. We addressed this issue by selecting a 16-bit PIC® microcontroller with 256 kB program memory and 96 kB RAM in our design, allowing us to load complex sequences of experimental parameters in the controller memory. In this way peripheral updates and simple processing tasks were done without the need for communication between the controller and the instrument computer, circumventing the synchronization limitations of the USB protocol.

The controller firmware was written to prioritize minimum latency for the most critical tasks such as shuttering. We built our hardware controller around a microcontroller to take advantage of its low cost, field reprogrammability, native interrupts, and chip-level integration of relevant functions, such as those for serial communications, counters, and parallel ports. One limitation of the 16-bit microcontroller used in our design was the relatively slow processor speed and low computational throughput. To address this, we offloaded calculations for acquisition sequences to the instrument computer. Prior to data acquisition the experimental parameters were uploaded to the controller, which ran as a finite-state machine during the experiment. The controller stepped through the experimental sequence using the microcontroller’s native interrupts triggered by the camera exposure, device triggers or internal timers (Fig. 1c). Furthermore, we prioritized the allocation of parallel ports and internal serial communication hardware to minimize processor overhead wherever possible.

We found that for a typical experimental step, including waveform amplitude change, excitation shuttering and modulating 2 lasers, the total update time was on the order of 50 μs. For reference, the vertical shift time of our electron-multiplying CCD camera (EMCCD; Andor iXon 888 Ultra) was approximately 600 μs and the readout time of our scientific CMOS (sCMOS; Andor Zyla 4.2) was approximately 3.9 ms (Supplementary Fig. 2). Thus, the controller could synchronize several peripheral devices far faster than the dead time between exposures on a state-of-the-art microscope and was capable of handling complex, experimental sequences without limiting speed.

Azimuthal beam scanning

Speckles and fringes caused by imperfections in the optical train, such as dust particles or internal reflections, are inherent to laser illumination8. The uneven excitation profile caused by these coherence artifacts is particularly noticeable in TIRF excitation and other widefield imaging techniques. While a variety of technologies have been developed to reduce or eliminate these artifacts9–11, azimuthal beam scanning, or “circle scanning,” has become an attractive solution to reduce the effects of interference fringes and speckle artifacts in fluorescence microscopy12–14. Therefore, we incorporated several laser-scanning routines into our controller and demonstrated how a super-resolution azimuthal beam scanning microscope could be built around our open-source control platform (Fig. 2a).

Figure 2.

Circle scanning microscope design with hardware control. (a) Schematic illustration of the key components of the circle scanning microscope. DC1-DC3: Laser combining dichroic mirrors. AOTF: Acousto-optic tunable filter. Polarizer: m = 1 vortex half-wave retarder. DC4: Quad bandpass dichroic. Additional components omitted for clarity. (b) Conceptual diagram of the circle scanning optics. fsl: Scan lens focal length. fbfp: Objective lens rear focal length. fs: Objective lens front focal length. (c) Scan mirror command signals and laser pattern on the objective rear focal plane. Static beam: the laser parked at a single location on the objective back focal plane by applying a constant voltage to the galvanometer-mounted mirrors. Discrete scan: the mirrors drive the beam through 32 points approximating a circle in the objective back focal plane. Circle scan: the mirrors are driven with sinusoidal voltages scanning the beam in a continuous circle.

In our microscope, the controller formed a central hub, synchronizing the cooperative actions of the laser-scanning mirrors, acousto-optic tunable filter (AOTF), sCMOS camera and other devices (mechanical shutter, EMCCD etc., Fig. 2a). The scanning mirrors’ angular deflections were controlled by the dual waveform outputs of our controller. The scanning mirrors’ apparent center of rotation was positioned in the objective’s conjugate image plane so that the excitation beam location remained static in the sample plane regardless of the azimuthal or axial angle (Fig. 2b). This configuration of the optical system produced a homogeneous excitation field by scanning many azimuthal angles within a single camera frame, with the effective profile being the average across the azimuthal angles in each exposure. The sCMOS fire-all and EMCCD exposure outputs were both connected to one of the controller’s digital I/O ports, which was configured as an external interrupt for acquisition synchronization. Using the cameras’ exposure signal allowed precise shuttering by the AOTF, only exciting the sample during the sensor active time. Additionally, peripheral updates, such as changing the scan radius or excitation wavelength, were begun immediately at the end of an exposure, ensuring the system had enough settling time before the beginning of the next exposure without adding additional delays between frames (Supplementary Fig. 2, Supplementary Note 2).

In an azimuthal scanning microscope, the excitation laser must be steered about the optical axis such that its position describes a circle in the objective lens’ back focal plane. This can be accomplished by a variety of beam steering methods including crossed acousto-optic deflectors15, deformable micromirror devices7, and motorized mirrors13,16. We noted that circular scans could be approximated with a discrete set of points (discrete scan, Fig. 2c and Supplementary Fig. 3, Supplementary Note 2) lying along a circle. However, for mechanical beam steering mirrors with finite maximum accelerations, we found that our galvanometer-driven mirrors were unable to follow the command signal perfectly. When we used a 32-point circle approximation, the mirror momentum resulted in oscillations about each point, decreasing the precision with which the axial incidence angle at the sample could be controlled (Supplementary Fig. 3).

As an alternative strategy, we used the integrated waveform generators of our control platform to drive the mirrors with a pair of sine waves with a π/2 phase offset (circle scan, Fig. 2c and Supplementary Fig. 3). With this approach the mirrors remained settled on the circle and in constant motion, which eliminated the noise associated with starting and stopping at each point in a discreet scan (Supplementary Fig. 3).

Utilizing the integrated waveform generators in circle scanning mode, we investigated the improvements that could be gained through circle scanning in SAIM experiments to minimize laser-induced interference artifacts. SAIM is a surface-generated interference localization microscopy technique that measures the pixelwise average axial position of fluorescent probes with better than 10 nm precision. SAIM samples were prepared on a reflective silicon wafer with a defined layer of thermal oxide to act as a transparent spacer (Fig. 3a). When illuminated with a coherent light source, the direct and reflected excitation created an axial interference pattern with a characteristic spatial frequency that depended on the axial angle of incidence17. A series of images at known axial incidence angles were acquired and fit pixelwise to an optical model that predicted the observed fluorescence intensity fluctuations as a function of axial position (height) above the SiO2/water interface (Fig. 3b). Previous SAIM implementations utilized a static beam excitation scheme5, the simplest method to implement on a commercial microscope.

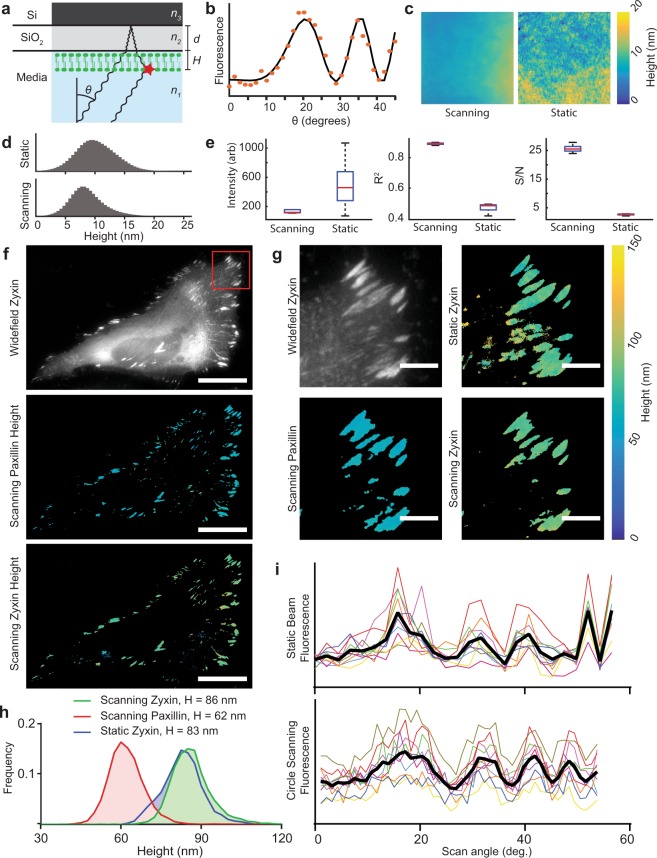

Figure 3.

Azimuthal beam scanning reduces incidence angle dependent laser artifacts in scanning angle interference microscopy (SAIM) imaging. (a) Schematic illustration of SAIM. Samples were prepared on reflective silicon substrates with a defined oxide layer of thickness d and excited with a laser at incidence angle θ to generate an axial interference pattern with known spatial frequency. The measured fluorescence intensity at distance from the substrate H varies with θ for a supported lipid bilayer (SLB) labeled with DiI. (b) Example single-pixel raw data (orange dots) and best-fit curve. (c) Reconstructed SLB height maps with circle scanning or static beam excitation schemes (full image 147.9 × 147.9 μm). (d) Pooled DiI height distributions in SLBs acquired with static beam and circle scanning excitation schemes (n = 5 regions, 4.2 × 106 pixels per region). (e) Quantification of the average fit parameters in circle scanning and static beam SAIM imaging of the 5 lipid bilayer regions in d. Red lines indicate mean, boxes the central quartiles, whiskers min and max. (f) Widefield and circle scanning SAIM height reconstructions of a live HeLa cell expressing the focal adhesion components mEmerald-Zyxin and mCherry-Paxillin, scale bars 25 μm. (g) Enlarged view of the region boxed in f. The colored images represent pixel-by-pixel SAIM height reconstructions of mEmerald-Zyxin or mCherry-Paxillin as noted, scale bars 5 μm. A static beam reconstruction of zyxin height is included for comparison. (h) Distribution of pixelwise height fits from adhesion complexes in g. Heights are the average of all non-zero fits. (i) Plots of fluorescence intensity as a function of scan angle for the static and circle scanning Zyxin images in g. Colored lines indicate the same 10 representative pixels from each image, black lines the average of all 10 pixels.

We hypothesized that circle scanning SAIM would remove the pixelwise, frame-to-frame variations in the excitation intensity arising from speckle and fringe artifacts in a static beam system that degrade the accuracy of axial position reconstructions. To illustrate the advantages of circle scanning over static beam excitation in SAIM experiments, we used our microscope to image supported lipid bilayers (SLBs) labeled with DiI using either a static beam or circle scanning excitation scheme. The bilayer topography from static beam reconstructions showed local topographical variations of several nanometers that were not present in circle scanning reconstructions (Fig. 3c). When multiple, independent regions of the samples were imaged, we found that the same topographical patterns emerged, indicating that the excitation artifacts caused erroneous height estimations in the analysis (Supplementary Fig. 4). Figure 3d shows the height distributions from the combined results of 5 bilayer regions with average heights of 10.2 ± 3.46 nm and 8.72 ± 3.10 nm for the static beam and circle scanning experiments, respectively. To verify that the samples were equivalent in terms of labeling density and bilayer continuity we examined the fluorescence intensity maps (Supplementary Fig. 4). Based on a visual inspection, the height reconstructions in both cases were independent of variations in label density or bilayer defects. The average of 5 height reconstructions showed the topographical variations seen in the static beam experiments were consistent across multiple regions unlike the circle scanning experiments, indicating that they were systematic and did not reflect the sample topography (Supplementary Fig. 4). This led us to conclude that the topography observed in the static beam experiments was a consequence of laser excitation artifacts that are mitigated by circle scanning. The improvement in the quality of the raw data was reflected in the more consistent average intensity across image sets and higher average R2 value from the reconstructions (Fig. 3e). Finally, circle scanning resulted in a greater than 5-fold increase in the average signal to noise ratio (S/N) across the experiments, a direct consequence of eliminating the incidence angle dependent laser artifacts.

When imaging biological samples, such as live cells, high background fluorescence, local variations of the sample’s optical properties caused by intracellular structures and dynamic processes conspire to disrupt the ideal axial interference pattern. While these properties cannot be eliminated, as they are intrinsic to the sample, optimizing instrument performance could possibly mitigate their effects. To demonstrate the advantages of circle scanning in a biologically relevant context, we imaged live HeLa cells expressing the focal adhesion proteins mEmerald-zyxin and mCherry-paxillin (Fig. 3f). We noted that paxillin and zyxin could serve as axial measuring sticks as their height distributions are well known from several sources5,18,19. The widefield image in Fig. 3f highlights the background fluorescence in a typical live-cell sample. Because SAIM illuminated throughout the sample volume, the fluorescence from diffuse pools of free probes within the sample contributed to the observed intensity at each incidence angle. For bright, discrete structures, such as focal adhesions on the cell periphery, the intensity fluctuations of the background were typically much smaller than the signal magnitude and robust localization was possible. With dim structures or in regions with no structure, fluctuations in the background, such as those arising from laser excitation artifacts, could be erroneously identified as real structures in the reconstruction. In the magnified view of the cell’s leading edge (Fig. 3g), the widefield image showed several adhesion complexes on the periphery of the cell and a typical inhomogeneous background away from the cell’s edge. The laser artifacts in the static beam case resulted in the artifactual appearance of structures that were not visible in the widefield image. The circle scanning reconstructions were free of these errors and better matched the focal adhesions from the widefield image. Both circle scanning and static beam reconstructions of zyxin showed similar distributions in height of 86.4 ± 8.17 nm and 83.1 ± 7.69 nm, respectively, as expected (Fig. 3h). Inspecting the pixelwise raw data demonstrated the improved S/N and reduction in outlier data points at high incidence angles where laser illumination artifacts are particularly problematic (Fig. 3i).

Accuracy of peripheral device synchronization in hardware versus software control

Another potential source of error in SAIM and other techniques that make frame-to-frame intensity comparisons challenging is variation in the excitation dose. Excitation shuttering minimizes photobleaching and phototoxicity in living samples, reducing the total light dose to the minimum required for the experiment. However, the excitation shutter must be accurately synchronized with the camera exposure. Variations in shutter/camera synchronization between frames are reflected in the observed fluorescence intensity, leading to localization artifacts. This effect is particularly problematic when using sCMOS cameras with rolling shutters where the beginning of exposure and readout are done by pixel rows20. Shuttering partway through the exposure/readout cycle results in the over exposure of some rows, while exposure throughout the exposure/readout cycle can lead to spatial distortion of fast-moving objects. sCMOS cameras can be run in a simulated global shutter mode wherein the excitation exposure is begun once all pixel rows are exposing and ended when the first row begins to readout (i.e. “fire-all” mode).

We considered that the roundtrip communication travel time from camera to computer to AOTF and the variable latency inherent in software control schemes could limit the precision of shutter synchronization. We collected 500 frames of a homogeneous fluorescent monolayer with either software control or hardware control to illustrate the effects of synchronization errors with a sCMOS camera (Fig. 4a). From the pixel column kymograph, with software control the upper pixel rows were relatively underexposed compared to the center pixel row. Under hardware control this effect was reduced, and a more even fluorescence intensity pattern was observed. Timing variability had the greatest effect on the last rows to be exposed or readout (those furthest from the center of the sensor in a center-out readout configuration, Fig. 4b). The center row (dashed lines in Fig. 4b) showed little difference between software and hardware control schemes while the top row (solid lines) had a lower average value and greatly increased noise under software control when compared to hardware control (Fig. 4a,b). To quantify the synchronization errors, we placed a fast photomultiplier tube directly in the excitation path of our microscope (Fig. 4c, Supplementary Fig. 5). With software synchronization, we measured an average −12.34 ± 0.9943 ms and 2.714 ± 1.236 ms delay between the beginning or end of exposure, respectively, and shutter operation. On the other hand, when the camera’s fire-all signal was used to trigger the excitation shutter through our hardware controller, we achieved a 3 order of magnitude improvement in synchronization latency (12.73 ± 12.80 μs and 10.59 ± 1.552 μs start and end of exposure, respectively, Fig. 4c, Supplementary Fig. 5). Although the hardware controller does introduce an additional delay in signal transmission, we found this to be on the order of 25% of the total time difference between the trigger signal and the effective shuttering time (Supplementary Note 3). With careful calibration the average delay under software control could be reduced. However, the variability due to communications and software overhead were intrinsic and could not be easily accounted for. It is important to note that a suboptimal or incorrect implementation of hardware control could introduce the same or even greater errors in synchronization than software control. Similarly, highly optimized software implementations could approach the minimum latency introduced by the device communication protocol but cannot overcome this fundamental limit.

Figure 4.

Hardware based synchronization minimizes latency-induced artifacts in SAIM. (a) Top: fluorescence kymographs of the top half of the center pixel column of an sCMOS camera imaging a homogeneous dye monolayer. Bottom: Full sensor single frame images from the same acquisition sequence. (b) Plot of average row intensities (2048 pixels) from the series in a. Dashed lines represent the center pixel row, solid lines the top pixel row. (c) Distribution of excitation shutter delays at the start of frame (SOF) and end of frame (EOF) relative to the camera’s exposure signal over 50 frames. Lines represent the average of 50 frames, boxes the center quartiles and whiskers the minimum and maximum values. (d–f) Lipid bilayer height, fluorescence intensity and signal-to-noise ratio in SAIM reconstructions from image sets acquired with software or hardware synchronization. (g) Standard deviation from SAIM reconstructions of lipid bilayer height. Each of 6 equivalent regions of the sample were imaged first with hardware synchronization and then immediately after with software synchronization. The horizontal bands in the software image follow the center-out readout pattern of the sCMOS camera. (h–j) Lipid bilayer height distributions in SAIM reconstructions vs. fluorescence intensity, coefficient of determination and signal-to-noise ratio with the excitation under software (top row) or hardware (bottom row) control. Images in a and d–g reflect the full sensor area of 2048 × 2048 pixels, corresponding to a 147.9 × 147.9 μm field of view. Data in a and b were normalized to the average intensity in the first exposure for comparison.

Considering these results, we hypothesized that the increased precision of hardware synchronization would result in a higher S/N and better localization precision in SAIM. To make a direct comparison between software and hardware synchronization, we imaged SLBs first with software synchronization and then, without moving the sample, with hardware synchronization at the same excitation intensity and over the same range of angles, such that an identical observation of the sample was made. The height reconstructions of SLBs (Fig. 4d) acquired with software synchronization demonstrated a strong dependence of fitted height on distance from the center of the sensor which was not reflected in the hardware synchronization acquisitions. The fitted fluorescence intensity profile (Fig. 4e) was closely matched with both synchronization schemes. The hardware acquisition sequences had a lower average intensity, which was expected given the shorter overall excitation time (Fig. 4c). The S/N from the reconstructions with software synchronization showed a high S/N band in the region around the center of the detector that decays toward the top and bottom of the image compared to the hardware synchronization acquisition where the S/N closely followed the excitation intensity profile, as expected (Fig. 4f). Using the standard deviation in height over several independent SLB regions as a metric for localization precision, we found that the sensor readout pattern was reflected in the localization precision with software synchronization and not with hardware synchronization (Fig. 4g). Owing to the large sensor size in the sCMOS camera, the excitation profile was not perfectly flat across the field of view, which could make interpretation of the reconstructions difficult. To understand the effects of synchronization errors, we examined the height distributions as a function of intensity, coefficient of determination (R2, a measure of goodness of fit) and S/N (Fig. 4h–j). In the case of software synchronization, the reconstructed heights showed a strong dependence on the fluorescence intensity with higher intensity corresponding to larger height values. A similar trend was observed for both R2 and S/N. With hardware synchronization, the reported height was independent of intensity and similar distributions were seen for both R2 and S/N.

MA-TIRF

To demonstrate some of the versatility of our hardware control platform, we performed MA-TIRF imaging experiments utilizing the same hardware and sequencing functions used in SAIM experiments. Like SAIM, MA-TIRF achieves axial localization of fluorescent probes by reconstruction from multiple independent images. When the excitation incidence angle at the coverslip to sample interface is beyond the critical angle, θc, total internal reflection occurs, and the excitation potential is confined to a thin evanescent field within a few hundred nanometers of the interface. The exponential decay of the evanescent field depends on the angle of incidence and is characterized by the penetration depth, which decreases with angle of incidence21. In an MA-TIRF experiment the excitation incidence is swept through a range of angles greater than the critical angle and individual images are acquired at each step (Fig. 5a).

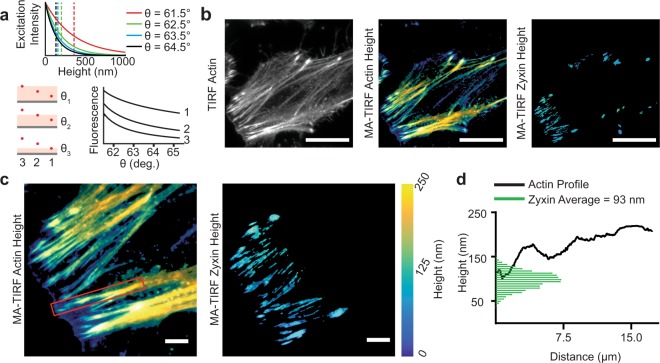

Figure 5.

Implementation of circle scanning multiangle TIRF (MA-TIRF). (a) Concept of MA-TIRF. Top: The evanescent excitation field intensity (solid lines) as a function of height above the glass-liquid interface and penetration depth (dashed lines) for various angles of incidence. Lower left: Schematic representation of fluorescent probes (red dots) at 300, 200 and 100 nm (3, 2 and 1, respectively) from the glass-liquid interface. Lower right: Theoretical intensity profiles as a function of angle of incidence for the 3 fluorescent probes. (b) Widefield and height reconstructions of phalloidin-AF560 and mEmerald-Zyxin in a fixed HeLa cell. Scale bars 25 μm. (c) Enlarged view of the leading edge of the cell in b. Scale bars 5 μm. (d) Reconstructed actin height profile (solid black line, red box in c) and zyxin height distribution (green bars, non-zero fits from c).

As a test subject, we imaged actin stress fibers, which are involved in adhesion-based motility and are required for maintenance of focal adhesion complexes. The ubiquity of actin fibers throughout cells complicates methods, such as SAIM, that lack optical sectioning. The thin evanescent excitation field in MA-TIRF rendered the ventral stress fibers clearly visible. We also imaged zyxin, which is known to interact with actin in the upper layers of focal adhesions where the mechanical coupling between the cytoskeleton and adhesion machinery occurs. Simultaneous imaging of actin and zyxin highlighted MA-TIRF’s ability to resolve stress fiber profiles (Fig. 5b,c). Compared to SAIM, MA-TIRF returned a similar average zyxin height in adhesion complexes (93.3 nm) but a broader standard deviation (19.9 nm vs. 8.17 nm for circle scanning SAIM). Using MA-TIRF we were able to visualize the height profile along actin stress fibers (Fig. 5d) originating in adhesion zones as determined by zyxin enrichment. We found that in the zyxin-rich regions the stress fiber height matches the average zyxin height but increased with distance from the zyxin end. The ability to perform both SAIM and MA-TIRF experiments on the same microscope platform demonstrated the ability of our hardware controller to adapt existing infrastructure for new applications.

Discussion

High-speed synchronization of multiple devices has become a necessity in modern microscope systems and can be a complicated barrier for researchers who need to build or customize microscopes for specific imaging tasks. The two common approaches to peripheral integration are through software packages or dedicated hardware controllers. Software is typically the simplest path to system integration, but generally limits the available hardware pool to a list of supported devices. In some cases, such as with the open-source Micro-Manager1 microscopy software, unsupported devices can be added by writing new device adapters. Doing so requires familiarity with the specific programming languages used, the device and software APIs and in some cases the operating system architecture. Furthermore, inefficient or improper implementations can limit performance and affect data quality. As we have shown in Fig. 4, even in the case of a stable and widely used software package, limitations imposed by the communication protocol’s speed and intricacies can limit the synchronization precision of multiple devices. With the rapid progress of image processing technology, online and real-time analysis are being applied in many fields, such as imaging flow cytometery22, presenting even greater challenges in device synchronization. While microcontrollers may lack the computational resources for high-throughput data processing, they can provide precise device synchronization by limiting the need for high-latency, non-deterministic communication between multiple peripherals. For example, a single USB transaction between the host computer and a microcontroller can be reliably used to trigger multiple peripheral updates within a few microseconds of the controller receiving the transmission.

Hardware device synchronization can overcome many of the limitations imposed by software. We demonstrated this for the example case of camera and AOTF timing wherein the excitation is shuttered in concert with the camera’s actual exposure. A naïve solution would be to avoid shuttering the excitation at the cost of increased excitation dose, photobleaching and phototoxicity. The greater the interframe interval the more pronounced each of these effects becomes, making it infeasible for time-course experiments with significant delays between frames. Under software control, and with shuttering, we observed timing differences of 10 or more milliseconds between the exposure and shuttering. If synchronization differences are consistent they can be accounted for in software by adding a delay between the slow and fast devices. In the case we have presented, latency between camera and AOTF events had variabilities on the order of milliseconds, and a simple delay would not suffice. Under hardware control we were able to achieve precise and consistent synchronization regardless of the framerate

We have used lipid bilayers to demonstrate that small variances in the timing between excitation and exposure can decrease the accuracy and precision of SAIM experiments. SLBs formed by fusion of small unilamellar vesicles make an ideal test sample with known properties (e.g. thickness, index of refraction, structure), are easily labeled with a variety of dies and have extremely low background fluorescence. The SLB experiments illustrate the importance of frame-to-frame consistency in maximizing the precision of SAIM localizations. Inconsistent illumination caused by interference at the sample (laser fringes and speckles) has the same effect as inconsistent timing on SAIM experiments. Although the causes are different, the result is that on a pixelwise basis, the instrument is causing additional intensity variations that obscure the predicted intensity variations in the raw data. In this study we chose not to use a set of background or correction images in any experiment. We found that the combined circle scanning and hardware synchronization approach eliminated the need for preprocessing the data with correction images.

Resolution of fast biological processes places additional constraints on the imaging system, and the effective time resolution is limited to the elapsed time to acquire the complete image series for a range of scan angles. Ultimately, this limit is set by the camera framerate plus any delay associated with changing the excitation angle or wavelength. In a typical circle scanning SAIM experiment we acquire between 16 and 32 images over the incidence angle series. The exact number of images is determined empirically at the time of the experiment based on balancing acquisition time with data quality. Our hardware controller allows acquisition at the camera framerate, that is there is no additional delay added and all devices are updated during readout. Under these conditions the effective time resolution is the number of frames per acquisition multiplied by the exposure period. For instruments with slower peripheral devices (i.e. motorized TIRF prisms), or under software control, an adequate delay between frames must be added while the system reaches the new stable point for the next camera frame. Motion of the sample between the first and last frames of a SAIM or MA-TIRF sequence will introduce additional errors in the reconstruction because a pixel in the first frame is not mapped to the same sample location in subsequent frames in the angle series. It follows that the time between the first exposure and last exposure in a set of incidence angles should be less than the time it takes the sample to move by one pixel. This condition can be difficult to satisfy but hardware control maximizes the achievable acquisition rate, providing a more accurate multi-frame snapshot in SAIM and MA-TIRF experiments (Supplementary Fig. 6, Supplementary Videos 2,3).

The concept of a general-purpose scientific instrument controller is not unique to this project and several examples exist, some as commercial products. One open source instrumentation project like what we have reported is the Pulse Pal23 signal generator (Sanworks, $745 at the time of writing), which features 4 independent +/− 10 V waveform outputs and 2 trigger inputs. While Pulse Pal could be used for driving the scanning system, additional hardware would be required to integrate multichannel excitation control and shuttering. Another open-source example is the FlyPi, a multifunctional microscopy project (project costs range between ~$100 and ~$250 depending on the number of additional modules the user incorporates)24. The FlyPi utilizes a Raspberry Pi computer for data acquisition and experiment control and an external Arduino microcontroller to control the microscope peripherals such as stage, temperature, illumination and others. While similar in approach, the FlyPi is a complete microscope solution designed for accessibility whereas our instrument controller is designed to offer high-precision, high speed peripheral integration in advanced system development. Outside of dedicated microscopy systems, there are many examples, such as the general purpose instrument STEMlab™ hardware platform (Red Pitaya, ~$290 at the time of writing) which features 2 radio frequency (RF) inputs, 2 RF outputs, 16 digital I/O signals and 4 additional low speed analog outputs as well as significant computational resources due to its integrated FPGA and dual core CPU. The hardware is limited, though, to +/−1 V for the RF outputs and 0–1.8 V for the analog outputs, requiring additional, custom amplification stages to interface with many devices. Furthermore, while the software is open source, the hardware is not. Many non-open source solutions are available such as the Triggerscope 16 (Advanced Research Consulting, $1499 at the time of writing), which features 16 0-10 V analog outputs, 16 TTL outputs and sequencing capabilities of up to 60 steps. Other popular options are the DAQ solutions offered by National Instruments, which range in cost from ~$200 to more than $6000, with cards providing 4 analog outputs, 48 digital I/Os and USB connectivity beginning at ~$1100. While these boards arguably offer greater flexibility and standardization than our controller, they do not have the same level of microscopy-specific optimization or integration of design, requiring additional effort in development. For comparison, a researcher wishing to build a copy of our controller as reported here could do so for ~$525 (we have included a bill of materials in the project repository including part numbers and suppliers) and 8 hours of labor with access to basic surface mount soldering and test equipment found in most electronics labs.

There are many forms of hardware control that have been used in microscopy from plugin extension PCBs for universal microcontroller platforms to commercial field programmable gate array boards to custom embedded electronics platforms covering a broad range of cost, complexity and flexibility. We have sought to create a broadly useful platform that is economical and easy to integrate. The open-source model used by Micro-Manager has greatly contributed to the success of the project and we seek to emulate their example by making all design and source code freely available25,26. We have made all levels of the project from hardware to driver API and user interface software open-source. This will allow us to capitalize on the skills and feedback of users and create a community of contributors, distributing the cost of further development based on the framework presented here. While we have demonstrated the utility of our controller in a single, circle scanning instrument, the control platform we have developed has a broad range of applications including laser-scanning confocal or two photon microscopies, imaging cytometry, microfluidic systems and spectroscopy to name a few. Through continuing, community-driven development of the project we envision our hardware as a valuable resource for general scientific device control.

Methods

Preparation of supported lipid bilayers

A chloroform solution of 1-palmitoyl-2-oleoyl-glycero-3-phosphocholine with 0.1 mole percent 1,2-dipalmitoyl-sn-glycero-3-phosphoethanolamine-N-(cap biotinyl) (Avanti Polar Lipids) was dried first under a stream of nitrogen and then under vacuum to remove the solvent. The resulting film was dissolved to 1 mg/mL in PBS pH 7.4 at 37 °C with extensive vortexing and freeze-thawed 7x by cycling between liquid nitrogen and 37 °C baths. The lipid suspension was then extruded 13x through double stacked 100 nm polycarbonate membranes (Whatman) to yield small unilamellar vesicles (SUVs). SUVs prepared in this way were stored at 4 °C and used within 2 weeks. Silicon wafers with ~1900 nm thermal oxide (Addison Engineering) were diced into 1 cm2 chips, and the oxide layer thickness for each chip was measured with a FilMetrics F50-EXR. The chips were then cleaned in piranha solution (30 volume % hydrogen peroxide in sulfuric acid), rinsed extensively and stored in deionized water until use. SLBs were formed by incubating the cleaned silicon chips in the SUV solution for 1 hour, and then rinsed with PBS, incubated with 1.75 μM DiIC18 for 30 minutes, and rinsed again with PBS. The samples were then inverted into a 35 mm glass-bottom imaging dish (MatTek) containing PBS and immediately imaged.

Preparation of HeLa cell samples

The plasmids mEmerald-Zyxin-6 (Addgene plasmid # 54319; http://n2t.net/addgene:54319; RRID: Addgene_54319) and mCherry-Paxillin-22 (Addgene plasmid # 55114; http://n2t.net/addgene:55114; RRID: Addgene_55114) were a gift from Michael Davidson. HeLa cells (ATCC CCL-2) were cultured in Dulbecco’s Modified Eagle Medium (ThermoFisher) supplemented with 10% fetal bovine serum (ThermoFisher) and 100 U/mL penicillin-streptomycin (ThermoFisher) at 37 °C and 5% CO2.

For SAIM experiments, silicon chips were prepared as for SLBs except the chips were sonicated for 15 mins in acetone, 15 minutes in 1 M NaOH and UV sterilized in place of the piranha cleaning. Cells were seeded onto the chips at 2 × 104 cm−2 in full culture medium and incubated overnight. Cells were transfected with 1:1 mEmerald-Zyxin-6 to mCherry-Paxillin-22 using Lipofectamine 3000 (ThermoFisher) per the manufacturer’s protocol and allowed to grow for 24 hours. Sample were then rinsed 3x in PBS, inverted into a 35 mm glass-bottom imaging dish containing HEPES-Tyrode’s (119 mM NaCl, 5 mM KCl, 25 mM HEPES buffer, 2 mM CaCl2, 2 mM MgCl2, 6 g/L glucose, pH 7.4) and imaged at 37 °C.

For MA-TIRF experiments, cells were seeded at 2 × 104 cm−2 directly into 35 mm glass-bottom imaging dishes and incubated overnight to allow attachment. Samples were then transfected with mEmerald-Zyxin-6 as before and incubated an additional 24 hours. Following incubation, the samples were rinsed with PBS and fixed in 4% formaldehyde in PBS for 10 minutes, rinsed 3 additional times and permeabilized with 0.1% v/v Triton X-100 in PBS for 10 minutes. The solution was then aspirated, and the sample was incubated for 1 hour at room temperature with Alexa Fluor™ 568 Phalloidin (ThermoFisher) diluted to 1 U/mL in PBS with 1% bovine serum albumin (ThermoFisher). Samples were then rinsed 3 times in PBS and imaged immediately.

Controller design

Full details on the controller design are included in Supplementary Note 1, 3. Briefly, the analog subcircuits were designed and simulated using CircuitLab27. The controller PCB layout was made using EAGLE (Autodesk). Printed circuit boards were ordered unpopulated from OSH Park. The components were purchased from Digi-Key and then hand assembled. Firmware was written in the C programming language, compiled and debugged with C-Aware IDE (Custom Computer Service Inc.). Host-side driver and GUI software was written in C++ with Visual Studio 2017 (Microsoft) using the Qt28 framework, HIDAPI29, boost C++ 30, and OpenCV libraries31.

Microscope design

The circle scanning microscope was built on a Nikon Ti-E inverted fluorescence microscope. The 405 nm (Power Technology Inc.), 488 nm (Coherent), and 560 nm (MPB Communications Inc.) lasers were combined with the 642 nm (MPB Communications Inc.) to make a colinear beam by a series of dichroic mirrors (Chroma). The combined output beam was attenuated and shuttered by an AOTF (AA Opto-Electronic) before being directed onto the galvanometer scanning mirrors (Cambridge Technology). The image of the laser on the scanning mirrors was magnified and relayed to the sample by 2 4 f lens systems, the beam expanding telescope and the scan lens / objective lens combination. The beam expander is formed by f = 30 mm and f = 300 mm achromatic lenses with an m = 1 zero-order vortex half-wave plate positioned between them and positioned 2 f from the 300 mm achromatic scan lens (Thorlabs). SAIM experiments were performed with a 60x N.A. 1.27 water immersion objective, and MA-TIRF with a 60x N.A. 1.49 oil immersion objective lens (Nikon). Fluorescence emission was collected with a quad band filter cube and single band filters (TRF89901-EMv2, Chroma) mounted in a motorized filter wheel (Sutter). For both SAIM and MA-TIRF experiments, images were acquired with a Zyla 4.2 sCMOS (Andor) camera using the microscope’s 1.5x magnifier for a total magnification of 90×. In all experiments the open source software Micro-Manager1 was used for camera and filter wheel control and image acquistion. For timing data with software control the AOTF driver hardware was disconnected from the hardware controller and connected to the instrument computer via USB. The AOTF was added to the micromanager configuration utilizing the AA AOTF device adapter written by Erwin Peterman and supplied with the software. A single AA AOTF device was added to the hardware configuration to avoid potential delays associated with using multiple channels. Details on excitation incidence angle calibration are given in Supplementary Note 4.

Timing data acquisition

Timing data acquired with the EMCCD camera was done with frame transfer and electron multiplying gain enabled. The sCMOS camera auxiliary output was configured for “fire all” mode. In both cases the camera fire output was connected to the hardware controller through a BNC “T” connector. The other side of the “T” was connected to a digital oscilloscope (MSO2014B, Tektronix) and single shot acquisitions were acquired triggered by the rising edge of the camera fire signal. A fast photomultiplier tube (Hammamatsu) was placed directly in the excitation path immediately after the AOTF and the amplifier output connected to the oscilloscope. Automatic shuttering was enabled in either Micro-Manager (software control) or via the hardware controller (hardware control) and single exposures were initiated with the “Snap” function.

SAIM

For static beam SAIM experiments, a series of 31 images were acquired at evenly spaced incidence angles from −45.8 to 43.9 degrees. For circle scanning experiments, 32 images were acquired at evenly spaced intervals from 0 to 30 degrees in the case of live cells or 0 to 45 degrees in the case of SLBs. Angle ranges are given with respect to the wafer normal in the imaging media (Fig. 3a). The raw images were fit pixelwise without further preprocessing using a custom analysis program written by the authors (Supplementary Note 5) to obtain the reconstructed height topography of the samples. Images were postprocessed with Fiji32 and statistical analysis was performed in MATLAB (MathWorks) and Prism (GraphPad).

MA-TIRF

For MA-TIRF experiments, a series of 20 images were acquired from 61.5 degrees to 65.3 at intervals of 0.2 degrees with respect to the excitation angle of incidence in the coverslip at the glass to water interface. The galvanometer command amplitude corresponding to the critical angle was experimentally confirmed using the fluorescence intensity of a dye monolayer16. MA-TIRF reconstructions were done with MATLAB using the ADMM33. The resulting height images were postprocessed in Fiji and statistical analysis was performed in Prism.

Supplementary information

Acknowledgements

We thank Susan Daniel and Rohit Singh (Cornell University) for discussions and assistance preparing SLB samples. This research was funded by the National Institute of General Medical Sciences Ruth L. Kirschstein National Research Service Award 2T32GM008267 (M.J.C.), National Science Foundation Graduate Research Fellowship DGE-1650441 (M.J.C.), National Cancer Institute U54 CS210184 (M.J.P. and W.R.Z.) and R33-CA193043 (M.J.P. and W.R.Z.), National Science Foundation Award 1752226 (M.J.P.) and the Kavli Institute at Cornell for Nanoscale Science (M.J.C., W.R.Z. and M.J.P.). This work was performed in part at the Cornell NanoScale Science & Technology Facility (CNF), a member of the National Nanotechnology Coordinated Infrastructure (NNCI), which is supported by the National Science Foundation (Grant NNCI-1542081).

Author Contributions

M.J.C., W.R.Z. and M.J.P. conceived the project and devised the studies. M.J.C. and W.R.Z. conceived and developed the controller electronics and firmware. M.J.C. built the controller and microscope, wrote the driver, interface and analysis software. M.J.C. and S.P. performed the experiments and collected instrument performance data. All authors contributed to the writing of the manuscript.

Code Availability

All source code written by the authors including controller firmware, driver, graphical interface and SAIM analysis code as described here is available in the project repository at https://github.com/mjc449/SAIMscannerV3.git (commit 80819d26b2d570e266a7220d5379a96686e4b835). Additional source code and third-party libraries are available from the links referenced in the controller design section. Analysis code for the MA-TRIF experiments is available at https://github.com/zcshinee/Pol-TIRF.git (commit 5279d16bb0b9bcbd1fe98836f08d99dfbb50d628).

Data Availability

Source data including raw and processed image files are available upon request from the corresponding authors.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Marshall J. Colville, Email: mjc449@cornell.edu

Matthew J. Paszek, Email: mjp31@cornell.edu

Supplementary information

Supplementary information accompanies this paper at 10.1038/s41598-019-48455-z.

References

- 1.Edelstein, A., Amodaj, N., Hoover, K., Vale, R. & Stuurman, N. Computer Control of Microscopes Using µManager. in Current Protocols In Molecular Biology (eds Ausubel, F. M. et al.) (John Wiley & Sons, Inc., 2010). [DOI] [PMC free article] [PubMed]

- 2.OpenLabTools. Available at, http://openlabtools.eng.cam.ac.uk/Instruments/Microscope/. (Accessed: 18th December 2018).

- 3.Planchon TA, et al. Rapid three-dimensional isotropic imaging of living cells using Bessel beam plane illumination. Nature Methods. 2011;8:417–423. doi: 10.1038/nmeth.1586. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.York AG, et al. Instant super-resolution imaging in live cells and embryos via analog image processing. Nature Methods. 2013;10:1122–1126. doi: 10.1038/nmeth.2687. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Paszek MJ, et al. Scanning angle interference microscopy reveals cell dynamics at the nanoscale. Nature Methods. 2012;9:825–827. doi: 10.1038/nmeth.2077. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Carbone CB, Vale RD, Stuurman N. An acquisition and analysis pipeline for scanning angle interference microscopy. Nature Methods. 2016;13:897–898. doi: 10.1038/nmeth.4030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Zong W, et al. Shadowless-illuminated variable-angle TIRF (siva-TIRF) microscopy for the observation of spatial-temporal dynamics in live cells. Biomedical Optics Express. 2014;5:1530. doi: 10.1364/BOE.5.001530. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Goodman, J. W. Speckle Phenomena in Optics: Theory and Applications. (Roberts and Company Publishers, 2007).

- 9.Ma H, Fu R, Xu J, Liu Y. A simple and cost-effective setup for super-resolution localization microscopy. Scientific Reports. 2017;7:1542. doi: 10.1038/s41598-017-01606-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Saloma C, Kawata S, Minami S. Large-amplitude current modulation of semiconductor laser and multichannel optical imaging. Optics Communications. 1992;93:4–10. doi: 10.1016/0030-4018(92)90119-C. [DOI] [Google Scholar]

- 11.Dingel B, Kawata S. Speckle-free image in a laser-diode microscope by using the optical feedback effect. Opt. Lett., OL. 1993;18:549–551. doi: 10.1364/OL.18.000549. [DOI] [PubMed] [Google Scholar]

- 12.Mattheyses AL, Shaw K, Axelrod D. Effective elimination of laser interference fringing in fluorescence microscopy by spinning azimuthal incidence angle. Microscopy Research and Technique. 2006;69:642–647. doi: 10.1002/jemt.20334. [DOI] [PubMed] [Google Scholar]

- 13.Johnson DS, Toledo-Crow R, Mattheyses AL, Simon SM. Polarization-Controlled TIRFM with Focal Drift and Spatial Field Intensity Correction. Biophysical Journal. 2014;106:1008–1019. doi: 10.1016/j.bpj.2013.12.043. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Fiolka R, Belyaev Y, Ewers H, Stemmer A. Even illumination in total internal reflection fluorescence microscopy using laser light. Microscopy Research and Technique. 2008;71:45–50. doi: 10.1002/jemt.20527. [DOI] [PubMed] [Google Scholar]

- 15.van’t Hoff M, de Sars V, Oheim M. A programmable light engine for quantitative single molecule TIRF and HILO imaging. Optics Express. 2008;16:18495. doi: 10.1364/OE.16.018495. [DOI] [PubMed] [Google Scholar]

- 16.Fu Y, et al. Axial superresolution via multiangle TIRF microscopy with sequential imaging and photobleaching. Proceedings of the National Academy of Sciences. 2016;113:4368–4373. doi: 10.1073/pnas.1516715113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Lambacher A, Fromherz P. Fluorescence interference-contrast microscopy on oxidized silicon using a monomolecular dye layer. Applied Physics A: Materials Science & Processing. 1996;63:207–217. doi: 10.1007/BF01567871. [DOI] [Google Scholar]

- 18.Kanchanawong P, et al. Nanoscale architecture of integrin-based cell adhesions. Nature. 2010;468:580–584. doi: 10.1038/nature09621. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Liu J, et al. Talin determines the nanoscale architecture of focal adhesions. Proceedings of the National Academy of Sciences. 2015;112:E4864–E4873. doi: 10.1073/pnas.1512025112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Liang C, Chang L, Chen HH. Analysis and Compensation of Rolling Shutter Effect. IEEE Transactions on Image Processing. 2008;17:1323–1330. doi: 10.1109/TIP.2008.925384. [DOI] [PubMed] [Google Scholar]

- 21.Martin-Fernandez ML, Tynan CJ, Webb SED. A ‘pocket guide’ to total internal reflection fluorescence: A ‘Pocket Guide’ to Total Internal Reflection Fluorescence. Journal of Microscopy. 2013;252:16–22. doi: 10.1111/jmi.12070. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Heo, Y. J., Lee, D., Kang, J., Lee, K. & Chung, W. K. Real-time Image Processing for Microscopy-based Label-free Imaging Flow Cytometry in a Microfluidic Chip. Scientific Reports7 (2017). [DOI] [PMC free article] [PubMed]

- 23.Sanders, J. I. & Kepecs, A. A low-cost programmable pulse generator for physiology and behavior. Front. Neuroeng. 7 (2014). [DOI] [PMC free article] [PubMed]

- 24.Maia Chagas A, Prieto-Godino LL, Arrenberg AB, Baden T. The €100 lab: A 3D-printable open-source platform for fluorescence microscopy, optogenetics, and accurate temperature control during behaviour of zebrafish, Drosophila, and Caenorhabditis elegans. PLOS Biology. 2017;15:e2002702. doi: 10.1371/journal.pbio.2002702. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.mjc449. SAIMscannerV3. (2018). Available at, https://github.com/mjc449/SAIMscannerV3. (Accessed: 14th December 2018).

- 26.mjc449/SSv3_1 · CircuitHub. Available at, https://circuithub.com/projects/mjc449/SSv3_1/revisions/16275. (Accessed: 14th December 2018).

- 27.Online circuit simulator & schematic editor. CircuitLab Available at, https://www.circuitlab.com/. (Accessed: 16th December 2018).

- 28.Qt Wiki. Available at, https://wiki.qt.io/Main. (Accessed: 16th December 2018).

- 29.Ott, A. A Simple library for communicating with USB and Bluetooth HID devices on Linux, Mac, and Windows.: signal11/hidapi. (2018).

- 30.Boost C++ Libraries. Available at, https://www.boost.org/. (Accessed: 16th December 2018).

- 31.OpenCV library. Available at, https://opencv.org/. (Accessed: 16th December 2018).

- 32.Schindelin J, et al. Fiji: an open-source platform for biological-image analysis. Nature Methods. 2012;9:676–682. doi: 10.1038/nmeth.2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Zheng C, et al. Three-dimensional super-resolved live cell imaging through polarized multi-angle TIRF. Optics Letters. 2018;43:1423. doi: 10.1364/OL.43.001423. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

All source code written by the authors including controller firmware, driver, graphical interface and SAIM analysis code as described here is available in the project repository at https://github.com/mjc449/SAIMscannerV3.git (commit 80819d26b2d570e266a7220d5379a96686e4b835). Additional source code and third-party libraries are available from the links referenced in the controller design section. Analysis code for the MA-TRIF experiments is available at https://github.com/zcshinee/Pol-TIRF.git (commit 5279d16bb0b9bcbd1fe98836f08d99dfbb50d628).

Source data including raw and processed image files are available upon request from the corresponding authors.