Abstract

Learning does not depend only on rationality, because real-life learning cannot be isolated from emotion or social factors. It is therefore intriguing to determine how emotion changes learning and to identify the neural substrates that underlie this interaction. Here, we show that the task-independent presentation of an emotional face before a reward-predicting cue increases the speed of cue–reward association learning in human subjects compared with trials in which a neutral face is presented. This effect was attributable to an increase in the learning rate, which determines how strongly reward prediction errors update predicted values. In parallel with these behavioral findings, functional magnetic resonance imaging demonstrated that presentation of an emotional face enhanced the reward prediction error (RPE) signal in the ventral striatum. We also found a functional link between this enhanced RPE signal and increased activity in the amygdala following presentation of an emotional face. Thus, this study revealed an acceleration of cue–reward association learning by emotion and underscored a role of striatum–amygdala interactions in the modulation of reward prediction errors by emotion.

Introduction

Students often notice that their learning is affected by tension created by teachers or by the classroom atmosphere. Learning is not always rational and is often affected by emotions and other social factors. Such observations pose an intriguing, but mostly unstudied, question about the mechanisms by which emotions and social factors affect learning.

Probabilistic reward learning has been studied intensively in humans and animals as a basic model of learning (Dayan, 2008) and can be understood within the framework of reinforcement learning models (Sutton and Barto, 1998), in which an agent aims to maximize its “reward.” The agent learns the “value,” which is the expected reward in a given context, by minimizing the “reward prediction error” (RPE), the difference between the actual reward and the expected reward value. The firing rates of midbrain dopamine neurons correlate with reward values and RPE signals (Schultz et al., 1997), and several human studies have implicated the ventral and dorsal striatum, both of which receive massive dopaminergic projections, in representing these signals (McClure et al., 2003; O'Doherty et al., 2003; Haruno and Kawato, 2006).

In the present study, we conducted behavioral and functional magnetic resonance imaging (fMRI) experiments using a probabilistic reward learning task and examined whether an emotional stimulus changes a previously established learning process. More specifically, we tested whether presenting a task-independent emotional face before a reward-predicting cue would change learning and the brain's representations of reward value and RPE.

Materials and Methods

The participants in this study were undergraduate students who reported no history of psychiatric or neurological disorders. This study was approved by the ethics committees of the National Institute of Information and Communications Technology and Tamagawa University. All participants provided written informed consent before the experiments.

Experimental design

Behavioral task.

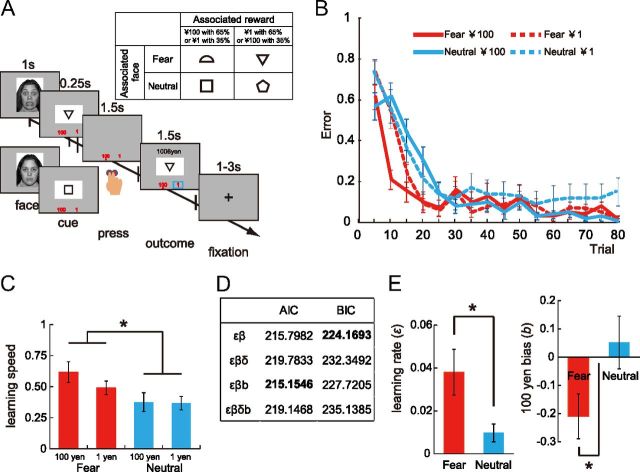

Twenty participants (10 male, 10 female; mean age 20.0 ± 0.7 years) learned probabilistic associations between visual cues and rewards by trial and error (Fig. 1A); each cue was followed by its associated reward amount with 65% probability and by the other amount with 35% probability. A task-independent fearful or neutral face [two expressions × 20 stimuli taken from NimStim (http://www.macbrain.org/)] was presented on a screen (1000 ms) in a pseudo-random order before the visual cue (250 ms). The participant then pressed a ¥100 (large) or ¥1 (small) button within 1750 ms, depending on which reward they expected to be more likely to follow the cue. The order of cue presentation and the positions of the two buttons (left or right) were randomized across trials. A red dot was displayed under the chosen option, and the reward outcome was shown within a blue square. Although the task required participants to predict the reward magnitude, the actual reward was unaffected by their choice, making the task a form of classical conditioning. Before the experiments, we confirmed that the participants fully understood the task. They were instructed that the face presentation signaled the imminent appearance of a cue and that there was no fixed relationship between the face and the reward. No participant reported noticing any association between particular facial expressions and the cues. The assignment of the four visual cues to facial expressions and rewards was counterbalanced across participants (Fig. 1A, inset). The total number of trials was 320 (80 × 4 conditions).
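The trial structure described above can be sketched as a schedule generator. This is a minimal illustration, not the authors' stimulus code: the condition labels follow the F100/F1/N100/N1 naming used for the exponential fits, rewards are assumed to be drawn independently on each trial with probability 0.65 for the cue's associated amount (the text does not say whether exact 65/35 proportions were enforced), and a plain shuffle stands in for the unspecified pseudo-random ordering.

```python
import random

# Hypothetical condition table: each cue is tied to one facial expression
# (F = fearful, N = neutral) and one associated reward amount in yen.
CONDITIONS = {"F100": ("fearful", 100), "F1": ("fearful", 1),
              "N100": ("neutral", 100), "N1": ("neutral", 1)}

def make_schedule(trials_per_condition=80, p_associated=0.65, seed=0):
    """Return a shuffled list of trial dicts for the behavioral task.

    Each cue is followed by its associated reward with probability
    p_associated and by the other amount otherwise (assumed independent
    draws per trial). Timing (face 1000 ms, cue 250 ms, response window
    1750 ms) is not modeled here.
    """
    rng = random.Random(seed)
    trials = []
    for cue, (face, associated) in CONDITIONS.items():
        other = 1 if associated == 100 else 100
        for _ in range(trials_per_condition):
            reward = associated if rng.random() < p_associated else other
            trials.append({"cue": cue, "face": face, "reward": reward})
    rng.shuffle(trials)  # stands in for the unspecified pseudo-random order
    return trials

schedule = make_schedule()
print(len(schedule))  # 80 trials x 4 conditions
```

Because the face is determined by the cue, the face-cue pairing stays fixed within a participant, while counterbalancing across participants would correspond to permuting which physical cue image receives each label.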

Figure 1.

Experimental design and behavioral results. A, Inset, An example combination of facial expression, cue, and reward. Each of the four cues was associated probabilistically (65%) with one of the two reward amounts and with one of the two facial expressions. A, Participants pressed a button depending on which reward they expected to follow the presented cue. B, Learning curves. Each data point represents the average of five trials, and error bars indicate the SEM. C, Learning speed estimated by exponential fitting (mean ± SEM). D, Model comparison by AIC and BIC. ε, β, δ, and b represent the learning rate, exploration, reward sensitivity, and ¥100 bias, respectively. E, Model-based estimates of the learning rate and the ¥100 bias (mean ± SEM; εβb model). *p < 0.05, **p < 0.01, and ***p < 0.005 throughout the figures.

fMRI task.

Before the fMRI experiment, 58 experimentally naive participants completed the learning task outside the scanner. In this phase, a mask image was presented instead of the facial expressions, to avoid habituation to the faces in the subsequent scanning session. Learning was regarded as successful if the participant chose the correct option in 5 consecutive trials for all cues; participants who did not reach this criterion within 200 trials were not invited to the scanning session. After a 5 min break, 34 participants (17 male, 17 female; mean age 19.8 ± 1.2 years; all right-handed) underwent scanning with the presentation of faces. Thus, in the fMRI experiment, participants confirmed their already learned cue–reward associations. There were two main reasons for this design. First, cue–reward learning elicits learning-related attention, which gradually decreases during learning (Maunsell, 2004). To dissociate the RPE from this decrease in attention, we scanned participants after they had completed learning, when a constant level of attention during the outcome could be assumed. Second, in a task design that allows brain activity during reward feedback to be discriminated from that during cue presentation, some participants would need more than 70 min to complete learning. Our probabilistic task design allows the RPE signal to be detected in the brain even after learning is complete (Fiorillo et al., 2003; McClure et al., 2003; Haruno and Kawato, 2006). The intervals between the visual cue and the button press, between the button press and the outcome, and between trials were each randomized between 4.5 and 8.5 s. The total number of trials was 80 (20 × 4 conditions).
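The pre-scan learning criterion can be expressed as a small check. This is a sketch under stated assumptions: choices arrive as (cue, correct) pairs in presentation order, "correct" means choosing the cue's more likely reward amount, and the criterion is read as every cue simultaneously being on a run of at least five consecutive correct choices. The function name and data layout are hypothetical.

```python
def trial_reaching_criterion(trials, cues, run_length=5, max_trials=200):
    """Return the 1-based trial index at which every cue is on a run of
    `run_length` consecutive correct choices, or None if this does not
    happen within `max_trials` trials.

    trials: iterable of (cue, correct) tuples in presentation order.
    cues: all cue labels that must meet the criterion.
    """
    streak = {cue: 0 for cue in cues}  # current run of correct choices per cue
    for i, (cue, correct) in enumerate(trials, start=1):
        if i > max_trials:
            break  # participant would not be invited to the scanning session
        streak[cue] = streak[cue] + 1 if correct else 0
        if all(s >= run_length for s in streak.values()):
            return i
    return None
```

An alternative reading, in which each cue only needs to have achieved such a run at some point, would track a set of cues that have reached the criterion instead of requiring all current runs to be long enough.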

Postexperiment ratings of facial expressions.

After completing the experiment, all participants rated their subjective emotional responses to each facial expression for valence and arousal (Bradley and Lang, 1994) on a 5-point scale. Fearful faces were rated as more negative in valence and higher in arousal than neutral faces (valence: fear = −1.19, neutral = −0.10, paired t(19) = −8.79, p < 0.001; arousal: fear = 0.96, neutral = −0.36, paired t(19) = 8.71, p < 0.001). The same pattern was seen in the fMRI experiment (valence: fear = −1.18, neutral = −0.12, paired t(33) = −11.08, p < 0.001; arousal: fear = 1.16, neutral = −0.54, paired t(33) = 15.70, p < 0.001). These data indicate that the fearful faces had comparable emotional effects in the behavioral and fMRI experiments.
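The paired t statistics above follow the standard formula t = mean(d) / (sd(d) / √n) with n − 1 degrees of freedom, where d is each participant's fearful-minus-neutral rating difference. The sketch below uses only the standard library; the ratings are made-up example values, not the study's data.

```python
import math
import statistics

def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    d = [a - b for a, b in zip(x, y)]          # within-participant differences
    n = len(d)
    se = statistics.stdev(d) / math.sqrt(n)    # sample SD uses an n - 1 denominator
    return statistics.mean(d) / se, n - 1

# Made-up example valence ratings for five participants on the 5-point scale.
fear = [-1, -2, -1, -1, -2]
neutral = [0, 0, -1, 0, 0]
t, df = paired_t(fear, neutral)
```

For these example values the differences have mean −1.2 and variance 0.7, giving t ≈ −3.21 with 4 degrees of freedom.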

Short-term memory task.

Each block of the task consisted of two phases. In the encoding phase, participants memorized six visual stimuli, each presented for 250 ms; three were preceded by a fearful face and three by a neutral face, each face presented for 1 s. In the retrieval phase, after a 35–38 s delay, participants were shown 12 stimuli and reported which of them had appeared in the encoding phase (6 had). The entire task comprised 24 blocks.

Exponential curve fitting

We fitted each participant's learning data in the four conditions (F100, F1, N100, and N1; fearful or neutral face × ¥100 or ¥1 cue) separately with an exponential function, c + la × exp(−ls × trial), where ls represents learning speed (0–∞), la the learning amplitude (0–1), and c the convergent value (0–1). Goodness of fit did not differ significantly among the four conditions (p > 0.5).
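A minimal NumPy sketch of this per-condition fit follows. Because c + la × exp(−ls × trial) is linear in c and la once ls is fixed, the sketch scans a grid of ls values, solves for c and la by linear least squares, and keeps the best fit that respects the stated bounds. The grid range and the grid-scan strategy are assumptions; the text does not state which fitting routine was used.

```python
import numpy as np

def fit_exponential(trial, y, ls_grid=None):
    """Fit y ~ c + la * exp(-ls * trial) with 0 <= la <= 1, 0 <= c <= 1, ls > 0.

    Returns (ls, la, c, sse) for the best ls on the grid, or None if no grid
    value yields in-bounds linear parameters.
    """
    trial = np.asarray(trial, dtype=float)
    y = np.asarray(y, dtype=float)
    if ls_grid is None:
        ls_grid = np.linspace(0.001, 1.0, 1000)  # assumed search range for ls
    best = None
    for ls in ls_grid:
        # Design matrix for the two parameters that are linear given ls: [c, la].
        X = np.column_stack([np.ones_like(trial), np.exp(-ls * trial)])
        (c, la), *_ = np.linalg.lstsq(X, y, rcond=None)
        if not (0.0 <= la <= 1.0 and 0.0 <= c <= 1.0):
            continue  # discard solutions outside the stated bounds
        sse = float(np.sum((y - X @ np.array([c, la])) ** 2))
        if best is None or sse < best[3]:
            best = (float(ls), float(la), float(c), sse)
    return best
```

As written, with la ≥ 0 the curve decays from c + la toward c as trials accumulate, so the per-condition ls values can be compared directly across conditions.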

Reinforcement learning model-based analysis

To investigate the learning process in each participant, we adopted a reinforcement learning model (Sutton and Barto, 1998; Daw, 2011). This model assumes that each participant assigns a value $V_t(s_t)$ to the cue $s_t$ presented on trial t. Learning proceeds by increasing the accuracy of this value representation: the value is updated in proportion to the RPE, $R_t - V_t(s_t)$, that is, the difference between the actual (subjective) reward and the expected reward on trial t, where $\varepsilon_f$ is the learning rate (Eq. 1):

$$V_{t+1}(s_t) = V_t(s_t) + \varepsilon_f \left[ R_t - V_t(s_t) \right] \qquad (1)$$
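Equation 1 is a delta-rule update, V ← V + ε(R − V). The sketch below applies it to a single cue with a constant subjective reward to show how the learning rate sets the speed of learning: starting from V = 0, after n identical outcomes the value equals R·(1 − (1 − ε)^n). The constant-reward sequence is an illustrative assumption; the two learning rates are the group means reported for the fearful and neutral conditions in the Results.

```python
def update_value(v, reward, epsilon):
    """One application of Eq. 1: V <- V + epsilon * (R - V)."""
    rpe = reward - v  # reward prediction error
    return v + epsilon * rpe

def value_trajectory(rewards, epsilon, v0=0.0):
    """Values of a single cue before and after each outcome in `rewards`."""
    values = [v0]
    for r in rewards:
        values.append(update_value(values[-1], r, epsilon))
    return values

# Constant subjective reward of 1 for 50 trials (illustrative only).
fast = value_trajectory([1.0] * 50, epsilon=0.038)  # fearful-condition mean
slow = value_trajectory([1.0] * 50, epsilon=0.010)  # neutral-condition mean
```

After 50 trials the value is about 0.86 with ε = 0.038 but only about 0.39 with ε = 0.010, which illustrates how a higher learning rate accelerates cue–reward learning.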

Our learning model contains four free parameters: the learning rate ($\varepsilon_f$), reward sensitivity ($\delta_f$), a value-independent bias toward choosing ¥100 ($b_f(a_t)$), and an exploration parameter ($\beta_f$), where the subscript f denotes the facial-expression condition (fearful or neutral). The learning rate scales the effect of the RPE on the value update, and reward sensitivity transforms the actual reward in yen ($r_t$) into a subjective reward ($R_t$) for each participant (Eq. 2):

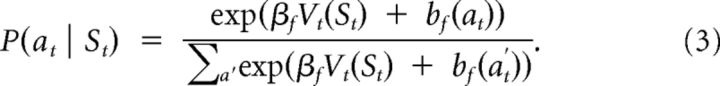

In the behavioral choice rule (Eq. 3), the bias term represents a value-independent inclination toward choosing ¥100, and the exploration parameter controls how deterministically values are translated into advantageous choices.


We estimated these four free parameters (denoted by the vector θ) for each participant from their trial-by-trial choices by maximum likelihood estimation, that is, by minimizing the negative log-likelihood of the participant's behavior D (Eqs. 4 and 5). The nonlinear minimization in Equation 4 was performed with the MATLAB function “fmincon”:

$$\hat{\theta} = \arg\min_{\theta} \left[ -\log P(D \mid \theta) \right] \qquad (4)$$

$$-\log P(D \mid \theta) = -\sum_{t} \log P(a_t \mid s_t, \theta) \qquad (5)$$

The probability of choosing an action, at (¥100 or ¥1), given a visual cue, st, was computed based on Equation 3.
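The estimation procedure can be sketched as follows. The exact form of Eq. 3 is not reproduced in this section, so the choice rule below is a hypothetical stand-in: rewards are coded 1 for ¥100 and 0 for ¥1, and P(¥100) is logistic in β(V − 0.5) plus the bias b. The initial value of 0.5 is also an assumption, and a coarse grid search replaces MATLAB's fmincon. Only the Eq. 1 update and the Eq. 5 likelihood structure come from the text.

```python
import itertools
import math

def p_choose_large(v, beta, bias):
    """HYPOTHETICAL stand-in for Eq. 3: probability of pressing the 100-yen
    button, logistic in the learned value around 0.5, plus a value-independent bias."""
    z = beta * (v - 0.5) + bias
    return 1.0 / (1.0 + math.exp(-z))

def negative_log_likelihood(params, cues, choices, rewards):
    """Eq. 5-style NLL of a choice sequence under the Eq. 1 delta-rule model.

    choices: 1 if the 100-yen button was pressed, else 0.
    rewards: 1 if 100 yen was delivered, else 0 (assumed coding).
    """
    epsilon, beta, bias = params
    values = {}
    nll = 0.0
    for cue, choice, reward in zip(cues, choices, rewards):
        v = values.get(cue, 0.5)                  # assumed initial value
        p = p_choose_large(v, beta, bias)
        p = min(max(p, 1e-12), 1.0 - 1e-12)       # guard against log(0)
        nll -= math.log(p if choice == 1 else 1.0 - p)
        values[cue] = v + epsilon * (reward - v)  # Eq. 1 update after the outcome
    return nll

def fit_grid(cues, choices, rewards, eps_grid, beta_grid, bias_grid):
    """Grid-search stand-in for the constrained minimization in Eq. 4."""
    return min(itertools.product(eps_grid, beta_grid, bias_grid),
               key=lambda prm: negative_log_likelihood(prm, cues, choices, rewards))
```

Because each cue is tied to a single facial expression and values are learned per cue, the likelihood factorizes across conditions, so condition-specific parameters (εF, βF, bF and εN, βN, bN) can be obtained by fitting each condition's trials separately.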

We evaluated the contribution of each parameter using the Akaike information criterion (AIC) and the Bayesian information criterion (BIC), comparing four models: the learning rate and exploration parameter only (εβ), εβ with reward sensitivity (εβδ), εβ with the ¥100 bias (εβb), and εβ with both (εβδb). For statistical tests of ε and β, we used the nonparametric Wilcoxon signed-rank test because the Kolmogorov–Smirnov test rejected normality.
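The two criteria differ only in how they penalize the number of free parameters k given the minimized negative log-likelihood (NLL): AIC = 2·NLL + 2k and BIC = 2·NLL + k·ln(n), where n is the number of observed choices. Because ln(n) exceeds 2 for n > 7, BIC penalizes extra parameters more heavily, which is how the two criteria can favor different models. The sketch below uses made-up NLL values and hypothetical model names; how k is counted across conditions is an assumption.

```python
import math

def aic(nll, k):
    """Akaike information criterion from a minimized negative log-likelihood."""
    return 2.0 * nll + 2.0 * k

def bic(nll, k, n):
    """Bayesian information criterion; n is the number of observed choices."""
    return 2.0 * nll + k * math.log(n)

def select(models, n):
    """models: dict mapping name -> (summed NLL, number of free parameters).
    Returns the names of the models preferred by AIC and by BIC."""
    best_aic = min(models, key=lambda m: aic(*models[m]))
    best_bic = min(models, key=lambda m: bic(models[m][0], models[m][1], n))
    return best_aic, best_bic

# Made-up example: one extra parameter improves the NLL by 2.0 over 320 choices.
print(select({"eb": (100.0, 2), "ebb": (98.0, 3)}, n=320))
```

In this example the extra parameter lowers AIC (204 to 202) but raises BIC (about 211.5 to 213.3), so AIC prefers the larger model and BIC the smaller one.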

fMRI data acquisition and analysis

The fMRI scanning was conducted at Tamagawa University using a Siemens Trio TIM 3T scanner with a 12-channel head coil. T2*-weighted echoplanar images (EPIs) were acquired with blood oxygen level-dependent (BOLD) contrast (42 slices; TR 2500 ms; TE 25 ms; flip angle 90°; FOV 192 mm; and resolution 3.0 × 3.0 × 3.0 mm). For each participant, functional data were acquired in a single scanning session of 730 volumes. The first two volumes of each session were discarded to allow the magnetization to reach a steady state. Anatomical images were acquired after the EPI session using a T2-weighted protocol (42 slices; TR 4200 ms; TE 84 ms; flip angle 145°; FOV 199.4 mm; and resolution 0.6 × 0.4 × 3.0 mm horizontal slices).

Preprocessing and data analysis were performed using SPM8 (Friston et al., 1995). The images were corrected for slice timing, realigned, normalized to MNI space, resliced into 2 × 2 × 2 mm voxels, and spatially smoothed with an 8 mm Gaussian kernel. The data for each participant were analyzed with a standard event-related design.
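Assuming, as is SPM's convention, that the 8 mm kernel size refers to the full width at half maximum (FWHM), the corresponding Gaussian standard deviation follows from σ = FWHM / (2√(2 ln 2)). The short calculation below converts it to millimeters and to voxels at the 2 mm resliced resolution.

```python
import math

def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

sigma_mm = fwhm_to_sigma(8.0)  # about 3.40 mm
sigma_vox = sigma_mm / 2.0     # about 1.70 voxels at 2 mm isotropic resolution
```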

Together with six head-movement regressors, we used six regressors of interest: constants for cue, button-press, and outcome timing, and parametric modulators of value, RPE, and reward size. When analyzing face timing, we used a face-timing constant instead of the cue-timing constant. In addition, in a split analysis, we separated the RPE at outcome timing into two components, “reward size” and “minus value,” to determine whether a signal correlated with the RPE was attributable to reward size or negative expected value alone, or only to their conjunction (Behrens et al., 2008; Li and Daw, 2011). Because we assumed that BOLD signal changes reflect energy consumption, the absolute value of the reward prediction error was used for the RPE regressor (Haruno and Kawato, 2006). These regressors were convolved with the canonical hemodynamic response function and its time and dispersion derivatives, and the second-level group analysis was conducted using a full-factorial model. The fearful-versus-neutral subtraction contrast was masked with the voxels that correlated positively in both conditions (fearful and neutral; p < 0.005 uncorrected, with an extent threshold of 15 voxels). The anatomical masks and regions of interest (ROIs) were constructed from IBASPM (http://www.thomaskoenig.ch/Lester/ibaspm.htm) and the Automated Anatomical Labeling map templates, and statistics within the ROIs were obtained using MarsBaR (http://marsbar.sourceforge.net/).
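The construction of a parametric regressor such as |RPE| at outcome timing can be sketched as follows. This is an illustrative NumPy approximation, not SPM8's own code: the HRF is a double-gamma function with the shape of SPM's canonical HRF (gamma densities with shapes 6 and 16 and an undershoot ratio of 1/6), the modulator is mean-centered as SPM does for parametric modulators, and the temporal and dispersion derivatives used in the analysis are omitted. The trial values and onsets in the usage lines are hypothetical.

```python
import math
import numpy as np

def double_gamma_hrf(dt=0.1, length=32.0):
    """Double-gamma HRF (peak near 5 s, undershoot near 15 s), sampled every
    dt seconds and scaled to sum to 1."""
    t = np.arange(0.0, length, dt)
    def gamma_pdf(x, shape):
        # Gamma density with unit scale: x^(shape-1) e^(-x) / Gamma(shape).
        return x ** (shape - 1.0) * np.exp(-x) / math.gamma(shape)
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def parametric_regressor(onsets, modulator, n_scans, tr=2.5, dt=0.1):
    """Convolve mean-centered trial-wise modulator values placed at event
    onsets (seconds) with the HRF and sample the result at each scan."""
    mod = np.asarray(modulator, dtype=float)
    mod = mod - mod.mean()                       # mean-center the modulator
    n_fine = int(round(n_scans * tr / dt))
    sticks = np.zeros(n_fine)
    for onset, m in zip(onsets, mod):
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += m
    conv = np.convolve(sticks, double_gamma_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return conv[scan_idx]

# Hypothetical trials: |RPE| = |R - V| at four outcome onsets (seconds).
rewards = np.array([100.0, 1.0, 100.0, 1.0])
values = np.array([60.0, 40.0, 65.0, 30.0])
abs_rpe = np.abs(rewards - values)
reg = parametric_regressor([10.0, 30.0, 50.0, 70.0], abs_rpe, n_scans=40)
```

The same function would serve for the value and reward size modulators, or for the “reward size” and “minus value” components of the split analysis, by passing the corresponding trial-wise values.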

Psychophysiologic interaction analysis

We conducted a psychophysiologic interaction (PPI) analysis to test whether amygdala activity during face presentation modulates RPE-correlated activity in the ventral striatum (vStr). The psychological factor was defined as the product of the emotion contrast (fearful versus neutral) and the RPE size. To link striatal activity at outcome timing with amygdala activity at face timing, we extracted BOLD signals from the dorsal part of the amygdala (a 4 mm sphere centered on the peak of the face-timing activity at x = −26, y = −2, z = −12) 5 s after every face presentation, to capture the peak of the BOLD response, and convolved them with the hemodynamic response function to yield a parametric modulator for outcome timing. Finally, we examined whether the interaction between the psychological factor and this parametric modulator explained activity in the vStr.
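The trial-wise interaction term described above can be sketched as follows. The assumptions are: the emotion contrast is coded +1 for fearful and −1 for neutral trials, the amygdala signal 5 s after each face onset is obtained by linear interpolation of the ROI time series, and the resulting values would then be placed at outcome onsets and convolved with the HRF like any other parametric modulator. The function name and argument layout are hypothetical.

```python
import numpy as np

def ppi_outcome_modulator(bold, tr, face_onsets, emotion, abs_rpe, delay=5.0):
    """Trial-wise PPI values to be used as a parametric modulator at outcome onsets.

    bold: amygdala ROI time series, one value per scan acquired every tr seconds.
    face_onsets: face-presentation times in seconds.
    emotion: +1 for fearful and -1 for neutral trials (assumed contrast coding).
    abs_rpe: |RPE| per trial from the fitted model.
    """
    scan_times = np.arange(len(bold)) * tr
    # Physiological term: amygdala signal `delay` s after each face onset.
    phys = np.interp(np.asarray(face_onsets, dtype=float) + delay, scan_times, bold)
    # Psychological term: emotion contrast multiplied by RPE size.
    psych = np.asarray(emotion, dtype=float) * np.asarray(abs_rpe, dtype=float)
    return psych * phys
```

Swapping the sign of the emotion coding gives the inverse contrast ([Amygdala] × [Neutral (RPE) > Fear (RPE)]) reported in the Results.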

Results

Behavioral experiment

Figure 1B shows the learning curve for each cue averaged over all participants. Learning was faster for the cues associated with fearful faces than for those associated with neutral faces. A within-participant three-way ANOVA (trial × reward size × emotion) revealed significant main effects of trial (F(75,1425) = 52.937, p < 0.001) and emotion (F(1,19) = 4.618, p = 0.045). The three-way interaction of trial × emotion × reward (F(75,1425) = 2.331, p < 0.001) and the two-way interactions of trial × emotion (F(75,1425) = 3.674, p < 0.001) and reward × trial (F(75,1425) = 1.430, p = 0.011) were also significant. We also fitted each participant's learning curves with an exponential function. A within-participant ANOVA revealed a significant effect of facial expression on learning speed (F(1,19) = 6.597, p = 0.019); there were no other significant effects (Fig. 1C).

This behavioral effect of fearful faces suggests that emotion acts on learning. However, an alternative explanation is that emotion enhances short-term memory independently of learning (Phelps et al., 2006; Schaefer et al., 2006). To address this possibility, we conducted a short-term memory task (see Materials and Methods) and found no significant difference in the error rate between the fearful (9.6%) and neutral (10.3%) conditions (t(19) = −1.415, p = 0.173).

To examine the computational process behind the acceleration of learning by emotion, we analyzed learning with a reinforcement learning model. In model selection, AIC favored the εβb model and BIC favored the εβ model (Fig. 1D). Analysis with the εβb model showed that the learning rate was higher in the fearful than in the neutral condition [εF = 0.038 ± 0.011 (mean ± SEM), εN = 0.010 ± 0.004; Wilcoxon signed-rank test: Z = −2.053, p = 0.040] (Fig. 1E, left). In addition, the bias for ¥100 was below zero in the fearful condition (bF = −0.211 ± 0.080, t(19) = −2.628, p = 0.017) but did not differ from zero in the neutral condition (Fig. 1E, right). The εβ model yielded consistent results: ε was significantly higher in the fearful condition (εF = 0.034 ± 0.010, εN = 0.006 ± 0.003, Z = −2.062, p = 0.039), whereas β did not differ significantly between conditions (βF = 9.917 ± 2.246, βN = 14.556 ± 1.920, Z = −1.643, p = 0.100).

These results indicate that the presentation of a fearful face enhances the effects of the RPE by increasing the learning rate. To test this computational hypothesis, we used fMRI to examine whether the emotional stimulus changed the size of the RPE signal in the brain.

fMRI experiment

Learning speeds estimated in the pre-scan learning session did not differ between the pre-fearful cues (which would later be presented with the fearful face) and the pre-neutral cues (which would later be presented with the neutral face). This indicates that the four visual cues were learned equally well before the scanning session. Additionally, we found no significant differences among the four conditions in the error rate or the rate of nonresponses during scanning.

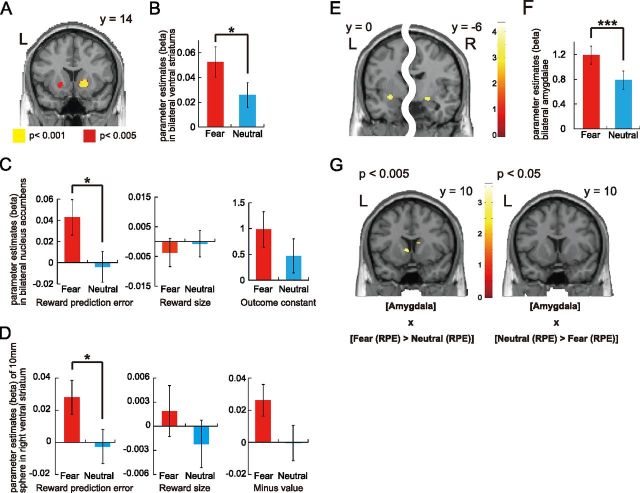

Activity correlated with the RPE

We first searched for brain regions in which activity was correlated with RPEs at the time of the reward outcome (Fig. 1A). Significant activity was found in the right vStr (x = 18, y = 16, z = −4; p < 0.001, uncorrected for multiple comparisons; Fig. 2A) and, at a slightly lower statistical threshold, in the left vStr (x = −20, y = 14, z = −8; p < 0.005). Importantly, activity averaged within 10 mm radius spheres around the bilateral peak voxels showed a higher correlation (β value) with the RPE in the fearful than in the neutral condition (paired t(33) = 2.132, p = 0.041; Fig. 2B). This was also true for an anatomically defined ROI in the bilateral nucleus accumbens (NAcc) (paired t(33) = 2.409, p = 0.022; Fig. 2C, left).
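The sphere-ROI averaging can be illustrated with NumPy. The analysis itself used MarsBaR; the sketch below is only an analog that marks voxels whose centers lie within a given radius of an MNI coordinate, using an image's 4 × 4 voxel-to-millimeter affine, and averages a β image over them. The function names are hypothetical.

```python
import numpy as np

def sphere_mask(shape, affine, center_mm, radius_mm):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm.

    shape: 3-D image shape; affine: 4x4 voxel-to-world (mm) matrix.
    """
    ijk = np.indices(shape).reshape(3, -1)                    # voxel indices
    ijk1 = np.vstack([ijk, np.ones((1, ijk.shape[1]))])       # homogeneous coordinates
    xyz = (np.asarray(affine, dtype=float) @ ijk1)[:3]        # world coordinates (mm)
    center = np.asarray(center_mm, dtype=float)[:, None]
    dist2 = np.sum((xyz - center) ** 2, axis=0)
    return (dist2 <= radius_mm ** 2).reshape(shape)

def roi_mean(beta_img, mask):
    """Average a beta image over the ROI mask, ignoring NaNs outside the brain."""
    return float(np.nanmean(beta_img[mask]))
```

For the bilateral ROI, the masks around the right (18, 16, −4) and left (−20, 14, −8) peaks could be combined with `|` before averaging, and the per-participant fearful and neutral means compared with a paired t test.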

Figure 2.

fMRI results. A, Activity in the right vStr (18, 16, −4) and activity in the left vStr (−20, 14, −8) that were correlated with the RPE (Fearful + Neutral). B, Activity in the vStr 10 mm radius ROI. C, Comparison of the correlations in the anatomically defined bilateral NAcc for the RPE, outcome constant, and reward size. D, Comparison of the correlations in 10 mm sphere ROI for the RPE (= reward size − value), reward size, and minus value revealed by the split analysis. E, Activity in the left (−28, 0, −10) and right (20, −6, −10) amygdala at the time of face presentation (Fearful − Neutral, p < 0.001 uncorrected). F, Activity in the anatomically defined bilateral amygdala ROI. G, Activity in the striatum as determined by PPI analysis (p < 0.005, uncorrected).

A pertinent question, however, is whether the difference between the fearful and neutral conditions in the NAcc is specific to the RPE or, more generally, appears in any activity at the time of the reward outcome. To address this, we compared the fearful and neutral conditions for NAcc activity correlated with the constant and reward size regressors at outcome timing and found no difference (paired t(33) = 1.197, p = 0.24, and paired t(33) = −0.573, p = 0.57, respectively; Fig. 2C, middle and right). Although activity correlated with RPEs was found in several areas (Table 1, A), none of them except the vStr showed differences between the fearful and neutral conditions (threshold: p < 0.05). The results for the constant and reward size regressors at outcome timing are shown in Table 1, B and C.

Table 1.

Summary of the fMRI peak voxels

| Area | L/R | x | y | z | k | t value |
|---|---|---|---|---|---|---|
| A, Prediction error activation at outcome timing | | | | | | |
| Common regions of fearful and neutral conditions | | | | | | |
| Ventral striatum | R | 18 | 16 | −4 | 155 | 4.957 |
| Primary motor area | L | −42 | −10 | 54 | 103 | 4.042 |
| Inferior frontal operculum | L | −44 | 6 | 30 | 33 | 3.734 |
| Primary motor area | R | 46 | −8 | 56 | 32 | 3.675 |
| Cuneus | L | −8 | −88 | 40 | 28 | 3.436 |
| Fear-neutral masked by p < 0.005 common regionsᵃ | | | | | | |
| Neutral-fear masked by p < 0.005 common regionsᵃ | | | | | | |
| B, Reward size activation at outcome timing | | | | | | |
| Common regions of fearful and neutral conditions | | | | | | |
| Calcarine sulcus | L | −14 | −90 | −6 | 483 | 6.615 |
| Lingual gyrus | R | 18 | −88 | −4 | 78 | 4.456 |
| Fear-neutral masked by p < 0.005 common regionsᵃ | | | | | | |
| Neutral-fear masked by p < 0.005 common regionsᵃ | | | | | | |
| C, Constant activation at outcome timing | | | | | | |
| Common regions of fearful and neutral conditions | | | | | | |
| Middle occipital lobe | L | −20 | −92 | 4 | 7502 | 16.573 |
| Inferior occipital lobe | R | 32 | −84 | −6 | 8017 | 15.598 |
| Primary motor area | R | 46 | 8 | 32 | 3953 | 13.329 |
| Insula cortex | L | −28 | 24 | 0 | 962 | 12.263 |
| Primary motor area | L | −44 | 4 | 30 | 1867 | 10.775 |
| Supplementary motor area | R | 8 | 20 | 46 | 2312 | 8.652 |
| Pallidum | L | −10 | 6 | −4 | 126 | 5.377 |
| Pallidum | R | 10 | 6 | −6 | 42 | 4.094 |
| Middle frontal lobe | L | −26 | 54 | 14 | 23 | 3.571 |
| Fear-neutral masked by p < 0.005 common regions | | | | | | |
| Insula cortex | R | 30 | 18 | −16 | 22 | 4.089 |
| Neutral-fear masked by p < 0.005 common regionsᵃ | | | | | | |
| D, Expected value | | | | | | |
| Common regions of fearful and neutral conditions | | | | | | |
| Putamen | L | −30 | 12 | −4 | 59 | 3.905 |
| Anterior cingulate | R | 14 | 48 | 26 | 22 | 3.631 |
| Fear-neutralᵃ | | | | | | |
| Neutral-fearᵃ | | | | | | |
| E, Face timing activation | | | | | | |
| Fear-neutral masked by p < 0.005 common regions | | | | | | |
| Inferior frontal triangularis | R | 56 | 34 | 8 | 85 | 4.325 |
| Amygdala | L | −28 | 0 | −10 | 47 | 3.555 |
| Amygdala | R | 20 | −6 | −10 | 16 | 3.457 |
| Neutral-fear masked by p < 0.005 common regionsᵃ | | | | | | |
| F, PPI analysis | | | | | | |
| Fear-neutral masked by striatum-anatomical ROI | | | | | | |
| Dorsal striatum | R | 10 | 16 | 12 | 58 | 3.590 |
| Ventral striatum | L | −6 | 10 | 2 | 24 | 3.097 |
| Neutral-fear masked by striatum-anatomical ROIᵃ | | | | | | |

The uncorrected threshold was p < 0.001 (A–E) or p < 0.005 (F), and the cluster size threshold was 15 voxels. k represents the cluster size.

ᵃNo suprathreshold clusters.

We also conducted a split analysis of the RPE signal to examine whether either “reward size” (R) or “minus value” (−V) alone was modulated by emotion, or whether this modulation was significant only for the total RPE (Behrens et al., 2008; Li and Daw, 2011; see also Materials and Methods). The contrast Fear(R − V) > Neutral(R − V) revealed a significant difference in the right vStr, overlapping with the region in Figure 2A (peak voxel t(33) = 3.27, p = 0.001; x = 16, y = 18, z = −4), and β values for the conjunction of R and −V averaged within a 10 mm sphere around the peak voxel differed significantly between the fearful and neutral conditions (t(33) = 2.097, p = 0.044; Fig. 2D, left). However, we did not detect significant differences for R (t(33) = 1.116, p = 0.272; Fig. 2D, middle) or −V (t(33) = 1.861, p = 0.072; Fig. 2D, right). These split-analysis results agree with the original RPE-based analysis (Fig. 2C) in indicating that emotion affected the RPE rather than reward size or value. They are also consistent with the model selection results of the computational analysis, in which reward sensitivity did not help explain the behavioral data.

Activity correlated with the expected value

Consistent with previous studies, activity in the left putamen was correlated with the expected value (p < 0.001 uncorrected; Table 1, D). Importantly, this correlation was not different between the fearful and neutral conditions.

Activity during face presentation

We next analyzed brain activity at the time of face presentation. Consistent with previous human imaging studies on the processing of facial expressions (Whalen et al., 2009), the bilateral amygdala showed higher activity in the fearful than in the neutral condition (p < 0.001 uncorrected; Fig. 2E; Table 1, E). This difference was also evident when bilateral amygdala activity was averaged within an anatomical ROI (paired t(33) = 3.64, p < 0.001; Fig. 2F). The same fearful-minus-neutral subtraction also revealed activity in the inferior frontal triangularis (Table 1, E), whereas the opposite subtraction (neutral minus fearful) revealed no activity, even at a lenient threshold (p < 0.01).

Psychophysiologic interactions between amygdala and striatum activity

We have seen that the RPE signal in the vStr was enhanced in the fearful condition and that amygdala activity was also greater in the fearful condition. Because we hypothesized that amygdala activation is functionally linked to RPE signals in the striatum, we conducted a PPI analysis. Figure 2G, left, displays activity in the left vStr (t = 3.02, p = 0.001, x = −6, y = 10, z = 2, cluster size = 24) that is correlated with the interaction between amygdala activity and the psychological factor ([Fear (RPE) > Neutral (RPE)]; see Materials and Methods). This analysis also identified the right dorsal striatum (t = 3.59, p < 0.001, x = 10, y = 16, z = 12, cluster size = 58). Importantly, the inverse contrast ([Amygdala] × [Neutral (RPE) > Fear (RPE)]) did not detect any difference in striatal activity, even at a lenient threshold (p < 0.05) (Fig. 2G, right; Table 1, F).

Similarly, we searched for a functional link with the right inferior frontal triangularis, but found no significant activity, even at a lenient threshold (p < 0.01). These results suggest that amygdala activity contributes importantly to the enhancement of RPE signals in the vStr by emotion.

Discussion

Herein, we report that presentation of a task-independent fearful face increased learning speed during probabilistic reward learning compared with presentation of a neutral face. In parallel, we demonstrated an increased RPE signal in the ventral striatum (mainly the NAcc). We also found, in a trial-by-trial analysis, that amygdala activity during face presentation modulated RPE-correlated activity in the vStr.

Previous studies on decision making have shown that emotional factors affect decision making and human brain activity, especially in the vStr. For example, people tend to be more sensitive to losses than to gains of equivalent magnitude (Kahneman and Tversky, 1979). An fMRI study reported that activity in the vStr reflected individual differences in loss aversion (Tom et al., 2007). It has also been reported that reward expectation in the vStr is modulated by conscious regulation of one's own emotional reaction to a reward (Delgado et al., 2008). Relatedly, Knutson et al. (2008) demonstrated that task-independent pleasant pictures presented before cues increased participants' risky choices and value signals in the vStr. In contrast, this study examined the effects of a task-unrelated emotional face on learning and revealed modulation of RPE signals in the vStr by emotion.

Related to this, one may ask whether the enhanced RPE-related brain activity can be interpreted in terms of pavlovian instrumental transfer (PIT) (Bray et al., 2008; Talmi et al., 2008), which refers to a change in the value of a cue when it follows a positive or negative pavlovian cue. Talmi et al. (2008) reported that a pavlovian-conditioned cue changed the value signal in the NAcc and in the amygdala during subsequent instrumental conditioning. The two phenomena are comparable in that a task-independent cue changes both the reward representations of subsequent cues and brain activity. However, fearful faces are likely to be perceived as negative stimuli and, under PIT, would be expected to slow cue–reward association, which contradicts the results of this study. In addition, we found an increase in the RPE signal rather than in the value signal (reward prediction). Therefore, we think it unlikely that the accelerated learning observed here reflects PIT. Interestingly, our model-based analysis revealed that the participants had a negative bias for ¥100 after presentation of a fearful face, and this tendency could be related to PIT. Similarly, a recent fMRI study reported a facial expression-dependent bias when positive and negative facial expressions were used as reward-predicting cues (Evans et al., 2011). It is also known that novel objects can increase value representations and reward responses in the striatum (Guitart-Masip et al., 2010). However, the faces used in the current study were matched for novelty.

Participants simply confirmed their already learned associations in the fMRI experiment, so the behavioral and fMRI results may not correspond one-to-one. However, previous electrophysiological studies have shown that dopamine neuron firing in a probabilistic task represents the RPE even after learning (Fiorillo et al., 2003), and similar RPE-related activity has been reported in the striatum with fMRI (McClure et al., 2003). These findings suggest that the striatal activity in the current study reflects an RPE signal. The task design also suggests that learning-related attention did not play a major role in the emotional modulation of the RPE signal (Maunsell, 2004). However, how this modulation evolves during learning remains an open question for future study.

We showed that activity in the vStr was correlated both with the RPE and with amygdala activity during face presentation (Fear > Neutral). This suggests that the amygdala plays a role in modulating RPE signals, although it is difficult to draw strong causal conclusions from fMRI. Consistent with this view, a recent optogenetic study revealed that excitatory glutamatergic transmission from the basolateral amygdala to the NAcc is indispensable for cue–reward association learning (Stuber et al., 2011). Similarly, several recent fMRI studies have suggested that emotional stimuli enhance amygdala–striatum interactions and learning in the context of aversive conditioning (Delgado et al., 2009; Schlund and Cataldo, 2010; Li et al., 2011).

Because amygdala activity (at face presentation) and RPE-correlated striatal activity (at outcome) were separated in time, they could be related through indirect pathways between the vStr and the amygdala, via the midbrain (Ahn and Phillips, 2002) or the orbitofrontal cortex (Schoenbaum et al., 2003). However, we think that direct interactions are more likely, because neither the midbrain nor the orbitofrontal cortex showed significant differences in activity between the fearful and neutral conditions.

Finally, a natural question may arise as to why task-independent emotion enhances RPEs and makes learning faster. One possible explanation may be that this mechanism contributes to the individual obtaining more rewards in complex environments by using the facial expressions of others and the surrounding atmosphere. Such an advantage may have allowed the development of the computational and neural processes found in this study.

Footnotes

This study was supported by PRESTO from the Japan Science and Technology Agency, and KAKENHI (Grant 22300139) to M.H. We are grateful to Drs. Peter Dayan and Okihide Hikosaka for helpful discussion, and Dr. Haji for technical assistance with fMRI.

The authors declare no competing financial interests.

References

- Ahn S, Phillips AG. Modulation by central and basolateral amygdalar nuclei of dopaminergic correlates of feeding to satiety in the rat nucleus accumbens and medial prefrontal cortex. J Neurosci. 2002;22:10958–10965. doi: 10.1523/JNEUROSCI.22-24-10958.2002.

- Behrens TE, Hunt LT, Woolrich MW, Rushworth MF. Associative learning of social value. Nature. 2008;456:245–249. doi: 10.1038/nature07538.

- Bradley MM, Lang PJ. Measuring emotion: the self-assessment manikin and the semantic differential. J Behav Ther Exp Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- Bray S, Rangel A, Shimojo S, Balleine B, O'Doherty JP. The neural mechanisms underlying the influence of pavlovian cues on human decision making. J Neurosci. 2008;28:5861–5866. doi: 10.1523/JNEUROSCI.0897-08.2008.

- Daw ND. Trial-by-trial data analysis using computational models. In: Delgado MR, Phelps EA, Robbins TW, editors. Decision making, affect, and learning: attention and performance XXIII. Oxford, UK: Oxford University Press; 2011. pp. 3–38.

- Dayan P. The role of value systems in decision making. In: Engel C, Singer W, editors. Better than conscious? Decision making, the human mind, and implications for institutions. Cambridge, MA: MIT Press; 2008. pp. 51–70.

- Delgado MR, Gillis MM, Phelps EA. Regulating the expectation of reward via cognitive strategies. Nat Neurosci. 2008;11:880–881. doi: 10.1038/nn.2141.

- Delgado MR, Jou RL, Ledoux JE, Phelps EA. Avoiding negative outcomes: tracking the mechanisms of avoidance learning in humans during fear conditioning. Front Behav Neurosci. 2009;3:33. doi: 10.3389/neuro.08.033.2009.

- Evans S, Fleming SM, Dolan RJ, Averbeck BB. Effects of emotional preferences on value-based decision-making are mediated by mentalizing and not reward networks. J Cogn Neurosci. 2011;23:2197–2210. doi: 10.1162/jocn.2010.21584.

- Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional brain imaging: a general linear approach. Hum Brain Mapp. 1995;2:189–210.

- Guitart-Masip M, Bunzeck N, Stephan KE, Dolan RJ, Düzel E. Contextual novelty changes reward representations in the striatum. J Neurosci. 2010;30:1721–1726. doi: 10.1523/JNEUROSCI.5331-09.2010.

- Haruno M, Kawato M. Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus-action-reward association learning. J Neurophysiol. 2006;95:948–959. doi: 10.1152/jn.00382.2005.

- Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–291.

- Knutson B, Wimmer GE, Kuhnen CM, Winkielman P. Nucleus accumbens activation mediates the influence of reward cues on financial risk taking. Neuroreport. 2008;19:509–513. doi: 10.1097/WNR.0b013e3282f85c01.

- Li J, Daw ND. Signals in human striatum are appropriate for policy update rather than value prediction. J Neurosci. 2011;31:5504–5511. doi: 10.1523/JNEUROSCI.6316-10.2011.

- Li J, Schiller D, Schoenbaum G, Phelps EA, Daw ND. Differential roles of human striatum and amygdala in associative learning. Nat Neurosci. 2011;14:1250–1252. doi: 10.1038/nn.2904.

- Maunsell JH. Neuronal representations of cognitive state: reward or attention? Trends Cogn Sci. 2004;8:261–265. doi: 10.1016/j.tics.2004.04.003.

- McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- Phelps EA, Ling S, Carrasco M. Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychol Sci. 2006;17:292–299. doi: 10.1111/j.1467-9280.2006.01701.x.

- Schaefer A, Braver TS, Reynolds JR, Burgess GC, Yarkoni T, Gray JR. Individual differences in amygdala activity predict response speed during working memory. J Neurosci. 2006;26:10120–10128. doi: 10.1523/JNEUROSCI.2567-06.2006.

- Schlund MW, Cataldo MF. Amygdala involvement in human avoidance, escape and approach behavior. Neuroimage. 2010;53:769–776. doi: 10.1016/j.neuroimage.2010.06.058.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Stuber GD, Sparta DR, Stamatakis AM, van Leeuwen WA, Hardjoprajitno JE, Cho S, Tye KM, Kempadoo KA, Zhang F, Deisseroth K, Bonci A. Excitatory transmission from the amygdala to nucleus accumbens facilitates reward seeking. Nature. 2011;475:377–380. doi: 10.1038/nature10194.

- Sutton RS, Barto AG. Reinforcement learning. Cambridge, MA: MIT Press; 1998.

- Talmi D, Seymour B, Dayan P, Dolan RJ. Human pavlovian-instrumental transfer. J Neurosci. 2008;28:360–368. doi: 10.1523/JNEUROSCI.4028-07.2008.

- Tom SM, Fox CR, Trepel C, Poldrack RA. The neural basis of loss aversion in decision-making under risk. Science. 2007;315:515–518. doi: 10.1126/science.1134239.

- Whalen PJ, Davis FC, Oler JA, Kim H, Kim MJ, Neta M. Human amygdala responses to facial expressions of emotion. In: Whalen PJ, Phelps EA, editors. The human amygdala. New York: Guilford Press; 2009. pp. 265–288.