Abstract

To combine information from different sensory modalities, the brain must deal with considerable temporal uncertainty. In natural environments, an external event may produce simultaneous auditory and visual signals yet they will invariably activate the brain asynchronously due to different propagation speeds for light and sound, and different neural response latencies once the signals reach the receptors. One strategy the brain uses to deal with audiovisual timing variation is to adapt to a prevailing asynchrony to help realign the signals. Here, using psychophysical methods in human subjects, we investigate audiovisual recalibration and show that it takes place extremely rapidly without explicit periods of adaptation. Our results demonstrate that exposure to a single, brief asynchrony is sufficient to produce strong recalibration effects. Recalibration occurs regardless of whether the preceding trial was perceived as synchronous, and regardless of whether a response was required. We propose that this rapid recalibration is a fast-acting sensory effect, rather than a higher-level cognitive process. An account in terms of response bias is unlikely due to a strong asymmetry whereby stimuli with vision leading produce bigger recalibrations than audition leading. A fast-acting recalibration mechanism provides a means for overcoming inevitable audiovisual timing variation and serves to rapidly realign signals at onset to maximize the perceptual benefits of audiovisual integration.

Introduction

We live in a multisensory world where single external events can produce correlated signals across our various sensory systems. Combining sensory information across the senses can markedly improve the precision and speed of perception (Morein-Zamir et al., 2003; Van der Burg et al., 2008b; Alais et al., 2010), although these benefits decline if the signals are asynchronous (Van der Burg et al., 2008b, 2010). Audio-visual signals are one particularly common cross-sensory pairing that we experience many times each day, such as when crossing a road or engaging in conversation. Audition and vision are also the senses we rely on to detect distant objects and events, although the different speeds of light and sound mean there is a distance-dependent audiovisual asynchrony that makes optimal integration difficult to achieve. An adaptive phenomenon known as temporal recalibration (Fujisaki et al., 2004; Vroomen et al., 2004) helps realign signals, as shown in a number of studies demonstrating that repeated exposure to an audiovisual asynchrony shifts the point of subjective simultaneity (PSS) in the direction of the leading sense.

Recalibration studies have typically used lengthy asynchronous cross-modal adaptation procedures lasting up to several minutes, suggesting that the recalibration process is sluggish and requires long periods of adaptation (Fujisaki et al., 2004; Vroomen et al., 2004; Navarra et al., 2009; Roseboom and Arnold, 2011). Ideally, recalibration would occur very rapidly, enabling the observer to benefit immediately from multisensory integration rather than having to wait several minutes for a slow realignment to occur. Here, we examine whether audiovisual temporal recalibration can occur over brief time-scales. Using a simultaneity judgment (SJ) task, participants judged whether a luminance onset and tone were synchronized or not across a range of stimulus onset asynchronies (SOAs). If temporal recalibration occurs rapidly, then we expect the PSS on the current trial to be contingent upon the audiovisual asynchrony on the preceding trial.

Our results demonstrate that temporal recalibration can occur extremely quickly, far more rapidly than previously thought. We find that the PSS is highly contingent on the modality order and SOAs of the preceding trial. We also find that passively observing prior asynchronies is sufficient to trigger recalibration, and that preceding trials judged to be asynchronous still produce recalibration. Further, we show that temporal recalibration is asymmetrical with respect to stimulus order, occurring over a broader SOA range when vision leads on the previous trial than when audition leads. Finally, we show that participants who report simultaneity over a broad range show greater PSS recalibration than those with narrower simultaneity ranges. We present a temporal recalibration model to explain these results.

Materials and Methods

Experiment 1.

Fifteen participants (8 females, 7 males; 13 naive, mean age: 26.6 years) were paid AU $20/h to participate. Trials started with a white fixation dot on a black screen for 1000 ms after which a surrounding white ring appeared (radius 2.6°; width 0.4°) and a tone was presented (500 Hz; 50 ms duration). The tone either preceded or followed the ring's onset by a SOA drawn randomly from the set (±0, ±64, ±128, ±256, ±512 ms). The task was to judge whether the ring's onset was synchronized with the tone. The advantage of a SJ-task is that the modality order is irrelevant to the task and the outcome less susceptible to a response bias than other methods such as temporal order judgments (Van der Burg et al., 2008a; Spence and Parise, 2010). The ring remained until the unspeeded response was recorded and the fixation dot was present throughout. Participants completed 120 trials for each SOA condition in three sessions of 400 trials. The monitor (85 Hz) was viewed from 80 cm and the tones were delivered over headphones. Each session began with two practice trials.

Experiment 2.

In Experiment 2, nine participants (4 males, 5 females; 7 naive; mean age: 25.8 years) participated in an experiment identical to Experiment 1 except that the audiovisual stimuli were both brief (50 ms) and that on half of the trials, a cue informed participants that no response was required and instructed them to passively observe the stimuli. A green fixation dot indicated test trials, and a red dot indicated passive trials. After a passive trial, the next (test) trial was initiated after 700 ms. Participants performed one session of 600 trials.

Results

We conducted separate intertrial analyses for Experiments 1 and 2 to examine whether the modality order on a given previous trial (Trial t-1) affected the distribution of “simultaneous” responses on the current trial (Trial t). Simultaneity data form a peaked distribution centered on the PSS and are well modeled by Gaussian distributions. For each participant, we fitted the data for both kinds of t-1 trial order (i.e., vision led or audition led) with Gaussians to estimate PSS (distribution mean), amplitude, and simultaneity bandwidth (the distribution's SD).
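The intertrial analysis described above can be sketched as follows. This is a minimal illustration rather than the analysis code actually used: the function names, the trial format, the decision to skip trials preceded by an SOA of 0 ms, and the coarse grid search (standing in for whatever optimizer was used) are all assumptions.

```python
import math
from collections import defaultdict

def bin_by_previous_order(trials):
    """Group responses on Trial t by the modality order on Trial t-1.

    `trials` is a list of (soa_ms, said_simultaneous) tuples in presentation
    order; negative SOA = audition leads, positive SOA = vision leads.
    """
    bins = {"audition_led": defaultdict(list), "vision_led": defaultdict(list)}
    for (prev_soa, _), (soa, response) in zip(trials, trials[1:]):
        if prev_soa < 0:
            bins["audition_led"][soa].append(response)
        elif prev_soa > 0:
            bins["vision_led"][soa].append(response)
        # Assumption: a t-1 SOA of 0 ms has no modality order and is skipped.
    return bins

def proportions(responses_by_soa):
    """Proportion of 'simultaneous' responses at each SOA on Trial t."""
    return {soa: sum(r) / len(r) for soa, r in sorted(responses_by_soa.items())}

def gaussian(x, amplitude, mean, sd):
    return amplitude * math.exp(-((x - mean) ** 2) / (2 * sd ** 2))

def fit_gaussian(props):
    """Least-squares grid search over amplitude, mean (PSS), and SD (bandwidth)."""
    best = None
    for amplitude in [a / 20 for a in range(10, 21)]:   # 0.50 to 1.00
        for mean in range(-100, 101, 5):                # PSS candidates, ms
            for sd in range(40, 401, 10):               # bandwidth candidates, ms
                err = sum((p - gaussian(s, amplitude, mean, sd)) ** 2
                          for s, p in props.items())
                if best is None or err < best[0]:
                    best = (err, amplitude, mean, sd)
    return {"amplitude": best[1], "pss": best[2], "bandwidth": best[3]}
```

Fitting `proportions(bins["vision_led"])` and `proportions(bins["audition_led"])` separately gives one PSS, amplitude, and bandwidth estimate per t-1 trial order for each participant.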

Experiment 1.

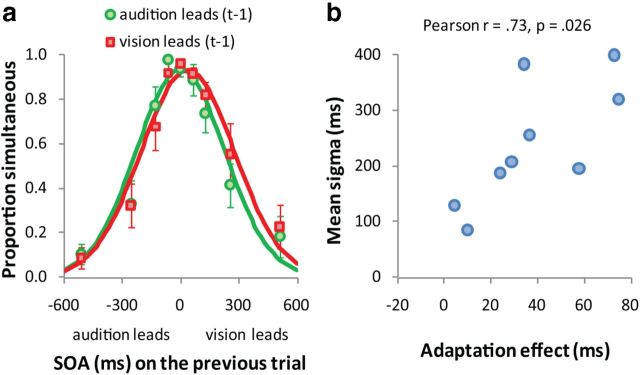

Figure 1a plots mean proportion of simultaneous responses as a function of SOA for both t-1 trial orders, with continuous lines showing best-fitting Gaussian distributions. Figure 1b shows the adaptation effect size for each individual plotted against their simultaneity bandwidths. The adaptation effect is defined as the PSS when vision leads on Trial t-1 minus the PSS when audition leads on Trial t-1. When the tone preceded the luminance onset on the previous trial (green curve), the PSS was significantly shorter (15 ms) than when the tone followed the luminance onset (red curve; 35 ms), t(14) = 3.6, p = 0.003 (two-tailed t test). That is, participants experienced luminance onsets presented ∼35 ms before the tone's onset as “synchronous” when vision occurred first on the previous trial. Conversely, when audition occurred first on the previous trial, luminance onsets presented 15 ms earlier than the tones were reported as synchronous. Thus, even though we used brief stimuli and did not use an explicit adaptation procedure entailing repeated exposure to a particular asynchrony, these strong intertrial effects show that adaptation occurs rapidly, with the direction of PSS shift contingent upon the modality order of the previous trial. Modality order did not affect bandwidths (t(14) = 1.3, p = 0.210) or amplitudes (t(14) = 0.6, p = 0.534).

Figure 1.

Results of Experiment 1. a, Mean proportion of simultaneous responses as a function of SOA. Negative SOAs indicate that the tone preceded the luminance onset; positive SOAs indicate that the tone followed the luminance onset. The data have been binned based on the modality order in the previous trial (t-1): green symbols indicate that audition leads on Trial t-1, whereas red symbols indicate that vision leads. Continuous lines are best-fitting Gaussian distributions to the two types of t-1 trial order. The PSS for each t-1 trial order is given by the Gaussian mean. b, The simultaneity bandwidth (mean of the Gaussian SD for both kinds of t-1 trial order) as a function of the adaptation effect (PSS when vision leads minus PSS when audition leads on Trial t-1) for each participant. c, Mean PSS of both t-1 trial types as a function of SOA on Trial t-1. The dotted line shows the mean PSS for a SOA of 0 ms on Trial t-1. d, Mean PSS of both t-1 trial types as a function of the preceding response.

We established that PSS on a particular trial is strongly determined by the order of audiovisual stimuli on the previous trial (Figs. 1a,b). Taking this further, we ask whether the magnitude of temporal recalibration depends on the specific SOA of the previous trial. We estimated Gaussian parameters for each participant's simultaneity data as above, but applied the analysis to each SOA presented on Trial t-1 (∼120 trials to estimate the PSS per SOA on Trial t-1). Data were pooled for a t-1 SOA of 0 ms. ANOVAs were run on PSS, bandwidth, and amplitude with t-1 SOA as the independent variable, and Huynh–Feldt corrected p values are reported wherever relevant. As Figure 1c shows, the ANOVA for PSS yielded a reliable effect of t-1 SOA (F(8,112) = 6.1, p = 0.001). We further analyzed this effect by comparing the PSS for each t-1 SOA with the default PSS (the PSS when t-1 SOA = 0 ms) and found that the PSS was significantly shorter when audition preceded vision on the t-1 trial by 64 ms (t(14) = 2.3, p = 0.034) but not for longer SOAs (ps > 0.373). In contrast, when vision led on Trial t-1, PSSs were significantly longer than the default for all SOAs (ts(14) > 3.1, ps < 0.008). The ANOVA for amplitude yielded no significant effect (F(8,112) = 2.1, p = 0.07). The ANOVA for bandwidth yielded a significant effect (F(8,112) = 3.7, p = 0.005) as bandwidths tended to increase with SOA on Trial t-1, independently of modality order.

Together, these results paint a clear picture of rapid temporal recalibration. Perceived audiovisual simultaneity on a given trial depends strongly on both the modality order in the previous trial and the SOA between those asynchronous stimuli. Intriguingly, temporal recalibration is asymmetrical with respect to stimulus order. When audition led on Trial t-1, only the shortest SOA showed a reliable shift in PSS, yet when vision led on Trial t-1, significant PSS shifts were observed for all SOAs. The asymmetry based on stimulus order is also evident in the magnitude of PSS shifts, with adaptation being stronger when vision led on Trial t-1 (maximum PSS − default PSS = 36 ms) compared with when audition led on Trial t-1 (minimum PSS − default PSS = −8 ms).

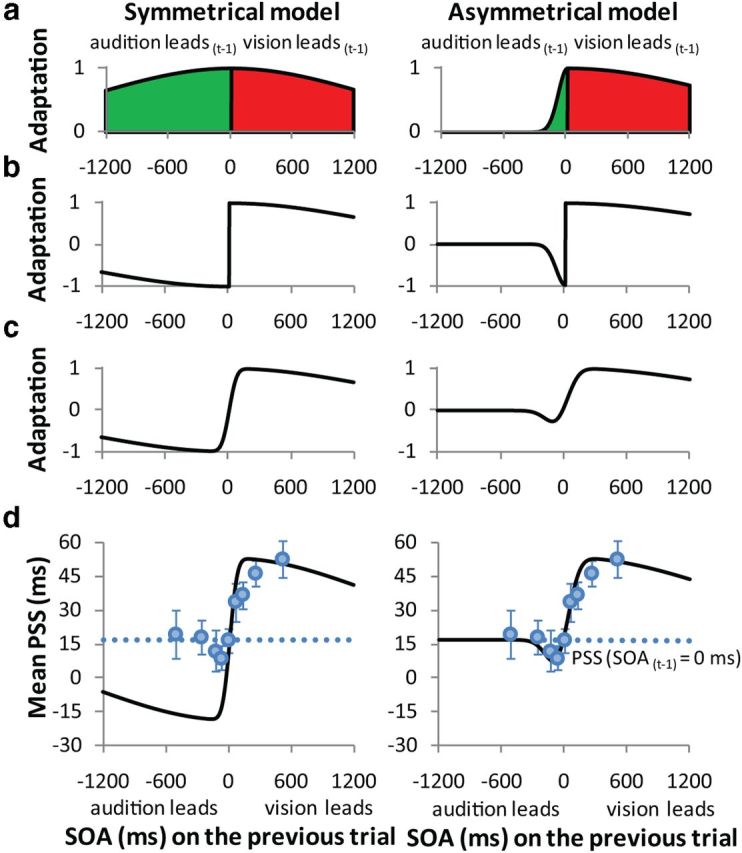

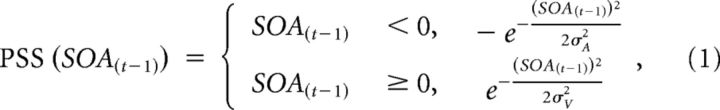

The asymmetrical pattern can be easily captured by a simple asymmetrical Gaussian model (Fig. 2a, right). The Gaussian peak is set to SOA = 0 ms, and declines rapidly on the left side (SOAs < 0 ms; audition leads on Trial t-1) and more gradually on the right (SOAs > 0 ms; vision leads on Trial t-1). The asymmetrical Gaussian describes how the magnitude of recalibration (PSS shift relative to default PSS) varies as a function of SOA on Trial t-1 and captures several fundamental observations in our data, specifically: (1) temporal recalibration is most pronounced when SOA is small, (2) the magnitude of recalibration declines systematically with increasing SOA, but more rapidly when audition leads, and (3) recalibration approaches zero for very large SOAs, an important functional limit as audiovisual signals with large SOAs are unlikely to have a common source and should not lead to recalibration. In this model, the negative bandwidth (σA) controls the SOA range over which recalibration occurs when audition leads on Trial t-1, and the positive bandwidth (σV) controls the SOA range supporting recalibration when vision leads. Because an auditory lead is defined as a negative SOA (Fig. 1c), we invert the sign of the negative side of the asymmetrical Gaussian (Fig. 2b, right), and then add a single source of Gaussian-distributed noise to produce the curves in Figure 2c (right).

Figure 2.

Model of temporal recalibration. Left, The behavior of a symmetrical Gaussian model. Right, The asymmetrical model. a, The green and red regions correspond, respectively, to audition leading on Trial t-1 and vision leading on Trial t-1. In the symmetrical model, a single parameter controls the SOA range over which recalibration can occur, whereas the asymmetrical model has separate recalibration bandwidth parameters for negative (audition leads) and positive (vision leads) SOAs. b, The same functions as in a except that the sign of the Gaussian's negative side was inverted to accommodate our convention that negative SOAs refer to audition leading vision. c, The Gaussian functions were convolved with normally distributed random noise whose bandwidth was a free parameter. d, The experimental data (Fig. 1c) showing the shift in PSS as a function of SOA on Trial t-1. The black continuous line shows the best-fitting Gaussian model, whose amplitude is controlled by a PSSnorm parameter.

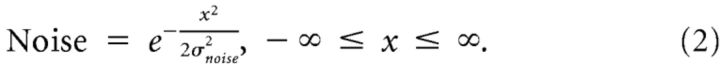

The model in Figure 2b is defined by Equation 1. Equation 2 was used to quantify the amount of random noise in the model. Figure 2c is the convolution of Equations 1 and 2.
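As a sketch only, one functional form consistent with the verbal description of the model is given below. The symbols σA, σV, and σnoise follow the text; the exact parameterization, the unit-area normalization of the noise term, and the function names f and g are assumptions.

```latex
% Sketch only: the exact form is an assumption based on the description.
% x is the SOA on Trial t-1 (negative = audition leads, positive = vision leads).

% Sign-inverted asymmetrical Gaussian (cf. Fig. 2b)
\[
f(x) =
\begin{cases}
  -\exp\!\left(-\dfrac{x^{2}}{2\sigma_{A}^{2}}\right), & x < 0 \\[2ex]
  \phantom{-}\exp\!\left(-\dfrac{x^{2}}{2\sigma_{V}^{2}}\right), & x \ge 0
\end{cases}
\]

% Normally distributed noise
\[
g(x) = \frac{1}{\sigma_{noise}\sqrt{2\pi}}
       \exp\!\left(-\frac{x^{2}}{2\sigma_{noise}^{2}}\right)
\]

% Convolution of the two (cf. Fig. 2c)
\[
(f * g)(x) = \int_{-\infty}^{\infty} f(u)\, g(x - u)\, du
\]
```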


The model has four parameters: the recalibration bandwidths for audition and for vision leading on Trial t-1 (σA and σV), the bandwidth of the noise (σnoise), and the normalization factor (PSSnorm). The only constraint was that σA and σV could not exceed 2500 ms. The noise is normally distributed random noise and represents variation due to neural noise, latency variation, response errors, individual differences, etc. PSSnorm scales the magnitude of the asymmetrical Gaussian to the observed PSS shifts.
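The four-parameter model can be illustrated numerically with a short sketch. It assumes a discrete convolution on a 1 ms grid, a noise kernel truncated at four SDs and normalized to sum to one, and a return value expressed as a PSS shift relative to the default PSS; the function names are hypothetical and none of these implementation details come from the original analysis.

```python
import math

def asymmetric_gaussian(soa, sigma_a, sigma_v):
    """Sign-inverted asymmetrical Gaussian: negative when audition leads."""
    if soa < 0:
        return -math.exp(-soa ** 2 / (2 * sigma_a ** 2))
    return math.exp(-soa ** 2 / (2 * sigma_v ** 2))

def noise_kernel(sigma_noise, step=1.0, width=4.0):
    """Discrete Gaussian weights (summing to 1) out to `width` SDs."""
    half = int(width * sigma_noise / step)
    offsets = [i * step for i in range(-half, half + 1)]
    weights = [math.exp(-x ** 2 / (2 * sigma_noise ** 2)) for x in offsets]
    total = sum(weights)
    return offsets, [w / total for w in weights]

def predicted_shift(soa, sigma_a, sigma_v, sigma_noise, pss_norm):
    """PSS shift (ms, relative to default PSS) predicted for a given t-1 SOA."""
    offsets, weights = noise_kernel(sigma_noise)
    smoothed = sum(w * asymmetric_gaussian(soa - x, sigma_a, sigma_v)
                   for x, w in zip(offsets, weights))
    return pss_norm * smoothed
```

Setting `sigma_a` equal to `sigma_v` gives the symmetrical variant of the model, so the same function can be used to compare the two.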

The model was fitted to the data in Figure 2d by minimizing the root-mean-square-error. Before fitting, the unit amplitude version shown in Figure 2c was normalized by shifting its asymptote to the default PSS. The best fitting asymmetrical model was obtained when the parameters σnoise and PSSnorm were 92 and 33 ms, respectively, and when the recalibration bandwidth for audition leading on Trial t-1 (σA) was 88 ms and for vision leading (σV) was 2500 ms. The σV value corresponds to the constraining limit of our asymmetric fit. We propose that future studies include longer SOAs to better determine this parameter. Nevertheless, our asymmetrical model provided a very good fit to the data (R² = 0.93), far better than the symmetrical model (Fig. 2, left) where the best fit was obtained for σA = σV = 1316 ms, σnoise = 56 ms and PSSnorm = 39 ms. Overall, although the symmetrical model describes the data reasonably well (R² = 0.78), it overestimates shifts in PSS amplitude and fails to capture the asymmetry inherent in the data (Fig. 2d, left).
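The two fit statistics mentioned above can be written out as a minimal sketch. It assumes that the reported R² is the usual coefficient of determination (one minus the ratio of residual to total sum of squares); how it was actually computed is not stated, and the function names are hypothetical.

```python
import math

def rmse(observed, predicted):
    """Root-mean-square error between observed and model-predicted PSS values."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))

def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot
```

A fitting routine would evaluate `rmse` for each candidate parameter set and keep the set with the smallest value, subject to the 2500 ms ceiling on σA and σV.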

The results show that brief audiovisual stimuli lead to recalibration even when the auditory and visual signal components are quite far apart in time (e.g., when vision leads by 512 ms). What could explain large PSS shifts at such long SOAs? Individual differences in multisensory temporal processing may partly explain this, as it is known that temporal resolution in multisensory processing and the ability to integrate multisensory signals are related. For instance, Stevenson et al. (2012) showed that participants with broad temporal integration windows are more susceptible to multisensory illusions. Plausibly, therefore, the simultaneity bandwidth in our task (i.e., the Gaussian SD; Fig. 1a) may predict the magnitude of PSS shift for individual subjects. Figure 1b plots this relationship and reveals a strong tendency for participants with broad simultaneity bandwidths to show greater PSS shifts than those with narrower bandwidths (Pearson r = 0.69; p = 0.004).
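The relationship between bandwidth and adaptation effect can be computed as in the following sketch. The adaptation effect follows the definition given earlier (PSS when vision leads on Trial t-1 minus PSS when audition leads); the function names and the per-participant input lists are hypothetical.

```python
import math

def adaptation_effect(pss_vision_led, pss_audition_led):
    """PSS when vision led on Trial t-1 minus PSS when audition led (ms)."""
    return pss_vision_led - pss_audition_led

def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)
```

Passing one bandwidth and one adaptation effect per participant to `pearson_r` gives the across-participant correlation.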

An interesting question is whether the temporal recalibration effects are entirely due to the previous trial, or whether a longer history determines adaptation strength. We tested this by comparing an analysis based on Trial t-1 (as above) with an analysis based on Trial t-2. The t-2 analysis was the same as for t-1 except that we calculated the PSS shifts on the current trial that resulted when vision led or audition led on Trial t-2. The ANOVA with modality order for Trial t-1 and t-2 as variables yielded a significant main effect of modality order for Trial t-1 (F(1,14) = 13.3, p = 0.003) and for Trial t-2 (F(1,14) = 7.3, p = 0.017). This t-2 effect indicates the PSS on trial t was significantly shorter when audition led on Trial t-2 (22 ms) than when vision led on Trial t-2 (28 ms). Importantly, this effect was independent of modality order on Trial t-1, as the two-way interaction was not significant, F(1,14) = 1.9, p = 0.180, confirming that Trial t-1 was sufficient on its own to produce strong adaptation effects. However, the significant t-2 effect demonstrates that the state of adaptation arising from a given trial is not completely overwritten by the subsequent trial: a small but significant effect (mean t-2 effect of 6 ms vs mean t-1 effect of 20 ms) will endure for at least one further trial.

Finally, we examined whether t-1 recalibration depends on the observer perceiving the previous trial as synchronous. To test this, we analyzed PSS values in an ANOVA with subject's response (simultaneous vs not simultaneous) and modality order on Trial t-1 as within-subject variables (Fig. 1d). The main effect of response was not significant, F(1,14) = 2.1, p = 0.170, and neither was the interaction, F(1,14) = 0.2, p = 0.690. This suggests temporal recalibration does not require the preceding trial to be perceived as synchronous.

Experiment 2.

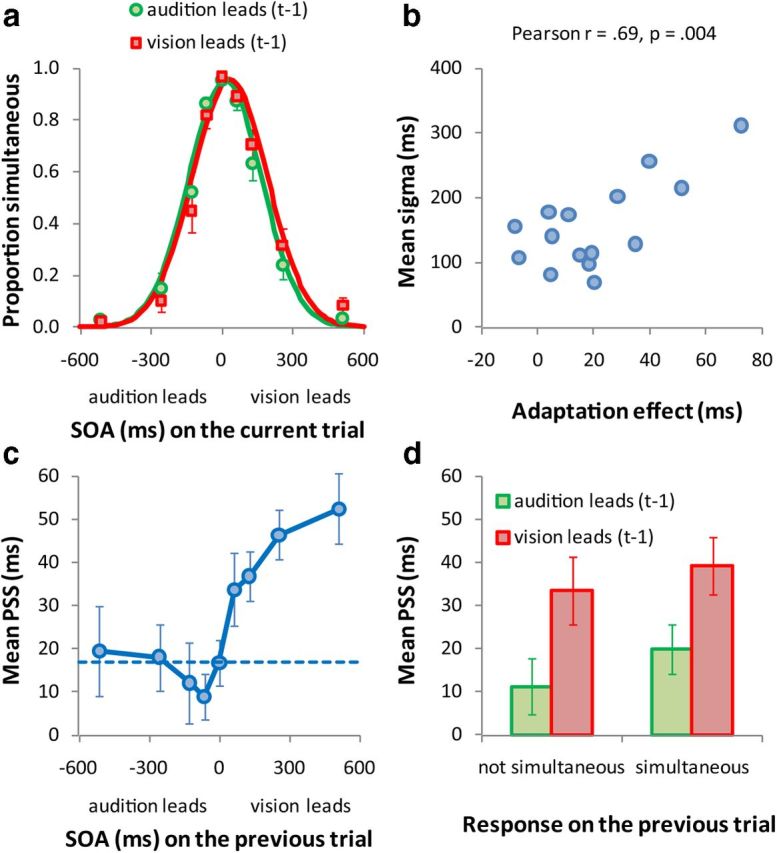

As temporal recalibration occurs independently of perceptual response on Trial t-1, it is possible that observing audiovisual events passively (i.e., with no response task required) may still produce temporal recalibration. In Experiment 2, we examined this using passive trials in alternation with test trials. Figure 3a plots the mean proportion of simultaneous responses as a function of SOA and modality order on Trial t-1 for Experiment 2. For test trials (i.e., subjects responded, and the preceding trial was therefore passive), when the tone preceded the visual onset on the previous trial, the PSS was significantly shorter (6 ms) than when the visual onset preceded the tone (44 ms; t(8) = 4.5, p = 0.002). Even though participants were asked to passively observe the asynchronous audiovisual event on Trial t-1, strong intertrial effects still occurred. Consistent with Experiment 1, t tests yielded no effect of t-1 SOA on bandwidth (t(8) = 1.1, p = 0.284) or amplitude (t(8) = 0.4, p = 0.714), and participants with broad simultaneity bandwidths showed greater PSS shifts than those with narrower bandwidths (Pearson r = 0.73; p = 0.026; Fig. 3b).

Figure 3.

Results of Experiment 2. a, Proportion of simultaneous responses as a function of SOA and the modality order in the previous trial (t-1). b, The simultaneity bandwidth as a function of the adaptation effect (PSS when vision leads minus PSS when audition leads on Trial t-1) for each participant.

Discussion

The intertrial adaptation effects reported here demonstrate two important points. First, recalibration to asynchronous multisensory stimuli can occur following exposure to a single, brief trial and does not require prolonged periods of adaptation. Second, the size of the recalibration effect exhibits a strong asymmetry: when audition leads, both the magnitude of subsequent recalibration and the SOA range over which it occurs are much smaller than when vision leads. The first finding is surprising as it suggests that recalibration occurs near-instantaneously, in an immediate, online fashion. Previous studies have used long adaptation procedures to induce comparable PSS shifts, yet our results show this is not necessary, and that even passive exposure to a single asynchronous audiovisual event, whether perceived as synchronous or not, is sufficient to induce effects of comparable size.

What advantage would such rapid adaptation to audiovisual asynchrony confer? Rapid recalibration makes sense in a world where auditory and visual information is highly variable in its relative timing due to different propagation speeds for light and sound and to different neural transduction times for auditory and visual stimuli. In such an environment, recalibrating to asynchronous audiovisual stimuli over a period of several minutes would not be sensible. Multisensory integration is optimal when audiovisual signals are approximately synchronized, and declines with temporal asynchrony (Slutsky and Recanzone, 2001; Van der Burg et al., 2010; Hartcher-O'Brien and Alais, 2011). It therefore makes sense that audiovisual recalibration would occur rapidly so that visual and auditory signals could be quickly realigned to facilitate multisensory integration. A highly relevant example would be the optimization of speech comprehension (Sumby and Pollack, 1954), which is optimal when visual and auditory signals are perceived as simultaneous. By rapidly recalibrating to the first audiovisual event in a speech stream, comprehension would be optimized for the remainder of the stream.

Our second key finding is that temporal recalibration to audiovisual asynchrony is asymmetrical. The recalibration shifts occurred over a much broader SOA range, and had a larger magnitude, when vision led on Trial t-1 than when audition led. This asymmetry is striking yet consistent with naturally occurring delays between vision and sound. For a synchronized audiovisual event occurring in the near field, audition will be perceived before vision (by ∼30 ms; Alais and Carlile, 2005) because neural transduction for audition is much faster than for vision and this more than compensates for the slower speed of sound. However, for events occurring beyond a distance of ∼10 m, vision will always be perceived first, and increasingly so as distance increases, due to the much faster speed of light. Thus, asynchronies can occur in either direction but audition can only lead vision over a narrow range, whereas vision can lead audition over a broad range. The strong asymmetry in our recalibration data therefore reflects the fundamental asymmetry in naturally occurring audiovisual stimuli and rapid recalibration provides a mechanism for overcoming this temporal variation to maintain optimal perception.
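The distance dependence described above can be checked with simple arithmetic. The sketch below assumes a speed of sound of about 343 m/s (dry air at roughly 20 °C), treats the travel time of light as negligible, and takes the ∼30 ms near-field auditory advantage from the text; the function and constant names are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at about 20 degrees C
AUDITORY_ADVANTAGE_MS = 30.0    # from the text: audition perceived ~30 ms earlier in the near field

def perceived_visual_lead_ms(distance_m):
    """Positive: vision is perceived first. Negative: audition is perceived first.

    Light travel time is ignored because it is negligible at these distances.
    """
    sound_travel_ms = 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S
    return sound_travel_ms - AUDITORY_ADVANTAGE_MS

def crossover_distance_m():
    """Distance at which the acoustic delay cancels the auditory advantage."""
    return SPEED_OF_SOUND_M_PER_S * AUDITORY_ADVANTAGE_MS / 1000.0
```

Under these assumptions the crossover falls at about 10.3 m, in line with the ∼10 m figure given above. Beyond that point the visual lead grows without bound as distance increases, whereas the auditory lead can never exceed about 30 ms, which is the asymmetry the argument relies on.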

Could these intertrial effects be explained by a response bias or strategic response? We believe this is unlikely for several reasons: (1) recalibration was much stronger when vision led on Trial t-1 than when audition led, whereas a simple response bias based on the previous trial's modality order would predict symmetrical data; (2) recalibration occurred independently based on Trial t-1 and Trial t-2. An account of both effects in terms of response bias or cognitive strategy is improbable as it would require observers to maintain independent running tallies of modality order and SOA for the previous two trials while making judgments for trial t; (3) synchrony judgments were on average made within 600 ms of stimulus onset, leaving little time for response bias or strategic calculations; and (4) passive t-1 trials requiring no perceptual or motor response still produced recalibration even though no explicit representation of modality order was required. Together, this argues against simple criterion shifts or strategic responding. Instead, we propose that the intertrial recalibration effects are due to early sensory effects.

Little is known at present about the neural mechanisms underlying temporal recalibration, although work by Stekelenburg et al. (2011) showed that adaptation affects early sensory areas. After prolonged adaptation to tap-flash stimuli with delayed flashes (100 ms), ERPs evoked by synchronous stimuli showed an attenuated P1 component over occipital electrodes, compared with a prolonged-synchrony control. In contrast, Kösem and van Wassenhove (2013) examined audiovisual recalibration following prolonged adaptation and found phase shifts in auditorily, but not visually, entrained components that were commensurate with behaviorally measured recalibrations of audiovisual PSS. This suggests that latency shifts in early auditory cortex might underlie audiovisual temporal recalibration. These studies indicate that recalibration entails changes in early sensory processing (either auditory or visual), although it is unclear whether the present intertrial effects are due to visual or auditory effects, or both.

More research is required to understand the neural mechanisms of temporal recalibration, although the strong effects reported here from brief, single trials will expedite exploration of behavioral aspects, in turn guiding neural research. Intertrial recalibration also has implications for multisensory research where SOA is commonly manipulated, e.g., orientation, alerting, and attention (Spence and Driver, 1997; Slutsky and Recanzone, 2001; Los and Van der Burg, 2013), as perceived and actual SOAs may differ following a single trial.

Footnotes

This work was supported by Australian Research Council Grant DE130101663 (E.V.d.B.) and DP120101474 (D.A. and J.C.).

References

- Alais D, Carlile S. Synchronising to real events: subjective audiovisual alignment scales with perceived auditory depth and speed of sound. Proc Natl Acad Sci U S A. 2005;102:2244–2247. doi: 10.1073/pnas.0407034102.

- Alais D, Newell FN, Mamassian P. Multisensory processing in review: from physiology to behaviour. Seeing Perceiving. 2010;23:3–38. doi: 10.1163/187847510X488603.

- Fujisaki W, Shimojo S, Kashino M, Nishida S. Recalibration of audiovisual simultaneity. Nat Neurosci. 2004;7:773–778. doi: 10.1038/nn1268.

- Hartcher-O'Brien J, Alais D. Temporal ventriloquism in a purely temporal context. J Exp Psychol Hum Percept Perform. 2011;37:1383–1395. doi: 10.1037/a0024234.

- Kösem A, van Wassenhove V. Phase encoding of perceived events timing. Multisensory Res. 2013;26:89. doi: 10.1163/22134808-000S0061.

- Los SA, Van der Burg E. Sound speeds vision through preparation, not integration. J Exp Psychol Hum Percept Perform. 2013 doi: 10.1037/a0032183. in press.

- Morein-Zamir S, Soto-Faraco S, Kingstone A. Auditory capture of vision: examining temporal ventriloquism. Brain Res Cogn Brain Res. 2003;17:154–163. doi: 10.1016/S0926-6410(03)00089-2.

- Navarra J, Hartcher-O'Brien J, Piazza E, Spence C. Adaptation to audiovisual asynchrony modulates the speeded detection of sound. Proc Natl Acad Sci U S A. 2009;106:9169–9173. doi: 10.1073/pnas.0810486106.

- Roseboom W, Arnold DH. Twice upon a time: multiple concurrent temporal recalibrations of audiovisual speech. Psychol Sci. 2011;22:872–877. doi: 10.1177/0956797611413293.

- Slutsky DA, Recanzone GH. Temporal and spatial dependency of the ventriloquism effect. Neuroreport. 2001;12:7–10. doi: 10.1097/00001756-200101220-00009.

- Spence C, Parise C. Prior-entry: a review. Conscious Cogn. 2010;19:364–379. doi: 10.1016/j.concog.2009.12.001.

- Spence C, Driver J. Audiovisual links in exogenous covert spatial orienting. Percept Psychophys. 1997;59:1–22. doi: 10.3758/BF03206843.

- Stekelenburg JJ, Sugano Y, Vroomen J. Neural correlates of motor–sensory temporal recalibration. Brain Res. 2011;1397:46–54. doi: 10.1016/j.brainres.2011.04.045.

- Stevenson RA, Zemtsov RK, Wallace MT. Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. J Exp Psychol Hum Percept Perform. 2012;38:1517–1529. doi: 10.1037/a0027339.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. J Acoust Soc Am. 1954;26:212–215. doi: 10.1121/1.1907309.

- Van der Burg E, Olivers CNL, Bronkhorst AW, Theeuwes J. Audiovisual events capture attention: evidence from temporal order judgments. J Vis. 2008a;8(5):2, 1–10. doi: 10.1167/8.5.2.

- Van der Burg E, Olivers CN, Bronkhorst AW, Theeuwes J. Pip and pop: non-spatial auditory signals improve spatial visual search. J Exp Psychol Hum Percept Perform. 2008b;34:1053–1065. doi: 10.1037/0096-1523.34.5.1053.

- Van der Burg E, Cass J, Olivers CN, Theeuwes J, Alais D. Efficient visual search from synchronized auditory signals requires transient audiovisual events. PLoS One. 2010;5:e10664. doi: 10.1371/journal.pone.0010664.

- Vroomen J, Keetels M, de Gelder B, Bertelson P. Recalibration of temporal order perception by exposure to audio-visual asynchrony. Brain Res Cogn Brain Res. 2004;22:32–35. doi: 10.1016/j.cogbrainres.2004.07.003.