Abstract

An extensive series of physiological studies in macaques shows the existence of neurons in three multisensory cortical regions, dorsal medial superior temporal area (MSTd), ventral intraparietal area (VIP), and visual posterior sylvian area (VPS), that are tuned for direction of self-motion in both visual and vestibular modalities. Some neurons have congruent direction preferences, suggesting integration of signals for optimum encoding of self-motion trajectory; others have opposite preferences and could be used for discounting retinal motion that arises from perceptually irrelevant head motion. Whether such a system exists in humans is unknown. Here, artificial vestibular stimulation was administered to human participants during fMRI scanning in conjunction with carefully calibrated visual stimulation that emulated either congruent or opposite stimulation conditions. Direction and speed varied sinusoidally, such that the two conditions contained identical vestibular stimulation and identical retinal stimulation, differing only in the relative phase of the two components. In human MST and putative VIP, multivoxel pattern analysis permitted classification of stimulus phase based on fMRI time-series data, consistent with the existence of separate neuron populations responsive to congruent and opposite cue combinations. Decoding was also possible in the vicinity of parieto-insular vestibular cortex, possibly in a homolog of macaque VPS.

Keywords: CSv, hMST, human, PIC, vestibular, vision

Introduction

Moving successfully through the environment requires the integration of visual and vestibular information. These senses provide primary sources of information for determining our own motion and (in the case of vision) the movement of objects in the environment. However, natural movements of the head during self-motion produce optic flow that confounds both visual heading perception and the detection of object motion. Multisensory integration potentially provides a means by which irrelevant retinal motion arising from movement of the body, eyes, and head can be distinguished from, and discounted relative to, retinal motion that results from either self-translation or the movement of external objects.

Physiological studies of the integration of visual and vestibular cues have implicated macaque dorsal medial superior temporal area (MSTd; Gu et al., 2006) and ventral intraparietal area (VIP; Chen et al., 2011a; for review, see Fetsch et al., 2013). In these cortical regions, some neurons have congruent visual–vestibular preferences for direction of translation (heading) and others, in similar numbers, have opposite preferences. A third region, the visual posterior sylvian area (VPS), has many neurons with opposite preferences but few with congruent preferences (Chen et al., 2011b). Neurons with congruent and opposite preferences may serve, respectively, to strengthen the perception of heading and to discount optic flow that arises from head motion. During rotational motion, VIP shows similar proportions of neurons with opposite and congruent visual–vestibular preferences (Chen et al., 2011a), but MSTd shows a marked predominance of neurons with opposite preferences (Takahashi et al., 2007). This predominance might suggest that rotational vestibular cues resulting from head motion are encoded in MSTd primarily for the purpose of distinguishing relevant external motion from irrelevant self-motion. Whole-field visual flow can be used for this purpose, but the additional use of vestibular cues might increase precision and could also disambiguate head rotation from the motion of large rotating objects.

In human neuroimaging studies, several cortical regions show specificity for optic flow, including a region known as human MST (hMST), which may include a region homologous to macaque MSTd. The cingulate sulcus visual area (CSv) and a region in intraparietal cortex that has some characteristics in common with macaque VIP (putative human VIP; pVIP) have been shown to favor optic flow that reflects self-motion over flow that does not (Wall and Smith, 2008; Cardin and Smith, 2010). hMST and CSv have also been found to respond to vestibular stimulation (Smith et al., 2012). Whether any of these areas contains neurons capable of disambiguating relevant and irrelevant visual cues through opposite visual–vestibular preferences is unknown.

Standard artificial methods of inducing vestibular sensation in a scanner environment produce visual sensations that are inconsistent with natural visual–vestibular cue combinations, making meaningful study of visual–vestibular interactions very difficult. We used psychophysics to emulate cue combinations compatible with natural head roll, with visual stimuli tailored to match the measured sensation of head roll induced by galvanic vestibular stimulation (GVS). We then used multivariate pattern analysis to determine whether distinct populations of congruent and opposite neurons exist in human cortical regions known to be involved in multisensory processing.

Materials and Methods

Participants.

Seven healthy participants (5 female, median age 20 years) took part. They were screened according to standard procedures and gave informed consent. The study was approved by the relevant local ethics committee.

Stimuli.

Vestibular stimulation was generated from sinusoidal waveforms using an isolated bipolar constant-current stimulator (DS5, Digitimer) located in the scanner control room. Stimulation was delivered via shielded cables that passed through appropriate RF filters into the MRI examination room. Nonmetallic electrodes (Skintact F-WA00, Leonhard Lang) were placed over the mastoid bone just behind each ear. A sinusoidal alternating current (1 Hz, ±3 mA) passed between the two electrodes, activating the vestibular nerves that connect the vestibular organs to the brainstem. This induced a perception of head roll (rotation, R, about the anterior–posterior head axis). The magnitude (excursion, in degrees) of perceived roll induced by vestibular activation is referred to here as Rvest. GVS also induces a vestibulo-ocular reflex (VOR) with a dominant torsional component, Rvor. This rotates the image on the retina (Rret_vor) by an amount equal in magnitude but opposite in direction to Rvor, and so affects visual cues to head roll.
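As a minimal sketch of the stimulation waveform (not the authors' control code), the following generates one 16 s block of the 1 Hz, ±3 mA sinusoidal current and encodes the sign relation Rret_vor = −Rvor stated above. The command sampling rate and the function names are hypothetical, and the stimulator is assumed to follow its command waveform linearly.

```python
import numpy as np

def gvs_current_ma(duration_s=16.0, freq_hz=1.0, amp_ma=3.0, fs_hz=1000.0):
    """Sampled sinusoidal GVS current (mA) for one stimulation block.

    fs_hz is a hypothetical command sampling rate. The sign of the current
    (which electrode is anodal) is arbitrary here; how sign maps onto the
    perceived roll direction depends on electrode placement.
    """
    n = int(round(duration_s * fs_hz))   # integer sample count avoids float arange drift
    t = np.arange(n) / fs_hz
    return t, amp_ma * np.sin(2.0 * np.pi * freq_hz * t)

def retinal_rotation_from_vor(r_vor_deg):
    """Rret_vor: torsional eye rotation Rvor rotates the retinal image equally and oppositely."""
    return -np.asarray(r_vor_deg, dtype=float)

t, current = gvs_current_ma()
print(t.size, float(np.abs(current).max()))
```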

Visual stimuli were presented to the participant via a NordicNeuroLaboratory VisualSystem. This is an optical goggle system, chosen because its lens hoods allowed the surroundings (scanner bore, etc.) to be completely occluded. The system included an IR video camera (60 Hz) for monitoring eye movements. The IR image was processed with software (Arrington) that could detect cyclotorsional eye rotation, based on features of the iris, as well as gaze direction. The image presented was dark apart from 2000 white dots in a circular patch of diameter 15°; the dots had a limited lifetime (120 dots replaced per second) to reduce afterimages. The patch could be rotated sinusoidally about its center, at a rate and phase that matched the sinusoidal percept induced by GVS. Its phase differed from that of the GVS waveform by 90° to account for the integration of acceleration into velocity seen in vestibular responses at 1 Hz (e.g., Fernandez and Goldberg, 1971). The magnitude of rotation could be varied; with an appropriate magnitude, the sensation of visual roll induced by GVS could be nulled so that the dot patch appeared static (see Pre-scan calibrations). During periods with no GVS, the dot patch remained present but was stationary. The dot patch contained a central fixation point that changed randomly among five possible colors at 2 Hz. Participants performed a color-counting task that encouraged good fixation and kept attention in a constant state.
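The dot stimulus can be sketched as follows, assuming a 60 Hz display refresh (only the camera rate is given in the text), round-robin replacement of the oldest dots to implement the limited lifetime, and a +90° phase offset relative to a GVS waveform of the form sin(2πft). Whether the visual waveform should lead or lag GVS is not specified above, so `phase_offset_deg` is an assumption; the amplitude used in the demo is the mean Rscreen_null reported in Pre-scan calibrations.

```python
import numpy as np

N_DOTS = 2000
RADIUS_DEG = 7.5          # 15 deg diameter patch
REPLACED_PER_S = 120      # limited lifetime: each dot lives about N_DOTS / 120 = 16.7 s
FRAME_RATE_HZ = 60.0      # assumption: display refresh rate is not stated in the text

def random_dots(n, rng, radius=RADIUS_DEG):
    """Dots uniformly distributed over a disc (sqrt gives uniform area density)."""
    r = radius * np.sqrt(rng.random(n))
    a = 2.0 * np.pi * rng.random(n)
    return np.column_stack([r * np.cos(a), r * np.sin(a)])

def patch_angle_deg(t, amp_deg, freq_hz=1.0, phase_offset_deg=90.0):
    """Patch rotation offset by 90 deg from a GVS waveform sin(2*pi*f*t) (lead vs lag assumed)."""
    return amp_deg * np.sin(2.0 * np.pi * freq_hz * t + np.deg2rad(phase_offset_deg))

def rotate(xy, angle_deg):
    """Rotate (n, 2) points anticlockwise (x right, y up) by angle_deg.

    For the document's CW-positive convention, pass the negated angle.
    """
    th = np.deg2rad(angle_deg)
    c, s = np.cos(th), np.sin(th)
    return xy @ np.array([[c, s], [-s, c]])

def simulate_frames(duration_s, amp_deg, rng):
    """Per-frame dot positions: replace the oldest dots round-robin, then rotate the patch."""
    n_frames = int(round(duration_s * FRAME_RATE_HZ))
    per_frame = int(round(REPLACED_PER_S / FRAME_RATE_HZ))  # 2 dots per frame at 60 Hz
    dots = random_dots(N_DOTS, rng)
    oldest = 0
    frames = []
    for k in range(n_frames):
        idx = (oldest + np.arange(per_frame)) % N_DOTS
        dots[idx] = random_dots(per_frame, rng)
        oldest = (oldest + per_frame) % N_DOTS
        frames.append(rotate(dots, patch_angle_deg(k / FRAME_RATE_HZ, amp_deg)))
    return frames

rng = np.random.default_rng(0)
frames = simulate_frames(1.0, amp_deg=1.71, rng=rng)
```

Rotating the whole patch about its center preserves each dot's eccentricity, so only the angular (roll) component of the stimulus changes over time.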

Pre-scan calibrations.

Before the main experimental runs, each participant performed two short procedures in the scanner to obtain session-specific calibration values for the fMRI experiment. These values vary across individuals and sessions, so it was important to obtain them in the same session as the scan. First, a psychophysical nulling calibration determined Rscreen_null, the extent to which the dot patch had to rotate to null the perception of roll motion (Rperc) created by GVS. Participants fixated the central target and received 1 s of GVS. They then pressed one of two buttons to indicate whether they perceived clockwise (CW) or anticlockwise (ACW) rotation of the visual stimulus. Image rotation was varied over trials with a Best-PEST staircase procedure (Lieberman and Pentland, 1982) to find the best value of Rscreen_null. This value was then tested by giving the participant several 16 s periods of GVS to induce CW Rperc while rotating the dot patch ACW by Rscreen_null. An adequate null was deemed to have been achieved if participants either could not perceive motion or could not follow its direction. The measured value of Rscreen_null was then used in the Nulled condition of the fMRI experiment (see Conditions) for that participant. On average, the dot patch had to rotate sinusoidally by ±1.71° (SD = 0.51, min = 0.75, max = 2.65) to cancel the percept of motion induced by GVS.
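The Best-PEST logic can be sketched as a maximum-likelihood staircase: after each CW/ACW response, the likelihood of every candidate nulling value is updated, and the next trial is placed at the current maximum-likelihood estimate. This is a minimal sketch assuming a logistic psychometric function with a fixed, known slope; the slope, search grid, trial count, and simulated observer are all hypothetical and are not taken from the study.

```python
import numpy as np

def log_sigmoid(z):
    """log(1 / (1 + exp(-z))), computed stably."""
    return -np.logaddexp(0.0, -z)

def best_pest(respond, grid, slope, start, n_trials):
    """Maximum-likelihood staircase in the spirit of Best PEST, for a nulling point.

    respond(x) returns True if the observer reports CW rotation when the screen
    rotates by x deg (CW positive). Assumed model (hypothetical): a logistic
    psychometric function P(CW | x) = 1 / (1 + exp(-(x - x_null) / slope)).
    Each trial is placed at the current ML estimate of x_null.
    """
    loglik = np.zeros_like(grid)
    x = start
    for _ in range(n_trials):
        z = (x - grid) / slope
        # P(CW) = sigmoid(z); P(ACW) = 1 - sigmoid(z) = sigmoid(-z)
        loglik += log_sigmoid(z) if respond(x) else log_sigmoid(-z)
        x = float(grid[np.argmax(loglik)])
    return x

# Simulated observer with hypothetical parameters (not data from the study)
TRUE_NULL, SLOPE = -1.7, 0.3
rng = np.random.default_rng(1)

def simulated_observer(x):
    p_cw = 1.0 / (1.0 + np.exp(-(x - TRUE_NULL) / SLOPE))
    return rng.random() < p_cw

grid = np.linspace(-5.0, 5.0, 201)
estimate = best_pest(simulated_observer, grid, SLOPE, start=0.0, n_trials=80)
print(round(estimate, 2))
```

Early trials jump to the ends of the grid and then bisect toward the null, which is the characteristic behavior of ML placement rules; later trials cluster near the point of subjective equality, where each response is most informative.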

Second, GVS was administered in blocks of 16 s (6 blocks separated by 8 s rest blocks) while cyclotorsional eye position was tracked, to measure Rvor. Rvor is always opposite in direction to Rperc, because the purpose of the VOR is to compensate for head motion. Participants fixated and passively viewed the static dot patch. They were presented with 12 blocks (16 s) of GVS and torsional eye traces were recorded. At the end of the calibration, the eye data were smoothed, and the amplitude of cycles 4–12 was extracted and averaged across cycles and blocks. This amplitude was ±0.43° on average (SD = 0.14, min = 0.16, max = 0.62); such low VOR gain is typical of previous studies. The participant-specific value was used to adjust the retinal speed of the Control condition (see Conditions) to match that of the Nulled condition, i.e., to compensate for the effect of Rvor on retinal motion.
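The amplitude extraction can be sketched as below. The text does not say how traces were smoothed or how each cycle's amplitude was measured, so this sketch assumes a 5-sample moving average and a per-cycle least-squares fit of a 1 Hz sine plus cosine (plus offset), taking the amplitude as the magnitude of the fitted sinusoid. The traces are synthetic, and the number of blocks in the demo is arbitrary.

```python
import numpy as np

FS_HZ = 60.0   # eye-tracker camera rate given in the text
F_GVS = 1.0    # GVS frequency (Hz)

def smooth(trace, win=5):
    """Moving-average smoothing (the window length is an assumption)."""
    return np.convolve(trace, np.ones(win) / win, mode="same")

def cycle_amplitude(segment, fs=FS_HZ, f=F_GVS):
    """Least-squares fit of a*sin + b*cos + offset at the GVS frequency; returns sqrt(a^2 + b^2)."""
    t = np.arange(segment.size) / fs
    X = np.column_stack([np.sin(2 * np.pi * f * t), np.cos(2 * np.pi * f * t), np.ones_like(t)])
    (a, b, _), *_ = np.linalg.lstsq(X, segment, rcond=None)
    return float(np.hypot(a, b))

def vor_amplitude(blocks, first_cycle=4, last_cycle=12):
    """Average torsional amplitude over cycles first_cycle..last_cycle (1-based) of every block."""
    spc = int(round(FS_HZ / F_GVS))   # samples per cycle
    amps = []
    for trace in blocks:
        s = smooth(trace)
        for c in range(first_cycle - 1, last_cycle):
            amps.append(cycle_amplitude(s[c * spc:(c + 1) * spc]))
    return float(np.mean(amps))

# Synthetic torsion traces: 16 s at 60 Hz, hypothetical 0.43 deg amplitude plus noise
rng = np.random.default_rng(2)
t = np.arange(int(16 * FS_HZ)) / FS_HZ
blocks = [0.43 * np.sin(2 * np.pi * F_GVS * t + rng.uniform(0, 2 * np.pi))
          + rng.normal(0, 0.02, t.size) for _ in range(3)]
print(round(vor_amplitude(blocks), 2))
```

Skipping the first three cycles, as in the text, avoids onset transients; fitting a sinusoid rather than taking half the peak-to-peak range makes the estimate less sensitive to residual noise.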

Conditions.

Using the parameters obtained immediately before scanning, GVS was applied together with visual rotation of a magnitude and direction that either cancelled the percept induced by GVS (Nulled condition) or had the opposite phase, effectively summing the retinal and vestibular effects (Control condition). The measured Rvor parameter was used to equate retinal speed across the two conditions. A small optokinetic reflex may also have occurred, but it was ignored because, with retinal speed matched, it would have been the same in both conditions.

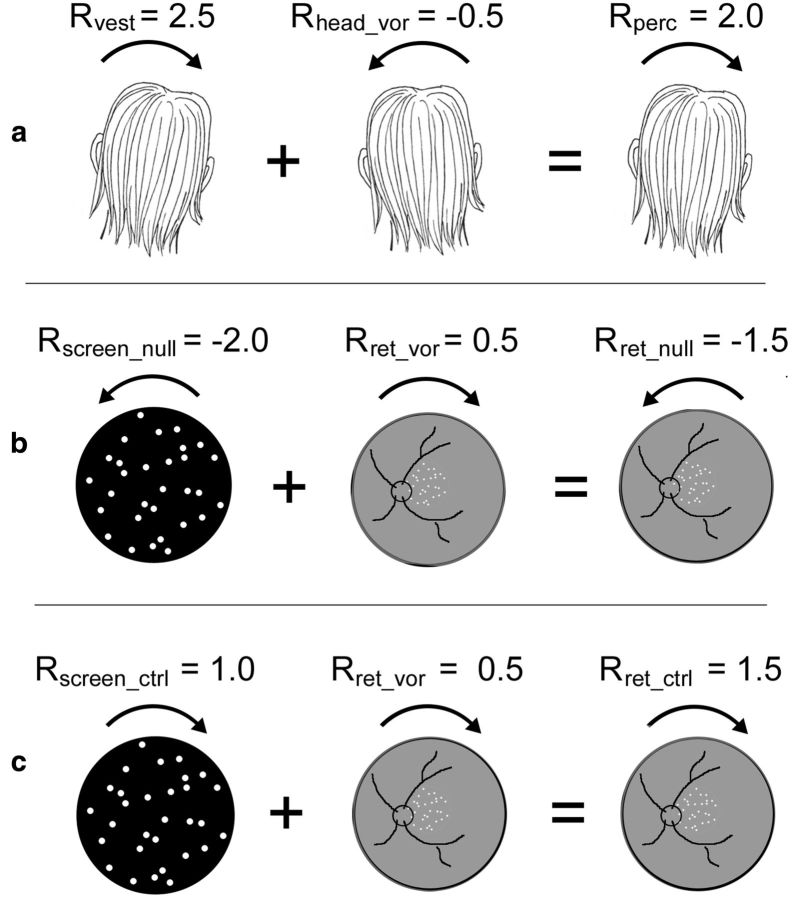

Figure 1 illustrates the two conditions quantitatively. The Nulled condition emulates natural head roll, in which retinal motion is present (but is not perceived as rotation of the visual world) and the visual and vestibular cues are congruent. In the Control condition, GVS is the same but retinal motion reverses direction, the motion is strongly perceived, and the two cues are incongruent (opposite in direction).

Figure 1.

Quantitative example to illustrate stimulus construction. Positive values represent CW movement, negative values ACW. a, GVS induces a CW vestibular head roll percept of, say, 2.5° (Rvest = 2.5). VOR acts to stabilize the image: when the vestibular system signals CW head roll, which normally causes ACW retinal motion, the eyes rotate ACW, giving compensatory CW retinal motion of, say, 0.5° (Rret_vor = 0.5), which signals ACW head roll of −0.5° (Rhead_vor = −0.5) and reduces the roll percept to 2.0° (Rperc = 2.0). Note that real CW head motion would make the image rotate ACW on the retina, but with GVS the retinal image is static (ignoring VOR), so the world (image) is perceived as rotating CW. b, To cancel this perceived rotation (Nulled condition), the dot patch must be rotated in the opposite direction (Rscreen_null = −2.0). This results in a nulled perceived roll (Rperc = 0) and a retinal image rotation (Rret_null) of −1.5°, the sum of Rscreen_null and Rret_vor. c, In the Control condition, the retinal motion required is equal and opposite to that in the Nulled condition (Rret_ctrl = 1.5). The screen motion required to achieve this in the presence of VOR (Rret_vor = 0.5) is 1° (Rscreen_ctrl = 1.0).
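The caption's arithmetic can be written out step by step. This sketch treats the steps as simple signed sums, exactly as the caption does; in the experiment Rscreen_null was measured psychophysically rather than computed, so the function (a hypothetical helper) only reproduces the illustrative example.

```python
def figure1_values(r_vest, r_ret_vor):
    """Signed roll values (deg, CW positive) following the Figure 1 example."""
    r_head_vor = -r_ret_vor                   # (a) VOR retinal motion signals opposite head roll
    r_perc = r_vest + r_head_vor              # (a) perceived roll with GVS alone
    r_screen_null = -r_perc                   # (b) screen rotation that cancels the percept
    r_ret_null = r_screen_null + r_ret_vor    # (b) resulting retinal rotation
    r_ret_ctrl = -r_ret_null                  # (c) equal and opposite retinal rotation
    r_screen_ctrl = r_ret_ctrl - r_ret_vor    # (c) screen rotation that achieves it given VOR
    return {"Rperc": r_perc, "Rscreen_null": r_screen_null, "Rret_null": r_ret_null,
            "Rret_ctrl": r_ret_ctrl, "Rscreen_ctrl": r_screen_ctrl}

print(figure1_values(r_vest=2.5, r_ret_vor=0.5))
# {'Rperc': 2.0, 'Rscreen_null': -2.0, 'Rret_null': -1.5, 'Rret_ctrl': 1.5, 'Rscreen_ctrl': 1.0}
```

Combining steps b and c gives Rscreen_ctrl = −Rscreen_null − 2·Rret_vor, which shows why the measured VOR amplitude is needed to set the Control screen motion.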

Design.

The fMRI experiment used a block design and consisted of eight runs of ∼5 min each. Sixteen-second trial blocks were separated by 8 s rest blocks, and each of the two conditions was presented six times per run, giving a total of 48 presentations per condition. fMRI data were collected with a Siemens Trio 3-tesla scanner, using an eight-channel head array coil to acquire a high-quality T1-weighted structural image (modified driven equilibrium Fourier transform, MDEFT; Deichmann et al., 2004) and a custom eight-channel posterior head coil (Stark Contrast) to acquire an in-session T1 image and the functional images. Functional images were collected with an echoplanar imaging sequence (TR = 2 s, TE = 33 ms) using 23 oblique slices with 2.5 mm isotropic voxels.
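A quick check of the design arithmetic: twelve blocks per run, each 16 s of stimulation plus 8 s of rest, give 288 s (∼5 min, or 144 volumes at TR = 2 s), and eight runs of six blocks give 48 presentations per condition. The sketch below builds such a schedule; randomized block order and rest placed after each block are assumptions, since the text specifies neither.

```python
import numpy as np

TR_S = 2.0
STIM_S, REST_S = 16, 8
N_RUNS, REPS_PER_RUN = 8, 6
CONDITIONS = ("Nulled", "Control")

def run_schedule(rng):
    """Return [(onset_s, duration_s, condition), ...] and the total run length for one run.

    Block order is randomized and each stimulation block is followed by a rest
    block; both choices are assumptions (the text does not specify them).
    """
    order = rng.permutation(np.repeat(CONDITIONS, REPS_PER_RUN))
    events, t = [], 0
    for cond in order:
        events.append((t, STIM_S, str(cond)))
        t += STIM_S + REST_S
    return events, t

rng = np.random.default_rng(0)
runs = [run_schedule(rng) for _ in range(N_RUNS)]
run_length_s = runs[0][1]
n_volumes = int(run_length_s / TR_S)
totals = {c: sum(ev[2] == c for events, _ in runs for ev in events) for c in CONDITIONS}
print(run_length_s, n_volumes, totals)  # 288 144 {'Nulled': 48, 'Control': 48}
```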

Analysis.

Both standard (univariate) analysis and multivoxel pattern analysis (MVPA) were used. Univariate analysis was performed with BrainVoyager software (Goebel et al., 2006). All functional data were preprocessed with correction for slice timing and head motion. High-pass temporal filtering (cutoff 0.01 Hz) was used to remove low-frequency drifts. Functional data were coregistered to the MDEFT anatomy. Statistical contrasts were set up using the general linear model, fitting each voxel time course with a model derived by convolving a standard hemodynamic response function with the stimulus time series. Six additional regressors modeling head movements and a session regressor were included.
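A minimal sketch of the voxelwise GLM described above, written in NumPy/SciPy rather than BrainVoyager. The double-gamma HRF parameters are a common default and are assumed here (BrainVoyager's own two-gamma defaults may differ), and the 0.01 Hz high-pass filter is implemented as discrete cosine drift regressors, an SPM-style choice that is also an assumption. The data, block order, and motion parameters are synthetic.

```python
import numpy as np
from scipy.stats import gamma

def double_gamma_hrf(tr, duration=32.0):
    """Double-gamma HRF (peak near 5 s, undershoot near 15 s, ratio 1/6); assumed parameters."""
    t = np.arange(0.0, duration, tr)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()

def dct_highpass_basis(n_vols, tr, cutoff_hz=0.01):
    """Cosine drift regressors with frequencies below cutoff_hz (excluding the constant)."""
    n_basis = int(np.floor(2 * n_vols * tr * cutoff_hz))
    k = np.arange(1, n_basis + 1)
    n = np.arange(n_vols)
    return np.cos(np.pi * np.outer(2 * n + 1, k) / (2 * n_vols))

def fit_glm(y, boxcars, motion, tr):
    """OLS fit: HRF-convolved task regressors + 6 motion + drift + constant (stands in for session)."""
    hrf = double_gamma_hrf(tr)
    task = np.column_stack([np.convolve(b, hrf)[: len(y)] for b in boxcars])
    X = np.column_stack([task, motion, dct_highpass_basis(len(y), tr), np.ones(len(y))])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[: task.shape[1]], X

# Synthetic single-run demo: 144 volumes, 12 alternating 16 s blocks each followed by 8 s rest
tr, n = 2.0, 144
rng = np.random.default_rng(0)
box_null, box_ctrl = np.zeros(n), np.zeros(n)
for i in range(12):
    (box_null if i % 2 == 0 else box_ctrl)[i * 12: i * 12 + 8] = 1.0
motion = rng.normal(0.0, 0.1, size=(n, 6))      # stand-in for six realignment parameters
hrf = double_gamma_hrf(tr)
signal = 2.0 * np.convolve(box_null, hrf)[:n] + 1.0 * np.convolve(box_ctrl, hrf)[:n]
y = signal + 0.5 * np.linspace(-1.0, 1.0, n) + rng.normal(0.0, 0.2, n)  # plus slow drift
betas, X = fit_glm(y, [box_null, box_ctrl], motion, tr)
print(np.round(betas, 2))
```

Modeling drift with nuisance regressors is equivalent in effect to high-pass filtering the data and the design before fitting; here the linear drift is absorbed by the low-frequency cosines rather than biasing the task betas.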

ROI analysis.

Various regions of interest (ROIs) were defined in separate scans. hMST was defined using a standard paradigm (Huk et al., 2002) based on the presence of ipsilateral responses. Alternately expanding and contracting dot patterns (5° diameter; 13.5° eccentricity) were presented separately in the left or right visual hemifield. Sixteen-second stimulus blocks were interleaved with blocks of static dots. CSv and pVIP were defined using a second localizer (Wall and Smith, 2008) that consisted of two conditions presented for 15 s each, separated by 15 s blocks with no stimulus. In one condition, self-motion-compatible optic flow simulating spiral motion of the observer was presented. The second condition contained locally matched dot motion but in a self-motion-incompatible 3 × 3 array of nine flow patches. Contrasting activity for compatible versus incompatible flow readily defined CSv. It also revealed a visually responsive region in the vicinity of parieto-insular vestibular cortex (PIVC; Cardin and Smith, 2010) that we refer to here as PIC (posterior insular cortex), in line with previous studies. It is plausible that PIC is a homolog of macaque VPS (Frank et al., 2014). In many cases, pVIP was also defined. We find pVIP to be the most difficult region to define with this method, because it responds quite well to both stimuli and its definition relies on a modest degree of differential activity. In a few cases, pVIP could not be defined reliably for this reason, and in some others the acquisition volume did not extend sufficiently far dorsally. V1 was also localized to provide a control ROI that was not expected to distinguish congruent from opposite vestibular–visual cues. V1 was defined using standard retinotopic mapping procedures, with a wedge (24° segment, radius 12°) rotating at 64 s/cycle.
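The text says only that standard retinotopic mapping was used. Phase-encoded (Fourier) analysis is the usual approach for a rotating wedge, so the sketch below assumes it: each voxel's polar-angle preference is read from the phase of its response at the wedge frequency (1/64 Hz), and the ratio of that Fourier amplitude to the total non-DC amplitude serves as a coherence measure. The TR of 2 s and eight cycles per run are hypothetical, and the constant phase lag added by the hemodynamic delay (typically handled, for example, by combining runs with opposite rotation directions) is ignored here.

```python
import numpy as np

def wedge_phase_deg(ts, tr_s=2.0, period_s=64.0):
    """Response phase (deg) and coherence at the wedge rotation frequency.

    Assumes the run contains a whole number of wedge cycles, so the stimulus
    frequency falls exactly on an FFT bin.
    """
    k = int(round(ts.size * tr_s / period_s))   # FFT bin of the stimulus frequency
    spec = np.fft.rfft(ts - ts.mean())
    amp = np.abs(spec)
    coherence = amp[k] / np.sqrt(np.sum(amp[1:] ** 2))
    # For ts = cos(2*pi*f*t - phi), spec[k] has angle -phi, so negate to recover phi
    return float(np.rad2deg(-np.angle(spec[k])) % 360.0), float(coherence)

# Synthetic voxel: 8 cycles of 64 s at TR = 2 s, responding 120 deg into each cycle
tr, period = 2.0, 64.0
t = np.arange(8 * int(period / tr)) * tr
ts = np.cos(2 * np.pi * t / period - np.deg2rad(120.0))
phase, coh = wedge_phase_deg(ts, tr, period)
print(round(phase, 1), round(coh, 3))
```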

In addition to these visually defined ROIs, a vestibular localizer was used to determine independent regions that responded to GVS in darkness. The GVS localizer used 2 s (2 cycles) of a 1 Hz sinusoid. Stimulation was followed by a 2–10 s intertrial interval. A total of 160 trials were presented across two runs. All light was excluded and participants were also asked to close their eyes. Two ROIs were defined in this way. PIVC was identified in all participants, in accord with several previous studies; however, we refer here to the ROI defined in this way as PIVC/PIC because it likely includes PIC as well as PIVC (see Discussion). Partial overlap was often seen between (vestibular) PIVC/PIC and (visual) PIC but PIC was on average slightly more posterior (Fig. 2), as is VPS in macaques. The proportion of overlapping voxels was 15%. A vestibular hMST ROI was also identified bilaterally that overlapped with visually defined hMST but was typically less extensive, in accord with previous work (Smith et al., 2012). The overlap was 38%.
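The overlap figures above (15% and 38%) depend on how "proportion of overlapping voxels" is defined, which the text does not state. A hedged sketch that computes three common definitions side by side for ROIs represented as sets of voxel coordinates; the toy ROIs are hypothetical and only illustrate how much the definitions can differ.

```python
def overlap_stats(roi_a, roi_b):
    """Overlap between two ROIs given as iterables of voxel coordinates, e.g. (x, y, z).

    The definition used in the text is not stated, so several common
    definitions are returned side by side.
    """
    a, b = set(roi_a), set(roi_b)
    shared = len(a & b)
    return {
        "jaccard": shared / len(a | b),                   # shared / union
        "dice": 2 * shared / (len(a) + len(b)),           # 2 x shared / (|A| + |B|)
        "fraction_of_smaller": shared / min(len(a), len(b)),
    }

# Toy example: two 20-voxel ROIs along one axis that share 5 voxels
visual_pic = [(x, 0, 0) for x in range(0, 20)]
vestibular_pivc_pic = [(x, 0, 0) for x in range(15, 35)]
print(overlap_stats(visual_pic, vestibular_pivc_pic))
# {'jaccard': 0.14285714285714285, 'dice': 0.25, 'fraction_of_smaller': 0.25}
```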

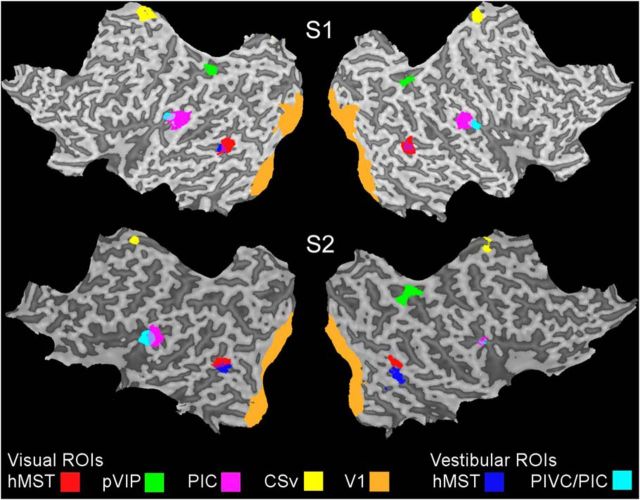

Figure 2.

Flattened cortical representations from two participants, S1 and S2, showing the ROIs examined. Each ROI is shown as a color overlay (see key). V1, hMST, pVIP, CSv, and PIC defined by visual localizers are shown in addition to PIVC/PIC and hMST defined with vestibular stimulation in darkness.

To test whether populations of neurons could distinguish the two cross-modal vestibular–visual cue combinations, the BOLD response in the main experiment was extracted from all ROIs and submitted both to univariate analysis as above, to reveal any differences in response magnitude, and to MVPA. MVPA was performed with a MATLAB implementation of the Library for Support Vector Machines (LIBSVM; Chang and Lin, 2011). For each ROI, independently and regardless of overlap between visual and vestibular estimates of hMST and PIC, the data were pooled across participants to create a single large sample and normalized across the two conditions (see Furlan et al., 2014 for details of the method). Decoding performance was measured as a function of the number of features (voxels) included, which was incremented in steps of 30. Features were selected randomly; they were resampled and the analysis repeated 5000 times at each increment. A leave-one-run-out procedure was used to train a support vector machine on seven runs and test it on the remaining run, resulting in eight performance values for each sample. To test whether the classifier was performing above chance, the analysis was re-run with random permutation of trial labels. The 95th percentile of the distribution of 5000 results with different randomly permuted labels served as the criterion for whether classification with correct labels was significantly above chance. The mean of this distribution was used to estimate chance performance (expected to be 50%).
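The decoding procedure can be sketched in Python with scikit-learn, whose `SVC` is built on LIBSVM; this is a stand-in for, not a reproduction of, the MATLAB pipeline. It assumes one sample per block (a voxel pattern), a linear kernel with C = 1, and z-scoring of each voxel across both conditions. The data are synthetic, pooling across participants is not modeled, and resample and permutation counts are far below the 5000 used in the study so that the example runs quickly.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut, cross_val_score
from sklearn.svm import SVC

def zscore_features(X):
    """Normalize each voxel (column) across all samples of both conditions."""
    return (X - X.mean(axis=0)) / X.std(axis=0)

def loro_accuracy(X, y, runs):
    """Mean accuracy over leave-one-run-out folds (train on 7 runs, test on 1)."""
    clf = SVC(kernel="linear", C=1.0)
    return float(cross_val_score(clf, X, y, groups=runs, cv=LeaveOneGroupOut()).mean())

def accuracy_vs_features(X, y, runs, feature_counts, n_resamples, rng):
    """Decoding accuracy as a function of the number of randomly chosen voxels."""
    X = zscore_features(X)
    curve = {}
    for n_feat in feature_counts:
        accs = [loro_accuracy(X[:, rng.choice(X.shape[1], n_feat, replace=False)], y, runs)
                for _ in range(n_resamples)]
        curve[n_feat] = float(np.mean(accs))
    return curve

def permutation_null(X, y, runs, n_feat, n_perm, rng):
    """Chance distribution from the same pipeline with randomly permuted labels."""
    X = zscore_features(X)
    accs = np.array([loro_accuracy(X[:, rng.choice(X.shape[1], n_feat, replace=False)],
                                   rng.permutation(y), runs)
                     for _ in range(n_perm)])
    return float(accs.mean()), float(np.percentile(accs, 95))

# Synthetic demo: 8 runs x 12 block patterns (6 per condition), 120 voxels
rng = np.random.default_rng(0)
n_runs, per_cond, n_vox = 8, 6, 120
runs = np.repeat(np.arange(n_runs), 2 * per_cond)
y = np.tile(np.repeat([0, 1], per_cond), n_runs)        # 0 = Nulled, 1 = Control
pattern = rng.choice([-1.0, 1.0], size=n_vox)           # hypothetical phase-selective pattern
X = rng.normal(size=(y.size, n_vox)) + 0.5 * np.outer(y, pattern)

curve = accuracy_vs_features(X, y, runs, range(30, n_vox + 1, 30), n_resamples=10, rng=rng)
chance, p95 = permutation_null(X, y, runs, n_feat=90, n_perm=20, rng=rng)
print(curve, round(chance, 2), round(p95, 2))
```

Grouping folds by run keeps training and test samples from sharing a run, so slow within-run signal changes cannot inflate accuracy; the permutation test then calibrates what "chance" looks like for exactly this pipeline.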

Results

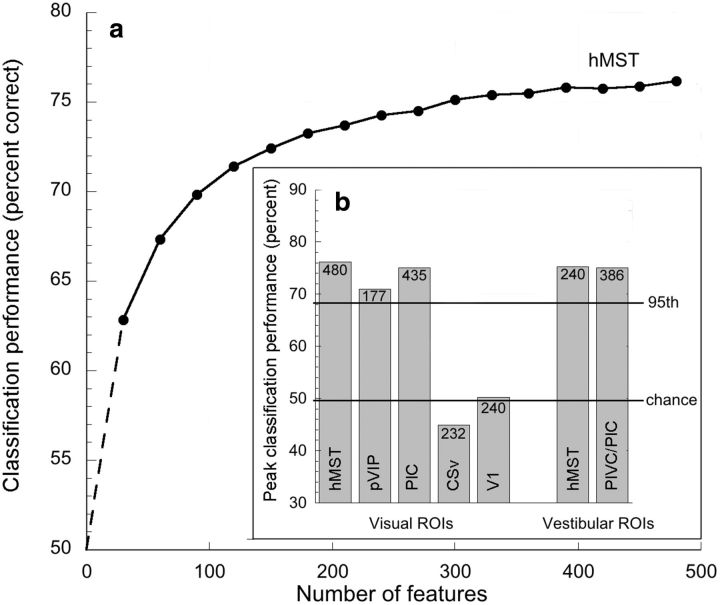

MVPA classification accuracies for decoding Nulled versus Control within visually defined hMST, pVIP, CSv, and PIC, and vestibularly defined hMST and PIVC/PIC, are displayed in Figure 3. Also included is V1, a control visual region that was not expected to distinguish between Nulled and Control conditions. Because the two conditions were matched for retinal speed and GVS magnitude, successful decoding requires sensitivity to the relative phase of the two signals, perhaps in the form of separate populations of neurons that respond to congruent (Nulled condition) and opposite (Control condition) head-roll cues.

Figure 3.

a, MVPA classification accuracy for hMST, defined with a visual localizer, as a function of the number of features included. Dashed portion of curve is extrapolated. b, Peak classification accuracy for all cortical regions examined, together with the associated number of voxels in each case. Also shown are chance performance and the 95th percentile from permutation testing (mean across all regions).

Of the visually defined regions studied, hMST, pVIP, and PIC all supported classification of the two stimulus combinations, showing the expected increase in decoding performance with the number of features included (illustrated only for hMST) and reaching statistical significance for higher numbers of features. All three easily breached the 95th percentile of the permuted data. The two conditions could not be significantly decoded in visually defined CSv or in V1. Classification accuracy also reached significance in the two ROIs defined vestibularly, PIVC/PIC and hMST.

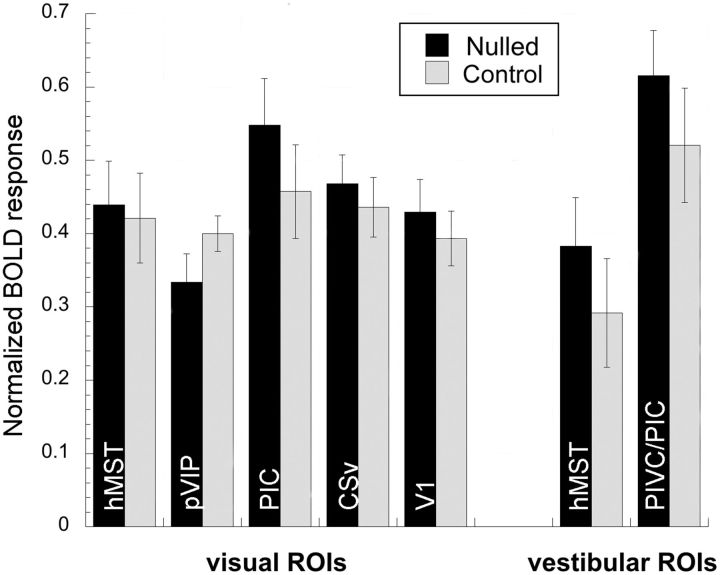

The univariate magnitude of the BOLD response in each condition was extracted from each ROI and is shown in Figure 4. All regions examined showed broadly comparable responses in the two conditions, mirroring the matched retinal stimulation rather than reflecting the difference in perceived motion resulting from the temporal phase in which the stimuli were combined. There was an overall trend toward larger responses in the Nulled condition (not significant by t test in any region). A possible explanation of this difference, if real, is that retinal motion in the Control condition was too weak. Analysis of VOR gain showed that it was ∼10% lower, in both conditions, than during the pre-scan calibration on which the correction was based, which means that retinal motion in the Control condition was somewhat slower than intended.
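A worked example makes the direction of this bias concrete. Using the illustrative Figure 1 values (not measured data) and reading "∼10% lower" as a drop in Rret_vor from 0.5° to 0.45°, the screen amplitudes calibrated for the stronger VOR no longer equate retinal motion: the Nulled condition becomes slightly faster and the Control condition slightly slower. The helper name is hypothetical.

```python
def retinal_amplitudes(r_screen_null, r_screen_ctrl, r_ret_vor):
    """Retinal rotation amplitude (deg) per condition: |screen rotation + VOR retinal rotation|."""
    return abs(r_screen_null + r_ret_vor), abs(r_screen_ctrl + r_ret_vor)

# Screen amplitudes calibrated with the illustrative Figure 1 values (Rret_vor = 0.5 deg)
R_SCREEN_NULL, R_SCREEN_CTRL = -2.0, 1.0

calibrated = retinal_amplitudes(R_SCREEN_NULL, R_SCREEN_CTRL, 0.5)
reduced = retinal_amplitudes(R_SCREEN_NULL, R_SCREEN_CTRL, 0.5 * 0.9)  # VOR about 10% lower
print([round(v, 2) for v in calibrated])  # [1.5, 1.5]
print([round(v, 2) for v in reduced])     # [1.55, 1.45]
```

Because VOR-induced retinal rotation opposes the screen rotation in the Nulled condition but adds to it in the Control condition, a weaker VOR moves the two conditions in opposite directions.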

Figure 4.

Mean BOLD response amplitudes (normalized percentage signal change) for the Nulled and Control conditions in each cortical region studied. Error bars show ±1 SEM.

Discussion

Using psychophysics, we were able to create, and present to a stationary participant lying in an MRI scanner, visual–vestibular cue combinations that were consistent with natural head rotation about the roll axis (congruent visual–vestibular cues). We mimicked the natural situation in which the head rolls and the retinal image consequently rotates, but the world appears static, i.e., the retinal image motion is not perceived. By reversing the direction of retinal motion (to give opposite visual–vestibular cues), we could create a situation in which the magnitude of retinal motion was unchanged but the motion was now strongly perceived, because it summed with, rather than nulled, the effect of GVS (Control condition). When the direction of rotation was alternated over time by means of sinusoidal GVS accompanied by sinusoidal retinal motion, each combination could be created continuously, and we could switch between them by reversing the relative phase of the two stimuli. The two conditions then contained identical retinal motion and identical vestibular stimulation, and could be compared directly with fMRI, free from the confound of absolute direction. This allowed us to determine whether several key brain regions, some of which may be homologs of cortical regions in nonhuman primates that have been shown to integrate visual and vestibular cues, were sensitive to the difference between the two combinations, i.e., to the relative phase in which the stimuli were presented.

Univariate analysis of the fMRI data enabled us to ask, for each cortical region examined, whether activity is determined by retinal motion or by perceived motion. The two conditions elicited similar BOLD responses in all areas studied (Fig. 4). Small differences may exist that we failed to detect but activity appears to be broadly governed by retinal motion rather than perceived motion. If anything, activity was greater when no motion was visible (Nulled condition).

In contrast, MVPA enabled us to distinguish the Nulled and Control conditions in several cortical regions. First, hMST (whether defined with a visual or vestibular localizer) displayed significant prediction accuracy, indicating that it is sensitive to the phase in which visual and vestibular stimuli were combined. The most obvious interpretation, given the physiological literature, is that hMST contains some neurons that are selectively responsive to congruent visual/vestibular cues and others that prefer opposite cues, although other interpretations are possible (e.g., a continuous rather than bipolar distribution of phase sensitivities). If so, such neurons may act to strengthen perception of heading and to identify and discount retinal motion that results from head movements. The results incidentally provide further support for the notion that hMST includes a subregion that is homologous with macaque MSTd. The fact that MSTd is driven more strongly by visual than vestibular input (Gu et al., 2006; Takahashi et al., 2007; Chen et al., 2011b), and that the same is true of hMST (Smith et al., 2012), is also consistent with this interpretation.

Areas pVIP and PIC also showed sensitivity to the phase of the visual and vestibular stimuli, suggesting that they too may contain populations of neurons with congruent and opposite preferences. This is consistent with a possible homology with macaque areas VIP and VPS, respectively. However, considerable caution is needed here. In the case of pVIP, the evidence for a functional homology is considerably weaker than for hMST. Macaque VIP responds to both visual and vestibular heading cues (Bremmer et al., 2002) and is involved in the integration of vestibular–visual information (Chen et al., 2011a). The fact that human pVIP is also multisensory (Bremmer et al., 2001) fits well with the possibility of a homology, as do the present results. However, the human intraparietal sulcus (IPS) contains many more discrete sensory regions than macaque IPS (Swisher et al., 2007), suggesting a different, more evolved organization. This reduces the likelihood of direct functional equivalence. We use the acronym pVIP because the area is the same as that referred to as human VIP by Bremmer et al. (2001), not because we are confident of a homology. Cells with congruent and opposite heading preferences have been found in VPS (Chen et al., 2011b), although with a preponderance of oppositely tuned cells. PIVC/PIC defined with GVS also supported pattern classification. Macaque PIVC shows little responsiveness to optic flow (Chen et al., 2010) but is prominent in vestibular processing, and therefore may play a more peripheral role in multimodal processing, perhaps by providing vestibular signals to VPS. It is therefore likely that classification in vestibular PIVC/PIC was supported by neurons in the hypothesized PIC portion of this ROI. CSv did not show prediction accuracy above chance; although this region has been found to respond well to vestibular as well as visual stimuli (Smith et al., 2012), our results provide no evidence that it is involved in multisensory integration.

In conclusion, our fMRI results, together with the finding that participants were unable to see retinal motion when it emulated the motion present during a typical head roll, suggest that multisensory integration plays a large role in discounting retinal motion that is irrelevant to interacting with the world, and they provide important information concerning the locus of this process in the human brain.

Footnotes

This work was supported by a grant from the Leverhulme Trust to A.T.S.

The authors declare no competing financial interests.

References

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron. 2001;29:287–296. doi: 10.1016/S0896-6273(01)00198-2.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Cardin V, Smith AT. Sensitivity of human visual and vestibular cortical regions to egomotion-compatible visual stimulation. Cereb Cortex. 2010;20:1964–1973. doi: 10.1093/cercor/bhp268.

- Chang CC, Lin CJ. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol. 2011;2:27:1–27:27.

- Chen A, DeAngelis GC, Angelaki DE. Macaque parieto-insular vestibular cortex: responses to self-motion and optic flow. J Neurosci. 2010;30:3022–3042. doi: 10.1523/JNEUROSCI.4029-09.2010.

- Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 2011a;31:12036–12052. doi: 10.1523/JNEUROSCI.0395-11.2011.

- Chen A, DeAngelis GC, Angelaki DE. Convergence of vestibular and visual self-motion signals in an area of the posterior Sylvian fissure. J Neurosci. 2011b;31:11617–11627. doi: 10.1523/JNEUROSCI.1266-11.2011.

- Deichmann R, Schwarzbauer C, Turner R. Optimisation of the 3D MDEFT sequence for anatomical brain imaging: technical implications at 1.5 and 3 T. Neuroimage. 2004;21:757–767. doi: 10.1016/j.neuroimage.2003.09.062.

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating semicircular canals of the squirrel monkey. II. Response to sinusoidal stimulation and dynamics of peripheral vestibular system. J Neurophysiol. 1971;34:661–675. doi: 10.1152/jn.1971.34.4.661.

- Fetsch CR, DeAngelis GC, Angelaki DE. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci. 2013;14:429–442. doi: 10.1038/nrn3503.

- Frank SM, Baumann O, Mattingley JB, Greenlee MW. Vestibular and visual responses in human posterior insular cortex. J Neurophysiol. 2014;112:2481–2491. doi: 10.1152/jn.00078.2014.

- Furlan M, Wann JP, Smith AT. A representation of changing heading direction in human cortical areas pVIP and CSv. Cereb Cortex. 2014;24:2848–2858. doi: 10.1093/cercor/bht132.

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with BrainVoyager QX: from single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Hum Brain Mapp. 2006;27:392–401. doi: 10.1002/hbm.20249.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 2006;26:73–85. doi: 10.1523/JNEUROSCI.2356-05.2006.

- Huk AC, Dougherty RF, Heeger DJ. Retinotopy and functional subdivision of human areas MT and MST. J Neurosci. 2002;22:7195–7205. doi: 10.1523/JNEUROSCI.22-16-07195.2002.

- Lieberman H, Pentland A. Microcomputer based estimation of psychophysical thresholds: the best PEST. Behav Res Methods Instruments. 1982;14:21–25. doi: 10.3758/BF03202110.

- Smith AT, Wall MB, Thilo KV. Vestibular inputs to human motion-sensitive visual cortex. Cereb Cortex. 2012;22:1068–1077. doi: 10.1093/cercor/bhr179.

- Swisher JD, Halko MA, Merabet LB, McMains SA, Somers DC. Visual topography of human intraparietal sulcus. J Neurosci. 2007;27:5326–5337. doi: 10.1523/JNEUROSCI.0991-07.2007.

- Takahashi K, Gu Y, May PJ, Newlands SD, DeAngelis GC, Angelaki DE. Multimodal coding of three-dimensional rotation and translation in area MSTd: comparison of visual and vestibular selectivity. J Neurosci. 2007;27:9742–9756. doi: 10.1523/JNEUROSCI.0817-07.2007.

- Wall MB, Smith AT. The representation of egomotion in the human brain. Curr Biol. 2008;18:191–194. doi: 10.1016/j.cub.2007.12.053.