Abstract

The prefrontal cortex (PFC) is thought to underlie the complexity, flexibility, and goal-directedness of primate behavior. Most neurophysiological studies performed so far have investigated PFC functions with arm-reaching or oculomotor tasks. It therefore remains unclear whether, and to what extent, PFC neurons also play a role in goal-directed manipulative actions, such as those commonly performed by primates during most of their daily activities. Here we trained two macaques to perform or withhold grasp-to-eat and grasp-to-place actions, depending on the combination of two subsequently presented cues: an auditory go/no-go cue (high/low tone) and a visually presented target (food/object). By varying the order of presentation of the two cues, we could segment and independently evaluate the processing and integration of the contextual information that allowed the monkey to decide whether or not to act, and what action to perform. We recorded 403 task-related neurons from the ventrolateral prefrontal cortex (VLPFC): unimodal sensory-driven (37%), motor-related (21%), unimodal sensory-and-motor (23%), and multisensory (19%) neurons. Target and go/no-go selectivity characterized most of the recorded neurons, particularly those endowed with motor-related discharge. Interestingly, multisensory neurons appeared to encode a behavioral decision independently of the sensory modality of the stimulus that allowed the monkey to make it. Some of them (56%) reflected the decision to act or to refrain from acting, whereas others (44%) encoded the decision to perform (or withhold) a specific action (e.g., grasp-to-eat). Our findings indicate that VLPFC neurons play a role in the processing of the contextual information underlying motor decisions during goal-directed manipulative actions.

SIGNIFICANCE STATEMENT We demonstrated that macaque ventrolateral prefrontal cortex (VLPFC) neurons show remarkable selectivity for different aspects of the contextual information that allows the monkey to select and execute goal-directed manipulative actions. Interestingly, a set of these neurons provides multimodal representations of the intended goal of a forthcoming action. These neurons encode a behavioral decision (e.g., grasp-to-eat) independently of the sensory information that allowed the monkey to make it. Our findings expand the available knowledge on prefrontal functions by showing that VLPFC neurons play a role in the selection and execution of goal-directed manipulative actions resembling those of primates' common foraging behaviors. On these bases, we propose that VLPFC may host an abstract “vocabulary” of the intended goals pursued by primates in their natural environment.

Keywords: decision, grasping actions, macaque, visuomotor neurons

Introduction

Contextual information available in the environment and the internal plans and goals of an agent constitute the two poles of a continuum. Along this continuum, the brain orchestrates action selection in a variety of contexts (Koechlin and Summerfield, 2007). Most of the existing literature on high-order action selection has focused on the prefrontal cortex (PFC) (Tanji and Hoshi, 2008), and the bulk of the evidence has been collected with arm-reaching or oculomotor tasks (Rainer et al., 1998; Freedman et al., 2001; Wallis et al., 2001; Shima et al., 2007). These studies made crucial contributions to clarifying both the mechanisms underlying PFC executive functions and the possible evolutionary trends leading to the origin of uniquely human cognitive capacities (Wise, 2008; Genovesio et al., 2014). However, it remains unclear whether, and to what extent, the PFC is also involved in manipulative hand behaviors, such as reaching-and-grasping actions. In these actions, motor and sensory brain regions located caudal to the PFC have been shown to play a major role (Cisek and Kalaska, 2010; Rizzolatti et al., 2014).

Previous neurophysiological studies in monkeys (Fogassi et al., 2005; Bonini et al., 2010, 2011, 2012) demonstrated that the inferior parietal lobule (IPL) and the ventral premotor cortex (PMv) underlie the organization of forelimb action sequences, such as those commonly performed by monkeys in their environment (e.g., grasping an object to eat it or to place it). These studies showed that IPL and PMv neurons, in addition to primarily encoding specific motor acts (e.g., grasping), discharge differently during grasping execution depending on the monkey's final behavioral goal (i.e., eating or placing the grasped object), thus showing a capacity for predictive goal coding. This capacity crucially relies on the availability of contextual information (Bonini et al., 2011). Processing this information is considered a main function of the ventrolateral PFC (VLPFC) (Fuster, 2008), which in turn can influence parietal and premotor neuron activity through its well-documented reciprocal connections with these regions (Petrides and Pandya, 1984; Barbas and Mesulam, 1985; Borra et al., 2011; Gerbella et al., 2013a). However, the possible contribution of prefrontal neurons to the processing of contextual information relevant for the organization of simple manual actions on solid objects has never been investigated.

To address this issue, we recorded single-neuron activity in the VLPFC using a modified, more strictly controlled go/no-go version of the grasp-to-eat/grasp-to-place paradigm used in previous studies of parietal and premotor neurons. With this task, we could segment and independently evaluate the processing and integration of the visual and auditory contextual cues that allowed the monkey to decide whether or not to act and what action to perform.

Materials and Methods

Experiments were performed on two 4-year-old female Macaca mulatta (M1, 3 kg; M2, 4 kg). Before the recording sessions started, the monkeys were habituated to sit on a primate chair and to interact with the experimenters. Then, they were trained to perform the task described below using the right hand, contralateral to the hemisphere to be recorded (left; Fig. 1A). When training was completed, a head fixation system (Crist Instruments) and a plastic recording chamber (AlphaOmega) were implanted under general anesthesia (ketamine hydrochloride, 5 mg/kg i.m. and medetomidine hydrochloride, 0.1 mg/kg i.m.), followed by postsurgical pain medication. Surgical procedures were the same as previously described (Bonini et al., 2010). All the experimental protocols complied with the European law on the humane care and use of laboratory animals (directives 86/609/EEC, 2003/65/CE, and 2010/63/EU), were authorized by the Italian Ministry of Health (D.M. 294/2012-C, 11/12/2012), and approved by the Veterinarian Animal Care and Use Committee of the University of Parma (Prot. 78/12 17/07/2012).

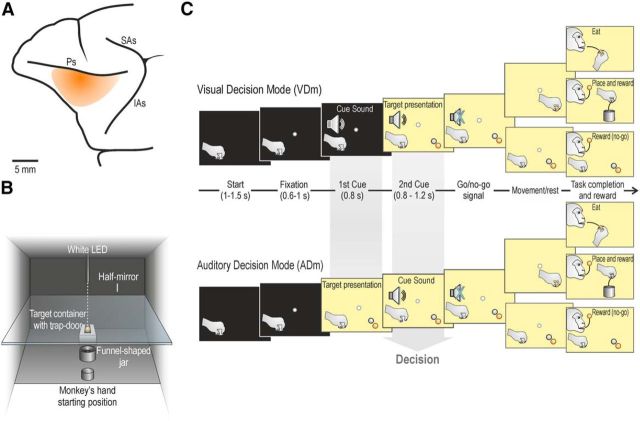

Figure 1.

Recorded region, apparatus, and temporal sequence of task events. A, Schematic view of the VLPFC region investigated in the present study. Ps, Principal sulcus; SAs, superior arcuate sulcus; IAs, inferior arcuate sulcus. B, Box and apparatus set up for performing the action sequence task, seen from the monkey's point of view. C, The task consisted of a fixed sequence of events that could be run in two modes, depending on the order of presentation of the two cues (sound and target). Integrating these cues allowed the monkey to decide what to do next, namely: (1) grasp-to-eat, (2) grasp-to-place, (3) refrain from grasping an object, or (4) refrain from grasping a food pellet. The monkey could make this decision either following the visual presentation of the target (in the VDm) or following the presentation of the cue sound (in the ADm). Both monkeys performed the task with a high success rate (92% M1 and 83% M2), with clearly more errors during go (7% M1 and 15% M2) than no-go (1% M1 and 2% M2) trials. This difference was due to motor execution errors, which could occur only in go trials. Reaction times during food and object go trials (N = 100 for each monkey, randomly selected from 20 different recording sessions) were similar in both animals (M1: food 489 ± 205 ms, object 485 ± 221 ms, t = 0.19, not significant; M2: food 524 ± 121 ms, object 542 ± 133 ms, t = 0.97, not significant).

Apparatus and behavioral paradigm

Monkeys were trained to perform a sensory-cued go/no-go action sequence task, requiring them to grasp a target to eat it or to place it into a container. A success rate of at least 80% correctly performed trials per session was adopted as a criterion for training completion, and each monkey reached this criterion in a few months (M1, 5 months; M2, 4 months). The task included different experimental conditions, performed by means of the apparatus illustrated in Figure 1B.

The monkey was seated on the primate chair in front of a box, divided horizontally into two sectors by a half-mirror. The lower sector hosted two plastic containers. One was an empty plastic jar (inner diameter 4 cm). The other was used to present the monkey with one of two possible targets: a piece of food (an ochre spherical pellet 6 mm in diameter, weighing 19 mg) or an object (a white plastic sphere of the same size and shape as the food). The target was placed in a groove at the center of the container. The bottom of the groove was closed by a computer-controlled trap-door with a small cavity in the middle, which enabled precise positioning of the target so that its center of mass was exactly 11 cm from the lower surface of the half-mirror. The target was positioned at the center of the groove in complete darkness and in the presence of constant white noise, to prevent the monkey from obtaining any visual or auditory cue during set preparation. The container for the target was positioned along the monkey's body midline, 16 cm from its hand starting position. The monkey's hand starting position consisted of a metal cylinder (diameter 3 cm, height 2.5 cm) fixed to the plane close to the monkey's body. The empty plastic jar, used as a container for placing the object, was located halfway between the hand starting position and the target. The jar had a funnel-shaped pierced bottom, so that when the object was placed into the jar it immediately fell into a box that the monkey could neither reach nor see. The upper sector of the task box hosted a small black tube fixed to the roof, containing a white light-emitting diode (LED) located 11 cm above the surface of the half-mirror. When the LED was turned on (in complete darkness), the half-mirror reflected the spot of light. The spot therefore appeared to the monkey as located in the lower sector, in the exact position of the center of mass of the not-yet-visible target (fixation point). A strip of white LEDs located in the lower sector of the box (and not directly visible to the monkey) allowed us to illuminate it during specific phases of the task. Because of the half-mirror, the fixation point remained visible even when the lower sector of the box was illuminated.

The task was run in two modes, depending on the order of presentation of the auditory and visual cues (Fig. 1C). In the visual decision mode (VDm), the cue sound was presented first and then the target became visible. In the auditory decision mode (ADm), the target was presented first and the cue sound subsequently. In both modes, the task included two cue sounds (go/no-go) and two targets (food or object). This yielded a total of 8 different conditions (2 modes × 2 cue sounds × 2 targets), which were randomly interleaved, each recorded in 12 trials (96 trials in total).
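The factorial design described above can be written down as a small data structure. The sketch below is a hypothetical illustration, not the LabView-based task-control software used in the study. The names `MODES`, `SOUNDS`, `TARGETS`, and `build_trial_list` are ours. It also assumes that "randomly interleaved" means a full shuffle of all 96 trials, because the text does not specify the randomization scheme.

```python
import random
from collections import Counter
from itertools import product

# Task factors as described in the text (names are illustrative).
MODES = ("VDm", "ADm")        # visual / auditory decision mode
SOUNDS = ("go", "no-go")      # high tone (1200 Hz) / low tone (300 Hz)
TARGETS = ("food", "object")
TRIALS_PER_CONDITION = 12


def build_trial_list(seed=None):
    """Return all (mode, sound, target) trials in random order.

    Assumption: trials are fully shuffled; the original randomization
    scheme (e.g., blocked or unconstrained) is not specified.
    """
    rng = random.Random(seed)
    trials = [
        condition
        for condition in product(MODES, SOUNDS, TARGETS)
        for _ in range(TRIALS_PER_CONDITION)
    ]
    rng.shuffle(trials)
    return trials


if __name__ == "__main__":
    trials = build_trial_list(seed=1)
    counts = Counter(trials)
    print(len(trials), len(counts))  # 96 trials, 8 distinct conditions
```

Using `itertools.product` makes the 2 × 2 × 2 structure explicit, so the 8 conditions and 96 trials follow directly from the factor definitions rather than being hard-coded.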

Each trial started when the monkey held its hand on the starting position for a variable period of time, ranging from 1 to 1.5 s (intertrial period). The temporal sequence of task events (Fig. 1C) was as follows.

Go condition in VDm.

Following presentation of the fixation point, the monkey was required to start fixating it (tolerance window 3.5°) within 1.5 s. After a variable time lag from fixation onset (0.6–1 s), the first cue was presented: a high tone consisting of a 1200 Hz sine wave, associated with go trials. After 0.8 s, the second cue was provided: the lower sector of the box was illuminated, and one of the two possible targets (food or object) became visible (target presentation). Then, after a variable time lag (0.8–1.2 s), the cue sound ceased (go signal), and the monkey was required to reach and grasp the target. In the case of the food pellet (food trials), the monkey brought it to the mouth and ate it (grasp to eat). In the case of the plastic sphere (object trials), the monkey had to place it into the jar (grasp to place). An almost natural bring-to-the-mouth movement was possible despite the presence of the half-mirror (Fig. 1B), because its border did not interfere with the insertion of the food into the mouth. Food trials were self-rewarded. Object trials were automatically rewarded with a food pellet (identical to the one used during food trials) delivered into the monkey's mouth by a customized, computer-controlled pellet dispenser (Sandown Scientific), activated by the contact of the monkey's hand with the metallic border of the jar.

No-go condition in VDm.

The temporal sequence of events in this condition was the same as in the go condition. Following presentation of the fixation point, the monkey was required to start fixating it within 1.5 s. After a variable time lag from fixation onset (0.6–1 s), the first cue was presented: a low tone consisting of a 300 Hz sine wave, associated with no-go trials. After 0.8 s, the second cue was provided: the lower sector of the box was illuminated, and one of the two possible targets (food or object) became visible (target presentation). Then, after a variable time lag (0.8–1.2 s), the cue sound ceased (no-go signal), and the monkey had to remain still, maintaining fixation for 1.2 s, during both food and object trials. After correct task accomplishment, the monkey was automatically rewarded with a food pellet as described above.

In the ADm, the temporal sequence of events in the two conditions was the same as in the VDm, but the order of presentation of the two cue stimuli was inverted: in this way, target presentation occurred before any go/no-go instruction was provided, whereas the go/no-go cue subsequently presented enabled the monkey to decide whether to act or not. Importantly, in both the ADm and VDm, only the second cue allowed the monkey to make the final decision on what to do next, regardless of its sensory modality, by integrating the information conveyed by it with that previously provided.
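To make the timing of the two modes concrete, the following sketch draws the event times of a single trial, relative to fixation onset, from the intervals given above. The function and key names are hypothetical. Two assumptions are built in. First, the end of the cue sound serves as the go/no-go signal in both modes, inferred from the statement that the ADm reproduces the VDm sequence with the cue order inverted. Second, the variable lags are drawn uniformly, which the text does not state.

```python
import random


def trial_schedule(mode, rng):
    """Draw event times (s, relative to fixation onset) for one trial.

    Hypothetical sketch: variable lags are drawn uniformly from the
    ranges stated in the text.
    """
    first_cue = rng.uniform(0.6, 1.0)              # lag from fixation onset
    second_cue = first_cue + 0.8                   # fixed 0.8 s between cues
    signal = second_cue + rng.uniform(0.8, 1.2)    # cue sound ceases
    if mode == "VDm":    # sound first, then target
        sound_on, target_on, decision_cue = first_cue, second_cue, "target"
    elif mode == "ADm":  # target first, then sound
        target_on, sound_on, decision_cue = first_cue, second_cue, "sound"
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return {
        "sound_on": sound_on,
        "target_on": target_on,
        "go_nogo_signal": signal,
        # Only the second cue lets the monkey make the final decision.
        "decision_cue": decision_cue,
    }


if __name__ == "__main__":
    rng = random.Random(0)
    for mode in ("VDm", "ADm"):
        print(mode, trial_schedule(mode, rng))
```

The `decision_cue` field records the point made above. The modality of the cue that enables the final decision depends only on the task mode: the target in the VDm, the sound in the ADm.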

Recording techniques

Neuronal recordings were performed by means of 8- and 16-channel multielectrode linear arrays: U-probes (Plexon) and silicon probes developed in the EU project NeuroProbes (Ruther et al., 2010; Herwik et al., 2011) and distributed by ATLAS Neuroengineering, respectively. Both types of probes were inserted through the intact dura by means of a manually driven stereotaxic micromanipulator mounted on the recording chamber (AlphaOmega). All penetrations were performed perpendicular to the cortical surface, with a penetration angle of ∼40° relative to the sagittal plane. Previous studies provide more details on the devices and techniques used to handle Atlas probes (Bonini et al., 2014b) and U-probes (Bonini et al., 2014a).

The recordings were performed with an 8-channel AlphaLab system (AlphaOmega) and a 16-channel Omniplex system (Plexon). The wide-band (300–7000 Hz) neuronal signal was amplified and sampled in parallel with the main behavioral events and digital signals defining the task stages. All quantitative analyses of neuronal data were performed offline, as described in the subsequent sections.

Recording of behavioral events and definition of epochs of interest

Distinct contact-sensitive devices (Crist Instruments) were used to detect when the monkey's hand touched the metal surface of the starting position, the metallic floor of the groove hosting the target (food or object) during grasping, or the metallic border of the plastic jar during placing of the object. Each of these devices provided a transistor–transistor logic (TTL) signal, which was used by LabView-based software to monitor the monkey's performance.

Eye position was monitored by an eye-tracking system composed of a 50 Hz infrared-sensitive CCD video camera (Ganz, F11CH4) and two spots of infrared light. The eye position signals, together with the TTL events generated during task execution, were sent to the LabView-based software, which monitored task unfolding and controlled the presentation of the auditory and visual cues of the behavioral paradigm. Based on TTL and eye position signals, the software automatically interrupted the trial if the monkey broke fixation, made an incorrect movement, or did not respect the temporal constraints of the behavioral paradigm. In all these cases, no reward was delivered: all the cues were switched off and, at the same time, the trap-door bearing the target opened so that the monkey could not grab it. The monkey always received the same food pellet as a reward after correct accomplishment of each type of trial.
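The abort rule for fixation breaks can be sketched as a check on the gaze samples delivered by the 50 Hz eye tracker, which produces one sample every 20 ms. This is a hypothetical illustration, not the LabView software used in the study. It assumes that gaze is already calibrated to degrees of visual angle. It also treats the 3.5° tolerance window stated for the go condition as a circle of 3.5° radius centered on the fixation point. The text does not specify the window's shape or whether 3.5° is a radius or a width.

```python
import math

SAMPLE_PERIOD_S = 1 / 50  # 50 Hz camera: one gaze sample every 20 ms


def first_fixation_break(gaze, fix_point, tolerance_deg=3.5):
    """Return the time (s) of the first gaze sample outside the window.

    gaze: sequence of (x, y) positions in degrees, one per camera frame.
    fix_point: (x, y) of the fixation point in degrees.
    Assumption: circular window of radius tolerance_deg.
    Returns None if gaze stayed inside the window for the whole sequence.
    """
    fx, fy = fix_point
    for i, (x, y) in enumerate(gaze):
        if math.hypot(x - fx, y - fy) > tolerance_deg:
            return i * SAMPLE_PERIOD_S
    return None


if __name__ == "__main__":
    samples = [(0.0, 0.0), (1.0, 1.0), (3.0, 2.5), (0.5, 0.0)]
    t = first_fixation_break(samples, (0.0, 0.0))
    # A broken fixation would abort the trial with no reward.
    print("abort" if t is not None else "ok", t)
```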

Based on the digital signals related to the main behavioral events, we defined different epochs of interest for statistical analysis of neuronal responses. (1) The sensory cue (target presentation/cue sound) epoch included the 400 ms after the onset of each cue. (2) The movement epoch ranged from 100 ms before the detachment of the monkey's hand from the starting position to 100 ms after contact with the target. (3) The resting epoch ranged from 0 to 400 ms after the end of the low sound (no-go signal). To assess whether neurons significantly responded to sensory cues, we compared the activity during the sensory cue epoch with that of the 500 ms preceding the onset of the cue (baseline epoch).
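The epoch definitions above translate directly into spike-counting windows. The minimal sketch below computes one trial's firing rate in each epoch from its spike times. It handles one cue per call, so for the two cues of a trial it would be called twice with the corresponding onset. The event keys (`cue_on`, `hand_release`, `target_contact`, `nogo_signal`) are hypothetical names for the behavioral events described in the text. The use of half-open windows is also our assumption.

```python
def mean_rate(spike_times, start, end):
    """Mean firing rate (spikes/s) in the half-open window [start, end)."""
    if end <= start:
        raise ValueError("window must have positive duration")
    n_spikes = sum(1 for t in spike_times if start <= t < end)
    return n_spikes / (end - start)


def epoch_rates(spike_times, events):
    """Firing rate in each epoch of interest for one trial.

    events: dict of event times (s) with hypothetical keys 'cue_on',
    'hand_release', 'target_contact', 'nogo_signal'; an epoch is
    computed only if the events defining it are present.
    """
    rates = {}
    if "cue_on" in events:
        cue = events["cue_on"]
        rates["baseline"] = mean_rate(spike_times, cue - 0.5, cue)  # 500 ms before cue
        rates["cue"] = mean_rate(spike_times, cue, cue + 0.4)       # 400 ms after cue
    if "hand_release" in events and "target_contact" in events:
        # From 100 ms before hand release to 100 ms after target contact.
        rates["movement"] = mean_rate(
            spike_times, events["hand_release"] - 0.1, events["target_contact"] + 0.1
        )
    if "nogo_signal" in events:
        nogo = events["nogo_signal"]
        rates["resting"] = mean_rate(spike_times, nogo, nogo + 0.4)  # 0-400 ms after no-go signal
    return rates
```

Because the movement epoch is bounded by two behavioral events, its duration varies from trial to trial. Dividing by the window length keeps rates comparable with the fixed-length cue and resting epochs.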

Data analyses and classification of the recorded neurons

Single units were isolated using standard principal component and template matching techniques, provided by dedicated offline sorting software (Plexon), as described previously (Bonini et al., 2014b).

After identification of single units that remained stable over the entire duration of the experiment, neurons were defined as “task-related” if they significantly varied their discharge during at least one of the epochs of interest (see above), investigated by means of the following repeated-measures ANOVAs (with significance criterion of p < 0.01).

Sensory response to the first cue. Single-neuron responses to the presentation of sounds (low and high tone) and targets (food and sphere) as first cues were assessed with a 2 × 2 repeated-measures ANOVA (factors: sound/target, and epoch), followed by Bonferroni post hoc tests in case of significant interaction effects. Neuronal activity during the cue presentation epoch was compared with that of the 500 ms prestimulus epoch.

Sensory response to the second cue. Each stimulus (sound or target) presented as the second cue occurred within the context established by the previously presented one. We therefore used a 2 × 2 × 2 repeated-measures ANOVA (factors: sound, target, and epoch), followed by Bonferroni post hoc tests in case of significant interaction effects. This analysis explored not only possible activity changes induced by the cue, but also possible differences in stimulus processing caused by the context in which it occurred. It was applied separately to neuronal responses recorded in the ADm and in the VDm. To verify a possible activity change specifically induced by the second cue, neuronal activity during cue presentation was compared with that of the 500 ms period before stimulus onset. A stimulus presented as the second cue could be influenced by the previously presented one, whereas a stimulus presented as the first cue could not. We therefore compared the neuronal responses evoked by each stimulus in the cued and uncued contexts using paired-samples t tests (p < 0.05, Bonferroni corrected).

Response during the movement/resting epoch. Possible modulation of single-neuron activity during the movement/resting epoch (in the go and no-go condition, respectively) was assessed by means of a 2 × 2 × 2 repeated-measures ANOVA (factors: target, condition, and epoch). Here, the movement/resting epoch defined above was compared with a baseline consisting of the 500 ms epoch preceding the presentation of the first sensory cue. We considered as motor-related all the neurons showing a significant interaction (p < 0.01, followed by Bonferroni post hoc tests) between the factors condition and epoch, with possible additional interaction with the factor target.

Based on the results of the analyses described above, task-related neurons were classified into three categories: sensory-driven (activated only by some sensory cue), motor-related (activated only during the movement/resting epoch), or sensory-and-motor (activated significantly during both sensory cue and movement/resting epochs). Population analyses were performed on specific sets of neurons classified on the basis of these analyses. They used single-neuron responses calculated as averaged activity (spk/s) in 20 ms bins across trials of the same condition. The same epochs used for single-unit data were also used for population analyses, with one exception: motor-related responses (analyzed on a trial-by-trial basis in single neurons) were analyzed over a 400 ms epoch ranging from 300 ms before hand-target contact to 100 ms after this event.
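The classification rule and the population binning described above can be sketched as follows. The function names are hypothetical. The two significance flags are assumed to come from the ANOVAs described above. The bins are assumed to be contiguous and non-overlapping. This is an illustration, not the authors' analysis code.

```python
def classify(sensory_significant, motor_significant):
    """Category of a neuron from its significant epochs (sketch)."""
    if sensory_significant and motor_significant:
        return "sensory-and-motor"
    if sensory_significant:
        return "sensory-driven"
    if motor_significant:
        return "motor-related"
    return "not task-related"


def psth(trials, align_times, t_start, t_stop, bin_width=0.02):
    """Trial-averaged firing rate (spk/s) in fixed-width bins.

    trials: list of spike-time lists (s), one per trial of a condition.
    align_times: alignment event (e.g., hand-target contact) per trial.
    Bins span [t_start, t_stop) relative to the alignment event.
    """
    n_bins = int(round((t_stop - t_start) / bin_width))
    counts = [0] * n_bins
    for spikes, t0 in zip(trials, align_times):
        for t in spikes:
            rel = t - t0
            if t_start <= rel < t_stop:
                # Clamp to guard against floating-point edge cases at t_stop.
                k = min(int((rel - t_start) // bin_width), n_bins - 1)
                counts[k] += 1
    # Convert summed counts to a rate averaged across trials.
    return [c / (len(trials) * bin_width) for c in counts]
```

For the motor-related population epoch, a call such as `psth(trials, contact_times, -0.3, 0.1)` would return 20 bins covering 300 ms before to 100 ms after hand-target contact. Here `contact_times` is a hypothetical list of per-trial contact times.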

To identify the start/end of population selectivity for specific variables (i.e., target or condition), paired-sample t tests were used to establish the first/last of a series of at least 5 consecutive 80 ms bins (slid forward in steps of 20 ms) in which the activity significantly differed (uncorrected p < 0.05) between the two compared conditions.
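The onset/end criterion can be sketched as follows. This is an illustration under stated assumptions, not the authors' code. The inputs are per-neuron PSTHs in 20 ms bins, so an 80 ms window spans 4 bins and the 20 ms step is 1 bin. The paired t test compares the window-averaged activity of the same neurons in the two conditions. The standard library has no t distribution, so the two-tailed critical t for p < 0.05 is passed in by the caller. For example, it is about 2.05 for 28 degrees of freedom (29 neurons), and with SciPy it could be obtained as `scipy.stats.t.ppf(0.975, n - 1)`. The function returns every qualifying run. Choosing the first onset and last end is left to the caller.

```python
import math
import statistics


def paired_t(a, b):
    """Paired-samples t statistic for matched lists a and b."""
    d = [x - y for x, y in zip(a, b)]
    mean_d = statistics.fmean(d)
    sd_d = statistics.stdev(d)
    if sd_d == 0:
        # Identical differences: no variance, so t is 0 or unbounded.
        return 0.0 if mean_d == 0 else math.copysign(math.inf, mean_d)
    return mean_d / (sd_d / math.sqrt(len(d)))


def significant_runs(cond_a, cond_b, t_crit, win_bins=4, step_bins=1, min_run=5):
    """Runs of >= min_run consecutive windows with |t| > t_crit.

    cond_a, cond_b: per-neuron PSTHs (same neurons, same order), each a
    list of 20 ms bins. With the defaults, windows are 80 ms wide (4 bins)
    and slide by 20 ms (1 bin). Returns (first, last) window-start bins.
    """
    n_bins = len(cond_a[0])
    flags = []
    for start in range(0, n_bins - win_bins + 1, step_bins):
        a = [statistics.fmean(row[start:start + win_bins]) for row in cond_a]
        b = [statistics.fmean(row[start:start + win_bins]) for row in cond_b]
        flags.append(abs(paired_t(a, b)) > t_crit)  # uncorrected threshold

    runs, i = [], 0
    while i < len(flags):
        if not flags[i]:
            i += 1
            continue
        j = i
        while j + 1 < len(flags) and flags[j + 1]:
            j += 1
        if j - i + 1 >= min_run:
            runs.append((i * step_bins, j * step_bins))
        i = j + 1
    return runs
```

A window index k corresponds to a window starting k × 20 ms after the first bin. Converting a run to times relative to hand-target contact therefore only requires the time of the first bin. Requiring five consecutive windows guards against isolated, chance-level crossings of the uncorrected threshold.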

Results

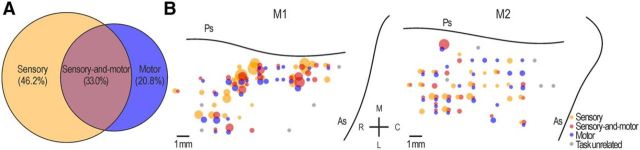

We recorded 403 task-related neurons (251 from M1 and 152 from M2). According to the criteria described above (see Materials and Methods), almost half of them (N = 186, 46.2%) were classified as “sensory-driven” (127 in M1, 59 in M2), 84 (20.8%) as “motor-related” (47 in M1 and 37 in M2), and 133 (33.0%) as “sensory-and-motor” (77 in M1, 56 in M2) (Fig. 2A; Table 1). Figure 2B shows that, in both monkeys, sensory-driven, motor-related, and sensory-and-motor neurons were not anatomically segregated in the explored VLPFC region.

Figure 2.

Types and anatomical distribution of task-related and task-unrelated neurons. A, The Venn charts represent the proportion of task-related neurons classified as sensory, motor, or sensory-and-motor. B, Reconstruction of the anatomical distribution of sensory, motor, and sensory-and-motor neurons in the explored VLPFC sector of both monkeys (M1 and M2): the size of each circle is proportional to the number of single neurons (from N = 1 to N = 18) isolated in each penetration, and characterized by the property indicated by the color code. Gray circles represent penetrations in which neurons did not show task-related responses. Ps, Principal sulcus; As, arcuate sulcus; M, medial; L, lateral; R, rostral; C, caudal.

Table 1.

Number of sensory-driven, motor-related, and sensory-and-motor neurons responding to visual and auditory cues presented in both task modes (VDm and ADm)

| Neuron class | Response to sensory cues: auditory | Response to sensory cues: visual | Response to sensory cues: auditory and visual | No sensory response | Total |
|---|---|---|---|---|---|
| Sensory-driven neurons | 6 | 145 | 35 | 0 | 186 |
| Motor-related neurons | 0 | 0 | 0 | 84 | 84 |
| Sensory-and-motor neurons | 6 | 85 | 42 | 0 | 133 |
| Total | 12 | 230 | 77 | 84 | 403 |
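As a consistency check, the four neuron categories reported in the Abstract can be derived from Table 1. Multisensory neurons are those responding to both auditory and visual cues, whether sensory-driven or sensory-and-motor. The short sketch below performs this regrouping. It only restates the table counts, and the names `TABLE_1` and `abstract_groups` are ours.

```python
# Counts from Table 1: rows are neuron classes, columns the cue modalities
# to which they responded ("none" = no sensory response).
TABLE_1 = {
    "sensory-driven": {"auditory": 6, "visual": 145, "auditory and visual": 35, "none": 0},
    "motor-related": {"auditory": 0, "visual": 0, "auditory and visual": 0, "none": 84},
    "sensory-and-motor": {"auditory": 6, "visual": 85, "auditory and visual": 42, "none": 0},
}


def unimodal(row):
    """Neurons responding to cues of a single modality (auditory or visual)."""
    return row["auditory"] + row["visual"]


def abstract_groups(table):
    """Regroup Table 1 into the four categories used in the Abstract (in %)."""
    total = sum(sum(row.values()) for row in table.values())
    counts = {
        "unimodal sensory-driven": unimodal(table["sensory-driven"]),
        "motor-related": table["motor-related"]["none"],
        "unimodal sensory-and-motor": unimodal(table["sensory-and-motor"]),
        "multisensory": table["sensory-driven"]["auditory and visual"]
        + table["sensory-and-motor"]["auditory and visual"],
    }
    return total, {name: 100 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    total, pct = abstract_groups(TABLE_1)
    print(total)  # 403
    for name, value in pct.items():
        print(f"{name}: {value:.1f}%")
```

Rounded to whole numbers, the four percentages are 37%, 21%, 23%, and 19%, the values given in the Abstract.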

In the following sections the properties of these three main neuronal categories will be described in detail.

Sensory-driven neurons

The majority of sensory-driven neurons (151 of 186, 81.2%) responded to the presentation of cue stimuli in only one of the two tested sensory modalities, namely, auditory or visual: in this section, we will focus on the description of this set of neurons. The remaining sensory-driven neurons (35 of 186, 18.8%) responded to both the cue sound and the visually presented target, and their properties will be described below in a dedicated section on integrative multisensory responses.

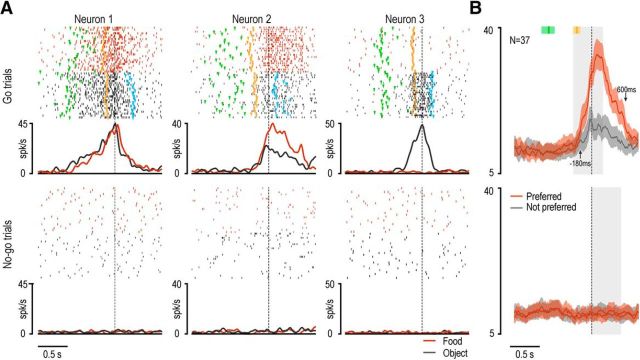

Neurons responding only to auditory cues were rarely found (N = 6). Two of them showed task-specific activation, responding to the sound only when it was presented as the first cue (in the VDm). The remaining 4 responded similarly to cue sounds in both task modes, as one would expect in the case of a purely auditory response (Fig. 3A, example Neuron 1).

Figure 3.

Examples of sensory-driven neurons and population responses. A, Examples of four different sensory-driven neurons. Rasters and histograms are aligned on target presentation and cue sound onset, which were separated by a fixed interval of 800 ms. Rasters and histograms of single-neuron response with different targets are shown in different colors. Gray shaded areas represent the 400 ms time windows used for statistical analysis of neuronal sensory responses during (symbols): (1) target presentation (light bulb), (2) high cue tone (green speaker), and (3) low cue tone (red speaker). B, Population activity of 29 visually responsive neurons showing selectivity for both condition (go/no-go) and target. The discharge of the same neuronal population is shown during target presentation cued by the preferred and not preferred sound in the VDm, as well as before the presentation of the cue sound, in the ADm. Gray speaker symbol represents the epoch in which the cue sound was presented.

In contrast to auditory-responsive neurons, neurons discharging only during visual presentation of the target were widely represented (N = 145). Thirty of them (20.7%) discharged similarly regardless of the context in which target presentation occurred. The great majority (115 of 145, 79.3%), although selectively activated during visual presentation, discharged differently depending on the previously presented auditory instruction (Table 2). Specifically, none of these neurons discharged more strongly in the ADm, in which the target was presented as the first cue, devoid of association with any previous instruction stimulus. In contrast, 95 neurons discharged more strongly (N = 86) or even exclusively (N = 9) in the VDm, when target presentation followed the go/no-go cue. The remaining 20 neurons were activated similarly in the two task modes, but in the VDm their visual response always differed depending on the previously presented cue sound (go/no-go).

Table 2.

Task mode (VDm/ADm), condition (go/no-go), and target (food/object) selectivity of visually-responsive neurons

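| Task mode selectivity | Go > No-go: Food | Go > No-go: Object | Go > No-go: Ns | No-go > Go: Food | No-go > Go: Object | No-go > Go: Ns | Go = No-go: Food | Go = No-go: Object | Go = No-go: Ns | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| VDm > ADm | 3 | 14 | 19 | 3 | 3 | 13 | 2 | 6 | 32 | 95 |
| VDm = ADm | 1 | 4 | 13 | 0 | 1 | 1 | 0 | 0 | 30 | 50 |
| Subtotal | 4 | 18 | 32 | 3 | 4 | 14 | 2 | 6 | 62 | 145 |

Food, Object: preferred target; Ns: no significant target selectivity. Totals per condition group: Go > No-go, 54; No-go > Go, 21; Go = No-go, 70 (145 neurons overall).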

Examples of contextual selectivity of visually responsive neurons are shown in Figure 3A. Neuron 2 discharged more strongly in the VDm than in the ADm, but its discharge did not encode any specific aspect of the task (go/no-go condition or type of target). Thirty-two neurons showed this behavior (Table 2). Neuron 3 responded more strongly in the VDm than in the ADm, particularly during go trials, but also showed a clear selectivity for the food item. Three neurons showed a similar behavior (Table 2). Finally, Neuron 4 responded selectively to the visual presentation of the object during go trials in the VDm. This pattern of discharge is representative of 14 of the recorded neurons (Table 2). Neurons activated differently during go and no-go trials also showed target selectivity more often (29 of 75) than the other visually responsive neurons (8 of 70; Fisher's exact probability test, p = 0.0001).
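Fisher's exact probability test used above, and again later for motor-related versus sensory-driven neurons, can be computed from the counts in Table 2 with a dependency-free sketch. This implementation is our illustration, not the authors' analysis code. It uses the common two-sided definition: sum the probabilities of all tables with the observed margins that are no more likely than the observed one. SciPy's `scipy.stats.fisher_exact` follows the same convention by default. However, the text does not state which variant was used, so the resulting p value need not match the reported one exactly.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same
    margins that are no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Probability of x in the top-left cell given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))


if __name__ == "__main__":
    # Rows: go/no-go-selective (75) vs. other visually responsive neurons (70);
    # columns: target-selective vs. not target-selective (Table 2).
    print(fisher_exact_two_sided(29, 46, 8, 62))
    # Rows: motor-related (84) vs. context-dependent sensory-driven (115).
    print(fisher_exact_two_sided(43, 41, 37, 78))
```

Fixing both margins is what makes the test exact. Given the row and column totals, the count in a single cell determines the whole table, so only one hypergeometric variable needs to be enumerated.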

To better understand the possible interaction between target selectivity and the different task contexts, we focused on those neurons showing both target selectivity and a preference for one of the two (go/no-go) conditions (N = 29). We then performed a population analysis comparing visual responses to the preferred (red line) and not preferred (black line) target (food, N = 7; object, N = 22) in three different contexts: (1) instructed by the preferred cue sound, (2) instructed by the not preferred cue sound, or (3) not instructed by any previously presented sound, in the ADm (Fig. 3B). A 3 × 2 × 2 repeated-measures ANOVA (factors: context, target [preferred/not preferred], and epoch) revealed significant main effects of the factors context (F(2,56) = 18.02, p < 0.001), target (F(1,28) = 57.17, p < 0.001), and epoch (F(1,28) = 49.69, p < 0.001). It also revealed a significant interaction of all factors (F(2,56) = 36.16, p < 0.001). Bonferroni post hoc tests indicated that population activity during target presentation in the preferred (go/no-go) condition was stronger than during all the other epochs (p < 0.001). In addition, we found a clear-cut target preference in the preferred context (p < 0.001), a barely significant one in the uncued context (p < 0.05), and no preference in the not preferred context (p = 0.30). These findings indicate that the discharge of VLPFC visually responsive neurons is not simply related to the sight of a given object. Instead, it is strongly modulated by previously available contextual information that designates visually presented objects as potential targets of forthcoming behaviors.

Motor-related neurons

Neurons classified as motor-related (N = 84) could activate either during the movement epoch of the go condition (72 of 84) or during the corresponding epoch of the no-go condition (12 of 84), in which the monkey was required to refrain from moving (Table 3). None of these neurons responded during any of the preceding cue epochs in either task mode.

Table 3.

Selectivity for target and/or go/no-go condition of motor-related neurons

| Condition selectivity | Target selectivity: food > object | Target selectivity: object > food | Target selectivity: food = object | Total |
|---|---|---|---|---|
| Go > No-go | 14 | 23 | 35 | 72 |
| No-go > Go | 2 | 4 | 6 | 12 |
| Total | 16 | 27 | 41 | 84 |

Examples of motor-related neurons are shown in Figure 4A. Neuron 1 discharged during reaching-grasping actions regardless of the type of target (food or object), whereas it did not activate during no-go trials. Of the 72 motor-related neurons discharging specifically during go trials, 35 displayed this type of activation pattern, whereas the remaining 37 showed target selectivity (Table 3). Neuron 2 in Figure 4A provides an example of a target-selective response: it discharged more strongly during grasping of the food than of the object, and it did not activate during no-go trials. Neuron 3 exhibited the opposite behavior, becoming active selectively during grasp-to-place actions.

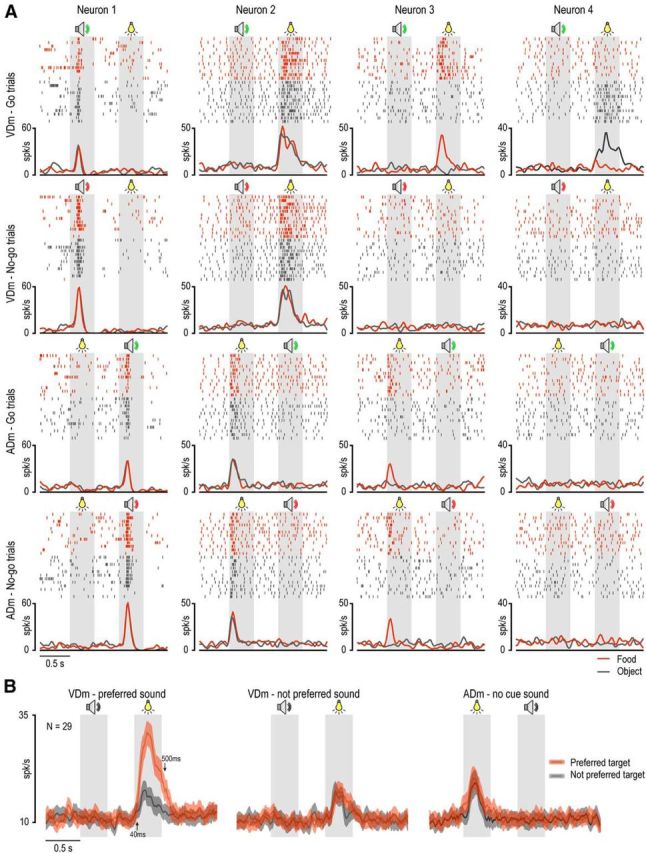

Figure 4.

Examples of motor-related neurons and population responses. A, Examples of three motor-related neurons. Rasters and histograms of go trials are aligned on hand-target contact (grasping), whereas those of no-go trials are aligned on the no-go signal. Marker color code: green, go signal; orange, detachment of the monkey's hand from the starting position (movement onset); light blue, contact of the monkey's hand with the border of the jar (placing). B, Population activity of all motor-related neurons with target selectivity. Red and gray lines indicate the average discharge intensity of neurons during grasping of the preferred and not preferred target, respectively, aligned as in the single-neuron examples in A. Colored shaded regions around each line represent 1 SEM. Gray shaded regions represent the windows used for statistical analysis of the population response. The median times of go-signal onset and movement onset are indicated with the green and orange markers, respectively, above each population plot. Shaded areas around each marker represent the 25th and 75th percentile times of the events of the same type. Black arrows indicate the time of onset (upward arrow) and end (downward arrow) of significant separation between the two compared conditions.

Notably, target selectivity was much more common among motor-related (43 of 84, 51.2%) than sensory-driven (37 of 115, 32.2%) neurons (Fisher's exact probability test, p = 0.0053), suggesting that it depends more strictly on the behavioral relevance of the target than on its perceptual features. At the population level, the response of motor-related neurons endowed with target selectivity (Fig. 4B) peaked 100 ms after hand-target contact, indicating that it was tuned to target acquisition. Furthermore, although most of the difference between the preferred and nonpreferred responses concerned the postcontact epoch, in line with previous studies using similar paradigms in other cortical areas (Bonini et al., 2010, 2012), target selectivity emerged as early as 180 ms before hand-target contact, which rules out the possibility that it depended on differences in sensory feedback from the grasped objects (Tanila et al., 1992).
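The comparison of target selectivity between motor-related and sensory-driven neurons is a 2 × 2 Fisher's exact test. The stdlib-only sketch below (function names are hypothetical) computes both the one-sided ("greater") and the two-sided p value from the counts quoted above. The text does not state which variant produced p = 0.0053, so the sketch reports both rather than assuming one.

```python
from math import comb


def table_prob(a, row1, col1, n):
    """Hypergeometric probability of a 2x2 table whose top-left cell is a,
    given the row-1 total row1, the column-1 total col1 and the grand total n."""
    return comb(col1, a) * comb(n - col1, row1 - a) / comb(n, row1)


def fisher_exact(a, b, c, d):
    """Fisher's exact test for the table [[a, b], [c, d]].

    Returns (one_sided_greater, two_sided). The two-sided value sums every
    table with the same margins that is no more probable than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    probs = {x: table_prob(x, row1, col1, n) for x in range(lo, hi + 1)}
    p_obs = probs[a]
    one_sided = sum(p for x, p in probs.items() if x >= a)
    # Small relative tolerance so floating-point ties with p_obs are counted
    two_sided = sum(p for p in probs.values() if p <= p_obs * (1 + 1e-7))
    return one_sided, min(1.0, two_sided)


# Rows: motor-related, sensory-driven; columns: target-selective, not selective
motor_sel, motor_n = 43, 84
sens_sel, sens_n = 37, 115
p_greater, p_two = fisher_exact(motor_sel, motor_n - motor_sel,
                                sens_sel, sens_n - sens_sel)
print(f"{motor_sel / motor_n:.1%} vs {sens_sel / sens_n:.1%}")
print(f"one-sided p = {p_greater:.4f}, two-sided p = {p_two:.4f}")
```

For real analyses, an established implementation such as SciPy's `fisher_exact` would normally be preferred; the explicit version here only makes the hypergeometric logic visible.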

Sensory-and-motor neurons

Sensory-and-motor neurons responded during the motor epoch of the task as well as during the presentation of cue stimuli in only one (91 of 133, 68.4%) or both (42 of 133, 31.6%) of the two tested sensory modalities (auditory and visual). In this section, we focus on the former set of neurons; the properties of those responding to both the cue sound and the visually presented target are described, together with those of sensory-driven neurons with the same feature, in the subsequent section on integrative multisensory responses.

A few unimodal sensory-and-motor neurons (N = 6) were activated by the auditory cue, whereas the majority (N = 85) responded to target presentation (Table 4). Examples of the latter type are shown in Figure 5. Neuron 1 responded to target presentation, particularly when it occurred after the go cue in the VDm, as well as during grasping execution: a preferential discharge in food trials was evident during both target presentation (p = 0.04, Bonferroni corrected) and grasping execution (p < 0.001). Neuron 2 exemplifies the opposite target preference: it responded selectively to the presentation of the object following the go cue in the VDm, and it also fired with a remarkable preference during the execution of grasp-to-place from the earliest phase of the movement. Both example neurons showed transient activation during specific phases of the task. In contrast, Neuron 3 responded to the visual presentation of the target in both the VDm and the ADm: it did not exhibit selectivity for either the type of target or the go/no-go condition, but it showed sustained activation from target presentation until the end of the trial, with a further significant increase in its firing rate following the go relative to the no-go signal.
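Several comparisons in this section are reported as Bonferroni corrected. As a hedged illustration of the generic Bonferroni adjustment (each raw p value multiplied by the number of comparisons m and capped at 1), the sketch below uses hypothetical raw p values; the number of comparisons behind the reported values is not stated here, and the helper names are illustrative.

```python
def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def significant(p_values, alpha=0.05):
    """Flag which comparisons remain significant after Bonferroni correction."""
    return [p_adj < alpha for p_adj in bonferroni(p_values)]


# Hypothetical raw p values from three pairwise post hoc comparisons
raw = [0.01, 0.02, 0.2]
print(bonferroni(raw))
print(significant(raw))
```

The correction is conservative: a raw p of 0.02 no longer passes the 0.05 threshold once three comparisons are accounted for.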

Table 4.

Task mode (VDm/ADm), condition (go/no-go), and target (food/object) selectivity of the visual response of sensory-and-motor neurons

| Task mode | Go > No-go, food | Go > No-go, object | Go > No-go, ns | No-go > Go, food | No-go > Go, object | No-go > Go, ns | Go = No-go, food | Go = No-go, object | Go = No-go, ns | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| VDm > ADm | 11 | 16 | 15 | 0 | 0 | 1 | 0 | 0 | 15 | 58 |
| VDm = ADm | 5 | 8 | 9 | 0 | 0 | 4 | 0 | 0 | 1 | 27 |
| Subtotal | 16 | 24 | 24 | 0 | 0 | 5 | 0 | 0 | 16 | 85 |

Totals by condition: Go > No-go, 64; No-go > Go, 5; Go = No-go, 16; overall, 85. ns, Target selectivity not significant.
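The marginal totals of Table 4 follow directly from its cells. The sketch below (variable names are illustrative) re-derives the row totals, the per-condition totals, and the number of go-preferring visual responses that were also target-selective, using counts transcribed from the table.

```python
# Counts transcribed from Table 4, ordered (food, object, ns) within each condition
table4 = {
    "VDm > ADm": {"Go > No-go": (11, 16, 15), "No-go > Go": (0, 0, 1), "Go = No-go": (0, 0, 15)},
    "VDm = ADm": {"Go > No-go": (5, 8, 9), "No-go > Go": (0, 0, 4), "Go = No-go": (0, 0, 1)},
}

# Row totals: sum over all conditions and target categories for each task-mode row
row_totals = {mode: sum(sum(cells) for cells in conds.values())
              for mode, conds in table4.items()}

# Condition totals: sum over both task-mode rows for each go/no-go category
condition_totals = {}
for conds in table4.values():
    for condition, cells in conds.items():
        condition_totals[condition] = condition_totals.get(condition, 0) + sum(cells)

grand_total = sum(row_totals.values())

# Go-preferring responses that were also target-selective (food or object, not ns)
go_cells = [conds["Go > No-go"] for conds in table4.values()]
go_target_selective = sum(food + obj for food, obj, _ in go_cells)

print(row_totals, condition_totals, grand_total)
print(f"{go_target_selective}/{condition_totals['Go > No-go']} go-preferring responses were target-selective")
```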

Figure 5.

Examples of sensory-and-motor neurons. For each neuron, the left part of the panels represents the response during the presentation of the sensory cues, whereas the right part (after the gap) represents the motor-related activity aligned on hand-target contact. Conventions as in Figures 3A and 4A.

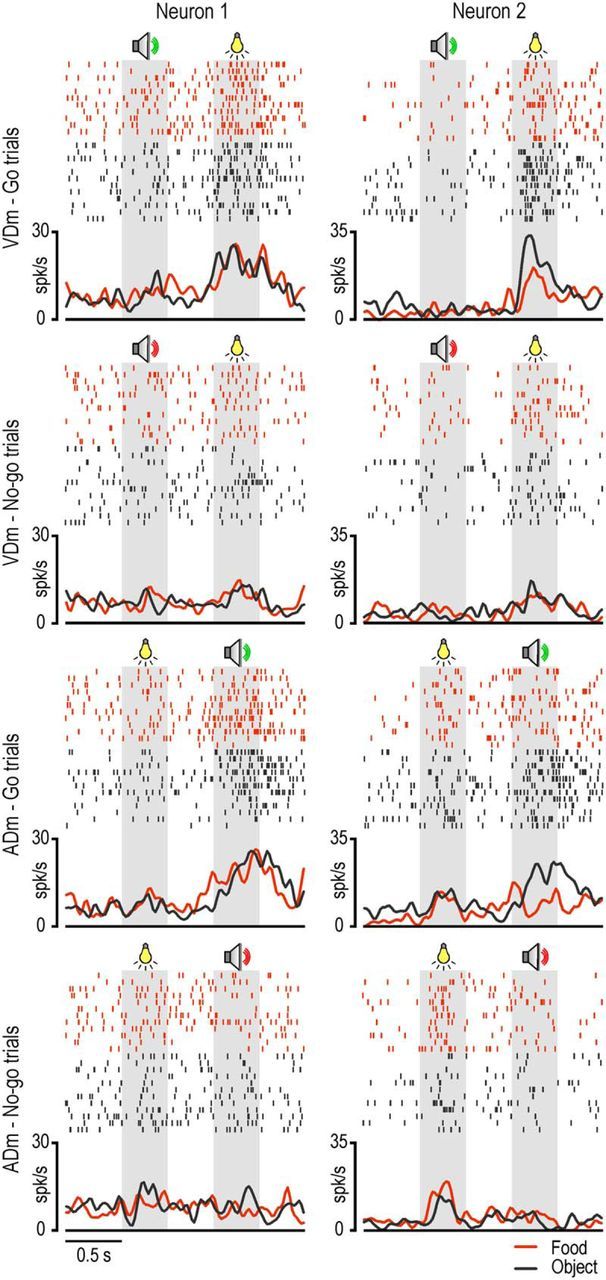

Interestingly, in terms of target selectivity and preference for the go condition, the visual response of sensory-and-motor neurons resembled the motor response of motor-related neurons more than the visual response of sensory-driven neurons (Fig. 6), suggesting that this set of neurons encodes incoming visual information in relation to the upcoming motor action. This raises the question of what the specific relationship between the visual and motor selectivity of sensory-and-motor neurons might be. To address it, we first classified sensory-and-motor neurons by the target selectivity of their visual response and then examined the target selectivity of their motor response. Figure 7A shows that most sensory-and-motor neurons with a given visual target selectivity showed the same selectivity (or no significant selectivity) in their motor response: virtually none exhibited an incongruent visuomotor preference for the target. Figure 7B shows the time course and intensity of the population activity of the same neuronal subpopulations. Even at the population level, neurons showing a visual preference for food or object (p < 0.001) during go trials in the VDm exhibited an overall significant grasp-to-eat (p < 0.001) or grasp-to-place (p < 0.001) preference in their motor response as well. Note, however, that although the motor selectivity was present regardless of the order of presentation of the contextual cues, the visual selectivity emerged only when the target was presented as the second cue, that is, when it enabled the monkey to decide which action to perform based on visual information.
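The congruence analysis of Figure 7A amounts to classifying each visually selective neuron by comparing its visual and motor target preferences. A minimal sketch, assuming preferences are coded as "food", "object", or None (no significant selectivity); the function name and the example neurons are hypothetical and are not data from the study.

```python
from collections import Counter


def congruence(visual_pref, motor_pref):
    """Classify a neuron by comparing its visual and motor target preferences.

    Preferences are "food", "object", or None (no significant selectivity).
    """
    if visual_pref is None:
        raise ValueError("only neurons with visual target selectivity are classified")
    if motor_pref is None:
        return "motor not selective"
    return "congruent" if motor_pref == visual_pref else "incongruent"


# Hypothetical example neurons as (visual_pref, motor_pref), not data from the study
neurons = [("food", "food"), ("object", "object"), ("object", None),
           ("food", None), ("object", "object")]
counts = Counter(congruence(v, m) for v, m in neurons)
print(dict(counts))
```

Grouping the same counts by `visual_pref` would reproduce the layout of a panel like Figure 7A, with one bar set per visual preference.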

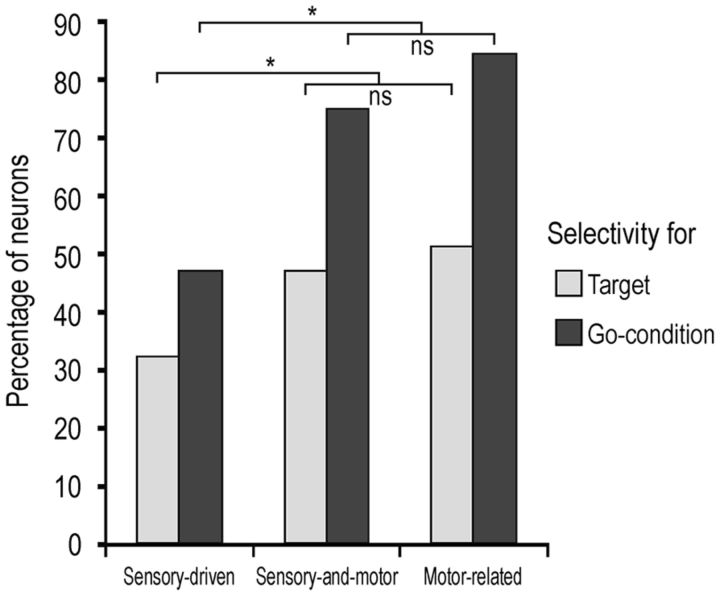

Figure 6.

Percentage of target selectivity and selectivity for the go condition in sensory-driven and sensory-and-motor neurons' visual response, and in motor-related neurons' motor response. *p < 0.05 (Fisher's exact probability test). ns, Not significant.

Figure 7.

Visuo-motor congruence of target selectivity in sensory-and-motor neurons. A, Motor selectivity of sensory-and-motor neurons showing visual selectivity for food or object. B, Population response of the two subpopulations of sensory-and-motor neurons shown in A. In each population, the left part of the panels represents the response during the presentation of the sensory cues, whereas the right part (after the gap) represents the motor-related activity aligned on hand-target contact. *p < 0.001 for all comparisons (Bonferroni post hoc tests). Other conventions as in Figures 3 and 4.

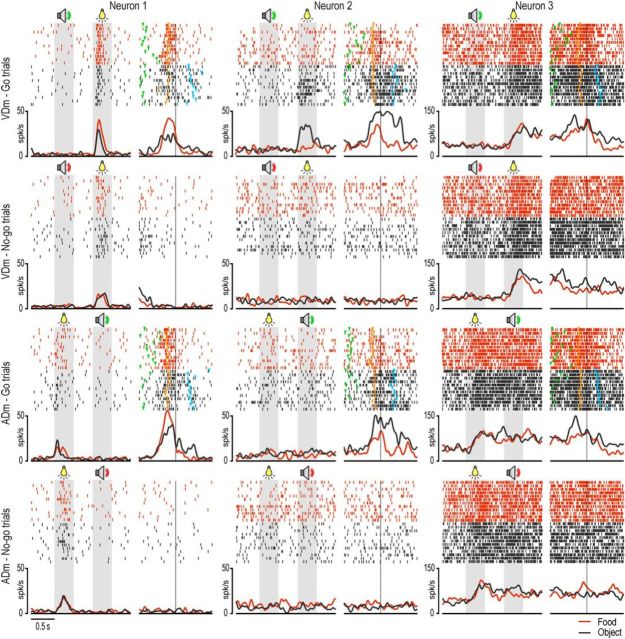

Integrative multisensory responses

As described in previous sections, some sensory-driven (N = 35) and sensory-and-motor (N = 42) neurons showed more complex multisensory properties: they discharged both to the auditory cues and to the visual presentation of the target, especially when these stimuli allowed the monkey to decide what to do next. Notably, all these neurons showed at least a preference for either the go or the no-go condition, sometimes with additional target selectivity (Table 5).

Table 5.

Go/no-go and target selectivity of multisensory neurons' responses to the second cue stimulus in both decision modes

| Go/no-go selectivity | Target: food > object | Target: object > food | Target: food = object | Total |
|---|---|---|---|---|
| Go > No-go | 8 | 23 | 36 | 67 |
| No-go > Go | 1 | 2 | 7 | 10 |
| Go = No-go | 0 | 0 | 0 | 0 |
| Total | 9 | 25 | 43 | 77 |
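The 56%/44% split reported for multisensory neurons in the Discussion can be read off Table 5: neurons without target selectivity (food = object) encode only whether to act, whereas target-selective neurons specify a particular action. The sketch below (variable names are illustrative) reproduces that arithmetic and the sizes of the subpopulations analyzed in Figures 9 and 10.

```python
# Counts transcribed from Table 5: go/no-go selectivity -> target selectivity -> N
table5 = {
    "Go > No-go": {"Food > object": 8, "Object > food": 23, "Food = object": 36},
    "No-go > Go": {"Food > object": 1, "Object > food": 2, "Food = object": 7},
    "Go = No-go": {"Food > object": 0, "Object > food": 0, "Food = object": 0},
}

total = sum(sum(row.values()) for row in table5.values())
# No target selectivity: the neuron signals only whether to act
act_or_refrain = sum(row["Food = object"] for row in table5.values())
# Target-selective: the neuron signals a specific action (grasp-to-eat or grasp-to-place)
action_specific = total - act_or_refrain

print(f"N = {total}: {act_or_refrain / total:.0%} act/refrain, "
      f"{action_specific / total:.0%} action-specific")

# Go-preferring subpopulations used for the population analyses
fig9_n = table5["Go > No-go"]["Food = object"]
fig10_n = table5["Go > No-go"]["Food > object"] + table5["Go > No-go"]["Object > food"]
print(fig9_n, fig10_n)
```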

Examples of these two types of behavior are shown in Figure 8. Neuron 1 discharged to the presentation of the second sensory cue, regardless of whether it was visual or auditory, but only during go trials: no significant response was observed to the second cue during no-go trials, nor to the go cue when it was presented first. In addition, the lack of selectivity for the type of target indicates that this neuron discharged when the cue allowed the monkey to decide to perform an action, whatever action would be performed. Neuron 2 behaved similarly but also showed target selectivity, with a clear preference for object relative to food trials. These findings suggest that multisensory neurons specifically contribute to deciding whether or not to act and, in some cases (e.g., Neuron 2), even what action to perform.

Figure 8.

Examples of multisensory neurons. Conventions as in Figures 3A and 4A.

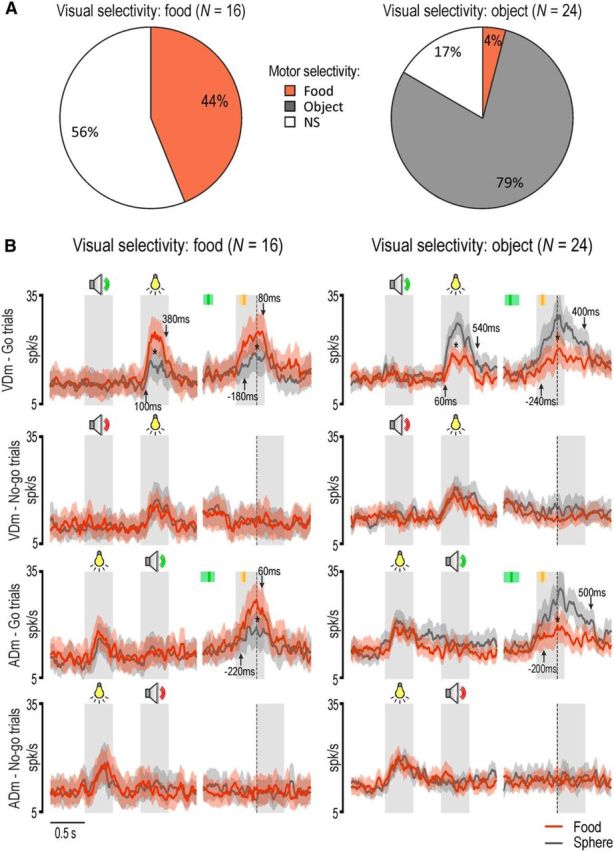

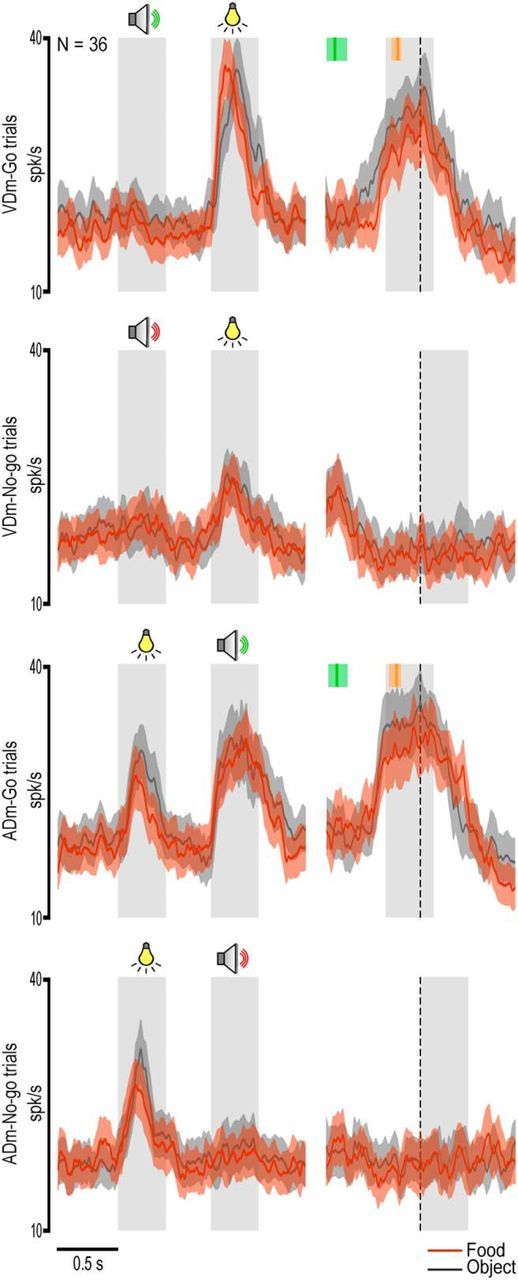

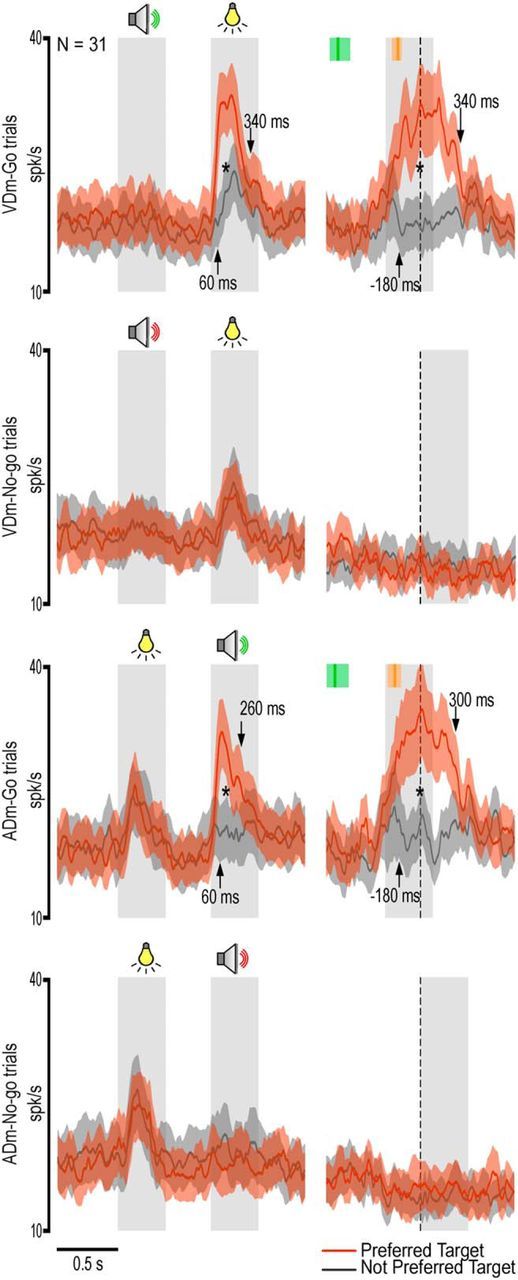

Note that, during the VDm, whether or not to act could already be established based on the first (auditory) cue, although no final decision about the specific action to perform could be made yet. To better investigate whether, and to what extent, the auditory and visual cues are processed in an integrated manner by multisensory VLPFC neurons, we separately studied the population activity of neurons with go selectivity only (Fig. 9, N = 36, of which 12 were sensory-driven and 24 were sensory-and-motor) and of those that, in addition, showed a selective response for a given action (Fig. 10, N = 31, of which 17 were sensory-driven and 14 were sensory-and-motor). The results confirm that both populations encode a motor decision when the second cue is presented, regardless of its sensory modality. However, they also show that, whereas the visual cue (i.e., target presentation) evoked a significant, although nonselective, response (p < 0.001, Bonferroni post hoc test) even when presented before the auditory cue, the auditory cue did not modulate population activity when presented first. Notably, although a significant modulation during the execution epochs was present in only some of the multisensory neurons included in the analyses shown in Figure 9 (24 of 36) and Figure 10 (14 of 31), their contribution to the population activity was strong enough to generate, in both populations, a significant motor response with the same selectivity exhibited by the neurons during the sensory cue periods. These findings strongly support the idea that the discharge of multisensory neurons is associated with the emergence of a specific motor decision.

Figure 9.

Population response of multisensory neurons showing stronger activation during the cue period in the go than in the no-go condition (Table 5). Population activity was also significant during the execution epoch: in VDm, interaction condition × epoch (F(1,35) = 46.02, p < 0.001); in ADm, interaction condition × epoch (F(1,35) = 56.01, p < 0.001). Bonferroni post hoc tests also indicated a significantly stronger discharge during object than food grasping (p < 0.001). Other conventions as in Figures 3 and 7.

Figure 10.

Population response of multisensory neurons showing selectivity for a specific motor decision during the presentation of the second sensory cue (Table 5). Population activity was also significant during the execution epoch and showed the same selectivity as during the presentation of the second sensory cue: in VDm, interaction target × condition × epoch (F(1,30) = 7.73, p < 0.01); in ADm, interaction target × condition × epoch (F(1,30) = 4.99, p < 0.05). *p < 0.001 for all comparisons (Bonferroni post hoc tests). Other conventions as in Figures 3 and 7.

Discussion

In this study, we investigated the contribution of VLPFC neurons to the processing of contextual information allowing the monkey to select one among four behavioral alternatives: grasp/not to grasp a food morsel to eat it, and grasp/not to grasp an object to place it into a container. Crucially, the monkey's decision required the integration of two sequentially presented cues: a visually presented target, indicating what action to perform, and an auditory cue, indicating whether or not to act. Depending on the order of presentation of the two cues, the monkey made its final decision based on visual (VDm) or auditory (ADm) information. Thus, if neuronal activity reflects the monkey's decision to perform a specific action, it should do so regardless of the decision mode.
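The logic of the two decision modes can be made explicit with a small sketch: each cue eliminates behavioral alternatives, and a single alternative remains only after the second cue, whichever modality it belongs to. The representation (booleans for go/no-go, strings for the target) and the function names are illustrative assumptions, not the task-control code used in the study.

```python
from itertools import product

# The four behavioral alternatives: (act?, target)
ALTERNATIVES = list(product((True, False), ("food", "object")))


def cue_order(mode):
    """VDm: auditory go/no-go cue first, target second; ADm: the reverse."""
    return ("auditory", "visual") if mode == "VDm" else ("visual", "auditory")


def remaining(go, target, cues_seen):
    """Alternatives still compatible with the cues presented so far."""
    return [
        (g, t) for g, t in ALTERNATIVES
        if ("auditory" not in cues_seen or g == go)
        and ("visual" not in cues_seen or t == target)
    ]


def decision_cue(mode, go, target):
    """Return the cue after which exactly one alternative remains."""
    seen = []
    for cue in cue_order(mode):
        seen.append(cue)
        if len(remaining(go, target, seen)) == 1:
            return cue
    return None


for mode, go, target in product(("VDm", "ADm"), (True, False), ("food", "object")):
    action = "grasp-to-eat" if target == "food" else "grasp-to-place"
    print(mode, "go" if go else "no-go", action,
          "-> decided by the", decision_cue(mode, go, target), "cue")
```

In the VDm the first (auditory) cue leaves two alternatives open, which is why a decision-related signal is expected only at the second cue in both modes.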

We found four main types of neurons, with no clear segregation within the recorded region: unimodal sensory-driven (37%), motor-related (21%), unimodal sensory-and-motor (23%), and multisensory (19%) neurons. No-go neurons were poorly represented in all categories, likely because the trap-door below the target systematically prevented the monkey from grasping it during no-go trials, thus reducing both the animal's automatic tendency to reach for potentially graspable objects and the need for active neural inhibition of this behavior. Interestingly, the discharge of multisensory neurons reflected a behavioral decision independently of the sensory modality of the stimulus that allowed the monkey to make it: some encoded a decision to act or to refrain from acting (56%), whereas others (44%) specified one among the four behavioral alternatives.

Contextual modulations of the processing of observed objects

Most unimodal sensory-driven neurons responded to the visually presented target (96%), whereas only a few (4%) encoded the auditory cue, in line with previous studies (Tanila et al., 1992; Saga et al., 2011). The wealth of visually responsive neurons in VLPFC is not surprising. However, these neurons were typically studied by presenting monkeys with abstract visual cues differing in size, shape, color, or spatial position on a screen (Fuster, 2008). Here, we showed that VLPFC neurons respond to the presentation of real solid objects, often with differential activation depending on the type of object and/or the behavioral context.

The great majority of visually triggered neurons discharged more strongly and displayed target preference when the monkey could make a decision by integrating the visual information with a previously presented cue (VDm). In contrast, when target presentation occurred before any go/no-go instruction (ADm), the response was generally weaker and devoid of any target selectivity, suggesting that VLPFC neurons mainly encode real objects depending on what the monkey is going to do with them (Watanabe, 1986; Sakagami and Niki, 1994). These findings extend previous evidence of categorical representation of visual stimuli in VLPFC (Freedman et al., 2001; Kusunoki et al., 2010) to solid objects belonging to behaviorally relevant, natural categories (i.e., edible vs inedible).

Notably, the majority of target-selective visually triggered neurons preferred the object (76%) over the food (24%): because monkeys needed explicit training to learn what to do with the object (placing), but clearly not with the food, the prevalence of object-selective neurons likely reflects the role of VLPFC in the acquisition of visuomotor associations underlying action selection (Wise and Murray, 2000).

Visuomotor selectivity for target objects in VLPFC neurons

Motor-related discharge of VLPFC neurons has been described in previous studies (Kubota and Funahashi, 1982; Quintana et al., 1988; Tanila et al., 1992), and it was proposed that these responses “reflect behavioral factors such as the goal or concept of the motor activity” (Hoshi et al., 1998), operationally identifying “goals” with different locations or shapes (e.g., circle or triangle) of a target. Here we showed that a similar coding principle also applies to motor responses during object-directed forelimb actions. Indeed, approximately half of motor-related neurons showed target selectivity as early as 180 ms before hand-target contact, suggesting that they might take part in encoding the final action goal, as previously described for IPL and PMv neurons (Fogassi et al., 2005; Bonini et al., 2010).

Interestingly, we also found a substantial number of VLPFC neurons showing both a motor-related discharge and a visual presentation response (sensory-and-motor neurons), with remarkable visuomotor congruence for the preferred target. Furthermore, we demonstrated that the visual target selectivity critically depends on the previously presented auditory (go) cue (Fig. 7), thus suggesting that it plays a role in the selection of the upcoming motor action. This proposal fits well with the rich anatomical connections linking VLPFC with IPL and PMv regions involved in the organization of goal-directed forelimb actions (Borra et al., 2011).

By comparing target selectivity during action execution in the present study with that described in previous reports on parietal and premotor neurons, it emerges that selectivity for object/placing relative to food/eating actions follows a rostrocaudal gradient, with more object selectivity in PFC (63%, present data) than in PMv (40%) and IPL (21%) (Bonini et al., 2010). This trend might reflect a greater role of frontal regions, relative to parietal cortex, in encoding learned sensory-motor associations between objects and actions; parietal cortex appears more devoted to the organization of hand-to-mouth actions automatically afforded by the intrinsic meaning of objects (Yokochi et al., 2003; Fogassi et al., 2005; Rozzi et al., 2008).

A further interesting finding is that the great majority of single cells, as well as population activity, showed transient activation in response to contextual cues and executed actions rather than sustained activity during the delay period. This may be because, in our task, both the auditory and visual information remained available for the entire delay period, making it unnecessary to rely on memory. Nevertheless, remarkably similar behavioral paradigms applied to parietal (Sakata et al., 1995) and premotor (Murata et al., 1997; Raos et al., 2006; Bonini et al., 2014a) grasping neurons revealed sustained neuronal activity and grip/object selectivity during the delay period, although no memory load was required. Other brain regions, such as dorsal premotor cortex (PMd) or basal ganglia (Hoshi, 2013), which are anatomically connected with VLPFC (Borra et al., 2011; Gerbella et al., 2013a, b), very likely underlie the generation of sustained context-dependent activation during the selection of goal-directed actions.

Multimodal integration of contextual information underlying motor decision

One of our most interesting findings is that almost 20% of the recorded neurons integrated the visual and auditory cues, providing a signal reflecting the monkey's decision to act or refrain from acting, or even the decision to perform or withhold a specific action, such as grasp-to-eat or grasp-to-place. Crucially, their response and selectivity appeared only when sufficient sensory evidence had accumulated to enable the monkey to make a final decision, regardless of whether the last piece of evidence was conveyed by auditory (go/no-go) or visual (food/object) information. Interestingly, when the cue sound was presented first, it evoked virtually no significant response (Figs. 9, 10). Nevertheless, it evoked a vigorous response when it allowed the monkey to make a decision based on the previously presented target. Where does this multisensory integration come from?

One possibility is that other brain regions, anatomically linked with the VLPFC, perform this integration, select a specific action, and send their input to VLPFC. For example, neural correlates of motor decision based on sequentially presented instructions have been demonstrated in PMd (Hoshi and Tanji, 2000; Cisek and Kalaska, 2005). However, single-neuron evidence also supports the possibility that audiovisual integration occurs in PFC (Benevento et al., 1977; Fuster et al., 2000). Although further studies are needed to address this issue, the present and previous findings indicate a key role of VLPFC in the multimodal integration of contextual information underlying the selection of goal-directed forelimb actions.

A final important question concerns what exactly the discharge of multisensory neurons represents. A plausible interpretation is that it encodes a conceptual-like representation of the monkey's final behavioral goal. Indeed, previous studies showed that VLPFC neurons can code motor goals at different levels of abstraction (Saito et al., 2005; Mushiake et al., 2006), and even conceptual representations of sequential actions (Shima et al., 2007): the present findings suggest that a similar interpretation also applies to the activation of VLPFC multimodal neurons before goal-directed manual actions.

The PFC underwent a considerable expansion during phylogeny (Passingham and Wise, 2012), which appears to parallel the increasing complexity, goal-directedness, and flexibility of animals' behavior (Sigala et al., 2008; Stokes et al., 2013). In this study, we explored the neural underpinnings of the organization and execution of goal-directed actions resembling some of the most widespread foraging behaviors of primates. Our findings expand the knowledge on prefrontal functions by showing that the majority of prefrontal neurons encode target objects and manual actions in a context-dependent manner, and a set of them even exhibits multimodal representation of the intended goal of a forthcoming action. On these bases, we propose that the PFC may host an abstract “vocabulary” of the intended goals pursued by primates in their environment.

Footnotes

This work was supported by the Italian PRIN (2010MEFNF7), Italian Institute of Technology, and European Commission Grant Cogsystem FP7–250013. We thank Marco Bimbi for assistance in software implementation; Fabrizia Festante for help in training monkeys; Elisa Barberini and Alessandro Delle Fratte for help in initial data acquisition; and Francesca Siracusa for help in acquisition and analysis of the data of the second monkey.

The authors declare no competing financial interests.

References

- Barbas H, Mesulam MM. Cortical afferent input to the principalis region of the rhesus monkey. Neuroscience. 1985;15:619–637. doi: 10.1016/0306-4522(85)90064-8.

- Benevento LA, Fallon J, Davis BJ, Rezak M. Auditory–visual interaction in single cells in the cortex of the superior temporal sulcus and the orbital frontal cortex of the macaque monkey. Exp Neurol. 1977;57:849–872. doi: 10.1016/0014-4886(77)90112-1.

- Bonini L, Rozzi S, Serventi FU, Simone L, Ferrari PF, Fogassi L. Ventral premotor and inferior parietal cortices make distinct contribution to action organization and intention understanding. Cereb Cortex. 2010;20:1372–1385. doi: 10.1093/cercor/bhp200.

- Bonini L, Serventi FU, Simone L, Rozzi S, Ferrari PF, Fogassi L. Grasping neurons of monkey parietal and premotor cortices encode action goals at distinct levels of abstraction during complex action sequences. J Neurosci. 2011;31:5876–5886. doi: 10.1523/JNEUROSCI.5186-10.2011.

- Bonini L, Ugolotti Serventi F, Bruni S, Maranesi M, Bimbi M, Simone L, Rozzi S, Ferrari PF, Fogassi L. Selectivity for grip type and action goal in macaque inferior parietal and ventral premotor grasping neurons. J Neurophysiol. 2012;108:1607–1619. doi: 10.1152/jn.01158.2011.

- Bonini L, Maranesi M, Livi A, Fogassi L, Rizzolatti G. Space-dependent representation of objects and other's action in monkey ventral premotor grasping neurons. J Neurosci. 2014a;34:4108–4119. doi: 10.1523/JNEUROSCI.4187-13.2014.

- Bonini L, Maranesi M, Livi A, Bruni S, Fogassi L, Holzhammer T, Paul O, Ruther P. Application of floating silicon-based linear multielectrode arrays for acute recording of single neuron activity in awake behaving monkeys. Biomed Tech (Berl). 2014b;59:273–281. doi: 10.1515/bmt-2012-0099.

- Borra E, Gerbella M, Rozzi S, Luppino G. Anatomical evidence for the involvement of the macaque ventrolateral prefrontal area 12r in controlling goal-directed actions. J Neurosci. 2011;31:12351–12363. doi: 10.1523/JNEUROSCI.1745-11.2011.

- Cisek P, Kalaska JF. Neural correlates of reaching decisions in dorsal premotor cortex: specification of multiple direction choices and final selection of action. Neuron. 2005;45:801–814. doi: 10.1016/j.neuron.2005.01.027.

- Cisek P, Kalaska JF. Neural mechanisms for interacting with a world full of action choices. Annu Rev Neurosci. 2010;33:269–298. doi: 10.1146/annurev.neuro.051508.135409.

- Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, Rizzolatti G. Parietal lobe: from action organization to intention understanding. Science. 2005;308:662–667. doi: 10.1126/science.1106138.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Categorical representation of visual stimuli in the primate prefrontal cortex. Science. 2001;291:312–316. doi: 10.1126/science.291.5502.312.

- Fuster JM, editor. The prefrontal cortex. Ed 4. London: Academic; 2008.

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–351. doi: 10.1038/35012613.

- Genovesio A, Wise SP, Passingham RE. Prefrontal-parietal function: from foraging to foresight. Trends Cogn Sci. 2014;18:72–81. doi: 10.1016/j.tics.2013.11.007.

- Gerbella M, Borra E, Rozzi S, Luppino G. Connectional evidence for multiple basal ganglia grasping loops in the macaque monkey. San Diego: Society for Neuroscience; 2013a.

- Gerbella M, Borra E, Tonelli S, Rozzi S, Luppino G. Connectional heterogeneity of the ventral part of the macaque area 46. Cereb Cortex. 2013b;23:967–987. doi: 10.1093/cercor/bhs096.

- Herwik S, Paul O, Ruther P. Ultrathin silicon chips of arbitrary shape by etching before grinding. J Microelectromech Syst. 2011;20:791–793. doi: 10.1109/JMEMS.2011.2148159.

- Hoshi E. Cortico-basal ganglia networks subserving goal-directed behavior mediated by conditional visuo-goal association. Front Neural Circuits. 2013;7:158. doi: 10.3389/fncir.2013.00158.

- Hoshi E, Tanji J. Integration of target and body-part information in the premotor cortex when planning action. Nature. 2000;408:466–470. doi: 10.1038/35044075.

- Hoshi E, Shima K, Tanji J. Task-dependent selectivity of movement-related neuronal activity in the primate prefrontal cortex. J Neurophysiol. 1998;80:3392–3397. doi: 10.1152/jn.1998.80.6.3392.

- Koechlin E, Summerfield C. An information theoretical approach to prefrontal executive function. Trends Cogn Sci. 2007;11:229–235. doi: 10.1016/j.tics.2007.04.005.

- Kubota K, Funahashi S. Direction-specific activities of dorsolateral prefrontal and motor cortex pyramidal tract neurons during visual tracking. J Neurophysiol. 1982;47:362–376. doi: 10.1152/jn.1982.47.3.362.

- Kusunoki M, Sigala N, Nili H, Gaffan D, Duncan J. Target detection by opponent coding in monkey prefrontal cortex. J Cogn Neurosci. 2010;22:751–760. doi: 10.1162/jocn.2009.21216.

- Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G. Object representation in the ventral premotor cortex (area F5) of the monkey. J Neurophysiol. 1997;78:2226–2230. doi: 10.1152/jn.1997.78.4.2226.

- Mushiake H, Saito N, Sakamoto K, Itoyama Y, Tanji J. Activity in the lateral prefrontal cortex reflects multiple steps of future events in action plans. Neuron. 2006;50:631–641. doi: 10.1016/j.neuron.2006.03.045.

- Passingham RE, Wise SP. The neurobiology of prefrontal cortex. Oxford: Oxford UP; 2012.

- Petrides M, Pandya DN. Projections to the frontal cortex from the posterior parietal region in the rhesus monkey. J Comp Neurol. 1984;228:105–116. doi: 10.1002/cne.902280110.

- Quintana J, Yajeya J, Fuster JM. Prefrontal representation of stimulus attributes during delay tasks: I. Unit activity in cross-temporal integration of sensory and sensory-motor information. Brain Res. 1988;474:211–221. doi: 10.1016/0006-8993(88)90436-2.

- Rainer G, Asaad WF, Miller EK. Selective representation of relevant information by neurons in the primate prefrontal cortex. Nature. 1998;393:577–579. doi: 10.1038/31235.

- Raos V, Umiltá MA, Murata A, Fogassi L, Gallese V. Functional properties of grasping-related neurons in the ventral premotor area F5 of the macaque monkey. J Neurophysiol. 2006;95:709–729. doi: 10.1152/jn.00463.2005.

- Rizzolatti G, Cattaneo L, Fabbri-Destro M, Rozzi S. Cortical mechanisms underlying the organization of goal-directed actions and mirror neuron-based action understanding. Physiol Rev. 2014;94:655–706. doi: 10.1152/physrev.00009.2013.

- Rozzi S, Ferrari PF, Bonini L, Rizzolatti G, Fogassi L. Functional organization of inferior parietal lobule convexity in the macaque monkey: electrophysiological characterization of motor, sensory and mirror responses and their correlation with cytoarchitectonic areas. Eur J Neurosci. 2008;28:1569–1588. doi: 10.1111/j.1460-9568.2008.06395.x.

- Ruther P, Herwik S, Kisban S, Seidl K, Paul O. Recent progress in neural probes using silicon MEMS technology. IEEJ Trans Elec Electron Eng. 2010;5:505–515. doi: 10.1002/tee.20566.

- Saga Y, Iba M, Tanji J, Hoshi E. Development of multidimensional representations of task phases in the lateral prefrontal cortex. J Neurosci. 2011;31:10648–10665. doi: 10.1523/JNEUROSCI.0988-11.2011.

- Saito N, Mushiake H, Sakamoto K, Itoyama Y, Tanji J. Representation of immediate and final behavioral goals in the monkey prefrontal cortex during an instructed delay period. Cereb Cortex. 2005;15:1535–1546. doi: 10.1093/cercor/bhi032.

- Sakagami M, Niki H. Encoding of behavioral significance of visual stimuli by primate prefrontal neurons: relation to relevant task conditions. Exp Brain Res. 1994;97:423–436. doi: 10.1007/BF00241536.

- Sakata H, Taira M, Murata A, Mine S. Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cereb Cortex. 1995;5:429–438. doi: 10.1093/cercor/5.5.429.

- Shima K, Isoda M, Mushiake H, Tanji J. Categorization of behavioural sequences in the prefrontal cortex. Nature. 2007;445:315–318. doi: 10.1038/nature05470.

- Sigala N, Kusunoki M, Nimmo-Smith I, Gaffan D, Duncan J. Hierarchical coding for sequential task events in the monkey prefrontal cortex. Proc Natl Acad Sci U S A. 2008;105:11969–11974. doi: 10.1073/pnas.0802569105.

- Stokes MG, Kusunoki M, Sigala N, Nili H, Gaffan D, Duncan J. Dynamic coding for cognitive control in prefrontal cortex. Neuron. 2013;78:364–375. doi: 10.1016/j.neuron.2013.01.039.

- Tanila H, Carlson S, Linnankoski I, Lindroos F, Kahila H. Functional properties of dorsolateral prefrontal cortical neurons in awake monkey. Behav Brain Res. 1992;47:169–180. doi: 10.1016/S0166-4328(05)80123-8.

- Tanji J, Hoshi E. Role of the lateral prefrontal cortex in executive behavioral control. Physiol Rev. 2008;88:37–57. doi: 10.1152/physrev.00014.2007.

- Wallis JD, Anderson KC, Miller EK. Single neurons in prefrontal cortex encode abstract rules. Nature. 2001;411:953–956. doi: 10.1038/35082081.

- Watanabe M. Prefrontal unit activity during delayed conditional Go/No-Go discrimination in the monkey: II. Relation to Go and No-Go responses. Brain Res. 1986;382:15–27. doi: 10.1016/0006-8993(86)90105-8.

- Wise SP. Forward frontal fields: phylogeny and fundamental function. Trends Neurosci. 2008;31:599–608. doi: 10.1016/j.tins.2008.08.008.

- Wise SP, Murray EA. Arbitrary associations between antecedents and actions. Trends Neurosci. 2000;23:271–276. doi: 10.1016/S0166-2236(00)01570-8.

- Yokochi H, Tanaka M, Kumashiro M, Iriki A. Inferior parietal somatosensory neurons coding face-hand coordination in Japanese macaques. Somatosens Mot Res. 2003;20:115–125. doi: 10.1080/0899022031000105145.