Abstract

The neural substrates of semantic representation have been the subject of much controversy. The study of semantic representations is complicated by difficulty in disentangling perceptual and semantic influences on neural activity, as well as in identifying stimulus-driven, “bottom-up” semantic selectivity unconfounded by top-down task-related modulations. To address these challenges, we trained human subjects to associate pseudowords (TPWs) with various animal and tool categories. To decode semantic representations of these TPWs, we used multivariate pattern classification of fMRI data acquired while subjects performed a semantic oddball detection task. Crucially, the classifier was trained and tested on disjoint sets of TPWs, so that the classifier had to use the semantic information from the training set to correctly classify the test set. Animal and tool TPWs were successfully decoded based on fMRI activity in spatially distinct subregions of the left medial anterior temporal lobe (LATL). In addition, tools (but not animals) were successfully decoded from activity in the left inferior parietal lobule. The tool-selective LATL subregion showed greater functional connectivity with left inferior parietal lobule and ventral premotor cortex, indicating that each LATL subregion exhibits distinct patterns of connectivity. Our findings demonstrate category-selective organization of semantic representations in LATL into spatially distinct subregions, continuing the lateral-medial segregation of activation in posterior temporal cortex previously observed in response to images of animals and tools, respectively. Together, our results provide evidence for segregation of processing hierarchies for different classes of objects and the existence of multiple, category-specific semantic networks in the brain.

Significance Statement

The location and specificity of semantic representations in the brain are still widely debated. We trained human participants to associate specific pseudowords with various animal and tool categories, and used multivariate pattern classification of fMRI data to decode the semantic representations of the trained pseudowords. We found that: (1) animal and tool information was organized in category-selective subregions of medial left anterior temporal lobe (LATL); (2) tools, but not animals, were encoded in left inferior parietal lobe; and (3) LATL subregions exhibited distinct patterns of functional connectivity with category-related regions across cortex. Our findings suggest that semantic knowledge in LATL is organized in category-related subregions, providing evidence for the existence of multiple, category-specific semantic representations in the brain.

Keywords: anterior temporal lobe, language, learning, multivariate pattern analysis, reading, semantic memory

Introduction

The neural correlates of semantic representation have long been controversial (Thompson-Schill, 2003). A meta-analysis of neuroimaging studies identified a left-lateralized network of regions involved in semantic processing of words, including the inferior parietal lobe (IPL), middle temporal gyrus, fusiform and parahippocampal gyri, inferior frontal gyrus, and several other regions (Binder et al., 2009). There is conflicting evidence, however, regarding the central role for any one region in the representation of semantic knowledge.

Of particular interest is the left anterior temporal lobe (LATL), which has been hypothesized to function as a “semantic hub” in which conceptual features encoded in sensory and motor cortices converge into a single amodal representation of semantic information (Patterson et al., 2007). This proposal remains controversial (Martin et al., 2014), however, and some reviews argue against a central role of the LATL, contending that the IPL and large expanses of temporal cortex exist as hubs (Binder and Desai, 2011).

The identification of semantic selectivity in neuroimaging experiments is complicated by several challenges. For one, identifying semantic areas by contrasting brain activation in a “semantic” versus “control” task may confound semantic and phonologic or orthographic processes if the control and semantic tasks do not make similar orthographic and phonological demands (Binder et al., 2009). Additionally, it is difficult to disentangle perceptual and semantic processing: a region may preferentially respond to different images of one object class (e.g., hammers) over another (e.g., faces) because pictures from the same object class share many physical features. Therefore, an apparent selectivity for conceptual attributes might simply reflect consistent responses to visual similarities between pictures. This challenge has motivated the use of word stimuli in studies of semantic representation, as orthographic similarity does not predict semantic similarity. The use of orthographic stimuli to probe the semantic system can build upon recent progress made in understanding the neural representation of whole words (Glezer et al., 2009, 2015; Dehaene and Cohen, 2011; Ludersdorfer et al., 2015). However, the rigorous identification of semantic selectivity with words requires the use of sufficiently large word sets with precisely controlled distinctions in meaning. This is a difficult aim to achieve with real words.

To address these challenges, we trained participants to associate a vocabulary of trained pseudowords (TPWs) with various animal and tool categories. Participants learned 10 novel TPWs for each of the categories (see Materials and Methods). We then used multivariate pattern analysis (MVPA) of fMRI data to decode the semantic representations of these TPWs. Crucially, machine learning classifiers were trained on fMRI data acquired in response to one set of TPWs, and subsequently tested on a disjoint set of TPWs. Therefore, in order for the classifier to correctly predict the category of the test set, it had to latch onto the semantic information associated with the trained TPW. This experimental design ensured the isolation of semantic vis-à-vis orthographic representation.

Superordinate category information (animals vs tools) was successfully decoded on the basis of fMRI activity in the medial LATL (specifically, the anterior fusiform). Classification at the basic level (distinguishing between different animal or tool categories) revealed that the LATL encoded semantic information about animals and tools in spatially distinct subregions. Our results show that the lateral/medial arrangement of perceptual representations of animals and tools found in previous studies (Chao et al., 1999; Martin, 2007) is maintained in more anterior semantic representations in the anterior temporal lobe. Further, different tool, but not animal, categories could be distinguished on the basis of fMRI activity in the left IPL (LIPL). The tool-selective LATL subregion was more functionally connected with the LIPL and ventral premotor cortex, indicating that category-related LATL subregions exhibit distinct patterns of connectivity with category-selective regions across cortex. Our study reconciles and extends prior theories of semantic representations in the brain, providing evidence for convergent yet distinct semantic representations in the ATL for multiple category domains.

Materials and Methods

Participants.

A total of 14 right-handed healthy adults who were native English speakers were enrolled in the experiment. Subjects were excluded from analysis if they had excessive head motion during the scan (n = 1) or if they had poor in-scanner performance on the task (>2 SDs below mean subject d′; n = 1), resulting in a final dataset that included 12 subjects (age 19–28 years, 7 females). Georgetown University's Institutional Review Board approved all experimental procedures, and written informed consent was obtained from all subjects before the experiment.

Training.

TPWs (all four letters in length), matched for bigram frequency, trigram frequency, and orthographic neighborhood, were generated using MCWord (Medler and Binder, 2005). Subjects were trained to learn a vocabulary of 60 TPWs, with each TPW assigned to one of six categories: monkey, donkey, elephant, hammer, wrench, and screwdriver (i.e., 10 TPWs were defined as “monkey,” 10 TPWs as “donkey,” etc.). To learn the TPW definitions, each subject performed 0.5–1 h of training per session in which a TPW was presented on a screen followed by 6 pictures: one picture for each of the animal and tool categories (randomly selected from a large database of images for each category to prevent subjects from associating particular words with particular images). Subjects indicated their category choice using a numeric keypad. When subjects answered incorrectly, an auditory beep and the correct answer were presented. No feedback was given for correct answers. A single training session consisted of 5 blocks, and a unique set of 2 TPWs (within the 10 per category) was used for each block to facilitate learning.

Masked priming.

To investigate whether the TPWs evoked semantic priming effects following training, a masked semantic priming paradigm was administered following the eighth training session. Our task structure followed that of Quinn and Kinoshita (2008): each trial consisted of a blank screen for 300 ms, the symbols ######## (forward mask) for 500 ms, prime TPW (in lowercase) for 50 ms, and target TPW (in uppercase) for 2000 ms. Because of equipment malfunction for two of the subjects, 10 subjects were included in the analysis. The subjects' task was to indicate (by button press on a standard keyboard) whether the target word was an animal word. The task consisted of 24 practice trials with real words, followed by 6 blocks of 90 trials with the TPWs. All 60 TPWs were used in the task, and each word was repeated 9 times as the target and 9 times as the prime. Trials were divided into 3 conditions depending on the relationship between the prime and target TPWs: (1) different word, same basic category (e.g., prime: monkey TPW, target: a different monkey TPW); (2) different word, different subordinate category (e.g., monkey, donkey); and (3) different word, different superordinate category (e.g., monkey, hammer). Outlier trials, defined as trials with a reaction time (RT) >2.5 SDs away from the mean, were removed before analysis.
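The outlier rule described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `remove_rt_outliers`, the use of the sample SD, and the example RTs are all assumptions, and the text does not state whether the mean and SD were computed per subject, per condition, or over all trials.

```python
from statistics import mean, stdev


def remove_rt_outliers(rts_ms, n_sd=2.5):
    """Keep only trials whose RT lies within n_sd SDs of the mean RT."""
    m = mean(rts_ms)
    sd = stdev(rts_ms)  # sample SD (assumption; the text does not specify)
    return [rt for rt in rts_ms if abs(rt - m) <= n_sd * sd]


# Hypothetical RTs (ms) for one subject; the last trial is an obvious outlier.
example_rts = [612, 640, 655, 671, 690, 702, 718, 733, 750, 2400]
cleaned = remove_rt_outliers(example_rts)
```

In this toy example only the 2400 ms trial exceeds the 2.5 SD criterion and is dropped.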

Event-related fMRI scans.

EPI images from 6 event-related runs were collected. Each run lasted 6.1 min and began and ended with a 20.4 s fixation period. Within each run, 110 TPWs were presented. Each TPW was presented for 400 ms, followed by a blank screen for 2549 ms. To maintain attention, subjects were instructed to perform an oddball detection task (Jiang et al., 2006; Glezer et al., 2015) in the scanner: Subjects were asked to press a button (with their right hand) whenever an oddball animal or tool category was presented (see Fig. 2A). Each subject was randomly assigned one oddball animal and one oddball tool category from the six possible categories. Trials containing oddball stimuli and false-alarm nonoddball trials were excluded from analyses. Only trials containing words belonging to nonoddball categories were used in the analyses.
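As a consistency check on the run timing reported above, the run duration can be reconstructed from the trial and fixation durations, assuming (this is not stated in the text) that trials followed one another with no additional gaps:

```latex
\[
110 \times (0.400\,\mathrm{s} + 2.549\,\mathrm{s}) + 2 \times 20.4\,\mathrm{s}
  = 324.39\,\mathrm{s} + 40.8\,\mathrm{s}
  = 365.19\,\mathrm{s} \approx 6.1\,\mathrm{min}
\]
```

Under that assumption the total agrees with the stated run length of 6.1 min.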

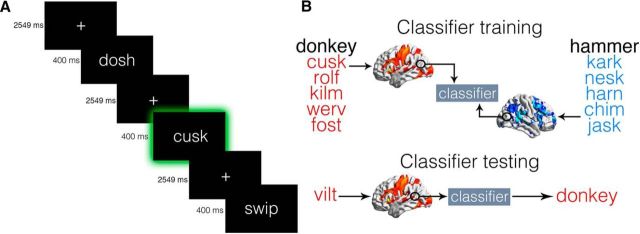

Figure 2.

fMRI oddball task paradigm and MVPA analysis schematic. A, Subjects performed an oddball detection task in the scanner, in which they responded with a button press whenever a TPW for an oddball animal or tool category was presented (illustrated here by the green frame, which was not visible in the experiment). B, Classifiers were trained on β images estimated from five TPWs for each animal and tool category. The classifiers were then tested on β images generated from a separate set of five TPWs for each category.

MRI acquisition.

MRI data were acquired at Georgetown University's Center for Functional and Molecular Imaging using an EPI sequence on a 3 tesla Siemens TIM Trio scanner. A 12 channel head coil was used (flip angle = 90°, TR = 2040 ms, TE = 29 ms, FOV = 205 mm, 64 × 64 matrix). Thirty-five interleaved axial slices (thickness = 4.0 mm, no gap; in-plane resolution = 3.2 × 3.2 mm2) were acquired. A T1-weighted MPRAGE image (resolution 1 × 1 × 1 mm3) was also acquired for each subject.

fMRI data preprocessing.

Image preprocessing was performed in SPM12 (http://www.fil.ion.ucl.ac.uk/spm/software/spm12/). The first five acquisitions of each run were discarded, and the remaining EPI images were slice time corrected to the middle slice and spatially realigned. EPI images for each subject were coregistered to the anatomical image. The anatomical image was then segmented and the resulting deformation fields for spatial normalization were saved. MVPA analyses were first performed on unsmoothed data and in subjects' native space, and then the accuracy map of each individual subject was normalized to MNI space for statistical analysis (Hebart et al., 2015).

MVPA.

MVPA analyses were performed using The Decoding Toolbox (Hebart et al., 2015) and custom MATLAB code (The MathWorks). All classifications implemented a linear support vector machine classifier with a fixed cost parameter (c = 1). We used a searchlight procedure (Kriegeskorte et al., 2006) with a radius of 2 voxels (similar results were obtained with searchlights of 3 and 4 voxels). Six-fold cross-validation was performed using a leave-one-run-out protocol in which the classifier was trained on data from 5 runs and tested on the left out sixth run. Beta-estimates were used to train and test our classifier (Pereira et al., 2009). The set of 10 TPWs from each nonoddball category were randomly divided into 2 sets of 5 TPWs, and a separate GLM was estimated for each set. Six motion parameters generated from realignment were included as regressors of no interest.
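To give a sense of the searchlight size, the sketch below enumerates the voxel offsets that fall within a sphere of radius 2 voxels around a center voxel. It assumes a simple Euclidean inclusion rule (squared distance ≤ radius squared); the exact rule used by The Decoding Toolbox may differ, and `sphere_offsets` is a hypothetical helper, not part of that toolbox.

```python
def sphere_offsets(radius_vox=2):
    """Integer (dx, dy, dz) offsets within radius_vox voxels of the center."""
    r = int(radius_vox)
    span = range(-r, r + 1)
    return [
        (dx, dy, dz)
        for dx in span
        for dy in span
        for dz in span
        if dx * dx + dy * dy + dz * dz <= radius_vox ** 2
    ]


offsets = sphere_offsets(2)
n_voxels = len(offsets)  # number of voxels contributing to each searchlight
```

Under this inclusion rule each searchlight contains 33 voxels, fewer at the edge of the brain mask.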

MVPA Analysis 1 (superordinate level classification).

For each subject, four animal versus tool classifications were performed, one for each of the four possible nonoddball category combinations (e.g., tool 1 vs animal 1, tool 2 vs animal 1, etc.). Importantly, the classifiers were trained and tested on β-images estimated from unique sets of TPWs (see Fig. 2B). The resulting accuracy maps were averaged across classifications to obtain one map per subject.
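The structure of this analysis can be sketched as follows: four tool-versus-animal pairings per subject, each evaluated with leave-one-run-out cross-validation in which training and test β images come from disjoint TPW sets. The set labels "A" and "B", the function names, and the example oddball assignment are illustrative assumptions; the classifier itself (a linear SVM in the original analysis) is omitted.

```python
from itertools import product


def superordinate_pairs(animals, tools):
    """All tool-vs-animal pairings of the non-oddball categories."""
    return list(product(tools, animals))


def leave_one_run_out(n_runs=6, train_set="A", test_set="B"):
    """Return one (train, test) split per held-out run.

    Each item is a (run, tpw_set) tuple naming a beta image: the classifier
    is trained on `train_set` betas from the other runs and tested on
    `test_set` betas from the held-out run.
    """
    runs = range(1, n_runs + 1)
    folds = []
    for held_out in runs:
        train = [(run, train_set) for run in runs if run != held_out]
        test = [(held_out, test_set)]
        folds.append((train, test))
    return folds


# Hypothetical assignment: monkey and screwdriver are this subject's oddballs.
pairs = superordinate_pairs(animals=["donkey", "elephant"],
                            tools=["hammer", "wrench"])
folds = leave_one_run_out()
```

Because the training and test images never share a TPW, above-chance accuracy cannot be driven by the orthography of individual items.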

MVPA Analysis 2 (basic level classification).

To determine whether any regions of interest (ROIs) implicated in Analysis 1 encoded information about animals, tools, or both animals and tools, two additional, separate classifications were performed on the two nonoddball animals (animal 1 vs animal 2) and two nonoddball tools (tool 1 vs tool 2), respectively.

Statistical analysis.

Each classification resulted in a whole-brain accuracy-minus-chance map. Chance was defined as 50%, the probability of the classifier randomly decoding the correct TPW category. The accuracy maps were normalized to standard space and smoothed with an isotropic 6 mm Gaussian kernel. For group level inference, we implemented SPM's second-level procedure. Accuracy-minus-chance images were submitted to a one-sample t test against 0 to determine regions where the classifier was able to decode the TPW category with greater than chance accuracy. All analyses were thresholded at a voxelwise p < 0.005 and cluster-level p < 0.05, FWE-corrected unless otherwise noted.
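For a single voxel, the group-level test described above amounts to a one-sample t test of accuracy-minus-chance values against 0. The sketch below illustrates this; the accuracies are hypothetical values for 12 subjects, not data from the study, and the cluster-level FWE correction performed in SPM is not reproduced.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, popmean=0.0):
    """t statistic for H0: mean(values) == popmean (df = n - 1)."""
    n = len(values)
    return (mean(values) - popmean) / (stdev(values) / sqrt(n))


CHANCE = 0.50  # two-class classification

# Hypothetical per-subject accuracies at one voxel (12 subjects).
accuracies = [0.58, 0.55, 0.61, 0.52, 0.57, 0.49,
              0.60, 0.54, 0.56, 0.53, 0.59, 0.51]
above_chance = [acc - CHANCE for acc in accuracies]

t_stat = one_sample_t(above_chance)
df = len(above_chance) - 1
```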

Functional connectivity.

To estimate connectivity of the animal and tool subregions of LATL, seed-to-voxel functional connectivity was assessed using the CONN-fMRI toolbox (Whitfield-Gabrieli and Nieto-Castanon, 2012; Schurz et al., 2015). Functional images were normalized into MNI space, and β images were generated for all animal and tool categories. To construct a tool-selective seed region for each subject, a sphere (radius = 8 mm) was defined centered on the voxel within the tool-selective LATL subregion from MVPA Analysis 2 with peak accuracy for decoding tool TPW. The intersection between sphere and gray matter masks was computed for each subject to ensure that only gray matter voxels were included in the seed. An analogous procedure was performed to construct animal-selective seeds. The animal and tool-selective seeds were manually inspected for each subject to ensure that there was no overlap. BOLD signal time courses were used to calculate the temporal correlation of the BOLD signal between each seed region and the rest of the brain using a general linear model. Noise due to white matter and CSF signals was regressed out using CompCor (Behzadi et al., 2007). Movement parameters were entered as covariates of no interest. Main condition effects (β values for animal and tool TPWs) were also included as covariates of no interest to ensure that temporal correlations reflected functional connectivity and did not simply reflect stimulus-related coactivation. Functional connectivity contrast images were estimated for each seed region for the contrast animal and tool TPW > rest. The rest condition was estimated according to the methods of Fair et al. (2007). Individual subject contrast images of correlation coefficients were entered into a second-level random-effects analysis to assess connectivity at the group level. Results were thresholded at a voxelwise p < 0.005 (uncorrected) and cluster-level p < 0.05 (FWE-corrected).
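The seed construction can be illustrated with a short sketch: keep the gray matter voxels whose centers lie within 8 mm of a peak voxel, then confirm that the two seeds share no voxels. The function name, the 2 mm lattice, the toy "gray matter" mask, and the peak coordinates are all hypothetical; in the study, the peaks were the per-subject voxels of maximal decoding accuracy.

```python
def sphere_seed(center_mm, gray_matter_mm, radius_mm=8.0):
    """Gray matter voxel centers (mm) within radius_mm of center_mm."""
    cx, cy, cz = center_mm
    r2 = radius_mm ** 2
    return {
        (x, y, z)
        for (x, y, z) in gray_matter_mm
        if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= r2
    }


# Toy gray matter mask: a 2 mm lattice restricted to x <= 0 (assumption).
lattice = range(-20, 21, 2)
toy_gray_matter = {(x, y, z)
                   for x in lattice for y in lattice for z in lattice
                   if x <= 0}

# Hypothetical peak coordinates for one subject.
tool_seed = sphere_seed((-6, -10, 0), toy_gray_matter)
animal_seed = sphere_seed((-6, 10, 0), toy_gray_matter)

# Automated version of the manual overlap check described in the text.
seeds_overlap = not tool_seed.isdisjoint(animal_seed)
```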

Results

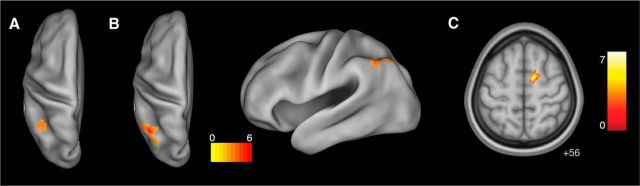

Each subject performed 8 training sessions over an average of 13.8 d (SD ± 3.2). Both accuracy and RT for identification of TPW category improved across training sessions (Fig. 1A). On average, subjects reached an accuracy of 98.7% by their eighth training session.

Figure 1.

Pseudoword training performance and semantic priming results. A, Mean accuracies and RTs for identification of pseudoword category across training sessions. B, RTs in the priming experiment completed after the eighth training session. Bars represent mean RT for the different priming conditions: DWSC, prime was different word, same subordinate category as target; DWDC, prime was different word, belonging to different subordinate category than target; DWDSC, different word, different superordinate category than target. Plot is grouped by category of the target word (animal or tool). Brackets indicate significant differences across priming conditions: *p < 0.05. Error bars indicate SEM.

Following the eighth training session, subjects were tested on a semantic priming paradigm to assess for automatic processing of stimulus meaning. A two-way ANOVA with the factors condition (different word, same basic category; different word, different subordinate category; different word, different superordinate category) and category (animals and tools) revealed a significant main effect of condition on mean RT (F(2,18) = 9.503, p = 0.002). There was no significant main effect of category (F(1,9) = 3.042, p = 0.12) and no significant interaction between category and condition (F(2,18) = 0.022, p = 0.98). Post hoc t tests showed that RT for different word, same basic category (p = 0.002) and different word, different subordinate category (p = 0.011) were significantly faster than different word, different superordinate category. Therefore, subjects were able to more quickly identify target TPW category when the target and prime TPW categories were congruent, indicating semantic priming effects following training.

In the oddball detection task in the scanner (Fig. 2A), subjects had a mean d′ for detection of the oddball category of 4.50 (SD ± 0.49) for animals and 4.29 (SD ± 0.93) for tools. The median RT was 833 ms (SD ± 152) for animal oddball words and 865 ms (SD ± 154) for tool oddball words. There were no significant differences in d′ or RT between animal and tool TPWs (p = 0.44 and p = 0.26, respectively, paired t tests).
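For reference, d′ is the difference between the z-transformed hit rate and false alarm rate. The sketch below shows one common way to compute it, together with the exclusion rule from the Participants section (d′ more than 2 SDs below the group mean). The log-linear correction (adding 0.5 to each cell), the function names, and the example values are assumptions; the text does not specify how extreme rates were handled or whether excluded subjects contributed to the mean and SD.

```python
from statistics import NormalDist, mean, stdev


def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false alarm rate), with a log-linear correction."""
    # Adding 0.5 per cell keeps rates away from 0 and 1 (assumed correction).
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


def flag_low_performers(d_primes, n_sd=2.0):
    """True for each subject whose d' is more than n_sd SDs below the mean."""
    cutoff = mean(d_primes) - n_sd * stdev(d_primes)
    return [d < cutoff for d in d_primes]


# Hypothetical d' values; the last subject performs far below the others.
example_d = [4.5, 4.4, 4.6, 4.3, 4.7, 4.5, 4.4, 4.6, 1.0]
flags = flag_low_performers(example_d)
```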

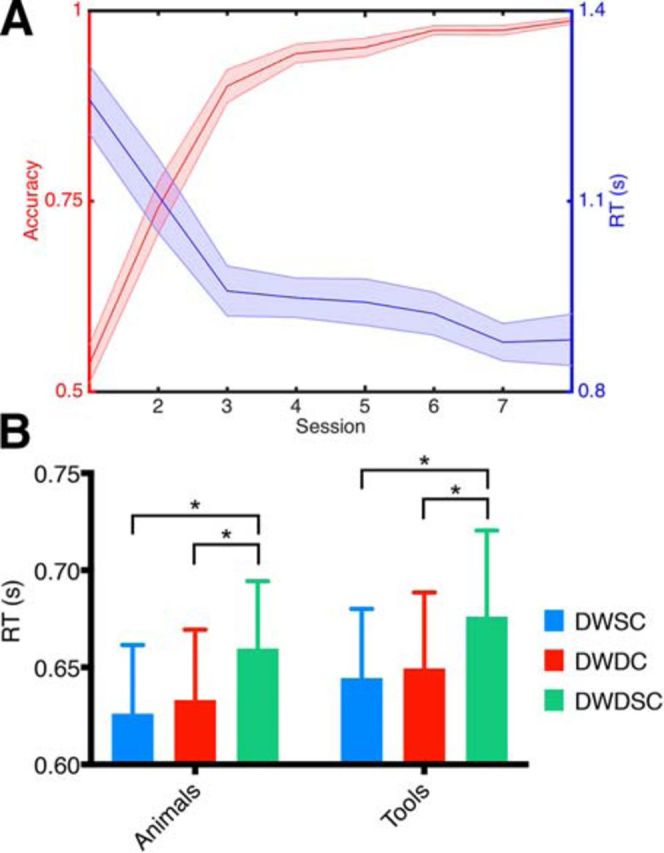

We first explored where in the brain superordinate-level semantic information (animals vs tools) could be decoded (MVPA Analysis 1). Our searchlight analysis revealed a significant cluster of above-chance classification for animals versus tools in LATL (Fig. 3A; Table 1). Visualization with Xjview (http://www.alivelearn.net/xjview) determined that the cluster was predominantly located in anterior fusiform gyrus. There was an additional cluster of above-chance classification performance in LIPL (Fig. 4A; Table 1).

Figure 3.

Superordinate and basic level classification of TPW semantics in the LATL. A, Significantly above chance decoding of TPW superordinate category in LATL (MNI peak coordinates: −38, −4, −26). Color bar represents t statistic. Thresholded at a voxelwise p < 0.005 and cluster-level p < 0.05 (FWE-corrected). B, Basic level classification of animals (blue; peak coordinates: −48, −12, −20) and tools (red; coordinates: −36, −18, −28) in distinct subregions of LATL. Thresholded at a voxelwise p < 0.005 and cluster-level p < 0.05 (FWE-corrected). For visualization purposes, other significant ROIs (LIPL and rSFG, shown in Fig. 4) have been masked here.

Table 1.

Location and cluster extent for all significant ROIs identified in the MVPA analysesᵃ

| Classification | Region | MNI coordinates (x, y, z) | Extent (voxels) |
|---|---|---|---|
| Superordinate | LATL | −38, −4, −26 | 476 |
| | LIPL | −24, −44, 42 | 367 |
| Basic level | LATL (animals) | −48, −12, −20 | 63 |
| | LATL (tools) | −36, −18, −28 | 126 |
| | LIPL (tools) | −36, −64, 44 | 683 |
| | rSFG (animals) | 10, −4, 56 | 177 |

ᵃClusters are thresholded at a voxelwise p < 0.005 and cluster-level p < 0.05, FWE-corrected.

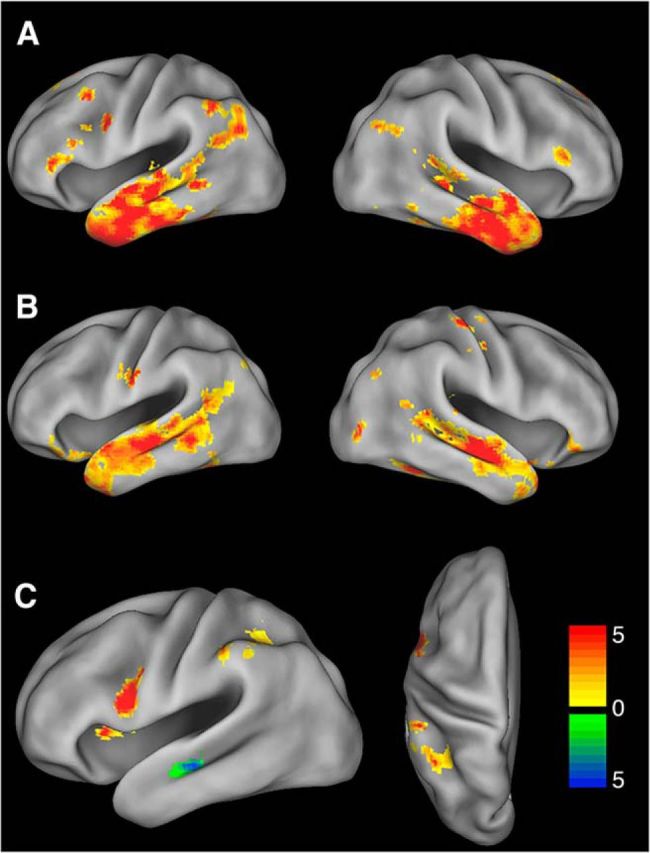

Figure 4.

Superordinate and basic level classification of TPW semantics in LIPL and rSFG. A, Classification of TPW superordinate category in LIPL (peak coordinates: −24, −44, 42). B, Basic level classification of tool TPW in LIPL (coordinates: −36, −64, 44). C, Basic level decoding of animal TPW in rSFG (coordinates: 10, −4, 56). Color bar represents t statistic. Thresholded at a voxelwise p < 0.005 and cluster-level p < 0.05 (FWE-corrected).

This above-chance classification at the superordinate level in LATL and LIPL could arise from three distinct kinds of neural representation: (1) representations encoding information about animals, but not tools; (2) representations encoding information about tools, but not animals; or (3) representations that encode information about both animals and tools. To address these possibilities, we tried to decode basic-level information (i.e., about specific animal or tool categories) from the activation patterns in the different ROI, reasoning that, if a classifier is able to decode animal and tool TPW at the basic level in a particular ROI (LATL or LIPL), then that ROI must represent information about both animals and tools.

Basic-level category membership information of animal and tool TPWs was successfully decoded from searchlights within the LATL ROI (Fig. 3B; Table 1; thresholded at a voxelwise p < 0.005 and cluster-level p < 0.05, small volume FWE-corrected with a mask of the LATL ROI). Notably, the animal-selective ROI (MNI coordinate of most significant voxel: x = −48) was located more laterally and the tool-selective ROI more medially (x = −36), indicating that animal and tool information is encoded in spatially distinct subregions of the LATL. In contrast, basic-level semantics of tool, but not animal, TPWs were successfully decoded from LIPL (Fig. 4B; Table 1), suggesting that this region represents information about different categories of tools. Finally, basic-level category information about animals (but not tools) was decoded from a region in right superior frontal gyrus (rSFG; Fig. 4C; Table 1). Visualization with Xjview determined that this cluster was predominantly located in the supplementary motor area (SMA). It is important to note that univariate contrasts (animal > tool and tool > animal TPW) revealed no significant differences in activation between conditions (voxelwise p < 0.005, uncorrected, and cluster-level p < 0.05, FWE-corrected).

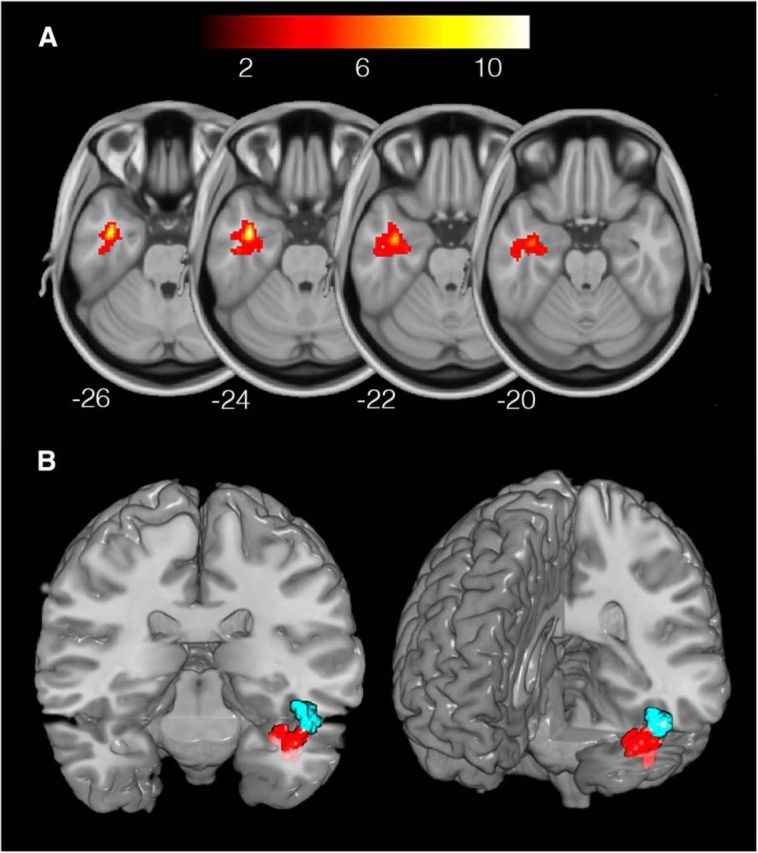

We next explored functional connectivity of the animal- and tool-selective subregions of the LATL with the rest of the brain. The tool-selective subregion showed significant functional connectivity with LIPL, left ventral premotor cortex, left dorsolateral prefrontal cortex, bilateral inferior, middle, and superior temporal gyri, bilateral inferior frontal gyri, and other regions (Fig. 5A). Fifty-four percent of voxels within the IPL ROI that was functionally connected with LATL overlapped with the tool-selective IPL region from MVPA Analysis 2. The animal-selective subregion was functionally connected with bilateral middle and superior temporal gyri, orbitofrontal cortex, posterior fusiform, and other regions (Fig. 5B). Between-seed contrasts found that the tool-selective subregion showed greater connectivity with LIPL and left ventral premotor cortex than the animal-selective subregion. Conversely, the animal-selective subregion showed greater connectivity with bilateral middle temporal gyri than the tool-selective subregion (Fig. 5C). Results were thresholded at a voxelwise p < 0.005 (uncorrected) and cluster-level p < 0.05 (FWE-corrected).

Figure 5.

Functional connectivity of tool- and animal-selective subregions of LATL. A, Connectivity of the tool-selective LATL subregion. B, Connectivity of the animal-selective LATL subregion. C, The tool-selective subregion showed greater connectivity with left ventral premotor cortex and LIPL (but not right, data not shown) (orange/red), and the animal-selective subregion showed greater connectivity with bilateral middle temporal gyri (green/blue). Color bar represents t statistic. Thresholded at a voxelwise p < 0.005 (uncorrected) and cluster-level p < 0.05 (FWE-corrected).

Discussion

Our study provides evidence for the encoding of semantic information of written TPWs in spatially distinct, category-selective subregions of the medial LATL. Additionally, the LATL subregions exhibited distinct patterns of functional connectivity; the tool-selective subregion was more strongly connected with the LIPL and ventral premotor cortex, whereas the animal-selective subregion was more strongly connected with bilateral middle temporal gyri. We also found that the LIPL selectively encoded information about the learned meaning of tool, but not animal TPWs, whereas the rSFG represented animal TPWs, but not tool. Our experimental design, in which a classifier was trained and tested on disjoint sets of TPWs, ensured that our findings were not confounded by perceptual similarities between visual stimuli. Our study design also permitted the isolation of semantic representations from other language processes, such as phonological or orthographic processing.

Our study has several implications for theories of semantic representation in the brain. The proposed role of the LATL as a key region for semantic processing, possibly even a “semantic hub,” is predominantly based on clinical observations of patients with semantic dementia. These patients have semantic deficits involving multiple concept categories across all modalities of reception and expression (Patterson et al., 2007). This interpretation has been controversial (Martin et al., 2014), however, partly because the pattern of atrophy in semantic dementia is not confined to the ATL. Broad territories of temporal cortex extending posteriorly, as well as frontal and subcortical regions are also affected (Gorno-Tempini et al., 2004). Therefore, it is not clear whether all of these regions, or some subregion, serve as the semantic hub. To address this question, Mion et al. (2010) correlated semantic measures with brain hypometabolism in a cohort of semantic dementia patients. They found that the only strong predictor of scores on verbal semantic tasks was the degree of glucose hypometabolism in the left anterior fusiform. Transcranial magnetic stimulation of the LATL has similarly replicated semantic deficits in normal participants (Pobric et al., 2007; Jackson et al., 2015).

The semantic hub theory contends that all categories of semantic knowledge converge into a single hub in the LATL. An alternative account is that multiple hubs exist throughout temporal and parietal cortices (Binder et al., 2009) rather than a single convergence zone. Our findings bridge these theories, providing evidence for spatially distinct, category-related organization within the LATL. Specifically, we found a lateral-medial segregation for animals and tools in anterior fusiform cortex that is similar to the category-selective organization in posterior fusiform cortex observed in previous studies using pictures of animals and tools. For instance, a classic study by Chao et al. (1999) found that medial aspects of the posterior fusiform were preferentially activated when subjects viewed pictures of tools, whereas lateral regions of posterior fusiform were activated in response to animals. Our findings suggest that this category-related organization in posterior fusiform is maintained into higher-level regions in the LATL containing more abstract representations.

An important prediction of a multihub theory of semantic memory is that each category-related hub should exhibit distinct patterns of functional connectivity with category-selective regions across cortex. In our study, the tool-selective LATL subregion was functionally connected with the LIPL, ventral premotor cortex, dorsolateral prefrontal cortex, and inferior frontal gyrus, all regions that have previously been shown to be associated with tool use and knowledge (for review, see Johnson-Frey, 2004; Lewis, 2006; Orban and Caruana, 2014). The finding of connectivity between the LATL and the middle temporal gyrus is consistent with previous reports of the role of the middle temporal gyrus in semantic memory of written words (Dronkers et al., 2004; Binder et al., 2009).

We also found evidence for selective encoding of animal TPW in the right SMA. This finding is not easily interpreted based on other findings in the literature. The SMA has been hypothesized to play a role in the retrieval of semantic knowledge (Hart et al., 2013); however, this retrieval has not been demonstrated to be specific to conceptual knowledge of animals. Further investigation is warranted to elucidate the role of the SMA in semantic processing.

Our study adds to a growing body of literature that has probed conceptual representations in the brain using pictures (Peelen and Caramazza, 2012; Tyler et al., 2013) or words or crossmodal stimuli (Bruffaerts et al., 2013; Devereux et al., 2013; Fairhall and Caramazza, 2013a,b; Liuzzi et al., 2015). Notably, several of these studies also reported ROI involved in semantic processing more posteriorly in the temporal lobe compared with our LATL ROI. It is interesting to speculate why our study was able to specifically identify more anterior concept-selective ROI. The aforementioned papers all compared fMRI response similarity matrices to behaviorally determined semantic similarity for various items belonging to different concept categories (e.g., different fruits, different birds). This kind of analysis likely taps into semantic feature representations that are either shared among different representatives of larger categories or differ between categories. In contrast, our design can target the representation of the basic-level concepts themselves, as all TPWs for a particular concept are associated with the same, precisely defined basic-level meaning (e.g., “elephant”), thereby producing highly consistent activation patterns at that level of abstraction. In this framework, our findings, combined with those of the aforementioned studies, suggest that the anterior concept representations we have identified might receive input from the more posterior, putatively semantic feature representations identified in the other studies.

Interestingly, such a distinction between concept-tuned and conceptual feature-tuned representations might also explain the observation that in LIPL, we found slightly different locations for superordinate-level (Fig. 4A) and basic-level ROI (Fig. 4B): While animals and tools can be distinguished based on broad semantic features (e.g., “can be held in hand”), the distinction between different kinds of tools requires a finer-grained comparison (e.g., one that is mediated by neurons selective for the different kinds of tools; e.g., “hammer” neurons vs “wrench” neurons). These tool-tuned neurons may integrate input from distinct sets of semantic features (e.g., “used with a turning motion,” “used with a pounding motion,” etc.) to achieve the required level of specificity. Thus, the superordinate level classification could be successfully achieved by activation patterns in searchlight spheres that capture semantic features shared across all tools (“can be held in hand”) and not shared with animals. Yet, these features might not be distinctive enough to allow differentiation between different kinds of tools at the basic level. Conversely, basic level classification could be achieved with searchlights that capture neuronal representations selective for different kinds of tools (i.e., “hammer” and “wrench” neurons). Because different tools activate different subsets of tool-tuned neurons, overall activation patterns for different tools would be too variable to allow reliable superordinate-level distinctions. Likewise, this distinction between feature and concept-tuned neurons would interpret the finding of basic- but not superordinate-level selectivity for animals in SFG as arguing for a concept-level representation of animals in the SFG. This could also explain why the studies analyzing the similarity of fMRI activation patterns mentioned above have not previously identified concept selectivity in the SFG. It will be interesting to test this hypothesis in future studies.

It is noteworthy that our study did not find evidence for semantic representations in posterior fusiform cortex, in particular, the VWFA region (around coordinates −46, −56, −24) (Glezer et al., 2015). The absence of semantic information in the VWFA is consistent with theories that the VWFA is a purely visual area, with representations highly selective for orthography but not semantics (Glezer et al., 2009; Dehaene and Cohen, 2011). This is not to say that activation in the VWFA/posterior fusiform cortex cannot be modulated by semantic factors through top-down signals from semantic areas under certain conditions, as has been proposed previously (Price and Devlin, 2011; Campo et al., 2013).

In conclusion, we provide evidence for a central role of distinct subregions of the ATL in the representation of multiple categories of semantic knowledge. Future studies should further investigate the category-related organization of the LATL. Are there distinct regions in ATL for additional categories of semantic knowledge? If so, does each of these regions exhibit distinct patterns of connectivity with the rest of the brain? Furthermore, do these patterns reflect the specific features, at the sensory, motor, or other levels (e.g., emotional, social), that define category membership? Future studies should also investigate whether the LATL encodes amodal representations. It would be interesting, for example, to determine whether semantic information could be decoded from the LATL when TPWs are heard, rather than read. Finally, it is interesting to consider the computational advantage that would result from the colocalization of different semantic regions in the LATL. For example, a learning procedure known as “fast-mapping” facilitates the learning of novel concepts by relating them to previously acquired concepts (Coutanche and Thompson-Schill, 2014). The encoding of multiple categories of semantic knowledge in close proximity in LATL would facilitate the integration of newly acquired concepts into existing memory networks. Fruitful questions for further study abound.

Footnotes

This work was supported by National Science Foundation Grant 1026934 to M.R., National Science Foundation Grant PIRE OISE-0730255, and NIH Intellectual and Development Disorders Research Center Grant 5P30HD040677. We thank Dr. Clara Scholl for help with data collection and Dr. Alumit Ishai for helpful comments on this manuscript.

The authors declare no competing financial interests.

References

- Behzadi Y, Restom K, Liau J, Liu TT. A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. Neuroimage. 2007;37:90–101. doi: 10.1016/j.neuroimage.2007.04.042.

- Binder JR, Desai RH. The neurobiology of semantic memory. Trends Cogn Sci. 2011;15:527–536. doi: 10.1016/j.tics.2011.10.001.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Bruffaerts R, Dupont P, Peeters R, De Deyne S, Storms G, Vandenberghe R. Similarity of fMRI activity patterns in left perirhinal cortex reflects semantic similarity between words. J Neurosci. 2013;33:18597–18607. doi: 10.1523/JNEUROSCI.1548-13.2013.

- Campo P, Poch C, Toledano R, Igoa JM, Belinchón M, García-Morales I, Gil-Nagel A. Anterobasal temporal lobe lesions alter recurrent functional connectivity within the ventral pathway during naming. J Neurosci. 2013;33:12679–12688. doi: 10.1523/JNEUROSCI.0645-13.2013.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Coutanche MN, Thompson-Schill SL. Fast mapping rapidly integrates information into existing memory networks. J Exp Psychol Gen. 2014;143:2296–2303. doi: 10.1037/xge0000020.

- Dehaene S, Cohen L. The unique role of the visual word form area in reading. Trends Cogn Sci. 2011;15:254–262. doi: 10.1016/j.tics.2011.04.003.

- Devereux BJ, Clarke A, Marouchos A, Tyler LK. Representational similarity analysis reveals commonalities and differences in the semantic processing of words and objects. J Neurosci. 2013;33:18906–18916. doi: 10.1523/JNEUROSCI.3809-13.2013.

- Dronkers NF, Wilkins DP, Van Valin RD, Jr, Redfern BB, Jaeger JJ. Lesion analysis of the brain areas involved in language comprehension. Cognition. 2004;92:145–177. doi: 10.1016/j.cognition.2003.11.002.

- Fair DA, Schlaggar BL, Cohen AL, Miezin FM, Dosenbach NU, Wenger KK, Fox MD, Snyder AZ, Raichle ME, Petersen SE. A method for using blocked and event-related fMRI data to study “resting state” functional connectivity. Neuroimage. 2007;35:396–405. doi: 10.1016/j.neuroimage.2006.11.051.

- Fairhall SL, Caramazza A. Category-selective neural substrates for person- and place-related concepts. Cortex. 2013a;49:2748–2757. doi: 10.1016/j.cortex.2013.05.010.

- Fairhall SL, Caramazza A. Brain regions that represent amodal conceptual knowledge. J Neurosci. 2013b;33:10552–10558. doi: 10.1523/JNEUROSCI.0051-13.2013.

- Glezer LS, Jiang X, Riesenhuber M. Evidence for highly selective neuronal tuning to whole words in the “visual word form area.” Neuron. 2009;62:199–204. doi: 10.1016/j.neuron.2009.03.017.

- Glezer LS, Kim J, Rule J, Jiang X, Riesenhuber M. Adding words to the brain's visual dictionary: novel word learning selectively sharpens orthographic representations in the VWFA. J Neurosci. 2015;35:4965–4972. doi: 10.1523/JNEUROSCI.4031-14.2015.

- Gorno-Tempini ML, Dronkers NF, Rankin KP, Ogar JM, Phengrasamy L, Rosen HJ, Johnson JK, Weiner MW, Miller BL. Cognition and anatomy in three variants of primary progressive aphasia. Ann Neurol. 2004;55:335–346. doi: 10.1002/ana.10825.

- Hart J, Jr, Maguire MJ, Motes M, Mudar RA, Chiang HS, Womack KB, Kraut MA. Semantic memory retrieval circuit: role of pre-SMA, caudate, and thalamus. Brain Lang. 2013;126:89–98. doi: 10.1016/j.bandl.2012.08.002.

- Hebart MN, Görgen K, Haynes JD, Dubois J. The Decoding Toolbox (TDT): a versatile software package for multivariate analyses of functional imaging data. Front Neuroinform. 2015;8:1–18. doi: 10.3389/fninf.2014.00088.

- Jackson RL, Lambon Ralph MA, Pobric G. The timing of anterior temporal lobe involvement in semantic processing. J Cogn Neurosci. 2015;27:1388–1396. doi: 10.1162/jocn_a_00788.

- Jiang X, Rosen E, Zeffiro T, Vanmeter J, Blanz V, Riesenhuber M. Evaluation of a shape-based model of human face discrimination using fMRI and behavioral techniques. Neuron. 2006;50:159–172. doi: 10.1016/j.neuron.2006.03.012.

- Johnson-Frey SH. The neural bases of complex tool use in humans. Trends Cogn Sci. 2004;8:71–78. doi: 10.1016/j.tics.2003.12.002.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Lewis JW. Cortical networks related to human use of tools. Neuroscientist. 2006;12:211–231. doi: 10.1177/1073858406288327.

- Liuzzi AG, Bruffaerts R, Dupont P, Adamczuk K, Peeters R, De Deyne S, Storms G, Vandenberghe R. Left perirhinal cortex codes for similarity in meaning between written words: comparison with auditory word input. Neuropsychologia. 2015;76:4–16. doi: 10.1016/j.neuropsychologia.2015.03.016.

- Ludersdorfer P, Kronbichler M, Wimmer H. Accessing orthographic representations from speech: the role of left ventral occipitotemporal cortex in spelling. Hum Brain Mapp. 2015;36:1393–1406. doi: 10.1002/hbm.22709.

- Martin A. The representation of object concepts in the brain. Annu Rev Psychol. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A, Kyle Simmons W, Beauchamp MS, Gotts SJ. Is a single “hub,” with lots of spokes, an accurate description of the neural architecture of action semantics? Phys Life Rev. 2014;11:261–262. doi: 10.1016/j.plrev.2014.01.002.

- Medler DA, Binder JR. An on-line orthographic database of the English language. 2005. [Accessed Sept. 10, 2010]. Available from http://www.neuro.mcw.edu/mcword/

- Mion M, Patterson K, Acosta-Cabronero J, Pengas G, Izquierdo-Garcia D, Hong YT, Fryer TD, Williams GB, Hodges JR, Nestor PJ. What the left and right anterior fusiform gyri tell us about semantic memory. Brain. 2010;133:3256–3268. doi: 10.1093/brain/awq272.

- Orban GA, Caruana F. The neural basis of human tool use. Front Psychol. 2014;5:1–12. doi: 10.3389/fpsyg.2014.00310.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- Peelen MV, Caramazza A. Conceptual object representations in human anterior temporal cortex. J Neurosci. 2012;32:15728–15736. doi: 10.1523/JNEUROSCI.1953-12.2012.

- Pereira F, Mitchell T, Botvinick M. Machine learning classifiers and fMRI: a tutorial overview. Neuroimage. 2009;45:S199–S209. doi: 10.1016/j.neuroimage.2008.11.007.

- Pobric G, Jefferies E, Ralph MA. Anterior temporal lobes mediate semantic representation: mimicking semantic dementia by using rTMS in normal participants. Proc Natl Acad Sci U S A. 2007;104:20137–20141. doi: 10.1073/pnas.0707383104.

- Price CJ, Devlin JT. The interactive account of ventral occipitotemporal contributions to reading. Trends Cogn Sci. 2011;15:246–253. doi: 10.1016/j.tics.2011.04.001.

- Quinn WM, Kinoshita S. Congruence effect in semantic categorization with masked primes with narrow and broad categories. J Mem Lang. 2008;58:286–306. doi: 10.1016/j.jml.2007.03.004.

- Schurz M, Wimmer H, Richlan F, Ludersdorfer P, Klackl J, Kronbichler M. Resting-state and task-based functional brain connectivity in developmental dyslexia. Cereb Cortex. 2015;25:3502–3514. doi: 10.1093/cercor/bhu184.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: inferring “how” from “where.” Neuropsychologia. 2003;41:280–292. doi: 10.1016/S0028-3932(02)00161-6.

- Tyler LK, Chiu S, Zhuang J, Randall B, Devereux BJ, Wright P, Clarke A, Taylor KI. Objects and categories: feature statistics and object processing in the ventral stream. J Cogn Neurosci. 2013;25:1723–1735. doi: 10.1162/jocn_a_00419.

- Whitfield-Gabrieli S, Nieto-Castanon A. A functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect. 2012;2:125–141. doi: 10.1089/brain.2012.0073.