Abstract

The role of the early visual cortex and higher-order occipitotemporal cortex has been studied extensively for visual recognition and to a lesser degree for haptic recognition and visually guided actions. Using a slow event-related fMRI experiment, we investigated whether tactile and visual exploration of objects recruit the same “visual” areas (and in the case of visual cortex, the same retinotopic zones) and if these areas show reactivation during delayed actions in the dark toward haptically explored objects (and if so, whether this reactivation might be due to imagery). We examined activation during visual or haptic exploration of objects and action execution (grasping or reaching) separated by an 18 s delay. Twenty-nine human volunteers (13 females) participated in this study. Participants had their eyes open and fixated on a point in the dark. The objects were placed below the fixation point and accordingly visual exploration activated the cuneus, which processes retinotopic locations in the lower visual field. Strikingly, the occipital pole (OP), representing foveal locations, showed higher activation for tactile than visual exploration, although the stimulus was unseen and location in the visual field was peripheral. Moreover, the lateral occipital tactile–visual area (LOtv) showed comparable activation for tactile and visual exploration. Psychophysiological interaction analysis indicated that the OP showed stronger functional connectivity with anterior intraparietal sulcus and LOtv during the haptic than visual exploration of shapes in the dark. After the delay, the cuneus, OP, and LOtv showed reactivation that was independent of the sensory modality used to explore the object. These results show that haptic actions not only activate “visual” areas during object touch, but also that this information appears to be used in guiding grasping actions toward targets after a delay.

SIGNIFICANCE STATEMENT Visual presentation of an object activates shape-processing areas and retinotopic locations in early visual areas. Moreover, if the object is grasped in the dark after a delay, these areas show “reactivation.” Here, we show that these areas are also activated and reactivated for haptic object exploration and haptically guided grasping. Touch-related activity occurs not only in the retinotopic location of the visual stimulus, but also at the occipital pole (OP), corresponding to the foveal representation, even though the stimulus was unseen and located peripherally. That is, the same “visual” regions are implicated in both visual and haptic exploration; however, touch also recruits high-acuity central representation within early visual areas during both haptic exploration of objects and subsequent actions toward them. Functional connectivity analysis shows that the OP is more strongly connected with ventral and dorsal stream areas when participants explore an object in the dark than when they view it.

Keywords: early visual cortex, fMRI, grasp, motor imagery, touch, vision

Introduction

Vision is the dominant sense for human perception, yet we often rely on other senses such as touch. Neuroimaging has revealed human brain areas implicated in using the senses of vision and touch to recognize objects (Lacey et al., 2009). These include areas in the ventral visual stream, particularly the lateral occipital tactile–visual area (LOtv; Amedi et al., 2001), and the dorsal visual stream, particularly the anterior intraparietal sulcus (aIPS; Grefkes et al., 2002). Interestingly, the division of labor between primary sensory areas for perception is not sharply segregated. For example, visual information modulates activity in the primary somatosensory cortex (Meyer et al., 2011) and tactile shape perception recruits the early visual cortex (EVC) (Snow et al., 2014). In addition, although much is known about the role of vision in guiding actions, far less is known about the role of touch. However, many of our daily interactions with objects rely on previous tactile experiences. Moreover, blind individuals must rely solely on touch to explore unfamiliar shapes and to plan interactions. Introducing a delay between the presentation of a stimulus and subsequent movement provides a valuable paradigm to disentangle the contribution of perception and action.

For vision, delayed actions are thought to rely more on the ventral visual stream than immediate actions because they require access to information stored over a longer time scale than that used by the dorsal stream, which is thought to process moment-by-moment changes that require updates to the motor plan (Goodale et al., 1994; Goodale and Westwood, 2004). Indeed, fMRI has revealed that, when participants see an object and then grasp it after a delay, LOtv is reactivated at the time of action even though no visual information is available. Moreover, transcranial magnetic stimulation applied in the vicinity of LOtv affects delayed but not immediate actions (Cohen et al., 2009). Interestingly, the EVC is also reactivated at the time of delayed actions despite the absence of visual information (Singhal et al., 2013). The re-recruitment of the EVC during actions in the dark might enhance processing of detailed information about object contours to plan a grasping movement and digit placement accurately.

The neural substrates of perception and action have mostly been studied within one sensory domain: vision or touch. Here, we examined the responses in LOtv and EVC during delayed actions toward haptically explored objects compared with visually explored objects. We had two main goals. First, we determined whether haptic and visual exploration of objects recruit the same “visual” areas and, in the case of visual cortex, the same retinotopic zones. Given that EVC shows a robust retinotopic organization for visual stimuli, we investigated whether activation in EVC for haptic exploration would be tied to the retinotopic location of the stimulus (which would facilitate multisensory crosstalk) and/or the foveal representation, in which the neural representations have the highest acuity (Williams et al., 2008). Second, we investigated whether LOtv and EVC show reactivation during grasping actions in the dark toward objects that have been haptically explored and remembered. The reactivation of these areas during action might facilitate the sensorimotor system to access detailed information about action-relevant object properties even when this information has been gained through touch rather than vision. Another (but not mutually exclusive) possibility is that the reactivation is due to visual imagery, which is known to activate EVC in perceptual tasks (Kosslyn et al., 1999). Specifically, during the action, participants might visualize the remembered haptic object or the interaction of the hand with the object.

Materials and Methods

Overview of design

fMRI participants (n = 18) performed delayed actions upon objects that they had previously seen or touched in the dark (visual vs haptic trials). Each trial was divided into three phases, exploration, delay, and execution, followed by an intertrial interval (ITI) of 20 s. Four tasks were examined: grasping, reaching to touch, imagining grasping, and stopping (aborting) a grasp plan. This design enabled us to examine the activation related to haptic and visual exploration and the reactivation related to grasping (vs reaching, imagery, and stopping).

Five regions of interest (ROIs) were defined at the group level based on independent localizer scans. An independent localizer, in which participants explored objects and textures visually or haptically, was used to identify LOtv (based on higher responses for visual and haptic exploration compared with the rest baseline) and two divisions of EVC that represented the peripheral location of the stimuli (look at object > touch object) and the foveal confluence (touch object > look at object). An independent localizer in which participants grasped, reached to touch, or passively viewed objects without a delay was used to identify the aIPS, with a contrast of (immediate grasping > immediate reaching) and dorsal premotor area (PMd), with a contrast of (immediate grasping and immediate reaching > baseline).

Participants

Twenty-nine human volunteers (age range 23–35, 13 females and 16 males) participated in this study. They were all right handed and had normal or corrected-to-normal vision. All participants provided informed consent and ethics approval was obtained from the Health Sciences Research Ethics Board at the University of Western Ontario. We collected data from 18 participants (7 females and 11 males) for the experimental runs. Ten of the 18 participants (4 females and 6 males) also participated in the LOtv localizer runs and 14 of the 18 participants (5 females and 9 males) also participated in the aIPS localizer runs. A different set of 11 participants (6 females and 5 males) underwent standard retinotopic mapping procedures.

Main experiment: timing and experimental conditions

We used high-field (4 T) fMRI to measure the BOLD signal (Ogawa et al., 1992) in a slow event-related delayed action paradigm. As shown in Figure 1A, participants first explored curvy planar objects visually or haptically and then performed delayed hand actions. Stimuli were located in the workspace of the hand below a fixation point such that the scene was viewed directly without mirrors (Culham et al., 2003). Each trial sequence (Fig. 1B) consisted of the following: (1) a color-coded cue at fixation to grasp, reach, or imagine grasping on the upcoming trial; (2) a recorded voice that instructed participants to look at or touch an object in the subsequent exploration phase; (3) a 4 s period signaled by a “beep” at the beginning and end of visual or tactile exploration of the target object; (4) a long delay interval of 18 s; (5) an auditory cue to perform the cued action upon the object based on the information held in memory (go) or to simply abort the action (stop); and (6) an ITI of 20 s before the next event. Grasping movements consisted of reaching to grasp the shape with a precision grip and reaching movements consisted of reaching to touch the shape with the knuckles. In the imagine grasping condition, participants were asked to imagine themselves grasping the shape without performing a real action; in stop trials, participants were required to abort the action, so no action was performed and there was no need to recall the stimulus. The “stop” instruction occurred in grasping trials only. The grasp stop trials were included as a control condition to distinguish whether the reactivation at the go cue was related to action execution or the end of the trial (Shulman et al., 2002).
As described previously (Singhal et al., 2013), we introduced a long delay (18 s) between the initial cue and the go/stop instructions to unequivocally distinguish reactivation at the go cue from activation related to visual or haptic exploration of the shapes and to isolate the delay period. Importantly, aside from 4 s of visual illumination in visual trials, participants remained in complete darkness except for a small light-emitting diode (LED), which was too dim to allow vision of anything else within the scanner bore. Each participant was trained and tested in a short practice session (10–15 min) before the fMRI experiment. All participants were able to associate the color of the fixation point to the corresponding task with 100% accuracy before the fMRI experiment began. Because we could not check the correctness of the performance inside of the magnet bore, we do not have confirmation that participants were performing the correct tasks during the fMRI experiment.
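The trial sequence described above can be laid out as a simple timeline. The following is a minimal sketch, not the presentation code used in the study: the phase names are hypothetical, and the assumption that the 20 s ITI begins after a 2 s execution window (the duration used for the execution predictor) is made here only for illustration. The remaining durations are taken from the text and from Figure 1B.

```python
# Phase durations in seconds, taken from the trial description.
# Assumption (not stated in the text): the ITI starts after a 2 s execution window.
PHASES = [
    ("task_cue", 4),       # color-coded fixation cue
    ("modality_cue", 2),   # recorded voice: look or touch
    ("exploration", 4),    # bounded by two beeps
    ("delay", 18),
    ("execution", 2),      # go or stop cue
    ("iti", 20),
]

def trial_timeline(phases=PHASES):
    """Return (name, onset_s, offset_s) for each phase of one trial."""
    timeline, t = [], 0
    for name, duration in phases:
        timeline.append((name, t, t + duration))
        t += duration
    return timeline

timeline = trial_timeline()
trial_length = timeline[-1][2]
for name, onset, offset in timeline:
    print(f"{name:13s} {onset:3d}-{offset:3d} s")
print("trial length (s):", trial_length)
print("eight trials (min):", 8 * trial_length / 60)
```

Under these assumptions, one trial lasts 50 s and eight trials take approximately 6.7 min, which is in line with the stated run time of 7 min.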

Figure 1.

Image of the setup and timing of the experimental runs. A, The setup required participants to gaze at the fixation point marked with a cross while performing the tasks. The stimuli are shown on the right. B, At the beginning of each trial, the color of the fixation light cued participants about the task to be performed at the end of the trial (grasp, reach, or imagine grasping). After 4 s, an auditory cue instructed participants whether they had to view or touch the object during the exploration phase that occurred 2 s later and was cued by a “beep” sound. In visual trials, the object was illuminated for 4 s, whereas in haptic trials, participants haptically explored the object in the dark. The end of the exploration phase was cued by another “beep” sound. The exploration phase was followed by a delay of 18 s, after which a “go” recorded voice prompted participants to perform the movement that they had been instructed at the beginning of the trial. A “stop” recorded voice instructed participants to abort the trial. We used an ITI of 20 s. C, Group-averaged event-related BOLD activity from ventral LOtv in the LH for visual trials (dotted lines) and haptic trials (solid lines). Note that go and stop trials were indicated only at the end of the trial. Therefore, time course graphs before the go/stop cue show the %BOLD signal change (%BSC) for grasp (green), reach (light blue), and imagine grasping (orange). The time course graphs after the go/stop cue show the %BSC also for stop trials (dark green). The motion artifacts (dashed lines) at volume 1 (or second 2) and volume 12 (or second 24) were modeled with “predictors of no interest” to account for artifact variance and were excluded from further analysis. Events in C are time locked to correspond to events in B.

In summary, a 4 × 2 factorial design, with factors of task (grasp, reach, imagine grasping, and grasp stop) and sensory modality (visual or haptic) led to eight trial types in the execution phase: visual grasp, visual reach, visual imagine grasping, visual grasp stop, haptic grasp, haptic reach, haptic imagine grasping, haptic grasp stop. There were eight trials per experimental run, with each of the eight trial types presented in counterbalanced order for a run time of 7 min. Participants completed an average of 48 total trials (six trials of each of the eight trial types).
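The factorial structure and trial counts can be checked with a short enumeration. This is an illustrative sketch only; the variable names are hypothetical, and the value of six runs is taken from the typical session described later in the Methods.

```python
from itertools import product

TASKS = ["grasp", "reach", "imagine grasping", "grasp stop"]
MODALITIES = ["visual", "haptic"]

# Eight execution-phase trial types (2 modalities x 4 tasks).
trial_types = [f"{modality} {task}" for modality, task in product(MODALITIES, TASKS)]

n_runs = 6                      # typical number of experimental runs per session
trials_per_run = len(trial_types)   # each trial type presented once per run
total_trials = n_runs * trials_per_run
trials_per_type = total_trials // len(trial_types)

print(trial_types)
print(total_trials, trials_per_type)
```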

Apparatus

Goal-directed actions and shape–texture discrimination tasks were performed toward real 3D stimuli presented to the participants using our second version of the grasping apparatus or “grasparatus” (grasparatus II; Fig. 1A). This was a nonmetallic octagonal rotating drum that could be rotated to each of the eight faces between trials using a computer-controlled pneumatic system, allowing the subject to view a new stimulus after each rotation. We used a set of 37 3D stimuli of unfamiliar translucent white plastic shapes of similar size and different orientations (see example in Fig. 1A). The 3D stimuli were affixed to strips of Velcro attached to the external surface of the grasparatus, which was covered with the complementary side of the Velcro. The strips of Velcro with the attached stimuli could easily be changed between functional runs. The grasparatus was placed ∼10 cm above the subject's pelvis at a comfortable and natural grasping distance. The starting position of the right hand was placed around the navel and the left hand rested beside the body. A bright white LED supported by a flexible stalk mounted on one side of the grasparatus was placed atop and in front of the grasparatus to illuminate the object without occluding the view of the workspace.

During the experiment, participants were in complete darkness with their head tilted ∼30 degrees to permit viewing of the stimuli without mirrors. Participants were asked to maintain fixation on an LED placed above the grasparatus; therefore, the stimuli were presented in the lower visual field at ∼10–15 degrees of visual angle below the fixation LED. The right upper arm was supported with foam and gently restrained with Velcro straps to prevent movement of the shoulder and head while allowing free movement of the lower arm. All hardware (LEDs and a pneumatic solenoid) and the software (Superlab software) were triggered by a computer that received a signal from the MRI scanner at the start of each event of the trials.

Imaging parameters

This study was done at the Robarts Research Institute (London, Ontario, Canada) using a 4 T Siemens-Varian whole-body MRI system. For 16 participants, we used a single-channel head coil (for all runs). For two participants and only for the experimental and aIPS localizer runs (but not LOtv localizer runs), we used a custom-built four-channel “clam-shell” coil that enclosed the head while allowing full view of the workspace without occlusion (detailed in Cavina-Pratesi et al., 2017). The key results from the experimental and aIPS localizer runs were qualitatively similar regardless of whether the data from the two participants tested using the clamshell coil were excluded from the GLM.

fMRI volumes were collected using T2*-weighted segmented gradient-echo echoplanar imaging (19.2 cm field of view with 64 × 64 matrix size for an in-plane resolution of 3 mm; TE = 15 ms; FA = 45 degrees; TR = 1 s with two segments/plane for a volume acquisition time of 2 s). Each volume comprised 17 slices of 5 mm thickness. Slices were angled ∼30 degrees from axial to cover the occipital, parietal, posterior temporal, and posterior–superior frontal cortices. During every experimental session, a T1-weighted anatomic reference volume was acquired: 256 × 256 × 64 matrix size; 1 mm isotropic resolution, TI = 600 ms, TR = 11.5 ms; TE = 5.2 ms, FA = 11 degrees.
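The relation between the acquisition parameters and the derived quantities (in-plane resolution and volume acquisition time) is simple arithmetic, sketched below. The function names are hypothetical, and the slab thickness assumes contiguous slices with no gap, which is not stated in the text for the 4 T acquisition.

```python
def in_plane_resolution_mm(fov_mm, matrix_size):
    """Voxel edge length within the slice plane."""
    return fov_mm / matrix_size

def volume_acquisition_time_s(tr_s, n_segments):
    """Each segment (shot) takes one TR, so a volume takes TR x segments."""
    return tr_s * n_segments

resolution = in_plane_resolution_mm(192, 64)      # 19.2 cm field of view
volume_time = volume_acquisition_time_s(1.0, 2)   # two segments per plane
slab_mm = 17 * 5                                  # slices x thickness, assuming no gap
run_volumes = 7 * 60 / volume_time                # approximate volumes in a 7 min run

print(resolution, volume_time, slab_mm, run_volumes)
```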

Preprocessing

Data were analyzed using Brain Voyager QX software version 2.8.4 (Brain Innovation). Functional data were superimposed on anatomical brain images, aligned on the anterior commissure–posterior commissure plane, and transformed into Talairach space (Talairach and Tournoux, 1988). During preprocessing, functional and anatomical data were resampled to 3 and 1 mm isotropic resolution, respectively, in Talairach space.

Functional data were preprocessed with high-pass temporal filtering to remove frequencies <3 cycles/run and with spatial smoothing. The data from the experimental runs were smoothed with a full-width at half-maximum of 8 mm; data from the localizer runs were smoothed with a full-width at half-maximum of 4 mm to allow for accurate localization of the areas. We checked the activation maps of the localizer data without spatial smoothing and observed no difference in the centroid of the ROIs compared with data with spatial smoothing of 4 mm.

Motion or magnet artifacts were screened with cine-loop animation. Motion correction was applied using the software AFNI (Oakes et al., 2005) and each volume was aligned to the volume of the functional scan closest in time to the anatomical scan. Our threshold for discarding data from further analysis was 1 mm of abrupt (volume to volume) head motion. Based on this criterion, one run from one participant was excluded from further analysis.
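The exclusion criterion can be expressed as a check on volume-to-volume displacement. The sketch below is an assumption-laden illustration, not the screening procedure actually used: it treats "abrupt motion" as the Euclidean distance between the translation estimates of consecutive volumes, uses a strict "greater than 1 mm" rule, and operates on made-up translation values.

```python
import math

def abrupt_motion_mm(translations_mm):
    """Largest volume-to-volume displacement in a run.

    translations_mm: list of (x, y, z) translation estimates, one per volume.
    Assumption: displacement is the Euclidean distance between consecutive volumes.
    """
    steps = [math.dist(a, b) for a, b in zip(translations_mm, translations_mm[1:])]
    return max(steps, default=0.0)

def exclude_run(translations_mm, threshold_mm=1.0):
    """True if the run exceeds the abrupt-motion threshold."""
    return abrupt_motion_mm(translations_mm) > threshold_mm

# Hypothetical motion estimates for two runs.
steady_run = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.1, 0.0)]
jumpy_run = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.3, 0.2, 0.0)]

print(exclude_run(steady_run), exclude_run(jumpy_run))
```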

Slice scan time correction could not be applied because our data were collected in two shots of 1 s each (i.e., the TR was 1 s and half of k space was collected in each of two samples per volume), as was optimal for this high-field scanner. Slice scan time correction algorithms do not address multishot data and are not as necessary because the average time a slice was collected differs no more than 1 TR from any other slice (though some argue against applying slice scan time correction in general; Poldrack et al., 2011). In an earlier study using similar timing (Singhal et al., 2013), we verified that the hemodynamic response function (HRF)-convolved models provided a reasonable fit with the average time courses in many areas (averaged across conditions to avoid biasing models to favor any particular conditions).

Statistical analysis: main experiment

Data from the experimental runs were analyzed with a group random-effects GLM that included 20 predictors for each participant. For the execution phase, there were eight predictors, one for each of the eight conditions (4 tasks × 2 sensory modalities). Because go and stop trials were only distinguished at the end of the delay period, before that (in the exploration and delay phase), the data were grouped into only three task factors (grasp, reach, imagine grasping). Therefore, for the exploration and delay phases, there were six predictors apiece (3 tasks × 2 sensory modalities). Each predictor was derived from a rectangular-wave function (4 s or 2 volumes for the exploration phase, 18 s or 9 volumes for the delay phase and 2 s or 1 volume for the execution phase) convolved with a standard HRF (Brain Voyager QX's default double-gamma HRF). The GLM was performed on percentage-transformed β weights (β), so β values are scaled with respect to the mean signal level.
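To make the predictor construction concrete, the sketch below builds boxcar functions for the three phases of a single trial and convolves them with a two-gamma HRF sampled at the 2 s volume time. It is an illustration under stated assumptions: the HRF shape parameters (gamma shapes of 6 and 16, undershoot ratio of 6) are generic illustrative values and are not claimed to match Brain Voyager QX's defaults, the phase onsets follow the hypothetical 50 s trial layout with exploration starting at volume 3, and all function names are hypothetical.

```python
import math

VOLUME_S = 2.0  # volume acquisition time in seconds

def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def double_gamma_hrf(n_samples=16, dt=VOLUME_S, peak_shape=6.0,
                     undershoot_shape=16.0, ratio=6.0):
    """Two-gamma HRF sampled every dt seconds (illustrative parameters)."""
    return [gamma_pdf(i * dt, peak_shape)
            - gamma_pdf(i * dt, undershoot_shape) / ratio
            for i in range(n_samples)]

def boxcar(onset_vol, n_vols, run_vols):
    """Rectangular-wave function: 1 during the phase, 0 elsewhere."""
    return [1.0 if onset_vol <= v < onset_vol + n_vols else 0.0
            for v in range(run_vols)]

def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out

# One trial of 25 volumes; (onset volume, duration in volumes) per phase.
run_vols = 25
phases = {"exploration": (3, 2), "delay": (5, 9), "execution": (14, 1)}
hrf = double_gamma_hrf()
predictors = {name: convolve(boxcar(onset, n, run_vols), hrf)
              for name, (onset, n) in phases.items()}

# Predictor count: 8 execution + 6 exploration + 6 delay.
n_predictors = 4 * 2 + 2 * (3 * 2)
print(n_predictors)
```

Because the modeled response to a 4 s exploration phase includes a late negative lobe, it overlaps in time with the delay-phase predictor, which is the cross-contamination concern that arises when the undershoot is poorly fit by a standard HRF.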

We observed negative spikes in the time course due to the motion of the hand in the magnetic field (Barry et al., 2010), before the haptic exploration phase (at volume 1 or second 2 of the time course in Fig. 1C), as well as in grasp and reach movements (at volume 12 or second 24 of the time course in Fig. 1C). As before, these motion artifacts were modeled with “predictors of no interest” to account for artifact variance and were excluded from further analysis. An inspection of regional time courses in the experimental runs showed deactivation (relative to baseline) during the delay phase, particularly for haptic trials. This is likely due to a large poststimulus undershoot after relatively high activation during our 4 s exploration phase. Given that the HRF shows the most interindividual variability in the poststimulus undershoot phase (Handwerker et al., 2004), a standard HRF may not fit individuals' delay-period data well, which would lead to cross-contamination of the delay phase with the exploration and execution phases. Therefore, we decided not to explore the delay phase even though this paradigm enabled us to distinguish clearly the exploration and execution phases.

Independent localizers

LOtv localizer.

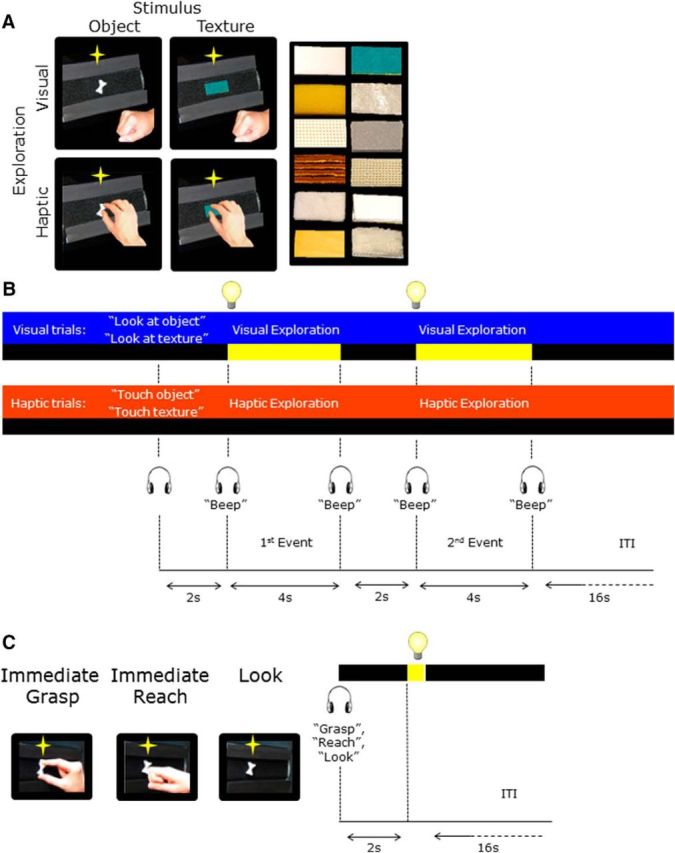

The LOtv localizer allowed us to identify the LOtv area. LOtv is involved in visual and tactile recognition of objects and is a subdivision of the larger lateral occipital complex. Amedi and colleagues (2001) showed that LOtv responded more strongly to objects than textures regardless of whether they were explored visually or haptically. Therefore the localizer consisted of a 2 × 2 factorial design with a combination of two tasks (look or touch) and two stimuli (object or texture), giving rise to four conditions: look at object, look at texture, touch object, and touch texture (Fig. 2A).

Figure 2.

Schematic representation of the tasks and timing of the LOtv and aIPS localizer runs. A, For the LOtv localizer runs, each trial type (visual and haptic) consisted of two sequential events during which participants explored either objects or textures that could be novel or repeated. The textures are shown on the right. B, At the beginning of each trial, an auditory cue instructed participants to either view or touch an object or a texture during the exploration phase that occurred 2 s later and was cued by a “beep” sound. In visual trials, the object was illuminated for 4 s, whereas in haptic trials, participants haptically explored the object in the dark until they heard another “beep” sound that cued them to return the right hand to the home position. After 2 s, the same or a different object was explored for 4 s with the sensory modality used in the first event of the trial. We used an ITI of 16 s. C, The aIPS localizer run consisted of three tasks: grasping and reaching actions that were performed immediately upon viewing the object and looking trials in which participants viewed the object without performing any action. Each trial started with an audio cue (“grasp,” “reach,” or “look”) instructing participants about the task to be performed upon the illumination of the object for 250 ms.

One trial consisted of two sequential stimulus presentations separated by 2 s. At the beginning of each trial, an auditory cue (either “look” or “touch”) instructed the participants on how to explore the stimulus. In visual trials, the object was illuminated for 4 s; in haptic trials, participants touched the stimulus in the dark for 4 s. The beginning and the end of the exploration phase were cued by “beep” sounds. Participants performed a one-back comparison to indicate whether the two stimuli in a trial were the same or different by pressing the left or right button of a response box with the left hand. The offset of the second event was followed by a 16 s interval to allow the hemodynamic response to return to baseline before the next trial began.

One important point to emphasize, which will become apparent in the Results section, is that haptic exploration was performed in the dark while participants maintained fixation on an LED above the stimuli. The LED was too dim to illuminate the stimuli or the hand.

Stimuli were presented using the grasparatus described above. Each run (∼4 min) consisted of two trials per condition for a total of eight trials per run. Each subject performed three LOtv localizer runs on average.

aIPS and PMd localizer.

Dorsal stream areas such as the aIPS and PMd are well known for their involvement in immediate and delayed actions toward visually explored objects (Culham et al., 2003; Singhal et al., 2013). To examine their role in action guidance toward haptically explored objects, we localized these areas with an independent set of localizer runs.

The aIPS localizer allowed us to identify the grasp-selective aIPS area (Culham et al., 2003). In each trial, participants performed one of the three possible tasks (immediate grasp, immediate reach, or look) toward 3D shapes presented using the grasparatus (Fig. 2C). The grasp and reach actions were similar to those in the experimental runs except that the actions were performed immediately rather than after a delay. A look (passive viewing) condition served as a control in which participants were required to view the object without performing any movement. We used a slow event-related design with one trial every 16 s to allow the hemodynamic response to return to baseline during the ITI before the next trial began. This localizer has been used reliably in several published studies to localize areas involved in grasping and reaching movements (Valyear et al., 2007; Singhal et al., 2013; Monaco et al., 2011, 2015). Each trial started with the auditory instruction of the task to be performed: “grasp,” “reach,” or “look.” Two seconds after the onset of the audio cue, the stimulus was illuminated for 250 ms, cuing the participant to initiate the task. Each localizer run consisted of 18 trials and each condition was repeated 6 times in a random order for a run time of ∼7 min. Each participant performed two localizer runs for a total of 12 trials per condition.

Session duration

A session for one participant typically included set-up time, six experimental runs, three localizer runs, and one anatomical scan and took ∼3 h to be completed.

Retinotopic mapping

Although we did not collect retinotopic mapping data from our participants (because we had not anticipated the interesting retinotopic differences observed), we were nevertheless able to compare our results to data from a different set of 11 participants who underwent standard retinotopic mapping procedures (Sereno et al., 1995; DeYoe et al., 1996; Engel et al., 1997) in our laboratory (Gallivan et al., unpublished data). Although there are considerable individual differences in retinotopic areal boundaries (V1/V2, V2/V3, etc., as defined by polar-angle map reversals), eccentricity maps show a similar pattern across participants, with the foveal representations at the occipital pole (OP) and peripheral representations more anteriorly. Therefore, between-participants comparisons enabled us to localize the foveal hotspot of multiple areas coarsely, specifically V1, V2, V3, hV4, LO-1, and LO-2, which are usually grouped together into a “foveal confluence” (Wandell et al., 2007; Schira et al., 2009).

The 11 participants who underwent retinotopic mapping fixated a point in the center of the screen while viewing “traveling-wave” stimuli consisting of expanding rings (Merabet et al., 2007; Arcaro et al., 2009, 2011; Gallivan et al., 2014). The expanding ring increased logarithmically as a function of time in both size and rate of expansion to match the estimated human cortical magnification function (for details, see Swisher et al., 2007). Ingoing and outgoing stimuli were presented in two separate scans of 6 min duration and were composed of 8 cycles each lasting 40 s. Participants were encouraged to maintain fixation by performing a detection task throughout, in which they pressed a button with their right hand whenever they detected a slight dimming of the fixation point (on average, every 4.5 s). Retinotopy stimuli were rear projected with an LCD projector (NEC LT265 DLPF projector; resolution, 1024 × 768, 60 Hz refresh rate) onto a screen mounted behind the participants. The participants viewed the images through a mirror mounted to the head coil directly above the eyes. The smallest and largest ring size corresponded, respectively, to 0° and 15° of diameter. We divided the 15° into 10 equal time bins of 4 s each. Any point on the screen was within the aperture for 12.5% of the stimulus period. We convolved a boxcar-shaped predictor for each bin with a standard HRF and performed contrasts using a separate subjects GLM.
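The timing of the eccentricity bins follows directly from the cycle structure. The sketch below computes the boxcar onsets for each bin across cycles; it assumes that stimulation starts at time zero with no initial baseline and that the "stimulus period" refers to one 40 s cycle, neither of which is stated explicitly. The names are hypothetical.

```python
CYCLE_S = 40      # duration of one expansion cycle
N_CYCLES = 8      # cycles per scan
N_BINS = 10       # time bins per cycle
BIN_S = CYCLE_S // N_BINS

def bin_onsets_s(bin_index, n_cycles=N_CYCLES, cycle_s=CYCLE_S, bin_s=BIN_S):
    """Onset times (s) of one eccentricity bin across all cycles.

    Assumption: the first cycle starts at t = 0 with no initial baseline.
    """
    return [cycle * cycle_s + bin_index * bin_s for cycle in range(n_cycles)]

stimulation_s = N_CYCLES * CYCLE_S   # total stimulation time per scan
aperture_s = 0.125 * CYCLE_S         # time a screen location spends in the aperture

print(BIN_S, stimulation_s, aperture_s)
print(bin_onsets_s(0))
print(bin_onsets_s(N_BINS - 1))
```

Under these assumptions, the eight cycles account for 320 s of each 6 min scan, and a given screen location falls within the ring aperture for 5 s per cycle.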

Scanning on the participants for retinotopic mapping was done on a 3 T Siemens Tim Trio MRI scanner at the University of Western Ontario with a 12-channel receive-only head coil. fMRI and anatomical MRI volumes were collected using a conventional setup with participants lying supine. Functional volumes were acquired using a T2*-weighted single-shot gradient-echo echoplanar imaging sequence (TR = 2 s, echo time = 30 ms, 3 mm isovoxel resolution). Each volume comprised 34 contiguous (no gap) oblique slices acquired at a ∼30° caudal tilt with respect to the anterior-to-posterior commissure line, providing near whole-brain coverage. The T1-weighted anatomical image was collected using an ADNI MPRAGE sequence (TR = 2300 ms, TE = 2.98 ms, field of view = 192 mm × 240 mm × 256 mm, matrix size = 192 × 240 × 256, flip angle = 9°, 1 mm isotropic voxels).

ROIs

A summary of the tasks and contrasts used to define our ROIs is provided in Table 1.

Table 1.

Summary of tasks and contrasts used to define the ROIs

| Independent localizer | Task | ROI | Contrast and conjunction analyses |
|---|---|---|---|
| LOtv localizer | Look at object; touch object in the dark; look at texture; touch texture in the dark | LOtv | [(Look at object > baseline) AND (touch object > baseline)] |
| | | Cuneus | Look at object > touch object |
| | | OP | Touch object > look at object |
| | | LG | Touch object > look at object |
| aIPS and PMd localizer | Immediate grasp; immediate reach; look at object | aIPS | Immediate grasp > immediate reach |
| | | PMd | [(Immediate grasp > baseline) AND (immediate reach > baseline)] |

LOtv.

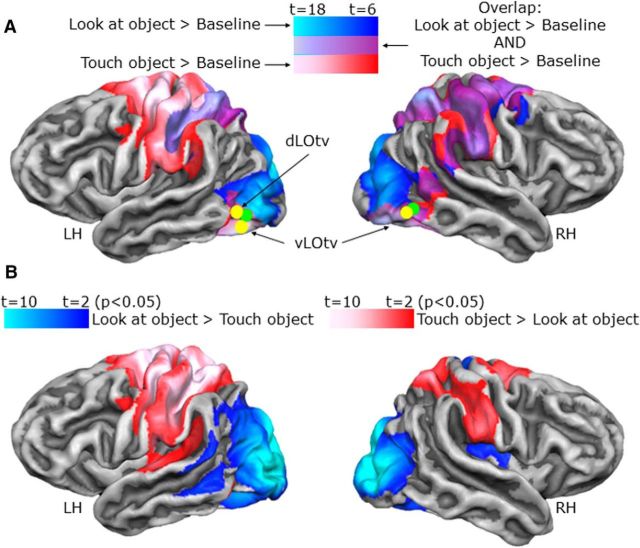

Area LOtv is involved in visual as well as haptic recognition of objects (Amedi et al., 2001). Therefore, we localized LOtv using the localizer runs with a conjunction of the following contrasts: [(look at object > baseline) AND (touch object > baseline)]. This analysis showed a region at the junction of the posterior end of the inferior temporal sulcus and the lateral occipital sulcus in both hemispheres (Fig. 3A).
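A conjunction of two contrasts can be illustrated with a minimum-statistic rule, in which a voxel is retained only if both contrasts exceed the threshold. This is a sketch of one common way to compute a conjunction and is an assumption made here for illustration; the text does not specify the exact procedure, and the t values and threshold below are made up.

```python
def conjunction(map_a, map_b, threshold):
    """Minimum-statistic conjunction of two statistical maps.

    A voxel passes only if the smaller of its two statistics exceeds the
    threshold, that is, only if both contrasts are individually significant.
    """
    return [min(a, b) > threshold for a, b in zip(map_a, map_b)]

# Hypothetical t values for five voxels.
look_vs_baseline = [5.1, 0.4, 3.9, 6.2, 1.0]
touch_vs_baseline = [4.8, 4.5, 0.2, 3.6, 0.9]

mask = conjunction(look_vs_baseline, touch_vs_baseline, threshold=3.0)
print(mask)
```

In this toy example, only voxels that respond to both looking at and touching objects survive, which is the logic behind defining LOtv as a bimodal region.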

Figure 3.

Activation maps in occipitotemporal and early visual cortices. Voxelwise statistical maps obtained with the separate subject GLM of the LOtv localizer runs. Although issues with anatomical homogeneity on the 4 T scanner made cortex-based alignment too painstaking to conduct, the volumetric activation maps for group ROI results are overlaid on the average cortical surface derived from the cortex-based alignment performed on 12 participants from a different experiment on a Siemens 3 T TIM Trio scanner. The surface from the average of 12 subjects has the advantage of showing less bias in the anatomy of the major sulci compared with the surface of one participant only. We verified that activation from volumetric maps appeared on the corresponding part of the cortical surface. A, The maps show brain areas that respond to visual (blue), haptic (red), and the conjunction of visual and haptic (violet) exploration of objects. The yellow dots indicate the Talairach coordinates of LOtv found in the present study. The green dots indicate the averaged Talairach coordinates of LOtv from previous studies on this area (Amedi et al., 2001, 2002, 2007, 2010; Tal and Amedi, 2009). B, Maps showing brain areas with higher activation for visual than haptic exploration of objects (blue) and areas with higher activation for haptic than visual exploration of objects (red).

We included the exploration of textures in our LOtv localizer based on previous findings by Amedi and colleagues (2001) showing higher activation during visual and tactile exploration of objects versus textures in LOtv. However, our contrasts of [(look at object > look at texture) AND (touch object > touch texture)] did not lead to any significant voxels in the vicinity of LOtv. In retrospect, because our texture stimuli consisted of rectangular samples of textures, participants may have processed shape in both the object and texture exploration conditions, leading to comparable LOtv activation. Therefore, we used the less restrictive contrast of visual AND haptic exploration of objects versus baseline (rather than the respective texture exploration conditions). We also performed an adaptation analysis by contrasting novel versus repeated objects and textures. These analyses also did not reveal any adaptation effect in our ROI.

A separate subjects GLM was performed for the localizer runs because it included only 10 participants. Each subject's GLM included four separate predictors: “look at object,” “look at texture,” “touch object,” and “touch texture.” Each predictor was derived from a rectangular wave function (10 s or 5 volumes including sequential exploration periods) convolved with a standard HRF. We observed negative spikes due to the motion of the hand in the magnetic field (Barry et al., 2010) before the haptic exploration phase. These motion artifacts were modeled with “predictors of no interest” to account for artifact variance and were excluded from further analysis.
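The predictor construction described above (a rectangular wave convolved with a standard HRF) can be sketched as follows. This is a minimal illustration, not the analysis software used in the study: the two-gamma HRF shape parameters, the kernel length, and the block onset are assumptions chosen for the example, and `hrf` and `make_predictor` are hypothetical helper names. The 2 s volume duration follows from "10 s or 5 volumes" in the text.

```python
from math import exp, gamma
from typing import List, Sequence

TR_S = 2.0  # seconds per volume, implied by "10 s or 5 volumes" in the text


def hrf(t: float, peak_shape: float = 6.0, undershoot_shape: float = 16.0,
        undershoot_ratio: float = 1.0 / 6.0) -> float:
    """Two-gamma response at time t (s); parameter values are assumed defaults."""
    if t <= 0.0:
        return 0.0
    peak = t ** (peak_shape - 1.0) * exp(-t) / gamma(peak_shape)
    undershoot = t ** (undershoot_shape - 1.0) * exp(-t) / gamma(undershoot_shape)
    return peak - undershoot_ratio * undershoot


def make_predictor(onsets: Sequence[int], duration: int, n_volumes: int,
                   kernel_volumes: int = 16) -> List[float]:
    """Boxcar (onsets and duration in volumes) convolved with the HRF."""
    boxcar = [0.0] * n_volumes
    for onset in onsets:
        for v in range(onset, min(onset + duration, n_volumes)):
            boxcar[v] = 1.0
    kernel = [hrf(k * TR_S) for k in range(kernel_volumes)]
    # Discrete convolution, truncated at the start of the run.
    return [
        sum(boxcar[v - k] * kernel[k] for k in range(min(v + 1, kernel_volumes)))
        for v in range(n_volumes)
    ]


# Hypothetical run: one 5-volume (10 s) exploration block starting at volume 2.
touch_object = make_predictor(onsets=[2], duration=5, n_volumes=30)
```

The resulting predictor rises a few volumes after block onset and returns toward zero after the block ends, which is the delayed, smoothed shape that the GLM fits to each voxel's time course.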

EVC.

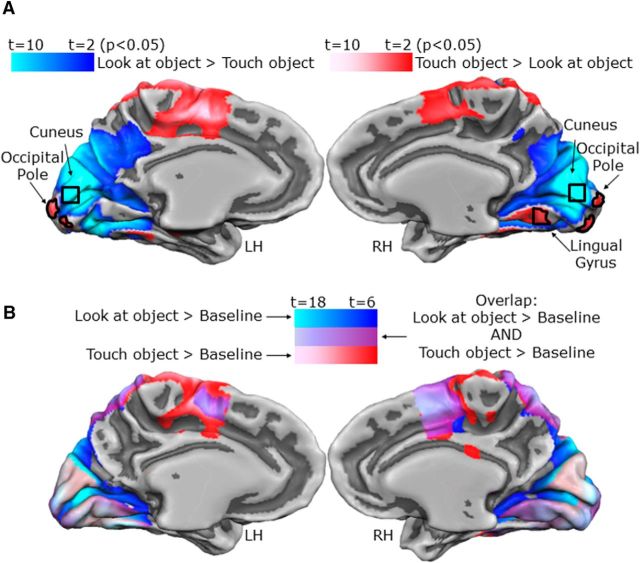

To localize the early visual areas involved in visual recognition of shapes, we used the contrast (look at object > touch object and the converse) from the LOtv localizer. This contrast revealed a region in the cuneus with higher activation for looking versus touching objects. This focus was in the anterior dorsal occipital cortex above the calcarine sulcus. Because the objects were presented in the lower visual field (below the fixation point), this focus of activation was consistent with the expected retinotopic location. Therefore, this region in the visual cortex corresponded approximately to the retinotopic location of the object (see Fig. 5A). This contrast also revealed a region in the OP with higher activation for haptic than visual exploration of objects. This OP focus corresponds to the foveal confluence of numerous retinotopic visual areas (Wandell et al., 2007; Schira et al., 2009; specifically V1, V2, V3, hV4, LO-1 and LO-2). The contrast of haptic > visual exploration also revealed a focus in the right lingual gyrus (LG), but not the left, which we also treated as an ROI at the request of a reviewer, although we had not expected activation there.

Figure 5.

Activation maps in the EVC. A, Maps showing brain areas with higher activation for visual than haptic exploration of objects (blue) and areas with higher activation for haptic than visual exploration of objects (red). B, Maps showing brain areas that respond to visual (blue), haptic (red), and conjunction of visual and haptic (violet) exploration of objects.

aIPS and PMd.

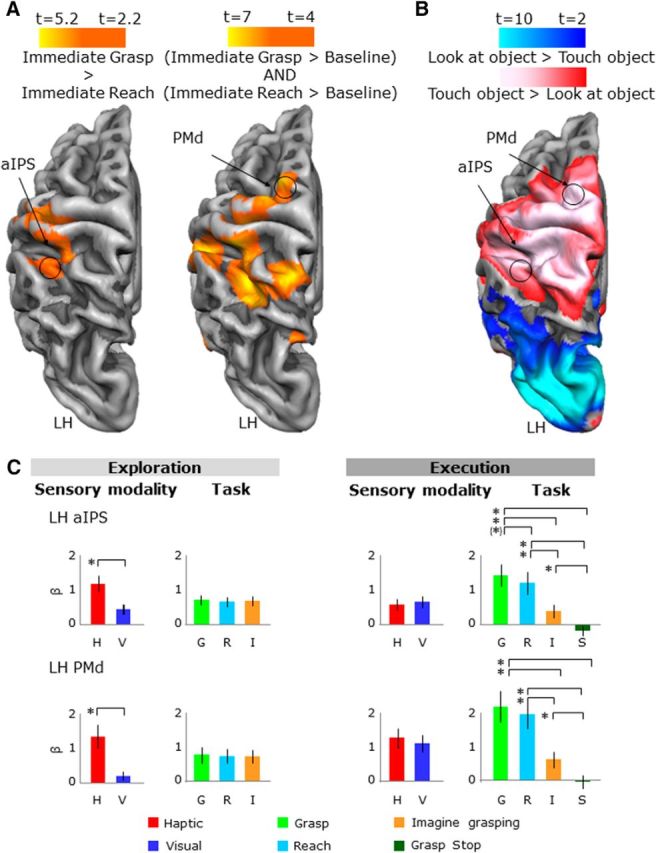

Area aIPS was localized with the contrast of (immediate grasp > immediate reach) performed with the aIPS localizer runs. This contrast has been typical in past studies of the region (Binkofski et al., 1998; Culham et al., 2003; Tunik et al., 2005; Begliomini et al., 2007). Consistent with earlier work, we defined aIPS as the grasp-selective focus nearest the junction of the intraparietal sulcus and the inferior segment of the postcentral sulcus (see Fig. 9A, left).

Figure 9.

Activation maps and activation levels in the frontoparietal cortex. Voxelwise statistical maps were obtained with the random-effects GLM of the aIPS functional localizer runs. The activation maps for group ROI results are overlaid on the average cortical surface. A, Left, aIPS localized with a contrast of immediate grasp > immediate reach. Right, PMd localized with the conjunction of (immediate grasp > baseline) AND (immediate reach > baseline). B, aIPS and PMd shown on the activation maps obtained with the LOtv functional localizer runs. The voxelwise statistical maps are the same as in Figure 3. C, β weights plotted the same as in Figure 4. Legend is the same as in Figure 4.

Area PMd was identified with a conjunction of contrasts: [(immediate grasp > baseline) AND (immediate reach > baseline)]. This analysis is based on the functional properties of the area PMd described in previous studies showing the strong involvement of PMd in grasping and reaching actions (Cavina-Pratesi et al., 2010; Gallivan et al., 2011; Monaco et al., 2014). Consistent with earlier work, we defined PMd as the action-selective focus near the junction of the superior frontal sulcus and the precentral sulcus (Fig. 9A, right).

We performed a random-effects GLM for these localizer runs. The GLM included three predictors: “immediate grasp,” “immediate reach,” and “look.” Each predictor was derived from a rectangular-wave function (2 s or 1 volume aligned with the brief illumination of the object) convolved with a standard HRF. We observed negative spikes due to the motion of the hand in the magnetic field (Barry et al., 2010) at the onset of the grasp and reach tasks. These motion artifacts were modeled with “predictors of no interest” to account for artifact variance and were thus excluded from further analysis.

Application of ROI analysis

Because we had a priori hypotheses about specific areas, we used our localizer runs to identify ROIs (LOtv, EVC, aIPS, and PMd) at the group level and then test specific contrasts in experimental runs. Although we also analyzed the data using ROIs in individual participants and voxelwise group analyses, these results were in agreement; therefore, for conciseness, we present only the group ROI analyses and results. The selection criteria based on the localizer runs were independent from the key experimental contrasts and prevented any bias toward our predictions (Kriegeskorte et al., 2010).

After defining our four ROIs based on the localizers (as described above), we extracted estimates of and performed statistical contrasts upon (univariate) activation levels for each of our conditions and phases in the experimental runs. Therefore, for each ROI, we applied a Bonferroni correction for the number of contrasts performed.

To select ROIs, we used voxelwise contrasts. Therefore, we corrected for the large number of multiple comparisons by performing a cluster threshold correction for each activation map resulting from the contrasts done with the functional localizer runs (Forman et al., 1995) using BrainVoyager's cluster-level statistical threshold estimator plug-in (Goebel et al., 2006). This algorithm applies Monte Carlo simulations (1000 iterations) to estimate the probability of a number of contiguous voxels being active purely due to chance while taking into consideration the average smoothness of the statistical maps. Although cluster correction approaches have been challenged recently (Eklund et al., 2016), this was not problematic here because the core contrasts are based on independent ROI analyses and, as the results will show, even in the statistically weakest ROI, the OP, the experimental contrasts replicate independently the differences used to identify the region in the localizer run.

For the LOtv functional localizer runs, the contrasts of (look at object > baseline) and (touch object > baseline) had a minimum cluster size of 7 and 5 functional (3 × 3 × 3 mm3) voxels (F = 4; 189 and 135 mm3). The conjunction analysis of [(look at object > baseline) AND (touch object > baseline)] had a minimum cluster size of 5 functional voxels (F = 4; 135 mm3), whereas the contrast of (look at object > touch object) had a minimum cluster size of 65 functional voxels (F = 2.2; 1755 mm3). For the aIPS localizer runs, the contrast of (grasp > reach) had a minimum cluster size of 60 functional voxels (F = 2.2; 1620 mm3), whereas the conjunction analysis of [(grasp > baseline) AND (reach > baseline)] had a minimum cluster size of 71 functional voxels (F = 2.2; 1917 mm3).
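The cluster volumes quoted above follow directly from the voxel counts and the 3 × 3 × 3 mm functional voxel size. As a quick arithmetic check (the function name is hypothetical and introduced only for illustration):

```python
VOXEL_EDGE_MM = 3.0  # isotropic functional voxel edge, as stated in the text


def cluster_volume_mm3(n_voxels: int, edge_mm: float = VOXEL_EDGE_MM) -> float:
    """Volume in mm3 of a cluster made of n_voxels isotropic voxels."""
    return n_voxels * edge_mm ** 3


# Minimum cluster sizes (voxels) and volumes (mm3) reported for the localizer contrasts.
reported = {7: 189, 5: 135, 65: 1755, 60: 1620, 71: 1917}
volumes_match = all(cluster_volume_mm3(n) == v for n, v in reported.items())
```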

To select the ROIs, a cube of up to 10 mm3 was centered at the voxel of peak activation and included all voxels within the cube with activation above the statistical threshold. Therefore, in areas such as the cuneus, where activation was extensive, the full cube was selected, whereas with more spatially limited activation such as the OP, the region was smaller and noncubic. This method has the advantage of being replicable, including more than the peak voxel, excluding voxels that may not be involved, and limiting the size of the ROIs to a maximum such that ROIs do not differ greatly between participants and areas. For each area, we then extracted the β weights for each participant in each experimental condition for further analyses.
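The selection rule described above (a bounded cube around the peak voxel, keeping only suprathreshold voxels) can be sketched as below. This is an illustrative reconstruction under assumptions, not the procedure as implemented in the analysis software: the statistical map is represented as a plain dictionary, the cube half-width is given in voxels, and all values in the toy map are hypothetical.

```python
from typing import Dict, List, Tuple

Voxel = Tuple[int, int, int]


def select_roi(stat_map: Dict[Voxel, float], threshold: float,
               half_width: int) -> List[Voxel]:
    """Suprathreshold voxels inside a cube centered on the peak voxel."""
    peak = max(stat_map, key=stat_map.get)
    roi = []
    for voxel, value in stat_map.items():
        inside_cube = all(abs(c - p) <= half_width for c, p in zip(voxel, peak))
        if inside_cube and value > threshold:
            roi.append(voxel)
    return sorted(roi)


# Toy map with hypothetical statistics.
toy_map = {
    (5, 5, 5): 6.0,  # peak voxel
    (5, 5, 6): 3.0,  # inside the cube, above threshold: kept
    (5, 4, 5): 1.0,  # inside the cube, below threshold: dropped
    (9, 9, 9): 4.0,  # above threshold but outside the cube: dropped
}
roi_voxels = select_roi(toy_map, threshold=2.2, half_width=1)
```

With extensive activation every voxel in the cube survives, giving a full cube; with spatially limited activation the ROI is smaller and noncubic, as noted for the OP.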

We explored the pattern of activity during both the exploration and execution phase. Therefore, for each area, we performed one ANOVA for each of these two phases (exploration and execution) using SPSS. The ANOVA for the exploration phase had two levels for the sensory modality (visual and haptic) and three levels for the task (grasp, reach, and imagine grasping). The ANOVA for the execution phase had two levels for the sensory modality (visual and haptic) and four levels for the task (grasp, reach, imagine grasping, and stop).

After the ANOVAs, we performed two sets of post hoc t tests to tease apart activation differences during the exploration and the execution phase. During both the exploration and execution phases, most regions did not show any interactions between task and sensory modality; therefore, we performed post hoc t tests only to determine the main effects. To interpret main effects of task during the exploration phase, we contrasted each pair of tasks (grasp vs reach, reach vs imagine grasping, and grasp vs imagine grasping) collapsed across visual and haptic trials. Therefore, for this group of comparisons, we used a Bonferroni correction for three comparisons (p < 0.016). Because the cuneus in the right hemisphere (RH) showed a significant interaction, we performed the comparisons on visual and haptic conditions separately for a total of six comparisons (visual grasp vs visual reach, visual reach vs visual imagine grasping, visual grasp vs visual imagine grasping, haptic grasp vs haptic reach, haptic reach vs haptic imagine grasping, and haptic grasp vs haptic imagine grasping) and corrected the p-value to p < 0.0083. To interpret main effects of task during the execution phase, we contrasted each pair of tasks (grasp vs reach, grasp vs imagine grasping, grasp vs stop, reach vs imagine grasping, reach vs stop, and imagine grasping vs stop) collapsed across visual and haptic trials. For this group of comparisons, we used a Bonferroni correction for six comparisons (p < 0.0083). Recall that, because the cue to abort the trial (stop condition) was indicated at the end of a grasp trial, the stop condition is present only in the execution phase.
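The corrected thresholds above can be reproduced by enumerating the pairwise task comparisons and dividing a familywise α by their number. The sketch assumes a familywise α of 0.05, which is not stated explicitly but is consistent with the reported thresholds; the function name is hypothetical.

```python
from itertools import combinations


def bonferroni_alpha(n_comparisons: int, family_alpha: float = 0.05) -> float:
    """Per-comparison threshold under a Bonferroni correction (alpha assumed 0.05)."""
    return family_alpha / n_comparisons


exploration_tasks = ["grasp", "reach", "imagine grasping"]
execution_tasks = exploration_tasks + ["stop"]

# Every unordered pair of tasks is one post hoc comparison.
exploration_pairs = list(combinations(exploration_tasks, 2))  # three pairs
execution_pairs = list(combinations(execution_tasks, 2))      # six pairs

alpha_exploration = bonferroni_alpha(len(exploration_pairs))  # 0.05 / 3
alpha_execution = bonferroni_alpha(len(execution_pairs))      # 0.05 / 6
```

Three comparisons give 0.05/3 ≈ 0.0167 (reported as p < 0.016) and six comparisons give 0.05/6 ≈ 0.0083, which also covers the six modality-specific comparisons run for the RH cuneus.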

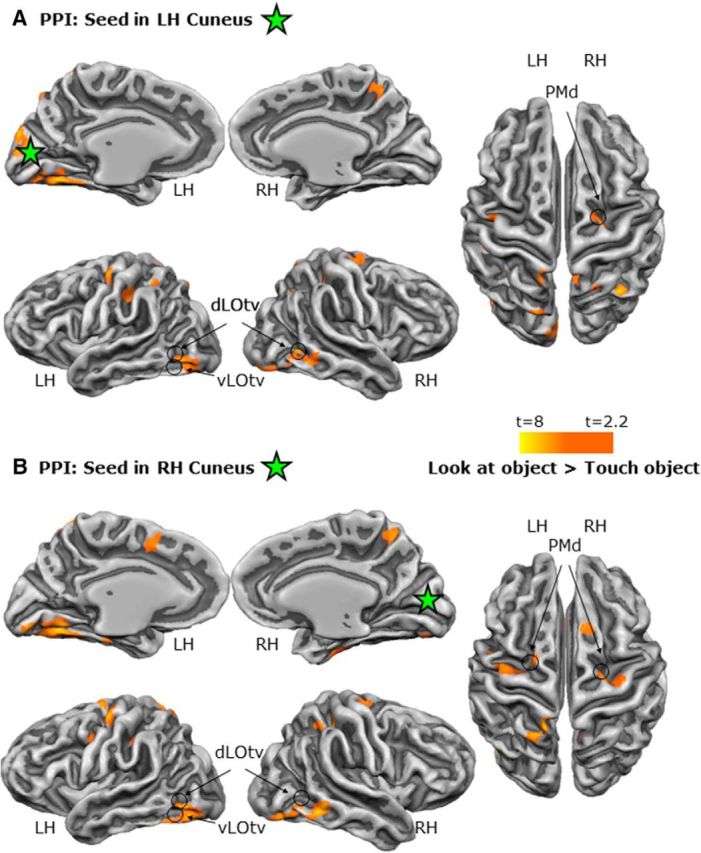

Psychophysiological interaction (PPI) analysis

We used the PPI method (Friston et al., 1997; McLaren et al., 2012; O'Reilly et al., 2012) to estimate the task-specific changes in functional connectivity between our seed regions (OP and cuneus) and the rest of the brain during the haptic versus visual exploration of objects (and vice versa) in the LOtv localizer runs (see Fig. 5A). PPI identifies brain regions in which functional connectivity is modified by the task above and beyond simple interregional correlations (“physiological component”) and task-modulated activity (“psychological component”).

For each participant, we created a PPI model for each run that included the following: (1) the physiological component, corresponding to the z-normalized time course extracted from the seed region; (2) the psychological component, corresponding to the task model (boxcar predictors convolved with a standard hemodynamic response function); and (3) the PPI component, corresponding to the z-normalized time course multiplied, volume by volume, with the task model. The boxcar predictors of the psychological component were set to +1 for the haptic (or visual) exploration task, to −1 for the visual (or haptic) exploration task, and to 0 for baseline and all other conditions. The individual GLM design matrix files were used for a random-effects model analysis (Friston et al., 1999). The functional connectivity map was corrected for multiple comparisons using the Monte Carlo simulation approach implemented in BrainVoyager's cluster threshold plug-in (Forman et al., 1995). The minimum cluster sizes were 54 and 53 functional voxels for maps generated with left hemisphere (LH) and RH OP as seed regions, respectively (F = 2.2; 1458 and 1431 mm3) and 84 and 16 functional voxels for maps generated with LH and RH cuneus as seed regions, respectively (F = 2.2; 2268 and 432 mm3).
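The three PPI components listed above can be sketched as follows. This is a simplified illustration under assumptions: the task model here uses raw +1/−1/0 codes per volume, whereas the actual psychological component was convolved with an HRF; z-normalization uses the population standard deviation; and the seed time course is made-up toy data. The function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def z_normalize(time_course: Sequence[float]) -> List[float]:
    """Center to zero mean and scale to unit (population) standard deviation."""
    mu = mean(time_course)
    sd = pstdev(time_course)
    return [(value - mu) / sd for value in time_course]


def ppi_regressors(seed: Sequence[float], task_model: Sequence[float]
                   ) -> Tuple[List[float], List[float], List[float]]:
    """Return the physiological, psychological, and PPI components."""
    if len(seed) != len(task_model):
        raise ValueError("seed and task model must cover the same volumes")
    physiological = z_normalize(seed)
    psychological = list(task_model)
    # Volume-by-volume product of seed activity and task code.
    interaction = [p * t for p, t in zip(physiological, psychological)]
    return physiological, psychological, interaction


# Toy data: +1 = haptic exploration, -1 = visual exploration, 0 = baseline.
seed_ts = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
task = [0.0, 1.0, 1.0, -1.0, -1.0, 0.0]
physio, psych, ppi = ppi_regressors(seed_ts, task)
```

Because the interaction term is zero at baseline and flips sign between modalities, a positive weight on it indicates coupling with the seed that is stronger during the +1 task than during the −1 task, over and above the seed and task regressors themselves.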

Results

ROI analyses

We report the results of the ROI analyses for LOtv, cuneus, OP, aIPS, and PMd localized through targeted contrasts with the independent localizers. The Talairach coordinates and numbers of voxels of each ROI are specified in Table 2. Statistical values of the main effects and post hoc t tests for each area are reported in Tables 3, 4, and 5.

Table 2.

Talairach coordinates and size of ROIs

| ROI | Talairach x | Talairach y | Talairach z | ROI size (mm3) |
|---|---|---|---|---|
| LH dLOtv | −47 | −60 | −3 | 752 |
| LH vLOtv | −46 | −60 | −13 | 896 |
| RH LOtv | 48 | −60 | −12 | 832 |
| LH cuneus | −3 | −84 | 1 | 1000 |
| RH cuneus | 7 | −85 | 1 | 988 |
| LH OP | −4 | −96 | −5 | 173 |
| RH OP | −6 | −96 | −4 | 339 |
| RH LG | 12 | −59 | −8 | 651 |
| LH aIPS | −38 | −37 | 44 | 1160 |
| LH PMd | −20 | −18 | 62 | 639 |

Talairach coordinates refer to the peak activation of the functional cluster.

Table 3.

Statistical values for main effects in experimental runs

| Brain area | Exploration: sensory modality, p | Exploration: sensory modality, F(1,17) | Exploration: task, p | Exploration: task, F(2,34) | Exploration: interaction, p | Exploration: interaction, F(2,34) | Execution: sensory modality, p | Execution: sensory modality, F(1,17) | Execution: task, p | Execution: task, F(3,51) |
|---|---|---|---|---|---|---|---|---|---|---|
| LH LOtv dorsal | 0.597 | 0.3 | 0.839 | 0.2 | — | — | 0.164 | 2.1 | 0.001 | 21 |
| LH LOtv ventral | 0.833 | 0.1 | 0.616 | 0.5 | — | — | 0.408 | 0.7 | 0.001 | 15.5 |
| RH LOtv ventral | 0.267 | 1.3 | 0.208 | 1.6 | — | — | 0.157 | 2.2 | 0.004 | 4.9 |
| LH cuneus | 0.001 | 61.7 | 0.001 | 8 | — | — | 0.002 | 12.7 | 0.001 | 15.5 |
| RH cuneus | 0.001 | 28.3 | 0.001 | 14.6 | 0.003 | 6.7 | 0.001 | 17.9 | 0.001 | 16.4 |
| LH OP | 0.001 | 15.5 | 0.778 | 0.2 | — | — | 0.143 | 2.4 | 0.001 | 10.7 |
| RH OP | 0.002 | 13.5 | 0.280 | 1.3 | — | — | 0.751 | 0.1 | 0.001 | 11.5 |
| RH LG | 0.001 | 27.5 | 0.040 | 3.5 | — | — | 0.932 | 0.1 | 0.001 | 40.6 |
| LH aIPS | 0.001 | 47.1 | 0.498 | 0.7 | — | — | 0.249 | 1.4 | 0.001 | 52.9 |
| LH PMd | 0.001 | 73.4 | 0.665 | 0.4 | — | — | 0.292 | 1.2 | 0.001 | 55.5 |

Significant values are indicated in bold font.

Table 4.

Statistical values for paired-sample t test in the exploration phase of experimental runs

Exploration (t(17), p < 0.016)

| Brain area | Grasp > reach, p | Grasp > reach, t | Grasp > imagine, p | Grasp > imagine, t | Reach > imagine, p | Reach > imagine, t |
|---|---|---|---|---|---|---|
| LH LOtv dorsal | — | — | — | — | — | — |
| LH LOtv ventral | — | — | — | — | — | — |
| RH LOtv ventral | — | — | — | — | — | — |
| LH cuneus | 0.006 | 3.4 | 0.500 | 0.5 | 0.007 | −3.3 |
| RH cuneus | | | | | | |
| Haptic (p < 0.0083) | 0.001 | 5.3 | 0.500 | −0.8 | 0.001 | −4.9 |
| Visual (p < 0.0083) | 0.018 | 2.8 | 0.011 | 3.1 | 0.500 | −0.7 |
| RH LG | 0.046 | 2.3 | 0.500 | 0.1 | 0.783 | −2.1 |
| LH OP | — | — | — | — | — | — |
| RH OP | — | — | — | — | — | — |
| LH aIPS | — | — | — | — | — | — |
| LH PMd | — | — | — | — | — | — |

Significant values are indicated in bold font for corrected p-values and are underlined for uncorrected p-values.

Table 5.

Statistical values for paired-sample t test in the execution phase of experimental runs

Execution (t(17), p < 0.0083)

| Brain area | Grasp > reach, p | Grasp > reach, t | Grasp > imagine, p | Grasp > imagine, t | Grasp > stop, p | Grasp > stop, t | Reach > imagine, p | Reach > imagine, t | Reach > stop, p | Reach > stop, t | Imagine > stop, p | Imagine > stop, t |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LH LOtv dorsal | 0.010 | 3.2 | 0.001 | 4.6 | 0.001 | 5 | 0.003 | 3.8 | 0.001 | 4.8 | 0.003 | 3.8 |
| LH LOtv ventral | 0.018 | 3 | 0.001 | 4.7 | 0.001 | 4.8 | 0.049 | 2.4 | 0.006 | 3.5 | 0.027 | 2.8 |
| RH LOtv ventral | 0.047 | 2.5 | 0.003 | 3.8 | 0.034 | 2.6 | 0.102 | 2.1 | 0.333 | 1.3 | 0.333 | −0.1 |
| LH cuneus | 0.001 | 6.2 | 0.001 | 4.9 | 0.001 | 5.5 | 0.333 | 0.5 | 0.161 | 1.8 | 0.043 | 2.5 |
| RH cuneus | 0.001 | 6.1 | 0.001 | 6.1 | 0.001 | 6 | 0.333 | 0.2 | 0.213 | 1.7 | 0.059 | 2.4 |
| Haptic (p < 0.0083) | — | — | — | — | — | — | — | — | — | — | — | — |
| Visual (p < 0.0083) | — | — | — | — | — | — | — | — | — | — | — | — |
| RH LG | 0.001 | 3.5 | 0.001 | 7.1 | 0.001 | 8.6 | 0.001 | 5.1 | 0.001 | 6 | 0.269 | −1.6 |
| LH OP | 0.022 | 2.8 | 0.006 | 3.5 | 0.004 | 3.6 | 0.009 | 3.3 | 0.007 | 3.4 | 0.333 | 0.1 |
| RH OP | 0.063 | 2.3 | 0.001 | 5 | 0.001 | 4.2 | 0.023 | 2.8 | 0.029 | 2.7 | 0.333 | 1.3 |
| LH aIPS | 0.022 | 2.8 | 0.001 | 6.3 | 0.001 | 9.5 | 0.001 | 5.3 | 0.001 | 7.7 | 0.001 | 7 |
| LH PMd | 0.122 | 2 | 0.001 | 6.6 | 0.001 | 9.1 | 0.001 | 6.6 | 0.001 | 9.1 | 0.002 | 3.9 |

Significant values are indicated in bold font for corrected p-values and are underlined for uncorrected p-values.

Activation maps and activation levels for each ROI are shown in Figures 3, 4, 5, 6, 7, 8, and 9. Activation levels for each factor are plotted separately for areas where no significant sensory modality × task interactions were observed.

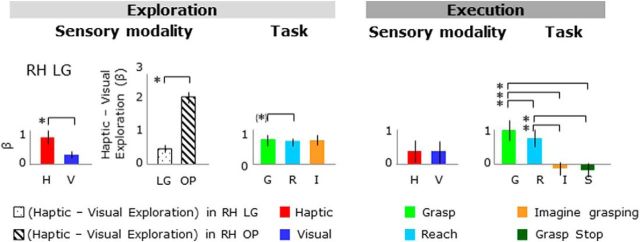

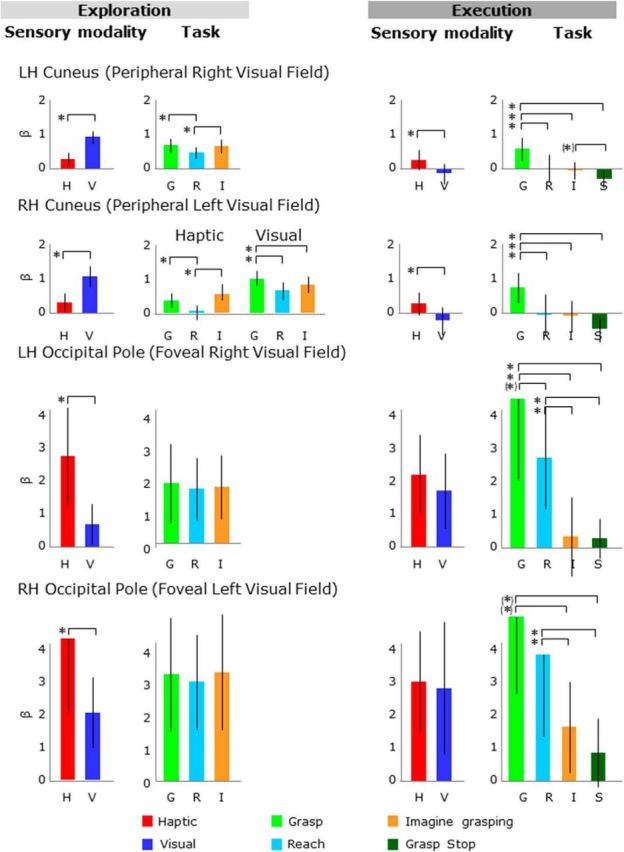

Figure 4.

Activation levels in the occipitotemporal cortex. For each area, bar graphs indicate the β weights for the sensory modality (visual and haptic) and the task (grasp, reach, and imagine grasping) in the exploration phase (left) and execution (right) phase. Note that because stop trials were revealed only at the end of the trial, the stop condition is present only in the execution phase. *Statistical differences among conditions for corrected p-value. “(*),” Statistical differences among conditions for uncorrected p-value (p < 0.05). Error bars indicate 95% confidence intervals.

Figure 6.

β weights plotted as in Figure 4. Legend is the same as in Figure 4.

Figure 7.

β weights plotted for the LG. The differences in β weights for haptic versus visual exploration tasks are also plotted. Legend is the same as in Figure 4.

Figure 8.

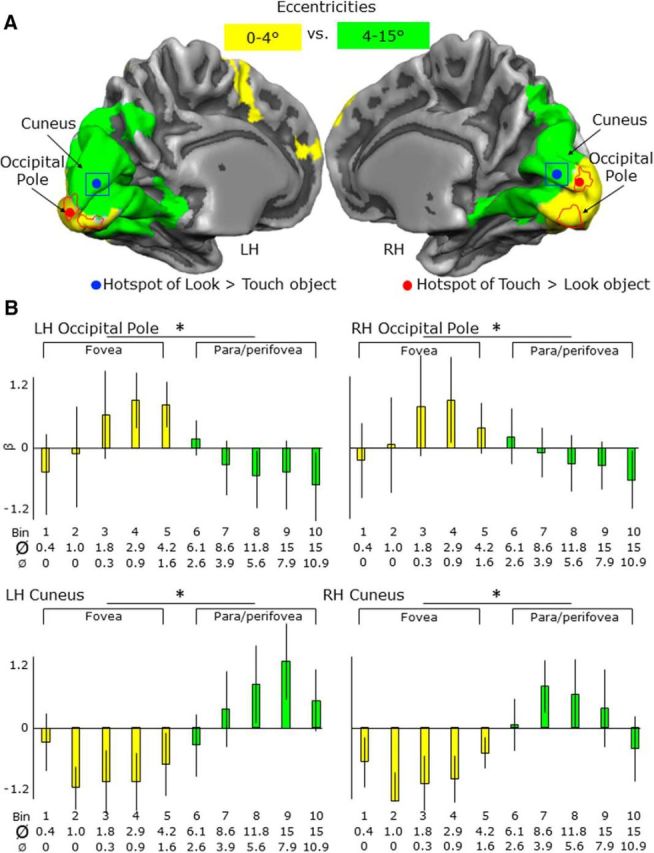

Activation levels and maps for eccentricities from 0–15° of visual angle in the OP and cuneus. A, Overlap between two activation maps showing areas with higher activation for eccentricities 0–4° than 4–15° (yellow) and areas with higher activation for 4–15° than 0–4° (green). B, For each area, bar graphs indicate the β weights for the eccentricities corresponding to the fovea (0–4°) and para/perifovea (4–15°). Legend is the same as in Figure 4. Ø, Maximum diameter of the ring; Ø, minimum diameter of the ring; bin, time bins used for the eccentricity mapping.

For the ROIs, the multiple correlation coefficient R, which provides an estimate of the proportion of the variance in the data that can be explained by the model of our main experiment, was, on average, 0.46.

LOtv

Localization

For the independent LOtv functional localizer runs, the conjunction of contrasts comparing each of the look and touch object conditions versus baseline [i.e., (look at object > baseline) AND (touch object > baseline)] revealed an overlapping region at the junction of the posterior end of the inferior temporal sulcus and the lateral occipital sulcus in both hemispheres. In particular, we found two noncontiguous foci of activation in the LH, namely ventral LOtv (vLOtv) and dorsal LOtv (dLOtv), and one focus in the RH (vLOtv) (Fig. 3A). For clarity, the contrasts comparing look versus touch object condition (and vice versa) are shown on the lateral surface of each hemisphere in Figure 3B.

Experimental runs

As shown in Figure 4, during the exploration phase, activation levels were comparable across conditions in dLOtv and vLOtv in the LH and in vLOtv in the RH (Fig. 4, left); however, during task execution after the delay, activation was stronger for the grasp condition than the reach, imagine grasping, and stop conditions, as well as the baseline activation in the three areas (Fig. 4, right). The imagine grasping condition evoked significantly higher activation than the baseline only in dLOtv in the LH. Specifically, the ANOVA on activation levels during the execution phase showed a main effect of task in dLOtv and vLOtv in the LH as well as in vLOtv in the RH. In particular, the three areas showed higher activation for grasp versus reach, grasp versus imagine grasping and grasp versus stop. In addition, vLOtv and dLOtv in the LH also showed higher activation for reach versus imagine grasping, reach versus stop and imagine grasping versus stop.

Cuneus

Localization

For the LOtv functional localizer runs, the contrast comparing look versus touch object condition showed higher activation for visual than haptic exploration of objects in a region in the anterior part above the calcarine sulcus (cuneus) in both hemispheres (Fig. 5A). Because the objects were in the lower visual field, this activation was consistent with the retinotopic location that we expected to be activated. For clarity, the conjunction of contrasts comparing each of the look and touch object conditions versus baseline [i.e., (look at object > baseline) AND (touch object > baseline)] is shown on the medial surface of each hemisphere in Figure 5B.

Experimental runs

As shown in Figure 6, during the exploration phase, cuneus activation levels were higher for visual than haptic conditions (Fig. 6, left). In addition, during the execution phase, activation was stronger for the grasp condition than the reach, imagine grasping, and stop conditions, as well as the baseline activation (Fig. 6, right). Specifically, in the cuneus of the LH, the ANOVA on activation levels during the exploration phase showed a main effect of sensory modality and a main effect of task. In particular, there was higher activation for visual than haptic conditions as well as higher activation for grasp versus reach and imagine grasping versus reach. The ANOVA on activation levels during the execution phase also showed a main effect of sensory modality and a main effect of task. Specifically, there was higher activation for haptic than visual exploration, as well as higher activation for grasp versus reach, grasp versus imagine grasping, grasp versus stop, and imagine grasping versus stop; however, the activation for the imagine grasping condition was not significantly higher than baseline activation. Likewise, despite the significant difference between haptic and visual conditions, the activation levels were not significantly higher than baseline activation.

In the cuneus of the RH, the ANOVA on activation levels during the exploration phase showed a main effect of sensory modality, a main effect of task, and a sensory modality by task interaction. In particular, for the haptic trials, there was higher activation for grasp versus reach and imagine grasping versus reach, whereas for visual trials, there was higher activation for grasp versus reach and grasp versus imagine grasping. The ANOVA on activation levels during the execution phase showed a main effect of sensory modality and a main effect of task. Specifically, there was higher activation for haptic than visual exploration; however, the activation levels were not significantly higher than baseline activation. In addition, there was higher activation for grasp versus reach, grasp versus imagine grasping, and grasp versus stop.

OP

Localization

The contrast of (look at object > touch object) performed with the independent LOtv functional localizer runs revealed an area in the OP of both hemispheres with higher activation for haptic than visual exploration. This focus was in the most posterior part of the occipital lobe and calcarine sulcus (Fig. 5A). After identifying the OP in each of the two hemispheres based on its haptic response, we then examined the β weights. The stronger haptic response was initially surprising to us because this is the region of the EVC that represents the visual fovea; however, in our paradigm, no stimuli were presented in the fovea, only the fixation point, which was present in the fovea in both visual and haptic trials. This effect suggests that haptic processing of shapes relies on different neural mechanisms compared with visual processing. In particular, it is possible that haptic exploration is based on foveal reference frames despite the fact that participants were in the dark with their eyes open and fixated on a point placed above the object that they were exploring.

Given the unexpectedness of the stronger activation in the OP for haptic exploration, we wanted to verify the consistency of the results by performing an analysis of independent data. Therefore, we performed a similar contrast using the experimental runs. Because the experimental paradigm also included visual and haptic exploration of shapes, we ran the following contrast: [(haptic exploration: grasp + haptic exploration: reach + haptic exploration: imagine grasping + haptic exploration: stop) > (visual exploration: grasp + visual exploration: reach + visual exploration: imagine grasping + visual exploration: stop)]. We found a close match between the activation map obtained with this contrast and the one performed with the LOtv localizer runs, with the OP showing higher activation for haptic than visual exploration and the cuneus showing higher activation for visual than haptic exploration.

Experimental runs

As shown in Figure 6, during the exploration phase, activation levels were higher for haptic than visual conditions (Fig. 6, left); however, during task execution after the delay, activation levels were comparable for haptic and visual conditions (Fig. 6, right). In addition, during the execution phase, there was higher activation for the grasp condition than the reach, imagine grasping, and stop conditions, as well as the baseline activation. Specifically, the ANOVA on activation levels during the exploration phase showed a main effect of sensory modality with higher activation for haptic than visual exploration. The ANOVA on activation levels during the execution phase showed a main effect of task. In particular, there was higher activation for grasp versus imagine grasping, grasp versus stop, reach versus imagine grasping, and reach versus stop. The OP in the LH also showed higher activation for grasp versus reach.

LG

The contrast of (look at object > touch object) performed with the independent LOtv functional localizer runs also revealed an unexpected area in the right LG with higher activation for haptic than visual exploration of shapes (Fig. 5A).

Experimental runs

As shown in Figure 7, during the exploration phase, activation levels were higher for haptic than visual conditions (Fig. 7, left); however, during task execution after the delay, activation levels were comparable for haptic and visual conditions (Fig. 7, right). To measure the involvement of the OP and LG in haptic versus visual exploration of shapes, we compared the difference in activation between haptic versus visual exploration tasks directly in the LG and OP in the RH (Fig. 7, left). This comparison showed higher activation for haptic versus visual exploration in the OP than the LG, suggesting a stronger involvement of the OP than the LG in the haptic identification of shapes. In addition, during the execution phase, there was higher activation for the grasp condition than the reach, imagine grasping, and stop conditions, as well as the baseline activation. Specifically, the ANOVA on activation levels during the exploration phase showed a main effect of sensory modality with higher activation for haptic than visual exploration. In addition, there was higher activation for grasp versus reach during the exploration phase. The ANOVA on activation levels during the execution phase showed a main effect of task. In particular, there was higher activation for grasp versus reach, grasp versus imagine grasping, grasp versus stop, reach versus imagine grasping, and reach versus stop.

Eccentricity mapping

To ensure that our foci at the OP and cuneus did indeed correspond to the putative foveal and appropriate peripheral representations in retinotopic cortex (respectively), we compared our foci from the LOtv experiment with eccentricity maps derived from an independent sample of 11 participants with retinotopic mapping (Fig. 8A).

From the ROIs at the OP and the cuneus from the LOtv experiment (touch object > look at object and vice versa), we extracted the β weights from the retinotopic mapping data for each of the time bins, which correspond to increasing eccentricities. According to Wandell (1995), the diameter of the fovea is 5.2°, whereas the parafovea and perifovea extending around the fovea have diameters of ∼5–9° and 9–17°, respectively (for review, see also Strasburger et al., 2011). Therefore, in this experiment, bins from 1 to 5 correspond to the fovea, whereas bins from 6 to 10 correspond to parafovea and perifovea.

In both ROIs, we then ran a two-tailed paired-sample t test to compare activation in the fovea (time bins 1–5; minimum inner diameter = 0° to maximum outer diameter = 4.2°) with the para/perifovea (time bins 6–10; minimum inner diameter = 2.6° to maximum outer diameter = 15°). As shown in Figure 8B, and as expected, the OP showed higher activation for eccentricities corresponding to the fovea than para/perifovea. In contrast, the cuneus showed higher activation for eccentricities corresponding to the para/perifovea than the fovea.
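The paired comparison described above can be sketched as a paired-sample t statistic on per-participant β weights. The sketch assumes one foveal and one para/perifoveal β value per participant (averaged over the corresponding time bins); the function name and the four toy values are hypothetical and are not data from the study. Obtaining a two-tailed p-value additionally requires the t distribution with n − 1 degrees of freedom, which is omitted here.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(first: Sequence[float], second: Sequence[float]) -> Tuple[float, int]:
    """Paired-sample t statistic and degrees of freedom for first minus second."""
    if len(first) != len(second):
        raise ValueError("both conditions need one value per participant")
    differences = [a - b for a, b in zip(first, second)]
    n = len(differences)
    standard_error = stdev(differences) / sqrt(n)
    return mean(differences) / standard_error, n - 1


# Hypothetical β weights for four participants in one ROI.
foveal_beta = [2.0, 2.5, 3.0, 2.2]       # bins 1-5
peripheral_beta = [1.0, 1.2, 1.9, 1.4]   # bins 6-10
t_value, dof = paired_t(foveal_beta, peripheral_beta)
```

A positive t here would correspond to the OP pattern (fovea > para/perifovea), and a negative t to the cuneus pattern.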

We compared our EVC ROIs with eccentricity maps but not polar angle maps because our arguments are in terms of the eccentric locations rather than the specific visual areas implicated and the meridia from polar angle maps are less straightforward to combine across participants. Moreover, the discrimination of visual areas within the foveal confluence is rarely done because it is technically challenging (Schira et al., 2009). Given that our stimulus fell 10–15° below fixation along the lower vertical meridian, our peripheral hotspot in the cuneus would have to fall on the border between dorsal V1 and dorsal V2 (just above the calcarine, where our hotspot appears) or between dorsal V3 and V3A (which would be expected to appear much more dorsally and is thus implausible).

In sum, our comparisons with independent retinotopic data show that, indeed, our OP focus falls within the expected location for the foveal confluence, whereas our cuneus focus falls within the expected peripheral location for the lower vertical meridian at the V1/V2 boundary.

aIPS

Localization

The contrast of (immediate grasp > immediate reach) run with the independent aIPS functional localizer runs revealed activation near the junction of the intraparietal sulcus and the inferior segment of the postcentral sulcus in the LH (Fig. 9A,B).

Experimental runs

As shown in Figure 9C, during the exploration phase, activation levels were higher for haptic than visual conditions (Fig. 9C, left); however, during the execution phase after the delay, activation levels were comparable for haptic and visual conditions (Fig. 9C, right). In addition, the activation levels for grasp were higher than any other condition. Specifically, the ANOVA on activation levels during the exploration phase showed a main effect of sensory modality with higher activation for haptic than visual conditions. The ANOVA on activation levels during the execution phase showed a main effect of task. In particular, there was higher activation for grasp versus reach, grasp versus imagine grasping, grasp versus stop, reach versus imagine grasping, reach versus stop, and imagine grasping versus stop.

PMd

Localization

The conjunction of contrasts that compared each of the movements with baseline [(immediate grasp > baseline) AND (immediate reach > baseline)] performed with the independent aIPS functional localizer runs revealed activation near the junction of the superior frontal sulcus and the precentral sulcus in the LH (Fig. 9A,B).

Experimental runs

As shown in Figure 9C, there was higher activation for haptic than visual conditions in the exploration phase (Fig. 9C, left). In addition, in the execution phase there was higher activation for grasp than imagine grasping and stop conditions (Fig. 9C, right). Specifically, the ANOVA on activation levels during the exploration phase showed a main effect of sensory modality with higher activation for haptic than visual conditions. The ANOVA on activation levels during the execution phase revealed a main effect of task, with higher activation for grasp versus imagine grasping, grasp versus stop, reach versus imagine grasping, reach versus stop and imagine grasping versus stop.

PPI analysis

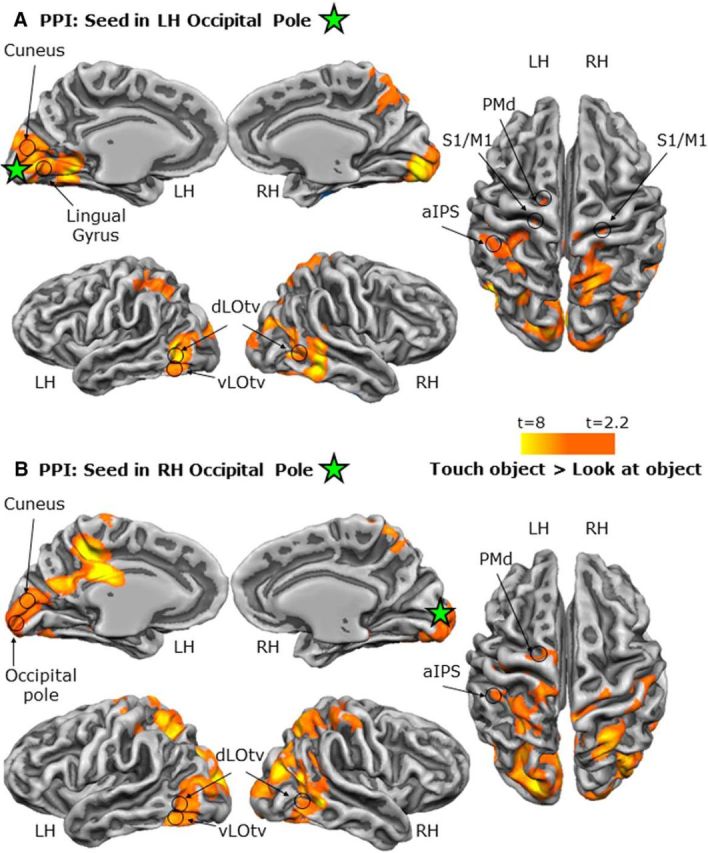

To summarize, our subtraction results show that the patch of visual cortex corresponding to foveal vision is involved in the haptic exploration of a shape in the dark, even when the shape is placed in the lower periphery. This activation in the OP might be due to feedback from high-level sensory–motor areas such as the aIPS. In fact, the aIPS is involved in visual and haptic shape processing of objects for subsequent actions (Króliczak et al., 2008; Marangon et al., 2015), as well as the guidance of grasping actions (Culham et al., 2003; Culham and Valyear, 2006). To test whether connectivity between the OP or cuneus and other regions (e.g., aIPS) is modulated by the nature of the task, we used a PPI approach.
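The logic of a PPI model can be illustrated with a minimal sketch. This is not the authors' pipeline, which is not detailed in this section; it is a simplified illustration that assumes a seed time course (e.g., from the OP), a task contrast coded +1 for haptic and -1 for visual exploration, and a target time course (e.g., from the aIPS). The function names `ppi_design_matrix` and `fit_ppi` are hypothetical, and hemodynamic deconvolution and convolution steps that a full analysis would typically include are omitted. The coefficient on the interaction term indexes how strongly the seed–target coupling changes with the task.

```python
import numpy as np


def ppi_design_matrix(seed_ts, task_contrast):
    """Return columns [intercept, seed, task, seed x task].

    Simplified illustration: both regressors are mean-centered and the
    interaction is their element-wise product (no HRF modeling).
    """
    seed = np.asarray(seed_ts, dtype=float)
    task = np.asarray(task_contrast, dtype=float)
    seed_c = seed - seed.mean()   # physiological regressor
    task_c = task - task.mean()   # psychological regressor
    interaction = seed_c * task_c  # psychophysiological interaction
    return np.column_stack([np.ones_like(seed_c), seed_c, task_c, interaction])


def fit_ppi(target_ts, seed_ts, task_contrast):
    """Ordinary least-squares fit; element 3 of the result is the PPI effect."""
    X = ppi_design_matrix(seed_ts, task_contrast)
    y = np.asarray(target_ts, dtype=float)
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas


if __name__ == "__main__":
    # Simulated data only: coupling between seed and target is made
    # stronger during "haptic" (+1) than "visual" (-1) blocks.
    rng = np.random.default_rng(0)
    task = np.tile(np.repeat([1.0, -1.0], 10), 10)
    seed = rng.standard_normal(task.size)
    target = 0.2 * seed + 0.5 * seed * task + 0.1 * rng.standard_normal(task.size)
    print("PPI (interaction) beta:", fit_ppi(target, seed, task)[3])
```

In this sketch, a positive interaction coefficient would correspond to stronger seed–target coupling during haptic than visual exploration, and a negative one to the reverse.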

When comparing haptic with visual exploration in the localizer, we found that the OP (Fig. 10, Table 2) showed stronger functional connectivity with numerous areas, including those implicated in sensory–motor control of grasping actions (left aIPS), multimodal recognition of objects (LOtv), somatosensory/motor function (S1/M1 and PMd), and processing more peripheral locations (cuneus and LG). In contrast, the cuneus (Fig. 11, Table 2) showed stronger functional connectivity for visual than haptic exploration with cortex in the vicinity of LOtv, as well as dorsal stream areas such as PMd. Together, the PPI results reinforce the suggestion based on activation differences that the OP plays a stronger role in haptic exploration, whereas the retinotopic zone of the cuneus plays a stronger role in visual exploration.

Figure 10.

Functional network of areas connected to the OP during haptic versus visual exploration of unfamiliar shapes in the LOtv localizer. Shown are statistical parametric maps of the PPI results using the OP in the LH (A) and RH (B) as seed regions. The green star corresponds to the peak voxel of the seed ROIs.

Figure 11.

Functional network of areas connected to the cuneus during visual versus haptic exploration of unfamiliar shapes in the LOtv localizer. Shown are statistical parametric maps with the PPI results using the cuneus in the left (A) and right (B) hemisphere as seed regions. The green star corresponds to the peak voxel of the seed ROIs.

Discussion

We examined brain activation during haptic versus visual exploration of unfamiliar shapes and subsequently when participants used this information to perform actions or imagery after a delay. Our results demonstrate four main findings. First, haptic and visual exploration of unfamiliar shapes recruits the same visual areas but not the same retinotopic zones. Specifically, haptic recruitment of visual areas does not correspond only to retinotopic areas in the visual cortex, but also includes the high-acuity foveal portion. Second, the haptic exploration of unfamiliar shapes recruits a functional network that connects the OP with high-level function areas involved in processing object shapes such as the LOtv and object-directed hand actions such as the aIPS. Third, the EVC and ventral stream area LOtv are re-recruited during actions in the dark after a delay regardless of the sensory modality used to explore the object initially, suggesting the recruitment of an abstract representation of object shape during the movement. Fourth, the reactivation of visual areas during actions in the dark cannot be explained entirely by action imagery because the execution of real actions elicits higher activation than imagined ones. Together, these results provide a comprehensive whole-brain, network-level framework for understanding how multimodal sensory information is cortically distributed and processed during delayed actions and action imagery.

EVC is activated differentially for both haptic and visual exploration

We found activation in the EVC, not only when participants viewed the objects, but also when they touched the objects in the dark, as other studies have shown (Merabet et al., 2007; Snow et al., 2014; Rojas-Hortelano et al., 2014; Marangon et al., 2015). Even more interestingly, we found that object information recruits different parts of the EVC depending on the sensory modality used to explore the object. This is surprising given that the object was in the same position relative to the fixation point and the body of the participant for both visual and haptic trials. In particular, viewing elicited higher activation than touching the object in the dark in the expected retinotopic location (in the cuneus, corresponding to the objects' placement in the visual field 10–15° beneath fixation along the lower vertical meridian, likely at the V1/V2 boundary), whereas touching elicited higher activation than viewing the object in the OP, specifically in the location for foveal visual processing. The haptically driven recruitment of retinotopic foveal cortex was observed even though participants touched the shapes while their eyes were open and they maintained gaze on the fixation point located above the objects. Surprisingly, retinotopic foveal cortex has been shown to contain visual information even about objects presented in the visual periphery when high-acuity processing is required, and this phenomenon has been found to correlate with task performance and to be critical for extrafoveal perception (Williams et al., 2008; Chambers et al., 2013). The involvement of cortical areas that are not primarily related to the sensory modality that recruits them may enhance processing. For instance, the visual cortex, specifically the foveal zone, might allow the generation of a detailed representation of an object even in the absence of visual information.

The activation of the foveal cortex during the exploration of a shape in the dark and, importantly, while maintaining fixation on a point above the object, might seem at odds with the expectation that the EVC uses an eye-centered reference frame to define targets. Indeed, previous research has investigated reference frames in association areas such as the parietal cortex and has shown that targets are mostly defined in eye-centered coordinate frames (Beurze et al., 2010; Van Pelt et al., 2010). However, reference frames have been studied mostly in contexts in which vision was the sense used to define the target. Recent research has shown that when different senses are used to explore a target, multiple reference frames are exploited for localizing the target (Buchholz et al., 2011). Neuroimaging studies have shown that areas in the premotor and posterior parietal cortex not only use multiple reference frames to define visually and proprioceptively defined targets, but parietal areas also flexibly represent an action-directed target location in eye- or body-centered reference frames depending on whether the target has been defined using vision or proprioception (Bernier and Grafton, 2010; Leone et al., 2015). The associative nature of parietal areas that receive information from different senses makes the flexibility of reference frames necessary and consistent with their nature. Despite the expectation that primary sensory areas process targets by using fixed coordinates tied to the sensory modality processed in the area, growing evidence supporting multisensory processing in primary sensory areas including the primary visual cortex (Merabet et al., 2007; Marangon et al., 2015; Snow et al., 2015) opens the door to a deeper exploration of this matter. 

Despite the differences in tasks, stimuli, and contrasts used in previous works, the anatomical location of the OP in this study approximately agrees with previous studies that have investigated areas in the EVC involved in tactile processing (Merabet et al., 2007; Marangon et al., 2015; Snow et al., 2015). Our results extend previous findings by providing a direct comparison between tactile and visual processing, as well as eccentricity information. Indeed, our results suggest that, when touch is used to explore an object, the EVC processes target properties in reference frames that are not necessarily fixed to the eyes, as one might otherwise expect. We argue that whereas the primary visual cortex, and likely other primary sensory cortices, might not be made to process information in reference frames associated with modalities that differ from the primary sense being processed in the area (i.e., body-centered reference frames used in the primary visual cortex), they might develop this capability by continuously associating information processed with different senses. Experience likely redefines the way that different modalities are processed in the primary visual cortex to refine the representation of an object by enriching it with memory traces of previous visual experiences.

PPIs: functional connectivity during haptic exploration

One intriguing possibility is that different regions of retinotopic cortex are not only differentially activated during visual and haptic exploration, but are also connected differentially. That is, one might expect stronger feedback connections linking regions implicated in multimodal perception (LOtv) and haptic exploration (aIPS) with the region of retinotopic cortex best suited to provide the relevant information: retinotopic information for vision and high-acuity (foveal) information for touch. Indeed, our PPI analyses support this.

Although an intact OP or LOtv might not be necessary for accurate recognition of objects using haptic exploration (Snow et al., 2015), these cortical regions may nevertheless facilitate learning associations between what is touched and what is seen via functional connections with dorsal stream areas such as the aIPS and ultimately generate a representation of shapes that can be later retrieved from any of these areas, if intact, using either vision or touch.

EVC and LOtv are reactivated for delayed grasping

During the execution of grasping after a delay, we observed reactivation in both the cuneus and OP regardless of the sensory modality used initially to explore the shape. In addition, the EVC was more involved in real than imagined actions.

In the OP, the reactivation for actions after the delay was comparable for visual and haptic trials even though haptic exploration of objects elicited higher activation than did visual exploration. These results suggest that once the representation of the shape is formed, the OP might store an abstract representation that is largely independent of the sensory modality used to acquire it and that is recruited during actions that require detailed information about object shape (e.g., grasping movements), but not for tasks that require less detailed information about the object (e.g., reaching) or imagining an action toward the same object without any real movement. Indeed, behavioral and neuroimaging research has revealed that the processing of action-relevant features (e.g., orientation during grasping) can be enhanced during movement preparation and relies on neural correlates that include the early and primary visual cortex (Bekkering and Neggers, 2002; Gutteling et al., 2015). Here, we show that enhancement of action-relevant visual information also occurs for delayed actions (when the object is unseen) and even when the stimulus is explored through touch rather than vision.

Interestingly, we found that the cuneus showed opposite preferences during reactivation at the time of the movement (haptic > visual) compared with exploration (visual > haptic).

Though speculative, one possible account may be that during visual exploration, there are many visual features to process at the retinotopic location of the cuneus (such as the global shape of the object and its material properties) that are not visible during haptic exploration, whereas during haptic and visual reactivation, no visual stimuli are present at the retinotopic location in either case and the recall of certain properties (particularly the object-graspable dimension in grasp trials) may require somewhat more processing for haptic than visual trials.