Abstract

Uncorrected refractive error is a major cause of vision impairment, and is indexed by visual acuity. Availability of vision assessment is limited in low/middle-income countries and in minority groups in high-income countries. eHealth tools offer a potential solution; two-thirds of the world’s population own mobile devices. This is a scoping review of the number and quality of tools for self-testing visual acuity. Software applications intended for professional clinical use were excluded. Keyword searches were conducted on Google, Google Play and the Apple App Store. The first 100 hits in each search were screened against inclusion criteria. After screening, 42 tools were reviewed. Tools assessed near and distance vision. About half (n = 20) used bespoke optotypes. The majority (n = 25) presented optotypes one by one. Four included a calibration procedure. Only one tool was validated against gold standard measures. Many self-test tools have been published, but they lack validation. There is a need for regulation of tools for the self-testing of visual acuity to reduce potential risk or confusion to users.

Subject terms: Refractive errors, Vision disorders, Diagnosis, Visual system, Neuroscience

Introduction

Vision impairment is rising year on year, with an estimated 237.1 million people projected to have moderate or severe vision impairment by 2020.1 Visual impairment has negative impacts on quality of life and on mental and physical health,2–6 impedes performance in school, reduces employability and productivity7 and is associated with a greater risk of all-cause mortality in older persons.8 Uncorrected refractive errors (UREs) and unoperated cataracts are the leading global causes of vision impairment, and over 80% of all vision impairment is preventable.9 UREs are responsible for 53% of vision impairment.10,11 The contribution of UREs to moderate and severe vision impairment is estimated to be higher in low- and middle-income countries (LMIC) than in high-income countries.11–13

One potential reason for the prevalence of UREs is lack of access to vision care services. In some LMICs, vision care services are offered only at the secondary and tertiary levels of care, not at community level.7,14 In high-income countries that do have readily available vision screening, uptake is limited,15 so UREs may go undetected and uncorrected.16 In high-income countries, UREs are more common among low socioeconomic and ethnic minority groups.17–19 Limited availability of eye examinations in people’s own homes, living alone, the cost of vision care and the perception that declining visual acuity is a normal part of ageing are also associated with UREs.20 eHealth vision screening tools may help tackle some of the issues relating to visual impairment due to UREs by increasing identification and promoting correction of refractive errors. eHealth tools may also help identify visual acuity problems due to other treatable conditions, such as macular degeneration.

Over the past decade, the number of digital health tools has risen. Over 200 online health tools are published every day, and over 318,000 are currently available.21 With technological growth, an increasing number of online tools and the potential for healthcare cost savings, investment in digital health grows year on year.21 Two-thirds of the world’s population are now connected via mobile devices.22 However, the quality of eHealth tools is uncertain. Developers of eHealth tools generally have no training in healthcare, and health professionals are not involved in the development of the majority of tools.23–25

Vision assessment is an area of eHealth that may have particularly benefited from advances in technology, including larger screen sizes, higher screen resolutions, more processing power and lower hardware costs. Numerous tools for vision assessment have already been developed and published. However, the range of tools available has made it difficult for clinicians and the public to determine which tools are the most effective.26

The aims of the review were (i) to provide an overview of online or app-based tools for self-testing of visual acuity; and (ii) to identify and critique the quality of these tools with respect to validity and reliability. The review excluded software applications intended for professional clinical use (e.g., the AT20P Acuity Tester27 or the Vision Toolbox28). The review focussed on tools for self-testing of visual acuity because visual acuity is a strong predictor of self-reported vision related quality of life29 and International Classification of Diseases 11 (2018)30 definitions of vision impairment are based on visual acuity; visual acuity is the primary index of vision impairment.

Results

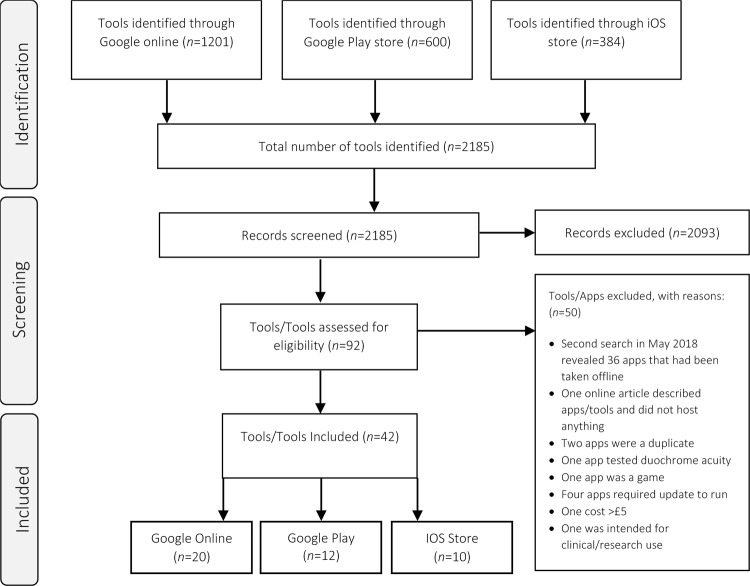

After screening the results, 92 tools were assessed for eligibility, and 42 mobile and/or online tests (Fig. 1) were included for review. All tests took ~5–20 min to complete, including setup and calibration time. Several tests did not specify testing one eye at a time, so were categorised as testing binocular vision. Table 1 provides a description of the tools.

Fig. 1.

PRISMA flow diagram showing the tool search and screening process

Table 1.

Description of eHealth visual acuity tools

| Test title | VA typeᵃ: N | VA typeᵃ: F | VA typeᵃ: B | VA typeᵃ: M | Optotypeᵇ: SN | Optotypeᵇ: SL | Optotypeᵇ: E | Optotypeᵇ: C | Optotypeᵇ: B | Optotypeᵇ: O | Calibration | Presentation: Singly | Presentation: Whole | Presentation: Other | Platform | Reliabilityᶜ | Validityᶜ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Online Eye Test | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||

| Eye Chart and Vision Test Online | ✓ | ✓ | ✓ | ✓ | Web | ✓ | |||||||||||

| Online Eye Tests | ✓ | ✓ | ✓ | ✓ | ✓ | Web | |||||||||||

| Snellen Eye Test Online | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Devlyn Vision Screening | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||

| Snellen Online Eye Test | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Online Eye Test | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Self-Vision Test | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Test Your Eyesight Now | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| The Online Eye Test | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Better Vision | ✓ | ✓ | ✓ | ✓ | ✓ | Web | |||||||||||

| Presbyopia Test | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Vutest | ✓ | ✓ | ✓ | ✓ | Web | ✓ | ✓ | ||||||||||

| SeeDrivePro | ✓ | ✓ | ✓ | ✓ | ✓ | Web | ✓ | ✓ | |||||||||

| Online Eye Exam | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Online Eye Tests | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Essilor Vision Test | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Online Eye Exam | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Online Eye Tests | ✓ | ✓ | ✓ | ✓ | Web | ||||||||||||

| Zeiss Online Vision Screening | ✓ | ✓ | ✓ | ✓ | ✓ | Web | |||||||||||

| EyeTester | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| EyeTesterPro | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| REST Rapid Eye Screening Test | ✓ | ✓ | ✓ | ✓ | App | ✓ | ✓ | ||||||||||

| Eye Test by Boots Opticians | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| Advanced VISION Test | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| Eye Test Free | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| Eyesight Checking | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| OPSM Eye Check | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| Vision Scan Lite | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| Vision Scan Universal | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| Eye Checker | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| Eye Doctor Trainer - Vision Up | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| Eye Exam - Andrew Brusentsov | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||

| Eye Exam Pro - Andrew Brusentsov | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||

| Eye Exercises - Eye Care Plus | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||

| Eye Test Charts | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | App | ||||||||||

| Eye Test Girls | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| Eye Vision Test | ✓ | ✓ | ✓ | ✓ | App | ||||||||||||

| EyeXam | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| iKit - For Your Eyes Only | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| OPSM Eye Check | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

| Vision Test | ✓ | ✓ | ✓ | ✓ | ✓ | App | |||||||||||

ᵃ N, near vision; F, far vision; B, binocular; M, monocular.

ᵇ SN, Snellen; SL, Sloan; E, tumbling E; C, Landolt C; B, bespoke; O, other.

ᶜ Grey shading: reported as having data, but data not provided or in preparation.

Optotypes

Twenty-one tools used a mixture of standard optotypes, including Sloan letters, original Snellen letters, tumbling E and Landolt C. Of these twenty-one tools, five also offered non-standard optotypes and three included options for pictures or numbers. Twenty tools used only alternative (bespoke) optotypes, drawn from an assortment of serif and sans serif typefaces. One tool used numerical optotypes only.

Presentation and response interface

The majority of tools (n = 25) presented optotypes one by one, and none offered crowded optotypes as an option. This format was used for optotypes that required the participant to judge the orientation of the figure, for example Landolt C or tumbling E. On mobile devices, a swiping gesture could be used to indicate the direction of the optotype. The second most common format was to present the entire chart (n = 11); ten of these were found through Google online searches and were intended for use with a desktop or laptop computer. A small number of tools presented optotypes using non-validated methods, for example line by line (e.g., Better Vision by Cambridge Institute for Better Vision; SeeDrivePro by EyeLab Ltd) or within a circle of selectable letters (e.g., Vutest by EyeLab Ltd).

Calibration

The majority of tools (n = 38) provided instructions for completing the test in a standard way, for example by positioning the user at the correct distance from the display. Six tools (Eye Chart and Vision Test Online by MindBluff.com; Online Eye Tests by Ross Brown Optometry; The Online Eye Test by Jim Allen; Vutest by EyeLab Ltd; Online Eye Tests by Dr Oliver Findl; and Online Eye Tests, Free eye exams and vision test by Prokerala) specified a testing distance that depended on the resolution of the display. Three additional tools (Snellen Online Eye Test by Smart Buy Glasses; Self-Vision Test by Milford Eye Care; Online Eye Exam by Jem Optical) presented a standard Snellen chart with an added calibration bar. The user measures the bar in centimetres, and this value gives the distance in feet at which the user should stand from the display. One tool (Snellen Eye Test Online by eyes-and-vision.com) asked the user to measure the largest optotype (i.e., the optotype at the top of the chart) in inches; this value is then multiplied by a constant to obtain the required distance from the display.
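Both distance rules above can be related to standard Snellen geometry, in which a letter on the 20/X line subtends 5 minutes of arc when viewed from X feet. The following Python sketch is illustrative only. The function names are hypothetical, and the calibration-bar rule is implemented exactly as described. For the largest-optotype method we assume the top line is 20/200, because the constant used by the tool was not reported. Under that assumption the constant works out at roughly 5.73 ft of viewing distance per inch of letter height.

```python
import math

ARCMIN_RAD = math.pi / (180 * 60)  # one minute of arc, in radians


def distance_from_bar(bar_length_cm: float) -> float:
    """Calibration-bar rule as described: the bar length in centimetres
    is read directly as the viewing distance in feet."""
    return bar_length_cm


def distance_from_top_letter(letter_height_in: float,
                             top_line_denominator: float = 200) -> float:
    """Viewing distance in feet at which the measured top letter has its
    nominal angular size.

    ASSUMPTION: the top line is 20/<top_line_denominator> (20/200 by default)
    and letters follow the 5-arcmin Snellen convention; the tool's own
    constant was not reported.
    """
    # A 20/X letter subtends 5 arcmin * X/20 when viewed from 20 ft.
    angle = 5 * ARCMIN_RAD * top_line_denominator / 20
    distance_in = letter_height_in / (2 * math.tan(angle / 2))
    return distance_in / 12  # inches -> feet


print(f"{distance_from_top_letter(1.0):.2f} ft per inch of letter height")
print(distance_from_bar(6.1), "ft for a 6.1 cm bar")
```

Both rules scale linearly with on-screen size. If a display renders the chart 10% larger, the bar or top letter also measures 10% larger and the user is told to stand 10% farther away, so the angle subtended by the optotypes, which is what acuity depends on, stays the same.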

Four tools (Online Eye Test by Easee Online; Devlyn Vision Screening by Devlyn Optical; SeeDrivePro by EyeLab Ltd; Zeiss Online Vision Screening by Zeiss) provided a calibration procedure, in which optotype size was standardised against a reference object (such as a credit card) by adjusting image sizes with on-screen toggles. Calibration in one tool (Online Eye Test by Easee Online) also took into account screen brightness, screen placement (i.e., monitor/laptop position) and colour. All four tools that offered calibration procedures were web-hosted; no tool on a mobile platform provided calibration options.
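Reference-object calibration works because a standard bank card has a fixed physical size (85.60 × 53.98 mm, the ISO/IEC 7810 ID-1 format). Matching an on-screen outline to the card therefore gives the display's pixels per millimetre. The Python sketch below shows how that scale could then set optotype size for a given viewing distance, assuming letters subtend 5 arcmin divided by the decimal acuity of the line. The function names and the 323.5-pixel measurement are hypothetical, and the sketch is not taken from any of the reviewed tools.

```python
import math

CARD_WIDTH_MM = 85.60  # ISO/IEC 7810 ID-1 (standard bank card) width
ARCMIN_RAD = math.pi / (180 * 60)  # one minute of arc, in radians


def pixels_per_mm(matched_width_px: float) -> float:
    """Display scale once an on-screen card outline has been resized
    until its width matches a physical card held against the screen."""
    return matched_width_px / CARD_WIDTH_MM


def optotype_height_px(px_per_mm: float, viewing_distance_m: float,
                       decimal_acuity: float = 1.0) -> float:
    """On-screen letter height in pixels, ASSUMING the letter subtends
    5 arcmin / decimal_acuity at the viewing distance (1.0 = 6/6 size)."""
    angle = 5 * ARCMIN_RAD / decimal_acuity
    height_mm = 2 * viewing_distance_m * 1000 * math.tan(angle / 2)
    return height_mm * px_per_mm


scale = pixels_per_mm(323.5)  # hypothetical: outline matched the card at 323.5 px
for label, acuity in [("6/60", 0.1), ("6/12", 0.5), ("6/6", 1.0)]:
    print(f"{label}-size letter at 3 m: {optotype_height_px(scale, 3.0, acuity):.1f} px")
```

At higher acuity levels or shorter distances, letters become only a few pixels tall, so rounding to whole pixels may noticeably distort their size.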

The majority of tools (n = 32) included a statement that they were screening tools and were not intended as a replacement for professional testing. The tools recommended consulting a professional if the user had any concerns about their vision, and recommended regular vision testing.

Presentation of results

The presentation of results varied widely. The most common format (n = 13) was the Snellen fraction, most often expressed in feet (n = 9).31 Five of these thirteen tools simply explained how the chart was scored, and the user was then required to interpret the results by following the instructions. For example, the user had to determine the Snellen score from the line that was read (e.g., the third line would be equivalent to 20/40). The remaining eight tools automatically provided a Snellen fraction along with an explanation (e.g., “20/100 means that when you stand 20 feet from the chart you can see what a normal person standing 100 feet away can see, 20/100 is considered moderate vision impairment”).
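Snellen fractions in feet or metres, the decimal values reported by some tools, and logMAR are interconvertible. Decimal acuity is the Snellen numerator divided by the denominator, and logMAR is the negative base-10 logarithm of decimal acuity. The Python sketch below converts between them and maps decimal acuity onto the ICD-11 distance vision impairment categories (mild: worse than 6/12 down to 6/18; moderate: worse than 6/18 down to 6/60; severe: worse than 6/60 down to 3/60; blindness: worse than 3/60). The function names are hypothetical. ICD-11 applies these thresholds to presenting acuity in the better eye, which a single monocular result does not on its own provide.

```python
import math
from fractions import Fraction

# ICD-11 distance categories as lower bounds on decimal acuity, best to worst:
# 6/12 = 1/2, 6/18 = 1/3, 6/60 = 1/10, 3/60 = 1/20.
ICD11_DISTANCE_CATEGORIES = [
    (Fraction(1, 2), "no vision impairment"),
    (Fraction(1, 3), "mild vision impairment"),
    (Fraction(1, 10), "moderate vision impairment"),
    (Fraction(1, 20), "severe vision impairment"),
]


def snellen_to_decimal(numerator, denominator) -> Fraction:
    """Snellen fraction (feet or metres) as an exact decimal acuity.

    Values go through str() so that metric lines such as 6/7.5 stay exact.
    """
    return Fraction(str(numerator)) / Fraction(str(denominator))


def decimal_to_logmar(decimal: Fraction) -> float:
    """logMAR = log10(1 / decimal acuity); 20/20 (6/6) gives 0.0."""
    return math.log10(float(1 / decimal))


def icd11_category(decimal: Fraction) -> str:
    """First category whose lower bound the acuity meets or exceeds."""
    for lower_bound, label in ICD11_DISTANCE_CATEGORIES:
        if decimal >= lower_bound:
            return label
    return "blindness"


for num, den in [(20, 20), (20, 40), (20, 100), (6, 60)]:
    acuity = snellen_to_decimal(num, den)
    print(f"{num}/{den}: decimal {float(acuity):.2f}, "
          f"logMAR {decimal_to_logmar(acuity):.2f}, {icd11_category(acuity)}")
```

Using exact fractions avoids floating-point errors at category boundaries such as 20/60, which equals 6/18 exactly. Consistent with the tool quoted above, 20/100 falls in the moderate category.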

Eighteen tools provided a visual performance score without any further interpretation. For example, some tools provided a percentage score or categorised visual performance as low, medium or high risk. Neither the calculation of the risk score nor what the “risk” related to was explained (e.g., OPSM Eye Check by OPSM; Devlyn Vision Screening by Devlyn Vision). Two tools (Vutest and SeeDrivePro by EyeLab Ltd) required payment before the results could be retrieved.

Four tools produced a simple decimal value with no further explanation (Eye Tester and Eye Tester Pro by Fuso Precision; Eye Checker by Nanny_786; Eye Doctor Trainer - Vision Up by BytePioneers S.R.O.). These decimal scores may have been derived from Snellen scores, but this was not stated. Two tools provided a dioptre-based prescription for near vision: Nanyang Optical recommended a lens strength for the user, and the test by Easee Online provided a prescription with values for sphere, cylinder and vision percentage for each eye.

Lastly, five tools (Eye Test Free by Magostech Information System Pvt Ltd; Eye Exam and Eye Exam Pro by Andrew Brusentsov; Eye Exercises - Eye Care Plus by healthcare4mobile; Vision Test by NeoVize Group) gave a non-standard percentage-based score for each eye (e.g., L80%, R75%) with no details of how these percentages were calculated or should be interpreted.

The Easee Online test also provided a prescription service at a cost to the user. The prescription generated by the online test was validated by an in-house specialist at Easee Online, and an eyewear prescription was then dispatched to the user.

Validity and reliability

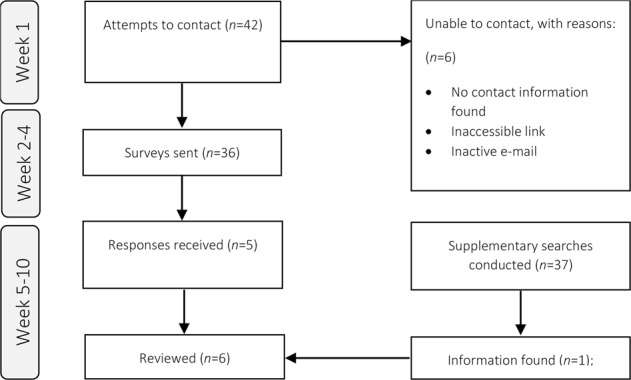

Five electronic surveys were returned, and a further 37 supplementary searches were conducted to uncover validity or reliability data (Fig. 2). Survey responses were received for the “Online Eye Test” by Easee, the “Eye Chart and Vision Test” by mindbluff.com, “Vutest” and “SeeDrivePro” by EyeLab Ltd, and the “Eye Test” by Boots Opticians (Table 1). Supplementary searches identified information for three additional tools, but only one of these yielded relevant data (REST by Zu Quan Ik).

Fig. 2.

PRISMA flow diagram showing attempts to contact and survey the tool developers for further information

The developers of the five tools that responded to the survey reported having collected reliability and validity data, but did not provide any data to support this.

Jan-Bond et al. conducted a study to determine the agreement and intraclass correlation coefficient (ICC) of visual acuity between the REST rapid eye screening test (Zu Quan Ik) and an Early Treatment Diabetic Retinopathy Study (ETDRS) tumbling E chart.32 The REST uses a tumbling E chart, with optotype sizes calibrated for 1-m and 3-m testing distances. Visual acuity was tested at 3 m on both the ETDRS chart and the REST in 101 adult participants who were patients and staff (mean age = 37.0 ± 15.9 years; range 5.0–75.0) of the eye clinic at Hospital Universiti Sains Malaysia. Data were not provided on the eye conditions of participants. Participants with acuity worse than 6/60 were excluded. The ethnic background of participants reflected the Malaysian general population, with the majority being Malays (62.4%). However, the sample was not representative in terms of educational background; over half of the participants were professionals with tertiary level education. Strong agreement was observed between the two tests for the right (ICC = 0.91; 95% CI 0.86–0.94, p < 0.001) and left (ICC = 0.93; 95% CI 0.90–0.95, p < 0.001) eyes. The REST was also significantly quicker to administer than the ETDRS chart. A second study compared the REST with the ETDRS chart in 200 adult patients with immature cataracts and also reported a strong relationship between REST and ETDRS results (Pearson’s r = 0.99, p < 0.001).33 To our knowledge, the reliability of the REST has not been assessed.
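The two studies report different statistics, and the difference matters: an ICC for absolute agreement penalises a systematic offset between two tests, whereas Pearson's r does not. The sketch below computes ICC(2,1) (two-way random effects, absolute agreement, single measurement, in Shrout and Fleiss's notation) and Pearson's r in plain Python. The ICC model is an assumption, since the text does not state which form Jan-Bond et al. used. The logMAR values are invented for illustration rather than taken from either study.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    Rows are subjects (e.g. eyes); columns are the tests being compared.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Invented logMAR scores: the app reads 0.1 logMAR worse than the chart in every eye.
chart = [0.0, 0.2, 0.4, 0.6]
app = [0.1, 0.3, 0.5, 0.7]
print(f"Pearson r = {pearson_r(chart, app):.2f}")
print(f"ICC(2,1)  = {icc_2_1(list(zip(chart, app))):.2f}")
```

In this made-up example, Pearson's r is 1.00 while ICC(2,1) is about 0.93. A very high correlation on its own therefore does not show that two tests give interchangeable scores.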

Discussion

Online vision self-assessment tools may help address the increasing burden of visual impairment in both high-income countries and LMIC. However, of the 42 tools for self-assessment of visual acuity included in this review, only one reported validity data, and for that tool the eye conditions of the participants in the validation study were not reported. No tools reported reliability data. The uncertain validity and reliability of most tools is a concern because unreliable tools may miss cases of visual impairment, or may cause undue anxiety by falsely identifying cases. Poor quality eHealth apps may compromise patient safety (e.g., in relation to dose calculation34 or melanoma detection35), and vision assessment tools may raise similar issues. The vision assessment tools identified in the mobile app stores tended to be categorised under the “Medical” or “Health and Fitness” sections. Such categorisation may increase the likelihood of users relying on these tools for medical information. There is a need to establish regulatory standards for vision self-assessment tools to ensure that user safety is not compromised.

A variety of models of regulation are available. National governmental regulatory authorities (e.g., the US Food and Drug Administration [FDA] or the UK Medicines and Healthcare products Regulatory Agency [MHRA]) may struggle to keep pace with the rapid development of eHealth tools.36 Voluntary certification and the European Union’s system of decentralised registration are alternatives to regulation by central national authorities.37 For example, a voluntary certification system was built on a set of standards drawn up by commercial eHealth companies.38 Under this system, app developers paid to have their apps certified as meeting the prescribed standards. In the European Union’s model, app developers can file an application for medical device registration with any member state of the European Union.39 The Conformité Européenne (CE) mark issued by the respective body in that member state is then valid throughout the European Union.

However, these registration systems all have limitations. For example, the FDA only regulates apps that are defined by the FDA as being a medical device, or apps that may risk patient safety, and most apps fall outside of these definitions.40

The voluntary industry-led registration system mentioned above38 was discontinued after security flaws were identified in some certified apps and after low uptake of registration by app developers. The main limitation of the European Union’s system is that its standards relate only to safety and performance and do not require the clinical efficacy of the device to be established.37

One potential solution may be endorsement by credible national health agencies and/or third sector organisations based on an internationally agreed set of standards that take into account the efficacy and safety of the eHealth tool. The United Kingdom’s National Health Service (NHS) recently created an online register of digital health tools for self-care of various health conditions that meet standards of quality, safety and effectiveness (https://apps.beta.nhs.uk/). Standards are assessed based on digital assessment questions (DAQ), a framework developed by subject matter experts.41 Healthcare organisations elsewhere could establish similar registries of eHealth apps according to DAQ standards.

Another potential solution is based on the Clinical Laboratory Improvement Amendments of 1988 (CLIA) model.42 CLIA is a system for ensuring that diagnostic testing laboratories comply with US regulatory standards. Non-profit accrediting agencies with authority to issue certification under US federal CLIA standards ensure consistency of record keeping and staff training.43 The CLIA model could be applied to eHealth tools to ensure that they comply with basic standards of accessibility (clear language, ease of use, affordability and usability), privacy and security (assurance that the tool appropriately secures private health data and complies with data sharing laws [e.g., the General Data Protection Regulation]) and content (apps are developed with healthcare professional involvement, provide accurate information and limit monetisation practices).37,44 Just as US healthcare providers do not do business with laboratories that are not CLIA certified, users could choose to use only eHealth tools with appropriate certification.

Many professional clinical assessment systems use technology similar to that used by the tools covered in this review (e.g., Thomson Software Solutions’ products28). Professional systems reliably assess a much wider range of parameters than visual acuity, the typical focus of self-test apps. Developers of most self-test apps may not have a background in vision healthcare.23–25 It may therefore be that any lack of reliability in self-testing apps is not a technological problem, but is due to a lack of awareness on the part of app developers of the optometrically important features of the test (e.g., the style of the optotypes or their spacing). Guidelines setting minimum quality standards may be useful in ensuring that self-test apps are of comparable reliability to professional systems.

In addition to systems of quality certification for eHealth tools, eHealth tools for vision impairment should include a clear link to care that supports users acting on the result of the vision test. The visual acuity self-assessment tools in this review directed the user to visit a professional for further advice or testing. Not including a specific link to clinical or support services may mean that only a small proportion of people who fail a vision self-test act on the results. Additional possibilities may include directly linking self-test apps with clinical services, and/or remotely delivered care via video conferencing, or other technologies. This type of telehealth care is on the rise, for example in the remote delivery of a cardiac rehabilitation exercise programme45 or in the self-management of skincare,46 where an eHealth app enables two-way communication between patient and clinician. The Easee Online tool identified in this review conducts an eye exam and provides the user with a prescription, which can then be issued (for a fee) by Easee optometrists. The remote care paradigm presented by Easee is of interest, but a serious limitation of this paradigm is the use of an internet tool of uncertain reliability that cannot provide a complete examination of ocular health and which may result in users being falsely reassured by the finding of normal visual acuity.

Video-conferencing and telehealth methods may extend the reach of clinical services into underserved areas (such as in LMICs). A study in an Indian population examined the efficacy of a computerised visual acuity screening system and reported good agreement between the computerised telehealth method and face-to-face assessment.47

A limitation of this review is that it offers only a snapshot of self-assessment vision tools at one point in time in a rapidly changing online landscape. However, the issues related to quality and links to care identified in this review are likely to remain relevant to eHealth self-assessment vision tools released in the months and years following this review. A further limitation is that only tools for self-testing of visual acuity were evaluated, whereas tools may assess additional parameters relating to specific eye health conditions. However, the focus on visual acuity is justified, as acuity is one of the primary indices of vision impairment.

Conclusion

eHealth vision tools have potential to meet a growing burden associated with vision impairment, particularly in LMIC. However, the validity and reliability of most of these tools have not been established. There is a need to ensure that eHealth vision tools are of good quality. Solutions include following established frameworks for regulation that take into account accessibility, privacy and content, and the creation by national health agencies or third sector organisations of repositories of high-quality tools that users can depend on. There is also a need to link self-tests of visual acuity effectively with a care pathway, which could involve remotely delivered care.

Methods

Design

A systematic search was conducted between May 2017 and May 2018 to identify candidate screening tools for inclusion. The Google search engine was used to identify online tools, and Google Play and the Apple App Store were used to identify smartphone and tablet applications. The search terms were (“Online” OR “Internet-based”) AND (“Vision” OR “Eye”) AND (“Test” OR “Screen” OR “Check”). For the online tools search, the additional search term “Online” was included in combination with the other search terms. For both Google Play and Google online searches, the first 100 hits, sorted automatically by relevance by the search engine, were screened. Location services were disabled, and adverts were not included. For the Apple App Store, all results returned were screened, as each search combination often returned fewer than 100 results.
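The Methods refer to each "search combination", which suggests the Boolean string was run as separate term combinations. The Python sketch below assumes the grouping (Online OR Internet-based) AND (Vision OR Eye) AND (Test OR Screen OR Check), which yields 12 plain-text queries. It then pools the top hits of each query while dropping duplicates. The helper names and the pooling step are illustrative assumptions, not a description of the authors' tooling.

```python
from itertools import product

# ASSUMED grouping of the Boolean string:
# (Online OR Internet-based) AND (Vision OR Eye) AND (Test OR Screen OR Check)
TERM_GROUPS = [
    ["Online", "Internet-based"],
    ["Vision", "Eye"],
    ["Test", "Screen", "Check"],
]


def query_combinations(groups):
    """One plain-text query per combination of terms (one term from each group)."""
    return [" ".join(terms) for terms in product(*groups)]


def pool_hits(results_by_query, max_hits=100):
    """Pool the top max_hits results of every query, keeping first occurrences only.

    max_hits=None keeps every result.
    """
    seen, pooled = set(), []
    for hits in results_by_query.values():
        for hit in hits[:max_hits]:
            if hit not in seen:
                seen.add(hit)
                pooled.append(hit)
    return pooled


queries = query_combinations(TERM_GROUPS)
print(len(queries), queries[:3])
```

Passing `max_hits=None` keeps every hit, which corresponds to the Apple App Store procedure of screening all results returned.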

A first reviewer screened all the titles, identifying candidate tools for inclusion. A second reviewer then screened 10% of the titles to check agreement. All candidate tests for inclusion were screened by both reviewers, and in instances of disagreement a third reviewer decided whether the tool met the criteria for inclusion.
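The double-screening procedure can be summarised in a few lines. In this hedged Python sketch, the function names are hypothetical. Simple random sampling of the 10% subset is an assumption, since the Methods do not say how the subset was chosen. The tie-break mirrors the rule that a third reviewer decides when the first two disagree.

```python
import random


def second_reviewer_sample(titles, percent=10, seed=0):
    """Titles re-screened by the second reviewer.

    ASSUMPTION: simple random sampling, rounded up to a whole title; the
    Methods do not say how the 10% subset was selected.
    """
    k = (len(titles) * percent + 99) // 100  # integer ceiling of percent% of titles
    return random.Random(seed).sample(titles, k)


def inclusion_decision(first, second, third=None):
    """Both reviewers' decision stands when they agree; otherwise a third reviewer decides."""
    if first == second:
        return first
    if third is None:
        raise ValueError("reviewers disagree: a third reviewer's decision is required")
    return third
```

Integer arithmetic is used for the 10% ceiling so that list sizes such as 30 or 70 do not pick up an extra title through floating-point rounding.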

Inclusion criteria were: (i) tests were intended as self-administered tests of visual acuity that could be completed without the support of a professional; (ii) tests provided some feedback on performance; (iii) tests were hosted online or as a smartphone application; and (iv) tests were freely available or low cost, with an upper limit of £5, chosen to select tools that are readily available and within the average price range for mobile apps.48 Tests designed for use by professionals were excluded.
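The four inclusion criteria and the exclusion of professional tools can be expressed as a single predicate. The record fields below are hypothetical names chosen for illustration; only the criteria themselves and the £5 price limit come from the text.

```python
from dataclasses import dataclass

PRICE_LIMIT_GBP = 5.00  # "freely available or low cost" upper limit


@dataclass
class CandidateTool:
    name: str
    self_administered: bool   # (i) completed without a professional's support
    gives_feedback: bool      # (ii) provides some feedback on performance
    platform: str             # (iii) "web" or "app"
    price_gbp: float          # (iv) 0.0 for free tools
    for_professionals: bool   # excluded if designed for professional use


def is_eligible(tool: CandidateTool) -> bool:
    """True only if every inclusion criterion holds and the tool is not a professional one."""
    return (
        tool.self_administered
        and tool.gives_feedback
        and tool.platform in {"web", "app"}
        and tool.price_gbp <= PRICE_LIMIT_GBP
        and not tool.for_professionals
    )
```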

Survey design

A survey was devised to obtain information about the design, availability, development and validity of the tools. The survey was adapted, with permission from the author, from a survey of clinical cognitive assessments developed by Snyder et al.49 (see Supplementary Table 1). Survey data were collected via an internet-based questionnaire over a 3-month period. A contact email was identified for each tool (where available), and an email was sent to the developer that contained a link to the online survey, explained the purpose of the survey and invited the developer to respond. Developers were sent reminder emails at fortnightly intervals until a response was received or until 10 weeks had passed. For tools for which no response was received, or no contact could be identified, supplementary searches were conducted on Google (to identify grey literature), Google Scholar and Medline (to identify published/peer-reviewed articles). The search terms were “(name of developer, if available)” AND “(name of the tool)”, and the first 100 results (sorted by relevance) were used. Titles and webpages were reviewed for relevance, and relevant materials were downloaded and saved. Data were extracted from these materials using a data extraction form based on the questions asked in the survey.

Regulatory approvals

To our knowledge, none of the tools was registered with a health regulatory authority. The Easee tool was reported to be certified as a CE Class I medical device, the lowest risk category of medical devices. Although self-certified CE marking is an indicator of safety and performance, it does not certify clinical efficacy.

Acknowledgements

This work was supported by the SENSE-Cog project. This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 668648. PD is supported by the NIHR Manchester Biomedical Research Centre.

Author contributions

W.K.Y. conducted an update of the searches and wrote the manuscript including the introduction, methods, results and discussion. P.D. and A.P. wrote the review protocol including the search strategy and methodology, and had significant input in writing the introduction, methods, results and discussion sections. A.P.C., A.P. and M.N. conducted the initial searches. T.A. and C.D. contributed to the development of the manuscript and acted as expert consultants on the ophthalmological aspects of the review. I.L. and P.D. are principal investigators for the SENSE-Cog project, and oversaw the development of the review.

Data availability

The authors declare that the data supporting the findings of this study are available within the paper and its supplementary information files.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

11/26/2019

An amendment to this paper has been published and can be accessed via a link at the top of the paper.

Supplementary information

Supplementary Information accompanies the paper on the npj Digital Medicine website (10.1038/s41746-019-0154-5).

References

- 1. Flaxman Seth R, Bourne Rupert R A, Resnikoff Serge, Ackland Peter, Braithwaite Tasanee, Cicinelli Maria V, Das Aditi, Jonas Jost B, Keeffe Jill, Kempen John H, Leasher Janet, Limburg Hans, Naidoo Kovin, Pesudovs Konrad, Silvester Alex, Stevens Gretchen A, Tahhan Nina, Wong Tien Y, Taylor Hugh R, et al. Global causes of blindness and distance vision impairment 1990–2020: a systematic review and meta-analysis. The Lancet Global Health. 2017;5(12):e1221–e1234. doi: 10.1016/S2214-109X(17)30393-5.

- 2. Tournier Marie, Moride Yola, Ducruet Thierry, Moshyk Andriy, Rochon Sophie. Depression and mortality in the visually-impaired, community-dwelling, elderly population of Quebec. Acta Ophthalmologica. 2008;86(2):196–201. doi: 10.1111/j.1600-0420.2007.01024.x.

- 3. Gopinath Bamini, McMahon Catherine M., Burlutsky George, Mitchell Paul. Hearing and vision impairment and the 5-year incidence of falls in older adults. Age and Ageing. 2016;45(3):409–414. doi: 10.1093/ageing/afw022.

- 4. Seland Johan H., Vingerling Johannes R., Augood Cristina A., Bentham Graham, Chakravarthy Usha, de Jong Paulus T. V. M., Rahu Mati, Soubrane Gisele, Tomazzoli Laura, Topouzis Fotis, Fletcher Astrid E. Visual Impairment and quality of life in the Older European Population, the EUREYE study. Acta Ophthalmologica. 2011;89(7):608–613. doi: 10.1111/j.1755-3768.2009.01794.x.

- 5. Rogers M. A. M., Langa K. M. Untreated Poor Vision: A Contributing Factor to Late-Life Dementia. American Journal of Epidemiology. 2010;171(6):728–735. doi: 10.1093/aje/kwp453.

- 6. Carrière Isabelle, Delcourt Cécile, Daien Vincent, Pérès Karine, Féart Catherine, Berr Claudine, Ancelin Marie-Laure, Ritchie Karen. A prospective study of the bi-directional association between vision loss and depression in the elderly. Journal of Affective Disorders. 2013;151(1):164–170. doi: 10.1016/j.jad.2013.05.071.

- 7. Resnikoff Serge. Global magnitude of visual impairment caused by uncorrected refractive errors in 2004. Bulletin of the World Health Organization. 2008;86(1):63–70. doi: 10.2471/BLT.07.041210.

- 8. Ferreyra Henry A. Management of Autoimmune Retinopathies With Immunosuppression. Archives of Ophthalmology. 2009;127(4):390. doi: 10.1001/archophthalmol.2009.24.

- 9. World Health Organization. Draft action plan for the prevention of avoidable blindness and visual impairment 2014–2019. Towards universal eye health: a global action plan 2014–2019. Sixty-Sixth World Health Assembly (2013).

- 10. Bourne Rupert R A, Stevens Gretchen A, White Richard A, Smith Jennifer L, Flaxman Seth R, Price Holly, Jonas Jost B, Keeffe Jill, Leasher Janet, Naidoo Kovin, Pesudovs Konrad, Resnikoff Serge, Taylor Hugh R. Causes of vision loss worldwide, 1990–2010: a systematic analysis. The Lancet Global Health. 2013;1(6):e339–e349. doi: 10.1016/S2214-109X(13)70113-X.

- 11. Naidoo Kovin S., Leasher Janet, Bourne Rupert R., Flaxman Seth R., Jonas Jost B., Keeffe Jill, Limburg Hans, Pesudovs Konrad, Price Holly, White Richard A., Wong Tien Y., Taylor Hugh R., Resnikoff Serge. Global Vision Impairment and Blindness Due to Uncorrected Refractive Error, 1990–2010. Optometry and Vision Science. 2016;93(3):227–234. doi: 10.1097/OPX.0000000000000796.

- 12. Sherwin Justin C, Lewallen Susan, Courtright Paul. Blindness and visual impairment due to uncorrected refractive error in sub-Saharan Africa: review of recent population-based studies. British Journal of Ophthalmology. 2012;96(7):927–930. doi: 10.1136/bjophthalmol-2011-300426.

- 13. Sabanayagam Charumathi, Cheng Ching-Yu. Global causes of vision loss in 2015: are we on track to achieve the Vision 2020 target? The Lancet Global Health. 2017;5(12):e1164–e1165. doi: 10.1016/S2214-109X(17)30412-6.

- 14. Naidoo, K. & Ravilla, D. Delivering refractive error services: primary eye care centres and outreach. Community Eye Health J. 20, 42–44 (2007).

- 15. Khandekar, R., Mohammed, A. J. & Al Raisi, A. Compliance of spectacle wear and its determinants among schoolchildren of Dhakhiliya region of Oman: a descriptive study. Sultan Qaboos Univ. Med. J. 4, 1–2 (2002).

- 16. Vitale Susan, Cotch Mary Frances, Sperduto Robert D. Prevalence of Visual Impairment in the United States. JAMA. 2006;295(18):2158. doi: 10.1001/jama.295.18.2158.

- 17. Varma Rohit, Wang Michelle Y., Ying-Lai Mei, Donofrio Jill, Azen Stanley P. The Prevalence and Risk Indicators of Uncorrected Refractive Error and Unmet Refractive Need in Latinos: The Los Angeles Latino Eye Study. Investigative Ophthalmology & Visual Science. 2008;49(12):5264. doi: 10.1167/iovs.08-1814.

- 18. Thiagalingam S. Factors associated with undercorrected refractive errors in an older population: the Blue Mountains Eye Study. British Journal of Ophthalmology. 2002;86(9):1041–1045. doi: 10.1136/bjo.86.9.1041.

- 19. Dawes Piers, Dickinson Christine, Emsley Richard, Bishop Paul N., Cruickshanks Karen J., Edmondson-Jones Mark, McCormack Abby, Fortnum Heather, Moore David R., Norman Paul, Munro Kevin. Vision impairment and dual sensory problems in middle age. Ophthalmic and Physiological Optics. 2014;34(4):479–488. doi: 10.1111/opo.12138.

- 20. Naël Virginie, Moreau Gwendoline, Monfermé Solène, Cougnard-Grégoire Audrey, Scherlen Anne-Catherine, Arleo Angelo, Korobelnik Jean-François, Delcourt Cécile, Helmer Catherine. Prevalence and Associated Factors of Uncorrected Refractive Error in Older Adults in a Population-Based Study in France. JAMA Ophthalmology. 2019;137(1):3. doi: 10.1001/jamaophthalmol.2018.4229.

- 21. IQVIA Institute. The Growing Value of Digital Health in the United Kingdom. 1–40 (IQVIA Institute, Parsippany, NJ, 2017).

- 22. GSMA Intelligence. Global Mobile Trends 2017. 1–105 (GSMA Intelligence, London, 2017).

- 23. Hamilton A.D., Brady R.R.W. Medical professional involvement in smartphone ‘apps’ in dermatology. British Journal of Dermatology. 2012;167(1):220–221. doi: 10.1111/j.1365-2133.2012.10844.x.

- 24. Rosser Benjamin A, Eccleston Christopher. Smartphone applications for pain management. Journal of Telemedicine and Telecare. 2011;17(6):308–312. doi: 10.1258/jtt.2011.101102.

- 25. Rodrigues M. A., Visvanathan A., Murchison J. T., Brady R. R. Radiology smartphone applications; current provision and cautions. Insights into Imaging. 2013;4(5):555–562. doi: 10.1007/s13244-013-0274-4.

- 26. Powell Adam C., Landman Adam B., Bates David W. In Search of a Few Good Apps. JAMA. 2014;311(18):1851. doi: 10.1001/jama.2014.2564.

- 27. Medmont. AT20P Acuity Tester. https://www.medmont.com.au/products/at20p-visual-acutiy-tester/

- 28. Thomson Software Solutions. Vision Toolbox. http://www.thomson-software-solutions.com/vision-toolbox/

- 29. Lennie, P. & Van Hemel, S. B. Tests of Visual Functions. In Visual Impairments: Determining Eligibility for Social Security Benefits. 10.17226/10320 (2002).

- 30. World Health Organization. International statistical classification of diseases and related health problems (11th Revision). (World Health Organization, Geneva, 2018).

- 31. Holladay Jack T. Visual acuity measurements. Journal of Cataract & Refractive Surgery. 2004;30(2):287–290. doi: 10.1016/j.jcrs.2004.01.014.

- 32. Jan-Bond, C. et al. REST—an innovative rapid eye screening test. J. Mob. Technol. Med. 4, 20–25 (2015).

- 33. Sastry, V., Shet, S., Jivangi, V., Katti, V. & Kanakpur, S. FP1078: RAPID EYE SCREENING TEST (REST)—MobiApp Based Visual Screening in Outreach Camps. In 76th Annual Conference of the All India Ophthalmological Society, 1–12 (2018).

- 34. Huckvale, K., Adomaviciute, S., Prieto, J. T., Leow, M. K. S. & Car, J. Smartphone apps for calculating insulin dose: a systematic assessment. BMC Med. 10.1186/s12916-015-0314-7 (2015).

- 35. Wolf Joel A., Moreau Jacqueline F., Akilov Oleg, Patton Timothy, English Joseph C., Ho Jonhan, Ferris Laura K. Diagnostic Inaccuracy of Smartphone Applications for Melanoma Detection. JAMA Dermatology. 2013;149(4):422. doi: 10.1001/jamadermatol.2013.2382.

- 36. Steinhubl Steven R., Muse Evan D., Topol Eric J. Can Mobile Health Technologies Transform Health Care? JAMA. 2013;310(22):2395. doi: 10.1001/jama.2013.281078.

- 37. Larson Richard S. A Path to Better-Quality mHealth Apps. JMIR mHealth and uHealth. 2018;6(7):e10414. doi: 10.2196/10414.

- 38. Palmer Sean. Swipe Right for Health Care: How the State May Decide the Future of the mHealth App Industry in the Wake of FDA Uncertainty. Journal of Legal Medicine. 2017;37(1-2):249–263. doi: 10.1080/01947648.2017.1303289.

- 39. Van Norman Gail A. Drugs and Devices. JACC: Basic to Translational Science. 2016;1(5):399–412. doi: 10.1016/j.jacbts.2016.06.003.

- 40. U.S. Food and Drug Administration. Mobile Medical Applications: Guidance for Industry and Food and Drug Administration Staff. 1, 1–44 (U.S. Food and Drug Administration, Maryland, USA, 2015).

- 41. NHS. NHS Apps Library. https://apps.beta.nhs.uk/ (2017).

- 42. CMS. Clinical Laboratory Improvement Amendments. https://www.cms.gov/Regulations-and-Guidance/Legislation/CLIA/index.html (1988).

- 43. Yost Judith A. Laboratory Inspection: The View From CMS. Laboratory Medicine. 2003;34(2):136–140. doi: 10.1309/UV7DPF0U581Y2M8H.

- 44. Cook Victoria E., Ellis Anne K., Hildebrand Kyla J. Mobile health applications in clinical practice: pearls, pitfalls, and key considerations. Annals of Allergy, Asthma & Immunology. 2016;117(2):143–149. doi: 10.1016/j.anai.2016.01.012.

- 45. Rawstorn Jonathan C, Gant Nicholas, Meads Andrew, Warren Ian, Maddison Ralph. Remotely Delivered Exercise-Based Cardiac Rehabilitation: Design and Content Development of a Novel mHealth Platform. JMIR mHealth and uHealth. 2016;4(2):e57. doi: 10.2196/mhealth.5501.

- 46. Parmanto, B., Pramana, G., Yu, D. X., Fairman, A. D. & Dicianno, B. E. Development of mHealth system for supporting self-management and remote consultation of skincare. BMC Med. Inform. Decis. Mak. 10.1186/s12911-015-0237-4 (2015).

- 47. Sreelatha OK, Ramesh SV, Jose J, Devassy M, Srinivasan K. Virtually controlled computerised visual acuity screening in a multilingual Indian population. Rural Remote Health. 2014;14:2908.

- 48. Statista. Apple App Store: Average app price 2018. (Statista, 2018). https://www.statista.com/statistics/267346/average-apple-app-store-price-app.

- 49. Snyder Peter J., Jackson Colleen E., Petersen Ronald C., Khachaturian Ara S., Kaye Jeffrey, Albert Marilyn S., Weintraub Sandra. Assessment of cognition in mild cognitive impairment: A comparative study. Alzheimer's & Dementia. 2011;7(3):338–355. doi: 10.1016/j.jalz.2011.03.009.


Data Availability Statement

The authors declare that the data supporting the findings of this study are available within the paper and its supplementary information files.