Abstract

Background

Many surgical specialties are increasingly looking towards robot-assisted surgery to improve patient outcomes. Surgeons conducting robot-assisted operations require a real-time view of the surgical field. Ophthalmic robots could enable novel vitreoretinal treatments, such as cannulating retinal vessels or even gene delivery to targeted retinal cells. This study investigates the feasibility of smartphone-delivered stereoscopic vision for microsurgical use.

Methods

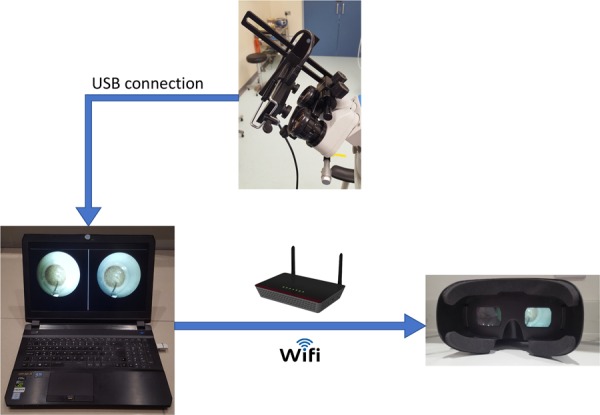

A stereo-camera, connected to a laptop, was used to capture the 3D view from a binocular surgical microscope. A Wi-Fi connection was used to live-stream the laptop display wirelessly onto the smartphone screen. Finally, a Virtual Reality (VR) headset, acting as a stereoscope, was used to house the smartphone. The headset wearer then fused the two images to achieve stereoscopic perception.

Results

Using smartphone-delivered 3D vision, the author successfully performed a simulated cataract extraction operation despite a time lag of 0.354 ± 0.038 s. To the author’s knowledge, this is the first simulated ophthalmic operation performed via smartphone-delivered stereoscopic vision.

Conclusions

Microscopic output in 3D with minimal time lag is readily achievable with smartphones and VR headsets. Uncoupling the surgeon from the operating microscope is required to achieve tele-presence, an essential step in tele-robotics. Where operating theatre space is a concern, head-mounted displays may be more convenient than 3D televisions. This 3D live-casting technique can be used in teaching and mentoring settings, where microsurgeries can be live-streamed stereoscopically onto smartphones via a local Wi-Fi network. When connected to the internet, microsurgeries can be broadcast live, and viewers worldwide can see the surgeon’s view through their own VR headsets.

Subject terms: Technology, Microscopy

Introduction

As technology advances, surgical specialties are increasingly looking towards robot-assisted surgery with the aims of increasing precision and dexterity, reducing surgical wound size and operating time, eliminating surgeon-related factors such as tremor and fatigue, and ultimately improving patient outcomes. In ophthalmology, microsurgical robots could enable novel vitreoretinal treatments, such as cannulating retinal vessels for targeted drug delivery to occluded vessels (thrombolysis) or intraocular tumours (chemotherapy), or even gene delivery to targeted retinal cells in hereditary diseases, because robots are not limited by the physiological tremor of the human hand [1]. Notable examples include the Da Vinci Robotic Surgical System (Intuitive Surgical), which has been used in simulated intraocular surgery [2] and in the first robotically assisted anterior segment operation [3]; the Preceyes Surgical System, which was used in the world’s first robot-assisted intraocular operation on a human in 2016; and the Intraocular Robotic Interventional and Surgical System (IRISS), developed at UCLA [4].

Surgeons who conduct robot-assisted operations require a real-time view of the surgical field. The Da Vinci system captures the view inside the surgical cavity using a stereoscopic camera carried by one of its laparoscopic arms. This view is transmitted in real time to dual screens in front of the operating surgeon’s eyes, similar to the display system in Virtual Reality (VR) headsets such as the Oculus Rift© and HTC Vive©, in which slightly different images are presented to the user’s two eyes, creating an impression of three dimensions (3D) through binocular disparity. Stereoscopic visualisation is arguably even more crucial in ophthalmic surgery, for example in identifying important structures such as the posterior capsule and the internal limiting membrane.

Surgery by remote control, or telesurgery, builds upon advances in robotics and has proven its usefulness over the past decade. Human telesurgery was first demonstrated by Marescaux et al. in 2001, when surgeons in New York successfully performed a laparoscopic cholecystectomy on a patient in Strasbourg, France, with a time-lag of 155 ms [5]. Telesurgery has the potential to deliver surgical expertise to patients anywhere in the world, provided that a low-latency network connection is available. This has important implications for expedition, battlefield and even space surgery. It may also be used to enhance surgical training, with the surgical robot acting as the ‘training wheels’ of a bicycle, relaying recorded movements from the trainer to the trainee to provide a safe, controlled and immersive tactile learning experience [1].

The aim of this study is to investigate whether it is possible for surgeons to receive real-time microscopic video transmission in 3D using widely available smartphones and VR headsets.

Materials and methods

An OVRVISION stereo-camera, connected via USB cable to a laptop, was mounted in front of a binocular surgical microscope to capture the stereoscopic view, as previously described by the author [6]. This produces a side-by-side view on the laptop screen, with the left and right halves originating from the left and right microscope eyepieces, respectively.
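To illustrate the side-by-side layout described above, the minimal Python sketch below splits one frame into a left-eye image and a right-eye image. It assumes the frame is a list of pixel rows of equal, even width, with the left half originating from the left eyepiece. The function name `split_side_by_side` is hypothetical and is not part of the OVRVISION software.

```python
from typing import List, Tuple

# A frame is a list of rows; each row is a list of pixel values (any type).
Frame = List[list]


def split_side_by_side(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a side-by-side stereo frame into (left_eye, right_eye) images.

    Hypothetical helper. Assumes every row has the same even width, with the
    left half from the left eyepiece and the right half from the right one.
    """
    if not frame:
        raise ValueError("frame is empty")
    width = len(frame[0])
    if width % 2 != 0 or any(len(row) != width for row in frame):
        raise ValueError("rows must share one even width")
    half = width // 2
    left = [row[:half] for row in frame]    # columns from the left eyepiece
    right = [row[half:] for row in frame]   # columns from the right eyepiece
    return left, right


if __name__ == "__main__":
    # Toy 2 x 4 frame: "L" pixels on the left half, "R" pixels on the right.
    toy = [["L1", "L2", "R1", "R2"],
           ["L3", "L4", "R3", "R4"]]
    left_eye, right_eye = split_side_by_side(toy)
    print(left_eye)   # [['L1', 'L2'], ['L3', 'L4']]
    print(right_eye)  # [['R1', 'R2'], ['R3', 'R4']]
```

In the headset itself no explicit split is needed: the smartphone shows the whole side-by-side frame and each lens of the VR headset presents one half to one eye. The sketch only makes explicit which half reaches which eye.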

iDisplay (SHAPE, Germany), a screen-mirroring program, was used to ‘mirror’ the laptop display onto a smartphone via a Wi-Fi connection. The smartphone was housed in a VR headset acting as a stereoscope, and the headset wearer could then fuse the two slightly dissimilar images to achieve stereoscopic perception (Fig. 1).

Fig. 1.

Flowchart of image transmission from stereo-camera to VR headset via a connecting laptop

Using smartphone-delivered 3D vision, the author performed three simulated phacoemulsification surgeries on ‘Phaco Eyes’ (Phillips Studio, Bristol) at the Royal College of Ophthalmologists.

Results

Relying on the smartphone-delivered microscopic view, the author successfully performed three simulated phacoemulsification surgeries, each taking around 15 min (Video 1). Despite a small yet noticeable time-lag, this confirmed the feasibility of smartphone-delivered stereoscopic vision for microsurgery. Because the VR headset was strapped to the surgeon’s head, the ‘view down the microscope’ was delivered stereoscopically and continuously, unimpeded by head or neck movements.

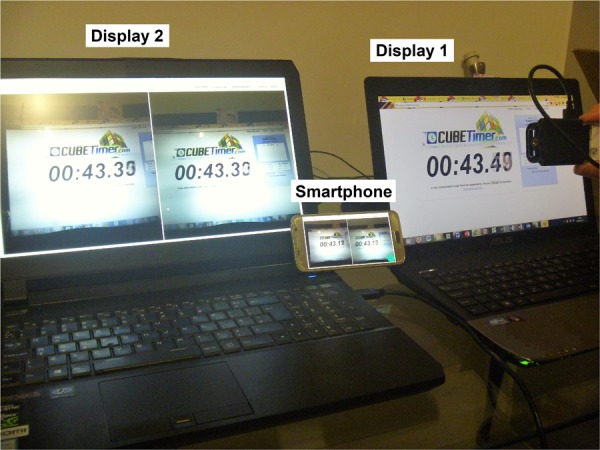

To measure the delay between real time and the smartphone display, multiple photographs were taken, each simultaneously capturing three screens: a first laptop running an online ‘stopwatch’ program (Display 1); a second laptop showing the side-by-side view captured by the USB-connected stereo-camera (Display 2); and the smartphone relaying the second laptop’s screen via iDisplay, the screen-mirroring program (Smartphone) (Fig. 2). Statistical analysis of the time differences between the three displays in these photographs showed a delay of 0.354 ± 0.038 s between the stopwatch and the smartphone.
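The report does not state which statistic underlies “± 0.038”; mean ± sample standard deviation is a common convention. Assuming that convention, the minimal Python sketch below shows how per-photograph stopwatch readings on the three screens could be reduced to a camera leg (Display 1 to Display 2), a mirroring leg (Display 2 to Smartphone) and an end-to-end delay. The `Photo` class and function names are hypothetical, and any readings passed to them here are illustrative, not the study’s data.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List, Tuple


@dataclass
class Photo:
    """Stopwatch readings (seconds) visible in one photograph (hypothetical)."""
    display1: float    # live stopwatch on the first laptop (real time)
    display2: float    # stopwatch as shown on the second laptop via the stereo-camera
    smartphone: float  # second laptop's screen as mirrored onto the smartphone


def summarise(delays: List[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of a list of delays."""
    return mean(delays), stdev(delays)


def latency_report(photos: List[Photo]) -> Dict[str, Tuple[float, float]]:
    """Summarise each leg of the delay; needs at least two photographs."""
    if len(photos) < 2:
        raise ValueError("at least two photographs are needed for a sample SD")
    camera_leg = [p.display1 - p.display2 for p in photos]
    mirror_leg = [p.display2 - p.smartphone for p in photos]
    end_to_end = [p.display1 - p.smartphone for p in photos]
    return {
        "camera_to_laptop": summarise(camera_leg),
        "laptop_to_smartphone": summarise(mirror_leg),
        "end_to_end": summarise(end_to_end),
    }


if __name__ == "__main__":
    # Illustrative readings only; these are NOT measurements from the study.
    photos = [Photo(12.40, 12.23, 12.05), Photo(18.91, 18.73, 18.52)]
    for leg, (m, sd) in latency_report(photos).items():
        print(f"{leg}: {m:.3f} ± {sd:.3f} s")
```

Because each photograph freezes all three screens at one instant, each difference is a direct delay sample; the sample standard deviation then describes the frame-to-frame jitter of the pipeline.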

Fig. 2.

Time delay from real-time (Display 1) to the laptop side-by-side display (Display 2), to the relayed smartphone display (Smartphone)

Discussion

To the author’s knowledge, this is the first time a simulated ophthalmic operation has been performed via smartphone-delivered stereoscopic vision. The study also demonstrated that microscopic visual output in 3D with minimal time-lag is readily achievable using smartphones and VR headsets.

Uncoupling the operating surgeon from the microscope, as demonstrated in this study, is required to achieve tele-presence, which is essential in tele-robotics. Decoupling the surgeon from the operating microscope has the additional benefit of greater flexibility in patient positioning, as previously suggested [7]. Using the Alcon NGENUITY® 3D Visualization System, in which the live feed from the surgical microscope is captured by a stereoscopic high-definition camera and displayed in 3D on a 4K television screen, Skinner and Riemann performed a pars plana vitrectomy and retinal detachment repair on a patient with severe thoracic kyphosis who was operated upon in the Trendelenburg position [7].

A few difficulties were encountered during the study. The time-lag of around 350 ms gave an uncomfortable sense of dissociation between the observed and actual instrument movements, leading to a loss of hand-eye coordination. This is consistent with the findings of Perez et al. on the dV-Trainer robotic surgical simulator: a latency of 100–200 ms had no clear effect, performance deterioration became noticeable from 300 ms onwards, and a delay of ≥500 ms led to a significantly increased error rate [8]. Given how little inadvertent movement is needed to cause complications in ophthalmic surgery, the maximum time-lag that can be considered safe there may be considerably lower. For this reason, trialling this setup in human eye surgery was not considered. The laptop-to-smartphone component of the time-lag could be reduced from 0.184 ± 0.030 s over Wi-Fi to 0.065 ± 0.032 s by using a USB connection instead. However, connecting a cable to the VR headset negates the freedom of movement it offers in the first place.
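The figures above can be combined into a rough latency budget. The Python sketch below assumes, as a simplification not stated in the report, that the end-to-end delay is the sum of a camera-to-laptop leg and a laptop-to-smartphone leg. Under that assumption the camera leg is about 354 - 184 = 170 ms, and a USB link would give an estimated 170 + 65 = 235 ms end to end. This is an estimate, not a measured value, and only the means are combined. The bands in `perez_band` follow the summary of Perez et al. [8] above, which was obtained on a robotic simulator rather than in ophthalmic surgery; all function names are hypothetical.

```python
# Mean delays reported in this study, in milliseconds.
END_TO_END_WIFI_MS = 354  # stopwatch -> smartphone, Wi-Fi mirroring
WIFI_LEG_MS = 184         # laptop -> smartphone over Wi-Fi
USB_LEG_MS = 65           # laptop -> smartphone over USB


def estimated_usb_total(end_to_end_wifi: int, wifi_leg: int, usb_leg: int) -> int:
    """Estimate end-to-end delay if the Wi-Fi leg is swapped for USB.

    Assumes the legs simply add, so the camera-to-laptop leg is whatever
    remains after subtracting the Wi-Fi leg from the end-to-end delay.
    """
    camera_leg = end_to_end_wifi - wifi_leg
    return camera_leg + usb_leg


def perez_band(latency_ms: float) -> str:
    """Label a delay using the latency bands summarised from Perez et al. [8]."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms <= 200:
        return "no clear effect"
    if latency_ms < 300:
        # 200-300 ms is not covered by the bands quoted above.
        return "between reported bands"
    if latency_ms < 500:
        return "noticeable deterioration"
    return "significantly increased errors"


if __name__ == "__main__":
    usb_total = estimated_usb_total(END_TO_END_WIFI_MS, WIFI_LEG_MS, USB_LEG_MS)
    print(END_TO_END_WIFI_MS, perez_band(END_TO_END_WIFI_MS))  # 354 noticeable deterioration
    print(usb_total, perez_band(usb_total))                    # 235 between reported bands
```

Integers in milliseconds are used so that the subtraction and addition are exact. Even the USB estimate would not reach the sub-200 ms range, and for the reasons given above the safe threshold in ophthalmic surgery may be lower still.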

Wearing a VR headset also impeded peripheral vision, which restricted situational awareness and made simple tasks such as changing surgical instruments more challenging. This problem could potentially be resolved by using augmented reality technology, so that both the microscopic view and the surrounding environment become visible to the surgeon. Lastly, depending on how well the VR headset fits on the user’s face, it may be pulled downwards by the weight of the smartphone, leading to a loss of visual axis alignment and the need to readjust the headset position, which would be undesirable in a sterile operating theatre.

Compared with commercially available 3D visualization systems such as the Alcon NGENUITY® 3D Visualization System, this setup suffers from a clinically unacceptable amount of latency. A minor degree of image degradation was also noted when the view of a 1951 USAF Resolution Target (an optical resolution chart standardized by the U.S. Air Force) through the microscope was compared with the view from the smartphone via the VR headset; the resolution of the latter setup was determined to be 8.0 lp/mm, equivalent to logMAR 0.025. The overall cost of the stereo-camera, laptop, smartphone and VR headset totalled US$2500.
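On the 1951 USAF target, the resolution in line pairs per millimetre follows from the group and element numbers printed on the chart as 2^(group + (element - 1)/6). The minimal Python sketch below applies that standard relation in both directions. By it, the reported 8.0 lp/mm corresponds to group 3, element 1. The sketch does not attempt the conversion to logMAR, which would depend on magnification and viewing geometry not given in this report. The function names are hypothetical.

```python
import math
from typing import Tuple


def usaf1951_lp_per_mm(group: int, element: int) -> float:
    """Resolution (line pairs/mm) of a given group and element on a 1951 USAF target."""
    if not 1 <= element <= 6:
        raise ValueError("element must be between 1 and 6")
    return 2 ** (group + (element - 1) / 6)


def nearest_element(lp_per_mm: float) -> Tuple[int, int]:
    """Return the (group, element) whose resolution is closest on a log scale."""
    if lp_per_mm <= 0:
        raise ValueError("resolution must be positive")
    # log2(resolution) = group + (element - 1) / 6, so count steps of 1/6.
    steps = round(math.log2(lp_per_mm) * 6)
    group = steps // 6        # floor division also handles negative groups
    element = steps % 6 + 1
    return group, element


if __name__ == "__main__":
    print(usaf1951_lp_per_mm(3, 1))  # 8.0
    print(nearest_element(8.0))      # (3, 1)
```

Each element step increases resolution by a factor of 2^(1/6), about 12 %, so a one-element difference between the microscope and smartphone views corresponds to a modest but measurable loss of resolution.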

This 3D live-casting technique can be used in teaching and mentoring settings, where microsurgeries can be live-streamed stereoscopically via smartphones within a local Wi-Fi network. When connected to the internet, microsurgical operations can be broadcast live to viewers around the globe, who can see the operating surgeon’s view simply using their smartphones and VR headsets.

Summary

What was known before

Ophthalmic microsurgical robots can lead to novel vitreoretinal treatments, such as targeted drug delivery to retinal vessels to treat occlusion or intraocular tumour, or even gene delivery to targeted retinal cells for genetic disorders.

Surgery by remote control, or telesurgery, builds upon advances in robotics, and the first ‘remote-controlled’ laparoscopic cholecystectomy was performed in 2001.

What this study adds

This study presented a low-cost solution for uncoupling the operating surgeon from the microscope and demonstrated its feasibility in simulated cataract extraction surgery.

This was the first time a simulated ophthalmic operation was performed via smartphone-delivered 3D vision.


Acknowledgements

The author would like to thank Mr Chris Blyth and the Ophthalmology Department at Royal Gwent Hospital for providing the surgical instruments and invaluable advice, Dr Yvonne Yeung for her assistance during the procedure and Miss Doreen Agyeman for her help in arranging the use of the wet lab and providing the Phaco Eyes.

Compliance with ethical standards

Conflict of interest

I declare that the manuscript is original research, has not been previously published and has not been submitted for publication elsewhere while under consideration. I declare financial support in the form of Travel Award from the Worshipful Company of Spectacle Makers (WCSM) Education Trust (Registered Charity no. 1135045).

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version of this article (10.1038/s41433-019-0356-8) contains supplementary material, which is available to authorized users.

References

1. Channa R, Iordachita I, Handa JT. Robotic vitreoretinal surgery. Retina. 2017;37:1220–8. doi: 10.1097/IAE.0000000000001398.

2. Bourla DH, Hubschman JP, Culjat M, Tsirbas A, Gupta A, Schwartz SD. Feasibility study of intraocular robotic surgery with the da Vinci surgical system. Retina. 2008;28:154–8. doi: 10.1097/IAE.0b013e318068de46.

3. Bourcier T, Becmeur PH, Mutter D. Robotically assisted amniotic membrane transplant surgery. JAMA Ophthalmol. 2015;133:213–4. doi: 10.1001/jamaophthalmol.2014.4453.

4. Rahimy E, Wilson J, Tsao TC, Schwartz S, Hubschman JP. Robot-assisted intraocular surgery: development of the IRISS and feasibility studies in an animal model. Eye (Lond). 2013;27:972–8. doi: 10.1038/eye.2013.105.

5. Marescaux J, Leroy J, Rubino F, Smith M, Vix M, Simone M, et al. Transcontinental robot-assisted remote telesurgery: feasibility and potential applications. Ann Surg. 2002;235:487–92. doi: 10.1097/00000658-200204000-00005.

6. Ho DK. Stereoscopic microsurgical videography of phacoemulsification surgery. JAMA Ophthalmol. 2018;136:432. doi: 10.1001/jamaophthalmol.2018.0089.

7. Skinner CC, Riemann CD. “Heads Up” digitally assisted surgical viewing for retinal detachment repair in a patient with severe kyphosis. Retin Cases Brief Rep. 2018;12:257–9. doi: 10.1097/ICB.0000000000000486.

8. Perez M, Xu S, Chauhan S, Tanaka A, Simpson K, Abdul-Muhsin H, et al. Impact of delay on telesurgical performance: study on the robotic simulator dV-Trainer. Int J Comput Assist Radiol Surg. 2016;11:581–7. doi: 10.1007/s11548-015-1306-y.
