Abstract

The biomedical literature provides a rich source of knowledge such as protein-protein interactions (PPIs), drug-drug interactions (DDIs) and chemical-protein interactions (CPIs). Biomedical relation extraction aims to automatically extract such relations from biomedical text to support biomedical research. State-of-the-art methods for biomedical relation extraction are primarily based on supervised machine learning and therefore depend on sufficient labeled data. However, creating large sets of training data is prohibitively expensive and labor-intensive, especially in biomedicine, where domain knowledge is required. In contrast, a large amount of unlabeled biomedical text is available in PubMed. Hence, computational methods capable of employing unlabeled data to reduce the burden of manual annotation are of particular interest in biomedical relation extraction. We present a novel semi-supervised approach based on the variational autoencoder (VAE) for biomedical relation extraction. Our model consists of three parts: a classifier, an encoder and a decoder. The classifier is implemented using multi-layer convolutional neural networks (CNNs), and the encoder and decoder are implemented using bidirectional long short-term memory networks (Bi-LSTMs) and CNNs, respectively. The semi-supervised mechanism allows our model to learn features from both the labeled and unlabeled data. We evaluate our method on multiple public PPI, DDI and CPI corpora. Experimental results show that our method effectively exploits the unlabeled data to improve the performance and reduce the dependence on labeled data. To the best of our knowledge, this is the first semi-supervised VAE-based method for (biomedical) relation extraction. Our results suggest that exploiting such unlabeled data can substantially improve performance in various biomedical relation extraction tasks, especially when only limited labeled data (e.g. 2,000 samples or fewer) are available.

Keywords: Biomedical Literature, Relation extraction, Semi-supervised learning, Variational autoencoder

1. Introduction

Currently there are over 29 million articles in PubMed, and each year the biomedical literature grows by more than one million articles [1, 2]. As a result, a vast amount of valuable knowledge about proteins, drugs, diseases and chemicals, critical for various biomedical research studies, is “locked” in the unstructured free text [3, 4]. With the rapid growth, it is increasingly challenging to manually curate information from biomedical literature, such as protein-protein interactions (PPIs), drug-drug interactions (DDIs) and chemical-protein interactions (CPIs). Biomedical information extraction is a task to automatically detect biomedical concepts and their relations through advanced natural language processing (NLP) and machine learning techniques.

Over the past decade, a number of hand-annotated datasets have been created for biomedical relation extraction such as the various PPI corpora [5] and DDI 2013 corpus [6]. Based on these public corpora, a number of methods [7–10] have been attempted. For example, Airola, et al. (2008) proposed an all path kernel approach to extract PPIs based on the lexical and syntactic features from the dependency syntactic graph. More recently, models based on deep neural networks such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown promising results in various tasks [11–13]. Peng et al. [12] proposed an ensemble method to extract CPIs, which combines support vector machines (SVM), CNNs and RNNs. For the SVM, they chose the surrounding word features, bag-of-words features, distance features, keywords features and shortest-path features. For the CNN-based model, the input is the sentence sequence and shortest dependency paths (SDPs). For the RNN-based model, the input is the sentence sequence only. They concatenated the word, Part-of-Speech (POS), position and chunk embeddings as the embedding input representation for both the CNN and RNN models. The final results were then determined by majority voting based on SVM, CNNs and RNNs. Zhang et al. [13] also proposed a hybrid model based on RNNs and CNNs to classify PPIs and DDIs. The inputs of the hybrid model are sentence sequences and SDPs generated from the dependency graph. RNNs and CNNs models were employed to learn the feature representation from sentence sequences and SDPs, respectively.

Most of the aforementioned high-performing systems in biomedical relation extraction to date are based on supervised machine-learning approaches, which are known to depend on manually labeled data. Although data exist for a few relation types such as PPIs and DDIs, there is a lack of large-scale training data for many other critical relationships in the biomedical domain, including gene-disease, drug-disease, and drug-mutation, due to the prohibitive expense of manual annotation [14]. In response, alternative methods such as distant supervision have been proposed [15, 16], which automatically create training data based on existing curated biological databases. However, since the databases are generally incomplete and natural language is highly rich and diverse, such automatically created labeled data are always noisy [17].

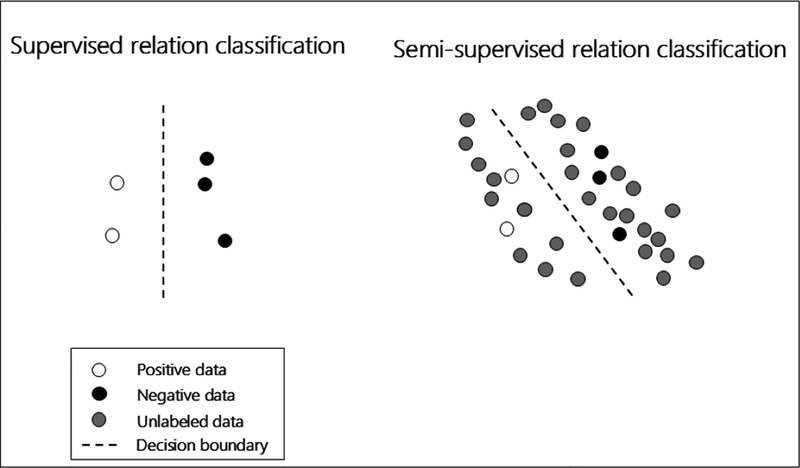

In this paper, we introduce a novel semi-supervised variational autoencoder (VAE)-based method for biomedical relation extraction. In contrast to supervised learning, the semi-supervised method learns discriminative features from both labeled and unlabeled data. Fig. 1 represents an illustrative example of the difference between supervised and semi-supervised learning methods. It is difficult to predict the decision boundary accurately based on a small number of labeled instances. However, if we integrate the unlabeled instances – generally distributed according to a mixture of individual-class distributions – with the labeled ones, we may considerably improve the learning accuracy. Given the great abundance of freely available texts in PubMed, it is particularly valuable to explore semi-supervised-based methods for improving biomedical relation extraction.

Fig. 1. An illustrative example of a semi-supervised learning method.

The white, black and gray circles represent positive, negative and unlabeled relation instances, respectively. The dashed line represents the decision boundary decided by the classifier.

Motivated by the recent success of semi-supervised VAE methods in image classification [18, 19], text modeling [20] and text classification tasks [21], in this work we investigate their feasibility for relation extraction, which differs significantly from the other tasks. To the best of our knowledge, this is the first VAE-based method for (biomedical) relation extraction. To demonstrate its robustness, we validate our method on multiple biomedical relation types: PPI, DDI and CPI extraction.

The rest of this paper is organized as follows. We introduce and describe the biomedical relation extraction task, related datasets, and our proposed method in Section 2. Then, we present the experimental results in Section 3. Finally, we discuss and conclude in Section 4.

2. Materials and methods

2.1. Biomedical Relation Extraction

Biomedical relation extraction is generally approached as the task of classifying whether a specified semantic relation holds between two biomedical entities within a sentence or document. According to the number of semantic relation classes, biomedical relation extraction can be further categorized into binary vs. multi-class relation extraction.

In this paper, we focus on PPI, DDI and CPI extraction. PPI is a binary relation extraction task, whereas DDI and CPI are multi-class relation extraction tasks. We show some examples as follows.

PPI extraction example: These results suggest that profilin may be involved in the pathogenesis of glomerulonephritis by reorganizing the actin cytoskeleton.

DDI extraction example: The concomitant administration of gemfibrozil with Targretin capsules is not recommended.

CPI extraction example: Compound C diminished AMPK phosphorylation and enzymatic activity, resulting in the reduced phosphorylation of its target acetyl CoA carboxylase.

In the case of PPIs, a system only needs to identify whether the candidate entity pair has a semantic relation or not. For DDI and CPI, a system must not only detect the semantic relation between two candidate entities but also classify it into the correct type. For example, the DDI extraction task requires a system to distinguish five different DDI types: Advice, Effect, Mechanism, Int and Negative [6, 22]. Similarly, the CPI extraction task includes six specific types: Activator, Inhibitor, Agonist, Antagonist, Substrate and Negative [23].

2.2. Datasets

For PPI, we used the BioInfer dataset [24]. For DDI, we used the DDI extraction 2013 corpus [6, 22]. For CPI, we used the recent ChemProt corpus [25], which served as the benchmarking data in the BioCreative VI challenge task. The detailed statistics of the datasets are listed in Tables 1, 2 and 3, respectively.

Table 1.

The statistics of the PPI corpus

| Dataset | Sentences | Positive | Negative | Total |
|---|---|---|---|---|
| BioInfer | 1,100 | 2,534 | 7,132 | 9,666 |

Table 2.

The statistics of the DDI corpus

| Dataset | Advice | Effect | Mechanism | Int | Negative | Total |
|---|---|---|---|---|---|---|
| Training set | 826 | 1,687 | 1,319 | 188 | 23,772 | 27,792 |
| Test set | 221 | 360 | 302 | 96 | 4,737 | 5,716 |

Table 3.

The statistics of the ChemProt corpus

| Relations | Training set | Development set | Test set |
|---|---|---|---|
| Activator | 768 | 550 | 664 |
| Inhibitor | 2,251 | 1,092 | 1,661 |
| Agonist | 170 | 116 | 194 |
| Antagonist | 234 | 197 | 281 |
| Substrate | 705 | 457 | 643 |
| Negative | 12,461 | 8,070 | 11,013 |
| Total | 16,589 | 10,482 | 14,456 |

2.3. Our Semi-supervised VAE-based Approach

2.3.1. Overview of our approach

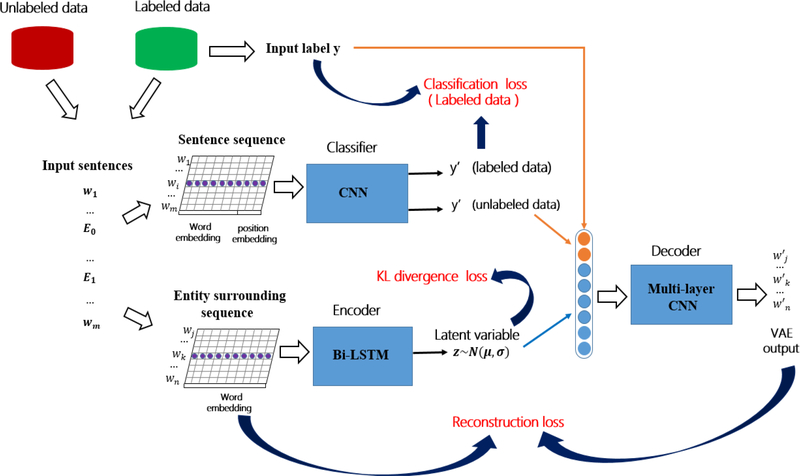

Our goal of using a semi-supervised method is to leverage unlabeled data for improved performance. A schematic overview of our method is shown in Fig. 2. Our approach includes three parts: a classifier, an encoder, and a decoder. In a nutshell, our semi-supervised VAE model works by calibrating the classifier through the use of the encoder and decoder. More specifically, the encoder and decoder work together to reconstruct the input for both labeled and unlabeled data, during which the classifier learns a comprehensive feature representation. The encoder network first learns the mapping from all the labeled and unlabeled data into the latent space, a compressed representation of the input data. Next, the semi-supervised VAE samples the latent variable z. Then, the decoder network strives to reconstruct the input based on the latent variable z and the discrete label y (classifier output). The latent variable distribution is usually parametrized by a Gaussian distribution, with its mean and variance generated by the encoder.

In our work, the classifier takes as input a sentence with an entity pair, where each word is represented by a word embedding and a position embedding. The position embedding represents the position feature of each word, which captures the relative distances between each word and the two entities. In this work, the position embedding is randomly initialized following a standard normal distribution. The input of the decoder is a vector that combines the latent variable z generated by the encoder (in blue) and the output of our classifier: the known label y or the predicted label y′, depending on whether the input sentences are labeled or not (in orange). In this way, the predicted labels y′ of the classifier directly affect the output of the decoder. Intuitively, an accurate predicted label y′ will be much more informative and helpful for the decoder to reconstruct the input of the encoder than a random y′. Therefore, when the input sentences are unlabeled, optimizing the reconstruction loss also benefits the classifier.

Fig. 2.

The overview of the semi-supervised VAE-based model for relation extraction.

Based on the proposed model, the unlabeled data are incorporated with the labeled data to help train the parameters of the classifier, which means that the semi-supervised VAE model is learning useful features from both labeled and unlabeled data.

2.3.2. The semi-supervised VAE model

We briefly introduce the semi-supervised variational inference here [18]. Let (x, y)~Dl and x~Du denote the labeled data and unlabeled data, respectively. The semi-supervised generative model [18] was proposed to incorporate the continuous latent variable z and the discrete label y.

$$p(x, y, z) = p(x \mid y, z)\, p(y)\, p(z) \tag{1}$$

The semi-supervised variational model consists of three parts: a discriminative network q(y|x), an inference network q(z|x, y) and a generative network p(x|y, z). For the labeled data (x, y)~Dl, a data point and its label are given and the variational bound is as follows:

$$\log p(x, y) \ge \mathbb{E}_{q(z \mid x, y)}\left[\log p(x \mid y, z)\right] + \log p(y) - \mathrm{KL}\left(q(z \mid x, y)\,\|\,p(z)\right) = -\mathcal{L}(x, y) \tag{2}$$

where E denotes the expectation, p(z) is the prior distribution, q(z|x, y) is the learned latent posterior, the term Eq(z|x, y)[log p(x|y, z)] is the expectation of the conditional log-likelihood on latent variable z, and the term KL(q(z|x, y)||p(z)) is the Kullback-Leibler divergence between q(z|x, y) and p(z).

For the unlabeled data x~Du, the label y′ is predicted by the discriminative network q(y|x). Thus, the variational bound of the unlabeled data is as follows:

$$\log p(x) \ge \sum_{y} q(y \mid x)\left(-\mathcal{L}(x, y)\right) + \mathcal{H}\left(q(y \mid x)\right) = -\mathcal{U}(x) \tag{3}$$

In addition, the classification loss of the labeled data is E(x, y)~Dl[−log q(y|x)]. Incorporating the labeled and unlabeled terms, the objective function of the entire dataset is as follows:

$$J = \sum_{(x, y) \sim D_l} \mathcal{L}(x, y) + \sum_{x \sim D_u} \mathcal{U}(x) + \alpha\, \mathbb{E}_{(x, y) \sim D_l}\left[-\log q(y \mid x)\right] \tag{4}$$

where the hyperparameter α balances the relative weight between generative and discriminative learning.

Typically, the discriminative network q(y|x), the inference network q(z|x, y) and the generative network p(x|y, z) are implemented by a classifier fcla(·), an encoder network fenc(·) and a decoder network fdec(·), respectively. First, the encoder network encodes all the labeled and unlabeled data into the latent space. Next, the semi-supervised VAE samples the latent variable z. Then, the decoder network reconstructs the input based on the latent variable z and the discrete label y. The latent variable distribution is usually parametrized by a Gaussian distribution, with its mean and variance generated by fenc(·).

2.3.3. Embedding input representation

The inputs of the classifier and the encoder are the sentence sequences and the entity surrounding sequences, respectively. Given a sentence S, we replace the two candidate entities with “E0” and “E1”. The classifier input is the sentence sequence {w1, w2, …, wm}. Word embedding [26] maps words to a low-dimensional vector space and preserves the syntactic and semantic information underlying the words. Position embedding, which captures the distance from each word to the candidate entities, has also been shown to be important for relation extraction [27]. In our experiments, we trained word embeddings using the fastText model [28] on the entire PubMed Central (PMC) Open Access subset and the Medical Subject Headings (MeSH).
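The entity replacement and the distance feature described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' preprocessing code: the function names, the whitespace tokenization, the choice of entity spans and the use of signed token offsets as the distance feature are all assumptions.

```python
def blind_entities(tokens, e0_span, e1_span):
    """Replace two entity mentions with the placeholders "E0" and "E1".

    Spans are (start, end) token offsets with an exclusive end.
    Assumption: the first entity precedes the second and they do not overlap.
    """
    (s0, t0), (s1, t1) = e0_span, e1_span
    return tokens[:s0] + ["E0"] + tokens[t0:s1] + ["E1"] + tokens[t1:]


def relative_positions(tokens):
    """For every token, return its signed distance to E0 and to E1."""
    i0, i1 = tokens.index("E0"), tokens.index("E1")
    return [(i - i0, i - i1) for i in range(len(tokens))]


# DDI example sentence from Section 2.1, whitespace-tokenized (an assumption).
sentence = ("The concomitant administration of gemfibrozil with "
            "Targretin capsules is not recommended").split()
blinded = blind_entities(sentence, (4, 5), (6, 7))
positions = relative_positions(blinded)
```

Each pair in `positions` would then index the position embedding table once per entity, giving the two position vectors per word.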

Let Wword and Wdis denote the word embedding and position embedding, respectively. For each word wi of the sentence sequence {w1, w2, …, wm}, one word embedding vector and two position vectors (one for each candidate entity) are obtained from Wword and Wdis, respectively. The final embedding representation zi of wi is the concatenation of these three vectors. In addition, unlike in sentence classification or text modeling tasks, the words around the two candidate entities are generally more valuable for relation extraction than the other words in the sentence. In particular, there are many long and complicated sentences in the biomedical literature, where only the words surrounding the targeted entities are useful to distinguish and classify the candidate relation. Therefore, we only use the word sequence surrounding the two candidate entities as the encoder input. More specifically, we empirically choose the 10 preceding words and the 5 succeeding words of the first target entity, and the 5 preceding words and the 10 succeeding words of the second entity. The selected word sequences are concatenated into the entity surrounding sequence {wj, wk, …, wn}, whose size is 30. If the surrounding words of the target entities are not available in the sentence, the entity surrounding sequence is padded with zeros. We only use word embeddings to represent the entity surrounding sequence: for each word wj of the entity surrounding sequence, the embedding representation is its word embedding vector.
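The construction of the 30-token entity surrounding sequence can be sketched as follows. This is an illustrative sketch under stated assumptions: the helper names are hypothetical, the entity placeholders themselves are excluded from each window, padding goes on the outer side of each window, and a `<pad>` token stands in for the zero vector mentioned in the text.

```python
PAD = "<pad>"  # assumed placeholder; it would map to an all-zero embedding


def window(tokens, idx, n_before, n_after):
    """Return n_before tokens left of idx and n_after tokens right of idx,
    padded when the sentence is too short. The token at idx is excluded."""
    left = tokens[max(0, idx - n_before):idx]
    right = tokens[idx + 1:idx + 1 + n_after]
    left = [PAD] * (n_before - len(left)) + left
    right = right + [PAD] * (n_after - len(right))
    return left + right


def entity_surrounding_sequence(tokens):
    """10 before / 5 after the first entity, then 5 before / 10 after the second."""
    i0, i1 = tokens.index("E0"), tokens.index("E1")
    return window(tokens, i0, 10, 5) + window(tokens, i1, 5, 10)


tokens = ["The", "concomitant", "administration", "of", "E0", "with",
          "E1", "capsules", "is", "not", "recommended"]
seq = entity_surrounding_sequence(tokens)
```

For a short sentence like this one, 12 of the 30 positions are padding; for long biomedical sentences the windows discard distant context instead.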

2.3.4. The model architecture

The semi-supervised VAE is implemented using deep neural networks. Bidirectional LSTMs (Bi-LSTMs) and CNNs are the two major neural network architectures used for the semi-supervised VAE. Some recent studies [20, 21] have suggested that the (Bi-)LSTM is more suitable than CNNs as the encoder. In our work, we experimented with both (Bi-)LSTMs and CNNs for the decoder and the classifier. We found that CNNs were more effective and efficient than (Bi-)LSTMs for both the decoder and the classifier. Hence, in our semi-supervised VAE architecture the encoder and decoder are implemented by Bi-LSTMs and multilayer CNNs, respectively, and the classifier is implemented with a CNN architecture. Next, we provide a brief introduction to the CNN and (Bi-)LSTM architectures.

CNN model:

The CNN model learns features from the text by applying convolution operations over the sentence. Given a sentence {w1, w2, …, wm}, the embedding input representation is {z1, z2, …, zm}. A CNN model [29] generally contains a set of convolution filters. Each convolution filter, parameterized by a weight matrix W, applies a convolution operation to n consecutive words to generate a new feature based on n-grams. For a given window of words zi:i+n−1, a feature ci is generated by a convolution filter as follows:

$$c_i = f\left(W \cdot z_{i:i+n-1} + b\right) \tag{6}$$

where f is a nonlinear function, and b is a bias parameter. Thus, the convolution filter is applied to the whole sentence step by step {z1: n, z2: n+1, …, zm−n+1: m} to produce a feature map as follows:

$$c = \left[c_1, c_2, \ldots, c_{m-n+1}\right] \tag{7}$$

To choose the most salient feature, we employ the max pooling operation [30] over the feature map c. For the classifier, the input is the embedding representation of the sentence sequences and the output is the one-hot label vector. We employ the Softmax function to implement the classification based on the feature representation generated by CNNs. The decoder is implemented by three-layer CNNs. The latent variable and the one-hot label vector are combined as the decoder input. For the labeled data, we simply map the labels into the one-hot label vector. For the unlabeled data, the one-hot label vector is predicted by the classifier. In our experiments, we apply a fully connected layer to connect the decoder input and the three-layer CNNs. Similar to machine translation, the output of the three-layer CNNs is fed into a fully connected layer to implement the reconstruction of the entity surrounding sequences.
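The filter-then-pool step described in this subsection can be sketched for a single convolution filter. This is a toy illustration in plain Python rather than the Keras layers used in the experiments; the function names, the flattened weight layout and the choice of ReLU for the nonlinearity f are assumptions.

```python
def relu(x):
    return max(0.0, x)


def conv_feature_map(z, W, b, n, f=relu):
    """Slide one filter over windows of n consecutive word vectors.

    z: list of m embedding vectors, each of dimension d
    W: filter weights flattened to a list of length n * d
    Returns the feature map [c_1, ..., c_{m-n+1}].
    """
    feature_map = []
    for i in range(len(z) - n + 1):
        # Concatenate the n word vectors of the current window.
        flat = [v for vec in z[i:i + n] for v in vec]
        feature_map.append(f(sum(w * v for w, v in zip(W, flat)) + b))
    return feature_map


def max_pool(feature_map):
    """Keep the most salient feature produced by the filter."""
    return max(feature_map)


z = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # m = 4 words, d = 2
c = conv_feature_map(z, W=[1.0, 0.0, 0.0, 1.0], b=0.0, n=2)
pooled = max_pool(c)
```

A classifier would apply many such filters and feed the pooled values to a softmax layer.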

LSTM model:

The LSTM model exploits the gate mechanism to learn the long-term dependency feature from the sentences. In LSTM models [31], the current hidden state hj and memory cell cj, at the time step j, are generated based on the current input word xj, the previous hidden state hj−1 and the previous memory cell cj−1. Specifically, the input gate ij, the forget gate fj, the output gate oj and the extracted feature vector gj are defined as follows.

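$$i_j = \sigma\left(W_i x_j + U_i h_{j-1} + b_i\right) \tag{8}$$

$$f_j = \sigma\left(W_f x_j + U_f h_{j-1} + b_f\right) \tag{9}$$

$$o_j = \sigma\left(W_o x_j + U_o h_{j-1} + b_o\right) \tag{10}$$

$$g_j = \tanh\left(W_g x_j + U_g h_{j-1} + b_g\right) \tag{11}$$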

where W*, U* and b* are weight matrices and bias vectors. Based on Eqs. (8)–(11), the memory cell cj and hidden state hj are calculated as follows.

$$c_j = f_j \odot c_{j-1} + i_j \odot g_j \tag{12}$$

$$h_j = o_j \odot \tanh\left(c_j\right) \tag{13}$$

where ☉ denotes element-wise multiplication.
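A single LSTM time step can be sketched directly from the gate definitions above. This is a plain-Python illustration of the standard LSTM update [31], not the Keras layer used in the experiments; the function names and the `params` dictionary layout are assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def affine(W, U, b, x, h):
    """Compute W x + U h + b for one gate."""
    return [wx + uh + bi for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]


def lstm_step(x, h_prev, c_prev, params):
    """One time step; params maps each of "i", "f", "o", "g" to (W, U, b)."""
    i = [sigmoid(a) for a in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(a) for a in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(a) for a in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(a) for a in affine(*params["g"], x, h_prev)]  # candidate features
    c = [fj * cp + ij * gj for fj, cp, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


# With all-zero weights every gate equals 0.5 and the candidate vector is 0.
zero = ([[0.0]], [[0.0]], [0.0])
params = {name: zero for name in ("i", "f", "o", "g")}
h, c = lstm_step([1.0], [0.0], [2.0], params)
```

A bidirectional encoder would run this recurrence once left to right and once right to left over the same sequence.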

The Bi-LSTMs capture contextual features from the input sentence in both the forward and backward directions. Let $\overrightarrow{h_j}$ and $\overleftarrow{h_j}$ denote the outputs of the forward and backward LSTMs, respectively. The output of the Bi-LSTMs is the concatenation $h_j = [\overrightarrow{h_j}; \overleftarrow{h_j}]$. In our model, we use a Bi-LSTM to implement the encoder. As mentioned, the encoder input is the embedding representation of the entity surrounding sequences. The encoder output is the latent variable.

Our semi-supervised model learns features from both the labeled and unlabeled data. According to Eq. (4), the loss functions of the labeled and unlabeled data are different. Our semi-supervised VAE model contains three loss terms: classification loss, reconstruction loss and KL divergence loss. The classification loss is only calculated on the labeled data. The reconstruction loss measures how well the decoder output reconstructs the encoder input. The KL divergence loss is the defining characteristic of a VAE model. Basically, the VAE model uses the latent variable to describe the input data. To infer the latent variable distribution, the VAE model first uses a simpler distribution that is easy to evaluate, such as a Gaussian, to model the latent variable distribution. The KL divergence loss measures the difference between the latent variable distribution and the Gaussian distribution, and the VAE model minimizes it to pull the latent variable distribution toward the Gaussian distribution.

For the labeled data, we compute all three loss terms: classification loss, reconstruction loss and KL divergence loss. For the unlabeled data, we only calculate the reconstruction loss and KL divergence loss. The classification loss and reconstruction loss are calculated by cross-entropy and sparse cross-entropy, respectively. The KL divergence loss is calculated in closed form [18]. In our experiments, the encoder learns to output the mean μ and variance σ² of the latent variable z. Thus, the latent variable z can be calculated by z = μ + σ ☉ ϵ, where ϵ ~ N(0, I). The KL divergence loss for a single sample is calculated as:

$$\mathrm{Loss}_{KL} = -\frac{1}{2} \sum_{k} \left(1 + \log \sigma_k^2 - \mu_k^2 - \sigma_k^2\right)$$

The objective losses for the labeled and unlabeled data are Loss_reconstruct + Loss_KL + Loss_classify and Loss_reconstruct + Loss_KL, respectively.
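The sampling step and the way the loss terms are combined can be sketched as follows. This is a minimal sketch, not the authors' Keras code: the function names are hypothetical, the encoder is assumed to output the log-variance rather than the variance (a common numerical convention), and the KL term uses the standard closed form for a diagonal Gaussian against N(0, I) from [18], namely −½ Σ (1 + log σ² − μ² − σ²).

```python
import math
import random


def sample_latent(mu, log_var, rng=random):
    """Reparameterization: z = mu + sigma * eps with eps drawn from N(0, I)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)) for one sample."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


def total_loss(reconstruction, kl, classification=None):
    """Labeled batches add the classification term; unlabeled batches omit it."""
    loss = reconstruction + kl
    if classification is not None:
        loss += classification
    return loss


z = sample_latent([0.3, -1.2], [-1000.0, -1000.0])  # near-zero variance
kl_zero = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])
kl_shifted = kl_to_standard_normal([1.0], [0.0])
```

Writing z as a deterministic function of μ, σ and an external noise draw is what lets the reconstruction gradient reach the encoder parameters.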

In our experiments, the proposed model is implemented with the Keras library. In each training batch, we first sample training instances from the labeled and unlabeled data, respectively. Then, our model is trained on the labeled instances and the unlabeled instances separately. Hence, the parameters of the classifier are trained by both labeled and unlabeled data. We apply root mean square propagation (RMSProp) [32] to optimize the parameters of the model. We manually tuned the hyperparameters based on the system results on the validation set. The final hyperparameters used in our experiments are listed in Table 4. A dropout mechanism was applied in the encoder, decoder and classifier to alleviate overfitting of the neural network model [33].

Table 4.

The hyperparameters used in the experiments

| Hyperparameter name | Value |
|---|---|
| Word embedding dimensionality | 200 |
| Position embedding dimensionality | 20 |
| Mini batch size | 64 |
| Latent variable size | 32 |
| Encoder Bi-LSTM hidden units | 600 |
| 1st Decoder CNNs hidden units | 300 |
| 2nd Decoder CNNs hidden units | 600 |
| 3rd Decoder CNNs hidden units | 1000 |
| Classifier CNNs hidden units | 300 |
| Encoder dropout rate after embedding | 0.5 |
| Decoder dropout rate before output | 0.5 |
| Classifier dropout rate after embedding | 0.5 |
| Classifier dropout rate before output | 0.5 |

3. Results

3.1. Evaluation metrics and setups

The standard F-score, precision and recall are used as the evaluation metrics in our experiments. The F1-score is the harmonic mean of both precision and recall, which is defined as (2 · precision ·recall)/(precision + recall). For the DDI and CPI extraction task, we compute the micro average to assess the overall performances [12, 34].
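For the multi-class tasks, micro-averaging pools the true positives, false positives and false negatives of all relation types before computing precision and recall. The sketch below illustrates one common way to do this; the function name is hypothetical, and excluding the Negative class from the counts is an assumption based on the usual DDI and ChemProt evaluation practice rather than something stated in the text.

```python
def micro_prf(gold, pred, negative="Negative"):
    """Micro-averaged precision, recall and F1 over the non-negative classes."""
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == p and g != negative)
    fp = sum(1 for g, p in pairs if p != negative and g != p)
    fn = sum(1 for g, p in pairs if g != negative and g != p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


gold = ["Advice", "Effect", "Negative", "Int", "Negative"]
pred = ["Advice", "Negative", "Effect", "Mechanism", "Negative"]
precision, recall, f1 = micro_prf(gold, pred)
```

In this toy example a wrong positive type (Int predicted as Mechanism) counts as both a false positive and a false negative, which is why a single correct prediction out of five yields precision, recall and F1 of one third each.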

Generally, semi-supervised experiments need labeled, unlabeled, validation and test sets. For the BioInfer corpus, we randomly chose 1,000 samples as the test set and another 1,000 samples as the validation set (approximately 10% of the total PPI data for each set). For the DDI corpus, we randomly chose 2,000 training samples as the validation set. For the ChemProt corpus, we first combined the training and development sets (as shown in Table 3) and similarly chose 2,000 training samples (again, approximately 10%) as the validation set. The validation set was used for optimizing the hyperparameters and the number of epochs for our model training. The held-out test set was used for evaluating the performance of our model. For each labeled size, the selection of the training and validation sets was repeated ten times to reduce any potential selection bias.
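The splitting protocol for BioInfer can be sketched as below. This is an illustration only: the function name and the seeds are hypothetical, and keeping the test set fixed across the ten repetitions while redrawing the validation and labeled sets is an assumption about how the repetition was organized.

```python
import random


def make_split(n_total, n_test, n_val, n_labeled, test_seed, repeat_seed):
    """Return disjoint index lists: test, validation, labeled, unlabeled."""
    indices = list(range(n_total))
    random.Random(test_seed).shuffle(indices)    # fixed held-out test set
    test, rest = indices[:n_test], indices[n_test:]
    random.Random(repeat_seed).shuffle(rest)     # redrawn for every repetition
    validation, pool = rest[:n_val], rest[n_val:]
    labeled, unlabeled = pool[:n_labeled], pool[n_labeled:]
    return test, validation, labeled, unlabeled


# BioInfer: 9,666 instances, 1,000 test, 1,000 validation, e.g. 500 labeled.
splits = [make_split(9666, 1000, 1000, 500, test_seed=0, repeat_seed=r)
          for r in range(10)]
```

The instances left in the pool after drawing the labeled set play the role of unlabeled data, with their labels ignored during training.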

3.2. Experimental Results

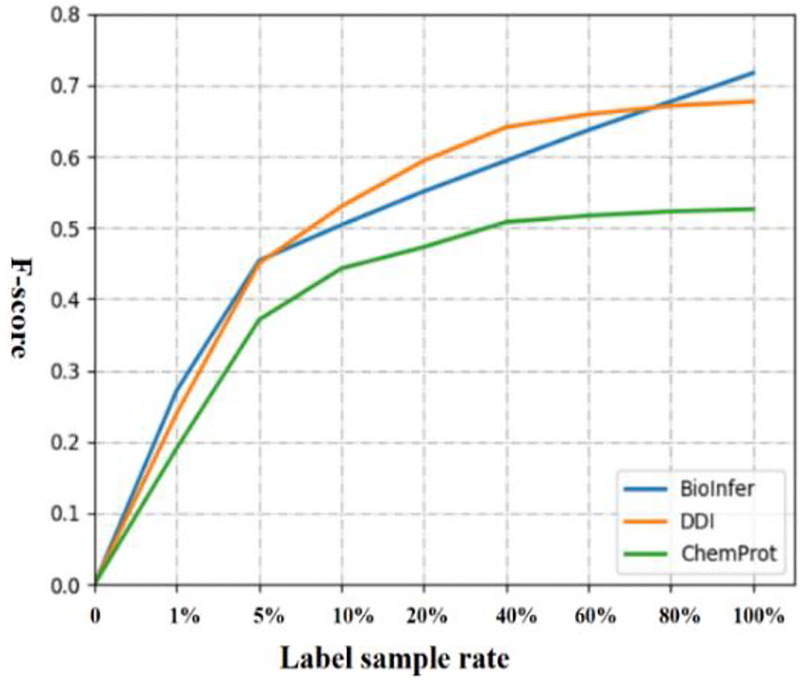

First, we used the baseline CNN model with different numbers of labeled samples to evaluate the supervised classifier performance on the three corpora. After splitting off the validation and test sets, the numbers of labeled instances for BioInfer, DDI and ChemProt are 7,666, 25,792 and 25,071, respectively. We varied the labeled training rate from 1% to 100% on the three corpora. For BioInfer, the labeled training rate ranges from 1% (77) to 100% (7,666), with the F-score rising from 0.276 to 0.717. Similar trends can be seen for DDI and CPI, showing that more data helps improve the performance of the supervised CNN model on all three corpora. However, the performance increase slows down significantly once the training samples reach a certain amount (e.g. roughly 40% for both DDI and CPI). We also noticed that the CNN model achieved a higher F-score on BioInfer using only 7,666 labeled samples than on DDI or ChemProt with ~25,000 labeled samples, likely because BioInfer is a binary relation corpus, whereas DDI and ChemProt are multi-class relation corpora.

In the biomedical domain, annotating labeled training data is time-consuming and bears high cost. Hence, a critical question is how much labeled data is needed minimally for obtaining competitive performance on biomedical relation extraction. Our results on the three corpora show that the proposed CNNs model with ~2,000 labeled samples can achieve above 70% of the best performance.
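The "above 70%" figure can be checked against the baseline CNN F-scores reported in Table 5 for 2,000 labeled samples and for the full labeled sets. The short script below only reproduces that arithmetic; the variable names are illustrative.

```python
# Baseline CNN F-scores taken from Table 5.
f_at_2000 = {"BioInfer": 0.556, "DDI": 0.495, "ChemProt": 0.418}
f_all_data = {"BioInfer": 0.717, "DDI": 0.677, "ChemProt": 0.526}

# Fraction of the full-data F-score reached with 2,000 labeled samples.
ratios = {corpus: f_at_2000[corpus] / f_all_data[corpus] for corpus in f_at_2000}

for corpus, ratio in ratios.items():
    print(f"{corpus}: {ratio:.1%} of the full-data F-score")
```

The ratios come out at roughly 78% for BioInfer, 73% for DDI and 79% for ChemProt.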

Then, we compared our semi-supervised method with the baseline CNN on the same corpora, where the unlabeled data were taken directly from the unused instances in the training set (with their labels removed). Table 5 shows that our proposed semi-VAE method can effectively improve the performance of both binary and multi-class relation extraction. For example, on BioInfer, our method improved the F-score from 0.483 to 0.544 with 500 labeled instances, which outperformed the F-score of 0.518 achieved by the CNN baseline using 1,000 labeled instances. Similarly, on ChemProt, our method improved the F-score from 0.292 to 0.352 using 500 labeled instances, which also outperformed the F-score of 0.349 achieved by the CNN baseline using 1,000 labeled instances.

Table 5.

The evaluation results on F-score.

| Corpus | Labeled data | CNN | Semi-Supervised VAE |
|---|---|---|---|
| BioInfer | 250 | 0.447 | 0.521 |
| BioInfer | 500 | 0.483 | 0.544 |
| BioInfer | 1000 | 0.518 | 0.563 |
| BioInfer | 2000 | 0.556 | 0.587 |
| BioInfer | 4000 | 0.620 | 0.622 |
| BioInfer | All (7666) | 0.717 | - |
| DDI | 250 | 0.236 | 0.367 |
| DDI | 500 | 0.324 | 0.415 |
| DDI | 1000 | 0.418 | 0.482 |
| DDI | 2000 | 0.495 | 0.528 |
| DDI | 4000 | 0.572 | 0.579 |
| DDI | All (25792) | 0.677 | - |
| ChemProt | 250 | 0.203 | 0.287 |
| ChemProt | 500 | 0.292 | 0.352 |
| ChemProt | 1000 | 0.349 | 0.381 |
| ChemProt | 2000 | 0.418 | 0.443 |
| ChemProt | 4000 | 0.505 | 0.509 |
| ChemProt | All (25071) | 0.526 | - |

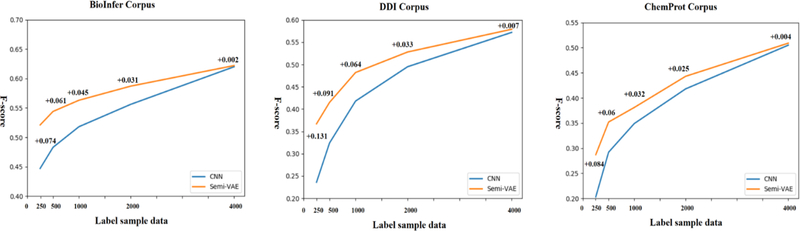

Our semi-supervised method can significantly and consistently improve the performance when the number of labeled instances is 2,000 or fewer. Fig. 4 also shows that the performance improvement of our semi-supervised model gradually decreases as more labeled data become available. For example, the performance of our method is generally no different from the baseline when 4,000 labeled instances are used. This is mainly because the classifier greatly underfits the data when trained with only a small amount of labeled data, such as 250 or 500 instances. In such a situation, the classifier can learn a lot from the unlabeled data, and our semi-supervised VAE model can effectively exploit unlabeled data for improved performance. In contrast, the underfitting issue of the classifier is gradually alleviated as more labeled data are used. As a result, the benefit of using unlabeled data diminishes accordingly.

Fig. 4.

Comparison of baseline CNN vs. Semi-VAE performance on three corpora as the labeled training data is varied from 250 to 4,000.

Table 6 shows the performance of the semi-supervised model for each class on the DDI 2013 dataset, which contains two different subsets (DrugBank and MEDLINE). The semi-supervised model achieved higher performance on DrugBank than on MEDLINE. The performance varies significantly across the different DDI types. As a whole, the performance on the Int type is the worst on both DrugBank and MEDLINE. This indicates that it is hard to accurately classify Int-type DDIs on the DDI 2013 corpus. From Table 2, we can see that the training set for the Int type contains only 188 instances, far fewer than for the other DDI types. Table 7 shows the performance of our semi-supervised model for each class on ChemProt. We see that our VAE method is able to help improve the classification performance, with noticeable increases in all classes.

Table 6.

The F-score of semi-supervised model for each class on DDI.

| Labeled data | DrugBank Effect | DrugBank Mechanism | DrugBank Advice | DrugBank Int | MEDLINE Effect | MEDLINE Mechanism | MEDLINE Advice | MEDLINE Int |
|---|---|---|---|---|---|---|---|---|
| 250 | 0.450 | 0.338 | 0.391 | 0 | 0.256 | 0.249 | 0.19 | 0 |
| 500 | 0.485 | 0.369 | 0.447 | 0.315 | 0.288 | 0.274 | 0.238 | 0 |
| 1000 | 0.512 | 0.471 | 0.551 | 0.441 | 0.321 | 0.301 | 0.384 | 0 |
| 2000 | 0.536 | 0.517 | 0.617 | 0.518 | 0.422 | 0.327 | 0.421 | 0 |
| 4000 | 0.627 | 0.560 | 0.654 | 0.548 | 0.449 | 0.417 | 0.471 | 0.444 |

Table 7.

The F-score of semi-supervised model for each class on ChemProt.

| Labeled data | CPR:3 (Activator) | CPR:4 (Inhibitor) | CPR:5 (Agonist) | CPR:6 (Antagonist) | CPR:9 (Substrate) |
|---|---|---|---|---|---|
| 250 | 0.202 | 0.364 | 0 | 0 | 0.131 |
| 500 | 0.227 | 0.434 | 0 | 0.156 | 0.170 |
| 1000 | 0.251 | 0.461 | 0.295 | 0.406 | 0.221 |
| 2000 | 0.268 | 0.521 | 0.406 | 0.475 | 0.356 |
| 4000 | 0.330 | 0.568 | 0.508 | 0.590 | 0.440 |

In Table 8, we compared our semi-supervised method with two other external methods. Dai and Le [35] proposed a semi-supervised learning method based on LSTM networks, which first trains a model using unlabeled data and then fine-tunes the pre-trained model on labeled data. In our experiment, we kept the same experimental settings and pretrained a Bi-LSTM model using the unlabeled sets of BioInfer, DDI and ChemProt. As shown in Table 8, our semi-supervised model achieved a better average improvement over its baseline (0.05 in F-score) than the pretraining strategy of [35] (0.032). One major difference between our method and [35] is that they use unlabeled data only in the pretraining stage, whereas our semi-supervised model exploits the unlabeled data throughout the whole training process. This means that our model is able to learn from unlabeled data constantly. We also notice that the pretraining strategy [35] achieved higher performance when 4,000 labeled instances were used. We also compared our semi-supervised model with a state-of-the-art hybrid model proposed by Zhang et al. [13] on the three corpora. Here, the proposed semi-supervised model outperformed the hybrid model in all but one setting with 2,000 or fewer labeled instances (the exception is DDI with 2,000 instances, 0.528 vs. 0.531). These comparisons further suggest that our method can outperform or compete with other state-of-the-art methods when only limited labeled data (2,000 instances or fewer) are available.

Table 8.

Performance comparison with other methods.

| Corpus | Labeled data | Our method: CNN | Our method: Semi. VAE | Our method: Δ | Dai and Le [35]: Bi-LSTM | Dai and Le [35]: Pretrain strategy | Dai and Le [35]: Δ | Zhang et al. [34] |
|---|---|---|---|---|---|---|---|---|
| BioInfer | 250 | 0.447 | 0.521 | 0.074 | 0.454 | 0.506 | 0.052 | 0.459 |
| BioInfer | 500 | 0.483 | 0.544 | 0.061 | 0.489 | 0.533 | 0.044 | 0.512 |
| BioInfer | 1000 | 0.518 | 0.563 | 0.045 | 0.527 | 0.558 | 0.031 | 0.551 |
| BioInfer | 2000 | 0.556 | 0.587 | 0.031 | 0.569 | 0.583 | 0.014 | 0.577 |
| BioInfer | 4000 | 0.620 | 0.622 | 0.002 | 0.627 | 0.633 | 0.006 | 0.638 |
| DDI | 250 | 0.236 | 0.367 | 0.131 | 0.258 | 0.319 | 0.061 | 0.289 |
| DDI | 500 | 0.324 | 0.415 | 0.091 | 0.332 | 0.384 | 0.052 | 0.381 |
| DDI | 1000 | 0.418 | 0.482 | 0.064 | 0.425 | 0.463 | 0.038 | 0.474 |
| DDI | 2000 | 0.495 | 0.528 | 0.033 | 0.502 | 0.522 | 0.020 | 0.531 |
| DDI | 4000 | 0.572 | 0.579 | 0.007 | 0.588 | 0.596 | 0.008 | 0.595 |
| ChemProt | 250 | 0.203 | 0.287 | 0.084 | 0.194 | 0.253 | 0.059 | 0.227 |
| ChemProt | 500 | 0.292 | 0.352 | 0.060 | 0.279 | 0.330 | 0.051 | 0.324 |
| ChemProt | 1000 | 0.349 | 0.381 | 0.032 | 0.337 | 0.361 | 0.024 | 0.365 |
| ChemProt | 2000 | 0.418 | 0.443 | 0.025 | 0.424 | 0.439 | 0.015 | 0.440 |
| ChemProt | 4000 | 0.505 | 0.509 | 0.004 | 0.508 | 0.518 | 0.010 | 0.521 |
| Avg. | - | - | - | 0.05 | - | - | 0.032 | - |
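The averages in the last row of Table 8 can be checked against the per-row F-scores. The following is a minimal sketch that recomputes each Δ as the semi-supervised score minus its supervised baseline and averages it over the 15 corpus/size settings. The names `TABLE8` and `average_gain` are illustrative and not part of the original work; the numbers are copied from Table 8.

```python
from statistics import mean

# F-scores copied from Table 8, per corpus and labeled-set size:
# (CNN, Semi. VAE, Bi-LSTM, Pretrain strategy)
TABLE8 = {
    "BioInfer": {
        250: (0.447, 0.521, 0.454, 0.506),
        500: (0.483, 0.544, 0.489, 0.533),
        1000: (0.518, 0.563, 0.527, 0.558),
        2000: (0.556, 0.587, 0.569, 0.583),
        4000: (0.620, 0.622, 0.627, 0.633),
    },
    "DDI": {
        250: (0.236, 0.367, 0.258, 0.319),
        500: (0.324, 0.415, 0.332, 0.384),
        1000: (0.418, 0.482, 0.425, 0.463),
        2000: (0.495, 0.528, 0.502, 0.522),
        4000: (0.572, 0.579, 0.588, 0.596),
    },
    "ChemProt": {
        250: (0.203, 0.287, 0.194, 0.253),
        500: (0.292, 0.352, 0.279, 0.330),
        1000: (0.349, 0.381, 0.337, 0.361),
        2000: (0.418, 0.443, 0.424, 0.439),
        4000: (0.505, 0.509, 0.508, 0.518),
    },
}


def average_gain(table, base_idx, improved_idx):
    """Mean F-score gain of column `improved_idx` over column `base_idx`."""
    gains = [
        row[improved_idx] - row[base_idx]
        for sizes in table.values()
        for row in sizes.values()
    ]
    return mean(gains)


# Semi. VAE over its CNN baseline, and pretraining over its Bi-LSTM baseline.
vae_gain = average_gain(TABLE8, 0, 1)
pretrain_gain = average_gain(TABLE8, 2, 3)
print(f"Semi. VAE: {vae_gain:.3f}, pretraining: {pretrain_gain:.3f}")
```

Both averages are unweighted means over all settings, so the large gains at 250 and 500 labeled instances contribute as much as the near-zero gains at 4,000.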

4. Discussion & Conclusions

Automatically extracting relations from biomedical text is a crucial task in biomedical NLP. Supervised methods for biomedical relation extraction depend heavily on labeled data, which is expensive and difficult to obtain at scale in the biomedical domain. In this work, we propose a semi-supervised VAE-based method for biomedical relation extraction that effectively learns useful features from both labeled and unlabeled data. By exploiting the unlabeled data that is freely available in the literature, our model achieves competitive performance with only a small amount of labeled data.

In this work, we also evaluated additional reconstruction strategies that are not reported in the results above. The reported experiments use the sequences surrounding the candidate entities as the encoder input. We evaluated two other strategies. In the first, the whole sentence is the encoder input, so the decoder reconstructs every word of the sentence. In the second, both the whole sentence and the word position information are the encoder input, and the model reconstructs not only the words of the sentence but also the position of each word. The three reconstruction strategies performed similarly. However, using the sequences surrounding the candidate entities was much more efficient than the other two strategies because the VAE only needs to reconstruct a small part of the sentence.
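To illustrate why the entity-centered strategy is cheaper, the sketch below cuts a token window around two candidate entities and compares its length with that of the full sentence. It is one plausible reading of "surrounding sequences", not the implementation used in this work: the function name `entity_context`, the `window` parameter and its value, and the whitespace tokenization are all assumptions made for the example.

```python
def entity_context(tokens, e1_idx, e2_idx, window=2):
    """Return the tokens from `window` positions before the first entity
    to `window` positions after the second entity (hypothetical scheme)."""
    first, second = sorted((e1_idx, e2_idx))
    start = max(0, first - window)
    end = min(len(tokens), second + window + 1)
    return tokens[start:end]


# Illustrative sentence with two placeholder entity mentions.
sentence = (
    "We found that PROT1 strongly binds to PROT2 "
    "in the presence of calcium ions in vitro"
).split()

context = entity_context(sentence, sentence.index("PROT1"), sentence.index("PROT2"))

# The decoder would reconstruct len(context) tokens instead of len(sentence).
print(len(sentence), len(context))
print(" ".join(context))
```

Under this assumed scheme, the reconstruction target shrinks with the distance between the two entities rather than with the sentence length, which is consistent with the efficiency difference described above.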

In general, the choice of labeled data may affect the performance of a semi-supervised method. In our experiments, we therefore selected the labeled data at random and repeated each training run ten times to reduce potential selection bias. Intuitively, labeled instances differ in how much they help the classifier. If we could select the most informative instances to label, our semi-supervised method might benefit further. We therefore plan to investigate how to integrate an active learning strategy into our semi-supervised model. In future research, we would also like to combine entity recognition and relation extraction into an end-to-end system, and to explore distant supervision [36] for improving biomedical relation extraction with no or limited labeled data.
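The repeated random selection described above can be sketched as follows. This is a minimal illustration, not the authors' code: `draw_labeled_subsets`, `mean_f_score`, the pool size of 5,000 and the seed are assumptions, and `dummy_train_and_score` is a placeholder that stands in for training a model and returning its F-score.

```python
import random
from statistics import mean


def draw_labeled_subsets(pool_size, n_labeled, n_repeats=10, seed=0):
    """Draw `n_repeats` random labeled subsets (sorted index lists) from a pool."""
    rng = random.Random(seed)
    return [
        sorted(rng.sample(range(pool_size), n_labeled))
        for _ in range(n_repeats)
    ]


def mean_f_score(subsets, train_and_score):
    """Average the score returned by `train_and_score` over all subsets."""
    return mean(train_and_score(subset) for subset in subsets)


def dummy_train_and_score(subset):
    # Placeholder only: returns a fake score so the sketch runs end to end.
    # A real run would train on `subset` (plus unlabeled data) and evaluate.
    return len(subset) / 10000


# Ten random draws of 250 labeled instances from a hypothetical pool of 5,000.
subsets = draw_labeled_subsets(pool_size=5000, n_labeled=250)
average = mean_f_score(subsets, dummy_train_and_score)
print(len(subsets), average)
```

Fixing the seed makes the ten draws reproducible, so different models can be compared on the same labeled subsets.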

Fig. 3.

The learning curve of the CNN model on the three corpora.

Highlights.

A semi-supervised method is proposed based on variational autoencoders (VAE) for biomedical relation extraction.

Cutting-edge neural networks are used to implement the semi-supervised VAE model.

The latent features are learnt from both the labeled and unlabeled data.

The proposed method effectively exploits the unlabeled data to improve the performance.

Acknowledgments

The authors thank I. Segura-Bedmar, P. Martínez, M. Herrero-Zazo, and T. Declerck for their support with the DDI 2013 corpus.

Funding

This work was supported by the NIH Intramural Research Program, National Library of Medicine.

Footnotes

Competing interests

The authors declare no competing interests.

References

- [1]. Fiorini N, Canese K, Starchenko G, Kireev E, Kim W, Miller V, Osipov M, Kholodov M, Ismagilov R, Mohan S, Best Match: New relevance search for PubMed, Plos Biology 16(8) (2018) e2005343.

- [2]. Fiorini N, Leaman R, Lipman DJ, Lu Z, How user intelligence is improving PubMed, Nature Biotechnology 36 (2018) 937.

- [3]. Wei CH, Peng Y, Leaman R, Davis AP, Mattingly CJ, Jiao L, Wiegers TC, Lu Z, Assessing the state of the art in biomedical relation extraction: overview of the BioCreative V chemical-disease relation (CDR) task, Database 2016(1) (2016) baw032–baw032.

- [4]. Singhal A, Simmons M, Lu Z, Text Mining Genotype-Phenotype Relationships from Biomedical Literature for Database Curation and Precision Medicine, Plos Computational Biology 12(11) (2016) e1005017.

- [5]. Pyysalo S, Airola A, Heimonen J, Björne J, Ginter F, Salakoski T, Comparative analysis of five protein-protein interaction corpora, BMC bioinformatics 9(3) (2008) S6.

- [6]. Herrero-Zazo M, Segura-Bedmar I, Martínez P, Declerck T, The DDI corpus: An annotated corpus with pharmacological substances and drug–drug interactions, Journal of biomedical informatics 46(5) (2013) 914–920.

- [7]. Airola A, Pyysalo S, Björne J, Pahikkala T, Ginter F, Salakoski T, All-paths graph kernel for protein-protein interaction extraction with evaluation of cross-corpus learning, BMC bioinformatics 9(11) (2008) S2.

- [8]. Kim S, Yoon J, Yang J, Park S, Walk-weighted subsequence kernels for protein-protein interaction extraction, BMC bioinformatics 11(1) (2010) 107.

- [9]. Segura-Bedmar I, Martinez P, de Pablo-Sánchez C, Using a shallow linguistic kernel for drug–drug interaction extraction, Journal of biomedical informatics 44(5) (2011) 789–804.

- [10]. Zhang Y, Lin H, Yang Z, Wang J, Li Y, A single kernel-based approach to extract drug-drug interactions from biomedical literature, PloS one 7(11) (2012) e48901.

- [11]. Rios A, Kavuluru R, Lu Z, Generalizing Biomedical Relation Classification with Neural Adversarial Domain Adaptation, Bioinformatics 34(17) (2018) 2973–2981.

- [12]. Peng Y, Rios A, Kavuluru R, Lu Z, Extracting chemical–protein relations with ensembles of SVM and deep learning models, Database 2018(1) (2018) bay073–bay073.

- [13]. Zhang Y, Lin H, Yang Z, Wang J, Zhang S, Yuanyuan L Yang, A Hybrid Model Based on Neural Networks for Biomedical Relation Extraction, Journal of Biomedical Informatics 81 (2018) 83.

- [14]. Chapman WW, Nadkarni PM, Hirschman L, D’Avolio LW, Savova GK, Uzuner O, Overcoming barriers to NLP for clinical text: the role of shared tasks and the need for additional creative solutions, Journal of the American Medical Informatics Association 18(5) (2011) 540–543.

- [15]. Li G, Wu C, Vijay-Shanker K, Noise Reduction Methods for Distantly Supervised Biomedical Relation Extraction, BioNLP 2017 (2017) 184–193.

- [16]. Bobić T, Klinger R, Thomas P, Hofmann-Apitius M, Improving distantly supervised extraction of drug-drug and protein-protein interactions, Proceedings of the Joint Workshop on Unsupervised and Semi-Supervised Learning in NLP, Association for Computational Linguistics, 2012, pp. 35–43.

- [17]. Roth B, Barth T, Wiegand M, Klakow D, A survey of noise reduction methods for distant supervision, Proceedings of the 2013 workshop on Automated knowledge base construction, ACM, 2013, pp. 73–78.

- [18]. Kingma DP, Mohamed S, Rezende DJ, Welling M, Semi-supervised learning with deep generative models, Advances in Neural Information Processing Systems, 2014, pp. 3581–3589.

- [19]. Li Y, Pan Q, Wang S, Peng H, Yang T, Cambria E, Disentangled variational auto-encoder for semi-supervised learning, arXiv preprint arXiv:1709.05047 (2017).

- [20]. Yang Z, Hu Z, Salakhutdinov R, Berg-Kirkpatrick T, Improved Variational Autoencoders for Text Modeling using Dilated Convolutions, in: Doina P, Yee Whye T (Eds.) Proceedings of the 34th International Conference on Machine Learning, PMLR, Proceedings of Machine Learning Research, 2017, pp. 3881–3890.

- [21]. Xu W, Sun H, Deng C, Tan Y, Variational Autoencoder for Semi-Supervised Text Classification, AAAI, 2017, pp. 3358–3364.

- [22]. Segura-Bedmar I, Martínez P, Herrero-Zazo M, Lessons learnt from the DDIExtraction-2013 shared task, Journal of biomedical informatics 51 (2014) 152–164.

- [23]. Krallinger M, Rabal O, Akhondi SA, Overview of the BioCreative VI chemical-protein interaction Track, Proceedings of the BioCreative VI Workshop. Bethesda, MD, USA, 2017, pp. 141–146.

- [24]. Pyysalo S, Ginter F, Heimonen J, Björne J, Boberg J, Järvinen J, Salakoski T, BioInfer: a corpus for information extraction in the biomedical domain, BMC bioinformatics 8(1) (2007) 50.

- [25]. Kringelum J, Kjaerulff SK, Brunak S, Lund O, Oprea TI, Taboureau O, ChemProt-3.0: a global chemical biology diseases mapping, Database 2016 (2016) bav123–bav123.

- [26]. Mikolov T, Chen K, Corrado G, Dean J, Efficient estimation of word representations in vector space, arXiv preprint arXiv:1301.3781 (2013).

- [27]. Zeng D, Liu K, Lai S, Zhou G, Zhao J, Relation Classification via Convolutional Deep Neural Network, COLING, 2014, pp. 2335–2344.

- [28]. Bojanowski P, Grave E, Joulin A, Mikolov T, Enriching word vectors with subword information, arXiv preprint arXiv:1607.04606 (2016).

- [29]. Krizhevsky A, Sutskever I, Hinton GE, Imagenet classification with deep convolutional neural networks, Advances in neural information processing systems, 2012, pp. 1097–1105.

- [30]. Collobert R, Weston J, Bottou L, Karlen M, Kavukcuoglu K, Kuksa P, Natural language processing (almost) from scratch, Journal of Machine Learning Research 12(Aug) (2011) 2493–2537.

- [31]. Hochreiter S, Schmidhuber J, Long short-term memory, Neural computation 9(8) (1997) 1735–1780.

- [32]. Tieleman T, Hinton G, Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude, COURSERA: Neural Networks for Machine Learning vol. 4 (2012).

- [33]. Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R, Dropout: A simple way to prevent neural networks from overfitting, The Journal of Machine Learning Research 15(1) (2014) 1929–1958.

- [34]. Zhang Y, Zheng W, Lin H, Wang J, Yang Z, Dumontier M, Drug–drug interaction extraction via hierarchical RNNs on sequence and shortest dependency paths, Bioinformatics 34(5) (2018) 828–835.

- [35]. Dai AM, Le QV, Semi-supervised sequence learning, Advances in Neural Information Processing Systems, 2015, pp. 3079–3087.

- [36]. Peng Y, Wei CH, Lu Z, Improving chemical disease relation extraction with rich features and weakly labeled data, 2016; 8:53.