Abstract

Background.

To study visual processing, it is necessary to precisely control visual stimuli while recording neural and behavioral responses. It is often important to present stimuli over a broad area of the visual field, which can be technically difficult.

New Method.

We present a simple geometry that can be used to display panoramic stimuli. A single digital light projector generates images that are reflected by mirrors onto flat screens that surround an animal. The same geometry can be used for both behavioral and neurophysiological measurements, so virtually identical stimuli can be presented in each. Moreover, this geometry permits light from the projector to be used to activate optogenetic tools.

Results.

Using this geometry, we presented panoramic visual stimulation to Drosophila in three paradigms. We presented drifting contrast gratings while recording walking and turning speed. We used the same projector to activate optogenetic channels during visual stimulation. Finally, we used two-photon microscopy to record responses of direction-selective cells to drifting gratings.

Comparison with Existing Method(s).

Existing methods have typically required custom hardware or curved screens, while this method requires only flat rear-projection screens and a digital light projector. The projector generates images in real time, so stimuli do not need to be pre-generated. Finally, while many setups are large, this geometry occupies a 30×20 cm footprint with a 25 cm height.

Conclusions.

This flexible geometry enables measurements of behavioral and neural responses to panoramic stimuli. This allows moderate throughput behavioral experiments with simultaneous optogenetic manipulation, with easy comparisons between behavior and neural activity using virtually identical stimuli.

Keywords: Visual stimuli, panoramic stimuli, psychophysics, behavior, physiology, electrophysiology, two-photon microscopy, Drosophila, visual processing, visual behaviors

1. Introduction

In visual neuroscience, it is critical to have precise control of visual stimuli. For many experiments, and particularly in behavioral experiments, this means controlling all or most of the visual field with a panoramic visual display. There are many ways to create panoramic visual displays, but these set-ups often require custom hardware. Here, we present a simple geometry that uses a single projector to generate a panoramic display. This method takes advantage of modern graphics processing units and software perspective correction, and can be used for moderate throughput behavioral experiments, optogenetics experiments, and physiology.

Panoramic visual stimuli can be displayed using many methods. The oldest methods use a rotating drum or nested drums, on which patterns are drawn or printed, in order to generate rigidly rotating patterns (Hassenstein and Reichardt, 1956). Modern experiments have often used many small arrays of LEDs with custom hardware to create cylindrical LED screens with very fast update rates (Reiser and Dickinson, 2008). Standard flat screen computer displays have also been arrayed about animals to act as panoramic displays (Bahl et al., 2013; Yamaguchi et al., 2008). Projectors have been used to generate panoramic stimuli by projecting onto spheres or cylinders from the outside (Stowers et al., 2017; Williamson et al., 2018) or inside (Harvey et al., 2009). Projectors have also been used with multiple flat screens, reorienting the image by using coherent fiber optic cables (Clark et al., 2011; Clark et al., 2014) or by using mirrors, in a geometry similar to the geometry described here (Cabrera and Theobald, 2013). There are trade-offs between these existing methods in update rates, expense, flexibility, and physical size.

Here, we present a simple, compact geometry that combines a small footprint with ease of use, fast update rates, and flexibility for different experiments. It uses a single projector and flat mirrors to generate a panoramic display. Because it is compact and relatively low-cost, many rigs may be used in parallel. Its geometry is flexible, so that it may be used for behavioral or physiological experiments, and the projector light itself may be used in optogenetic experiments. Importantly, we provide computer code to generate visual stimuli in this geometry. Thus, this apparatus may be widely useful in behavioral and physiological experiments in Drosophila and other small animals.

2. Method

2.1. Geometry of screens and mirrors

The geometry of our stimulus rig is shown in Figure 1A, while a 3-dimensional rendering is shown in Supplementary Movie 1. Three flat rear-projection screens surround the animal as three sides of a cube. This geometry occupies 270° in azimuthal angle and ~90° in vertical angle.

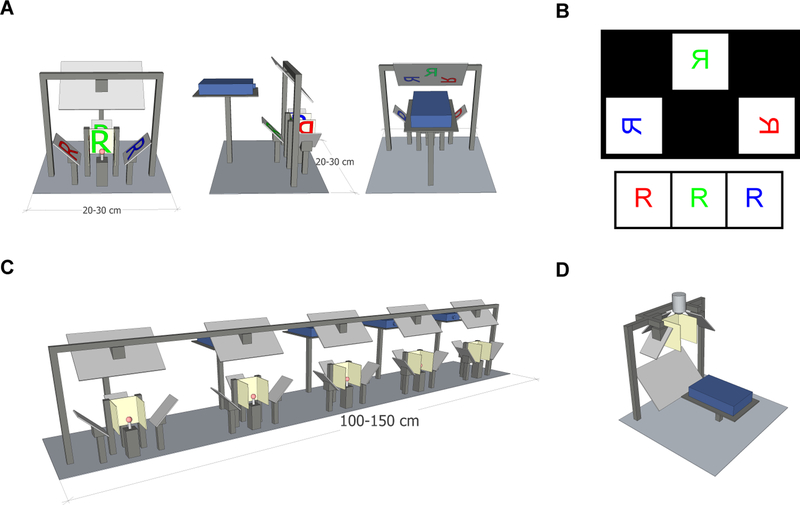

Figure 1.

Geometry of the stimulus setup. A) Front, side, and back views of the panoramic setup. Mirrors are light gray, screens are beige, and a ball treadmill is shown in red. The light path of the letter R is projected in red, blue, and green onto the 3 screens.

B) (Top) Diagram of the output of the projector from the setup in (A), if it were projected onto a front-projection screen, such as a piece of paper. (Bottom) Image that the fly would experience on the three screens arrayed about it.

C) Several of these rigs arranged in parallel. The compact setup allows multiple stations to be positioned next to each other.

D) An inverted version of the setup can be used with a two-photon microscope or electrophysiology rig. A microscope objective is shown above the panoramic screens.

The vertical angle can be made larger by using non-square screens with a larger vertical extent. Mirrors are placed at 45° from vertical to reflect light onto the back of each screen. This geometry has the advantage that all 3 screens are in the same focal plane for a projected image coming from above or below the screens. Thus, a single projector can be used to project images onto all 3 screens, forming a panorama about the animal. Because all the screens are flat, no specialized mirrors or optics are required. The screens can be set up so that they abut one another and there is negligible space between images (Fig. 1A). We used the rear projection material Da-Lite 81324 (Da-Lite, Warsaw, Indiana). This material is designed to scatter light into many off-axis angles, so that to an animal at the center of the apparatus the edge of each screen appears almost as bright as the screen center. Mirrors were mounted on adjustable optical mirror mounts to permit fine alignment of the images with the screens. STL files containing this three-dimensional schematic are available at https://github.com/ClarkLabCode/PanoramicStimulus.

2.2. Projecting visual stimuli

This stimulus geometry reflects light from a projector onto the three screens. In principle any projector may be used, but we have used the OEM LightCrafter series from Texas Instruments (Dallas, TX), which have several useful properties. In the firmware, these projectors can be programmed not to perform any hardware power-law scaling of intensity, so that software gamma correction is not required. Moreover, these projectors can project in three color channels at up to 240 frames per second, or in a single color channel at up to 1440 frames per second. As the frame rate increases, the bit depth of the images decreases. The maximum intensity of green light on the screen for this setup with a LightCrafter DLP3000 Evaluation Module was ~200 cd/m².
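The trade-off between frame rate and bit depth can be illustrated with simple arithmetic. As a rough sketch, assume a 60 Hz video input carrying 24 bits per pixel (8 bits in each of three color channels) and that those 24 bits are divided evenly among monochrome frames; the attainable monochrome frame rate is then (24 / bits per frame) × 60 Hz. These assumptions reproduce the 180, 360, 720, and 1440 fps rates used in this paper, but the exact modes available depend on the projector firmware.

```python
# Sketch: monochrome frame rate vs. bit depth for a DLP projector,
# ASSUMING a 60 Hz video input with 24 bits per pixel (8 bits x 3 colors).
INPUT_RATE_HZ = 60
BITS_PER_INPUT_PIXEL = 24


def monochrome_frame_rate(bits_per_frame: int) -> int:
    """Frames per second when each displayed frame uses `bits_per_frame` bits."""
    if BITS_PER_INPUT_PIXEL % bits_per_frame != 0:
        raise ValueError("bit depth must divide the 24 bits of one input pixel")
    frames_per_input_frame = BITS_PER_INPUT_PIXEL // bits_per_frame
    return INPUT_RATE_HZ * frames_per_input_frame


for bits in (8, 4, 2, 1):
    levels = 2 ** bits  # distinct intensity levels available in each frame
    print(f"{bits}-bit ({levels} levels): {monochrome_frame_rate(bits)} fps")
```

Halving the bit depth doubles the frame rate, which is why 1440 fps stimuli are limited to two intensity levels under these assumptions.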

By using a projector, this method also employs modern graphics processing units (GPUs), which can generate complex visual stimuli in real time, using common graphics programming languages. In the experiments presented here, we used an NVIDIA GeForce GTX 660 Ti, which was able to generate panoramic stimuli at 360 and 720 frames per second using the code provided. This sort of on-line stimulus generation can create random dot kinetograms (Britten et al., 1992), high-dimensional stochastic stimuli (Clark et al., 2014; Leong et al., 2016; Salazar-Gatzimas et al., 2016), or complex virtual worlds that are updated in response to experimental measurements (Creamer et al., 2018; Harvey et al., 2009).

2.3. Stimulus generation and code

We generated visual stimuli in this setup using Psychtoolbox in Matlab (Mathworks, Natick, MA), which uses OpenGL to render scenes quickly on the graphics card (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997). Our code is available with this manuscript at https://github.com/ClarkLabCode/PanoramicStimulus. It runs well on a Windows 7 PC with 16 GB RAM and an i7-4790K processor, using an NVIDIA GeForce GTX 660 Ti GPU. The code generates a virtual cylinder, which is then projected onto the 3 rear-projection panels, simulating the geometry of a cylindrical stimulus for an animal at the center. To generate visual stimuli at 180 frames per second (fps), which is typical for insect experiments, the code generates 3 frames, one for each color channel, which are sent to the projector at 60 fps. The projector displays the color channels sequentially but uses only one LED for all three, generating a monochrome stimulus at 180 fps. Our code can also be used to generate monochrome stimuli at 360 fps, 720 fps, and 1440 fps. Psychtoolbox can also be used on Linux or macOS systems with minimal changes to this code.
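The 180 fps scheme amounts to packing three consecutive grayscale frames into the red, green, and blue channels of a single 60 Hz video frame. The sketch below illustrates this with NumPy; it is not the released Matlab code, and the channel order (first frame in red, then green, then blue) is an assumption that should be checked against the display order of a particular projector.

```python
import numpy as np


def pack_frames_rgb(frames: np.ndarray) -> np.ndarray:
    """Pack three consecutive 8-bit grayscale frames into one RGB image.

    frames: array of shape (3, H, W), dtype uint8, in display order.
    Returns an (H, W, 3) uint8 image whose channels hold frames 0, 1, 2.
    ASSUMPTION: the projector displays the R, G, and B channels in that order.
    """
    frames = np.asarray(frames)
    if frames.ndim != 3 or frames.shape[0] != 3 or frames.dtype != np.uint8:
        raise ValueError("expected an array of three uint8 frames")
    return np.stack(list(frames), axis=-1)


# Example: a tiny 2x2 stimulus whose brightness steps up over three 180 fps frames.
frames = np.stack([np.full((2, 2), v, dtype=np.uint8) for v in (0, 128, 255)])
rgb = pack_frames_rgb(frames)
print(rgb.shape, rgb[0, 0].tolist())
```

Each 60 Hz video frame then carries three stimulus frames, and the projector's single LED turns the color sequence into a monochrome 180 fps stimulus.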

To generate each projected image, each scene is rendered 3 separate times, once for each of the three projection screens. To accommodate the projection and reflections onto the three screens, we programmed the appropriate geometry into the three windows shown in the individual projector images (Fig. 1B). Using a single projector rather than one for each screen ensures that there are no timing differences between the different screens.
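The geometry encoded in each window can be sketched as follows. For an animal at the center of the cube, a point at horizontal offset x and vertical offset y on a screen at perpendicular distance d lies at azimuth atan(x/d) relative to that screen's center, and the viewing ray through it crosses a cylinder of radius d at height d·y/√(x² + d²). The sketch assumes the left, front, and right screens are centered at azimuths −90°, 0°, and +90°, and it draws a vertical sine grating on the virtual cylinder; the function names and parameters are illustrative and are not taken from the released code.

```python
import numpy as np

# ASSUMED layout: azimuth (deg) of each screen's center as seen from the
# animal; positive azimuths are to the animal's right.
SCREEN_CENTERS_DEG = {"left": -90.0, "front": 0.0, "right": 90.0}


def screen_to_cylinder(x, y, d, screen):
    """Map points on one flat screen to (azimuth, height) on a virtual cylinder.

    x, y: offsets from the screen center (x to the viewer's right, y up),
    in the same units as d, the perpendicular distance from the animal to
    the screen. The cylinder has radius d and is centered on the animal.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    horizontal_dist = np.hypot(x, d)  # horizontal distance from animal to the point
    azimuth = SCREEN_CENTERS_DEG[screen] + np.degrees(np.arctan2(x, d))
    height = d * y / horizontal_dist  # where the viewing ray crosses the cylinder
    return azimuth, height


def render_screen(n_pix, screen, wavelength_deg=60.0, contrast=0.25, phase_deg=0.0):
    """Render a vertical sine grating on the cylinder, as drawn on one screen.

    The screen is taken as square with half-width equal to its distance
    (d = 1), so it spans +/-45 deg. Returns an n_pix x n_pix array in [0, 1].
    """
    coords = np.linspace(-1.0, 1.0, n_pix)
    x, y = np.meshgrid(coords, -coords)  # row 0 at the top of the screen
    azimuth, _height = screen_to_cylinder(x, y, 1.0, screen)
    # A vertical grating depends only on azimuth; a horizontal one would use _height.
    return 0.5 * (1 + contrast * np.sin(2 * np.pi * (azimuth - phase_deg) / wavelength_deg))


# The shared edge of the left and front screens maps to the same azimuth
# (-45 deg), so the rendered panorama has no seam there.
left_edge, _ = screen_to_cylinder(1.0, 0.0, 1.0, "left")
front_edge, _ = screen_to_cylinder(-1.0, 0.0, 1.0, "front")
print(left_edge, front_edge)
```

Rendering the same cylinder three times with different screen centers is what makes the three windows join continuously at the screen edges.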

2.4. Use with behavior

This rig is compatible with measurements of both flight and walking behavior. Flight behavior may be measured by shadows cast by flapping wings (Reiser and Dickinson, 2008) or by directly imaging the wings at a low frame rate (Maimon et al., 2010). To measure walking behaviors, a fly may also be suspended over a ball, which rotates freely underneath the fly as it walks (Buchner, 1976). In this setup, an optical computer mouse could read off the ball position (Clark et al., 2011; Clark et al., 2014; Creamer et al., 2018; Kain et al., 2013; Salazar-Gatzimas et al., 2016) or a dedicated camera could monitor the ball position (Chiappe et al., 2010; Moore et al., 2014; Seelig et al., 2010). For the results in this paper, we measured fly walking behaviors using a 6.3 mm diameter polystyrene ball and an optical mouse to read out the ball position (Fig. 1A).
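Converting the mouse readout into the fly's turning can be sketched as follows. An optical mouse reports displacement of the ball surface; for a ball of radius r, a surface displacement s corresponds to a rotation of s/r radians. The calibration constant `COUNTS_PER_MM` is hypothetical and would need to be measured for a given mouse and ball, and the sketch assumes that one mouse axis reports rotation about the vertical (yaw) axis.

```python
import math

BALL_DIAMETER_MM = 6.3  # polystyrene ball used in this paper
SAMPLE_RATE_HZ = 60     # mouse readout rate used in this paper
COUNTS_PER_MM = 40.0    # HYPOTHETICAL calibration; measure for each mouse


def yaw_velocity_deg_per_s(counts_per_sample: float) -> float:
    """Convert lateral mouse counts in one sample into yaw velocity (deg/s)."""
    radius_mm = BALL_DIAMETER_MM / 2
    surface_mm = counts_per_sample / COUNTS_PER_MM  # displacement of the ball surface
    angle_rad = surface_mm / radius_mm              # arc length divided by radius
    return math.degrees(angle_rad) * SAMPLE_RATE_HZ


# With this ball, 1 mm of surface motion is a rotation of about 18.2 degrees.
print(round(math.degrees(1.0 / (BALL_DIAMETER_MM / 2)), 1))
```

Because the rotation angle scales inversely with radius, a small ball like this one converts modest surface displacements into large, easily resolved rotation angles.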

This apparatus has a small footprint. For instance, with the 3-cm wide screens used here, each rig occupies a space ~20 cm wide by ~30 cm deep and 20–30 cm tall. In this geometry, the top mirror that reflects light downwards can be adjusted to varying heights, as long as the total path length from projector to screens remains constant. This small size means that many rigs can be set up on a single benchtop. In our setup, passive HDMI splitters split the signal from a single computer and sent it to five projectors, so that one computer generated visual stimuli on five rigs. Moreover, the rigs are moderately priced, with costs dominated by the single projector at each station. This permits labs to run moderate-throughput behavioral experiments, testing up to 5 or 10 flies simultaneously with the same visual stimuli (Fig. 1C) (Creamer et al., 2018).

Because stimuli are generated in real time, it is possible to use the measured behavior to modify the visual stimulus, creating virtual closed-loop experiments (Creamer et al., 2018; Dombeck and Reiser, 2012). With our code, using optical mice to read changes in ball position, the lag in such experiments (the time between when a behavior occurs and when its result is visible to the fly) was 45 ms.
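A closed-loop update of this kind can be sketched as rotating the virtual world opposite to the fly's measured turn, with the measured lag modeled as a fixed delay. The feedback gain of 1, the 180 fps update rate, and rounding the 45 ms lag to a whole number of frames (8 frames at 180 fps) are assumptions made for illustration; the class is hypothetical and not part of the released code.

```python
from collections import deque


class ClosedLoopYaw:
    """Rotate the virtual scene opposite to the fly's turning, with a delay.

    ASSUMPTIONS: the scene heading is updated once per stimulus frame, the
    feedback gain is 1 (a 10 deg turn rotates the scene 10 deg back), and
    the system lag is rounded to a whole number of frames.
    """

    def __init__(self, frame_rate_hz=180.0, lag_s=0.045, gain=1.0):
        self.delay_frames = round(lag_s * frame_rate_hz)
        self.gain = gain
        self.scene_heading_deg = 0.0
        # Turns that have been measured but are not yet visible to the fly.
        self.pending = deque([0.0] * self.delay_frames)

    def step(self, fly_turn_deg):
        """Record this frame's measured turn; apply the one from delay_frames ago."""
        self.pending.append(fly_turn_deg)
        delayed_turn = self.pending.popleft()
        self.scene_heading_deg -= self.gain * delayed_turn
        return self.scene_heading_deg


# A single 10 deg turn on the first frame becomes visible 8 frames later.
loop = ClosedLoopYaw()
headings = [loop.step(10.0 if i == 0 else 0.0) for i in range(10)]
print(loop.delay_frames, headings)
```

Modeling the lag explicitly makes it easy to test how sensitive a closed-loop behavior is to the 45 ms delay by simulating longer or shorter delays.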

2.5. Use with physiology

By positioning the projector below the animal and inverting the reflecting scheme, one may place this set of screens below a physiology stage, permitting this screen geometry to be used with electrophysiology or microscopy (Fig. 1D). In our physiology rigs, which used Olympus microscope bodies (Scientifica, UK), we had 24–27 cm of clearance between the objective and the optical table, which provided enough clearance for this rig. Condensers and any other items below the objectives must be removed, as is also typically required when visual stimuli are presented on single screens below the objective (Behnia et al., 2014; Freifeld et al., 2013). Using the same screen geometry for physiology means that identical visual stimuli may be presented, using identical presentation code, in behavior and in physiology. Since physiological experiments often require a slightly different head orientation, this difference may be corrected in the stimulus code by changing the pitch of the virtual cylinder (Creamer et al., 2018; Salazar-Gatzimas et al., 2018; Salazar-Gatzimas et al., 2016).
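Changing the pitch of the virtual cylinder amounts to rotating each viewing direction about the animal's left-right axis before looking up where that ray meets the cylinder. A minimal sketch, assuming a right-handed frame with x to the animal's right, y up, and z straight ahead, and a sign convention (chosen here for illustration) in which positive pitch moves a straight-ahead ray above the cylinder's equator:

```python
import numpy as np


def pitch_direction(direction, pitch_deg):
    """Rotate a viewing direction about the animal's left-right (x) axis.

    Frame (ASSUMED): x = animal's right, y = up, z = straight ahead.
    Positive pitch_deg turns +z toward +y, so a straight-ahead ray samples
    the virtual cylinder above its equator.
    """
    p = np.radians(pitch_deg)
    rot_x = np.array([
        [1.0, 0.0, 0.0],
        [0.0, np.cos(p), np.sin(p)],
        [0.0, -np.sin(p), np.cos(p)],
    ])
    return rot_x @ np.asarray(direction, dtype=float)


def cylinder_coords(direction):
    """Azimuth (deg) and height on a unit-radius cylinder hit by a ray."""
    x, y, z = direction
    return np.degrees(np.arctan2(x, z)), y / np.hypot(x, z)


# Example: with a hypothetical 10 deg pitch offset, the straight-ahead ray
# lands at height tan(10 deg), about 0.176, on the unit cylinder.
az, h = cylinder_coords(pitch_direction([0.0, 0.0, 1.0], 10.0))
print(round(float(az), 6), round(float(h), 3))
```

Because only the lookup direction changes, the same stimulus description can be reused unchanged between the behavioral and physiological head orientations.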

2.6. Combination with optogenetics

In this geometry, the projector typically projects onto the three screens and projects no light at the locations in the image that would hit the animal itself. The light intensity from the projector measured at the animal was ~10 μW/mm² in the green channel, which is within the range needed to activate some modern light-gated channelrhodopsins (Dawydow et al., 2014; Klapoetke et al., 2014; Mohammad et al., 2017). We demonstrate that by projecting light directly onto the fly, this setup may be used to activate optogenetic channels (see Results). We demonstrate this by measuring behavior, but in principle, this could work for physiology experiments as well.
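Adding the optogenetic light can be sketched as lighting an extra region of the projector image that lands on the fly rather than on a screen, only on light-on trials (as in Fig. 3B). In the sketch below, the frame size and the square's pixel coordinates are hypothetical placeholders; on a real rig they would be found by calibrating where the projector image falls on the animal.

```python
import numpy as np

# HYPOTHETICAL values; the real square location depends on rig alignment.
FRAME_SHAPE = (684, 608)                           # rows, columns of the projector image
OPTO_SQUARE = (slice(330, 354), slice(292, 316))   # region imaged onto the fly


def add_opto_light(frame: np.ndarray, light_on: bool, level: int = 255) -> np.ndarray:
    """Return a copy of `frame` with the fly-facing square lit on light-on trials.

    On light-off trials the square is set to zero, so no projector light
    reaches the animal directly.
    """
    out = frame.copy()
    out[OPTO_SQUARE] = level if light_on else 0
    return out


frame = np.full(FRAME_SHAPE, 50, dtype=np.uint8)  # stand-in for a rendered stimulus
lit = add_opto_light(frame, light_on=True)
dark = add_opto_light(frame, light_on=False)
```

Because the optogenetic light is drawn into the same frames as the visual stimulus, it is synchronized with the stimulus by construction.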

2.7. Filtering with two-photon microscopy

Because a projector is used, all the light originates at one source and is relatively well collimated as it leaves the projector optics. This means that high-quality optical filters can be placed in front of the projector to sculpt the spectrum of the output. In turn, this makes the projector easily compatible with scanning two-photon microscopy (Denk et al., 1990), since the spectrum from the projector can be filtered to avoid overlap with the emission spectrum of green or red fluorophores (Clark et al., 2011). To run these experiments, we enclosed the projector in a light-tight box with a window covered by a bandpass filter. By placing a 514/30 filter in series with a 512/25 filter (Semrock, Rochester, New York) as emission filters in the microscope, and a 565/24 filter in front of the projector, we have been able to use this screen geometry with little bleed-through from the stimulus into the images and with ~80 cd/m² on the screens (Creamer et al., 2018; Salazar-Gatzimas et al., 2018; Salazar-Gatzimas et al., 2016).
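Why this combination limits bleed-through can be checked with a rough calculation. The filter names give center wavelength and bandwidth in nm; treating each passband as an ideal rectangle (an approximation, since real filters have sloped edges and finite out-of-band blocking), the projector filter and the emission filters share no wavelengths:

```python
def passband(name: str) -> tuple[float, float]:
    """Convert 'center/width' (nm) into an idealized (low, high) passband."""
    center, width = (float(v) for v in name.split("/"))
    return center - width / 2, center + width / 2


def overlap_nm(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Width (nm) of the wavelength range shared by two passbands."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


projector = passband("565/24")                       # 553-577 nm
emission = [passband("514/30"), passband("512/25")]  # 499-529 and 499.5-524.5 nm
# Filters in series transmit only the wavelengths common to both.
emission_band = (max(lo for lo, _ in emission), min(hi for _, hi in emission))
print(emission_band, overlap_nm(projector, emission_band))
```

Under this idealization there is a gap of about 28.5 nm between the edge of the emission band and the projector band; in practice, residual bleed-through is set by the filters' out-of-band blocking rather than by passband overlap.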

2.8. Data acquisition and analysis

In the example data detailed in the Results, we used the following flies and analyses. For behavior, we measured isogenic wildtype OregonR flies (Gohl et al., 2011) raised at 25°C in a 12-hour-light, 12-hour-dark cycle at 50% relative humidity. Behavioral rigs were heated to 36°C, which made them compatible with thermogenetic tools and increased fly activity (Creamer et al., 2018; Salazar-Gatzimas et al., 2016). Behavior was also tested at 50% relative humidity. Data on ball rotation were collected by optical mice at 60 Hz and analyzed with custom code in Matlab (Mathworks, Natick, MA).

For the optogenetic manipulation experiments, we expressed GtACR, an anion channelrhodopsin (Mohammad et al., 2017), using the driver R42F06-GAL4, which expresses in T4 and T5 cells (Maisak et al., 2013). After eclosion and before testing, flies were raised for 48 hours on food containing all-trans-retinal (ATR) at 25°C in a 12-hour-light, 12-hour-dark cycle at 50% relative humidity (de Vries and Clandinin, 2013). Behavioral measurements were collected and analyzed as with the wildtype behavior. No surgery was necessary for these experiments, since the projector light could activate channels through the cuticle.

For the two-photon imaging experiments, images were collected at ~13 Hz on a commercial microscope (Scientifica, Uckfield, United Kingdom) using a MaiTai eHP laser (Spectra-Physics, San Jose, California) tuned to 930 nm and delivering less than ~30 mW, measured at the sample. Flies expressed the calcium indicator GCaMP6f (Chen et al., 2013) in both T4 and T5 neurons under the control of R42F06-GAL4 (Maisak et al., 2013). In order to record from these neurons, we performed a surgery to expose the optic ganglia by removing the cuticle on the posterior face of the head capsule. Signals from T4 and T5 neurons were distinguished as previously described (Salazar-Gatzimas et al., 2016). Using custom Matlab code, signals were first averaged across regions of interest and then across flies to generate mean traces (Creamer et al., 2018).
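The two-stage averaging can be sketched as follows: each fly contributes one trace, the mean over its regions of interest, so that flies with many ROIs do not dominate the average, and the standard error is then computed across flies. The data layout (a list with one ROI-by-time array per fly) is an assumption for illustration, not the format of the authors' Matlab code.

```python
import numpy as np


def fly_mean_and_sem(rois_per_fly):
    """Average ROI traces within each fly, then across flies.

    rois_per_fly: list with one array per fly, shape (n_rois, n_timepoints).
    Returns (mean trace, SEM trace) across flies; needs at least two flies.
    """
    fly_traces = np.array([np.mean(rois, axis=0) for rois in rois_per_fly])
    n_flies = fly_traces.shape[0]
    mean = fly_traces.mean(axis=0)
    sem = fly_traces.std(axis=0, ddof=1) / np.sqrt(n_flies)
    return mean, sem


# Example: fly A has three ROIs at 1.0 and fly B one ROI at 3.0. The
# fly-level mean is 2.0, whereas pooling all four ROIs would give 1.5.
fly_a = np.ones((3, 4))
fly_b = np.full((1, 4), 3.0)
mean, sem = fly_mean_and_sem([fly_a, fly_b])
```

Treating the fly, rather than the ROI, as the unit of replication keeps the SEM from being artificially shrunk by many correlated ROIs within one animal.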

Throughout, statistics were computed using the non-parametric rank-sum test. Means and standard errors of the mean are displayed.
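For reference, the Wilcoxon rank-sum test pools and ranks the values from two independent groups and asks whether one group's ranks are unusually high or low. The sketch below uses the large-sample normal approximation, gives tied values their average rank, and applies no tie correction to the variance; library routines such as SciPy's `scipy.stats.ranksums` are the usual choice in practice, and exact tests are preferable for very small samples.

```python
from statistics import NormalDist


def average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend over a run of ties
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    No tie correction is applied to the variance. Returns (z, p).
    """
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first group
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = (n1 * n2 * (n1 + n2 + 1) / 12) ** 0.5
    z = (w - mean_w) / sd_w
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Two completely separated groups of five give z of about -2.61 and p of about 0.009.
z, p = rank_sum_test([1, 2, 3, 4, 5], [6, 7, 8, 9, 10])
```

Because the test uses only ranks, it does not assume normally distributed responses, which suits turning and walking measures with heavy tails.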

3. Results

3.1. Moderate throughput behavioral measurements

To show how this rig may be used with behavioral measurements (Fig. 2AB), we presented wildtype Drosophila with sine-wave gratings rotating at different temporal frequencies. These gratings elicit rotational optomotor responses and walking speed modulations in this rig (Creamer et al., 2018). We presented flies with sine-wave gratings with a 60° spatial wavelength and a constant temporal frequency for 1 s. The gratings had a Weber contrast of 25%. In response, the flies turned in the direction of stimulus motion and slowed down (Fig. 2CD). Flies began to turn immediately after stimulus onset and continued to turn for the duration of the stimulus, though turning strength decreased over time. Likewise, flies slowed at stimulus onset and continued to walk more slowly for the duration of the presentation. Next, we measured tuning curves for turning and slowing by averaging the responses over the presentation. We did this for temporal frequencies from 0.25 to 32 Hz (Fig. 2EF). Under these conditions, flies turned maximally to stimuli with a temporal frequency of ~8 Hz and slowed maximally to stimuli with a temporal frequency of ~16 Hz.
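A drifting grating of this kind can be written as a contrast modulation about the mean luminance, I(θ, t) = I₀[1 + c·sin(2π(θ/λ − f·t))], where θ is azimuth, λ the spatial wavelength, f the temporal frequency, and c the contrast; the pattern moves at an angular speed of λ·f. The sketch below normalizes the mean luminance to 1 and is illustrative rather than the stimulus code used for Fig. 2.

```python
import math


def grating_intensity(azimuth_deg, t_s, wavelength_deg=60.0, temporal_freq_hz=2.0,
                      contrast=0.25, mean=1.0):
    """Luminance of a sine grating drifting toward increasing azimuth."""
    phase = 2 * math.pi * (azimuth_deg / wavelength_deg - temporal_freq_hz * t_s)
    return mean * (1 + contrast * math.sin(phase))


def drift_speed_deg_per_s(wavelength_deg, temporal_freq_hz):
    """Angular speed of the pattern: one wavelength passes per temporal cycle."""
    return wavelength_deg * temporal_freq_hz


# Speeds spanned by the tuning curve for a 60 deg grating.
speeds = {f: drift_speed_deg_per_s(60.0, f) for f in (0.25, 2.0, 8.0, 16.0, 32.0)}
print(speeds)
```

For a 60° wavelength, the tested range of 0.25 to 32 Hz corresponds to 15°/s up to 1920°/s, and the ~8 Hz turning peak corresponds to 480°/s.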

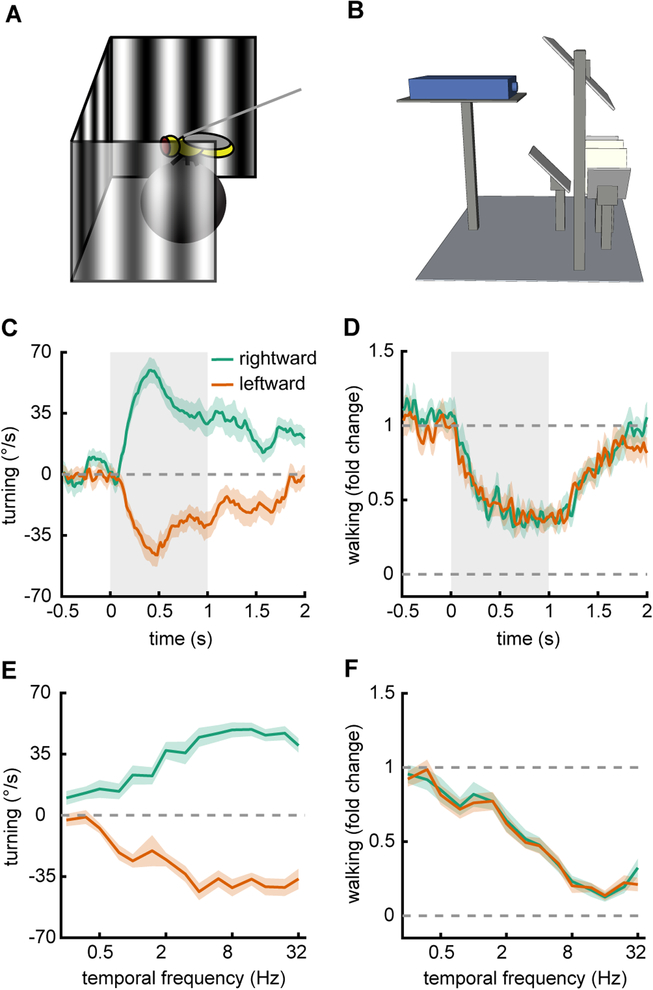

Figure 2.

Moderate throughput optomotor behavior measurements in 40 individual flies. A) Schematic of a fly on a ball being presented with drifting sine wave gratings.

B) Diagram of the full set up as in Figure 1A.

C) Average rotational time traces for flies presented with sine-wave gratings that move leftward or rightward for durations of 1 second (spatial wavelength 60°, temporal frequency 2 Hz, contrast 25%). Shaded regions represent ± SEM.

D) Same as in (C), but measuring the normalized walking speed of the flies.

E) Rotational responses of flies, averaged over the presentation duration, plotted for drifting gratings with several different temporal frequencies.

F) Same as in (E), but measuring the relative walking speed of the flies.

To obtain these measurements, we ran 40 flies in four groups of 10, which were run in parallel on 10 of these panoramic stimulus rigs. Thus, the data presented here took roughly 4 hours to obtain, showing that this setup can be used to perform moderate-throughput individual psychophysics experiments in flies.

3.2. Optogenetic silencing during behavior

To show that the projector in this rig can be used to optogenetically manipulate neural activity, we expressed GtACR, a hyperpolarizing anion channelrhodopsin (Mohammad et al., 2017), in the neurons T4 and T5 in Drosophila. These neurons are the earliest known direction-selective neurons in the fly (Maisak et al., 2013), and their activity is required for direction-selective behaviors and downstream direction-selective neural signals (Creamer et al., 2018; Maisak et al., 2013; Salazar-Gatzimas et al., 2016; Schnell et al., 2012). We presented rotational visual stimuli to flies (Fig. 3A), but this time we shone green light on the fly during some trials and not others (Fig. 3BC).

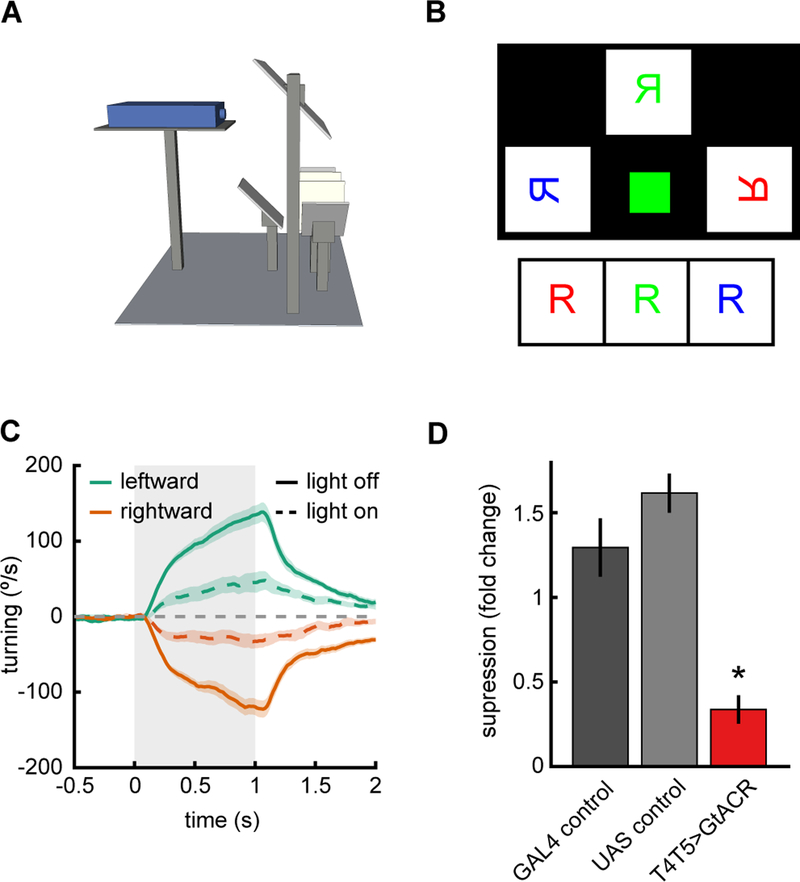

Figure 3.

Optogenetic silencing during visual stimulus presentation. A) Schematic of setup as in Figure 1A.

B) Diagram of the output of the projector if it were projected onto a single front projection screen. The green square projects light directly onto the fly in order to activate optogenetic tools.

C) Average rotational response of flies to leftward and rightward stimuli with (dotted lines) and without (solid lines) the additional light stimulation. The drifting contrast gratings had a wavelength of 45°, temporal frequency of 4 Hz, and contrast of 50%. Shaded region represents ±1 SEM.

D) Fold change in average turning between light on and light off conditions. Asterisk marks significance from both controls at p<0.001.

We found that when light was used to activate GtACR in T4 and T5, the flies did not turn as strongly as genetic control flies in which GtACR was not expressed (Fig. 3CD). This demonstrates that this projector setup can be used to generate visual stimuli for flies while it is simultaneously used to inactivate neurons via optogenetic methods. These data were acquired for behavior, but in principle, the projector may be used to stimulate optogenetic channels in an electrophysiology or imaging setup as well. For imaging, care would need to be taken to appropriately filter the projector light for both stimulus and optogenetic purposes.

3.3. Two-photon imaging

To demonstrate that this rig could be used in combination with neurophysiological measurements, we recorded two-photon movies of the direction-selective neurons T4 and T5 during panoramic visual stimulation (Fig. 4AB). To measure activity, we expressed the calcium indicator GCaMP6f in the neurons T4 and T5 (Chen et al., 2013; Maisak et al., 2013). We then presented drifting sine-wave gratings with a 60° wavelength at various temporal frequencies, recorded from T4 and T5, and selected T4 and T5 regions of interest as previously described (Salazar-Gatzimas et al., 2016). T4 and T5 responded direction-selectively across temporal frequencies, with sustained responses for the duration of the stimulus (Fig. 4CD). The neurons responded most strongly to frequencies in the 1 Hz to 4 Hz range.

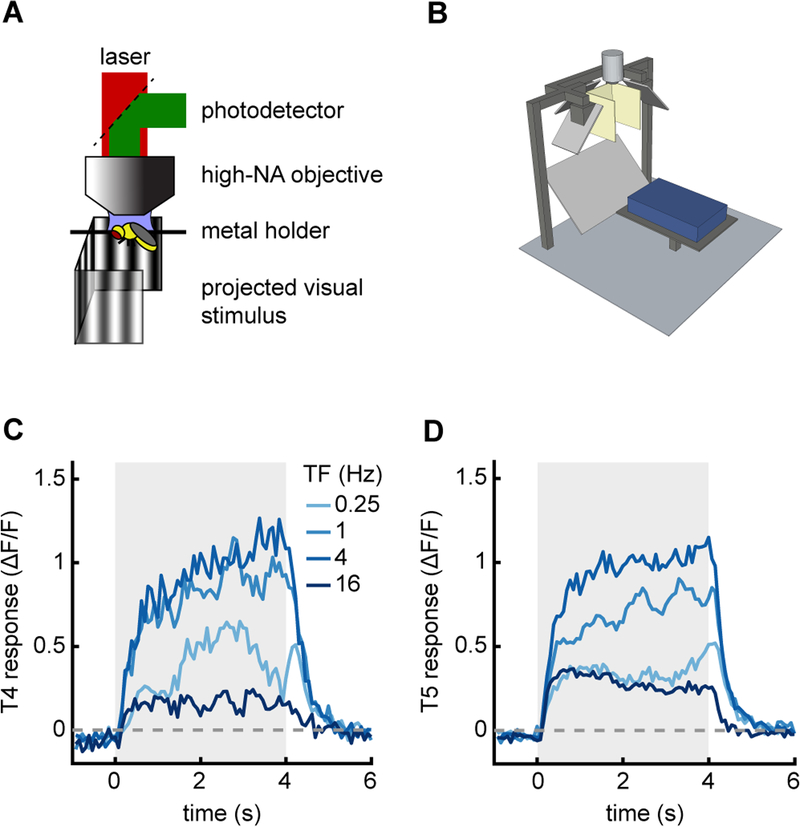

Figure 4.

Stimulus device used with two-photon microscopy. A) Diagram of two-photon setup.

B) Model of panoramic display under microscope objective.

C) Average response of T4 cells when presented with drifting sine wave gratings of different temporal frequencies (wavelength 60° and contrast 50%). The temporal frequency of the stimulus is denoted by line color. Stimulus presentation lasted for 4 seconds.

D) As in (C), but measured from T5 cells.

Because we use the same projector setup for both behavior and imaging, the stimulus used in this experiment is identical to that used in Figure 2, except that the contrast was increased from 25% to 50%. The filters at the projector lens meant that there was negligible bleed-through from the visual stimulus into the imaging channels. This panoramic geometry can therefore be used in concert with two-photon microscopy. A similar stimulus geometry could also be combined with electrophysiological rigs, which have similar steric constraints to the two-photon setup (Behnia et al., 2014; Joesch et al., 2008).

4. Discussion

4.1. Similarity to measurements using other stimulus devices

The behavioral and physiological responses we measured with this panoramic display were broadly similar to those measured using alternative setups. The behavioral results we measured are similar to previous work in walking flies, both using alternative projector optics and using LED arenas (Clark et al., 2011; Silies et al., 2013; Strother et al., 2018) (Fig. 2). They are also similar to classical measurements of single fly optomotor behaviors (Buchner, 1976). Moreover, the reduced turning resulting from GtACR activation matched results obtained using fiber optics to activate these channels in the same set of neurons (Mauss et al., 2017) (Fig. 3). Finally, the physiological measurements in the neurons T4 and T5 obtained with this setup match previous ones obtained using LED arenas (Maisak et al., 2013) (Fig. 4).

4.2. Trade-offs in panoramic visual stimulus rigs

What are the trade-offs to consider when building a panoramic visual stimulus arena? Some of the most important considerations are cost and ease of use, physical size, and spatiotemporal resolution.

Cost and ease of use.

Many rigs designed for visual stimulus presentation require custom hardware that is expensive or time-consuming to make and use. For Drosophila experiments, there are two primary factors that lower the cost and improve ease of use for the rig described here. First, this geometry permits the use of a standard projector and simple flat screens, since all screens are in the same focal plane. This eliminates the need for specialized curved screens and mirrors used elsewhere (Harvey et al., 2009; Williamson et al., 2018), as well as specialized hardware (Reiser and Dickinson, 2008). Because this geometry relies on commercial projectors and flat mirrors and screens, its cost is more modest than curved or LED-panel-based geometries.

Second, in this configuration, the stimulus presented on the screens is updated using an HDMI signal, rather than being pre-generated and stored, as in LED array systems (Reiser and Dickinson, 2008). This means that the immense computational power of GPUs can be exploited in generating the stimulus. For instance, in the software accompanying this paper, the GPU performs the on-line projection and perspective-warping of virtual worlds. This allows one to generate complex stimuli, like those in closed loop virtual reality arenas (Creamer et al., 2018; Harvey et al., 2009; Stowers et al., 2017), as well as high-dimensional stimuli, such as white noise, which can be generated for long durations without repetition. More generally, this allows visual stimuli to take full advantage of an array of software designed to generate visual scenes with ease.

Physical size.

The method we have described here has a small footprint, comparable to LED arenas (Reiser and Dickinson, 2008). It is smaller than rigs that use coherent fiber optic bundles to bend the light (Clark et al., 2011; Clark et al., 2014), since those bundles often have a substantial minimum radius of curvature and have footprints of ~50 × 50 cm or more. The geometry described here is also smaller than full-sized monitors used to create panoramic stimuli, in which arenas are the size of the monitors (Bahl et al., 2013; Yamaguchi et al., 2008).

The relatively small area occupied by this geometry is important because it makes it feasible to build an array of these arenas in a lab, as well as to use them in concert with physiology rigs. In our lab, it has been possible to put five arenas on a single lab bench, enabling moderate-throughput single-fly psychophysics (Creamer et al., 2018).

Spatiotemporal resolution.

Compared to human vision, Drosophila has relatively low spatial resolution and high temporal resolution. Drosophila has a flicker fusion rate above 100 Hz (Cosens and Spatz, 1978), so it is important that the display updates frequently. Drosophila ommatidia accept light from approximately 5° of visual space and are separated by approximately 5° (Stavenga, 2003), serving to limit the resolution of visual processing (Buchner, 1976; Götz, 1964).

LED-panel arenas typically have a spatial resolution of a few degrees and update rates of 100s of Hz (Reiser and Dickinson, 2008), making them well-tuned for Drosophila experiments. The projectors we used can have update rates of 360 fps and higher, giving them temporal resolution comparable to the LED-panel screens in wide use (Reiser and Dickinson, 2008). In addition, using the geometry described here, we achieved a spatial resolution of ~0.3° per pixel, making this geometry compatible with animals that have high-acuity vision (Land, 1997; Rigosi et al., 2017; Srinivasan and Lehrer, 1984).
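Because the screens are flat, the angle subtended by a pixel is not uniform across each screen. From θ = atan(x/d), a pixel of pitch p at horizontal offset x on a screen at perpendicular distance d subtends approximately p·d/(d² + x²) radians, so pixels at a screen's edge (x = d, 45° from its center) subtend half the angle of those at its center. The example below uses the 3-cm screen width from Section 2.4 (so d = 15 mm) and a hypothetical 300 pixels across each screen, chosen only to show how an average of ~0.3° per pixel can arise; the pixel count is not a measured property of this rig.

```python
import math


def pixel_angle_deg(x_mm, d_mm, pitch_mm):
    """Approximate angle (deg) subtended by one pixel at horizontal offset x_mm.

    Flat screen at perpendicular distance d_mm; from theta = atan(x / d),
    d(theta)/dx = d / (d**2 + x**2).
    """
    return math.degrees(pitch_mm * d_mm / (d_mm ** 2 + x_mm ** 2))


# HYPOTHETICAL example: a 30 mm screen 15 mm from the fly (spanning 90 deg)
# with 300 pixels across it.
d, width, n_pixels = 15.0, 30.0, 300
pitch = width / n_pixels
print(f"center: {pixel_angle_deg(0, d, pitch):.2f} deg")  # 0.38
print(f"edge:   {pixel_angle_deg(d, d, pitch):.2f} deg")  # 0.19
print(f"mean:   {90 / n_pixels:.2f} deg")                 # 0.30
```

Even at the screen centers, where pixels are coarsest, this hypothetical resolution remains far finer than the ~5° sampling of Drosophila ommatidia.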

4.3. Combination with optogenetics

We showed that this geometry can use the projector to directly activate GtACR expressed in fly neurons (Fig. 3). This makes it an easy, convenient way to combine optogenetic manipulations with visual stimuli. The light generated by the projectors was ~10 μW/mm², which was sufficient to activate GtACR (Mohammad et al., 2017) (Fig. 3). This should also be intense enough to activate other channelrhodopsins engineered with enhanced sensitivity, such as Chrimson (Klapoetke et al., 2014), ReaChR (Lin et al., 2013), and ChR2-XXL (Dawydow et al., 2014). It is unlikely to be bright enough to reliably elicit spikes using ChR2 (Boyden et al., 2005). However, optogenetic activation and inactivation depend not just on the effector, but also on the expression level and the cellular input resistance. This means that tools will have variable efficacy, depending on both driver strength and neuron identity (Jenett et al., 2012).

If the projector light intensity is not sufficient for a particular tool and driver, other standard methods for optogenetic activation are still compatible with this geometry. For instance, optical fibers or lasers could still be used to pipe in bright light (Mauss et al., 2017). The setup described here simply provides another option for optogenetic stimulation that requires minimal modifications and can be easily synchronized with visual stimuli. Finally, using a brighter projector could make this geometry compatible with a broader range of optogenetic tools.

4.4. Applications beyond insects

The method described here presents high-resolution panoramic images at high frame rates, so it may be suitable for many species. In particular, mouse behavioral and imaging experiments have benefited from panoramic displays, but these have tended to require specialized curved screens and specialized curved mirrors for projection (Harvey et al., 2009). The flat screens in this geometry make the optics simpler and are compatible with simultaneous imaging setups. Since typical imaging experiments in mice use screens that occupy only a fraction of the animal's field of view, panoramic displays with the presented geometry would allow experiments to test a broader range of naturalistic stimuli.

Supplementary Material

Highlights:

A simple geometry permits panoramic visual stimulation using flat screens

Small physical footprint allows multiple rigs on single bench

Easily adapted for behavior, imaging, or electrophysiology

Enables optogenetic stimulation in Drosophila without additional modifications

Acknowledgements

This study used stocks obtained from the Bloomington Drosophila Stock Center (NIH P40OD018537). MSC was supported by an NSF GRF. This project was supported by NIH Training Grants T32 NS41228. DAC and this research were supported by NIH R01EY026555, NIH P30EY026878, NSF IOS1558103, a Searle Scholar Award, a Sloan Fellowship in Neuroscience, the Smith Family Foundation, and the E. Matilda Ziegler Foundation.


Citations

- Bahl A, Ammer G, Schilling T, and Borst A (2013). Object tracking in motion-blind flies. Nat Neurosci 16, 730–738.

- Behnia R, Clark DA, Carter AG, Clandinin TR, and Desplan C (2014). Processing properties of ON and OFF pathways for Drosophila motion detection. Nature 512, 427–430.

- Boyden ES, Zhang F, Bamberg E, Nagel G, and Deisseroth K (2005). Millisecond-timescale, genetically targeted optical control of neural activity. Nat Neurosci 8, 1263.

- Brainard DH (1997). The psychophysics toolbox. Spat Vis 10, 433–436.

- Britten KH, Shadlen MN, Newsome WT, and Movshon JA (1992). The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci 12, 4745–4765.

- Buchner E (1976). Elementary movement detectors in an insect visual system. Biol Cybern 24, 85–101.

- Cabrera S, and Theobald JC (2013). Flying fruit flies correct for visual sideslip depending on relative speed of forward optic flow. Front Behav Neurosci 7, 76.

- Chen T-W, Wardill TJ, Sun Y, Pulver SR, Renninger SL, Baohan A, Schreiter ER, Kerr RA, Orger MB, and Jayaraman V (2013). Ultrasensitive fluorescent proteins for imaging neuronal activity. Nature 499, 295–300.

- Chiappe ME, Seelig JD, Reiser MB, and Jayaraman V (2010). Walking modulates speed sensitivity in Drosophila motion vision. Curr Biol 20, 1470–1475.

- Clark DA, Bursztyn L, Horowitz MA, Schnitzer MJ, and Clandinin TR (2011). Defining the computational structure of the motion detector in Drosophila. Neuron 70, 1165–1177.

- Clark DA, Fitzgerald JE, Ales JM, Gohl DM, Silies M, Norcia AM, and Clandinin TR (2014). Flies and humans share a motion estimation strategy that exploits natural scene statistics. Nat Neurosci 17, 296–303.

- Cosens D, and Spatz HC (1978). Flicker fusion studies in the lamina and receptor region of the Drosophila eye. J Insect Physiol 24, 587–594.

- Creamer MS, Mano O, and Clark DA (2018). Visual control of walking speed in Drosophila. Neuron 100, 1460–1473.

- Dawydow A, Gueta R, Ljaschenko D, Ullrich S, Hermann M, Ehmann N, Gao S, Fiala A, Langenhan T, and Nagel G (2014). Channelrhodopsin-2–XXL, a powerful optogenetic tool for low-light applications. Proc Natl Acad Sci USA 111, 13972–13977.

- de Vries SE, and Clandinin T (2013). Optogenetic stimulation of escape behavior in Drosophila melanogaster. J Vis Exp.

- Denk W, Strickler JH, and Webb WW (1990). Two-photon laser scanning fluorescence microscopy. Science 248, 73–76.

- Dombeck DA, and Reiser MB (2012). Real neuroscience in virtual worlds. Curr Opin Neurobiol 22, 3–10.

- Freifeld L, Clark DA, Schnitzer MJ, Horowitz MA, and Clandinin TR (2013). GABAergic lateral interactions tune the early stages of visual processing in Drosophila. Neuron 78, 1075–1089.

- Gohl DM, Silies MA, Gao XJ, Bhalerao S, Luongo FJ, Lin CC, Potter CJ, and Clandinin TR (2011). A versatile in vivo system for directed dissection of gene expression patterns. Nat Methods 8, 231–237.

- Götz K (1964). Optomotorische Untersuchung des visuellen Systems einiger Augenmutanten der Fruchtfliege Drosophila. Biol Cybern 2, 77–92.

- Harvey CD, Collman F, Dombeck DA, and Tank DW (2009). Intracellular dynamics of hippocampal place cells during virtual navigation. Nature 461, 941–946.

- Hassenstein B, and Reichardt W (1956). Systemtheoretische Analyse der Zeit-, Reihenfolgen- und Vorzeichenauswertung bei der Bewegungsperzeption des Rüsselkäfers Chlorophanus. Z Naturforsch 11, 513–524.

- Jenett A, Rubin GM, Ngo TTB, Shepherd D, Murphy C, Dionne H, Pfeiffer BD, Cavallaro A, Hall D, Jeter J, et al. (2012). A GAL4-driver line resource for Drosophila neurobiology. Cell Rep.

- Joesch M, Plett J, Borst A, and Reiff D (2008). Response properties of motion-sensitive visual interneurons in the lobula plate of Drosophila melanogaster. Curr Biol 18, 368–374.

- Kain J, Stokes C, Gaudry Q, Song X, Foley J, Wilson R, and de Bivort B (2013). Leg-tracking and automated behavioural classification in Drosophila. Nat Commun 4, 1910.

- Klapoetke NC, Murata Y, Kim SS, Pulver SR, Birdsey-Benson A, Cho YK, Morimoto TK, Chuong AS, Carpenter EJ, and Tian Z (2014). Independent optical excitation of distinct neural populations. Nat Methods 11, 338–346.

- Kleiner M, Brainard D, Pelli D, Ingling A, Murray R, and Broussard C (2007). What’s new in Psychtoolbox-3. Perception 36, 1.

- Land MF (1997). Visual acuity in insects. Annu Rev Entomol 42, 147–177.

- Leong JCS, Esch JJ, Poole B, Ganguli S, and Clandinin TR (2016). Direction selectivity in Drosophila emerges from preferred-direction enhancement and null-direction suppression. J Neurosci 36, 8078–8092.

- Lin JY, Knutsen PM, Muller A, Kleinfeld D, and Tsien RY (2013). ReaChR: a redshifted variant of channelrhodopsin enables deep transcranial optogenetic excitation. Nat Neurosci 16, 1499–1508.

- Maimon G, Straw AD, and Dickinson MH (2010). Active flight increases the gain of visual motion processing in Drosophila. Nat Neurosci 13, 393–399.

- Maisak MS, Haag J, Ammer G, Serbe E, Meier M, Leonhardt A, Schilling T, Bahl A, Rubin GM, Nern A, et al. (2013). A directional tuning map of Drosophila elementary motion detectors. Nature 500, 212–216.

- Mauss AS, Busch C, and Borst A (2017). Optogenetic neuronal silencing in Drosophila during visual processing. Sci Rep 7, 13823.

- Mohammad F, Stewart JC, Ott S, Chlebikova K, Chua JY, Koh T-W, Ho J, and Claridge-Chang A (2017). Optogenetic inhibition of behavior with anion channelrhodopsins. Nat Methods 14, 271.

- Moore RJ, Taylor GJ, Paulk AC, Pearson T, van Swinderen B, and Srinivasan MV (2014). FicTrac: a visual method for tracking spherical motion and generating fictive animal paths. J Neurosci Methods 225, 106–119.

- Pelli DG (1997). The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10, 437–442.

- Reiser MB, and Dickinson MH (2008). A modular display system for insect behavioral neuroscience. J Neurosci Methods 167, 127–139.

- Rigosi E, Wiederman SD, and O’Carroll DC (2017). Visual acuity of the honey bee retina and the limits for feature detection. Sci Rep 7, 45972.

- Salazar-Gatzimas E, Agrochao M, Fitzgerald JE, and Clark DA (2018). The neuronal basis of an illusory motion percept is explained by decorrelation of parallel motion pathways. Curr Biol 28, 3748–3762.

- Salazar-Gatzimas E, Chen J, Creamer MS, Mano O, Mandel HB, Matulis CA, Pottackal J, and Clark DA (2016). Direct measurement of correlation responses in Drosophila elementary motion detectors reveals fast timescale tuning. Neuron 92, 227–239.

- Schnell B, Raghu SV, Nern A, and Borst A (2012). Columnar cells necessary for motion responses of wide-field visual interneurons in Drosophila. J Comp Physiol A 198, 389–395.

- Seelig J, Chiappe M, Lott G, Dutta A, Osborne J, Reiser M, and Jayaraman V (2010). Two-photon calcium imaging from head-fixed Drosophila during optomotor walking behavior. Nat Methods.

- Silies M, Gohl DM, Fisher YE, Freifeld L, Clark DA, and Clandinin TR (2013). Modular use of peripheral input channels tunes motion-detecting circuitry. Neuron 79, 111–127.

- Srinivasan MV, and Lehrer M (1984). Temporal acuity of honeybee vision: behavioural studies using moving stimuli. J Comp Physiol A 155, 297–312.

- Stavenga D (2003). Angular and spectral sensitivity of fly photoreceptors. II. Dependence on facet lens F-number and rhabdomere type in Drosophila. J Comp Physiol A 189, 189–202.

- Stowers JR, Hofbauer M, Bastien R, Griessner J, Higgins P, Farooqui S, Fischer RM, Nowikovsky K, Haubensak W, and Couzin ID (2017). Virtual reality for freely moving animals. Nat Methods 14, 995.

- Strother JA, Wu S-T, Rogers EM, Eliason JL, Wong AM, Nern A, and Reiser MB (2018). Behavioral state modulates the ON visual motion pathway of Drosophila. Proc Natl Acad Sci USA 115, E102–E111.

- Williamson WR, Peek MY, Breads P, Coop B, and Card GM (2018). Tools for rapid high-resolution behavioral phenotyping of automatically isolated Drosophila. Cell Rep 25, 1636–1649.

- Yamaguchi S, Wolf R, Desplan C, and Heisenberg M (2008). Motion vision is independent of color in Drosophila. Proc Natl Acad Sci USA 105, 4910–4915.
