Abstract

This paper presents nonparametric two-sample bootstrap tests for means of random symmetric positive-definite (SPD) matrices according to two different metrics: the Frobenius (or Euclidean) metric, inherited from the embedding of the set of SPD matrices in the Euclidean space of symmetric matrices, and the canonical metric, which is defined without an embedding and suggests an intrinsic analysis. A fast algorithm is used to compute the bootstrap intrinsic means in the case of the latter. The methods are illustrated in a simulation study and applied to a two-group comparison of means of diffusion tensors (DTs) obtained from a single voxel of registered DT images of children in a dyslexia study.

Keywords: extrinsic mean, intrinsic mean, Fréchet mean, center of mass, non-parametric bootstrap, diffusion tensor imaging, fast algorithms

1. Introduction

Statistical inference for distributions on manifolds is a broad discipline with wide-ranging applications. Its study has gained momentum due to its applications in the biosciences and medicine, geosciences, astronomy, computer vision and image analysis, electrical engineering, and other fields.

A general framework for nonparametric inference for location on manifolds was introduced in Patrangenaru (1998) and Bhattacharya and Patrangenaru (2002, 2003, 2005). There, properties were derived for two types of Fréchet means on finite-dimensional manifolds: (1) the (embedding-dependent) extrinsic mean, associated with the Euclidean distance induced on the manifold by an embedding in Euclidean space, and (2) the (Riemannian-structure-dependent) intrinsic mean, associated with the geodesic distance derived from a Riemannian metric on the manifold. Furthermore, the consistency of extrinsic sample means as estimators of extrinsic means was established under one set of conditions, and that of intrinsic sample means as estimators of intrinsic means under another. Derivations of the asymptotic distributions of intrinsic and extrinsic sample means, and of confidence regions for the population means based on them, were also provided.

Extrinsic means are appealing for their simplicity. When an explicit embedding is available, the extrinsic mean of a set of data points can be computed by taking the mean in the embedding Euclidean space and projecting the result onto the manifold (assuming there is a unique closest point on the embedded manifold, which is the case for almost all data sets). In contrast, the intrinsic mean is computed directly on the manifold as the point that minimizes the sum of squared geodesic distances from the data points to it. Intrinsic means (also called centers of mass in the literature) are mathematically elegant and do not require an embedding, but they require a Riemannian structure instead. One type of manifold on which intrinsic means are guaranteed to exist, and which is particularly relevant to this paper, is a Cartan-Hadamard manifold: a complete, simply-connected Riemannian manifold of nonpositive curvature.

Computational speed is of particular importance in a nonparametric analysis when applying computationally intensive resampling methods like the bootstrap, as each resample requires a new computation of the intrinsic or extrinsic sample mean. Computing both extrinsic and intrinsic sample means is trivial if the manifold is flat in a sufficiently small neighborhood that contains the sample, in the Euclidean sense in the case of the former and in the Riemannian sense in the case of the latter (Patrangenaru, 2001).

In more general situations, extrinsic means sometimes have closed-form expressions while intrinsic means, when they exist, typically require iterative methods to compute. Therefore it is not surprising that in several applications, computations of extrinsic means have been found to be considerably faster than computation of intrinsic means by some commonly-used methods (Bhattacharya et al., 2012). However, there are other iterative algorithms that, when applied to compute intrinsic means of samples of small enough diameter in an arbitrary Riemannian manifold, require very few iterations. Among these is what Groisser (2004, 2005) called the “Riemannian averaging algorithm”, a gradient-descent algorithm that has been suggested independently by several authors (Pennec, 1999; Le, 2001; Groisser, 2004; Fletcher et al., 2004). See also Smith (1994) and Edelman et al. (1998). This algorithm has been tested numerically for some special manifolds by several authors (Pennec et al., 2006; Fletcher and Joshi, 2007). For a Riemannian manifold possessing a lot of symmetry, such as a Riemannian homogeneous space (see the Appendix for a definition), the gradient-descent algorithm is fast in the sense of computation-time as well, because there are simple closed-form expressions for geodesics. For a wide class of algorithms that includes Newton’s method as well as the gradient-descent algorithm, Groisser (2004) established quantitative sufficient conditions for convergence, and convergence-rate bounds.

As a specific manifold, in this paper we study the space Sym+(p) of p × p symmetric positive-definite (SPD) matrices, a convex open subset of the space Sym(p) of p × p symmetric matrices, which is itself a linear subspace of the space M(p,ℝ) of all real p × p matrices. When endowed with the Frobenius (Euclidean) metric inherited from M(p,ℝ), the space Sym+(p) is flat, and therefore provides an example in which the extrinsic sample mean has a simple closed-form expression and coincides with the intrinsic mean according to that metric. As an alternative, it has been suggested to analyze data on this manifold using a canonical metric gcan (Lenglet et al., 2006; Pennec et al., 2006; Schwartzman, 2006; Fletcher and Joshi, 2007), with respect to which Sym+(p) is a Cartan-Hadamard symmetric space (see the Appendix for a definition of symmetric space). The space Sym+(p) with this metric is complete but curved, suggesting an intrinsic analysis. In the statistics literature, covariance matrices are perhaps the most visible occurrence of SPD matrices. In such instances, p may be any positive integer and is limited only by the number of variables considered in a given study; as such, it may be quite large. However, SPD matrices also arise as observations in cosmic background radiation (CBR), where p = 2, and in diffusion tensor imaging (DTI), where p = 3. While the theory we consider holds for arbitrary p, we focus our computations on p = 3 to motivate an application to DTI analysis.

A useful methodology for estimating the variability of sample means is Efron's nonparametric bootstrap (Efron, 1982). It is well documented that the coverage error of confidence intervals produced by the pivotal nonparametric bootstrap converges to zero faster than that of standard large-sample confidence intervals (Babu and Singh, 1984; Bhattacharya and Ghosh, 1978; Hall, 1997). Data analysis on manifolds has often been performed via the nonparametric bootstrap, with the first examples in directional and axial data analysis (Ducharme et al., 1985; Fisher and Hall, 1989). In this paper, we compare the bootstrap-based analysis under the flat Euclidean metric with an intrinsic bootstrap analysis under the canonical metric. For each bootstrap resample, we compute the canonical intrinsic sample mean using the gradient-descent algorithm. To be precise about the metric used, we hereafter refer to these two types of analysis as Frobenius (or Euclidean) and canonical, rather than extrinsic and intrinsic.

In this paper we design nonparametric two-sample bootstrap tests for means of random positive definite matrices, according to both the Frobenius and canonical metrics. We present a simulation study to show the effectiveness of these methods. Then, as an application, we apply these procedures to compare means of populations of diffusion tensor images.

The rest of the paper is organized as follows. Section 2 introduces basic properties of the set of SPD matrices. Section 3 presents the Frobenius analysis, including the statistical theory behind our tests and the methods for and results of our simulation study. Section 4 presents the corresponding canonical-metric analysis, as well as a discussion of the algorithm used to compute intrinsic means, including its convergence behavior. Section 5 presents a simulation study to compare coverage probabilities for confidence regions for the Frobenius and canonical methodologies. Section 6 contains our application of the methodology to DTI analysis. Section 7 concludes. For completeness, the necessary theoretical background for SPD matrices and the Riemannian geometry of the canonical metric is provided in Appendix A.

2. SPD matrices

Let Sym(p) ⊂ M(p,ℝ) denote the set of symmetric matrices, and let Sym+(p) ⊂ Sym(p) denote the set of positive-definite symmetric matrices:

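$$\mathrm{Sym}^+(p) = \left\{ M \in \mathrm{Sym}(p) : x^{T} M x > 0 \ \text{for all } x \in \mathbb{R}^{p} \setminus \{0\} \right\}. \tag{2.1}$$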

Sym+(p) is an open subset of Sym(p), and it is easily seen from (2.1) that it is also a convex subset: if M1,M2 ∈ Sym+(p), then (1 − t)M1 + tM2 ∈ Sym+(p) for all t ∈ [0,1]. Sym+(p) is also an open cone: if M ∈ Sym+(p), then tM ∈ Sym+(p) for all t > 0.
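Both properties are easy to check numerically. The following is a minimal sketch, assuming Python with NumPy; the helper `is_spd` and the two example matrices are hypothetical illustrations rather than part of the paper. Membership in Sym+(p) is tested with a Cholesky factorization, which exists for a symmetric matrix exactly when it is positive definite.

```python
import numpy as np


def is_spd(m: np.ndarray) -> bool:
    """Return True if m is a symmetric positive-definite matrix.

    Symmetry is checked up to floating-point tolerance; positive
    definiteness is tested via Cholesky, which succeeds for a symmetric
    matrix exactly when all of its eigenvalues are positive.
    """
    if m.ndim != 2 or m.shape[0] != m.shape[1] or not np.allclose(m, m.T):
        return False
    try:
        np.linalg.cholesky(m)
    except np.linalg.LinAlgError:
        return False
    return True


# Hypothetical example matrices in Sym+(3).
m1 = np.array([[2.0, 0.5, 0.0],
               [0.5, 1.0, 0.2],
               [0.0, 0.2, 0.5]])
m2 = np.diag([0.3, 4.0, 1.0])

# Convexity: every point (1 - t) M1 + t M2 of the segment stays in Sym+(3).
segment_ok = all(is_spd((1 - t) * m1 + t * m2) for t in np.linspace(0.0, 1.0, 11))
# Cone property: positive multiples t M1 stay in Sym+(3).
cone_ok = all(is_spd(t * m1) for t in (1e-3, 1.0, 1e3))
print(segment_ok, cone_ok)  # True True
```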

The Euclidean metric on $\mathbb{R}^{p^2}$, when transferred to M(p,ℝ) by the natural identification $M(p,\mathbb{R}) \cong \mathbb{R}^{p^2}$, is often called the Frobenius metric on M(p,ℝ); the corresponding distance is $\|A - B\|_F = \{\operatorname{tr}[(A - B)^{T}(A - B)]\}^{1/2}$. The same terminology is used for the Riemannian metric on M(p,ℝ) obtained by transferring the standard Riemannian metric on $\mathbb{R}^{p^2}$, and also for the induced Riemannian metric on any vector subspace of M(p,ℝ). All of these Riemannian metrics are flat (the curvature is identically zero). Thus Sym(p), with the Frobenius Riemannian metric, is flat. The open subset Sym+(p) ⊂ Sym(p) therefore inherits the structure of a flat, but incomplete, Riemannian manifold: the geodesics are open segments of straight lines, cut off by the boundary of Sym+(p) as a subset of Sym(p) (see Schwartzman (2006)). We refer to this Riemannian structure on Sym+(p) as the Frobenius metric structure on this space.
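The incompleteness can be made concrete. For A, B ∈ Sym+(p), write A = LLᵀ (Cholesky) and K = L⁻¹(B − A)L⁻ᵀ; then A + t(B − A) = L(I + tK)Lᵀ is positive definite exactly when 1 + tλ > 0 for every eigenvalue λ of K, so the Frobenius geodesic through A and B remains in Sym+(p) only on an open interval of parameter values containing [0, 1]. A minimal sketch, assuming NumPy; the function name `frobenius_line_interval` is hypothetical.

```python
import numpy as np


def frobenius_line_interval(a: np.ndarray, b: np.ndarray) -> tuple[float, float]:
    """Maximal open interval (t_lo, t_hi) on which a + t (b - a) is SPD.

    With a = L L^T (Cholesky) and K = L^{-1} (b - a) L^{-T}, we have
    a + t (b - a) = L (I + t K) L^T, which is SPD iff 1 + t*lam > 0 for
    every eigenvalue lam of the symmetric matrix K.
    """
    l_inv = np.linalg.inv(np.linalg.cholesky(a))
    k = l_inv @ (b - a) @ l_inv.T
    lam = np.linalg.eigvalsh((k + k.T) / 2)  # symmetrize against round-off
    pos, neg = lam[lam > 0], lam[lam < 0]
    t_lo = -1.0 / pos.max() if pos.size else -np.inf  # binding lower bound
    t_hi = -1.0 / neg.min() if neg.size else np.inf   # binding upper bound
    return float(t_lo), float(t_hi)


print(frobenius_line_interval(np.eye(2), np.diag([2.0, 0.5])))
```

In this example the line from I to diag(2, 0.5) is diag(1 + t, 1 − t/2), which leaves Sym+(2) at t = −1 and at t = 2.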

Another Riemannian metric considered in analysis of DTI data is the “canonical metric” mentioned in the Introduction (Arsigny et al., 2006; Schwartzman, 2006). This metric is complete on Sym+(p), but it is not flat. However, the curvature of this metric is non-positive, an extremely convenient geometric feature, as we will see in Section 4.1. Details on this canonical metric are given in the Appendix.

Geodesic convexity, a generalization to Riemannian manifolds of the notion of convexity in vector spaces, suffices for meaningful intrinsic data analysis on a Riemannian manifold. The convexity of Sym+(p) in M(p,ℝ) trivially makes the entire open manifold Sym+(p) geodesically convex with respect to the Frobenius metric, so one may think of this metric, rather than the vector-space structure, as what facilitates a statistical analysis on Sym+(p). Moreover, the intrinsic and extrinsic means for the Frobenius metric coincide, so it is enough to refer to both as the Frobenius mean. The manifold Sym+(p) is also geodesically convex with respect to the canonical Riemannian metric, allowing the definition of an intrinsic mean. Furthermore, Sym+(p) with this metric is also a homogeneous space (in fact, a symmetric space), which facilitates the rapid computation of the intrinsic mean using an algorithm from Groisser (2004)¹. We describe the analysis using these two different metrics in the next two sections.

3. Nonparametric estimation of Frobenius means

3.1. Nonparametric inference for Frobenius means

Suppose we are given an i.i.d. sample of n SPD matrices Y1, …, Yn ∈ Sym+(p). Let vecd(·) be a vectorization operator that maps a symmetric p × p matrix to a vector in a Euclidean space of dimension p(p + 1)/2 by listing its entries on and above the diagonal. The exact form of this operator is not crucial; for ease of interpretation, here we list the diagonal entries first and then the off-diagonal entries above the diagonal (see Table 6). The Frobenius sample mean of the vectorizations Xi = vecd(Yi), i = 1, …, n, is simply the entry-by-entry average $\bar X = \frac{1}{n}\sum_{i=1}^{n} X_i$. Similarly, we can compute their sample covariance matrix S.

Table 6:

DTI data for a control group (columns 1–6) and a dyslexia group (columns 7–12)

| | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| d11 | 0.8847 | 0.6516 | 0.4768 | 0.6396 | 0.5684 | 0.6519 |
| d22 | 0.9510 | 0.9037 | 1.1563 | 0.9032 | 1.0677 | 0.9804 |
| d33 | 0.8491 | 0.7838 | 0.6799 | 0.8265 | 0.7918 | 0.7922 |
| d12 | 0.0448 | −0.0392 | 0.0217 | 0.0229 | −0.0427 | 0.0269 |
| d13 | −0.1168 | −0.0631 | −0.0091 | −0.1961 | −0.0879 | −0.1043 |
| d23 | 0.0162 | −0.0454 | −0.1890 | −0.1337 | −0.1139 | −0.0607 |

| | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|
| d11 | 0.5661 | 0.6383 | 0.6418 | 0.6823 | 0.6159 | 0.5643 |
| d22 | 0.7316 | 0.8381 | 0.8776 | 0.8376 | 0.7296 | 0.8940 |
| d33 | 0.8232 | 1.0378 | 1.0137 | 0.9541 | 0.9683 | 0.9605 |
| d12 | 0.0358 | −0.0044 | −0.0643 | 0.0309 | −0.0929 | −0.0635 |
| d13 | −0.2289 | −0.2229 | −0.1675 | −0.2217 | −0.1713 | −0.1307 |
| d23 | −0.1106 | −0.0449 | −0.0192 | −0.0925 | −0.0965 | −0.1791 |
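As an illustration of the vecd convention and the Frobenius sample statistics, the following minimal sketch (assuming NumPy; the names `vecd`, `unvecd`, and `frob_stats` are hypothetical) loads the Table 6 data and computes each group's Frobenius mean and sample covariance. The covariance uses NumPy's default divisor n − 1, which is an assumption; the text does not specify the divisor.

```python
import numpy as np

# vecd ordering used in Table 6: diagonal entries first, then the entries
# above the diagonal, i.e. (d11, d22, d33, d12, d13, d23).
IDX = [(0, 0), (1, 1), (2, 2), (0, 1), (0, 2), (1, 2)]


def vecd(y):
    """Map a symmetric 3x3 matrix to (d11, d22, d33, d12, d13, d23)."""
    return np.array([y[i, j] for i, j in IDX])


def unvecd(x):
    """Inverse of vecd: rebuild the symmetric 3x3 matrix from its 6 entries."""
    y = np.zeros((3, 3))
    for value, (i, j) in zip(x, IDX):
        y[i, j] = y[j, i] = value
    return y


def frob_stats(x):
    """Frobenius sample mean (entrywise average) and sample covariance of
    the rows of x; np.cov uses the n - 1 divisor (an assumption here)."""
    return x.mean(axis=0), np.cov(x, rowvar=False)


# Table 6: rows d11, d22, d33, d12, d13, d23; transpose to one row per subject.
control = np.array([
    [0.8847, 0.6516, 0.4768, 0.6396, 0.5684, 0.6519],
    [0.9510, 0.9037, 1.1563, 0.9032, 1.0677, 0.9804],
    [0.8491, 0.7838, 0.6799, 0.8265, 0.7918, 0.7922],
    [0.0448, -0.0392, 0.0217, 0.0229, -0.0427, 0.0269],
    [-0.1168, -0.0631, -0.0091, -0.1961, -0.0879, -0.1043],
    [0.0162, -0.0454, -0.1890, -0.1337, -0.1139, -0.0607],
]).T
dyslexia = np.array([
    [0.5661, 0.6383, 0.6418, 0.6823, 0.6159, 0.5643],
    [0.7316, 0.8381, 0.8776, 0.8376, 0.7296, 0.8940],
    [0.8232, 1.0378, 1.0137, 0.9541, 0.9683, 0.9605],
    [0.0358, -0.0044, -0.0643, 0.0309, -0.0929, -0.0635],
    [-0.2289, -0.2229, -0.1675, -0.2217, -0.1713, -0.1307],
    [-0.1106, -0.0449, -0.0192, -0.0925, -0.0965, -0.1791],
]).T

mean_c, s_c = frob_stats(control)
mean_d, s_d = frob_stats(dyslexia)
print(np.round(unvecd(mean_c), 4))
print(np.round(unvecd(mean_d), 4))
```

With n = 6 subjects per group and p(p + 1)/2 = 6 coordinates, each group's sample covariance has rank at most 5, since the six centered vectors sum to zero; singularity of this kind is what motivates the nonpivotal statistic discussed below.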

REMARK 3.1 (Estimation of the Euclidean mean for SPD matrices)

Because Sym+(p) is convex, the Euclidean mean of any distribution on Sym+(p) with a finite mean lies in Sym+(p), and the multivariate Central Limit Theorem (CLT) applies to the vectorization of any such distribution with finite second moments. The Euclidean mean of such a probability distribution can therefore be estimated using the studentized version of the CLT if a large random sample is available, or by using the nonparametric bootstrap if only a small random sample is available.

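Now suppose we are given two independent such samples of sizes n1 and n2, where, for a = 1, 2, the vectors $X_{a,i}$, i = 1, …, $n_a$, are i.i.d. random vectors with mean $\mu_a$. For testing H0 : μ1 − μ2 = δ0, Hotelling's T² statistic is $T^2(\delta_0)$, where, for $\delta \in \mathbb{R}^{p(p+1)/2}$,

$$T^2(\delta) = \left(\bar X_1 - \bar X_2 - \delta\right)^{T}\left(\frac{S_1}{n_1} + \frac{S_2}{n_2}\right)^{-1}\left(\bar X_1 - \bar X_2 - \delta\right), \tag{3.1}$$

where $\bar X_j$, j = 1, 2, are the Frobenius means of the two samples and $S_j$, j = 1, 2, are their sample covariance matrices.

If the samples $X_{a,j_a}$, $j_a = 1, …, n_a$, a = 1, 2, are i.i.d. with means $\mu_a$ and nonsingular covariance matrices $\Sigma_a$ from two independent multivariate populations, and the total sample size n = n1 + n2 is such that $n_1/n \to \lambda \in (0, 1)$ as n → ∞, then by the CLT, under the null hypothesis H0 : μ1 − μ2 = δ0, $T^2(\delta_0) \to \chi^2_{p(p+1)/2}$ in distribution as n → ∞. Therefore, a parametric test based on this asymptotic limit would reject H0 at level α if $T^2(\delta_0) > \chi^2_{p(p+1)/2,\,1-\alpha}$, the 100(1 − α) percentile of the $\chi^2_{p(p+1)/2}$ distribution. A parametric approximation for finite samples is the F distribution with p(p + 1)/2 degrees of freedom for the numerator and Yao's approximation for the degrees of freedom of the denominator (Yao, 1965). If the distributions are unknown and the samples are small, as in our data example, the parametric asymptotic distribution may not provide an accurate approximation to the distribution of the test statistic. Here, instead, we use a nonparametric approach based on the bootstrap. We compute a bootstrap distribution of

$$T^{2*} = \left(\bar X_1^* - \bar X_2^* - (\bar X_1 - \bar X_2)\right)^{T}\left(\frac{S_1^*}{n_1} + \frac{S_2^*}{n_2}\right)^{-1}\left(\bar X_1^* - \bar X_2^* - (\bar X_1 - \bar X_2)\right), \tag{3.2}$$

and we take $c^*_{T,1-\alpha}$, the 100(1 − α) percentile of T²*, where $\bar X_i^*$ and $S_i^*$ are, respectively, bootstrap replicates of the sample mean and covariance, for i = 1, 2. The 100(1 − α)% confidence region for δ = μ1 − μ2 based on this bootstrap distribution is given by

$$C_{T,1-\alpha} = \left\{ \delta : T^2(\delta) \le c^*_{T,1-\alpha} \right\}. \tag{3.3}$$

In each bootstrap resample, two samples of sizes n1 and n2 are drawn with replacement from the two original samples, respectively, and the test statistic (3.2) is recomputed.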

REMARK 3.2

All of the above applies to two random samples from a pair of independent random vectors in general. In particular, it applies to any marginals of a pair of random symmetric matrices, with the number of degrees of freedom of the chi-square asymptotic distribution of T² equal to the number of marginals under consideration.

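In cases where n1 and n2 are small, however, (3.1) and (3.2) may be unusable because the matrix $S_1/n_1 + S_2/n_2$, or its bootstrap analogue, can be singular. For such cases, confidence regions may instead be based upon the nonpivotal statistic

$$W^2(\delta) = \left\| \bar X_1 - \bar X_2 - \delta \right\|^2 = \left(\bar X_1 - \bar X_2 - \delta\right)^{T}\left(\bar X_1 - \bar X_2 - \delta\right) \tag{3.4}$$

and the bootstrap distribution of

$$W^{2*} = \left\| \bar X_1^* - \bar X_2^* - (\bar X_1 - \bar X_2) \right\|^2. \tag{3.5}$$

Denoting the 100(1 − α) percentile of W²* by $c^*_{W,1-\alpha}$, a second 100(1 − α)% confidence region for δ = μ1 − μ2 is given by

$$C_{W,1-\alpha} = \left\{ \delta : W^2(\delta) \le c^*_{W,1-\alpha} \right\}. \tag{3.6}$$

Alternatively, the p-value for a bootstrap test of the null hypothesis H0 : μ1 − μ2 = δ0 against the two-sided alternative H1 : μ1 − μ2 ≠ δ0 can be calculated using T² as follows:

$$p_T = 1 - \frac{\#\left(T^2(\delta_0) > T^{2*}\right)}{B}, \tag{3.7}$$

where #(T²(δ0) > T²*) denotes the number of bootstrap replicates of T²* that are smaller than T²(δ0), and B is the number of bootstrap replicates. Similarly, a test can be performed using W² by calculating the following p-value:

$$p_W = 1 - \frac{\#\left(W^2(\delta_0) > W^{2*}\right)}{B}. \tag{3.8}$$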
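The resampling scheme behind (3.2), (3.5), (3.7), and (3.8) can be sketched as follows, assuming NumPy and samples already vectorized with vecd (one row per observation). The function name `bootstrap_pvalue` and its parameters are hypothetical. Each resample draws n1 and n2 rows with replacement from the respective samples, and the bootstrap statistic is centered at the observed difference of means.

```python
import numpy as np


def bootstrap_pvalue(x1, x2, delta0, n_boot=10_000, pivotal=True, seed=0):
    """Two-sample bootstrap test of H0: mu1 - mu2 = delta0.

    x1, x2: arrays of shape (n1, d) and (n2, d) of vecd-vectors.
    pivotal=True uses T^2 from (3.1)/(3.2); pivotal=False uses W^2 from
    (3.4)/(3.5). Returns the p-value 1 - #(stat > stat*)/B and the array
    of bootstrap replicates, whose 1 - alpha quantile is the cutoff used
    in (3.3) or (3.6).
    """
    rng = np.random.default_rng(seed)
    n1, n2 = len(x1), len(x2)
    d_hat = x1.mean(axis=0) - x2.mean(axis=0)

    def stat(a, b, center):
        diff = a.mean(axis=0) - b.mean(axis=0) - center
        if not pivotal:
            return float(diff @ diff)  # W^2: squared Euclidean norm
        # T^2 needs S1/n1 + S2/n2 to be invertible; solve() raises or gives
        # unreliable values when it is (numerically) singular.
        cov = np.cov(a, rowvar=False) / len(a) + np.cov(b, rowvar=False) / len(b)
        return float(diff @ np.linalg.solve(cov, diff))

    observed = stat(x1, x2, delta0)
    boot = np.array([
        stat(x1[rng.integers(0, n1, n1)], x2[rng.integers(0, n2, n2)], d_hat)
        for _ in range(n_boot)
    ])
    return 1.0 - np.count_nonzero(observed > boot) / n_boot, boot
```

For a 95% confidence region, the bootstrap cutoff is `np.quantile(boot, 0.95)`; the W² version is the one to use when the covariance matrices are singular, as for very small samples.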

3.2. Simulation study

In order to explore how the above hypothesis tests perform, we performed a simulation study for data in Sym+(3). We consider three cases for the relationship between the Frobenius means. In the first case, the Frobenius means of both populations are equal. In the second case, the means are not equal, but the angles between the corresponding principal directions of the matrices are small. In the final case, these angles are considerably larger. The exact angles are given as each case is discussed.

We simulated n SPD matrices from a population with a given mean μ as follows. We generated each SPD matrix as the sample covariance matrix of a sample of m vectors from a trivariate normal distribution with population covariance matrix μ. Because the sample covariance matrix is a consistent estimator of μ, larger values of m concentrate the simulated matrices more tightly around μ; thus m controls the variability of the simulated population.
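The data-generating step can be sketched as follows, assuming NumPy. The function names are hypothetical, and several details are assumptions because the text does not specify them: the normal vectors have mean zero (the sample covariance does not depend on the mean), NumPy's default n − 1 divisor is used, and the Fréchet sample variance is computed with the full Frobenius norm of the matrix differences.

```python
import numpy as np


def simulate_spd(mu, n, m, rng):
    """n SPD matrices, each the sample covariance of m draws from N(0, mu)."""
    p = mu.shape[0]
    draws = rng.multivariate_normal(np.zeros(p), mu, size=(n, m))  # (n, m, p)
    return np.stack([np.cov(z, rowvar=False) for z in draws])


def frobenius_fsv(sample):
    """Frechet sample variance under the Frobenius metric: the average
    squared Frobenius distance from each matrix to the sample mean."""
    mean = sample.mean(axis=0)
    return float(np.mean(np.sum((sample - mean) ** 2, axis=(1, 2))))


rng = np.random.default_rng(0)
mu = np.diag([1.0, 2.0, 3.0])  # hypothetical population mean
for m in (6, 24, 96):
    print(m, round(frobenius_fsv(simulate_spd(mu, 18, m, rng)), 4))
```

As in Table 1, the printed FSV values should shrink as m grows, since each simulated matrix concentrates around μ.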

For each case, we performed both hypothesis tests (when applicable) at a variety of values of n1 = n2 = n and m. For each set of data, in addition to recording the p-values, we recorded the Fréchet sample variance (FSV) of each sample to show the effect of changing m. Each test used 10,000 bootstrap replicates.

The results of the simulations are shown in Table 1. Note that the T² test could not be performed for n = 6 because of the lack of invertibility, and that the FSV decreases as m increases. For Case 1, all p-values were large (the smallest being 0.1295), indicating that both tests would correctly fail to reject H0.

Table 1:

Results of the simulation study using the Frobenius metric. FSV is the Fréchet sample variance; each "a/b" entry gives the FSV of sample 1 and sample 2, respectively. PvalueT is the p-value for the T² bootstrap test. PvalueW is the p-value for the nonpivotal (W²) bootstrap test. N/A indicates that the T² test could not be performed because of non-invertibility.

| n | m | Case 1 FSV | Case 1 PvalueT | Case 1 PvalueW | Case 2 FSV | Case 2 PvalueT | Case 2 PvalueW | Case 3 FSV | Case 3 PvalueT | Case 3 PvalueW |
|---|---|---|---|---|---|---|---|---|---|---|
| 6 | 6 | 2.6656/0.6292 | N/A | 0.2909 | 1.02/.2047 | N/A | 0.0396 | .5563/.5883 | N/A | 0.04 |
| 6 | 24 | .0729/.2940 | N/A | 0.6601 | .2388/.0876 | N/A | 0.0585 | .1296/.1013 | N/A | 0.1396 |
| 6 | 96 | .0472/.0490 | N/A | 0.1295 | .0511/.0314 | N/A | 0.0441 | .0408/.0571 | N/A | 0 |
| 6 | 192 | .0291/.0334 | N/A | 0.3765 | .0372/.0191 | N/A | 0.089 | .0310/.0245 | N/A | 0 |
| 6 | 288 | .0134/.0107 | N/A | 0.4991 | .0149/.0125 | N/A | 0.0132 | .0200/.0133 | N/A | 0 |
| 18 | 6 | .9662/1.0111 | 0.2823 | 0.3006 | .6573/.7830 | 0.9518 | 0.9117 | 1.0722/1.0990 | 0.0891 | 0.2323 |
| 18 | 24 | .1953/.1231 | 0.9279 | 0.8591 | .2290/.1905 | 0.3456 | 0.2928 | .0978/.1833 | 0.0032 | 0.0027 |
| 18 | 96 | .0477/.0536 | 0.5307 | 0.5894 | .0636/.0403 | 0.1121 | 0.1777 | .0496/.0604 | 0 | 0 |
| 18 | 192 | .0205/.0316 | 0.9198 | 0.6084 | .0195/.0328 | 0.0689 | 0.0169 | .0249/.0194 | 0 | 0 |
| 18 | 288 | .0189/.0211 | 0.2832 | 0.3141 | .0114/.0134 | 0.0293 | 0.0023 | .0165/.0143 | 0 | 0 |
| 36 | 6 | 1.2569/.6997 | 0.4837 | 0.4616 | .7014/.7015 | 0.1854 | 0.1748 | 1.1635/.5277 | 0.0431 | 0.0094 |
| 36 | 24 | .1718/.1813 | 0.1783 | 0.253 | .1591/.1883 | 0.0177 | 0.0538 | .1567/.2164 | 0.0001 | 0 |
| 36 | 96 | .0516/.0491 | 0.6765 | 0.8858 | .0500/.0466 | 0.0002 | 0.0018 | .0418/.0410 | 0 | 0 |
| 36 | 192 | .0222/.0216 | 0.5149 | 0.3523 | .0301/.0242 | 0.0118 | 0.0032 | .0200/.0248 | 0 | 0 |
| 36 | 288 | .0175/.0165 | 0.6665 | 0.6189 | .0148/.0140 | 0 | 0 | .0162/.0136 | 0 | 0 |

For Case 2, the angles between the corresponding principal directions of the population means are 9.6217 degrees, 5.1220 degrees, and 9.0139 degrees. For n = 6, the W² test found significant differences between the means at the 0.10 level for all values of m, and at the 0.05 level for m = 6, 96, and 288. For the other sample sizes, however, the W² test failed to find significant differences between the means except when the variability was quite low. The T² test was also unable to find significant differences at higher levels of variability for n = 18. It did, however, find significant differences for n = 36 except when m = 6, where the variability was rather large.

For Case 3, the angles between the corresponding principal directions of the population means are 79.1643 degrees, 37.6759 degrees, and 73.7522 degrees. Both tests performed quite well for all sample sizes considered. The T2 test only once failed to find a significant difference between the means at the 0.05 level, with this occurring when there was a large amount of variability present and the difference was still significant at the 0.10 level. For the smaller sample sizes, the W2 test also performed very well, except for those cases where there was a large amount of variability.
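The angles reported for Cases 2 and 3 can be computed as below. This is a minimal sketch assuming NumPy; the name `principal_direction_angles` is hypothetical, and pairing eigenvectors by the rank of their eigenvalues (largest with largest, and so on) is our assumption about what "corresponding principal directions" means. Because a principal direction is an axis rather than an oriented vector, the angle uses |u · v| and lies in [0°, 90°].

```python
import numpy as np


def principal_direction_angles(a, b):
    """Angles in degrees between corresponding principal directions of SPD a, b.

    Eigenvectors are paired by eigenvalue rank, largest first. Principal
    directions are axes (v and -v are the same direction), so the angle is
    arccos |u . v|, which lies in [0, 90] degrees.
    """
    _, ua = np.linalg.eigh(a)  # columns ordered by ascending eigenvalue
    _, ub = np.linalg.eigh(b)
    cos = np.clip(np.abs(np.sum(ua * ub, axis=0)), 0.0, 1.0)
    return np.degrees(np.arccos(cos))[::-1]


# Rotating diag(3, 2, 1) by 30 degrees about the third axis moves the first
# two principal directions by 30 degrees and leaves the third one fixed.
c, s = np.cos(np.radians(30)), np.sin(np.radians(30))
rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
a = np.diag([3.0, 2.0, 1.0])
print(np.round(principal_direction_angles(a, rot @ a @ rot.T), 6))
```

The pairing by eigenvalue rank is only well defined when the eigenvalues of each matrix are distinct, which holds for the population means considered here.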

Based upon these results, both tests appear to perform better when there is less variability in the data. The tests also appear to be better at finding significant differences between the means when the angles between the corresponding principal directions are large, since they then produce very low p-values even at moderate levels of variability.

4. Computation and nonparametric estimation of canonical intrinsic means

4.1. Intrinsic means

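Let Q be a probability measure on a metric space $(\mathcal{M}, d)$. The Fréchet mean set of Q is the set of all global minimizers of the Fréchet function $F_Q$ on $\mathcal{M}$ defined by

$$F_Q(p) = \int_{\mathcal{M}} d^2(p, x)\, Q(dx), \qquad p \in \mathcal{M}. \tag{4.1}$$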

If $(\mathcal{M}, g)$ is a Riemannian manifold and $d = d_g$ is the geodesic distance function, we call the Fréchet mean set of Q the intrinsic mean set of Q. The points of the intrinsic mean set are also called (global) Riemannian centers of mass in the literature (see Afsari (2011) and the references therein).

When $F_Q$ has a unique minimizer, we call that point the intrinsic mean $\mu_I(Q)$. If the Riemannian manifold $\mathcal{M}$ is complete, then the intrinsic mean set of any probability measure Q is non-empty; that is, at least one minimizer of $F_Q$ exists. For complete manifolds, the sharpest uniqueness result to date is due to Afsari (2011): if Q is supported in a ball of radius less than the convexity radius of $\mathcal{M}$, then the minimizer of $F_Q$ is unique. Whether or not $\mathcal{M}$ is complete or has positive convexity radius, a sufficient condition for existence and uniqueness of a minimizer is that Q be sufficiently concentrated.
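For the canonical metric studied below, the Fréchet function (4.1) of an empirical distribution can be evaluated directly. The following is a minimal sketch, assuming NumPy and assuming the standard affine-invariant form g_M(X, Y) = tr(M⁻¹XM⁻¹Y) for g_can (the Appendix gives the paper's own formulas), whose geodesic distance is d(A, B) = ‖log(A^{-1/2}BA^{-1/2})‖_F. The helper names are hypothetical.

```python
import numpy as np


def sym_fun(m, f):
    """Apply a scalar function f to a symmetric matrix via its eigendecomposition."""
    w, v = np.linalg.eigh((m + m.T) / 2)
    return (v * f(w)) @ v.T


def canonical_distance(a, b):
    """d(a, b) = ||log(a^{-1/2} b a^{-1/2})||_F (affine-invariant metric assumed)."""
    a_isqrt = sym_fun(a, lambda w: 1.0 / np.sqrt(w))
    return float(np.linalg.norm(sym_fun(a_isqrt @ b @ a_isqrt, np.log), "fro"))


def frechet_function(q, points, dist=canonical_distance):
    """Empirical Frechet function F(q) = (1/n) * sum_i dist(q, x_i)^2."""
    return float(np.mean([dist(q, x) ** 2 for x in points]))


# For the two points I and 4I, the canonical Frechet function is smaller at
# 2I (their canonical mean) than at 2.5I (their Frobenius mean).
pts = [np.eye(2), 4 * np.eye(2)]
print(frechet_function(2.0 * np.eye(2), pts), frechet_function(2.5 * np.eye(2), pts))
```

Replacing `dist` with the Frobenius distance `lambda a, b: np.linalg.norm(a - b)` turns the same function into the Frobenius Fréchet function, whose minimizer is the entrywise average.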

For probability measures supported in a geodesically convex set, Groisser (2004) makes the following definition (up to notational changes)²:

DEFINITION 4.1

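Let $U \subset \mathcal{M}$ be geodesically convex. Let Q be a probability measure on U, and define a vector field $Y_Q$ on U by

$$Y_Q(q) = \int_U \mathrm{Exp}_q^{-1}(x)\, Q(dx), \qquad q \in U. \tag{4.2}$$

If $Y_Q(q_Q) = 0$ at a unique point $q_Q \in U$, we call $q_Q$ the (Riemannian) center of mass of Q relative to U. When Q is a finitely-supported distribution of the form $Q = \frac{1}{n}\sum_{i=1}^{n}\delta_{x_i}$ associated with points x1, …, xn ∈ U, we call $q_Q$ the Riemannian average of Q relative to U. In this case (4.2) reduces to

$$Y_Q(q) = \frac{1}{n}\sum_{i=1}^{n} \mathrm{Exp}_q^{-1}(x_i). \tag{4.3}$$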

Above (and below), $\mathrm{Exp}_q : T_q\mathcal{M} \to \mathcal{M}$ is the Riemannian exponential map based at q, and "$\mathrm{Exp}_q^{-1}(x)$" means the unique vector $v \in T_q\mathcal{M}$ of minimal norm such that $\mathrm{Exp}_q(v) = x$; uniqueness is guaranteed by the assumed geodesic convexity of U. In this paper we reserve the lower-case notation "exp" for the exponential of matrices.

In the setting of (4.3), note that . Heuristically, YQ(q) represents a “balancing” average of the points xi as seen from q.

In addition to ensuring that "$\mathrm{Exp}_q^{-1}$" makes sense, the geodesic convexity of U in Definition 4.1 implies that $F_Q$ is smooth on U and that

$$\operatorname{grad} F_Q(q) = -2\, Y_Q(q), \qquad q \in U; \tag{4.4}$$

hence the zeroes of $Y_Q$ are exactly the critical points of $F_Q$. Groisser (2004) uses a geometric criterion, rather than the global-minimization property, to single out a "best" critical point (and to get rid of the "relative to U"): in the setting of Definition 4.1, if $Y_Q$ has a unique zero $q_Q$ in a (suitably defined) convex hull of the support of Q, Groisser calls $q_Q$ the primary center of mass (or simply the center of mass) of Q. Groisser (2004) raises the question whether the intrinsic mean, when it exists, of a probability measure Q supported in a convex set lies in the convex hull of its support (equivalently, thanks to Afsari (2011), whether the primary center of mass $q_Q$ coincides with the intrinsic mean $\mu_I(Q)$ when both exist). A partial answer is provided in Afsari (2011): for a discrete probability measure Q supported in a ball of radius less than the convexity radius of $\mathcal{M}$, the intrinsic mean $\mu_I(Q)$ exists (by the result mentioned previously) and lies in the closure of the convex hull of the support of Q.
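Identity (4.4) can be checked numerically on (Sym+(p), gcan). The sketch below assumes NumPy and the affine-invariant forms g_q(X, Y) = tr(q⁻¹Xq⁻¹Y), Exp_q⁻¹(x) = q^{1/2} log(q^{-1/2}xq^{-1/2}) q^{1/2}, and d(q, x) = ‖log(q^{-1/2}xq^{-1/2})‖_F; the helper names and the random test matrices are hypothetical. It compares a central finite-difference derivative of F_Q along a symmetric direction X with g_q(−2Y_Q(q), X).

```python
import numpy as np


def sym_fun(m, f):
    """Apply a scalar function f to a symmetric matrix via eigendecomposition."""
    w, v = np.linalg.eigh((m + m.T) / 2)
    return (v * f(w)) @ v.T


def log_map(q, x):
    """Exp_q^{-1}(x) = q^{1/2} log(q^{-1/2} x q^{-1/2}) q^{1/2}."""
    q_sqrt = sym_fun(q, np.sqrt)
    q_isqrt = sym_fun(q, lambda w: 1.0 / np.sqrt(w))
    return q_sqrt @ sym_fun(q_isqrt @ x @ q_isqrt, np.log) @ q_sqrt


def y_field(q, points):
    """Y_Q(q) from (4.3) for the empirical distribution of the points."""
    return np.mean([log_map(q, x) for x in points], axis=0)


def frechet(q, points):
    """F_Q(q) = (1/n) sum_i d(q, x_i)^2 with the canonical distance."""
    q_isqrt = sym_fun(q, lambda w: 1.0 / np.sqrt(w))
    return float(np.mean([np.sum(sym_fun(q_isqrt @ x @ q_isqrt, np.log) ** 2)
                          for x in points]))


def metric(q, u, v):
    """g_q(u, v) = tr(q^{-1} u q^{-1} v)."""
    q_inv = np.linalg.inv(q)
    return float(np.trace(q_inv @ u @ q_inv @ v))


rng = np.random.default_rng(0)
points = []
for _ in range(4):
    g = rng.normal(size=(3, 3))
    points.append(g @ g.T + np.eye(3))  # hypothetical SPD data
q = np.diag([1.0, 2.0, 0.5])
x_dir = rng.normal(size=(3, 3))
x_dir = (x_dir + x_dir.T) / 2  # tangent vectors at q are symmetric matrices
eps = 1e-5
finite_diff = (frechet(q + eps * x_dir, points) - frechet(q - eps * x_dir, points)) / (2 * eps)
predicted = metric(q, -2.0 * y_field(q, points), x_dir)
print(finite_diff, predicted)
```

The two printed numbers should agree up to finite-difference error, as (4.4) predicts.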

In the example of greatest interest to us in this paper, the Riemannian manifold (Sym+(p), gcan), the convexity restriction on U in Definition 4.1 is no restriction at all. The reason is that (Sym+(p), gcan) is a Cartan-Hadamard manifold: a simply-connected complete Riemannian manifold with non-positive sectional curvature (see Section A.2). It is a classical result that any two points in a Cartan-Hadamard manifold can be joined by a unique geodesic arc; hence the entire manifold is convex, and we can take $U = \mathcal{M}$ in Definition 4.1, omit the phrase "relative to U", and omit U from the notation. If $\mathcal{M}$ is a Cartan-Hadamard manifold, then for any Q the function $F_Q$ is strictly convex and achieves a minimum at some (necessarily unique) point $q_Q$, which is also necessarily the unique zero of $Y_Q$. Thus on a Cartan-Hadamard manifold, every distribution Q has a (unique) center of mass $q_Q$, and this center of mass coincides with the intrinsic mean $\mu_I(Q)$. The existence and uniqueness proof in the case of the empirical distribution goes back to É. Cartan in the 1920s (Cartan, 1928). Indeed, an intrinsic sample mean on such a manifold may be thought of as a Cartan mean.

4.2. Intrinsic sample means on a Cartan-Hadamard manifold

To simplify certain definitions, we restrict attention to Cartan-Hadamard manifolds $\mathcal{M}$. Our first definition simply provides a name and notation for an important special case of Definition 4.1.

DEFINITION 4.2

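Let $\mathcal{M}$ be a Cartan-Hadamard manifold. Let X1, …, Xn be $\mathcal{M}$-valued i.i.d. random variables with common distribution Q, and let $\hat Q_n = \frac{1}{n}\sum_{i=1}^{n}\delta_{X_i}$ be their empirical distribution. The intrinsic sample mean of X1, …, Xn is the intrinsic mean of $\hat Q_n$:

$$\bar X_{n,I} = \mu_I(\hat Q_n). \tag{4.5}$$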

For particular values x1, …, xn of the random variables X1, …, Xn, Definition 4.2 corresponds to the Riemannian average of the points x1, …, xn in the terminology of Definition 4.1. Groisser (2004) presents an iterative procedure for finding zeroes of vector fields on a general Riemannian manifold, and establishes sufficient conditions for convergence as well as convergence-rate bounds.³ Applied to the negative-gradient vector field $Y_Q$, this procedure becomes the "Riemannian averaging algorithm", which is simply unit-step-size gradient descent for the function $F_Q$. It is proven in Groisser (2004) that if the support of Q is contained in a geodesic ball $B_D(q_0)$ whose radius D is smaller than a certain critical radius, and the algorithm is initialized at any point in a larger, concentric ball $B_\rho(q_0)$ with ρ less than a certain number $\rho_3(D)$ that increases as D decreases, then the algorithm converges to the intrinsic mean of Q. (In Groisser (2004), specific lower bounds on this critical radius and on $\rho_3(D)$ are given in terms of local geometric invariants; these radii are not tiny in general. We will see this for the case of (Sym+(3), gcan) in Section 4.3.) When applied to the distribution $\frac{1}{n}\sum_{i=1}^{n}\delta_{x_i}$, this algorithm computes the Riemannian average of x1, …, xn.

REMARK 4.3

Some common variants of this algorithm are obtained by replacing “unit step-size” with a more general constant step-size τ (replacing YQ with τYQ) or with variable step-size that is updated at each iteration; cf. (Fletcher and Joshi, 2007; Afsari et al., 2011) (and, in a more general context, Smith (1994)). When the data are not too spread out, as is typically the case for biological samples, the unit-stepsize algorithm generally works well. When the data are more spread out, convergence may be slower (and is not guaranteed if the data are too spread out). For an explanation of this in terms of the “eigenvalues” of the Hessian of FQ, and some instructive examples, see Afsari et al. (2011).

For a general distribution Q on $\mathcal{M}$, the Riemannian averaging algorithm consists simply of iterating the map $\Phi_Q : \mathcal{M} \to \mathcal{M}$ defined by

$$\Phi_Q(q) = \mathrm{Exp}_q\big(Y_Q(q)\big). \tag{4.6}$$

The upper-bound restriction on D mentioned above is merely sufficient for the algorithm to converge; the algorithm can converge without this condition being satisfied. Note that if the sequence of iterates $q_{k+1} = \Phi_Q(q_k)$ converges, the point it converges to must be a fixed point of $\Phi_Q$, and therefore a zero of $Y_Q$ (because $\mathrm{Exp}_q$ is one-to-one on a Cartan-Hadamard manifold, and $\mathrm{Exp}_q(0) = q$).

We now focus attention on $\mathcal{M} = (\mathrm{Sym}^+(p), g_{can})$ and distributions $Q = \frac{1}{n}\sum_{i=1}^{n}\delta_{V_i}$, where V1, …, Vn ∈ Sym+(p). In practice, the algorithm is computable on a (small enough) convex subset of a general Riemannian manifold if we can efficiently invert the exponential map. For the Cartan-Hadamard manifold (Sym+(p), gcan), we can do this easily and explicitly because (Sym+(p), gcan) is also a symmetric space (see Section A.2).

Furthermore, as noted at the end of Section 4.1, for any probability measure Q on a Cartan-Hadamard manifold, we know a priori both that $Y_Q$ has exactly one zero and that this zero is the intrinsic mean of Q. In particular, for (Sym+(p), gcan), any time the iterates of $\Phi_Q$ converge, they converge to the intrinsic sample mean of V1, …, Vn. In our application to DTI data, the algorithm was found to converge quite rapidly (see Table 4), and it was therefore an efficient method for computing intrinsic sample means.

Table 4:

Convergence behavior of the averaging algorithm on (Sym+(3), gcan), initialized at the Frobenius mean, for the simulated data and the actual DTI data. n = 6 for both the DTI control group and the dyslexia group, as described in Section 6. D is the radius of the smallest ball, centered at the Frobenius mean, containing all the data, and κ = κ(D) is as defined in Section 4.3. "Actual iterations needed" is the number of iterations that were needed for convergence with a canonical-distance threshold of 10^{−6}. N_it(κ) is the smallest integer N for which κ^N D ≤ 10^{−6}; see inequality (4.9). N/A entries correspond to κ > 1, for which no such N exists.

| n | m | D | κ | Actual iterations needed | N_it(κ) |
|---|---|---|---|---|---|
| 6 | 6 | 2.1868 | 1.3965 | 8 | N/A |
| 6 | 24 | 0.8116 | 1.2106 | 4 | N/A |
| 6 | 48 | 0.5906 | 0.3481 | 4 | 13 |
| 6 | 96 | 0.3449 | 0.0854 | 3 | 5 |
| 6 | 192 | 0.3103 | 0.0680 | 3 | 5 |
| 6 | 288 | 0.2496 | 0.0431 | 3 | 4 |
| 18 | 6 | 3.1204 | 1.4751 | 8 | N/A |
| 18 | 24 | 1.1137 | 1.2628 | 5 | N/A |
| 18 | 48 | 0.6343 | 1.1751 | 5 | N/A |
| 18 | 96 | 0.4836 | 0.1866 | 4 | 8 |
| 18 | 192 | 0.3804 | 0.1060 | 4 | 6 |
| 18 | 288 | 0.3788 | 0.1049 | 4 | 6 |
| 36 | 6 | 3.7187 | 1.5147 | 9 | N/A |
| 36 | 24 | 1.2048 | 1.2769 | 5 | N/A |
| 36 | 48 | 0.8856 | 1.2242 | 4 | N/A |
| 36 | 96 | 0.5403 | 0.2529 | 3 | 10 |
| 36 | 192 | 0.4632 | 0.1676 | 3 | 7 |
| 36 | 288 | 0.2646 | 0.0486 | 3 | 4 |
| 6 (control group) | – | 0.4346 | 0.1437 | 3 | 7 |
| 6 (dyslexia group) | – | 0.3063 | 0.0662 | 3 | 5 |

To write an explicit formula on (Sym+(p), gcan) for the map $\Phi_Q(q) = \mathrm{Exp}_q(Y_Q(q))$ iterated by the averaging algorithm (4.6), we first make one more definition. In any Cartan-Hadamard manifold $\mathcal{M}$, the exponential map $\mathrm{Exp}_q : T_q\mathcal{M} \to \mathcal{M}$ is a diffeomorphism for all $q \in \mathcal{M}$; thus $\mathrm{Exp}_q^{-1}$ is well-defined globally. We will use a name for this inverse that is common in the statistics literature, though not in the differential geometry literature:

DEFINITION 4.4

Let $\mathcal{M}$ be a Cartan-Hadamard manifold and let $M \in \mathcal{M}$. The Riemannian logarithm map based at M is the map $\mathrm{Log}_M := \mathrm{Exp}_M^{-1} : \mathcal{M} \to T_M\mathcal{M}$.

In the Appendix, we use the characterization of (Sym+(p), gcan) as a symmetric space to compute explicit formulas for $\mathrm{Exp}_M$ and $\mathrm{Log}_M$ for all M ∈ Sym+(p); these are given in (A.17)–(A.18). Substituting these into (4.3) and (4.6), for a sample V1, …, Vn of matrices Vi ∈ Sym+(p), the map iterated in the Riemannian averaging algorithm is given by

$$\Phi_Q(M) = M^{1/2}\exp\!\left(\frac{1}{n}\sum_{i=1}^{n}\log\!\left(M^{-1/2}V_iM^{-1/2}\right)\right)M^{1/2}, \tag{4.7}$$

where "log" is the logarithm function on SPD matrices (see the proof of Proposition A.11).
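The iteration (4.7) is short enough to sketch in full. The following assumes NumPy and the affine-invariant formulas Exp_M(X) = M^{1/2} exp(M^{-1/2}XM^{-1/2}) M^{1/2} and Log_M(V) = M^{1/2} log(M^{-1/2}VM^{-1/2}) M^{1/2} (the paper's own versions are (A.17)–(A.18) in the Appendix); the function names are hypothetical. Following Table 4, it starts at the Frobenius mean and stops when the canonical distance between successive iterates falls below 10⁻⁶; for the update (4.7) that distance equals the Frobenius norm of the averaged matrix logarithm. The optional step size `tau` gives the constant-step variant of Remark 4.3, and `tau = 1` is the unit-step-size algorithm.

```python
import numpy as np


def sym_fun(m, f):
    """Apply a scalar function f to a symmetric matrix via eigendecomposition."""
    w, v = np.linalg.eigh((m + m.T) / 2)
    return (v * f(w)) @ v.T


def canonical_mean(points, tau=1.0, tol=1e-6, max_iter=200):
    """Riemannian averaging on (Sym+(p), g_can) by iterating (4.7).

    Returns (mean, number_of_iterations). With tau != 1 each update is
    Exp_q(tau * Y_Q(q)), the constant-step variant of Remark 4.3.
    """
    q = np.mean(points, axis=0)  # initialize at the Frobenius mean
    for it in range(1, max_iter + 1):
        q_sqrt = sym_fun(q, np.sqrt)
        q_isqrt = sym_fun(q, lambda w: 1.0 / np.sqrt(w))
        # Average of log(q^{-1/2} V_i q^{-1/2}), i.e. q^{-1/2} Y_Q(q) q^{-1/2}.
        avg_log = np.mean([sym_fun(q_isqrt @ v @ q_isqrt, np.log) for v in points], axis=0)
        step = tau * avg_log
        q = q_sqrt @ sym_fun(step, np.exp) @ q_sqrt
        q = (q + q.T) / 2  # remove round-off asymmetry
        # The canonical distance between the old and new iterate is ||step||_F.
        if np.linalg.norm(step, "fro") < tol:
            return q, it
    raise RuntimeError("averaging algorithm did not converge in max_iter steps")


def radius_about_frobenius_mean(points):
    """D of Table 4: largest canonical distance from the Frobenius mean to a data point."""
    c_isqrt = sym_fun(np.mean(points, axis=0), lambda w: 1.0 / np.sqrt(w))
    return max(np.linalg.norm(sym_fun(c_isqrt @ v @ c_isqrt, np.log), "fro") for v in points)


v1, v2 = np.diag([1.0, 2.0, 3.0]), np.diag([4.0, 8.0, 12.0])
mean, iterations = canonical_mean([v1, v2])
print(np.round(np.diag(mean), 6), iterations, radius_about_frobenius_mean([v1, v2]))
```

For commuting matrices such as these, the canonical mean is the eigenvalue-wise geometric mean diag(2, 4, 6), whereas the Frobenius mean used as the starting point is diag(2.5, 5, 7.5).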

4.3. Convergence of the averaging algorithm on Sym+(3)

The literature on the Riemannian averaging algorithm and its variants (see Remark 4.3) contains quite a bit of misinformation, especially concerning convergence of the algorithm. Afsari et al. (2011) is a good reference for correct statements of what has been proven to date. Many other papers make implicit assumptions in their proofs, while some make assertions that have not been proven (and may be mathematically incorrect), citing sources that do not prove what they are claimed to prove. At least one reference suggests that theorems about Newton's method apply to this gradient-descent algorithm for averaging, and therefore that the latter should be quadratically convergent. To our knowledge, though, this has never been proven.

While the non-positive curvature of a Cartan-Hadamard manifold helps guarantee the existence and uniqueness of the intrinsic mean of any probability distribution, the combination of negative curvature and a large diameter of the distribution’s support (large compared with a length-scale determined by curvature) seems to interfere with convergence of the unit-step-size gradient-descent averaging algorithm. Some evidence for this is reported by Rentmeesters and Absil (2011), who found that in numerical experiments with points uniformly distributed in a ball of radius D in (Sym+(3),gcan), the algorithm failed to converge for D ≥ 4. The mathematical basis for expecting such a problem is discussed in Afsari et al. (2011).

Nonetheless, Groisser (2004) shows that the unit-step-size averaging algorithm on any Riemannian manifold always converges if the support of the distribution is contained in a ball of radius no larger than a certain (nonsharp!) number that is explicitly computable in terms of curvature bounds and the convexity radius of the manifold. For Cartan-Hadamard manifolds, the convexity radius is infinite, so this number is computable from curvature bounds alone. In Section 6 of Groisser (2004), the general results earlier in that paper are used to compute this number for complete, locally symmetric manifolds of non-negative curvature. The same general results and method can be used to compute it for manifolds of bounded non-positive curvature and infinite convexity radius (in particular, for any Cartan-Hadamard symmetric space); one simply has to replace “ψ(1, x)” in equation (6.1) of Groisser (2004) with “ψ(−1, x)”, defined in Table 1 (p. 104) of that paper; specifically, ψ(−1, x) := ψ−(x) := x coth x − 1.

Theorems 4.8 and 5.3 of Groisser (2004) give additional information, including convergence-rate estimates. For simplicity, we state the results that we wish to use here only for a Cartan-Hadamard manifold with sectional curvature bounded below by the negative number δ, and combine them with results from the non-positive-curvature analog of the computations in Groisser (2004, Section 6).

- There is a unique solution of the pair of equations where . Approximate values of and are

(4.8) Let . For let ρ1(D) = inf {ρ | s(ρ,D) > D} and ρ3(D) = sup {ρ | s(ρ,D) > D}. Then

- Assume that a probability distribution is supported in a closed ball of radius D centered at q0, where , and that ρ1(D) < ρ < ρ3(D). Then:

(a) The map defined in (4.6) maps Bρ(q0) into itself.

(b) For every q ∈ Bρ3(q0), the sequence of iterates converges to the intrinsic mean of the distribution. These iterates satisfy

where κ(D) = ψ−((D + ρ1(D))|δ|^{1/2}). (4.9)

We will use these results in Section 4.5, where the distribution in question will be a discrete distribution as in Definition 4.2.
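The rate bound (4.9) is straightforward to evaluate once ρ1(D) is known. The sketch below codes ψ−(x) = x coth x − 1, using its leading Taylor term x²/3 near 0 to avoid a 0/0, together with κ(D). The function names are hypothetical, and ρ1(D) must be supplied by the caller, since solving (4.8) for it is outside the scope of this sketch.

```python
import math


def psi_minus(x):
    """psi_-(x) = x coth(x) - 1, extended continuously by psi_-(0) = 0."""
    if abs(x) < 1e-8:
        return x * x / 3.0  # x coth x - 1 = x^2/3 + O(x^4) near 0
    return x / math.tanh(x) - 1.0


def kappa(D, rho1, delta):
    """Rate bound (4.9): kappa(D) = psi_-((D + rho1(D)) * |delta|^(1/2)).

    rho1: the value rho1(D) from (4.8), supplied by the caller.
    delta: the (negative) lower bound on sectional curvature.
    """
    return psi_minus((D + rho1) * math.sqrt(abs(delta)))
```

Because ψ− is increasing on [0, ∞), the bound κ(D) worsens as the data radius D (or the curvature scale |δ|) grows.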

REMARK 4.5

The main focus of Afsari et al. (2011) is on computing Riemannian means using constant-step-size gradient descent with a step size not required to be 1, since this added flexibility can improve convergence. However, Theorem 4.1 of Afsari et al. (2011) can be used to derive a statement about the unit-step-size algorithm that is relevant here: the largest radius D for which convergence of the unit-step-size gradient-descent algorithm on a Cartan-Hadamard manifold is guaranteed (for a distribution supported in a ball of radius D) can be improved by about 11% over the number above. (This theorem in Afsari et al. (2011) is stated only for distributions with finite support, but that assumption does not seem essential to the proof, and it is satisfied anyway in the applications in the present paper.) This theorem guarantees convergence of the unit-step-size algorithm if , where is the unique positive number for which (equivalently, ), which works out to , or 0.6771 in the case of Sym+(3). However, the convergence-rate bound (4.9) does not apply for .

4.4. Nonparametric inference for canonical intrinsic means

Due to the nature of the canonical metric, tests of equality of canonical means cannot be performed using the statistics (3.1) and (3.4). Instead, alternative test statistics can be formulated in a tangent space, as in Huckemann (2012). In particular, suppose we are given two independent samples of sizes n1 and n2, where the observations Va,i, i = 1, …, na, a = 1, 2, are i.i.d. SPD matrices (within each sample) with canonical means μa,I, a = 1, 2. We are interested in testing H0: μ1,I = μ2,I = μ.

Let Ya,i = Logμ(Va,i), for i = 1, …, na, a = 1, 2, be the projections of the observations to the tangent space at μ, where Logμ is as defined in (A.18). To form the statistic, let Xa,i = vecd(Ya,i), for i = 1, …, na, a = 1, 2. Define Sa to be the sample covariance matrix of Xa,1, …, Xa,na. Define the quantity B as
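The projection Xa,i = vecd(Logμ(Va,i)) can be sketched as follows. Two conventions are assumptions here. First, Logμ is taken to be μ^{1/2} log(μ^{-1/2} V μ^{-1/2}) μ^{1/2}, the inverse of the exponential map behind (4.7). Second, the hypothetical `vecd` lists the diagonal entries followed by √2 times the strictly upper-triangular entries, so that it preserves the Frobenius norm. The exact conventions of (A.18) and of vecd in the paper may differ.

```python
import numpy as np


def _sym_fun(S, f):
    """Apply the scalar function f to a symmetric matrix S via its eigendecomposition."""
    w, U = np.linalg.eigh(S)
    return (U * f(w)) @ U.T


def log_map(mu, V):
    """Assumed form of Log_mu(V) = mu^{1/2} log(mu^{-1/2} V mu^{-1/2}) mu^{1/2}."""
    r = _sym_fun(mu, np.sqrt)
    r_inv = _sym_fun(mu, lambda w: 1.0 / np.sqrt(w))
    return r @ _sym_fun(r_inv @ V @ r_inv, np.log) @ r


def vecd(Y):
    """Hypothetical vecd: diagonal entries, then sqrt(2) times the strict upper
    triangle, so that ||vecd(Y)|| equals the Frobenius norm of Y."""
    iu = np.triu_indices(Y.shape[0], k=1)
    return np.concatenate([np.diag(Y), np.sqrt(2.0) * Y[iu]])


def tangent_coordinates(mu, Vs):
    """Rows X_i = vecd(Log_mu(V_i)); shape (n, p(p+1)/2)."""
    return np.array([vecd(log_map(mu, V)) for V in Vs])
```

For p = 3 each row has p(p + 1)/2 = 6 coordinates, which is the dimension that governs when the sample covariances Sa are invertible.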

| (4.10) |

where , a = 1,2 are the canonical sample means. Then a T2 statistic can be defined as

| (4.11) |

However, since μ is unknown, we instead perform inference in the tangent space at the pooled canonical sample mean of all n1 + n2 observations, redefining the Ya,i, Xa,i, Sa, and B accordingly, and plug these into (4.11).

To perform inference, we compute a bootstrap distribution of

| (4.12) |

where

| (4.13) |

and the starred means and covariances are bootstrap replicates of, respectively, the canonical sample mean and the sample covariance, for a = 1, 2. Bootstrap p-values for this test can be calculated as in (3.7).

Alternatively, especially for those cases when n1 and n2 are small, a nonpivotal test statistic can be defined as

| (4.14) |

We can then compute a bootstrap distribution of

| (4.15) |

where B∗ is as defined in (4.13). Bootstrap p-values can then be calculated as in (3.8).
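A nonpivotal bootstrap test in the pooled tangent space can be sketched as below. The exact forms of (4.10) and (4.14)–(4.15) are not assumed. Instead, for illustration only, the sketch makes the following choices:

- B is the difference of the vecd coordinates of the two canonical sample means at the pooled canonical mean.
- The statistic is W = ‖B‖².
- Each bootstrap replicate is ‖B* − B‖², with the tangent point held fixed.
- The p-value is the fraction of replicates at least as large as the observed W.

The Logμ and vecd conventions are the same assumed ones as above, and all function names are hypothetical.

```python
import numpy as np


def _sym_fun(S, f):
    w, U = np.linalg.eigh(S)
    return (U * f(w)) @ U.T


def log_map(mu, V):
    """Assumed form of Log_mu(V) = mu^{1/2} log(mu^{-1/2} V mu^{-1/2}) mu^{1/2}."""
    r, ri = _sym_fun(mu, np.sqrt), _sym_fun(mu, lambda w: 1.0 / np.sqrt(w))
    return r @ _sym_fun(ri @ V @ ri, np.log) @ r


def canonical_mean(Vs, tol=1e-10, max_iter=200):
    """Unit-step averaging as in (4.7), started at the Frobenius mean."""
    q = np.mean(Vs, axis=0)
    for _ in range(max_iter):
        r, ri = _sym_fun(q, np.sqrt), _sym_fun(q, lambda w: 1.0 / np.sqrt(w))
        T = np.mean([_sym_fun(ri @ V @ ri, np.log) for V in Vs], axis=0)
        q = r @ _sym_fun(T, np.exp) @ r
        q = (q + q.T) / 2.0
        if np.linalg.norm(T) < tol:
            break
    return q


def vecd(Y):
    """Hypothetical vecd: diagonal, then sqrt(2) times the strict upper triangle."""
    iu = np.triu_indices(Y.shape[0], k=1)
    return np.concatenate([np.diag(Y), np.sqrt(2.0) * Y[iu]])


def tangent_difference(V1, V2, mu):
    """Assumed B: difference of the two canonical means in vecd coordinates at mu."""
    return vecd(log_map(mu, canonical_mean(V1))) - vecd(log_map(mu, canonical_mean(V2)))


def bootstrap_w_test(V1, V2, n_boot=10_000, seed=0):
    """Return (observed W = ||B||^2, bootstrap p-value)."""
    rng = np.random.default_rng(seed)
    V1, V2 = np.asarray(V1, dtype=float), np.asarray(V2, dtype=float)
    mu = canonical_mean(np.concatenate([V1, V2]))  # pooled canonical sample mean
    B = tangent_difference(V1, V2, mu)
    w_obs = float(B @ B)
    w_boot = np.empty(n_boot)
    for b in range(n_boot):
        s1 = V1[rng.integers(0, len(V1), size=len(V1))]  # resample group 1
        s2 = V2[rng.integers(0, len(V2), size=len(V2))]  # resample group 2
        d = tangent_difference(s1, s2, mu) - B  # replicate centered at the observed B
        w_boot[b] = d @ d
    return w_obs, float(np.mean(w_boot >= w_obs))
```

With two identical samples, B = 0 and the p-value is 1. With a strong scale shift between the groups (for example, multiplying every matrix of one group by 4), the observed statistic dwarfs the bootstrap replicates and the p-value is essentially 0.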

4.5. Simulation Study

To explore the performance of the hypothesis-testing procedures presented above, we conducted a simulation study with the same design as in Section 3.2. The results of this study are shown in Table 2. Both test procedures for the canonical means perform very similarly to the corresponding tests for the Frobenius means, in that the p-values are nearly the same.

Table 2:

Results of the simulation study using the canonical metric. n and m are as in Section 3.2. FSV is the Fréchet sample variance; each entry of the form “a/b” gives the value for group 1 and group 2, respectively. PvalueT is the p-value for the T2 bootstrap test; PvalueW is the p-value for the nonpivotal bootstrap test.

| n | m | Case 1 FSV | Case 1 PvalueT | Case 1 PvalueW | Case 2 FSV | Case 2 PvalueT | Case 2 PvalueW | Case 3 FSV | Case 3 PvalueT | Case 3 PvalueW |
|---|---|---|---|---|---|---|---|---|---|---|
| 6 | 6 | 5.2433/4.3517 | N/A | 0.2059 | 2.215/1.642 | N/A | 0.1073 | 2.5667/2.6636 | N/A | 0.0821 |
| 6 | 24 | .2252/.3796 | N/A | 0.7497 | .3671/.3279 | N/A | 0.0389 | .3184/.5010 | N/A | 0.1136 |
| 6 | 96 | .1049/.1505 | N/A | 0.1494 | .0932/.1096 | N/A | 0.0412 | .1186/.1345 | N/A | 0 |
| 6 | 192 | .0924/.0645 | N/A | 0.3497 | .0658/.0457 | N/A | 0.0947 | .0739/.0644 | N/A | 0 |
| 6 | 288 | .0340/.0210 | N/A | 0.4947 | .0361/.0283 | N/A | 0.0126 | .0465/.0386 | N/A | 0 |
| 18 | 6 | 4.3803/4.1406 | 0.4019 | 0.3956 | 2.5019/4.7915 | 0.6683 | 0.3786 | 4.0874/3.1783 | 0.0496 | 0.1167 |
| 18 | 24 | .5325/.3980 | 0.8601 | 0.8402 | .5511/.4719 | 0.3268 | 0.2049 | .4642/.7620 | 0.0024 | 0.0006 |
| 18 | 96 | .1164/.1413 | 0.4955 | 0.5422 | .1234/.1127 | 0.1137 | 0.1877 | .1175/.1416 | 0 | 0 |
| 18 | 192 | .0536/.0668 | 0.8909 | 0.5922 | .0474/.0762 | 0.0601 | 0.015 | .0758/.0676 | 0 | 0 |
| 18 | 288 | .0382/.0544 | 0.307 | 0.3762 | .0375/.0391 | 0.033 | 0.002 | .0460/.0467 | 0 | 0 |
| 36 | 6 | 3.6592/3.6854 | 0.6359 | 0.5911 | 3.7022/4.1293 | 0.0498 | 0.0637 | 4.8348/4.7676 | 0.0339 | 0.0169 |
| 36 | 24 | .6213/.5235 | 0.2355 | 0.2587 | .5224/.5685 | 0.0208 | 0.0228 | .5454/.6272 | 0.0001 | 0.0001 |
| 36 | 96 | .1137/.1144 | 0.6779 | 0.8799 | .1378/.1174 | 0.0005 | 0.0026 | .1396/.1426 | 0 | 0 |
| 36 | 192 | .0548/.0585 | 0.4863 | 0.3311 | .0746/.0679 | 0.0143 | 0.0058 | .0626/.0695 | 0 | 0 |
| 36 | 288 | .0429/.0418 | 0.6715 | 0.6275 | .0353/.0413 | 0.0001 | 0 | .0404/.0466 | 0 | 0 |

For this study, we also recorded the amount of time, in seconds, needed to calculate all 20,000 canonical means (10,000 for each group) in the bootstrap replications. These results are shown in Table 3. We additionally recorded the number of iterations needed for the original canonical sample means to converge for both groups. To initialize the algorithm for computing the canonical means, we used the Frobenius sample means.

Table 3:

Timing information for the simulation study using the canonical metric. Time is the time, in seconds, required to perform all 20,000 mean calculations needed for the bootstrap test. Iterations is the number of iterations needed for the averaging algorithm to converge for the original sample; each entry of the form “a/b” gives the value for group 1 and group 2, respectively.

| n | m | Case 1 Time (sec) | Case 1 Iterations | Case 2 Time (sec) | Case 2 Iterations | Case 3 Time (sec) | Case 3 Iterations |
|---|---|---|---|---|---|---|---|
| 6 | 6 | 772.7 | 12/10 | 477.6 | 8/6 | 543.6 | 8/7 |
| 6 | 24 | 261.4 | 4/4 | 287.3 | 4/4 | 308.8 | 4/4 |
| 6 | 96 | 226.1 | 3/3 | 227.8 | 3/3 | 225.2 | 3/3 |
| 6 | 192 | 224.0 | 3/3 | 229.8 | 3/3 | 240.5 | 3/3 |
| 6 | 288 | 208.0 | 2/3 | 232.2 | 3/3 | 234.2 | 3/3 |
| 18 | 6 | 1811.4 | 9/8 | 1622.5 | 7/9 | 1697.9 | 8/7 |
| 18 | 24 | 806.5 | 4/4 | 826.3 | 4/4 | 877.8 | 4/5 |
| 18 | 96 | 627.5 | 3/3 | 638.3 | 3/3 | 634.3 | 3/3 |
| 18 | 192 | 650.7 | 3/3 | 663.3 | 3/3 | 641.9 | 3/3 |
| 18 | 288 | 650.6 | 3/3 | 715.1 | 3/3 | 642.1 | 3/3 |
| 36 | 6 | 2923.9 | 6/7 | 3132.0 | 8/8 | 3380.2 | 8/9 |
| 36 | 24 | 1541.8 | 4/4 | 1569.3 | 4/4 | 1575.4 | 4/4 |
| 36 | 96 | 1300.9 | 3/3 | 1212.4 | 3/3 | 1248.3 | 3/3 |
| 36 | 192 | 1347.4 | 3/3 | 1289.6 | 3/3 | 1286.0 | 3/3 |
| 36 | 288 | 1383.1 | 3/3 | 1311.4 | 3/3 | 1360.6 | 3/3 |

It is interesting to compare the convergence behavior in the canonical-mean computations with what can be predicted from the general results stated in Section 4.3. For (Sym+(3),gcan), it can be shown that a sharp lower bound on sectional curvature is (this is essentially done in Rentmeesters and Absil (2011)). Thus, from (4.8), we have . Since we initialized the algorithm at the Frobenius mean q0, the distance from q0 to the furthest data point is the radius D of the smallest closed ball centered at q0 that contains all the data points. The algorithm always converged in our simulations, even though for about half of the (n,m) pairs we used, D was greater than . For the cases in which , we can compare the number of iterations needed for convergence to within the threshold we used (d(qk+1,qk) < 10−6) with the “worst case” number determined by (4.9), Nit(κ). Table 4 lists our findings for simulated data in an experiment separate from our bootstrap experiments, as well as for the actual DTI data used in Section 6.2.⁴
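The radius D used above can be computed directly from a sample. The sketch makes two assumptions. First, the canonical distance is taken to be d(A, B) = ‖log(A^{-1/2} B A^{-1/2})‖_F, that is, the metric normalized to tr(XY) at the identity. A different constant normalization would rescale D but not the curvature-normalized product D|δ0|^{1/2}. Second, q0 is the Frobenius mean, as in our initialization. The function names are hypothetical.

```python
import numpy as np


def canonical_distance(A, B):
    """Assumed d(A, B) = ||log(A^{-1/2} B A^{-1/2})||_F = sqrt(sum_i log(lam_i)^2),
    where lam_i are the (positive) eigenvalues of A^{-1} B."""
    lam = np.linalg.eigvals(np.linalg.solve(A, B)).real
    return float(np.sqrt(np.sum(np.log(lam) ** 2)))


def radius_from_frobenius_mean(Vs):
    """D = canonical distance from the Frobenius mean q0 to the furthest data point."""
    Vs = np.asarray(Vs, dtype=float)
    q0 = Vs.mean(axis=0)
    return max(canonical_distance(q0, V) for V in Vs)
```

For example, for the two-point sample {I3, diag(e², 1, 1)}, the Frobenius mean is diag((1 + e²)/2, 1, 1), and D = log((1 + e²)/2) ≈ 1.43.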

Since sectional curvature has dimensions of (length)−2, since the range of the sectional-curvature function at each point of (Sym+(3),gcan) is [δ0,0], where , and since the injectivity radius is infinite, a natural length-scale for this Riemannian manifold is . Thus the “normalized” radii obtained by dividing the values of D in Table 4 by give a meaningful measure of how localized the data were. We note that for the actual DTI data we used, D was less than for both groups. However, we do not yet have a suggestion for what multiples of should be used to make quantitative definitions of notions like “very localized data”. The largest value of D seen in our simulated data was approximately 3.7, which occurred for (n,m) = (36, 6), and came from a sample for which the algorithm converged in 9 iterations. In the numerical experiments mentioned in Rentmeesters and Absil (2011), the authors found this gradient-descent algorithm not to converge for their data with D ≥ 4. It seems likely that our observing convergence for a value of D close to one at which Rentmeesters and Absil (2011) observed non-convergence again reflects the difference between the distributions from which they and we simulated data. As mentioned earlier, Rentmeesters and Absil (2011) used data that were uniformly distributed over a ball. The probability density function for our simulated data is related to a Wishart distribution, so the data are concentrated around the mode. Thus, for our (n,m) = (36, 6) sample, the large value of D may have been due to a single outlier, whose influence on convergence behavior would be limited, whereas the uniform distribution on a ball used in Rentmeesters and Absil (2011) would have placed more data points near the boundary of the ball, more strongly influencing convergence behavior.

The fact that the algorithm converged in all our simulations, and that it converged faster (often much faster) than “predicted” by Nit(κ), is interesting and highlights the nature of the bounds given in Section 4.3. First, the radius is a very coarse bound, and is “critical” only for the proof of convergence in Groisser (2004). (Similarly, the somewhat larger radius in Remark 4.5 is a coarse bound that is simply what Afsari et al. (2011, Theorem 4.1) happens to imply for the unit-step-size algorithm.) Second, for , the convergence rate κ(D) is a bound on the slowest convergence we can ever see. Our simulated data were drawn from a probability distribution concentrated about the mode, whereas, for theoretical reasons discussed in Afsari et al. (2011), the slowest convergence is expected for data clouds that have a relatively large diameter and whose distribution with respect to direction in the tangent space at the mean is far from uniform. This expectation is supported by the fact that the number of iterations needed for convergence in our simulations was less than Nit(κ), with the difference increasing as D increased.

5. Coverage Probabilities for Confidence Regions

In the preceding simulation studies, we illustrated that the Frobenius and canonical methodologies perform similarly for hypothesis tests of equality of means. In each case, regardless of whether the T2 or W2 procedure was used, the p-values produced under the two metrics were very similar. Since the same data were used for both methods, this indicates that, for a given data set, the inference procedures will perform similarly. However, it is also important to examine how these procedures perform over repeated sampling.

To examine this, we performed an additional simulation study to compare coverage probabilities of nominal 95% confidence regions for the difference between the means of two populations. A summary of the results of this study is displayed in Table 5. Cases 1, 2, and 3 refer to the same scenarios considered in the previous studies. In Case 1 the means are, in fact, equal, so the probabilities for Case 1 are probabilities of true coverage. Since the means are not equal in Cases 2 and 3, the corresponding probabilities are probabilities of false coverage.

Table 5:

Coverage probabilities for confidence regions for the difference between means of two populations. Probabilities for Case 1 are probabilities of true coverage. Those for Cases 2 and 3 are probabilities of false coverage. Fro and Can denote the Frobenius and canonical procedures, respectively.

| n | m | Case 1 T2 Fro | Case 1 T2 Can | Case 1 W2 Fro | Case 1 W2 Can | Case 2 T2 Fro | Case 2 T2 Can | Case 2 W2 Fro | Case 2 W2 Can | Case 3 T2 Fro | Case 3 T2 Can | Case 3 W2 Fro | Case 3 W2 Can |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 6 | 24 | N/A | N/A | 0.918 | 0.926 | N/A | N/A | 0.890 | 0.900 | N/A | N/A | 0.566 | 0.580 |
| 6 | 96 | N/A | N/A | 0.890 | 0.894 | N/A | N/A | 0.782 | 0.780 | N/A | N/A | 0.012 | 0.012 |
| 6 | 192 | N/A | N/A | 0.924 | 0.924 | N/A | N/A | 0.676 | 0.684 | N/A | N/A | 0.000 | 0.000 |
| 18 | 24 | 0.962 | 0.964 | 0.930 | 0.928 | 0.932 | 0.918 | 0.860 | 0.862 | 0.146 | 0.140 | 0.070 | 0.000 |
| 18 | 96 | 0.974 | 0.976 | 0.958 | 0.950 | 0.772 | 0.770 | 0.584 | 0.592 | 0.000 | 0.000 | 0.000 | 0.000 |
| 18 | 192 | 0.962 | 0.960 | 0.920 | 0.908 | 0.434 | 0.438 | 0.246 | 0.248 | 0.000 | 0.000 | 0.000 | 0.000 |
| 36 | 24 | 0.952 | 0.960 | 0.940 | 0.942 | 0.834 | 0.834 | 0.788 | 0.808 | 0.000 | 0.000 | 0.000 | 0.000 |
| 36 | 96 | 0.958 | 0.952 | 0.932 | 0.926 | 0.350 | 0.348 | 0.278 | 0.296 | 0.000 | 0.000 | 0.000 | 0.000 |
| 36 | 192 | 0.942 | 0.948 | 0.950 | 0.944 | 0.048 | 0.046 | 0.030 | 0.032 | 0.000 | 0.000 | 0.000 | 0.000 |

In all but one scenario (Case 1, n = 36, m = 192, Frobenius), the T2 region has a higher coverage probability than the associated W2 region, as expected given the pivotal nature of the T2 statistic. Nevertheless, the W2 statistic has the advantage that it can be used even when n is small relative to p. For instance, the T2 statistic cannot be used with p = 3 for n = 6 because the sample covariances are frequently not invertible in the bootstrap resamples. For larger values of p, this deficiency of the T2 statistic would become more serious, since the dimension of the data is p(p + 1)/2, requiring increasingly large sample sizes as p increases. For Case 1, both procedures work reasonably well for both metrics in all settings. In Case 3, both procedures also work well (low false coverage) except in the one setting in which the sample size is small (n = 6) and the amount of variability is large (m = 24). This is to be expected given the sizeable differences between the means in Case 3. On the other hand, because the means differ only somewhat in Case 2, the procedures for both metrics perform particularly well only when the sample size is reasonably large and the variability is low (n = 36, m = 192). Finally, for both the T2 and W2 confidence regions, neither the Frobenius nor the canonical procedure performs uniformly better than the other; in fact, the coverage probabilities are typically quite close to each other.
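The rank deficiency behind the unavailability of T2 for n = 6 is easy to see numerically. For p = 3 the tangent-space data have dimension p(p + 1)/2 = 6. A sample covariance computed from n observations has rank at most n − 1, and resampling with replacement introduces ties that can only lower the rank further. The Gaussian data below are purely illustrative and are not the simulation design of Section 3.2.

```python
import numpy as np

p, n = 3, 6
dim = p * (p + 1) // 2                   # 6 free entries of a 3 x 3 symmetric matrix
rng = np.random.default_rng(1)
X = rng.standard_normal((n, dim))        # illustrative tangent-space coordinates, n = 6
S = np.cov(X, rowvar=False)              # 6 x 6 sample covariance
rank = np.linalg.matrix_rank(S)          # at most n - 1 = 5, so S cannot be inverted

boot = X[rng.integers(0, n, size=n)]     # one bootstrap resample (ties are likely)
rank_boot = np.linalg.matrix_rank(np.cov(boot, rowvar=False))
print(rank, rank_boot)
```

The same bound applies to each group's covariance in the two-sample setting, which is why only the nonpivotal W2 procedure is reported for n = 6.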

6. DTI Application

In recent years, there has been rapid development in the application of nonparametric statistical analysis on manifolds to medical imaging. In particular, data taking values in the space Sym+(3) appear in diffusion tensor imaging (DTI), a modality of magnetic resonance imaging (MRI) that allows visualization of the internal anatomical structure of the brain’s white matter (Basser and Pierpaoli, 1996; LeBihan et al., 2001). At each point in the brain, the local pattern of diffusion of the water molecules at that point is described by a diffusion tensor (DT), a 3 × 3 SPD matrix. A DTI image is a 3D rectangular array that contains at every voxel (volume pixel) a 3 × 3 SPD matrix that is an estimate of the true DT at the center of that voxel. (Thus DTI differs from most medical-imaging techniques in that, at each point, what the collected data are used to estimate is a matrix rather than a scalar quantity.) At each voxel, the estimated DT is constructed from measurements of the diffusion coefficient in at least six directions in three-dimensional space. The eigenvalues of the DT measure diffusivity, an indicator of the type of tissue and its health, while the eigenvectors relate to the spatial orientation of the underlying neural fibers.

A common statistical problem in DTI group studies is to find regions of the brain whose anatomical characteristics differ between two groups of subjects. The analysis typically consists of registering the images to a common template, so that each voxel corresponds to the same anatomical structure in all the images, and then applying two-sample tests at each voxel. To our knowledge, the existing statistical literature on DTI group comparisons is based on certain parametric assumptions, such as multivariate normality (Whitcher et al., 2007; Schwartzman et al., 2008b). But testing for multivariate normality requires large data sets (Székely and Rizzo, 2005; Alva and Estrada, 2009; Hanusz and Tarasińska, 2008), and such testing could not be done in those studies because the number of subjects was simply too small. Furthermore, while the individual DT estimates at each voxel could be modeled by a multivariate normal distribution under some measurement conditions (Basser and Pajevic, 2003), there is no evidence that the distribution across subjects is multivariate normal. We therefore prefer not to assume a specific probability model for the distribution of DTs across subjects, and we use the nonparametric methods described earlier in this paper to analyze the data.

6.1. Description of Data

For this application, our primary goal is to use nonparametric methodology to detect a significant difference between the means of the clinically normal and dyslexia groups. To illustrate these methods, we apply the methodology presented in Sections 3.1 and 4.4 to a DTI data set previously analyzed in Schwartzman et al. (2008a), comparing the means of the populations of DT images of dyslexic children with those of clinically normal counterparts. This data set consists of 12 spatially registered DT images belonging to two groups of children: a group of 6 children with normal reading abilities and a group of 6 children with a diagnosis of dyslexia. Here we present the analysis of a single voxel at the intersection of the corpus callosum and corona radiata in the frontal left hemisphere that was found in Schwartzman et al. (2008a) to exhibit the strongest difference between the two groups.

Table 6 shows the data at this voxel for all 12 subjects. The dij in the table are the entries of the DT on and above the diagonal (the below-diagonal entries would be superfluous since the DTs are symmetric).

The Frobenius sample means and the canonical sample means for the clinically normal and dyslexia groups are as follows:

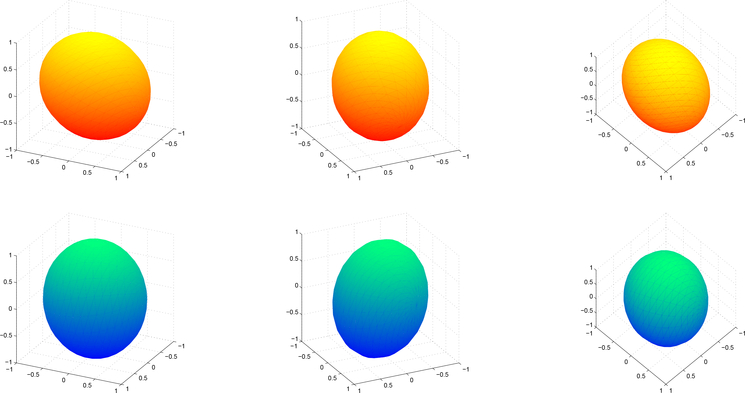

Diffusion tensors are commonly visualized as ellipsoids constructed from their spectral decompositions. Ellipsoids representing the two sample Frobenius means are shown in Figure 1, and those for the sample canonical means are shown in Figure 2. In both figures, the ellipsoids for both groups are shown from three views to better display the differences between the mean tensors.

Figure 1:

Three views of the ellipsoids for the sample Frobenius means of the clinically normal (top, red) and dyslexia (bottom, blue) groups

Figure 2:

Three views of the ellipsoids for the sample canonical means of the clinically normal (top, red) and dyslexia (bottom, blue) groups

6.2. Nonparametric Inference

We can perform the hypothesis tests presented in Sections 3.1 and 4.4 by repeatedly resampling observations from the original data. As in the simulation studies in the previous sections, we used 10,000 bootstrap resamples to perform the tests. However, since n1 = n2 = 6, the T2 tests cannot be used, so we can only consider the nonpivotal W2 bootstrap tests. For the Frobenius W2 test, the p-value is 0.0004, indicating a highly significant difference between the Frobenius means of the two groups. The p-value for the canonical W2 test is 0.0006, which indicates that there is also a highly significant difference between the canonical means.

Because both of the tests were able to detect significant differences between the means, it is of interest to further examine the data to see which entries of the matrices appear to differ. Table 7 displays the ranges of the marginal bootstrap distributions of both types of means for the clinically normal and dyslexia groups. The marginal bootstrap distributions for the Frobenius means are plotted in Figure 3 by iteration. As suggested by the ranges in Table 7, the marginal bootstrap distributions of the canonical means are nearly identical to those of the Frobenius means.
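The ranges in Table 7 are per-entry minima and maxima over the bootstrap replicates of a sample mean. A minimal sketch for the Frobenius case follows, where each bootstrap mean is simply an arithmetic mean of resampled tensors. The entry order d11, d22, d33, d12, d13, d23 matches Table 7. The function names and the default seed are hypothetical.

```python
import numpy as np

# Order of the marginals used in Table 7: d11, d22, d33, d12, d13, d23
ENTRY_ORDER = [(0, 0), (1, 1), (2, 2), (0, 1), (0, 2), (1, 2)]


def frobenius_bootstrap_means(Vs, n_boot=10_000, seed=0):
    """Bootstrap replicates of the Frobenius (arithmetic) sample mean; shape (n_boot, 3, 3)."""
    Vs = np.asarray(Vs, dtype=float)
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, len(Vs), size=(n_boot, len(Vs)))  # one row of indices per resample
    return Vs[idx].mean(axis=1)


def marginal_ranges(boot_means):
    """Return an array of shape (6, 2) holding (min, max) of each marginal."""
    boot_means = np.asarray(boot_means, dtype=float)
    cols = np.stack([boot_means[:, i, j] for i, j in ENTRY_ORDER], axis=1)
    return np.column_stack([cols.min(axis=0), cols.max(axis=0)])
```

The canonical columns of Table 7 would be obtained the same way, with each arithmetic mean replaced by an iterated canonical mean.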

Table 7:

Ranges of the marginal bootstrap distributions for the Frobenius and canonical sample means in the space of SPD matrices for the clinically normal and dyslexia groups.

| Marginal | Frobenius |

Canonical |

||||||

|---|---|---|---|---|---|---|---|---|

| Clinically Normal |

Dyslexia |

Clinically Normal |

Dyslexia |

|||||

| Min | Max | Min | Max | Min | Max | Min | Max | |

| d11 | 0.4921 | 0.8459 | 0.5643 | 0.6755 | 0.4902 | 0.8408 | 0.5626 | 0.6746 |

| d22 | 0.9033 | 1.1415 | 0.7299 | 0.8940 | 0.9018 | 1.1402 | 0.7269 | 0.8894 |

| d33 | 0.6986 | 0.8453 | 0.8232 | 1.0338 | 0.6942 | 0.8429 | 0.8425 | 1.0378 |

| d12 | −0.0421 | 0.0418 | −0.0880 | 0.0358 | −0.0422 | 0.0413 | −0.0887 | 0.0350 |

| d13 | −0.1829 | −0.0222 | −0.2289 | −0.1307 | −0.1852 | −0.0159 | −0.2283 | −0.1365 |

| d23 | −0.1798 | 0.0059 | −0.1791 | −0.0192 | −0.1788 | 0.0032 | −0.1645 | −0.0273 |

Figure 3:

Marginals of the bootstrap distribution for the Frobenius mean for d11, d22, d33, d12, d13, and d23; clinically normal (red) vs dyslexia (blue)

As shown in Table 7 and Figure 3, it appears likely that the Frobenius means of the clinically normal and dyslexia groups differ in the d22 and d33 marginals. The minimum value of the d22 marginal for the clinically normal group is greater than the maximum value for the dyslexia group, indicating that the ranges of this marginal do not overlap. For the d33 marginal, while there is a slight overlap in the ranges, closer inspection reveals that this is due to just two particular resamples out of the 10,000 considered. From examining the original data, it appears that these outlying occurrences are due to observation 1 from the dyslexia group appearing many times in these particular resamples.

Histograms of the bootstrap distributions for these two marginals are displayed in Figure 4 and show that there is a distinct separation in the bootstrap distributions for both the d22 and the d33 marginals of the Frobenius sample means. The marginal bootstrap distributions for the canonical sample means are nearly identical to those of the Frobenius sample means, suggesting a difference between the canonical means of the clinically normal and dyslexia groups in the same two marginals.

Figure 4:

Histograms of the marginal bootstrap distributions of the Frobenius mean for d22 and d33; clinically normal (red) vs dyslexia (blue).

To put these results in the context of the simulation study, we now consider the amount of variability present in the data and the angles between corresponding principal directions of the sample means. For the Frobenius means, the FSV is 0.0265 for the control group and 0.0106 for the dyslexia group. The angles between the principal directions of these means are 50.5974 degrees, 50.2119 degrees, and 7.2442 degrees. The FSV with respect to the canonical metric is 0.0739 for the control group and 0.0423 for the dyslexia group. The angles between the principal directions of the canonical means are 51.1738 degrees, 50.9964 degrees, and 7.0153 degrees.
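The two summaries in the preceding paragraph can be computed as sketched below. Two assumptions are made. The Frobenius FSV is taken to be the average squared Frobenius distance to the Frobenius sample mean, although the normalization in Section 3.2 may differ. Principal directions are paired by decreasing eigenvalue, and the absolute cosine is used because eigenvectors are only defined up to sign. The function names are hypothetical.

```python
import numpy as np


def frobenius_fsv(Vs):
    """Assumed Frobenius FSV: mean squared Frobenius distance to the Frobenius mean."""
    Vs = np.asarray(Vs, dtype=float)
    resid = Vs - Vs.mean(axis=0)
    return float(np.mean(np.sum(resid ** 2, axis=(1, 2))))


def principal_direction_angles(A, B):
    """Angles (degrees) between eigenvectors of A and B, paired by decreasing eigenvalue."""
    _, UA = np.linalg.eigh(A)
    _, UB = np.linalg.eigh(B)
    UA, UB = UA[:, ::-1], UB[:, ::-1]          # eigh returns eigenvalues in ascending order
    cos = np.abs(np.sum(UA * UB, axis=0))      # |cos|: eigenvectors have no preferred sign
    return np.degrees(np.arccos(np.clip(cos, 0.0, 1.0)))
```

For example, rotating diag(3, 2, 1) by 30 degrees about the third coordinate axis yields angles of 30, 30 and 0 degrees, a pattern similar in form to the two large angles and one small angle reported above.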

As shown throughout this section, the sample means and the associated marginal bootstrap distributions are numerically close for the two metrics. This is not surprising, since the data being averaged lie in a ball of relatively small radius with respect to either metric. Overall, both methods detect a significant difference between the means of the dyslexia and clinically normal groups using the nonpivotal bootstrap tests. Furthermore, the methods for both metrics detect differences in the bootstrap distributions of the d22 and d33 marginals. It should be kept in mind, however, that this particular voxel was chosen precisely for having shown a significant difference between the groups in a previous parametric analysis (Schwartzman et al., 2008a).

7. Discussion

In this paper, we have considered nonparametric methodologies, based on the nonparametric bootstrap, for comparing means of distributions of SPD matrices. The idea of using Fréchet-mean analysis for such data is motivated by previous literature on DTI analysis, including Chefd’hotel et al. (2004), Fletcher (2004), Arsigny et al. (2006), Schwartzman (2006), Zhu et al. (2007, 2009), and Dryden et al. (2009), which considered various non-Euclidean distances on the space of SPD matrices. We developed our nonparametric methodologies for two metrics on this space, the Frobenius and canonical metrics. While extending these methods to more general spaces, such as the spaces of p×p positive definite matrices or p×p symmetric matrices, may be desirable in some applications, doing so is highly non-trivial due to the differing natural geometries of these spaces. For instance, while the Frobenius metric may be applicable to such data, these spaces do not have an analogous canonical metric. Extensions of this methodology to such spaces therefore remain for future work.

To illustrate the effectiveness of the statistical methodology, we performed simulation studies for both metrics, showing that both the pivotal and nonpivotal procedures perform as desired. We also included an application of this methodology to analyzing the mean diffusion tensor at a voxel location in MRI images for dyslexic vs. clinically normal children. The voxel we used is the one that was found in Schwartzman et al. (2008a) to exhibit the strongest difference between the two groups. As illustrated in Section 6, the nonpivotal methodologies developed for both metrics were able to find a statistically significant difference between the means of these groups. In both the simulation study and the DTI application, the p-values obtained from the tests for the two metrics were very similar, suggesting that the procedures for both metrics perform well for comparing population means.

To compare computational cost, the data analysis procedures described in Sections 3.3 and 4.3 were each performed a number of times using MATLAB on a machine running Macintosh OS 10.8.5 on an Intel Core i5 processor running at 2.53 GHz. For the canonical metric, the computation times for various sample sizes were presented in Section 4.5. An iterative algorithm must be used to compute the canonical sample means, since there is no closed-form expression for them. We found the gradient-descent algorithm we used for these computations to be fast, as was also observed in Pennec (1999), Le (2001), Groisser (2004), Fletcher et al. (2004), Smith (1994), Edelman et al. (1998), Pennec et al. (2006), and Fletcher and Joshi (2007). For the calculations using the Frobenius metric, the computational time required to compute sample means was fairly constant regardless of sample size and variability: computing 20,000 sample means required a total of 1.02 seconds, on average. The MATLAB code for performing the tests for both metrics is available from the authors upon request.

For the DTI application, our methods, when applied to a single voxel, are fast. However, because bootstrapping requires repeated computation of the sample mean for each resample, application of the methods to the hundreds of thousands of voxels typically present in a DTI image remains computationally challenging. This is particularly true for the methods involving the canonical mean. As such, this problem is left for future work.

Acknowledgements

LE thanks the National Science Foundation for partial support from DMS-0805977. DO thanks the National Science Foundation for partial support from DMS-1106935. VP thanks the National Science Foundation for support from DMS-0805977 and DMS-1106935. AS thanks the National Institutes of Health for partial support from 1R21-EB-012177. We wish to thank the Statistical and Applied Mathematical Sciences Institute (SAMSI) and the Mathematical Biosciences Institute (MBI) for facilitating and partially supporting this collaboration.

A Appendix: A symmetric-space structure for Sym+(p)

A (Riemannian) symmetric space is a Riemannian manifold 𝓜 with the property that for each q ∈ 𝓜, there exists an isometry (a metric-preserving diffeomorphism) F: 𝓜 → 𝓜 such that F(q) = q and whose derivative at q (a linear map T_q𝓜 → T_q𝓜) is minus the identity. It can be shown that, for each q, there is never more than one such isometry. This isometry is often called the geodesic symmetry at q because, loosely speaking, it corresponds to “reflecting”, about q, every geodesic through q. We will see later that Sym+(p), endowed with the canonical metric (to be defined in Section A.2), is a symmetric space.
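For the affine-invariant (GL(p,ℝ)-invariant) metric on Sym+(p), the geodesic symmetry at M is known to be s_M(V) = M V⁻¹ M. That this is also the symmetry for gcan is an assumption here, pending the definition in Section A.2. The sketch below checks numerically the defining properties:

- s_M fixes M.
- s_M is an involution.
- Its derivative at M is minus the identity.
- It preserves the distance d(A, B) = (Σ log² λi)^{1/2}, where the λi are the eigenvalues of A⁻¹B.

The function names are hypothetical.

```python
import numpy as np


def geodesic_symmetry(M, V):
    """Assumed geodesic symmetry at M: s_M(V) = M V^{-1} M."""
    return M @ np.linalg.solve(V, M)


def spd_distance(A, B):
    """Affine-invariant distance sqrt(sum_i log(lam_i)^2), lam_i = eigenvalues of A^{-1} B."""
    lam = np.linalg.eigvals(np.linalg.solve(A, B)).real
    return float(np.sqrt(np.sum(np.log(lam) ** 2)))
```

A central finite difference, (s_M(M + tX) − s_M(M − tX))/(2t), recovers −X for small t, which is the "minus the identity" condition on the derivative.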

The group of isometries of any Riemannian manifold is a Lie group, and the study of symmetric spaces is carried out most efficiently when recast in terms of Lie groups. That is the approach we will take here. First, we review some terminology and features of group actions and exhibit the group action on Sym+(p) that will be of importance to us.

A.1. Group actions, homogeneous spaces, and Sym+(p)

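We recall some standard group-action terminology and features. Let $\mathcal{K}$ be an arbitrary group, let $e \in \mathcal{K}$ be the identity element, and let $\mathcal{M}$ be an arbitrary nonempty set. Let $\alpha$ be a map $\mathcal{K} \times \mathcal{M} \to \mathcal{M}$, and for all $k \in \mathcal{K}$, define $\alpha_k = \alpha(k, \cdot) : \mathcal{M} \to \mathcal{M}$. The map $\alpha$ is called a (left) action of $\mathcal{K}$ on $\mathcal{M}$ if the following two properties are satisfied:

$$\alpha_{k_1 k_2} = \alpha_{k_1} \circ \alpha_{k_2} \quad \text{for all } k_1, k_2 \in \mathcal{K}, \tag{A.1}$$

$$\alpha_e = \mathrm{id}_{\mathcal{M}}. \tag{A.2}$$

(If the order of composition on the right-hand side of (A.1) is reversed, $\alpha$ is called a right action.) Observe that (A.1)–(A.2) imply that, for all $k \in \mathcal{K}$, $\alpha_{k^{-1}} \circ \alpha_k = \alpha_k \circ \alpha_{k^{-1}}$ is the identity map $\mathrm{id}_{\mathcal{M}}$. Hence each map $\alpha_k$ is invertible, and $(\alpha_k)^{-1} = \alpha_{k^{-1}}$.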

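To apply this abstract idea concretely, we fix some notation. Let M(p,ℝ) denote the vector space of all real p × p matrices, and let GL(p,ℝ) ⊂ M(p,ℝ) be the set of invertible p × p matrices. Let O(p) ⊂ GL(p,ℝ) denote the set of orthogonal p × p matrices ($G \in O(p) \iff G^T = G^{-1}$). Recall that GL(p,ℝ) and O(p) are Lie groups, with identity element $I_p$, the p × p identity matrix. We denote the Lie algebras of these Lie groups by $\mathfrak{gl}(p,\mathbb{R})$ and $\mathfrak{so}(p)$, respectively. These Lie algebras can be canonically identified with spaces of matrices:

$$\mathfrak{gl}(p,\mathbb{R}) = M(p,\mathbb{R}), \qquad \mathfrak{so}(p) = \{A \in M(p,\mathbb{R}) : A^T = -A\}.$$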

The example of a group-action that is of greatest importance to us in this paper is the following.

EXAMPLE A.1

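For any G ∈ GL(p,ℝ) and any M ∈ Sym+(p), the matrix $GMG^T$ also lies in Sym+(p): it is symmetric, and for $x \neq 0$ we have $x^T G M G^T x = (G^T x)^T M (G^T x) > 0$ because $G^T x \neq 0$. For such G and M, let us define

$$\alpha(G, M) = \alpha_G(M) = G M G^T. \tag{A.3}$$

Then the map α: GL(p,ℝ) × Sym+(p) → Sym+(p) is smooth (i.e. infinitely differentiable) and is a left action of GL(p,ℝ) on Sym+(p); indeed, $\alpha_{G_1G_2}(M) = G_1G_2MG_2^TG_1^T = \alpha_{G_1}(\alpha_{G_2}(M))$ and $\alpha_{I_p}(M) = M$.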
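As a numerical sanity check of Example A.1, the NumPy sketch below verifies that $\alpha_G$ maps an SPD matrix to an SPD matrix and satisfies (A.1)–(A.2) up to floating-point error. It is our own illustration; the function names `alpha` and `is_spd` and the random test matrices are assumptions.

```python
import numpy as np

def alpha(G, M):
    """The action (A.3) of GL(p, R) on Sym+(p): alpha_G(M) = G M G^T."""
    return G @ M @ G.T

def is_spd(M):
    """True if M is (numerically) symmetric with positive eigenvalues."""
    return bool(np.allclose(M, M.T) and np.linalg.eigvalsh(M).min() > 0)

rng = np.random.default_rng(0)
p = 3
B = rng.standard_normal((p, p))
M = B @ B.T + np.eye(p)             # an SPD test matrix
G1 = rng.standard_normal((p, p))    # invertible with probability one
G2 = rng.standard_normal((p, p))

print(is_spd(alpha(G1, M)))                                     # stays in Sym+(p)
print(np.allclose(alpha(G1 @ G2, M), alpha(G1, alpha(G2, M))))  # property (A.1)
print(np.allclose(alpha(np.eye(p), M), M))                      # property (A.2)
```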

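Let α be a (general) left action of a group $\mathcal{K}$ on a set $\mathcal{M}$. Given such an action, for $q \in \mathcal{M}$, the isotropy group at q, or stabilizer of q, is the subgroup $\mathcal{K}_q = \{k \in \mathcal{K} : \alpha_k(q) = q\}$. The action is called transitive if for all $q_1, q_2 \in \mathcal{M}$, there exists $k \in \mathcal{K}$ such that $\alpha_k(q_1) = q_2$. Because of (A.1), if we are given any “basepoint” $q_0 \in \mathcal{M}$, a necessary and sufficient condition for transitivity is that for all $q \in \mathcal{M}$, there exists $k \in \mathcal{K}$ such that $\alpha_k(q_0) = q$ (if $\alpha_{k_1}(q_0) = q_1$ and $\alpha_{k_2}(q_0) = q_2$, then $\alpha_{k_2k_1^{-1}}(q_1) = q_2$).

Assume now that the action above is transitive. Fix a point $q_0 \in \mathcal{M}$, and let $\mathcal{H} = \mathcal{K}_{q_0}$ be the isotropy group at $q_0$. For each $q \in \mathcal{M}$, let $C_q = \{k \in \mathcal{K} : \alpha_k(q_0) = q\}$, the set of group elements that carry $q_0$ to $q$. Note that $C_{q_0}$ is just the isotropy group $\mathcal{H}$. For each $q$, transitivity guarantees that $C_q$ is nonempty, and if $k_1, k_2 \in C_q$, then $\alpha_{k_1^{-1}k_2}(q_0) = \alpha_{k_1}^{-1}(q) = q_0$, so $k_1^{-1}k_2 \in \mathcal{H}$. Thus $k_2$ lies in the left coset $k_1\mathcal{H}$. It is easy to check that, conversely, if $k \in k_1\mathcal{H}$, then $k \in C_q$. Thus the set $C_q$ is precisely the coset $k_1\mathcal{H}$, where $k_1$ is any element of $C_q$. For later use, we record this fact in the following form:

$$C_q = k_1\mathcal{H} \quad \text{for every } k_1 \in C_q. \tag{A.4}$$

The set of all left $\mathcal{H}$-cosets in $\mathcal{K}$ is denoted $\mathcal{K}/\mathcal{H}$. The analysis above shows that the assignment $q \mapsto C_q$ is a 1–1 correspondence

$$\mathcal{M} \longleftrightarrow \mathcal{K}/\mathcal{H}. \tag{A.5}$$

A homogeneous space for a Lie group $\mathcal{K}$ is a manifold $\mathcal{M}$ together with a smooth, transitive left action of $\mathcal{K}$ on $\mathcal{M}$.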

REMARK A.2

For any Lie-group action on a manifold, the isotropy group of any point is always a (topologically) closed subgroup. An important theorem in Lie theory (Helgason, 1978, Theorem II.4.2, p. 123) is that if $\mathcal{K}$ is a Lie group and $\mathcal{H} \subset \mathcal{K}$ is a closed subgroup, then $\mathcal{K}/\mathcal{H}$ inherits the structure of a smooth manifold; furthermore, if $\mathcal{M}$ is a homogeneous space for $\mathcal{K}$ with isotropy group $\mathcal{H}$ at some point $q_0$, then the map $q \mapsto C_q$ giving the correspondence (A.5) is a diffeomorphism.

Returning to our fundamental Example A.1, and taking the element $I_p$ ∈ Sym+(p) as the basepoint of this space, we have the following:

PROPOSITION A.3

The action α of GL(p,ℝ) on Sym+(p) is transitive, and the isotropy group at $I_p$ is O(p).

Proof. Let M ∈ Sym+(p) be arbitrary and let $G = M^{1/2}$ be the unique symmetric positive-definite square root of M. Then $\alpha_G(I_p) = GG^T = G^2 = M$ (the middle equality uses the symmetry of G; an arbitrary square root of M need not satisfy $GG^T = M$). Hence α is transitive. By definition of “isotropy group” and the map α, the isotropy group at $I_p$ is $\{G \in GL(p,\mathbb{R}) : GG^T = I_p\}$, which is exactly O(p).
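The proof of Proposition A.3 can be mirrored numerically. In this sketch (illustrative only; `spd_sqrt` and `random_orthogonal` are hypothetical helper names), the SPD square root is computed from an eigendecomposition, and right-multiplying it by an orthogonal matrix gives another element of the set $C_M$ of matrices carrying $I_p$ to M, in line with (A.4).

```python
import numpy as np

def spd_sqrt(M):
    """Unique SPD square root of an SPD matrix, via its eigendecomposition."""
    w, V = np.linalg.eigh(M)
    return (V * np.sqrt(w)) @ V.T

def random_orthogonal(p, rng):
    """An orthogonal matrix from the QR factorization of a Gaussian matrix."""
    Q, _ = np.linalg.qr(rng.standard_normal((p, p)))
    return Q

rng = np.random.default_rng(1)
p = 4
B = rng.standard_normal((p, p))
M = B @ B.T + np.eye(p)

G = spd_sqrt(M)
H = random_orthogonal(p, rng)
print(np.allclose(G @ G.T, M))              # True: G carries I_p to M
print(np.allclose((G @ H) @ (G @ H).T, M))  # True: so does every element of G O(p)
print(np.linalg.det(G) > 0)                 # True: G lies in GL+(p, R)
```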

Hence the assignment $M \mapsto C_M$, M ∈ Sym+(p), sets up a 1–1 correspondence

$$\mathrm{Sym}^+(p) \longleftrightarrow GL(p,\mathbb{R})/O(p). \tag{A.6}$$

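The proof of Proposition A.3 showed more than was stated: the square root $M^{1/2}$ used there is positive definite and hence has positive determinant, so if we restrict α, in its first argument, to the subgroup GL+(p,ℝ) ⊂ GL(p,ℝ) consisting of positive-determinant matrices, the restricted action is still transitive. For this restricted action, the isotropy group at $I_p$ is $O(p) \cap GL^+(p,\mathbb{R}) = SO(p)$, the orthogonal matrices of determinant 1. Thus, in addition to (A.6), we also have a 1–1 correspondence

$$\mathrm{Sym}^+(p) \longleftrightarrow GL^+(p,\mathbb{R})/SO(p). \tag{A.7}$$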

A.2. Riemannian homogeneous spaces, symmetric spaces, and the canonical metric on Sym+(p)

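Let α be a smooth action of a Lie group $\mathcal{K}$ on a manifold $\mathcal{M}$. For $k \in \mathcal{K}$ and $q \in \mathcal{M}$, let $(d\alpha_k)_q : T_q\mathcal{M} \to T_{\alpha_k(q)}\mathcal{M}$ be the derivative of the map $\alpha_k : \mathcal{M} \to \mathcal{M}$ at $q$. A Riemannian metric $g$ on $\mathcal{M}$ is called $\mathcal{K}$-invariant if $\alpha_k$ is an isometry for all $k \in \mathcal{K}$; i.e. if

$$g_{\alpha_k(q)}\big((d\alpha_k)_q(u), (d\alpha_k)_q(v)\big) = g_q(u, v) \quad \text{for all } k \in \mathcal{K},\ q \in \mathcal{M},\ u, v \in T_q\mathcal{M}. \tag{A.8}$$

(In other words, for all $k$, $q$ as above, $(d\alpha_k)_q$ is a linear isometry from the inner-product space $(T_q\mathcal{M}, g_q)$ to the inner-product space $(T_{\alpha_k(q)}\mathcal{M}, g_{\alpha_k(q)})$.) A Riemannian homogeneous space for a Lie group $\mathcal{K}$ is a Riemannian manifold $(\mathcal{M}, g)$, where $\mathcal{M}$ is a homogeneous space for $\mathcal{K}$ and the Riemannian metric $g$ is $\mathcal{K}$-invariant. The following is a standard result from the theory of Riemannian homogeneous spaces.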

LEMMA A.4

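Let α be a smooth, transitive action of a Lie group $\mathcal{K}$ on a manifold $\mathcal{M}$, let $q_0 \in \mathcal{M}$, and let $\mathcal{H}$ be the isotropy group at $q_0$. An inner product $g_{q_0}$ on $T_{q_0}\mathcal{M}$ can be extended to a $\mathcal{K}$-invariant Riemannian metric on $\mathcal{M}$ if and only if $g_{q_0}$ is $\mathcal{H}$-invariant, i.e. $g_{q_0}\big((d\alpha_h)_{q_0}(u), (d\alpha_h)_{q_0}(v)\big) = g_{q_0}(u, v)$ for all $h \in \mathcal{H}$ and $u, v \in T_{q_0}\mathcal{M}$, in which case there is a unique $\mathcal{K}$-invariant extension.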

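Partial proof. Necessity is obvious. To prove sufficiency, assume that $g_{q_0}$ is $\mathcal{H}$-invariant. For each $q \in \mathcal{M}$, choose an arbitrary $k_1 \in C_q$ and define an inner product $g_q$ on $T_q\mathcal{M}$ by $g_q(u, v) = g_{q_0}\big((d\alpha_{k_1})_{q_0}^{-1}(u), (d\alpha_{k_1})_{q_0}^{-1}(v)\big)$. Then clearly (A.8) is satisfied with $q = q_0$ and $k = k_1$. Furthermore, $g_q$ is the only inner product on $T_q\mathcal{M}$ for which this is true, establishing uniqueness. Then for any $k \in C_q$, we have $k = k_1h$ for some $h \in \mathcal{H}$ (see (A.4)), and using the group-action properties and the Chain Rule for maps of manifolds, we have

$$(d\alpha_k)_{q_0} = (d\alpha_{k_1})_{q_0} \circ (d\alpha_h)_{q_0}.$$

A straightforward computation then shows that for $u, v \in T_{q_0}\mathcal{M}$ we have

$$g_q\big((d\alpha_k)_{q_0}(u), (d\alpha_k)_{q_0}(v)\big) = g_{q_0}\big((d\alpha_h)_{q_0}(u), (d\alpha_h)_{q_0}(v)\big) = g_{q_0}(u, v).$$

Hence for all $k \in C_q$, $(d\alpha_k)_{q_0}$ is a linear isometry from $(T_{q_0}\mathcal{M}, g_{q_0})$ to $(T_q\mathcal{M}, g_q)$, and $\big((d\alpha_k)_{q_0}\big)^{-1} = (d\alpha_{k^{-1}})_q$ is a linear isometry in the other direction. Now let $q_1 \in \mathcal{M}$ and $k \in \mathcal{K}$ be arbitrary, and let $q_2 = \alpha_k(q_1)$. Transitivity implies that there exist $k_i \in C_{q_i}$, $i = 1, 2$, such that $k_2 = kk_1$ (choose any $k_1 \in C_{q_1}$; then $\alpha_{kk_1}(q_0) = \alpha_k(q_1) = q_2$). Then $(d\alpha_k)_{q_1} = (d\alpha_{k_2})_{q_0} \circ (d\alpha_{k_1^{-1}})_{q_1}$, a composition of linear isometries, hence a linear isometry.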

It remains only to establish that the assignment q ↦ $g_q$ is smooth. This requires a technical result from Lie theory whose statement would require additional definitions and a significant digression from the main path of this paper. We refer the reader to Cheeger and Ebin (1975, Chapter 3) for these details.

Returning to the example that concerns us, Sym+(p) is an open subset of the vector space Sym(p) of symmetric p × p matrices, so for each M ∈ Sym+(p) there is a canonical isomorphism

$$\iota_M : T_M\mathrm{Sym}^+(p) \to \mathrm{Sym}(p). \tag{A.9}$$

We make use of the isomorphisms $\iota_M$ to express various formulas in terms of the fixed, concrete vector space Sym(p), rather than the M-dependent, abstract vector space $T_M\mathrm{Sym}^+(p)$.

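Convention. We will write tangent vectors of Sym+(p) using capital letters $A, B$, etc., and elements of Sym(p) (viewed as the image of $\iota_M$ for some M) as $\tilde A, \tilde B$, etc. When working at a point M ∈ Sym+(p) that is unambiguous from context, if we are given an element $A \in T_M\mathrm{Sym}^+(p)$, then “$\tilde A$” means $\iota_M(A)$; if we are given an element $\tilde A$ of Sym(p), then “A” means $\iota_M^{-1}(\tilde A)$. Similarly, given an inner product $g_M$ on $T_M\mathrm{Sym}^+(p)$, we use $\tilde g_M$ to denote the inner product on Sym(p) defined by $\tilde g_M(\tilde A, \tilde B) = g_M(A, B)$; given an inner product $\tilde g_M$ on Sym(p), we use $g_M$ to denote the inner product defined by this same equation.

Also, for M ∈ Sym+(p), G ∈ GL(p,ℝ), we write

$$(d\alpha_G)_M : T_M\mathrm{Sym}^+(p) \to T_{GMG^T}\mathrm{Sym}^+(p)$$

for the derivative of $\alpha_G$ at M. Since $\alpha_G$ is the restriction to Sym+(p) of the linear map $X \mapsto GXG^T$ of Sym(p), with these conventions we have

$$\widetilde{(d\alpha_G)_M(A)} = G\tilde A G^T \quad \text{for all } A \in T_M\mathrm{Sym}^+(p). \tag{A.10}$$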

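The Frobenius (Euclidean) inner product on $T_{I_p}\mathrm{Sym}^+(p)$ is given by $g_{I_p}(A, B) = \mathrm{tr}(\tilde A\tilde B)$, where $\tilde A = \iota_{I_p}(A)$ and $\tilde B = \iota_{I_p}(B)$. From Proposition A.3, the isotropy subgroup of the action (A.3) at $I_p$ is the orthogonal group O(p). Using equation (A.10) we see that for H ∈ O(p),

$$g_{I_p}\big((d\alpha_H)_{I_p}(A), (d\alpha_H)_{I_p}(B)\big) = \mathrm{tr}\big(H\tilde AH^T H\tilde BH^T\big) = \mathrm{tr}\big(H\tilde A\tilde BH^T\big) = \mathrm{tr}(\tilde A\tilde B) = g_{I_p}(A, B).$$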

Therefore, by Lemma A.4, we can make the following definition:

DEFINITION A.5

The canonical metric $g_{\mathrm{can}}$ on Sym+(p) is the unique GL(p,ℝ)-invariant extension of the Frobenius inner product on $T_{I_p}\mathrm{Sym}^+(p)$.

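As shown in the (partial) proof of Lemma A.4, the canonical metric is obtained from $g_{I_p}$ as follows. (For simplicity, we write g for $g_{\mathrm{can}}$ in this discussion.) Given M ∈ Sym+(p), let G ∈ GL(p,ℝ) be such that $\alpha_G(I_p) = M$ (see Proposition A.3). The canonical inner product is given at M by $g_M(A, B) = g_{I_p}\big((d\alpha_{G^{-1}})_M(A), (d\alpha_{G^{-1}})_M(B)\big)$, for all $A, B \in T_M\mathrm{Sym}^+(p)$. Explicitly, we have $GG^T = M$, and therefore, using (A.10) and writing $G^{-T} = (G^{-1})^T$,

$$\tilde g_M(\tilde A, \tilde B) = \mathrm{tr}\big(G^{-1}\tilde AG^{-T}\,G^{-1}\tilde BG^{-T}\big) = \mathrm{tr}\big(M^{-1}\tilde AM^{-1}\tilde B\big). \tag{A.11}$$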
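A short NumPy sketch of (A.11) checks two properties used above: at $I_p$ the canonical inner product reduces to the Frobenius inner product, and it is unchanged when $M$, $\tilde A$, $\tilde B$ are all transformed by the same $G \in GL(p,\mathbb{R})$, as in (A.8) and (A.10). This is our own illustration; `canonical_inner` and `random_sym` are hypothetical names, and tangent vectors are represented directly by their images in Sym(p).

```python
import numpy as np

def canonical_inner(M, A, B):
    """(A.11): tr(M^{-1} A M^{-1} B), with A, B tangent vectors written in Sym(p)."""
    return np.trace(np.linalg.solve(M, A) @ np.linalg.solve(M, B))

def random_sym(p, rng):
    """A random symmetric matrix, used as a tangent vector."""
    S = rng.standard_normal((p, p))
    return (S + S.T) / 2

rng = np.random.default_rng(2)
p = 3
C = rng.standard_normal((p, p))
M = C @ C.T + np.eye(p)
A, B = random_sym(p, rng), random_sym(p, rng)
G = rng.standard_normal((p, p)) + 3 * np.eye(p)   # generically invertible

# At I_p the canonical metric is the Frobenius inner product tr(AB).
at_identity = canonical_inner(np.eye(p), A, B)
# GL(p, R)-invariance: G A G^T and G B G^T at G M G^T have the same inner product as A, B at M.
lhs = canonical_inner(G @ M @ G.T, G @ A @ G.T, G @ B @ G.T)
rhs = canonical_inner(M, A, B)
print(np.isclose(at_identity, np.sum(A * B)), np.isclose(lhs, rhs))
```

Using `np.linalg.solve` instead of forming $M^{-1}$ explicitly is a standard numerical choice; it does not change the quantity computed.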

REMARK A.6

Schwartzman (2006) gives an alternate proof of the GL(p,ℝ)-invariance, by noting that, conceptually, the point M ∈ Sym+(p) is a translation of the identity $I_p$ by the group action, $M = GI_pG^T$, and that the final expression in (A.11) does not depend on the specific choice of G.

As mentioned earlier in this section, the criteria for a Riemannian manifold to be a symmetric space can be expressed purely in terms of Lie groups. The reason for this is that the isometry group of a (connected) symmetric space $(\mathcal{M}, g)$ can be shown always to act transitively (and smoothly) on $\mathcal{M}$. Thus a symmetric space is always a homogeneous space for its isometry group.

While every Riemannian symmetric space is a Riemannian homogeneous space, the converse is not true. Rather than give the most general Lie-theoretic characterization of symmetric spaces, we will simply state a proposition giving sufficient conditions for a homogeneous space to be a symmetric space. The reader may consult Helgason (1978) or Kobayashi and Nomizu (1969) for proofs.

Before stating the proposition, we recall that an involution of a group 𝒢 is an isomorphism $\sigma : \mathcal{G} \to \mathcal{G}$ such that $\sigma \circ \sigma$ is the identity map $\mathrm{id}_{\mathcal{G}}$.

PROPOSITION A.7

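Let $(\mathcal{M}, g)$ be a Riemannian homogeneous space for a connected Lie group $\mathcal{K}$, and let $\mathcal{H}$ be the isotropy group, at some point $q_0 \in \mathcal{M}$, for the $\mathcal{K}$-action. Suppose there exists a smooth involution σ of $\mathcal{K}$ whose fixed-point set is $\mathcal{H}$. Then $(\mathcal{M}, g)$ is a symmetric space.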

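To apply Proposition A.7 to our space $(\mathrm{Sym}^+(p), g_{\mathrm{can}})$, we use the characterization (A.7) of Sym+(p) (because the group GL+(p,ℝ) is connected, while GL(p,ℝ) is not); note that $g_{\mathrm{can}}$, being GL(p,ℝ)-invariant, is in particular GL+(p,ℝ)-invariant. Define σ : GL+(p,ℝ) → GL+(p,ℝ) by $\sigma(G) = (G^T)^{-1}$; it maps GL+(p,ℝ) to itself since $\det\sigma(G) = 1/\det G$, and it is a homomorphism since $((GH)^T)^{-1} = (G^T)^{-1}(H^T)^{-1}$. Then σ is a smooth involution whose fixed-point set is exactly the subgroup SO(p). This yields the following corollary, well known to differential geometers (cf. Helgason (1978, Section VI.2) or Freed and Groisser (1989)).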
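The algebraic properties of σ required by Proposition A.7 can be spot-checked numerically. The sketch below is illustrative (`sigma` is a hypothetical name): it tests the homomorphism and involution identities on random matrices (these identities hold on all of GL(p,ℝ)), checks that an element of SO(p) is fixed, and checks that $\det\sigma(G)\det G = 1$, so σ maps GL+(p,ℝ) to itself.

```python
import numpy as np

def sigma(G):
    """The involution sigma(G) = (G^T)^{-1}."""
    return np.linalg.inv(G.T)

rng = np.random.default_rng(3)
p = 3
G = rng.standard_normal((p, p))
H = rng.standard_normal((p, p))
Q, _ = np.linalg.qr(rng.standard_normal((p, p)))
if np.linalg.det(Q) < 0:
    Q[:, 0] *= -1                 # flip one column so that Q lies in SO(p)

print(np.allclose(sigma(G @ H), sigma(G) @ sigma(H)))        # homomorphism
print(np.allclose(sigma(sigma(G)), G))                        # involution
print(np.allclose(sigma(Q), Q))                               # SO(p) is fixed
print(np.isclose(np.linalg.det(sigma(G)) * np.linalg.det(G), 1.0))
```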

COROLLARY A.8

$(\mathrm{Sym}^+(p), g_{\mathrm{can}})$ is a symmetric space.

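The map $d\sigma|_{I_p} : \mathfrak{gl}(p,\mathbb{R}) \to \mathfrak{gl}(p,\mathbb{R})$ is given by $d\sigma|_{I_p}(A) = -A^T$, whose (+1)-eigenspace $\mathfrak{h}$ is exactly the space $\mathfrak{so}(p)$ of antisymmetric matrices, and whose (−1)-eigenspace is $\mathfrak{m} = \mathrm{Sym}(p)$. Define $\pi : GL^+(p,\mathbb{R}) \to \mathrm{Sym}^+(p)$ by $\pi(G) = GG^T$. The derivative $d\pi|_{I_p}$ is given by $d\pi|_{I_p}(A) = A + A^T$ (here we are slightly abusing notation, by not writing explicitly the appropriate analogs of the maps $\iota_{(\cdot)}$ defined earlier), which annihilates $\mathfrak{h}$ and carries $\mathfrak{m}$ isomorphically to $T_{I_p}\mathrm{Sym}^+(p) = \mathrm{Sym}(p)$ (on $\mathfrak{m}$ it is simply $A \mapsto 2A$). Theorem IV.4.2 in Helgason (1978) yields a formula for the curvature of a symmetric space in terms of the Lie-bracket operation (in our case, just the commutator of matrices) on the (−1)-eigenspace of $d\sigma|_e$ (in our case, $e = I_p$). In our case, the sectional curvature of the two-plane spanned by $\{d\pi|_{I_p}(A), d\pi|_{I_p}(B)\}$, where $A, B \in \mathfrak{m}$ are orthonormal with respect to the Frobenius inner product, is

$$K(A, B) = \tfrac{1}{4}\,\mathrm{tr}\big([A, B]^2\big) = -\tfrac{1}{4}\,\big\|[A, B]\big\|_F^2;$$

cf. Freed and Groisser (1989, p. 327). This is non-positive since the commutator $[A, B] = AB - BA$ of symmetric matrices is antisymmetric, so that $\mathrm{tr}([A, B]^2) = -\mathrm{tr}\big([A, B][A, B]^T\big) \le 0$. Since $(\mathrm{Sym}^+(p), g_{\mathrm{can}})$ is also complete and simply connected, it is therefore a Cartan–Hadamard manifold.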
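The sign argument for the sectional curvature can be illustrated numerically. The sketch below assumes the normalization $K = \frac14\mathrm{tr}([A,B]^2)$ for a Frobenius-orthonormal pair $A, B \in \mathrm{Sym}(p)$; this normalization is our own derivation from Helgason's curvature formula together with $d\pi|_{I_p}(A) = 2A$ on $\mathfrak{m}$, and the function name is hypothetical. The function first orthonormalizes an arbitrary pair of linearly independent symmetric matrices by Gram–Schmidt, so it depends only on the plane they span.

```python
import numpy as np

def sectional_curvature_at_identity(A, B):
    """K = (1/4) tr([A, B]^2) for the plane spanned by symmetric A, B at I_p.

    Assumes A and B are linearly independent; they are first made
    orthonormal in the Frobenius inner product.
    """
    A = A / np.linalg.norm(A)                 # Frobenius norm
    B = B - np.sum(A * B) * A
    B = B / np.linalg.norm(B)
    C = A @ B - B @ A                         # antisymmetric for symmetric A, B
    return 0.25 * np.trace(C @ C)             # equals -||C||_F^2 / 4 <= 0

X = np.diag([1.0, -1.0])
Y = np.array([[0.0, 1.0], [1.0, 0.0]])
print(sectional_curvature_at_identity(X, Y))                   # approximately -0.5
print(sectional_curvature_at_identity(X, np.diag([2.0, 3.0])))  # 0.0 (commuting pair)
```

Commuting (for example, simultaneously diagonal) directions span flat planes, whereas non-commuting directions give strictly negative curvature.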

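The characterization of symmetric spaces as (special) homogeneous spaces allows for a particularly simple characterization of geodesics (see Helgason (1978, Theorem IV.3.3(iii))), which we state here just for $(\mathrm{Sym}^+(p), g_{\mathrm{can}})$: for $A \in T_{I_p}\mathrm{Sym}^+(p)$, the geodesic γ with $\gamma(0) = I_p$, $\gamma'(0) = A$, is given by

$$\gamma(t) = \pi\big(\exp(t\tilde A/2)\big) = \exp(t\tilde A/2)\,\exp(t\tilde A/2)^T = \exp(t\tilde A), \qquad t \in \mathbb{R}, \tag{A.12}$$

where exp : M(p,ℝ) → GL+(p,ℝ) is the matrix exponential function.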

COROLLARY A.9

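The Riemannian manifold $(\mathrm{Sym}^+(p), g_{\mathrm{can}})$ is complete, and its Riemannian exponential map at $I_p$ is given by

$$\mathrm{Exp}_{I_p}(A) = \exp(\tilde A), \qquad A \in T_{I_p}\mathrm{Sym}^+(p). \tag{A.13}$$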

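For general M ∈ Sym+(p), for all G ∈ GL(p,ℝ) that satisfy $GG^T = M$, the Riemannian exponential map $\mathrm{Exp}_M : T_M\mathrm{Sym}^+(p) \to \mathrm{Sym}^+(p)$ is given by

$$\mathrm{Exp}_M(A) = G\exp\big(G^{-1}\tilde AG^{-T}\big)G^T. \tag{A.14}$$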

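Proof. By (A.12), every geodesic through $I_p$ is defined for all $t \in \mathbb{R}$, i.e. $\mathrm{Exp}_{I_p}$ is defined on all of $T_{I_p}\mathrm{Sym}^+(p)$; completeness therefore follows from the Hopf–Rinow Theorem (Cheeger and Ebin, 1975, Theorem 1.8). Equation (A.13) is also immediate from (A.12), setting t = 1.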

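For (A.14), first consider, more generally, an arbitrary Riemannian manifold $(\mathcal{M}, g)$, and suppose $F : \mathcal{M} \to \mathcal{M}$ is an isometry. It is easily seen that F carries geodesics to geodesics. Since a geodesic is determined uniquely by its basepoint and initial tangent vector, it follows that if we denote by $\gamma_{(q,v)}$ the geodesic with basepoint q and initial tangent vector $v \in T_q\mathcal{M}$, then $\gamma_{(F(q),(dF)_q(v))} = F \circ \gamma_{(q,v)}$. Note that, by definition, $\mathrm{Exp}_q(v) = \gamma_{(q,v)}(1)$.

Now apply this to $(\mathrm{Sym}^+(p), g_{\mathrm{can}})$, with $F = \alpha_G$ and with M, G as in the hypotheses. Using (A.10), we find that for $A \in T_M\mathrm{Sym}^+(p)$,

$$\widetilde{(d\alpha_{G^{-1}})_M(A)} = G^{-1}\tilde AG^{-T}, \qquad (d\alpha_G)_{I_p}\big((d\alpha_{G^{-1}})_M(A)\big) = A. \tag{A.15}$$

Replacing q with $I_p$ and v with $(d\alpha_{G^{-1}})_M(A)$ in the identity above gives $\mathrm{Exp}_M(A) = \alpha_G\big(\mathrm{Exp}_{I_p}((d\alpha_{G^{-1}})_M(A))\big)$, and (A.14) follows from (A.13) and (A.15).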
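Formula (A.14) is straightforward to implement. In the sketch below (illustrative; `expm_sym` and `riem_exp` are hypothetical names, and the matrix exponential of a symmetric matrix is computed through its eigendecomposition), we check numerically that the result does not depend on the choice of factor G with $GG^T = M$ (a Cholesky factor versus the symmetric square root, which differ by an orthogonal factor), and that $t \mapsto \mathrm{Exp}_M(tA)$ leaves M with velocity $\tilde A$.

```python
import numpy as np

def expm_sym(S):
    """Matrix exponential of a symmetric matrix via its eigendecomposition."""
    S = (S + S.T) / 2
    w, V = np.linalg.eigh(S)
    return (V * np.exp(w)) @ V.T

def riem_exp(G, A):
    """(A.14): Exp_M(A) = G exp(G^{-1} A G^{-T}) G^T for any G with G G^T = M."""
    Gi = np.linalg.inv(G)
    return G @ expm_sym(Gi @ A @ Gi.T) @ G.T

rng = np.random.default_rng(5)
p = 3
C = rng.standard_normal((p, p))
M = C @ C.T + np.eye(p)
S = rng.standard_normal((p, p))
A = (S + S.T) / 2                        # tangent vector, written in Sym(p)

G_chol = np.linalg.cholesky(M)           # lower triangular, G G^T = M
w, V = np.linalg.eigh(M)
G_sqrt = (V * np.sqrt(w)) @ V.T          # symmetric square root of M

# Independence of the factor G.
print(np.allclose(riem_exp(G_chol, A), riem_exp(G_sqrt, A)))
# Initial velocity of t -> Exp_M(tA), by a central difference.
h = 1e-5
velocity = (riem_exp(G_chol, h * A) - riem_exp(G_chol, -h * A)) / (2 * h)
print(np.allclose(velocity, A, atol=1e-6))
```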

REMARK A.10

All symmetric spaces are complete; our formula (A.12) simply made the completeness of $(\mathrm{Sym}^+(p), g_{\mathrm{can}})$ easy to see without quoting the theorem for the general case.

A.3. Riemannian logarithm maps

PROPOSITION A.11

The Riemannian exponential maps appearing in Corollary A.9 are diffeomorphisms.