Abstract

Background

The Internet has become a leading source of health information accessed by patients and the general public. It is crucial that this information is reliable and accurate.

Objectives

The purpose of this systematic review was to evaluate the overall quality of online health information targeting patients and the general public.

Methods

The systematic review is based on a pre-established protocol and is reported according to the PRISMA statement. Eleven databases and the Internet were searched for relevant studies. Descriptive statistics were used to synthesize data. The NIH Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies was used to assess the methodological quality of the included studies.

Results

Out of 3393 references, we included 153 cross-sectional studies evaluating 11,785 websites using 14 quality assessment tools. The quality level varied across scales. Using DISCERN, none of the websites were rated as excellent, 37–79% were rated as good, and the rest were rated as poor. Only 18% of websites were HON Code certified. Quality varied by affiliation (government higher than academic, which in turn was higher than other media sources) and by health specialty (likely higher in internal medicine and anesthesiology).

Conclusion

This comprehensive systematic review demonstrated suboptimal quality of online health information. Therefore, the Internet at the present time does not provide reliable health information for laypersons. The quality of online health information requires significant improvement, which should be a mandate for policymakers and for private and public organizations.

Electronic supplementary material

The online version of this article (10.1007/s11606-019-05109-0) contains supplementary material, which is available to authorized users.

KEY WORDS: quality, online health information, systematic review, Internet, patient education, health literacy

BACKGROUND

Patients living with health conditions face complex challenges in managing their health, family life, work conditions, and psychological problems, as well as a lack of understanding from society, family members, and healthcare professionals. Patients have therefore expressed a need for trustworthy, relevant information, which may play a vital role by increasing their understanding of a health problem, helping them make informed decisions about treatment choices, increasing their perceived control over their own health, and ultimately improving their quality of life while living with an illness.1–3 Patients have reported that the information they receive from their healthcare professionals was not clear, not satisfactory, or not conducive to asking additional questions.4, 5 To fulfill their health information needs, patients and the public thus seek information from multiple sources in addition to their healthcare providers, such as social service providers, librarians, peers, support groups, and the Internet.3, 6–8

As stated by Valorie Crooks, “an important element of negotiating life with a chronic illness, for many, is seeking out information which can be used to come to a greater understanding of one’s changed/changing body and possible treatment options”2 [p. 52]. The Internet has increasingly become the key source of health information, with more than 100,000 websites offering such information.9 Examples of well-known websites recommended by the Medical Library Association10 for patient education include the National Institutes of Health,11 Mayo Clinic,12 MedlinePlus,13 the Centers for Disease Control and Prevention,14 and the Centre for Addiction and Mental Health.15 The Internet has been recognized as a means to educate and empower patients by providing information on their health problems, the prevention and management of diseases, and related health services. It is perceived as able to reach those with limited access to information, to offer online support and interaction, to provide information on a wide breadth of topics, and to make that information available when needed.16–18 Many researchers have consistently argued that web-based health information can change behavior, improve adherence to treatment, reduce health risks, increase satisfaction with care, reach peers in real time, improve health outcomes, and facilitate shared decision-making between patients and healthcare professionals.19–21 Disseminating health and medical information on the Internet has the potential to improve knowledge transfer from health professionals to consumers.

According to the “Internet and American Life Project” conducted by the Pew Research Center, about 80% of American Internet users have searched the Internet for health information.6 Yet concerns remain about the potential adverse effects of patients using web-based health information that they retrieve independently. About 85 million of those Internet users take online health advice without assessing the quality of the content they find.22 As more people turn to the Internet for health information, concern about its quality will continue to grow. To understand this phenomenon, our team has conducted a series of systematic reviews.23 The purpose of this review was to further evaluate the quality of web-based health information targeted to patients and the general public, stratified by health condition and type of organization. Because there is no consensus on the definition of “health information quality,” we adopted the definitions provided by the authors of the included studies.

METHODS

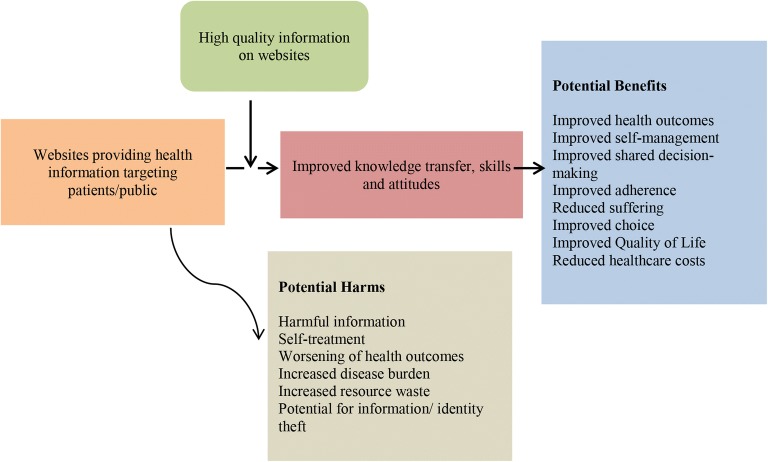

This systematic review was based on a pre-established protocol and was reported according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement.24 Figure 1 illustrates the analytic framework of this meta-narrative review. We hypothesized that websites disseminating high-quality health information may improve the knowledge, skills, and attitudes of patients and the public regarding their healthcare. This enhancement may, in turn, benefit both patients/the public and healthcare systems (e.g., by improving health outcomes, reducing costs, and improving quality of life).

Figure 1.

Analytic framework. *Reasons for exclusion: study not about quality assessment; website information not targeted to patients; quality tool for printed materials; study in a foreign language; study of the quality of websites in other languages; evaluation of the quality of patient records or patient portals; commercial/editorial/book.

Literature Search and Study Selection

We conducted a comprehensive search of the following databases from database inception to November 2017: EMBASE, EBM Reviews–Cochrane Central Register of Controlled Trials, EBM Reviews–Cochrane Database of Systematic Reviews, Ovid MEDLINE® Epub Ahead of Print, In-Process & Other Non-Indexed Citations, Ovid MEDLINE®, CINAHL, LISA, Scopus, Web of Science, and ERIC. We searched for all study designs and mined the references of relevant publications to identify additional literature. Gray literature was also searched in the following sources: conference abstracts, dissertations, AHRQ, Health Canada, the first 100 entries of Google Scholar, and OpenGrey. A health sciences librarian, in consultation with the principal investigator (PI), developed and executed the search strategy (Appendix in the Electronic Supplementary Material).

Eligibility Criteria

We included studies of any design that systematically evaluated website quality with validated or non-validated scales. Eligible websites had to provide information on any health condition, target the general public, and be published in English. We excluded studies targeting healthcare workers, professionals, or medical students; studies focused on validating quality tools; and studies assessing the quality of printed materials. We also excluded editorials, letters, and abstracts. We did not restrict studies by region and included publications from the last 10 years (2008 to 2017).

Independent reviewers screened titles and abstracts, and then full texts, in duplicate to select eligible references. Discrepancies between reviewers were resolved through discussion and consensus.

Data Extraction and Methodological Quality Assessment

We developed a data extraction form, which was first pilot-tested by all reviewers. For eligible references, we extracted the following data: author, year, journal, study design, search engines, health conditions, type of organization, quality scales, quality scores, and definition of quality.

To classify website affiliations, we used the following rules: (1) websites with “.gov” domains were classified as government; (2) websites with “.org” domains and those of foundations, support groups, or societies were classified as non-profit; (3) websites with “.edu” domains or affiliated with a university, hospital, clinic, or professional medical organization were classified as academic/hospitals/professional medical; (4) news portals were classified as media; (5) websites that did not disclose an affiliation, had commercial content, or were affiliated with a private holder were classified as private/commercial; and (6) all remaining websites were classified as other.
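The affiliation rules above can be sketched as an ordered rule check. This is a minimal illustration, not the reviewers' actual procedure: the `Website` record and its flags are hypothetical stand-ins for judgments the reviewers made by inspecting each site. The sketch assumes the rules are checked in the order listed, which matters for sites that match more than one rule (for example, a clinic on a “.org” domain is classed as non-profit here). It also inspects only the end of the host name, so country-specific government domains are not detected.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Website:
    """Hypothetical record of what a reviewer noted about one website."""
    url: str
    nonprofit_body: bool = False         # foundation, support group, or society
    academic_or_clinical: bool = False   # university, hospital, clinic, or professional medical organization
    news_portal: bool = False            # news outlet
    private_or_commercial: bool = False  # undisclosed affiliation, commercial content, or private holder


def classify_affiliation(site: Website) -> str:
    """Return the affiliation category, checking rules (1) to (6) in the listed order (assumed)."""
    host = (urlparse(site.url).hostname or "").lower()
    if host.endswith(".gov"):                               # rule (1)
        return "government"
    if host.endswith(".org") or site.nonprofit_body:        # rule (2)
        return "non-profit"
    if host.endswith(".edu") or site.academic_or_clinical:  # rule (3)
        return "academic/hospitals/professional medical"
    if site.news_portal:                                    # rule (4)
        return "media"
    if site.private_or_commercial:                          # rule (5)
        return "private/commercial"
    return "other"                                          # rule (6)


if __name__ == "__main__":
    print(classify_affiliation(Website("https://medlineplus.gov/")))  # government
    print(classify_affiliation(Website("https://www.camh.ca/en/health-info", academic_or_clinical=True)))
```

An ordered chain of checks keeps the precedence explicit; if the reviewers resolved overlaps differently, only the order of the `if` statements would need to change.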

To classify websites by health condition, we grouped all health conditions into 10 specialty categories plus a residual category: (1) anesthesiology; (2) ear, nose, and throat (ENT); (3) gynecology and obstetrics; (4) internal medicine; (5) neurology/neurosurgery; (6) oncology; (7) orthopedic surgery; (8) psychiatry; (9) surgery; (10) pediatrics; and (11) other.

To classify website quality, we developed the following criteria based on the quality scales identified in this systematic review (Table 1):

> 80%: excellent

66–79%: very good

45–65%: good

< 44%: poor
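The quality bands can be applied with a simple threshold function. This is a sketch under one stated assumption: the published ranges leave small gaps (a score of exactly 80%, or values between 79 and 80%, 65 and 66%, and 44 and 45%), so the function closes them by treating each band's lower bound as inclusive. With these cut-offs, the organization-level DISCERN scores reported in the Results (government 71%, academic 62%, media 44%) map to very good, good, and poor, matching the categories stated there.

```python
def quality_category(percent: float) -> str:
    """Map a percentage of a scale's maximum score to the review's quality band.

    Assumption: gaps in the published bands are closed by making each lower
    bound inclusive: >= 80 excellent, >= 66 very good, >= 45 good, else poor.
    """
    if not 0.0 <= percent <= 100.0:
        raise ValueError(f"percent must be within 0-100, got {percent}")
    if percent >= 80.0:
        return "excellent"
    if percent >= 66.0:
        return "very good"
    if percent >= 45.0:
        return "good"
    return "poor"


# Organization-level DISCERN scores as reported in the Results section.
for label, score in [("government", 71), ("academic", 62), ("media", 44)]:
    print(label, score, quality_category(score))
```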

Table 1.

List of Quality Scales with Minimum and Maximum Scores

| Scale | Total score or rating bands |
|---|---|
| Adapted Depression Website Content Checklist (ADWCC) | 0–10 |
| Brief DISCERN | 0–30 |
| Centers for Disease Control and Prevention Clear Communication Index (CDC CCI) | 0–100 |
| DISCERN | 0–80; 0–75; 0–5 |
| Ensuring Quality Information for Patients (EQIP) | 0–36 |
| Global Quality Score (GQS) | 1–5 |
| Health-Related Website Evaluation Form (HRWEF) | > 90%: excellent; 75–89%: adequate |
| HON Code of Conduct | Yes/no |
| Journal of the American Medical Association (JAMA) Benchmark | 0–4 |
| LIDA | 0–165 |
| Patient Education Materials Assessment Tool (PEMAT-P) | Percentage (0–100) |
| Quality Component Scoring System (QCSS) | > 80%: excellent; 70–79%: very good; 60–69%: good; 50–59%: fair; < 50%: poor |
| Suitability Assessment of Materials (SAM) | 70–100%: superior; 40–69%: adequate; 0–39%: not suitable |
| University of Michigan Healthcare Website Evaluation Checklist | 0–25: poor; 26–50: weak; 51–60: average; 61–70: good; 71–80: excellent |

For the methodological quality appraisal, we modified the NIH Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies to fit the goal of this study.25 Data extraction and quality assessment were completed by independent reviewers and audited by a third reviewer for completeness and accuracy.

Data Synthesis and Analysis

Descriptive statistics were used to synthesize data. Using Stata 14 (StataCorp LP., College Station, TX), we calculated medians and interquartile ranges (IQR) of scores expressed as percentages of each scale's maximum total score. Data were presented using graphs and tables.
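The summary step can be illustrated in Python rather than Stata. The sketch assumes each raw total is converted to a percentage as score / maximum × 100 using the maxima in Table 1; the paper does not spell out the formula, and for scales whose minimum is not 0 (such as GQS) a range-based rescaling may have been used instead. The `SCALE_MAX` subset and the example scores are illustrative, not study data. Quartile conventions differ between software packages, so an IQR computed this way may differ slightly from Stata's output.

```python
import statistics

# Maximum total scores for a few scales whose minimum is 0 (values from Table 1).
SCALE_MAX = {"DISCERN (0-80)": 80, "DISCERN (0-75)": 75, "EQIP": 36, "JAMA Benchmark": 4, "LIDA": 165}


def to_percent(raw_score: float, scale: str) -> float:
    """Express a raw total score as a percentage of the scale's maximum (assumed formula)."""
    maximum = SCALE_MAX[scale]
    if not 0 <= raw_score <= maximum:
        raise ValueError(f"{raw_score} is outside 0-{maximum} for {scale}")
    return 100.0 * raw_score / maximum


def median_and_iqr(percents: list[float]) -> tuple[float, float, float]:
    """Return (median, first quartile, third quartile); needs at least two values."""
    q1, _, q3 = statistics.quantiles(percents, n=4, method="inclusive")
    return statistics.median(percents), q1, q3


# Illustrative (not real) DISCERN totals out of 80 from four hypothetical studies.
percents = [to_percent(s, "DISCERN (0-80)") for s in [40, 48, 56, 64]]
median, q1, q3 = median_and_iqr(percents)
print(f"median {median:.1f}%, IQR {q1:.1f}-{q3:.1f}%")  # median 65.0%, IQR 57.5-72.5%
```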

We stratified quality by type of organization and by health conditions using the two most commonly applied tools:

DISCERN

DISCERN was originally intended for expert users and health information producers to evaluate written information on treatment choices for a specific health condition. The tool consists of 15 key questions plus an overall quality rating. Each key question represents a separate quality criterion, and the questions are organized into three sections: reliability (questions 1–8), specific information about treatment choices (questions 9–15), and overall quality rating (question 16). To facilitate analysis, we accommodated three different maximum total scores (5, 75, and 80) and analyzed and reported data as percentages of these totals.26–28
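The three DISCERN totals can be derived from item-level ratings as sketched below. This assumes each of the 16 questions is rated on DISCERN's 1-to-5 scale and that the totals correspond to questions 1–15 (maximum 75), questions 1–16 (maximum 80), and question 16 alone (maximum 5). The function name and the example ratings are hypothetical.

```python
from typing import Sequence


def discern_percentages(ratings: Sequence[int]) -> dict[str, float]:
    """Turn 16 DISCERN question ratings (each 1-5) into percentages of three totals.

    Assumed mapping: questions 1-15 -> total out of 75; questions 1-16 -> total
    out of 80; question 16 (overall quality rating) alone -> out of 5.
    """
    if len(ratings) != 16:
        raise ValueError("expected one rating for each of the 16 DISCERN questions")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("each DISCERN question is rated from 1 to 5")
    key_questions = sum(ratings[:15])  # reliability (1-8) + treatment choices (9-15)
    overall = ratings[15]              # question 16
    return {
        "out_of_75": 100.0 * key_questions / 75,
        "out_of_80": 100.0 * (key_questions + overall) / 80,
        "out_of_5": 100.0 * overall / 5,
    }


# Hypothetical ratings: 3 on every key question, 4 on the overall rating.
print(discern_percentages([3] * 15 + [4]))
# {'out_of_75': 60.0, 'out_of_80': 61.25, 'out_of_5': 80.0}
```

Expressing every variant as a percentage is what makes studies that report different DISCERN totals comparable on one axis.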

HON Code of Conduct

The HON Code is a code of ethics developed for site managers to follow when disseminating quality, objective, and transparent medical information on the Internet.29, 30 The Code consists of eight quality criteria, each with a definition: (1) authoritative, (2) complementarity, (3) privacy, (4) attribution, (5) justifiability, (6) transparency, (7) financial disclosure, and (8) advertising policy. Organizations must obtain certification from the Health On the Net Foundation to use the Code. We analyzed data in two categories: (1) HON certified and (2) not certified.
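Stratifying this binary outcome by a grouping variable (type of organization or health specialty) reduces to a within-group percentage. The sketch below assumes each assessed website is one record of (group, certified); the function name and the records are made up for illustration and do not come from the included studies.

```python
from collections import Counter
from typing import Iterable


def certification_rate_by_group(records: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Percentage of HON-certified websites within each group.

    Each record is (group, is_certified), for example ("government", True).
    """
    totals: Counter[str] = Counter()
    certified: Counter[str] = Counter()
    for group, is_certified in records:
        totals[group] += 1
        if is_certified:
            certified[group] += 1
    # Counter returns 0 for groups with no certified websites.
    return {group: 100.0 * certified[group] / n for group, n in totals.items()}


# Made-up records for illustration only.
sample = [("government", True), ("government", False),
          ("media", False), ("media", False), ("non-profit", True)]
print(certification_rate_by_group(sample))
# {'government': 50.0, 'media': 0.0, 'non-profit': 100.0}
```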

For the remaining quality scales, we analyzed and synthesized quality scores separately.

RESULTS

Included Studies

This systematic review reports data only on quality scales that the included studies self-reported as validated.

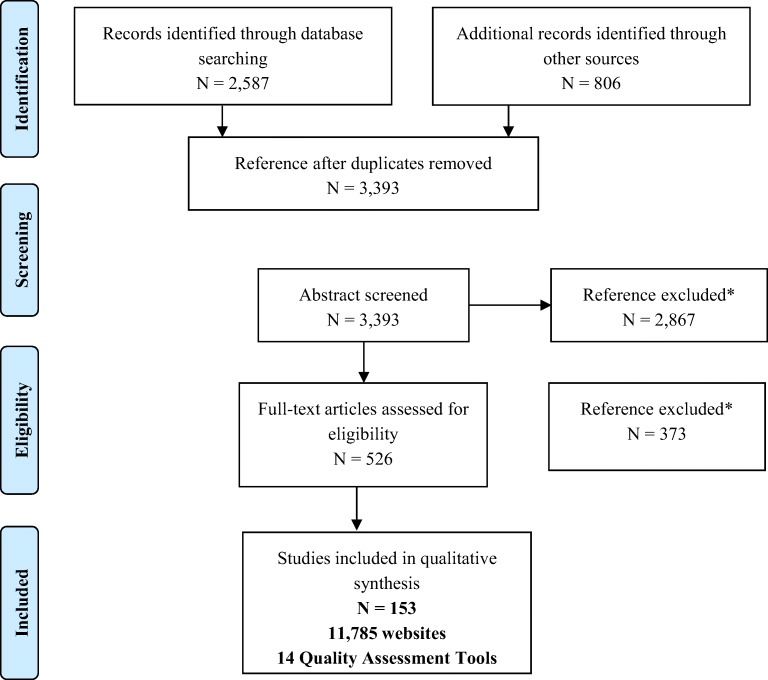

The literature search yielded 3393 references, of which 153 met the inclusion criteria. Because several studies did not report any scales or scores suitable for data synthesis, 149 studies evaluating 11,785 websites were included in the qualitative synthesis. The process of study selection is depicted in Figure 2. The characteristics of the included studies are summarized in Supplementary Table 1 in the Appendix. All included studies used a cross-sectional design. Google, Yahoo, Bing, and Ask.com were the preferred search engines, although some studies also used other search engines.

Figure 2.

PRISMA flow diagram.

Methodological Quality Assessment

The methodological quality of the included studies was considered adequate, or “good,” based on the NIH quality assessment tool.25 Almost all studies (95%) adequately addressed the various quality domains (Supplementary Table 2 in the Appendix).

Quality Scales

A total of 14 quality assessment scales self-reported as validated were identified; they are listed in Table 1.

Overall Quality

Quality level varied across scales. The medians of all scales fell between 37 and 79%, which was categorized as good (Supplementary Table 3 in the Appendix). A total of 74 studies with 5583 websites used the HON Code of Conduct; 18% (1004) of these websites were HON Code certified. DISCERN was used by 87 studies including 5693 websites, and quality ranged from good to very good. None of the websites was categorized as excellent.
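As an arithmetic check, the pooled HON figure follows directly from the reported counts. Pooling treats every assessed website as one observation; because the same website may have been assessed in more than one study, this is a crude proportion rather than a weighted estimate. The helper name is illustrative.

```python
def percent_of(part: int, whole: int) -> float:
    """Return part as a percentage of whole, rejecting impossible counts."""
    if whole <= 0 or not 0 <= part <= whole:
        raise ValueError("need 0 <= part <= whole and whole > 0")
    return 100.0 * part / whole


# Counts reported for the HON Code of Conduct: 5583 websites assessed, 1004 certified.
hon_share = percent_of(1004, 5583)
print(f"{hon_share:.1f}% of websites were HON certified")  # 18.0% of websites were HON certified
```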

Among the 14 identified quality scales, DISCERN and the HON Code of Conduct were the most commonly used to assess website quality. For our analysis, we used these two scales to stratify the quality of web-based health information by health condition and by type of organization.

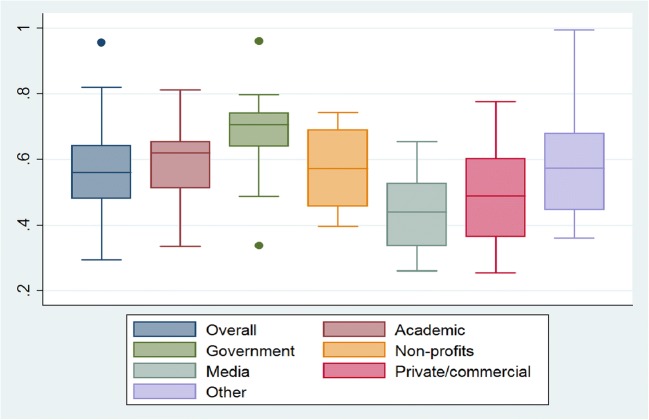

Quality by Type of Organization

Figure 3 shows the quality of websites by type of organization as measured by DISCERN. Quality varied by type of organization, ranging from poor to very good. Government organizations received the highest score (71%, equivalent to very good), academic organizations received 62% (equivalent to good), and media-related sources received the lowest score (44%, equivalent to poor).

Figure 3.

Level of quality by type of organization—DISCERN.

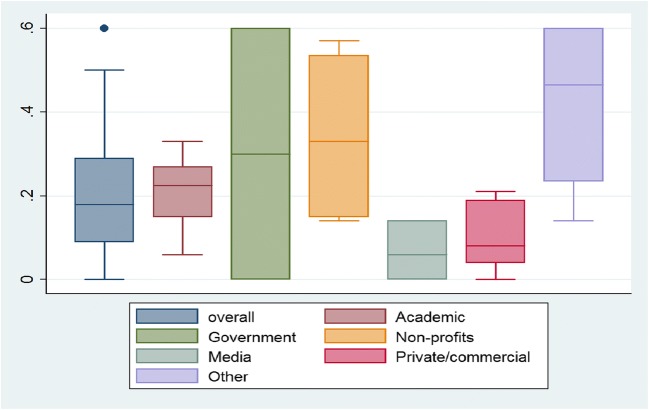

Figure 4 shows the percentage of websites that were HON Code certified. About 33% of non-profit, 30% of government, 23% of academic, 19% of private/commercial, 6% of media, and 47% of other organizations' websites had HON certification.

Figure 4.

Level of quality by type of organization—HON Code of Conduct.

Quality by Health Conditions

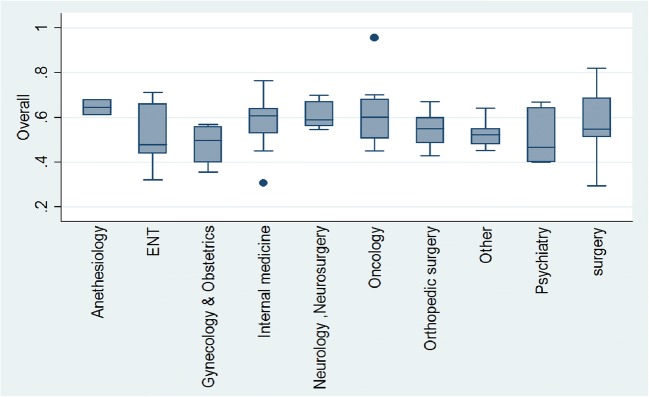

The mean level of quality on the DISCERN scale was consistently good across specialties (Fig. 5), ranging from 47% in psychiatry (the lowest) to 65% in anesthesiology (the highest).

Figure 5.

Level of quality by health conditions—DISCERN.

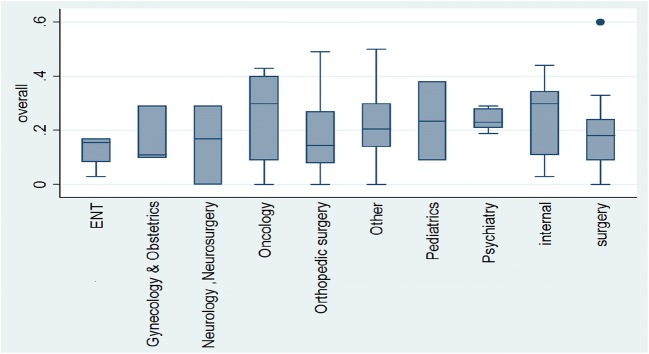

Figure 6 shows, by health specialty, the percentage of websites that obtained HON certification. Websites on oncology and internal medicine had the highest certification rate (30%), and those on gynecology and obstetrics had the lowest (11%).

Figure 6.

Level of quality by health conditions—HON Code of Conduct.

DISCUSSION

Main Findings

This systematic review evaluated the quality of web-based health information targeting patients and the general public. There were three main findings. First, despite variability in how scores were reported across quality scales, the results were consistent: the mean level of quality across websites was good. Unfortunately, none of the websites was rated as excellent.

Second, stratification by type of organization or health condition revealed thought-provoking findings. It is commonly assumed that health information from academic organizations, such as universities, academic hospitals, and professional medical organizations, is of good quality. However, our findings suggested that government organizations ranked better (based on DISCERN) than academic organizations in disseminating health information on their websites. Similarly, a higher proportion of government organizations than academic institutions obtained HON Code certification, which indicates that they meet its quality standards. Members of the general public usually lack clinical training and may be unable to judge the quality of information resources or put them into context.7 This is a cautionary call for academic organizations to pay more attention to maintaining the accuracy and reliability of health information targeted to patients and the general public.

Third, and most notably, for disease-specific content, all 10 conditions were rated as good, but none reached the very good or excellent categories. This finding is consistent with the concerns of patients and the public about their lack of access to quality health information20 on the Internet for all health conditions. In addition, less than 40% of the websites providing information on the different health specialties were HON Code certified. Studies have shown that HON Code certification can be linked with improved quality, although the criteria the Code uses to evaluate quality have limitations.31, 32 For example, the Code does not make it possible to judge whether a topic is well covered (content) or appropriate for the target audience (usefulness). In 1998, the US Federal Trade Commission organized an International Health Claim Surf Day, during which 1200 websites were identified as containing incorrect and misleading information about the treatment and prevention of six major diseases: arthritis, cancer, diabetes, heart disease, HIV/AIDS, and multiple sclerosis.33 As this review demonstrates, this poor state of reliability of online health information has not changed in the last decade.

Practical Implications

There is a rationale for how information might affect many aspects of a person’s ability to live with a chronic health condition and to interact with the healthcare system. The impact of web-based health information is therefore critical: it can affect patient safety, outcomes, healthcare expenditure, the management of chronic conditions, and the quality of life of the ever-increasing segment of the population that depends on the Internet for information.34, 35 Billions of dollars are wasted on unproven, deceptive cures and false medications or therapies that delay evidence-based treatment. This systematic review demonstrated variation by scale, organization, and specialty, underscoring the need to improve the quality of information provided on certain conditions and by certain organizations.

From a research perspective, the variation in quality across scales suggests a need for a content analysis of the scales to identify overlapping and non-overlapping domains. This could lead to the development of more accurate tools and instruments and could help define the construct of quality, which encompasses several concepts. For example, one aspect of the quality of online health information is readability. A systematic review has suggested that readability was not consistent with recommended standards:23 the mean readability grade level across websites offering health information to the general public ranged from grade 10 to 15 depending on the scale, whereas the recommended target is a sixth-grade level. Similarly, most quality assessment instruments do not use consistent criteria and are developed by organizations and individuals based on their own knowledge and target audience. As a result, a favorable DISCERN score or HON Code certification does not necessarily mean that a website is of high quality.

A study that critically assessed the content and readability of four web health evaluation tools reached similar conclusions and recommended the following seven key principles of quality to help consumers and patients gain valid and usable health knowledge from the Internet: (1) authorship, (2) content, (3) currency, (4) usefulness, (5) disclosure, (6) user support, and (7) privacy and confidentiality.36 A ready-to-use patient handout (quality checklist) incorporating several of these principles is provided in the Appendix (ESM) for clinicians to share with their patients. Nevertheless, there remains a need for a gold standard that is useful, matches the reading level of lay users, and has content validity.

Limitations of the Study

The construct of quality includes multiple domains, which leads to variability across tools and limits inferences in this field. We restricted this systematic review to studies that self-reported their tools as validated. DISCERN and the HON Code both have limitations in their use and specific criteria. For example, DISCERN does not include several criteria that are important for assessing specific information content and its dissemination, namely accuracy, completeness, disclosure, and readability. Another important limitation of both DISCERN and the HON Code is that they were not intended for lay users (patients and caregivers); instead, they were developed for experts and health information producers. As a result, there is no gold standard against which to compare website quality. Finally, websites may have overlapped across studies, and the same content may appear on multiple websites.

CONCLUSION

Access to useful and understandable health information is an important factor in making health decisions. Trustworthy online health information equips patients and the public with the knowledge to take control of their health and healthcare. It may also reduce the burden on individuals and on the healthcare system. This comprehensive systematic review evaluated health information targeted to patients and the general public, stratified by health condition and type of organization. We found suboptimal quality across various medical specialties, suggesting a major gap in evidence-based online information. In addition to improving Internet access and the readability of online health information, improving the quality of information should be a priority for policymakers as well as private and public organizations. Partnerships between academic institutions and governmental agencies are needed to establish quality standards and develop a monitoring system for online health information producers, in order to reduce healthcare waste and improve health outcomes.

Electronic Supplementary Material

(DOCX 447 kb)

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Sylvain H, Talbot LR. Synergy towards health: a nursing intervention model for women living with fibromyalgia, and their spouses. J Adv Nurs. 2002;38(3):264–273. doi: 10.1046/j.1365-2648.2002.02176.x.

- 2. Crooks VA. “I go on the Internet; I always, you know, check to see what’s new”: Chronically ill women’s use of online health information to shape and inform doctor-patient interactions in the space of care provision. ACME: An International E-Journal for Critical Geographies. 2006;5(1):50–69.

- 3. Aranda S, Schofield P, Weih L, et al. Mapping the quality of life and unmet needs of urban women with metastatic breast cancer. Eur J Cancer Care. 2005;14(3):211–222. doi: 10.1111/j.1365-2354.2005.00541.x.

- 4. Söderberg S, Lundman B, Norberg A. Struggling for dignity: The meaning of women’s experiences of living with fibromyalgia. Qual Health Res. 1999;9(5):575–587. doi: 10.1177/104973299129122090.

- 5. Alpay L, Verhoef J, Xie B, Te'eni D, Zwetsloot-Schonk J. Current challenge in consumer health informatics: Bridging the gap between access to information and information understanding. Biomed Inform Insights. 2009;2:BII.S2223. doi: 10.4137/BII.S2223.

- 6. Fox S. The social life of health information. 2014. http://www.pewresearch.org/fact-tank/2014/01/15/the-social-life-of-health-information/. Accessed April 1, 2019.

- 7. Daraz L, MacDermid JC, Wilkins S, Gibson J, Shaw L. Information preferences of people living with fibromyalgia–a survey of their information needs and preferences. Rheumatol Rep. 2011;3(1):7. doi: 10.4081/rr.2011.e7.

- 8. Bishop FL, Bradbury K, Jeludin NNH, Massey Y, Lewith GT. How patients choose osteopaths: a mixed methods study. Complement Ther Med. 2013;21(1):50–57. doi: 10.1016/j.ctim.2012.10.003.

- 9. Eysenbach G, Köhler C. How do consumers search for and appraise health information on the world wide web? Qualitative study using focus groups, usability tests, and in-depth interviews. BMJ. 2002;324(7337):573–577. doi: 10.1136/bmj.324.7337.573.

- 10. The Medical Library Association. Find Good Health Information. https://www.mlanet.org/p/cm/ld/fid=398. Accessed April 1, 2019.

- 11. National Institutes of Health. Health Information. https://www.nih.gov/health-information. Accessed April 1, 2019.

- 12. Mayo Clinic. Patient Care and Health Information. https://www.mayoclinic.org/patient-care-and-health-information. Accessed April 1, 2019.

- 13. MedlinePlus. https://medlineplus.gov/. Accessed April 1, 2019.

- 14. Centers for Disease Control and Prevention. https://www.cdc.gov/. Accessed April 1, 2019.

- 15. Centre for Addiction and Mental Health. https://www.camh.ca/en/health-info. Accessed April 1, 2019.

- 16. Cline RJ, Haynes KM. Consumer health information seeking on the Internet: the state of the art. Health Educ Res. 2001;16(6):671–692. doi: 10.1093/her/16.6.671.

- 17. Eriksson-Backa K. Who uses the web as a health information source? Health Inform J. 2003;9(2):93–101. doi: 10.1177/1460458203009002004.

- 18. Tao D, LeRouge C, Smith KJ, De Leo G. Defining Information Quality Into Health Websites: A Conceptual Framework of Health Website Information Quality for Educated Young Adults. JMIR Human Factors. 2017;4(4):e25. doi: 10.2196/humanfactors.6455.

- 19. Suggs LS. A 10-year retrospective of research in new technologies for health communication. J Health Commun. 2006;11(1):61–74. doi: 10.1080/10810730500461083.

- 20. Daraz L, MacDermid JC, Wilkins S, Gibson J, Shaw L. The quality of websites addressing fibromyalgia: an assessment of quality and readability using standardised tools. BMJ Open 2011:bmjopen-2011-000152.

- 21. Wang J, Ashvetiya T, Quaye E, Parakh K, Martin SS. Online Health Searches and their Perceived Effects on Patients and Patient-Clinician Relationships: A Systematic Review. Am J Med 2018.

- 22. Fox S. Online health search 2006: Pew Internet & American Life Project; 2006. http://www.pewinternet.org/2006/10/29/online-health-search-2006/. Accessed April 1, 2019.

- 23. Daraz L, Morrow AS, Ponce OJ, et al. Readability of Online Health Information: A Meta-Narrative Systematic Review. Am J Med Qual 2018:1062860617751639.

- 24. Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int J Surg. 2010;8(5):336–341. doi: 10.1016/j.ijsu.2010.02.007.

- 25. National Institutes of Health. National Institutes of Health Quality Assessment tool for Observational Cohort and Cross-Sectional Studies. 2016. https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools. Accessed April 1, 2019.

- 26. Charnock D, Shepperd S. Learning to DISCERN online: applying an appraisal tool to health websites in a workshop setting. Health Educ Res. 2004;19(4):440–446. doi: 10.1093/her/cyg046.

- 27. University of Oxford. Institute of Health Sciences. Nuffield Department of Population Health and the Nuffield Department of Primary Care Health Sciences. DISCERN online: quality criteria for consumer health information. http://www.discern.org.uk/original_discern_project.php. Accessed April 1, 2019.

- 28. Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. 1999;53(2):105–111. doi: 10.1136/jech.53.2.105.

- 29. Health On the Net Foundation. The HON Code of Conduct for medical and health Web sites (HONcode). 1997. https://www.healthonnet.org/HONcode/Conduct.html. Accessed April 1, 2019.

- 30. Boyer C, Selby M, Scherrer J-R, Appel R. The health on the net code of conduct for medical and health websites. Comput Biol Med. 1998;28(5):603–610. doi: 10.1016/S0010-4825(98)00037-7.

- 31. Mousiolis A, Michala L, Antsaklis A. Polycystic ovary syndrome: double click and right check. What do patients learn from the Internet about PCOS? Eur J Obstet Gynecol Reprod Biol. 2012;163(1):43–46. doi: 10.1016/j.ejogrb.2012.03.028.

- 32. Nason GJ, Byrne DP, Noel J, Moore D, Kiely PJ. Scoliosis-specific information on the internet: has the “information highway” led to better information provision? Spine. 2012;37(21):E1364–E1369. doi: 10.1097/BRS.0b013e31826619b5.

- 33. Federal Trade Commission. Remedies Targeted in International Health Claim Surf Day. 1998. https://www.ftc.gov/news-events/press-releases/1998/11/remedies-targeted-international-health-claim-surf-day. Accessed April 1, 2019.

- 34. Statistics Canada. Canadian Internet Use Survey (CIUS). 2013. http://www23.statcan.gc.ca/imdb/p2SV.pl?Function=getSurvey&SDDS=4432. Accessed April 1, 2019.

- 35. Fox S. The social life of health information. Pew Internet & American Life Project 2009. https://www.pewinternet.org/2009/06/11/the-social-life-of-health-information/. Accessed April 1, 2019.

- 36. Daraz L, MacDermid J, Wilkins S, Shaw L. Tools to evaluate the quality of web health information: A structured review of content and usability. Int J Tech Knowl Soc 2009;5:3.
