Abstract

Objective

To reliably improve diagnostic fidelity and identify delays using a standardized approach applied to the electronic medical records of patients with emerging critical illness.

Patients and Methods

This retrospective observational study at Mayo Clinic, Rochester, Minnesota, conducted June 1, 2016, to June 30, 2017, used a standard operating procedure applied to electronic medical records to identify variations in diagnostic fidelity and/or delay in adult patients with a rapid response team evaluation, at risk for critical illness. Multivariate logistic regression analysis identified predictors and compared outcomes for those with and without varying diagnostic fidelity and/or delay.

Results

The sample included 130 patients. Median age was 65 years (interquartile range, 56-76 years), and 46.9% (61 of 130) were women. Clinically significant diagnostic error or delay was agreed in 23 (17.7%) patients (κ=0.57; 95% CI, 0.40-0.74). Among these 23 patients, median age was 65.4 years (interquartile range, 60.3-74.8 years) and 9 (39.1%) were women. Of those with diagnostic error or delay, 60.9% (14 of 23) died in the hospital compared with 19.6% (21 of 107) without; P<.001. Diagnostic error or delay was associated with higher Charlson comorbidity index score, cardiac arrest triage score, and do not intubate/do not resuscitate status. Adjusting for age, do not intubate/do not resuscitate status, and Charlson comorbidity index score, diagnostic error or delay was associated with increased mortality; odds ratio, 5.7; 95% CI, 2.0-17.8.

Conclusion

Diagnostic errors or delays can be reliably identified and are associated with higher comorbidity burden and increased mortality.

Abbreviations and Acronyms: APACHE III, Acute Physiology, Age, Chronic Health Evaluation III; ICU, intensive care unit; IOM, Institute of Medicine; IQR, interquartile range; RRT, rapid response team; SOP, standard operating procedure

Variations or deviations in diagnostic fidelity are contributors to avoidable illness. The accurate and timely delivery of treatment within a critical period improves patient outcomes.1, 2, 3 Diagnostic errors and delays are forms of deviation from diagnostic fidelity. Autopsy studies have identified diagnostic errors in 10.0% (10 of 100) to 38.7% (36 of 93) of deaths.4, 5 The Institute of Medicine (IOM) defined diagnostic error as 2-fold: the failure to establish an accurate and timely explanation of the patient's health problem or communicate that explanation to the patient.1

Delayed or incorrect medical management leads to unintended injuries, classified as serious adverse events.6 In the United States, Canada, Europe, and Australia, these occur at a rate of up to 18% among hospitalized patients, with patients exhibiting physiologic deterioration before the event.7, 8 Early warning systems have been developed to identify these deteriorations, and rapid response systems have been developed to provide timely evaluation and intervention.8, 9, 10, 11, 12 Up to 31.3% (114 of 364) of clinical deteriorations requiring rapid response system activations have been attributed to medical errors, 67.5% (77 of 114) of which were related to diagnostic error or delay.13

The landscape of diagnostic fidelity, including identifying errors and delays, remains a largely understudied area in health care.1 Standardized measurement tools are lacking, and processes for reporting errors or near-misses remain underdeveloped. This lack of standardization has been attributed to the absence of consensus on definitions for errors and delays and to the complexity of the cognitive and systems-based failures involved in the care of the critically ill patient.14

The goals of this study were to use the electronic medical record to establish a reliable method for identifying variations in diagnostic fidelity through identification of errors and delays in patients with emerging critical illness. Additionally, the goal was to identify predictors and evaluate the impact on outcomes. The central hypothesis was that in the nontrauma critically ill adult patient, variations of diagnostic fidelity could be reliably identified by using a standardized approach to classifying error and delay that applies a taxonomy-based approach for reviewing electronic medical records. Furthermore, it was hypothesized that patients for whom there was variation in diagnostic fidelity would have worse outcomes.

Patients and Methods

Setting and Study Design

This was a single-center retrospective observational study conducted at Mayo Clinic in Rochester, Minnesota (MN), between June 1, 2016, and June 30, 2017. The study protocol was reviewed and approved by the Mayo Clinic Institutional Review Board. Mayo Clinic in Rochester, MN, is an academic tertiary referral center that has approximately 129,500 admissions per year. The rapid response team (RRT) system was instituted at Mayo Clinic in 2007 and involves a team led by a critical care fellow, critical care respiratory therapist, and intensive care unit (ICU) nurse with supervision from an on-site board-eligible or board-certified intensivist. For reference, criteria for RRT activation are outlined in Supplemental Table 1 (available online at http://mcpiqojournal.org).

A previous study at our institution identified patients who had a rapid response system call from January 1, 2012, to December 31, 2012, who were older than 18 years and had prior research authorization.9 A database was established at the time of this study identifying all patients with an RRT call. This database was populated with information including patient demographic characteristics, physiologic variables, reason for RRT call, time of day of RRT call, disposition following RRT call, and patient outcomes. These patients exhibited deteriorations before the event of the RRT call. Thus, this trigger served as the identification point of deterioration whereby diagnostic fidelity could be retrospectively reviewed.

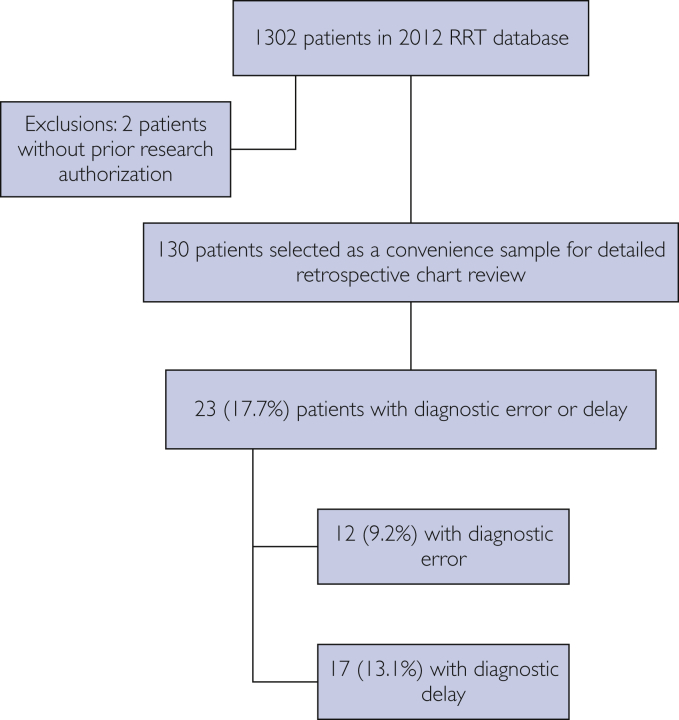

For purposes of this study, a subset of 130 patients was selected from the RRT database for a detailed retrospective electronic medical record review (Figure). This sample was identified sequentially as the first 130 patients of the RRT database. Because manual chart review by clinicians was required and a significant proportion of time was anticipated for this careful review, we elected to take a pragmatic approach and selected this limited sample of 130 patients (∼10% of the database).

Figure.

Representation of selected study cohort. RRT, rapid response team.

Inclusion Criteria

Individuals 18 years or older admitted to the hospital who met RRT criteria as outlined in Supplemental Table 1 were included. Patients were also included if an RRT call was placed based on the judgment of nursing staff without having met institutional criteria.

Table 1.

Patient and RRT Call Characteristics<sup>a</sup>

| Characteristics | Variation in Diagnostic Fidelity (error or delay) (N=23) | No Variation in Diagnostic Fidelity (no error or delay) (N=107) | P<sup>b</sup> |
|---|---|---|---|
| Age (y), median (IQR) | 65.4 (60.3-74.8) | 66.1 (54.0-77.1) | .37 |
| Female sex, N (%) | 9 (39.1) | 52 (48.6) | .40 |
| Primary reason for RRT call, N (%)<sup>c</sup> | | | |
| Tachycardia | 1 (4.3) | 24 (22.4) | .30 |
| Altered level of consciousness | 6 (26.1) | 20 (18.7) | |
| Hypotension | 7 (30.4) | 22 (20.6) | |
| Respiratory distress | 3 (13.0) | 10 (9.3) | |
| Chest pain | 2 (8.7) | 8 (7.5) | |
| Hypertension | 1 (4.3) | 4 (3.7) | |
| Oxygen saturation <90% | 3 (13.0) | 7 (6.5) | |
| Other<sup>d</sup> | 0 (0.0) | 12 (11.2) | |
| Disposition after RRT, N (%) | | | |
| Intensive care unit transfer | 17 (73.9) | 33 (30.8) | <.001<sup>e</sup> |
| Remained on unit | 6 (26.1) | 74 (69.2) | <.001<sup>e</sup> |
| RRT shift, N (%) | | | |
| 12:00 am-7:59 am | 9 (39.1) | 31 (29.0) | .62 |
| 8:00 am-3:59 pm | 8 (34.8) | 41 (38.3) | |
| 4:00 pm-11:59 pm | 6 (26.1) | 35 (32.7) | |
| Code status, N (%) | | | |
| Full code | 15 (65.2) | 84 (78.5) | .18 |
| Do not intubate/do not resuscitate | 8 (34.8) | 23 (21.5) | |
| Charlson comorbidity index score, median (IQR) | 5 (2-7) | 2 (0-5) | .02<sup>e</sup> |
| Cardiac arrest triage score, median (IQR) | 17 (12-26) | 12 (4-21) | .01<sup>e</sup> |
| APACHE III score 1 h after intensive care unit admission, median (IQR) | 48 (41.5-69.5) | 51 (40-64.5) | .89 |
| Vital signs immediately before RRT activation, median (IQR) | | | |
| Oxygen saturation, % | 95 (90-97) | 94 (90-98) | .76 |
| Respiratory rate, breaths/min | 22 (20-29) | 18 (16-24) | <.001<sup>e</sup> |
| Systolic blood pressure, mm Hg | 108 (83-130) | 115 (91-137) | .28 |
| Diastolic blood pressure, mm Hg | 52 (42-67) | 67 (53-84) | .01<sup>e</sup> |
| Heart rate, beats/min<sup>f</sup> | 90 (70-99) | 84 (72-97) | .86 |

<sup>a</sup> Abbreviations: APACHE III, Acute Physiology, Age, Chronic Health Evaluation III; IQR, interquartile range; RRT, rapid response team.

<sup>b</sup> Wilcoxon rank sum test was used for continuous variables; Pearson χ2 test was used to compare categorical variables.

<sup>c</sup> Freeman-Halton extension of Fisher exact test was used.

<sup>d</sup> Other reasons include bradycardia, 5 patients; lethal arrhythmia, 1 patient; respiratory depression, 1 patient; seizure, 2 patients; staff concern, 1 patient; stroke symptoms, 1 patient; unspecified, 1 patient.

<sup>e</sup> Statistically significant.

<sup>f</sup> Missing heart rate measurements: 57 patients.

Exclusion Criteria

Individuals younger than 18 years, those admitted with evidence of trauma, and those who did not have prior documented research authorization were not included.

Classifying Variation in Diagnostic Fidelity

Variations in diagnostic fidelity take the form of errors or delays. For this study, recommendations from IOM were used to develop our definition of diagnostic error and delay1:

- Diagnostic error is defined as a failure to establish an accurate diagnosis or failure to communicate the diagnosis in medical records

- Diagnostic delay is the failure to establish a timely explanation of the patient's health problem and communicate it in the medical records

Variation in diagnostic fidelity is interpreted to exist if reviewers identified errors and/or delays in the diagnostic process. The identification of errors and/or delays was standardized using a taxonomy approach, previously described by Schiff et al15 and reproduced with permission in Supplemental Table 2, available online at http://mcpiqojournal.org. This approach was designed to identify at what point in the diagnostic process errors or delays occurred and also what those errors or delays were. If reviewers identified errors, these were further classified into major (types I and II) or minor (types III and IV) errors based on the modified Goldman classification system (Supplemental Table 3, available online at http://mcpiqojournal.org).4, 16 A significant error was one that would fall under major (types I and II) classification.

Developing a Standard Operating Procedure

A standard operating procedure (SOP; see Supplemental Appendix, available online at http://mcpiqojournal.org) was developed for detailed review of the electronic medical records of the study population. The time and date of the RRT call was used to identify the relevant hospital encounter for each patient. The entire encounter, including clinical notes, laboratory results, imaging, vital signs, and nursing assessments from initial presentation to discharge, was included as part of the review process.

Approach

Two critical care fellows were provided with the SOP to guide the process of independent reviews. The purpose of the review process was to identify diagnostic error or delay as a reflection of variation in diagnostic fidelity. The review process was initially tested in a sample of 10 randomly selected patients outside of the study sample before application to the study sample. Reviewers were asked to make a judgment on whether diagnostic error or delay was present at the time of the RRT call. Errors that were identified were then further classified using the modified Goldman classification.16 Delays were subclassified by time ranges of less than 2 hours, 2 or more to 6 hours, 6 or more to 18 hours, and 18 or more to 24 hours. When fellows disagreed on conclusions regarding the presence of diagnostic error or delay, senior critical care clinicians acting as final arbitrators reviewed each of these encounters and categorized accordingly. Final analysis was performed after arbitration.
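The delay time ranges described above can be written as a small lookup, which makes the bin boundaries explicit. This is only a minimal sketch in Python and is not part of the study's SOP: the function name `delay_category`, the label strings, and the treatment of a delay of exactly 24 hours as belonging to the last range are illustrative assumptions.

```python
def delay_category(hours: float) -> str:
    """Map a diagnostic delay (in hours) to one of the four ranges in the text.

    Assumptions (not from the source): a delay of exactly 24 h is placed in
    the last range, and values outside 0-24 h are rejected.
    """
    if hours < 0 or hours > 24:
        raise ValueError("delay must be between 0 and 24 hours")
    if hours < 2:
        return "<2 h"
    if hours < 6:
        return "2 to <6 h"
    if hours < 18:
        return "6 to <18 h"
    return "18 to 24 h"
```

For example, `delay_category(5.5)` falls in the second range and `delay_category(18)` in the last, because each lower bound is inclusive.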

Last, reviewers were asked to form a retrospective impression of the clinical syndrome and whether predefined critical diagnostic and therapeutic information were provided for the syndrome identified. Because this interpretation was formed in hindsight to the best judgment of the reviewer, this was not used for identification of error or delay.

Outcomes

The primary outcome of interest was in-hospital mortality, and the secondary outcome was hospital length of stay.

Statistical Analyses

All categorical variables are reported as percentages. All continuous variables are reported as median with interquartile range (IQR) or mean ± standard deviation as appropriate. Chi-square and Wilcoxon rank sum tests were used to compare baseline characteristics and outcome data across diagnostic fidelity groups. Multivariate logistic regression was used to identify predictors of error or delay. Agreement among reviewers for identifying error and/or delay is reported as a percentage, with interrater reliability further assessed using κ agreement statistics. The 95% CIs are reported, and 2-sided P<.05 was considered statistically significant for the purpose of this study. All statistical analysis was performed using JMP statistical software (version 9.0; SAS Institute Inc).
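As an illustration of the Pearson chi-square comparison named above, the sketch below applies the standard 2×2 formula to the in-hospital mortality counts reported in the Results (14 of 23 deaths with error or delay vs 21 of 107 without). It is a hedged example, not the JMP procedure the study used: the function name `pearson_chi2_2x2` is hypothetical, no continuity correction is applied, and the P value relies on the 1-degree-of-freedom identity P = erfc(√(χ²/2)).

```python
import math

def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for a 2x2 table.

    Table layout (rows = groups, columns = outcome yes/no):
        a  b
        c  d
    Returns (chi-square statistic, two-sided P value for 1 degree of freedom).
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

# Died / survived, error-or-delay group: 14 / 9; no error or delay: 21 / 86.
chi2, p = pearson_chi2_2x2(14, 9, 21, 86)
print(f"chi-square = {chi2:.2f}, P = {p:.5f}")
```

Under these assumptions the P value falls below .001, in line with the reported comparison.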

Results

A total of 130 electronic medical records were reviewed by 2 critical care fellows to evaluate variation in diagnostic fidelity through identification of error and/or delay at the time of RRT evaluation. A total of 23 (17.7%) patients were identified as having a clinically significant error or delay at the time of the RRT call or leading up to the time of the RRT call. These included 12 (9.2%) who had diagnostic error and 17 (13.1%) with diagnostic delay. Some patients were identified as having both diagnostic error and delay and thereby crossed over groups. Baseline characteristics of our convenience sample, including median age, Charlson comorbidity index score, Acute Physiology, Age, Chronic Health Evaluation III (APACHE III) score 1 hour after ICU admission, and sex, are outlined in Table 1.

The median age for patients with error or delay was 65.4 (IQR, 60.3-74.8) years compared to 66.1 (IQR, 54.0-77.1) years in those without error or delay. There was no statistical difference across the 2 groups with regard to time of RRT call, code status, and APACHE III score 1 hour after ICU admission. However, patients with a higher Charlson comorbidity index score (P=.02) and cardiac arrest triage score (P=.01) were statistically more likely to have variations in diagnostic fidelity in the form of error or delay. Additionally, these patients were more likely to transfer to the ICU (P<.001) after the RRT evaluation.

Incidence of Variation in Diagnostic Fidelity

Table 2 identifies areas in the diagnostic process in which the primary reviewers identified error or delay. These factors contributed to judgments regarding diagnostic fidelity. Diagnostic error (type I and II error) or delay was identified in 23 of 130 (17.7%) individuals. Error without delay was identified in 12 of 130 (9.2%) individuals, and delay without error, in 17 of 130 (13.1%) individuals.

Table 2.

Identified Areas of Diagnostic Error and/or Delay

| Where Along the Diagnostic Process Did the Error or Delay Occur? | Reviewer A | Reviewer B |
|---|---|---|
| Access/presentation | 2 | 0 |
| History | 4 | 2 |
| Physical examination | 2 | 1 |
| Tests (laboratory/radiology) | 5 | 4 |
| Assessment | 7 | 13 |
| Referral/consultation | 3 | 3 |
| Follow-up | 0 | 1 |

Hospital Mortality and Length of Stay

Fourteen of 23 (60.9%) patients with variations in diagnostic fidelity, identified as error or delay, died in the hospital. Of the patients in whom no variation in diagnostic fidelity was identified, 21 of 107 (19.6%) died in the hospital; P<.001. After adjustment for age, do not intubate/do not resuscitate status, and Charlson comorbidity index score, error or delay remained associated with markedly higher odds of dying in the hospital: odds ratio, 5.7; 95% CI, 2.0-17.8. For patients with an error or delay, the median hospital stay was 4 (IQR, 2-7) days, compared with 2 (IQR, 2-4) days for those without; P=.10.
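For orientation, the crude (unadjusted) odds ratio can be recovered directly from the mortality counts in this paragraph. The sketch below is an assumption-laden illustration, not the study's analysis: it uses the Woolf (log-scale) confidence interval, the function name `odds_ratio_ci` is hypothetical, and it cannot reproduce the adjusted odds ratio of 5.7, which comes from a multivariable logistic regression on patient-level data not given in the article.

```python
import math
from statistics import NormalDist

def odds_ratio_ci(a: int, b: int, c: int, d: int, level: float = 0.95):
    """Crude odds ratio with a Woolf (log-scale) confidence interval.

    Table layout: a = exposed with outcome, b = exposed without outcome,
                  c = unexposed with outcome, d = unexposed without outcome.
    """
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

# Error or delay: 14 died, 9 survived; no error or delay: 21 died, 86 survived.
crude_or, lower, upper = odds_ratio_ci(14, 9, 21, 86)
print(f"crude OR = {crude_or:.2f} (95% CI, {lower:.2f}-{upper:.2f})")
```

The crude estimate is somewhat larger than the adjusted one, which is what would be expected if age, code status, and comorbidity account for part of the association.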

Interrater Reliability of Identifying Variations in Diagnostic Fidelity

Following final arbitration, the observed agreement was calculated for the categories of error without delay, delay without error, and error or delay. For error without delay, the κ coefficient was 0.48 (95% CI, 0.22-0.74). For delay without error, the κ coefficient was 0.61 (95% CI, 0.43-0.78). For error or delay, the κ coefficient was 0.57 (95% CI, 0.40-0.74).
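To make the κ statistic concrete, the sketch below computes Cohen's κ from a 2×2 agreement table. The counts are only partly grounded in the article: the Limitations section reports that the fellows agreed on 6 patients with error without delay and disagreed on 11, and the sketch assumes they agreed on absence in the remaining 113 of 130. The split of the 11 disagreements between reviewers (5 and 6) is purely hypothetical, as is the function name `cohen_kappa_2x2`.

```python
def cohen_kappa_2x2(both_yes: int, a_only: int, b_only: int, both_no: int) -> float:
    """Cohen's kappa for two raters making a yes/no judgment.

    both_yes: both raters said yes      a_only: only rater A said yes
    b_only:   only rater B said yes     both_no: both raters said no
    """
    n = both_yes + a_only + b_only + both_no
    observed = (both_yes + both_no) / n
    a_yes = (both_yes + a_only) / n
    b_yes = (both_yes + b_only) / n
    # Agreement expected by chance from each rater's marginal rates.
    expected = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    return (observed - expected) / (1 - expected)

# 6 agreed "error", 11 disagreements split 5/6 (hypothetical), 113 agreed "no error".
kappa = cohen_kappa_2x2(both_yes=6, a_only=5, b_only=6, both_no=113)
print(f"kappa = {kappa:.2f}")
```

Under these assumed counts κ comes out near 0.48, close to the value reported for error without delay, although this does not confirm the actual reviewer-level table. The example also shows why κ can be modest even when raw agreement is high (119 of 130): when a finding is uncommon, most agreement is expected by chance.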

Discussion

This retrospective observational study used an SOP to evaluate the electronic medical record and identify variations in diagnostic fidelity. Variation in diagnostic fidelity was classified as error or delay. This approach reliably identified error or delay in 17.7% (23 of 130) of our cohort. There was moderate agreement between reviewers in the classification of error or delay. Patients identified as having error or delay had significantly higher Charlson comorbidity index and cardiac arrest triage scores, as well as greater adjusted odds of dying in the hospital.

Studies to date that relate to diagnostic fidelity and identification of error and delay have largely focused on autopsy assessments, morbidity and mortality reviews, adverse event reporting, malpractice litigation, and largely unsystematic feedback methodologies.15, 16, 17, 18 Classification systems for diagnostic error described in the literature include the Goldman et al4 classification system of major and minor errors. Other classification systems use descriptions such as no-fault errors, system-related factors, and cognitive errors.19 Autopsy-based studies report that diagnostic error contributes to approximately 10% (10 of 100) to 38.7% (36 of 93) of patient deaths.4, 5 Our study applies a standardized approach to identify variations in diagnostic fidelity, defined as error and/or delay, using the electronic medical record. This approach was feasible and able to identify error or delay in 17.7% (23 of 130) of the cohort and error without delay in 9.2% (12 of 130). Additionally, in this study, having an error or delay was associated with almost 6-fold higher adjusted odds of dying in the hospital compared with not having an error or delay.

Measurement of variations in diagnostic fidelity is important for establishing the magnitude and nature of the problem, determining the causes and risks, evaluating the effectiveness of diagnostic interventions, assessing skills in education and training, and establishing accountability.2 A lack of consensus for definitions of error and delay and a lack of standardized measurement processes limit the analysis of contributing factors on patient outcomes. The IOM defines diagnostic error, using a patient-focused approach, as a failure to establish an accurate and timely explanation of the patient's health problem or failure to communicate that explanation to the patient.1, 3 Ultimately, a variation in diagnostic fidelity affects the patient more than the clinician; thus, for this study, we based our definition of error on the IOM's patient-focused definition and adapted it to further define delay.

Advancement of the electronic medical record in health care systems provides an opportunity to better understand the timeline of a patient's clinical course and diagnosis. It also acts as a portal for providers to communicate the diagnosis. The modern electronic medical record has the ability to capture and record physiologic and diagnostic parameters throughout a patient's hospitalization. Thus, it has been used to identify adverse events that trigger interventions that improve patient outcomes.20, 21, 22, 23, 24 Using the electronic medical record to screen for diagnostic fidelity in the future may provide an opportunity to develop a system that identifies errors and/or delays before the occurrence of adverse events or outcomes. Identifying patient characteristics for those at risk for variations in diagnostic fidelity can guide the development of alerts and triggers prospectively applied to the electronic medical record.

Our study provides a standardized approach to evaluating variation in diagnostic fidelity in terms of error and delay. Applying this retrospectively to the electronic medical record identified patients with greater odds of dying in the hospital. The purpose of this study was to assess the reliability and internal validity of this methodology. Identifying variations in diagnostic fidelity is important because it provides opportunities for learning, implementation of change, and improvement in clinical performance that ultimately affect patient outcomes. Even with a standardized process, identifying variations in diagnostic fidelity remains challenging. A broad definition categorizing errors or delays together addresses the possibility of error leading to delay or vice versa.

Limitations

This study has several limitations. The data are limited by the retrospective nature. Interpretations of the medical record including provider documentation are limited and subject to hindsight bias. The reviewers are interpreting the provider's thought processes and decision-making algorithms based only on the available documentation. Thus, this method cannot eliminate bias. Interpretation of the clinical course is limited by the reviewers' clinical experience.

In this study, fellow-level agreement on error was limited and thus arbitration by senior critical care clinicians was an integral step before analysis. During the initial screening and review, the critical care fellows agreed that 6 patients had error without delay. However, they disagreed on the presence of error without delay on a further 11 patients. More experienced clinicians were ultimately required to make final decisions on error without delay, delay without error, and presence of error or delay. Thus, this process is dependent on experienced clinicians to classify error or delay that identifies variations in diagnostic fidelity. We note that the broader inclusion of error or delay in our assessment for variation of diagnostic fidelity could have positively affected our κ values and conclusion regarding agreements.

Although our definition of variation in diagnostic fidelity and identification of error and delay were based on recommendations from the IOM, the lack of standardization in this definition limits its use and generalizability. However, the purpose of this study was to identify and test a methodology that uses an SOP to reliably assess the presence of variation in diagnostic fidelity, identify patient characteristics, and compare outcomes. We acknowledge the application of this method to a single center, and the limited sample limits generalizability. Our choice of a convenience sample that is not a probability sample further limits generalizability. The chosen classifications of error were based on previously published research, lending an evidence base for our approach that takes into account both a cognitive and systems-based review of areas at risk for error and/or delay. Our systematic approach allows for an opportunity to test external validity.

Finally, it is important to note that in our model, age, previously identified do not intubate/do not resuscitate code status, increased comorbid conditions, and acute illness were associated with higher odds for dying in the hospital, and there may be other unmeasured variables associated with death affecting this association. This may have inflated the coefficient for error and/or delay in the regression equation.

Conclusion

Variations in diagnostic fidelity undoubtedly contribute to adverse patient outcomes. In this study, we developed a standardized approach to reliably identify error and/or delay in adult nontrauma patients at risk for emerging critical illness. Patients who have variations in diagnostic fidelity have a higher comorbidity burden, identified through higher Charlson comorbidity index and cardiac arrest triage scores. Additionally, the presence of variation in diagnostic fidelity is associated with increased hospital mortality. Understanding these determinants and predictors of emerging critical illness may help identify at-risk populations early, promote timely intervention, reduce adverse events, and improve patient outcomes.

Acknowledgments

The authors acknowledge Mayo Epidemiology and Translational Research in Intensive Care and the Mayo Clinic Critical Care Research Committee for their support in data abstraction and protocol review. All work for this study was conducted at Mayo Clinic, Rochester, Minnesota. Drs Jayaprakash and Chae were co-primary authors.

Footnotes

Grant Support: This study was supported by the Center for Translational Science Activities grant UL1 TR000135 from the National Center for Advancing Translational Sciences, a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH. This study received internal funding from the Mayo Clinic Critical Care Research Committee.

Potential Competing Interests: The authors report no competing interests.

Supplemental Online Material

Supplemental material can be found online at http://mcpiqojournal.org. Supplemental material attached to journal articles has not been edited, and the authors take responsibility for the accuracy of all data.

References

1. Committee on Diagnostic Error in Health Care; Board on Health Care Services; Institute of Medicine; National Academies of Sciences, Engineering, and Medicine. Improving Diagnosis in Health Care. Washington, DC: National Academies Press; 2015.
2. McGlynn E.A., McDonald K.M., Cassel C.K. Measurement is essential for improving diagnosis and reducing diagnostic error: a report from the Institute of Medicine. JAMA. 2015;314(23):2501–2502. doi: 10.1001/jama.2015.13453.
3. Singh H., Graber M.L. Improving diagnosis in health care-the next imperative for patient safety. N Engl J Med. 2015;373(26):2493–2495. doi: 10.1056/NEJMp1512241.
4. Goldman L., Sayson R., Robbins S., et al. The value of the autopsy in three medical eras. N Engl J Med. 1983;308(17):1000–1005. doi: 10.1056/NEJM198304283081704.
5. Aalten C.M., Samson M.M., Jansen P.A. Diagnostic errors; the need to have autopsies. Neth J Med. 2006;64(6):186–190.
6. Brennan T.A., Localio A.R., Leape L.L., et al. Identification of adverse events occurring during hospitalization. A cross-sectional study of litigation, quality assurance, and medical records at two teaching hospitals. Ann Intern Med. 1990;112(3):221–226. doi: 10.7326/0003-4819-112-3-221.
7. Beitler J.R., Link N., Bails D.B., et al. Reduction in hospital-wide mortality after implementation of a rapid response team: a long-term cohort study. Crit Care. 2011;15(6):R269. doi: 10.1186/cc10547.
8. Boniatti M.M., Azzolini N., Viana M.V., et al. Delayed medical emergency team calls and associated outcomes. Crit Care Med. 2014;42(1):26–30. doi: 10.1097/CCM.0b013e31829e53b9.
9. Barwise A., Thongprayoon C., Gajic O., et al. Delayed rapid response team activation is associated with increased hospital mortality, morbidity, and length of stay in a tertiary care institution. Crit Care Med. 2016;44(1):54–63. doi: 10.1097/CCM.0000000000001346.
10. Hillman K.M., Bristow P.J., Chey T., et al. Antecedents to hospital deaths. Intern Med J. 2001;31(6):343–348. doi: 10.1046/j.1445-5994.2001.00077.x.
11. Kause J., Smith G., Prytherch D., et al. Intensive Care Society (UK); Australian and New Zealand Intensive Care Society Clinical Trials Group. A comparison of antecedents to cardiac arrests, deaths and emergency intensive care admissions in Australia and New Zealand, and the United Kingdom: the ACADEMIA study. Resuscitation. 2004;62(3):275–282. doi: 10.1016/j.resuscitation.2004.05.016.
12. Schein R.M., Hazday N., Pena M., et al. Clinical antecedents to in-hospital cardiopulmonary arrest. Chest. 1990;98(6):1388–1392. doi: 10.1378/chest.98.6.1388.
13. Braithwaite R.S., DeVita M.A., Mahidhara R., et al. Medical Emergency Response Improvement Team (MERIT). Use of medical emergency team (MET) responses to detect medical errors. Qual Saf Health Care. 2004;13(4):255–259. doi: 10.1136/qshc.2003.009324.
14. Bergl P.A., Nanchal R.S., Singh H. Diagnostic error in the critically ill: defining the problem and exploring next steps to advance ICU safety. Ann Am Thorac Soc. 2018;15(8):903–907. doi: 10.1513/AnnalsATS.201801-068PS.
15. Schiff G.D., Kim S., Abrams R., et al. Diagnosing Diagnosis Errors: Lessons from a Multi-institutional Collaborative Project. Rockville, MD: Agency for Healthcare Research and Quality; 2005.
16. Cifra C.L., Jones K.L., Ascenzi J.A., et al. Diagnostic errors in a PICU: insights from the morbidity and mortality conference. Pediatr Crit Care Med. 2015;16(5):468–476. doi: 10.1097/PCC.0000000000000398.
17. Custer J.W., Winters B.D., Goode V., et al. Diagnostic errors in the pediatric and neonatal ICU: a systematic review. Pediatr Crit Care Med. 2015;16(1):29–36. doi: 10.1097/PCC.0000000000000274.
18. Mort T.C., Yeston N.S. The relationship of pre mortem diagnoses and post mortem findings in a surgical intensive care unit. Crit Care Med. 1999;27(2):299–303. doi: 10.1097/00003246-199902000-00035.
19. Graber M.L., Franklin N., Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165(13):1493–1499. doi: 10.1001/archinte.165.13.1493.
20. Sebat F., Johnson D., Musthafa A.A., et al. A multidisciplinary community hospital program for early and rapid resuscitation of shock in nontrauma patients. Chest. 2005;127(5):1729–1743. doi: 10.1378/chest.127.5.1729.
21. Manaktala S., Claypool S.R. Evaluating the impact of a computerized surveillance algorithm and decision support system on sepsis mortality. J Am Med Inform Assoc. 2017;24(1):88–95. doi: 10.1093/jamia/ocw056.
22. Morimoto T., Gandhi T.K., Seger A.C., et al. Adverse drug events and medication errors: detection and classification methods. Qual Saf Health Care. 2004;13(4):306–314. doi: 10.1136/qshc.2004.010611.
23. Singh H., Thomas E.J., Khan M.M., Petersen L.A. Identifying diagnostic errors in primary care using an electronic screening algorithm. Arch Intern Med. 2007;167(3):302–308. doi: 10.1001/archinte.167.3.302.
24. Tien M., Kashyap R., Wilson G.A., et al. Retrospective derivation and validation of an automated electronic search algorithm to identify post operative cardiovascular and thromboembolic complications. Appl Clin Inform. 2015;6(3):565–576. doi: 10.4338/ACI-2015-03-RA-0026.
