Abstract

The use of 3D sensing for plant phenotyping has increased in recent years. This review provides an overview of 3D traits for the demands of plant phenotyping, considering different measuring techniques, derived traits and biological use cases. A comparison between a high-resolution 3D measuring device and an established measuring tool, the leaf meter, is shown to classify the achievable measurement accuracy. Furthermore, different measuring techniques such as laser triangulation, structure from motion, time-of-flight, terrestrial laser scanning or structured light approaches enable the assessment of plant traits such as leaf width and length, plant size, volume and development at the plant and organ level. The introduced traits are presented with respect to the measured plant types, the measuring technique used and their link to a biological use case. These use cases range from trait and growth analysis for measurements over time to more complex investigations of water budget, drought responses and QTL (quantitative trait loci) analysis. The processing pipelines used are generalized into a 3D point cloud processing workflow that shows the individual processing steps for deriving plant parameters at the plant level, at the organ level using machine learning, or over time using time series measurements. Finally, the next step in plant sensing, the fusion of different sensor types, namely 3D and spectral measurements, is introduced using sugar beet as an example. This multi-dimensional plant model is the key to modelling the influence of geometry on radiometric measurements and to correcting it. This publication depicts the state of the art of 3D measurement of plant traits as used in plant phenotyping: how the data are acquired, how they are processed and what kinds of traits are measured at the single plant, miniplot, experimental field and open field scale. 
Future research will focus on highly resolved point clouds on the experimental and field scale as well as on the automated trait extraction of organ traits to track organ development at these scales.

Keywords: 3D plant scanning, Plant traits, Parameterization, Plant model, Point cloud

Background

Measuring three-dimensional (3D) surface information from plants has been introduced during the last three decades [1–3]. Access to the plant architecture [4] enables tracking the geometrical development of the plant and the parameterization of plant canopies, single plants and plant organs. As 3D measuring is non-destructive, monitoring over time is possible [5]. Doing this in 3D is essential to differentiate between plant movement and real growth at the plant and organ level [6]. Plant phenotyping aims to bridge the gap between genomics, plant function and agricultural traits [7]. 3D measuring devices are well suited to this task because they enable exact geometry and growth measurements.

This can be achieved using different techniques, such as laser scanning, structure from motion, terrestrial laser scanning or structured light approaches, as well as time-of-flight sensors or light field cameras. Each of these technologies has its own use cases at the (single) plant scale (laboratory, < 10 plants), the miniplot scale (greenhouse, < 1000 plants), the experimental field (< 10,000 plants) or the open field (> 10,000 plants), meeting different requirements regarding robustness, accuracy, resolution and speed. The demands of plant phenotyping include the generation of functional structural plant models that link geometry with function [8], the differentiation between movement and growth to visualize and measure diurnal patterns [6], and the monitoring of the influence of environmental stress on plant development [9].

All techniques result in a point cloud, in which each point provides a set of X, Y, Z coordinates that locate it in 3D space. Depending on the measuring device, these coordinates can be enriched with intensity or color information representing the light reflected in the direction of the sensor. Existing 2.5D approaches measure distances from a single point of view. In contrast, real 3D models are point clouds recorded from different views; they show less occlusion and a higher spatial resolution and accuracy. In the following, resolution is defined as the smallest possible point-to-point distance of a scan, also known as the sampling distance. Accuracy describes the distance between the real and the measured target point.
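The two definitions can be made concrete in a few lines of NumPy. This is a minimal sketch, not a method from the cited studies: it assumes the point cloud is an (N, 3) array and, for accuracy, that the true position of every measured point is known (a hypothetical calibration scenario). The brute-force distance matrix is only suitable for small clouds; large scans would use a spatial index such as a k-d tree.

```python
import numpy as np

def sampling_distance(points: np.ndarray) -> float:
    """Smallest point-to-point distance in an (N, 3) cloud (brute force, O(N^2) memory)."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)  # ignore each point's zero distance to itself
    return float(dist.min())

def mean_accuracy(measured: np.ndarray, reference: np.ndarray) -> float:
    """Mean Euclidean distance between measured points and their true positions."""
    return float(np.linalg.norm(measured - reference, axis=1).mean())

# Hypothetical target: a 1 mm grid, measured with a systematic 0.2 mm offset in z
xs, ys = np.meshgrid(np.arange(5.0), np.arange(5.0))
reference = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
measured = reference + np.array([0.0, 0.0, 0.2])

print(sampling_distance(measured))         # 1.0 (resolution)
print(mean_accuracy(measured, reference))  # approximately 0.2 (accuracy)
```

The example separates the two properties: the grid spacing (resolution) is unaffected by the systematic offset, which only shows up in the accuracy.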

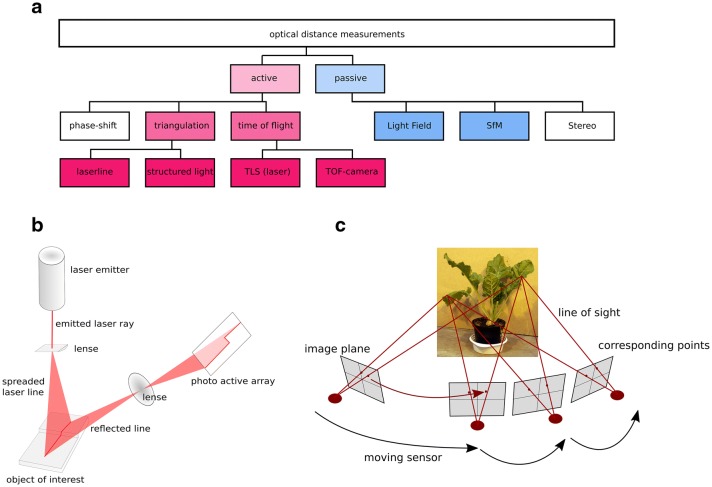

A technical categorization of 3D measuring techniques is shown in Fig. 1a. It distinguishes two main categories: approaches based on active illumination and passive approaches. Active sensors use their own light emitter, whereas passive sensors rely on the ambient light conditions. Triangulation-based systems and time-of-flight measurements are active techniques. Triangulation-based techniques comprise laser triangulation (LT) and structured light (SL); time-of-flight-based techniques comprise terrestrial laser scanning (TLS) and time-of-flight (ToF) cameras. Light field cameras (LF) and structure from motion (SfM) approaches belong to the group of passive methods. A more technical description focusing on output and price is given in Table 1.

Fig. 1.

The hierarchy of the introduced 3D measuring techniques most relevant for plant phenotyping (highlighted in color). Laser triangulation, structured light approaches, time of flight sensing, structure from motion and light field imaging are shown in their technical connection (a). The two most important techniques, laser triangulation (b) and structure from motion (c), are shown in detail to illustrate how points are measured

Table 1.

Technical overview of 3D measuring methods

| Name | Abbr. | Resolution | Active illumination | Direct point cloud access | Output values | Price | Literature |
|---|---|---|---|---|---|---|---|
| Laser triangulation | LT | < mm | Yes | Yes | XYZ (I) | €€€ | [10, 26] |
| Structured light approaches | SL | < mm | Yes | No | XYZI (RGB) | €€ | [18, 27] |
| Structure from motion | SfM | mm | No | No | XYZRGB | € | [17, 28] |
| Time of flight | ToF | mm | Yes | Yes | XYZ (I) | €€ | [29, 30] |
| Light field measuring | LF | mm | No | No | XYZRGB | €€€ | [31, 32] |
| Terrestrial laser scanning | TLS | cm | Yes | Yes | XYZ (I/RGB) | €€€ | [33, 34] |

The table summarizes the differences in illumination, direct point cloud access versus required postprocessing, pricing and resulting values. Pricing is encoded as € < 1000 Euro, €€ < 10,000 Euro and €€€ > 10,000 Euro. The output values are XYZ coordinates with a possible enrichment by I for intensity/reflectance or RGB for a red-green-blue image combination

This review aims to answer key questions regarding 3D plant phenotyping. What are the point cloud requirements, in terms of point resolution and accuracy, for 3D plant phenotyping at different scales? Which sensor techniques can be used for specific plant phenotyping tasks? How are these datasets processed, which traits have been extracted and what is their biological relevance?

Laser triangulation, LT

LT is mostly applied in laboratory environments, either because of its high-resolution, high-accuracy measurements [10] or because of its easy setup using low-cost components [11, 12]. Laser scanning describes systems based on laser distance measurement combined with sensor movement; typically, this means a laser triangulation system. Here, a laser beam is spread into a laser line that illuminates the surface of interest. The reflection of the laser line is recorded by a photosensitive array (CCD or PSD). Calibration of the setup allows the position of the reflection on the camera chip to be interpreted as a distance measurement (see Fig. 1b). A complete 3D point cloud is obtained by moving the sensor setup. LT systems use active illumination and can therefore be used independently of the ambient illumination. A point resolution of a few microns can be reached [10].
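The conversion from a position on the camera chip to a distance can be illustrated with a strongly simplified geometry. The sketch below assumes (hypothetically) a pinhole camera and a laser ray running parallel to the optical axis at a known baseline; real LT sensors use tilted lasers and a full calibration, so this only shows the triangulation principle.

```python
import numpy as np

def depth_from_spot(u_px, focal_px: float, baseline_mm: float) -> np.ndarray:
    """Depth Z (mm) of laser spots from their image offset u (pixels).

    Simplified geometry (assumption): the laser ray runs parallel to the camera's
    optical axis at a lateral distance `baseline_mm`, so a spot at depth Z is imaged
    u = focal_px * baseline_mm / Z pixels away from the principal point.
    """
    u_px = np.asarray(u_px, dtype=float)
    return focal_px * baseline_mm / u_px

# Hypothetical sensor: focal length 2000 px, baseline 50 mm
u = np.array([400.0, 500.0, 1000.0])  # spot offsets measured on the camera chip
print(depth_from_spot(u, focal_px=2000.0, baseline_mm=50.0))  # [250. 200. 100.]
```

Differentiating Z = f·b/u gives |dZ/du| = Z²/(f·b): one pixel corresponds to a larger depth step the farther away the target is, which is one reason why resolution and measuring range compete in LT setups.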

LT setups always involve a trade-off between achievable point resolution and measurable volume: either a small volume is measured at the highest resolution, or a large volume is measured at low resolution. This requires adapting the sensor system to the complete experiment beforehand and a good estimate of the necessary resolution and measurable volume.
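The trade-off can be illustrated with simple arithmetic. Assuming a hypothetical sensor that delivers a fixed number of points per laser line, widening the line to cover a larger measuring volume spreads the same points over a longer distance:

```python
def lateral_spacing(line_width_mm: float, points_per_line: int) -> float:
    """Distance between neighbouring points when a fixed number of points spans one laser line."""
    return line_width_mm / (points_per_line - 1)

# Hypothetical sensor delivering 1001 points per laser line
for width_mm in (50.0, 200.0, 1000.0):
    spacing = lateral_spacing(width_mm, 1001)
    print(f"line width {width_mm:6.0f} mm -> point spacing {spacing:.2f} mm")
```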

Adapted sensor systems that deliver plant point clouds with millimeter resolution have emerged in the last few years. These sensors use laser triangulation for field-scale measurements with a non-visible laser wavelength (NIR, usually 700–800 nm), which results in better reflection under sunlight [13, 14].

Structure from motion, SfM

SfM approaches use a set of 2D images captured by RGB cameras to reconstruct a 3D model of the object of interest [15]. After the intrinsic (distortion, focal length etc.) and extrinsic (position and orientation) camera parameters have been estimated, the images are related to each other [16] using corresponding points within the images (see Fig. 1c). These corresponding points are used to connect the images and to calculate the 3D model. Depending on the camera type, the result is a 3D point cloud including color (RGB) or the intensity of the measured reflection [17]. The resolution is comparable to that of LT point clouds, but it strongly depends on the number of images used for the 3D calculation, the number of different viewing angles from which the pictures were taken, and the resolution of the camera chip (CCD) [17].
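The final step, computing a 3D point from corresponding image points, can be sketched with linear (DLT) triangulation. This minimal sketch assumes the camera projection matrices are already known and the correspondences already matched; a complete SfM pipeline additionally detects and matches features, estimates the camera parameters and refines everything with bundle adjustment. All camera values are hypothetical.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 projection matrices K @ [R | t], assumed known from calibration.
    x1, x2: pixel coordinates (u, v) of the same point in image 1 and image 2.
    """
    # Each view contributes two equations: u * (p3 . X) - (p1 . X) = 0, same for v
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Homogeneous solution = right singular vector of the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Pinhole projection of a 3D point to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: two identical cameras (f = 1000 px), the second shifted 0.1 m along x
K = np.array([[1000.0, 0.0, 500.0], [0.0, 1000.0, 500.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X_true = np.array([0.05, -0.02, 1.5])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)  # recovers approximately [0.05, -0.02, 1.5]
```

With noise-free correspondences the point is recovered exactly; with real image noise, more views and wider viewing angles stabilize the solution, which matches the dependencies on image number and viewing angles described above.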

In contrast to LT, where most of the effort is spent during measuring and the immediate result is the point cloud, SfM approaches need only a short time to capture the images but considerable effort for the reconstruction algorithm.

SfM approaches are mostly used on UAV (unmanned aerial vehicle) platforms, as they need neither special active illumination nor complex camera setups. Because this approach only needs a camera for image acquisition, the hardware setup is small and lightweight and thus fulfills the weight restrictions of UAVs. As cheap consumer cameras can be used and the algorithms are mostly free to use, the non-professional community commonly uses this technique, with handheld or tripod-mounted cameras, to create input models for 3D printers. Thus, many applications are available that focus on reproducibility rather than accuracy.

Structured light (SL), time of flight (ToF), light field (LF) and terrestrial laser scanning (TLS)

Besides LT and SfM, there are various other techniques to image three-dimensional data; the most common are SL, ToF and LF approaches. SL projects patterns, mostly grids or horizontal bars, in a specific temporal order, and the camera records an image for each pattern. Using a pre-defined camera-projector setup, the 2D points of the pattern are linked to their 3D information by measuring the deformation of the pattern [18, 19]. As SL setups require considerable space and need a lot of time to acquire the images, either the object or the measuring system has to be moved to combine different points of view. SL approaches are used in industry for reverse engineering or quality control, providing high resolution and high accuracy in a larger measuring volume [20].
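The text does not specify how the temporal pattern order is encoded; one common choice for bar patterns is a binary-reflected Gray code, in which each projector column receives a unique sequence of bright and dark values over the pattern sequence. The sketch below assumes an idealized camera that sees each stripe perfectly (no thresholding, no blur). It only recovers which projector column illuminated a camera pixel; the 3D point would then follow from intersecting the camera ray with that column's light plane using the camera-projector calibration, which is not shown.

```python
import numpy as np

def gray_code_patterns(n_columns: int) -> np.ndarray:
    """Binary stripe patterns (n_bits x n_columns), one row per projected image.

    Pattern k shows bit (n_bits - 1 - k) of each projector column's Gray code,
    so the coarsest stripes are projected first.
    """
    n_bits = int(np.ceil(np.log2(n_columns)))
    cols = np.arange(n_columns)
    gray = cols ^ (cols >> 1)  # binary-reflected Gray code
    return np.array([(gray >> (n_bits - 1 - k)) & 1 for k in range(n_bits)], dtype=np.uint8)

def decode_gray(bits: np.ndarray) -> np.ndarray:
    """Projector column index for each camera pixel from its observed bits (n_bits x n_pixels)."""
    gray = np.zeros(bits.shape[1], dtype=np.int64)
    for row in bits:  # reassemble the Gray code, most significant bit first
        gray = (gray << 1) | row.astype(np.int64)
    binary = gray.copy()
    shift = gray >> 1
    while shift.any():  # Gray -> binary: XOR with all right shifts
        binary ^= shift
        shift >>= 1
    return binary

# Idealized check: camera pixels that see projector columns 3, 512 and 1023
patterns = gray_code_patterns(1024)
observed = patterns[:, [3, 512, 1023]]  # bright (1) / dark (0) per projected pattern
print(decode_gray(observed))  # recovers columns 3, 512 and 1023
```

Gray codes are often preferred over plain binary codes because neighboring columns differ in only one bit, so a misread stripe edge shifts the decoded column by at most one.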

ToF uses active illumination: the time between the emission of light and the return of its reflection is measured with highly accurate timing methods [21]. This can be performed for thousands of points simultaneously. ToF cameras are small in hardware size but capture images at a rather low resolution. They are mostly used for indoor navigation [22] or in the gaming industry (see Kinect 2, [23]).
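The underlying distance computation is simple; the difficulty lies in timing precision. The sketch below shows the pulsed variant, d = c·t/2, and, as an addition going beyond this paragraph, the continuous-wave phase-shift variant d = c·Δφ/(4π·f_mod) used by many ToF cameras and phase-shift scanners. The example values are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def distance_from_pulse(round_trip_s: float) -> float:
    """Pulsed ToF: the light travels to the target and back, so the path is halved."""
    return C * round_trip_s / 2.0

def distance_from_phase(phase_rad: float, modulation_hz: float) -> float:
    """Continuous-wave ToF (phase shift): d = c * phi / (4 * pi * f_mod).

    Unambiguous only up to c / (2 * f_mod); larger distances wrap around.
    """
    return C * phase_rad / (4.0 * math.pi * modulation_hz)

print(distance_from_pulse(10e-9))              # about 1.5 m for a 10 ns round trip
print(distance_from_phase(math.pi / 2, 20e6))  # about 1.87 m at 20 MHz modulation
print(C / (2 * 20e6))                          # unambiguous range at 20 MHz, about 7.5 m
```

A depth difference of 1 mm corresponds to a round-trip time difference of only about 6.7 ps, which is why highly accurate time measurement is required.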

LF cameras [24] provide, in addition to an RGB image, depth information by measuring the direction of the incoming light using small lenses on the pixels of the camera array. This enables the reconstruction of 3D information.

ToF and LF setups have to be moved to obtain a complete 3D point cloud. ToF is, moreover, rather slow and, like LF approaches, suffers from a low resolution when compared to LT and SfM measuring approaches (see Fig. 1).

Terrestrial laser scanning is a technique that originates from land surveying. Using a time-of-flight or phase-shift approach, these scanners scan their environment and have to be moved to further positions to capture occluded areas. These systems are well established for surveying tasks such as landslide detection or deformation monitoring of large areas [25]. For plant monitoring, they offer a large measurable volume with accuracies of millimeters, but surveying knowledge is needed, especially when more than one point of view is used. The technique is a well-established tool for measuring canopy parameters. However, because it is cost intensive, because its data are hard to process (the measurements from different positions have to be connected) and because its measuring procedure is time consuming, it is not well suited to plant measuring.
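Connecting scans from different scanner positions (registration) is commonly done via targets visible from several positions. The sketch below assumes that at least three non-collinear targets have already been identified in both scans (hypothetical coordinates) and estimates the rigid transformation with the Kabsch (SVD) method; practical workflows usually refine the result with ICP on the overlapping surfaces.

```python
import numpy as np

def rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t (Kabsch method).

    src, dst: (N, 3) coordinates of the same N >= 3 non-collinear targets
    as seen from two scanner positions.
    """
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)            # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))         # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_dst - R @ c_src
    return R, t

# Hypothetical targets seen from position A, and the same targets seen from position B
theta = np.radians(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -2.0, 0.3])
targets_a = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 8.0, 1.0], [4.0, 3.0, 2.5]])
targets_b = targets_a @ R_true.T + t_true

R, t = rigid_transform(targets_a, targets_b)
# The same R and t would then be applied to every point of scan A: scan_a @ R.T + t
print(np.round(R, 3), np.round(t, 3))
```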

Point cloud resolution—its effect on the extracted traits

To determine the requirements on point clouds, and thus on 3D measuring devices, for plant phenotyping, it is important to compare the accuracy of these tools with that of established tools for trait measurement. 3D plant measuring has proven to be a reliable tool for plant phenotyping when compared with established manual or invasive measurements [3].

However, a comparison with proven non-invasive technologies, as well as the scan resolution required for an accurate measurement in a specific scenario, remain open questions.

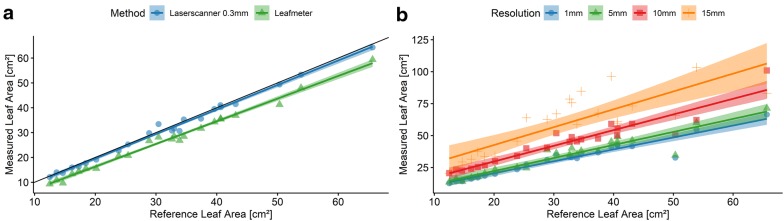

An experiment was conducted to compare a high-precision LT system with an established non-invasive technology, a leafmeter. Both techniques were compared with an established but invasive photo-based reference method [35, 36] with an accuracy of below mm. The photo-based method uses an RGB image and a metric reference frame; it destroys the plant, as the leaves are cut off and positioned within the metric frame. The reference experiment included ten barley plants. Each plant had six to seven leaves, of which at least five were measured; the inner leaves could not be measured because of constraints of the leafmeter. At the time of measurement the plants were at growth stage BBCH 30. The plants were cultivated in a greenhouse. For the measurements, a leafmeter (Portable Laser Leaf Meter CI-203, CID Inc., Camas, WA, USA), a well-established tool for leaf area measurement [37], and a laser scanner (Romer measuring arm + Perceptron v5, [38]) were used. The laser scanner point cloud consists of several thousand 3D lines, which were merged automatically. To obtain an evenly distributed point cloud, it was rastered (0.3 mm point-to-point distance) and meshed using the surface smoothing approach provided by CloudCompare (version 2.10 Alpha, http://www.cloudcompare.org). The leaf area was calculated by summing up the areas of all triangles of the mesh, a method that has previously been applied to corn measurements [39]. The error metrics (RMSE and MAPE) were calculated according to [3].
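The area computation from the mesh can be sketched as follows. Meshing itself was done in CloudCompare in the experiment; this sketch assumes the resulting mesh is available as a vertex array and a triangle index array (hypothetical names) and sums the triangle areas 0.5·|(b − a) × (c − a)|.

```python
import numpy as np

def mesh_area(vertices: np.ndarray, triangles: np.ndarray) -> float:
    """Leaf area as the sum of all triangle areas of a mesh.

    vertices: (V, 3) coordinates; triangles: (T, 3) vertex indices per face.
    """
    a = vertices[triangles[:, 0]]
    b = vertices[triangles[:, 1]]
    c = vertices[triangles[:, 2]]
    # Half the norm of the cross product of two edges is the area of each triangle
    return float(0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1).sum())

# Hypothetical check: a flat 2 cm x 3 cm rectangle split into two triangles
verts = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [2.0, 3.0, 0.0], [0.0, 3.0, 0.0]])
faces = np.array([[0, 1, 2], [0, 2, 3]])
print(mesh_area(verts, faces))  # 6.0 (cm^2)
```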

Figure 2a shows the correlation between the laser scanner and the reference measurements, as well as between the leafmeter and the reference measurements. The leafmeter shows a small offset caused by its handling, namely when it is positioned at the leaf base. The error measurements are given in Table 2.
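For reference, the metrics can be computed as follows. The exact formulas of [3] are not reproduced in this text, so the sketch uses the standard definitions as an assumption: R² as the squared Pearson correlation, MAPE in percent relative to the reference, and RMSE in the unit of the measurements. The leaf areas are hypothetical.

```python
import numpy as np

def error_metrics(measured, reference):
    """Return (R^2, MAPE in %, RMSE) for paired measured/reference values."""
    m = np.asarray(measured, dtype=float)
    r = np.asarray(reference, dtype=float)
    r2 = np.corrcoef(m, r)[0, 1] ** 2            # squared Pearson correlation
    mape = 100.0 * np.mean(np.abs((m - r) / r))   # relative to the reference
    rmse = np.sqrt(np.mean((m - r) ** 2))         # same unit as the inputs
    return float(r2), float(mape), float(rmse)

# Hypothetical leaf areas in cm^2 (not data from the experiment)
reference = [10.0, 20.0, 40.0]
measured = [11.0, 19.0, 42.0]
r2, mape, rmse = error_metrics(measured, reference)
print(f"R^2 = {r2:.3f}, MAPE = {mape:.2f} %, RMSE = {rmse:.2f} cm^2")
```

Because a constant offset does not change the Pearson correlation, an offset such as the one observed for the leafmeter raises MAPE and RMSE without lowering R², which is why several metrics are reported together.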

Fig. 2.

Laser scanning accuracy. Reference experiment using a photogrammetric method as reference to evaluate the accuracy of the laser scanning device and of the leafmeter as a device for measuring leaf area [35, 36]. Both methods show a high correlation with the reference method (a). The comparison between the laser scanner at different point resolutions and the reference method is shown in (b). The transparent color in both plots indicates the 95% confidence intervals. The black line is the bisector (1:1 line), i.e. the line of perfect agreement

Table 2.

Comparison of the error measurements for the leafmeter–laserscanner combination using different sampling resolutions for the laserscanned point cloud between 0.3 and 15 mm

| Device | Resolution [mm] | R² | MAPE [%] | RMSE [cm²] |
|---|---|---|---|---|
| Leafmeter | – | 0.99 | 0.16 | 5.01 |
| Laserscanner | 0.3 | 0.99 | 0.04 | 1.34 |
| Laserscanner | 1.0 | 0.91 | 0.05 | 3.94 |
| Laserscanner | 5.0 | 0.89 | 0.11 | 5.4 |
| Laserscanner | 10.0 | 0.89 | 0.43 | 13.96 |
| Laserscanner | 15.0 | 0.68 | 0.90 | 30.66 |

Resampling included a reduction in resolution as well as added noise of the same magnitude. The correlation (R²) is shown together with the MAPE and RMSE error measurements

By reducing the resolution and point accuracy of the laser-scanned point cloud, the error levels relative to the established leafmeter can be determined.

A further analysis of the applicability of different point resolutions (1–15 mm) was conducted, as the introduced 3D measuring techniques differ in resolution and accuracy (Fig. 2b). For this purpose, the scans of the first experiment were resampled to reduce the number of points. In addition, noise of the same magnitude as the resolution (1–15 mm) was added to the individual points to simulate other 3D sensing technologies more realistically. Table 2 lists the results of the correlation analysis and the related error measurements. The MAPE remained below 1% for all point clouds of reduced quality compared with the reference measurement. Point resolutions above 15 mm were not investigated, as too few points remained to model the leaf.
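The degradation step can be sketched as follows. The text does not state the exact resampling or noise model, so this sketch assumes (hypothetically) a voxel-grid downsampling that replaces all points in each voxel by their centroid, followed by uniform noise of ±resolution/2 per coordinate. The synthetic flat leaf is illustrative only.

```python
import numpy as np

def voxel_downsample(points: np.ndarray, voxel: float) -> np.ndarray:
    """Replace all points inside each cubic voxel of edge `voxel` by their centroid."""
    keys = np.floor(points / voxel).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # the returned shape differs between NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate the points of each voxel
    return sums / counts[:, None]

def degrade(points: np.ndarray, resolution: float, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical degradation: downsample to `resolution`, then add noise of the same magnitude."""
    reduced = voxel_downsample(points, resolution)
    return reduced + rng.uniform(-resolution / 2, resolution / 2, size=reduced.shape)

rng = np.random.default_rng(0)
# Synthetic flat 'leaf' sampled every 0.3 mm over 60 mm x 15 mm
xs, ys = np.meshgrid(np.arange(0, 60, 0.3), np.arange(0, 15, 0.3))
leaf = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
for res in (1.0, 5.0, 10.0, 15.0):
    print(f"{res:4.1f} mm -> {len(degrade(leaf, res, rng))} points")
```

The degraded clouds would then pass through the same meshing and area computation as the original scan.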

As expected, the error increases as the resolution decreases. A laser-based 3D measuring device with a resolution of 5 mm is comparable with a leafmeter in terms of the proportional error. Down to a resolution of 15 mm, the percentage error remained low (MAPE of 0.90%), although the RMSE rose to 30 cm². This means that accurate trait measurements are possible even with low-resolution 3D measuring devices.

Data processing and 3D trait analysis

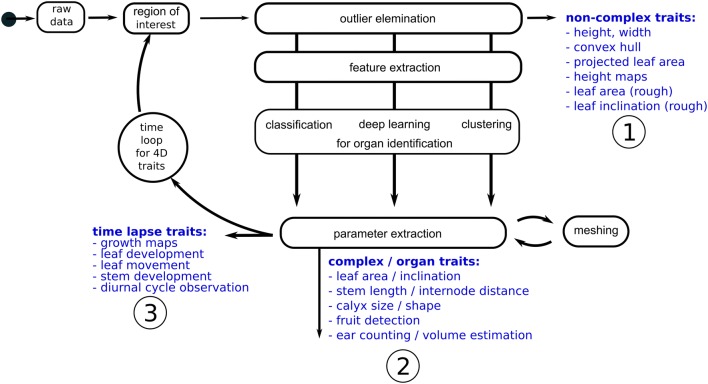

3D scanning captures the geometry of the plant and of individual organs such as leaves or stems. Thus, parameters describing size, shape and development can be calculated for the whole plant and for its organs. In the following, traits derived from the complete plant point cloud (canopy) are referred to as non-complex traits, whereas parameters describing geometry at the organ level are referred to as complex traits, as they require a prior identification of plant organs using classification routines. Non-complex traits include height, width, volumetric measures, maps of height or inclination, and a rough leaf area estimate. The latter describes the leaf area computed from a non-segmented point cloud in which a large percentage of the points are leaf points. Complex traits describe the plant at the organ level, such as exact leaf area, stem length, internode distance, fruit count or ear volume. By repeating these measurement and analysis steps over time, time-lapse traits such as leaf surface development, leaf movement or field maps showing growth at different locations can be extracted. As time can be treated as an additional dimension, time-lapse traits are referred to as 4D traits.

Even seemingly simple traits often need a precise definition before they can be compared with well-established measuring tools. For example, the internode distance can be defined as the distance between two consecutive leaf petioles at the stem, or as the distance between leaf centre points projected onto the plant stem [27].

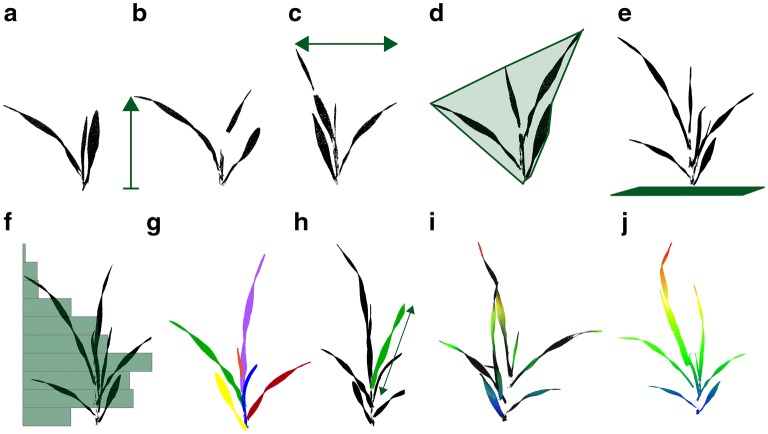

Figure 3 illustrates the derivation of traits from a barley point cloud without any reflection information. It shows the derivation of the parameters plant height, plant width, convex hull, projected leaf area, the leaf area density, the number of leaves, the single leaf length as well as height and inclination maps.

Fig. 3.

Traits that can be extracted from a 3D point cloud of a young barley plant. From the XYZ point cloud (a), non-complex parameters such as plant height (b), plant width (c), the convex hull (d) and the projected leaf area (e) can be extracted. Furthermore, the leaf area density (f) can be derived. The number of leaves (g) and the respective leaf length (h) can be measured after identifying the individual plant organs. For each point, the inclination and the height can be calculated, resulting in an inclination map (i) or a height map (j)

Height and width can be extracted as the difference between the lowest and highest z coordinate (height) and, likewise, between the extreme x and y coordinates (width) [3]. A more complex trait is the convex hull. In 2D, it is the smallest convex polygon covering all points; in 3D, it approximates the volume of the plant [3]. The projected leaf area represents the ground cover of the plant's leaves. It is widely used to characterize canopy light conditions and to calculate the (projected) leaf area index [40]. The height distribution of leaf surface points is an indicator of variation in leaf mass per area, as shown for rice across varieties and nitrogen levels [41]. The number of leaves is an important trait, as it is used, among other things, to describe the growth stage of plants on the BBCH scale [42]. Unfortunately, determining the leaf number automatically is difficult. For 2D plant images this problem has already been addressed, but it was noted to be rather complicated [43], and existing datasets have been used to set up a challenge to solve it [44]. In 3D, different methods can be used to identify the plant organs and to give semantic meaning to the point cloud or to its organs. There are approaches that use the mesh structure produced by meshing algorithms for segmentation [45], approaches that fit the plant measurements into a model [46], and others that use the point neighborhood within the point cloud together with machine learning methods such as Support Vector Machines, coupled with Conditional Random Field techniques to correct classification errors, to identify the organs [47]. Further methods include region growing [48], clustering routines [49] and skeleton extraction approaches [50]. Nevertheless, the results of these approaches depend on the quality of the underlying point cloud.
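The non-complex traits described above can be sketched for an (N, 3) point cloud with the z axis pointing upwards. This is a minimal sketch, not the implementation of [3]: it uses `scipy.spatial.ConvexHull` for the hull volume and approximates the projected leaf area by counting occupied cells of a top-view raster with a hypothetical cell size.

```python
import numpy as np
from scipy.spatial import ConvexHull

def non_complex_traits(points: np.ndarray, cell: float = 1.0) -> dict:
    """Canopy-level traits of an (N, 3) point cloud with z pointing up (input units).

    `cell` is a hypothetical raster size used for the projected leaf area.
    """
    mins, maxs = points.min(axis=0), points.max(axis=0)
    hull = ConvexHull(points)  # smallest convex polyhedron around all points
    # Projected leaf area: occupied cells of a top-view raster times the cell area
    occupied = np.unique(np.floor(points[:, :2] / cell).astype(np.int64), axis=0)
    return {
        "height": float(maxs[2] - mins[2]),
        "width_x": float(maxs[0] - mins[0]),
        "width_y": float(maxs[1] - mins[1]),
        "convex_hull_volume": float(hull.volume),
        "projected_leaf_area": len(occupied) * cell ** 2,
    }

# Hypothetical check with the eight corners of a 2 x 1 x 0.5 box
box = np.array([[x, y, z] for x in (0.0, 2.0) for y in (0.0, 1.0) for z in (0.0, 0.5)])
print(non_complex_traits(box, cell=0.5))
```

The raster-based projected area depends on the chosen cell size: cells much smaller than the point spacing leave gaps between points, while large cells overestimate the covered ground.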

Once the individual leaves are identified, the parameterization can be performed at the organ level, for example to calculate the area of single leaves. Paulus et al. [3] showed an approach for manual leaf tracking to monitor leaf development over time. Leaves can be parameterized using a triangle mesh, where the sum of all triangle areas corresponds to the leaf area. Organs such as stems need a more sophisticated parameterization. Mathematical primitives such as cylinders approximate the stem shape well [51] and enable the extraction of measurements such as height or volume [5]. Further analysis of the point height distribution is mostly used to generate maps that identify areas of differing growth [13].
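As a simplified illustration of the cylinder idea, the sketch below approximates a straight stem segment by taking the main axis from a principal component analysis, the length from the extent along that axis, and the radius from the mean distance of the points to the axis. This is a hypothetical shortcut, not the least-squares cylinder fit used in [51]; it assumes the stem points are already segmented and the segment is roughly straight.

```python
import numpy as np

def approximate_stem_cylinder(stem_points: np.ndarray):
    """Rough cylinder approximation of a straight stem segment.

    Axis = first principal component, length = extent along the axis,
    radius = mean distance of the points from the axis. Not a least-squares fit.
    """
    center = stem_points.mean(axis=0)
    centered = stem_points - center
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    axis = vt[0]                                   # direction of largest variance
    along = centered @ axis                        # position of each point along the axis
    radial = np.linalg.norm(centered - np.outer(along, axis), axis=1)
    length = along.max() - along.min()
    radius = radial.mean()
    return float(length), float(radius), float(np.pi * radius ** 2 * length)

# Hypothetical stem surface: radius 2 mm, length 100 mm, sampled on rings
angles = np.linspace(0.0, 2.0 * np.pi, 36, endpoint=False)
heights = np.linspace(0.0, 100.0, 51)
a, h = np.meshgrid(angles, heights)
stem = np.column_stack([2.0 * np.cos(a).ravel(), 2.0 * np.sin(a).ravel(), h.ravel()])
length, radius, volume = approximate_stem_cylinder(stem)
print(f"length {length:.1f} mm, radius {radius:.2f} mm, volume {volume:.0f} mm^3")
```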

Figure 4 shows a processing pipeline for point clouds from a common 3D scanning device. After cropping the point cloud to the region of interest and a first cleaning step using an outlier removal algorithm, non-complex parameters such as height and width can be derived directly from the point coordinates. Using routines from standard data processing software libraries such as Matlab (MATLAB, The MathWorks, Inc., Natick, Massachusetts, United States), OpenCV [52] or the Point Cloud Library [53], non-complex traits such as the convex hull volume, the projected leaf area or height maps can be extracted. Using plane fitting and meshing algorithms, leaf area and inclination can be calculated (see Fig. 4, part 1).
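Part 1 of the pipeline can be sketched with NumPy and SciPy's k-d tree. The sketch assumes an (N, 3) array with z pointing upwards; the box limits, neighborhood sizes and outlier threshold are hypothetical parameters, and the outlier filter follows the common statistical-outlier-removal idea (mean neighbor distance above the global mean plus a multiple of its standard deviation) rather than any specific library's implementation. Inclination is derived from a local plane fit: the plane normal is the direction of least variance of each point's neighborhood.

```python
import numpy as np
from scipy.spatial import cKDTree

def crop_roi(points, lower, upper):
    """Keep only points inside the axis-aligned box [lower, upper]."""
    mask = np.all((points >= lower) & (points <= upper), axis=1)
    return points[mask]

def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large."""
    dist, _ = cKDTree(points).query(points, k=k + 1)  # first neighbour is the point itself
    mean_dist = dist[:, 1:].mean(axis=1)
    keep = mean_dist <= mean_dist.mean() + std_ratio * mean_dist.std()
    return points[keep]

def inclination_deg(points, k=10):
    """Per-point surface inclination from a local plane fit (0 deg = horizontal surface)."""
    _, idx = cKDTree(points).query(points, k=k)
    angles = np.empty(len(points))
    for i, neighbours in enumerate(idx):
        local = points[neighbours] - points[neighbours].mean(axis=0)
        normal = np.linalg.svd(local, full_matrices=False)[2][-1]  # least-variance direction
        angles[i] = np.degrees(np.arccos(min(1.0, abs(normal[2]))))
    return angles

# Hypothetical example: a plane tilted by 30 degrees plus one stray point
xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
plane = np.column_stack([xs.ravel(), ys.ravel(), ys.ravel() * np.tan(np.radians(30.0))])
raw = np.vstack([plane, [[100.0, 100.0, 100.0]]])
cleaned = remove_statistical_outliers(crop_roi(raw, [-1, -1, -1], [200, 200, 200]))
print(len(raw), "->", len(cleaned), "points; mean inclination",
      round(float(inclination_deg(cleaned).mean()), 2), "deg")
```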

Fig. 4.

A common 3D processing pipeline including the use of a region of interest and outlier handling to extract non-complex parameters such as height, width and volume (1). The use of routines such as machine learning/deep learning enables the identification and parameterization of plant organs (2). Using multiple recordings over time, development can be monitored and growth can be differentiated from movement (3)

Further processing uses machine learning approaches to identify (segment) plant organs such as leaves, stems or ears [54]. These routines work on 3D features, such as surface feature histograms or point feature histograms [55, 56], that encode the surface structure. Machine learning algorithms such as Support Vector Machines (as provided by LibSVM [57]) need pre-labeled data for training and belong to the supervised learning methods. They can be applied if labeled data are available and use these training data to build a classification model. In contrast, methods that exploit the structure within the data are called unsupervised learning methods; they need no labels but are hard to optimize. 3D geometry features and clustering methods have been applied successfully to divide barley point clouds into logical groups of stem and leaf points [49] (see Fig. 4, part 2).
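An unsupervised variant can be sketched with eigenvalue-based geometry features and a simple 2-means clustering. The features (linearity and planarity of each point's neighborhood) and the synthetic stem/leaf cloud are assumptions for illustration, not the features of [49]. In the example, points on the leaf border have one-sided neighborhoods that look line-like, so some of them end up in the stem cluster; this illustrates why unsupervised methods are hard to optimize.

```python
import numpy as np
from scipy.spatial import cKDTree

def covariance_features(points, k=12):
    """Linearity and planarity from the eigenvalues of each point's k-neighbourhood covariance."""
    _, idx = cKDTree(points).query(points, k=k)
    feats = np.empty((len(points), 2))
    for i, nb in enumerate(idx):
        ev = np.linalg.eigvalsh(np.cov(points[nb].T))[::-1]  # l1 >= l2 >= l3
        l1 = max(ev[0], 1e-12)
        feats[i] = ((ev[0] - ev[1]) / l1, (ev[1] - ev[2]) / l1)
    return feats

def two_means(features, iterations=20):
    """Plain 2-means clustering, seeded with the most and the least linear point."""
    centers = features[[features[:, 0].argmax(), features[:, 0].argmin()]].copy()
    for _ in range(iterations):
        dists = np.linalg.norm(features[:, None] - centers[None], axis=2)
        labels = dists.argmin(axis=1)
        for c in range(2):
            if np.any(labels == c):
                centers[c] = features[labels == c].mean(axis=0)
    return labels  # 0 = cluster seeded with the most linear (stem-like) point

# Hypothetical cloud: a straight stem and a separate flat leaf patch
stem = np.column_stack([np.zeros(51), np.zeros(51), np.arange(51.0)])
gx, gy = np.meshgrid(np.arange(20.0, 50.0), np.arange(30.0))
leaf = np.column_stack([gx.ravel(), gy.ravel(), np.full(gx.size, 25.0)])
labels = two_means(covariance_features(np.vstack([stem, leaf])))
print("stem points labelled stem:", np.mean(labels[:51] == 0))
print("leaf points labelled leaf:", np.mean(labels[51:] == 1))
```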

By repeating the described workflow on measurements over time (4D), growth parameters such as growth curves at the plant and organ level can be derived. As 3D devices can differentiate between growth and movement, the diurnal cycle can be observed and compared with daily growth [6]. As growth is a direct indicator of stress, high-precision 3D measuring devices are well suited to detect stress by measuring changes in 3D shape [2] (see Fig. 4, part 3).
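The distinction between growth and movement can be illustrated with a tracked leaf mesh: rigid movement (and, approximately, bending) leaves the surface area unchanged, whereas growth changes it. This sketch assumes the same leaf has already been tracked between two time points with identical mesh topology (a hypothetical simplification) and reports the relative area change as a growth proxy and the centroid shift as a movement proxy.

```python
import numpy as np

def mesh_area(vertices, triangles):
    """Sum of triangle areas of a mesh given as (V, 3) vertices and (T, 3) indices."""
    a, b, c = (vertices[triangles[:, i]] for i in range(3))
    return float(0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1).sum())

def growth_and_movement(v_before, v_after, triangles):
    """Relative area change (growth proxy) and centroid shift (movement proxy) of a tracked leaf."""
    growth = mesh_area(v_after, triangles) / mesh_area(v_before, triangles) - 1.0
    shift = float(np.linalg.norm(v_after.mean(axis=0) - v_before.mean(axis=0)))
    return growth, shift

# Hypothetical flat leaf (2 x 3 units) and two later observations of it
leaf_t0 = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [2.0, 3.0, 0.0], [0.0, 3.0, 0.0]])
faces = np.array([[0, 1, 2], [0, 2, 3]])
angle = np.radians(20.0)
tilt = np.array([[1.0, 0.0, 0.0],
                 [0.0, np.cos(angle), -np.sin(angle)],
                 [0.0, np.sin(angle), np.cos(angle)]])
leaf_moved = leaf_t0 @ tilt.T + np.array([0.0, 0.0, 1.0])  # rotated and lifted, same size
leaf_grown = leaf_t0 * np.array([1.0, 1.1, 1.0])           # 10 % longer along y

print(growth_and_movement(leaf_t0, leaf_moved, faces))  # growth about 0, clear centroid shift
print(growth_and_movement(leaf_t0, leaf_grown, faces))  # growth about 0.1
```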

3D parameters on different scales

The following section gives an overview of the parameters described in the literature, grouped into four scales: “Single Plant”, “Miniplot”, “Experimental Field” and “Open Field”. The single plant scale, typical of laboratories, ranges from seedlings to fully grown plants but focuses on single plants or very small groups of plants. Here, high-resolution sensors (< mm) are used in a reproducible setup with the highest accuracy. Miniplots in greenhouses include production farms with fixed plant locations as well as high-throughput plant phenotyping facilities, where the plants stand on conveyor belts and are imaged in imaging cabinets. These setups are commonly used for research studies [58]. The experimental field scale describes measurements in the field with stationary sensors, for example on a tripod, or with slowly moving sensor platforms. The largest scale considered here is the open field. Sensors used at this scale are commonly mounted on UAV platforms. They provide a lower resolution (cm) but a high scan speed (> 50 Hz), which is essential when measuring during motion. The accuracy measurements (see Table 3) are based either on a linear correlation (R²) or on the MAPE [3].

Table 3.

Overview of plant traits that have been measured for the single plant scale, miniplot, experimental field and open field scale

| Scale | Trait | Plant | Sensor | Biological connection | Literature |
|---|---|---|---|---|---|
| Single plant scale | Plant height | Sugar beet | LT | Drought response | [3] |
| | Plant width | Sugar beet | LT | Drought response | [3] |
| | Root volume | Sugar beet | LT | Trait analysis | [11] |
| | Root surface | Sugar beet | LT | Trait analysis | [11] |
| | Root compactness | Sugar beet | LT | Trait analysis | [11] |
| | Leaf area | Barley | LT | Drought response | [3] |
| | Projected leaf area | Sugar beet | LT | Trait analysis | [11] |
| | Leaf width | Cotton | SfM | Growth analysis | [45] |
| | Leaf length | Cotton | SfM | Growth analysis | [45] |
| | Leaf movement | Arabidopsis | LT | Growth analysis | [6] |
| | Single leaf growth | Barley | LT | Growth analysis | [3] |
| | Number of leaves | Cabbage | SL | Trait analysis | [27] |
| | | Cucumber | SL | Trait analysis | [27] |
| | | Tomato | SL | Trait analysis | [27] |
| | Stem length/growth | Barley | LT | Growth analysis | [5] |
| | Calyx shape | Strawberry | SfM | Trait analysis | [59] |
| | Achene shape | Strawberry | SfM | Trait analysis | [59] |
| | Internode distance | Cabbage | SL | Trait analysis | [27] |
| | | Cucumber | SL | Trait analysis | [27] |
| | | Tomato | SL | Trait analysis | [27] |
| | Ear volume | Wheat | LT | Yield estimation | [54] |
| | Ear shape | Wheat | LT | Yield estimation | [54] |
| Miniplot | Plant height | Pepper | SfM | QTL analysis | [60] |
| | Leaf angle | Pepper | SfM | QTL analysis | [60] |
| | Leaf area | Rapeseed | LT | Growth analysis | [14] |
| | Proj. leaf area | Rapeseed | LT | Growth analysis | [14] |
| | Leaf angle | Maize | ToF | Trait analysis | [24] |
| | | Sorghum | ToF | Trait analysis | [24] |
| | | Soybean | SfM | Drought response | [61] |
| | Fruit detection | Tomato | LF | Trait analysis | [21] |
| Experimental field | Plant height/canopy height | Wheat | LT | Growth analysis | [13] |
| | Proj. canopy area | Cotton | TLS | Growth and yield | [62] |
| | Plant volume | Cotton | TLS | Growth and yield | [62] |
| | Leaf area index (LAI) | Maize, sorghum | SfM | Trait analysis | [63] |
| | Leaf area | Grapevine | SfM | Trait analysis | [64] |
| | | Peanut | LT | Water budget | [65] |
| | | Cowpea | LT | Water budget | [65] |
| | | Pearl millet | LT | Water budget | [65] |
| Open field | Plant height and canopy height | Maize | SfM | Growth analysis | [66] |
| | | Sorghum | SfM | Growth analysis | [66] |
| | | Eggplant | SfM | Biomass estimation | [67] |
| | | Tomato | SfM | Biomass estimation | [67] |
| | | Cabbage | SfM | Biomass estimation | [67] |

If possible, an error measurement is provided, as well as the plant type, the sensor and the biological connection as the purpose of the study

To define the different application scenarios at the single plant, miniplot, experimental field and open field scale, Table 3 provides an overview of the measured plants, traits and biological connections.

Multiple studies focus on scenarios with just a few plants in laboratories. Here, differentiating between individual organs is mostly not necessary. Non-complex parameters that are easy to measure, such as height, volume, number of leaves or projected leaf area, have been extracted with high precision [17]. A further step, which requires either modelling of the plant [45] or a sophisticated classifier working on the raw point cloud [54], enables differentiation between the individual organs. This can be used to calculate wheat ear volume for yield estimation [54] using the α-shape technique, or to measure stem parameters using cylinder fitting routines [3].

At the miniplot scale, which has similar prerequisites regarding resolution and accuracy to the single plant scale, recording speed is an additional demand, as it is essential for high-throughput phenotyping with automated greenhouses and conveyor systems. For trait and growth analysis, laser triangulation systems [14] are very common, but time-of-flight sensing [24] and structure from motion [64] approaches are also used, mostly because of their high recording speed, although a non-negligible amount of processing time is needed after the scan pass. In comparison to the single plant scale, the parameters assessed here are commonly non-complex, such as height or leaf area, with stem points neglected because of the lower resolution or the lower proportion of measured points.

Experimental field measurements concentrate on parameters such as plant or canopy height [13], volume [62] or leaf area index [63]. At this scale, terrestrial laser scanners are often used, as they provide a range of tens to hundreds of meters and a high resolution of a few millimeters [62]. Structure-from-motion approaches are used both on wheeled carrier vehicles with mounted cameras [64] and on UAVs. UAV-based measurements typically target easily accessible parameters such as plant height or canopy volume and can be used for growth analysis and biomass estimation [66, 67].
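Canopy height and canopy volume from UAV or vehicle-borne point clouds are often derived from a rasterized canopy height model. The sketch below is a simple 2.5D approximation under stated assumptions: the ground is flat at a known elevation `ground_z` (real pipelines usually subtract a digital terrain model), and the cell size is a hypothetical parameter. Because only the highest point per cell is kept, gaps below leaves are counted as filled, so the volume is an upper-envelope estimate.

```python
import numpy as np


def canopy_height_and_volume(points: np.ndarray, ground_z: float, cell: float = 0.05):
    """Rasterise points into a canopy height model (CHM) and integrate it.

    Returns (maximum canopy height, canopy volume). Each grid cell keeps the
    highest point above ground; the volume sums cell height * cell area.
    """
    ij = np.floor(points[:, :2] / cell).astype(np.int64)
    ij -= ij.min(axis=0)                                  # shift indices to start at 0
    nx, ny = ij.max(axis=0) + 1
    chm = np.full((nx, ny), -np.inf)
    np.maximum.at(chm, (ij[:, 0], ij[:, 1]), points[:, 2] - ground_z)
    heights = np.clip(chm[np.isfinite(chm)], 0.0, None)  # empty cells are skipped
    return float(heights.max()), float(heights.sum() * cell * cell)


if __name__ == "__main__":
    xs, ys = np.meshgrid(np.arange(20) * 0.05, np.arange(20) * 0.05)
    top = np.column_stack([xs.ravel(), ys.ravel(), np.full(xs.size, 1.0)])
    print(canopy_height_and_volume(top, ground_z=0.0, cell=0.1))
```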

Table 3 also lists the biological connection of the 3D parameters, with links to trait analysis, growth analysis, drought responses, water budget analysis, yield estimation, biomass estimation and QTL analysis (quantitative trait loci, [68]).

Drought responses can be described by comparing groups of plants with different access to water [3, 61]. Combining 3D measurements with gravimetric measurements of transpiration enables measuring the water budget and the transpiration rate over the course of a day at the single plant scale [65]. In these experiments, data from an accompanying non-destructive sensor are linked to 3D plant traits. Destructive yield measurements, in contrast, make it possible to link 3D traits to yield parameters such as thousand kernel weight or kernel number, as shown for wheat [54]. Similarly, the scan of a complete plant can be linked to fresh mass or biomass, even at the field scale [67]. QTL analysis identifies the genetic regions that are responsible for specific plant traits. 3D measuring helps to identify and describe traits that are linked to these regions [60] and to understand the genotype-phenotype interaction.
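Linking gravimetric data to 3D traits can be illustrated with a transpiration rate normalized by leaf area. The following sketch is in the spirit of the combination described for [65] but is not its implementation: it assumes every mass loss between weighings is transpired water (no soil evaporation, no irrigation, growth ignored), and that `leaf_area_cm2` comes from the 3D scan. The function name and units are illustrative assumptions.

```python
import numpy as np


def transpiration_rate(times_h, pot_mass_g, leaf_area_cm2):
    """Transpiration per unit leaf area between consecutive weighings.

    Assumes every mass loss is transpired water (no soil evaporation, no
    irrigation, growth ignored). Returns mg water per cm^2 leaf per hour.
    `leaf_area_cm2` may be a scalar or one value per interval.
    """
    t = np.asarray(times_h, dtype=float)
    m = np.asarray(pot_mass_g, dtype=float)
    water_loss_mg = -np.diff(m) * 1000.0                  # g -> mg, loss is positive
    return water_loss_mg / np.diff(t) / np.asarray(leaf_area_cm2, dtype=float)


if __name__ == "__main__":
    # Hypothetical hourly weighings of one pot with 100 cm^2 of scanned leaf area
    print(transpiration_rate([0, 1, 2], [1000.0, 999.0, 997.5], 100.0))
```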

Adding information to the 3D data

The phenotype, as the result of the interaction between genotype and environment, is expressed in numerous plant traits, not all of which appear as geometric differences. Therefore, other sensor types have been taken into account. RGB cameras are common in plant phenotyping and are used to extract traits related to size, shape and colour [69]. Multispectral or hyperspectral cameras are used to identify indicators or proxies in the non-visible spectrum to detect plant stress [70] or plant diseases [71]. Thermal cameras show temperature differences between plants or within a single plant [72].

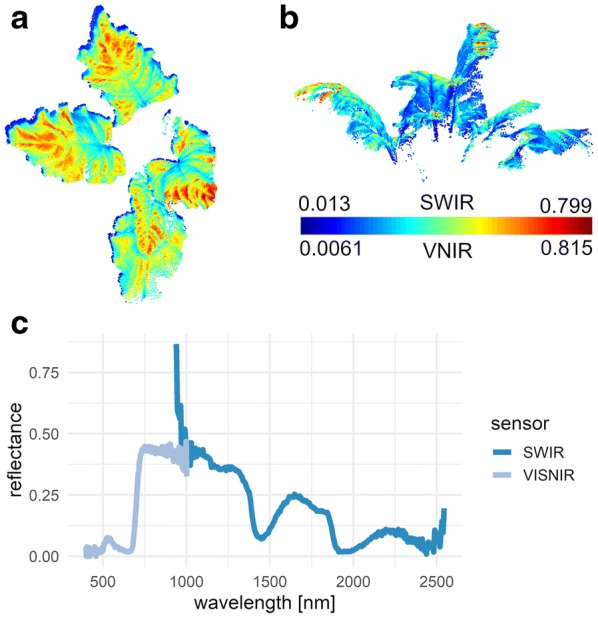

The measurements of these devices vary depending on the geometry of the plant surface. [73] showed a connection between high NDVI (normalized difference vegetation index [74]) values and the inclination angle of sugar beet leaves. Using the plant’s 3D information, the effect of different reflection angles with respect to the illumination source, the camera and the observed surface can be recorded [75]. To combine 3D and hyperspectral images, the camera system has to be geometrically modelled. The result is a combined 3D reflection model that merges the 3D geometry with the reflection information from the hyperspectral camera (see Fig. 5). Because accounting for this effect reduces the described error, future measurement hardware should ideally include such a correction internally, for example by properly modelling the optical ray path [76].
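The dependency of NDVI on leaf inclination can be examined once each 3D point carries reflectance values. The sketch below computes NDVI = (NIR − red) / (NIR + red) per point and averages it per inclination bin. It assumes per-point NIR and red reflectances from a combined 3D-hyperspectral model and per-point inclination angles already derived from the 3D geometry; the function names and the 15° bin width are hypothetical. It only exposes the geometric effect and does not correct it.

```python
import numpy as np


def ndvi(nir, red) -> np.ndarray:
    """Normalized difference vegetation index per point: (NIR - red) / (NIR + red)."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    return (nir - red) / (nir + red)


def mean_ndvi_by_inclination(ndvi_values, inclination_deg, bin_width: float = 15.0):
    """Mean NDVI per leaf inclination bin (0 deg = horizontal leaf surface).

    Returns {bin start in degrees: mean NDVI}; empty bins are omitted.
    """
    incl = np.asarray(inclination_deg, dtype=float)
    values = np.asarray(ndvi_values, dtype=float)
    n_bins = int(np.ceil(90.0 / bin_width))
    idx = np.minimum((incl // bin_width).astype(int), n_bins - 1)  # 90 deg joins last bin
    return {b * bin_width: float(values[idx == b].mean())
            for b in range(n_bins) if np.any(idx == b)}


if __name__ == "__main__":
    values = ndvi([0.50, 0.45, 0.30], [0.05, 0.06, 0.10])
    print(mean_ndvi_by_inclination(values, [5.0, 20.0, 80.0]))
```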

Fig. 5.

A combination of 3D point cloud and hyperspectral image data is possible by calibrating the sensor setup consisting of the 3D imaging sensor and the hyperspectral camera. The top (a) and side (b) views of a combined point cloud are shown for a combination of 3D and VISNIR data (911 nm, a) and of 3D and SWIR data (1509 nm, b). The VISNIR and the SWIR spectrum can be investigated at the same point in the 3D point cloud (c).
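Once the sensor setup is calibrated, attaching spectra to 3D points amounts to projecting each point into the spectral image. The sketch below assumes a simple pinhole (frame camera) model with intrinsic matrix `K` and pose `R`, `t`; the pushbroom cameras addressed in [75] need a line-scanning camera model instead, and occlusion between points (z-buffering) is ignored. The function names are hypothetical.

```python
import numpy as np


def project_points(points, K, R, t):
    """Pinhole projection: world points -> pixel coordinates (u, v) and depth."""
    cam = points @ R.T + t                 # world -> camera coordinates
    uvw = cam @ K.T                        # apply intrinsics
    uv = uvw[:, :2] / uvw[:, 2:3]          # perspective division
    return uv, cam[:, 2]


def sample_spectra(points, cube, K, R, t):
    """Attach the spectrum of the nearest pixel to every 3D point.

    `cube` is a (rows, cols, bands) image. Points behind the camera or outside
    the image get NaN. Occlusion between points is not handled.
    """
    uv, depth = project_points(points, K, R, t)
    rows_n, cols_n, bands = cube.shape
    cols = np.round(uv[:, 0]).astype(int)
    rows = np.round(uv[:, 1]).astype(int)
    valid = (depth > 0) & (cols >= 0) & (cols < cols_n) & (rows >= 0) & (rows < rows_n)
    spectra = np.full((len(points), bands), np.nan)
    spectra[valid] = cube[rows[valid], cols[valid]]
    return spectra


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
    cube = np.random.default_rng(0).random((100, 100, 4))  # synthetic 4-band image
    pts = np.array([[0.1, 0.2, 2.0], [0.0, 0.0, -1.0]])
    print(sample_spectra(pts, cube, K, np.eye(3), np.zeros(3)))
```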

A critical consideration on 3D scanning of plants

The experiment shown (see Fig. 2) and the extensive literature review (see Table 3) indicate that 3D measuring devices, and especially laser scanning devices, are reliable tools for plant parameterization in plant phenotyping. Existing invasive tools can be replaced and even exceeded in accuracy. Furthermore, an estimate of the required resolution for a laboratory or greenhouse experiment was given together with the resulting error measures. A MAPE of 5–10% was previously defined as acceptable for morphological-scale phenotyping, as this limit reflects the magnitude of errors already inherent in manual measurements and is low enough to distinguish changes in relevant traits between two imaging dates during development [45]. Even when the resolution and point accuracy were reduced to 15.0 mm in this experiment, the MAPE never exceeded this limit.
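The two error measures used in this review can be written as MAPE = (100/n) Σ |(mᵢ − rᵢ) / rᵢ| and RMSE = √((1/n) Σ (mᵢ − rᵢ)²), with measured values mᵢ and reference values rᵢ (for example, from a leaf meter). The following sketch implements both; the example leaf lengths are hypothetical and do not reproduce the experiment in Fig. 2.

```python
import numpy as np


def mape(measured, reference) -> float:
    """Mean absolute percentage error in percent, relative to the reference values."""
    m = np.asarray(measured, dtype=float)
    r = np.asarray(reference, dtype=float)
    return float(100.0 * np.mean(np.abs((m - r) / r)))


def rmse(measured, reference) -> float:
    """Root mean square error in the units of the input."""
    m = np.asarray(measured, dtype=float)
    r = np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean((m - r) ** 2)))


if __name__ == "__main__":
    scanner_cm = [10.4, 19.1, 14.8]      # hypothetical 3D-derived leaf lengths
    leaf_meter_cm = [10.0, 20.0, 15.0]   # hypothetical reference values
    err = mape(scanner_cm, leaf_meter_cm)
    print(f"MAPE {err:.2f}% (within 10%: {err <= 10.0}), RMSE {rmse(scanner_cm, leaf_meter_cm):.2f} cm")
```

MAPE is relative and therefore comparable across organs of different size, while RMSE keeps the unit of the trait; MAPE becomes unstable for very small reference values.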

A major advantage shared by all 3D measuring devices is that the point cloud represents the plant surface at a specific point in time. Because this is a very general representation of the plant surface rather than a single measurement, many different traits can be extracted later on. If an experiment focuses on leaf area and leaf inclination becomes relevant afterwards, this trait can still be calculated from the stored data and compared with the current experiment [77].
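Leaf inclination is a good example of a trait that can be computed later from a stored point cloud. A common approach, assumed here, estimates a surface normal per point from the principal component analysis of its nearest neighbours and converts it to an angle against the horizontal. The sketch below assumes NumPy and SciPy; the neighbourhood size `k` and the function names are hypothetical choices.

```python
import numpy as np
from scipy.spatial import cKDTree


def estimate_normals(points: np.ndarray, k: int = 10) -> np.ndarray:
    """Unit surface normals from PCA of each point's k nearest neighbours."""
    _, idx = cKDTree(points).query(points, k=k)
    nbrs = points[idx]                                    # (n, k, 3)
    centred = nbrs - nbrs.mean(axis=1, keepdims=True)
    cov = np.einsum("nki,nkj->nij", centred, centred) / k
    _, vecs = np.linalg.eigh(cov)                         # eigenvalues in ascending order
    return vecs[:, :, 0]                                  # eigenvector of smallest eigenvalue


def leaf_inclination_deg(normals: np.ndarray) -> np.ndarray:
    """Angle between surface and horizontal plane (0 = flat, 90 = vertical)."""
    nz = np.clip(np.abs(normals[:, 2]), 0.0, 1.0)         # normal sign is ambiguous
    return np.degrees(np.arccos(nz))


if __name__ == "__main__":
    xs, ys = np.meshgrid(np.arange(10) * 0.1, np.arange(10) * 0.1)
    tilted = np.column_stack([xs.ravel(), ys.ravel(), xs.ravel()])  # plane z = x
    print(leaf_inclination_deg(estimate_normals(tilted)).mean())
```

The neighbourhood size trades noise robustness against smoothing across leaf edges and curved surfaces.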

All 3D measuring methods share the problem that the complexity, and thus the amount of occlusion, increases with plant age. Occlusion can be reduced by using more viewpoints for each sensor. Nevertheless, it is always present regardless of the sensor, the number of viewpoints or the sensor setup, because at a given time the inner part of the plant is occluded by the plant (its leaves) itself. One solution could be MRI (magnetic resonance imaging) or radar systems, which perform volumetric measurements [78], at the cost of a more complex and expensive measuring setup. Depending on the measuring technique, the registration (fusion) of different views is rather difficult when wind occurs or when plants are rotated between single scans. Referencing then becomes impossible and the results lose quality. This holds for almost every 3D measuring technique unless imaging is performed in one shot from many different positions at the same time, as has already been published for tracking human motion [79].
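Why moving plants hamper the fusion of views becomes clear from a typical registration method. The sketch below implements point-to-point ICP (iterative closest point) with a least-squares rigid fit (Kabsch method), one common approach but not necessarily the one used in the cited systems. It assumes a good initial alignment and, crucially, a rigid object: leaves displaced by wind between scans violate this assumption, so no single rotation and translation can align the views. Function names are hypothetical, and no outlier rejection is included.

```python
import numpy as np
from scipy.spatial import cKDTree


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares R, t with R @ src_i + t close to dst_i (Kabsch method)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    return R, cd - R @ cs


def icp(src: np.ndarray, dst: np.ndarray, iterations: int = 50):
    """Point-to-point ICP: alternately match nearest neighbours and refit R, t."""
    tree = cKDTree(dst)
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    for _ in range(iterations):
        _, idx = tree.query(current)                      # closest dst point per src point
        R, t = best_rigid_transform(current, dst[idx])
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t   # compose with previous steps
    return R_total, t_total


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    view_a = rng.random((300, 3)) - 0.5                   # synthetic rigid object
    view_b = view_a + np.array([0.005, 0.0, 0.002])       # second view, slightly shifted
    R, t = icp(view_b, view_a)
    print(np.round(t, 4))
```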

Although 3D measuring devices provide a very high resolution, they can only measure visible objects. Plant roots can be imaged when they grow in a transparent medium such as agar. Root traits can be divided into static and dynamic traits, depending on whether they are measured at a single point in time (static) or at multiple points in time (dynamic) [80]. The latter can be related to growth and spatiotemporal changes in root characteristics, but only static traits can be measured with 3D devices, as the roots have to be taken from the soil, washed and then measured. One effect that has to be taken into account is refraction when measuring through different media.
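Refraction bends every measuring ray that crosses an interface, for example between air and a transparent growth medium, following Snell's law n₁ sin θ₁ = n₂ sin θ₂. The sketch below uses the standard vector form of Snell's law to compute the refracted ray direction. The refractive indices in the example (1.0 for air, about 1.33 for a water-like medium) are illustrative assumptions, and a full correction would have to trace each ray through container walls and medium.

```python
import numpy as np


def refract(direction, normal, n1: float, n2: float):
    """Refract a ray at an interface using the vector form of Snell's law.

    `direction` is the incoming ray; `normal` points back towards the incoming
    side (direction . normal < 0). Returns the unit refracted direction, or
    None on total internal reflection.
    """
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    eta = n1 / n2
    cos_i = -np.dot(n, d)
    sin2_t = eta ** 2 * (1.0 - cos_i ** 2)
    if sin2_t > 1.0:
        return None                                   # total internal reflection
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n


if __name__ == "__main__":
    theta = np.radians(30.0)                          # incidence angle in air
    ray = refract([np.sin(theta), 0.0, -np.cos(theta)], [0.0, 0.0, 1.0], 1.0, 1.33)
    print(np.degrees(np.arcsin(ray[0])))              # refraction angle in the medium
```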

In general, LT covers applications that need high resolution and accuracy in a rather small measuring volume, as is essential for organ-specific trait monitoring at the single plant scale. SfM, in contrast, covers most application scenarios in plant phenotyping across all scales, as its resolution and measuring volume depend only on the camera and the number of acquired images. Regardless of the sensor, the more data from different viewpoints are merged, the less occlusion remains in the resulting point cloud.
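How strongly the camera determines the achievable resolution can be estimated with the ground sampling distance, GSD = pixel pitch × object distance / focal length, for a nadir-looking pinhole camera over flat ground. The camera values in the example (4.4 µm pixels, 8.8 mm lens, 4000 px image width, 20 m flight height) are hypothetical. The GSD only bounds the detail in a single image; the density and accuracy of an SfM point cloud additionally depend on image overlap and surface texture.

```python
def ground_sampling_distance(pixel_pitch_m: float, distance_m: float,
                             focal_length_m: float) -> float:
    """Object-space size of one pixel for a nadir-looking pinhole camera."""
    return pixel_pitch_m * distance_m / focal_length_m


def footprint_width(gsd_m: float, image_width_px: int) -> float:
    """Width of the ground area covered by one image."""
    return gsd_m * image_width_px


if __name__ == "__main__":
    gsd = ground_sampling_distance(4.4e-6, 20.0, 8.8e-3)   # hypothetical UAV camera
    print(f"GSD {gsd * 100:.1f} cm, footprint {footprint_width(gsd, 4000):.1f} m")
```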

Summing up LT

With a resolution in the micrometer range, LT is well suited to resolve the smallest details. Its precise point clouds are a good input for machine learning methods that extract parameters of plant organs such as stem length or calyx shape. Nevertheless, the interaction between laser and plant tissue has to be taken into account when using measuring systems with active illumination, and laser triangulation in particular. Although laser scanning is usually regarded as non-penetrating, recent experiments have shown that the plant tissue below the cuticle as well as the laser color and intensity have a significant influence on the measuring result and its accuracy [81, 82]. Furthermore, the edge effect, i.e. measurements that hit partly the leaf and partly the background, can lead to outliers or completely wrong measurements [83].
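Isolated outliers, such as some of those caused by the edge effect, are often removed with a statistical filter similar in spirit to the statistical outlier removal filter in PCL [53]. The sketch below drops points whose mean distance to their `k` nearest neighbours exceeds the global mean by more than `std_ratio` standard deviations; both parameter values are hypothetical. Mixed measurements that lie close to the leaf surface are not isolated and may survive this filter.

```python
import numpy as np
from scipy.spatial import cKDTree


def statistical_outlier_filter(points: np.ndarray, k: int = 8,
                               std_ratio: float = 1.0) -> np.ndarray:
    """Drop points whose mean distance to their k neighbours is unusually large."""
    dists, _ = cKDTree(points).query(points, k=k + 1)   # first neighbour is the point itself
    mean_d = dists[:, 1:].mean(axis=1)
    keep = mean_d <= mean_d.mean() + std_ratio * mean_d.std()
    return points[keep]


if __name__ == "__main__":
    xs, ys = np.meshgrid(np.arange(10) * 0.1, np.arange(10) * 0.1)
    leaf = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(100)])
    noisy = np.vstack([leaf, [[0.45, 0.45, 5.0]]])       # one far-away outlier
    print(len(noisy), "->", len(statistical_outlier_filter(noisy)))
```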

Summing up SfM

SfM approaches offer fast acquisition with lightweight hardware, which makes them well suited for flying platforms imaging field trials. The more images are recorded, the better the resolution of the resulting point cloud. SfM approaches provide high accuracy (mm) [17] but are strongly affected by ambient illumination; light is problematic when it changes during or between consecutive measurements. Wind is a further problem, as the object moves between two consecutive recordings, which causes errors during the reconstruction process [84]. This can be reduced by using a high measuring repetition rate (> 50 Hz), but this increases the time needed for reconstruction (> 1 h). Recent research focuses on reducing the post-processing time [85], as this is a key capability for autonomous driving. Since autonomous driving is being strongly pushed forward, a large increase in the performance of reconstruction algorithms is expected.

Summing up SL, ToF, TLS and LF

SL, ToF, TLS and LF measurements have shown their applicability to the demands of plant phenotyping. Nevertheless, their accuracy and resolution have to be increased to meet the demands of high throughput plant phenotyping. In some prototype setups, these techniques are already the method of choice.

Further methods

In addition to the devices shown for 3D imaging of plants at the different scales, there are further devices, such as 3D measuring systems for the microscopic scale that use interferometry to localize the 3D position of proteins [86] or three-dimensional structured illumination microscopy to image plasmodesmata in plant cells [87]. At the laboratory scale, techniques such as volume carving [88] have been used to determine seed traits [89]. Magnetic resonance imaging (MRI) based techniques have been used for the 3D reconstruction of invisible structures [90] or, in combination with positron emission tomography (PET), to follow growth and carbon allocation in root systems [78]. Root imaging can also be performed with X-rays, a further technology that does not require the object of interest to be visible, to determine root length and angle [91]. Beyond the UAV scale, airborne methods such as airborne laser scanning [2] have been used to gather carbon stock information from 3D tree scans. Traits of trees have been measured for many years [92]. Traits such as the diameter at breast height (DBH) have been used to predict the yield of trees [93, 94], but crops and vegetables grow much faster than forest trees.

Opportunities and challenges

Reviewing the introduced traits and methods, the current challenge can be described as transferring the methods from the single plant scale to the field scale (experimental and open field). This requires a higher point cloud resolution, which in turn places demands on sensors and carrier platforms. Sensors and algorithms have to overcome plant movement (due to wind) and the large amount of occlusion, and must combine different sensors so that 3D information helps to correct the influence of geometry on radiometric measurements [73, 75]. Drones have to increase their positioning accuracy, as could be provided by RTK GPS [95] or by fusing on-board sensors for better localization [96]. Nevertheless, 3D measuring sensors show huge potential to measure, track and derive geometric traits of plants non-invasively at the different scales. Further research should focus on the definition of traits, for example how plant height or internode distance is measured, to enable comparisons of algorithms, plants and treatments among research groups and countries.

Concluding remarks

This review provides a general overview of 3D traits for plant phenotyping with respect to different 3D measuring techniques, the derived traits and biological use cases. A general processing pipeline for 3D use cases was explained and connected to the derivation of non-complex traits for the complete plant as well as of more complex traits at the organ level. For measurements over time, the generation of growth curves for monitoring organ development (4D) was introduced, together with their link to biological research questions. Sensor techniques for the different scales, from single plants to the field, were recapped and discussed.

This review gives an overview of the 3D measuring techniques used for plant phenotyping and introduces the 3D traits extracted so far for different plant types, together with their biological use cases.

Acknowledgements

Thanks to Heiner Kuhlmann for providing the datasets, Anne-Katrin Mahlein and Nelia Nause for proofreading, and Jan Behmann for support with the data fusion examples.

Abbreviations

- LT

laser triangulation

- SfM

structure from motion

- SL

structured light

- LF

light field

- ToF

time of flight

- NIR

near infrared range (700–1000 nm)

- SWIR

short wave infrared (1000–2500 nm)

- UAV

unmanned aerial vehicle

- CCD

charge-coupled device

- PSD

position sensitive device

- TLS

terrestrial laser scanning

- QTL

quantitative trait loci

- RMSE

root mean square error

- MAPE

mean absolute percentage error

- BBCH

a plant development scale

Authors’ contributions

SP is the sole author for this manuscript. He conceived the study, designed the experiments, analyzed results, and wrote the manuscript. The author read and approved the final manuscript.

Funding

This work was supported by the Institute of Sugar Beet Research, Göttingen, Germany.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Ethics approval and consent to participate

Not applicable.

Consent for publication

The author agreed to publish this manuscript.

Competing interests

The author declares that he has no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Walklate PJ. A laserscanning instrument for geometry measuring crop. Science. 1989;46:275–284. [Google Scholar]

- 2.Omasa K, Hosoi F, Konishi A. 3D lidar imaging for detecting and understanding plant responses and canopy structure. J Exp Bot. 2007;58(4):881–98. doi: 10.1093/jxb/erl142. [DOI] [PubMed] [Google Scholar]

- 3.Paulus S, Schumann H, Leon J, Kuhlmann H. A high precision laser scanning system for capturing 3D plant architecture and analysing growth of cereal plants. Biosyst Eng. 2014;121:1–11. [Google Scholar]

- 4.Godin C. Representing and encoding plant architecture: a review. Ann For Sci. 2000;57(5):413–438. [Google Scholar]

- 5.Paulus S, Dupuis J, Riedel S, Kuhlmann H. Automated analysis of barley organs using 3D laserscanning—an approach for high throughput phenotyping. Sensors. 2014;14:12670–12686. doi: 10.3390/s140712670. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Dornbusch T, Lorrain S, Kuznetsov D, Fortier A, Liechti R, Xenarios I, et al. Measuring the diurnal pattern of leaf hyponasty and growth in Arabidopsis—a novel phenotyping approach using laser scanning. Funct Plant Biol. 2012;39:860–869. doi: 10.1071/FP12018. [DOI] [PubMed] [Google Scholar]

- 7.Furbank RT, Tester M. Phenomics—technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011;16(12):635–644. doi: 10.1016/j.tplants.2011.09.005. [DOI] [PubMed] [Google Scholar]

- 8.Lou L, Liu Y, Shen M, Han J, Corke F, Doonan JH. Estimation of branch angle from 3D point cloud of plants. In: 2015 international conference on 3D vision. New York: IEEE; 2015.

- 9.Maphosa L, Thoday-Kennedy E, Vakani J, Phelan A, Badenhorst P, Slater A, et al. Phenotyping wheat under salt stress conditions using a 3D laser scanner. Israel J Plant Sci. 2016 [Google Scholar]

- 10.Dupuis J, Kuhlmann H. High-precision surface inspection: uncertainty evaluation within an accuracy range of 15 μm with triangulation-based laser line scanners. J Appl Geodesy. 2014;8(10):109–118. [Google Scholar]

- 11.Paulus S, Behmann J, Mahlein AK, Plümer L, Kuhlmann H. Low-cost 3D systems—well suited tools for plant phenotyping. Sensors. 2014;2:3001–3018. doi: 10.3390/s140203001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Dupuis J, Paulus S, Behmann J, Plümer L, Kuhlmann H. A multi-resolution approach for an automated fusion of different low-cost 3D sensors. Sensors. 2014;14:7563–7579. doi: 10.3390/s140407563. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Virlet N, Sabermanesh K, Sadeghi-Tehran P, Hawkesford MJ. Field scanalyzer: an automated robotic field phenotyping platform for detailed crop monitoring. Funct Plant Biol. 2017;44(1):143. doi: 10.1071/FP16163. [DOI] [PubMed] [Google Scholar]

- 14.Kjaer K, Ottosen CO. 3D laser triangulation for plant phenotyping in challenging environments. Sensors. 2015;15(6):13533–13547. doi: 10.3390/s150613533. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hartley R, Zisserman A. Multiple view geometry in computer vision. 2. New York: Cambridge University Press; 2003. [Google Scholar]

- 16.Tsai RY, Lenz RK. Real time versatile robotics hand/eye calibration using 3D machine vision. In: Proceedings 1988 IEEE international conference on robotics and automation. IEEE Comput. Soc. Press; 1988. p. 554–61.

- 17.Rose JC, Paulus S, Kuhlmann H. Accuracy analysis of a multi-view stereo approach for phenotyping of tomato plants at the organ level. Sensors. 2015;15:9651–9665. doi: 10.3390/s150509651. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Geng J. Structured-light 3D surface imaging: a tutorial. Adv Opt Photonics. 2011;3(2):128. [Google Scholar]

- 19.Zhang S. High-speed 3D shape measurement with structured light methods: a review. Opt Lasers Eng. 2018;106:119–131. [Google Scholar]

- 20.Li L, Schemenauer N, Peng X, Zeng Y, Gu P. A reverse engineering system for rapid manufacturing of complex objects. Robot Comput Integr Manuf. 2002;18(1):53–67. [Google Scholar]

- 21.Polder G, Hofstee JW. Phenotyping large tomato plants in the greenhouse using a 3D light-field camera. St. Joseph: American Society of Agricultural and Biological Engineers; 2014. [Google Scholar]

- 22.Dario Piatti DSaFRDSe Fabio Remondino . TOF range-imaging cameras. 1. Berlin: Springer; 2013. [Google Scholar]

- 23.Corti A, Giancola S, Mainetti G, Sala R. A metrological characterization of the Kinect V2 time-of-flight camera. Robot Auton Syst. 2016;75:584–594. [Google Scholar]

- 24.Thapa S, Zhu F, Walia H, Yu H, Ge Y. A novel LiDAR-based instrument for high-throughput, 3D measurement of morphological traits in maize and sorghum. Sensors. 2018;18(4):1187. doi: 10.3390/s18041187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Vezočnik R, Ambrožič T, Sterle O, Bilban G, Pfeifer N, Stopar B. Use of terrestrial laser scanning technology for long term high precision deformation monitoring. Sensors. 2009;9(12):9873–9895. doi: 10.3390/s91209873. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Franca JGDM, Gazziro MA, Ide AN, Saito JH. A 3D scanning system based on laser triangulation and variable field of view. In: IEEE international conference on image processing 2005. New York: IEEE; 2005.

- 27.Nguyen T, Slaughter D, Max N, Maloof J, Sinha N. Structured light-based 3D reconstruction system for plants. Sensors. 2015;15(8):18587–18612. doi: 10.3390/s150818587. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Wu C. Towards linear-time incremental structure from motion. In: 2013 international conference on 3D vision. New York: IEEE; 2013.

- 29.Zennaro S, Munaro M, Milani S, Zanuttigh P, Bernardi A, Ghidoni S, et al. Performance evaluation of the 1st and 2nd generation Kinect for multimedia applications. In: 2015 IEEE international conference on multimedia and expo (ICME). New York: IEEE; 2015. p. 1–6.

- 30.May S, Werner B, Surmann H, Pervolz K. 3D time-of-flight cameras for mobile robotics. In: 2006 IEEE/RSJ international conference on intelligent robots and systems. New York: IEEE; 2006.

- 31.Ihrke I, Restrepo J, Mignard-Debise L. Principles of light field imaging: briefly revisiting 25 years of research. IEEE Signal Process Mag. 2016;33(5):59–69. [Google Scholar]

- 32.Tao MW, Hadap S, Malik J, Ramamoorthi R. Depth from combining defocus and correspondence using light-field cameras. In: The IEEE international conference on computer vision (ICCV); 2013.

- 33.Disney M. Terrestrial LiDAR : a three-dimensional revolution in how we look at trees. New Phytol. 2018;222(4):1736–1741. doi: 10.1111/nph.15517. [DOI] [PubMed] [Google Scholar]

- 34.Haddad NA. From ground surveying to 3D laser scanner: a review of techniques used for spatial documentation of historic sites. J King Saud Univ Eng Sci. 2011;23(2):109–118. [Google Scholar]

- 35.Bylesjö M, Segura V, Soolanayakanahally RY, Rae AM, Trygg J, Gustafsson P, et al. LAMINA: a tool for rapid quantification of leaf size and shape parameters. BMC Plant Biol. 2008;8:82. doi: 10.1186/1471-2229-8-82. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Sanz-Cortiella R, Llorens-Calveras J, Escolà A, Arnó-Satorra J, Ribes-Dasi M, Masip-Vilalta J, et al. Innovative LIDAR 3D dynamic measurement system to estimate fruit-tree leaf area. Sensors. 2011;11(6):5769–5791. doi: 10.3390/s110605769. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Rady MM. Effect of 24-epibrassinolide on growth, yield, antioxidant system and cadmium content of bean (Phaseolus vulgaris L.) plants under salinity and cadmium stress. Sci Hortic. 2011;129(2):232–237. [Google Scholar]

- 38.GmbH HM. Technical data perceptron ScanWorks V5. 2015. http://www.hexagonmi.com. Accessed 29 Aug 2019.

- 39.Wang H, Zhang W, Zhou G, Yan G, Clinton N. Image-based 3D corn reconstruction for retrieval of geometrical structural parameters. Int J Remote Sens. 2009;30(20):5505–5513. [Google Scholar]

- 40.Zheng G, Moskal LM. Retrieving leaf area index (LAI) using remote sensing: theories, methods and sensors. Sensors. 2009;9(4):2719–2745. doi: 10.3390/s90402719. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Xiong D, Wang D, Liu X, Peng S, Huang J, Li Y. Leaf density explains variation in leaf mass per area in rice between cultivars and nitrogen treatments. Ann Bot. 2016;117(6):963–971. doi: 10.1093/aob/mcw022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Zadoks JC, Chang TT, Konzak CF. A decimal code for the growth stages of cereals. Weed Res. 1974;14(6):415–421. [Google Scholar]

- 43.Minervini M, Abdelsamea MM, Tsaftaris SA. Image-based plant phenotyping with incremental learning and active contours. Ecol Inf. 2014;23:35–48. [Google Scholar]

- 44.Scharr H, Minervini M, Fischbach A, Tsaftaris SA. Annotated image datasets of rosette plants. 2014.

- 45.Paproki A, Sirault X, Berry S, Furbank R, Fripp J. A novel mesh processing based technique for 3D plant analysis. BMC Plant Biol. 2012;12(1):63. doi: 10.1186/1471-2229-12-63. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Vos J, Evers JB, Buck-Sorlin GH, Andrieu B, Chelle M, de Visser PHB. Functional-structural plant modelling: a new versatile tool in crop science. J Exp Bot. 2010;61(8):2101–15. doi: 10.1093/jxb/erp345. [DOI] [PubMed] [Google Scholar]

- 47.Sodhi P, Vijayarangan S, Wettergreen D. In-field segmentation and identification of plant structures using 3D imaging. In: 2017 IEEE/RSJ international conference on intelligent robots and systems (IROS). New York: IEEE; 2017.

- 48.Lin YW, Ruifang Z, Pujuan S, Pengfei W. Segmentation of crop organs through region growing in 3D space. In: 2016 Fifth international conference on agro-geoinformatics (Agro-Geoinformatics). 2016. p. 1–6.

- 49.Wahabzada M, Paulus S, Kerstin C, Mahlein AK. Automated interpretation of 3D laserscanned point clouds for plant organ segmentation. BMC Bioinform. 2015;16:248. doi: 10.1186/s12859-015-0665-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Wu S, Wen W, Xiao B, Guo X, Du J, Wang C, et al. An accurate skeleton extraction approach from 3D point clouds of maize plants. Front Plant Sci. 2019 doi: 10.3389/fpls.2019.00248. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Frasson RPDM, Krajewski WF. Three-dimensional digital model of a maize plant. Agric For Meteorol. 2010;150(3):478–488. [Google Scholar]

- 52.Bradski G. The OpenCV library. Dr Dobb’s Journal of Software Tools. 2000.

- 53.Rusu RB, Cousins S. 3D is here: Point Cloud Library (PCL). In: 2011 IEEE international conference on robotics and automation. New York: IEEE; 2011. p. 1–4.

- 54.Paulus S, Dupuis J, Mahlein AK, Kuhlmann H. Surface feature based classification of plant organs from 3D laserscanned point clouds for plant phenotyping. BMC Bioinform. 2013;13:238. doi: 10.1186/1471-2105-14-238. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Rusu RB, Marton ZC, Blodow N, Beetz M, Systems IA, München TU. Persistent point feature histograms for 3d point clouds. In: In Proceedings of the 10th international conference on intelligent autonomous systems (IAS-10). 2008. p. 1–10.

- 56.Rusu RB, Blodow N, Beetz M. Fast Point Feature Histograms (FPFH) for 3D registration. In: Proceedings of the IEEE international conference on robotics and automation (ICRA), Kobe, Japan. 2009. p. 3212–7.

- 57.Chang CC, Lin CJ. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol. 2011;2:27. [Google Scholar]

- 58.Camargo AV, Mackay I, Mott R, Han J, Doonan JH, Askew K, et al. Functional mapping of quantitative trait loci (QTLs) associated with plant performance in a wheat magic mapping population. Front Plant Sci. 2018;9:887. doi: 10.3389/fpls.2018.00887. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.He JQ, Harrison RJ, Li B. A novel 3D imaging system for strawberry phenotyping. Plant Methods. 2017;13(1):93. doi: 10.1186/s13007-017-0243-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.van der Heijden G, Song Y, Horgan G, Polder G, Dieleman A, Bink M, et al. SPICY: towards automated phenotyping of large pepper plants in the greenhouse. Funct Plant Biol. 2012;39(11):870. doi: 10.1071/FP12019. [DOI] [PubMed] [Google Scholar]

- 61.Biskup B, Scharr H, Schurr U, Rascher U. A stereo imaging system for measuring structural parameters of plant canopies. Plant Cell Environ. 2007;30(10):1299–308. doi: 10.1111/j.1365-3040.2007.01702.x. [DOI] [PubMed] [Google Scholar]

- 62.Sun S, Li C, Paterson AH, Jiang Y, Xu R, Robertson JS, et al. In-field high throughput phenotyping and cotton plant growth analysis using LiDAR. Front Plant Sci. 2018;9:16. doi: 10.3389/fpls.2018.00016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Shafiekhani A, Kadam S, Fritschi F, DeSouza G. Vinobot and vinoculer: two robotic platforms for high-throughput field phenotyping. Sensors. 2017;17(12):214. doi: 10.3390/s17010214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Klodt M, Herzog K, Töpfer R, Cremers D. Field phenotyping of grapevine growth using dense stereo reconstruction. BMC Bioinform. 2015;16(1):143. doi: 10.1186/s12859-015-0560-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Vadez V, Kholová J, Hummel G, Zhokhavets U, Gupta SK, Hash CT. LeasyScan: a novel concept combining 3D imaging and lysimetry for high-throughput phenotyping of traits controlling plant water budget. J Exp Bot. 2015;66(18):5581–5593. doi: 10.1093/jxb/erv251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Shi Y, Thomasson JA, Murray SC, Pugh NA, Rooney WL, Shafian S, et al. Unmanned aerial vehicles for high-throughput phenotyping and agronomic research. PLoS ONE. 2016;11(7):e0159781. doi: 10.1371/journal.pone.0159781. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Moeckel T, Dayananda S, Nidamanuri R, Nautiyal S, Hanumaiah N, Buerkert A, et al. Estimation of vegetable crop parameter by multi-temporal UAV-borne images. Remote Sens. 2018;10(5):805. [Google Scholar]

- 68.Liu BH. Statistical genomics. Boca Raton: CRC Press; 2017. [Google Scholar]

- 69.Minervini M, Scharr H, Tsaftaris S. Image analysis: the new bottleneck in plant phenotyping [applications corner] IEEE Signal Process Mag. 2015;32(4):126–131. [Google Scholar]

- 70.Rumpf T, Mahlein AK, Steiner U, Oerke EC, Dehne HW, Plümer L. Early detection and classification of plant diseases with Support Vector Machines based on hyperspectral reflectance. Comput Electron Agric. 2010;74(1):91–99. [Google Scholar]

- 71.Mahlein AK. Plant disease detection by imaging sensors—parallels and specific demands for precision agriculture and plant phenotyping. Plant Dis. 2016;100(2):241–251. doi: 10.1094/PDIS-03-15-0340-FE. [DOI] [PubMed] [Google Scholar]

- 72.Jones HG, Serraj R, Loveys BR, Xiong L, Wheaton A, Price AH. Thermal infrared imaging of crop canopies for the remote diagnosis and quantification of plant responses to water stress in the field. Funct Plant Biol. 2009;36(11):978. doi: 10.1071/FP09123. [DOI] [PubMed] [Google Scholar]

- 73.Behmann J, Mahlein A-K, Paulus S, Dupuis J, Kuhlmann H, Oerke E-C, et al. Generation and application of hyperspectral 3D plant models: methods and challenges. Mach Vis Appl. 2015;27(5):611–624. [Google Scholar]

- 74.Bannari A, Morin D, Bonn F, Huete AR. A review of vegetation indices. Remote Sens Rev. 1995;13(1–2):95–120. [Google Scholar]

- 75.Behmann J, Mahlein AK, Paulus S, Kuhlmann H, Oerke EC, Plümer L. Calibration of hyperspectral close-range pushbroom cameras for plant phenotyping. ISPRS J Photogramm Remote Sens. 2015;106:172–182. [Google Scholar]

- 76.de Visser PHB, Buck-Sorlin GH, van der Heijden GWAM. Optimizing illumination in the greenhouse using a 3D model of tomato and a ray tracer. Front Plant Sci. 2014;5:48. doi: 10.3389/fpls.2014.00048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Gibbs JA, Pound M, French AP, Wells DM, Murchie E, Pridmore T. Approaches to three-dimensional reconstruction of plant shoot topology and geometry. Funct Plant Biol. 2017;44(1):62. doi: 10.1071/FP16167. [DOI] [PubMed] [Google Scholar]

- 78.Jahnke S, Menzel MI, van Dusschoten D, Roeb GW, Bühler J, Minwuyelet S, et al. Combined MRI-PET dissects dynamic changes in plant structures and functions. Plant J. 2009;59(4):634–644. doi: 10.1111/j.1365-313X.2009.03888.x. [DOI] [PubMed] [Google Scholar]

- 79.Joo H, Liu H, Tan L, Gui L, Nabbe B, Matthews I, et al. Panoptic studio: a massively multiview system for social motion capture. In: 2015 IEEE international conference on computer vision (ICCV). New York: IEEE; 2015. p. 1–9.

- 80.Clark RT, MacCurdy RB, Jung JK, Shaff JE, McCouch SR, Aneshansley DJ, et al. Three-dimensional root phenotyping with a novel imaging and software platform. Plant Physiol. 2011;156(2):455–465. doi: 10.1104/pp.110.169102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Paulus S, Eichert T, Goldbach HE, Kuhlmann H. Limits of active laser triangulation as an instrument for high precision plant imaging. Sensors. 2014;14:2489–2509. doi: 10.3390/s140202489. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Dupuis J, Paulus S, Mahlein AK, Kuhlmann Eichert T. The impact of different leaf surface tissues on active 3D laser triangulation measurements. Photogrammetrie-Fernerkundung-Geoinformation. 2015;2015(6):437–447. [Google Scholar]

- 83.Klapa P, Mitka B. Edge effect and its impact upon the accuracy oF 2d and 3d modelling using laser scanning. Geomat Landmanag Landsc. 2017;1:25–33. [Google Scholar]

- 84.Jay S, Rabatel G, Hadoux X, Moura D, Gorretta N. In-field crop row phenotyping from 3D modeling performed using structure from motion. Comput Electron Agric. 2015;110:70–77. [Google Scholar]

- 85.Cao M, Jia W, Lv Z, Li Y, Xie W, Zheng L, et al. Fast and robust feature tracking for 3D reconstruction. Opt Laser Technol. 2019;110:120–128. [Google Scholar]

- 86. Shtengel G, Galbraith JA, Galbraith CG, Lippincott-Schwartz J, Gillette JM, Manley S, et al. Interferometric fluorescent super-resolution microscopy resolves 3D cellular ultrastructure. Proc Natl Acad Sci. 2009;106(9):3125–3130. doi: 10.1073/pnas.0813131106.

- 87. Fitzgibbon J, Bell K, King E, Oparka K. Super-resolution imaging of plasmodesmata using three-dimensional structured illumination microscopy. Plant Physiol. 2010;153(4):1453–1463. doi: 10.1104/pp.110.157941.

- 88. Scharr H, Briese C, Embgenbroich P, Fischbach A, Fiorani F, Müller-Linow M. Fast high resolution volume carving for 3D plant shoot reconstruction. Front Plant Sci. 2017;8:1680. doi: 10.3389/fpls.2017.01680.

- 89. Jahnke S, Roussel J, Hombach T, Kochs J, Fischbach A, Huber G, et al. phenoSeeder – a robot system for automated handling and phenotyping of individual seeds. Plant Physiol. 2016;172(3):1358–1370. doi: 10.1104/pp.16.01122.

- 90. Schulz H, Postma JA, van Dusschoten D, Scharr H, Behnke S. 3D reconstruction of plant roots from MRI images. In: International conference on computer vision theory and applications; 2012. p. 1–9.

- 91. Flavel RJ, Guppy CN, Rabbi SMR, Young IM. An image processing and analysis tool for identifying and analysing complex plant root systems in 3D soil using non-destructive analysis: Root1. PLoS ONE. 2017;12(5):e0176433. doi: 10.1371/journal.pone.0176433.

- 92. Hopkinson C, Chasmer L, Young-Pow C, Treitz P. Assessing forest metrics with a ground-based scanning lidar. Can J For Res. 2004;34(3):573–583.

- 93. Murphy G. Determining stand value and log product yields using terrestrial lidar and optimal bucking: a case study. J For. 2008;106(6):317–324.

- 94. Malhi Y, Jackson T, Bentley LP, Lau A, Shenkin A, Herold M, et al. New perspectives on the ecology of tree structure and tree communities through terrestrial laser scanning. Interface Focus. 2018;8(2):20170052. doi: 10.1098/rsfs.2017.0052.

- 95. Zimmermann F, Eling C, Klingbeil L, Kuhlmann H. Precise positioning of UAVs: dealing with challenging RTK-GPS measurement conditions during automated UAV flights. ISPRS Ann Photogramm Remote Sens Spatial Inf Sci. 2017;IV-2/W3:95–102.

- 96. Benini A, Mancini A, Longhi S. An IMU/UWB/vision-based extended Kalman filter for mini-UAV localization in indoor environment using 802.15.4a wireless sensor network. J Intell Robot Syst. 2012;70(1–4):461–476.

Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.