Abstract

Knowledge integration is an important aspect of education. In clinical education, there is an emphasis on integrating basic medical science with clinical practice to provide a higher order of comprehension for future physicians. Also important in medical education is the promotion and development of professional behaviors (ie, teamwork and interpersonal professional behavior). We designed and implemented a weekly 2-hour active learning activity for first-year preclinical medical students to foster knowledge integration and promote professional development. As part of our case-based curriculum, we used a small-group active learning approach involving 3 stages: concept mapping, student peer review, and student group evaluation. Specific learning objectives and behavioral outcomes were designed to focus the learning activities. Rubrics were designed to (1) assess learners' group-generated concept maps, (2) assess the effectiveness of student peer review, and (3) evaluate group dynamics. In addition to assessment data from the rubrics, course evaluations from participating students were collected. Analysis of rubric assessments and student evaluation data indicated that students perceived the activity as supporting critical thinking and teamwork. Furthermore, concept map scores improved significantly between the first and last case, suggesting that student groups were increasingly integrating basic science and clinical concepts. Our concept map-based active learning approach achieved our designated objectives and outcomes.

Keywords: Group-developed concept mapping, peer review, interprofessional collaboration, collaborative learning, faculty facilitation

Introduction/Background

Active learning emphasizes active participation in learning and self-awareness of the learning process.1,2 A current trend in medical education is to move away from traditional passive learning environments toward more active and engaging learning exercises. Didactic delivery in medical curricula tends to promote rote memorization, with little student participation and minimal use of critical thinking. One method to promote active and meaningful learning is concept mapping.3-5 Concept mapping is a teaching and learning strategy in which students connect knowledge domains to demonstrate a deeper understanding of the relationship between theory and practice.5 Concept maps consist of concepts (also referred to as knowledge domains or nodes) that are linked together using linking words or phrases to create propositions.5 Through the process of concept map construction, learners must intentionally link and differentiate concepts, or knowledge domains, in a hierarchical structure. The resulting product is a graphical representation of knowledge integrated from previous experience and newly acquired information.
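The structure described above, concepts joined by linking phrases into propositions, can be sketched as a small data model. This Python sketch is illustrative only: the class names and the diabetes-related example propositions are hypothetical and are not taken from the course materials or from the CmapTools file format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Proposition:
    """A concept / linking phrase / concept unit that reads as a short statement."""
    source: str
    linking_phrase: str
    target: str

    def as_sentence(self) -> str:
        return f"{self.source} {self.linking_phrase} {self.target}"


@dataclass
class ConceptMap:
    """Concepts (nodes) plus the propositions (labeled links) that join them."""
    concepts: set[str] = field(default_factory=set)
    propositions: list[Proposition] = field(default_factory=list)

    def link(self, source: str, linking_phrase: str, target: str) -> Proposition:
        # Adding a proposition also registers both of its concepts as nodes.
        self.concepts.update((source, target))
        prop = Proposition(source, linking_phrase, target)
        self.propositions.append(prop)
        return prop


# Hypothetical example propositions (not from the course cases)
cmap = ConceptMap()
cmap.link("type 1 diabetes", "results from loss of", "insulin secretion")
cmap.link("insulin secretion", "is lost through destruction of", "pancreatic beta cells")
for prop in cmap.propositions:
    print(prop.as_sentence())
```

Because each proposition reads as a sentence, the quality of a map can be judged proposition by proposition, which is where the choice of linking words matters.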

In clinical practice, physicians need to acquire various pieces of information and integrate this new information with previous experience to provide proper patient care, thereby fulfilling the National Board of Osteopathic Medical Examiners (NBOME) competency domain 4 of evidence-based medicine (http://www.nbome.org/Content/Flipbooks/FOMCD/index.html#p=28). One way to help medical students achieve this competency is to have them practice integrating medical science with clinical practice concepts using concept mapping.3,6 Concept mapping has been used in medical education to promote both knowledge integration3,7-11 and assessment.10,12,13

Along with knowledge integration, another important aspect of modern health care is collaborative, team-based care. According to the American Medical Association, physician-led team-based care is the most effective way to maximize the skill sets of health care professionals. The significance of and need for collaboration in a health care environment, as well as the importance of knowledge integration for clinical reasoning, were highlighted by Torre et al.9 These authors discussed how collaboration improves learning outcomes and fosters knowledge integration, and they described group concept mapping as an active learning experience. Our goal was to implement a learning exercise that would actively engage first-year medical students working in small groups to promote critical thinking, knowledge integration, and professional collaboration within a single course. With this goal, we defined the following 4 learning objectives. Students should be able to

Identify and integrate basic science and clinical concepts;

Develop critical thinking skills;

Develop and demonstrate teamwork and professional interaction skills;

Assess peer-developed concept maps to increase content comprehension and improve feedback skills.

The purpose of this study is to report student course evaluation results and quantitative changes in concept map scores that support achievement of the learning objectives.

Methods

Participants

Two independent cohorts of first-year preclinical medical students (matriculating classes of 2021 and 2022) participated in small-group case-based learning using concept maps. Each cohort was randomly divided into groups of 4 to 6 learners, for a total of 49 student groups (24 in 2021 and 25 in 2022) participating in the case-based course during the 2-year study (Table 1). Each team was assigned a group leader, who was responsible for submitting the completed concept map to the instructors and for completing the peer review rubric on behalf of the group. Students also held roles within the group that rotated weekly:

A scribe (learner responsible for constructing the map in the CmapTools program: https://cmap.ihmc.us/cmaptools/);

A timekeeper (learner responsible for keeping the team on task and ensuring that it finished on time);

A discussion leader (learner responsible for ensuring equal participation of all members);

A case presenter (learner responsible for presenting the clinical case to the faculty).

Table 1.

Cohort group and concept map number.

| | 2021 cohort | 2022 cohort |
|---|---|---|
| Student groups | 24 | 25 |
| Concept maps/group | 11 | 8 |
| Total maps generated | 264 | 200 |

The student groups operated independently with minimal supervision by faculty facilitators. This was deliberate, primarily to avoid interfering with group dynamics and to encourage students to take ownership of their own learning.

Learning session outline

Between 8 and 11 concept map sessions were designed for preclinical first-year medical students as part of the case-based learning component of the principles and practices of osteopathic medicine course (Table 1). The first session was an introduction and training for the learners, covering the learning theory behind concept mapping, its use in medical training, its value in enhancing critical thinking, peer review feedback, and use of the course rubrics. The remaining sessions involved active learning: developing concept maps on topics that aligned with the didactic lecture portion of the curriculum. Each 110-minute session was divided as follows:

0 to 75 minutes: group construction of concept map;

75 to 95 minutes: peer review of concept map;

95 to 100 minutes: student evaluation of their group;

100 to 110 minutes: session wrap-up (overall and self-reflective).

Students received a clinical case the week before the session so they could review it and become familiar with the relevant medical science and clinical practice concepts. Each case included 30 to 35 concepts, divided roughly equally between medical sciences and clinical practice. Providing concept terms for map construction without restricting the overall structure of the map has been shown to elicit more complex maps than providing no concepts.14 In addition, giving student groups freedom over map structure with the given concepts promotes higher order thinking, as opposed to the rote learning associated with fill-in-the-blank maps.15 Clinical cases and concepts were created collaboratively by faculty from clinical and biomedical science backgrounds. Faculty also created "expert" concept maps as a comparative tool for discussion.

During the first 75 minutes of each session, the groups collaboratively built the concept map using the provided concepts. All student groups used the CmapTools software to build their maps, which simplified submission of the maps to faculty. Next, pairs of randomly assigned groups exchanged maps for peer review, using the same faculty-developed rubric used for grading, on which students had been trained in the first session. The paired groups shared their feedback with each other to help improve the maps. Each member of the group then filled out a group evaluation that gave insight into group dynamics and the group's ability to collaborate and function cohesively. Finally, in a wrap-up session, the faculty facilitators shared the "expert" map created by clinical and biomedical faculty, discussed terms or connections the learners may have struggled with, and addressed any misconceptions.
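The random pairing of groups for map exchange can be sketched as follows. This is a hypothetical helper, not the procedure used in the course. The text does not say how an odd number of groups (such as the 25 groups in the 2022 cohort) was handled, so the sketch assumes that the last 3 groups review one another in a cycle.

```python
import random


def assign_peer_reviewers(groups, seed=None):
    """Map each group to the group whose concept map it will review (hypothetical helper)."""
    if len(groups) < 2:
        raise ValueError("at least 2 groups are needed to exchange maps")
    rng = random.Random(seed)
    order = list(groups)
    rng.shuffle(order)
    reviews = {}
    # With an odd count, hold back the last 3 groups for a review cycle (assumption).
    paired_end = len(order) - 3 if len(order) % 2 else len(order)
    for i in range(0, paired_end, 2):
        a, b = order[i], order[i + 1]
        reviews[a] = b  # a reviews b's map
        reviews[b] = a  # and b reviews a's map
    if len(order) % 2:
        a, b, c = order[-3:]
        reviews.update({a: b, b: c, c: a})
    return reviews


# Example: 25 groups, as in the 2022 cohort (group names are placeholders)
reviews = assign_peer_reviewers([f"Group {i}" for i in range(1, 26)], seed=2022)
for reviewer, reviewed in sorted(reviews.items()):
    print(f"{reviewer} reviews {reviewed}")
```

Seeding the generator makes a given week's assignment reproducible, and every map is reviewed exactly once whether the number of groups is even or odd.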

Assessment

The student groups were assessed weekly by faculty on all components of the learning activity, using specific rubrics (Figures 1 and 2) on which students had been trained during the first session. Weekly point scores were based on concept map scoring (50%), peer review feedback scoring (30%), and group evaluation/participation (20%).
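The weekly weighting can be expressed as a short calculation. This sketch assumes that each component is first converted to a fraction of its maximum and then combined linearly. The maxima of 12 points (4 criteria × 3 points) and 3 points (1 criterion × 3 points) follow from the rubric scales described in this section, while the scale of the group evaluation is not reported, so it is assumed here to be supplied as a fraction between 0 and 1. The function name and parameters are hypothetical.

```python
WEIGHTS = {"concept_map": 0.50, "peer_review": 0.30, "group_evaluation": 0.20}


def weekly_score(concept_map_points, peer_review_points, group_evaluation_fraction,
                 concept_map_max=12, peer_review_max=3):
    """Return a weighted weekly score on a 0-100 scale (hypothetical helper)."""
    fractions = {
        "concept_map": concept_map_points / concept_map_max,
        "peer_review": peer_review_points / peer_review_max,
        # Assumption: the group evaluation is already expressed as 0-1.
        "group_evaluation": group_evaluation_fraction,
    }
    for name, value in fractions.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be within its maximum, got {value}")
    return 100 * sum(WEIGHTS[name] * fractions[name] for name in WEIGHTS)


# A group scoring 10/12 on its map, 2/3 on peer review, and full participation
print(round(weekly_score(10, 2, 1.0), 1))
```

Normalizing before weighting keeps the 50/30/20 split meaningful even though the concept map rubric has 4 times as many points as the peer review rubric.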

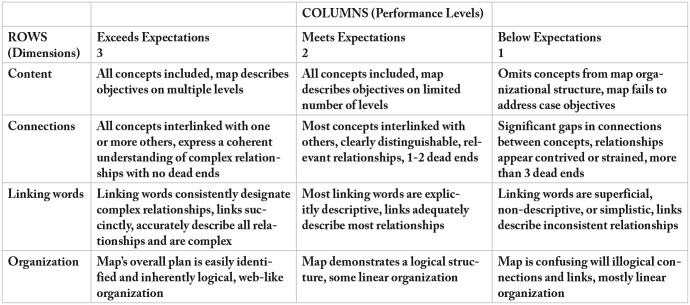

Figure 1.

Concept map assessment rubric. The rubric was modified from Jennings16 to include a 3-point scale with 4 criteria domains.

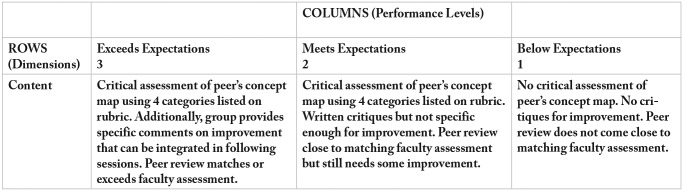

Each week, faculty facilitators assessed the group concept maps using a 4-criterion, 3-point scale rubric (Figure 1) and provided detailed written feedback on the strengths and weaknesses of the maps, as well as tips for improvement. In addition, the group's ability to provide proper feedback through the peer review process was assessed using a single-criterion, 3-point scale rubric (Figure 2). Faculty provided weekly feedback on student peer review to reinforce feedback quality. The combination of student training on feedback and weekly formative evaluation from both peers and faculty had a measurable impact on student course evaluations (Slieman and Camarata, manuscript in preparation). Faculty facilitators were trained on all rubrics to ensure accurate and consistent assessment.

Concept map rubric

A team of clinical and biomedical science faculty modified the concept map assessment rubric from Jennings (2012).16 The rationale behind the rubric design was to develop an assessment tool that included important aspects of map construction, revealed student group knowledge, and was not overly burdensome to grade. Previous assessment tools for concept maps focused either on map structure (concept hierarchy, number of connections, and linking words) or on the relationships between concepts, by examining the propositions created by connecting concepts with linking words.7,15,17-19 The faculty-developed rubric assesses overall structure (Organization) while looking more deeply into the accuracy of the propositions (Connections, Linking words), without the burden of counting and comparing specific numbers of connections or linking words, beyond the goal that every concept be connected to at least 2 others. Encouraging more than 1 connection per concept, especially between concepts on different sides of the map, can promote new learning; this is termed integrative reconciliation.3,15
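The connectivity goal in the rubric (every concept linked to at least 2 others) and the cross-links associated with integrative reconciliation can be checked mechanically. The sketch below is a hypothetical aid, not part of the faculty rubric, which relies on judging the accuracy of propositions. It assumes that each concept is labeled with one of the 2 domains (medical science or clinical medicine) and counts links that cross between them; the example propositions are invented for illustration.

```python
from collections import defaultdict


def connection_report(propositions, domain_of):
    """Flag concepts with fewer than 2 distinct neighbors and count cross-domain links.

    propositions: iterable of (source, linking_phrase, target) tuples
    domain_of: dict mapping every concept (including any that students add)
               to a domain label; an unlabeled concept raises KeyError
    """
    neighbors = defaultdict(set)
    cross_domain_links = 0
    for source, _linking_phrase, target in propositions:
        neighbors[source].add(target)
        neighbors[target].add(source)
        if domain_of[source] != domain_of[target]:
            cross_domain_links += 1
    # Concepts that were provided but never linked end up with 0 neighbors.
    under_connected = sorted(c for c in domain_of if len(neighbors[c]) < 2)
    return {"under_connected": under_connected, "cross_domain_links": cross_domain_links}


# Invented example: 2 basic science and 2 clinical concepts
domains = {
    "beta cells": "medical science",
    "insulin": "medical science",
    "hyperglycemia": "clinical medicine",
    "polyuria": "clinical medicine",
}
props = [
    ("beta cells", "secrete", "insulin"),
    ("insulin", "when deficient, leads to", "hyperglycemia"),
    ("hyperglycemia", "causes, via osmotic diuresis,", "polyuria"),
]
print(connection_report(props, domains))
```

In this example, "beta cells" and "polyuria" each have only 1 link, the kind of dead end a grader would flag, and only 1 proposition bridges the basic science and clinical domains.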

Peer review rubric

Figure 2.

Rubric to assess student peer feedback.

Course evaluation surveys

Both precourse and postcourse evaluations were administered to each student cohort. The questions were designed to measure learners' initial attitudes and perceptions, as well as their final progress toward meeting our 4 learning objectives. The evaluation questions used a 5-point Likert-type scale and began with the prompt "on a scale of 1 (lowest) through 5 (highest), please answer the following questions." Space was also provided for open responses.

Data collection and analysis

Anonymous course evaluations were provided to students electronically during the first and last sessions of the course. Ordinal Likert-type scale responses were exported into a spreadsheet for statistical analysis. The non-normally distributed precourse and postcourse responses were compared using the Wilcoxon rank-sum (Mann-Whitney U) test. First and final concept map scores of each group were compared using a two-sample t-test with Welch's correction for unequal variances. This study received an exempt designation from the New York Institute of Technology's Institutional Review Board (IRB).
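For readers unfamiliar with the rank-based comparison named above, the Mann-Whitney U (Wilcoxon rank-sum) test compares 2 independent samples, which fits anonymous surveys whose precourse and postcourse responses cannot be matched student by student; the Wilcoxon signed-rank test, by contrast, requires paired responses. The following Python sketch uses the normal approximation with a correction for the many ties that Likert-type data produce. The response lists are hypothetical, not study data, and in practice a statistics package would normally be used.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(pre, post):
    """Two-sided Mann-Whitney U test using the tie-corrected normal approximation
    (no continuity correction). Returns (U for `pre`, z, p)."""
    n1, n2 = len(pre), len(post)
    combined = list(pre) + list(post)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    tie_sum = sum(t**3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_sum / (n * (n - 1)))
    if variance == 0:
        raise ValueError("all responses are identical; the test is undefined")
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return u1, z, p


# Hypothetical 5-point Likert responses (not study data)
pre = [4, 5, 4, 3, 4, 5, 4, 4, 3, 5]
post = [4, 4, 5, 4, 3, 4, 5, 5, 4, 4]
u, z, p = mann_whitney_u(pre, post)
print(f"U = {u:.1f}, z = {z:.2f}, p = {p:.3f}")
```

With only 5 possible answers, almost every response is tied with many others, which is why the variance term subtracts the tie correction before computing z.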

Results

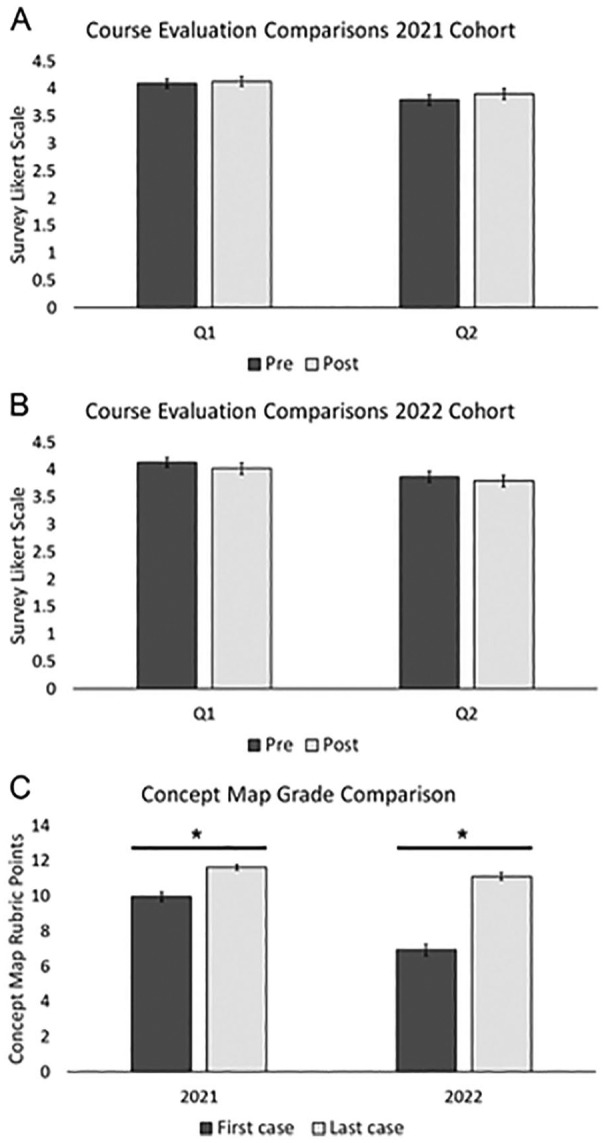

To determine whether the course objectives were met, we compared student responses to the precourse and postcourse evaluations, which used a 5-point Likert-type scale (see "Methods" section). Course evaluations were analyzed for both the 2021 and 2022 student cohorts, with similar results (Figure 3). In the precourse evaluation, students were asked "Do you believe your critical thinking skills will improve from this exercise?" (Figure 3A and B: Q1). Most students believed the course would have a positive impact on critical thinking skills (mean Likert-type response: 2021 cohort, 4.09 [SE ± 0.082], n = 122; 2022 cohort, 4.13 [SE ± 0.084], n = 117). After the course, students were asked "To what extent did this CBL activity encourage critical thinking in the learning process?" and both cohorts responded similarly on the postcourse evaluation (2021 cohort, 4.13 [SE ± 0.082], n = 124; 2022 cohort, 4.02 [SE ± 0.099], n = 112). Because most students expected at the beginning of the course that it would improve critical thinking, and reported at the end that it did encourage critical thinking, the second learning objective appears to have been met. Consistent with this, there was no statistically significant difference between precourse and postcourse responses to the critical thinking questions, indicating that these positive expectations were maintained. The second learning objective could also have been supported by a shift from a neutral or negative perception of concept mapping and critical thinking at the start of the course to a more positive one by the end. However, our course evaluation data suggest that most students viewed concept mapping as enhancing critical thinking both before and after the course.

In addition, students were asked on the precourse evaluation "Do you believe this exercise will enhance your ability to work in a group?" with the corresponding postcourse question "To what extent has your ability to work in a group improved?" (Figure 3: Q2). Both cohorts generally agreed that the course would enhance their ability to work in a group (2021 cohort, 3.79 [SE ± 0.095]; 2022 cohort, 3.87 [SE ± 0.096]) and, based on the postcourse evaluations, believed the course achieved this objective (Figure 3A and B: Q2; 2021 cohort, 3.90 [SE ± 0.096]; 2022 cohort, 3.79 [SE ± 0.097]). No statistically significant difference was found between precourse and postcourse responses to question 2, suggesting that students felt the course helped their ability to work in groups (Figure 3A and B).

Figure 3.

Concept mapping course evaluation analysis. (A) Course evaluation analysis of 2021 student cohort for survey question 1 (Q1) and question 2 (Q2) showing average Likert-type scale response. (B) Course evaluation analysis of 2022 student cohort for survey Q1 and Q2 showing average Likert-type scale response value. (C) Rubric score comparison of concept maps from first and last clinical case. Error bars represent standard error of the mean.

*P < 0.0001.

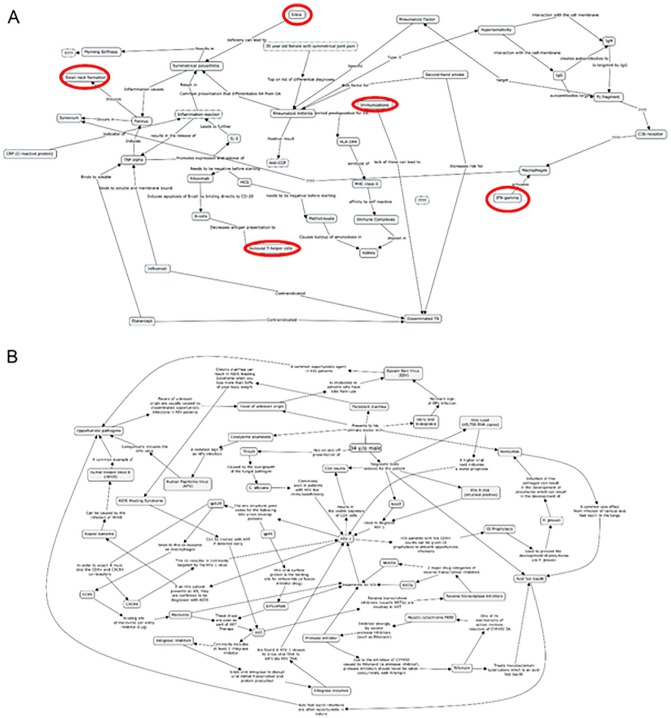

To further determine whether the educational objectives were met, we compared concept map scores between the first and last cases that student groups completed (Figure 3C). Using the concept map assessment rubric (Figure 1), concept maps were graded out of a maximum of 12 points. The 2021 cohort began the course with an average score of 9.96 (SE ± 0.266) on the first case concept maps, whereas the 2022 cohort began with a lower average score of 6.92 (SE ± 0.310). However, both cohorts reached comparable average scores on the final concept maps (2021 cohort, 11.625 [SE ± 0.157]; 2022 cohort, 11.12 [SE ± 0.218]). Both cohorts showed significant improvement in concept map scores between the first and last maps produced (2021 cohort, 24 groups, P < 0.0001, t = –5.4, df = 37.3; 2022 cohort, 25 groups, P < 0.0001, t = –11.06, df = 43.1). Improvement in concept map construction was also evident in representative group-created maps (Figure 4). As students progressed in the course, they displayed better knowledge integration by creating more connections between concepts and writing more complex linking-word descriptions, suggesting improved content comprehension. These data support achievement of the objective to identify and integrate basic science and clinical concepts.
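The reported t statistics and fractional degrees of freedom can be checked against the summary values above. Fractional df are characteristic of Welch's unequal-variance t-test (Welch-Satterthwaite approximation) rather than the pooled-variance Student's t-test, so this sketch assumes Welch's form and treats the first and last maps as independent samples of 24 and 25 groups. Only the means, standard errors, and group counts reported above are used; the function name is hypothetical.

```python
import math


def welch_from_summary(mean1, se1, mean2, se2, n1, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom,
    computed from group means and standard errors of the mean."""
    v1, v2 = se1**2, se2**2  # squared SE equals s^2 / n for each group
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Summary values reported above: (first mean, first SE, last mean, last SE, n, n)
cohorts = {
    "2021": (9.96, 0.266, 11.625, 0.157, 24, 24),
    "2022": (6.92, 0.310, 11.12, 0.218, 25, 25),
}
for name, stats in cohorts.items():
    t, df = welch_from_summary(*stats)
    print(f"{name}: t = {t:.2f}, df = {df:.1f}")
```

With these inputs the sketch gives approximately t = -5.39, df = 37.3 for 2021 and t = -11.08, df = 43.1 for 2022, consistent with the reported values within the rounding of the published means and SEs. P values would additionally require the t distribution's CDF (for example, `scipy.stats.t.sf`), which is omitted to keep the sketch dependency-free.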

Figure 4.

Representative student group concept maps. (A) Student group concept map from the beginning of the course, showing several dead ends (red circles) and simplistic or missing links. (B) Example student group concept map from the end of the course. Every concept term has multiple connections, and each link carries a detailed description.

Discussion

Here, we describe and present supporting data for a case-based, small-group learning course using concept mapping in a first-year medical curriculum. The course evaluation results showed that students thought the course aided the development of critical thinking skills, supporting achievement of 1 of the 4 stated learning objectives. Critical thinking development using concept mapping has been shown in previous studies.9,20 Furthermore, concept map scores increased over the semester, suggesting that learner groups were able to identify and integrate basic science knowledge with clinical medicine with increasing complexity. This demonstration of concept integration supports achievement of the first learning objective listed above (ie, identify and integrate basic science and clinical concepts). To accomplish this objective, learners had to work as a team to create a final product and to peer-review another group's map. This design addressed our last 2 objectives: developing teamwork and professional interaction skills, and providing effective feedback. Progress on the stated learning objectives was assessed through the different rubrics and course evaluations used throughout the course.

The creation of concept maps can range from complete freedom of construction, with no terms provided, to more restrictive approaches that provide a skeleton map in which students fill in terms or links.15,19,21 Our approach was intermediate between these methodologies. Within each clinical case, terms were provided and separated into 2 domains: medical science (basic science) and clinical medicine. The student groups had complete freedom to construct concept maps with the provided terms and were encouraged to add more terms and links as they desired. The clinical cases and provided terms were aligned with the didactic lecture curriculum. This approach allowed students to explore and visualize content that had been delivered separately, and to integrate that information through concept mapping prompted by the clinical case. Although concept mapping has been used in problem-based medical curricula,8,11,13 to our knowledge this is the first report of this active learning method being aligned with a didactic lecture curriculum for first-year medical students.

A noteworthy difference between the concept map rubric developed and used here and other rubrics is its emphasis on both a web-like organizational structure and increasingly complex descriptors linking the terms. Concept map assessment rubrics have been developed to score maps with an emphasis on hierarchical structure5,10,22 or on the number and accuracy of links.7,10 The rubric used in this study was modified from Jennings (2012) to assess overall map structure, number of connections, and accuracy of links.16 This challenges learners not only to make as many connections as possible but also to understand deeply the mechanisms by which the concepts are connected. Concepts for each clinical case were intentionally chosen by faculty to allow for multiple connections, so that learners could discover the numerous relationships between basic and clinical science needed for medical practice. With concept map peer review and weekly formative feedback from faculty, knowledge integration improved from the first to the last case (Figure 3C). Although the cohorts in this study had different average concept map scores for the first case, both finished the course with comparable final average scores, suggesting that knowledge integration grew in both cohorts. All groups in both cohorts displayed improvements in concept map rubric scores as they continued to work through the case-based concept mapping sessions: 11 cases for the 2021 cohort and 8 cases for the 2022 cohort. The combination of continual concept mapping aligned to the curriculum, regular formative feedback from faculty, and peer review feedback likely contributed to this improvement in group performance.

Development of the clinical cases and the "expert" concept maps for each case was a collaborative effort between basic science and clinical faculty. Using the concept map rubric and providing continual feedback also had a positive effect on the faculty-developed concept maps. Following a process similar to the students', faculty worked in teams and developed "expert" concept maps that progressed in both complexity and interconnection over 3 consecutive years, suggesting that this approach advanced the maps toward greater integration and complexity requiring higher order thinking. The faculty collaboration also reflected several positive outcomes reported for instructors involved in concept mapping, such as motivation, cooperative learning, and articulation of information.23

Conclusions

Based on our metrics, the implementation of this exercise was successful, and measurable progress was achieved on all 4 learning objectives. This approach also encourages learners' independence and ownership, as faculty facilitators had minimal involvement in the student groups' construction of maps or the peer review process beyond assessment. In the future, we would like to explore whether this exercise affects students' performance on didactic assessments and/or retention of material.

Acknowledgments

The authors thank the basic science and clinical faculty at NYITCOM at Arkansas State for their valuable contribution and input into creating the case-based learning course. The authors acknowledge the efforts of Dr. Rajendram Rajnarayanan for supporting this study, and Drs Joerg Leheste and R. Rajnarayanan for critical comments on the manuscript.

Footnotes

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Author’s Note: The authors report no conflict of interest either financial or personal. The authors alone are responsible for the content and writing of this article. The authors had full access to all of the data in this study, and we take complete responsibility for the integrity of the data and the accuracy of the data analysis.

Author Contributions: Both authors contributed equally to the design, draft, revision and all aspects of this work.

ORCID iD: Tony A Slieman https://orcid.org/0000-0001-9594-1038

References

- 1. Bonwell CC, Eison JA. Active Learning: Creating Excitement in the Classroom (ASHE-ERIC Higher Education Report 1). Washington, DC: George Washington University; 1991.

- 2. Graffam B. Active learning in medical education: strategies for beginning implementation. Med Teach. 2007;29:38-42.

- 3. Daley BJ, Durning SJ, Torre DM. Using concept maps to create meaningful learning in medical education. MedEdPublish. 2016;5:1-29.

- 4. Moon BM, Hoffman RR, Novak JD, Cañas AJ. Applied Concept Mapping: Capturing, Analyzing, and Organizing Knowledge. New York, NY: CRC Press; 2011.

- 5. Novak JD, Gowin DB. Learning How to Learn. New York, NY: Cambridge University Press; 1984.

- 6. Novak JD. Learning, Creating, and Using Knowledge: Concept Maps as Facilitative Tools in Schools and Corporations. New York, NY: Taylor & Francis; 2010.

- 7. Ruiz-Primo MA, Shavelson RJ. Problems and issues in the use of concept maps in science assessment. J Res Sci Teach. 1996;33:569-600.

- 8. Thomas L, Bennett S, Lockyer L. Using concept maps and goal-setting to support the development of self-regulated learning in a problem-based learning curriculum. Med Teach. 2016;38:930-935.

- 9. Torre D, Daley BJ, Picho K, Durning SJ. Group concept mapping: an approach to explore group knowledge organization and collaborative learning in senior medical students. Med Teach. 2017;39:1051-1056.

- 10. West DC, Park JK, Pomeroy JR, Sandoval J. Concept mapping assessment in medical education: a comparison of two scoring systems. Med Educ. 2002;36:820-826.

- 11. Veronese C, Richards JB, Pernar L, Sullivan AM, Schwartzstein RM. A randomized pilot study of the use of concept maps to enhance problem-based learning among first-year medical students. Med Teach. 2013;35:e1478-e1484.

- 12. Chen W, Allen C. Concept mapping: providing assessment of, for, and as learning. Med Sci Educ. 2017;27:149-153.

- 13. Kassab SE, Hussain S. Concept mapping assessment in a problem-based medical curriculum. Med Teach. 2010;32:926-931.

- 14. Soika K, Reiska P, Mikser R. Concept mapping as an assessment tool in science education. Paper presented at: Concept Maps: Theory, Methodology, Technology. Proceedings of the Fifth International Conference on Concept Mapping; 2012. http://cmc.ihmc.us/cmc2012papers/cmc2012-p188.pdf.

- 15. Cañas AJ, Novak JD, Reiska P. Freedom vs. restriction of content and structure during concept mapping: possibilities and limitations for construction and assessment. In: Proceedings of the Fifth International Conference on Concept Mapping; 2012:247-257. https://pdfs.semanticscholar.org/563e/4d23ef789c01c46e21eb01539de848550af0.pdf.

- 16. Jennings D. Concept maps for assessment. Dublin, Ireland: UCD Teaching and Learning; 2012.

- 17. Buhmann SY, Kingsbury M. A standardised, holistic framework for concept-map analysis combining topological attributes and global morphologies. Knowl Manag E-Learn. 2015;7:20-35.

- 18. Kinchin IM. Concept mapping in biology. J Biol Educ. 2000;34:61-68.

- 19. Peñuela-Epalza M, De la Hoz K. Incorporation and evaluation of serial concept maps for vertical integration and clinical reasoning in case-based learning tutorials: perspectives of students beginning clinical medicine. Med Teach. 2019;41:433-440.

- 20. Torre DM, Daley B, Stark-Schweitzer T, Siddartha S, Petkova J, Ziebert M. A qualitative evaluation of medical student learning with concept maps. Med Teach. 2007;29:949-955.

- 21. Kinchin IM. Concept mapping as a learning tool in higher education: a critical analysis of recent reviews. J Contin High Educ. 2014;62:39-49.

- 22. Torre DM, Durning SJ, Daley BJ. Twelve tips for teaching with concept maps in medical education. Med Teach. 2013;35:201-208.

- 23. Vink SC, Van Tartwijk J, Bolk J, Verloop N. Integration of clinical and basic sciences in concept maps: a mixed-method study on teacher learning. BMC Med Educ. 2015;15:20.