Abstract

The orbital prefrontal cortex (PFo) operates as part of a network involved in reward-based learning and goal-directed behavior. To test whether the PFo is necessary for guiding behavior based on the value of expected reward outcomes, we compared four rhesus monkeys with two-stage bilateral PFo removals and six unoperated controls for their responses to reinforcer devaluation, a task that assesses the monkeys' abilities to alter choices of objects when the value of the underlying food has changed. For comparison, the same monkeys were tested on a standard test of flexible stimulus-reward learning, namely object reversal learning. Relative to controls, monkeys with bilateral PFo removals showed a significant attenuation of reinforcer devaluation effects on each of two separate assessments, one performed shortly after surgery and the other ∼19 months after surgery; the operated monkeys were also impaired on object reversal learning. The same monkeys, however, were unimpaired in acquisition of object discrimination learning problems and responded like controls when allowed to choose foods alone, either on a food preference test among six different foods or after selective satiation. Thus, satiety mechanisms and the ability to assign value to familiar foods appear to be intact in monkeys with PFo lesions. The pattern of results suggests that the PFo is critical for response selection based on predicted reward outcomes, regardless of whether the value of the outcome is predicted by affective signals (reinforcer devaluation) or by visual signals conveying reward contingency (object reversal learning).

Keywords: orbital prefrontal cortex, reinforcer devaluation, decision making, reward value, reward contingency, rhesus monkey

Introduction

Recent neurophysiological studies in monkeys and rats have shown that neurons in the orbital prefrontal cortex (PFo) signal not just the occurrence of reward but the value of an expected reward, based on past experience (rats: Schoenbaum et al., 1998, 1999; monkeys: Tremblay and Schultz, 1999; Hikosaka and Watanabe, 2000; Wallis and Miller, 2003; Roesch and Olson, 2004). For example, in monkeys performing a delayed response or visual discrimination task in which visual stimuli are linked to reward delivery, the activity of many PFo neurons reflects the value of the upcoming reward rather than the identity of the stimulus or the upcoming motor response. Importantly, this neural signal predicting reward can be observed not only in the delay period between stimulus presentation and choice but also during the period in which the associated visual stimulus is presented (Tremblay and Schultz, 1999; Wallis and Miller, 2003; Roesch and Olson, 2004). In addition, the signal of the predicted reward outcome observed during stimulus presentation depends on input to the PFo from the basolateral amygdala (Schoenbaum et al., 2003b).

There are few neuropsychological tasks that evaluate animals' choices among positive objects and that require the value of the reward to be taken into account. One such task is reinforcer devaluation. In this task, monkeys must associate objects with the foods they cover on a test tray and then adjust their choices of these objects in the face of changing value of the food reinforcers. Baxter et al. (2000) found that crossed disconnection of the amygdala and PFo attenuated the effects of reinforcer devaluation in monkeys, indicating that normal performance requires the functional interaction of these two structures. Although Malkova et al. (1997) found that selective amygdala lesions disrupt performance on this task, the effects of bilateral lesions of the PFo have not yet been examined in monkeys. Accordingly, the present study examined the effects of bilaterally symmetrical lesions of the PFo on reinforcer devaluation. For comparison, we tested the same monkeys on object reversal learning, a task that requires subjects to associate a single object with a food reward and to respond flexibly to changes in reward contingency. Based on previous work in monkeys (Iversen and Mishkin, 1970; Jones and Mishkin, 1972; Dias et al., 1996; Meunier et al., 1997) and rats (Chudasama and Robbins, 2003; McAlonan and Brown, 2003; Schoenbaum et al., 2003a), it was expected that damage to the PFo would disrupt performance on this task. Taken together, the tasks used in the present study were meant to provide more complete information about the contribution of the PFo to two different aspects of response selection, namely response selection based on reward value (as assessed by the reinforcer devaluation task) and response selection based on reward contingency (as assessed by the object reversal task).

Materials and Methods

Subjects

Ten experimentally naive monkeys (Macaca mulatta), all male, were used. They weighed 4.4-6.6 kg at the beginning of the study, were housed individually in rooms with automatically regulated lighting (12 hr light/dark cycle; lights on at 7:00 A.M.), and were maintained on primate chow (catalog number 5038; PMI Feeds, St. Louis, MO) supplemented with fresh fruit. Monkeys were fed a controlled diet to ensure both sufficient motivation to respond in the test apparatus and a healthy body weight. Water was always available in the home cage. Four monkeys were assigned to the experimental group (group PFo), and six monkeys were assigned to the unoperated control group (group Con). Four of the six controls (Con-1 to Con-4) were historical controls (Baxter et al., 2000); these four monkeys had been on rest for 13.5 months before joining the present experiment. The two other controls were started concurrently with the experimental subjects. To match the training histories of the groups and allow them to be tested concurrently, all the monkeys were initially given two tests of reinforcer devaluation, only the second of which is reported here as test 1. Thus, the scores of the four controls reported by Baxter et al. (2000) as test 2 are the same as those given here for test 1.

Apparatus

For the reinforcer devaluation and object reversal learning tasks, monkeys were trained in a modified Wisconsin general testing apparatus (WGTA) located in a dark room. The test compartment was illuminated with two 60 W bulbs, whereas the test room and monkey's compartment were always unlit. The test tray, measuring 19.2 × 72.7 × 1.9 cm, contained two food wells 290 mm apart, center to center, on the midline of the tray. The wells were 38 mm in diameter and 6 mm deep. For pretraining, several dark gray matboard plaques measuring 76 mm on each side and three junk objects dedicated to this phase were used. For discrimination learning, two different sets of "junk" objects were available; each set consisted of 120 objects that varied widely in color, shape, size, and texture. Food rewards for each monkey were two of the following: a single (300 mg) banana-flavored pellet (P. J. Noyes, Lancaster, NH); a half-peanut; a raisin; a sweetened dried cranberry (Craisins; Ocean Spray, Lakeville-Middleboro, MA); a fruit snack (Giant Food, Landover, MD); or a chocolate candy (M&Ms; Mars Candies, Hackettstown, NJ). One monkey was reluctant to take most of these foods, and we therefore used Marshmallow Juniors (P. J. Noyes) for this monkey only. For object reversal learning, two additional novel objects were used. Food rewards consisted of half-peanuts for all but one monkey; PFo-4 refused peanuts and was trained using trail mix instead.

As a control, monkeys were also trained on a progressive ratio (PR) task using an automated apparatus. The test apparatus was similar in design to the WGTA but contained a 19-inch color monitor fitted with a touch-sensitive screen instead of a test tray. Stimuli were colored 45 mm typographic characters presented in the center of the monitor screen. Food rewards were 190 mg banana-flavored pellets (P. J. Noyes).

Surgery

Monkeys in the experimental group received the bilateral PFo lesion in two stages. Two monkeys (PFo-1 and PFo-3) received removal of the left PFo as the first operation, whereas the two others (PFo-2 and PFo-4) received the removal on the right side first. After the first-stage surgery, all monkeys were given a food preference test, were trained on 60 concurrent object discrimination problems, and then were assessed for their responses to reinforcer devaluation. Monkeys in the operated group then received the second stage of surgery, removal of PFo in the hemisphere opposite the first removal. The operation was performed using the same methods as for the first stage.

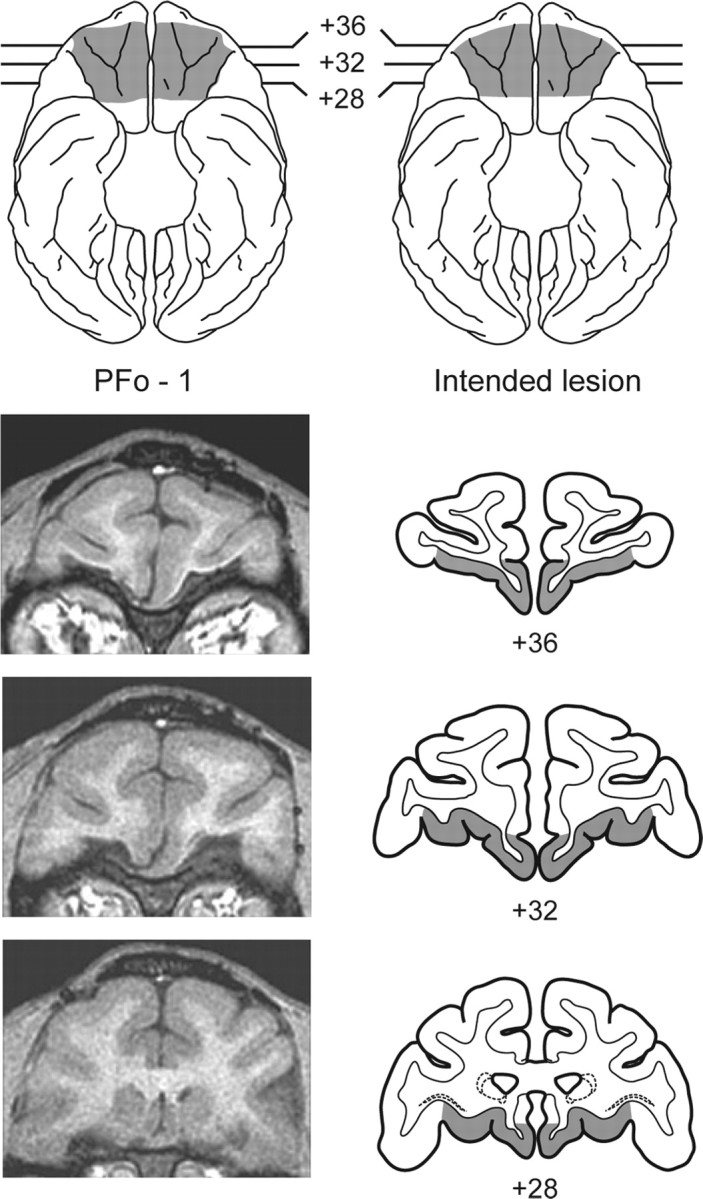

Anesthesia was induced with ketamine hydrochloride (10 mg/kg, i.m.) and maintained using isoflurane gas (1.0-3.0% to effect). Monkeys received isotonic fluids via an intravenous drip. Heart and respiration rates, body temperature, blood pressure, and expired CO2 were all monitored throughout the surgery. Aseptic procedures were used. A crescent-shaped craniotomy was first performed over the region of the prefrontal cortex. The dura mater was then cut around the dorsal edge of the bone opening and reflected ventrally. After the sulci on the orbital surface had been identified, the boundaries of the lesion were marked with a line of electrocautery. Using a combination of suction and electrocautery, the PFo was removed by subpial aspiration through a fine-gauge metal sucker, insulated except at the tip. The intended lesion (Fig. 1) extended from the fundus of the lateral orbital sulcus laterally to the fundus of the rostral sulcus medially. The rostral limit of the lesion was a line joining the anterior tips of the lateral and medial orbital sulci, and the caudal limit of the lesion was ∼5 mm rostral to the junction of the frontal and temporal lobes. The intended lesion included Walker's areas 11, 13, 14, and the caudal part of area 10 (Walker, 1940). When the lesion was completed, the wound was closed in anatomical layers. The location and extent of the lesions were intended to be the same as described by Baxter et al. (2000) and Izquierdo and Murray (2004).

Figure 1.

Top, Ventral view of a standard rhesus monkey brain showing the location and extent of the PFo lesion for monkey PFo-1 (left; shaded region) and the extent of the intended PFo lesion (right; shaded region). Bottom, Representative T1-weighted MR images from PFo-1 (left) and coronal sections at matching levels from a standard rhesus monkey brain (right) showing the extent of the intended removal. The numerals indicate the distance in millimeters from the interaural plane (0).

Monkeys received a preoperative and postoperative treatment regimen consisting of dexamethasone sodium phosphate (0.4 mg/kg) and Cefazolin antibiotic (15 mg/kg) for 1 d before surgery and 1 week after surgery to reduce swelling and prevent infection, respectively. Directly after surgery, and for 2 additional days, monkeys received the analgesic ketoprofen (10-15 mg); ibuprofen (100 mg) was provided for 5 additional days.

Assessment of the lesions

The lesions in three of the four monkeys were quantitatively assessed using postoperative magnetic resonance imaging (MRI) scans. The extent of damage to the PFo was obtained from T1-weighted scans (fast-spoiled gradient; echo time, 5.8 ms; repetition time, 13.1 ms; flip angle, 30°; number of excitations, 8; 256 × 256 matrix; field of view, 100 mm; 1 mm slices) performed an average of 11.3 months after surgery. Representative MR images from PFo-1 are shown in Figure 1. During the study, one monkey in the experimental group (PFo-3) became ill. Because the illness did not respond to treatment, this monkey was killed, and its brain was subjected to routine histological processing. Histological verification of the lesion is therefore available for this one case.

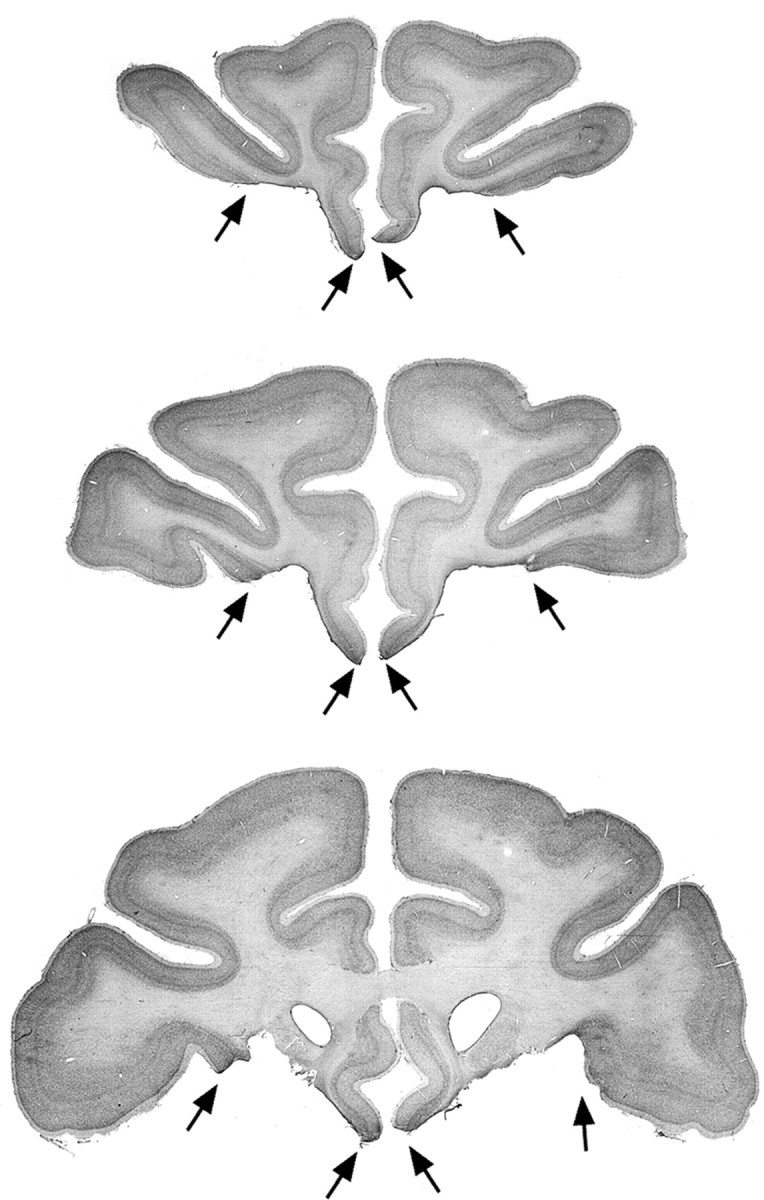

PFo-3 was anesthetized with ketamine, given a lethal dose of sodium pentobarbital (100 mg/kg, i.p.), and perfused transcardially with 0.9% saline, followed by 10% buffered formalin. The brain was removed, photographed, and frozen in a solution of 20% glycerol and 10% formalin. Tissue sections were cut in the coronal plane at 50 μm on a freezing microtome. Every fifth section was mounted on gelatin-coated slides, defatted, stained with thionin, and coverslipped. Photomicrographs of representative sections through the lesion in PFo-3 are shown in Figure 2.

Figure 2.

Photomicrographs of Nissl-stained coronal sections through the lesion in PFo-3. The black arrows mark the boundaries of the bilateral PFo lesion. Top to bottom, The levels shown are approximately +36, +32, and +28 mm anterior to the interaural plane (0). Compare and contrast with Figure 1.

For the three animals evaluated with MR, scan slices were matched to drawings of a standard rhesus monkey brain at 1 mm intervals. The extent of the lesion visible in the T1-weighted scan was then plotted onto the standard sections. The procedure for the brain that underwent histological processing was similar, except that Nissl-stained coronal sections, rather than scan slices, were matched to the standard sections. We subsequently measured the volume of the lesion as a percentage of the total volume of the structure in the standard brain, using a digitizing tablet (Wacom, Vancouver, WA).
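The percentage measure can be sketched in a few lines of Python. This assumes (our assumption; the procedure above does not state the formula) that lesion and structure areas were traced on each 1 mm standard section and that volume is approximated by summing area × spacing, a Cavalieri-style estimate. The function names and toy areas are hypothetical.

```python
# Sketch only: per-section areas (mm^2) are hypothetical tracings on the
# standard sections, which are spaced 1 mm apart.

def volume_from_sections(areas_mm2, spacing_mm=1.0):
    """Approximate a volume (mm^3) as the sum of section areas times spacing."""
    return sum(areas_mm2) * spacing_mm


def percent_damage(lesion_areas_mm2, structure_areas_mm2, spacing_mm=1.0):
    """Lesion volume as a percentage of the structure's volume in the standard."""
    if len(lesion_areas_mm2) != len(structure_areas_mm2):
        raise ValueError("need one lesion area per standard section")
    structure = volume_from_sections(structure_areas_mm2, spacing_mm)
    if structure <= 0:
        raise ValueError("structure volume must be positive")
    lesion = volume_from_sections(lesion_areas_mm2, spacing_mm)
    return 100.0 * lesion / structure


# Toy example: three sections through a structure, partially damaged.
print(percent_damage([5.0, 8.0, 2.0], [10.0, 10.0, 5.0]))  # 60.0
```

Because the same spacing multiplies both volumes, it cancels in the ratio; it matters only if absolute volumes are reported.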

The estimated extent of damage to PFo for the four monkeys in group PFo is provided in Table 1. The operated monkeys sustained 78.7% removal, on average, of PFo. The removals systematically spared a narrow strip of cortex immediately ventral to the rostral sulcus, a region classified as infralimbic cortex by Preuss and Goldman-Rakic (1991), and there was a small, variable amount of spared cortex evident on the orbital surface in all operated monkeys as well.

Table 1.

Percentage of damage to the PFo

| Monkey | Left | Right | Mean |
|---|---|---|---|
| PFo-1 | 92.5 | 85.2 | 88.9 |
| PFo-2 | 76.1 | 62.2 | 69.2 |
| PFo-3 | 85.4 | 72.9 | 79.2 |
| PFo-4 | 85.0 | 70.0 | 77.5 |

The numerals indicate the percentage of damage to the PFo sustained by each of the operated monkeys. PFo-1 to PFo-4, monkeys with bilateral removals of the PFo; Left, left hemisphere; Right, right hemisphere; Mean, average of the values for the left and right hemispheres.
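As an arithmetic check, the Mean column and the 78.7% group average reported above can be reproduced from the Left and Right columns. The sketch uses Python's `decimal` module because binary floating point can round a value such as 88.85 down; rounding half up is our assumption about how the table was rounded.

```python
from decimal import Decimal, ROUND_HALF_UP

# Left/right percentages transcribed from Table 1 (strings keep them exact).
TABLE_1 = {
    "PFo-1": ("92.5", "85.2"),
    "PFo-2": ("76.1", "62.2"),
    "PFo-3": ("85.4", "72.9"),
    "PFo-4": ("85.0", "70.0"),
}
ONE_DP = Decimal("0.1")


def round_1dp(x):
    """Round half up to one decimal place (assumed table convention)."""
    return x.quantize(ONE_DP, rounding=ROUND_HALF_UP)


# Mean of the two hemispheres for each monkey, then the group average.
per_monkey = {m: (Decimal(l) + Decimal(r)) / 2 for m, (l, r) in TABLE_1.items()}
group_mean = sum(per_monkey.values()) / len(per_monkey)

for monkey, mean in per_monkey.items():
    print(monkey, round_1dp(mean))
print("group", round_1dp(group_mean))  # group 78.7
```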

Behavioral testing procedures

All monkeys underwent accommodation and pretraining before the first stage of surgery. After the operated monkeys had sustained a unilateral lesion, all monkeys were given a test of food preference, discrimination learning, and the first test of reinforcer devaluation. After the second stage of surgery, the same tests were repeated, with the monkeys in the experimental group now tested after bilateral removal of the PFo. Controls received the same training but remained unoperated.

Accommodation and pretraining. All monkeys were introduced to the WGTA and allowed to take food ad libitum from the test tray. Gradually, through successive approximation, monkeys were trained to displace plaques that completely covered the food wells to obtain a food reward hidden underneath. After the monkeys successfully displaced plaques, they were given two to five sessions of additional training with objects; monkeys were required to displace one of three pretraining objects to retrieve the food reward hidden underneath. Pretraining was considered complete when monkeys successfully displaced each of the three objects presented to them singly for a total of 30 trials.

Food preference testing. Starting 18-49 d after the first-stage surgery, operated monkeys, together with the unoperated controls, were assessed for their preferences for six different foods. On each trial, monkeys were presented with two different foods, one in each food well. At the onset of each trial, the opaque screen was raised, signaling the monkey to make its choice. Monkeys were allowed to choose only one of the two foods, and the choice was noted by the experimenter. All food types were encountered 10 times during each session; each food was paired with each of the other foods twice, with the left-right positions balanced within a session. The different food pairs were presented in pseudorandom order each day. Each session comprised 30 trials with a 10 sec intertrial interval. Monkeys were tested for a total of 15 d. The one monkey that refused most foods was given 5 additional days of testing using Marshmallow Juniors instead of fruit snacks.
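The session structure follows from the counts given: six foods yield 15 distinct pairs, and presenting each pair twice gives 30 trials with each food appearing 10 times. A minimal Python sketch follows. It assumes that "balanced" means each pair appears once in each left-right arrangement, and it uses an unconstrained shuffle in place of the (unspecified) pseudorandom order; `make_session` is a hypothetical name.

```python
import itertools
import random
from collections import Counter

# The six foods listed in the Apparatus section (short labels).
FOODS = ["banana pellet", "half-peanut", "raisin",
         "dried cranberry", "fruit snack", "chocolate candy"]


def make_session(foods, seed=None):
    """Return one session of (left, right) food pairs.

    Every unordered pair appears twice, once in each left-right arrangement,
    so six foods give 15 pairs x 2 = 30 trials, each food appearing 10 times
    (5 on each side). The order is shuffled without further constraints.
    """
    rng = random.Random(seed)
    trials = []
    for a, b in itertools.combinations(foods, 2):
        trials.append((a, b))
        trials.append((b, a))
    rng.shuffle(trials)
    return trials


session = make_session(FOODS, seed=1)
appearances = Counter(food for pair in session for food in pair)
print(len(session), set(appearances.values()))  # 30 {10}
```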

The data for each monkey were tabulated in terms of total number of choices of each food across the last 5 days of testing, after food preferences had stabilized. In addition, we tabulated choices for each possible pairing of two foods, because this was a more specific indication of relative palatability. Two foods that were approximately equally preferred were selected for each monkey; these were designated as food 1 and food 2.
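The two tabulations described above (total choices per food, and choices within each pairing) can be sketched as follows. The source states only that two approximately equally preferred foods were selected; the selection rule in `most_balanced_pair` (head-to-head split closest to 50:50, ties broken toward foods chosen more often overall) is a hypothetical illustration, not the authors' procedure. The input would be the trials from the last 5 days of testing.

```python
import itertools
from collections import Counter


def tally(trials):
    """Count choices from (left_food, right_food, chosen_food) records.

    Returns total choices per food and pairwise[(a, b)], the number of
    trials on which a was chosen when offered together with b.
    """
    totals, pairwise = Counter(), Counter()
    for left, right, chosen in trials:
        if chosen not in (left, right):
            raise ValueError(f"{chosen!r} was not offered on this trial")
        other = right if chosen == left else left
        totals[chosen] += 1
        pairwise[(chosen, other)] += 1
    return totals, pairwise


def most_balanced_pair(pairwise, totals, foods):
    """Hypothetical rule: pair whose head-to-head split is closest to 50:50,
    ties broken in favour of the pair chosen more often overall."""
    best_key, best_pair = None, None
    for a, b in itertools.combinations(foods, 2):
        n = pairwise[(a, b)] + pairwise[(b, a)]
        if n == 0:
            continue
        gap = abs(pairwise[(a, b)] / n - 0.5)
        key = (gap, -(totals[a] + totals[b]))
        if best_key is None or key < best_key:
            best_key, best_pair = key, (a, b)
    return best_pair


# Toy data for three foods A, B, C (not the study's data).
toy = [("A", "B", "A"), ("B", "A", "B"), ("A", "C", "A"),
       ("C", "A", "A"), ("B", "C", "B"), ("C", "B", "B")]
totals, pairwise = tally(toy)
print(most_balanced_pair(pairwise, totals, ["A", "B", "C"]))  # ('A', 'B')
```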

Visual discrimination: initial learning. Monkeys were trained to discriminate 60 pairs of objects. For each pair, one object was arbitrarily designated as positive (i.e., baited with a food reward) and the other negative (i.e., unbaited). Half of the positive objects were assigned to be baited with food 1, and the other half with food 2. On each trial, the two objects comprising a pair were presented for choice, each overlying one of the two food wells on the test tray. If the monkey displaced the positive object, it was allowed to take and eat the food reward hidden underneath. If the monkey chose the negative object, the trial was terminated without correction. Each pair of objects appeared in only one trial per session, for a total of 60 trials per day. The positive and negative assignment of objects, the presentation order of the object pairs, and the food reward assignments remained constant across days; the left-right position of positive objects followed a pseudorandom order. The intertrial interval was 20 sec. The criterion was set at a mean of 90% correct responses over 5 consecutive days (i.e., 270 or more correct responses in 300 trials).
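The learning criterion amounts to a sliding 5-day window over daily scores out of 60 trials. The minimal Python sketch below returns the session that completes the first qualifying window. The source does not say whether "sessions to criterion" in the Results counts the five criterion sessions themselves, so this counting convention is an assumption, and `criterion_session` is a hypothetical name.

```python
def criterion_session(daily_correct, window=5, required=270):
    """Return the 1-based session that completes the first run of `window`
    consecutive sessions with at least `required` correct responses in total,
    or None if the criterion is never met.

    Defaults encode the stated criterion: 90% correct over 5 consecutive days
    of 60 trials, i.e., at least 270 of 300.
    """
    for end in range(window, len(daily_correct) + 1):
        if sum(daily_correct[end - window:end]) >= required:
            return end
    return None


print(criterion_session([40, 45, 50, 54, 54, 54, 54, 54]))  # 8
```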

Reinforcer devaluation test 1. After the monkeys had attained the criterion, their choices of objects were assessed in four critical test sessions (test 1). In these sessions, the negative objects were set aside, and only the positive objects were used. Thirty pairs of objects, each consisting of one food 1 object and one food 2 object, were presented to the monkey for choice. All objects were baited with the same foods they had covered during the visual discrimination learning phase. The monkeys were allowed to choose only one of the objects in each pair to obtain the reward hidden underneath. Two of the four critical test sessions were preceded by a selective satiation procedure, described below, intended to devalue one of the two foods. The other two sessions were preceded by no satiation procedure and provided baseline measures. At least 2 d of rest followed each session that had been preceded by the selective satiation procedure. In addition, between critical sessions, the monkeys were given one regular training session with the original 60 pairs of objects presented in the same manner as during acquisition. These extra sessions were given to ensure that monkeys were willing to select and to eat both foods and that there were no long-term effects of the satiation procedures that, if undetected, would reduce the sensitivity of our measure. Critical sessions occurred in the following order for each monkey: (1) first baseline session; (2) session preceded by selective satiation with food 1; (3) second baseline session; and (4) session preceded by selective satiation with food 2. The unit of analysis, the “difference score,” was the change in choices of object type (food 1- and food 2-associated objects) in the sessions preceded by selective satiation compared with baseline sessions.
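The difference score is defined above only in words. The minimal Python sketch below assumes (our assumption, not stated in the source) that each satiation session is compared with the mean of the two baseline sessions. Larger positive values indicate a greater shift away from the sated food, matching the direction of Figure 3. The same computation applies to choices of foods alone in test 4.

```python
from statistics import mean


def difference_score(baselines, after_food1_satiation, after_food2_satiation):
    """Sketch of a reinforcer devaluation difference score.

    Each session is a (food1_choices, food2_choices) tuple counting how many
    of the 30 critical trials ended with a choice of a food 1 or food 2 item.
    Assumption: devalued sessions are compared with the mean of the baselines.
    """
    base_food1 = mean(s[0] for s in baselines)
    base_food2 = mean(s[1] for s in baselines)
    drop_food1 = base_food1 - after_food1_satiation[0]  # fewer food 1 choices
    drop_food2 = base_food2 - after_food2_satiation[1]  # fewer food 2 choices
    return drop_food1 + drop_food2


# Toy numbers: baselines split 15/15 and 17/13; strong shifts after satiation,
# giving a score of 21.
print(difference_score([(15, 15), (17, 13)], (4, 26), (25, 5)))
```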

Selective satiation. For the selective satiation procedure, a food box measuring 7.7 × 10.3 × 7.7 cm and attached to the monkey's home cage was filled with a known quantity of either food 1 or food 2 while the monkey was in its home cage. The monkey was then left to eat unobserved for 15 min, at which time the experimenter checked to see whether all the food had been eaten. If it had, the monkey's food box was refilled. Whether additional food was given or not, 30 min after the satiation procedure had been initiated, the experimenter started observing the monkey through a window outside the animal housing room. The procedure was considered complete when the monkey refrained from taking food for 5 consecutive minutes. The test session in the WGTA was then initiated within 10 min. For baseline sessions, the monkey was simply taken from its home cage to the WGTA without undergoing a selective satiation procedure.
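The stopping rule for selective satiation can be sketched as a small function over the times at which the monkey was seen taking food. This is a hypothetical illustration: it assumes the 5-minute quiet period may begin as soon as observation starts at 30 minutes, ignores eating before that point, and does not model the refill at 15 minutes.

```python
def satiation_end_minute(take_times_min, observe_from=30.0, quiet_window=5.0):
    """Minute (from the start of satiation) at which the stopping rule is met.

    take_times_min: hypothetical times at which the monkey was seen taking food.
    Observation starts at `observe_from`; the procedure ends once the monkey
    has gone `quiet_window` consecutive minutes without taking food.
    """
    last_activity = observe_from
    for t in sorted(take_times_min):
        if t < observe_from:
            continue  # eating before observation began is not scored
        if t - last_activity >= quiet_window:
            break  # a full quiet window elapsed before this bout of eating
        last_activity = t
    return last_activity + quiet_window


print(satiation_end_minute([2, 10, 31, 33, 40]))  # 38.0
```

Under the stated rule, the WGTA session would then begin within 10 min of the returned time.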

Relearning and reinforcer devaluation test 2. Thirty-eight to 48 d after the second-stage surgery, or after an equivalent period of rest for the controls, all monkeys were retrained on the original set of object discriminations to the same criterion as before. After relearning, the reinforcer devaluation test was repeated in exactly the same manner described above.

Reinforcer devaluation with objects and with foods only, test 3 and test 4. Approximately 19 months after surgery (or rest), monkeys were required to learn a novel set of 60 pairs of objects and were subsequently tested for their responses to reinforcer devaluation (test 3) in the same manner as before. Thus, test 3 was a replication of test 2 using a new set of objects but the same two foods that had been assigned initially to each monkey. In addition, however, we now evaluated the effect of satiation on the monkeys' choices of foods directly, without the intervening objects (test 4). Test 4 was performed in exactly the same way as the standard task, except that no objects were used in the sessions with critical trials. Thus, for these sessions, monkeys received 30 consecutive trials involving a choice between food 1 and food 2. The order of tests was the same as for the standard task: (1) first baseline session; (2) session preceded by selective satiation with food 1; (3) second baseline session; and (4) session preceded by selective satiation with food 2. The unit of analysis, the difference score, was the change in choices of foods (food 1 and food 2) in the sessions preceded by selective satiation compared with baseline sessions. Test 4 was designed to assess whether the effects of satiation transferred from the home cage to the WGTA, as expected. If so, when confronted with foods directly, both operated monkeys and controls should avoid choosing the sated foods.

PR test. Monkeys were also tested on a PR task, a measure of the monkeys' willingness to work for food rewards in the face of increasing response requirements. Test procedures were based on those used by Weed et al. (1999), and the method was the same as that used by Baxter et al. (2000). Testing was performed in an automated apparatus. After initial shaping in which monkeys learned to touch a stimulus on the monitor screen to obtain food rewards, formal training began. Initially, monkeys were required to complete several 10 min sessions of fixed ratio responding. Subsequently, a PR schedule was introduced in which the response requirement doubled after each block of eight trials. One response per reinforcer (two pellets) was required for the first eight trials, and then two, four, and eight responses per reinforcer were required for the last three sets of eight trials, respectively. The session ran for a maximum of 32 trials, but if a monkey failed to respond within 3 min at any point during testing, the session ended automatically. Although the pellet dispenser made a noise that accompanied food delivery, no cues were available that could serve as secondary reinforcers. The PR schedule was administered for a total of 20 d at the rate of 5 d per week. The measure of interest was the maximum (total) number of responses emitted by each monkey in each session. The scores for each monkey were averaged across the 20 sessions. Five additional sessions were then administered to measure the effect of satiation on PR performance. These included two sessions to establish baseline, one session after satiation (in which the monkeys were allowed to eat their fill of banana pellets before testing), 1 d of rest, and two final sessions to provide another baseline measure of responding. One monkey (PFo-4) with a PFo removal refused to work in the automated apparatus, so for this task there were only three subjects in the experimental group.
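The PR schedule above implies a ceiling of 8 × (1 + 2 + 4 + 8) = 120 responses for a complete 32-trial session. The minimal Python sketch below encodes the schedule and a session total. It simplifies the 3 min rule by treating it as a limit on the time taken to finish each trial's ratio and ignores responses on an unfinished trial; both are assumptions, and the latencies are hypothetical inputs.

```python
def responses_required(trial):
    """Responses per reinforcer on 1-based trial: 1, 2, 4, 8 in blocks of eight."""
    if not 1 <= trial <= 32:
        raise ValueError("the session has at most 32 trials")
    return 2 ** ((trial - 1) // 8)


def session_responses(trial_latencies_s, timeout_s=180.0):
    """Total responses emitted in one session.

    trial_latencies_s: hypothetical time (s) the monkey takes to finish each
    trial's ratio. The session stops at the first trial not finished within
    the timeout; responses on that unfinished trial are ignored here.
    """
    total = 0
    for trial, latency in enumerate(trial_latencies_s[:32], start=1):
        if latency > timeout_s:
            break
        total += responses_required(trial)
    return total


print(sum(responses_required(t) for t in range(1, 33)))  # 120
print(session_responses([10] * 10 + [200]))  # 12
```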

Food preference retest. Monkeys were evaluated for their food preferences a second time to examine whether rankings of the six familiar foods changed over time or differed by group. The method was the same as that used before.

Object reversal learning. After being given additional tasks to evaluate emotional responses, the results of which are not reported here, monkeys began object reversal learning. Approximately 26 months after surgery (or rest), each monkey was trained on a single visual discrimination problem and its reversal; monkeys learned through trial and error that a previously unrewarded stimulus was now rewarded and a previously rewarded stimulus was no longer rewarded. Whereas reinforcer devaluation evaluated the monkeys' abilities to choose between positive objects after changes in the value of the associated food reward, object reversal learning assessed the monkeys' abilities to choose between two objects when the reinforcement contingencies reversed but food values remained unchanged. For this task, only three monkeys with PFo lesions were available, PFo-3 having been killed before training began. We used the same method as Jones and Mishkin (1972) and Murray et al. (1998), with the exception that training was continued for nine serial reversals instead of seven. On the first trial of initial learning, both objects were either baited (for half the monkeys in the control group and two of the three monkeys in the operated group) or unbaited (remaining monkeys), and the object chosen was designated as either the S+ (if it had been baited) or the S- (if it had been unbaited). Thus, the monkeys' choices on trial 1 determined the designation of the S+ and S- for initial learning, a procedure intended to prevent response biases attributable to object preferences. On each trial thereafter, the two objects were presented, one baited and one unbaited, one each overlying the two food wells. The monkey was allowed to displace only one of the two objects and, if correct, to retrieve the food reward underneath. A noncorrection procedure was used. The intertrial interval was 10 sec, and the left-right position of the correct object followed a pseudorandom order. 
Monkeys were trained for 30 trials per daily test session at the rate of five sessions per week. The criterion was set at 28 correct responses of 30 trials (93%) on 1 d, followed by at least 24 correct of 30 trials (80%) the next day. After monkeys attained the criterion on the original problem, the reward contingencies were reversed (starting the next day), and each monkey was trained to the same criterion as before. This procedure was repeated until a total of nine reversals had been completed.
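The reversal criterion is a two-day rule, applied separately to the original problem and to each of the nine reversals. The minimal Python sketch below reads "28 correct" as "at least 28" and counts the criterion days as sessions; both are assumptions, and the function names are hypothetical.

```python
def sessions_to_reversal_criterion(daily_correct, first_day=28, second_day=24):
    """1-based session completing the criterion (at least `first_day` of 30
    correct on one day, then at least `second_day` of 30 the next day),
    or None if the criterion is not met."""
    for i in range(len(daily_correct) - 1):
        if daily_correct[i] >= first_day and daily_correct[i + 1] >= second_day:
            return i + 2
    return None


def total_sessions(problems):
    """Sum sessions over the original problem and its serial reversals."""
    results = [sessions_to_reversal_criterion(p) for p in problems]
    if None in results:
        raise ValueError("criterion not met on every problem")
    return sum(results)


print(sessions_to_reversal_criterion([15, 20, 28, 22, 29, 25]))  # 6
```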

Results

Visual discrimination learning and relearning

The two groups of monkeys did not differ in their rate of acquisition of the 60 discrimination problems (mean sessions to criterion: controls, 8.3; unilateral PFo, 13.8; Mann-Whitney U test, 21.0; p > 0.05) or in the reacquisition of these problems after the second surgery or rest (mean sessions to reattain criterion: controls, 1.0; bilateral PFo, 1.3; Mann-Whitney U test, 12.0; p > 0.05). In addition, an ANOVA with repeated measures on trials and errors obtained in the first five sessions of initial learning (the only sessions common to all monkeys) revealed no significant differences between groups (trials: F(1,8) = 2.932, p > 0.05; errors: F(1,8) = 2.997, p > 0.05).

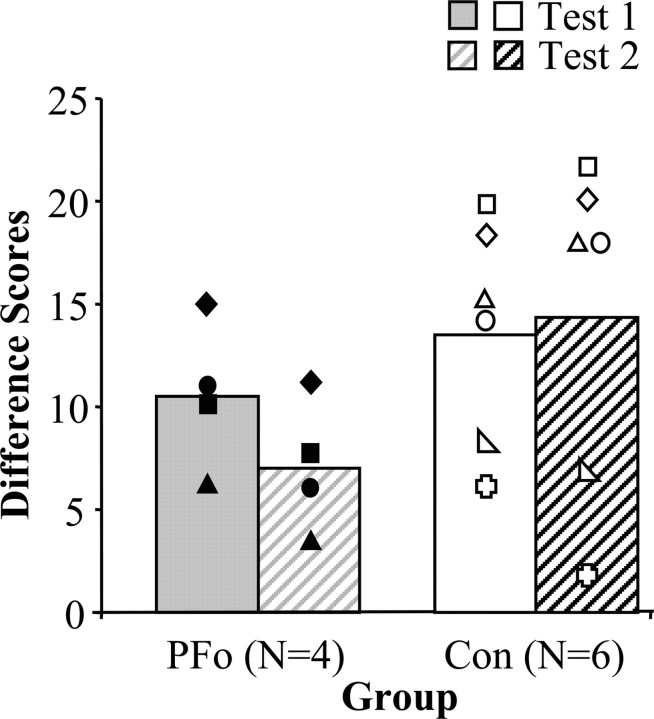

Reinforcer devaluation tests 1 and 2

Scores for reinforcer devaluation tests 1 and 2 are shown in Figure 3. The difference scores were analyzed using a two-by-two ANOVA with a between-subjects factor of group (control, PFo) and a within-subjects factor of stage (test 1, test 2). The analysis revealed a significant interaction between group and stage (F(1,8) = 6.026; p = 0.04). Whereas monkeys with PFo removals chose more objects covering the devalued food on test 2 than they had on test 1, the controls, on average, showed the reverse pattern. There was no significant main effect of group (F(1,8) = 1.878; p > 0.05) or of stage (F(1,8) = 2.282; p > 0.05).
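For a 2 × 2 mixed design such as this one, the group × stage interaction can be cross-checked by hand: with only two within-subject levels, the interaction F(1, N − 2) equals the square of a pooled-variance t statistic comparing the two groups' change scores (test 2 minus test 1). The Python sketch below illustrates this equivalence; the toy scores are not the study's data, and this is not presented as the authors' analysis software.

```python
import math
from statistics import mean, variance


def interaction_f(group_a, group_b):
    """Group x stage interaction F for a 2 x 2 mixed design.

    group_a, group_b: per-monkey (test1, test2) scores, at least two monkeys
    per group. With only two within-subject levels, the interaction
    F(1, N - 2) equals the squared pooled-variance t statistic comparing the
    groups' change scores.
    """
    change_a = [t2 - t1 for t1, t2 in group_a]
    change_b = [t2 - t1 for t1, t2 in group_b]
    n_a, n_b = len(change_a), len(change_b)
    pooled_var = ((n_a - 1) * variance(change_a)
                  + (n_b - 1) * variance(change_b)) / (n_a + n_b - 2)
    t = (mean(change_a) - mean(change_b)) / math.sqrt(
        pooled_var * (1 / n_a + 1 / n_b))
    return t * t, (1, n_a + n_b - 2)


# Toy data (not the study's scores): change scores 1, 2, 3 versus 4, 5, 6.
f, df = interaction_f([(0, 1), (0, 2), (0, 3)], [(0, 4), (0, 5), (0, 6)])
print(round(f, 6), df)  # 13.5 (1, 4)
```

With six controls and four operated monkeys, the degrees of freedom come out as (1, 8), as reported above.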

Figure 3.

Difference scores of monkeys with PFo lesions (filled symbols) relative to unoperated controls (open symbols). The higher the bar, the greater the response to changes in reinforcer value (solid bars, test 1; hatched bars, test 2). The symbols represent scores for individual monkeys: filled diamond, PFo-1; filled square, PFo-2; filled circle, PFo-3; filled triangle, PFo-4; open circle, Con-1; open right-angled triangle, Con-2; open square, Con-3; open equilateral triangle, Con-4; open plus sign, Con-5; open diamond, Con-6.

The amount of food consumed by the two groups during the satiation procedures before test 1 did not differ significantly (mean grams eaten: controls, 110.3; unilateral PFo, 62.8; Mann-Whitney U test, 5; p > 0.05). Unlike the case for test 1, the amount of food eaten by the two groups during the satiation procedures before test 2 did differ (mean grams eaten: controls, 137.8; bilateral PFo, 61.0; Mann-Whitney U test, 1; p = 0.019). When the groups were considered separately, however, the number of grams eaten during these satiation procedures was not correlated with difference scores, for either test 1 or test 2. Furthermore, the amounts eaten on tests 1 and 2 by the PFo group did not differ. Consequently, it seems unlikely that the difference in amount of food consumed in the satiation phase could account for the group difference on test 2.
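With groups this small (six controls, four operated monkeys), the Mann-Whitney p values can be computed exactly by enumerating all 210 ways the joint ranks could be split between the groups. The Python sketch below assumes no tied values. Because a reported U may refer to either group, it uses the smaller of U and n1·n2 − U. With these assumptions, U = 1 gives the p = 0.019 reported above. Whether the authors used exact tables or software is not stated.

```python
from itertools import combinations
from math import comb


def u_statistics(x, y):
    """(U_x, U_y) from joint ranks; assumes no tied values."""
    ranks = {v: i + 1 for i, v in enumerate(sorted(x + y))}
    u_x = sum(ranks[v] for v in x) - len(x) * (len(x) + 1) / 2
    return u_x, len(x) * len(y) - u_x


def exact_two_sided_p(u, n1, n2):
    """Exact two-sided p for an observed U, by enumerating every way the ranks
    1..n1+n2 could be split between the groups (valid only without ties)."""
    u_low = min(u, n1 * n2 - u)
    hits = 0
    for ranks in combinations(range(1, n1 + n2 + 1), n1):
        if sum(ranks) - n1 * (n1 + 1) / 2 <= u_low:
            hits += 1
    return min(1.0, 2 * hits / comb(n1 + n2, n1))


# Six controls versus four operated monkeys: 210 possible rank splits.
print(round(exact_two_sided_p(1, 6, 4), 3))  # 0.019
print(round(exact_two_sided_p(5, 6, 4), 3))  # 0.171
```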

Visual discrimination learning: novel set of 60 pairs

Acquisition of the new set of objects did not differ between groups (mean sessions to criterion: controls, 18.8; PFo, 13.5; Mann-Whitney U test, 11.5; p > 0.05), and a repeated-measures ANOVA for the first five sessions confirmed that there was no group difference (F(1,8) = 0.007; p > 0.05).

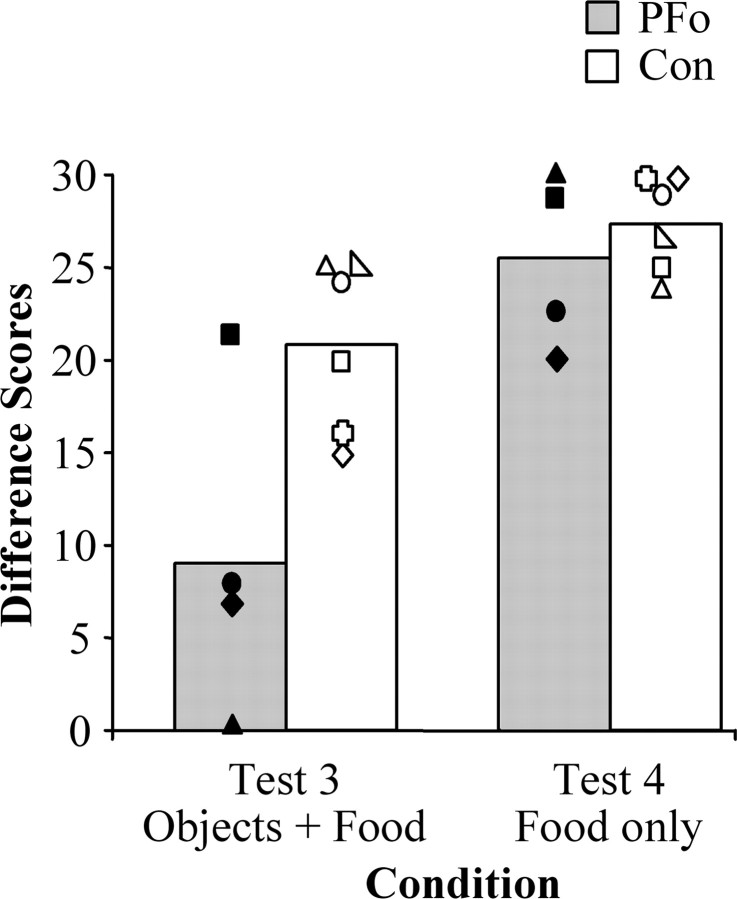

Reinforcer devaluation tests 3 and 4

Figure 4 illustrates difference scores for the two groups across the two conditions. The difference scores from reinforcer devaluation tests 3 and 4 were analyzed with a two-by-two repeated-measures ANOVA with a between-subjects factor of group (control, PFo) and a within-subjects factor of condition (object, food). The ANOVA revealed a significant main effect of group (F(1,8) = 9.152; p = 0.016) and a significant main effect of condition (F(1,8) = 20.540; p = 0.002). The group by condition interaction was marginally significant (F(1,8) = 3.833; p = 0.08). Individual ANOVAs revealed that the groups differed in the object condition (F(1,8) = 8.078; p = 0.022) but not in the food condition (F(1,8) = 0.585; p > 0.05). The marginal significance of the interaction is most likely attributable to a statistical outlier, PFo-2, in the object condition (studentized residual, 3.089); monkey PFo-2 sustained the smallest lesion in the operated group. In a separate analysis conducted without this monkey, the main effect of group remained significant (F(1,7) = 69.451; p < 0.001), as did the main effect of condition (F(1,7) = 23.465; p = 0.002), and the group by condition interaction was now significant (F(1,7) = 5.791; p = 0.047). As before, post hoc tests revealed that the group difference was in the object condition and not in the food condition.

Figure 4.

Mean difference scores of monkeys with bilateral PFo lesions (shaded bars) and unoperated controls (open bars) on two tests of reinforcer devaluation: a test administered with objects overlying food rewards (test 3) and a test with food rewards presented alone (test 4). Monkeys with bilateral PFo removals were impaired only when required to choose objects. The symbols represent scores for individual monkeys: filled diamond, PFo-1; filled square, PFo-2; filled circle, PFo-3; filled triangle, PFo-4; open circle, Con-1; open right-angled triangle, Con-2; open square, Con-3; open equilateral triangle, Con-4; open plus sign, Con-5; open diamond, Con-6.

All monkeys considered together ate an average of 179.0 g during the two satiation procedures for test 3 and 187.0 g for test 4. The amount of food eaten did not differ by group for either test (test 3: Mann-Whitney U test, 6.0; p > 0.05; test 4: Mann-Whitney U test, 9.0; p > 0.05).
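Group comparisons such as the amount eaten during satiation were made with the Mann-Whitney U test, a rank-based test suited to small samples like these (six controls versus four operated monkeys). A minimal Python sketch of the U statistic follows, assuming the common convention of reporting the smaller of the two U values and giving tied scores the average of their ranks. It computes only the statistic; the function name and the grams in the usage example are hypothetical. Significance for samples this small is usually judged against exact critical values, which `scipy.stats.mannwhitneyu` can provide.

```python
def mann_whitney_u(x, y):
    """Return the smaller Mann-Whitney U for samples x and y (midranks for ties)."""
    pooled = sorted(x + y)
    # Map each distinct value to the average of the 1-based positions it occupies.
    midrank = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        midrank[pooled[i]] = (i + 1 + j) / 2
        i = j
    rank_sum_x = sum(midrank[v] for v in x)
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2  # pairs where x beats y (+0.5 per tie)
    u_y = len(x) * len(y) - u_x
    return min(u_x, u_y)


# Hypothetical grams eaten: six controls versus four operated monkeys.
controls = [170.0, 185.0, 160.0, 200.0, 175.0, 190.0]
operated = [180.0, 165.0, 195.0, 172.0]
print(mann_whitney_u(controls, operated))
```

Counting, for every control-operated pair, how often the control score is higher (with ties counting one half) gives the same U; the rank-sum form above is simply the cheaper way to compute it.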

Objects “stolen” during learning and relearning

Object “stealing” behavior has been reported after bilateral damage to the amygdala as well as after unilateral lesions that include the orbital cortex (Izquierdo and Murray, 2004). In the present study, three controls (Con-1, -2, and -4) and two operated monkeys (PFo-1 and PFo-4) took objects from the test tray to explore them manually and orally, but the frequency of this behavior did not differ by group (mean number of objects stolen during learning: controls, 0.83; unilateral PFo, 3.0; Mann-Whitney U test, 10.0; p > 0.05; during relearning: controls, 0; bilateral PFo, 1.5; Mann-Whitney U test, 9.0; p > 0.05; during learning of the novel set: controls, 0; bilateral PFo, 3.0; Mann-Whitney U test, 9.0; p > 0.05).

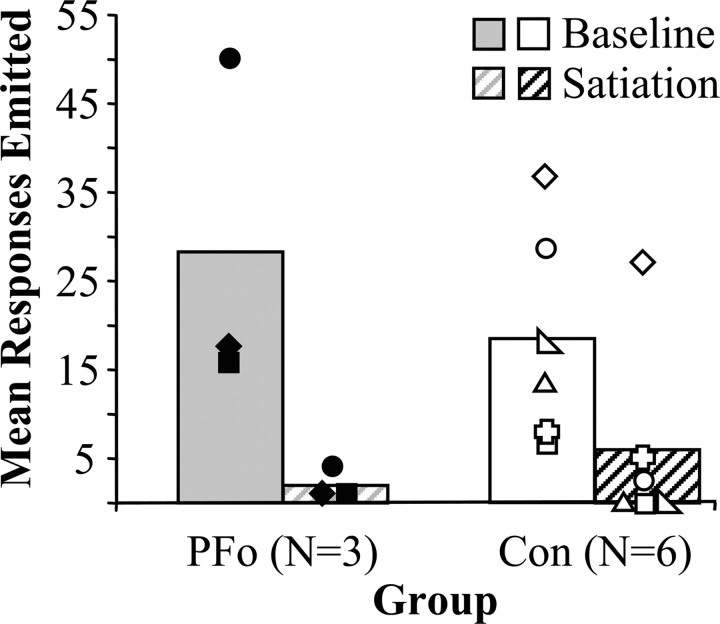

Progressive ratio

Figure 5 shows responses on the PR task at baseline and after satiation in the two groups. The groups did not differ in their willingness to work for food, as measured by the maximum number of responses emitted during 20 sessions of the PR schedule (controls, 18.45; PFo, 28.3; Mann-Whitney U test, 12.0; p > 0.05). A two-by-two repeated-measures ANOVA on these scores revealed neither a main effect of group (F(1,7) = 0.154; p > 0.05) nor a group by satiation interaction (F(1,7) = 2.775; p > 0.05). As expected, however, satiation significantly reduced the number of responses emitted in the PR task (F(1,7) = 21.787; p = 0.002).

Figure 5.

Group mean scores on the PR task. Solid bars show the mean number of responses emitted during 20 baseline sessions; hatched bars show the mean number emitted during a single session after satiation. All monkeys decreased responding after the food reinforcer was devalued by selective satiation. The symbols represent scores for individual monkeys: filled diamond, PFo-1; filled square, PFo-2; filled circle, PFo-3; open circle, Con-1; open right-angled triangle, Con-2; open square, Con-3; open equilateral triangle, Con-4; open plus sign, Con-5; open diamond, Con-6.

Food preference test and retest

The last 5 d of food preference testing were analyzed using two-by-two ANOVAs with group (control, PFo) as the between-subjects factor and stage (initial test, retest) as the within-subjects factor. There was a significant main effect of group for Craisins (F(1,8) = 7.754; p = 0.024; mean number chosen during initial test: controls, 15.2; unilateral PFo, 26.8; mean number chosen during retest: controls, 15.2; bilateral PFo, 22.5) but no significant group by stage interaction for this food (F(1,8) = 0.832; p > 0.05). There were no significant group differences or group by stage interactions for any other food. Of the 10 monkeys, only one (PFo-1) had been trained using Craisins, and it had a strong preference for them.

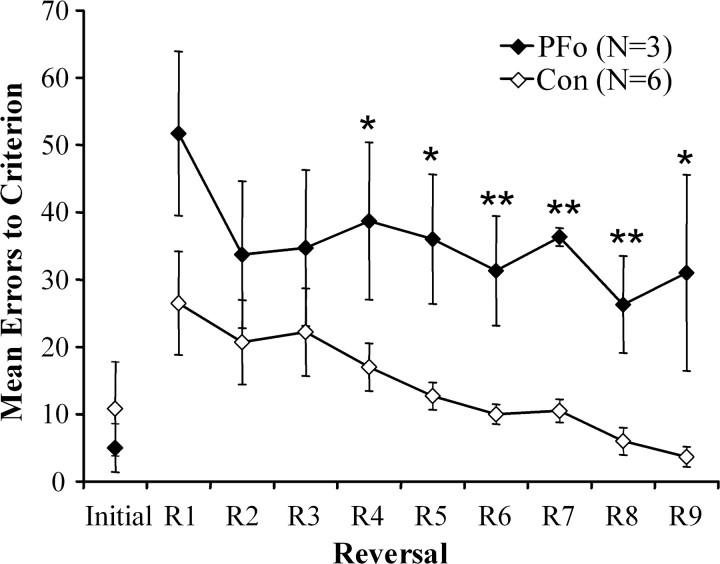

Object reversal learning

The numbers of errors scored in acquisition of the initial discrimination and in the subsequent reversals are illustrated in Figure 6. Although the groups did not differ in initial learning (mean trials to criterion: controls, 35.0; PFo, 30.0; Mann-Whitney U test, 10.5; p > 0.05; mean errors to criterion: controls, 10.8; PFo, 5.0; Mann-Whitney U test, 8.5; p > 0.05), an ANOVA with repeated measures on errors to criterion across reversals 1 through 9 revealed a significant effect of group (F(1,7) = 9.591; p = 0.017), a significant effect of reversal (F(8,56) = 4.295; p < 0.001), and a nonsignificant group by reversal interaction (F(8,56) = 0.629; p > 0.05). Individual ANOVAs revealed that the groups differed significantly on reversals 4 through 9 (reversal 4: F(1,7) = 5.483, p = 0.05; reversal 5: F(1,7) = 11.187, p = 0.012; reversal 6: F(1,7) = 13.653, p = 0.008; reversal 7: F(1,7) = 93.275, p < 0.001; reversal 8: F(1,7) = 13.316, p = 0.008; reversal 9: F(1,7) = 7.810, p = 0.027).

Figure 6.

Group mean errors to criterion for initial learning (Initial) and nine serial reversals in object reversal learning. Filled diamonds, monkeys with bilateral PFo lesions; open diamonds, unoperated controls. Error bars indicate ± SEM.

Reversals were also analyzed according to stage of reversal learning (Jones and Mishkin, 1972). Stages are categories of error type intended to identify when, during reversal learning, errors occur. For each session of each reversal, all errors scored within that session were assigned to a stage according to the following criteria: stage 1, 21 or more errors; stage 2, 10-20 errors; stage 3, 3-9 errors. Thus, stage 1 errors are accrued while the monkey is unlearning or suppressing the previously learned response, stage 2 errors reflect near-chance performance, and stage 3 errors are accrued during learning of the new association. Individual ANOVAs on the errors scored in the three stages revealed a significant group difference only in stage 2 (stage 1: F(1,7) = 1.849, p > 0.05; stage 2: F(1,7) = 46.342, p < 0.001; stage 3: F(1,7) = 1.704, p > 0.05).
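The stage assignment is a simple per-session rule. The Python sketch below applies the thresholds given above to a hypothetical list of per-session error counts for one reversal and sums the errors that fall in each stage. Treating sessions with fewer than three errors as unassigned is an assumption, since the criteria define no stage for them; the function name is likewise hypothetical.

```python
def errors_by_stage(session_errors):
    """Sum errors into Jones and Mishkin (1972) stages, classifying whole sessions.

    Stage 1: sessions with 21 or more errors; stage 2: 10-20 errors;
    stage 3: 3-9 errors. Sessions with fewer than 3 errors are left
    unassigned (an assumption; the criteria give no stage for them).
    """
    totals = {1: 0, 2: 0, 3: 0}
    for errors in session_errors:
        if errors >= 21:
            totals[1] += errors
        elif errors >= 10:
            totals[2] += errors
        elif errors >= 3:
            totals[3] += errors
    return totals


# Hypothetical error counts for successive sessions of a single reversal.
print(errors_by_stage([25, 22, 14, 8, 2]))  # prints {1: 47, 2: 14, 3: 8}
```

Note that every error in a session goes to the same stage; the rule classifies sessions, not individual trials, which is why a monkey that moves quickly through the near-chance phase accrues few stage 2 errors.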

Relationship of performance on reinforcer devaluation and object reversal

To determine whether performance on the two main tasks might be related, we examined the extent to which scores on reinforcer devaluation and object reversal learning were correlated. Because only three monkeys in group PFo were tested on both tasks, correlations were not computed for this group. For the controls, difference scores on reinforcer devaluation test 3 were marginally negatively correlated with total errors accrued on object reversal learning (r = -0.795; p = 0.059 with Bonferroni correction). Thus, good performance on reinforcer devaluation (reflected by high difference scores) tended to be associated with good performance on object reversal learning (reflected by low error scores), and poor performance on one task with poor performance on the other.
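The correlation step can be sketched in Python with the standard library. The block below computes Pearson's r, converts it to the t statistic used to test r against zero (df = n - 2), and applies a Bonferroni adjustment by multiplying a p value by the number of comparisons. The function names, the number of comparisons, and the input scores are hypothetical; converting t to a p value would additionally require the t distribution (for example `scipy.stats.t.sf`).

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic with n - 2 df for testing whether a Pearson r differs from zero."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


def bonferroni(p, m):
    """Bonferroni-adjusted p value for m comparisons, capped at 1."""
    return min(1.0, p * m)


# Hypothetical scores: devaluation difference scores vs. total reversal errors.
diff_scores = [0.9, 0.7, 0.8, 0.4, 0.6, 0.3]
reversal_errors = [60, 95, 70, 150, 110, 140]
r = pearson_r(diff_scores, reversal_errors)
print(round(r, 3), round(t_from_r(r, len(diff_scores)), 2))
print(round(t_from_r(-0.795, 6), 2))  # t for the reported r with six controls
```

With the reported r = -0.795 and the six controls, the last line prints -2.62, a t value on 4 degrees of freedom.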

Discussion

Monkeys with PFo lesions were impaired in making adaptive responses to objects after changes in the value of the rewards underlying those objects (reinforcer devaluation) and after changes in reward contingency (object reversal). Given that the operated group was impaired on these tasks ∼19 (reinforcer devaluation test 3) and ∼26 (object reversal) months after surgery, the deficit appears to be stable and long lasting. There were no group differences in either relearning of the 60 object pairs or acquisition of the second set of 60 pairs. In addition, the operated monkeys exhibited food choices (food preference test and retest, reinforcer devaluation test 4) and satiety mechanisms (reinforcer devaluation test 4 and PR task) that were nearly indistinguishable from those of controls. Accordingly, neither changes in visual perceptual abilities nor changes in the appreciation of foodstuffs or in satiety mechanisms can account for the present pattern of results. Furthermore, as evidenced by the PR task, and consistent with a report by Pears et al. (2003), the monkeys with PFo lesions were just as willing as intact monkeys to work for food rewards. Thus, global changes in the level of motivation seem unlikely to account for the results. Instead, the results from the two experiments taken together suggest that monkeys with PFo lesions cannot choose adaptively when choices are guided by the visual properties of objects, especially in conditions in which two or more of the items available for choice have been reinforced. Several aspects of the results deserve discussion, and these are taken up in the following sections.

Food choices

The finding that monkeys with PFo lesions in the present study had stable food choices appears discrepant with a report by Baylis and Gaffan (1991), who found that monkeys with lesions similar to those used here made more “strange” food choices (i.e., choices inconsistent with their own overall preferences), relative to controls. There are two main differences between their food preference test and ours. First, they used four foods (apple, olive, lemon, and meat), only two of which (apple and lemon) were palatable to intact monkeys. In contrast, in the present study, all the foods were palatable. Second, and perhaps more important, they collected data for one session, whereas we collected data for 15 sessions and analyzed food choices only after the foods had become familiar. Thus, the measure of Baylis and Gaffan (1991) emphasized the ability to learn about the relative palatability of new foods based on the appearance of those foods, whereas our measure reflected visual choices of familiar foods. In line with the interpretation of the results from our main tasks, outlined below, the strange choices in their study may reflect a deficit in associating the visual properties of novel foods with the food value, a step circumvented in the present study by use of familiar foods. For reasons already provided, difficulty with assigning value to individual food items cannot explain the deficits in our monkeys with PFo lesions.

Spared versus impaired abilities

In the present study, the same monkeys that were impaired in their ability to shift responses in the face of changing reward value or contingency acquired visual discrimination problems (e.g., novel set of 60 pairs; initial learning of single pair for object reversal) at the same rate as the controls. Consistent with these findings, learning to approach the S+ in standard discrimination learning paradigms is affected only slightly by large removals of the prefrontal cortex (cf. Voytko, 1985; Parker and Gaffan, 1998) and is unaffected by selective amygdala damage (Zola-Morgan et al., 1989; Malkova et al., 1997). Presumably, this type of visual learning, in which monkeys need only distinguish rewarded from unrewarded objects, can be achieved either via storage directly in the neocortex (Platt and Glimcher, 1999; Jagadeesh et al., 2001; Roesch and Olson, 2004) or via convergence of cortical efferents in the striatum (Parker and Gaffan, 1998).

Neural networks underlying response selection

As indicated in the Introduction, performance on the reinforcer devaluation task is supported by a set of structures that includes the basolateral amygdala and PFo, and perhaps the mediodorsal nucleus of the thalamus as well (Corbit et al., 2003; A. Izquierdo and E. A. Murray, unpublished data). Surgical disconnection of the amygdala and PFo in monkeys produces impairment on reinforcer devaluation (Baxter et al., 2000). The present study is the first to show that monkeys with bilateral removals of the PFo are impaired on this task. Pertinent to the present discussion, one class of neurons in the PFo, active during odor presentation while rats are performing an odor discrimination task, reflects the identity of the to-be-delivered reward. Critically, this cue-period activity in the PFo fails to develop in rats that have sustained amygdala lesions (Schoenbaum et al., 2003b). Thus, the evidence from both neurophysiological studies in rats and neuropsychological studies in monkeys points to the critical interaction of the amygdala and PFo in signaling the value of predicted rewards on the basis of perceiving a stimulus such as an odor or an object that has been previously paired with that reward (for review, see Holland and Gallagher, 2004). Consistent with these findings, a functional MRI study has reported reduced activation of both the amygdala and PFo in humans in the presence of cues predicting a devalued reinforcer relative to cues predicting a nondevalued reinforcer (Gottfried et al., 2003).

As expected, based on previous work in monkeys (Iversen and Mishkin, 1970; Jones and Mishkin, 1972; Dias et al., 1996; Meunier et al., 1997), rats (Chudasama and Robbins, 2003; McAlonan and Brown, 2003; Schoenbaum et al., 2003a), and humans (Fellows and Farah, 2003), our monkeys with bilateral PFo lesions were significantly impaired on object reversal learning. Interestingly, it is unlikely that an amygdala-PFo interaction underlies object reversal learning. Preliminary evidence indicates that monkeys with bilateral excitotoxic lesions of the amygdala are unimpaired on object reversal learning (Izquierdo et al., 2003). Instead, another region in the temporal lobe, the rhinal (i.e., perirhinal and entorhinal) cortex, is necessary for efficient object reversal learning: damage to the rhinal cortex yields an impairment on object reversal learning just as severe as that observed here after PFo lesions (Murray et al., 1998). Thus, it is possible that interaction of the rhinal cortex with the PFo is essential for this type of learning.

It is well established that the presence or absence of food carries informational value independent of its hedonic value (Medin, 1977; Gaffan, 1979). Although a change from the presence to the absence of food reward, as occurs in object reversal learning, could be construed as an extreme devaluation of the reinforcer, we contend that it is a manipulation in which the information about reward contingencies is conveyed by the visual processing system (by the presence or absence of food reward) rather than the affective processing system. If so, the pattern of results might indicate that when an affective signal is guiding responses, as in the case of reinforcer devaluation (Balleine, 2001), it is supplied via the amygdala, whereas when a visual informational signal (e.g., the presence or absence of food reward) is guiding responses, the information is conveyed via the rhinal cortex. On this view, both object reversal learning and reinforcer devaluation would make use of the same mechanism within the PFo, the predictive signaling of reward outcome, but they would elicit the signal by different routes. The marginally significant correlation of scores on the two tasks in intact monkeys provides some support for the first part of this proposition, but this idea deserves additional study. Whether the second part of this proposition is true remains to be investigated. The prediction can be tested by disconnecting the rhinal cortex from the PFo in one group of monkeys and the amygdala from the PFo in a separate group, and assessing the effects of both operations on object reversal learning and reinforcer devaluation. The former manipulation should disrupt object reversal learning but not reinforcer devaluation, whereas the latter should produce the converse pattern of results.

The prefrontal cortex contributes in a necessary way to many tasks in addition to those studied here. For example, conditional visual-motor learning (Bussey et al., 2001, 2002), visual-visual learning (Eacott and Gaffan, 1992; Gutnikov et al., 1997), and reward-visual conditional learning (Parker and Gaffan, 1998) all depend on the prefrontal cortex. All of these tasks require a context-dependent choice among objects or actions associated with rewards. Thus, to choose correctly, monkeys likely predict the value that they can obtain by each potential choice, based on current context. Neurons in the prefrontal cortex show such associative or prospective coding, both in a visual-visual conditional task (Rainer et al., 1999) and in discrimination learning and delayed response tasks in which different visual stimuli predict different sizes or types of reward (Watanabe, 1996; Tremblay and Schultz, 1999; Schoenbaum et al., 1999, 2003b; Wallis and Miller, 2003). Indeed, neurons in the prefrontal cortex show conjunctive coding for all of the various pieces of information required for conditional responding: the sensory stimuli, the rules guiding behavior, the actions, and the rewards (for review, see Miller, 2000).

Footnotes

This work was supported by the Intramural Research Program of the National Institute of Mental Health. We thank R. Saunders and the staff of the Nuclear Magnetic Resonance Facility, National Institute of Neurological Disorders and Stroke, for assistance obtaining magnetic resonance scans; P.-Y. Chen for histological support; and Y. Chudasama and K. Wright for help testing monkeys.

Correspondence should be addressed to Dr. A. Izquierdo, Laboratory of Neuropsychology, National Institute of Mental Health, 49 Convent Drive, Building 49, Room 1B80, Bethesda, MD 20892-4415. E-mail: izquiera@mail.nih.gov.

Copyright © 2004 Society for Neuroscience 0270-6474/04/249540-09$15.00/0

References

- Balleine BW (2001) Incentive processes in instrumental conditioning. In: Handbook of contemporary learning theories (Mowrer RR, Klein SB, eds), pp 307-366. Mahwah, NJ: Erlbaum.

- Baxter MG, Parker A, Lindner CCC, Izquierdo AD, Murray EA (2000) Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. J Neurosci 20: 4311-4319.

- Baylis LL, Gaffan D (1991) Amygdalectomy and ventromedial prefrontal ablation produce similar deficits in food choice and in simple object discrimination learning for an unseen reward. Exp Brain Res 86: 617-622.

- Bussey TJ, Wise SP, Murray EA (2001) The role of ventral and orbital prefrontal cortex in conditional visuomotor learning and strategy use in rhesus monkeys (Macaca mulatta). Behav Neurosci 115: 971-982.

- Bussey TJ, Wise SP, Murray EA (2002) Interaction of ventral and orbital prefrontal cortex with inferotemporal cortex in conditional visuomotor learning. Behav Neurosci 116: 703-715.

- Chudasama Y, Robbins TW (2003) Dissociable contributions of the orbitofrontal and infralimbic cortex to pavlovian autoshaping and discrimination reversal learning: further evidence for the functional heterogeneity of the rodent frontal cortex. J Neurosci 23: 8771-8780.

- Corbit LH, Muir JL, Balleine BW (2003) Lesions of the mediodorsal thalamus and anterior thalamic nuclei produce dissociable effects on instrumental conditioning in rats. Eur J Neurosci 18: 1286-1294.

- Dias R, Robbins TW, Roberts AC (1996) Dissociation in prefrontal cortex of affective and attentional shifts. Nature 380: 69-72.

- Eacott MJ, Gaffan D (1992) Inferotemporal-frontal disconnection: the uncinate fascicle and visual associative learning in monkeys. Eur J Neurosci 4: 1320-1332.

- Fellows LK, Farah MJ (2003) Ventromedial frontal cortex mediates affective shifting in humans: evidence from a reversal learning paradigm. Brain 126: 1830-1837.

- Gaffan D (1979) Acquisition and forgetting in monkeys' memory of informational object-reward associations. Learn Motiv 10: 419-444.

- Gottfried JA, O'Doherty J, Dolan RJ (2003) Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science 301: 1104-1107.

- Gutnikov SA, Ma YY, Buckley MJ, Gaffan D (1997) Monkeys can associate visual stimuli with reward delayed by 1 s even after perirhinal cortex ablation, uncinate fascicle section or amygdalectomy. Behav Brain Res 87: 85-96.

- Hikosaka K, Watanabe M (2000) Delay activity of orbital and lateral prefrontal neurons of the monkey varying with different rewards. Cereb Cortex 10: 263-271.

- Holland PC, Gallagher M (2004) Amygdala-frontal interactions and reward expectancy. Curr Opin Neurobiol 14: 148-155.

- Iversen SD, Mishkin M (1970) Perseverative interference in monkeys following selective lesions of the inferior prefrontal convexity. Exp Brain Res 11: 376-386.

- Izquierdo A, Murray EA (2004) Combined unilateral lesions of the amygdala and orbital prefrontal cortex impair affective processing in rhesus monkeys. J Neurophysiol 91: 2023-2039.

- Izquierdo A, Suda RK, Murray EA (2003) Effects of selective amygdala and orbital prefrontal cortex lesions on object reversal learning in monkeys. Soc Neurosci Abstr 29: 90.2.

- Jagadeesh B, Chelazzi L, Mishkin M, Desimone R (2001) Learning increases stimulus salience in anterior inferior temporal cortex of the macaque. J Neurophysiol 86: 290-303.

- Jones B, Mishkin M (1972) Limbic lesions and the problem of stimulus-reinforcement associations. Exp Neurol 36: 362-377.

- Malkova L, Gaffan D, Murray EA (1997) Excitotoxic lesions of the amygdala fail to produce impairments in visual learning for auditory secondary reinforcement but interfere with reinforcer devaluation effects in rhesus monkeys. J Neurosci 17: 6011-6020.

- McAlonan K, Brown VJ (2003) Orbital prefrontal cortex mediates reversal learning and not attentional set shifting in the rat. Behav Brain Res 146: 97-103.

- Medin DL (1977) Information processing and discrimination learning set. In: Behavioral primatology (Schrier AM, ed), pp 33-69. Mahwah, NJ: Erlbaum.

- Meunier M, Bachevalier J, Mishkin M (1997) Effects of orbital frontal and anterior cingulate lesions on object and spatial memory in rhesus monkeys. Neuropsychologia 35: 999-1015.

- Miller EK (2000) The prefrontal cortex and cognitive control. Nat Rev Neurosci 1: 59-65.

- Murray EA, Baxter MG, Gaffan D (1998) Monkeys with rhinal cortex damage or neurotoxic hippocampal lesions are impaired on spatial scene learning and object reversals. Behav Neurosci 112: 1291-1303.

- Parker A, Gaffan D (1998) Memory after frontal/temporal disconnection in monkeys: conditional and non-conditional tasks, unilateral and bilateral frontal lesions. Neuropsychologia 36: 259-271.

- Pears A, Parkinson JA, Hopewell L, Everitt BJ, Roberts AC (2003) Lesions of the orbitofrontal but not medial prefrontal cortex disrupt conditioned reinforcement in primates. J Neurosci 23: 11189-11201.

- Platt ML, Glimcher PW (1999) Neural correlates of decision variables in parietal cortex. Nature 400: 233-238.

- Preuss TM, Goldman-Rakic PS (1991) Myelo- and cytoarchitecture of the granular frontal cortex and surrounding regions in the strepsirhine primate Galago and the anthropoid primate Macaca. J Comp Neurol 310: 429-474.

- Rainer G, Rao SC, Miller EK (1999) Prospective coding for objects in primate prefrontal cortex. J Neurosci 19: 5493-5505.

- Roesch MR, Olson CR (2004) Neuronal activity related to reward value and motivation in primate frontal cortex. Science 304: 307-310.

- Schoenbaum G, Chiba AA, Gallagher M (1998) Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci 1: 155-159.

- Schoenbaum G, Chiba AA, Gallagher M (1999) Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci 19: 1876-1884.

- Schoenbaum G, Setlow B, Nugent SL, Saddoris MP, Gallagher M (2003a) Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learn Mem 10: 129-140.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M (2003b) Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron 39: 855-867.

- Tremblay L, Schultz W (1999) Relative reward preference in primate orbitofrontal cortex. Nature 398: 704-708.

- Voytko ML (1985) Cooling orbital frontal cortex disrupts matching-to-sample and visual discrimination learning in monkeys. Physiol Psychol 13: 219-229.

- Walker AE (1940) A cytoarchitectural study of the prefrontal area of the macaque monkey. J Comp Neurol 73: 59-86.

- Wallis JD, Miller EK (2003) Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci 18: 2069-2081.

- Watanabe M (1996) Reward expectancy in primate prefrontal neurons. Nature 382: 629-632.

- Weed MR, Taffe MA, Polis I, Roberts AC, Robbins TW, Koob GF, Bloom FE, Gold LH (1999) Performance norms for a rhesus monkey neuropsychological testing battery: acquisition and long-term performance. Cognit Brain Res 8: 185-201.

- Zola-Morgan S, Squire LR, Amaral DG (1989) Lesions of the amygdala that spare adjacent cortical regions do not impair memory or exacerbate the impairment following lesions of the hippocampal formation. J Neurosci 9: 1922-1936.