Abstract

The human amygdala plays a crucial role in processing affective information conveyed by sensory stimuli. Facial expressions of fear and anger, which both signal potential threat to an observer, result in significant increases in amygdala activity, even when the faces are unattended or presented briefly and masked. It has been suggested that afferent signals from the retina travel to the amygdala via separate cortical and subcortical pathways, with the subcortical pathway underlying unconscious processing. Here we exploited the phenomenon of binocular rivalry to induce complete suppression of affective face stimuli presented to one eye. Twelve participants viewed brief, rivalrous visual displays in which a fearful, happy, or neutral face was presented to one eye while a house was presented simultaneously to the other. We used functional magnetic resonance imaging to study activation in the amygdala and extrastriate visual areas for consciously perceived versus suppressed face and house stimuli. Activation within the fusiform and parahippocampal gyri increased significantly for perceived versus suppressed faces and houses, respectively. Amygdala activation increased bilaterally in response to fearful versus neutral faces, regardless of whether the face was perceived consciously or suppressed because of binocular rivalry. Amygdala activity also increased significantly for happy versus neutral faces, but only when the face was suppressed. This activation pattern suggests that the amygdala has a limited capacity to differentiate between specific facial expressions when it must rely on information received via a subcortical route. We suggest that this limited capacity reflects a tradeoff between specificity and speed of processing.

Keywords: amygdala, binocular rivalry, emotion, faces, fMRI, unconscious perception

Introduction

The phenomenon of binocular rivalry occurs when different visual images are presented to corresponding regions of the two eyes (Wheatstone, 1838; von Helmholtz, 1867). Under such conditions, one of the two images dominates in perception at any given moment, while the other image is suppressed from awareness. With prolonged viewing, each image undergoes a period of dominance, followed by a period of suppression. Recent functional neuroimaging studies have shown reciprocal activation in primary visual cortex with oscillations of eye dominance, supporting the hypothesis that binocular rivalry arises from inhibition between monocular channels in the primary visual cortex (Polonsky et al., 2000; Tong and Engel, 2001). Category-specific extrastriate visual areas, such as the fusiform face area (Kanwisher et al., 1997; Haxby et al., 2000) and the parahippocampal place area (Epstein et al., 1999), are active under conditions of binocular rivalry only when the appropriate stimulus (face or house, respectively) is currently dominant in perception and not when it is suppressed (Tong et al., 1998). Here we used functional magnetic resonance imaging (fMRI) to investigate patterns of brain activity associated with rivalrous face and house stimuli in which the faces conveyed a fearful, happy, or neutral expression.

The amygdala plays a key role in processing threat-related stimuli, particularly as conveyed by facial expressions of fear and anger (Adolphs et al., 1994; Breiter et al., 1996; Calder et al., 1996; Morris et al., 1996). It has been suggested that the amygdala receives visual inputs via two main pathways: a subcortical pathway that conveys crude but rapid signals before awareness and facilitates early detection of threat, and a geniculostriate pathway that conveys detailed but slower information, allowing fine-grained analysis of the visual input (LeDoux, 2000). Several studies have shown increased amygdala activity in response to threatening facial expressions under conditions of reduced attention (Vuilleumier et al., 2001; Anderson et al., 2003) or when faces are presented briefly and masked from awareness (Morris et al., 1998; Whalen et al., 1998); these conditions are likely to favor inputs from the rapid, subcortical pathway (Vuilleumier et al., 2003).

Here we examined amygdala responses to facial expressions under conditions of binocular suppression. Although the amygdala has been viewed as an early “threat detector” (LeDoux, 2000), inputs to this structure via the fast subcortical pathway may permit only limited differentiation of affective valence (Anderson et al., 2003). We therefore compared amygdala responses to fearful and happy faces, with pictures of houses as the rivalrous images. We predicted that the fusiform gyrus and parahippocampal gyrus would respond selectively to their preferred stimulus class when the relevant image was dominant but not when the same stimulus was suppressed (as found by Tong et al., 1998). In contrast, we expected a significant increase in amygdala activity for both fearful and happy faces relative to neutral faces under conditions of binocular suppression, consistent with the view that subcortical inputs to the amygdala do not differentiate affective valence.

Materials and Methods

Participants

Twelve individuals (six males, six females; mean age, 28 years; SD, 3.72) gave written, informed consent to participate, according to the guidelines of the Human Research Ethics Committee at the University of Melbourne. All participants were right-handed, as assessed by the Edinburgh Handedness Inventory; all were classified as neurologically normal by medical review, and all had normal or corrected-to-normal visual acuity.

Materials

Visual stimuli were presented as dichoptic displays on a uniform gray background. Face photographs of six individuals (three males, three females), each displaying a happy, fearful, or neutral expression, were selected from a standardized set of stimuli (Ekman and Friesen, 1975). Photographs of six houses were selected to match as closely as possible the overall area of the faces, with as few extraneous details as possible. All stimuli were cropped using Photoshop 5.5 (Adobe Systems, San Jose, CA) to fit within a rectangle subtending 2.2° × 2.9°. Mean luminance and contrast were matched for all stimuli across both categories. Color filters were then applied to make sets of “red only” and “green only” face and house stimuli, and the opacity of the resulting images was adjusted to 50% to make them semi-transparent. Red houses were then overlaid on green faces, and vice versa, to create a fully balanced set of 216 composite face–house stimuli. A black and white circle subtending 0.4° × 0.4° was positioned in the center of each display to serve as a fixation point. To induce binocular rivalry, participants wore noncorrective spectacles with one lens replaced by a “red” filter and the other replaced by a “green” filter [Kodak Wratten Filters; 25 (red) and 58 (green); Edmund Industrial Optics, Barrington, NJ], thus ensuring that the two images in each face–house composite were presented to separate eyes.
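The count of 216 composites is consistent with a full crossing of the 18 face images (6 identities × 3 expressions) with the 6 houses and the two color assignments (red house on green face, and vice versa). The sketch below enumerates that crossing. The identifiers are hypothetical, and the crossing itself is our assumption about how the "fully balanced" set was built.

```python
from itertools import product

# Hypothetical labels: the text gives only the counts (6 identities,
# 3 expressions, 6 houses, and two red/green color assignments).
FACE_IDS = [f"face{i}" for i in range(1, 7)]
EXPRESSIONS = ["happy", "fearful", "neutral"]
HOUSE_IDS = [f"house{i}" for i in range(1, 7)]
# (face color, house color): red house over green face, and vice versa
COLOR_ASSIGNMENTS = [("green", "red"), ("red", "green")]


def build_composites():
    """Enumerate every face x expression x house x color-assignment combination."""
    composites = []
    for face, expr, house, (face_col, house_col) in product(
        FACE_IDS, EXPRESSIONS, HOUSE_IDS, COLOR_ASSIGNMENTS
    ):
        composites.append({
            "face": face,
            "expression": expr,
            "house": house,
            "face_color": face_col,
            "house_color": house_col,
        })
    return composites


print(len(build_composites()))  # 6 * 3 * 6 * 2 = 216
```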

During initial behavioral testing outside the scanner, visual stimuli were presented centrally on a 15 inch CRT monitor (ViewSonic Graphics Series G771, 800 × 600 pixel screen resolution, 60 Hz refresh rate; ViewSonic, Walnut, CA) controlled by an IBM compatible computer (Compaq Evo N600c, Pentium III CPU, 1066 MHz, 256 MB RAM, 16 MB ATI Mobility Radeon Video card; Compaq, Palo Alto, CA). During fMRI acquisition, the same images were back-projected via a Sony (Tokyo, Japan) SVGA LCD data projector (VPL-SC1) onto an opaque screen positioned at the foot of the patient gurney. Participants viewed the screen through a mirror mounted on the quadrature head coil. Customized software written in Visual Basic 6.0, and using DirectX 9.0b technology (Microsoft, Redmond, WA), was used to present the stimuli. Optic-fiber button boxes were used to record participant responses.

Experimental paradigm

A previous fMRI study that used (neutral) faces and houses as rivalrous stimuli (Tong et al., 1998) correlated brain activity with participants' subjective reports of the dominant image. Although this approach is easy to implement during image acquisition, it does not permit objective verification of which of the two images is dominant at any given moment. This limitation is a major concern when testing whether the amygdala maintains or increases its activity for emotional versus neutral faces during periods of binocular suppression, because even occasional glimpses of an emotional face may be sufficient to induce significant amygdala activity. We overcame this problem by manipulating the contrast and hue of the rivalrous images so that just one image class was reliably perceived during presentations of a short, fixed duration, as described in detail below.

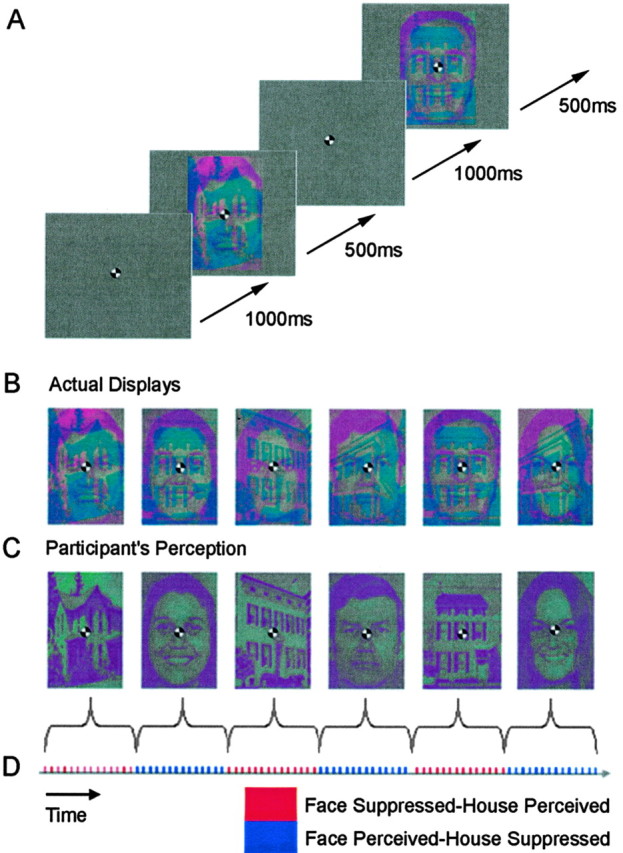

Each trial began with the black and white fixation stimulus appearing alone for 1000 msec in the center of the display, followed by a face–house composite that appeared for 500 msec. The fixation and face–house composites alternated in this way throughout each run (Fig. 1 A). Experimental epochs within each run contained 14 face–house composites. Within each epoch, the valence of the facial expression remained constant (fearful, happy, or neutral), but the identity could change. Each run consisted of 20 alternating epochs of fearful and neutral face–house composites, or 20 alternating epochs of happy and neutral face–house composites. Five rest epochs (with displays consisting of the fixation stimulus alone) were presented within each run, counterbalanced to ensure equal presentation before and after each experimental epoch. Thus, there were 25 epochs within each of the two runs (fear–neutral and happy–neutral), with each epoch lasting 21 sec, for a total of 525 sec per run.
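The run timing follows directly from the numbers above. The sketch below checks the arithmetic and, using the 3 sec repetition time given under Data acquisition, shows that each 21 sec epoch spans a whole number of volumes. It assumes rest epochs last 21 sec like the experimental epochs and ignores any dummy scans.

```python
# Timing parameters taken from the text; TR = 3 s comes from Data acquisition.
FIXATION_MS = 1000
COMPOSITE_MS = 500
TRIALS_PER_EPOCH = 14
EXPERIMENTAL_EPOCHS = 20
REST_EPOCHS = 5
TR_S = 3.0


def run_timing():
    """Derive trial, epoch, and run durations (s) and volume counts per run."""
    trial_s = (FIXATION_MS + COMPOSITE_MS) / 1000   # one fixation + composite cycle
    epoch_s = trial_s * TRIALS_PER_EPOCH            # 14 cycles per epoch
    n_epochs = EXPERIMENTAL_EPOCHS + REST_EPOCHS    # 25 epochs per run
    run_s = epoch_s * n_epochs
    return {
        "trial_s": trial_s,
        "epoch_s": epoch_s,
        "run_s": run_s,
        "volumes_per_epoch": epoch_s / TR_S,
        "volumes_per_run": run_s / TR_S,  # assumes no extra dummy scans
    }


print(run_timing())
```

Because the epoch length (21 sec) is an exact multiple of the TR, each epoch covers seven volumes, so epoch boundaries never fall partway through a volume.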

Figure 1.

Examples of the visual stimuli used to induce binocular rivalry (not to scale). A, Face–house composites in the fearful–neutral face condition. Participants performed a one-back task throughout each run, indicating any repetition of a face or house image via an optic-fiber response box. B, Face–house composites incorporating face images with neutral and happy expressions. C, Illustration of an individual participant's perception of the face–house composites shown in B. Either the face or house alone was the dominant percept within each epoch (see Materials and Methods). D, Schematic showing the design of the experiment. Different facial expressions (happy–neutral or fearful–neutral) were presented in separate runs according to an ABBA sequence. There were 14 stimuli within each epoch, and the dominant image type (face or house) alternated between successive epochs. Condition order was fully counterbalanced across participants to control for any order effects.

Half of the participants wore spectacles with the red filter over the left eye and the green filter over the right eye, and the other half wore spectacles with the reverse configuration. To reduce the likelihood of mixed perceptions of the two rivaling stimuli, all testing was performed in a dimly illuminated room (O'Shea et al., 1994). One way to influence the dominant percept under conditions of binocular rivalry is to vary the luminance or contrast of the two images (Blake, 2001). In the present study, we exploited a physiological property of the retina to ensure that either the red or the green image would always dominate during the initial period of presentation of the face–house composites. Humans with normal color vision have unequal numbers of S (blue), M (green), and L (red) cones in the retina (Roorda and Williams, 1999), resulting in a subtle bias in sensitivity to particular wavelengths in the visible spectrum. When the images presented to the two eyes pass through red and green filters, this bias causes one of the images to dominate initially over the other.

Pilot testing revealed that, when the face–house composites were displayed for <1 sec, participants reliably perceived only one of the two images (face or house) and that the dominant image (face or house) depended on its color. For example, participant 5, a 29-year-old female, consistently perceived only the red stimulus (i.e., the image presented to the eye with the green filter) over the first 1–2 sec of presentation. Crucially, this pattern was highly consistent for every individual observer and held regardless of whether the image was a face or a house. Nine of the 12 participants perceived only the green stimulus when the face–house composites were presented for <1 sec, whereas the remaining three participants perceived only the red stimulus. For rivalrous displays lasting <1 sec, therefore, we could effectively control which of the two images within a composite would be perceived and which would be suppressed by changing the colors of the image types within each composite according to each participant's own bias (Fig. 1 B–D).
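The per-participant control described above amounts to a simple lookup: draw whichever image should dominate in the color that the participant reliably perceives, and draw the other image in the other color. A minimal sketch follows, assuming one fixed color bias per participant and strict alternation of the dominant image type across successive epochs (as in Fig. 1 D). The function names are hypothetical.

```python
def assign_colors(perceived_color, target):
    """Color the `target` image ('face' or 'house') in the participant's
    reliably perceived color, and the other image in the other color."""
    if perceived_color not in ("red", "green"):
        raise ValueError("perceived_color must be 'red' or 'green'")
    if target not in ("face", "house"):
        raise ValueError("target must be 'face' or 'house'")
    other_color = "green" if perceived_color == "red" else "red"
    other_image = "house" if target == "face" else "face"
    return {target: perceived_color, other_image: other_color}


def epoch_schedule(perceived_color, n_epochs, first="face"):
    """Alternate the dominant image type between successive epochs."""
    schedule = []
    current = first
    for _ in range(n_epochs):
        schedule.append(assign_colors(perceived_color, current))
        current = "house" if current == "face" else "face"
    return schedule


# A participant who reliably perceives the green stimulus (as 9 of 12 did):
print(epoch_schedule("green", 2))
```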

To verify the alternating pattern of dominance and suppression of each image type across successive epochs, we had participants report repetitions of any image in a simple one-back task. Participants were asked to indicate via a button-press when they saw two successive faces or houses that were identical. Within each epoch, at least one such repetition occurred for each image type (mean, 1.6 repetitions per epoch for each image type; total repetitions, 64 per run). For the rivalrous displays used here, we expected detection of repeated images in the one-back task to be high for epochs in which repetitions occurred in the dominant image and low for epochs in which repetitions occurred in the suppressed image.
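Scoring the one-back task separately for the face stream and the house stream is what allows the dominant and suppressed images to be checked against each other. The sketch below assumes a button press counts as a hit only when it is logged on the repeated trial itself; the source does not state its response window, so that rule and the function names are hypothetical. It also checks the reported repetition counts.

```python
def repetition_targets(images):
    """Trial indices on which an image repeats the image on the previous trial."""
    return [i for i in range(1, len(images)) if images[i] == images[i - 1]]


def detection_rate(images, response_trials):
    """Proportion of repetitions in one image stream that received a press.

    Hypothetical scoring rule: a press is a hit only if it falls on the
    repeated trial itself. Returns NaN when the stream has no repetitions.
    """
    targets = repetition_targets(images)
    if not targets:
        return float("nan")
    hits = sum(1 for i in targets if i in response_trials)
    return hits / len(targets)


# Consistency check against the text: 64 repetitions per run spread over
# 2 image types x 20 experimental epochs gives 1.6 per epoch per type.
mean_reps = 64 / (2 * 20)

# One hypothetical epoch in which faces dominate: presses track the face stream.
faces = ["f1", "f1", "f2", "f3", "f3", "f4"]
houses = ["h2", "h5", "h5", "h1", "h6", "h6"]
responses = {1, 4}
print(detection_rate(faces, responses), detection_rate(houses, responses))  # 1.0 0.0
```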

Functional imaging and analysis

Data acquisition. Sagittal images were acquired on a 3T GE Signa MRI scanner. Functional MRI runs were acquired using a gradient-echo echo planar imaging (EPI) sequence (repetition time, 3 sec; echo time, 40 msec; 128 × 128 matrix; 240 mm field of view; 21 slices, 4 mm thick, with 1.5 mm spacing). Before the first run, a T1-weighted sequence (spin-echo; 256 × 192 matrix; 21 slices, 4 mm thick, with 1.5 mm spacing) was performed to obtain anatomical detail in the same slice planes used for fMRI. A high-resolution T1-weighted sequence was also acquired at the end of each participant's session (inversion recovery prepared, spoiled gradient refocused gradient echo; 1.5 × 1.5 mm in-plane; 1.5-mm-thick slices).
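The EPI parameters above imply the effective voxel size and slab coverage. A short sketch follows, assuming that "1.5 mm spacing" denotes the gap between adjacent 4 mm slices (a center-to-center distance of 1.5 mm would be impossible with 4-mm-thick slices).

```python
def epi_geometry(fov_mm=240.0, matrix=128, n_slices=21,
                 thickness_mm=4.0, gap_mm=1.5):
    """In-plane resolution (mm), slab coverage (mm), and voxel volume (mm^3).

    Assumes `gap_mm` is the empty space between adjacent slices.
    """
    in_plane_mm = fov_mm / matrix
    coverage_mm = n_slices * thickness_mm + (n_slices - 1) * gap_mm
    voxel_mm3 = in_plane_mm * in_plane_mm * thickness_mm
    return in_plane_mm, coverage_mm, voxel_mm3


print(epi_geometry())  # (1.875, 114.0, 14.0625)
```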

Data analysis. Preprocessing and data analysis were performed using SPM2 (Wellcome Department of Imaging Neuroscience; http://www.fil.ion.ucl.ac.uk/spm/spm2.html). The first four volumes of each run were removed automatically before analysis. Functional data were realigned within scanning runs to correct for head motion using a six-parameter rigid-body transformation estimated for each image. Each functional run was spatially normalized to the EPI template supplied with SPM, beginning with a local optimization of the 12 parameters of an affine transformation. These images were then smoothed with an 8 mm Gaussian filter. The blood oxygen level-dependent (BOLD) signal was analyzed within each run using a high-pass frequency filter (cutoff, 144 sec). Autocorrelations between scans and epochs were modeled by a standard hemodynamic response function at each voxel. Parameter estimates were obtained for each condition and participant to allow second-level random effects analysis of between-participant variability. One-way ANOVAs were then performed on the statistical maps obtained from predefined cortical regions of interest, using uncorrected p values for all comparisons.
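The text does not give the design matrix. In a typical SPM-style epoch analysis, each condition is modeled as a boxcar over its epochs, convolved with a canonical double-gamma hemodynamic response function, and sampled once per volume. The sketch below assumes SPM's default canonical shape (response peak delay 6 sec, undershoot delay 16 sec, peak-to-undershoot ratio 6), the 21 sec epochs and 3 sec TR reported above, and hypothetical epoch labels. It is an illustration of the general technique, not the authors' code.

```python
import math

import numpy as np


def canonical_hrf(dt=0.1, length_s=32.0, peak=6.0, undershoot=16.0, ratio=6.0):
    """Double-gamma HRF sampled every `dt` s (SPM-like defaults, assumed)."""
    t = np.arange(0.0, length_s, dt)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, peak) - gamma_pdf(t, undershoot) / ratio
    return h / h.sum()  # normalize so a sustained boxcar plateaus near 1


def epoch_regressor(labels, condition, epoch_s=21.0, tr=3.0, dt=0.1):
    """Boxcar for epochs labeled `condition`, convolved with the HRF, one value per TR."""
    n_per_epoch = int(round(epoch_s / dt))
    boxcar = np.concatenate(
        [np.full(n_per_epoch, 1.0 if lab == condition else 0.0) for lab in labels]
    )
    conv = np.convolve(boxcar, canonical_hrf(dt), mode="full")[: boxcar.size]
    step = int(round(tr / dt))
    return conv[::step]


# Hypothetical order: 10 fear/neutral pairs, then 5 rest epochs (the real
# rest epochs were interleaved and counterbalanced).
labels = ["fear", "neutral"] * 10 + ["rest"] * 5
fear_reg = epoch_regressor(labels, "fear")
print(fear_reg.shape)  # (175,)
```

With a 42 sec fear–neutral cycle, the 144 sec high-pass cutoff removes slower drifts while leaving the task frequency intact.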

Results

Behavioral data

Responses in the one-back task were analyzed separately for epochs in which a particular image type (face or house) was dominant and epochs in which an image was suppressed (as determined by the criteria outlined in Materials and Methods). Overall, participants were very accurate in detecting repetitions of faces when face images were dominant and in detecting repetitions of houses when house stimuli were dominant (mean, 86%; SE, 3.3; range, 57–97%). Conversely, only 1 of the 12 participants ever detected a repetition of an image that should have been suppressed, and this occurred only once within a single run. For all of the remaining runs, across all participants, repetitions of images that should have been suppressed were never detected, thus verifying objectively the effectiveness of our rivalrous displays.

Imaging data

Category-specific extrastriate areas

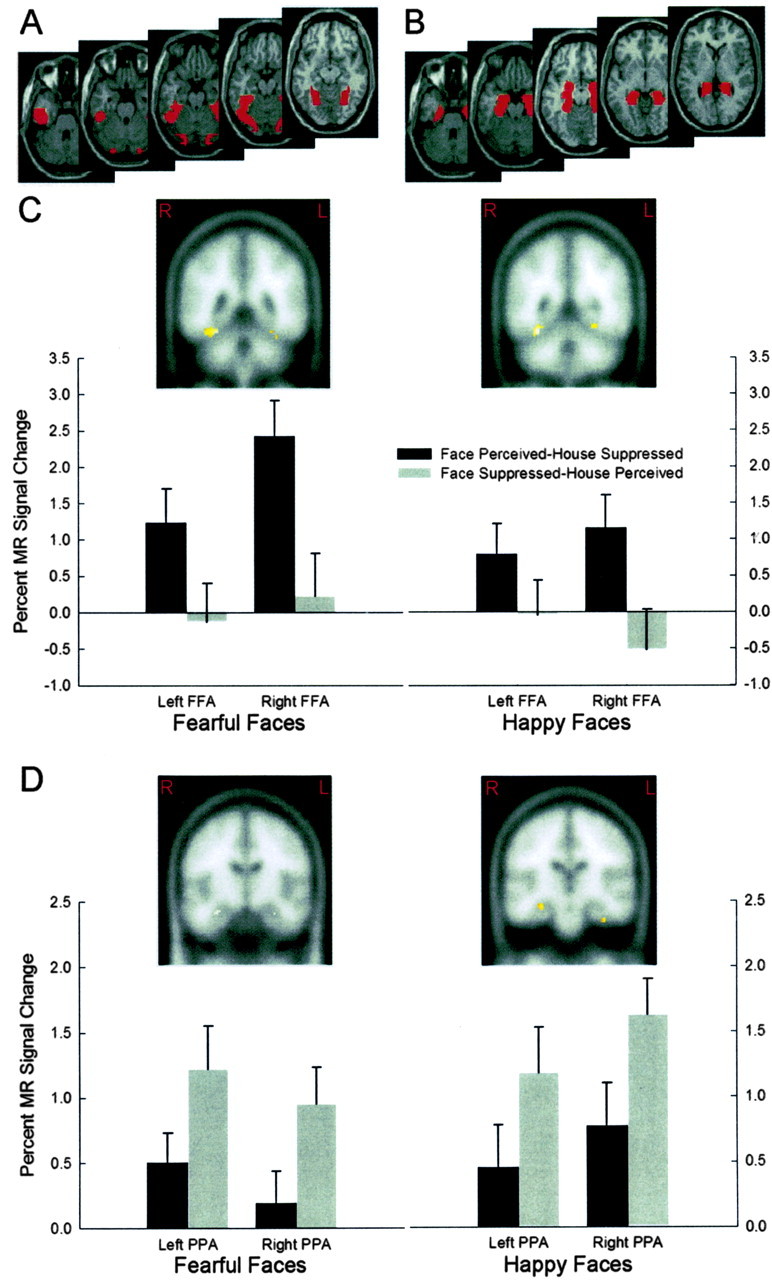

Following from Tong et al. (1998), we focused our analyses on the fusiform gyrus and parahippocampal gyrus to determine whether increased activation in these areas corresponded with epochs in which face and house images were perceived. The fusiform gyrus and parahippocampal gyrus were independently defined in each hemisphere using WFU_PickAtlas (Maldjian et al., 2003).

Examination of areas of greater activity during the face perceived–house suppressed epochs compared with the face suppressed–house perceived epochs, collapsed across emotional and neutral facial expressions, revealed significant bilateral activation in the fusiform gyrus in both the fear–neutral and happy–neutral runs (fear–neutral: left peak x y z, –28 –44 –18, t = 3.86, p < 0.001; right peak x y z, 32 –50 –20, t = 6.29, p < 0.001; happy–neutral: left peak x y z, –26 –52 –12, t = 3.51, p < 0.001; right peak x y z, 32 –50 –18, t = 4.36, p < 0.001) (Fig. 2C). Additional t tests for house-specific activity, comparing the face suppressed–house perceived epochs with the face perceived–house suppressed epochs (again collapsed across emotional and neutral facial expressions), revealed significant bilateral activation in the parahippocampal gyrus in both the fear–neutral and happy–neutral runs (fear–neutral: left peak x y z, –28 –16 –22, t = 2.61, p < 0.01; right peak x y z, 30 –12 –20, t = 2.74, p < 0.005; happy–neutral: left peak x y z, –28 0 –30, t = 3.39, p < 0.001; right peak x y z, 30 –16 –16, t = 4.63, p < 0.001) (Fig. 2D). Analysis of differences in activation between neutral and emotional facial expressions within the same gyri revealed no significant activations (p > 0.05).
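Each t value above summarizes a contrast (for example, face perceived–house suppressed minus face suppressed–house perceived) across participants. The text reports one-way ANOVAs; for a single two-level, within-participant contrast, a one-sample t test on each participant's contrast estimate is a common random-effects equivalent, and that is what this sketch computes (with 12 participants, df = 11). It is an illustration under that assumption, not a reproduction of the SPM2 pipeline.

```python
import math
from statistics import mean, stdev


def one_sample_t(contrast_values):
    """t statistic and df for H0: mean contrast = 0 across participants.

    `contrast_values` holds one contrast estimate per participant
    (e.g., face-perceived minus face-suppressed parameter estimates).
    """
    n = len(contrast_values)
    if n < 2:
        raise ValueError("need at least two participants")
    se = stdev(contrast_values) / math.sqrt(n)  # sample SD (n - 1) / sqrt(n)
    return mean(contrast_values) / se, n - 1


print(one_sample_t([1.0, 2.0, 3.0]))  # (3.464..., 2)
```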

Figure 2.

Regions of interest and BOLD responses in extrastriate cortex obtained during the fearful–neutral and happy–neutral runs of the binocular rivalry experiment, collapsed across emotional and neutral facial expressions. Regions of interest were defined using WFU_PickAtlas (Maldjian et al., 2003). A, Masks used to define the fusiform gyrus in each hemisphere. B, Masks used to define the parahippocampal gyrus in each hemisphere. C, Coronal brain slices show greater activation within the fusiform gyrus during the face perceived–house suppressed condition compared with the face suppressed–house perceived condition (fear–neutral: left peak x y z, –28 –44 –18; right peak x y z, 32 –50 –20; happy–neutral: left peak x y z, –26 –52 –12; right peak x y z, 32 –50 –18). Panels below show corresponding MR signal change during the fear–neutral and happy–neutral runs, plotted separately for the face perceived–house suppressed and face suppressed–house perceived conditions. D, Coronal brain slices show greater activation within the parahippocampal gyrus during the face suppressed–house perceived condition compared with the face perceived–house suppressed condition (fear–neutral: left peak x y z, –28 –16 –22; right peak x y z, 30 –12 –20; happy–neutral: left peak x y z, –28 0 –30; right peak x y z, 30 –16 –16). Panels below show corresponding MR signal change during the fear–neutral and happy–neutral runs, plotted separately for the face perceived–house suppressed and face suppressed–house perceived conditions. Regions of significant activity are overlaid on standard Montreal Neurological Institute space templates (Wellcome Department of Imaging Neuroscience; http://www.fil.ion.ucl.ac.uk/spm/spm2.html). FFA, Fusiform face area; PPA, parahippocampal place area.

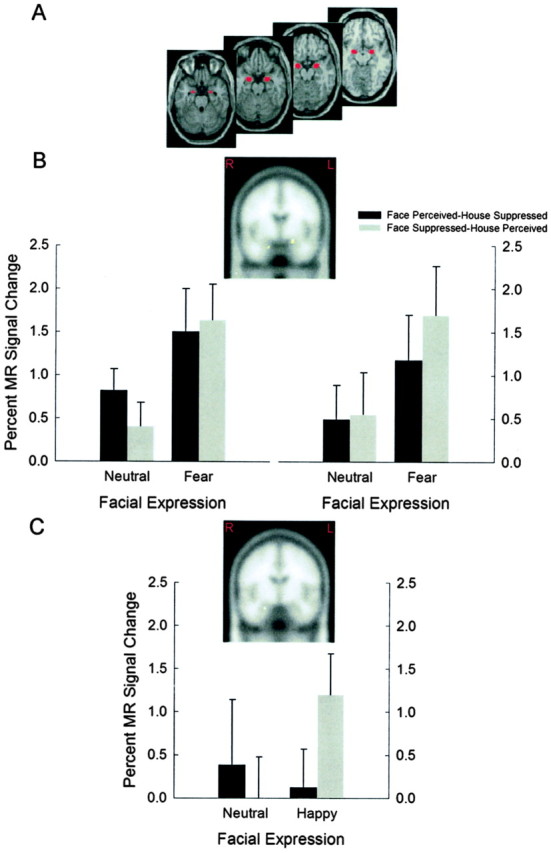

Amygdala

Region of interest analyses were conducted on voxels within a region centered on the amygdaloid complex in each hemisphere, as determined by WFU_PickAtlas (Maldjian et al., 2003). The amygdaloid complex in humans is composed of several nuclei (Sah et al., 2003), but the limited spatial resolution of conventional fMRI does not permit us to distinguish activity in any particular nucleus. For brevity, we use the term amygdala here to describe the amygdaloid complex. There was a significant increase in right amygdala activity in the face perceived–house suppressed condition for fearful versus neutral faces (right peak x y z, 18 –2 –20; t = 3.26; p < 0.005) (Fig. 3B). Crucially, there was also a significant increase in amygdala activity bilaterally in the face suppressed–house perceived condition for fearful versus neutral faces (left peak x y z, –20 –2 –14, t = 3.17, p < 0.005; right peak x y z, 18 –2 –26, t = 3.04, p < 0.005) (Fig. 3B). Thus, amygdala activity increased significantly for face–house composites containing a fearful face versus those with a neutral face, regardless of whether the face images were perceived or suppressed in the rivalrous displays. This finding is consistent with the hypothesis that the amygdala signals the presence of potentially threatening stimuli in the absence of perceptual awareness (LeDoux, 2000).
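Restricting a search to an atlas-defined region amounts to masking the statistic map and locating the maximum inside the mask. A minimal sketch follows, assuming the statistic map and a binary amygdala mask (for example, one exported from WFU_PickAtlas) are already loaded as NumPy arrays on the same voxel grid, together with a 4 × 4 voxel-to-MNI affine. The function is hypothetical and does not reproduce SPM's small-volume machinery.

```python
import numpy as np


def roi_peak(stat_map, roi_mask, affine):
    """Return (peak value, voxel index, MNI x y z in mm) of the maximum within a mask."""
    if stat_map.shape != roi_mask.shape:
        raise ValueError("statistic map and mask must share a voxel grid")
    if not roi_mask.any():
        raise ValueError("mask is empty")
    masked = np.where(roi_mask, stat_map, -np.inf)  # ignore voxels outside the ROI
    idx = np.unravel_index(np.argmax(masked), stat_map.shape)
    xyz = (affine @ np.array([*idx, 1.0]))[:3]      # voxel indices -> mm coordinates
    return (float(stat_map[idx]),
            tuple(int(i) for i in idx),
            tuple(float(c) for c in xyz))
```

The larger value outside the mask in the test below is ignored, which is the point of an ROI analysis: only voxels inside the predefined region compete for the peak.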

Figure 3.

Regions of interest and MR signal change in the amygdala obtained during the fearful–neutral and happy–neutral runs of the binocular rivalry experiment. Regions of interest were defined using WFU_PickAtlas (Maldjian et al., 2003). A, Masks used to define the amygdaloid complex in each hemisphere. B, Coronal brain slices show greater activity bilaterally in the amygdala when comparing the fearful facial expression epochs with the neutral facial expression epochs in the face suppressed–house perceived condition (left peak x y z, –20 –2 –14; right peak x y z, 18 –2 –26). Panels below show corresponding BOLD signal change during the fear–neutral run, plotted separately for the face perceived–house suppressed and face suppressed–house perceived conditions. C, Coronal brain slices show greater activity unilaterally in the amygdala when comparing the happy facial expression epochs with the neutral facial expression epochs in the face suppressed–house perceived condition (right peak x y z, 24 –8 –22). Panel below shows corresponding BOLD signal change during the happy–neutral run, plotted separately for the face perceived–house suppressed and face suppressed–house perceived conditions. Regions of significant activity are overlaid on standard Montreal Neurological Institute space templates (Wellcome Department of Imaging Neuroscience; http://www.fil.ion.ucl.ac.uk/spm/spm2.html).

A comparison of activation in the face perceived–house suppressed conditions containing happy versus neutral faces failed to reveal any significant activation (p > 0.05). For the face suppressed–house perceived condition, however, there was a significant increase in right amygdala activity for happy versus neutral faces (right peak x y z, 24 –8 –22; t = 2.66; p < 0.005) (Fig. 3C). Thus, amygdala activity remained unchanged during perception of happy versus neutral faces but increased significantly in the right hemisphere for the same comparison when the face stimulus was suppressed.

Discussion

The principal aim of our study was to examine activity within the amygdala and ventral extrastriate areas in response to fearful and happy facial expressions presented under conditions of binocular suppression. We used spatially overlaid, composite face–house stimuli in which the face and house images were displayed exclusively to the left or right eye via red-colored or green-colored filters, resulting in binocular rivalry. In pilot testing, we confirmed that prolonged viewing of the composite stimuli resulted in alternating periods of dominance and suppression of the two images, interspersed with short periods of "blending" between them, as reported by many previous investigators (Blake, 2001). During scanning, however, we displayed the stimuli briefly (for 500 msec), so that only one of the two image types (face or house) was perceived, while the other was reliably suppressed.

Both the fusiform gyrus and parahippocampal gyrus showed increased activity when their "preferred" image type (face or house) was dominant (and thus consciously perceived) relative to when the preferred image was suppressed. These findings are consistent with several previous reports of face-specific activity in the fusiform gyrus and place-specific activity in the parahippocampal place area (Kanwisher et al., 1997; Haxby et al., 2000; Tong et al., 2000; Tong and Engel, 2001). The present results also replicate the findings of Tong et al. (1998), which suggest that activity within the fusiform and parahippocampal gyri is reduced or absent during periods of suppression in binocular rivalry.

Most importantly, we found that activity in the amygdaloid complex (here termed the amygdala) increased significantly for fearful and happy faces relative to neutral faces when the faces were suppressed. Under conditions of binocular rivalry, therefore, the amygdala continues to encode affective information from face stimuli that are not consciously perceived. This finding is consistent with the view that the amygdala receives inputs via two main pathways: a rapid, subcortical pathway that conveys crude information enabling efficient processing of affective stimuli in the absence of attention and awareness, and a slower, geniculostriate pathway that conveys more complex visual information, allowing consciously mediated analysis of affective valence (Morris et al., 1998; Whalen et al., 1998; LeDoux, 2000; Vuilleumier et al., 2003). During binocular rivalry, image suppression has been suggested to arise from inhibition of monocular cells in the primary visual cortex (Polonsky et al., 2000; Tong, 2001). Because information about the suppressed image that is conveyed via the cortical pathway would be inhibited in the primary visual cortex, and so would not be represented in extrastriate areas, it seems likely that the increased amygdala activity we observed for suppressed affective faces is driven primarily or exclusively by inputs conveyed via the subcortical pathway.

The proposed dissociation between cortical and subcortical inputs to the amygdala is based on converging lines of evidence. A recent behavioral and anatomical study in the rat revealed two subcortical and two cortical routes carrying visual information to the amygdala (Shi and Davis, 2001), consistent with a large body of anatomical and physiological data (LeDoux, 2000; Sah et al., 2003). In humans, imaging studies of patients with occipital lesions have demonstrated increased activity in the amygdala and extrastriate cortex during presentation of visual stimuli in the "blind" field (Baseler et al., 1999; Morris et al., 2001). In addition, increases in amygdala activation in response to masked (unseen) facial expressions have been demonstrated in neurologically normal individuals (Morris et al., 1998, 2001; Whalen et al., 1998; Öhman, 2002). Although the current study cannot determine which subcortical route might convey affective information, the significant amygdala response observed during cortical suppression of facial expressions suggests that a subcortical pathway is likely to be involved.

Our findings reveal a degree of specificity in amygdala responses to explicitly perceived threatening versus nonthreatening expressions. We observed an increase in right amygdala activity for consciously perceived fearful faces but no such increase in the left or right amygdala for consciously perceived happy faces, consistent with previous reports (Adolphs et al., 1994, 1999; Calder et al., 1996; Morris et al., 1996; Blair et al., 1999). In contrast, there was a comparative lack of specificity in amygdala responses to threatening versus nonthreatening expressions for faces that were not consciously perceived because of binocular suppression. Amygdala activity increased bilaterally for suppressed fearful faces and also increased on the right for suppressed happy faces.

Together, the pattern of amygdala responses we observed under conditions of binocular rivalry is broadly consistent with the findings of Anderson et al. (2003), who examined attentional modulation of amygdala responses to facial expressions of fear and disgust (the latter being a response to threat related to potential physical contamination). They found no reduction in amygdala activity for unattended versus attended faces with an expression of fear but a significant increase in amygdala activity for unattended versus attended expressions of disgust. Their results might imply that amygdala processing is specific to fearful expressions under conditions of focused attention and more broadly tuned to various threat-related expressions (including disgust) under conditions of reduced attention. The present findings extend this notion by showing that, when faces are completely suppressed because of binocular rivalry, even nonthreatening (happy) expressions may elicit significant increases in amygdala activity.

Although we observed some overlap in the amygdala regions responsive to suppressed fearful and happy faces, distinct peaks of activity were apparent for the two emotions. One interpretation of these different loci of maximum activity is that the human amygdaloid complex comprises distinct “affective nodes” that are differentially engaged depending on the affective valence of a stimulus. Single-unit recordings in non-human primates have shown that rewarding and nonrewarding associations are represented by different populations of neurons in the amygdala (Ono and Nishijo, 1992; Wilson and Rolls, 1993). In humans, different activity peaks have been demonstrated to facial expressions of fear and surprise (Kim et al., 2003) and to expressions of fear and disgust (Anderson et al., 2003). Together, these findings are consistent with the idea that the amygdala consists of distinct, valence-dependent affective nodes. A challenge for future studies will be to develop techniques that can uniquely resolve neural activity within these putative nodes.

We suggest that a subregion of the amygdala responds unselectively to a range of facial expressions, both threatening and nonthreatening, when visual inputs are restricted to the subcortical route, as occurs under conditions of binocular suppression. This hypothesis is consistent with evidence that patients with unilateral occipital damage show increased amygdala activity in response to both fearful and happy faces presented in their blind field (Morris et al., 2001). It is also consistent with findings from a recent study of parietal extinction, in which extinguished happy and sad faces primed responses to a consciously perceived central target face (Williams and Mattingley, 2004). Damage to striate and extrastriate areas would be expected to reduce the efficiency of cortical inputs to the amygdala while leaving any subcortical inputs relatively intact. It has been suggested that the subcortical route to the amygdala is selectively tuned for low spatial frequency visual information (Vuilleumier et al., 2003). It is therefore unlikely to distinguish between fine-grained facial features, such as lines and wrinkles, which determine the subtle idiosyncrasies in expressions of different valence. Low spatial frequency information conveyed via the subcortical pathway should therefore support only a crude distinction between affective and neutral faces. In contrast, visual inputs to the amygdala via the (consciously mediated) cortical pathway support fine-grained distinctions between threatening and nonthreatening expressions on the basis of an analysis of high spatial frequency information (Vuilleumier et al., 2003).
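To make the low versus high spatial frequency distinction concrete, the sketch below splits a grayscale image into a low-pass component (the coarse configuration proposed to reach the amygdala via the subcortical route) and a high-pass residual (fine detail such as lines and wrinkles). The hard radial cutoff in the Fourier domain and the cutoff value are illustrative assumptions; the present study did not filter its stimuli.

```python
import numpy as np


def split_spatial_frequencies(image, cutoff_cycles):
    """Split a 2-D grayscale image into low- and high-spatial-frequency parts.

    Uses an ideal (hard-edged) radial cutoff expressed in cycles per image;
    `cutoff_cycles` is an illustrative parameter, not a value from the study.
    """
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape
    fy = np.fft.fftfreq(rows) * rows      # vertical frequency, cycles per image
    fx = np.fft.fftfreq(cols) * cols      # horizontal frequency, cycles per image
    radius = np.hypot(fy[:, None], fx[None, :])
    spectrum = np.fft.fft2(image)
    low = np.real(np.fft.ifft2(spectrum * (radius <= cutoff_cycles)))
    high = image - low                    # residual carries the fine detail
    return low, high
```

By construction the two components sum to the original image, so the split discards nothing; it only separates coarse structure from fine detail.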

We suggest that an affective node within the amygdala that receives fast subcortical input and is selectively tuned to facial expressions regardless of valence could assist in the rapid detection of potential danger. This notion is consistent with the anatomy: the nuclei of the amygdala have afferent and efferent connections with cortical and subcortical regions, including the brainstem (Sah et al., 2003). Such a node might also engage parietal attentional mechanisms (Corbetta and Shulman, 2002), given that facial expressions capture attention (Hansen and Hansen, 1988; Fox et al., 2000; Eastwood et al., 2001). Identifying the specific expression would require a more refined analysis within the temporal lobe (Rolls, 2000), from which information could be relayed back to modulate activity in the amygdala according to the valence of the expression. Concurrent activation of the brainstem and hypothalamic nuclei would permit a rapid response to a possible threat (Sah et al., 2003) before conscious perception of the face.

In conclusion, the results of our study further elucidate the role of the amygdala in processing socially relevant stimuli and extend previous findings that the amygdala responds to fearful expressions independently of attention (Vuilleumier et al., 2001; Anderson et al., 2003) and awareness (Morris et al., 1998, 2001; Whalen et al., 1998; Öhman, 2002). We found that, under conditions of binocular suppression, when visual inputs are restricted to subcortical pathways, the amygdala responds nonselectively to both threatening and nonthreatening facial expressions, a pattern that we suggest reflects a tradeoff between specificity and speed of processing.

Footnotes

This work was supported by a grant from Unilever Research, UK. We acknowledge a contribution toward scan costs made by the Brain Imaging Research Foundation. We also thank Ross Cunnington, Aina Puce, and Anina Rich for their suggestions on a previous draft of this manuscript.

Correspondence should be addressed to Dr. Jason B. Mattingley, Cognitive Neuroscience Laboratory, School of Behavioural Science, University of Melbourne, Parkville, Victoria 3010, Australia. E-mail: j.mattingley@psych.unimelb.edu.au.

DOI:10.1523/JNEUROSCI.4977-03.2004

Copyright © 2004 Society for Neuroscience 0270-6474/04/242898-07$15.00/0

References

- Adolphs R, Tranel D, Damasio H, Damasio AR (1994) Impaired recognition of emotion in facial expression following bilateral damage to the human amygdala. Nature 372: 669–672.

- Adolphs R, Tranel D, Hamann S, Young AW, Calder AJ, Phelps EA, Anderson A, Lee GP, Damasio AR (1999) Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia 37: 1111–1117.

- Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JDE (2003) Neural correlates of the automatic processing of threat facial signals. J Neurosci 23: 5627–5633.

- Baseler HA, Morland AB, Wandell BA (1999) Topographic organization of human visual areas in the absence of input from primary cortex. J Neurosci 19: 2619–2627.

- Blair RJR, Morris JS, Frith CD, Perrett DI, Dolan RJ (1999) Dissociable neural responses to facial expressions of sadness and anger. Brain 122: 883–893.

- Blake R (2001) A primer on binocular rivalry, including current controversies. Brain Mind 2: 5–38.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996) Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17: 875–887.

- Calder AJ, Young AW, Rowland D, Perrett DI, Hodges JR, Etcoff NL (1996) Facial emotion recognition after bilateral amygdala damage: differentially severe impairment of fear. Cogn Neuropsychol 13: 699–745.

- Corbetta M, Shulman GL (2002) Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 3: 201–215.

- Eastwood JD, Smilek D, Merikle PM (2001) Differential attentional guidance by unattended faces expressing positive and negative emotion. Percept Psychophys 63: 1004–1013.

- Ekman P, Friesen W (1975) Pictures of facial affect. Palo Alto, CA: Consulting Psychologists.

- Epstein R, Harris A, Stanley D, Kanwisher N (1999) The parahippocampal place area: recognition, navigation, or encoding? Neuron 23: 115–125.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K (2000) Facial expressions of emotion: are angry faces detected more efficiently? Cogn Emotion 14: 61–92.

- Hansen CH, Hansen RD (1988) Finding the face in the crowd: an anger superiority effect. J Pers Soc Psychol 54: 917–924.

- Haxby JV, Hoffman EA, Gobbini MI (2000) The distributed human neural system for face perception. Trends Cogn Sci 4: 223–232.

- Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311.

- Kim H, Somerville LH, McLean AA, Johnstone T, Shin LM, Whalen PJ (2003) Functional MRI responses of the human dorsal amygdala/substantia innominata region to facial expressions of emotion. Ann NY Acad Sci 985: 533–535.

- LeDoux JE (2000) Emotion circuits in the brain. Annu Rev Neurosci 23: 155–184.

- Maldjian JA, Laurienti PJ, Burdette JB, Kraft RA (2003) An automated method for neuroanatomical and cytoarchitectonic atlas-based interrogation of fMRI datasets. NeuroImage 19: 1233–1239.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ (1996) A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383: 812–815.

- Morris JS, Öhman A, Dolan RJ (1998) Conscious and unconscious emotional learning in the human amygdala. Nature 393: 467–470.

- Morris JS, De Gelder B, Weiskrantz L, Dolan RJ (2001) Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain 124: 1241–1252.

- Öhman A (2002) Automaticity and the amygdala: nonconscious responses to emotional faces. Curr Dir Psychol Sci 11: 62–66.

- Ono T, Nishijo H (1992) Neurophysiological basis of the Klüver-Bucy syndrome: responses of monkey amygdaloid neurons to biologically significant objects. In: The amygdala (Aggleton JP, ed). New York: Wiley.

- O'Shea RP, Blake R, Wolfe JM (1994) Binocular rivalry and fusion under scotopic luminances. Perception 23: 771–784.

- Polonsky A, Blake R, Braun J, Heeger DJ (2000) Neuronal activity in human primary visual cortex correlates with perception during binocular rivalry. Nat Neurosci 3: 1153–1159.

- Rolls ET (2000) Functions of the primate temporal lobe cortical visual areas in invariant visual object and face recognition. Neuron 27: 205–218.

- Roorda A, Williams DR (1999) The arrangement of the three cone classes in the living human eye. Nature 397: 520–522.

- Sah P, Faber ESL, Lopez De Armentia M, Power J (2003) The amygdaloid complex: anatomy and physiology. Physiol Rev 83: 803–834.

- Shi C, Davis M (2001) Visual pathways involved in fear conditioning measured with fear-potentiated startle: behavioral and anatomical studies. J Neurosci 21: 9844–9855.

- Tong F (2001) Competing theories of binocular rivalry: a possible resolution. Brain Mind 2: 55–83.

- Tong F, Engel SA (2001) Interocular rivalry revealed in the human cortical blind-spot. Nature 411: 195–199.

- Tong F, Nakayama K, Vaughan JT, Kanwisher N (1998) Binocular rivalry and visual awareness in human extrastriate cortex. Neuron 21: 753–759.

- Tong F, Nakayama K, Moscovitch M, Weinrib O, Kanwisher N (2000) Response properties of the human fusiform face area. Cogn Neuropsychol 17: 257–279.

- von Helmholtz H (1867) Handbuch der physiologischen Optik. Leipzig: Voss.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2001) Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron 30: 829–841.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2003) Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci 6: 624–631.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA (1998) Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J Neurosci 18: 411–418.

- Wheatstone C (1838) Contributions to the physiology of vision—Part I: On some remarkable and hitherto unobserved phenomena of binocular vision. Philos Trans R Soc Lond B Biol Sci 128: 371–394.

- Williams MA, Mattingley JB (2004) Unconscious processing of nonthreatening facial emotion in parietal extinction. Exp Brain Res 154: 403–406.

- Wilson FAW, Rolls ET (1993) The effects of stimulus novelty and familiarity on neuronal activity in the amygdala of monkeys performing recognition memory tasks. Exp Brain Res 93: 367–382.