Abstract

Previous work has suggested that object and place processing are neuroanatomically dissociated in ventral visual areas under conditions of passive viewing. It has also been shown that the hippocampus and parahippocampal gyrus mediate the integration of objects with background scenes in functional imaging studies, but only when encoding or retrieval processes have been directed toward the relevant stimuli. Using functional magnetic resonance adaptation, we demonstrated that object, background scene, and contextual integration of selectively repeated objects and background scenes could be dissociated during the passive viewing of naturalistic pictures involving object-scene pairings. Specifically, bilateral fusiform areas showed adaptation to object repetition, regardless of whether the associated scene was novel or repeated, suggesting sensitivity to object processing. Bilateral parahippocampal regions showed adaptation to background scene repetition, regardless of whether the focal object was novel or repeated, suggesting selectivity for background scene processing. Finally, bilateral parahippocampal regions distinct from those involved in scene processing and the right hippocampus showed adaptation only when the unique pairing of object with background scene was repeated, suggesting that these regions perform binding operations.

Keywords: object processing, scene processing, binding, fMR-A, hippocampus, parahippocampal region

Introduction

The association between viewed items and the context in which they appear has been termed “contextual binding” (Chalfonte and Johnson, 1996; Mitchell et al., 2000). The capacity to encode such associations can be distinguished from the ability to separately encode either the item or its context.

The hippocampal and parahippocampal regions have been shown to be responsible for the association of objects with their spatial location in the stimulus environment (Burgess et al., 2002). Other neuroimaging evidence indicates that these regions are also involved in relational processing (Cohen et al., 1999), that is, in integrating or binding disparate elements in a complex scene to form a meaningful representation. For example, greater activation of the hippocampus and parahippocampal region occurs when stimulus elements are encoded relationally or “bound” together rather than encoded individually (Henke et al., 1997, 1999). Thus far, in vivo demonstrations of hippocampal and parahippocampal activations during binding operations have used paradigms that required effortful encoding (Henke et al., 1997, 1999; Montaldi et al., 1998). However, behavioral data suggest that these processes operate without explicit intention (Luck and Vogel, 1997; Cohen et al., 1999). In the present study, we sought to identify regions engaged in contextual binding without explicit instruction to do so.

In this study, we used functional magnetic resonance adaptation (fMR-A) to dissociate the neural correlates of object and background scene processing from those involved in contextual binding. fMR-A involves the successive presentation of stimuli that differ along a critical dimension of interest. Adaptation refers to the attenuation of blood oxygenation level-dependent (BOLD) signal associated with the repetition of critical components of these stimuli (Grill-Spector et al., 1999; Grill-Spector and Malach, 2001). This facilitates identification of neural substrates sensitive to the component of interest. In the present study, the critical components of interest were objects and contextually congruent background scenes. In most previous studies, the neural correlates of repetition have been studied by varying the appearance of a target object while holding constant the context in which experimental items were presented. Here, we independently varied the object and background scene, permitting us to assess neural response to object or background scene changes, or the bound relationship between the two. Instead of presenting pictures as paired stimuli usually used in adaptation paradigms (Kourtzi and Kanwisher, 2000; Chee et al., 2003), we presented picture quartets. This was intended to increase adaptation to components of interest (Grill-Spector et al., 1999) while concurrently recruiting larger BOLD signal change (Dale and Buckner, 1997) through additive responses to single events. This served to amplify differences in response to the critical feature.

We reasoned that using this methodology, object-processing areas would show adaptation to repeated objects but not repeated background scenes, whereas background scene-processing areas would show adaptation to repeated scenes but not repeated objects. In addition, a contextual binding area would only adapt when an entire object with background scene was repeated.

Materials and Methods

Participants. Twenty healthy right-handed volunteers (8 males, mean age 21 years, range 19-24 years) gave informed consent for this study. Approval for this study was granted by the Singapore General Hospital Institutional Review Board (EC 36/2004).

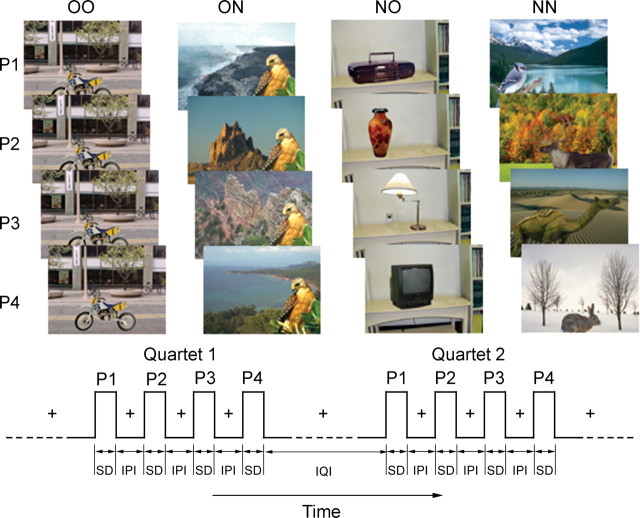

Stimuli. Full color pictures of 200 objects with 200 place scenes were used in a hybrid block event-related fMRI experiment in which quartets of picture stimuli comprising object-scene pairings were presented (Fig. 1). The response to each picture quartet was analyzed like an event would be in a canonical event-related fMRI design.

Figure 1.

Picture stimuli and presentation sequence used in this study. Each picture (P) consisted of an object placed within a background scene. Pictures were presented in quartets with objects and scenes selectively repeated. Four types of quartets were used: (1) four repeated, identical pairings of object and background scene (OO); (2) an identical object repeated in four novel background scenes (ON); (3) novel objects in each of four repeated background scenes (NO), or (4) four novel objects paired with four novel background scenes (NN). Each picture was presented for a stimulus duration (SD) of 1500 msec with an IPI of 250 msec. Quartets were presented with a mean IQI of 9000 msec.

Four experimental conditions were used in this experiment. These were (1) four repeated, identical pairings of object and scene (OO: old object, old scene); (2) an identical object repeated in four novel scenes (ON: old object, new scene); (3) novel objects in each of four repeated scenes (NO: new object, old scene), or (4) four novel objects paired with four novel scenes (NN: new object, new scene). Objects and scenes consisted of an approximately balanced number of animate and inanimate items and indoor and outdoor scenes, respectively. Objects subtended approximate visual angles ranging from 0.5 × 1.0° (minimum) to 2.5 × 5.5° (maximum) while scenes subtended a visual angle of 4.6 × 6.3°.
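The four quartet types can be written down as a small generator, which also makes the stimulus count easy to check. The sketch below is illustrative only: the function names and the string IDs are hypothetical, and it assumes that no object or scene is reused across quartets. Under that assumption, 20 quartets per condition consume exactly 200 objects and 200 scenes, the stimulus set size reported above. Contextual congruence of each pairing is not modeled.

```python
from itertools import count


def build_quartet(condition, new_object, new_scene):
    """Return four (object, scene) pairs for one quartet.

    condition: two letters, object status then scene status;
    'O' = old (repeated within the quartet), 'N' = new on every picture.
    new_object / new_scene: callables that return a not-yet-used item.
    """
    if condition not in {"OO", "ON", "NO", "NN"}:
        raise ValueError(f"unknown condition: {condition}")
    first_object, first_scene = new_object(), new_scene()
    quartet = [(first_object, first_scene)]
    for _ in range(3):
        obj = first_object if condition[0] == "O" else new_object()
        scene = first_scene if condition[1] == "O" else new_scene()
        quartet.append((obj, scene))
    return quartet


# Hypothetical stimulus pools: running IDs stand in for the pictures.
object_ids, scene_ids = count(1), count(1)


def next_object():
    return f"object_{next(object_ids)}"


def next_scene():
    return f"scene_{next(scene_ids)}"


# Tally the unique items needed for 20 quartets of each condition.
objects_used, scenes_used = set(), set()
for cond in ("OO", "ON", "NO", "NN"):
    for _ in range(20):
        for obj, scene in build_quartet(cond, next_object, next_scene):
            objects_used.add(obj)
            scenes_used.add(scene)

print(len(objects_used), len(scenes_used))  # 200 200
```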

The position of an object relative to the scene in all the conditions was adjusted to be contextually congruent. For example, an airplane in an aerial scene would be located above ground and in the top half of an image, whereas an animal walking on the ground would appear in the bottom half. Each picture was configured so that an attentive viewer could unambiguously discern an object from its background scene.

The four pictures within each quartet were presented consecutively and for 1500 msec each (stimulus duration). They were separated by an interval of 250 msec [interpicture interval (IPI)]. Between each quartet was an interquartet interval (IQI) that randomly varied between 6000, 9000, and 12,000 msec, with a mean of 9000 msec. A fixation cross was shown during the IPIs and IQIs when there was no picture on display. The order in which each experimental condition was presented was randomized for each subject such that a given condition did not occur more than three times consecutively. Each subject underwent four experimental runs that lasted 348 sec each. A run was composed of 20 quartets that were preceded and followed by periods of fixation that lasted 30 sec. Each subject therefore viewed 20 quartets of each experimental condition.
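The ordering constraint and the quartet timing described above can be illustrated with a short sketch. Two points are assumptions rather than details from the text: that each run contained five quartets of each condition (20 quartets per run divided evenly over four conditions), and that the constraint was met by reshuffling until no condition occurred more than three times in a row. The actual randomization procedure is not specified.

```python
import random

CONDITIONS = ("OO", "ON", "NO", "NN")

# Duration of one quartet: four pictures plus the three intervals between them.
PICTURE_MS, IPI_MS = 1500, 250
QUARTET_MS = 4 * PICTURE_MS + 3 * IPI_MS  # 6750 msec


def longest_run(seq):
    """Length of the longest stretch of identical consecutive items."""
    longest = current = 1
    for prev, cur in zip(seq, seq[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def randomized_run(per_condition=5, max_repeat=3, rng=random):
    """Shuffle one run's quartets until no condition repeats too often.

    per_condition=5 is an assumption (20 quartets / 4 conditions).
    """
    order = [c for c in CONDITIONS for _ in range(per_condition)]
    while True:
        rng.shuffle(order)
        if longest_run(order) <= max_repeat:
            return order


order = randomized_run(rng=random.Random(0))  # seeded only for reproducibility
print(QUARTET_MS, len(order), longest_run(order) <= 3)
```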

Some features of our experimental design bear mention because they differ in important ways from previous studies in which object-scene combinations have been used. First, object and scene pairings were chosen on the basis that there would be a plausible, natural semantic relationship between the pair. This is to be distinguished from studies of source memory in which unrelated pairs are generated to control for semantic biases during source retrieval (Tsivilis et al., 2003). Second, we facilitated the segmentation of object and background scene by changing the critical component without further manipulations. This is to be contrasted with previous studies in which a colored outline (Tsivilis et al., 2003) or colored objects in black and white scenes (Epstein et al., 2003) were used. Third, given what is known about visual adaptation, we used multiple repetitions of the critical feature to enhance adaptation effects (Grill-Spector et al., 1999). Fourth, the use of multiple repetitions also yielded larger signal change per experimental condition as a result of summation of responses to individual stimuli (Dale and Buckner, 1997; Glover, 1999). Finally, we exposed the pictures for a longer duration: 1500 msec in contrast to around 400 msec for other fMR-A experiments (Grill-Spector and Malach, 2001; Chee et al., 2003; Epstein et al., 2003). This allowed subjects more time to detect a change in object or scene, if such were present. The long picture exposure duration could also have increased the likelihood of engaging binding processes because it has been suggested that increasing exposure time may facilitate post-perceptual processes that enable this (Mitchell et al., 2000; Bar et al., 2001). The combination of the last three design features was intended to amplify differences in neural responses to the critical features of interest.

Imaging protocol. The fMRI experiments were performed in a 3.0 T Allegra scanner (Siemens, Erlangen, Germany). One hundred and sixteen functional scans were acquired in each run using a gradient-echo echoplanar imaging sequence with repetition time of 3000 msec, and a field of view of 19.2 × 19.2 cm, 64 × 64 matrix. Thirty-six oblique axial slices approximately parallel to the anterior commissure-posterior commissure line and 3-mm-thick (0.3 mm gap) were acquired. High-resolution coplanar T2 anatomical images were also obtained. For the purpose of image display in Talairach space [three-dimensional (3D) coordinates given in Talairach space], a further high-resolution anatomical reference image was acquired using a 3D-MP-RAGE (magnetization-prepared rapid gradient echo) sequence. Stimuli were projected onto a screen at the back of the magnet while participants viewed the screen using a mirror.

Data analysis. Functional images were processed using Brain Voyager 2000 version 4.9 and Brain Voyager QX version 1.1 (Brain Innovation, Maastricht, The Netherlands) customized with in-house scripts. Details concerning image preprocessing have been described in previous studies from our laboratory (Chee and Choo, 2004; Chee et al., 2004). Briefly, Gaussian smoothing in the spatial domain was applied, using a full width at half maximum kernel of 8 mm. Functional image data were analyzed using a general linear model in which the hemodynamic response associated with each of the four experimental conditions was modeled using 28 finite-impulse-response predictors, seven for each condition. Thus, there was one predictor for each of the seven scans spanning the 21 sec after stimulus onset. This approach does not make a priori assumptions about response onset latency, peak, or waveform. A statistical threshold of p < 0.001 (uncorrected) and a cluster size of >27 contiguous voxels were used, except for the hippocampus, where a reduced threshold of p < 0.005 was used. This latter threshold was used because of the lower signal-to-noise ratio in this region (Ojemann et al., 1997; Eldridge et al., 2000; Strange et al., 2002). Furthermore, the method of identifying functional regions by specifying conjunctions is conservative (Nichols et al., 2004). A random effects analysis was used to identify significant voxels at the group level of analysis.
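To make the finite-impulse-response model concrete, the sketch below builds a design matrix with one indicator column per condition and post-onset scan, giving 4 × 7 = 28 predictors over the 116 scans of a run. It is a simplified illustration and not the Brain Voyager implementation: the onset values are invented, and quartet onsets are assumed to be expressed as whole scan indices (repetition time 3 sec), which the actual timing need not satisfy.

```python
def fir_design_matrix(onsets_by_condition, n_scans, n_delays=7):
    """Build an FIR design matrix as a list of rows (one row per scan).

    Column c * n_delays + d is 1 on the scan that lies d scans after an
    onset of condition c, and 0 elsewhere.
    """
    conditions = list(onsets_by_condition)
    matrix = [[0] * (len(conditions) * n_delays) for _ in range(n_scans)]
    for c, condition in enumerate(conditions):
        for onset in onsets_by_condition[condition]:
            for delay in range(n_delays):
                scan = onset + delay
                if scan < n_scans:  # ignore delays that run past the run end
                    matrix[scan][c * n_delays + delay] = 1
    return matrix


# Hypothetical quartet onsets, in scan indices, for one run.
onsets = {"OO": [10, 50], "ON": [15, 60], "NO": [20, 70], "NN": [25, 80]}
design = fir_design_matrix(onsets, n_scans=116)

print(len(design), len(design[0]))  # 116 28
```

With a 3 sec repetition time, the fourth predictor of each condition (delay index 3) corresponds to 9 sec after stimulus onset.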

Region-of-interest (ROI)-based analysis of activation magnitude was performed on voxels identified as significantly activated using the fourth predictor (9 sec from stimulus onset) for each condition. This time point was chosen as an optimal trade-off between signal magnitude and adaptation effects after considering data from pilot studies. Voxels contributing to each ROI lay within a bounding cube of edge 10 mm surrounding the activation peak for that ROI. Parameter estimates of signal change for the group data for each ROI were used to plot the activation time course for each condition.
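The bounding-cube rule for ROI membership can be expressed in a few lines. The sketch assumes that the cube is centered on the activation peak and aligned with the coordinate axes, so that a voxel belongs to the ROI when it lies within 5 mm of the peak along each axis; the text specifies only the 10 mm edge. The function name is hypothetical, and the peak used in the example is the right fusiform coordinate from Table 1.

```python
def in_roi_cube(voxel, peak, edge_mm=10.0):
    """True if voxel (x, y, z in mm) lies inside a cube centered on peak."""
    half = edge_mm / 2
    return all(abs(v - p) <= half for v, p in zip(voxel, peak))


right_fusiform_peak = (39, -49, -10)  # Talairach coordinates from Table 1

print(in_roi_cube((42, -49, -10), right_fusiform_peak))  # True
print(in_roi_cube((45, -49, -10), right_fusiform_peak))  # False
```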

Results

Object and scene processing areas

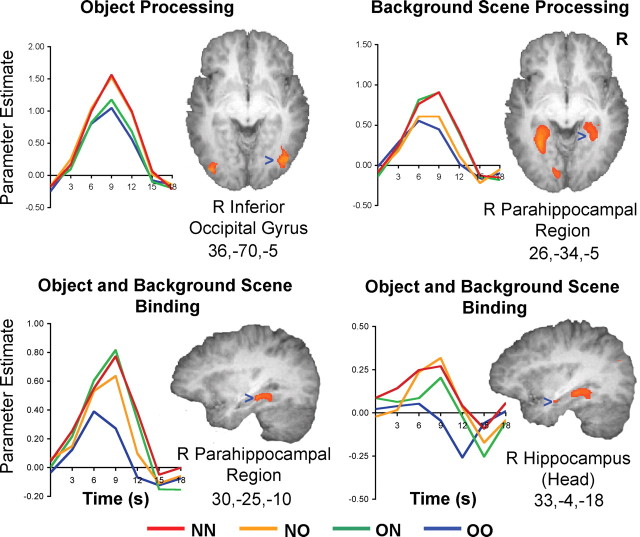

Regions involved in object processing were those showing adaptation when the object in a picture was repeated but not when the accompanying scene was repeated (i.e., voxels jointly fulfilling NN > OO, NN > ON, NO > OO, and NO > ON). The method is very conservative in that the regions identified through this process surpassed the statistical threshold of p < 0.001 in all the contrasts of interest. As such the t values cited henceforth indicate the comparison showing the contrast with the smallest signal difference (Nichols et al., 2004). Using this procedure, the right and left fusiform areas (BA 37; R: t(19) = 5.80, p < 0.001; L: t(19) = 4.46, p < 0.001) as well as bilateral inferior occipital gyri (BA 19; R: t(19) = 6.14, p < 0.001; L: t(19) = 5.81, p < 0.001) were found to be involved in object processing (Table 1, Fig. 2). In these regions, there was no significant difference in BOLD signal between the OO and ON or between NN and NO conditions, indicating that adaptation was related to object repetition and not scene repetition. These regions broadly correspond to the functionally defined lateral occipital complex (Malach et al., 1995; Grill-Spector et al., 2001) and fusiform face area (FFA) (Haxby et al., 1996; Puce et al., 1996; Kanwisher et al., 1997). There was slight right hemisphere preponderance in the object-sensitive regions.
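The conjunction logic described above amounts to a minimum-statistic test: a voxel is retained only if every contrast exceeds the threshold, and the t value reported for it is the smallest of the set. A minimal sketch for a single voxel follows. The t values and the threshold are invented for illustration and are not taken from the data.

```python
def conjunction_min_t(t_values, threshold):
    """Return the smallest t value if all contrasts pass, otherwise None.

    t_values: mapping from contrast name to the voxel's t value.
    """
    weakest = min(t_values.values())
    return weakest if weakest > threshold else None


# Hypothetical t values for one voxel in the object-processing conjunction.
voxel_t = {"NN > OO": 6.9, "NN > ON": 5.4, "NO > OO": 4.9, "NO > ON": 5.1}

print(conjunction_min_t(voxel_t, threshold=3.5))  # 4.9
print(conjunction_min_t({**voxel_t, "NO > ON": 2.0}, threshold=3.5))  # None
```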

Table 1.

Talairach coordinates of voxels that showed the largest fMR-A effects in the conjunction analyses for object processing, background scene processing, and object and background scene binding

| Brain region | Brodmann's area | x | y | z | t value |
|---|---|---|---|---|---|
| Object processing (NN > OO and NN > ON and NO > OO and NO > ON) | | | | | |
| Right fusiform gyrus | 37 | 39 | −49 | −10 | 5.80 |
| Right inferior occipital gyrus | 19 | 36 | −70 | −5 | 6.14 |
| Left fusiform gyrus | 37 | −42 | −51 | −11 | 4.46 |
| Left inferior occipital gyrus | 19 | −36 | −80 | −5 | 5.81 |
| Background scene processing (NN > OO and NN > NO and ON > OO and ON > NO) | | | | | |
| Right parahippocampal gyrus | 19 | 26 | −34 | −5 | 3.89 |
| Left parahippocampal gyrus | 19 | −27 | −44 | −4 | 5.38 |
| Object and background scene binding (NN > OO and NO > OO and ON > OO and (NN − OO) > (NN − ON) + (NN − NO)) | | | | | |
| Right hippocampus | 35 | 33 | −4 | −18 | 3.10 |
| Right parahippocampal gyrus | 37 | 30 | −25 | −10 | 4.66 |
| Left parahippocampal gyrus | 36 | −27 | −34 | −11 | 3.26 |
| Left fusiform gyrus | 37 | −36 | −52 | −5 | 5.23 |
| Left superior parietal lobule | 7 | −24 | −73 | 47 | 3.22 |

The specific contrasts considered in each conjunction analysis are shown in parentheses. NN, New object, new background; NO, new object, old background; ON, old object, new background; OO, old object, old background.

Figure 2.

Time course plots of signal change obtained from regions participating in object processing, background scene processing, and object and background-scene binding. Threshold set at p < 0.001 (uncorrected), except for the hippocampal head in which the threshold was p < 0.005 (uncorrected). In regions responsible for binding, signals elicited by NN, ON, and NO at the peak of the response curve were each statistically different from the signal elicited by OO. However, the signals elicited by these three conditions did not differ significantly from one another in peak amplitude.

Regions involved in scene processing were denoted as those showing adaptation when the scene in a picture was repeated but not when the object was repeated (i.e., voxels jointly fulfilling NN > OO, NN > NO, ON > OO, and ON > NO). The conjunction of these contrasts showed right and left parahippocampal areas to be involved in scene processing (BA 19; R: t(19) = 3.89, p < 0.001; L: t(19) = 5.38, p < 0.001) (Table 1, Fig. 2). No significant difference in signal was observed between the OO and NO conditions or between ON and NN, indicating that adaptation was related to scene repetition and not object repetition. The regions revealed correspond to the parahippocampal place area (Epstein and Kanwisher, 1998).

Unlike previous studies in which a localizer scan was first used to identify object- and place-sensitive regions before performing individual ROI-based signal magnitude analysis (Grill-Spector et al., 1999; Epstein et al., 2003), the present result was obtained by showing whole pictures in which there were no overt cues to parse object and scene. We speculate the clear demarcation of object and scene-processing areas in the ventral visual pathway may be related to the presentation of naturalistic scenes in which a specific picture element change occurs repeatedly and in succession. Previous work has shown that within the ventral visual pathway, activation of the FFA may be increased by attending to face identity rather than color similarity (Clark et al., 1997). Conversely, FFA activation was reduced by attention to houses when faces and houses both appeared in the stimulus (Wojciulik et al., 1998). We posit that in object-processing regions, when objects change in NO, they elicit activity in the object-processing region to the same level as that elicited by NN as a result of attention to the changing objects (changing backgrounds in NN are irrelevant to processing in this region). Conversely when volunteers view ON pictures, attention to the changing background, implicitly established by the “blocked” nature of the repetition design, could serve to reduce activation of repeated objects in object processing areas to floor, i.e., the same level as that elicited by OO. The possibility that recurrent feedback pathways between each extrastriate visual area contribute to competitive interactions that facilitate the construction of neural representations of objects has been raised previously (O'Craven et al., 1999). Attentional effects may thus conspire with those involved in repetition suppression to explain the absence of graded responses to ON or NO relative to NN and OO in either the object processing or background scene processing areas.

Areas involved in object-scene binding

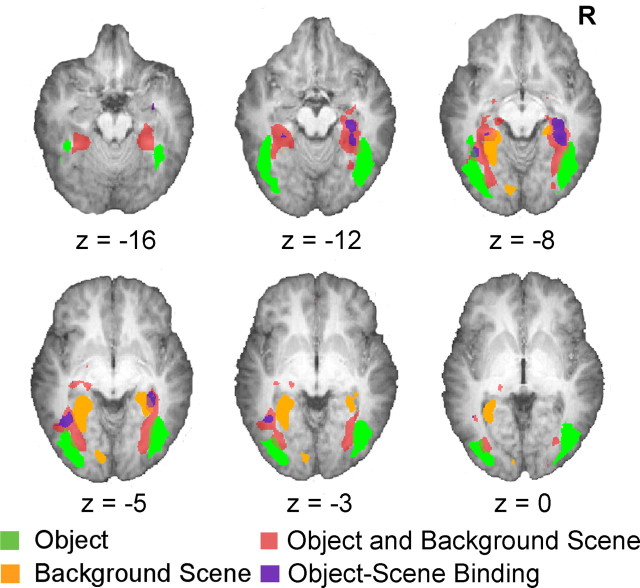

Regions involved in binding were identified as those showing: (1) adaptation only when both objects and scenes were repeated but not when any element in a picture was novel (NN > OO, ON > OO, and NO > OO) and (2) adaptation to repeated object-scene pairs that was greater than the sum of object and scene adaptation effects [(NN − OO) > (NN − ON) + (NN − NO)]. Fulfillment of both criteria differentiates regions showing adaptation to a particular combination of object and scene (Fig. 3, purple areas) from those showing independent, weak adaptation to both objects and scenes (Fig. 3, dark pink) (also see Grill-Spector, 2003). The subset of regions fulfilling the strict conjunction of conditions lay in the right and left parahippocampal areas (BA 36, 37; R: t(19) = 4.66, p < 0.001; L: t(19) = 3.26, p < 0.001), a left fusiform area distinct from that involved in object processing (BA 37; t(19) = 5.23, p < 0.001), and a tiny region at the head of the right hippocampus (BA 35; t(19) = 3.10, p = 0.003) (Table 1, Fig. 2).
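The two binding criteria can be illustrated on condition-wise response amplitudes. The sketch below is a simplification: it applies the inequalities to plain numbers in arbitrary units, whereas the analysis itself tested them as statistical contrasts. The function name and the example values are hypothetical.

```python
def shows_binding(nn, on, no, oo):
    """Apply both binding criteria to four condition amplitudes.

    (1) OO is lower than each condition containing a novel element.
    (2) Adaptation to the repeated pair exceeds the sum of the separate
        object (NN - ON) and scene (NN - NO) adaptation effects.
    """
    only_pair_adapts = nn > oo and on > oo and no > oo
    superadditive = (nn - oo) > (nn - on) + (nn - no)
    return only_pair_adapts and superadditive


# Binding-like profile: response drops only when the whole pairing repeats.
print(shows_binding(nn=10, on=9, no=9, oo=3))  # True

# Independent object and scene adaptation that simply adds up.
print(shows_binding(nn=10, on=7, no=7, oo=4))  # False
```

The second profile satisfies criterion (1) but fails criterion (2), which is the case the second inequality is meant to exclude.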

Figure 3.

Activation maps from the three conjunction analyses superimposed on axial brain slices illustrating the neuroanatomical distribution of areas involved in object processing, background scene processing, the weak combination of object and background scene processing, and object and background scene binding. Threshold set at p < 0.005 (uncorrected) for clearer illustration.

Importantly, there was no significant difference in the peak responses to the NN, ON, and NO conditions, indicating that there was no difference in adaptation in these regions when at least one picture component was novel. The irregular time course of activation observed and the relative “deactivation” within the hippocampus in the OO condition where the lowest amount of processing was expected (Eldridge et al., 2000; Stark and Squire, 2001) may reflect the fact that it is continuously active in response to most stimuli (Fletcher et al., 1995).

Also of note: regions sensitive to the conjunction of object and scene, i.e., those involved in binding, were located between the areas for processing object and scene separately and extended more anteriorly along the ventral extrastriate visual pathway (Fig. 3). These regions are a subset of the regions showing adaptation to both objects and scenes.

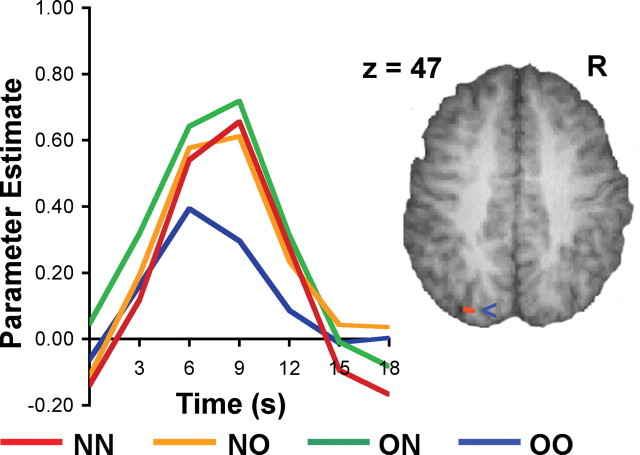

A region in the left parietal lobe close to the intraparietal sulcus (BA 7, t(19) = 3.22, p < 0.001) (Fig. 4) showed relatively higher signal change in each of the three conditions in which a novel stimulus was present. Previous work has shown sensitivity of this region to both objects and places (Grill-Spector, 2003). Activation in this region has also previously been attributed to visual feature binding in the setting of a spatial attention task (Shafritz et al., 2002). However, given that the parietal region performs multiple cognitive operations including attentional orienting and spatial working memory (Culham and Kanwisher, 2001), it is appropriate to exercise caution in making a functional attribution in the present experiment given that we did not manipulate these other processes.

Figure 4.

Time course plot of signal change in the parietal region emerging from the conjunction analysis of object and background scene binding. Figure thresholded at p < 0.001 (uncorrected).

Discussion

The highlight of the present study was the demonstration of contextual binding areas in the right hippocampus and bilateral parahippocampal regions by the use of passively viewed naturalistic picture stimuli. Furthermore, we were able to demonstrate activations related to object processing in the occipitotemporal region and scene processing in the medial parahippocampal region without specifically prompting volunteers to segregate object and background scene. These results are congruent with the notion that the hippocampus and the parahippocampal region are involved in integrating information from various regions in the ventral visual cortex.

Regions involved in contextual binding

Multiple lines of evidence point to the hippocampus as being responsible for context binding. Although their specific connectivity may differ, we discuss the hippocampus and parahippocampal region together (Cohen et al., 1999) because in most imaging studies to date, a clear distinction in their functional properties has not emerged (for review, see Squire et al., 2004). First, the hippocampus receives converging unimodal and poly-modal input from various neocortical regions. In vivo recordings within the human hippocampus have demonstrated neurons sensitive to feature conjunctions (Fried et al., 1997). Electrophysiological recordings also show that although hippocampal neurons are difficult to drive with single sensory stimuli, they respond strikingly after learning multiple stimulus configurations (Eichenbaum, 1997). Second, the hippocampus plays an important role in binding when subjects are directed to actively integrate elements of a stimulus array (Mitchell et al., 2000). Third, the hippocampus undergoes atrophy with healthy aging (Petersen et al., 2000), and functional activation of this region is reduced when older adults are presented with tasks that have substantial relational processing or contextual integration demands (Park et al., 1984, 2003).

Despite the evidence implicating the medial temporal region in binding operations, the present results are significant because they demonstrate the engagement of medial temporal areas in contextual binding without explicit task instructions to relate picture elements. Additionally, the relatively anterior position of the binding regions we identified relative to the object and scene sensitive regions is in keeping with the notions that object processing along the ventral visual pathway proceeds in a hierarchical manner from posterior to anterior (Grill-Spector and Malach, 2004), that receptive field size enlarges correspondingly as one moves anteriorly (Smith et al., 2001), and that the parahippocampal region operates on input from higher order visual areas (Cohen et al., 1999).

The identification of the left fusiform as sensitive to the conjunction of object and background scene information represents a novel imaging finding and extends the previous finding that this region is sensitive to the conjunction of features relevant to object processing (Schoenfeld et al., 2003). This observation is further corroborated by invasive neurophysiological investigations in primates that have revealed the existence of neurons sensitive to conjunctions of object features (Baker et al., 2002; Brincat and Connor, 2004).

The influence of experiment context on repetition suppression effects

The present result poses an interesting comparison with those obtained in an experiment that also tested repetition of novel, repeated, and recombined item-scene elements (Tsivilis et al., 2003) but in the context of evaluating recognition memory. That study reported an equivalent quantum of repetition suppression for OO, ON, NO and recombined OO and no signal reduction for NN. Thus, the repetition of any picture element in all the ventral visual areas reduced activation. This pattern of change denotes sensitivity to any old picture element in the entire ventral visual pathway. In contrast, the present study identifies regions showing four different patterns of sensitivity to repeated picture elements in spatially dissociable regions within the ventral visual pathway.

Several experimental design differences between the Tsivilis et al. (2003) study and the present one could account for the divergent findings. We used fMR-A, imaging neural responses to stimulus changes taking place during passive viewing. In contrast, Tsivilis et al. (2003) had subjects actively integrate a target object to a contextually unrelated or irrelevant background during encoding. These investigators then studied neural responses sampled during explicit recognition judgments. It is likely that repetition effects studied in Tsivilis et al. (2003) and fMR-A tap different neurophysiological processes (Grill-Spector and Malach, 2001; Henson and Rugg, 2003). Of particular interest is an experiment comparing the effect of face repetition on fusiform activation. Repetition suppression was evident when faces were repeated in the context of an implicit task but not when volunteers were expected to make episodic memory judgments (Henson et al., 2002; Henson, 2003). Thus, type of paradigm used, differences in stimuli themselves, phase of imaging (encoding versus recognition), and explicit attention to deliberately demarcated picture elements may influence repetition effects.

In sum, contextual binding between items and background scenes can be shown to take place in the hippocampus and parahippocampal region in the absence of explicit instructions to associate the two entities. Further work would be helpful in clarifying the role of the parietal lobe in binding as well as the basis for how experimental conditions influence repetition effects.

Footnotes

This work was supported by National Medical Research Council 2000/0477, Biomedical Research Council 014, and The Shaw Foundation awarded to M.W.L.C. as well as by National Institute on Aging Grants R01 AGO15047 and R01AGO60625-15 awarded to D.P.

Correspondence should be addressed to Michael W. L. Chee, Cognitive Neuroscience Laboratory, 7 Hospital Drive, #01-11, Singapore 169611, Singapore. E-mail: mchee@pacific.net.sg.

Copyright © 2004 Society for Neuroscience 0270-6474/04/2410223-06$15.00/0

References

- Baker CI, Behrmann M, Olson CR (2002) Impact of learning on representation of parts and wholes in monkey inferotemporal cortex. Nat Neurosci 5: 1210-1216. [DOI] [PubMed] [Google Scholar]

- Bar M, Tootell RB, Schacter DL, Greve DN, Fischl B, Mendola JD, Rosen BR, Dale AM (2001) Cortical mechanisms specific to explicit visual object recognition. Neuron 29: 529-535. [DOI] [PubMed] [Google Scholar]

- Brincat SL, Connor CE (2004) Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nat Neurosci 7: 880-886. [DOI] [PubMed] [Google Scholar]

- Burgess N, Maguire EA, O'Keefe J (2002) The human hippocampus and spatial and episodic memory. Neuron 35: 625-641. [DOI] [PubMed] [Google Scholar]

- Chalfonte BL, Johnson MK (1996) Feature memory and binding in young and older adults. Mem Cognit 24: 403-416. [DOI] [PubMed] [Google Scholar]

- Chee MW, Choo WC (2004) Functional imaging of working memory after 24 hr of total sleep deprivation. J Neurosci 24: 4560-4567. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chee MW, Soon CS, Lee HL (2003) Common and segregated neuronal networks for different languages revealed using functional magnetic resonance adaptation. J Cogn Neurosci 15: 85-97. [DOI] [PubMed] [Google Scholar]

- Chee MW, Goh JO, Lim Y, Graham S, Lee K (2004) Recognition memory for studied words is determined by cortical activation differences at encoding but not during retrieval. NeuroImage 22: 1456-1465. [DOI] [PubMed] [Google Scholar]

- Clark VP, Parasuraman R, Keil K, Kulansky R, Fannon S, Maisog JM, Ungerleider LG, Haxby JV (1997) Selective attention to face identity and color studied with fMRI. Hum Brain Mapp 5: 293-297. [DOI] [PubMed] [Google Scholar]

- Cohen NJ, Ryan J, Hunt C, Romine L, Wszalek T, Nash C (1999) Hippocampal system and declarative (relational) memory: summarizing the data from functional neuroimaging studies. Hippocampus 9: 83-98. [DOI] [PubMed] [Google Scholar]

- Culham JC, Kanwisher NG (2001) Neuroimaging of cognitive functions in human parietal cortex. Curr Opin Neurobiol 11: 157-163. [DOI] [PubMed] [Google Scholar]

- Dale AM, Buckner RL (1997) Selective averaging of rapidly presented individual trials using fMRI. Hum Brain Mapp 5: 329-340. [DOI] [PubMed] [Google Scholar]

- Eichenbaum H (1997) Declarative memory: insights from cognitive neurobiology. Annu Rev Psychol 48: 547-572. [DOI] [PubMed] [Google Scholar]

- Eldridge LL, Knowlton BJ, Furmanski CS, Bookheimer SY, Engel SA (2000) Remembering episodes: a selective role for the hippocampus during retrieval. Nat Neurosci 3: 1149-1152. [DOI] [PubMed] [Google Scholar]

- Epstein R, Kanwisher N (1998) A cortical representation of the local visual environment. Nature 392: 598-601. [DOI] [PubMed] [Google Scholar]

- Epstein R, Graham KS, Downing PE (2003) Viewpoint-specific scene representations in human parahippocampal cortex. Neuron 37: 865-876. [DOI] [PubMed] [Google Scholar]

- Fletcher PC, Frith CD, Grasby PM, Shallice T, Frackowiak RS, Dolan RJ (1995) Brain systems for encoding and retrieval of auditory-verbal memory. An in vivo study in humans. Brain 118 (Pt 2): 401-416. [DOI] [PubMed] [Google Scholar]

- Fried I, MacDonald KA, Wilson CL (1997) Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron 18: 753-765. [DOI] [PubMed] [Google Scholar]

- Glover GH (1999) Deconvolution of impulse response in event-related BOLD fMRI. NeuroImage 9: 416-429.

- Grill-Spector K (2003) The neural basis of object perception. Curr Opin Neurobiol 13: 159-166.

- Grill-Spector K, Malach R (2001) fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol (Amst) 107: 293-321.

- Grill-Spector K, Malach R (2004) The human visual cortex. Annu Rev Neurosci 27: 649-677.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R (1999) Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron 24: 187-203.

- Grill-Spector K, Kourtzi Z, Kanwisher N (2001) The lateral occipital complex and its role in object recognition. Vision Res 41: 1409-1422.

- Haxby JV, Ungerleider LG, Horwitz B, Maisog JM, Rapoport SI, Grady CL (1996) Face encoding and recognition in the human brain. Proc Natl Acad Sci USA 93: 922-927.

- Henke K, Buck A, Weber B, Wieser HG (1997) Human hippocampus establishes associations in memory. Hippocampus 7: 249-256.

- Henke K, Weber B, Kneifel S, Wieser HG, Buck A (1999) Human hippocampus associates information in memory. Proc Natl Acad Sci USA 96: 5884-5889.

- Henson RN (2003) Neuroimaging studies of priming. Prog Neurobiol 70: 53-81.

- Henson RN, Rugg MD (2003) Neural response suppression, haemodynamic repetition effects, and behavioural priming. Neuropsychologia 41: 263-270.

- Henson RN, Shallice T, Gorno-Tempini ML, Dolan RJ (2002) Face repetition effects in implicit and explicit memory tests as measured by fMRI. Cereb Cortex 12: 178-186.

- Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302-4311.

- Kourtzi Z, Kanwisher N (2000) Cortical regions involved in perceiving object shape. J Neurosci 20: 3310-3318.

- Luck SJ, Vogel EK (1997) The capacity of visual working memory for features and conjunctions. Nature 390: 279-281.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB (1995) Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci USA 92: 8135-8139.

- Mitchell KJ, Johnson MK, Raye CL, Mather M, D'Esposito M (2000) Aging and reflective processes of working memory: binding and test load deficits. Psychol Aging 15: 527-541.

- Montaldi D, Mayes AR, Barnes A, Pirie H, Hadley DM, Patterson J, Wyper DJ (1998) Associative encoding of pictures activates the medial temporal lobes. Hum Brain Mapp 6: 85-104.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB (2004) Valid conjunction inference with the minimum statistic. NeuroImage, in press.

- O'Craven KM, Downing PE, Kanwisher N (1999) fMRI evidence for objects as the units of attentional selection. Nature 401: 584-587.

- Ojemann JG, Akbudak E, Snyder AZ, McKinstry RC, Raichle ME, Conturo TE (1997) Anatomic localization and quantitative analysis of gradient refocused echo-planar fMRI susceptibility artifacts. NeuroImage 6: 156-167.

- Park DC, Puglisi JT, Sovacool M (1984) Picture memory in older adults: effects of contextual detail at encoding and retrieval. J Gerontol 39: 213-215.

- Park DC, Welsh RC, Marshuetz C, Gutchess AH, Mikels J, Polk TA, Noll DC, Taylor SF (2003) Working memory for complex scenes: age differences in frontal and hippocampal activations. J Cogn Neurosci 15: 1122-1134.

- Petersen RC, Jack Jr CR, Xu YC, Waring SC, O'Brien PC, Smith GE, Ivnik RJ, Tangalos EG, Boeve BF, Kokmen E (2000) Memory and MRI-based hippocampal volumes in aging and AD. Neurology 54: 581-587.

- Puce A, Allison T, Asgari M, Gore JC, McCarthy G (1996) Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J Neurosci 16: 5205-5215.

- Schoenfeld MA, Tempelmann C, Martinez A, Hopf JM, Sattler C, Heinze HJ, Hillyard SA (2003) Dynamics of feature binding during object-selective attention. Proc Natl Acad Sci USA 100: 11806-11811.

- Shafritz KM, Gore JC, Marois R (2002) The role of the parietal cortex in visual feature binding. Proc Natl Acad Sci USA 99: 10917-10922.

- Smith AT, Singh KD, Williams AL, Greenlee MW (2001) Estimating receptive field size from fMRI data in human striate and extrastriate visual cortex. Cereb Cortex 11: 1182-1190.

- Squire LR, Stark CE, Clark RE (2004) The medial temporal lobe. Annu Rev Neurosci 27: 279-306.

- Stark CE, Squire LR (2001) When zero is not zero: the problem of ambiguous baseline conditions in fMRI. Proc Natl Acad Sci USA 98: 12760-12766.

- Strange BA, Otten LJ, Josephs O, Rugg MD, Dolan RJ (2002) Dissociable human perirhinal, hippocampal, and parahippocampal roles during verbal encoding. J Neurosci 22: 523-528.

- Tsivilis D, Otten LJ, Rugg MD (2003) Repetition effects elicited by objects and their contexts: an fMRI study. Hum Brain Mapp 19: 145-154.

- Wojciulik E, Kanwisher N, Driver J (1998) Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. J Neurophysiol 79: 1574-1578.